Abstract

The proposed multilayer network-based comparative document analysis (MUNCoDA) method supports the identification of the common points of a set of documents that deal with the same subject area. As documents are transformed into networks of informative word pairs, the collection of documents forms a multilayer network that allows the comparative evaluation of the texts. The multilayer network can be visualized and analyzed to highlight how the texts are structured. The topics of the documents can be clustered based on the developed similarity measures. By exploring the network centralities, topic importance values can be assigned. The method is fully automated by KNIME preprocessing tools and MATLAB/Octave code.

- Networks can be formed based on the informative word pairs of multiple documents

- The analysis of the proposed multilayer networks provides information for multi-document summarization

- Words and documents can be clustered based on node similarity and edge overlap measures

Keywords: Text-mining, Multi-document summarization, Document clustering, Network similarity

Graphical abstract

Specifications table

| Subject Area: | Computer Science |

| More specific subject area: | Network analysis and text mining |

| Method name: | Multilayer network based comparative document analysis (MUNCoDA) method |

| Name and reference of original method: | Term frequency-inverse document frequency: Robertson, Stephen. "Understanding inverse document frequency: on theoretical arguments for IDF." Journal of Documentation (2004). Multilayer networks: Boccaletti, Stefano, et al. "The structure and dynamics of multilayer networks." Physics Reports 544.1 (2014): 1-122. |

| Resource availability: | https://github.com/abonyilab |

Method details

Multi-document summarization extracts information from multiple texts written about the same topic. The purpose of the method is to objectively explore the relationships between documents, identify key topics and compare documents according to the explored set of focal points. Contrary to classical multi-document summarization, the extracted information is represented in a multiplex network whose layers represent the networks of the most informative word pairs of the documents. The automated analysis of the network provides objective information about the topics and similarities of the documents.

The steps of the proposed method are shown in Fig. 1. In the first step, the scope of the analysis is identified, which determines which documents are available for the study of the topic (e.g., sustainability reports, scientific papers, etc.). The next step is the preprocessing of the text, which covers the removal of stopwords, stemming, the removal of short/long terms, the removal of frequent/infrequent terms (locally or globally), and term weighting and normalization. The informative word pairs extracted from the word co-occurrences define the edges of the networks of the documents. Then the multiplex network is generated, in which the layers represent the networks of the documents. In the final step, the nodes and the layers are clustered based on their similarities.

Fig. 1.

The process of the proposed multilayer network-based comparative document analysis method.

Background on MUNCoDA algorithm

Text mining is becoming a frequently utilized tool to extract informative patterns from unstructured or semi-structured data sources [1]. Text mining can also help in Comparative Document Analysis (CDA), which means the joint discovery of commonalities and differences between two individual documents [2]. Multi-document summarization aims to produce a summary delivering the majority of information content from a set of documents about an explicit or implicit main topic [3]. The result of multi-document analysis allows the comparative evaluation of document content. Semantic similarity of words and documents can be determined by human decision-based, information content-based [4], probability-based or word-pair-based [5] approaches.

Network analysis techniques may be used to better understand the relationships between texts, based on which quantitative (e.g., degree, centrality, clustering coefficient) and qualitative semantic interpretations (e.g., collocation, semantic relation, encoding semantic topics) can be made [6]. Ontology-based approaches, which use advanced text mining tools to unlock the inherent relationships between concepts, can be used to improve the retrieval of design information in large, unstructured text data [7]. With networks, not only can the relationships within a document be discovered, but also whether there is overlap between information from different sources [8]. Text mining combined with network analysis can also be used to extract keywords from various sources and track the evolution of a topic over time [9].

Informative word pairs of documents can form multilayer networks, which are ideal models for representing multidimensional data [10]. The proposed method is based on a novel combination of the previously presented concepts, as shown in the following subsections.

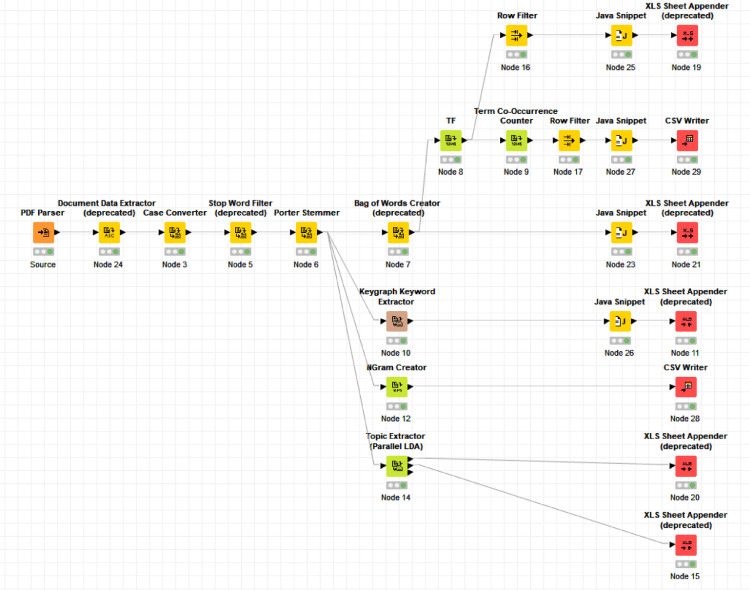

Document preprocessing and term co-occurrence extraction

The first step of the KNIME workflow developed for the preprocessing of the documents is the text extraction from the PDF files (see Fig. 2). After the extraction of the document-related information and the conversion of the text to lower case, the stop words are eliminated. A Porter Stemmer node is used for stemming, which means the reduction of the inflectional and derivationally related forms of a word to a common base form. The method processes the bag of words containing the terms occurring in the documents. The TF node computes the relative term frequency (tf) of each term in each document and adds a column containing the tf value. The Term Co-Occurrence Counter node counts the number of co-occurrences for the given list of terms within the selected parts (e.g., sentence, paragraph, section and title) of the corresponding document. At the end of the text mining, the processed data is written to Excel or CSV files.

Fig. 2.

The preprocessing workflow developed in KNIME.
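The co-occurrence counting step can be illustrated outside KNIME with a minimal sketch. It is written in Python and is not the authors' workflow: the stop word list, the minimum token length and the sentence splitting rule are assumptions chosen for the example, and stemming is omitted. Each unordered word pair is counted once per sentence in which both words appear.

```python
import re
from collections import Counter
from itertools import combinations

# Toy stop word list (assumption); a real workflow would use a full list.
STOPWORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "are", "for"}

def tokenize(sentence):
    """Lower-case, keep alphabetic tokens, drop stop words and short tokens."""
    tokens = re.findall(r"[a-z]+", sentence.lower())
    return [w for w in tokens if w not in STOPWORDS and len(w) > 2]

def cooccurrences(text):
    """Count unordered word pairs that appear together in the same sentence."""
    counts = Counter()
    for sentence in re.split(r"[.!?]+", text):
        words = sorted(set(tokenize(sentence)))  # each word once per sentence
        counts.update(combinations(words, 2))    # pairs in alphabetical order
    return counts

doc = "Clean water supports health. Clean energy and clean water are goals."
pairs = cooccurrences(doc)
print(pairs[("clean", "water")])
```

Because the pairs are stored in alphabetical order, the same pair always maps to the same key regardless of the order of the words in the sentence.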

Besides the core preprocessing, the relevant keywords are extracted by a graph-based approach (with the Keygraph Keyword Extractor node), and n-grams are generated by the NGram Creator node. The Topic Extractor node is also used to cluster the words based on the latent Dirichlet allocation (LDA) generative statistical model; the resulting topic areas are used for verification.

Extraction of the most informative word-pairs

Contrary to classical text mining, besides studying the frequencies of individual words, the distributions of word pairs in the documents are considered, so in this method the word pairs are referred to as terms, denoted by t.

To measure how commonly or rarely a term is applied across all documents, the number of documents containing the term, $n_t$, is calculated [11]:

| $n_t = \lvert \{ d \in D : t \in d \} \rvert$ | (1) |

where D represents the set of the studied documents, so $n_t$ is between 1 and $M = \lvert D \rvert$, where M stands for the number of documents.

As the conditional probability $P(t \mid D) = n_t / M$ represents how common or rare the term is across the documents, the logarithmically scaled inverse fraction of the documents that contain the term is used to evaluate how much information the term provides:

| $idf(t, D) = \log \dfrac{M}{n_t} = -\log P(t \mid D)$ | (2) |

The occurrence of a word pair in a given document is represented by the variable $f_{t,d}$, which shows how many times the term t occurs in document d. To prevent bias towards longer documents, these raw term counts are normalized by the raw count of the most frequently occurring term in the document, so the augmented frequency is calculated as:

| $tf(t, d) = K + (1 - K) \dfrac{f_{t,d}}{\max_{t' \in d} f_{t',d}}$ | (3) |

where $K \in [0, 1]$ is a tuning parameter, usually K = 0.5.

The “term frequency - inverse document frequency” measure combines the previously presented term frequency and inverse document frequency measures to evaluate the information content of a term:

| $tfidf(t, d, D) = tf(t, d) \cdot idf(t, D)$ | (4) |

Based on the presented measures, the extracted word pairs are filtered: a word pair should appear in a sufficiently large number of documents, $n_t > tr_n$, and should provide useful information, $tfidf(t, d, D) > tr_{info}$, where $tr_n$ and $tr_{info}$ are thresholds set by the analyst.
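The weighting and filtering steps can be sketched as follows. This is a Python illustration under stated assumptions, not the MATLAB/Octave code of the authors: the function names and the toy counts are hypothetical, the natural logarithm is assumed for the inverse document frequency, and the augmented frequency takes the usual form K + (1 - K) f / f_max.

```python
import math

def document_frequency(counts_per_doc):
    """n_t: number of documents in which term t occurs at least once."""
    n = {}
    for counts in counts_per_doc.values():
        for term, f in counts.items():
            if f > 0:
                n[term] = n.get(term, 0) + 1
    return n

def augmented_tf(counts, K=0.5):
    """tf(t, d) = K + (1 - K) * f_td / (largest raw count in document d)."""
    f_max = max(counts.values())
    return {t: K + (1 - K) * f / f_max for t, f in counts.items()}

def informative_terms(counts_per_doc, tr_n, tr_info, K=0.5):
    """Return {doc: {term: tf}} for terms with n_t > tr_n and tfidf > tr_info."""
    M = len(counts_per_doc)
    n = document_frequency(counts_per_doc)
    selected = {}
    for doc, counts in counts_per_doc.items():
        tf = augmented_tf(counts, K)
        selected[doc] = {
            t: tf[t] for t in tf
            if n[t] > tr_n and tf[t] * math.log(M / n[t]) > tr_info
        }
    return selected

# Hypothetical raw co-occurrence counts of (stemmed) word pairs.
counts = {
    "d1": {("clean", "water"): 4, ("climat", "chang"): 2, ("gender", "equal"): 1},
    "d2": {("clean", "water"): 1, ("climat", "chang"): 3},
    "d3": {("clean", "water"): 2, ("gender", "equal"): 2},
}
selected = informative_terms(counts, tr_n=1, tr_info=0.3)
print(selected)
```

In this toy input the pair ("clean", "water") occurs in all three documents, so its inverse document frequency is zero and it is filtered out everywhere. This shows the trade-off between the two thresholds: a pair has to be shared by several documents without being shared by all of them.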

Generation of a multiplex network of documents

Our key idea is that the filtered word pairs can be used to form networks of the documents. As the term t consists of the pair of the words $w_i$ and $w_j$, the edge between these words in the d-th layer of the multiplex network is weighted as tf(t, d), where $w_i, w_j \in d$. In this way, a single network can be created for each document, from which a multiplex network can be defined.

In the resulting multiplex network, the nodes represent words, while the edges represent the word pairs, weighted by the frequency of their connection in the given document. The network has exactly as many layers as there are analyzed documents (M) and consists of a fixed set of N nodes (the words selected from the document set D), which are connected by a different set of links in each layer.
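A minimal sketch of this construction is given here, assuming that the filtered term frequencies are available as one dictionary per document (a hypothetical data layout, not the format of the authors' code). Every layer is stored as a symmetric N x N weight matrix over the same ordered node list, which is what makes the layers directly comparable.

```python
def build_multiplex(tf_per_doc):
    """Return (words, layers): a shared sorted node list and one symmetric
    N x N weight matrix per document, with tf(t, d) as the edge weight."""
    words = sorted({w for tf in tf_per_doc.values() for pair in tf for w in pair})
    index = {w: i for i, w in enumerate(words)}
    N = len(words)
    layers = {}
    for doc, tf in tf_per_doc.items():
        W = [[0.0] * N for _ in range(N)]
        for (wi, wj), weight in tf.items():
            i, j = index[wi], index[wj]
            W[i][j] = W[j][i] = weight  # undirected edge
        layers[doc] = W
    return words, layers

# Hypothetical filtered tf values of two documents.
tf_per_doc = {
    "d1": {("clean", "water"): 1.0, ("chang", "climat"): 0.75},
    "d2": {("clean", "water"): 0.6},
}
words, layers = build_multiplex(tf_per_doc)
print(words)
print(layers["d2"][words.index("clean")][words.index("water")])
```

A word pair that is missing from a document simply leaves a zero in that layer, so all layers keep the same size.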

A general (global) picture of the problem discussed in the input documents can be obtained by merging the layers of the multiplex network into a “total projection” layer. Instead of the aggregation of the layers [12], this layer is defined based on the average or the maximum of the tf(t, d) values:

| $\overline{tf}(t) = \dfrac{1}{M} \sum_{d \in D} tf(t, d)$ | (5) |

| $tf_{\max}(t) = \max_{d \in D} tf(t, d)$ | (6) |
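The two projections can be sketched element-wise on the layer matrices. This Python illustration assumes that the layers are stored as equally sized weight matrices and that a word pair missing from a document contributes a zero weight to the average; both are assumptions of the sketch.

```python
def total_projection(layers, mode="mean"):
    """Merge M layers (N x N weight matrices) element-wise.
    mode="mean": average over all M layers, absent edges counted as zero.
    mode="max":  largest weight of the edge in any layer."""
    if mode not in ("mean", "max"):
        raise ValueError("mode must be 'mean' or 'max'")
    mats = list(layers.values())
    M, N = len(mats), len(mats[0])
    combine = max if mode == "max" else (lambda ws: sum(ws) / M)
    return [[combine([W[i][j] for W in mats]) for j in range(N)]
            for i in range(N)]

# Three hypothetical two-node layers; the edge is missing from d3.
layers = {
    "d1": [[0.0, 1.0], [1.0, 0.0]],
    "d2": [[0.0, 0.5], [0.5, 0.0]],
    "d3": [[0.0, 0.0], [0.0, 0.0]],
}
mean_layer = total_projection(layers, "mean")
max_layer = total_projection(layers, "max")
print(mean_layer[0][1], max_layer[0][1])
```

The average projection rewards word pairs that are used consistently across the documents, while the maximum projection keeps every pair that is important in at least one document.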

Evaluation of word and document similarities

The analysis is done by determining the degree of similarity between the nodes (words) and layers (documents) and evaluating the node centralities.

The similarity of words in a given document is evaluated based on the relative term frequency of the word pairs, tf(t, d), which is directly represented by the edge weights. With this interpretation, similarities are given only for the frequently co-occurring words. For the remaining word pairs, transitive similarities can be determined along the paths that connect them: for non-neighboring nodes, the similarity values of the consecutive node pairs of a path are multiplied.

As more than one path can exist between two nodes, we select the path with the largest product of similarities, which is the shortest path when distance is measured by the negative logarithm of the similarity. The calculation of the transitive similarity therefore leads to the problem of finding the shortest path P from the set of paths $\mathcal{P}_{i,j}$ between the i-th and the j-th word [13]:

| $s^{d}_{i,j} = \max_{P \in \mathcal{P}_{i,j}} \prod_{(k,l) \in P} tf(t_{k,l}, d)$ | (7) |

where $t_{k,l}$ denotes the term formed by the words $w_k$ and $w_l$.

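The procedure can be easily implemented by the well-known Floyd's algorithm, where s(i,j) denotes the similarity of the i-th and the j-th words in the given document, initialized with the edge weights:

| for k = 1:N |

| for i = 1:N |

| for j = 1:N |

| if s(i,j) < s(i,k)·s(k,j) |

| s(i,j) ← s(i,k)·s(k,j) |

| end if |

| end for |

| end for |

| end for |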
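The following Python sketch shows one reading of this procedure, namely a max-product variant of the Floyd-Warshall scheme. It assumes that all similarities lie in [0, 1], that a missing edge has similarity 0, and that the diagonal is set to 1. It is an illustration of the idea and not the authors' MATLAB implementation.

```python
def transitive_similarity(S):
    """Max-product closure: T[i][j] is the largest product of edge
    similarities over all paths from i to j (Floyd-Warshall scheme).
    Assumes every entry of S lies in [0, 1]."""
    N = len(S)
    T = [row[:] for row in S]  # copy so the input is left untouched
    for k in range(N):
        for i in range(N):
            for j in range(N):
                via_k = T[i][k] * T[k][j]  # similarity of the path through k
                if T[i][j] < via_k:
                    T[i][j] = via_k
    return T

# Words 0 and 2 never co-occur, but both co-occur with word 1.
S = [
    [1.0, 0.8, 0.0],
    [0.8, 1.0, 0.5],
    [0.0, 0.5, 1.0],
]
T = transitive_similarity(S)
print(T[0][2])
```

Because every weight is at most 1, extending a path can never increase its product, so the triple loop is sufficient and no path has to be revisited.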

The resulting similarity matrix of the d-th document allows the clustering of the words in the form of dendrograms and the layer-wise, two-dimensional, distance-preserving visualization of the words by multidimensional scaling (MDS), where the distances are derived from the similarities. By using MDS, the subject areas do not need to be identified in advance; the tool allows the objective identification of the subject areas and the clustering of the thematic areas of the documents [14]. In a targeted study, when the subject areas are known, it is possible to determine which words are most closely related to a particular expression (topic title), which allows the depth of a document's coverage of the given subject to be measured.

The similarity of the documents can be evaluated based on the aggregation of the pairwise comparison of the word similarities:

| (8) |

The above presented Ls(α, β) measure can be considered as the transitive similarity-based extension of the edge overlap measure that is used to compare the similarities of the layers of multiplex networks [14]. The developed measure can also be used to evaluate the coverage of a given document: evaluated between the d-th layer and the projection layer defined by the maximum of the tf(t, d) values, it measures how similar document d is to that layer.

The importance of the words and word-pairs can be evaluated based on classical network centrality measures. As these measures are well known and available in most of the network analysis packages, we do not discuss them in this paper. We only note that the documents can also be compared based on the rank correlation of these measures.
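The rank correlation mentioned above can be sketched for one simple centrality. The Python example below uses the node strength (the sum of the edge weights of a node) and the Spearman rank correlation; the choice of this centrality, the function names and the two toy layers are assumptions made for the illustration.

```python
import math

def strength(W):
    """Weighted degree of each node: the sum of its edge weights."""
    return [sum(row) for row in W]

def ranks(values):
    """Average ranks (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    r = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1
        for k in range(pos, end + 1):
            r[order[k]] = avg
        pos = end + 1
    return r

def pearson(x, y):
    """Pearson correlation; undefined (division by zero) for constant input."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))

# Two hypothetical layers over the same three words.
layer_a = [[0.0, 0.9, 0.6], [0.9, 0.0, 0.0], [0.6, 0.0, 0.0]]
layer_b = [[0.0, 0.7, 0.5], [0.7, 0.0, 0.6], [0.5, 0.6, 0.0]]
rho = spearman(strength(layer_a), strength(layer_b))
print(round(rho, 3))
```

A value close to 1 indicates that the two documents rank the words in a similar order of importance, even if the absolute weights differ.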

The calculation of the similarities has been implemented in MATLAB and the code is available at the website of the corresponding author (https://github.com/abonyilab/MUNCoDA).

Method validation

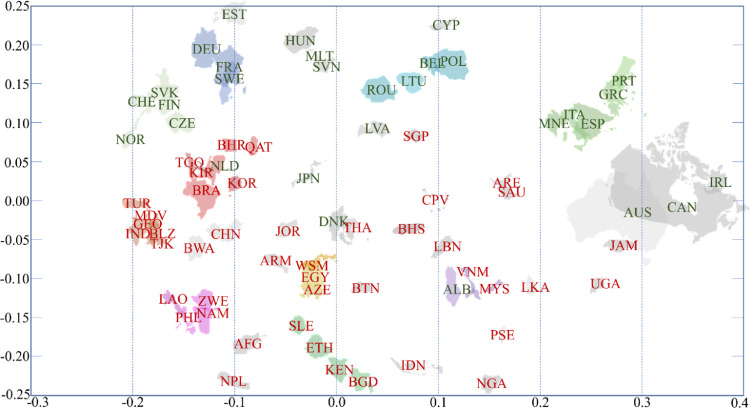

The methodology is validated based on Voluntary National Reviews (VNR) [15]. The VNRs of the 75 countries that were available in English were analyzed. In this complex example, the MUNCoDA method has been used to explore potential future collaboration opportunities for the countries and to identify topic areas of the implementation of the 2030 Agenda.

Word pairs that are found in at least five documents and have a tf-idf value equal to or greater than 0.6 were analyzed. The selection criteria depend on the scope of the analysis and the structure of the documents, therefore they should be determined by the analyst. The authors suggest using criteria based on the tf-idf values of the analyzed word pairs. The results of the analysis were validated by grouping the countries shown in Fig. 3 (and summarized in Fig. 4) based on the authors' expertise. Fig. 3 shows that the countries within a group share either geographical proximity (a common border) or a similar level of development. In Fig. 4, the shapes of the countries in the same group are marked with the same color. The color of the country code letters indicates their level of development (green: developed countries, red: developing countries). The summary results obtained support the expected groups of countries according to the sustainable development goals [16]. This result confirms that countries around the world can be grouped based on their VNR documents [16] with the proposed MUNCoDA method, which is thus shown to be applicable to exploring the relationships between documents in the same subject area.

Fig. 3.

The dendrogram-based clustering of the analyzed countries.

Fig. 4.

Similarity-based 2D visualization of countries around the world based on published VNRs.

The MATLAB code of the dendrogram used for document comparison is the following:

| %% Clustering of the documents (countries) based on their similarities |

| CK = 1 - L_sim; % L_sim is the M x M matrix of document similarities, so CK holds the distances |

| ZCK = linkage(squareform(CK, 'tovector'), 'complete'); % linkage expects a pdist-style distance vector; CK must be symmetric with a zero diagonal |

| figure(3) |

| [H, T, outperm_ck] = dendrogram(ZCK, 0, 'Orientation', 'left', 'Labels', listcountries); % 0 shows all leaves |
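To make the clustering step easier to follow, the complete linkage rule behind the dendrogram can be sketched in a few lines. This is a naive Python illustration on a hypothetical 4 x 4 similarity matrix. It returns flat clusters instead of a dendrogram and is not a replacement for the MATLAB `linkage` function.

```python
def complete_linkage(D, n_clusters):
    """Naive agglomerative clustering with complete linkage on a full
    distance matrix D; stops when n_clusters groups remain."""
    clusters = [[i] for i in range(len(D))]
    while len(clusters) > n_clusters:
        best = None
        for a in range(len(clusters)):
            for b in range(a + 1, len(clusters)):
                # complete linkage: distance of the two farthest members
                d = max(D[i][j] for i in clusters[a] for j in clusters[b])
                if best is None or d < best[0]:
                    best = (d, a, b)
        _, a, b = best
        clusters[a] = clusters[a] + clusters[b]  # merge the closest pair
        del clusters[b]
    return [sorted(c) for c in clusters]

# Hypothetical document similarities (ones on the diagonal).
L_sim = [
    [1.0, 0.9, 0.2, 0.1],
    [0.9, 1.0, 0.3, 0.2],
    [0.2, 0.3, 1.0, 0.8],
    [0.1, 0.2, 0.8, 1.0],
]
CK = [[1.0 - s for s in row] for row in L_sim]  # distance = 1 - similarity
groups = complete_linkage(CK, 2)
print(groups)
```

Complete linkage merges two groups only if even their most dissimilar members are close, which tends to produce compact groups of documents.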

The MATLAB code of the multidimensional scaling-based visualization of the similarities of the countries is the following:

| %% Similarity-based 2D visualization of the documents |

| figure (4) |

| clf |

| Y = mdscale(L_sim,2); %,'Start','random','criterion','sammon' |

| plot(Y(:,1),Y(:,2),'w.') |

| hold on |

| text(Y(:,1),Y(:,2),listcountries,'Fontsize',8); |

Conclusions

The multilayer network-based comparative document analysis (MUNCoDA) method has been developed to standardize and analyze the valuable but inconsistently structured textual information of documents dealing with the same subject area. The proposed method creates a multiplex network based on the informative word pairs of the documents, in which the edges represent the relevant relationships and can be weighted based on the information measures used in text mining. The networks can be analyzed on their own (per document), so that the relationships between topics and word pairs can be identified and visualized. Words can be compared based on their node similarity and centrality measures. In the multiplex network, relationships can be classified based on edge overlap and layer similarity measures. Document exploration is based on an automated KNIME workflow and MATLAB/Octave code that calculates the informative word pairs, generates the network and evaluates the similarities of the words and the documents.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

We acknowledge the financial support of Széchenyi 2020 under the GINOP-2.3.2-15-2016-00016.

References

- 1. Talib R., Hanif M.K., Ayesha S., Fatima F. Text mining: techniques, applications and issues. International Journal of Advanced Computer Science and Applications. 2016;7(11):414–418.

- 2. Ren X., Lv Y., Wang K., Han J. Comparative document analysis for large text corpora. Proceedings of the Tenth ACM International Conference on Web Search and Data Mining. 2017; pp. 325–334.

- 3. Wan X., Yang J. Multi-document summarization using cluster-based link analysis. Proceedings of the 31st Annual International ACM SIGIR Conference on Research and Development in Information Retrieval. 2008; pp. 299–306.

- 4. Resnik P. Using information content to evaluate semantic similarity in a taxonomy. arXiv preprint cmp-lg/9511007. 1995; pp. 1–6.

- 5. Bollegala D., Matsuo Y., Ishizuka M. Measuring semantic similarity between words using web search engines. WWW. 2007;7(2007):757–766.

- 6. Drieger P. Semantic network analysis as a method for visual text analytics. Procedia - Social and Behavioral Sciences. 2013;79:4–17.

- 7. Shi F., Chen L., Han J., Childs P. A data-driven text mining and semantic network analysis for design information retrieval. Journal of Mechanical Design. 2017;139(11):111402–111416.

- 8. Martin M.K., Pfeffer J., Carley K.M. Network text analysis of conceptual overlap in interviews, newspaper articles and keywords. Social Network Analysis and Mining. 2013;3(4):1165–1177.

- 9. Madani F. ‘Technology Mining’ bibliometrics analysis: applying network analysis and cluster analysis. Scientometrics. 2015;105(1):323–335.

- 10. Gadár L., Abonyi J. Frequent pattern mining in multidimensional organizational networks. Scientific Reports. 2019;9(1):1–12. doi: 10.1038/s41598-019-39705-1.

- 11. Feldman R., Sanger J. The text mining handbook: advanced approaches in analyzing unstructured data. Cambridge University Press; New York, USA: 2007.

- 12. Boccaletti S., Bianconi G., Criado R., Del Genio C.I., Gómez-Gardeñes J., Romance M., Sendiña-Nadal I., Wang Z., Zanin M. The structure and dynamics of multilayer networks. Physics Reports. 2014;544(1):1–122. doi: 10.1016/j.physrep.2014.07.001.

- 13. Erdélyi M., Abonyi J. Node similarity-based graph clustering and visualization. 7th International Symposium of Hungarian Researchers on Computational Intelligence. 2006; pp. 483–494.

- 14. Seber G.A. Multivariate observations. Vol. 252. John Wiley & Sons; 2009.

- 15. Sustainable Development Goals Knowledge Platform, online source: https://sustainabledevelopment.un.org/memberstates, accessed: 31 March 2020.

- 16. Sebestyén V., Domokos E., Abonyi J. Focal points for sustainable development strategies - text mining based comparative analysis of voluntary national reviews. Journal of Environmental Management. 2020;236. doi: 10.1016/j.jenvman.2020.110414.