Abstract

Clinical dashboards summarize indicators of high-volume patient data in a concise, user-friendly visual format. There are few studies of the use of dashboards to improve professional practice in anesthesiology. The objective of the present study was to describe the user-centered development, implementation and preliminary evaluation of clinical dashboards dealing with anesthesia unit management and quality assessment in a French university medical center. User needs and technical requirements were identified in end user interviews and then synthesized. Several representations were then developed (according to good visualization practice) and submitted to end users for appraisal. Lastly, dashboards were implemented and made accessible for everyday use via the medical center’s network. After a period of use, end user feedback on the dashboard platform was collected as a system usability score (range 0 to 100). Seventeen themes (corresponding to 29 questions and 42 indicators) were identified. After prioritization and feasibility assessment, 10 dashboards were ultimately implemented and deployed. The dashboards variously addressed the unit’s overall activity, compliance with guidelines on intraoperative hemodynamics, ventilation and monitoring, and documentation of the anesthesia procedure. The mean (standard deviation) system usability score was 82.6 (11.5), which corresponded to excellent usability. We developed clinical dashboards for a university medical center’s anesthesia units. The dashboards’ deployment was well received by the center’s anesthesiologists. The dashboards’ impact on activity and practice after several months of use will now have to be assessed.

Electronic supplementary material

The online version of this article (10.1007/s10877-020-00522-x) contains supplementary material, which is available to authorized users.

Keywords: Anesthesia, Dashboard, Data reuse, Data visualization

Introduction

Visual analytics is “the science of analytical reasoning facilitated by interactive visual interfaces” [1]. When applied to data, visual analytics techniques produce dashboards, among other visual analysis tools [2]. A dashboard is defined as a single-screen visual representation of data from several sources. Graphical displays and tables are used to display qualitative and quantitative indicators. The most relevant information on a dashboard has to be assimilated by managers in a single glance, with a view to improving the decision-making process [3, 4]. Over the last 10 years, dashboard technologies have been deployed widely in the field of healthcare [5]. Clinical dashboards enable easy access to several sources of data on a large number of patients, after aggregation and synthesis into concise, usable indicators. Furthermore, clinical dashboards are intended to provide clinicians with feedback on their practices and thus enable improvements in the quality of patient care [6, 7].

Healthcare organizations have introduced dashboards for various purposes, such as monitoring health system performance [8], reducing medication errors and thus optimizing treatment [9, 10], improving decision-making in the Emergency Department [11–13], reducing the incidence of infections [14–16] and improving the quality of care in maternity units [17]. The end users may be physicians [8, 16, 18], pharmacists [9] or nurses [5, 12]. The use of clinical dashboards was reportedly associated with shorter report turnaround times [18] and a lower incidence of ventilator-associated pneumonia [14].

The data collected by an anesthesia information management system (AIMS) are mainly used to improve the overall documentation of activities and procedures or to monitor specific activities (such as blood transfusion or compliance with antibiotic administration protocols) [19–23]. Although the importance of improving intraoperative practices (such as mechanical ventilation, blood pressure control, and anesthesia depth monitoring) has been emphasized in recent years, there are still very few reports on the use of visual analytic tools that could help anesthesiologists monitor and improve their professional practice. Nelson et al. have reported on the implementation of dashboards to support clinical consistency in medications and airway management for children receiving radiation therapy [24]. Other researchers have reported the use of anesthesia dashboards to reduce discrepancies in controlled substance documentation in the operating room [25] or as an audit tool for obstetric anesthesia and pediatric anesthesia practice [26, 27].

It is widely accepted that end users must be involved throughout the design process (from the earliest conceptual steps to the final evaluation), so that a technology is usable enough for easy, correct, safe use and thus achieves its intended clinical and/or organizational impact [28]. At Lille University Medical Center (Lille, France), more than 65,000 anesthesia procedures are performed each year by 15 specialist surgical units (e.g. heart and lung surgery, obstetrics, orthopedics, pediatric surgery, etc.). The Medical Center’s Department of Anesthesia is supported by a data warehouse [29–31] fed with data from the AIMS and a billing application (diagnoses, medical procedures, hospital stays, death, etc.). The data warehouse also frequently supplies data for retrospective clinical studies. In an earlier survey, we had found that our medical center’s anesthesiologists were keen to introduce dashboards for clinical research, the evaluation of professional practice, and organizational management [32]. The objective of the present study was to describe the user-centered development, implementation and preliminary evaluation of clinical dashboards dealing with unit management and quality assessment in the anesthesia units at Lille University Medical Center.

Materials and methods

In a first step (“end user needs”), we met potential end users and conducted semi-structured interviews to define the end goal. We used the interview material to identify and synthesize issues, and thus identify indicators (variables, measurements, and filters). Each indicator was associated with one or more dashboards. In a second step (“prototyping”), we developed a number of potential solutions by applying good visualization practice relevant to the tool under development (including simple representations, consistent layouts, labels, and date formatting) [33–35] and presented them to end users for appraisal. In the third and last step (“deployment and preliminary evaluation”), the dashboards were implemented and made accessible to end users for everyday use. After a period of use, user feedback was collected and analyzed.

End user needs

In order to record end user needs, two investigators conducted semi-structured interviews together. The semi-structured interviews were based on a grid that enabled the investigators to explore the issues of interest, the currently available key indicators, and the indicators’ availability, representations and limitations ("Appendix 1").

The interviews were conducted in the Department of Anesthesia between March and May 2019. The interviews were audio-recorded. We contacted physicians from all 15 anesthesia units and met the respondents or other physicians recommended by the respondents.

Similar themes and questions that had been expressed in different ways by the participants were grouped together under a single topic. The themes were prioritized by the frequency of reporting. For each theme, we checked on the availability of the corresponding data in the hospital’s data warehouse or the technical feasibility of retrieving additional data from the hospital’s main information system. Based on the clinicians’ feedback and the scientific literature, we selected key indicators for each topic.

Prototyping

Data were retrieved from an anesthesia data warehouse [29, 30] developed at Lille University Medical Center. The warehouse contains pre-operative and intraoperative data from the AIMS and post-operative data from a billing application.

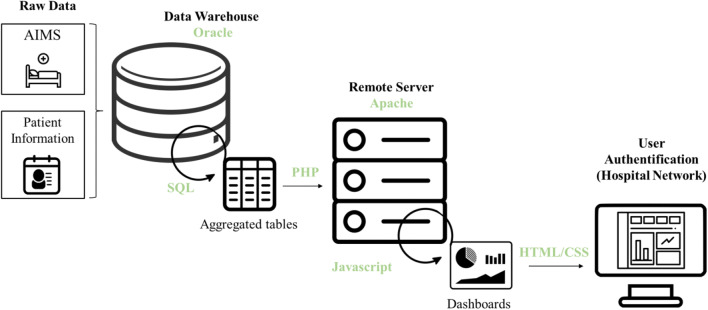

The web interface was implemented using HTML, CSS and JavaScript, while PHP and Oracle were used on the server side. The visualization of the dashboards was rendered using Chart.js and D3.js libraries [36]. The application was implemented on an Apache web server [37] running on a secure, private virtual server (Windows Server 2012 R2 Datacenter Edition). The server could be accessed over the hospital’s private network after user authentication. The clinical dashboards were updated every trimester. The web application’s architecture is shown schematically in Fig. 1.

Fig. 1.

Raw data from the AIMS and billing software were integrated in a data warehouse. An aggregation step produced suitable aggregated tables for each dashboard. The application was hosted on the hospital’s web server and could be accessed over the hospital network after user authentication

Each dashboard corresponded to a single theme and presented the theme’s key indicators. To ensure that the indicators’ visual representations were well understood, we developed different versions of each indicator. The clinicians were invited to compare the versions, select their preferred version, and explain how they understood it. We selected the best-liked version of each indicator. For each dashboard, we developed several templates based on good visualization recommendations [33–35] with, for instance, clear labelling, consistent positioning of buttons and features across the dashboards, and with a global arrangement of the dashboard that respects the sequence of cognitive tasks involved (first select and filter at the top and on the left of the dashboard, and then read in the main part of the screen). Then, we tested different layouts, formats and styles (colors, text format, etc.) with the aim of offering a concise, precise, clear representation. Each template was submitted to the users for appraisal, and the best-liked template was selected for further use.

The data were aggregated in advance on the server-side, in order to reduce client-side calculation times and avoid the sharing of non-aggregated data. All indicators were computed beforehand for each combination of dimensions. Hence, when the application was queried, the indicators were displayed immediately.
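The pre-aggregation step described above can be illustrated with a minimal Python sketch. It is not the authors' implementation (which used PHP and Oracle on the server side); the field names, the `ALL` marker and the sample rows are hypothetical and serve only to show how indicators can be computed once for every combination of dimensions and then looked up without touching non-aggregated data.

```python
from collections import defaultdict
from itertools import product

# Hypothetical procedure-level rows; field names and values are illustrative only.
procedures = [
    {"unit": "obstetrics", "year": 2018, "low_map": True},
    {"unit": "obstetrics", "year": 2018, "low_map": False},
    {"unit": "obstetrics", "year": 2019, "low_map": False},
    {"unit": "pediatrics", "year": 2019, "low_map": True},
]

ALL = "all"  # marker meaning "no filter on this dimension"


def preaggregate(rows, dimensions, flag):
    """Count procedures and flagged procedures for every combination of
    dimension values, including the 'all' roll-up of each dimension."""
    counts = defaultdict(lambda: {"n": 0, "n_flagged": 0})
    for row in rows:
        # Each row contributes to its own value and to the roll-up of every dimension.
        options = [(row[d], ALL) for d in dimensions]
        for key in product(*options):
            counts[key]["n"] += 1
            counts[key]["n_flagged"] += int(row[flag])
    return dict(counts)


def lookup(table, unit=ALL, year=ALL):
    """Answer a dashboard query from the precomputed table only."""
    cell = table.get((unit, year), {"n": 0, "n_flagged": 0})
    proportion = cell["n_flagged"] / cell["n"] if cell["n"] else None
    return cell["n"], proportion


table = preaggregate(procedures, ["unit", "year"], "low_map")
print(lookup(table))                     # all units, all years
print(lookup(table, unit="obstetrics"))  # one unit, all years
```

In this sketch the client only ever receives counts and proportions, which mirrors the stated aim of avoiding the sharing of non-aggregated data; the trade-off is that the aggregated table grows with the number of dimension combinations.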

Deployment and preliminary evaluation

Anesthesiologists who were involved in the development process were informed by e-mail of the dashboards’ availability, content, and instructions for use. Furthermore, they were asked to disseminate this information to any other potentially concerned colleagues.

Two months after the dashboards had been implemented, we interviewed (i) the anesthesiologists involved in the dashboard development and (ii) other anesthesiologists aware of the project. The interviewees were asked to use the dashboard in front of the investigator. Afterwards, we asked the interviewees to rate the dashboard’s ease of use, its accessibility, its practical suitability, and whether it matched their needs. At the end of the interview, the interviewees were asked to complete the system usability scale (SUS) questionnaire [38, 39]. The 10-item SUS questionnaire provides an overview of subjective assessments of usability. Each item is rated on a Likert scale ranging from 1 (“strongly disagree”) to 5 (“strongly agree”). The final scores for items 1, 3, 5, 7, and 9 were equal to the respondent’s Likert scale score minus 1, whereas the final scores for items 2, 4, 6, 8, and 10 were equal to 5 minus the respondent’s Likert scale score. To obtain the overall SUS score (range: 0 to 100), the item scores were added and then multiplied by 2.5; the higher the SUS score, the more usable the dashboard.
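The scoring rule described above can be written as a short Python function. This is an illustrative sketch rather than the code used in the study; the function name and the example ratings are made up.

```python
def sus_score(responses):
    """Return the overall SUS score (0 to 100) for one respondent.

    `responses` holds the ten Likert ratings (1 to 5) in item order.
    """
    if len(responses) != 10:
        raise ValueError("the SUS questionnaire has exactly 10 items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each rating must be an integer from 1 to 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += rating - 1  # items 1, 3, 5, 7, 9: rating minus 1
        else:
            total += 5 - rating  # items 2, 4, 6, 8, 10: 5 minus rating
    return total * 2.5


example = [5, 1, 5, 2, 4, 2, 4, 1, 4, 3]  # made-up ratings for one respondent
print(sus_score(example))
```

Each item contributes between 0 and 4 points, so the sum of the ten items ranges from 0 to 40 and the factor of 2.5 rescales it to 0 to 100.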

Results

End user needs

Of the 21 anesthesiologists invited to take part in the design process, 12 agreed and were interviewed. These anesthesiologists came from eight of the department’s anesthesia units. The participants’ level of experience also varied; 2 had been in practice for less than 5 years, 5 had been in practice for 5 to 10 years, and 5 had been in practice for over 10 years. Three of the anesthesiologists were unit managers. The mean (SD) interview duration was 45 (12) min.

We identified 17 themes and 29 related issues in the context of unit management and quality assessment. Thirty-nine indicators/metrics were then identified. Some indicators were common to several issues (e.g. the number of procedures), although the exact representation could differ from one theme to another. Each type of indicator had to be computed for several time periods (the week, the month, and the year) and for each anesthesiology unit (heart and lung surgery, obstetrics, pediatrics, etc.). These themes, questions and indicators are detailed in Table 1. Measures and sample values are also available for each indicator in Online Resource ESM1.

Table 1.

A synthesis of user needs: identified themes, issues and related indicators, according to the context

| Context | Dashboard (theme) | Questions | Indicators |
|---|---|---|---|
| Unit management | Overall activity of the unit<sup>a</sup> | How is the level of activity changing?<br>How is the mortality rate changing?<br>How is the length of stay changing? | The number of procedures<br>Mortality<br>The length of stay |
| | Patient flow in the unit<sup>a</sup> | What is the patient volume in the hospital’s various units, at each step in the hospital stay?<br>What is the patient pathway (i.e. a transfer from one unit to another) at each step in the hospital stay? | The number of patients in the hospital’s various units on admission, before the operation, immediately after operation and before discharge<br>The patient’s final vital status (dead/alive) |
| | Room occupancy rate | What is the operating room’s level of occupancy? | The operating time<br>The operating room opening hours<br>The non-occupancy rate during opening hours |
| | Human resources | Are operations always performed with nurse anesthetists? | The proportion of operations lacking a nurse anesthetist |
| | Organization of the operating room | What is the waiting time between induction and the surgeon’s arrival? | The time interval between induction and surgeon’s arrival |
| Quality assessment | Compliance with hemodynamic guidelines<sup>a</sup> | What is the proportion of operations with low mean arterial pressure?<br>What is the proportion of operations with oxygen desaturation?<br>What is the proportion of operations with bradycardia or tachycardia? | The proportion of operations during which the mean arterial pressure was below 65 mmHg for at least 15 min<br>The proportion of operations during which the SpO2 was below 90% for at least 15 min<br>The proportion of operations during which the heart rate was below 60 bpm or over 120 bpm for at least 15 min |
| | Compliance with ventilatory guidelines<sup>a</sup> | What is the trend for use of the tidal volume?<br>What is the trend for use of the positive end-expiratory pressure (PEEP)?<br>What is the trend for use of the plateau pressure (PPlat)?<br>What is the trend for use of the driving pressure?<br>What proportion of procedures complies with ventilation guidelines? | The mean tidal volume by year and by sex<br>The mean tidal volume based on the ideal body weight by year and by sex<br>The proportion of patients receiving more than 8 ml/kg, based on ideal body weight<br>The proportion of patients with a mean PEEP outside the range 5–10 cm H2O<br>The mean PEEP by year and by sex<br>The proportion of patients with a PPlat over 30 cm H2O<br>The mean PPlat by year and by sex<br>The proportion of patients with a driving pressure over 15 cm H2O<br>The mean driving pressure by year and by sex |
| | Assessment of post-operative complications<sup>a</sup> | What proportion of patients is transferred to intensive care?<br>What is the post-operative mortality rate?<br>What are the changes over time in transfer to intensive care and the post-operative mortality rate? | Number of transfers to intensive care<br>Number of deaths in intensive care<br>Transfer rate to intensive care<br>Mortality rate during the hospital stay |
| | Documentation of the anesthesia procedure<sup>a</sup> | Is the information about the anesthesia procedure well documented in the anesthesia records? | The proportion of records with documented events: recruitment maneuvers, the patient’s position, checks on pressure points, analgesia evaluation, and time trends in the four measurements |
| | Consumption of neuromuscular blocking agents (NMBAs)<sup>a</sup> | Which NMBAs are used? | The number of operations with administration of succinylcholine, atracurium, cisatracurium, mivacurium, rocuronium or vecuronium |
| | Consumption of opioids and local anesthetics during childbirth<sup>a</sup> | Which opioids and local anesthetics are used for epidural procedures? | The number of deliveries with administration of lidocaine, sufentanil, ropivacaine, levobupivacaine, bupivacaine, or epinephrine |
| | Airway management in the post-anesthesia care unit<sup>a</sup> | What is the practice for tracheal extubation? | The number of tracheal extubations in the post-anesthesia care unit |
| | Fluid administration and blood transfusion | What types of fluid (crystalloids or synthetic colloids) are administered?<br>What quantities of fluid are administered? | The number of operations with administration of Ringer’s solution, Ringer’s lactate, Ringer’s acetate, hydroxyethyl starches, and/or gelatins<br>The quantity of administered solutions |
| | Type of anesthesia<sup>a</sup> | What type of anesthesia (general anesthesia, regional anesthesia, or sedation) is applied? | The number of operations under general anesthesia, sedation, regional anesthesia, or a combination of these, respectively |
| | Transfusion | What proportion of patients receive a blood transfusion? | The proportion of patients transfused in the operating room<br>The proportion of patients transfused in the post-operative care units |
| | Antibiotic prophylaxis | What proportion of procedures complies with the guidelines on antibiotic prophylaxis? | The time interval between antibiotic administration and incision<br>The proportion of operations that complies with the guidelines |
| | Postoperative analgesic use | Which analgesia regimens are applied after surgery? | The number of patients given analgesics<br>The duration of the course of analgesics |

<sup>a</sup> Indicates the themes that were subsequently implemented
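Several of the quality indicators in Table 1 share the same form: the proportion of operations during which a monitored variable stayed beyond a threshold for at least 15 min. A minimal Python sketch for the mean arterial pressure indicator is given below. It rests on assumptions that the text does not state: one sample per minute, and a choice between cumulative and consecutive time below the threshold (exposed here as the hypothetical `consecutive` parameter). The traces are made up.

```python
def minutes_below(values, threshold, consecutive=False):
    """Minutes spent below `threshold`, assuming one value per minute.

    With consecutive=True, return the longest uninterrupted run instead
    of the cumulative total. Missing samples (None) break a run.
    """
    total = longest = run = 0
    for v in values:
        if v is not None and v < threshold:
            total += 1
            run += 1
            longest = max(longest, run)
        else:
            run = 0
    return longest if consecutive else total


def proportion_flagged(operations, threshold=65, min_minutes=15, consecutive=False):
    """Share of operations with at least `min_minutes` below `threshold`."""
    if not operations:
        return None
    flagged = sum(
        minutes_below(series, threshold, consecutive) >= min_minutes
        for series in operations
    )
    return flagged / len(operations)


# Made-up mean arterial pressure traces (mmHg), one value per minute.
ops = [
    [80] * 30 + [60] * 20 + [75] * 10,            # 20 consecutive minutes below 65
    [60] * 10 + [70] * 5 + [62] * 10 + [80] * 5,  # 20 cumulative, 10 consecutive
    [85] * 60,                                    # never below 65
]
print(proportion_flagged(ops))                    # cumulative definition
print(proportion_flagged(ops, consecutive=True))  # consecutive definition
```

The second trace shows why the definition matters: it is flagged under the cumulative reading but not under the consecutive one, so the choice should be documented alongside the indicator.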

The following functional requirements were also identified: sending the dashboard by e-mail, printing it (for display in the department), easy but secure access over the hospital network, filters on categorical variables (such as ASA status) and time periods (such as a personalized date filter), and the ability to switch the indicators from a number to a percentage.

We also identified five technical requirements. The solution had to be free of charge, easily maintainable by future developers, easily updated, with user-friendly graphics, and easy to connect to the hospital information system.

Prototyping

Three of the identified themes were excluded due to a lack of data. Of the 14 remaining themes, ten were developed as dashboards; these corresponded to the themes most frequently mentioned during the interviews and whose implementation was judged to be feasible (indicated by an a in Table 1).

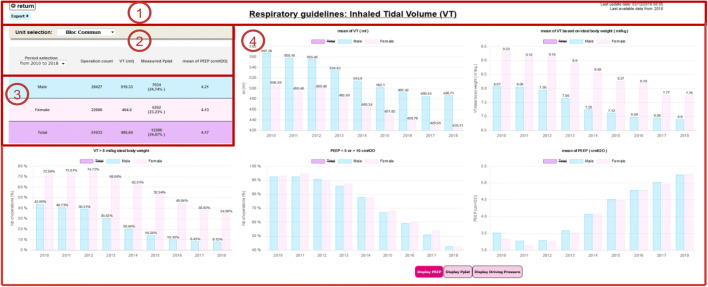

Each dashboard had the same layout and graph arrangement, and was composed of four sections—giving a clear, uncluttered interface. Section 1 comprised the dashboard’s title, information on data availability, and a print button. Section 2 included a filter for selecting the surgical unit. The section’s time scale could be switched to display information from the previous 3, 6 or 12 months, the whole period from 2010 onwards, or a custom date range. Section 3 displayed a summary table. Section 4 was the main part of the dashboard, and contained all the charts.
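The time-scale options in Section 2 can be thought of as a mapping from a user choice to a date window. The Python sketch below is only an illustration under stated assumptions: the choice labels (`3`, `6`, `12`, `"all"`, `"custom"`), the helper names and the 1 January 2010 start date are hypothetical, and the day of the month is clamped to 28 to keep the month arithmetic simple.

```python
from datetime import date

DATA_START = date(2010, 1, 1)  # assumption: "from 2010 onwards" means 1 January 2010


def months_before(day, months):
    """Return the date `months` calendar months before `day` (day clamped to 28)."""
    index = day.year * 12 + (day.month - 1) - months
    return date(index // 12, index % 12 + 1, min(day.day, 28))


def resolve_window(choice, end, custom=None):
    """Map a time-scale choice to an inclusive (start, end) date pair."""
    if choice in (3, 6, 12):
        return months_before(end, choice), end
    if choice == "all":
        return DATA_START, end
    if choice == "custom":
        start, stop = custom
        if start > stop:
            raise ValueError("custom range start must not be after its end")
        return max(start, DATA_START), stop
    raise ValueError(f"unknown time-scale choice: {choice!r}")


last_update = date(2019, 9, 1)  # illustrative reference date
print(resolve_window(3, last_update))
print(resolve_window("all", last_update))
```

Passing the reference date explicitly (rather than reading the system clock) lets the window end at the date of the last data update, which may lag behind the current day.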

Deployment and preliminary evaluation

The dashboards went online on September 1st, 2019. Twenty end users (4 residents, 4 nurse anesthetists, and 12 anesthesiologists, including the head of the department and a unit manager) from nine anesthesia units were interviewed. Twelve users (60%) had not taken part in the development step and so were not familiar with the dashboards. Eight of the interviewees had been in practice for less than 5 years, 6 had been in practice for 5 to 10 years, and 6 had been in practice for over 10 years.

The interviewees had a good opinion of the dashboards, which were considered to be highly usable. The mean (SD) overall SUS score was 82.6 (11.5). The results for each item are presented in Table 2.

Table 2.

System usability scale scores

| The system usability scale items | Mean (SD) score |
|---|---|
| Q1: I think that I would like to use this system frequently | 4.6 (0.7) |
| Q2: I found the system unnecessarily complex | 1.4 (0.5) |
| Q3: I thought the system was easy to use | 4.7 (0.5) |
| Q4: I think that I would need the support of a technical person to be able to use this system | 2.1 (1.3) |
| Q5: I found the various functions in this system were well integrated | 4.5 (0.8) |
| Q6: I thought there was too much inconsistency in this system | 1.6 (0.7) |
| Q7: I would imagine that most people would learn to use this system very quickly | 4.3 (0.6) |
| Q8: I found the system very cumbersome to use | 1.5 (0.8) |
| Q9: I felt very confident using the system | 4.2 (1.0) |
| Q10: I needed to learn a lot of things before I could get going with this system | 2.7 (1.5) |
| Total | 82.6 (11.5) |
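As a consistency check on Table 2 (an illustration, not part of the reported analysis): because the overall SUS score is a linear function of the item ratings, the mean overall score should equal the score computed from the item means. Using the rounded item means from the table:

```latex
\begin{align*}
\text{odd items:}\quad & (4.6-1)+(4.7-1)+(4.5-1)+(4.3-1)+(4.2-1) = 17.3\\
\text{even items:}\quad & (5-1.4)+(5-2.1)+(5-1.6)+(5-1.5)+(5-2.7) = 15.7\\
\text{overall:}\quad & 2.5 \times (17.3 + 15.7) = 82.5
\end{align*}
```

The result (82.5) agrees with the reported mean of 82.6 to within the rounding of the item means. The standard deviation of the overall score cannot be recovered from the item-level values in this way.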

Overall, the dashboards were considered to be user-friendly and easy to read, and the information could be accessed rapidly. The end users considered that the dashboards constituted a good way to monitor changes in practice from an individual perspective. Moreover, the interviewees considered that dashboards could be used to monitor the impact of a change in the unit’s quality improvement policy (e.g. documentation of the anesthesia procedure) and compliance with the current guidelines (e.g. on ventilation).

Despite the very positive feedback, several opportunities for improvement were identified. Firstly, the end users wanted the home page to be more attractive. Secondly, the computation of some indicators needed to be more clearly explained. Lastly, some interviewees would have liked to have more unit-specific dashboards (e.g. for the assessment of specialist surgical procedures).

Lastly, the interviewees reported that their newly acquired familiarity with dashboards was likely to prompt them to come up with more themes that could be usefully addressed by these systems.

Discussion

Here, we described the user-centered development, implementation and preliminary assessment of clinical dashboards in the Department of Anesthesia at Lille University Medical Center. Ten dashboards were developed and encompassed 39 indicators. The anesthesiologists who had used the dashboards for 2 months gave very positive feedback on the system, including good usability and high perceived usefulness.

One strength of the present study was the involvement of end users throughout the development process, with the objective of meeting their needs as closely as possible. Judging from the excellent SUS scores, this objective was met.

To the best of our knowledge, there are very few publications on the development of clinical dashboards for managing units and improving quality in anesthesiology [24–27]. By developing clinical dashboards for mechanical ventilation and blood pressure control, the present research is part of Lille University Medical Center’s current effort to improve intraoperative management. Specifically, in reference to current guidelines and recent literature, key indicators identified by end users included the incidence of hypotension [40], ventilatory settings and related monitored variables (particularly driving pressure) [41], fluid administration [42] and blood transfusion [43]. Interestingly, there is significant potential for improvement in compliance with current recommendations of good practice, as they are based on relatively recent studies. Figure 2 shows that the practice of using small tidal volumes and PEEP, which is the basis of protective lung ventilation [41], has consistently increased over the past few years in our hospital. Thus, the dashboards will allow each anesthesia team to monitor its own practice over time. Moreover, by providing data on postoperative complications, the dashboards will make it possible to directly assess the impact of any practice change on postoperative outcomes. Finally, since the impact of practice changes likely depends on patient and/or surgical risk factors [44], our dashboards will allow for prioritization of different goals across operating rooms [for example, the primary focus will be on ventilatory endpoints in abdominal or thoracic surgery (high risk of respiratory complications), and instead on mean arterial pressure in neurosurgery (low blood pressure can promote cerebral ischemia and increase intracranial pressure)].

Fig. 2.

The ventilation management dashboard. Section 1 features the dashboard’s name, information about data availability, and print button. Section 2 offers settings for selecting the medical unit and the time period displayed. Section 3 is a summary table. Section 4 is the main part of the dashboard, with all the charts

It is important to note that the dashboard system is not embedded in the AIMS; if the center ever changes its AIMS, we will still be able to produce dashboards with data from the data warehouse. We followed a user-centered process when developing this tool, in order to guarantee usability and ensure that the information displayed did not lead to misunderstandings or interpretation errors. This approach is in line with current guidelines on developing health information technology and medical devices [45].

Although the present development process involved anesthesiologists, this tool could also be used by residents, nurses, surgeons, and healthcare managers. During the design process, the end users’ lack of knowledge about this type of technology limited the number of proposals; indeed, the clinical staff were not used to having access to aggregated data (except for the individual patient follow-up). Moreover, they were not familiar with designing graphics themselves. After the dashboards had been released, the staff became more familiar with the tool and thus were more likely to suggest new themes that could be addressed with the help of dashboards.

The next step of this project is to finalize the tool by addressing the problems encountered and reported by users (see “Deployment and preliminary evaluation” in the Results). To this end, we will use a set of usability heuristics adapted to the visualization of dashboards [46] to evaluate and improve their usability. In addition, the acceptability of the dashboards to clinicians and unit heads will be assessed through a questionnaire inspired by the unified theory of acceptance and use of technology [47]. In subsequent work, we intend to assess the dashboards’ medium-term acceptability with anesthetists and other healthcare professionals. After a few months of use, a further round of development might be useful for adapting the existing dashboards and generating new ones that address novel themes and issues and are applicable to other medical specialties. The integration of new data sources could also be considered. On the clinical front, we will have to evaluate the impact of dashboards on activity, practices and endpoints, such as patient outcomes [48]. In this sense, a dashboard could be considered to be an audit tool [49]; it will also be important to establish how dashboards might (i) help clinicians to become more aware of their practice and habits, (ii) change the clinicians’ representations of their activities, and (iii) assist with the decision-making process. For example, the discovery and appropriation of indicators by clinicians will help them to define targets, according to the context of their unit and the recommendations of learned societies.

Acknowledgements

We thank Pascal Vivier for delivery of the server. We also thank Anaïs Demay for conducting the preliminary survey. Lastly, we thank the staff at Lille University Medical Center’s anesthesia units for making themselves available during the dashboard design process.

Appendix 1: The interview grid

Are there any issues about the quality of care or unit management that need to be addressed?

Can you already find these answers? If so, how do you proceed?

Is this a one-off issue (i.e. it only happens once and does not require follow-up) or a recurring issue (i.e. it comes up regularly and requires follow-up)?

Are there already indicators for these issues or are you still in an exploratory phase, i.e. you are not sure which indicator(s) to use?

If displayed on a dashboard, which data and information would enable you to address the issue? What information would you need to answer the problem in mind?

On the dashboards, which data format would be most meaningful for you? Graphs, tables, numbers, or other formats? You can give more than one answer, if need be.

How often should the displayed data be updated?

Do you know of other staff who might be interested in these dashboards? If so, who? And why do you think they would be interested?

Do you think you would see any benefits for your practice if a dashboard could be developed to address this issue? Or, on the contrary, would a dashboard make your practice more difficult or riskier?

Overall, do you see any benefits in creating dashboards for the issues that you are dealing with? What disadvantages or difficulties must be overcome?

Compliance with ethical standards

Conflict of interest

The authors declare no conflicts of interest with regard to the present research.

Ethical approval

In accordance with French law, as the study did not involve patients, it was not submitted to an ethics committee. The data warehouse has been declared to the Commission Nationale de l'Informatique et des Libertés (CNIL, the French data protection authority; reference number DEC20120228-00).

Informed consent

All the professionals interviewed gave their consent to participate in the study.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Thomas JJ, Cook KA. Illuminating the path: the research and development agenda for visual analytics. 1 Jan 2005 (cited 17 Mar 2020). Available from: https://www.hsdl.org/?abstract&did=.

- 2.Wong PC, Thomas J. Visual analytics. IEEE Comput Graph Appl. 2004;24(5):20–21. doi: 10.1109/mcg.2004.39. [DOI] [PubMed] [Google Scholar]

- 3.Few S. Information dashboard design : the effective visual communication of data/S. Few. Cambridge: O’Reilly Media; 2006. [Google Scholar]

- 4.Vallurupalli V, Bose I. Business intelligence for performance measurement: a case based analysis. Decis Support Syst. 2018;111:72–85. [Google Scholar]

- 5.Wilbanks BA, Langford PA. A review of dashboards for data analytics in nursing. Comput Inform Nurs. 2014;32(11):545–549. doi: 10.1097/CIN.0000000000000106. [DOI] [PubMed] [Google Scholar]

- 6.Daley K, Richardson J, James I, Chambers A, Corbett D. Clinical dashboard: use in older adult mental health wards. Psychiatrist. 2013;37(3):85–88. [Google Scholar]

- 7.Dowding D, Randell R, Gardner P, Fitzpatrick G, Dykes P, Favela J, et al. Dashboards for improving patient care: review of the literature. Int J Med Inf. 2015;84(2):87–100. doi: 10.1016/j.ijmedinf.2014.10.001. [DOI] [PubMed] [Google Scholar]

- 8.Karami M, Safdari R. From information management to information visualization: development of radiology dashboards. Appl Clin Inform. 2016;7(2):308–329. doi: 10.4338/ACI-2015-08-RA-0104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Waitman LR, Phillips IE, McCoy AB, Danciu I, Halpenny RM, Nelsen CL, et al. Adopting real-time surveillance dashboards as a component of an enterprise wide medication safety strategy. Jt Comm J Qual Patient Saf. 2011;37(7):326–332. doi: 10.1016/s1553-7250(11)37041-9.

- 10.Bakos KK, Zimmermann D, Moriconi D. Implementing the clinical dashboard at VCUHS. In: NI 2012 11th international congress on nursing informatics, 23–27 June 2012, Montreal, Canada, 2012.

- 11.Franklin A, Gantela S, Shifarraw S, Johnson TR, Robinson DJ, King BR, et al. Dashboard visualizations: supporting real-time throughput decision-making. J Biomed Inform. 2017;71:211–221. doi: 10.1016/j.jbi.2017.05.024.

- 12.Yoo J, Jung KY, Kim T, Lee T, Hwang SY, Yoon H, et al. A real-time autonomous dashboard for the emergency department: 5-year case study. JMIR mHealth uHealth. 2018;6(11):e10666. doi: 10.2196/10666.

- 13.Martin N, Bergs J, Eerdekens D, Depaire B, Verelst S. Developing an emergency department crowding dashboard: a design science approach. Int Emerg Nurs. 2018;39:68–76. doi: 10.1016/j.ienj.2017.08.001.

- 14.Zaydfudim V, Dossett LA, Starmer JM, Arbogast PG, Feurer ID, Ray WA, et al. Implementation of a real-time compliance dashboard to help reduce SICU ventilator-associated pneumonia with the ventilator bundle. Arch Surg. 2009;144(7):656–662. doi: 10.1001/archsurg.2009.117.

- 15.Linder JA, Schnipper JL, Tsurikova R, Yu DT, Volk LA, Melnikas AJ, et al. Electronic health record feedback to improve antibiotic prescribing for acute respiratory infections. Am J Manag Care. 2010;12(Suppl HIT):e311–e319.

- 16.McLaughlin N, Afsar-manesh N, Ragland V, Buxey F, Martin NA. Tracking and sustaining improvement initiatives leveraging quality dashboards to lead change in a neurosurgical department. Neurosurgery. 2014;74(3):235–244. doi: 10.1227/NEU.0000000000000265.

- 17.Patel MS, Rathi B, Tashfeen K, Yarubi MA. Development and implementation of maternity dashboard in regional hospital for quality improvement at ground level: a pilot study. Oman Med J. 2019;34(3):194–199. doi: 10.5001/omj.2019.38.

- 18.Morgan MB, Branstetter BF, Lionetti DM, Richardson JS, Chang PJ. The radiology digital dashboard: effects on report turnaround time. J Digit Imaging. 2008;21(1):50–58. doi: 10.1007/s10278-007-9008-9.

- 19.Douglas JR, Ritter MJ. Implementation of an anesthesia information management system (AIMS). Ochsner J. 2011;11(2):102–114.

- 20.Simpao AF, Tan JM, Lingappan AM, Gálvez JA, Morgan SE, Krall MA. A systematic review of near real-time and point-of-care clinical decision support in anesthesia information management systems. J Clin Monit Comput. 2017;31(5):885–894. doi: 10.1007/s10877-016-9921-x.

- 21.Gálvez JA, Ahumada L, Simpao AF, Lin EE, Bonafide CP, Choudhry D, et al. Visual analytical tool for evaluation of 10-year perioperative transfusion practice at a children’s hospital. J Am Med Inform Assoc. 2014;21(3):529–534. doi: 10.1136/amiajnl-2013-002241.

- 22.Pabalate J. The effect of electronic feedback on anesthesia providers’ timely preoperative antibiotic administration. Dissertation, p 91.

- 23.Tremper KK, Mace JJ, Gombert JM, Tremper TT, Adams JF, Bagian JP. Design of a novel multifunction decision support display for anesthesia care: AlertWatch® OR. BMC Anesthesiol. 2018;18(1):16. doi: 10.1186/s12871-018-0478-8.

- 24.Nelson O, Sturgis B, Gilbert K, Henry E, Clegg K, Tan JM, et al. A visual analytics dashboard to summarize serial anesthesia records in pediatric radiation treatment. Appl Clin Inform. 2019;10(4):563–569. doi: 10.1055/s-0039-1693712.

- 25.Dolan JE, Lonsdale H, Ahumada LM, Patel A, Samuel J, Jalali A, et al. Quality initiative using theory of change and visual analytics to improve controlled substance documentation discrepancies in the operating room. Appl Clin Inform. 2019;10(3):543–551. doi: 10.1055/s-0039-1693688.

- 26.Pandya ST, Chakravarthy K, Vemareddy A. Obstetric anaesthesia practice: dashboard as a dynamic audit tool. Indian J Anaesth. 2018;62(11):838–843. doi: 10.4103/ija.IJA_346_18.

- 27.Cravero JP, Sriswasdi P, Lekowski R, Carpino E, Blum R, Askins N, et al. Creation of an integrated outcome database for pediatric anesthesia. Pediatr Anesth. 2016;26(4):345–355. doi: 10.1111/pan.12857.

- 28.ISO 9241-210:2010. ISO. https://www.iso.org/cms/render/live/en/sites/isoorg/contents/data/standard/05/20/52075.html. Accessed 1 Dec 2019.

- 29.Lamer A, Jeanne M, Vallet B, Ditilyeu G, Delaby F, Tavernier B, et al. Development of an anesthesia data warehouse: preliminary results. IRBM. 2013;34(6):376–378.

- 30.Lamer A, Jeanne M, Ficheur G, Marcilly R. Automated data aggregation for time-series analysis: study case on anaesthesia data warehouse. Stud Health Technol Inform. 2016;221:102–106.

- 31.Lamer A, Jeanne M, Marcilly R, Kipnis E, Schiro J, Logier R, et al. Methodology to automatically detect abnormal values of vital parameters in anesthesia time-series: proposal for an adaptable algorithm. Comput Methods Programs Biomed. 2016;129:160–171. doi: 10.1016/j.cmpb.2016.01.004.

- 32.Lamer A, Demay A, Marcilly R. Data reuse through anesthesia data warehouse: searching for new use contexts. Stud Health Technol Inform. 2018;255:102–106.

- 33.Brown B, Balatsoukas P, Williams R, Sperrin M, Buchan I. Interface design recommendations for computerised clinical audit and feedback: hybrid usability evidence from a research-led system. Int J Med Inform. 2016;94:191–206. doi: 10.1016/j.ijmedinf.2016.07.010.

- 34.Hartzler AL, Chaudhuri S, Fey BC, Flum DR, Lavallee D. Integrating patient-reported outcomes into spine surgical care through visual dashboards: lessons learned from human-centered design. EGEMS (Wash DC). 2015;3(2):1133. doi: 10.13063/2327-9214.1133.

- 35.Kluger AN, DeNisi A. The effects of feedback interventions on performance: a historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol Bull. 1996;119(2):254–284.

- 36.Chart.js | open source HTML5 charts for your website. https://www.chartjs.org/. Accessed 1 Dec 2019.

- 37.Welcome! The Apache HTTP Server Project. https://apache.org/. Accessed 1 Dec 2019.

- 38.Brooke J. SUS: a quick and dirty usability scale. In: Usability evaluation in industry. London: Taylor and Francis; 1996.

- 39.Bangor A, Kortum P, Miller J. Determining what individual SUS scores mean: adding an adjective rating scale. J Usability Stud. 2009;4(3):114–123.

- 40.Sessler DI, Bloomstone JA, Aronson S, Berry C, Gan TJ, Kellum JA, et al. Perioperative Quality Initiative consensus statement on intraoperative blood pressure, risk and outcomes for elective surgery. Perioperative Quality Initiative-3 Workgroups. Br J Anaesth. 2019;122(5):563–574. doi: 10.1016/j.bja.2019.01.013.

- 41.Ruscic KJ, Grabitz SD, Rudolph MI, Eikermann M. Prevention of respiratory complications of the surgical patient: actionable plan for continued process improvement. Curr Opin Anaesthesiol. 2017;30(3):399–408. doi: 10.1097/ACO.0000000000000465.

- 42.Vallet B, Blanloeil Y, Cholley B, Orliaguet G, Pierre S, Tavernier B. Guidelines for perioperative haemodynamic optimization. Société française d’anesthésie et de réanimation. Ann Fr Anesth Reanim. 2013;32(10):e151–e158. doi: 10.1016/j.annfar.2013.09.010.

- 43.Desai N, Schofield N, Richards T. Perioperative patient blood management to improve outcomes. Anesth Analg. 2018;127(5):1211–1220. doi: 10.1213/ANE.0000000000002549.

- 44.Mathis MR, Naik BI, Freundlich RE, Shanks AM, Heung M, Kim M, et al. Preoperative risk and the association between hypotension and postoperative acute kidney injury. Anesthesiology. 2020;132(3):461–475. doi: 10.1097/ALN.0000000000003063.

- 45.Regulation (EU) 2017/745 of the European Parliament and of the Council of 5 April 2017 on medical devices, amending Directive 2001/83/EC, Regulation (EC) No 178/2002 and Regulation (EC) No 1223/2009 and repealing Council Directives 90/385/EEC and 93/42/EEC. p 175. https://eur-lex.europa.eu/legal-content/FR/TXT/?uri=OJ:L:2017:117:TOC. Accessed 1 Dec 2019.

- 46.Dowding D, Merrill JA. The development of heuristics for evaluation of dashboard visualizations. Appl Clin Inform. 2018;09(03):511–518. doi: 10.1055/s-0038-1666842.

- 47.Venkatesh V, Morris MG, Davis GB, Davis FD. User acceptance of information technology: toward a unified view. MIS Q. 2003;27(3):425–478.

- 48.Birdas TJ, Rozycki GF, Dunnington GL, Stevens L, Liali V, Schmidt CM. “Show Me the Data”: a recipe for quality improvement success in an academic surgical department. J Am Coll Surg. 2019;228(4):368–373. doi: 10.1016/j.jamcollsurg.2018.12.013.

- 49.Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, et al. Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012. doi: 10.1002/14651858.CD000259.pub3.
