Abstract

In the absence of accurate medical records, it is critical to correctly classify implant fixture systems using periapical radiographs to provide accurate diagnoses and treatments to patients or to respond to complications. The purpose of this study was to evaluate whether deep neural networks can identify four different types of implants on intraoral radiographs. In this study, images of 801 patients who underwent periapical radiographs between 2005 and 2019 at Yonsei University Dental Hospital were used. Images containing the following four types of implants were selected: Brånemark Mk TiUnite, Dentium Implantium, Straumann Bone Level, and Straumann Tissue Level. SqueezeNet, GoogLeNet, ResNet-18, MobileNet-v2, and ResNet-50 were tested to determine the optimal pre-trained network architecture. The accuracy, precision, recall, and F1 score were calculated for each network using a confusion matrix. All five models showed a test accuracy exceeding 90%. SqueezeNet and MobileNet-v2, which are small networks with less than four million parameters, showed an accuracy of approximately 96% and 97%, respectively. The results of this study confirmed that convolutional neural networks can classify the four implant fixtures with high accuracy even with a relatively small network and a small number of images. This may solve the inconveniences associated with unnecessary treatments and medical expenses caused by lack of knowledge about the exact type of implant.

Keywords: implant fixture classification, artificial intelligence, deep learning, convolutional neural networks, periapical radiographs

1. Introduction

Since Professor Brånemark’s introduction of the concept of osseointegration in the 1960s through preclinical and clinical studies, implant dentistry has developed rapidly, becoming a common treatment for tooth loss [1,2,3]. Starting from basic machined surface implants, various surface treatment methods, such as resorbable blasting and sandblasted large-grit acid-etching, have been developed, and the threads and platform shapes of implants have continued to evolve with slight improvements [4,5,6]. At present, the survival and success rates of these improved implants are very high in a wide variety of clinical situations, including systemic diseases and cases posing limitations in bone quality and volume at the implantation site [7,8,9,10]. Thus, dental implants show much better long-term stability compared to conventional fixed partial dentures or removable dental prostheses, with many studies reporting survival rates of more than 95% for dental implants [11,12].

Continued developments in this area have led to the availability of a variety of implant systems in the market in recent years [13,14,15]. Implant systems are selected and placed according to the preferences and familiarity of clinicians, as well as the masticatory force, bone quality, bone volume, and restoration space available in the patient’s tooth loss area [13,16,17,18]. Over time, some older implant types have been discontinued, while the same companies have introduced many new types that differ considerably from their existing implant fixtures. Moreover, clinicians’ preferences for implant systems change over time. Jokstad et al. [15] reported the existence of approximately 220 implant brands from 80 companies worldwide, and the number of implant brands in the market has increased since the publication of that study.

These developments are important because, as the types of implants in use have changed over time, knowledge about these implant systems and their inter-compatibilities needs to be updated for the current generation of working clinicians [19,20]. The younger generation of clinicians may lack experience with implant systems used 20 to 30 years ago, and it may be difficult for some dentists to identify newer implant systems simply by viewing the fixtures in radiographs. For this reason, it can be difficult to find a suitable replacement screw even when common complications such as screw loosening and screw fracture occur. This can cause difficulties in clinical situations and may require new prostheses to be manufactured. In some cases, an implant can no longer be maintained because a new prosthesis is not available or other complications arise, even though there is no problem with the osseointegration between the implant fixture and the surrounding alveolar bone. In the absence of other medical records, the type of implant can be determined only from radiographs, because most of the implant fixture is buried in the alveolar bone and cannot be observed in an oral examination. Thus, radiographic identification of implants is especially important to provide appropriate diagnoses and treatments to patients.

Research has also been conducted to develop and evaluate implant recognition software (IRS) via creation of a database and classification of the features of implant systems fulfilling the same functions [14]. However, the database lists the characteristics of the implants based on the information provided by the implant manufacturer in the brochure. Therefore, to identify the desired implant, the details in each of the nine drop-down menus, including implant type, thread feature, surface, and collar details, must be entered manually. Moreover, the software cannot directly analyze images.

Artificial intelligence (AI) has come to play a crucial role in healthcare in recent times. In particular, convolutional neural networks (CNNs) have performed well in detecting breast cancer, skin diseases, and diabetic retinopathy from medical images [21,22,23]. The CNN is a core algorithm of current deep learning, which has driven AI development in recent years. CNNs are particularly useful for finding patterns to recognize objects and scenes in an image: they learn directly from the data and use the learned patterns to classify images without the need to extract features manually [24,25]. In the dental field, AI is widely used for the detection of dental caries, measurement of alveolar bone loss due to periodontitis, numbering of teeth through tooth shape recognition, and detection of the inferior alveolar nerve [24,25,26,27,28].

Transfer learning with pre-trained networks has been used to achieve high accuracy and good generalization. It is also effective for applying features learned from large datasets to small datasets, which improves accuracy and performance on the latter. In this study, five popular pre-trained networks on the Pareto frontier were applied for implant type classification, considering both accuracy and computational burden [29,30]. The Pareto frontier comprises the networks that are not outperformed by any other network on both metrics considered for comparison (in this case, accuracy and prediction time). Deeper networks can generally achieve higher accuracy by learning richer feature representations. However, deep networks such as Xception and DenseNet require more computing power and have longer prediction times when using graphics processing units (GPUs), which makes them difficult to use in typical research and clinical environments.
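The notion of a Pareto frontier over accuracy and prediction time can be illustrated with a short Python sketch. This is not the authors' code (the study used MATLAB); the `Network` type, the network names, and all numbers below are hypothetical and serve only to show how dominated candidates are excluded.

```python
from typing import NamedTuple


class Network(NamedTuple):
    name: str
    accuracy: float   # higher is better
    time_ms: float    # prediction time, lower is better


def pareto_frontier(networks):
    """Return the networks that no other network dominates.

    A network is dominated if another one is at least as accurate and at
    least as fast, and strictly better on at least one of the two metrics.
    """
    frontier = []
    for a in networks:
        dominated = any(
            b.accuracy >= a.accuracy
            and b.time_ms <= a.time_ms
            and (b.accuracy > a.accuracy or b.time_ms < a.time_ms)
            for b in networks
        )
        if not dominated:
            frontier.append(a)
    return sorted(frontier, key=lambda n: n.time_ms)


# Hypothetical values for illustration only (not taken from the paper).
candidates = [
    Network("net_a", accuracy=0.58, time_ms=2.0),
    Network("net_b", accuracy=0.70, time_ms=5.0),
    Network("net_c", accuracy=0.66, time_ms=6.0),   # slower and less accurate than net_b
    Network("net_d", accuracy=0.76, time_ms=12.0),
]
frontier_names = [n.name for n in pareto_frontier(candidates)]
print(frontier_names)
```

In this toy example, `net_c` is excluded because `net_b` is both faster and more accurate; the remaining networks each represent a different trade-off between speed and accuracy.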

Therefore, in this research, we sought the pre-trained network architecture that best balances accuracy and computing power requirements for classifying implant fixtures in periapical radiographs. The tested networks were SqueezeNet, GoogLeNet, ResNet-18, MobileNet-v2, and ResNet-50.

2. Materials and Methods

2.1. Ethics Statement

This study was approved by the Institutional Review Board (IRB) of the Yonsei University Dental Hospital (Approval number: 2-2019-0068). The IRB waived the need for individual informed consent, and thus, a written/verbal informed consent was not obtained from any participant, as this study featured a non-interventional retrospective design and all the data were analyzed anonymously.

2.2. Materials

In this study, we used images of 801 patients (aged 19–84 years) who underwent intraoral radiography (Carestream RVG 2200 intraoral x-ray system with 6100 sensor, Carestream Dental, Rochester, NY, USA) using the paralleling technique at 60 kVp, 7 mA, and 0.08–0.1 s between 2005 and 2019 at Yonsei University Dental Hospital. Images containing four types of implants were selected for this work: Brånemark Mk TiUnite, Dentium Implantium, Straumann Bone Level, and Straumann Tissue Level implants (Table 1).

Table 1.

Implant type and data number.

| Implant System | Brånemark Mk TiUnite Implant | Dentium Implantium Implant | Straumann Bone Level Implant | Straumann Tissue Level Implant |
|---|---|---|---|---|
| Number of images | 197 | 193 | 203 | 208 |

2.3. Methods

2.3.1. Preprocessing

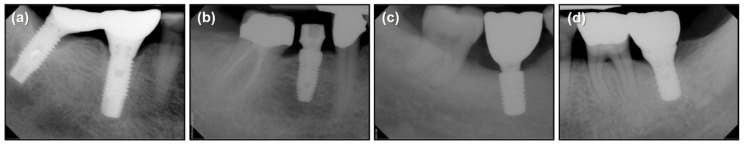

Images containing only one implant type were used for the network training (Figure 1). If the image presented more than one implant type, a region containing only one implant type was cropped manually. No filtering or enhancement was applied to the images, and thus, the network parameters were learned from the raw images.

Figure 1.

Periapical radiographs of the four types of selected implants. (a) Brånemark Mk TiUnite, (b) Dentium Implantium, (c) Straumann Bone Level, and (d) Straumann Tissue Level implants.

2.3.2. Network Pre-training

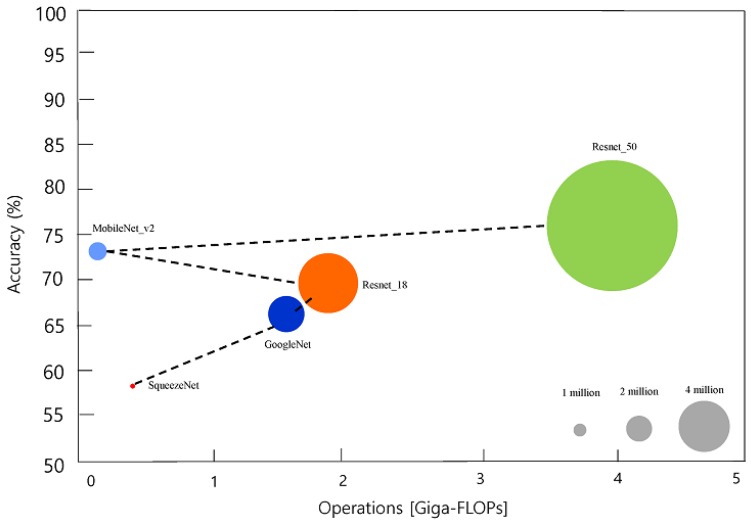

We tested network architectures on the Pareto frontier, from smaller to larger (Figure 2) [29,30]. All the pre-trained networks had been trained on more than a million images from the ImageNet database [31] and can classify images into 1000 object categories, such as keyboard, mouse, pencil, and many animals. As a result, the networks have learned rich feature representations for a wide range of images. The basic properties of the pre-trained networks are presented in Table 2.

Figure 2.

Relative speeds and accuracies of the different networks used in this study. The black dashed line represents the Pareto frontier. Data are from Benchmark Analysis of Representative Deep Neural Network Architectures [30].

Table 2.

Properties of pre-trained networks.

| Network | Depth | Size (MB) | Parameters (Millions) | Image Input Size |
|---|---|---|---|---|
| SqueezeNet | 18 | 4.6 | 1.24 | 227 × 227 |
| GoogLeNet | 22 | 27 | 7 | 224 × 224 |
| ResNet-18 | 18 | 44 | 11.7 | 224 × 224 |
| MobileNet-v2 | 54 | 13 | 3.5 | 224 × 224 |
| ResNet-50 | 50 | 96 | 25.6 | 224 × 224 |
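As a rough consistency check on Table 2, the reported sizes can be compared with an estimate derived from the parameter counts. The sketch below assumes that each parameter is stored as a 32-bit float and that one megabyte means 2^20 bytes; neither assumption is stated in the source, so the result is only indicative.

```python
# (parameters in millions, reported size in MB), copied from Table 2
table2 = {
    "SqueezeNet": (1.24, 4.6),
    "GoogLeNet": (7.0, 27),
    "ResNet-18": (11.7, 44),
    "MobileNet-v2": (3.5, 13),
    "ResNet-50": (25.6, 96),
}

BYTES_PER_PARAM = 4       # assumption: 32-bit floating-point weights
BYTES_PER_MB = 2 ** 20    # assumption: 1 MB = 2^20 bytes

# Estimated storage for the weights alone, in MB
estimated = {
    name: params_m * 1e6 * BYTES_PER_PARAM / BYTES_PER_MB
    for name, (params_m, _) in table2.items()
}

for name, (_, reported) in table2.items():
    print(f"{name:13s} estimated {estimated[name]:6.1f} MB, reported {reported} MB")
```

Under these assumptions the estimates fall within a few percent of the reported sizes, which suggests that the size column mainly reflects the number of learnable parameters.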

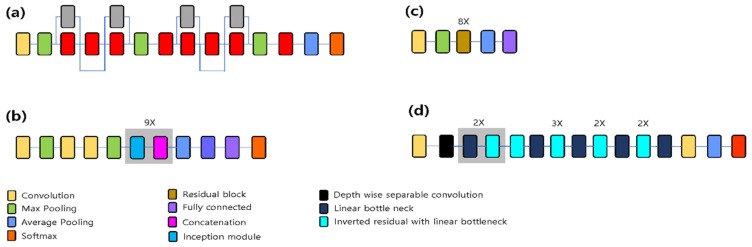

SqueezeNet: The SqueezeNet architecture was introduced to achieve AlexNet-level accuracy with 50× fewer parameters, and it can be made smaller still with model compression techniques. The network is an 18-layer-deep directed acyclic graph network (DAGNetwork) [32]. SqueezeNet v1.1 was used in this study. It has an accuracy similar to that of SqueezeNet v1.0 but requires fewer floating-point operations per prediction (Figure 3a) [33].

Figure 3.

Pretrained network architectures. (a) SqueezeNet, (b) GoogLeNet, (c) ResNet-18, and (d) MobileNet-v2. ResNet-50 uses 3-layer bottleneck blocks in place of the 2-layer residual blocks used in ResNet-18.

GoogLeNet: GoogLeNet introduced the inception module to extract features more effectively using various filter sizes (1 × 1, 3 × 3, and 5 × 5). This network also uses 1 × 1 convolution to reduce the network dimension and computation burden (Figure 3b) [34].
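The saving from placing a 1 × 1 convolution before a larger filter can be seen by counting multiplications. The following is a minimal sketch; the feature-map size and channel counts are illustrative values chosen for this example, not figures from the study.

```python
def conv_multiplies(height, width, in_channels, out_channels, kernel):
    """Multiplications for a stride-1, same-padded convolution layer."""
    return height * width * out_channels * kernel * kernel * in_channels


# Illustrative sizes (assumed for this example, not taken from the paper)
H, W, C_IN = 28, 28, 192
C_REDUCED, C_OUT = 16, 32

# 5 x 5 convolution applied directly to all 192 input channels
direct = conv_multiplies(H, W, C_IN, C_OUT, kernel=5)

# 1 x 1 convolution down to 16 channels, followed by the 5 x 5 convolution
reduced = (conv_multiplies(H, W, C_IN, C_REDUCED, kernel=1)
           + conv_multiplies(H, W, C_REDUCED, C_OUT, kernel=5))

print(direct, reduced, round(direct / reduced, 1))
```

With these sizes, the two-step path needs roughly a tenth of the multiplications of the direct 5 × 5 convolution.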

ResNet: ResNet was introduced to address the unexpectedly low performance of very deep network architectures. The network uses skip connections, which pass the input of a block directly to its output [35]. Several variants with different depths exist, including ResNet-18, ResNet-34, ResNet-50, ResNet-101, and ResNet-152. In this study, ResNet-18 (Figure 3c) and ResNet-50 were used. ResNet-18 has 8 repetitions of a 2-layer residual block, whereas ResNet-50 has 16 repetitions of a 3-layer bottleneck block.
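The block counts above account for the depths in the network names once the first convolution layer and the final classification layer are added. The sketch below checks this arithmetic and shows the skip connection as an element-wise addition; the per-stage block layouts and the function names are assumptions introduced for illustration.

```python
def resnet_depth(blocks_per_stage, layers_per_block):
    """Weighted layers: first convolution + residual blocks + final classifier."""
    stem, classifier = 1, 1
    return stem + sum(blocks_per_stage) * layers_per_block + classifier


def residual_block(x, transform):
    """Skip connection: add the block input to the transformed output."""
    return [xi + fi for xi, fi in zip(x, transform(x))]


# Stage layouts assumed here; they sum to the 8 and 16 blocks given in the text.
resnet18 = resnet_depth([2, 2, 2, 2], layers_per_block=2)
resnet50 = resnet_depth([3, 4, 6, 3], layers_per_block=3)
print(resnet18, resnet50)

# If the learned transform outputs zeros, the block passes its input through unchanged.
out = residual_block([1.0, 2.0], lambda v: [0.0 for _ in v])
print(out)
```

The second part illustrates why skip connections limit information loss: a block can fall back to the identity mapping when its learned transform contributes nothing.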

MobileNet-v2: The network is 54 layers deep and is designed for use on mobile devices. It introduces more computationally efficient convolution layers, such as the depthwise, pointwise, expansion, and projection convolution layers (Figure 3d) [36].
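The efficiency of combining depthwise and pointwise convolutions can be illustrated by comparing multiplication counts per output position with those of a standard convolution. This is a simplified sketch that omits the expansion and projection layers; the kernel size and channel counts are illustrative assumptions.

```python
def standard_conv_cost(k, c_in, c_out):
    """Multiplications per output position for a k x k standard convolution."""
    return k * k * c_in * c_out


def separable_conv_cost(k, c_in, c_out):
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise convolution."""
    return k * k * c_in + c_in * c_out


# Illustrative values (assumed, not taken from the paper)
K, C_IN, C_OUT = 3, 64, 128

standard = standard_conv_cost(K, C_IN, C_OUT)
separable = separable_conv_cost(K, C_IN, C_OUT)
print(standard, separable, round(standard / separable, 1))
```

For a 3 × 3 kernel the separable form needs close to an order of magnitude fewer multiplications in this example.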

2.3.3. Data Augmentation

Various data augmentation techniques were used to reduce overfitting due to the small dataset size. Training images were randomly rotated from −20° to 20°, randomly flipped horizontally, translated horizontally and vertically from −30 to 30 pixels, and scaled horizontally and vertically by factors of 0.7 to 1.2.
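The augmentation ranges can be expressed as a random draw of transformation parameters for each training image. The Python sketch below is not the authors' MATLAB pipeline: the uniform sampling, the 50% flip probability, the reading of the horizontal operation as a mirror flip, and the order in which the transformations are composed are all assumptions.

```python
import math
import random


def sample_augmentation(rng):
    """Draw one set of augmentation parameters within the ranges given in the text."""
    return {
        "rotation_deg": rng.uniform(-20.0, 20.0),
        "flip_horizontal": rng.random() < 0.5,   # assumption: 50% flip probability
        "shift_x_px": rng.uniform(-30.0, 30.0),
        "shift_y_px": rng.uniform(-30.0, 30.0),
        "scale_x": rng.uniform(0.7, 1.2),
        "scale_y": rng.uniform(0.7, 1.2),
    }


def affine_matrix(p):
    """Compose scale (with optional flip), rotation, and translation as a 3 x 3 matrix."""
    theta = math.radians(p["rotation_deg"])
    sx = -p["scale_x"] if p["flip_horizontal"] else p["scale_x"]
    sy = p["scale_y"]
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Rotation applied after scaling; translation in the last column.
    return [
        [cos_t * sx, -sin_t * sy, p["shift_x_px"]],
        [sin_t * sx, cos_t * sy, p["shift_y_px"]],
        [0.0, 0.0, 1.0],
    ]


rng = random.Random(0)   # fixed seed so the draw is reproducible
params = sample_augmentation(rng)
matrix = affine_matrix(params)
for row in matrix:
    print(row)
```

A new parameter set would be drawn each time an image is presented during training, so the network rarely sees exactly the same image twice.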

2.3.4. Training Options

The 801 images were randomly divided into training, validation, and testing sets in the ratio 6:2:2. An NVIDIA Titan RTX GPU was used for network training along with MATLAB 2019b (MathWorks, Natick, MA, USA). The models were trained for a maximum of 500 epochs with the stochastic gradient descent with momentum (sgdm) optimizer [37]. The minibatch size was 10, and the initial learning rate was 3 × 10⁻⁴. Training was stopped early according to the validation patience, for which values of 20, 40, 60, 80, 100, 120, and 140 were tested (Table S1). Multiple validation patience values were used so that each pre-trained network could be stopped at a different epoch to prevent overfitting. For each pre-trained network, the model with the best performance on the validation dataset was selected. If models showed the same performance for multiple validation patience values, the model with the smallest validation patience among them was selected.
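The data split and the early-stopping rule can be sketched as follows. This is an illustration in Python rather than the MATLAB setup used in the study: the rounding of the 6:2:2 split, the random seed, the assumption of one validation per epoch, and the example loss values are all hypothetical, and the exact patience behavior of the training software should be checked against its documentation.

```python
import random


def split_indices(n_items, ratios=(6, 2, 2), seed=0):
    """Shuffle indices and cut them into training, validation, and test parts."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    total = sum(ratios)
    n_train = n_items * ratios[0] // total
    n_val = n_items * ratios[1] // total
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]   # the remainder goes to the test set
    return train, val, test


def stopping_epoch(val_losses, patience):
    """Return the 1-based epoch at which training stops.

    Training stops once the validation loss has failed to improve on its best
    value for `patience` consecutive validations (one per epoch is assumed).
    """
    best = float("inf")
    stale = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best, stale = loss, 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return len(val_losses)


train, val, test = split_indices(801)
sizes = (len(train), len(val), len(test))
print(sizes)

# Hypothetical validation losses, one per epoch
losses = [0.9, 0.6, 0.5, 0.55, 0.52, 0.51, 0.5, 0.49]
stop = stopping_epoch(losses, patience=3)
print(stop)
```

A small patience stops training soon after the validation loss stalls, while a large patience allows longer plateaus, which is why testing several values can lead to a different stopping epoch for each network.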

2.3.5. Metrics for Accuracy Comparison

The accuracy, precision, recall, and F1 score were calculated as follows with the test dataset using the confusion matrix.

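$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

$$\text{Precision} = \frac{TP}{TP + FP} \tag{2}$$

$$\text{Recall} = \frac{TP}{TP + FN} \tag{3}$$

$$\text{F1 score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{4}$$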

TP: true positive, FP: false positive, FN: false negative, TN: true negative
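For a four-class problem, TP, FP, FN, and TN are obtained for each class by treating that class as positive and the other three as negative. The sketch below shows this calculation on a hypothetical confusion matrix. The counts are invented for illustration, and the macro-averaging of per-class values is an assumption, since the source does not state how the per-class values were combined.

```python
def per_class_metrics(confusion, k):
    """One-vs-rest counts and metrics for class k.

    confusion[i][j] is the number of images of true class i predicted as class j.
    """
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[k][k]
    fn = sum(confusion[k]) - tp
    fp = sum(confusion[i][k] for i in range(n)) - tp
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall, "f1": f1}


def overall_accuracy(confusion):
    """Fraction of all images on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in confusion)
    return sum(confusion[i][i] for i in range(len(confusion))) / total


def macro_average(confusion, key):
    """Unweighted mean of a per-class metric (assumed averaging scheme)."""
    n = len(confusion)
    return sum(per_class_metrics(confusion, k)[key] for k in range(n)) / n


# Hypothetical 4-class confusion matrix (rows: true class, columns: predicted class)
confusion = [
    [38, 1, 0, 1],
    [0, 37, 2, 0],
    [1, 1, 39, 0],
    [0, 0, 0, 41],
]
m0 = per_class_metrics(confusion, 0)
acc = overall_accuracy(confusion)
print(m0)
print(round(acc, 3), round(macro_average(confusion, "f1"), 3))
```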

3. Results

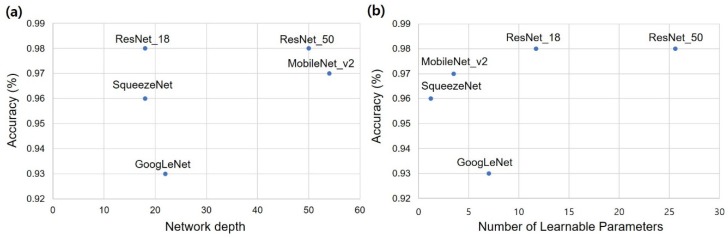

3.1. Classification Performance

Table 3 shows the accuracy of the implant fixture system classification. All five models showed a test accuracy of more than 90%. Test accuracy showed no clear trend with network depth (Figure 4a). Test accuracy tended to increase with the number of learnable parameters but had already saturated at ResNet-18 (Figure 4b). SqueezeNet and MobileNet-v2, which are relatively small networks with fewer than four million parameters, showed accuracies of approximately 96% and 97%, respectively.

Table 3.

Accuracy of implant fixture system classification according to the pre-trained networks in this research.

| Pre-Trained Network | Test Accuracy | Precision | Recall | F1 Score |
|---|---|---|---|---|
| SqueezeNet | 0.96 | 0.96 | 0.96 | 0.96 |
| GoogLeNet | 0.93 | 0.92 | 0.94 | 0.93 |
| ResNet-18 | 0.98 | 0.98 | 0.98 | 0.98 |
| MobileNet-v2 | 0.97 | 0.96 | 0.96 | 0.96 |
| ResNet-50 | 0.98 | 0.98 | 0.98 | 0.98 |

Figure 4.

Test accuracy with respect to network depth and number of parameters. (a) Network depth, (b) Number of parameters.

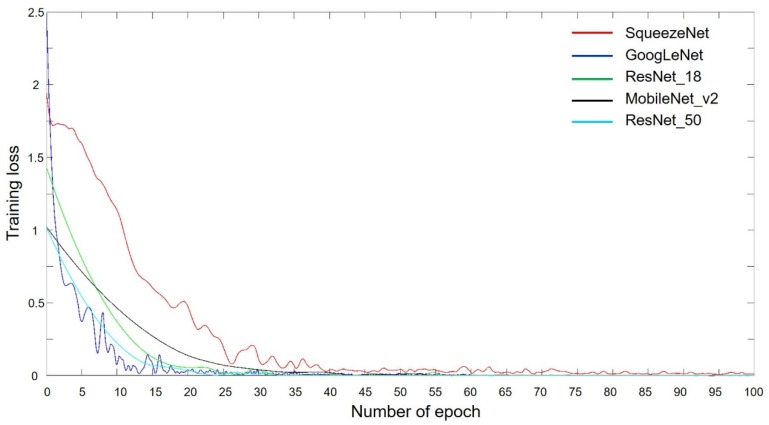

3.2. Training Progress

GoogLeNet and ResNet showed a sharp decrease in training loss, whereas SqueezeNet showed a slower decrease, and MobileNet-v2 showed the slowest reduction in training loss. All the models converged within 100 epochs (Figure 5).

Figure 5.

Training progress of the five pretrained networks with respect to the number of epochs.

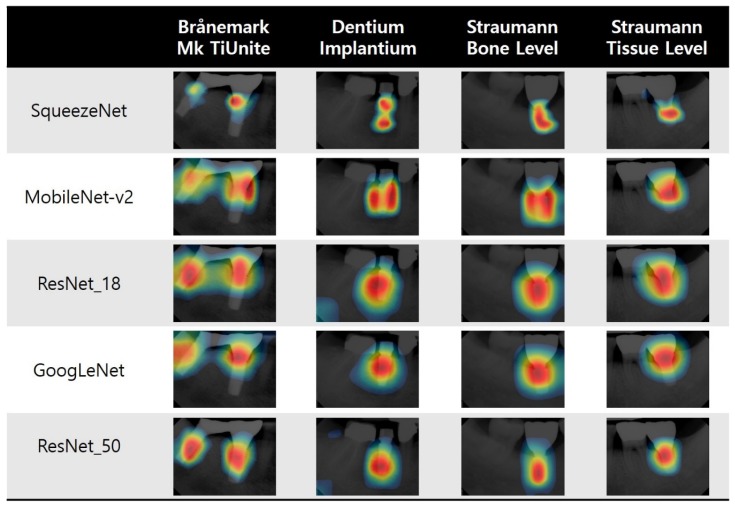

3.3. Visualization of Model Classification

A class activation map (CAM) indicates the discriminative image regions that a CNN uses to assign an image to a category [38]. In the CAMs, every network focused on the implant fixture, which visually supports the high accuracy of all five networks. However, the parts and extent of the fixtures that were focused on differed from one model to another (Figure 6).
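In the formulation of [38], the CAM for a class is the weighted sum of the feature maps of the last convolutional layer, where the weights are those connecting the globally averaged feature maps to that class in the final layer. A minimal pure-Python sketch follows; the toy feature maps, the weights, and the min-max normalization for display are illustrative assumptions.

```python
def class_activation_map(feature_maps, class_weights):
    """CAM for one class: sum over channels of weight_k * feature_map_k.

    feature_maps: list of K maps, each an H x W nested list (last conv layer output).
    class_weights: K weights linking the pooled channels to the class score.
    """
    height = len(feature_maps[0])
    width = len(feature_maps[0][0])
    cam = [[0.0] * width for _ in range(height)]
    for weight, fmap in zip(class_weights, feature_maps):
        for y in range(height):
            for x in range(width):
                cam[y][x] += weight * fmap[y][x]
    return cam


def normalize(cam):
    """Rescale the map to [0, 1] so it can be overlaid on the image as a heat map."""
    flat = [v for row in cam for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0   # avoid division by zero for a constant map
    return [[(v - lo) / span for v in row] for row in cam]


# Toy example: two 2 x 2 feature maps and their weights for one class
feature_maps = [
    [[1.0, 0.0], [0.0, 0.0]],
    [[0.0, 0.0], [0.0, 2.0]],
]
class_weights = [0.5, 1.0]

cam = class_activation_map(feature_maps, class_weights)
heat = normalize(cam)
print(cam)
print(heat)
```

In practice the low-resolution map is upsampled to the input image size before overlay, so that the highlighted region can be compared with the position of the fixture.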

Figure 6.

Example of the class activation maps of the five pretrained networks for the four selected implant fixture types.

4. Discussion

Categorizing the implant fixture type in periapical radiographs with high accuracy is important for maintenance purposes, especially in the absence of accurate medical records. However, this exercise has not been attempted owing to difficulties in image processing and feature extraction. This study shows that a deep learning algorithm can solve this problem by learning features in an end-to-end style using training image data and without the need for complicated image preprocessing. Furthermore, the visual interpretations by the trained networks provide a reasonable explanation of the high accuracy achieved by the deep learning models.

The intraoral radiographs were taken with the paralleling technique for standardization. However, it is not possible to keep the implant fixture and the sensor perfectly parallel. The resulting small, arbitrary angles between the implant fixture and the sensor may have acted as a form of data augmentation and contributed to the high accuracy of our models.

It is generally known that accuracy tends to increase with network depth, because deeper networks can learn richer feature representations. This trend was not evident in the results of this experiment: an increase in the number of network layers was not necessarily accompanied by an increase in accuracy. One possible explanation is that ResNet-18 loses little information between layers owing to features such as skip connections. Thus, sufficient learning appears to have been possible despite the smaller number of layers.

As the number of learnable parameters increased, accuracy increased and then saturated at ResNet-18. SqueezeNet and MobileNet-v2, which are small networks with fewer than four million parameters, showed accuracies of approximately 96% and 97%, respectively. This may be because the number of features required to classify the four implant fixtures in periapical x-rays is small, so that sufficient learning is possible with a relatively small number of parameters. ResNet-18 already showed a test accuracy close to 1, similar to ResNet-50. Therefore, it appears that the current network structures require up to approximately 10 million parameters to distinguish the four implant fixtures in periapical x-rays. In the future, this number may be reduced as network structures evolve.

This study confirmed that a CNN can analyze implant images and automatically classify the four selected implant fixture types with high accuracy even with a relatively small network size and a small number of images. This means that implant classification networks can be trained with relatively low computing power and applied in mobile environments, making them useful and convenient in clinical situations. Moreover, even a small number of radiographs of older implant systems can be used to create a network that distinguishes the different types of implants. This may reduce unnecessary treatments and medical expenses caused by not knowing the exact type of implant. The results of this study may also help in the development of decision assistance software using medical images. This study also showed that the trained models focus on a distinct part of each implant. According to the CAMs of ResNet-50 and SqueezeNet, which showed the best localization, the networks focused on the connection between the fixture and the abutment for the Brånemark implants, on the overall fixation area for the Dentium and Straumann Bone Level implants, and on the transgingival portion and fixture connection for the Straumann Tissue Level implants. All these parts are distinctive of each implant type. These findings suggest that the deep learning models can identify the discriminative features of each implant type well. The CAM of each model revealed a slightly different focus, which will likely improve as the amount of data increases and the accuracy of the models improves.

This study also has some limitations. First, only four types of implant systems were selected. Since many types of implants currently exist in the market and some have been in use for a long time, clinicians encounter many more types of implants in practice [15,20]. To expand the results of this study, it is necessary to build a database by collecting a wide variety of implant fixture images, including those of implant types that are rarely seen. In this study, we implemented a network that can classify implants with high accuracy even with a small number of radiographic images (about 200 per implant type) by using extensive image augmentation. Therefore, it may not be difficult to acquire adequate numbers of radiographs and create a classification network that includes the various implant systems not included in this study.

This study employed images containing only one implant type to determine whether implant fixtures can be distinguished. Further research is needed to create a network that can detect implant fixtures in uncropped images, or to apply a technique that detects multiple implants simultaneously using networks such as You-Only-Look-Once (YOLO) and Single Shot MultiBox Detector (SSD).

In addition, we trained the networks using only periapical radiographic images. As clinicians also use panoramic radiographs for treatment purposes, it is necessary to construct a network that is able to learn and identify implant types using panoramic radiographs.

In the future, the network should be able to classify not only implant types but also their diameters and lengths. If the length of the implant can be detected automatically, the degree of marginal bone loss around the implant can be easily checked, which in turn can lead to the development of an algorithm to estimate the health and prognosis of the implant as well as diagnose peri-implantitis [39,40]. This could lead to the development of clinically useful diagnostic software for implant-related complications. It is also very important to accurately identify the diameter of the implant, because this parameter is closely related to the implant connection type. Depending on the implant system and diameter, implants may have narrow, regular, or wide connections, which are closely related to the component compatibility of the implant prosthesis system [41,42]. By developing a network that accurately classifies the diameter of the implant, the implant system can be automatically identified, and it would be possible to know what components should be prepared for repair and maintenance when mechanical complications occur. Clinicians will also be able to obtain information about other implant systems that are compatible with the detected system. Even when information about an implant system is difficult to obtain because its production and sales have been discontinued, such a system could help clinicians find the information they need and respond appropriately.

5. Conclusions

This study confirmed that the investigated CNNs could classify four implant fixtures with high accuracy despite the relatively small network size and the small number of images. The CAM of each network was shown to highlight the characteristic features of each implant fixture system. The results of this study may help clinicians and patients avoid unnecessary treatment and medical expenses resulting from not knowing the exact type of implant. To expand the results of this study, it is necessary to build a database comprising a wide variety of implant fixture systems, including implant types that are rarely seen.

Supplementary Materials

The following are available online at https://www.mdpi.com/2077-0383/9/4/1117/s1, Table S1: Test and validation accuracy according to multiple validation patience.

Author Contributions

Conceptualization, J.-E.K., J.-S.S., B.-H.C., and J.J.H.; methodology, J.-E.K., Y.-H.J., and J.J.H.; software, J.J.H.; validation, J.-E.K., Y.-H.J., and J.J.H.; formal analysis, J.J.H.; investigation, J.J.H.; resources, J.J.H.; data curation, J.-E.K. and N.-E.N.; writing—original draft preparation, J.-E.K. and J.J.H.; writing—review and editing, J.-E.K., N.-E.N., J.-S.S., Y.-H.J., B.-H.C., and J.J.H.; visualization, J.J.H.; supervision, J.J.H.; project administration, J.-E.K. and J.J.H.; funding acquisition, J.-E.K. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the Yonsei University College of Dentistry Fund (6-2019-0008).

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Branemark P.-I. Tissue-integrated prostheses: Osseointegration in clinical dentistry. Quintessence. 1985;54:99–115.

- 2. Adell R., Lekholm U., Rockler B., Branemark P.I. A 15-year study of osseointegrated implants in the treatment of the edentulous jaw. Int. J. Oral Surg. 1981;10:387–416. doi: 10.1016/S0300-9785(81)80077-4.

- 3. Adell R., Eriksson B., Lekholm U., Brånemark P.-I., Jemt T. A long-term follow-up study of osseointegrated implants in the treatment of totally edentulous jaws. Int. J. Oral Maxillofac. Implant. 1990;5:347–359.

- 4. Le Guéhennec L., Soueidan A., Layrolle P., Amouriq Y. Surface treatments of titanium dental implants for rapid osseointegration. Dent. Mater. 2007;23:844–854. doi: 10.1016/j.dental.2006.06.025.

- 5. Lee J.H., Frias V., Lee K.W., Wright R.F. Effect of implant size and shape on implant success rates: A literature review. J. Prosthet. Dent. 2005;94:377–381. doi: 10.1016/j.prosdent.2005.04.018.

- 6. Brunette D.M. The effects of implant surface topography on the behavior of cells. Int. J. Oral Maxillofac. Implant. 1988;3:231–246.

- 7. Lekholm U., Gunne J., Henry P., Higuchi K., Lindén U., Bergström C., Van Steenberghe D. Survival of the Brånemark implant in partially edentulous jaws: A 10-year prospective multicenter study. Int. J. Oral Maxillofac. Implant. 1999;14:639–645.

- 8. Del Fabbro M., Testori T., Francetti L., Weinstein R. Systematic review of survival rates for implants placed in the grafted maxillary sinus. Int. J. Periodontics Restor. Dent. 2004;24:565–577. doi: 10.1016/j.prosdent.2005.04.024.

- 9. Koka S., Babu N.M., Norell A. Survival of dental implants in post-menopausal bisphosphonate users. J. Prosthodont. Res. 2010;54:108–111. doi: 10.1016/j.jpor.2010.04.002.

- 10. Javed F., Al-Hezaimi K., Al-Rasheed A., Almas K., Romanos G.E. Implant survival rate after oral cancer therapy: A review. Oral Oncol. 2010;46:854–859. doi: 10.1016/j.oraloncology.2010.10.004.

- 11. Swierkot K., Lottholz P., Flores-de-Jacoby L., Mengel R. Mucositis, peri-implantitis, implant success, and survival of implants in patients with treated generalized aggressive periodontitis: 3- to 16-year results of a prospective long-term cohort study. J. Periodontol. 2012;83:1213–1225. doi: 10.1902/jop.2012.110603.

- 12. Pjetursson B.E., Thoma D., Jung R., Zwahlen M., Zembic A. A systematic review of the survival and complication rates of implant-supported fixed dental prostheses (FDPs) after a mean observation period of at least 5 years. Clin. Oral Implant. Res. 2012;23:22–38. doi: 10.1111/j.1600-0501.2012.02546.x.

- 13. Al-Johany S.S., Al Amri M.D., Alsaeed S., Alalola B. Dental Implant Length and Diameter: A Proposed Classification Scheme. J. Prosthodont. 2017;26:252–260. doi: 10.1111/jopr.12517.

- 14. Michelinakis G., Sharrock A., Barclay C.W. Identification of dental implants through the use of Implant Recognition Software (IRS). Int. Dent. J. 2006;56:203–208. doi: 10.1111/j.1875-595X.2006.tb00095.x.

- 15. Jokstad A., Braegger U., Brunski J.B., Carr A.B., Naert I., Wennerberg A. Quality of dental implants. Int. Dent. J. 2003;53:409–443. doi: 10.1111/j.1875-595X.2003.tb00918.x.

- 16. Lazzara R.J. Criteria for implant selection: Surgical and prosthetic considerations. Pract. Periodontics Aesthetic Dent. PPAD. 1994;6:55–62.

- 17. Demenko V., Linetskiy I., Nesvit K., Shevchenko A. Ultimate masticatory force as a criterion in implant selection. J. Dent. Res. 2011;90:1211–1215. doi: 10.1177/0022034511417442.

- 18. Palmer R.M., Howe L.C., Palmer P.J. Implants in Clinical Dentistry. CRC Press; Boca Raton, FL, USA: 2011.

- 19. Jaarda M.J., Razzoog M.E., Gratton D.G. Geometric comparison of five interchangeable implant prosthetic retaining screws. J. Prosthet. Dent. 1995;74:373–379. doi: 10.1016/S0022-3913(05)80377-4.

- 20. Al-Wahadni A., Barakat M.S., Abu Afifeh K., Khader Y. Dentists’ most common practices when selecting an implant system. J. Prosthodont. 2018;27:250–259. doi: 10.1111/jopr.12691.

- 21. Golden J.A. Deep learning algorithms for detection of lymph node metastases from breast cancer: Helping artificial intelligence be seen. JAMA. 2017;318:2184–2186. doi: 10.1001/jama.2017.14580.

- 22. Ghosh R., Ghosh K., Maitra S. Automatic detection and classification of diabetic retinopathy stages using CNN; Proceedings of the 2017 4th International Conference on Signal Processing and Integrated Networks; Noida, India. 2–3 February 2017; pp. 550–554.

- 23. Pham T.-C., Luong C.-M., Visani M., Hoang V.-D. Deep CNN and data augmentation for skin lesion classification; Proceedings of the Asian Conference on Intelligent Information and Database Systems; Donghoi city, Vietnam. 19–21 March 2018; pp. 573–582.

- 24. Hwang J.J., Jung Y.H., Cho B.H., Heo M.S. An overview of deep learning in the field of dentistry. Imaging Sci. Dent. 2019;49:1–7. doi: 10.5624/isd.2019.49.1.1.

- 25. Casalegno F., Newton T., Daher R., Abdelaziz M., Lodi-Rizzini A., Schurmann F., Krejci I., Markram H. Caries Detection with Near-Infrared Transillumination Using Deep Learning. J. Dent. Res. 2019;98:1227–1233. doi: 10.1177/0022034519871884.

- 26. Lee J.H., Kim D.H., Jeong S.N., Choi S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015.

- 27. Krois J., Ekert T., Meinhold L., Golla T., Kharbot B., Wittemeier A., Dorfer C., Schwendicke F. Deep Learning for the Radiographic Detection of Periodontal Bone Loss. Sci. Rep. 2019;9:8495. doi: 10.1038/s41598-019-44839-3.

- 28. Kim J., Lee H.S., Song I.S., Jung K.H. DeNTNet: Deep Neural Transfer Network for the detection of periodontal bone loss using panoramic dental radiographs. Sci. Rep. 2019;9:17615. doi: 10.1038/s41598-019-53758-2.

- 29. Canziani A., Paszke A., Culurciello E. An analysis of deep neural network models for practical applications. arXiv. 2016. arXiv:1605.07678.

- 30. Bianco S., Cadene R., Celona L., Napoletano P. Benchmark Analysis of Representative Deep Neural Network Architectures. IEEE Access. 2018;6:64270–64277. doi: 10.1109/ACCESS.2018.2877890.

- 31. Russakovsky O., Deng J., Su H., Krause J., Satheesh S., Ma S., Huang Z., Karpathy A., Khosla A., Bernstein M., et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015;115:211–252. doi: 10.1007/s11263-015-0816-y.

- 32. Iandola F., Han S., Moskewicz M., Ashraf K., Dally W., Keutzer K. SqueezeNet: AlexNet-level accuracy with 50x fewer parameters and <0.5MB model size. arXiv. 2016. arXiv:1602.07360.

- 33. Zeng P.Y., Rane N., Du W., Chintapalli J., Prendergast G.C. Role for RhoB and PRK in the suppression of epithelial cell transformation by farnesyltransferase inhibitors. Oncogene. 2003;22:1124–1134. doi: 10.1038/sj.onc.1206181.

- 34. Szegedy C., Wei L., Yangqing J., Sermanet P., Reed S., Anguelov D., Erhan D., Vanhoucke V., Rabinovich A. Going deeper with convolutions; Proceedings of the 2015 IEEE Conference on Computer Vision and Pattern Recognition; Boston, MA, USA. 7–12 June 2015; pp. 1–9.

- 35. Flamant Q., Stanciuc A.M., Pavailler H., Sprecher C.M., Alini M., Peroglio M., Anglada M. Roughness gradients on zirconia for rapid screening of cell-surface interactions: Fabrication, characterization and application. J. Biomed. Mater. Res. Part A. 2016;104:2502–2514. doi: 10.1002/jbm.a.35791.

- 36. Sandler M., Howard A., Zhu M., Zhmoginov A., Chen L. MobileNetV2: Inverted Residuals and Linear Bottlenecks; Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition; Salt Lake City, UT, USA. 18–22 June 2018; pp. 4510–4520.

- 37. Murphy K.P. Machine Learning: A Probabilistic Perspective. The MIT Press; Cambridge, MA, USA: 2012.

- 38. Zhou B., Khosla A., Lapedriza A., Oliva A., Torralba A. Learning deep features for discriminative localization; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 2921–2929.

- 39. Cassetta M., Di Giorgio R., Barbato E. Are Intraoral Radiographs Accurate in Determining the Peri-implant Marginal Bone Level? Int. J. Oral Maxillofac. Implant. 2018;33:847–852. doi: 10.11607/jomi.5352.

- 40. Cassetta M., Di Giorgio R., Barbato E. Are intraoral radiographs reliable in determining peri-implant marginal bone level changes? The correlation between open surgical measurements and peri-apical radiographs. Int. J. Oral Maxillofac. Surg. 2018;47:1358–1364. doi: 10.1016/j.ijom.2018.05.018.

- 41. Hsu K.W., Shen Y.F., Wei P.C. Compatible CAD-CAM titanium abutments for posterior single-implant tooth replacement: A retrospective case series. J. Prosthet. Dent. 2017;117:363–366. doi: 10.1016/j.prosdent.2016.07.023.

- 42. Karl M., Irastorza-Landa A. In Vitro Characterization of Original and Nonoriginal Implant Abutments. Int. J. Oral Maxillofac. Implant. 2018;33:1229–1239. doi: 10.11607/jomi.6921.
