Abstract

Objective:

Even though safe and effective treatments for depression are available, many individuals with a diagnosis of depression do not obtain treatment. This study aimed to develop a tool to identify persons who might not initiate treatment among those who acknowledge a need.

Methods:

Data were aggregated from the 2008–2014 U.S. National Survey on Drug Use and Health (N=391,753), including 20,785 adults given a diagnosis of depression by a health care provider in the 12 months before the survey. Machine learning was applied to self-report survey items to develop strategies for identifying individuals who might not get needed treatment.

Results:

A derivation cohort aggregated between 2008 and 2013 was used to develop a model that identified the 30.6% of individuals with depression who reported needing but not getting treatment. When applied to independent responses from the 2014 cohort, the model identified 72% of those who did not initiate treatment (p<.01), with a balanced accuracy that was also significantly above chance (71%, p<.01). For individuals who did not get treatment, the model predicted 10 (out of 15) reasons that they endorsed as barriers to treatment, with balanced accuracies between 53% and 65% (p<.05 for all).

Conclusions:

Considerable work is needed to improve follow-up and retention rates after the critical initial meeting in which a patient is given a diagnosis of depression. Routinely collected information about patients with depression could identify those at risk of not obtaining needed treatment, which may inform the development and implementation of interventions to reduce the prevalence of untreated depression.

The Substance Abuse and Mental Health Services Administration (SAMHSA) estimated that in 2014 less than half of the 43.6 million adults with a mental illness received mental health services (1). National guidelines recommend universal depression screening along with adequate systems for accurate diagnosis, treatment, and follow-up (2). However, many adults diagnosed as having depression do not receive treatment (3–5), despite the availability of safe and effective psychological (6) and pharmacological (7) treatments. The financial cost of nonengagement is high (8,9), and antidepressant use among patients with mental illness is associated with reduced mortality and rate of completed suicide (10). Taken together, recent data from the World Health Organization (WHO) suggest that only 16.5% of individuals with major depressive disorder each year receive minimally adequate treatment (11).

Thornicroft and colleagues (11) described the broad flow of depressed patients through the acute care pathway. After a diagnosis, 57% of patients reported needing treatment, 71% of these made at least one visit (initiated treatment), and 41% of these received treatment that met at least minimal standards (11). Ethnographic and experimental research into the second step revealed barriers to initiating treatment (9,12–17). Practical barriers included perceived or real inability to pay (or lack of insurance coverage), lack of child care or transport, and not knowing where to go (9,16–18). Psychological barriers included stigmatization of depression, doubts that treatment is effective, or concerns that others may find out (14,16,17,19–21), and these barriers were particularly prevalent among women and people of color (5,16,17,19,20). Culture-specific nuances, such as the somatization of depressive symptoms among women of color (17,22), can also complicate the detection of depression and the uptake of treatment. This has led groups to emphasize the need for tailoring interventions to specific patient strata (16,17,20,21,23,24).

What can be done to continue to develop this line of research? Other areas of medicine have highlighted the importance of “real-time” preemptive efforts to avoid unwanted outcomes, such as predischarge care planning for patients at high risk of readmission (25). Behavioral economic research has illustrated the utility of “nudges”—aspects of choice architectures that alter behavior in a desired way without restricting options or altering economic incentives. Patients fall into statistically reliable groups on the basis of their reasons for not initiating treatment (15), and certain patient groups may benefit from tailored interventions to improve treatment uptake (16,17). Therefore, one application of this concept could be to direct preemptive efforts toward patients at risk of not initiating treatment (and to determine why they might not). This would require analytic strategies to identify barriers at the patient rather than the group level. In such situations, machine learning is useful for extracting patterns from a wide range of characteristics that are statistically related to an outcome of interest (26,27).

The study’s goal was to develop a tool to estimate which individual patients might not initiate treatment among those who acknowledge a need. First, we applied machine learning to a large volume of retrospective patient-related information to develop a case identification algorithm that could be applied in a physician’s waiting room. Second, among patients who endorsed needing treatment but not getting it, we used self-reported variables to predict patients’ specific reasons for not getting treatment. Third, to make machine learning results more interpretable for clinicians, we developed an open-source software library for calculating and illustrating exactly how each participant’s characteristics contributed to each prediction by the algorithm. These real-time methods for identifying patients at risk of not initiating treatment may help health systems improve treatment uptake before patients decide not to engage in behavioral health care.

METHODS

We used data from the National Survey on Drug Use and Health (NSDUH), conducted annually by SAMHSA, which provides nationally representative data on substance abuse and mental illness in the U.S. civilian, noninstitutionalized population ages ≥12. In brief, participants complete the survey at home on a computer provided by the interviewer, largely without assistance, and are compensated $30 for approximately one hour. NSDUH uses a state-based design, with an independent, multistage area probability sample within each state and the District of Columbia. There is no planned overlap of sample dwelling units or residents. Weighted response rates have ranged between 71.2% (2014) and 75.7% (2009). Institutional review board approval and informed consent were not needed because this was a secondary analysis of public data.

Sample Selection and Outcome Definition

We combined individual participant data from public use files between 2008 and 2014 (N=391,753), excluding individuals under age 18 (N=121,526) and retaining adults who reported that in the past year a doctor had told them that they had depression (N=20,829). The primary outcome was a participant’s self-reported (binary) response to the question, “During the past 12 months, was there any time when you needed mental health treatment or counseling for yourself but didn’t get it?” We excluded 44 participants who did not respond. Of these 20,785 participants, 6,271 (30%) indicated that they did not get the treatment or counseling that they needed. These participants were then asked, “Which of these statements explains why you did not get the mental health treatment or counseling you needed?” with 14 specific options and one option for “other” reasons. [A CONSORT diagram is included in an online supplement to this article.] Participants were allowed to choose more than one option, and 46.2% of individuals did so. For these analyses, we excluded 61 participants (.98%) who did not give any reason.

Predictor Selection

We preselected a small number of participant-level characteristics that were surveyed consistently from 2008 to 2014, have been identified in prior epidemiological studies as relevant to depression (28), and could be self-reported via Web-based assessment. These included sociodemographic characteristics, information about current behavioral health and suicidal thoughts, and a brief medical history. We used categorical single imputation whenever participants were missing a value for a predictor variable (<1% for most variables) and conducted sensitivity analyses to ensure that including these participants did not unduly influence results [see online supplement].

Statistical Modeling

Machine learning.

Machine learning methods identify patterns of information in data that are useful in predicting outcomes at the single-participant level (26,29,30). We used a tree-based machine learning algorithm (extreme gradient boosting, or XGBoost) that is fast and has free open-source implementations (https://github.com/dmlc/xgboost). This algorithm works by fitting an ensemble of small decision trees and iteratively focusing each new tree on predicting misclassified observations from previous trees (31,32). The algorithm also includes a number of explicit procedures to avoid “overfitting”—that is, when the algorithm attempts to fit the noise instead of the underlying systematic relationship. Algorithm hyperparameters were selected by cross-validation. Statistical significance was examined by using label permutation testing (33). Particular care was taken to address issues of imbalanced class proportions when predicting the response variables, including a bootstrapped up-sampling process and adjusted probability thresholds. In addition, given these class imbalances, we focused on a metric known as balanced accuracy [(sensitivity+specificity)/2] whose null distribution is centered on 50%, unlike traditional accuracy (34,35) [see online supplement].
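The balanced accuracy metric and the logic of label permutation testing can be illustrated with a minimal Python sketch. It is not the authors' R code. The toy labels and predictions are invented, the function names are hypothetical, and the sketch simplifies the procedure by shuffling labels against a fixed set of predictions rather than refitting a model on each permuted data set.

```python
import random


def balanced_accuracy(y_true, y_pred):
    """Return (sensitivity + specificity) / 2 for binary labels coded 0/1."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    return (tp / n_pos + tn / n_neg) / 2


def permutation_p_value(y_true, y_pred, n_perm=1000, seed=0):
    """Compare the observed metric with its distribution under shuffled labels."""
    rng = random.Random(seed)
    observed = balanced_accuracy(y_true, y_pred)
    shuffled = list(y_true)
    at_least_as_good = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)  # breaks any link between labels and predictions
        if balanced_accuracy(shuffled, y_pred) >= observed:
            at_least_as_good += 1
    # Add-one correction so the estimated p-value is never exactly zero.
    return observed, (at_least_as_good + 1) / (n_perm + 1)


# Invented toy example with imbalanced classes (30 positives, 70 negatives).
y_true = [1] * 30 + [0] * 70
y_pred = [1] * 22 + [0] * 8 + [1] * 22 + [0] * 48

observed, p_value = permutation_p_value(y_true, y_pred)
print(f"balanced accuracy = {observed:.3f}, permutation p = {p_value:.4f}")
```

Because shuffling preserves the class proportions, the permuted balanced accuracies cluster around 50% whatever the class imbalance, which is the property that motivates preferring this metric over raw accuracy here.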

Individual participant variable importance.

Machine learning has been characterized as a “black box” approach with limited interpretability because the rationale behind individual predictions is obscured by the complexity of the model. Researchers typically examine variable importance across the whole sample to determine how much each predictor variable contributes to the overall model. Although this gives some insight into the most influential variables across all predictions, there is no guarantee that they are also the most influential for a specific prediction for a particular individual. With this in mind, we developed and introduced an open-source software library for deriving individual participant-level measures of variable importance from xgboost ensembles (https://github.com/AppliedDataSciencePartners/xgboostExplainer). We broke down the (directional) impact of each predictor variable for a single participant and illustrated these impacts to show a clinician exactly how the model weighted each variable when making the prediction for that individual. Critically, this means that these “impacts” are not static coefficients as in a logistic regression—the impact of a feature is dependent on the specific path that the observation took through the ensemble of trees [see online supplement].
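The path-dependent breakdown described above can be sketched in a few lines of Python. This is an illustration of the general idea only, not the interface of the xgboostExplainer library: the two hand-built trees, their node values, and the feature names are all hypothetical. Each node stores a log-odds value, and the change in value at every split along a participant's path is credited to the feature used at that split.

```python
import math
from collections import defaultdict

# Hypothetical toy ensemble; "value" is the log-odds assigned to a node.
TREE_1 = {
    "value": -0.8, "feature": "suicidal_ideation", "threshold": 0.5,
    "left": {"value": -1.1},
    "right": {
        "value": 0.3, "feature": "has_insurance", "threshold": 0.5,
        "left": {"value": 0.9},
        "right": {"value": 0.1},
    },
}
TREE_2 = {
    "value": 0.0, "feature": "has_insurance", "threshold": 0.5,
    "left": {"value": 0.4},
    "right": {"value": -0.2},
}


def explain(trees, person):
    """Credit each change in log-odds along the path to the split feature."""
    contributions = defaultdict(float)
    for tree in trees:
        node = tree
        contributions["intercept"] += node["value"]
        while "feature" in node:  # stop when a leaf is reached
            branch = "left" if person[node["feature"]] < node["threshold"] else "right"
            child = node[branch]
            contributions[node["feature"]] += child["value"] - node["value"]
            node = child
    return dict(contributions)


def predicted_probability(contributions):
    """Convert the summed log-odds into a probability."""
    return 1 / (1 + math.exp(-sum(contributions.values())))


person_a = {"suicidal_ideation": 1, "has_insurance": 0}
person_b = {"suicidal_ideation": 0, "has_insurance": 1}
for name, person in (("A", person_a), ("B", person_b)):
    parts = explain([TREE_1, TREE_2], person)
    print(name, parts, round(predicted_probability(parts), 3))
```

In this toy ensemble the insurance feature shifts the log-odds by about +1.0 for person A and by about −0.2 for person B, because the two participants follow different paths through the trees. That is the sense in which the impacts are not static coefficients.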

Training and testing.

We developed the case-finding model with data from 2008 to 2013. Models were constructed and examined with repeated fivefold cross-validation (three repeats). Relevant descriptions of model discrimination were determined at each stage, including positive predictive values (that is, the probability that a participant did not get treatment, given that the model predicted that the participant would not) and the area under the receiver operating characteristic curve (AUC). To avoid opportune data splits, model performance metrics were averaged across the test folds and repeats.
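As a rough illustration of repeated fivefold cross-validation with metrics averaged over test folds, the following Python sketch uses synthetic data and a deliberately trivial stand-in classifier (a single threshold) in place of the gradient-boosted model. The data, the classifier, and all function names are assumptions made for the example.

```python
import random
from statistics import mean


def repeated_kfold(n, k=5, repeats=3, seed=0):
    """Yield (train_idx, test_idx) pairs for `repeats` reshuffled k-fold splits."""
    rng = random.Random(seed)
    for _ in range(repeats):
        idx = list(range(n))
        rng.shuffle(idx)
        folds = [idx[i::k] for i in range(k)]
        for i, test in enumerate(folds):
            train = [j for m, fold in enumerate(folds) if m != i for j in fold]
            yield train, test


def ppv(y_true, y_pred):
    """Share of flagged participants who truly had the outcome."""
    flagged = [t for t, p in zip(y_true, y_pred) if p == 1]
    return sum(flagged) / len(flagged)


# Synthetic toy data (not NSDUH): one numeric score x, outcome y in about 30%.
rng = random.Random(1)
y = [1 if rng.random() < 0.3 else 0 for _ in range(500)]
x = [rng.gauss(1.0 if label else 0.0, 1.0) for label in y]

fold_ppvs = []
for train, test in repeated_kfold(len(y)):
    # Stand-in "model": threshold halfway between the class means,
    # estimated from the training folds only.
    pos_mean = mean(x[i] for i in train if y[i] == 1)
    neg_mean = mean(x[i] for i in train if y[i] == 0)
    cut = (pos_mean + neg_mean) / 2
    preds = [1 if x[i] > cut else 0 for i in test]
    fold_ppvs.append(ppv([y[i] for i in test], preds))

print(f"mean PPV over {len(fold_ppvs)} test folds: {mean(fold_ppvs):.3f}")
```

Averaging over all 15 test folds (five folds, three repeats) reduces the chance that a single favorable split drives the reported performance.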

Independent validation.

Models that show significant performance in test folds during cross-validation may still not generalize to an independent sample (29,30,36). Therefore, we applied our case-finding models to the 2014 cohort that was not used in model development. Participant characteristics were similarly distributed across training and testing data sets, although smoking was noticeably less common and anxiety disorders were more common in 2014.

Analyses were conducted with the R statistical language (version 3.2.2; http://cran.r-project.org/), and code is available upon request.

RESULTS

Getting Treatment Among Those With a Perceived Need

We focused on adults who stated that they were diagnosed as having depression by a clinician in the past year (N=20,785). The gender and racial-ethnic breakdown of the cohort was as follows: female, 72%; male, 28%; white, 77%; Hispanic, 10%; black, 7%; multiracial, 4%; Asian, 1%; Native American, 1%; and Native Hawaiian/Pacific Islander, 1%. The cohort was mostly between the ages of 18 and 49, and approximately half (54%) had private health insurance. At the time of responding, 54.7% of the sample endorsed five of the nine DSM criteria for a current major depressive episode. Overall, 30.2% endorsed needing treatment but not receiving it, consistent with recent global estimates that used formal criteria for 12-month major depression (11).

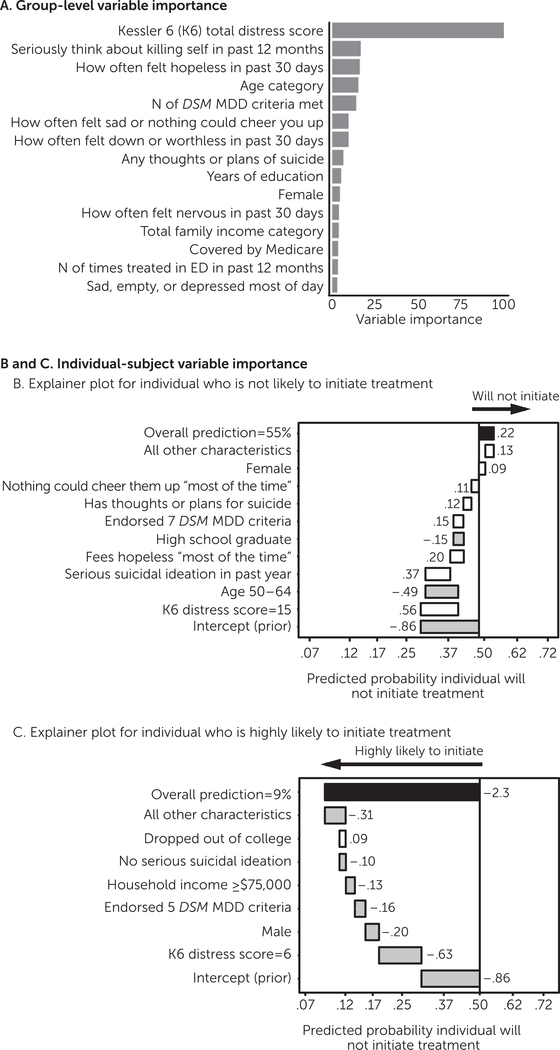

We developed a case-finding model to identify patients with depression who did not receive mental health treatment among those with a perceived need. In the training cohort (2008–2013), 30.6% of patients needed but did not get treatment. During cross-validation, the model performed significantly above chance in predicting that a participant would not receive needed treatment (balanced accuracy=70.6%±.9%, p<.001; AUC=.79, 95% confidence interval [CI]=.78–.80), with a positive predictive value (PPV) of 50.1%±1.1% and a sensitivity of 73.4%±1.6% (Table 1). To understand which variables were most influential at a group level, we included a variable importance plot (Figure 1A) illustrating the average improvement in accuracy (gain) brought by a particular variable. To show how these predictions can be interpreted for an individual participant, we also derived and illustrated the change in log-odds attributable to each variable for an individual. Figure 1B shows an explainer plot for an individual predicted not to get needed treatment, and Figure 1C shows such a plot for an individual with a high predicted probability of treatment initiation.

TABLE 1.

Accuracy in predicting treatment noninitiation among individuals with a past-year depression diagnosis who endorsed a need for treatment, by NSDUH cohort (in percentages)^a

| Metric | Training cohort (2008–2013), test-fold performance: M | Training cohort, test-fold performance: SD | Training cohort, chance performance^b: M | Training cohort, chance performance^b: SD | Validation cohort (2014), observed performance | Validation cohort, chance performance^b: M | Validation cohort, chance performance^b: SD |
|---|---|---|---|---|---|---|---|
| Accuracy | 69.5 | 1.0 | 51.1 | 1.1 | 69.7 | 51.4 | 4.6 |
| Balanced accuracy | 70.6 | .9 | 50.3 | .4 | 70.5 | 50.5 | 4.8 |
| Positive predictive value | 50.1 | 1.1 | 30.9 | .4 | 47.5 | 28.7 | 4.1 |
| Negative predictive value | 85.3 | .7 | 69.7 | .4 | 86.4 | 72.1 | 3.8 |
| Sensitivity | 73.4 | 1.6 | 48.4 | 2.8 | 72.4 | 48.5 | 7.2 |
| Specificity | 67.8 | 1.4 | 52.3 | 2.7 | 68.6 | 52.5 | 5.2 |

a NSDUH, National Survey on Drug Use and Health. During model training (2008–2013), test-fold area under the receiver operating characteristic curve (AUC) was .792 (95% confidence interval [CI]=.78–.80). During model validation (2014), the AUC was .777 (CI=.76–.79).

b Chance performance reflects the mean of that metric during permutation testing.

FIGURE 1. Factors influencing noninitiation of mental health treatment by an individual with a past-year depression diagnosis who endorsed a need for treatment^a

a Plot A shows the amount by which the model accuracy improved when each variable was included (on average). In plots B and C, the x-axis indicates the predicted probability of the response. Shaded bars indicate that a characteristic contributed to initiation, and white bars indicate that a characteristic contributed toward noninitiation. Black bars reflect the overall model prediction. Values beside the bars indicate the change in log-odds attributable to that characteristic. MDD, major depressive disorder; ED, emergency department.

The case-finding model reliably identified individuals who needed but did not get treatment in the independent 2014 cohort, in which 28.2% of patients did not get treatment (Table 1). Model performance was again significantly above chance (balanced accuracy, 70.5%, permutation-based p<.01; AUC=.78, CI=.76–.79), with a PPV of 47.5% (CI=46%–49%) and a sensitivity of 72.4% (CI=71%–74%). Therefore, in an independent sample, this model identified over 70% of those who did not initiate treatment, and when the model predicted that a patient would initiate treatment, there was an 86% chance that the participant would do so (negative predictive value of 86.4%). Conclusions remained the same, and performance was comparable when analyses excluded participants for missing data, rather than imputing missing data, and in analyses with more restrictive inclusion criteria. Models that included sociodemographic information alone had much worse performance [see online supplement].
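The validation metrics reported above are tied together by the base rate of noninitiation. As a consistency check, the short Python sketch below recomputes the predictive values, accuracy, and balanced accuracy from the reported sensitivity (72.4%), specificity (68.6%), and prevalence (28.2%). The function name is hypothetical, and small discrepancies from the published figures would be expected because the inputs are rounded.

```python
def predictive_values(sensitivity, specificity, prevalence):
    """Derive PPV, NPV, accuracy, and balanced accuracy from three proportions."""
    tp = sensitivity * prevalence              # true positives (share of sample)
    fn = (1 - sensitivity) * prevalence        # false negatives
    tn = specificity * (1 - prevalence)        # true negatives
    fp = (1 - specificity) * (1 - prevalence)  # false positives
    return {
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
        "accuracy": tp + tn,
        "balanced_accuracy": (sensitivity + specificity) / 2,
    }


# Inputs taken from the 2014 validation cohort (Table 1 and text).
metrics = predictive_values(sensitivity=0.724, specificity=0.686, prevalence=0.282)
for name, value in metrics.items():
    print(f"{name}: {100 * value:.1f}%")
```

With these inputs the sketch gives a PPV of about 47.5%, an NPV of about 86.4%, an accuracy of about 69.7%, and a balanced accuracy of 70.5%, in line with Table 1. It also shows why the PPV is well below the sensitivity: fewer than three in ten participants did not get treatment, so even a reasonably specific model flags many who went on to initiate care.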

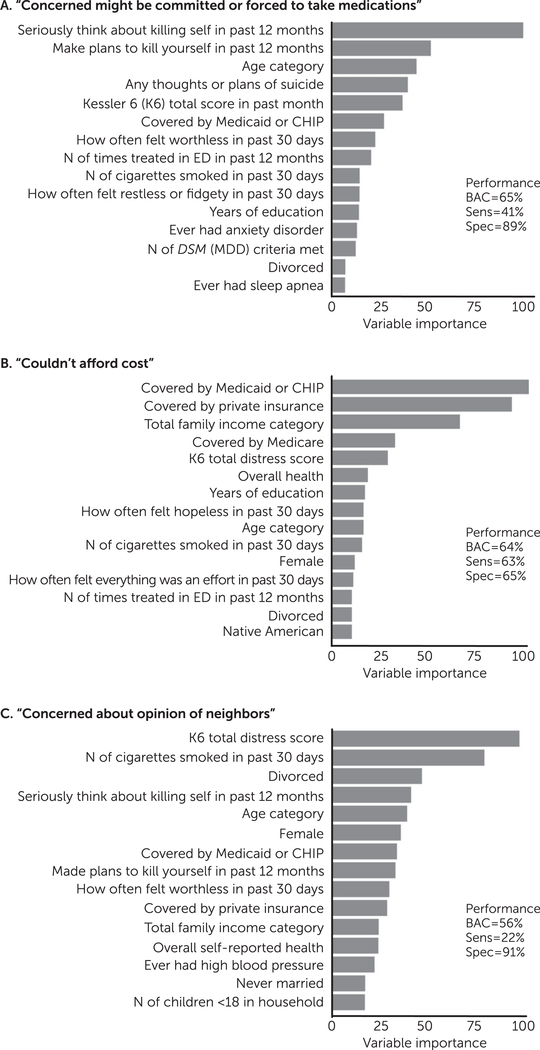

Reasons for Not Getting Needed Treatment

Most individuals (2008–2014) endorsed one (53.8%), two (18.2%), or three (12.3%) reasons for not getting treatment (median=1, mean=2.10). The most common reason was being unable to afford the cost (47.7%), and the least common reason was lack of transport or treatment too far (5.8%) (Table 2). For each reason, we trained a classifier to predict whether patients who did not get treatment would (or would not) endorse that particular reason for not getting treatment. Ten of the 15 self-reported reasons were predictable with a balanced accuracy (range 53%–65%) and sensitivity (range 15%–63%) both above chance (all p<.05) (Table 2). For the models with the three highest balanced accuracies, we examined variable importance plots to understand which were most influential at a group level (Figure 2). For “concerned you might be committed or forced to take medications,” the model relied on suicide-related features. For “couldn’t afford cost,” the model relied on information about health insurance and household income [see online supplement].

TABLE 2.

Self-reported reasons for not initiating treatment among individuals with a past-year depression diagnosis who endorsed a need for treatment, by NSDUH cohort^a

| Reason | Endorsement rate (%), 2008–2014 | Endorsement rate (%), 2008–2013 | Endorsement rate (%), 2014 | External validation performance^b: BAC | External validation performance^b: Sens | External validation performance^b: PPV | External validation performance^b: PLR |
|---|---|---|---|---|---|---|---|
| Couldn’t afford cost | 47.7 | 47.9 | 46.4 | 64.2* | 62.9* | 61.2 | 1.81 |
| Thought I could handle without treatment | 22.2 | 22.2 | 22.4 | 55.8* | 31.0* | 31.5 | 1.59 |
| Didn’t know where to go for service | 16.7 | 16.0 | 20.6 | 52.9* | 20.6* | 26.6 | 1.40 |
| Some other reason | 15.3 | 15.0 | 16.8 | 51.8 | 17.9 | 20.1 | 1.25 |
| Thought I might be committed or forced to take meds | 15.2 | 15.3 | 14.8 | 64.9* | 40.6* | 39.5 | 3.75 |
| Didn’t have time/too busy | 14.2 | 14.3 | 13.8 | 56.2* | 24.8* | 24.1 | 1.99 |
| Not enough health insurance coverage | 11.7 | 11.5 | 13.1 | 55.3* | 20.6* | 23.6 | 2.06 |
| Concerned about opinion of neighbors | 11.0 | 10.9 | 11.8 | 56.3* | 21.9* | 24.0 | 2.36 |
| Didn’t think treatment would help | 10.9 | 10.9 | 11.0 | 53.0* | 16.1* | 16.3 | 1.58 |
| Concern about confidentiality | 9.7 | 9.7 | 9.8 | 54.1* | 16.8* | 17.4 | 1.93 |
| Didn’t think I needed it at that time | 8.6 | 8.6 | 8.6 | 53.3* | 14.5* | 14.6 | 1.82 |
| Concern about effect on job | 8.1 | 8.0 | 8.4 | 51.8 | 11.1 | 11.8 | 1.47 |
| Health insurance didn’t cover it | 6.5 | 6.6 | 6.1 | 48.6 | 3.4 | 3.4 | .55 |
| Didn’t want others to find out | 6.5 | 6.4 | 6.8 | 52.3 | 10.6 | 11.5 | 1.77 |
| Had no transportation or treatment too far | 5.8 | 5.6 | 7.2 | 52.5 | 10.1 | 13.2 | 1.98 |

a NSDUH, National Survey on Drug Use and Health.

b BAC, balanced accuracy (%); Sens, sensitivity (%); PPV, positive predictive value (%); PLR, positive likelihood ratio. Asterisks indicate where BAC and Sens external validation metrics were significantly greater than chance during permutation testing (p<.05) [see online supplement for full permutation-based performance metrics].
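The positive likelihood ratio in Table 2 can be approximately recovered from the other reported columns, because specificity is implied by balanced accuracy and sensitivity. The Python sketch below shows that relationship for three rows of the table. The function name is hypothetical, and because the inputs are rounded to one decimal place, the recomputed ratios match the published values only to within rounding.

```python
def positive_likelihood_ratio(bac, sens):
    """Recover PLR from balanced accuracy and sensitivity (both in percent)."""
    spec = 2 * bac - sens                    # since BAC = (sens + spec) / 2
    return (sens / 100) / (1 - spec / 100)   # PLR = sens / (1 - spec)


# (BAC, Sens, reported PLR) copied from Table 2.
rows = {
    "Couldn't afford cost": (64.2, 62.9, 1.81),
    "Thought I might be committed or forced to take meds": (64.9, 40.6, 3.75),
    "Health insurance didn't cover it": (48.6, 3.4, 0.55),
}
for reason, (bac, sens, reported) in rows.items():
    recomputed = positive_likelihood_ratio(bac, sens)
    print(f"{reason}: recomputed PLR = {recomputed:.2f}, reported = {reported:.2f}")
```

A ratio above 1 means that a positive prediction raises the odds that a participant endorses the reason, and a ratio below 1, as for the insurance coverage row, means that the prediction is uninformative or misleading for that reason.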

FIGURE 2. Predicted self-reported reasons for not initiating treatment by an individual with a past-year depression diagnosis who endorsed a need for treatment^a

a Group-level variable importance was inspected for the three most predictable self-reported barriers to obtaining treatment. Variable importance (x-axis) reflects the average improvement in predictive accuracy when a variable was included in the model (gain improvement). BAC, balanced accuracy; CHIP, Children’s Health Insurance Program; ED, emergency department; MDD, major depressive disorder; Sens, sensitivity; Spec, specificity.

DISCUSSION AND CONCLUSIONS

These data indicated that between 2008 and 2014, approximately 30% of U.S. individuals with 12-month major depressive disorder reported needing but not receiving mental health treatment. We used a small number of patient-reportable items to develop a case-finding algorithm to identify individuals who did not initiate treatment after receiving a diagnosis and acknowledging treatment need. The balanced accuracy, sensitivity, and PPV of the model were significantly better than chance, even in a large independent validation cohort. Patients who said that they needed but did not get treatment also selected among 15 possible reasons why they did not get treatment. We were able to predict above chance whether patients would endorse a specific reason for 10 of the 15 possible reasons. This combination of large data sets and machine learning tools provides an empirical platform for experimental research and highlights the potential for improving overall treatment outcomes by minimizing the number of people who do not initiate treatment after endorsing a need.

U.S. Preventive Services Task Force 2016 guidelines noted that “research is needed to assess barriers to establishing adequate systems of care and how these barriers can be addressed” (2). Corroborating recent WHO findings (11), our data suggest that around 30% of patients with a diagnosis of depression who acknowledged treatment need did not receive it. This study makes an important step toward a broader discussion of reasons for not getting treatment and how to improve treatment initiation (9,18,37,38). We found that cost or cost-related reasons were perceived as a barrier by more than half the patients, even though some generic antidepressants cost less than $10 per month (free under Medicare Part D and Medicaid and the Children’s Health Insurance Program). Two other primary reasons were not knowing where to go (16.7%) and fear of being committed or forced to take medications (15.2%). Many of the endorsed reasons may reflect depressive symptoms (for example, pessimistic thoughts that treatment will not help may reflect negative thoughts associated with depression).

It is clear that treatment uptake is (and remains) a substantial barrier that prevents universal screening efforts from reaching their full potential for improving population mental health. The utility of depression treatments depends on the first step—treatment initiation. We developed a case-finding tool that can help identify individuals who do not initiate treatment. The most influential variable pertained to suicidal ideation, which is consistent with previous findings of an association between suicidal ideation and deterrents to treatment (39). Other variables were related to insurance status, demographic factors, and general medical comorbidities, and such variables are not currently used for predictive purposes (9,18,39–45). An ultimate goal would be to identify individuals who do not initiate treatment (for more concerted outreach) and to estimate how likely they are to accept various treatment options. Levels of risk can then be relayed to the clinician or case manager to help foster shared decision making and minimize barriers to initiating treatment from the outset.

The natural next step is to explore prospectively whether statistical models such as this could help develop and tailor engagement interventions, such as motivational interviewing, psychoeducation, or care management (16,46–48). For example, if an individual is predicted to be concerned about cost, is it effective to subsidize care or highlight cheaper options? Although the NSDUH survey was not designed explicitly for this, it offered an opportunity to develop hypotheses and tools for study to determine whether the approach can improve treatment uptake. Because low motivation, hypersomnia, and low energy are cardinal symptoms of depression, aggressive outreach may be required to encourage some individuals to begin and remain in care (46,49), and thus better targeting of patients in need of encouragement may make outreach cost-effective.

The study had some limitations. It relied on self-reported survey data rather than data from clinical practice. Although we focused on adults with diagnoses of depression in the past 12 months, it could not be guaranteed that patients were specifically responding about experiences of depression (versus another mental health issue), and patients may also have had multiple episodes of mental illness in that period. We included persons who had been told by a clinician that they had depression, which may have biased the sample toward those with more easily recognizable symptoms or with better access to providers. In addition, at least some patients with major depression experience sudden gains and may have recovered without treatment (14,50), although long-term outcomes are generally not favorable for untreated patients (18).

Although prediction of specific concerns for not getting treatment was statistically robust, the predictions may best be considered as a helpful warning sign rather than requiring urgent action. An advantage of these models is that they require only simple data that can be obtained quickly. However, they may be improved by inclusion of more training data or by integration of other sources of predictor variables (for example, electronic medical records and feedback from caregivers and family members); this would help improve the model’s sensitivity. Nonetheless, the model’s performance is comparable to that of other predictive models in psychiatry that included large validation samples (30,36,51). Unfortunately, the data did not permit patients to indicate a lack of treatment availability (or unacceptable wait time), and thus we focused on patient-perceived barriers rather than structural system-level barriers. Causal associations cannot be drawn from this retrospective, cross-sectional analysis, especially because NSDUH does not sequence symptoms, perceived need, and the decision not to obtain treatment. Finally, it is not clear to what extent the context of health and mental health care in the United States influenced the predictor variables (for example, public insurance), the reasons (for example, cost), and the outcomes, and thus it will be important to examine similar data in countries with universal or other health care models.

Supplementary Material

Acknowledgments

This work was supported by Yale University, the William K. Warren Foundation, Microsoft Inc., the U.S. Department of Veterans Affairs, and grant 5UL1TR000142 from the National Center for Advancing Translational Sciences. The authors thank Gregory McCarthy, Ph.D., Myrna Weissman, Ph.D., and Harlan Krumholz, M.D., for advice and thoughtful comments on the manuscript. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality, the Substance Abuse and Mental Health Services Administration, or the Inter-University Consortium for Political and Social Research. The funding agencies bear no responsibility for use of these data or for interpretations or inferences based on such use.

Dr. Chekroud reports holding equity in Spring Care, Inc. He is lead inventor on three patent submissions related to treatment for major depressive disorder (USPTO docket no. Y0087.70116US00, provisional application no. 62/491,660, and provisional application no. 62/629,041). He reports having consulted for Fortress Biotech. Mr. Foster is a founding partner of Applied Data Science Partners. Dr. Koutsouleris reports receipt of speaker fees from Otsuka/Lundbeck. He holds a patent related to psychosis risk prediction (application no. 14/910588). Mr. Chandra reports holding equity in Spring Health (d.b.a. Spring Care, Inc.). He is an inventor on two patent submissions related to treatment for major depressive disorder (docket no. Y0087.70116US00 and provisional application no. 62/629,041). Dr. Subramanyan reports holding equity in and being an advisor to Spring Health (d.b.a. Spring Care, Inc.). He reports holding equity in San Francisco Psychiatrists, Inc. Dr. Gueorguieva reports receipt of consulting fees from Mathematica Policy Research and Palo Alto Health Sciences. She holds a provisional patent submission (docket no. Y0087.70116US00). Dr. Paulus reports being an advisor to Spring Care, Inc. He reports receipt of royalties from UpToDate and receipt of grant support from Janssen Pharmaceuticals. Dr. Krystal reports receipt of consulting fees from Amgen, LLC, AstraZeneca Pharmaceuticals, Biogen, Biomedisyn Corp., Forum Pharmaceuticals, Janssen Pharmaceuticals, Otsuka America Pharmaceuticals, Sunovion Pharmaceuticals, Taisho Pharmaceutical Co., and Takeda Industries. He is on the scientific advisory boards of Biohaven Pharmaceuticals, Blackthorn Therapeutics, Lohocla Research Corp., Luc Therapeutics, Pfizer Pharmaceuticals, Spring Care, Inc., and TRImaran Pharma. He reports holding stock in ArRETT Neuroscience and Biohaven Pharmaceuticals Medical Sciences and stock options in Blackthorn Therapeutics and Luc Therapeutics. Dr. Krystal has the following patents and inventions: patent no. 5,447,948, application no. PCTWO06108055A1, application no. 14/197,767, application no. 14/306,382, provisional use application no. 61/973/961, and application docket no. Y0087.70116US00.

Footnotes

The other authors report no financial relationships with commercial interests.

REFERENCES

- 1.Behavioral Health Trends in the United States: Results From the 2014 National Survey on Drug Use and Health. Rockville, MD, Substance Abuse and Mental Health Services Administration, Center for Behavioral Health Statistics and Quality, 2015. http://http://www.samhsa.gov/data/sites/default/files/NSDUH-FRR1-2014/NSDUH-FRR1-2014.pdf%5Cnhttp://www.samhsa.gov/data/ [Google Scholar]

- 2.Siu AL, Bibbins-Domingo K, Grossman DC, et al. : Screening for depression in adults: US Preventive Services Task Force recommendation statement. JAMA 315:380–387, 2016 [DOI] [PubMed] [Google Scholar]

- 3.Kessler RC, Berglund P, Demler O, et al. : The epidemiology of major depressive disorder: results from the National Comorbidity Survey Replication (NCS-R). JAMA 289:3095–3105, 2003 [DOI] [PubMed] [Google Scholar]

- 4.Hasin DS, Goodwin RD, Stinson FS, et al. : Epidemiology of major depressive disorder: results from the National Epidemiologic Survey on Alcoholism and Related Conditions. Archives of General Psychiatry 62:1097–1106, 2005 [DOI] [PubMed] [Google Scholar]

- 5.Wang PS, Lane M, Olfson M, et al. : Twelve-month use of mental health services in the United States. Archives of General Psychiatry 62:629–640, 2009 [DOI] [PubMed] [Google Scholar]

- 6. Amick HR, Gartlehner G, Gaynes BN, et al.: Comparative benefits and harms of second-generation antidepressants and cognitive-behavioral therapies in initial treatment of major depressive disorder: systematic review and meta-analysis. BMJ 351:h6019, 2015

- 7. Gartlehner G, Hansen RA, Morgan LC, et al.: Comparative benefits and harms of second-generation antidepressants for treating major depressive disorder. Annals of Internal Medicine 155:772–785, 2011

- 8. Martin SJ, Bassi S, Dunbar-Rees R: Commitments, norms and custard creams: a social influence approach to reducing did not attends (DNAs). Journal of the Royal Society of Medicine 105:101–104, 2012

- 9. Mitchell J, Selmes T: Why don’t patients attend their appointments? Maintaining engagement with psychiatric services. Advances in Psychiatric Treatment 13:423–434, 2007

- 10. Tiihonen J, Lönnqvist J, Wahlbeck K, et al.: Antidepressants and the risk of suicide, attempted suicide, and overall mortality in a nationwide cohort. Archives of General Psychiatry 63:1358–1367, 2006

- 11. Thornicroft G, Chatterji S, Evans-Lacko S, et al.: Undertreatment of people with major depressive disorder in 21 countries. British Journal of Psychiatry 210:119–124, 2017

- 12. Saraceno B, van Ommeren M, Batniji R, et al.: Barriers to improvement of mental health services in low-income and middle-income countries. Lancet 370:1164–1174, 2007

- 13. Saxena S, Thornicroft G, Knapp M, et al.: Resources for mental health: scarcity, inequity, and inefficiency. Lancet 370:878–889, 2007

- 14. Kazdin AE: Addressing the treatment gap: a key challenge for extending evidence-based psychosocial interventions. Behaviour Research and Therapy 88:7–18, 2017

- 15. Nutting PA, Rost K, Dickinson M, et al.: Barriers to initiating depression treatment in primary care practice. Journal of General Internal Medicine 17:103–111, 2002

- 16. Grote NK, Zuckoff A, Swartz H, et al.: Engaging women who are depressed and economically disadvantaged in mental health treatment. Social Work 52:295–308, 2007

- 17. Swartz HA, Zuckoff A, Grote NK, et al.: Engaging depressed patients in psychotherapy: integrating techniques from motivational interviewing and ethnographic interviewing to improve treatment participation. Professional Psychology, Research and Practice 38:430–439, 2007

- 18. Kohn R, Saxena S, Levav I, et al.: The treatment gap in mental health care. Bulletin of the World Health Organization 82:858–866, 2004

- 19. Thornicroft G, Mehta N, Clement S, et al.: Evidence for effective interventions to reduce mental-health-related stigma and discrimination. Lancet 387:1123–1132, 2016

- 20. Thornicroft G, Brohan E, Kassam A, et al.: Reducing stigma and discrimination: candidate interventions. International Journal of Mental Health Systems 2:3, 2008

- 21. Lasalvia A, Zoppei S, Van Bortel T, et al.: Global pattern of experienced and anticipated discrimination reported by people with major depressive disorder: a cross-sectional survey. Lancet 381:55–62, 2013

- 22. Brown C, Abe-Kim JS, Barrio C: Depression in ethnically diverse women: implications for treatment in primary care settings. Professional Psychology, Research and Practice 34:10–19, 2003

- 23. Yousaf O, Grunfeld EA, Hunter MS: A systematic review of the factors associated with delays in medical and psychological help-seeking among men. Health Psychology Review 9:264–276, 2015

- 24. Magaard JL, Seeralan T, Schulz H, et al.: Factors associated with help-seeking behaviour among individuals with major depression: a systematic review. PLoS One 12:e0176730, 2017

- 25. Billings J, Dixon J, Mijanovich T, et al.: Case finding for patients at risk of readmission to hospital: development of algorithm to identify high risk patients. BMJ 333:327, 2006

- 26. Huys QJM, Maia TV, Frank MJ: Computational psychiatry as a bridge from neuroscience to clinical applications. Nature Neuroscience 19:404–413, 2016

- 27. Chekroud AM: Bigger data, harder questions-opportunities throughout mental health care. JAMA Psychiatry 74:1183–1184, 2017

- 28. Olfson M, Blanco C, Marcus SC: Treatment of adult depression in the United States. JAMA Internal Medicine 176:1482–1491, 2016

- 29. Hastie T, Tibshirani R, Friedman J: The elements of statistical learning. Elements 1:337–387, 2009

- 30. Chekroud AM, Zotti RJ, Shehzad Z, et al.: Cross-trial prediction of treatment outcome in depression: a machine learning approach. Lancet Psychiatry 3:243–250, 2016

- 31. Friedman JH: Stochastic Gradient Boosting. Computational Statistics and Data Analysis 38:367–378, 1999

- 32. Chen T, He T, Benesty M: xgboost: eXtreme Gradient Boosting 0–3, 2015. https://cran.r-project.org/web/packages/xgboost/index.html

- 33. Zheutlin AB, Chekroud AM, Polimanti R, et al.: Multivariate pattern analysis of genotype-phenotype relationships in schizophrenia. Schizophrenia Bulletin (Epub ahead of print, March 9, 2018). https://academic.oup.com/schizophreniabulletin/advance-article/doi/10.1093/schbul/sby005/4925163

- 34. Brodersen KH, Ong CS, Stephan KE, et al.: The balanced accuracy and its posterior distribution; in ICPR 2010: 20th International Conference on Pattern Recognition. Washington, DC, IEEE Computer Society, 2010

- 35. Koutsouleris N, Kahn RS, Chekroud AM, et al.: Multisite prediction of 4-week and 52-week treatment outcomes in patients with first-episode psychosis: a machine learning approach. Lancet Psychiatry 3:935–946, 2016

- 36. Chekroud AM, Gueorguieva R, Krumholz HM, et al.: Reevaluating the efficacy and predictability of antidepressant treatments: a symptom clustering approach. JAMA Psychiatry 74:370–378, 2017

- 37. Patel V, Chisholm D, Parikh R, et al.: Addressing the burden of mental, neurological, and substance use disorders: key messages from Disease Control Priorities, 3rd edition. Lancet 387:1672–1685, 2016

- 38. Thornicroft G, Deb T, Henderson C: Community mental health care worldwide: current status and further developments. World Psychiatry 15:276–286, 2016

- 39. Goldsmith SK, Pellmar TC, Kleinman AM, et al.: Reducing Suicide: A National Imperative. Washington, DC, National Academies Press, 2002

- 40. Bland RC, Newman SC, Orn H: Help-seeking for psychiatric disorders. Canadian Journal of Psychiatry 42:935–942, 1997

- 41. Gallo JJ, Marino S, Ford D, et al.: Filters on the pathway to mental health care: II. sociodemographic factors. Psychological Medicine 25:1149–1160, 1995

- 42. Narrow WE, Regier DA, Norquist G, et al.: Mental health service use by Americans with severe mental illnesses. Social Psychiatry and Psychiatric Epidemiology 35:147–155, 2000

- 43. Fagiolini A, Goracci A: The effects of undertreated chronic medical illnesses in patients with severe mental disorders. Journal of Clinical Psychiatry 70(suppl 3):22–29, 2009

- 44. Fleury MJ, Ngui AN, Bamvita JM, et al.: Predictors of healthcare service utilization for mental health reasons. International Journal of Environmental Research and Public Health 11:10559–10586, 2014

- 45. Bonabi H, Müller M, Ajdacic-Gross V, et al.: Mental health literacy, attitudes to help seeking, and perceived need as predictors of mental health service use: a longitudinal study. Journal of Nervous and Mental Disease 204:321–324, 2016

- 46. Simon GE, Ludman EJ, Tutty S, et al.: Telephone psychotherapy and telephone care management for primary care patients starting antidepressant treatment: a randomized controlled trial. JAMA 292:935–942, 2004

- 47. Wang PS, Simon GE, Avorn J, et al.: Telephone screening, outreach, and care management for depressed workers and impact on clinical and work productivity outcomes: a randomized controlled trial. JAMA 298:1401–1411, 2007

- 48. McKay MM, Hibbert R, Hoagwood K, et al.: Integrating evidence-based engagement interventions into “real world” child mental health settings. Brief Treatment and Crisis Intervention 4:177–186, 2004

- 49. Simon GE, Perlis RH: Personalized medicine for depression: can we match patients with treatments? American Journal of Psychiatry 167:1445–1455, 2010

- 50. Tang TZ, DeRubeis RJ: Sudden gains and critical sessions in cognitive-behavioral therapy for depression. Journal of Consulting and Clinical Psychology 67:894–904, 1999

- 51. Barak-Corren Y, Castro VM, Javitt S, et al.: Predicting suicidal behavior from longitudinal electronic health records. American Journal of Psychiatry 174:154–162, 2017
