Abstract

Interoperability between heterogeneous (health) IT systems relies on standards, which are communicated to system vendors in the form of so-called conformance profiles. Clinical information systems are often subjected to mandatory conformance testing and certification prior to being admitted into the health information exchange (HIE). The requirements specified in conformance profiles are therefore instrumental for ensuring the correctness and safety of the emerging HIE network. How can we ensure the quality and safety of conformance requirements themselves? We have adapted a system-theoretic hazard analysis method (STPA) for this purpose and applied it to an industrial case study in British Columbia, the Clinical Data eXchange (CDX) system. Our results indicate that the method is effective in detecting missing and erroneous constraints.

Introduction

Establishing and increasing interoperability among heterogeneous health IT systems has been an ongoing concern in many jurisdictions, with the “goal of optimizing the health of individuals and populations”1. Interoperability problems are not only sources of inefficiency but have also been implicated as causes of technology-induced medical accidents2–4. Interoperability quality assurance is usually based on some form of conformance testing and certification of participating systems.5 The requirements communicated to system vendors and utilized in conformance testing are often referred to as conformance profiles.6 These requirements play an instrumental role in ensuring the quality and safety of the emerging health information exchange (HIE) network. This raises the question of how to ensure their correctness and completeness.

A range of hazard analysis methods have been developed to identify safety hazards in systems.7 However, while hazard analysis methods have been used for analyzing healthcare processes in general,8 their application in the context of health IT has been scarce. Most published studies are retrospective in nature, i.e., they seek to identify the root causes of technology-induced accidents after their occurrence.2, 9 They target concrete IT system implementations rather than system requirements. The authors are not aware of any prospective hazard analysis study with the goal of analysing the safety of health IT system requirements as published in normative conformance profiles.

The objective of this paper is to fill this gap. We extend the system-theoretic hazard analysis method STPA (System-Theoretic Process Analysis)10 for this purpose and demonstrate its effectiveness with an HIE case study in British Columbia, the Clinical Data eXchange (CDX) system. Our analysis identified several missing and erroneous requirements in the CDX conformance profile.

Background and Literature Review

The various methods proposed for identifying hazards in safety-critical (health) IT systems fall into four categories: (1) Model-based methods are analytical techniques for identifying hazards based on some abstraction of the system under investigation. The method used in this paper is based on STAMP (System-Theoretic Accident Model and Processes), a model that abstracts systems as webs of control loops.10 As a relatively new model, STAMP has mostly been applied outside of healthcare IT. However, we demonstrated the use of STAMP for studying the causal factors of a health IT-related accident in our earlier work.9 More conventional model-based hazard analysis methods abstract system behaviour in the form of event chains and have seen more applications in healthcare, e.g., Fault Tree Analysis (FTA) and Failure Modes and Effects Analysis (FMEA)11–13. (2) Inspection-based methods seek to identify safety hazards by evaluating health IT systems against design heuristics, which are often linked to usability.14, 15 (3) Simulation-based methods study system behaviour in simulated environments to identify hazards. These methods include clinical usability studies that gather feedback from human participants16, 17 as well as automated tests of specific safety-related functions, e.g., clinical decision support alerts.18 (4) Reporting-based methods utilize incident reporting processes to record and analyze safety concerns.19–22

Notably, the four categories of hazard analysis methods above align with subsequent phases of the information technology lifecycle (cf. Figure 1) and should ideally be combined to provide a comprehensive safety management process. In practice, however, most safety initiatives in health IT projects focus on the later lifecycle phases (V&V, deployment, and operation & maintenance), with little or no consideration of hazard analysis during requirements analysis and design. This is problematic because hazards identified earlier in the development lifecycle can be mitigated at lower cost and risk.23 Our paper demonstrates that model-based hazard analysis is a feasible and effective tool for identifying hazards at the level of requirements for interoperable health IT systems.

Figure 1.

Alignment of hazard analysis (HA) method types with technology lifecycle phases

Methods

System-Theoretic Process Analysis (STPA) is a relatively new prospective hazard analysis (HA) method introduced by Leveson.10 STPA is better suited to modelling socio-technical systems than traditional HA methods such as fault tree analysis (FTA) and failure modes and effects analysis (FMEA).7 STPA views safety as a process control problem. A system under investigation is modeled and analyzed as a web of control loops, referred to as the “control structure”. The elements in the control structure do not need to (and generally do not) represent components of real system designs. Therefore, STPA can be applied early on in the system development lifecycle, even at the level of requirements engineering.24

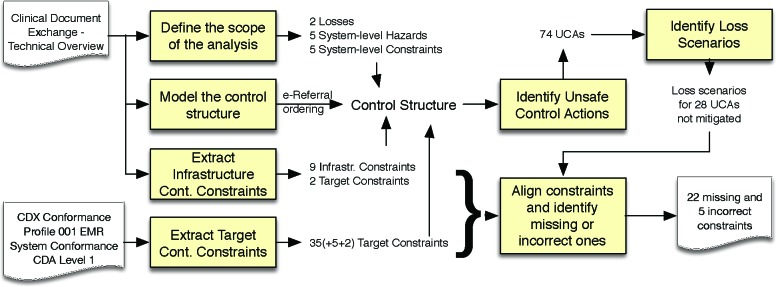

We extended STPA for the specific purpose of performing hazard analysis on interoperability conformance profiles. Figure 2 summarizes this extended method. The analysis takes two inputs: (1) an interoperability Conformance Profile and (2) a specification of the overall HIE system (referred to as “System Specification” in Figure 2). While both types of input exist in all standards-based interoperability projects, they may be part of the same physical document or contained in separate documents.

Figure 2.

STPA-based hazard analysis method for interoperability conformance profiles

The first activity is to define the scope of the analysis by identifying the losses to avoid, as well as an initial set of system-level hazards and the constraints that would mitigate these hazards. Losses may relate to anything of value to a stakeholder, including but not limited to patient harm.

A central activity in STPA is modelling the control structure, taking into consideration any constraints extracted from the Conformance Profile as well as the constraints specified for the common HIE infrastructure (System Specification). STPA control structures consist of hierarchical control loops, where each loop involves a controller (active element), a process (passive element), an actuator (used by the controller to influence the process) and a sensor (providing the controller with feedback on the status of the process). Any of these elements may represent a device or a human actor.

Each controller in the resulting control structure will be associated with either a conformance target (e.g., an EMR device participating in the HIE) or the common HIE infrastructure. The “extract target controller constraints” activity in Figure 2 seeks to identify all safety-relevant constraints on controllers associated with the conformance target. Target controller constraints can usually be identified easily, since conformance profiles tend to be highly structured, normative documents that contain itemized lists and use controlled language. The extraction of infrastructure controller constraints is not as simple, since overall system specifications often do not provide an itemized list of infrastructure controller constraints. These documents are written not as requirements specifications (prescriptive) but as system explanations (descriptive). The analysts must therefore extract infrastructure controller constraints from semi-structured narrative, and some relevant infrastructure constraints may not be mentioned at all.

Once the control structure has been modelled, the next step is to identify unsafe control actions. An unsafe control action (UCA) is a control action that, in a particular context, may lead to a hazard. UCAs are identified by examining each control action in the control structure with a set of guide words to explore circumstances that may lead to hazards, given worst-case assumptions. The guide words are “not provided”, “provided (in error)”, “too early”, “too late”, “out of sequence”, “stopped too soon”, and “applied too long”.
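Mechanically, this examination starts from a grid that pairs each control action with each guide word. The Python sketch below only enumerates those candidate pairs; the action names passed in are hypothetical placeholders, and deciding which candidates are genuinely unsafe in some context remains the analyst's judgement.

```python
from itertools import product

# The seven guide words listed in the text.
GUIDE_WORDS = (
    "not provided",
    "provided (in error)",
    "too early",
    "too late",
    "out of sequence",
    "stopped too soon",
    "applied too long",
)


def candidate_ucas(control_actions):
    """Pair every control action with every guide word.

    Each (action, guide word) pair is only a candidate: the analyst still
    decides, under worst-case assumptions, whether it can lead to a hazard.
    """
    return list(product(control_actions, GUIDE_WORDS))


# Hypothetical action names, for illustration only.
for action, word in candidate_ucas(["create!", "send!"]):
    print(f"{action}: {word}")
```

A model with 18 control actions would yield 18 × 7 = 126 candidate pairs to screen, which is why the screening itself, not the enumeration, is where most of the analysis effort goes.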

The analysis then seeks to identify loss scenarios, i.e., scenarios that may give rise to UCAs. Each loss scenario describes the conditions under which a specific UCA may occur. Loss scenarios are elicited by considering two questions: “why would unsafe control actions occur?” and “why would control actions be improperly executed?”. The first question explores issues related to improper or missing feedback, while the second considers issues related to the execution of control actions.

The final step aligns the constraints in the control structure with the identified loss scenarios to determine whether the controller constraints sufficiently mitigate these scenarios or whether constraints are missing or incorrect. Where loss scenarios are not sufficiently mitigated, there may be multiple alternative ways of adding constraints to controllers in the control structure; these can be pointed out as alternative options for mitigating the hazard. The alignment step may also reveal incorrect constraints. The final output of this activity is a list of missing constraints (including alternatives) and incorrect constraints.
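The bookkeeping of this alignment step can be sketched as follows, assuming the analyst has already recorded, for each loss scenario, which constraints would be sufficient to mitigate it. The `LossScenario` type, the `align` helper and the `NEW-…` constraint IDs are hypothetical; the judgement of sufficiency is entered by hand, and detecting incorrect constraints is not modelled here.

```python
from dataclasses import dataclass, field


@dataclass
class LossScenario:
    uca: str   # e.g. "UCA 1.1"
    text: str  # analyst's description of the scenario
    # Constraint IDs the analyst judges sufficient to mitigate this scenario:
    # either already extracted (e.g. "CC1") or newly proposed (hypothetical "NEW-1").
    mitigations: set = field(default_factory=set)


def align(scenarios, extracted):
    """Return (unmitigated UCAs, candidate missing constraints).

    A scenario counts as mitigated if at least one of its mitigations was
    extracted from the conformance profile or the system specification.
    """
    unmitigated, missing = set(), set()
    for s in scenarios:
        if s.mitigations & extracted:
            continue
        unmitigated.add(s.uca)
        # Every proposed mitigation is kept, so alternatives stay visible.
        missing |= s.mitigations - extracted
    return sorted(unmitigated), sorted(missing)


extracted = {"CC1", "CC24", "CC25"}
scenarios = [
    LossScenario("UCA 1.1", "illustrative scenario", {"CC1", "CC24", "CC25"}),
    LossScenario("UCA 6.3", "orders routed out of sequence", {"NEW-1", "NEW-2"}),
]
print(align(scenarios, extracted))
```

Keeping both `NEW-1` and `NEW-2` in the output mirrors the method's intent of presenting alternative mitigations rather than committing to one.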

We evaluated the effectiveness of the STPA-based hazard analysis method described above in the context of an HIE interoperability initiative in British Columbia (B.C.), Canada. The B.C. Clinical Data eXchange (CDX) system is an HL7 CDA-based25 HIE for clinical information systems, including electronic medical record (EMR) systems, hospital information systems, and laboratory information systems. The CDX system infrastructure is operated by the Interior Health Authority but also provides services to the Northern Health Authority and the Vancouver Coastal Health Authority. Detailed information on the CDX system, including its technical specifications, is publicly available at www.bccdx.ca. Health IT vendors wanting to participate in the CDX system must pass conformance testing to demonstrate their software’s compliance with a published conformance profile.26 The overall HIE infrastructure is described in a separate document.27 We selected the CDX system for our experiment because we were collaborating with the CDX team on developing CDX capabilities in a major open source EMR system used in Canada (OSCAR).28 The CDX system supports different clinical workflows, including e-referrals, consultations, laboratory data exchange, and general progress updates. In order to contain the scope of our experiment, we focussed the hazard analysis on the e-referral ordering workflow, in which a primary caregiver sends a referral order (including directions and medical information about the patient) to a secondary caregiver. We had no knowledge of incomplete or incorrect safety requirements in the CDX conformance profile prior to our analysis. The hazard analysis method was executed by one researcher (first author) and its results were checked by another researcher (second author).
For the purpose of this study, we consider the proposed hazard analysis method as effective if it indicates the existence of unmitigated hazards due to missing or incorrect constraints in the CDX conformance profile.

Results

The results of the hazard analysis study are summarized in Figure 3. When scoping the analysis to the e-referral ordering process, we identified two general types of losses, five system-level hazards and six system-level constraints to mitigate these hazards (cf. Table 1).

Figure 3.

Summary of study results

Table 1.

Scope of the analysis: System-level losses, hazards and constraints

| Losses | System-level Hazards | System-level Constraints |
|---|---|---|

Figure 4 shows the control structure modeled for the e-referral system: the primary caregiver (PCG) indirectly controls the patient’s health process via the ordering actuator and the secondary caregiver (SCG). Moreover, the PCG receives feedback on the patient’s health process via the reporting sensor. Since the focus of the analysis is limited to the ordering workflow, the ordering actuator has been decomposed but the reporting sensor remains opaque. This decomposition was done without making any assumptions about the design of the participating systems; it was based purely on the information provided in the CDX system specification.27 The elements belonging to the common HIE infrastructure are highlighted with bold frames. The decomposed control structure is described as follows:

Figure 4.

Control Structure

- The PCG creates orders with a software device (referred to as the Order Entry Controller – OEC in Figure 4).
- The OEC sends orders using the CDX middleware (Message Routing Controller – MRC).
- The OEC finds providers and clinics capable of receiving CDX messages using the CDX middleware (Provider and Institution Registry – PIR).
- The SCG displays orders with a software device (referred to as the Order Processing Controller – OPC).
- The OPC checks the CDX middleware for new orders and downloads any messages that have been queued for that clinic.
- The PCG controls an EMR chart for the patient (referred to as P EMR Charting). The OEC can read from and write to it.
- The SCG controls an EMR chart for the patient (referred to as S EMR Charting). The OPC can read from and write to it.
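To make the decomposed control structure concrete, it can be written down as a small directed graph of actions and feedback. In the Python sketch below, the element names follow the list above, but of the edge labels only “create!” and “queue?” appear in this paper; all other labels (“send!”, “find!”, “download!”, and so on) are hypothetical placeholders rather than the labels of Figure 4.

```python
# Elements of the common HIE infrastructure (bold frames in Figure 4).
INFRASTRUCTURE = {"MRC", "PIR"}

# (source, target, label, kind). Only "create!" and "queue?" are taken from
# the paper; every other label is a hypothetical placeholder.
EDGES = [
    ("PCG", "OEC", "create!", "action"),
    ("OEC", "MRC", "send!", "action"),
    ("OEC", "PIR", "find!", "action"),
    ("PIR", "OEC", "providers?", "feedback"),
    ("MRC", "OPC", "queue?", "feedback"),
    ("OPC", "MRC", "download!", "action"),
    ("SCG", "OPC", "display!", "action"),
    ("OPC", "SCG", "orders?", "feedback"),
    ("PCG", "P EMR Charting", "chart!", "action"),
    ("OEC", "P EMR Charting", "write!", "action"),
    ("P EMR Charting", "OEC", "read?", "feedback"),
    ("SCG", "S EMR Charting", "chart!", "action"),
    ("OPC", "S EMR Charting", "write!", "action"),
    ("S EMR Charting", "OPC", "read?", "feedback"),
]


def check_naming(edges):
    """Check the naming convention: actions end in '!', feedback in '?'."""
    suffix = {"action": "!", "feedback": "?"}
    return all(label.endswith(suffix[kind]) for _, _, label, kind in edges)


def actions_of(controller, edges):
    """Control actions issued by a controller."""
    return [lbl for src, _, lbl, kind in edges if src == controller and kind == "action"]


def feedback_to(controller, edges):
    """Feedback received by a controller."""
    return [lbl for _, dst, lbl, kind in edges if dst == controller and kind == "feedback"]


def interface_edges(edges):
    """Edges crossing between the HIE infrastructure and other elements."""
    return [e for e in edges if (e[0] in INFRASTRUCTURE) != (e[1] in INFRASTRUCTURE)]


print(actions_of("PCG", EDGES), feedback_to("OPC", EDGES))
```

The `interface_edges` helper isolates the edges whose constraints may come from either document, which is where conformance-profile and system-specification constraints have to be read together.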

In the list above, we underlined words that give rise to actions or feedback in the control structure. For the same reason, we use exclamation marks (!) and question marks (?) when naming actions and feedback, respectively, in Figure 4. The use of solid arrows for actions and dashed arrows for feedback provides further visual distinction.

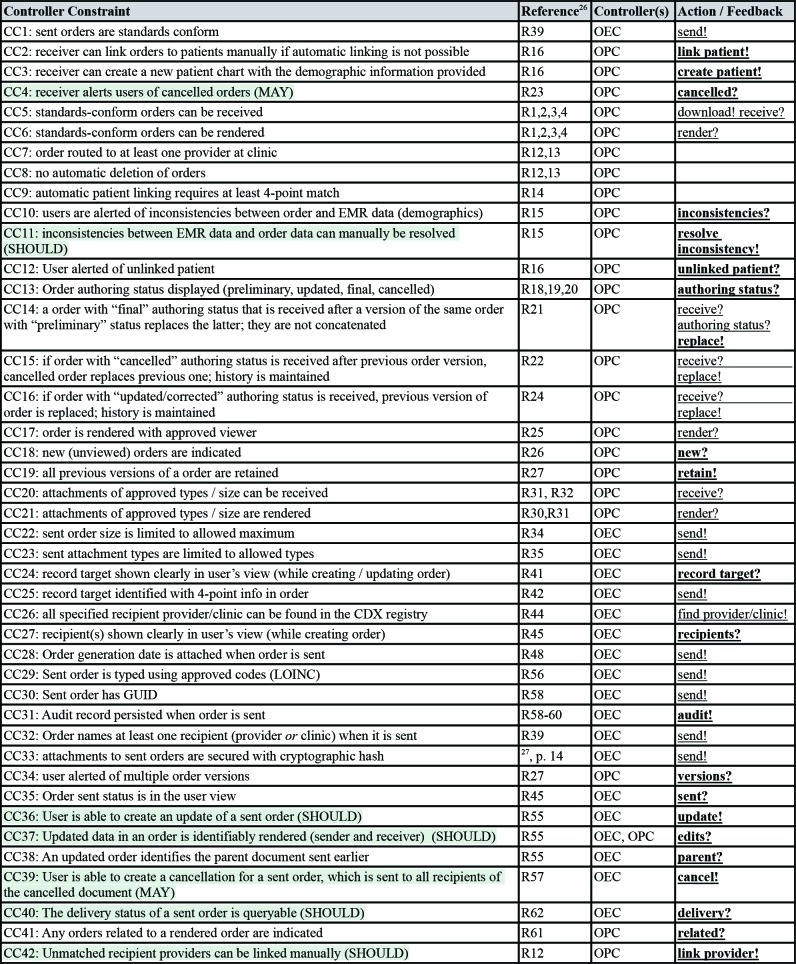

The CDX conformance profile26 includes 62 nominal requirements statements. Not all of these requirements are mandatory: as in many normative standards, the CDX conformance profile uses the words SHALL, SHOULD and MAY to define mandatory, recommended and optional requirements, respectively. Moreover, not all requirements in conformance profiles are safety-relevant; other requirements pertain, for example, to information security and privacy. We extracted 42 safety-relevant controller constraints for the conformance target from the CDX conformance profile, including five mandatory and two optional ones. They are listed in Table 2, along with references to the respective nominal conformance requirements and the associated controller(s), control action(s) and feedback. Non-mandatory constraints are highlighted, and control actions and feedback that were added to the initial control structure are shown in bold.
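Because conformance profiles use controlled language, a first pass at sorting requirement statements by obligation level can be automated. The Python sketch below assumes the upper-case SHALL/SHOULD/MAY convention described above; the example statements are hypothetical and not quoted from the CDX profile. Note that “SHALL NOT” is classified as mandatory (a mandatory prohibition), and deciding safety relevance still requires manual review.

```python
import re
from collections import Counter

# SHALL / SHOULD / MAY -> obligation level, as described in the text.
LEVELS = {"SHALL": "mandatory", "SHOULD": "recommended", "MAY": "optional"}
# Upper-case only, so that ordinary prose ("may", "should") is not counted.
KEYWORD = re.compile(r"\b(SHALL|SHOULD|MAY)\b")


def obligation(statement):
    """Return the obligation level of the first keyword found, or None."""
    match = KEYWORD.search(statement)
    return LEVELS[match.group(1)] if match else None


# Hypothetical requirement statements (not quoted from the CDX profile).
statements = [
    "The EMR SHALL display the sender of a received referral.",
    "The EMR SHOULD notify the user when a referral download fails.",
    "The EMR MAY allow the user to print a received referral.",
    "The EMR SHALL NOT modify a received document.",
]
print(Counter(obligation(s) for s in statements))
```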

Table 2.

Target controller constraints extracted from conformance profile

We extracted 11 safety-relevant controller constraints from the CDX System Specification,27 as listed in Table 3. Notably, two of these constraints can only be enforced by the participating systems and not by the HIE infrastructure (highlighted in Table 3). The first of these constraints (CC43) requires that participating systems regularly check the CDX infrastructure for newly queued messages. This requirement may have been omitted from the CDX conformance profile to allow for systems that offer only manual polling. For such systems, the onus would be on the user (the SCG in our model) to check regularly for new messages. However, the second of these constraints (CC52) should be associated with the conformance target and included in the conformance profile. It requires that the OPC retry any failed or incomplete message downloads. This constraint must be understood in the context of infrastructure constraint CC51, which states that messages are considered delivered (i.e., removed from the list observed by feedback “queue?”) as soon as a participant has attempted a download action.
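Why CC52 matters given CC51 can be shown with a toy simulation. The Python sketch below is a hypothetical model, not the CDX interface: `Infrastructure` delists a message from “queue?” as soon as a download is attempted, even if the attempt fails (CC51), and `poll_cycle` is a hypothetical OPC that either remembers failed attempts and retries them (CC52) or relies on the queue listing alone.

```python
class Infrastructure:
    """Hypothetical stand-in for the message routing middleware (CC51 only)."""

    def __init__(self, messages):
        self.store = dict(messages)    # message id -> payload, retrievable by id
        self.listed = set(self.store)  # ids reported by feedback "queue?"

    def queue(self):
        return sorted(self.listed)

    def download(self, msg_id, fail=False):
        self.listed.discard(msg_id)    # CC51: delisted on *attempt*, even if it fails
        if fail:
            raise ConnectionError(msg_id)
        return self.store[msg_id]


def poll_cycle(infra, pending, fail_ids=frozenset(), retry=True):
    """One polling cycle of a hypothetical OPC; `pending` holds failed ids."""
    candidates = infra.queue()
    if retry:  # CC52: also retry downloads that failed in earlier cycles
        candidates += sorted(pending - set(candidates))
    pending.clear()
    received = {}
    for msg_id in candidates:
        try:
            received[msg_id] = infra.download(msg_id, fail=msg_id in fail_ids)
        except ConnectionError:
            pending.add(msg_id)
    return received


def run(retry):
    infra = Infrastructure({"m1": "referral A", "m2": "referral B"})
    pending = set()
    got = poll_cycle(infra, pending, fail_ids={"m2"}, retry=retry)  # m2 fails once
    got.update(poll_cycle(infra, pending, retry=retry))
    return sorted(got)


print(run(retry=True))   # ['m1', 'm2']
print(run(retry=False))  # ['m1']
```

Without the retry, the failed referral never reappears in “queue?”, so a receiver that trusts the queue listing silently loses it.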

Table 3.

Infrastructure controller constraints extracted from system specification

Figure 5 shows the control structure after adding the actions and feedback from the extracted controller constraints. The updated model includes 18 actions to be examined for identifying unsafe control actions (UCAs). STPA uses a canonical form to denote UCAs: <Source> <Type> <Control Action> <Context> <Hazards>, where <Source> is the responsible controller, <Type> describes the guide word of the action (e.g., “provides”, “does not provide”, “too early”, “too late”, etc.), <Control Action> identifies the action, <Context> describes any context relevant to this UCA, and <Hazards> references the related hazards. For example, consider the following UCA: “PCG provides create! with mis-identified / ambiguous / missing record target [H2]”.
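The canonical form maps naturally onto a small record type. The Python sketch below is one possible encoding, assuming field names of our own choosing; rendering it as a string reproduces the example UCA above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UCA:
    source: str           # responsible controller
    uca_type: str         # guide-word phrase, e.g. "provides", "does not provide"
    control_action: str
    context: str
    hazards: tuple = ()   # related hazard IDs, e.g. ("H2",)

    def __str__(self):
        return (f"{self.source} {self.uca_type} {self.control_action} "
                f"{self.context} [{', '.join(self.hazards)}]")


example = UCA("PCG", "provides", "create!",
              "with mis-identified / ambiguous / missing record target", ("H2",))
print(example)  # PCG provides create! with mis-identified / ambiguous / missing record target [H2]
```

Making the record immutable (`frozen=True`) lets UCAs be used as set members or dictionary keys when linking them to loss scenarios and constraints.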

Figure 5.

Control Structure with added actions and feedback (from extracted constraints)

We identified 74 UCAs using the STPA guide word technique and elaborated each UCA with one or more loss scenarios describing situations in which the UCA may occur. We then aligned the extracted controller constraints with each UCA to determine whether the associated loss scenarios were sufficiently mitigated by the constraints extracted from the conformance profile and system specification. We found that this was not the case for 33 UCAs.

Table 4 shows an excerpt of the results of this analysis activity. The first UCA shown (UCA 1.1) is an example of a case that was found to be sufficiently mitigated by three controller constraints (CC1, CC24 and CC25) in the conformance profile. The other UCAs shown are examples of cases that were not found to be sufficiently mitigated by the extracted constraints. In most cases, the identified loss scenarios could be mitigated by defining additional constraints to be added to the conformance profile. In other cases, the analysis revealed errors in existing conformance requirements. This is the case, for example, for UCA 6.3, which may occur when the HIE infrastructure routes messages in a different order than they were received.

Table 4.

Unsafe control actions, loss scenarios and missing/incorrect conformance constraints

In some cases, whether a UCA can occur depends on assumptions about infrastructure controller constraints. As discussed in the Methods section, in contrast to the constraints related to the conformance target, not all constraints related to the infrastructure may be specified in the System Specification. We therefore confirmed derived assumptions about the HIE infrastructure with the CDX technical team. In the case of UCA 6.3, the CDX team confirmed that the message bus does not guarantee preservation of the sequence of sent orders. This means that UCA 6.3 and its associated loss scenarios are indeed possible. As a mitigation, we suggested corrections to controller constraints CC14-16 (as shown in Table 4).
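The hazard behind UCA 6.3 can be illustrated with a toy receiver. The Python sketch below assumes a hypothetical scenario in which a sender issues an order and then a corrected revision, and the bus delivers them in reverse order. The `revision` field is an assumption for illustration, not a CDX message element, and the revision-based receiver is one generic mitigation, not the correction to CC14-16 proposed in Table 4.

```python
from dataclasses import dataclass


@dataclass
class OrderMessage:
    order_id: str
    revision: int   # hypothetical sender-assigned revision number (not a CDX field)
    content: str


def apply_naive(messages):
    """Last message to arrive wins: unsafe if the bus may reorder messages."""
    state = {}
    for m in messages:
        state[m.order_id] = m
    return {k: v.content for k, v in state.items()}


def apply_by_revision(messages):
    """Keep the highest revision per order, regardless of arrival order."""
    state = {}
    for m in messages:
        current = state.get(m.order_id)
        if current is None or m.revision > current.revision:
            state[m.order_id] = m
    return {k: v.content for k, v in state.items()}


# Revision 1 was sent first and revision 2 second, but they arrive reversed.
arrived = [
    OrderMessage("ref-1", 2, "corrected referral"),
    OrderMessage("ref-1", 1, "original referral"),
]
print(apply_naive(arrived))        # {'ref-1': 'original referral'}
print(apply_by_revision(arrived))  # {'ref-1': 'corrected referral'}
```

The naive receiver ends up displaying the superseded order, which is exactly the kind of loss scenario that remains possible when sequence preservation is neither guaranteed by the infrastructure nor compensated for by the participating systems.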

Altogether, our STPA-based hazard analysis method identified 22 missing and 5 incorrect constraints in the CDX conformance profile.

Discussion

In contrast to other safety-relevant industry sectors, prospective hazard analysis is rarely performed in the context of healthcare IT projects. The socio-technical nature of health information systems has been seen as an impediment when applying traditional, technology-focussed safety engineering methods.9 Therefore, current safety engineering methods in healthcare IT are often limited to evaluating existing systems, for example using clinical simulations17 and usability studies.16

While these methods have been shown to generate valuable results, we believe that prospective hazard analysis methods, which can be applied earlier in the development lifecycle of a health IT system, even at the requirements stage, have an important role to play. The quality (safety) of conformance requirements for interoperability infrastructures should be ensured as far as possible before vendors start implementing them in their products. STPA is a relatively new hazard analysis method that lends itself well to the analysis of socio-technical systems. The method presented in this paper is based on STPA, with extensions specifically geared towards analyzing interoperability conformance profiles. In our experimental case study, it delivered results that can be used to improve the quality (safety) of an interoperability conformance profile.

Critics of hazard analysis methods have raised questions about the reliability and validity of analysis results.29 The question of reliability is concerned with whether different analysts would arrive at the same result. Indeed, we do not claim that a different analysis team would arrive at exactly the same set of missing and incorrect requirements. Whether a specific UCA can be considered “sufficiently mitigated” by a set of constraints depends on human judgement and is likely to vary in some cases between analysts. Nevertheless, our hazard analysis method identified a number of high-profile loss scenarios that are objectively not mitigated given the requirements specified in the CDX conformance profile, e.g., UCA 6.3 and UCA 7.3 in Table 4. The question of validity mainly concerns hazard analysis methods that encompass quantitative measurements of risk scores, which are often based on estimates of the probability, observability and severity of events. The method used in this paper does not employ such measurements and thus avoids this criticism.

At this point, we have only analyzed part of the CDX interoperability workflows, namely the e-referral ordering process. We are currently expanding the scope of our analysis to include additional workflows. Our long-term plan is to confirm the effectiveness of our method with a second, unrelated case study.


References

- 1. HIMSS. What is Interoperability? Healthcare Information and Management Systems Society; 2019. https://www.himss.org/library/interoperability-standards/what-is-interoperability.

- 2. Horsky J, Kuperman GJ, Patel VL. Comprehensive analysis of a medication dosing error related to CPOE. J Am Med Inform Assoc. 2005;12:377–382. doi: 10.1197/jamia.M1740.

- 3. Magrabi F, Ong MS, Runciman W, Coiera E. Using FDA reports to inform a classification for health information technology safety problems. J Am Med Inform Assoc. 2012;19:45–53. doi: 10.1136/amiajnl-2011-000369.

- 4. Mason-Blakley F, Weber J. A systems theory classification of EMR hazards: preliminary results. Stud Health Technol Inform. 2013;183:214–219.

- 5. D’Amore JD, Mandel JC, Kreda DA, et al. Are Meaningful Use Stage 2 certified EHRs ready for interoperability? Findings from the SMART C-CDA Collaborative. J Am Med Inform Assoc. 2014;21:1060–1068. doi: 10.1136/amiajnl-2014-002883.

- 6. Kawamoto K, Honey A, Rubin K. The HL7-OMG healthcare services specification project: motivation, methodology, and deliverables for enabling a semantically interoperable service-oriented architecture for healthcare. J Am Med Inform Assoc. 2009;16:874–881. doi: 10.1197/jamia.M3123.

- 7. Ericson CA II. Hazard Analysis Techniques for System Safety. John Wiley & Sons; 2005. p. 528.

- 8. Joint Commission Resources, Inc. Failure Mode and Effects Analysis in Health Care. 2005. p. 192.

- 9. Weber-Jahnke J, Mason-Blakley F. On the Safety of Electronic Medical Records. Foundations of Health Informatics Engineering and Systems. Lecture Notes in Computer Science. 2012;7151:177–194.

- 10. Leveson N. Engineering a Safer World: Systems Thinking Applied to Safety. MIT Press; 2011. p. 555.

- 11. Application of failure mode and effect analysis for medical technology processes in tertiary hospitals in Manila. AIP Conference Proceedings. AIP Publishing; 2018;2045(1).

- 12. Caudill-Slosberg M, Weeks WB. Case study: identifying potential problems at the human/technical interface in complex clinical systems. Am J Med Qual. 2005;20:353–357. doi: 10.1177/1062860605280196.

- 13. Bonnabry P, Despont-Gros C, Grauser D, et al. A risk analysis method to evaluate the impact of a computerized provider order entry system on patient safety. J Am Med Inform Assoc. 2008;15:453–460. doi: 10.1197/jamia.M2677.

- 14. Zhang J, Johnson TR, Patel VL, Paige DL, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. Journal of Biomedical Informatics. 2003;36:23–30. doi: 10.1016/s1532-0464(03)00060-1.

- 15. Carvalho CJ, Borycki EM, Kushniruk AW. Using heuristic evaluations to assess the safety of health information systems. Stud Health Technol Inform. 2009;143:297–301.

- 16. Kushniruk AW, Borycki EM, Kannry J. Commercial versus in-situ usability testing of healthcare information systems: towards “public” usability testing in healthcare organizations. Stud Health Technol Inform. 2013;183:157–161.

- 17. Kushniruk AW, Borycki EM, Anderson J, Anderson M, Nicoll J, Kannry J. Using clinical and computer simulations to reason about the impact of context on system safety and technology-induced error. Stud Health Technol Inform. 2013;194:154–159.

- 18. Kilbridge PM, Welebob EM, Classen DC. Development of the Leapfrog methodology for evaluating hospital implemented inpatient computerized physician order entry systems. Qual Saf Health Care. 2006;15:81–84. doi: 10.1136/qshc.2005.014969.

- 19. Magrabi F, Baker M, Sinha I, et al. Clinical safety of England’s national programme for IT: a retrospective analysis of all reported safety events 2005 to 2011. Int J Med Inform. 2015;84:198–206. doi: 10.1016/j.ijmedinf.2014.12.003.

- 20. Design effective voluntary medical incident reporting systems: a literature review. Symposium on Human Interface. Springer; 2011.

- 21. Cochon L, Lacson R, Wang A, et al. Assessing information sources to elucidate diagnostic process errors in radiologic imaging: a human factors framework. Journal of the American Medical Informatics Association. 2018;25:1507–1515. doi: 10.1093/jamia/ocy103.

- 22. Palojoki S, Makela M, Lehtonen L, Saranto K. An analysis of electronic health record-related patient safety incidents. Health Informatics J. 2017;23:134–145. doi: 10.1177/1460458216631072.

- 23. Weber JH, Mason-Blakley F, Price M. Information System Hazard Analysis - A method for identifying technology-induced latent errors for safety. Stud Health Technol Inform. 2015;208:342–346.

- 24. Leveson NG. Safety Analysis in Early Concept Development and Requirements Generation. INCOSE International Symposium. 2018;28:441–455.

- 25. Dolin RH, Alschuler L, Boyer S, et al. HL7 Clinical Document Architecture, Release 2. Journal of the American Medical Informatics Association. 2006;13:30–39. doi: 10.1197/jamia.M1888.

- 26. Robertson C, Martens J, DeLeenheer J, Collet I. CDX Conformance Profile 001 EMR System Conformance CDA Level 1. Northern Health / Interior Health; 2017. p. 33. www.bccdx.ca.

- 27. Chapman J, Lott B, Bruce A. Clinical Document Exchange - Technical Overview. Interior Health; 2016. p. 27. www.bccdx.ca.

- 28. Ruttan J. OSCAR. The Architecture of Open Source Applications. 2012. http://aosabook.org/en/index.html.

- 29. Franklin BD, Shebl NA, Barber N. Failure mode and effects analysis: too little for too much. BMJ Qual Saf. 2012;21:607–611. doi: 10.1136/bmjqs-2011-000723.