Abstract

Effective human-robot collaboration in shared autonomy requires reasoning about the intentions of the human partner. To provide meaningful assistance, the autonomy has to first correctly predict, or infer, the intended goal of the human collaborator. In this work, we present a mathematical formulation for intent inference during assistive teleoperation under shared autonomy. Our recursive Bayesian filtering approach models and fuses multiple non-verbal observations to probabilistically reason about the intended goal of the user without explicit communication. In addition to contextual observations, we model and incorporate the human agent’s behavior as goal-directed actions with adjustable rationality to inform intent recognition. Furthermore, we introduce a user-customized optimization of this adjustable rationality to achieve user personalization. We validate our approach with a human subjects study that evaluates intent inference performance under a variety of goal scenarios and tasks. Importantly, the studies are performed using multiple control interfaces that are typically available to users in the assistive domain, which differ in the continuity and dimensionality of the issued control signals. The implications of the control interface limitations on intent inference are analyzed. The study results show that our approach in many scenarios outperforms existing solutions for intent inference in assistive teleoperation, and otherwise performs comparably. Our findings demonstrate the benefit of probabilistic modeling and the incorporation of human agent behavior as goal-directed actions where the adjustable rationality model is user customized. Results further show that the underlying intent inference approach directly affects shared autonomy performance, as do control interface limitations.

Additional Key Words and Phrases: Human Intent Recognition, Intent Inference, Shared Autonomy, Probabilistic Modeling, Assistive Robotics, Assistive Teleoperation, Human-Robot Interaction

1. INTRODUCTION

Human-robot teams are common in many application areas, such as domestic service, search and rescue, surgery, and driving vehicles. In the area of assistive robotics, shared autonomy is utilized to aid the human in the operation of an assistive robot through the augmentation of human control signals with robotics autonomy [13, 19, 23, 30]. One fundamental requirement for effective human-robot collaboration in shared autonomy is human intent recognition. In order to meaningfully assist the human collaborator the robot has to correctly infer the intended goal of the user from a number of potential task-relevant goals—known as the intent inference problem (Figure 1).

Fig. 1.

Schematic of human intent recognition for shared autonomy.

Humans are very good at anticipating the intentions of others with non-verbal communication [11]. Research studies in experimental psychology show that humans demonstrate a strong and early inclination to interpret observed behaviors of others as goal-directed actions [7]. Evidence shows that even young human infants are inclined to interpret certain types of human behaviors as goal-directed actions. For example, a developmental proposition to the question of how the child develops a theory of mind posits that infants learn about the signal value of adult actions because these behaviors predict the locations of interesting objects and events [28]. Such a teleological interpretation of actions in terms of goals could inform us why the action has been performed, i.e., it provides a special type of explanation for the action.

Taking inspiration from psychology and teleological interpretations of actions, we aim to explore how the robotics autonomy can implicitly observe and leverage non-verbal human actions to perform human intent recognition. We focus specifically on human commands provided to a shared-control assistive robotic system through a control interface. Such control command information is readily available, and the operation of these control interfaces is already familiar to end users and does not divert attention from the task execution. Directly leveraging the user’s interaction with the control interface to perform intent recognition could pose critical challenges as a consequence of the limitations of the interface or due to the underlying motor-impairment. We therefore model the user’s interaction with the control interface as probabilistic goal-directed actions, in order to allow for uncertainty in reasoning about the user’s intention.

We consider the user control inputs as representative of the action the user wants to take, and build a probabilistic behavior model with adjustable rationality to inform human intent recognition. We furthermore introduce a user-customized optimization of the adjustable rationality for user personalization, which admits for suboptimality in the human actions due to any number of reasons (discussed further in Section 3.5). For intent recognition, we model the uncertainty over the user’s goal within a recursive Bayesian filtering framework. Our framework allows for the continuous update of the intent probability for each goal hypothesis based on the available observations. Furthermore, it enables the seamless fusion and incorporation of any number of observation sources, allowing the intent inference to leverage rich sources of information. Finally, the framework can explicitly express uncertainty in the resulting inference, which is critical to know in shared autonomy as assistance towards wrong goals could be worse than providing no assistance.1

In this paper, we perform a variety of user studies in different scenarios to evaluate intent recognition and its affect on shared autonomy performance. Our experimental work furthermore evaluates intent inference during novice teleoperation as well as under shared autonomy assistance, and using multiple control interfaces typically employed within the assistive domain, which is first within the field.

In summary, our work makes the following contributions:

It introduces a user-customized optimization of adjustable rationality in a probabilistic model human actions as goal-directed observations for performing intent inference, which thus can account for human action suboptimality within the assistive domain. We demonstrate the benefit of individualized adjustable rationality for intent inference.

It presents a mathematical framework for human intent recognition which enables the seamless fusion of observations from any number of information sources in order to probabilistically reason about the intended goal of the user.

It examines intent inference under multiple control interfaces that typically are utilized within the assistive domain, a first within the field.

It provides a more extensive evaluation of intent inference than is typically seen within the literature, including a larger set of candidate goals, trials with change of intentions, an examination of the impact on shared autonomy performance, comparison to human inference baselines, and evaluation by assistive technology end-users.

The remainder of the paper is organized as follows. Related work is discussed in Section 2. Section 3 casts intent inference within a recursive Bayesian filtering framework and presents our algorithm. Implementation details are provided in Section 4. Details of experimental work are presented in Section V, and discussed further in Section 6 with results. In Section 7, we present the discussion and we conclude with directions for future research in Section 8.

2. RELATED WORK

Intent inference—also referred to as inference of the desired goal, target, action, or behavior—has been investigated under various settings [26]. For instance, intent inference includes human activity recognition in the area of computer vision [1] using spatio-temporal representations, and task executions in surgical telemanipulation systems using Hidden Markov Models [25].

Probabilistic methods often are used to infer unknown intentions from human movements. A latent variable model is proposed to infer the human intentions from an ongoing movement, which is verified for target inference in robot table tennis and movement recognition for interactive humanoid robots [35]. A data-driven approach synthesizes anticipatory knowledge of human motions and subsequent action steps for the prediction of human reaching motion towards a target [31]. Predictive inverse optimal control methods also are utilized to estimate human motion trajectories and shown to work for anticipating the target locations and activity intentions of human hand motions [27].

Instead of human movements and motions, in this work we investigate how shared autonomy systems can take advantage of the indirect signals people implicitly provide when operating a robot through a direct control interface (e.g., a joystick), and what are the implications of the control interface limitations on intent inference. The commercial control interfaces that are accessible to those with motor-impairments are limited in both the dimensionality and continuity of the control signals. Examples include 2- or 3-D control joysticks, and more limited interfaces such as Head Arrays and Sip-N-Puff control interfaces (1-D control). For all, the dimensionality of the control signal is considerably lower than the control space of the robot—making the intent inference problem more challenging.

In shared autonomy, many systems simplify the intent inference problem by focusing on predefined tasks and behaviors or by assuming that the robot has access to the user’s intent a priori [19, 23]. One approach to infer the user’s intent could be to have the user communicate the intended goal explicitly, for example via verbal commands as observations. There exist works that rely on explicit commands from the user to communicate intent via an interface, such as a GUI [34]. However requiring explicit communication from the user could lead to ineffective collaboration and increased cognitive load [12]. To infer intentions and maintain a common understanding of the shared goal during human-robot collaborative tasks, a number of human-robot interaction approaches investigate the use of non-verbal communication including gestures, expressions, and gaze. For example, user gaze patterns are studied to understand human control behavior for shared manipulation [3] and to perform anticipatory actions [14]. The eye gaze data quality can depend on the calibration accuracy and projection method errors of the eye-tracker [14]. Gestures, along with language referential expressions, are utilized for object inference in human-robot interactions [36].

Intent inference in shared autonomy is often represented as a confidence in the prediction of the intended goal, based on instantaneous observations [2, 9, 13]. Some shared-autonomy systems compute a belief over the space of possible goals. A memory-based inference approach utilizes the history of trajectory inputs and applies Laplace’s method about the optimal trajectory between any two points to approximate the distribution over goals [9]. Memory-based inference is utilized in previous works involving shared autonomy and human-robot systems [30] [9] [8]. Another approach uses the Laplace’s approximation [9] and formulates the problem as one of optimizing a Partially Observable Markov Decision Process (POMDP) over the user’s goal to arbitrate control over a distribution of possible outcomes [20]. The approach considers user inputs for the prediction model and uses a hand-specified distance-based user cost function in order to achieve a closed-form value function computation.

In this work, we consider probabilistic modeling of implicit observations and compute a belief over the space of possible goals within a Bayesian filtering framework. In addition to a distance-based likelihood observation used in the prior works, our approach incorporates a fusion of multiple observations, and introduces a probabilistic modeling of user control inputs as goal-directed actions that customizes the rationality index value to each individual user, and thus can account for their particular behavior. The motivation for our approach are prior studies which show that users vary greatly in their performance, preferences and desires [9, 20, 37]—suggesting a need for assistive systems to customize to individual users.

Finally, in the majority of the literature on shared-control systems that perform intent inference, the focus of the experimental work is on the control sharing and robot assistance [2, 13, 21, 30]. The intent inference itself is mostly assessed only implicitly, and seldom the central aim of a study. Inferred intent however can have critical impact on the shared autonomy performance: incorrect predictions can result in unfortunate circumstances including collisions, while imprecise predictions impact the robot’s ability to correctly assist the user towards their intended goal. This in turn can result in large penalties in time and user preference on assistance [9]. In this paper, we therefore present a more extensive evaluation of intent inference than is typically seen within the literature.

3. FRAMEWORK FOR INTENT INFERENCE UNDER SHARED AUTONOMY

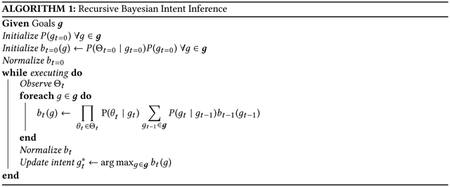

We first mathematically define the intent inference problem and then present our framework (Algorithm 1). Our target domain is assistive robots endowed with shared autonomy that assist the user towards his/her intended goal.

3.1. Problem Formulation

Let the environment have a discrete set of accessible goals2 g, known at runtime to both user and robot. The human’s intended goal is unknown to the robot, and so the robot must infer (predict) the most likely goal g* ∈ g that the user is trying to reach: the intent inference problem. With knowledge of g* the robotics autonomy can meaningfully assist the user in shared autonomy. Note that the user might change his/her intended goal during the execution, and intent inference that updates in real time will allow the assistance provided to dynamically adjust.

3.2. Intent Estimation

We formulate the intent inference problem for shared autonomy as Bayesian filtering in a Markov model, which allows us to model the uncertainty over the candidate goals as a probability distribution over the goals. Bayesian models have shown to be effective for inference in cognitive science [4] and human-robot interaction research [36]. We cast the intent inference problem as a classification task where the robot aims to infer the most likely goal class g* from the set of possible goals g, given a set of observations (features).

We represent the goal gt as the query variable (hypothesis) and the observed features Θ0,…,Θt as the evidence variables, where Θt is a k-dimensional vector of k observations , i = 1 : k, and t represents the current time. For compactness we use colon notation to write Θ1,…,Θt as Θ0:t. The uncertainty over goals is then represented as the probability of each goal hypothesis. The goal probability conditioned on a single observation source θi over t timesteps can be represented by Bayes’ rule as,

| (1) |

where the superscript i has been dropped for notational simplicity, and the posterior probability P (gt | θ0:t) at time t represents the belief bt (g) after taking the single observation source into account, where bt (g) is a single element of the posterior distribution bt. Since the Hidden Markov Model allows for a conditional independence assumption between observations at the current and previous timesteps given the current goal estimate (θt ⊥ θ0:t−1 | gt), we simplify P (θt | gt,θ0:t −1) to P (θt | gt). Applying the law of total probability, the conditional goal probability becomes,

| (2) |

which becomes,

| (3) |

upon applying the definition of conditional probability to P (gt,gt−1 | θ0:t−1) and the Markov assumption, (gt ⊥ θ0:t−1 | gt−1. The computation of bt(g) thus is recursive update, and so encodes memory of prior goal distributions. Furthermore, P (gt | gt−1) is the conditional transition distribution of changing to goal gt at time t given that the goal was gt−1 at time t − 1. The model thus encodes that the user’s intent or goal can change over time.

We now take into consideration multiple observation sources θ1, …,θk as k evidence variables, which can derive from any number of sources—for example, control commands or cues such as eye gaze. We assume the k observations sources to be conditionally independent of each other given a goal g, (θi ⊥ θj | g), ∀i ≠ j. Thus, Equation 3 becomes

| (4) |

We present our algorithm for inferring the probability distribution over goals in Algorithm 1. The posterior distribution at time t, denoted bt, represents the belief after taking observations into account. The set of prior probabilities at t = 0, P (gt=0), ∀g ∈ g, represents the robot’s initial belief over the goals. The beliefs then are continuously updated, by computing the posteriors P (gt | Θ0:t), ∀ g ∈ g, as more observations become available.

Finally, to predict the most likely goal gt* ∈ g, we select the goal class that is most probable according to the maximum a posteriori decision,

| (5) |

3.3. Inference Uncertainty

Within the domain of shared-control assistive robotics, it is important to express uncertainty in the robot’s prediction of the intended goal—because assisting towards the wrong goal could be worse than providing no assistance. We express prediction uncertainty as a confidence computed as the difference between the probability of the most probable and second most probable goals,

| (6) |

When the robot is uncertain about the intended goal of the user, a variety of behaviors might be implemented, for example to hold off on providing assistance or assist towards multiple goals simultaneously, if possible.

3.4. Human Behavior Model as an Observation

We are interested in investigating how we can utilize implicit intent information encoded in the signals people provide to operate a robot. Taking inspiration from psychology and teleological interpretations of actions [7], we aim to explore how the robotics autonomy can implicitly observe and leverage non-verbal human actions to perform human intent recognition. Specifically, we model the user’s interaction with the control interface that they use to operate the robot as probabilistic goal-directed actions, in order to reason about the user’s intention. We consider the user inputs as representative of the actions the user wants to take to reach a goal g. We model these actions as observations using Boltzmann-rationality, which has been shown to explain human behavior on various data sets [15].

We incorporate adjustable rationality in a probabilistic behavior model such that in any state s (robot configuration) the probability that action ug is chosen by a rational human agent to reach goal g is given as,

| (7) |

where Qg (s,ug) denotes the Q-value when the intended goal is g. β is a rationality index (discussed further in Section 3.5) that controls how diffuse are the probabilities. We model Qg (s,ug) as the cost of taking action ug at configuration s and acting optimally from that point on to reach the goal g. We approximate optimal action selection with an autonomy policy, and compute this cost as the agreement between the user control uh and the autonomy control ur to reach the goal g. In our implementation, a policy based on potential fields [22] is employed, and agreement is measured in terms of the cosine similarity, computed as arccos ((uh · u r) / ∥ u h ∥∥ u r ∥) where denotes the dot product and ∥ · ∥ denotes the vector norm. A moving average filter with a two second time window is applied·to the observations, in order to consider a brief history of observations and reduce any undesired oscillations due to noisy or corrective control signals.

3.5. User-customized Optimization for Personalization

A perfectly rational model assumes that a human agent always acts optimally to reach his/her goal, when in reality a number of factors might induce suboptimality—for example, limitations and challenges imposed by the lower degree of freedom (DoF) interfaces (e.g., 3-axis joystick, Sip-N-Puff, Head Array) available to control high-DoF robotic systems, cognitive impairments, physical impairments, and environment factors such as obstacles or distractions. Furthermore, not only do users differ in their abilities but also in the manner in which they interact with the robot. Therefore, a critical detail is to include adjustable rationality in the human action model for user personalization and adaptation.

We introduce a user-customized optimization of adjustable rationality that customizes the value of β to each user. Adjustable rationality is represented by the rationality index parameter β in the Boltzmann policy model (Equation 7). β reflects the robot’s belief about the optimality of the human agent actions. By tuning β, the robot can account for human agents who may behave suboptimally in their actions, and thus improve inference from their actions. In order to find the value of the rationality index for a human agent, we perform an optimization procedure on data gathered while the human teleoperates the robot to reach multiple goals in the environment. In particular, we find the value β* that minimizes the average log-loss for goal inference across a dataset trajectories given the true labels Y for the intended goal and the probability estimates P for the inference over goals,

| (8) |

and the log-loss is computed as,

| (9) |

where M is the number of samples in the trajectory, N is the number of goals, yi,j is a binary indicator of whether or not the prediction j is the correct classification for goal instance i, and pi,j is the probability associated with the goal j at timestep i. The value of β* returned by the optimization procedure is then used to model the human actions using Equation 7, and P (ug | s,g) are used as observations in the inference predictions of Equation

4. IMPLEMENTATION

In this section, we detail our implementations of our intent inference algorithm, and the shared autonomy that utilizes the inferred intent to provide assistance.

4.1. Autonomy Inference

In shared autonomy, often times the only cues available to the system comes from the input commands received from the human user (as discussed above) and, via sensing the robot’s environment. Our approach, Recursive Bayesian Intent Inference (RBII), allows for the seamless fusion of any number of observations to perform human intent recognition. In order to examine how the incorporation of different observation sources affects the intent inference and shared autonomy, we implement two different observation schemes (RBII-1 and RBII-2).

RBII-1:

The first observation scheme considers a single modality, the proximity to a goal, as this feature is utilized most in existing shared autonomy work [9, 13, 21, 30]. We compute proximity θd as the Euclidean distance between the current position of the robot end-effector x and the goal xg. For Algorithm 1, we model the likelihood using the principle of maximum entropy such that given the goal g, the class conditional probability decreases exponentially as the likelihood of g decreases, P (θd | g) ∝ exp(−κ · θd). κ is set to the mean of the range of values that θd can take.

RBII-2:

In the second observation scheme we consider a probabilistic fusion of multiple observations. Specifically, in addition to proximity to the goal we model the actions of the human agent as observations. Following a model of human action from cognitive science [4], we model the user as Boltzmann-rational in their actions to reach a goal g (Section 3.4) and furthermore, perform an optimization to customize the value of adjustable rationality for each user (Section 3.5). In our implementation, the global optimum is approximated using a probabilistic optimization algorithm (simulated annealing [24]). The individualized value of adjustable rationality returned by the optimization procedure is used to model the human actions using Equation 7 and P (ug |s,g) are used as observations by our algorithm to make inference predictions.

Lastly, our approach encodes the possibility that the human’s goal might change during task execution (Section 3.2). We compute the probability of changing goals in the case of n number of goals as,

| (10) |

Note that when Δ = 0, the model represents the case when the user exclusively pursues one goal during the execution. When Δ = (n − 1)/n, the model represents the possibility of choosing a new goal at random at each timestep. Our implementation sets Δ = 0.1, and initializes the probability distribution over goals to be uniform.

4.2. Assistance under Shared Autonomy

In this section, we discuss implementation details of the shared autonomy that utilizes the inferred intent. Blending-based shared autonomy methods combine the user and autonomy control of the robot by some arbitration function that determines the relative contribution of each. Although not optimal for operation in complex safety-concerned environments [33], blending is one of the most used shared autonomy paradigms due to its computational efficiency and effectiveness [9, 13, 29]. Furthermore, it has been shown in subjective evaluations that users tend to prefer blending and find it easier to learn over some other approaches of shared autonomy, for example as compared to probabilistic shared control (PSC) [10] and a POMDP-based policy method [20]. We implement a linear blending-based paradigm to provide assistance,

| (11) |

where uh denotes the user control command, ur the autonomy control command generated under a potential field policy [22], and ublend the shared autonomy command sent to the robot. Note that ur is available in all parts of the robot state space, for every goal g ∈ g such that g is treated as an attractor and all the other goals g \ g as repellers. α ∈ [0, 1] is a blending factor which arbitrates how much control remains with the human user versus the autonomy. In our implementation, α is a piecewise linear function of the confidence in the intent prediction,

| (12) |

where C (g) is defined as in Equation 6, the difference between the highest and second highest probable goals. δ1 is a lower bound on C (g) (set to 30%) below which assistance is not active, δ2 is an upper bound on C (g) (set to 90%), above which assistance is maximal. The upper bound on assistance α is given by δ3 (set to 70%). Note that: (i) Different approaches to intent inference will generate different values for C (g), and so the amount of assistance accordingly will differ. (ii) In particular, if C (g) is lower, meaning that the inference is not very certain in its prediction, the amount of assistance also will be lower. (iii) If the inferred goal is wrong the robot will assist towards the wrong goal, with potentially serious implications.

5. EXPERIMENTS ON INTENT INFERENCE

Our experimental work aims to evaluate the performance of the intent inference algorithm, as well as its impact on shared autonomy. We furthermore are interested in how the control interface used by the human affects the intent inference performance. This is particularly important in the domain of assistive robotics, as traditional joystick control interfaces are often not accessible to people with severe motor impairments who instead use limited control interfaces to operate the robot. In this work, we consider the 3-axis joystick and the 1-D Sip-N-Puff as the representative assistive domain input interfaces. We performed two human subject studies, that aim (i) to characterize the complexity and variability of the intent inference problem, (ii) to compare the inference performance of our approach to existing approaches utilized in prior shared autonomy work, (iii) to evaluate the impact of interface on intent inference, and (iv) to evaluate the impact of inference on shared autonomy assistance.

5.1. Hardware

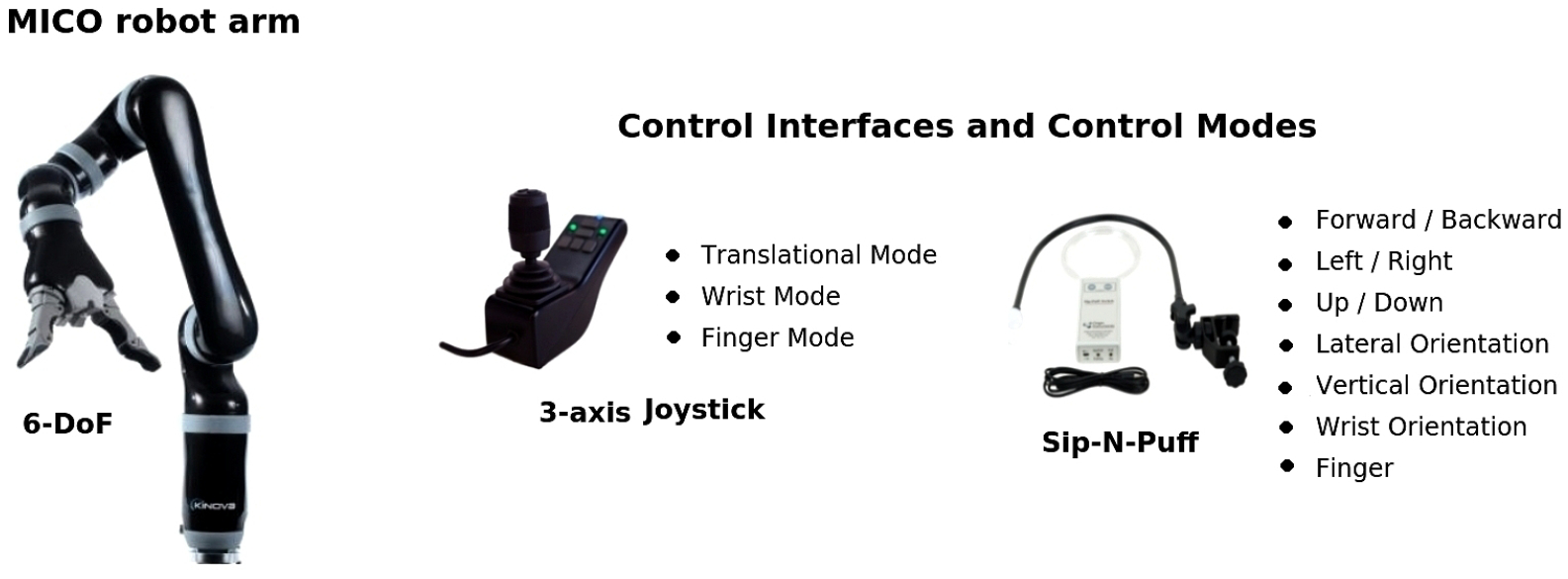

Robot Platform:

Our research platform for the designed experiments was the MICO robotic arm (Kinova Robotics, Canada), a 6-DoF manipulator with a 2 finger gripper (Figure 2). The MICO is a research edition of the JACO arm (Kinova Robotics, Canada) which is used commercially within the assistive domain.

Fig. 2.

MICO robotic arm and the control interfaces used in the study, with illustration of the control modes for the robotic arm teleoperation.

Control Interfaces:

The control interfaces used in the study were (i) a traditional 3-axis joystick (Kinova Robotics, Canada) that have typically been utilized for operating robotic arms, and (ii) a 1-D Sip-N-Puff interface (Origin Instruments, United States) accessible to people with severe motor-impairments. Direct teleoperation using control interfaces, which are lower dimensional than the control space of the robotic arm, requires the user to switch between one of several control modes (mode switching). Modes partition the controllable degrees of freedom of the robot such that each control mode maps the input dimensions to a subset of the arm’s controllable degrees of freedom. The control interfaces and defined control modes are shown in Figure 2. Teleoperation with the 3-axis joystick requires at minimum 3 control modes and the required number of control modes increases to 6 in the case of the Sip-N-Puff interface.

5.2. Software

In addition to the two variants of our algorithm detailed in Section 4.1 (RBII-1 and RBII-2), for comparative purposes we also implement two approaches to intent inference utilized in previous shared autonomy works—Amnesic Inference [2, 9, 13] and Memory-based Inference [8, 9, 20, 30].

Amnesic Inference:

The Amnesic inference approach associates a confidence in the prediction of the user’s goal as a hinge-loss function, where it is assumed that the closer a goal is, the more likely it is the intended goal,

| (13) |

where d is the distance to the goal and D a threshold past which the confidence c (g) is 0. It is possible to design richer confidence functions, but in practice this function often is used for its simplicity. The approach is termed as Amnesic prediction [9], because it ignores all information except the instantaneous observations. In our implementation, d is the Euclidean distance ∥ x − xr ∥ between the current position of robot xr and the goal xg, and D is set to 1.0 m (maximum reach of the MICO robot arm).

Memory-based Inference:

The Memory-based prediction [9] approach is a Bayesian formulation that takes into consideration the history of a trajectory to predict the most likely goal. Let ξx →y denote a trajectory starting at pose x and ending at y. Using the principle of maximum entropy [38], the probability of a trajectory for a specific goal g is given as P (ξ | g) ∝ exp(−cg (ξ)); that is, the probability of the trajectory decreases exponentially with cost. It is assumed that the cost is additive along the trajectory.

| (14) |

Such a solution becomes too expensive to compute in high-dimensional spaces (e.g., for robotic manipulation), and so [9] estimates the most likely goal by approximating the integral over trajectories using Laplace’s method and a first order approximation,

| (15) |

In practice the cost cg is often the Euclidean distance and the goal probabilities are initialized with a uniform prior [21, 30], which we do in our implementation as well.

5.3. Human Inference Study

We first aim to ground the complexity of the intent inference problem through a study of human inference ability, by having a human observer interpret the motion of a robotic arm to infer its intended goal. Humans are very good at anticipating the intentions of others with non-verbal communication [11] and by observation of goal-directed actions [7].

In our experiments, the robot motion trajectories were pre-recorded demonstrations by a human expert operating a 3-axis joystick to reach a goal. Related work has addressed the topic of human interpretation of robot motion trajectories generated by autonomy policies [8] with the aim to generate intent inexpressive trajectories. We however are interested in robot motion generated by human control commands, given our target domain of assistive teleoperation. We study human inference under a variety of goal scenarios of varied complexity (Figure 3, left).

Fig. 3.

Left: Goal scenarios of varied complexity used in human inference study. Right: Example setup shows the robot executing a trajectory to reach a goal. The subject predicts the intended goal during robot motion.

We furthermore are interested in how a change of intent can affect the inference. Our human demonstrator therefore provided two types of motion: (i) No change of intent, were the robot motion maintained a single goal from start to end and (ii) Change of intent, where the robot motion switched during the execution the goal which it was reaching towards.

Design:

The study involved a variety of goal scenarios with varied complexity: in total, 8 different scenarios involving 2 to 4 potential goals (Figure 3, left). For each goal scenario, one change of intent demonstration was recorded (8 in total) as well as no change of intent trajectories for each goal in the scene (22 trajectories in total). We chose a within-subjects design and each subject observed the 30 recorded demonstrated trajectories replayed on the robot.

Subject Allocation:

We recruited 12 subjects without motor-impairments from the local community (5 male, 7 female, aged 19–35). The subjects were novice users and had no prior experience operating a robotic arm. All participants gave their informed, signed consent to participate in the studies, which was approved by Northwestern University Institutional Review Board.

Protocol:

At the start of the experiment, the subjects were given a 5 minute training period in which they observed 1 pre-recorded human teleoperation trajectory towards a random goal in the environment, to get familiarized with the robot motion capabilities. The 30 teleoperated trajectories for the 8 goal scenarios then were executed (re-played) in counterbalanced order on the robot. For each trajectory motion, we tasked the human subjects to observe the motion of the robot and predict which object was the intended goal by verbally mentioning the “Object Name” (e.g.,“cup”) or “Number” (e.g., “one”). The subjects were allowed to change their inference at any time during the robot motion. They were also given the option to express uncertainty about the intended goal, by saying “not sure” or “zero”. Note that by default the inference is registered as uncertain (“zero”) at the start of the trajectory motion. The subject inferences were recorded by the experimenter via a button press. Figure 3 (right) shows the experimental setup for the study.

5.4. Autonomy Inference Study

Our second study aims to evaluate how well the autonomy can infer the intent of a user under novice teleoperation and how the inferred intent affects assistance in shared autonomy.

Design:

We adopted a between-subjects experimental design with two conditions that differed in the control interface type used by the subjects to operate the robot: (i) a 3-axis joystick, and (ii) a 1-D Sip-N-Puff interface. All other elements were same in the two conditions. Four intent inference approaches were evaluated: (i) Amnesic inference, (ii) Memory-based inference, (iii) RBII-1 and (iv) RBII-2. Each computed the inference online as the subject teleoperated the robot to complete tasks. Four different tasks of varied complexity were used to evaluate the intent inference performance (Figure 4). The first 3 tasks involved object retrieval where the goals were the approach grasp pose on the objects. The fourth task involved pouring and placing operations where the goals were tied to the manipulation operations: the pose of the initiation of the pour over the bowls and placing in the dish rack. Fetching objects and manipulation for meal preparation have been identified as the top preferred ADL tasks [5] by end-users.

Fig. 4.

Task scenarios and associated grasp pose on goals used to evaluate intent inference under novice teleoperation (with and without shared autonomy). Note that for Task 3 the two goals on the extreme have the same grasp pose as shown in task 2.

Subject Allocation:

We recruited 24 subjects without motor-impairments (uninjured) from the local community (11 male, 13 female, aged 19–40) and 6 end-user subjects (5 male, 1 female, aged 18–47). Twelve of the subjects without motor-impairments (5 males, 2 females) and 3 of the end-user subjects (2 male, 1 female) used the 3-axis joystick interface, and the remaining subjects used the Sip-N-Puff interface. The end-user group included 4 subjects with spinal cord injury (SCI), 1 with muscular dystrophy (MD), and 1 with cerebral palsy (CP). All participants gave their informed, signed consent to participate in the studies, which was approved by the Northwestern University Institutional Review Board.

Protocol:

At the start, the subjects were given a 10 minute training period in which they become familiar with using the control interface and the robot operation. Next, they teleoperated the robot to perform a training task (a similar setup as shown in Task 3 in Figure 4, but with different positions and orientations of the objects) and this data was used to perform the optimization procedure to tune the rationality index parameter β for each subject (as discussed in Section 3.5). The optimized value β* was used in the RBII-2 approach for intent inference.

There were two stages in the study protocol. In stage one, the subjects teleoperated the robot without assistance. The subjects performed the tasks shown in Figure 4, and they were instructed to (i) complete each goal in every task setup and (ii) for each task perform one additional trial in which they changed goal during the task execution. The change of goal was recorded with a time stamp via a button press. All 4 approaches for intent inference (discussed in Sections 4.1 and 5.2) computed the inference online as the user teleoperated the robot to complete tasks. In stage two, all the previous trials were performed again but now with assistance under shared autonomy. Furthermore, stage two trials were performed twice, once with the RBII-1 approach and once with the RBII-2 approach.

6. ANALYSIS AND RESULTS

We first discuss performance measures and then present the analysis with the results. For each performance measure, one factor repeated measure ANOVA (Analysis of Variance) was performed to determine significant differences (p < 0.05). Once the significance was established, multiple post-hoc pairwise comparisons were performed by using Bonferroni Confidence interval adjustments. For all figures, the notation * implies p < 0.05, * * implies p < 0.01, * * * implies p < 0.001.

6.1. Performance Measures

Percentage of Correct Predictions:

Percent correct predictions is a metric commonly employed in machine learning works. We compute percent correct as the percentage of time (success rate) the inference identified the correct intended goal of the user with confidence (C (g) > 30%).

Cross-Entropy Loss (Log-Loss):

Assessing the uncertainty of a prediction is an important indicator of performance which is not captured by the percent correct metric. The cross-entropy or log-loss considers prediction uncertainty by including the classification probability in its calculation. In the case of N goals, and given the true labels Y for the intended goal and the inference probability estimates P for inference, we calculate the average log-loss across a trajectory as,

| (16) |

where M is the number of samples in the trajectory, yi,j is a binary indicator of whether or not the prediction j is the correct classification for goal instance i, and pi,j is the probability associated with the goal j at timestep i. Note that a perfect inference model would have a log-loss of 0 and that log-loss increases as the predicted probability diverges from the intended goal. Note also that log-loss is unable to be computed for the Amnesic inference, as it is not a probabilistic method.

Task Completion Time:

It is important to consider how quickly the user is able to complete a task with and without assistance. Our intuition is that the intent inference method directly affects the onset of shared autonomy assistance and thus will indirectly affect the task completion time. That is, better inference will result in correct, earlier, and stronger assistance.

Number of Control Mode Switches:

Teleoperation of robotic arms (e.g., 6-D control) using traditional control interfaces, such as 3-axis joystick, require the user to switch between one of several control modes (mode switching) that are subsets of the full control space. Mode switches can become extremely challenging in the assistive domain, wherein the interfaces available to individuals with motor impairments are even more limited (e.g., 1-D Sip-N-Puff). Our intuition is that the intent inference will indirectly affect the number of mode switches required to complete tasks, if earlier assistance results in fewer mode switches.

6.2. Human Inference

Figure 5 shows the performance of human intent inference on the demonstration trajectories. Percentage of time the predictions were (i) correct with confidence (C (g) > 30%), (ii) uncertain (C (g) < 30%), and (iii) incorrect with confidence (C (g) > 30%) are analyzed.

Fig. 5.

Human inference performance on the demonstration trajectories. Plot shows mean and standard error.

The results show that inferring the intended goal of robotic arm motion is a challenging task, even for humans. The human subjects made fewer incorrect predictions as compared to correct predictions, but also were inclined to indicate more uncertainty. Also, the percentage of correct predictions were comparatively lower in the case of the change-of-intent trajectories in which the robot changed the intended goal during motion. Interestingly, the percentage of time inference was uncertain was unaffected by whether there was a change-of-intent.

6.3. Autonomy Inference

6.3.1. Novice teleoperation.

We compared the predictive accuracy of our method against the Amnesic and Memory-based autonomy intent inference techniques. We first analyzed the inference performance with partially observed trajectories.

Figure 6 shows the average percentage time correct and confident predictions with partially observed trajectories. The Amnesic inference performed poorly and failed to get the prediction correct for a higher percentage of time as compared to the other methods. All other methods performed comparatively better, with RBII-2 performing best for the change-of-intent trajectories, the Memory-based approach performing best for trajectories without a change of intent, and RBII-2 consistently outperforming RBII-1. The Memory-based technique utilizes the information about the past trajectory history and struggled to recover from a change of intent towards goals. The superior performance of RBII-2 on the change-of-intent trajectories is attributed to the incorporation of our more sophisticated probabilistic model of the goal-directed user actions in the RBII-2 likelihood model, which enabled the prediction to quickly recover to the correct intended goal.

Fig. 6.

Performance comparison across four autonomy inference methods and two interfaces, during novice teleoperation for the subjects without motor-impairments (top) and end-user subjects (bottom). The percentage of time the prediction is correct & confident as the trajectory progresses is shown (errors are cumulative).

The statistically significant trends were that all techniques significantly outperformed the Amnesic inference technique in all scenarios (p < 0.001), except in the case of end users with the 3-axis joystick and change-of-intent trajectories. For this case, the RBII-2 technique still performed significantly better (p < 0.05 for RBII-1, p < 0.01 for RBII-2), however the Memory-based technique lost its significance. Between the RBII-1, RBII-2 and Memory-based methods, there were no statistically significant differences.

An interesting observation is that although the 3-axis joystick provides more degree of freedom control as compared to a Sip-N-Puff interface, it could also be more difficult to operate for people with motor-impairments (for example, because of limited finger and hand motion or hand tremor) and thus be more prone to noisy signals. Thus, there can be scenarios, when performing intent inference from a higher-dimensional interface is comparatively more challenging, as seen here in our end-user data (for example, under the change of intent trajectories the Memory-based method struggled to get higher percentage time correct predictions much earlier in the trajectory in the case of end users operating the 3-axis joystick).

Figure 7 shows the performance of the autonomy intent inference methods during novice teleoperation (without autonomy assistance), measured in terms of average log-loss with partially observed trajectories. (Note that a perfect inference model would have a log-loss of 0.) The Memory-based technique performed comparatively better in the case of no change-of-intent trajectories, in all scenarios. It was penalized for wrong predictions by the log-loss performance metric in two scenarios: when the user expressed a change of intention during task execution and also when the tasks involved more than two goals. In the case of change-of-intent trajectories, the RBII-2 technique outperformed the other approaches. We saw one case where the RBII-2 technique was unable to outperform RBII-1: the end-user group operating the 3-axis joystick and without a change-of-intent. This further indicates that performing intent inference for the (arguably more informative) 3-axis joystick interface could be more challenging, for some user groups.

Fig. 7.

Performance comparison across the probabilistic inference methods and two interfaces, during novice teleoperation for subjects without motor-impairments (top) and the end-user subjects (bottom). The average log-loss as the trajectory progresses is shown (errors are cumulative).

Figure 8 shows the mean log-loss across complete trajectories. The statistically significant trends were that RBII-2 significantly outperformed the Memory-based approach for nearly all change-of-intent scenarios: control subjects operating both interfaces (p < 0.01) and end-user subjects operating the Sip-N-Puff interface (p < 0.05). (For end users operating the joystick, the trend was observable but not significant.) For all approaches the log-loss was significantly (p < 0.001) higher with a change of intent (unsurprisingly) versus no change of intent.

Fig. 8.

Average log-loss for the autonomy inference methods and two interfaces, during novice teleoperation for subjects without motor-impairments (top) and the end-user subjects (bottom). The average log-loss is shown, with mean and standard error.

6.3.2. Benefit of Adjustable Rationality Model of Human Actions.

We examined how the rationality index parameter affects performance. To test the benefit of optimizing the rationality index for each subject, we computed the average log-loss on each subject’s trajectory set using first that subjects optimized value of the rationality index β* and then the optimized values of each other subject ¬β* (i.e., β is not optimized for the current subject). Figure 9 shows that the optimized values of the rationality index β* resulted in significantly (p < 0.001) lower log-loss as compared to using the not-individualized rationality index values of other subjects ¬β*. This indicates that customizing the rationality index values for the human actions likelihood model to each user results in better intent inference performance. Lastly, we also note that the optimized rationality index values β* were comparatively higher for subjects using 3-axis joystick as compared to the subjects who used the Sip-N-Puff interface, indicating that the operators with the 3-axis joystick were able to provide more rational actions.

Fig. 9.

Benefit of user-customized adjustable rationality in our model of user actions. Note that ¬β* refers to using the not-individualized rationality index values of other subjects.

6.3.3. Effect of Intent Inference on Shared Autonomy Performance.

We analyzed the implications of the underlying intent inference approach on shared autonomy performance (with assistance). For the shared autonomy trials we focused only on our RBII-1 and the RBII-2 techniques.

Figure 10 shows the task completion times with and without assistance. The with-assistance trials involved shared-autonomy operation under the RBII-1 and RBII-2 inference techniques. The without-assistance trials imply direct teleoperation (Teleop). The task completion times were significantly higher (p < 0.05) with the Sip-N-Puff, in all cases as compared to the 3-axis joystick (not marked in figure to reduce visual clutter). Importantly, assistance under both variants of our approach, RBII-1 and RBII-2, had significantly lower (p < 0.001) task completion times than Teleop, in all scenarios. Overall, for both interfaces and subject groups, better and faster inference during shared-autonomy operation with RBII-2 resulted in lower task completion times than RBII-1; and this result was significant (p < 0.05) for the subjects without motor impairments. Likewise, assistance under both inference variants resulted in significantly fewer mode switches (p < 0.001), for both interfaces and subject groups. Again, RBII-2 outperformed RBII-1 on this metric (but not significantly), indicating better assistance informed by the RBII-2 intent inference.

Fig. 10.

Comparison of task completion time and number of mode switches across two variants of our autonomy inference method under shared-control operation (RBII-1, RBII-2) and without autonomy assistance (Teleop), using two interfaces. Mean and standard error over all executions are shown.

6.3.4. Subjective Evaluation.

The subjective evaluation was performed using an experiment survey after each trial to understand how subjects felt about the intent inference performance and the assistance under shared autonomy. It also gave us insight as to whether users experienced performance differences during the robot operation under different intent inference schemes. Results are summarized in Figure 11 and Table I. Scores of 1 and 7 indicate that the responder strongly agrees or disagrees, respectively. There were noticeable difference in subjective ratings for both the intent inference and shared autonomy performance across the two techniques, with RBII-2 consistently rated better than RBII-1. Results further indicate that the subjects, especially the end-user group were satisfied with the intent inference and shared autonomy performance.

Fig. 11.

Subjective evaluation shows the percentage distribution of Likert scores (indicated in color codes).

7. DISCUSSION

The human inference study results indicated that inferring the intended goal of a robot is a challenging task, even for humans. One important takeaway from our study is that humans tend both to make fewer incorrect predictions but also indicate more uncertainty. This has important implications for the assistive domain, since most often providing the wrong assistance is worse than providing no assistance. Thus, there is worth in knowing when the autonomy inference is uncertain and to what extent. The human subjects were also quickly able to switch their prediction in the case of change-of-intent, though with comparatively more incorrect predictions.

The autonomy intent inference methods were evaluated on novice teleoperation with and without shared autonomy assistance, and using two different control interfaces. The Amnesic inference failed to generate the correct prediction with sufficiently high confidence as compared to other techniques. This demonstrates the benefit of using a Bayesian approach for inference, under which taking proper account of the prior information to compute the posterior results in better performance. Memory-based inference that utilizes the information about the past trajectory history resulted in highly confident predictions comparatively earlier in the execution. However, it also more often was wrong in its predictions with high probabilities and unable to recover when the user expressed a change-of-intent, particularly when the tasks involved more than two objects. Some limitations of the Memory-based method are recognized in an exploratory experiment in [9]. RBII-1 has the same observation source for its likelihood model, and performed better than the Memory-based technique in the case of change-of-intent scenarios but worse when there was no change of intent. Overall, RBII-2 outperformed other approaches in terms of faster correct predictions with higher probabilities and responded well to changing user goals. The demonstrated strengths of our approach were the ability to express uncertainty and to quickly respond in the case of change of intentions from the user, which allowed it to quickly correct and recover the robot’s belief from incorrect inferences. In addition to contextual observations, probabilistic modeling and incorporating the human agent’s behavior as goal-directed actions with user-customized optimization of the adjustable rationality improved the overall performance. Furthermore, even under limited interface operation our proposed technique was able to perform well to recognize human intent.

We have shown that with the probabilistic modeling of human actions as goal-directed observations the robotics autonomy can take advantage of indirect signals that the user implicitly provides during shared autonomy, even with lower dimensional interfaces controlling higher-DoF robot systems. The inclusion of adjustable rationality in our model accounts for suboptimal behavior in user actions, resulting in better inference performance. Notably, suboptimal user behavior is inherent to the assistive domain. We also demonstrated the benefit of using an optimized adjustable rationality model for users, which produced better results. One weakness of our approach is that the data likelihood function under the Boltzmann-rationality model is not convex. The non-convexity makes finding optimal model parameters difficult and not efficient computationally. However, in practice finding optimal model parameters was only required once per subject.

Under direct teleoperation, the average task completion time as well as the average number of mode switches were significantly higher in the case of the Sip-N-Puff interface as compared to the 3-axis joystick. The inference predictions utilized to provide assistance in shared autonomy resulted in a significant reduction in the task completion time and the number of mode switches across both interfaces. Our results further verified that the underlying intent inference approach directly affects the assistance and the overall shared autonomy performance. The overall superior performance of RBII-2 as compared to RBII-1 under shared autonomy substantiates the benefit of the probabilistic modeling of human actions in addition to the contextual observations. Better and faster inference under shared autonomy with the RBII-2 technique was able to reduce the average task completion time and the average number of mode switches under shared autonomy. The subjective evaluation results further reinforce our findings. The subjects were satisfied with the intent inference and shared autonomy performance, favoring the RBII-2 technique—especially the end-user group, who are the target population in assistive robotics domain.

We emphasize the importance of thoroughly evaluating the intent inference itself when used for shared autonomy operation. There are implications of the control interface on intent inference, and intent inference affects shared autonomy performance. There is a particular benefit from probabilistic formulation of intent inference within the domain of assistive robotics—having an estimate of prediction uncertainty can be leveraged during shared-control operation, to determine whether and when to provide assistance, and by how much. For superior assistance under shared autonomy, the inference approach should provide correct predictions with high probabilities earlier in task executions.

In future work, the probabilistic modeling of other observations such as spatial goal orientations, visual cues, and distance metrics, as well as the handling of continuous goal regions, could further be explored. The effect of priors (initial goal probability distribution) and the goal transition probability are also interesting directions for future research.

8. CONCLUSIONS

In this work, we presented an intent inference formulation that models the uncertainty over the user’s goal in a recursive Bayesian intent inference algorithm to probabilistically reason about the intended goal of the user without explicit communication. Our approach is able to fuse multiple observations to reason about the intended goal of the user. We introduced a probabilistic behavior model of the user’s actions that incorporates an adjustable rationality and, importantly, an individualized optimization that adapts the rationality index value for user and interface personalization. We performed probabilistic modeling of implicit observations, including our user behavior model and a distance-based likelihood observation. In user studies, we first examined human inference of robot motion, and then evaluated and compared the performance of our algorithm to existing intent inference approaches using multiple control interfaces that are typically available to users in the assistive domain. We furthermore examined the impact of different variants of our inference method on shared control operation. The findings of our study showed that in addition to contextual observations, probabilistic modeling and the incorporation of human agent behavior as goal-directed actions improves intent recognition, and also demonstrate the benefit of optimizing adjustable rationality to individual users. Furthermore, even under limited control interfaces, our proposed technique was able to perform well in recognizing human intent. The results also showed that the underlying intent inference approach directly affects shared autonomy performance, as do control interface limitations.

Table 1.

Subjective Evaluation - Autonomy Inference and Assistance

| Mean Score | ||||

|---|---|---|---|---|

| End-user group | ||||

| The robot was able to correctly infer the intended goal. | 3-axis | RBII-1 | 5.2 ± 1.3 | 6.1 ± 1.0 |

| RBII-2 | 5.7 ± 1.1 | 6.4 ± 0.6 | ||

| Sip-N-Puff | RBII-1 | 5.0 ± 1.1 | 5.5 ± 0.8 | |

| RBII-2 | 5.7 ± 0.7 | 6.3 ± 0.6 | ||

| The assistance from the robot was useful to accomplish the task. | 3-axis | RBII-1 | 5.3 ± 1.2 | 6.7 ± 0.5 |

| RBII-2 | 5.7 ± 1.0 | 6.9 ± 0.2 | ||

| Sip-N-Puff | RBII-1 | 5.0 ± 1.1 | 5.9 ± 0.9 | |

| RBII-2 | 5.8 ± 0.8 | 6.6 ± 0.5 | ||

CCS Concepts:

Mathematics of computing → Probabilistic inference problems;

Probabilistic algorithms; Bayesian computation;

Computing methodologies → Theory of mind;

Computer systems organization → Robotics;

Human-centered computing → Human computer interaction (HCI);

ACKNOWLEDGMENTS

Research reported in this publication was supported by the National Institute of Biomedical Imaging and Bioengineering (NIBIB) and National Institute of Child and Human Development (NICHD) under award numbers R01EB019335 and R01EB024058. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. This work was also supported by grant from U.S. Office of Naval Research under the Award Number N00014-16-1-2247. The authors would also like to thank Jessica Pedersen OTR/L, ATP/SMS for helping with the recruitment of motor-impaired subjects.

Footnotes

This work is a continuation of the work presented in our prior conference paper [18]. In current work, we extend the intent inference evaluation across multiple interfaces that typically are available to people with motor-impairments, include end users in addition to subjects without motor-impairments, and provide a more extensive and detailed evaluation of intent inference. Furthermore, we optimize the adjustable rationality for each user and examine the benefit of the individualized adjustable rationality model of the human actions for inference.

Contributor Information

SIDDARTH JAIN, Northwestern University, USA and Shirley Ryan AbilityLab, USA.

BRENNA ARGALL, Northwestern University, USA and Shirley Ryan AbilityLab, USA.

REFERENCES

- [1].Jake K Aggarwal and Michael S Ryoo. 2011. Human activity analysis: A review. ACM Computing Surveys (CSUR) 43, 3 (2011), 16. [Google Scholar]

- [2].Argall Brenna D. 2016. Modular and adaptive wheelchair automation. In Experimental Robotics. 835–848. [Google Scholar]

- [3].Aronson Reuben M., Thiago Santini, Thomas C. KuÌĹbler, Kasneci Enkelejda, Srinivasa Siddhartha, and Admoni Henny. 2018. Anticipatory robot control for efficient human-robot collaboration. In Proceedings of 13th ACM/IEEE International Conference on Human-Robot Interaction (HRI). [Google Scholar]

- [4].Baker Chris L, Saxe Rebecca, and Tenenbaum Joshua B. 2009. Action understanding as inverse planning. Cognition 113, 3 (2009), 329–349. [DOI] [PubMed] [Google Scholar]

- [5].Chung Cheng-Shiu, Wang Hongwu, and Cooper Rory A. 2013. Functional assessment and performance evaluation for assistive robotic manipulators: Literature review. The journal of spinal cord medicine 36, 4 (2013), 273–289. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Ciptadi Arridhana, Hermans Tucker, and Rehg James M.. 2013. An In Depth View of Saliency. In BMVC. BMVA Press. [Google Scholar]

- [7].Csibra Gergely and Gergely György. 2007. ‘Obsessed with goals’: Functions and mechanisms of teleological interpretation of actions in humans. Acta psychologica 124, 1 (2007), 60–78. [DOI] [PubMed] [Google Scholar]

- [8].Dragan Anca and Srinivasa Siddhartha. 2014. Integrating human observer inferences into robot motion planning. Autonomous Robots 37, 4 (2014), 351–368. [Google Scholar]

- [9].Dragan Anca D and Srinivasa Siddhartha S. 2013. A policy-blending formalism for shared control. The International Journal of Robotics Research 32, 7 (2013), 790–805. [Google Scholar]

- [10].Ezeh Chinemelu, Trautman Pete, Devigne Louise, Bureau Valentin, Babel Marie, and Carlson Tom. 2017. Probabilistic vs linear blending approaches to shared control for wheelchair driving. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR). [DOI] [PubMed] [Google Scholar]

- [11].Goldman Alvin I. 1992. In defense of the simulation theory. Mind & language 7, 1–2 (1992), 104–119. [Google Scholar]

- [12].Goodrich Michael A and Olsen Dan R. 2003. Seven principles of efficient human robot interaction. In Proceedings of IEEE International Conference on Systems, Man and Cybernetics. [Google Scholar]

- [13].Gopinath Deepak, Jain Siddarth, and Argall Brenna D. 2017. Human-in-the-loop optimization of shared autonomy in assistive robotics. IEEE Robotics and Automation Letters 2, 1 (2017), 247–254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [14].Huang Chien-Ming and Mutlu Bilge. 2016. Anticipatory robot control for efficient human-robot collaboration. In Proceedings of 11th ACM/IEEE International Conference on Human-Robot Interaction (HRI). [Google Scholar]

- [15].Andreas Hula P Read Montague, and Peter Dayan. 2015. Monte carlo planning method estimates planning horizons during interactive social exchange. PLoS computational biology 11, 6 (2015), e1004254. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [16].Jain Siddarth and Argall Brenna. 2014. Automated perception of safe docking locations with alignment information for assistive wheelchairs. In Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). [Google Scholar]

- [17].Jain Siddarth and Argall Brenna. 2016. Grasp Detection for Assistive Robotic Manipulation. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [18].Jain Siddarth and Argall Brenna. 2018. Recursive Bayesian Human Intent Recognition in Shared-Control Robotics. In Proceedings of IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [19].Jain Siddarth, Farshchiansadegh Ali, Broad Alexander, Abdollahi Farnaz, Mussa-Ivaldi Ferdinando, and Argall Brenna. 2015. Assistive robotic manipulation through shared autonomy and a body-machine interface. In Proceedings of the IEEE International Conference on Rehabilitation Robotics (ICORR). [DOI] [PMC free article] [PubMed] [Google Scholar]

- [20].Javdani Shervin, Admoni Henny, Pellegrinelli Stefania, Srinivasa Siddhartha S, and Bagnell J Andrew. [n. d.]. Shared autonomy via hindsight optimization for teleoperation and teaming. The International Journal of Robotics Research ([n.d.]), 0278364918776060. [Google Scholar]

- [21].Javdani Shervin, Srinivasa Siddhartha, and Bagnell Andrew. 2015. Shared Autonomy via Hindsight Optimization. In Proceedings of Robotics: Science and Systems. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [22].Khatib Oussama. 1986. Real-time obstacle avoidance for manipulators and mobile robots. In Autonomous robot vehicles. 396–404. [Google Scholar]

- [23].Kim Dae-Jin, Rebekah Hazlett-Knudsen Heather Culver-Godfrey, Rucks Greta, Cunningham Tara, Portee David, Bricout John, Wang Zhao, and Behal Aman. 2012. How autonomy impacts performance and satisfaction: Results from a study with spinal cord injured subjects using an assistive robot. IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans 42, 1 (2012), 2–14. [Google Scholar]

- [24].Scott Kirkpatrick C Gelatt Daniel, and Vecchi Mario P. 1983. Optimization by simulated annealing. Science 220, 4598 (1983), 671–680. [DOI] [PubMed] [Google Scholar]

- [25].Kragic Danica, Marayong Panadda, Li Ming, Okamura Allison M, and Hager Gregory D. 2005. Human-machine collaborative systems for microsurgical applications. The International Journal of Robotics Research (IJRR) 24, 9 (2005), 731–741. [Google Scholar]

- [26].Losey Dylan P, McDonald Craig G, Battaglia Edoardo, and O’Malley Marcia K. 2018. A Review of Intent Detection, Arbitration, and Communication Aspects of Shared Control for Physical Human–Robot Interaction. Applied Mechanics Reviews 70, 1 (2018), 010804. [Google Scholar]

- [27].Monfort Mathew, Liu Anqi, and Ziebart Brian D. 2015. Intent Prediction and Trajectory Forecasting via Predictive Inverse Linear-Quadratic Regulation.. In AAAI. [Google Scholar]

- [28].Moore Chris and Corkum Valerie. 1994. Social understanding at the end of the first year of life. Developmental Review 14, 4 (1994), 349–372. [Google Scholar]

- [29].Muelling Katharina, Venkatraman Arun, Valois Jean-Sebastien, Downey John, Weiss Jeffrey, Javdani Shervin, Hebert Martial, Schwartz Andrew, Collinger Jennifer, and Bagnell Andrew. 2015. Autonomy Infused Teleoperation with Application to BCI Manipulation. In Proceedings of Robotics: Science and Systems. [Google Scholar]

- [30].Muelling Katharina, Venkatraman Arun, Valois Jean-Sebastien, John E Downey Jeffrey Weiss, Javdani Shervin, Hebert Martial, Schwartz Andrew B, Collinger Jennifer L, and Bagnell J Andrew. 2017. Autonomy infused teleoperation with application to brain computer interface controlled manipulation. Autonomous Robots (2017), 1–22. [Google Scholar]

- [31].Pérez-D’Arpino Claudia and Shah Julie A. 2015. Fast target prediction of human reaching motion for cooperative human-robot manipulation tasks using time series classification. In IEEE International Conference on Robotics and Automation (ICRA). [Google Scholar]

- [32].Radu Bogdan Rusu Wim Meeussen, Chitta Sachin, and Beetz Michael. 2009. Laser-based perception for door and handle identification. In Proceedings of the IEEE International Conference on Advanced Robotics. [Google Scholar]

- [33].Trautman Pete. 2015. Assistive planning in complex, dynamic environments: a probabilistic approach. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics (SMC). [Google Scholar]

- [34].Tsui Katherine, Yanco Holly, Kontak David, and Beliveau Linda. 2008. Development and evaluation of a flexible interface for a wheelchair mounted robotic arm. In Proceedings of the 3rd ACM/IEEE international conference on Human robot interaction (HRI). [Google Scholar]

- [35].Wang Zhikun, Mülling Katharina, Deisenroth Marc Peter, Amor Heni Ben, Vogt David, Schölkopf Bernhard, and Peters Jan. 2013. Probabilistic movement modeling for intention inference in human–robot interaction. The International Journal of Robotics Research 32, 7 (2013), 841–858. [Google Scholar]

- [36].Whitney David, Eldon Miles, Oberlin John, and Tellex Stefanie. 2016. Interpreting multimodal referring expressions in real time. In IEEE International Conference on Robotics and Automation (ICRA). [Google Scholar]

- [37].You Erkang and Hauser Kris. 2011. Assisted Teleoperation Strategies for Aggressively Controlling a Robot Arm with 2D Input. In Proceedings of Robotics: Science and Systems. [Google Scholar]

- [38].Ziebart Brian D, Maas Andrew L, Bagnell J Andrew, and Dey Anind K. 2008. Maximum Entropy Inverse Reinforcement Learning. In AAAI. 1433âĂŞ1438. [Google Scholar]