Abstract

In dental diagnosis, recognizing tooth complications quickly from radiographs (e.g., X-rays) requires highly experienced medical professionals. Object detection models and algorithms can make this task much easier and can help less experienced practitioners resolve their doubts when diagnosing a case. In this paper, we propose a dental defect recognition model that integrates an Adaptive Convolutional Neural Network and a Bag of Visual Words (BoVW). In this model, BoVW is used to store the features extracted from images. A purpose-designed Convolutional Neural Network (CNN) model is then used to make quality predictions. To evaluate the proposed model, we collected a dataset of radiographs from 447 patients with third molar complications at Hanoi Medical Hospital, Vietnam. The model achieves an accuracy of 84% ± 4%, which is comparable to that of experienced dentists and radiologists.

Keywords: dental defect recognition, BoVW, radiology, Adaptive Convolutional Neural Network, dental complications

1. Introduction

Pain from dental defects is among the worst pain people experience [1], and it is difficult to identify or diagnose [2] across age groups. Even though a dentist or radiologist can diagnose dental diseases correctly, there are situations where a second confirmation is needed. For instance, when a less experienced dentist, or a radiologist from a different specialty, reads a dental X-ray, a system that performs object recognition with high accuracy can play an important role in supporting the diagnosis. Another common case is workload: an experienced dentist may need only a few seconds to diagnose one X-ray image, but with a few hundred cases to diagnose, the work becomes cumbersome and mistakes become inevitable. In addition, such an automated system can be used to teach student dentists during their housemanship.

Extensive research has been done on diagnosing health problems from medical images. For instance, Wu et al. [3] diagnosed periapical lesions using texture analysis, placing all texture features into a bag of words (BoW) and examining similarities with a K-means nearest neighbor classifier. Zare et al. [4] used the Scale Invariant Feature Transform (SIFT) [5] and Local Binary Patterns (LBP) [6] as descriptors to build a Bag of Visual Words (BoVW), after which images were classified using a Support Vector Machine (SVM). Zare's method achieved higher accuracy in classifying medical images into various categories, and these categorized images have been made public and used by other researchers. This method is similar to Bouslimi's method [7]; the major difference is that Bouslimi applied natural language processing to medical images using a dictionary of Medical Subject Headings (MeSH), a controlled vocabulary for indexing journal articles and books in the medical and life sciences. Notably, Zare and Mohammad Reza et al. [8] used a method similar to Bouslimi's for medical image retrieval, but they proposed three annotation techniques: annotation by binary classification, annotation by probabilistic latent semantic analysis, and annotation by top similar images [7]. All these works focus mainly on radiographic images in the medical field.

There are also deep learning studies on detecting tooth complications. Lee et al. [9] and Li et al. [10] generated suspected tooth-marked regions by producing a bounding box for every suspected tooth-marked region. Lee et al. [9] also extracted feature vectors from the tooth-marked regions (regions of interest, ROIs) instead of from whole tongue images, and finally classified them by training a multiple-instance SVM. Lee et al. [11] combined a pre-trained deep Convolutional Neural Network (CNN) architecture with a self-trained network, using periapical radiographs to determine the optimal CNN algorithm and weights. A pre-trained GoogLeNet Inception v3 network was used to pre-process the images.

Other studies applied deep learning to diagnosing retinal disease (the retina being the innermost layer of the eye): Kermany et al. [12] and Rampasek et al. [13] processed medical images to provide an accurate and timely diagnosis of the key pathology in each image. These works [11,12,13] used pre-trained networks to reduce the computational time needed to predict a result. In cancer detection and diagnosis, Ribli et al. [14] proposed a computer-aided detection (CAD) system, initially developed to help radiologists analyze low-energy screening X-rays (mammograms). Hu et al. [15] applied a standard R-CNN algorithm to cervical images with long follow-up and rigorously defined pre-cancer endpoints to develop a detection algorithm that identifies cervical pre-cancer. Standard CNNs have also been applied to other diseases, such as Parkinson's disease by Thurston [16], while Rastegari et al. [17] applied different machine learning methods, including SVM, Random Forest, and Naïve Bayes, to different feature sets. In addition, they performed Similarity Network Analysis (SMA) to validate the optimal feature set obtained with the MIGMC technique, and their results indicate that feature standardization improved the performance of all classifiers.

There are a few notable applications of BoVW outside the medical community. They vary widely, for example discovering prohibited items in X-ray images, as in Smith et al. [18], where the items are classified into six groups: Gun, Knife, Wrench, Pliers, Scissors, and Hammer. Piñol et al. [19] combined Selective Search with BoVW and CNN to detect handguns in airport X-ray images. In summary, there is no established technique for diagnosing dental disease, especially third molar problems, although existing research addresses every individual step of image processing using digital image processing methods, traditional classification techniques, and deep learning networks.

In this paper, we propose a dental defect recognition model that integrates an Adaptive Convolutional Neural Network and a Bag of Visual Words (BoVW). Because each image is captured by a different radiologist, the images differ in radiation dose, angle, focal position, beam filtration, fan angle, and so on. As a result, the visual features important for a clinical diagnosis are mixed with noisy pixels in the input images. To solve this problem, we apply image filtering and edge detection: first a median filter, then a bilateral filter, followed by Sobel edge detection, so that the resulting image focuses on the ROIs rather than on irrelevant, noisy pixels. After noise removal and image smoothing, we define an ROI.

In this research, we use the Scale-Invariant Feature Transform (SIFT) [20] and Speeded Up Robust Features (SURF) [21,22,23] for feature extraction because they yield more key points, which this task requires. Since these feature extraction methods do not use bounding boxes [5,24], we emulate a bounding box by using a “mask” to ignore certain regions of the image and specifying X and Y positions on the image. All extracted features are stored in a BoVW [23] as distinctive patterns that can be found in an image, and the features are grouped into clusters [21,23]. All of these steps are performed before the features are fed into a neural network or an SVM for training and subsequent prediction.

2. Materials and Methods

2.1. Materials

The dataset used in this research comes from Hanoi Medical University, Vietnam. It includes 447 third molar X-ray images, classified into three groups (‘R8_Lower’, ‘R8_Null’, and ‘R8_Upper_Lower’) according to which part of the dentition is affected by third molars (i.e., by their appearance or how badly tilted they are). All images were labeled and sorted by experienced dentists. Each image was pre-processed by converting it to grayscale and then applying segmentation, edge detection, and ROI masking. The pre-processed images were then passed to four feature extractors: Oriented FAST and Rotated BRIEF (ORB) with BoVW; SURF with BoVW; SIFT with BoVW; and the VGG16 convolutional network for classification and detection. ORB, SURF, and SIFT extracted 2800 features each, while VGG16 extracted 512 features. We did not train the VGG16 feature extractor because we used a pre-trained model; for ORB, SURF, and SIFT, however, the BoVW representation had to be built from the training data before an SVM could be trained on it. To build it, we did the following:

1. Key points and descriptors were extracted using a feature extraction technique (i.e., ORB, SIFT, or SURF).

2. All extracted descriptors were clustered into 2800 clusters using K-means to obtain the visual vocabulary for the BoVW.

3. The visual vocabulary was used to compute each image's BoVW features from its ORB, SIFT, or SURF descriptors.
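Step 2 can be sketched with scikit-learn's `KMeans`. This is a minimal sketch, not the authors' code: it assumes the descriptors are already available as one NumPy array per image, the helper name `build_vocabulary` is hypothetical, and `k` defaults to the 2800 clusters stated above. ORB descriptors are binary, and casting them to floats for Euclidean K-means is a common simplification assumed here.

```python
import numpy as np
from sklearn.cluster import KMeans


def build_vocabulary(descriptor_list, k=2800, seed=0):
    """Cluster the descriptors of all training images into k visual words.

    descriptor_list: list of (n_i, d) arrays, one per image (assumed input format).
    Returns the fitted KMeans model; its cluster_centers_ form the visual vocabulary.
    """
    # Stack the descriptors of every image into a single float matrix.
    # Casting binary ORB descriptors (uint8) to float32 is an assumption.
    all_desc = np.vstack(
        [d for d in descriptor_list if d is not None and len(d)]
    ).astype(np.float32)
    if len(all_desc) < k:
        raise ValueError(f"need at least k={k} descriptors, got {len(all_desc)}")
    kmeans = KMeans(n_clusters=k, n_init=10, random_state=seed)
    kmeans.fit(all_desc)
    return kmeans
```

With 2800 clusters and hundreds of images, full K-means can be slow; scikit-learn's `MiniBatchKMeans` is a drop-in alternative if training time becomes a problem.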

2.2. Method

2.2.1. Architecture

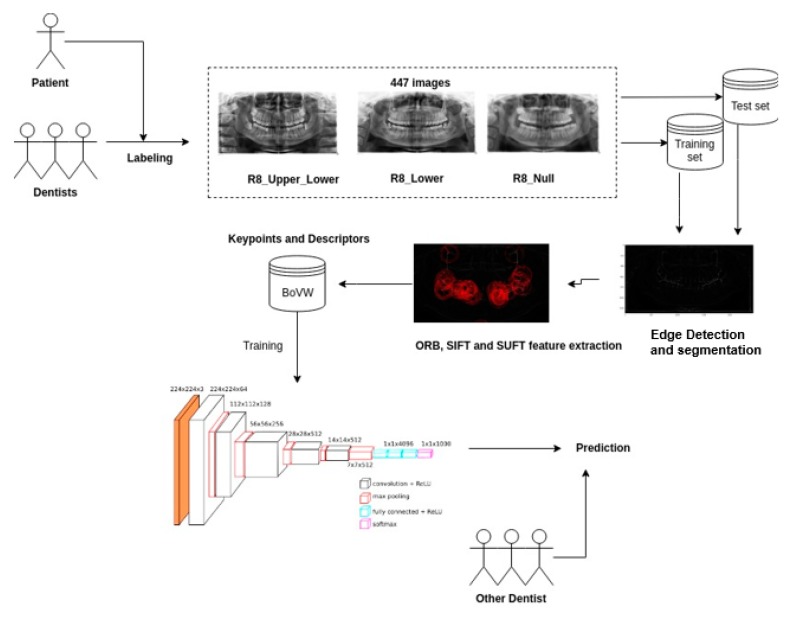

Figure 1 shows the architecture of the procedure used in this study. The first step was data gathering: the dentists provided a properly labeled dataset of 447 third molar X-ray images taken prior to treatment. The dental experts are practicing dentists at Hanoi Medical University.

Figure 1.

The architecture for third molars’ prediction.

Next, we applied image filtering and edge detection to smooth the images and remove unnecessary noise, first with a median filter and then with a bilateral filter, followed by Sobel edge detection. In the image pre-processing step, we then isolated the region of interest (ROI) containing the third molars: the area affected by third molar complications was located with a mask specifying the X and Y coordinates of this region. We then applied the SIFT and SURF algorithms to find the key points and descriptors of each image. All extracted features were saved into a BoVW to prepare for model training. Finally, we fed these BoVW histograms into our designed CNN or an SVM model to make the clinical quality prediction.

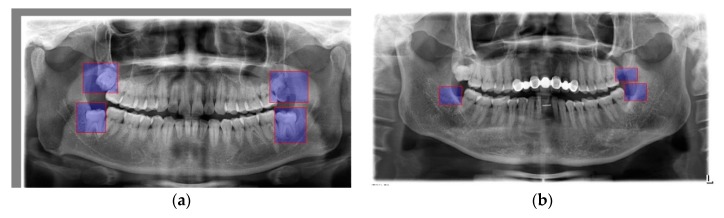

A typical dental radiograph shows four third molars: two on the upper jaw and two on the lower jaw. Figure 2a marks the positions of the third molars with clear annotations, and Figure 2b shows images with missing or treated third molars.

Figure 2.

(a) Third molar positions; (b) treated third molars, where blue boxes mark the affected parts.

2.2.2. ROI Creation

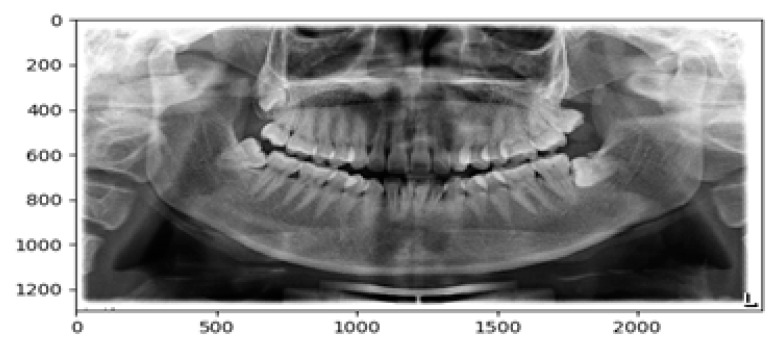

Because jaw structure and tooth positions are similar for most people, the third molars appear in roughly the same area of each radiograph, so we can mark them out by specifying their positions with a mask. The mask does not crop the image; it specifies which region of the image to examine while the irrelevant parts are ignored. After specifying the mask, we drew the ROI using the X and Y coordinates where the third molars are usually located. To obtain this location, we placed the image on a calibrated graph, as shown in Figure 3. All third molars must fall within these two regions, so we could specify the coordinates (rows, then columns) as follows:

Figure 3.

Measuring a dental image where scale bars are measured in pixels.

Full image: {0: rows, 0: cols}

ROI: Image {300: 900, 490: 2200}, i.e., rows 300 to 900 and columns 490 to 2200

These coordinates depend on the image dimensions, but they are valid for our dataset.
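The ROI above can be expressed as a binary mask with NumPy. This is a minimal sketch under the assumption that the image is a 2-D grayscale array; the function name `make_roi_mask` is hypothetical, and the default bounds are the row and column ranges given above, which only fit images of this dataset's dimensions.

```python
import numpy as np


def make_roi_mask(shape, rows=(300, 900), cols=(490, 2200)):
    """Return a uint8 mask that is 255 inside the ROI and 0 elsewhere.

    shape: (height, width) of the grayscale radiograph.
    rows, cols: half-open [start, end) ROI bounds from the calibrated graph.
    """
    mask = np.zeros(shape[:2], dtype=np.uint8)
    # NumPy slicing clips silently at the image border, so a smaller image
    # yields a truncated ROI rather than an error.
    mask[rows[0]:rows[1], cols[0]:cols[1]] = 255
    return mask
```

Consistent with the text, the mask leaves the image uncropped: OpenCV feature detectors accept it as their `mask` argument and simply skip key points where the mask is 0.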

2.2.3. Image Smoothing

Once the ROIs are marked out, we proceed to edge detection. Edge detection is required for two reasons: the next step uses a feature detection technique to mark key points, and radiographs contain a lot of unwanted noise that would otherwise degrade that detection. The edge detection procedure consists of the following steps:

Step 1: Removing noise by blurring most of what might be quantum noise (mottle) with a median filter of kernel size 3.

Step 2: Other noise was removed with a bilateral filter with a pixel-neighborhood diameter of 9, with Sigma-Color and Sigma-Space both set to 75.

Step 3: Then, we applied Sobel edge detection for both X and Y gradient of the image.

Step 4: Finally, we converted the gradients back to uint8 and blended both into a single image.

2.2.4. Feature Extraction

After edge detection, we proceeded to feature extraction. We experimented with multiple feature extraction techniques, namely SURF, SIFT, and ORB [23], because they are faster and detect more features accurately than other feature extraction methods [20]. ORB gave the best results. Feature extraction was applied only within the ROI mask described in Section 2.2.2 (ROI Creation).

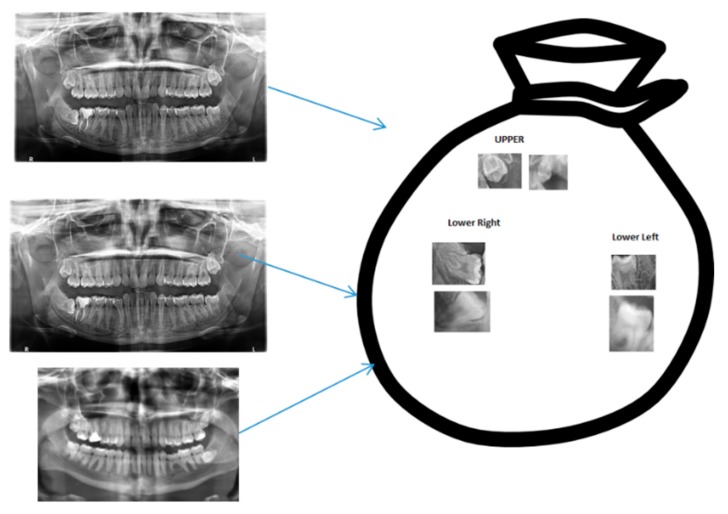

2.2.5. Bag of Visual Words

A BoVW records how frequently each key descriptor from the feature extraction phase occurs. It does not store the positions of these descriptors, although it can retain their orientation. In this paper, we clustered the descriptors with the K-means algorithm, and the center of each cluster then serves as one word of the visual vocabulary. Finally, for each image, we created a histogram of how often each visual word occurs in that image. This histogram is our BoVW. We saved the histograms to a pickle file for use in training our SVM/CNN. Figure 4 shows an abstract representation of a BoVW.

Figure 4.

Key features clustered into groups; blue arrows indicate extraction from the original images into a bag of visual features (words).
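Given a fitted scikit-learn `KMeans` vocabulary, the per-image histogram and the pickle step can be sketched as below. This is an illustration under stated assumptions: the function names are hypothetical, normalizing counts to relative frequencies is our choice (the text only says "frequency"), and the `{"X": ..., "y": ...}` pickle layout is made up for the example.

```python
import pickle

import numpy as np


def bovw_histogram(descriptors, kmeans):
    """Map one image's descriptors to a normalized visual-word histogram."""
    k = kmeans.n_clusters
    if descriptors is None or len(descriptors) == 0:
        return np.zeros(k, dtype=np.float32)
    # Match the dtype the vocabulary was fitted on, then assign each
    # descriptor to its nearest cluster centre (visual word).
    words = kmeans.predict(np.asarray(descriptors, dtype=kmeans.cluster_centers_.dtype))
    # Count the occurrences of each word; positions in the image are discarded.
    hist = np.bincount(words, minlength=k).astype(np.float32)
    return hist / hist.sum()


def save_histograms(histograms, labels, path):
    """Pickle the BoVW matrix and class labels for later SVM/CNN training."""
    with open(path, "wb") as f:
        pickle.dump({"X": np.vstack(histograms), "y": np.asarray(labels)}, f)
```

Normalizing each histogram makes images with many and few key points comparable; raw counts are an equally valid option if all ROIs have the same size.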

2.2.6. Model Training

We train the model using an SVM because of its flexibility. SVMs have several advantages for this task, for example, robustness against over-fitting [25], and they can handle high-dimensional input spaces. One way to avoid such high-dimensional input spaces is to assume that most features are irrelevant, and feature extraction/selection then tries to identify these unnecessary features. However, even a classifier that uses only the worst features performs much better than chance, which suggests that few features are truly irrelevant.
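Training an SVM on the BoVW histograms takes a few lines of scikit-learn. This is a minimal sketch, not the authors' configuration: the RBF kernel matches the classifier list in Section 3, while the `StandardScaler` step, `C=1.0`, and `gamma="scale"` are assumptions, and `train_svm` is a hypothetical name.

```python
import numpy as np
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def train_svm(X, y, C=1.0):
    """Fit an RBF-kernel SVM on BoVW histograms X (n_images, k) with labels y."""
    # Standardizing each visual-word dimension first is an assumed choice.
    model = make_pipeline(StandardScaler(), SVC(kernel="rbf", C=C, gamma="scale"))
    model.fit(X, y)
    return model
```

The labels can be the class names directly (e.g., `'R8_Lower'`), because `SVC` handles string targets.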

2.2.7. Convolutional Neural Network

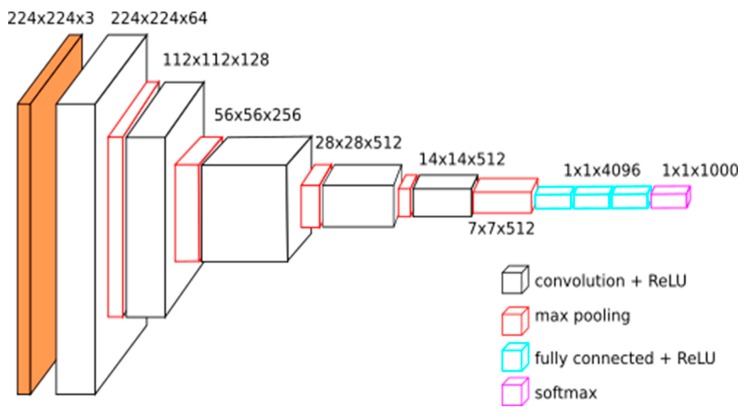

The convolutional neural network (CNN) used in this research contains 16 hidden convolutional layers, a max pooling layer, and fully-connected layers; Figure 5 illustrates the structure of a CNN. Both the convolutional and the fully-connected layers use the Rectified Linear Unit (ReLU) activation function, while the output layer uses a softmax activation function to estimate the probability of each class. The experiments use TensorFlow-backed Keras as the deep learning framework and scikit-learn for classification and evaluation, which reduces the amount of boilerplate code and speeds up the research work.

Figure 5.

The structure of a Convolutional Neural Network (CNN) [26].
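A Keras sketch of a frozen, pre-trained VGG16 feature extractor with a ReLU/softmax head follows. It is an illustration under assumptions, not the authors' exact network: `pooling="max"` yields the 512-dimensional feature vector mentioned in Section 2.1, while the 256-unit hidden layer, the Adam optimizer, and the function name are assumptions. Grayscale radiographs would need to be replicated to three channels and passed through `tf.keras.applications.vgg16.preprocess_input` beforehand.

```python
import tensorflow as tf


def build_vgg16_classifier(num_classes=3, input_shape=(224, 224, 3), weights="imagenet"):
    """Frozen VGG16 convolutional base (512-d after max pooling) + ReLU/softmax head."""
    base = tf.keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=input_shape, pooling="max"
    )
    base.trainable = False  # use the pre-trained layers as a fixed feature extractor
    inputs = tf.keras.Input(shape=input_shape)
    x = base(inputs, training=False)  # (batch, 512) feature vector
    x = tf.keras.layers.Dense(256, activation="relu")(x)  # hidden size is assumed
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(optimizer="adam", loss="sparse_categorical_crossentropy",
                  metrics=["accuracy"])
    return model
```

With `weights="imagenet"` Keras downloads the pre-trained weights on first use. `sparse_categorical_crossentropy` expects integer class indices (0, 1, 2) rather than one-hot labels.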

3. Experiments

The experimental objective is to compare the performance of different feature extraction methods (ORB, SIFT, SURF, and VGG16) combined with the following classifiers:

Logistic Regression

Support Vector Machine (RBF Kernel)

ANN/Multi-Layer Perceptron (1 hidden ReLU layer and 100 nodes)

Decision Tree

Gradient Boosting

Random Forest

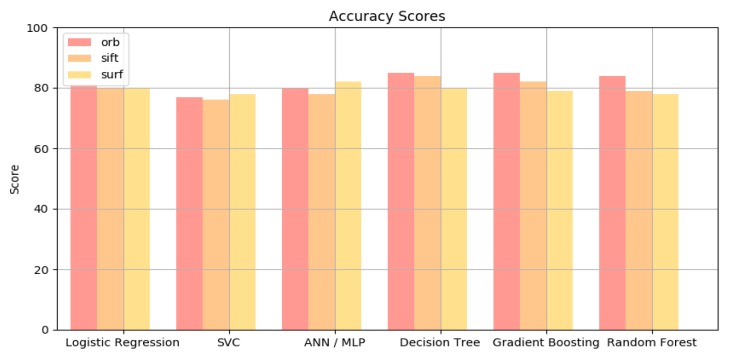

Table 1 shows the results of experiments performed with 10-fold cross-validation. The dataset is split into 10 equal parts; each part in turn is used for validation while the model is trained on the remaining nine. This gives a more reliable estimate of the model's accuracy than a single train/test split. In general, the VGG16 feature extractor performs as well as the ORB, SIFT, and SURF feature extractors even though it produces fewer features (512 vs. 2800). Since VGG16 does not need training, it is more efficient than the ORB, SIFT, or SURF BoVW. Furthermore, a neural network such as VGG16 can run in parallel on a GPU, which gives it an efficiency edge over the BoVW extractors.

The results in this table show that Decision Tree, ANN/MLP, and Gradient Boosting generally achieve higher accuracy than the other classifiers. ORB also achieves the highest accuracy of the three BoVW extractors with every classifier except SVC. We attribute this to ORB having the fastest key-point matching and the best performance among extractors such as SIFT and SURF [27]. On average, the accuracy of the proposed model is slightly higher than that of VGG16, as shown in Figure 6. The SVM model trains in a shorter time, which suits low-performance computers, but its prediction usually takes longer than that of VGG16 (30 s vs. 5 s, respectively). In real-life applications, this time difference may not matter much.

Table 1.

The experimental results by applying different classifiers (notation - stands for “undefined”).

| Classifier | ORB | SURF | SIFT | VGG16 |
|---|---|---|---|---|
| Logistic Regression | 81% | 80% | 80% | - |
| SVC | 77% | 76% | 78% | - |
| ANN / MLP | 83% | 78% | 82% | - |
| Decision Tree | 85% | 84% | 80% | - |
| Gradient Boosting | 85% | 82% | 79% | - |
| Random Forest | 84% | 79% | 78% | - |
| CNN | - | - | - | 84% |

Figure 6.

The comparison of accuracy among related methods.
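The 10-fold comparison in Table 1 can be reproduced in outline with scikit-learn. This sketch is not the authors' script: the classifier settings follow the list at the start of this section where stated (RBF SVM; MLP with one 100-node ReLU layer), while stratified, shuffled folds, `max_iter` values, and random seeds are assumptions, and `compare_classifiers` is a hypothetical name.

```python
import numpy as np
from sklearn.ensemble import GradientBoostingClassifier, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier


def compare_classifiers(X, y, n_splits=10, seed=0):
    """Mean k-fold accuracy of the six classifiers on feature matrix X."""
    classifiers = {
        "Logistic Regression": LogisticRegression(max_iter=1000),
        "SVC": SVC(kernel="rbf"),
        "ANN / MLP": MLPClassifier(hidden_layer_sizes=(100,), activation="relu",
                                   max_iter=1000, random_state=seed),
        "Decision Tree": DecisionTreeClassifier(random_state=seed),
        "Gradient Boosting": GradientBoostingClassifier(random_state=seed),
        "Random Forest": RandomForestClassifier(random_state=seed),
    }
    # Stratified folds keep the class proportions similar in every fold (assumed).
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    return {name: float(cross_val_score(clf, X, y, cv=cv, scoring="accuracy").mean())
            for name, clf in classifiers.items()}
```

Calling it once per extractor's BoVW matrix (ORB, SURF, SIFT) gives one column of a table like Table 1; the standard deviation of the fold scores would give the ± spread.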

4. Conclusions

In this paper, we proposed an Adaptive Convolutional Neural Network and BoVW for dental defect recognition. In BoVW, we represent an image as a set of features consisting of key points and descriptors. Given the applications of feature extraction algorithms and BoVW in medicine as well as in other branches of science, the possibilities are enormous. With this model, predictions can be made quickly and accurately. In the experiments, we collected a dataset of radiographs from 447 patients with third molar complications at Hanoi Medical Hospital, Vietnam. The proposed model achieves an accuracy of 84% ± 4%, which is comparable to that of experienced dentists and radiologists. Misclassification arises because dental images are very similar in their features, and specific methods are needed to overcome this challenge. On the upside, image classification with ORB, SIFT, and SURF performed well. These descriptors are strong tools in the dental field in particular and in the medical field in general, and combined with an SVM classifier they form an efficient and dependable system for classification and prediction.

A modified Hopfield neural network [26] or advanced fuzzy decision making [28] could solve this problem, which warrants further research. Moreover, as in [29,30], the proposed algorithms need to be applied to different datasets. In future work, we will apply the model to a wider range of different, complex dental image datasets to verify its suitability. Other integrations of deep learning and fuzzy computing from our previous studies [28,31,32] would be valuable for achieving favorable outcomes in upcoming research.

Acknowledgments

The authors would like to thank the Department of Science and Technology, Hanoi City, for supporting this work through a major project under grant No. 01C-08/12-2018-3.

Author Contributions

Conceptualization, A.C.A. and V.T.N.N.; methodology, V.T.N.N., L.H.S., and T.T.N.; software, C.N.G. and T.M.T.; formal analysis, V.T.N.N.; investigation, M.T.G.T. and H.B.D.; data curation, C.N.G., M.T.G.T. and H.B.D.; writing—original draft preparation, A.C.A. and T.M.T.; writing—review and editing, L.H.S. and T.T.N. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Ankarali H., Ataoglu S., Ankarali S., Guclu H. Pain Threshold, Pain Severity and Sensory Effects of Pain in Fibromyalgia Syndrome Patients: A new scale study. Bangladesh J. Med. Sci. 2018;17:342–350. doi: 10.3329/bjms.v17i3.36987.
2. Ravikumar K.K., Ramakrishnan K. Pain in the face: An overview of pain of nonodontogenic origin. Int. J. Soc. Rehabil. 2018;3:1.
3. Wu Y., Xie F., Yang J., Cheng E., Megalooikonomou V., Ling H. Medical Imaging 2012: Computer-Aided Diagnosis. Volume 8315. International Society for Optics and Photonics; Washington, DC, USA: Feb, 2012. Computer aided periapical lesion diagnosis using quantized texture analysis; p. 831518.
4. Zare M.R., Müller H. A Medical X-Ray Image Classification and Retrieval System; Proceedings of the Pacific Asia Conference On Information Systems (PACIS); Chiayi, Taiwan. 27 June–1 July 2016; Atlanta, GA, USA: Association for Information System; 2016.
5. Bay H., Tuytelaars T., Van Gool L. Surf: Speeded up robust features; Proceedings of the European Conference on Computer Vision; Amsterdam, The Netherlands. 8–16 October 2016; Berlin/Heidelberg, Germany: Springer; 2016. pp. 404–417.
6. Prakasa E. Texture feature extraction by using local binary pattern. INKOM J. 2016;9:45–48. doi: 10.14203/j.inkom.420.
7. Bouslimi R., Akaichi J. Automatic medical image annotation on social network of physician collaboration. Netw. Model. Anal. Health Inform. Bioinform. 2015;4:10. doi: 10.1007/s13721-015-0082-5.
8. Miao C., Xie L., Wan F., Su C., Liu H., Jiao J., Ye Q. SIXray: A Large-scale Security Inspection X-ray Benchmark for Prohibited Item Discovery in Overlapping Images; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Long Beach, CA, USA. 16–20 June 2019; pp. 2119–2128.
9. Lee J.H., Kim D.H., Jeong S.N., Choi S.H. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J. Dent. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015.
10. Li X., Zhang Y., Cui Q., Yi X., Zhang Y. Tooth-Marked Tongue Recognition Using Multiple Instance Learning and CNN Features. IEEE Trans. Cybern. 2018;49:380–387. doi: 10.1109/TCYB.2017.2772289.
11. Lee J.H., Kim D.H., Jeong S.N., Choi S.H. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J. Periodontal Implant Sci. 2018;48:114–123. doi: 10.5051/jpis.2018.48.2.114.
12. Kermany D.S., Goldbaum M., Cai W., Valentim C.C., Liang H., Baxter S.L., Dong J. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell. 2018;172:1122–1131. doi: 10.1016/j.cell.2018.02.010.
13. Rampasek L., Goldenberg A. Learning from everyday images enables expert-like diagnosis of retinal diseases. Cell. 2018;172:893–895. doi: 10.1016/j.cell.2018.02.013.
14. Ribli D., Horváth A., Unger Z., Pollner P., Csabai I. Detecting and classifying lesions in mammograms with deep learning. Sci. Rep. 2018;8:4165. doi: 10.1038/s41598-018-22437-z.
15. Hu L., Bell D., Antani S., Xue Z., Yu K., Horning M.P., Long L.R. An observational study of deep learning and automated evaluation of cervical images for cancer screening. Obstet. Gynecol. Surv. 2019;74:343–344. doi: 10.1097/OGX.0000000000000687.
16. Kim D., Wit H., Thurston M. Artificial intelligence in nuclear medicine: Automated interpretation of Ioflupane-123 DaTScan for Parkinson’s disease using deep learning; Proceedings of the European Congress of Radiology; Vienna, Austria. 28 February–4 March 2018.
17. Rastegari E., Azizian S., Ali H. Machine Learning and Similarity Network Approaches to Support Automatic Classification of Parkinson’s Diseases Using Accelerometer-based Gait Analysis; Proceedings of the 52nd Hawaii International Conference on System Sciences; Maui, HI, USA. 8–11 January 2019.
18. Smith R.E., Tournier J.D., Calamante F., Connelly A. SIFT: Spherical-deconvolution informed filtering of tractograms. Neuroimage. 2013;67:298–312. doi: 10.1016/j.neuroimage.2012.11.049.
19. Piñol D.C., Reyes E.J.M. Automatic Handgun Detection in X-ray Images using Bag of Words Model with Selective Search. arXiv. 2019:1903.01322.
20. Joachims T. Text categorization with support vector machines: Learning with many relevant features; Proceedings of the European Conference on Machine Learning; Chemnitz, Germany. 21–23 April 1998; Berlin/Heidelberg, Germany: Springer; 1998. pp. 137–142.
21. Karim A.A.A., Sameer R.A. Image Classification Using Bag of Visual Words (BoVW). J. Al Nahrain Univ. Sci. 2018;21:76–82. doi: 10.22401/ANJS.21.4.11.
22. Rublee E., Rabaud V., Konolige K., Bradski G.R. ORB: An efficient alternative to SIFT or SURF; Proceedings of the 2011 International Conference on Computer Vision (ICCV); Barcelona, Spain. 6–13 November 2011; pp. 2564–2571.
23. Tareen S.A.K., Saleem Z. A comparative analysis of sift, surf, kaze, akaze, orb, and brisk; Proceedings of the IEEE 2018 International Conference on Computing, Mathematics and Engineering Technologies (iCoMET); Sukkur, Pakistan. 3–4 March 2018; pp. 1–10.
24. Wojnar A., Pinheiro A.M. Annotation of medical images using the SURF descriptor; Proceedings of the 2012 9th IEEE International Symposium on Biomedical Imaging (ISBI); Barcelona, Spain. 2–5 May 2012; pp. 130–133.
25. Karami E., Prasad S., Shehata M. Image matching using SIFT, SURF, BRIEF and ORB: Performance comparison for distorted images. arXiv. 2017:1710.02726.
26. Hemanth D.J., Anitha J., Mittal M. Diabetic retinopathy diagnosis from retinal images using modified hopfield neural network. J. Med. Syst. 2018;42:247. doi: 10.1007/s10916-018-1111-6.
27. Gultom Y., Arymurthy A.M., Masikome R.J. Batik Classification using Deep Convolutional Network Transfer Learning. J. Ilmu Komput. Dan Inf. 2018;11:59–66. doi: 10.21609/jiki.v11i2.507.
28. Ngan T.T., Tuan T.M., Minh N.H., Dey N. Decision making based on fuzzy aggregation operators for medical diagnosis from dental X-ray images. J. Med. Syst. 2016;40:280. doi: 10.1007/s10916-016-0634-y.
29. Guo P., Xue Z., Long L.R., Antani S. Cross-Dataset Evaluation of Deep Learning Networks for Uterine Cervix Segmentation. Diagnostics. 2020;10:44. doi: 10.3390/diagnostics10010044.
30. Lochman J., Zapletalova M., Poskerova H., Izakovicova Holla L., Borilova Linhartova P. Rapid Multiplex Real-Time PCR Method for the Detection and Quantification of Selected Cariogenic and Periodontal Bacteria. Diagnostics. 2020;10:8. doi: 10.3390/diagnostics10010008.
31. Tuan T.M., Duc N.T., Van Hai P. Dental diagnosis from X-ray images using fuzzy rule-based systems. Int. J. Fuzzy Syst. Appl. 2017;6:1–16.
32. Tuan T.M., Fujita H., Dey N., Ashour A.S., Ngoc V.T.N., Chu D.T. Dental diagnosis from X-ray images: An expert system based on fuzzy computing. Biomed. Signal Process. Control. 2018;39:64–73.