Abstract

Background:

Many children with autism spectrum disorder (ASD) demonstrate atypical responses to multisensory stimuli. These disruptions, which are frequently seen in response to audiovisual speech, may produce cascading effects on the broader development of children with ASD. Perceptual training has been shown to enhance multisensory speech perception in typically developed adults. This study was the first to examine the effects of perceptual training on audiovisual speech perception in children with ASD.

Method:

A multiple baseline across participants design was utilized with four 7- to 13-year-old children with ASD. The dependent variable, which was probed outside the training task each day using a simultaneity judgment task in baseline, intervention, and maintenance conditions, was audiovisual temporal binding window (TBW), an index of multisensory temporal acuity. During perceptual training, participants completed the same simultaneity judgment task with feedback on their accuracy after each trial in easy-, medium-, and hard-difficulty blocks.

Results:

A functional relation between the multisensory perceptual training program and TBW size was not observed. Of the three participants who were entered into training, one participant demonstrated a strong effect, characterized by a fairly immediate change in TBW trend. The two remaining participants demonstrated a less clear response (i.e., longer latency to effect, lack of functional independence). The first participant to enter the training condition demonstrated some maintenance of a narrower TBW post-training.

Conclusions:

Results indicate TBWs in children with ASD may be malleable, but additional research is needed and may entail further adaptation to the multisensory perceptual training paradigm.

Keywords: autism, multisensory integration, perceptual training, audiovisual, plasticity

Autism spectrum disorder (ASD) has historically been defined by deficits in social communication and by the presence of restricted and repetitive patterns of behavior, interests, and activities (American Psychiatric Association [APA], 2000). Recent changes to diagnostic criteria, however, now recognize sensory differences as a core characteristic of ASD (i.e., one of the four restricted/repetitive behavior features; APA, 2013). Children with ASD may show differences in their patterns of responding to information within the individual sensory modalities (e.g., within vision and audition; Baranek, David, Poe, Stone, & Watson, 2006; see Ben-Sasson et al., 2009 for a review). In addition, children on the autism spectrum display differences in how they combine information across multiple sensory modalities (i.e., in their multisensory integration; Murray, Lewkowicz, Amedi, & Wallace, 2016). Of particular note are differences in the ability to combine audiovisual input during natural speech.

A recent meta-analysis by Feldman et al. (2018) synthesized the large extant literature on audiovisual multisensory integration in individuals with ASD, and reinforced the presence of differences in integrating auditory and visual input in individuals on the autism spectrum. One of the most consistent findings was reduced temporal acuity for audiovisual speech (Noel, De Niear, Stevenson, Alais, & Wallace, 2017; Stevenson et al., 2014; Woynaroski et al., 2013), as indexed by larger temporal binding windows (TBWs; i.e., the period of time over which individuals tend to combine what they see and hear into a unitary percept; Wallace & Stevenson, 2014). Abnormally large TBWs can lead to integration of information that may be unrelated, causing confusion and/or requiring increased processing effort (Bahrick & Todd, 2012), and could ultimately result in weaknesses in the ability to accurately comprehend and represent speech cues (Wallace & Stevenson, 2014). It has been proposed that difficulties in integrating audiovisual speech may contribute to the broader range of “higher-level” core- and related-symptoms, such as language impairments, that are seen in ASD (e.g., Cascio, Woynaroski, Baranek, & Wallace, 2016). Concurrent correlations in the extant literature (e.g., Megnin et al., 2012; Mongillo et al., 2008; Patten, Watson, & Baranek, 2014; Righi et al., 2018; Smith, Zhang, & Bennetto, 2017) lend some empirical support to this theory.

If difficulties integrating audiovisual speech contribute to the other deficits seen in children with ASD, then intervening upon differences in multisensory integration, in particular for audiovisual speech, may translate to improved outcomes for children affected by autism (e.g., Baum, Stevenson, & Wallace, 2015; Cascio et al., 2016; Damiano-Goodwin et al., 2018; Feldman et al., 2018). To our knowledge, though, only two attempts at targeting audiovisual speech perception have been described in children with ASD (Irwin, Preston, Brancazio, D’angelo, & Turcios, 2015; Williams, Massaro, Peel, Bosseler, & Suddendorf, 2004); neither of these studies focused on the temporal domain. Thus, there is a pressing need to determine the extent to which temporal acuity (i.e., TBW) for audiovisual speech is malleable or plastic in children with ASD.

A number of perceptual training programs targeting the audiovisual TBW have been assessed in typically developed (TD) adults. These training programs have provided feedback after each trial of either a simultaneity judgment (SJ) task (i.e., wherein participants indicate whether they perceived auditory and visual stimuli to have occurred at the same time; Powers, Hillock, & Wallace, 2009) or a temporal order judgment task (i.e., wherein participants indicate whether they perceived an auditory stimulus or a visual stimulus to have been presented first; Setti et al., 2014). These training programs have been shown to narrow the TBW in TD adults using simple stimuli (i.e., auditory beeps and visual flashes; Powers et al., 2009; Setti et al., 2014; Sürig, Bottari, & Röder, 2018) as well as audiovisual speech stimuli (De Niear, Gupta, Baum, & Wallace, 2018), and to do so in a relatively short period of time (i.e., 4-5 training sessions), particularly when task difficulty is high (De Niear, Koo, & Wallace, 2016). To our knowledge, however, the effects of perceptual training, specifically focused on audiovisual temporal acuity, have not been assessed in children with ASD.

Purpose

This study represents a preliminary effort to examine the extent to which TBWs for audiovisual speech are malleable with perceptual training in children with ASD. The following specific research questions were posed:

Does our perceptual training narrow (i.e., decrease) TBW size for audiovisual speech in school-aged children with ASD compared to baseline conditions?

Do changes in TBW size persist after the training has been withdrawn?

Can the perceptual training be implemented with a high degree of procedural fidelity?

Do participants report that the perceptual training was (a) helpful or (b) enjoyable?

Methods

Our Institutional Review Board approved the recruitment and study procedures. Parents provided written informed consent, and participants provided written assent prior to participation in the study. All children were compensated for participating.

Participants

Participants were four children with ASD between 7 and 14 years old who were enrolled in a large scale study focused on multisensory function in individuals with ASD (see Table 1 for pseudonyms and participant characteristics). Inclusion criteria for this study were (a) diagnosis of ASD according to DSM-5 criteria (APA, 2013) as confirmed by a research-reliable administration of the Autism Diagnostic Observation Schedule 2 Module 3 (Lord et al., 2012) and clinical judgment of a licensed psychologist on the research team, (b) normal hearing and normal or corrected-to-normal vision per screening and parent report, (c) no history of seizure disorders, (d) no diagnosed genetic disorders (e.g., Down syndrome, Fragile X), and (e) demonstrated ability to complete an SJ task. Study eligibility was confirmed by members of the research team (i.e., clinical psychologists, speech-language pathologists) during study visits that occurred 4-7 months prior to the onset of this study as a part of the larger parent project. Exclusion criteria were medication or other intervention changes during the course of the perceptual training study. Two additional participants recruited for this study were subsequently excluded according to this criterion (i.e., unanticipated medication changes during the study); their data are available from the corresponding author upon request.

Table 1.

Participant Characteristics

| Pseudonym | Sex | Race and Ethnicity | Age on Study Day 1 | ADOS CSS | NVIQ |
|---|---|---|---|---|---|
| Nick | M | Black or African American Non-Hispanic | 8;2 | 6 | 103 |
| Jay | M | White Non-Hispanic | 7;6 | 10 | 108 |
| Nelson | M | Black or African American Non-Hispanic | 14;0 | 9 | 93 |
| Carsyn | F | White Non-Hispanic | 8;4 | 9 | 111 |

Notes. ADOS CSS = Calibrated severity score from the Autism Diagnostic Observation Schedule 2 Module 3 (Lord et al., 2012); NVIQ = Nonverbal IQ standard score from the Leiter International Performance Scale-3 (Roid, Miller, Pomplun, & Koch, 2013). Age is presented as years;months. ADOS CSS and NVIQ were collected 4-7 months prior to study onset.

Experimental Design and Analysis

This study used a multiple-baseline across participants design (Gast & Ledford, 2018). In this study design, the introduction of perceptual training was time-lagged, such that the training condition was introduced to the first tier (i.e., participant) after a stable baseline was achieved, and the training was introduced to subsequent tiers after certain conditions were satisfied. The time-lagged introduction allows each participant to serve as their own control, by comparing the data before and after the introduction of the training; subsequent tiers then serve as controls for those tiers that have entered training by remaining in the baseline condition (Gast & Ledford, 2018). This study was carried out in accordance with recommended procedures for conducting a single case experimental research design (i.e., the Single-Case Reporting Guideline In BEhavioural Interventions [SCRIBE]; Tate et al., 2016).

Data analysis was conducted via visual analysis, which is the most commonly used method for data analysis in single case designs (Smith, 2012) and is widely considered to constitute best practice in these designs (Gast & Ledford, 2018; Horner et al., 2005; Kazdin, 2011; Kratochwill, Levin, Horner, & Swoboda, 2014). Visual analysis was completed by a member of the research team (TGW, senior author) who was blind to participant status. The analysis was based on the change in (a) level, (b) trend, and (c) variability of our DV (i.e., TBWs), as well as (d) the immediacy of that change and (e) the lack of covariation between tiers (i.e., participants; Gast & Ledford, 2018).

Setting and Materials

The experimental tasks were completed in a sound- and light-attenuated room (WhisperRoom Inc., Morristown, TN, USA). Visual stimuli were presented on a Samsung Syncmaster 2233RZ 22 inch PC monitor. Auditory stimuli were presented binaurally via Sennheiser HD559 supra-aural headphones. Stimulus presentation was managed by E-prime software. Responses were recorded via keyboard during SJ probes and via a serial response box during perceptual training.

Stimuli

The stimuli used in the SJ probes and perceptual training were videos of a female speaker saying the syllable “ba” at a natural rate and volume with neutral affect. Similar to other stimuli described in the literature (e.g., Quinto, Thompson, Russo, & Trehub, 2010; Woynaroski et al., 2013), these videos were recorded against a neutral background with the speaker’s full face and neck visible. To create asynchronous audiovisual stimuli, the auditory and visual tracks were separated and manipulated in the video editing software Adobe Premiere. Each stimulus video presentation was 1.85s in duration.

Procedure

General procedures.

Participants completed study procedures as part of a larger research camp that took place 3-4 days per week from June 13, 2017 to August 4, 2017. Participants engaged in two 1-hr sessions in the WhisperRoom booths with one of three examiners (JIF, KD, JGC). When participants were in baseline and maintenance conditions, they completed the SJ speech probe first and then completed baseline procedures (see Table 2). When participants were in the training condition, they first completed the perceptual training task and then completed the SJ speech probe.

Table 2.

Description of Procedures by Phase

| Phase | Description of Procedures | Length of Phase-Specific Procedures | Overall Sequence |
|---|---|---|---|
| Baseline | Participants had access to quiet, unisensory (i.e., auditory- or visual-only), nonsocial activities (e.g., simple computer games, card games, music, puzzles, coloring) | Approximately 30-40 minutes | Participants first completed the SJ probe, then baseline activities |
| Training | Participants completed the perceptual training SJ speech task | Approximately 30-40 minutes | Participants first completed the training, then the SJ probe |
| Maintenance | Same as baseline | Approximately 30-40 minutes | Same as baseline |

Note. SJ = Simultaneity judgment. The entire session was completed in a sound- and light-attenuated booth (WhisperRoom Inc., Morristown, TN, USA). Across conditions, the SJ probes consisted of five blocks of a simultaneity judgment of audiovisual speech task and took approximately 20-30 minutes to complete in total.

When participants were not completing research procedures, they had access to a variety of preferred activities (e.g., board and video games, toys, music). No other therapies or interventions were provided by the study team during this time. Parents furthermore did not report that their children participated in any outside interventions such as speech-language therapy or occupational therapy or received any applied behavior analysis consultation or therapy during the timeframe for the study.

SJ probe.

Prior to completing the SJ probe, participants completed one practice round as a comprehension check. During the comprehension check, the participants were shown four videos of the woman saying “ba”: two synchronous (i.e., simultaneous presentation of the auditory and visual components of “ba”), one asynchronous with an auditory stimulus lead of 900ms, and one asynchronous with a visual stimulus lead of 900ms, presented in random order. Participants had to answer all four questions correctly (i.e., press 1 on the keyboard for “same time”/synchronous on synchronous trials, press 2 for “different time”/asynchronous on asynchronous trials) in order to end the comprehension check and progress to the probes.

Participants completed five blocks of the SJ probe. During each block, the videos of the female speaker saying “ba” were presented synchronously and at 14 stimulus onset asynchronies (SOAs; the difference in the relative timing of the initiation of the auditory and visual components of the stimuli, with negative values indicating auditory-first and positive values indicating visual-first): ±500ms, ±400ms, ±350ms, ±300ms, ±250ms, ±150ms, and ±100ms. Each SOA (including synchrony) was presented two times in each block in a random order. Participants were able to select images of preferred media (e.g., Pokémon, Minecraft, Mario), which were presented randomly between the trials to maintain participant engagement. Six total images (from a bank of fifteen per theme) were randomly inserted between SJ video stimuli during each block of the probe, as specified in the E-prime code for the SJ probe. Participants took approximately 20-30 minutes to complete the SJ probe each day, inclusive of breaks between blocks.
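The trial structure described above can be sketched in a few lines of Python. This is only an illustration of the counts given in the text, not the E-prime code used in the study; the names `build_block` and `build_probe` are hypothetical, the seed is arbitrary, and the preferred-media images inserted between trials are left out.

```python
import random

# Asynchrony magnitudes (ms) listed in the text. Negative = auditory-first,
# positive = visual-first; 0 stands for the synchronous presentation.
SOA_MAGNITUDES_MS = [100, 150, 250, 300, 350, 400, 500]
REPEATS_PER_BLOCK = 2
N_BLOCKS = 5


def build_block(rng):
    """Return one randomly ordered block of SOAs (hypothetical helper)."""
    soas = [0] + [sign * m for m in SOA_MAGNITUDES_MS for sign in (-1, 1)]
    trials = soas * REPEATS_PER_BLOCK
    rng.shuffle(trials)
    return trials


def build_probe(seed=None):
    """Return the five blocks that make up one daily probe."""
    rng = random.Random(seed)
    return [build_block(rng) for _ in range(N_BLOCKS)]


probe = build_probe(seed=0)
trials_per_block = len(probe[0])                          # 15 SOAs x 2 repeats
trials_per_soa = sum(block.count(0) for block in probe)   # 2 repeats x 5 blocks
```

Under these counts, each block holds 30 trials and each SOA contributes 10 trials per daily probe, which is the denominator for the rate of perceived synchrony at that SOA.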

Calculation of TBWs.

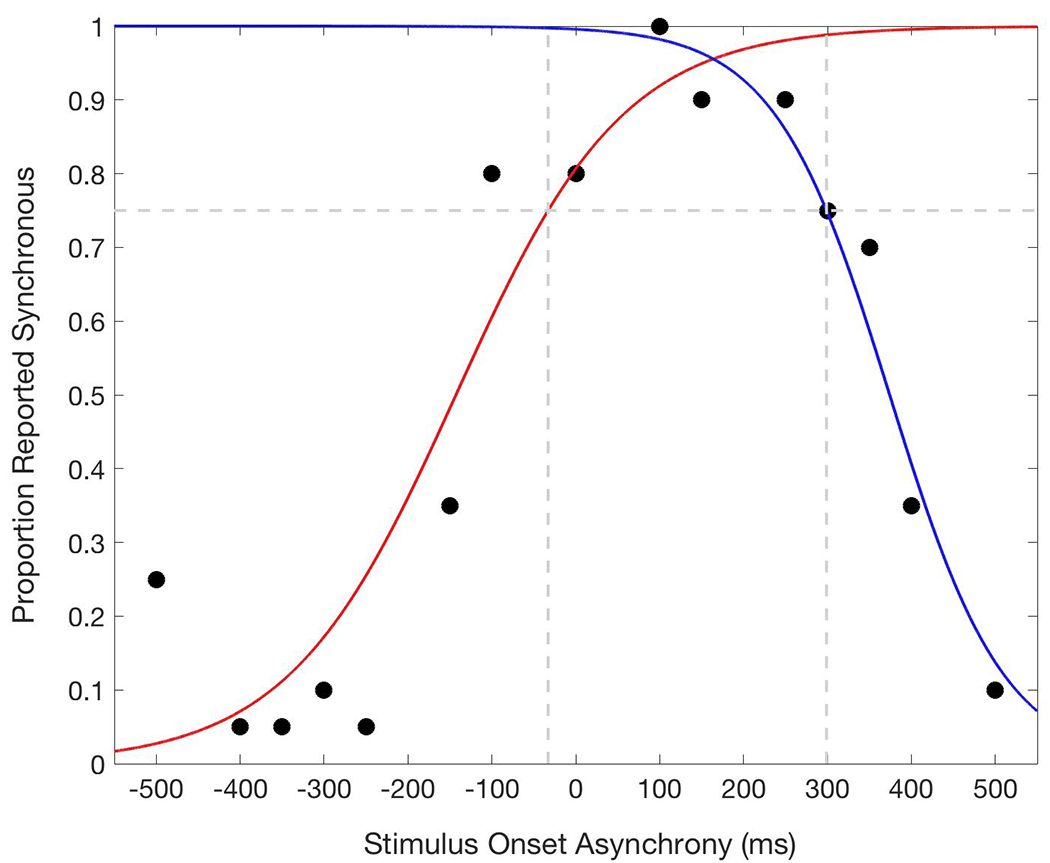

TBWs were derived for each child by fitting his/her rate of perceived synchrony across SOAs in the SJ probes (i.e., the number of times that the child answered “synchronous” over the total number of trials presented for each SOA) to two psychometric functions using the glmfit function in MATLAB (see Powers et al., 2009; Stevenson et al., 2014 for detailed descriptions regarding this approach to the derivation of TBWs), one for auditory-leading (i.e., left; negative SOAs) trials and another for visual-leading (i.e., right; positive SOAs) trials, after normalizing the data (i.e., setting the maximum value to 100%; see Figure 1). The point at which each psychometric curve crossed 75% perceived synchrony defined the left- and right-TBW. The TBW was then calculated as the difference between these values.

Figure 1.

Example temporal binding window (TBW) for a participant in the experiment (i.e., “Carsyn” during a baseline day). The blue line represents the psychometric function fit to the right data points (visual first trials). The red line represents the psychometric function fit to the left data points (auditory first trials). The vertical dotted lines represent the point at which each line reaches .75 accuracy (the horizontal dotted line; i.e., −32.9ms and 298.8ms). The TBW is the distance between these two values (i.e., 331.7ms).
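The final step of the TBW derivation can be illustrated with a short Python sketch. It assumes that each side has already been fit with a two-parameter logistic function (intercept `b0`, slope `b1`) to the normalized data; the fitting itself, done with glmfit in MATLAB in the study, is not reproduced here. The function names and the coefficient values are hypothetical and chosen only for illustration.

```python
import math


def logistic(x, b0, b1):
    """Probability of a 'synchronous' report at SOA x under a logistic fit."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))


def crossing_soa(b0, b1, criterion=0.75):
    """SOA (ms) at which the fitted curve equals `criterion` (b1 must be nonzero)."""
    logit = math.log(criterion / (1.0 - criterion))
    return (logit - b0) / b1


def tbw_width(left_ms, right_ms):
    """TBW as the difference between the right and left 75% crossings."""
    return right_ms - left_ms


# Hypothetical coefficients, not taken from any participant's data.
left_b0, left_b1 = 2.0, 0.02      # auditory-leading side: rises toward 0 ms
right_b0, right_b1 = 4.0, -0.01   # visual-leading side: falls away from 0 ms

left = crossing_soa(left_b0, left_b1)
right = crossing_soa(right_b0, right_b1)
example_tbw = tbw_width(left, right)

# Crossings reported in the Figure 1 caption (-32.9 ms and 298.8 ms).
figure1_tbw = tbw_width(-32.9, 298.8)
```

Applied to the two crossings in the Figure 1 caption, the same subtraction gives 298.8 − (−32.9) = 331.7ms, matching the reported TBW.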

While the participants were in the baseline condition, participants’ TBWs widened. This countertherapeutic trend was expected, to some extent, based on findings from a prior study of TD adults who were repeatedly exposed to SJ speech tasks without perceptual training/feedback (see De Niear et al., 2018). There were occasions when participants’ rates of perceived synchrony could not be fit to two psychometric functions; when possible, the curves were forced to fit that day’s probe data (see Interobserver Reliability), though in most cases the participant’s TBW for that day was considered missing. There were ten total data points (10.9% of data points across participants) that were considered missing.

Baseline procedures.

When participants were in baseline, they engaged in quiet activities in the WhisperRoom (i.e., listening to music; simple computer games such as Tetris, snake, solitaire, and minesweeper; card games such as war, Uno, or memory; reading a book to him/herself; puzzles, coloring, napping) after completing the SJ probe (i.e., for approximately 30-40 minutes). Activities were specifically chosen to be unisensory (i.e., auditory-only or visual-only) and minimally-social, to limit face-to-face exposure to synchronous audiovisual speech during this period, when children were not in training. Participants completed these activities in the WhisperRoom in order to keep other members of the research team and the other participants blind to condition. Participants had access to these activities at other times during the day; thus, they did not lose access to these activities when they were in the training condition.

Perceptual training.

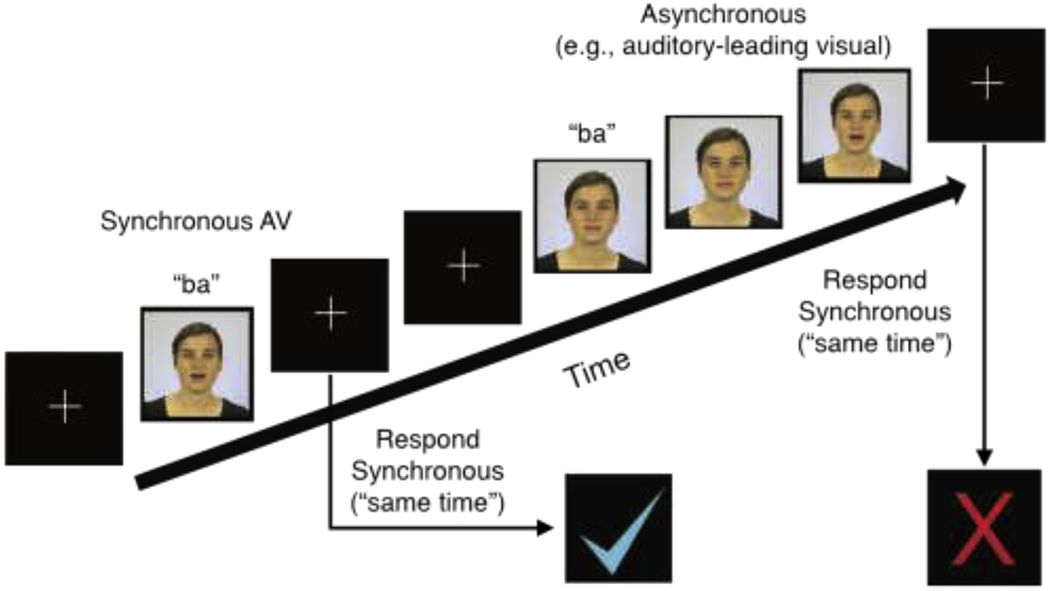

The perceptual training paradigm was set up similarly to the SJ probe, with the addition of computer-delivered feedback after each trial (i.e., a blue check mark following correct responses and a red X following incorrect responses; see Figure 2). Additionally, participants were given a serial response box with clearly labeled “same time” and “different time” buttons to reduce cognitive load during the training.

Figure 2.

Depiction of the perceptual training paradigm. AV = audiovisual.

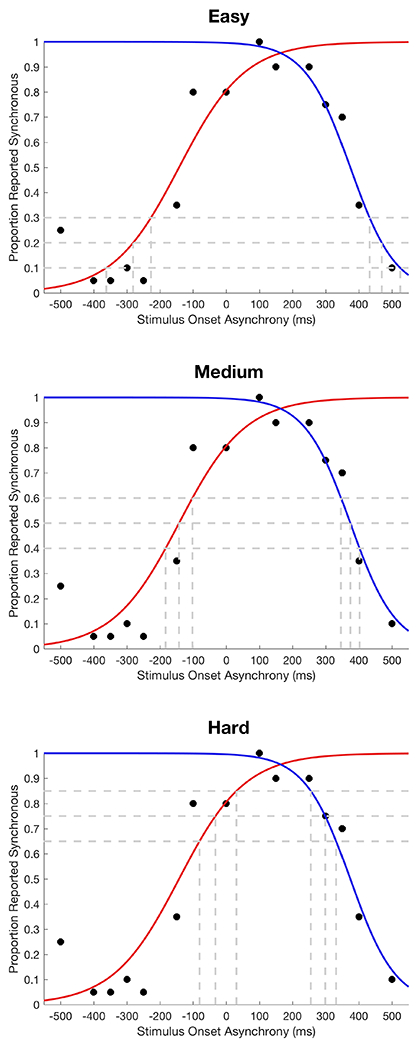

Training difficulty was individualized and adaptive in nature, progressing in each session from relatively easy to relatively more challenging levels established based on each participant’s most recent probe data, in order to establish behavioral momentum while maximizing learning (De Niear et al., 2016; Sürig et al., 2018). Specifically, for each day that participants were in training, their TBW probe data from the day before (or the last definable TBW, in the event of missing data due to poor fit for psychometric curves) were analyzed in order to create three difficulty levels, presented as one level of easy difficulty, two levels of medium difficulty, and four levels of hard difficulty (see Table 3). At all levels of difficulty, 48 trials were presented, 50% of which were synchronous. For the easy condition, the SOAs were chosen at the points where each psychometric curve (left and right) fit to the SJ probe data crossed 10%, 20%, and 30% report of synchrony, with a minimum SOA of 133ms and a maximum SOA of 500ms (see Figure 3). For the medium condition, the SOAs were chosen at 40%, 50%, and 60% report of synchrony, with a minimum SOA of 133ms and a maximum SOA of 400ms. For the difficult condition, the SOAs were chosen at 65%, 75%, and 85% report of synchrony, with a minimum SOA of 133ms and a maximum SOA of 300ms. All training SOAs were rounded to the nearest 50ms or 16.7ms (i.e., one frame difference between the visual and auditory stimuli). Additionally, all training SOAs were presented equally in both auditory-first (negative) and visual-first (positive) trials so the average of all asynchronous trials would equal 0ms (i.e., true synchrony). As with the probe, participants first completed a comprehension check and were able to select images of preferred media to increase motivation; five or six total images were randomly inserted between SJ trials by E-Prime. Participants took approximately 30-40 minutes to complete the perceptual training each day.

Table 3.

Difficulty Levels Utilized in the Perceptual Training Paradigm

| Difficulty | Number of Levels | % Perceived Synchronous | Min SOA | Max SOA |
|---|---|---|---|---|
| Easy | 1 | 10%, 20%, 30% | 133ms | 500ms |
| Medium | 2 | 40%, 50%, 60% | 133ms | 400ms |
| Hard | 4 | 65%, 75%, 85% | 133ms | 300ms |

Note. Number of Levels = the number of times that this condition was presented in each training session; SOA = Stimulus onset asynchrony; % Perceived Synchronous = the level of reported synchrony on psychometric curves fit to participant data and used to derive training SOAs (Note that a small % reported synchronous at non-zero SOAs represents accurate perception of asynchrony). Training SOAs were derived as the percent perceived synchronous, rounded to the nearest multiple of 50ms or 16.7ms (i.e., frame) or to the minimum or maximum values set for that difficulty level.

Figure 3.

Example training stimulus onset asynchronies (SOAs) derived for a participant in the experiment (i.e., “Carsyn” during a baseline day). In the easy condition, the training SOAs would be ±433ms, ±466ms, and ±500ms based on the right curve (blue; based on visual-first trials; note the original value of 516ms was rounded down to the maximum value of 500ms) and ±183ms, ±283ms and ±366ms based on the left curve (red; based on auditory-first trials). In the medium condition, the training SOAs would be ±350ms, ±366ms, and ±400ms based on the right curve and ±133ms, ±150ms and ±183ms based on the left curve (note the original value of 100ms was rounded up to the minimum value of 133ms). In the hard condition, the training SOAs would be ±250ms, ±299ms, and ±300ms based on the right curve (note the original value of 350ms was rounded down to the maximum value of 300ms) and ±133ms based on the left curve (note the original values of 33ms and 83ms were rounded up to the minimum value of 133ms).
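The minimum and maximum limits in Table 3 amount to a simple clamping step, sketched below in Python. The sketch covers only that step and the symmetric presentation of each SOA. Rounding to the nearest 50ms or 16.7ms is not modeled, and the names `clamp_soa` and `signed_pair` are hypothetical rather than taken from the study's scripts.

```python
# Minimum and maximum training SOA magnitudes (ms) per difficulty, from Table 3.
SOA_LIMITS_MS = {
    "easy": (133, 500),
    "medium": (133, 400),
    "hard": (133, 300),
}


def clamp_soa(magnitude_ms, difficulty):
    """Pull an SOA magnitude into the allowed range for a difficulty level."""
    low, high = SOA_LIMITS_MS[difficulty]
    return max(low, min(high, magnitude_ms))


def signed_pair(magnitude_ms):
    """Each magnitude is presented auditory-first (-) and visual-first (+)."""
    return (-magnitude_ms, magnitude_ms)


# Values quoted in the Figure 3 caption.
easy_right = clamp_soa(516, "easy")      # above the easy maximum
medium_left = clamp_soa(100, "medium")   # below the minimum
hard_right = clamp_soa(350, "hard")      # above the hard maximum
hard_left = [clamp_soa(v, "hard") for v in (33, 83)]  # both below the minimum
unchanged = clamp_soa(250, "hard")       # already within range

pair = signed_pair(hard_right)
mean_async_soa = sum(pair) / len(pair)   # symmetric pairs average to 0 ms
```

With the values from the Figure 3 caption, 516ms becomes 500ms, 100ms becomes 133ms, 350ms becomes 300ms, and both 33ms and 83ms become 133ms, which is why the hard condition yields a single SOA from the left curve.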

Social validity.

At the end of the training sessions, participants completed a questionnaire using REDCap (Harris et al., 2009). The questionnaire consisted of five questions, three on a 5-point Likert scale (i.e., “Did you think the game was easy?”, “Did you think this game was fun?”, and “Did you think this game was helpful?”), one yes/no question (i.e., “Would you play this game in your free time?”), and one open-ended question (i.e., “Is there anything else you want to tell us about this game?”). To facilitate participant comprehension, the questions and options were read aloud, and each item on the Likert scale was paired with a picture of a face that matched the option (i.e., an emoji).

Phase changes.

At the end of every day of the study, the probe data were plotted for each participant and presented to the blinded rater, who decided when to implement all phase changes, with certain a priori criteria to guide decision making. For the first participant to enter the perceptual training phase, the data in all tiers had to be stable or in a countertherapeutic trend for three consecutive days. For subsequent participants, the training was introduced (a) after the preceding participant demonstrated a clear therapeutic trend in three out of four consecutive study days, (b) after the preceding participant was in training for six consecutive days, or (c) at the beginning of the final week of the research camp, in the event that all participants with atypical TBWs had not entered training by that time. A priori, our guidelines for when the training was to be withdrawn were: (a) participants demonstrated a clear countertherapeutic trend, defined as three consecutive study days wherein the TBW increased by at least 120%, compared to the previous day’s TBW, (b) participants demonstrated a clear plateau, defined as three consecutive study days wherein TBW size was equal to or greater than the TBW for the prior training day after initial evidence of a decrease in TBW size, (c) the participant demonstrated a clear therapeutic trend (i.e., change in level, trend, and/or variability of TBW data), (d) after six days in training, or (e) the study concluded. Note that criterion (d) for withdrawal was based on our anticipated timeline for achieving maximal training effects, according to prior work in TD adults, but was relaxed based on our observation of an extended timeline for behavior change in school age children with ASD, in particular in our youngest participant.
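Two of the withdrawal guidelines above, the plateau rule (b) and the six-day rule (d), are mechanical enough to sketch in Python. The sketch is illustrative only: phase changes in the study were decided by the blinded rater, with these criteria serving as guides. The function names are hypothetical, the input is assumed to be a list of consecutive training-day TBWs with no missing values, and "initial evidence of a decrease" is assumed here to mean any day-to-day decrease before the three-day window. Criterion (a) is left out because its percentage threshold can be read in more than one way, and criterion (c) depends on visual analysis.

```python
def is_plateau(tbws_ms, window=3):
    """Criterion (b): after an earlier decrease, each of the last `window`
    TBWs is equal to or greater than the TBW of the day before it."""
    if len(tbws_ms) < window + 2:
        return False
    head = tbws_ms[:-window]            # days before the window
    recent = tbws_ms[-(window + 1):]    # the window plus the day preceding it
    had_decrease = any(b < a for a, b in zip(head, head[1:]))
    no_further_decrease = all(b >= a for a, b in zip(recent, recent[1:]))
    return had_decrease and no_further_decrease


def reached_max_days(tbws_ms, max_days=6):
    """Criterion (d): the participant has completed `max_days` training days."""
    return len(tbws_ms) >= max_days


# Hypothetical TBW series (ms), for illustration only.
plateaued = is_plateau([900, 700, 700, 720, 750])
still_narrowing = is_plateau([900, 700, 650, 600, 580])
six_days_done = reached_max_days([900, 800, 700, 650, 600, 580])
```

In the first hypothetical series the TBW drops once and then fails to narrow for three consecutive days, so the plateau rule is met; in the second the TBW keeps narrowing, so it is not.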

Interobserver Reliability

Although the MATLAB script to derive TBW was an objective measure consistently used throughout the study, there was some level of human influence on the data processing. When the script’s default approach of binomial logistic regression did not readily generate a logical TBW, a member of the research team attempted to fit the data by either (a) removing isolated points that caused the glmfit function to generate implausible curves or (b) utilizing the fit function to fit all the data to a Gaussian curve. Therefore, a member of the research team naive to condition and hypotheses re-calculated TBWs for a subset of the data to permit testing of interobserver agreement/reliability (IOA). IOA data were collected on 20% of the TBW data for each participant in each condition, as selected by a random number generator. The intraclass correlation coefficient (ICC) was used to quantify IOA using the irr package (Gamer, Lemon, Fellows, & Singh, 2012) in R (R Core Team, 2017).

Procedural Fidelity

Procedural fidelity data were collected for the examiners in the WhisperRoom/s from video recordings of the probe, baseline, and perceptual training sessions using checklists established prior to the study. Expected behaviors in the probe sessions included the child looking at the computer and wearing headphones set to the proper volume, the examiner not providing feedback based on correctness of child response, and the minimization of potential distractors. Expected behaviors for the baseline condition included the participant only engaging in allowed activities, the examiner not providing the training, and the examiner not initiating social interactions with the child. Expected behaviors for the training condition included the child looking at the computer and wearing headphones set to the correct volume, the examiner setting up the task correctly, and the examiner not providing additional feedback to the child (i.e., the only feedback the child received was from the automated computer task).

Procedural fidelity data were collected by two members of the research team naive to condition and hypotheses (MC, YL) on 20% of the probe sessions across all conditions, examiners, and participants, then on 20% of the baseline sessions across all examiners and participants, and then on 20% of the training sessions across all examiners and participants. Procedural fidelity data were collected in this order to ensure that the coders remained blind to condition and outcomes. Sessions to be checked for procedural fidelity were chosen by random number generators, and examiners who were in the WhisperRoom/s with participants were unaware of which sessions would be selected for fidelity checks.

Results

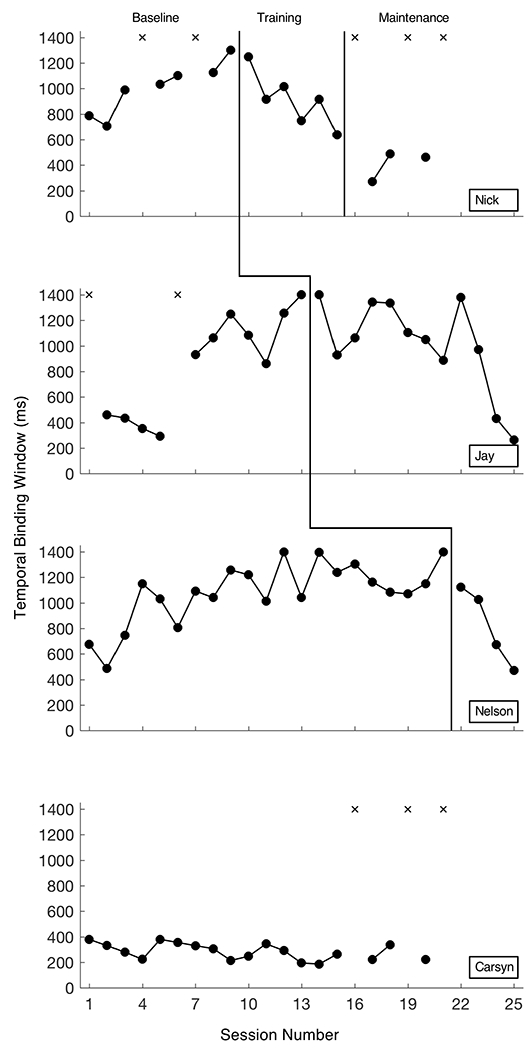

Figure 4 shows participants’ TBWs in the baseline, perceptual training, and maintenance conditions.

Figure 4.

Temporal binding windows (TBWs) for all participants during baseline, perceptual training, and maintenance phases of the experiment. X represents TBWs that could not be derived. “Carsyn” remained in baseline throughout the study, as she entered with (and maintained) a narrow TBW. Data collection was terminated earlier for “Carsyn” and for “Nick” than for others based on school calendars/start dates.

Overall Trends in Baseline

In the baseline condition, there was substantial variability in TBW for most participants, and it took several days until all four participants reached criteria that allowed for the first to enter the training condition. Three of the participants demonstrated widening of their windows while in baseline, as evidenced by an increased level in the TBW data for those participants. All of the participants who entered training did so following days when their TBW was ≥1000ms.

Responses to Perceptual Training

Nick.

Upon beginning the perceptual training condition, Nick showed a very clear and immediate response as demonstrated by a change in trend with a very short latency following introduction of the perceptual-based training. The training was withdrawn from Nick while he was in a downward trend due to a priori decisions about the length of the training condition, set according to our time to observation of maximal effects in TD adults in prior work (Powers et al., 2009).

Nick was the only participant who completed a maintenance condition. This condition lasted six days, and on three days Nick demonstrated some maintenance of the effect of training, as evidenced by a consistent, narrow TBW. Notably, his TBW in the maintenance condition was consistently smaller than any of his previously measured TBWs (i.e., those in baseline and training). Although this result is promising, we cannot draw conclusions about the maintenance of the gains made during training due to the lack of three replications of this effect (i.e., Nelson and Jay did not complete a maintenance phase).

Jay.

In baseline, Jay initially presented with a stable, narrow TBW in a downward trend, but began to demonstrate a sharp upward trend and extreme widening of his window on Day 6. Jay demonstrated a delayed response after beginning the training on Day 14 of the study. For the first four days wherein he completed the training, there was no consistent change in level, trend, or variability. Although Jay did demonstrate a downward trend on Days 19, 20, and 21 of the study, his TBW widened on Day 22 of the study, following a break in training (i.e., a weekend). The sudden downward trend that followed for Jay coincided with Nelson’s entry into the training condition, necessitated by the onset of the final week of the study (i.e., all study participants were scheduled to be in school the following week, so we could not continue the experiment and maintain adequate experimental control). The training was withdrawn from Jay due to the study concluding while he was in a downward trend.

Nelson.

Nelson demonstrated an immediate response to the perceptual training, but Jay and Nelson’s responses to the training do not constitute a true replication of the effect due to a lack of independence and experimental control. As we lack three replications of the effect, we cannot conclude that there is a functional relation between the perceptual training and narrowing of TBWs. The training was also withdrawn from Nelson due to the study concluding while he was in a downward trend.

Carsyn.

Carsyn demonstrated a stable, narrow (i.e., potentially mature) TBW throughout the study. This participant was not entered into training, but was retained as a “control” in an extended baseline.

Social Validity

The social validity survey was collected after each training session. Regarding perceived difficulty, Nick and Jay on average indicated that they felt the game was “not easy or hard,” while Nelson consistently found the game “kind of hard.” Regarding the reported level of fun, on average Nick felt the game was “kind of fun,” Jay felt the game was “very fun,” and Nelson felt the game was “not fun or boring.” Regarding the helpfulness of the game, on average Nick felt the game was “not helpful or unhelpful,” Jay thought the game was “kind of helpful,” and Nelson was unsure. None of the participants indicated that they would play this game in their free time.

On the open-ended question, both Nick and Nelson expressed some confusion about the perceptual training following their first session. Nick said, “Every time I click on same, it just shows the X” while Nelson said, “[The computer] was cheating” and “Each round [within a given] level [of difficulty] seemed harder.” However, by the end some participants indicated that they “really enjoyed/liked” the training and that they wanted the feedback during the probes (wherein they did not receive feedback on their responses).

Interobserver Reliability

IOA was collected by recalculating TBWs for 22 randomly selected training sessions using the same MATLAB script. This represented 23.9% of all SJ speech probes collected and 27.2% of all TBWs that were able to be calculated from the SJ speech probes. The intraclass correlation coefficient (ICC) indicated an outstanding level of inter-rater reliability, ICC = 0.989 (Yoder, Lloyd, & Symons, 2018). Therefore, there was very little variance attributable to the individual calculating the TBW.
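As an illustration of the reliability index reported above, the following Python sketch computes a two-way, single-measure, absolute-agreement ICC from paired TBW calculations. The choice of ICC form, the function name, and the example TBW values are assumptions made for illustration only; the text does not state which ICC variant or software routine produced the reported value.

```python
from statistics import mean


def icc_absolute_agreement(ratings):
    """Two-way, single-measure, absolute-agreement ICC (Shrout & Fleiss ICC(2,1)).

    ratings: list of rows, one row per session; each row holds the TBW (ms)
    obtained by each rater for that session. Assumed form, not the authors'.
    """
    n = len(ratings)                      # number of sessions
    k = len(ratings[0]) if n else 0       # number of raters
    if n < 2 or k < 2 or any(len(row) != k for row in ratings):
        raise ValueError("need >= 2 sessions and >= 2 raters, with no missing cells")

    grand = mean(v for row in ratings for v in row)
    row_means = [mean(row) for row in ratings]
    col_means = [mean(row[j] for row in ratings) for j in range(k)]

    # Partition the total sum of squares into session, rater, and residual parts.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for row in ratings for v in row)
    ss_err = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))

    return (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )


# Hypothetical TBWs (ms) from two independent calculations of five sessions.
example_tbws = [[412.0, 415.0], [655.0, 650.0], [298.0, 301.0],
                [530.0, 528.0], [804.0, 810.0]]
example_icc = icc_absolute_agreement(example_tbws)
```

Values near 1 indicate that almost all of the variance in TBW is attributable to differences between sessions rather than to the person performing the calculation.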

Procedural Fidelity

Procedural fidelity was collected based on 20.5% of all SJ speech probe sessions. The average fidelity was 95.1% across all three examiners (range: 93.5%–97.2%). The most common reason examiners did not receive full marks on the procedural fidelity checklist for probe sessions was failure to completely explain the task each day, as by the middle of the experiment the participants were fully aware of the study procedures (and often recited the task instructions verbatim on their own).

Procedural fidelity was also collected on 27.5% of all baseline sessions and 29.2% of all perceptual training sessions. The average fidelity was 99.6% for the baseline sessions across all three examiners (range: 98.8%–100%) and 96.7% for the training sessions (range: 96.0%–97.7%). Procedural fidelity was thus very high, and it is unlikely that differences in the implementation of experimental procedures contributed to differences in the data.

Discussion

This study represents the first experimental manipulation designed to impact the temporal acuity of multisensory integration, specifically targeting the temporal binding window for audiovisual speech, in children with ASD. The primary results indicate that TBWs may be malleable in at least some children with ASD, as evidenced by narrowing during computer-based perceptual training (and widening during baseline). Although there was some evidence to suggest that perceptual training may induce narrowing in the TBWs of at least some children with ASD, we could not conclude that there was a functional relation between the perceptual training and narrowing of TBWs. Additionally, only one of the three participants who received the perceptual training (Nick) demonstrated a reduction in TBW size relative to the onset of the study, whereas the other two participants (Jay and Nelson) demonstrated only a reduction in TBW size relative to the onset of the training, following an expansion in TBW size during baseline. Though the present results suggest that training-based approaches may offer some promise for improving audiovisual temporal acuity in this clinical population, additional research is needed.

There were striking differences observed between participants’ responses to the training. Nelson demonstrated the most immediate response to training, as evidenced by a clear change in level and trend on the first day of the training, while Jay demonstrated the latest (i.e., longest latency to an) apparent response to the training. It is notable that Nelson was the oldest participant in the study, and Jay was the youngest. The existing literature led us to hypothesize that the effects of training would occur quickly post-onset, as TD adults demonstrate almost immediate responses to perceptual training (e.g., De Niear et al., 2018; Powers et al., 2009). Further research utilizing a group research design (e.g., a randomized controlled trial) is needed to determine if there are differential effects observed in response to training according to baseline characteristics, such as chronological age and/or developmental stage (as there is strong evidence that the TBW narrows during the course of typical development; e.g., Hillock-Dunn & Wallace, 2012), and/or other factors, such as sensory phenotypes (given that clinical aspects of sensory functioning appear to covary with audiovisual integration; e.g., Feldman et al., 2019).

In addition to our expectations about the immediacy of the effects, we also expected that the participants would quickly reach a plateau after an initial drop in TBW size during training, as the literature on TD adults indicates that participants plateau after an initial decrease in TBW size (e.g., De Niear et al., 2018; Powers et al., 2009). However, in our experiment both Nick and Jay continued to display narrowing of their TBWs after four sessions, and it is unclear whether any of the participants had maximized the benefits of the training. Therefore, future research should attempt to ascertain the intensity of perceptual training needed to achieve maximal benefits in children with ASD.

One limitation of the present study is that we are only able to draw conclusions about the short-term effectiveness of the perceptual training on TBWs due to the timing of the probes, which were administered immediately after the training during the intervention phase of the study. Though we intended to collect maintenance data on all of the participants to assess the longer-term effects of the perceptual training, this could not be done for practical reasons (i.e., school starting). Although there was some evidence that the gains might be maintained (i.e., based on the data observed in maintenance for Nick), the lack of three replications of the maintenance effect limits our ability to draw conclusions about the long-term effectiveness of the perceptual training. There is some evidence that gains made during perceptual training programs are maintained by TD adults up to one week after training (De Niear et al., 2018; Powers et al., 2009). Future studies should plan to collect maintenance data to assess whether similar effects are observed in children with ASD.

Additionally, some participants demonstrated extreme widening and variability of their TBW during the baseline phase of the study. There are several possible explanations for this effect. One possibility is that the time-lagged introduction of the IV, which resulted in some participants staying in an extended baseline for up to 21 days, contributed to this widening, as repeated exposure to SJ probes without training is known to result in some degree of widening in TBWs (De Niear et al., 2018; Powers et al., 2009). It has been hypothesized that perceptual training programs may cause shifts in perceptual criteria rather than changes in temporal acuity (i.e., while in training, individuals may decrease how often they report synchrony; De Niear et al., 2018); it is possible that repeated exposure may similarly cause an increase in how often participants report synchrony. It is also possible that the duration of the baseline caused some testing-related effects (Gast & Ledford, 2018). For example, children could have become frustrated with the task and stopped trying their best, or they could have become fatigued from having to do the task three or four days per week for up to seven weeks. In any of these cases, the likelihood of TBW widening could be reduced by shortening the baseline condition for participants in future studies.

Current guidelines for single case design reporting indicate that five data points is the minimum acceptable number needed in each phase for high quality evidence (Horner et al., 2005). Three participants in the present report demonstrated extreme widening and variability within five observable data points in baseline. Thus, the acquisition of five data points in baseline prior to the initiation of perceptual training is not advisable for this clinical population. An investigation evaluating the number of observations required to obtain acceptably stable estimates of TBW in school-age children with ASD is needed in order to make specific recommendations about the number of observations that should be collected at baseline before any training is introduced (see Sandbank & Yoder, 2014; Yoder et al., 2018). It may be, however, that the single case design utilized here (i.e., multiple baseline across participants or any other design that would involve prolonged exposure to the probe necessary to evaluate TBW size) is not the ideal approach for exploring the plasticity of temporal binding in children on the autism spectrum.

Another limitation of this study was that our DV (TBW) could not be derived on every day of the study. Current single case reporting guidelines (see Tate et al., 2016) do not address how to treat missing data due to the limitations inherent in psychophysical methods; therefore, we plotted these data points as X’s located at the maximum possible TBW (i.e., 1400 ms) and did not include those points in our visual analysis. In the cases where participants’ TBW could not be derived, it was generally because participants’ report of synchrony did not predictably decrease with increasing SOAs. It is interesting to note that Nick and Jay, who both had two days when their TBWs could not be derived while in baseline, had no missing data points while in training. This decrease in missing data could have been a result of the training. We hypothesize that participants may have learned how to better perform the task while in the training, leading to better data from the SJ probes even without a decrease in TBW size. Alternatively, during the training phase, participants completed the SJ probes after completing the training, while in the baseline and maintenance phases they completed the SJ probes prior to doing quiet activities. It is possible that the slight differences in the time of day when probes were presented (e.g., approximately 1 hour difference) may have impacted their performance, though to our knowledge no work has evaluated the effect of time of day on multisensory temporal perception. Regardless, a continuously definable measure, such as “noise” or variability in responding to stimuli that vary in degree of asynchrony, could aid us in defining a response to the training in the future.
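The handling of underivable probes described above can be sketched in Python as follows. The 1400 ms ceiling and the exclusion of those points from visual analysis come from the text; the screening rule (Kendall's tau between absolute SOA and the proportion of "synchronous" reports, with a cutoff of -0.5), the function names, and the example data are hypothetical and are not the criterion implemented in the study's MATLAB script.

```python
from itertools import combinations

MAX_TBW_MS = 1400.0  # ceiling at which underivable probes are plotted (from the text)


def kendall_tau(xs, ys):
    """Kendall's tau-a between two equal-length sequences (tied pairs score zero)."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    score = 0
    for (x1, y1), (x2, y2) in combinations(zip(xs, ys), 2):
        product = (x2 - x1) * (y2 - y1)
        score += (product > 0) - (product < 0)
    return score / (len(xs) * (len(xs) - 1) // 2)


def tbw_is_derivable(abs_soas_ms, prop_sync, max_tau=-0.5):
    """Hypothetical screen: reports of synchrony should fall as |SOA| grows.

    max_tau is an illustrative cutoff, not a value taken from the study.
    """
    return kendall_tau(abs_soas_ms, prop_sync) <= max_tau


def prepare_for_plot(daily_tbws_ms):
    """Split daily TBWs into plotted points and points kept for visual analysis.

    daily_tbws_ms: one entry per study day; None marks a probe whose TBW
    could not be derived.
    """
    plotted, analyzed = [], []
    for day, tbw in enumerate(daily_tbws_ms, start=1):
        if tbw is None:
            plotted.append((day, MAX_TBW_MS, "x"))  # shown at ceiling, not analyzed
        else:
            plotted.append((day, tbw, "o"))
            analyzed.append((day, tbw))
    return plotted, analyzed


# Hypothetical probe: proportion of "synchronous" reports at each absolute SOA.
soas = [0, 100, 200, 300, 400]
orderly = [0.95, 0.90, 0.60, 0.30, 0.10]
erratic = [0.50, 0.60, 0.40, 0.60, 0.50]
```

Under this illustrative rule, a probe like `orderly` would yield a TBW, whereas one like `erratic` would be flagged and plotted as an X at the ceiling. A graded index such as the tau value itself is one possible form of the continuously definable measure suggested above.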

Although we recruited four participants with relatively similar profiles, broadly speaking (see Table 1), there were clear differences observed between the participants in their baseline data. Carsyn demonstrated a consistently narrow TBW while in the baseline condition. The other three participants entered the study with TBWs that were relatively larger and/or more unstable and vulnerable to exposure to asynchrony. Further research is needed to understand the factors that may explain variability in the magnitude and malleability of TBWs in children on the autism spectrum and to determine who is most appropriate for this type of perceptual training.

This study represents an important first step in evaluating the plasticity of multisensory integration in children with ASD. Theory suggests that improvements in sensory and multisensory function may translate to improvements in a broad range of higher level symptoms associated with ASD (Cascio et al., 2016; Feldman et al., 2018). Additional research is needed, however, to have a high level of confidence that the targeted perceptual training tested here will yield proximal gains (i.e., decreased TBWs), and to test whether any proximal effects of the computer-based training will translate to distal gains in core and related deficits associated with ASD. We hypothesize that this perceptual training targeting temporal binding of multisensory speech cues may lead to improvements in behaviors such as coordinated gaze to the face of one’s communication partners, possibly resulting in more downstream gains in engagement, social communication skill, and/or language learning over time. At present, however, our ability to measure distal outcomes for verbally fluent children with ASD in the context of single case designs is limited by the measures available. There are currently few observational measures that can be used to continuously assess autism symptom severity and communication skill in school-aged children and adolescents with ASD. The authors of the ADOS-2 have developed a measure that can be used to document change in autism symptom severity in young and/or minimally verbal children with ASD (Grzadzinski et al., 2016); however, they have not yet released such a measure for older and/or verbally fluent children with ASD (development of this measure remains in progress). Other observational measures have been developed for verbally fluent children with ASD, such as the Dyadic Communication Measure for Autism (Aldred, Green, & Adams, 2004). These measures may be considered for future research, in particular if they are demonstrated to be psychometrically sound or appropriate for verbally fluent children and adolescents with ASD. Some metrics of social communication and language ability have been commonly used in the intervention literature, such as the number of communication acts and/or mean length of utterance, but it is unclear whether such metrics are valid for detecting short-term effects of perceptual training in school-aged children.

Before conducting further research on the perceptual training approach used in this study, certain modifications are suggested by the social validity data. The most pressing issue is the participants’ reported confusion on the first day of training. It is unclear what caused this confusion, though adding practice trials wherein the participants are explicitly taught about the training SOAs could facilitate better understanding of this task. Another issue, albeit a less pressing one, is that participants did not consistently report enjoying the training task. Anecdotal evidence from this study leads us to believe that adding a scoring system or other elements that would make the perceptual training paradigm feel more like a game could possibly increase the participants’ enjoyment.

Conclusion

This study was the first to empirically assess the impact of perceptual training on audiovisual temporal function in children with ASD. Although we cannot yet conclude that there is a functional relation between the training and changes in the TBWs for audiovisual speech, there was evidence for the plasticity of TBWs at school age in this clinical population. Future research utilizing single case research methods or a well-controlled group design is warranted to further evaluate the potential benefits of this perceptual training-based approach.

Highlights.

This study represents the first experimental manipulation of multisensory integration, specifically temporal binding of audiovisual speech stimuli, in children with ASD.

Results indicate that audiovisual integration for speech-related stimuli may be malleable in children with ASD.

Future research using either single-case or group research designs is warranted to further evaluate the promise of multisensory perceptual training approaches in ASD.

Acknowledgements

This work was supported by NIH U54 HD083211 (PI: Neul), NIH/NCATS KL2TR000446 (PI: Woynaroski), NIH/NIDCD 1R21 DC016144 (PI: Woynaroski), NIH T32 MH064913 (PI: Winder), and NIH/NCATS UL1 TR000445 (PI: Bernard). The authors would like to thank the families who participated in our study, as well as our reviewers, whose thoughtful comments led to a vastly improved manuscript.

References

- Aldred CR, Green J, & Adams C (2004). A new social communication intervention for children with autism: Pilot randomised controlled treatment study suggesting effectiveness. Journal of Child Psychology and Psychiatry, 45, 1420–1430. doi: 10.1111/j.1469-7610.2004.00338.x

- American Psychiatric Association. (2000). Diagnostic and statistical manual of mental disorders-IV-TR. Washington, DC: APA.

- American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders-5. Washington, DC: APA.

- Bahrick LE, & Todd JT (2012). Multisensory processing in autism spectrum disorders: Intersensory processing disturbance as atypical development. In Stein BE (Ed.), The new handbook of multisensory processes (pp. 657–674). Cambridge, MA: MIT Press.

- Baranek GT, David FJ, Poe MD, Stone WL, & Watson LR (2006). Sensory Experiences Questionnaire: Discriminating sensory features in young children with autism, developmental delays, and typical development. Journal of Child Psychology and Psychiatry, 47, 591–601. doi: 10.1111/j.1469-7610.2005.01546.x

- Baum SH, Stevenson RA, & Wallace MT (2015). Behavioral, perceptual, and neural alterations in sensory and multisensory function in autism spectrum disorder. Progress in Neurobiology, 134, 140–160. doi: 10.1016/j.pneurobio.2015.09.007

- Ben-Sasson A, Hen L, Fluss R, Cermak SA, Engel-Yeger B, & Gal E (2009). A meta-analysis of sensory modulation symptoms in individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 39, 1–11. doi: 10.1007/s10803-008-0593-3

- Cascio CJ, Woynaroski T, Baranek GT, & Wallace MT (2016). Toward an interdisciplinary approach to understanding sensory function in autism spectrum disorder. Autism Research, 9, 920–925. doi: 10.1002/aur.1612

- Damiano-Goodwin CR, Woynaroski TG, Simon DM, Ibañez LV, Murias M, Kirby A, … Cascio CJ (2018). Developmental sequelae and neurophysiologic substrates of sensory seeking in infant siblings of children with autism spectrum disorder. Developmental Cognitive Neuroscience, 29, 41–53. doi: 10.1016/j.dcn.2017.08.005

- De Niear MA, Gupta PB, Baum SH, & Wallace MT (2018). Perceptual training enhances temporal acuity for multisensory speech. Neurobiology of Learning and Memory, 147, 9–17. doi: 10.1016/j.nlm.2017.10.016

- De Niear MA, Koo B, & Wallace MT (2016). Multisensory perceptual learning is dependent upon task difficulty. Experimental Brain Research, 234, 3269–3277. doi: 10.1007/s00221-016-4724-3

- Feldman JI, Dunham K, Cassidy M, Wallace MT, Liu Y, & Woynaroski TG (2018). Audiovisual multisensory integration in individuals with autism spectrum disorder: A systematic review and meta-analysis. Neuroscience & Biobehavioral Reviews, 95, 220–234. doi: 10.1016/j.neubiorev.2018.09.020

- Feldman JI, Kuang W, Conrad JG, Tu A, Santapuram P, Simon DM, … Woynaroski TG (2019). Brief report: Differences in multisensory integration covary with differences in sensory responsiveness in children with and without autism spectrum disorder. Journal of Autism and Developmental Disorders, 49, 397–403. doi: 10.1007/s10803-018-3667-x

- Gamer M, Lemon J, Fellows I, & Singh P (2012). irr: Various coefficients of interrater reliability and agreement (Version 0.84). Retrieved from https://CRAN.R-project.org/package=irr

- Gast DL, & Ledford JR (2018). Single case research methodology: Applications in special education and behavioral sciences (3rd ed.). New York: Routledge.

- Grzadzinski R, Carr T, Colombi C, McGuire K, Dufek S, Pickles A, & Lord C (2016). Measuring changes in social communication behaviors: Preliminary development of the Brief Observation of Social Communication Change (BOSCC). Journal of Autism and Developmental Disorders, 46, 2464–2479. doi: 10.1007/s10803-016-2782-9

- Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, & Conde JG (2009). Research electronic data capture (REDCap) – A metadata-driven methodology and workflow process for providing translational research informatics support. Journal of Biomedical Informatics, 42, 377–381. doi: 10.1016/j.jbi.2008.08.010

- Hillock-Dunn A, & Wallace MT (2012). Developmental changes in the multisensory temporal binding window persist into adolescence. Developmental Science, 15, 688–696. doi: 10.1111/j.1467-7687.2012.01171.x

- Horner RH, Carr EG, Halle J, McGee G, Odom S, & Wolery M (2005). The use of single-subject research to identify evidence-based practice in special education. Exceptional Children, 71, 165–179. doi: 10.1177/001440290507100203

- Irwin J, Preston J, Brancazio L, D’angelo M, & Turcios J (2015). Development of an audiovisual speech perception app for children with autism spectrum disorders. Clinical Linguistics & Phonetics, 29, 76–83. doi: 10.3109/02699206.2014.966395

- Kazdin AE (2011). Data evaluation. In Single-case research designs: Methods for clinical and applied settings (2nd ed., pp. 284–322). New York: Oxford.

- Kratochwill TR, Levin JR, Horner RH, & Swoboda CM (2014). Visual analysis of single-case intervention research: Conceptual and methodological issues. In Kratochwill TR & Levin JR (Eds.), Single-case intervention research: Methodological and statistical advances (pp. 91–125). Washington, DC: American Psychological Association.

- Lord C, Rutter M, DiLavore P, Risi S, Gotham K, & Bishop SL (2012). Autism Diagnostic Observation Schedule, second edition (ADOS-2) manual (Part I): Modules 1-4. Los Angeles, CA: Western Psychological Services.

- Megnin O, Flitton A, Jones CRG, de Haan M, Baldeweg T, & Charman T (2012). Audiovisual speech integration in autism spectrum disorders: ERP evidence for atypicalities in lexical-semantic processing. Autism Research, 5, 39–48. doi: 10.1002/aur.231

- Mongillo EA, Irwin JR, Whalen D, Klaiman C, Carter AS, & Schultz RT (2008). Audiovisual processing in children with and without autism spectrum disorders. Journal of Autism and Developmental Disorders, 38, 1349–1358. doi: 10.1007/s10803-007-0521-y

- Murray MM, Lewkowicz DJ, Amedi A, & Wallace MT (2016). Multisensory processes: A balancing act across the lifespan. Trends in Neurosciences, 39, 567–579. doi: 10.1016/j.tins.2016.05.003

- Noel JP, De Niear MA, Stevenson R, Alais D, & Wallace MT (2017). Atypical rapid audio-visual temporal recalibration in autism spectrum disorders. Autism Research, 10, 121–129. doi: 10.1002/aur.1633

- Patten E, Watson LR, & Baranek GT (2014). Temporal synchrony detection and associations with language in young children with ASD. Autism Research and Treatment, 2014, 1–8. doi: 10.1155/2014/678346

- Powers AR, Hillock AR, & Wallace MT (2009). Perceptual training narrows the temporal window of multisensory binding. Journal of Neuroscience, 29, 12265–12274. doi: 10.1523/JNEUROSCI.3501-09.2009

- Quinto L, Thompson WF, Russo FA, & Trehub SE (2010). A comparison of the McGurk effect for spoken and sung syllables. Attention, Perception, & Psychophysics, 72, 1450–1454. doi: 10.3758/APP.72.6.1450

- R Core Team. (2017). R: A language and environment for statistical computing (Version 3.4.1). Vienna, Austria: R Foundation for Statistical Computing. Retrieved from https://www.R-project.org/

- Righi G, Tenenbaum EJ, McCormick C, Blossom M, Amso D, & Sheinkopf SJ (2018). Sensitivity to audio-visual synchrony and its relation to language abilities in children with and without ASD. Autism Research, 11, 645–653. doi: 10.1002/aur.1918

- Roid GH, Miller LJ, Pomplun M, & Koch C (2013). Leiter International Performance Scale (3rd ed.). Torrance, CA: Western Psychological Services.

- Sandbank M, & Yoder P (2014). Measuring representative communication in young children with developmental delay. Topics in Early Childhood Special Education, 34, 133–141. doi: 10.1177/0271121414528052

- Setti A, Stapleton J, Leahy D, Walsh C, Kenny RA, & Newell FN (2014). Improving the efficiency of multisensory integration in older adults: Audio-visual temporal discrimination training reduces susceptibility to the sound-induced flash illusion. Neuropsychologia, 61, 259–268. doi: 10.1016/j.neuropsychologia.2014.06.027

- Smith EG, Zhang S, & Bennetto L (2017). Temporal synchrony and audiovisual integration of speech and object stimuli in autism. Research in Autism Spectrum Disorders, 39, 11–19. doi: 10.1016/j.rasd.2017.04.001

- Smith JD (2012). Single-case experimental designs: A systematic review of published research and current standards. Psychological Methods, 17(4), 510–550. doi: 10.1037/a0029312

- Stevenson RA, Siemann JK, Schneider BC, Eberly HE, Woynaroski TG, Camarata SM, & Wallace MT (2014). Multisensory temporal integration in autism spectrum disorders. Journal of Neuroscience, 34, 691–697. doi: 10.1523/JNEUROSCI.3615-13.2014

- Sürig R, Bottari D, & Röder B (2018). Transfer of audio-visual temporal training to temporal and spatial audio-visual tasks. Multisensory Research, 31, 556–578. doi: 10.1163/22134808-0000261

- Tate RL, Perdices M, Rosenkoetter U, McDonald S, Togher L, Shadish W, … Vohra S (2016). The Single-Case Reporting Guideline In BEhavioural Interventions (SCRIBE) 2016: Explanation and elaboration. Archives of Scientific Psychology, 4, 10–31. doi: 10.1037/arc0000027

- Wallace MT, & Stevenson RA (2014). The construct of the multisensory temporal binding window and its dysregulation in developmental disabilities. Neuropsychologia, 64, 105–123. doi: 10.1016/j.neuropsychologia.2014.08.005

- Williams JHG, Massaro DW, Peel NJ, Bosseler A, & Suddendorf T (2004). Visual–auditory integration during speech imitation in autism. Research in Developmental Disabilities, 25, 559–575. doi: 10.1016/j.ridd.2004.01.008

- Woynaroski TG, Kwakye LD, Foss-Feig JH, Stevenson RA, Stone WL, & Wallace MT (2013). Multisensory speech perception in children with autism spectrum disorders. Journal of Autism and Developmental Disorders, 43, 2891–2902. doi: 10.1007/s10803-013-1836-5

- Yoder PJ, Lloyd BP, & Symons FJ (2018). Observational measurement of behavior (2nd ed.). New York: Brookes Publishing.