Abstract

Background

Attempts to achieve digital transformation across the health service have stimulated increasingly large-scale and more complex change programmes. These encompass a growing range of functions in multiple locations across the system and may take place over extended timeframes. This calls for new approaches to evaluate these programmes.

Main body

Drawing on over a decade of conducting formative and summative evaluations of health information technologies, we here build on previous work detailing evaluation challenges and ways to tackle these. Important considerations include changing organisational, economic, political, vendor and market contexts, which necessitate tracing evolving networks, relationships, and processes; exploring mechanisms of spread; and studying selected settings in depth to understand local tensions and priorities.

Conclusions

Decision-makers need to recognise that formative evaluations, if built on solid theoretical and methodological foundations, can help to mitigate risks and help to ensure that programmes have maximum chances of success.

Keywords: Health information technology, Implementation, Evaluation

Background

Many countries worldwide see large-scale system-wide health information technology (HIT) programmes as a means to tackle existing health and care challenges [1–3]. For example, the United States (US) federal government’s estimated $30 billion national stimulus package promotes the adoption of electronic health records (EHRs) through the Health Information Technology for Economic and Clinical Health (HITECH) Act [4]. Similarly, the English National Health Service (NHS) has invested £4 billion in a national digitisation fund [5]. Digitisation strategies and funding schemes reflect national circumstances, but such programmes face common challenges. These include, for example, tensions in reconciling national and local requirements. While some standardisation of data transactions and formats is essential to ensure interoperability and information exchange, there is also a need to cater for local exigencies, practices and priorities [6].

Summative evaluations that seek to capture the eventual outcomes of large national programmes appear to answer questions about the effectiveness of public investments. However, funders and administrators are under pressure to demonstrate outcomes quickly, often within the lifetime of programmes, whilst the full benefits of major change programmes can take a long time to materialise. Premature summative evaluation can generate unwarranted narratives of “failure” with damaging political consequences [7].

The success or failure of HIT projects involves many different dimensions and at times incommensurable factors [8, 9]. The political context may change within the medium- to long-term timeframes of a major change programme [6, 8], as seen with some aspects of the English National Programme for Information Technology (NPfIT) [10, 11]. A formative evaluation approach cannot avoid these issues, but can help to better navigate the associated complexities. It can identify apparently productive processes and emerging unintended consequences, and inform the programme’s delivery strategy in real time [12, 13]. It seeks to capture the perceptions of the actors involved about what is, and is not, working well and to feed findings back into programme management. Such evaluations often involve gathering qualitative and quantitative data from various stakeholders and then feeding back emerging issues to implementers and decision-makers so that strategies can be put in place to mitigate risks and maximise benefits.

Our team has conducted several formative evaluations of large HIT programmes and developed significant expertise over the years [14–16]. In doing so, we have encountered numerous theoretical and methodological challenges. We here build on a previous paper discussing the use of formative approaches for the evaluation of specific technology implementations in the context of shifting political and economic landscapes [10, 14]. In this previous work (Table 1), we described the complex processes of major HIT implementation and configuration. We argued that evaluation requires a sociotechnical approach and advocated multi-site studies exploring processes over extended timeframes, as such processes are not amenable to conventional positivist evaluation methodologies.

Table 1.

Summary of recommendations for formative evaluation of large-scale health information technology [17]

| Before-during-after study designs are ill suited to explore large-scale electronic health record implementations due to shifting policy landscapes and over-optimistic deployment schedules. They also do not sufficiently take local views and interpretations into account. |
| Formative evaluations need to consider this changing landscape and explore stakeholder perspectives to gain insights into how local actors understand and implement change. |
| Sociotechnical approaches can help to conceptualise the interactions between people, technology and work processes. They can help to draw a more nuanced picture of the implementation and adoption landscape than traditional positivist paradigms. |

We here offer an extension of this work to explore not only implementations of specific functionality (such as EHRs), but also their programmatic integration with ancillary systems (e.g. electronic prescribing and medicines administration, radiology). This can help to gain insights into the emergence and evolution of information infrastructures (systems of systems), which are increasingly salient as we see functional integration within hospitals and across care settings. We also consider mechanisms of spread, evolving networks/processes, and vendor markets [17].

Main text

The difficulty of attributing outcomes

The first challenge concerns the difficulty of attributing outcomes (i.e. exploring what caused a specific outcome) for major changes in HIT. Although often required to justify investments, the direct effects of complex HIT such as EHRs are difficult to track and measure [18]. This is particularly true for large-scale transformative and systemic upgrades in infrastructures, which are not one-off events, but occur through multiple iterations and interlinkages with existing systems. Such systems tend to have distributed effects, with baselines that emerge gradually and are hard to establish (when compared to local discrete technologies implemented in specific settings, although the effects of these can also be hard to measure) [19]. Infrastructure renewal is a long-term process in which current achievements rest on earlier upgrades, as systems are incrementally extended and optimised [20]. An example is the implementation of EHRs and their integration with ancillary systems. Here, decision-makers are championing not just one, but multiple implementations of various transformative systems.

Theoretically informed formative evaluations that draw on science and technology studies and acknowledge the interrelationship between social and technological factors can help to address this issue [21]. A particularly effective methodology is exploring selected settings in depth to understand local complexities, while also monitoring a wider number of settings in less detail to understand general trends. Complex research designs drawing on case study methods and a range of sociotechnical approaches can help to explore how technological and social factors shape each other over time [22]. They can therefore provide an insight into local changes and potential mechanisms leading to outcomes [22]. In our current work on evaluating the Global Digital Exemplar Programme, for example, we are conducting 12 in-depth case studies of purposefully recruited hospitals. In addition, we are collecting more limited longitudinal qualitative data across all 33 hospitals participating in the Programme [23]. This research design offers a balance between depth (achieved through the case studies) and breadth (achieved through testing emerging findings across the broader sample).

Balancing local diversity and autonomy with national aims

Decision-makers cannot simply roll out standard solutions across the health service as sites vary in terms of clinical practices, existing information systems and data structures, size and organisational structures, contexts and local demographics. A key challenge for evaluation of large programmes is reconciling tensions between bringing specific sites up to international best practice, and levelling up the local ecosystem [24]. Organisational settings differ in their local contexts, structures and (emerging) service configurations. They are often separate autonomous entities that may be in competition [25]. Different stakeholder groups (e.g. decision-makers and various groups of clinical staff) may have different priorities. Programme visions may be differently interpreted by local stakeholders, which can lead to unanticipated outcomes and deviation from central aims. In the US, for instance, the Meaningful Use criteria have resulted in increasing implementation of EHRs, but the impact on quality and safety is still unknown and concern has been expressed that they may have stifled local innovation [26].

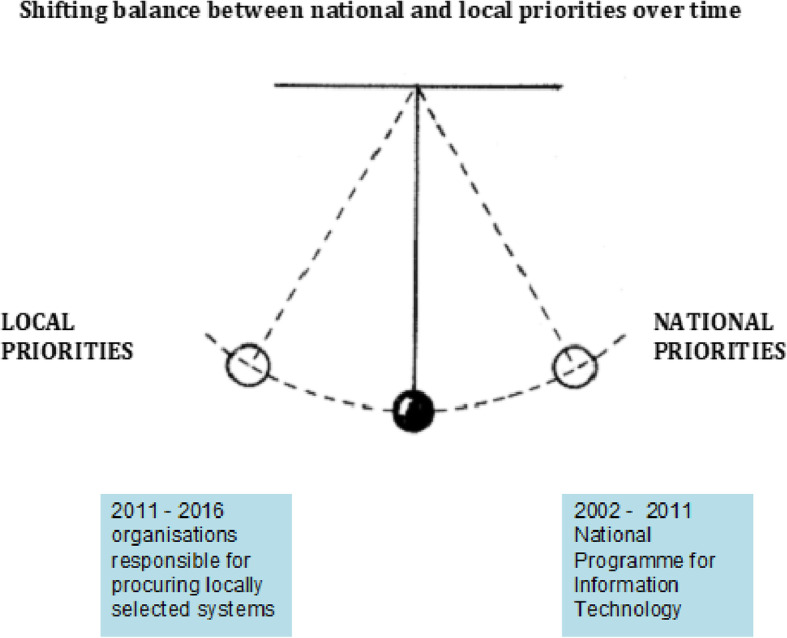

There is a tension between local and national priorities, and there is no stable way to reconcile these. Instead, strategies constantly shift between these poles, never standing still, pulled by a network of stakeholder groups with conflicting interests in a process that has been conceptualised as a swinging pendulum (Fig. 1) [14]. For example, the UK NPfIT exemplified a strong pull towards national priorities, with a strategy that focused on concerted procurement and interoperability. In the period that followed, the pendulum swung the other way: organisations became responsible for the procurement of locally selected systems.

Fig. 1.

Tension between local and national priorities in large health information technology programmes [3]

To explore this process and associated tensions, evaluators need to study evolving networks, relationships, and processes to understand how various stakeholders are mobilised nationally and locally as part of the change programme and what the perceived effects of these mobilisations are. This may involve working closely with national Programme Leads to identify current policy directions and intended national strategy, whilst also exploring local experiences of this strategy. From our experience, it can be helpful to move from the role of arm's-length critical analyst to constructive engagement with different stakeholder groups. Establishing long-term personal relationships with senior decision-makers whilst retaining independence is important in this respect. These relationships need to be characterised by mutual trust and frank discussion, where evaluators play the role of a ‘critical friend’, at times delivering painful truths.

A recurring example of tensions in our work relates to progress measures. National measures of progress designed to provide justification for programme resources are liable to clash with local priorities and circumstances. Participating local organisations may view these measures negatively, perceiving them as requiring large amounts of resources for limited local benefit and as driven by the need to satisfy reporting demands. Some agreement over a limited core set of measures to satisfy both local and national demands may be helpful.

The evolving nature of HIT programmes over time

Takian and colleagues noted how the policy context changed in the course of a single long-term change programme [17]. Such changes may result in scope creep and in various stakeholders chasing moving targets. For example, the economic recession of 2008–13 heavily influenced the English NPfIT, leading to a lack of sustained funding [27].

Although important, shifting socio-political environments only constitute part of the picture. A long-term view of nurturing evolving infrastructures highlights that visions of best practices will inevitably change over time [28]. Such infrastructures also often have no definite end point and there is at times no consensus about strategic direction. We have previously discussed this in the context of digital maturity, which is a somewhat contested concept [29]. Different kinds of programme management and evaluation tools may be needed that take account of this kind of evolution. These may include an emphasis on flexibility and reflexivity, where decision-makers can adjust strategies and roadmaps in line with emerging needs and changing environments. This approach will also require learning historical lessons and drawing on the wealth of experience of those who have experienced similar initiatives first hand.

Changes in medical techniques and diagnosis, models for care delivery, and vendor offerings affect available technologies (and vice versa). The market may not immediately be able to respond to new policy-driven models, and therefore evaluations and policies need to consider these dimensions [30]. This may involve exploring evolving vendor-user relationships, the emergence and mobilisation of user groups, procurement frameworks, and market diversity [31]. Our work, for instance, shows that, reinforced by the English NPfIT, multi-national mega-suite solutions revolving around core EHR systems increasingly dominate the UK market. These offer a relatively well-established and reliable pathway to achieving digital maturity and interoperability. The alternative pathway involves knitting together EHRs with a range of other functionality provided by diverse vendors. This may offer advantages in allowing an adopter to achieve a Best-of-Breed (BoB) solution unique to each local setting, and potentially better suited to local organisations [32]. However, vendors of modular solutions designed for BoB face difficulties in entering the market and developing interfaces. Existing EHR vendors are struggling to upgrade their systems to become mega-packages. Implementers must carefully consider interoperability challenges and innovation opportunities afforded by various systems. Programmes must ensure procurement approaches stimulate (or at least do not inhibit) a vibrant marketplace.

Scaling of change through developing a self-sustaining learning ecosystem

Large HIT change programmes are often concerned not only with stimulating local changes but also with promoting ongoing change, ensuring that efforts are sustained and scaled beyond the life of the programme [33]. This is not straightforward, partly due to lack of agreement over suitable metrics of success and partly due to limited understanding of the innovation process [34].

Studies of the emergence and evolution of information infrastructures have in turn helped articulate new strategies for promoting/sustaining such change [35–37]. However, the notion of scaling-up tacitly implies that innovation stops when diffusion starts. A more nuanced perspective flags that innovations evolve as they scale (‘innofusion’), requiring strong learning channels between adopter communities and vendors [38].

Evaluators can explore success factors and barriers to scaling qualitatively and formatively feed these back to decision-makers, who can then adjust their strategies accordingly. Evaluation needs to address local change in tandem with evolving networks at ecosystem level. By studying a range of adopter sites and their relationships with each other, as well as other stakeholders that are part of the developing ecosystem, evaluators can identify mechanisms that promote digital transformation and spread. Understanding these dynamics can also help decision-makers focus strategy on achieving programme objectives. By addressing networks and relationships, evaluators can, for example, explore how knowledge spreads throughout the wider health and care ecosystem in which the change programme is embedded, and how stakeholders are motivated to exchange and trade knowledge [39].

Conclusions

We are now entering an era that emphasises patient-centred care and data integration across primary, secondary and social care. This is linked to a shift from discrete technological changes to systemic long-term infrastructural change associated with large national/regional HIT change programmes. There have been some attempts to characterise and study these changes, including our own [17]. However, these provide only a partial picture, which we have built on here, drawing on our ongoing experiences and current thinking (see Table 2).

Table 2.

Summary of key recommendations emerging for evaluating large-scale health information technology change programmes

| Study selected settings in depth in order to understand local complexities, whilst also exploring the wider number of settings that are part of the change programme to understand general trends |
| Study evolving networks, relationships, and processes exploring how various stakeholders are mobilised nationally and locally as part of the change programme, and the perceived effects of these mobilisations |
| Study changing organisational, economic, political, vendor and market contexts |
| Study mechanisms of spread to accelerate programme objectives and align strategy accordingly to focus on these opportunities |

We now need new methods of programme management geared towards developing learning in ecosystems of adopters and vendors. These evolutionary perspectives also call for broader approaches to complex formative evaluations that can support the success of programmes and help to mitigate potential risks.

Although there is no prescriptive way to conduct such work, we hope that this paper helps decision-makers to commission work that is well suited to the subject of study, and implementers embarking on the evaluative journey to navigate this complex landscape.

Acknowledgements

We gratefully acknowledge the input of the wider GDE Evaluation team and the Steering Group of this evaluation.

Abbreviations

- BoB

Best-of-Breed

- EHR

Electronic Health Record

- HIT

Health Information Technology

- HITECH

Health Information Technology for Economic and Clinical Health

- NHS

National Health Service

- NPfIT

National Programme for Information Technology

- US

United States

Authors’ contributions

KC, RW and AS conceived this paper. KC and RW led the drafting of the manuscript. BDF, MK, HTN, SH, WL, HM, KM, SE and HP contributed to the analysis and interpretation of data and commented on drafts of the manuscript. All authors have read and approved the submitted manuscript.

Funding

This article has drawn on a programme of independent research funded by NHS England. The views expressed are those of the author(s) and not necessarily those of the NHS, NHS England, or NHS Digital. This work was also supported by the National Institute for Health Research (NIHR) Imperial Patient Safety Translational Research Centre. The views expressed in this publication are those of the authors and not necessarily those of the NHS, the NIHR or the Department of Health and Care. The funders had no input in this manuscript.

Availability of data and materials

Not applicable.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

All authors are investigators on the evaluation of the GDE programme (https://www.ed.ac.uk/usher/digital-exemplars). AS was a member of the Working Group that produced Making IT Work, and was an assessor in selecting GDE sites. BDF supervises a PhD student partly funded by Cerner, unrelated to this paper.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. O’Malley AS. Tapping the unmet potential of health information technology. N Engl J Med. 2011;364(12):1090–1091. doi: 10.1056/NEJMp1011227.

- 2. Greenhalgh T, Stones R. Theorising big IT programmes in healthcare: strong structuration theory meets actor-network theory. Soc Sci Med. 2010;70(9):1285–1294. doi: 10.1016/j.socscimed.2009.12.034.

- 3. Coiera E. Building a national health IT system from the middle out. J Am Med Inform Assoc. 2009;16(3):271–273. doi: 10.1197/jamia.M3183.

- 4. Thornton M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010;363:501–504. doi: 10.1056/NEJMp1006114.

- 5. NHS £4 billion digitisation fund: Industry reaction. Available from: https://www.itproportal.com/2016/02/08/nhs-4-billion-digitisation-fund-industry-reaction/. Accessed 13 Dec 2019.

- 6. Greenhalgh T, Potts HW, Wong G, Bark P, Swinglehurst D. Tensions and paradoxes in electronic patient record research: a systematic literature review using the meta-narrative method. Milbank Q. 2009;87(4):729–788. doi: 10.1111/j.1468-0009.2009.00578.x.

- 7. Hendy J, Reeves BC, Fulop N, Hutchings A, Masseria C. Challenges to implementing the national programme for information technology (NPfIT): a qualitative study. BMJ. 2005;331(7512):331–336. doi: 10.1136/bmj.331.7512.331.

- 8. Berg M. Implementing information systems in health care organizations: myths and challenges. Int J Med Inform. 2001;64(2–3):143–156. doi: 10.1016/S1386-5056(01)00200-3.

- 9. Wilson M, Howcroft D. Power, politics and persuasion: a social shaping perspective on IS evaluation. In: Proceedings of the 23rd IRIS Conference. 2000. pp. 725–739.

- 10. Greenhalgh T, Russell J, Ashcroft RE, Parsons W. Why national eHealth programs need dead philosophers: Wittgensteinian reflections on policymakers’ reluctance to learn from history. Milbank Q. 2011;89(4):533–563. doi: 10.1111/j.1468-0009.2011.00642.x.

- 11. Greenhalgh T, Stramer K, Bratan T, Byrne E, Russell J, Potts HW. Adoption and non-adoption of a shared electronic summary record in England: a mixed-method case study. BMJ. 2010;340:c3111. doi: 10.1136/bmj.c3111.

- 12. McGowan JJ, Cusack CM, Poon EG. Formative evaluation: a critical component in EHR implementation. J Am Med Inform Assoc. 2008;15(3):297–301. doi: 10.1197/jamia.M2584.

- 13. Catwell L, Sheikh A. Evaluating eHealth interventions: the need for continuous systemic evaluation. PLoS Med. 2009;6(8):e1000126. doi: 10.1371/journal.pmed.1000126.

- 14. Sheikh A, Cornford T, Barber N, Avery A, Takian A, Lichtner V, Petrakaki D, Crowe S, Marsden K, Robertson A, Morrison Z. Implementation and adoption of nationwide electronic health records in secondary care in England: final qualitative results from prospective national evaluation in “early adopter” hospitals. BMJ. 2011;343:d6054. doi: 10.1136/bmj.d6054.

- 15. Cresswell KM, Bates DW, Williams R, Morrison Z, Slee A, Coleman J, Robertson A, Sheikh A. Evaluation of medium-term consequences of implementing commercial computerized physician order entry and clinical decision support prescribing systems in two ‘early adopter’ hospitals. J Am Med Inform Assoc. 2014;21(e2):e194–e202. doi: 10.1136/amiajnl-2013-002252.

- 16. Cresswell K, Callaghan M, Mozaffar H, Sheikh A. NHS Scotland’s decision support platform: a formative qualitative evaluation. BMJ Health Care Informatics. 2019;26(1):e100022. doi: 10.1136/bmjhci-2019-100022.

- 17. Takian A, Petrakaki D, Cornford T, Sheikh A, Barber N. Building a house on shifting sand: methodological considerations when evaluating the implementation and adoption of national electronic health record systems. BMC Health Serv Res. 2012;12(1):105. doi: 10.1186/1472-6963-12-105.

- 18. Chaudhry B, Wang J, Wu S, Maglione M, Mojica W, Roth E, Morton SC, Shekelle PG. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med. 2006;144(10):742–752. doi: 10.7326/0003-4819-144-10-200605160-00125.

- 19. Clamp S, Keen J. Electronic health records: is the evidence base any use? Med Inform Internet Med. 2007;32(1):5–10. doi: 10.1080/14639230601097903.

- 20. Wiegel V, King A, Mozaffar H, Cresswell K, Williams R, Sheikh A. A systematic analysis of the optimization of computerized physician order entry and clinical decision support systems: a qualitative study in English hospitals. Health Informatics J. 2019;30:1460458219868650. doi: 10.1177/1460458219868650.

- 21. Sismondo S. An introduction to science and technology studies. Chichester: Wiley-Blackwell; 2010.

- 22. Cresswell KM, Sheikh A. Undertaking sociotechnical evaluations of health information technologies. J Innov Health Informatics. 2014;21(2):78–83. doi: 10.14236/jhi.v21i2.54.

- 23. Global Digital Exemplar (GDE) Programme Evaluation. Available from: https://www.ed.ac.uk/usher/research/projects/global-digital-exemplar-evaluation. Accessed 13 Dec 2019.

- 24. Gunter TD, Terry NP. The emergence of national electronic health record architectures in the United States and Australia: models, costs, and questions. J Med Internet Res. 2005;7(1):e3. doi: 10.2196/jmir.7.1.e3.

- 25. Cresswell K, Sheikh A. Organizational issues in the implementation and adoption of health information technology innovations: an interpretative review. Int J Med Inform. 2013;82(5):e73–e86. doi: 10.1016/j.ijmedinf.2012.10.007.

- 26. Slight SP, Berner ES, Galanter W, Huff S, Lambert BL, Lannon C, Lehmann CU, McCourt BJ, McNamara M, Menachemi N, Payne TH. Meaningful use of electronic health records: experiences from the field and future opportunities. JMIR Med Inform. 2015;3(3):e30. doi: 10.2196/medinform.4457.

- 27. The Devil’s in the Detail. Available from: https://www.birmingham.ac.uk/Documents/college-mds/haps/projects/cfhep/projects/007/CFHEP007SCRIEFINALReport2010.pdf. Accessed 13 Dec 2019.

- 28. Aanestad M, Grisot M, Hanseth O, Vassilakopoulou P. Information infrastructures and the challenge of the installed base. In: Information infrastructures within European health care. Cham: Springer International Publishing; 2017. pp. 25–33.

- 29. Cresswell K, Sheikh A, Krasuska M, Heeney C, Franklin BD, Lane W, Mozaffar H, Mason K, Eason S, Hinder S, Potts HW. Reconceptualising the digital maturity of health systems. Lancet Digit Health. 2019;1(5):e200–e201. doi: 10.1016/S2589-7500(19)30083-4.

- 30. Mozaffar H, Williams R, Cresswell K, Morrison Z, Bates DW, Sheikh A. The evolution of the market for commercial computerized physician order entry and computerized decision support systems for prescribing. J Am Med Inform Assoc. 2015;23(2):349–355. doi: 10.1093/jamia/ocv095.

- 31. Mozaffar H. User communities as multi-functional spaces. In: The new production of users. Routledge studies in innovation, organization and technology; 42. New York: Routledge; 2016. pp. 219–246.

- 32. Light B, Holland CP, Wills K. ERP and best of breed: a comparative analysis. Bus Process Manag J. 2001;7(3):216–224. doi: 10.1108/14637150110392683.

- 33. Mandl KD, Kohane IS. No small change for the health information economy. N Engl J Med. 2009;360(13):1278–1281. doi: 10.1056/NEJMp0900411.

- 34. Charif AB, Zomahoun HT, LeBlanc A, Langlois L, Wolfenden L, Yoong SL, Williams CM, Lépine R, Légaré F. Effective strategies for scaling up evidence-based practices in primary care: a systematic review. Implement Sci. 2017;12(1):139. doi: 10.1186/s13012-017-0672-y.

- 35. Making IT work: harnessing the power of health information technology to improve care in England. Available from: https://www.gov.uk/government/publications/using-information-technology-to-improve-the-nhs/making-it-work-harnessing-the-power-of-health-information-technology-to-improve-care-in-england. Accessed 8 Jan 2020.

- 36. Monteiro E, Hanseth O. Social shaping of information infrastructure: on being specific about the technology. In: Information technology and changes in organizational work. Boston: Springer; 1996. pp. 325–343.

- 37. Williams R, Stewart J, Slack R. Social learning in technological innovation: experimenting with information and communication technologies. Cheltenham: Edward Elgar Publishing; 2005.

- 38. Fleck J. Innofusion: feedback in the innovation process. In: Systems science. Boston: Springer; 1993. pp. 169–174.

- 39. Gorman ME. Introduction: trading zones, interactional expertise, and collaboration. In: Trading zones and interactional expertise. 2010. pp. 1–3.
