Abstract

According to statistics from the American Cancer Society, about 91,270 American adults were diagnosed with melanoma of the skin in 2015. In the European Union, there are over 90,000 new cases of melanoma annually. Although melanoma accounts for only about 1% of all skin cancers, it causes most skin cancer deaths. Melanoma is considered one of the fastest-growing forms of skin cancer, so early detection is crucial: it supports the choice of specific and suitable treatment regimens. In this work, we propose a method to detect melanoma skin cancer with automatic image processing techniques. The method has three stages: pre-processing images of skin lesions with the adaptive principal curvature, segmenting skin lesions with colour normalisation, and extracting features with the ABCD rule. We provide experimental results of the proposed method on the publicly available International Skin Imaging Collaboration (ISIC) skin lesions dataset. The results indicate that the proposed method has high accuracy and good overall performance: for the segmentation stage, the accuracy, Dice and Jaccard scores are 96.6%, 93.9% and 88.7%, respectively; for the melanoma detection stage, the accuracy is up to 100% on a selected subset of the ISIC dataset.

Keywords: Melanoma, Skin Cancer, Medical image segmentation, Medical image processing, Principal curvatures, Colour normalisation, ABCD rule

Introduction

Cancer [1–3], one of the most difficult challenges of modern medicine, begins when cells in a part of the body grow abnormally and out of control. These cancer cells compete with normal, healthy cells for nutrients. As a result, the healthy cells die off, the body gets weaker and the immune system is no longer able to protect the body. Cancer cells can also metastasise from one part of the body to others. Cancer may affect many parts of the body, such as the liver, lung, bone, breast, prostate, bladder and rectum, as well as the skin.

Skin cancer can be classified into two groups: melanoma and non-melanoma [4, 5]. According to WHO statistics from 2017, between two and three million non-melanoma skin cancers and 132,000 melanoma skin cancers occur globally each year. Melanoma is the fastest-growing form of skin cancer, and it causes most skin cancer deaths. In principle, all forms of skin cancer can be cured by radiation therapy, chemotherapy, immunotherapy or a combination of these, but the treatment regimen is more effective if patients are diagnosed and treated early.

For melanoma skin cancer, the ABCD rule (Asymmetry, Border, Colour, Diameter) [6] is used for detection. Several studies focus on melanoma skin cancer [7–9], and most of them are based on the ABCD rule. However, to improve the accuracy of diagnosis by the ABCD rule, the skin lesion regions must first be segmented. Existing skin lesion segmentation methods include methods based on colour channel optimisation [10–12], level set-based methods [13, 14], iterative stochastic region merging [15] and deep learning with convolutional neural networks [16–21]. On the one hand, the accuracy of segmentation methods based on colour channel optimisation and on level sets is usually not high. Their results are also affected by factors such as hairs and marks made by pens or rulers, and they fail to reliably segment very low-density regions. On the other hand, recent deep learning-based methods require a large amount of training data and extensive computational time and resources. Moreover, to obtain good accuracy, supervised deep learning models need large-scale training data that cover the various factors mentioned above.

In this paper, we propose a melanoma skin cancer detection method based on the adaptive principal curvature and well-defined pre-processing steps. The method includes three stages: (1) pre-processing (hair detection and hair removal), (2) skin lesion segmentation and (3) feature extraction and evaluation of the skin lesion score to diagnose melanoma. Our contribution mainly focuses on the first and second stages. In the first stage, we propose an adaptive principal curvature to detect hairs. Removing hairs makes the skin lesions clearly visible, increases the overall accuracy of the skin lesion score evaluation and improves the quality of the segmentation in the next stage. In the second stage, we propose a colour normalisation that increases the visibility of skin lesion regions. For the third stage, our contribution focuses on the implementation of the ABCD rule. We evaluate the proposed method on the publicly available International Skin Imaging Collaboration (ISIC) dataset and assess the results against the given ground truth.

The rest of the paper is organised as follows. Section 2 presents materials and the proposed melanoma skin cancer detection method based on the adaptive principal curvature, colour image normalisation and the ABCD rule [6]. Section 3 presents experimental results for three tasks: the hair detection and removal, the skin lesions segmentation and the melanoma detection. Finally, Section 4 concludes.

Materials and Methods

Materials

For the dataset of dermoscopic skin lesion images, we use ISIC 2017: https://www.isic-archive.com. The total size of the dataset is 5.4 GB, and it includes about 2000 dermoscopic images, each with a ground truth segmentation produced by dermoscopic experts and a superpixel mask. Image IDs have the form ISIC_00xxxxx. The ground truth segmentation of an image is named ISIC_00xxxxx_segmentation, and its superpixel mask is named ISIC_00xxxxx_superpixels. The superpixel masks are used for feature extraction. The dataset also provides a validation set of 150 images and a test set of 600 images. All dermoscopic images are stored as RGB colour images in the JPEG format; ground truth and superpixel masks are stored in the PNG format. For feature extraction and classification, the ISIC dataset also provides a ground truth in a CSV file that stores the melanoma detection results. The file contains binary values: one (1) for melanoma and zero (0) for the benign/suspicious cases.

Methods

The goal of the proposed melanoma detection method is to extract important information from the skin lesions. However, to improve the quality of the extracted information, we need the following stages:

Pre-processing: The most important task of pre-processing is to detect and remove hairs, because hairs degrade the quality of both skin lesion segmentation and feature extraction. For this task, we propose an adaptive principal curvature to detect the hairs and apply the inpainting method that we proposed in [22] to remove them.

Segmentation: Because the colours of skin lesions vary and can be similar to the background skin tone, we propose a colour normalisation to improve the quality of thresholding segmentation. The colour normalisation makes the colours of skin lesions easier to discriminate from the skin tone, which helps skin lesion segmentation.

Detection: After segmenting the skin lesions, we extract the information needed to evaluate the skin lesion score based on the ABCD rule [6].

Pre-Processing Stage—Hair Detection and Hair Removal

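We treat a grayscale image of the skin lesion, u(x), x = (x1, x2) ∈ R², as a regular surface. The local shape characteristics of the surface at a pixel (i, j), i ∈ {1, …, m}, j ∈ {1, …, n}, where m and n are the numbers of pixels along the horizontal and vertical image axes, respectively, can be described by the Hessian:

$$H_\sigma(i, j) = \begin{pmatrix} \dfrac{\partial^2 w}{\partial x_1^2} & \dfrac{\partial^2 w}{\partial x_1 \partial x_2} \\ \dfrac{\partial^2 w}{\partial x_2 \partial x_1} & \dfrac{\partial^2 w}{\partial x_2^2} \end{pmatrix},$$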

where σ is a standard deviation that plays the role of the spatial scale parameter of the Gaussian kernel for the low-pass filters in a window with an odd size:

$$G_\sigma(y) = \frac{1}{2\pi\sigma^2} \exp\left(-\frac{y_1^2 + y_2^2}{2\sigma^2}\right),$$

y = (y1, y2), y1, y2 ∈ {1, 3, 5, …}, and w = Gσ(y) ⋆ u, where the operator ⋆ denotes 2D convolution. That is, w is the result of convolving the input image u with the 2D Gaussian kernel of standard deviation σ. In practice, the symmetric Gaussian kernel (i.e., y1 = y2) is usually used.

The maximum and the minimum eigenvalues of the Hessian are called the principal curvatures λ+ and λ−. The maximum principal curvature λ+ detects dark lines on a light background, and the minimum principal curvature λ− detects light lines on a dark background [23–25]. These principal curvatures are defined at every pixel (i, j).
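To make the role of the two eigenvalues concrete, the following is a minimal sketch, not the implementation used in this work. It assumes an already-smoothed image stored as a nested list, approximates the Hessian with central differences, and uses the closed-form eigenvalues of a symmetric 2×2 matrix. The function names `principal_curvatures` and `hessian_at` and the toy image are illustrative assumptions.

```python
import math


def principal_curvatures(hxx, hxy, hyy):
    """Return (max, min) eigenvalues of the symmetric matrix [[hxx, hxy], [hxy, hyy]]."""
    mean = 0.5 * (hxx + hyy)
    # Half the distance between the two eigenvalues; never negative.
    radius = math.sqrt((0.5 * (hxx - hyy)) ** 2 + hxy ** 2)
    return mean + radius, mean - radius


def hessian_at(w, i, j):
    """Central-difference Hessian entries of a smoothed image w at an interior pixel."""
    hxx = w[i][j + 1] - 2.0 * w[i][j] + w[i][j - 1]  # second difference along columns
    hyy = w[i + 1][j] - 2.0 * w[i][j] + w[i - 1][j]  # second difference along rows
    hxy = (w[i + 1][j + 1] - w[i + 1][j - 1]
           - w[i - 1][j + 1] + w[i - 1][j - 1]) / 4.0
    return hxx, hxy, hyy


# Toy "smoothed" image (assumption): a dark vertical line (0.0) on a light background (1.0).
w = [[1.0, 1.0, 0.0, 1.0, 1.0] for _ in range(5)]
lam_max, lam_min = principal_curvatures(*hessian_at(w, 2, 2))
print(lam_max, lam_min)  # 2.0 0.0
```

On the dark line the maximum eigenvalue is large and positive while the minimum is zero, which matches the statement that the maximum principal curvature responds to dark lines on a light background.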

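Let Λ+ and Λ− be the matrices of the maximum and the minimum principal curvatures, respectively. That is:

$$\Lambda^{+} = \left(\lambda^{+}_{ij}\right), \quad \Lambda^{-} = \left(\lambda^{-}_{ij}\right), \quad i = 1, \dots, m, \; j = 1, \dots, n,$$

where $\lambda^{+}_{ij}$ and $\lambda^{-}_{ij}$ are the maximum and the minimum principal curvatures at pixel (i, j).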

We call the matrix

to be a matrix of the adaptive principal curvatures.

The adaptive principal curvature is expected to enhance hair contrast more than either the gradient magnitude or the maximum principal curvature. Unlike the gradient magnitude, which detects only the borders of hairs, the adaptive principal curvature produces a solid structure (sketch structure). We use this result to build the inpainting regions for removing hairs from the skin lesion image.

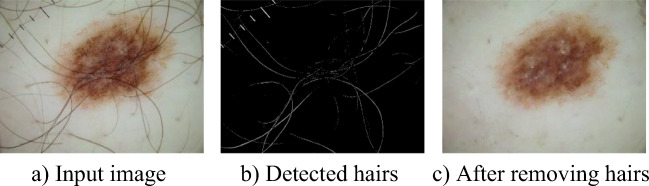

To remove hairs, the detection result must be combined with an image inpainting method. In this paper, we use the image inpainting method based on the adaptive Mumford-Shah model and multiscale parameter estimation that we proposed in [22]. Figure 1 shows the results of hair detection and hair removal on the image with the ID ISIC_0010006.

Fig. 1.

Hair detection and removal by the adaptive principal curvatures

Segmentation Stage—Skin Lesions Segmentation

To segment the skin lesions, we need to consider colour models. Several studies focus on colour models such as RGB, HSV, Lab and XYZ. However, the colours of skin lesions and the skin tone are very similar in many cases, and some skin lesions have very low intensity. Consequently, thresholding segmentation methods such as the ISODATA method or the Otsu method give low segmentation accuracy and, in many cases, cannot complete the segmentation task at all.

Our skin lesion segmentation works in the RGB colour model. However, we need to normalise the colour channels before calculating the image threshold for thresholding segmentation. Because the skin tone and the colours of skin lesions usually depend on the red channel more than on the green and blue channels, we normalise the red band of the RGB colour model.

Let u = (uR, uG, uB) be a colour image of the skin lesions, where uR, uG, uB are image intensity of the red band, the green band and the blue band components, respectively. We call the value

to be the new normalised red band.

The normalised red band is suitable for segmenting dermoscopic images, since the normalised red band will eliminate the effect of varying image intensities and will not depend on the shadow and other shading effects.

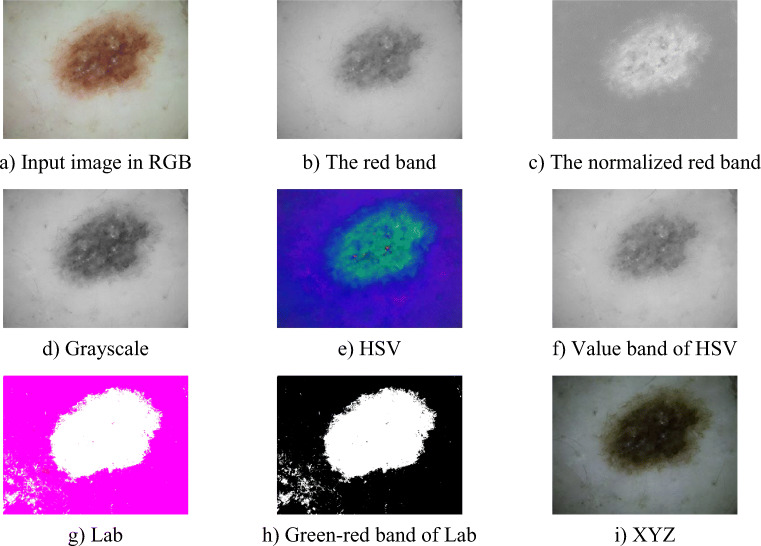

Figure 2 shows the skin lesions as represented by the red band, the normalised red band and other colour models. As can be seen, the lesion region shown by the normalised red band is more exact than that shown by the red band, in which the low-intensity regions of skin lesions are lost. The representations by other colour models such as grayscale, HSV, Lab and XYZ cannot remove shadow and shading effects, or they include some false detections (in the case of Lab). The normalised red band also reveals the very low-intensity regions of the skin lesions and excludes shadow, shading and other illumination effects. Hence, the skin lesions and the background skin regions are clearly separated, and the colours of the skin region are, in general, homogeneous. We evaluate the homogeneity by using the grey level co-occurrence matrix (GLCM) [26]:

$$\mathrm{Homogeneity} = \sum_{i,j=0}^{N-1} \frac{P_{i,j}}{1 + (i - j)^2},$$

where $P_{i,j}$ is the element at location (i, j) of the normalised symmetrical GLCM and N is the size of the GLCM.
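As an illustration of how such a homogeneity value can be computed, the sketch below builds a normalised symmetric GLCM for horizontally adjacent pixels and sums its entries weighted by 1 / (1 + (i − j)²). Both the neighbour offset and this particular weighting are assumptions made for the example (other definitions use 1 + |i − j|), and the function name `glcm_homogeneity` is hypothetical.

```python
def glcm_homogeneity(img, levels):
    """Homogeneity of the normalised symmetric GLCM for the horizontal neighbour offset.

    img is a nested list of integer grey levels in range(levels).
    """
    glcm = [[0.0] * levels for _ in range(levels)]
    for row in img:
        for a, b in zip(row, row[1:]):
            glcm[a][b] += 1.0  # pair (left, right)
            glcm[b][a] += 1.0  # mirrored pair makes the matrix symmetric
    total = sum(sum(r) for r in glcm)
    if total == 0:
        raise ValueError("image needs at least two columns")
    # Assumed weighting: 1 / (1 + (i - j)^2), applied to the normalised entries.
    return sum(
        glcm[i][j] / total / (1 + (i - j) ** 2)
        for i in range(levels)
        for j in range(levels)
    )


flat = [[1, 1, 1], [1, 1, 1]]     # perfectly uniform region
stripes = [[0, 3, 0], [3, 0, 3]]  # strongly alternating region
print(glcm_homogeneity(flat, 4) > glcm_homogeneity(stripes, 4))  # True
```

A uniform region gives a homogeneity of 1, and the value drops as neighbouring grey levels differ more.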

Fig. 2.

Skin lesions presentation by the red band, the normalised red band, grayscale, HSV, Lab and XYZ models

Note that this is helpful in applying thresholding-based segmentation methods or morphological segmentation methods.

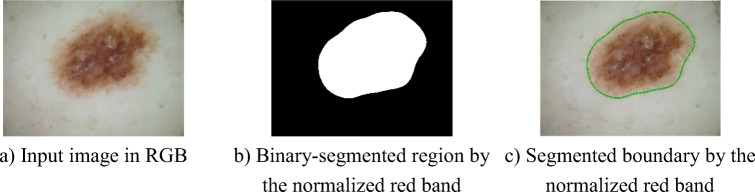

Figure 3 shows the skin lesion segmentation result based on the normalised red band. In this case, we smoothed the image with a Gaussian kernel. As can be seen, the low-intensity regions around the skin lesions are also segmented, which is necessary for assessing the features of the skin lesion by the ABCD rule. The global threshold is evaluated by the Otsu method.

Fig. 3.

Skin lesions segmentation by the normalised red band

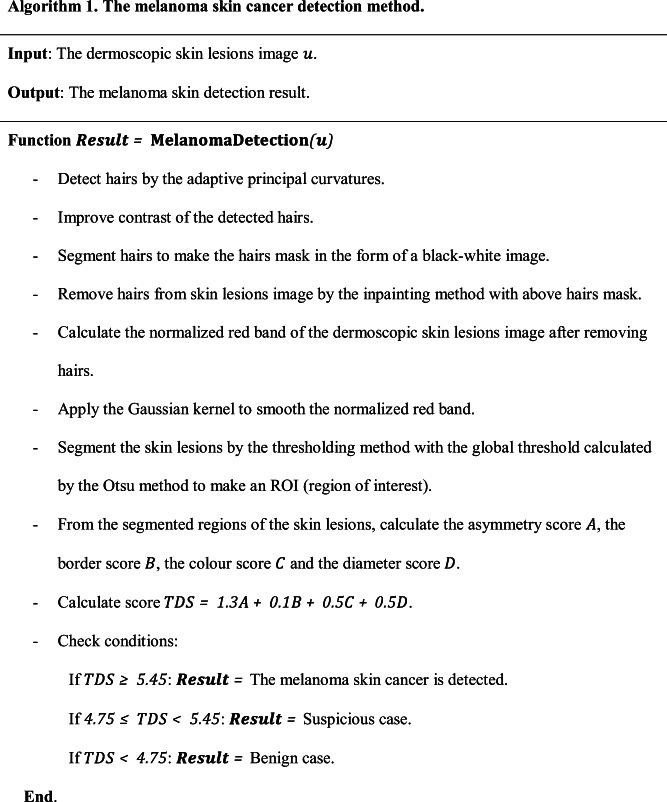

Detection Stage—Feature Extraction and Score Evaluation

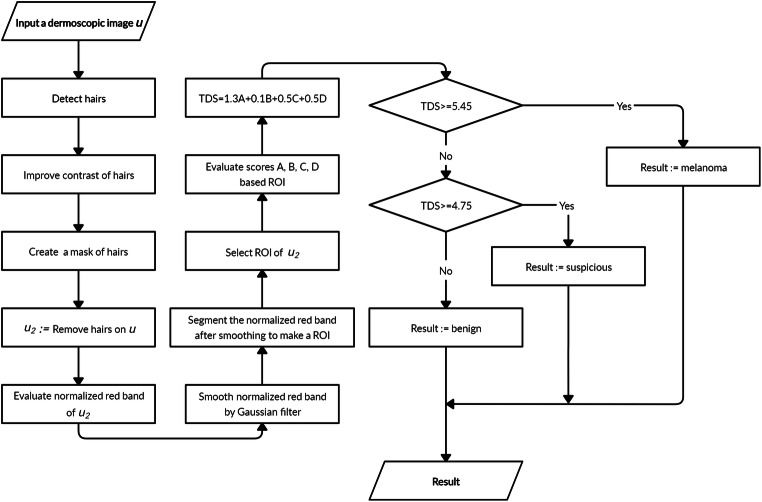

In this stage, following the ABCD rule [6], we extract features to evaluate the total dermatoscopic score (TDS score). The ABCD rule is used for differentiating benign from malignant melanocytic tumours. It evaluates the TDS score from four features: A (asymmetry), B (border), C (colour) and D (diameter). The TDS score is a linear combination of the feature scores:

$$\mathrm{TDS} = aA + bB + cC + dD,$$

where A, B, C, D are the scores of asymmetry, border, colour and diameter, respectively, and a, b, c, d are the corresponding impact coefficients. By the ABCD rule, the values of the impact coefficients are fixed as a = 1.3, b = 0.1, c = 0.5, d = 0.5.

The ABCD rule classifies a skin lesion by the following conditions: if TDS ≥ 5.45, the skin lesion is malignant (cancerous); if 4.75 ≤ TDS < 5.45, the skin lesion is suspicious (needs further examination); if TDS < 4.75, it is a benign melanocytic lesion (safe). The partial scores are evaluated by extracting the corresponding features: asymmetry, border, colour and diameter.
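The scoring and decision rule can be sketched in a few lines. The coefficients and thresholds below are the ones stated in the text; the function names and the partial scores in the example are hypothetical and do not correspond to any image in the dataset.

```python
def tds_score(asymmetry, border, colour, diameter,
              a=1.3, b=0.1, c=0.5, d=0.5):
    """Total dermatoscopic score as a weighted sum of the four partial scores."""
    return a * asymmetry + b * border + c * colour + d * diameter


def classify_tds(tds):
    """Map a TDS value to the three categories of the ABCD rule."""
    if tds >= 5.45:
        return "malignant"
    if tds >= 4.75:
        return "suspicious"
    return "benign"


# Hypothetical partial scores, chosen only to illustrate the arithmetic:
# 1.3 * 2 + 0.1 * 5 + 0.5 * 4 + 0.5 * 3 = 6.6
score = tds_score(asymmetry=2, border=5, colour=4, diameter=3)
print(classify_tds(score))  # malignant
```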

The asymmetry

The asymmetry score of a skin lesion is evaluated from both the contour asymmetry and the asymmetry of the distribution of dermoscopic colours and structures. The asymmetry is evaluated on either side of each of the two axes for the colours, the structures and the contour of the lesion. If there is no asymmetry about either axis, the asymmetry score is 0. If there is asymmetry about one axis, the asymmetry score is 1. If there is asymmetry about both axes, the asymmetry score is 2. The impact factor of the asymmetry score in the TDS score is a = 1.3.

The border

The border score is evaluated according to whether the pigment pattern at the periphery of the skin lesion has a sharp, abrupt cut-off or a gradual, indistinct cut-off. To this end, the lesion is divided into eight segments, and the border score is the number of segments with a sharp, abrupt cut-off. The minimum border score is 0 and the maximum border score is 8. The impact factor of the border score in the TDS score is b = 0.1.

The colour

To determine the colour score, the following colours are considered: white, red, light brown, dark brown, blue-grey and black. Light and dark brown reflect melanin localised mainly in the epidermis and/or superficial dermis; black represents melanin in the upper granular layer, the stratum corneum or all layers of the epidermis; blue-grey corresponds to melanin in the papillary dermis; white corresponds to areas of regression; and red reflects the degree of inflammation or neovascularization. Each of these colours that is present in the lesion increases the colour score by 1. The maximum colour score is 6 and the minimum colour score is 1. The impact factor of the colour score in the TDS score is c = 0.5.

The diameter

If the diameter of the skin lesion region is greater than 6 mm, it is a warning sign of malignancy. The diameter score is evaluated based on the lesion size. The diameter of a skin lesion is evaluated as the maximum distance between two pixels of the lesion. In practice, to evaluate the diameter, we need to know the resolution of the image. Each 1 mm of diameter adds 1 to the diameter score. The impact factor of the diameter score in the TDS score is d = 0.5. The proposed melanoma skin cancer detection method is presented in Algorithm 1. The corresponding overall flowchart is presented in Fig. 4.
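The definition of the diameter as the maximum distance between two lesion pixels can be sketched as follows. This is a brute-force illustration on a small binary mask, not the procedure used in the experiments; the function name `lesion_diameter_mm` and the physical pixel size `mm_per_pixel` are assumptions, since the pixel size must come from the image resolution or metadata.

```python
import math
from itertools import combinations


def lesion_diameter_mm(mask, mm_per_pixel):
    """Maximum distance between any two lesion pixels, converted to millimetres.

    mask is a nested list of 0/1 values; mm_per_pixel is the assumed pixel size.
    The pairwise search is quadratic in the number of lesion pixels, so a real
    image would normally be reduced to its boundary pixels first.
    """
    pixels = [(r, c) for r, row in enumerate(mask)
              for c, value in enumerate(row) if value]
    if len(pixels) < 2:
        return 0.0
    max_dist = max(math.hypot(p[0] - q[0], p[1] - q[1])
                   for p, q in combinations(pixels, 2))
    return max_dist * mm_per_pixel


# Toy mask (assumption): the farthest pair is (0, 0) and (3, 4), 5 pixels apart.
mask = [
    [1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 1, 1, 0],
    [0, 0, 0, 0, 1],
]
print(lesion_diameter_mm(mask, mm_per_pixel=0.5))  # 2.5
```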

Fig. 4.

Flowchart of the proposed melanoma skin cancer detection method

Experimental Results and Discussion

We implemented the proposed melanoma skin cancer detection method and ran the experiments in MATLAB 2018b.

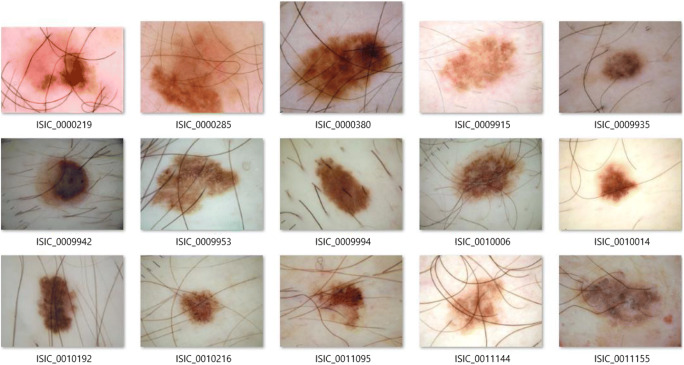

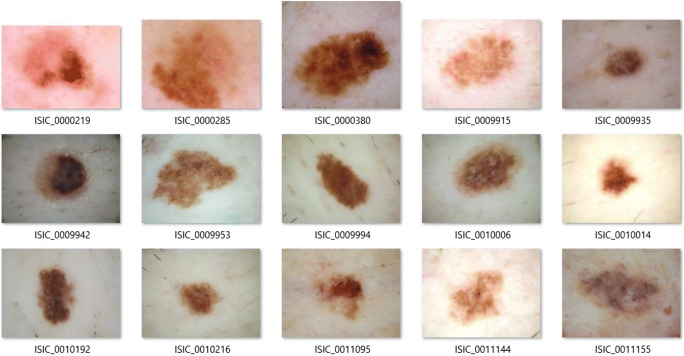

The Test Set of Dermoscopic Images

To test the proposed method in full (with all three stages), we select a subset of images from the dataset. The dermoscopic images are selected randomly, but they must satisfy two conditions: (1) the selected images must contain hairs, and (2) the skin lesions of the selected images must cover all kinds: melanoma, suspicious and benign. Because the proposed method is not a learning-based method, we select only 15 images for testing. In principle, the proposed method can be extended to a larger subset of images. Another reason for choosing 15 images is that the dermoscopic images of the ISIC dataset have various resolutions and aspect ratios, which makes it very difficult to evaluate the diameter of a lesion. In our experiments, we extract the diameter score from the metadata. If all dermoscopic images had the same resolution and aspect ratio, the diameter could be evaluated directly. We note that the diameter of the skin lesion is the maximum distance between two pixels of the segmented region. Next, we convert all selected images to the PNG format, because JPEG compression reduces the quality of post-processing tasks [27, 28]. The JPEG format uses lossy compression, which can influence the accuracy and performance of the segmentation task as well as of feature extraction and the evaluation of the skin lesion score. Although this conversion does not improve the quality of the images, it helps to avoid additional artefacts that would affect the feature extraction. All selected images are presented in Fig. 5.

Fig. 5.

The selected dermoscopic skin lesions images for the tests

Error Metrics

The proposed melanoma skin cancer detection method has three stages: hair detection and removal, skin lesion segmentation and TDS evaluation. For hair detection and removal, no well-defined ground truth is available, so we cannot quantitatively assess the quality of this task. For the segmentation task, we use the following metrics [29, 30]: accuracy, sensitivity, specificity, the Sorensen-Dice score and the Jaccard score.

Accuracy, Sensitivity and Specificity

Accuracy (%) [29] measures how well a binary segmentation method correctly identifies or excludes a condition:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN},$$

where TP, TN, FP, FN denote the numbers of true positives, true negatives, false positives and false negatives, respectively. They are basic statistical indices that can be evaluated from the predicted segmentation result and the ground truth. Note that the ground truth of the ISIC dataset was produced by experienced dermatologists.

Sensitivity (%) [29] is the proportion of real positives that are correctly identified:

$$\mathrm{Sensitivity} = \frac{TP}{TP + FN}.$$

Specificity (%) [29] is the proportion of real negatives that are correctly identified:

$$\mathrm{Specificity} = \frac{TN}{TN + FP}.$$

The Sorensen-Dice Similarity (Dice Score, F1-Score)

The Sorensen-Dice similarity [29] is another way to measure segmentation accuracy and is computed as follows:

$$\mathrm{Dice} = \frac{2\,TP}{2\,TP + FP + FN}.$$

The value of the Dice score is between 0 and 1 (or from 0 to 100%). The higher the Dice score, the better the image segmentation result.

The Jaccard Similarity

The Jaccard score [30] is related to the Dice similarity as follows:

$$\mathrm{Jaccard} = \frac{\mathrm{Dice}}{2 - \mathrm{Dice}}.$$

The value of the Jaccard score ranges from 0 to 100%. The higher the Jaccard score, the better the image segmentation result.

Note that in many works about medical image segmentation including skin lesion segmentation, the Jaccard score is the most important metric to measure the segmentation result quality [30].
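The five segmentation metrics can be computed together from the pixel-wise confusion counts. The sketch below is illustrative only: it assumes the predicted and ground-truth masks are nested lists of 0/1 values of equal size, and the function name `segmentation_metrics` and the toy masks are hypothetical.

```python
def segmentation_metrics(pred, truth):
    """Accuracy, sensitivity, specificity, Dice and Jaccard from two binary masks."""
    tp = tn = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1
            elif p and not t:
                fp += 1
            elif not p and t:
                fn += 1
            else:
                tn += 1

    def ratio(num, den):
        # Undefined ratios (empty denominator) are reported as NaN.
        return num / den if den else float("nan")

    return {
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "jaccard": ratio(tp, tp + fp + fn),
    }


# Toy masks (assumption): TP = 2, FP = 1, FN = 0, TN = 3.
pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 1, 0], [0, 0, 0]]
m = segmentation_metrics(pred, truth)
print(round(m["dice"], 3), round(m["jaccard"], 3))  # 0.8 0.667
```

The Jaccard value computed from the counts agrees with Dice / (2 − Dice), which is a quick consistency check on any reported pair of scores.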

For the melanoma detection task, there is a given ground truth in a CSV file. Hence, we can use this ground truth to assess the accuracy of the proposed melanoma detection method. This ground truth is only classified into two groups: melanoma and the benign/suspicious case.

Test Cases and Discussion

The Hair Detection and Removal

As explained above, there is no ground truth for the hair removal task, so we do not report its accuracy here. The quality of hair removal can only be assessed visually.

The result of the hair removal task of all selected images is presented in Fig. 6. As can be seen, all hairs were removed with high accuracy.

Fig. 6.

The hair removal task of all selected images

Regarding the execution time of this stage, hair detection takes only 0.2 s. We note that the quality of all selected images is higher than HD+ (high definition, with a resolution higher than 720 dpi), so this result is very good. For hair removal, since we use an iterative adaptive inpainting method based on the Mumford-Shah model, the final performance depends on the tolerance level. In the future, we plan to investigate non-iterative inpainting methods that can obtain similar performance.

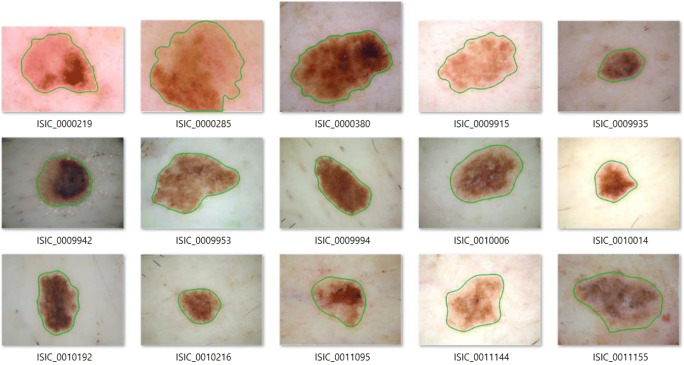

The Skin Lesions Segmentation

We run the skin lesion segmentation task on all selected images. First, we evaluate the normalised red band. Second, we smooth the normalised red band with a Gaussian kernel so that the contours of the segmented regions become smoother. Third, we use the Otsu method to evaluate the global threshold and convert the image to a black-and-white (binary) image. Finally, we apply some filters to remove small segments and defects.
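The thresholding step can be illustrated with a small, self-contained version of the Otsu method, which picks the grey level that maximises the between-class variance of the histogram. The sketch covers only this third step, not the normalised red band or the smoothing, and operates on a flat list of integer intensities. The function name `otsu_threshold` and the toy intensities are assumptions, and which side of the threshold corresponds to the lesion depends on the band being thresholded.

```python
def otsu_threshold(values, levels=256):
    """Global Otsu threshold t; values <= t form one class, values > t the other."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    total = len(values)
    sum_all = sum(i * h for i, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels with intensity <= t
    sum0 = 0    # sum of their intensities
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0:
            continue
        if w1 == 0:
            break
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


# Toy intensities (assumption): a dark cluster near 10 and a bright cluster near 205.
values = [10] * 6 + [12] * 4 + [200] * 5 + [210] * 5
t = otsu_threshold(values)
binary = [1 if v > t else 0 for v in values]  # black-and-white image as a flat list
print(12 <= t < 200, sum(binary))  # True 10
```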

Figure 7 presents the segmented skin lesions of all selected images. The segmented regions are bounded by the green lines. As can be seen, the segmented results are very good. The skin lesions with very low intensity are also segmented.

Fig. 7.

The segmentation result of all selected images

Table 1 shows the Dice score, the Jaccard score, accuracy, sensitivity and specificity of the segmentation results of the skin lesion images by the proposed colour normalisation method. The best Dice score is 98.2%, the best Jaccard score is 96.6% and the best accuracy is 99.6%. On average, the proposed method achieves an accuracy of 96.6%.

Table 1.

The error metric by the Sorensen-Dice score and Jaccard score for all selected dermoscopic skin lesions images

| Image ID | Dice | Jaccard | Accuracy | Sensitivity | Specificity |
|---|---|---|---|---|---|
| ISIC_0000219 | 0.957890 | 0.919183 | 0.97182 | 0.92477 | 0.99678 |
| ISIC_0000285 | 0.906199 | 0.828486 | 0.90343 | 0.99743 | 0.82084 |
| ISIC_0000380 | 0.965933 | 0.934110 | 0.97531 | 0.96881 | 0.97899 |
| ISIC_0009915 | 0.968396 | 0.938728 | 0.9815 | 0.95803 | 0.99136 |
| ISIC_0009935 | 0.982479 | 0.965562 | 0.99631 | 0.97859 | 0.9984 |
| ISIC_0009942 | 0.959263 | 0.921715 | 0.98319 | 0.92172 | 1 |
| ISIC_0009953 | 0.865455 | 0.762822 | 0.90309 | 0.76282 | 1 |
| ISIC_0009994 | 0.974861 | 0.950954 | 0.99059 | 0.9735 | 0.99453 |
| ISIC_0010006 | 0.887868 | 0.798348 | 0.94429 | 1 | 0.92853 |
| ISIC_0010014 | 0.950408 | 0.905502 | 0.98925 | 0.99893 | 0.98814 |
| ISIC_0010192 | 0.974285 | 0.949859 | 0.99031 | 0.97872 | 0.99299 |
| ISIC_0010216 | 0.970316 | 0.942343 | 0.99393 | 0.99022 | 0.99434 |
| ISIC_0011095 | 0.930935 | 0.870794 | 0.97703 | 0.98456 | 0.97563 |
| ISIC_0011144 | 0.927802 | 0.865327 | 0.96841 | 0.97783 | 0.96594 |
| ISIC_0011155 | 0.857128 | 0.749977 | 0.91646 | 1 | 0.88852 |
| Average | 0.938615 | 0.886914 | 0.965661 | 0.961062 | 0.967666 |

Table 2 compares the average Dice score and the average Jaccard score of the following methods: the Yuan [18] method based on fully convolutional-deconvolutional networks, the Berseth [17] method and the Rashika et al. [31] method based on U-Net networks, the Bi et al. [32] method based on deep residual networks, and the proposed method. On the above collection of images, the proposed method gives the highest average Dice and average Jaccard scores, that is, the best segmentation result. We note that the comparison is only relative. Since the proposed method is not based on machine learning, while the other methods are based on deep learning, a direct comparison is not entirely fair. Moreover, the deep learning models were pre-trained on various subsets of images of the ISIC dataset [31]. In practice, if all dermoscopic images of the ISIC dataset had the same resolution, we could extend the proposed method to test on all images.

Table 2.

Comparison of average Dice score and average Jaccard score of the methods

Melanoma Detection

For the melanoma detection stage, we use the ABCD rule as presented above. The A, B and C scores are evaluated following the description of the ABCD rule. For the D score, since the ratio between the real size of a skin lesion and its size in the image differs from case to case, we cannot evaluate the diameter reliably from the image alone. Hence, we extract the diameter of skin lesions from the metadata of the dermoscopic images.

Figure 8 presents the melanoma detection results. The first image is a melanoma case, the second a suspicious case and the third a benign case. For the melanoma case, the skin lesion region is asymmetric about both axes, there are many cut-offs on the border and there are many colours in the lesion. In this particular case, the diameter is 4 mm. The TDS score is 5.9, which is greater than the 5.45 threshold.

Fig. 8.

Melanoma skin detection results

Table 3 presents the number of melanoma detection cases. Among the 15 selected images, two cases are diagnosed as melanoma skin cancer, 8 cases are suspicious and 5 are benign. The number of detected melanoma cases is the same as in the ground truth. Because the ground truth does not distinguish between suspicious and benign cases, we cannot report an accuracy for these two classes. However, the total number of suspicious and benign cases is 13, which matches the ground truth.

Table 3.

The result of the melanoma skin cancer detection by the proposed method

| Criteria | Melanoma | Suspicious | Benign |
|---|---|---|---|
| Case | 2 | 8 | 5 |
| Accuracy | 100% | - | - |
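Because the ground truth is binary while the ABCD rule yields three classes, the comparison requires merging the suspicious and benign predictions into the non-melanoma class. The sketch below shows one way to do this; the function names are hypothetical, and the order of the labels is invented for illustration (only the counts 2, 8 and 5 come from Table 3).

```python
def to_binary(label):
    """Map a three-class prediction to the binary ground-truth convention (1 = melanoma)."""
    return 1 if label == "melanoma" else 0


def detection_accuracy(predicted_labels, truth_binary):
    """Fraction of images whose binarised prediction matches the ground truth."""
    if len(predicted_labels) != len(truth_binary):
        raise ValueError("prediction and ground truth must have the same length")
    hits = sum(to_binary(p) == t for p, t in zip(predicted_labels, truth_binary))
    return hits / len(truth_binary)


# Counts from Table 3; the ordering of the cases is an assumption.
predicted = ["melanoma"] * 2 + ["suspicious"] * 8 + ["benign"] * 5
truth = [1] * 2 + [0] * 13
print(detection_accuracy(predicted, truth))  # 1.0
```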

In terms of performance, the melanoma detection task is very fast: it takes under 1 s to process each image.

Across all stages, the proposed melanoma skin cancer detection method takes about 15 s to process a high-resolution (HD+) dermoscopic image. If faster inpainting methods [33–35] are used to remove hairs at the pre-processing stage, the overall processing time of the proposed method will decrease.

Conclusions

In this article, we have proposed a melanoma skin cancer detection method. The proposed method includes three stages: hair detection and removal by the adaptive principal curvature, skin lesion segmentation by colour normalisation, and melanoma detection by extracting features of the skin lesion with the ABCD rule. The adaptive principal curvature is very useful for detecting hairs. The colour normalisation is very effective for detecting skin lesions with very low intensity, and it also excludes shadow and shading effects. The ABCD rule is used for evaluating the TDS score to detect melanoma. On the selected images, the accuracy and performance of every task (hair detection, skin lesion segmentation and melanoma detection), as well as of the overall method, are very good. A limitation of the proposed method is that we tested it only on a subset of images: since the resolution of the images of the ISIC dataset varies, it is difficult to define the diameters of skin lesions across images, so we need to extract the diameters from the metadata. However, this issue can be solved if a ruler is added to all images or if all images are acquired at the same resolution. In future, we will investigate deep-learning models and fuse them with the particular features used here for melanoma skin cancer detection to further improve the accuracy of every task, and we plan to run the training process on a larger collection of images obtained across different sites.

Compliance with Ethical Standards

Conflict of Interest

The authors declare that they have no conflict of interest.


References

- 1.“Melanoma: Statistics,” American Cancer Society, Jul. 2016. [Online]. Available: https://www.cancer.net/cancer-types/melanoma/statistics. Accessed 6 Nov. 2018

- 2.“Melanoma skin cancer,” European Commission, 2017. [Online]. Available: https://ec.europa.eu/research/health/pdf/factsheets/melanoma_skin_cancer.pdf. Accessed 6 Nov. 2018

- 3.Seyed HH, Mohammadamin D. Review of cancer from perspective of molecular. Journal of Cancer Research and Practice. 2017;4(4):127–129. doi: 10.1016/j.jcrpr.2017.07.001. [DOI] [Google Scholar]

- 4.Yu Lequan, Chen Hao, Dou Qi, Qin Jing, Heng Pheng-Ann. Automated Melanoma Recognition in Dermoscopy Images via Very Deep Residual Networks. IEEE Transactions on Medical Imaging. 2017;36(4):994–1004. doi: 10.1109/TMI.2016.2642839. [DOI] [PubMed] [Google Scholar]

- 5.Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, Thrun S. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.M. Kunz and W. Stolz, “ABCD rule,” Dermoscopedia Organization, 17 Jan. 2018. [Online]. Available:https://dermoscopedia.org/ABCD_rule. Accessed 11 Nov. 2018

- 7.E. Bernart, J. Scharcanski and S. Bampi, “Segmentation and classification of melanocytic skin lesions using local and contextual features,” in 2016 IEEE International Conference on Image Processing (ICIP), Phoenix, 2016.

- 8.Wong A, Clausi DA. Segmentation of Skin Lesions From Digital Images Using Joint Statistical Texture Distinctiveness. IEEE Transactions on Biomedical Engineering. 2014;61(4):1220–1230. doi: 10.1109/TBME.2013.2297622. [DOI] [PubMed] [Google Scholar]

- 9.Iyatomi H, Celebi ME, Schaefer G, Tanakad M. Automated color calibration method for dermoscopy images. Computerized Medical Imaging and Graphics. 2011;35(2):89–98. doi: 10.1016/j.compmedimag.2010.08.003. [DOI] [PubMed] [Google Scholar]

- 10.A. A. A. Al-abayechi, X. Guo, W. H. Tan and H. A. Jalab, “Automatic skin lesion segmentation with optimal colour channel from dermoscopic images,” ScienceAsia, vol. 40S, pp. 1–7, 2014. [DOI] [PubMed]

- 11.D. N. H. Thanh, U. Erkan, V. B. S. Prasath, V. Kumar and N. N. Hien, “A Skin Lesion Segmentation Method for Dermoscopic Images Based on Adaptive Thresholding with Normalization of Color Models,” in IEEE 2019 6th International Conference on Electrical and Electronics Engineering, Istanbul, 2019.

- 12.D. N. H. Thanh, N. N. Hien, V. B. S. Prasath, U. Erkan, K. Adytia: Adaptive Thresholding Skin Lesion Segmentation with Gabor Filters and Principal Component Analysis,” in The 4th International Conference on Research in Intelligent and Computing in Engineering RICE'19, Hanoi, 2019

- 13.D. N. H. Thanh, N. N. Hien, V. B. S. Prasath, L. T. Thanh and N. H. Hai, “Automatic Initial Boundary Generation Methods Based on Edge Detectors for the Level Set Function of the Chan-Vese Segmentation Model and Applications in Biomedical Image Processing,” in The 7th International Conference on Frontiers of Intelligent Computing: Theory and Application (FICTA-2018), Danang, 2018.

- 14.Z. Ma and J. M. R. S. Tavares, “Segmentation of Skin Lesions Using Level Set Method,” in Computational Modeling of Objects Presented in Images. Fundamentals, Methods, and Applications (Lecture Notes in Computer Science, vol 8641), Springer, 2014, pp. 228–233.

- 15.Wong A, Scharcanski J, Fieguth P. Automatic Skin Lesion Segmentation via Iterative Stochastic Region Merging. IEEE Transactions on Information Technology in Biomedicine. 2011;15(6):929–936. doi: 10.1109/TITB.2011.2157829.

- 16.Al-Masni MA, Al-Antari MA, Choi MT, Han SM, Kim TS. Skin lesion segmentation in dermoscopy images via deep full resolution convolutional networks. Computer Methods and Programs in Biomedicine. 2018;162:221–231. doi: 10.1016/j.cmpb.2018.05.027.

- 17.M. Berseth, “ISIC 2017-Skin Lesion Analysis Towards Melanoma,” arXiv:1703.00523, 2017.

- 18.Y. Yuan, “Automatic skin lesion segmentation with fully convolutional-deconvolutional networks,” arXiv:1703.05165, 2017.

- 19.L. Bi, J. Kim, E. Ahn, D. Feng and M. Fulham, “Semi-automatic skin lesion segmentation via fully convolutional networks,” in 2017 IEEE 14th International Symposium on Biomedical Imaging (ISBI 2017), Melbourne, 2017.

- 20.Jaisakthi SM, Mirunalini P, Aravindan C. Automated skin lesion segmentation of dermoscopic images using GrabCut and k-means algorithms. IET Computer Vision. 2018;12(8):1088–1095. doi: 10.1049/iet-cvi.2018.5289.

- 21.Burdick J, Marques O, Weinthal J, Furht B. Rethinking Skin Lesion Segmentation in a Convolutional Classifier. Journal of Digital Imaging. 2018;31(4):435–440. doi: 10.1007/s10278-017-0026-y.

- 22.D. N. H. Thanh, V. B. S. Prasath, N. V. Son and L. M. Hieu, “An Adaptive Image Inpainting Method Based on the Modified Mumford-Shah Model and Multiscale Parameter Estimation,” Computer Optics, vol. 42, no. 6, 2018.

- 23.H. Deng, W. Zhang, E. Mortensen, T. Dietterich and L. Shapiro, “Principal Curvature-Based Region Detector for Object Recognition,” in IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, 2007.

- 24.A. F. Frangi, W. J. Niessen, K. L. Vincken and M. A. Viergever, “Multiscale Vessel Enhancement Filtering,” in Medical Image Computing and Computer-Assisted Intervention (MICCAI’98), Cambridge, 1998.

- 25.Sato Y, Nakajima S, Shiraga N, Atsumi H. Three-dimensional multi-scale line filter for segmentation and visualization of curvilinear structures in medical images. Medical Image Analysis. 1998;2(2):143–168. doi: 10.1016/S1361-8415(98)80009-1.

- 26.Haralick RM, Shanmugam K, Dinstein I. Textural Features for Image Classification. IEEE Transactions on Systems, Man, and Cybernetics. 1973;SMC-3:610–621. doi: 10.1109/TSMC.1973.4309314.

- 27.Lau WL, Li ZL, Lam KWK. Effects of JPEG compression on image classification. International Journal of Remote Sensing. 2003;24(7):1535–1544. doi: 10.1080/01431160210142842.

- 28.Elkholy M, Hosny MM, El-Habrouk HMF. Studying the effect of lossy compression and image fusion on image classification. Alexandria Engineering Journal. 2019;58:143–149. doi: 10.1016/j.aej.2018.12.013.

- 29.C. Gabriela, L. Diane and P. Florent, “What is a good evaluation measure for semantic segmentation,” in The British Machine Vision Conference, Bristol, 2013.

- 30.Abdel AT, Allan H. Metrics for evaluating 3D medical image segmentation: analysis, selection, and tool. BMC Medical Imaging. 2015;15:1–29. doi: 10.1186/s12880-015-0042-7.

- 31.M. Rashika and D. Ovidiu, “Deep Learning for Skin Lesion Segmentation,” in IEEE International Conference on Bioinformatics and Biomedicine (BIBM 2017), Kansas City, 2017.

- 32.L. Bi, J. Kim, E. Ahn and D. Feng, “Automatic Skin Lesion Analysis using Large-scale Dermoscopy Images and Deep Residual Networks,” arXiv:1703.04197, 2017.

- 33.N. H. Hai, L. M. Hieu, D. N. H. Thanh, N. V. Son, V. B. S. Prasath, “An Adaptive Image Inpainting Method Based on the Weighted Mean,” Informatica, vol. 43, no. 4, 2019 (In press).

- 34.D. N. H. Thanh, V. B. S. Prasath, L. M. Hieu, H. Kawanaka, “An Adaptive Image Inpainting Method Based on the Weighted Mean,” in 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Spokane, 2019.

- 35.D. N. H. Thanh, N. V. Son, V. B. S. Prasath, “Distorted Image Reconstruction Method with Trimmed Median,” in 2019 3rd International Conference on Recent Advances in Signal Processing, Telecommunications & Computing (SigTelCom), Hanoi, 2019.