Abstract

The aim of this study was to test an interactive up-to-date meta-analysis (iu-ma) of studies on MRI in the management of men with suspected prostate cancer. Based on the findings of recently published systematic reviews and meta-analyses, two freely accessible dynamic meta-analyses (https://iu-ma.org) were designed using the programming language R in combination with the package “shiny.” The first iu-ma compares the performance of the MRI-stratified pathway and the systematic transrectal ultrasound-guided biopsy pathway for the detection of clinically significant prostate cancer, while the second iu-ma focuses on the use of biparametric versus multiparametric MRI for the diagnosis of prostate cancer. Our iu-mas allow for the effortless addition of new studies and data, thereby enabling physicians to keep track of the most recent scientific developments without having to resort to classical static meta-analyses that may become outdated in a short period of time. Furthermore, the iu-mas enable in-depth subgroup analyses by a wide variety of selectable parameters. Such an analysis is not only tailored to the needs of the reader but is also far more comprehensive than a classical meta-analysis. In that respect, following multiple subgroup analyses, we found that even for various subgroups, detection rates of prostate cancer are not different between biparametric and multiparametric MRI. Secondly, we could confirm the favorable influence of MRI biopsy stratification for multiple clinical scenarios. For the future, we envisage the use of this technology in addressing further clinical questions of other organ systems.

Electronic supplementary material

The online version of this article (10.1007/s10278-019-00312-1) contains supplementary material, which is available to authorized users.

Keywords: Evidence-based medicine, Radiology, Prostate cancer, Magnetic resonance imaging, Meta-analysis

Introduction

The traditional scientific publication process has not substantially changed over the last decades. A rigorous peer-review process will ideally ensure that the results are displayed in an understandable manner and the conclusions are sound. However, publication in paper or online journals also comes with several drawbacks [1]. First, scientific articles are static documents. Especially in a fast-developing and heavily computerized field like radiology [2, 3], consecutive, dynamic updates are needed to keep results in relation to the latest studies. As a prominent example, magnetic resonance imaging (MRI) of the prostate is increasingly gaining importance in the clinical workup of men with suspected prostate cancer [4] and has developed rapidly in recent years. On a technical level, image acquisition may have a substantial influence on the diagnostic accuracy, e.g., by using a field strength of 1.5 or 3.0T [5], or using endorectal vs. body coils. After acquisition, several factors during the interpretation may change diagnostic accuracy, e.g., using different sequences (bi- or multiparametric MRI; bpMRI vs. mpMRI [6–8]) or using different readout systems such as a Likert-like scale or PI-RADS version 1 or version 2 [9].

The main results of a scientific study are summarized in selected tables and figures chosen by the authors at the time of publication, but other relevant information that could be extracted from the raw data and may be applicable to specific patient scenarios or clinical settings is lost or difficult to ascertain. Moreover, data visualization is presented as the end product of a scientific analysis and less as a means to explore the dataset.

In the last decades, the advent of free open-source software, the widespread adoption of the internet, and the massive increase in computing power have led to inexpensive methods that may help overcome some of these limitations. In particular, the statistical programming language R, bundled with the freely available RStudio and shiny software suite, melds data and real-time graphical presentation. The end result is a dynamic website which enables interactive exploration of a dataset from peer-reviewed publications by the user.

Evidence-based medicine (EBM) is defined as “integrating individual clinical expertise with the best available external clinical evidence from systematic research” [10]. Systematic reviews and meta-analyses of randomized controlled clinical trials (RCTs) are the cornerstone and represent the highest level of evidence in EBM [11]. In systematic reviews, all relevant databases are searched with a broadly formulated search query (identification), followed by a manual review of titles, abstracts, and full-texts to exclude non-contributory articles (screening), duplicates, studies with overlapping populations, and those that fall under other predefined exclusion criteria (eligibility evaluation) [12]. The raw results of all eligible studies are either manually extracted from the publication full-texts or acquired from the authors and undergo a pooled statistical analysis (meta-analysis). One of the main strengths of systematic reviews is the larger number of subjects and the consequent high statistical power. A limitation is that results may become less applicable to individual patient care (e.g., specific patient characteristics or health care setting), especially since they are typically peer-reviewed and published as a static article in a scientific journal. This limitation may be mitigated by instead or additionally publishing the data in the form of an interactive website. By hosting an interactive meta-analysis on a freely accessible website, data visualization becomes an exploratory tool and enables the user to investigate relevant subgroups of the data which are directly applicable to their patient and clinical scenario. Furthermore, this way, the underlying data can continuously be updated as new original studies on the topic are published, until a general consensus is found.

It was our hypothesis that an interactive meta-analysis of a wide range of published prostate MRI studies enables selection of relevant subgroups from large datasets in order to gain insights for select groups of patients or caretakers.

Materials and Methods

This study focuses on the use of R (v. 3.6.1; R Foundation for Statistical Computing, Vienna, Austria), combined with RStudio (v. 1.1.442) and shiny (v. 1.1.0; both by RStudio Inc., Boston MA, USA). R is a programming language designed for statistical computing and graphical analysis. At the time of writing (December 2019), it ranks 16th on the TIOBE-Index (https://www.tiobe.com/tiobe-index/), a measure of popularity or frequent usage in programming languages. In this context, popularity is roughly defined as “frequently queried in internet search engines”; a full definition of the index can be found on https://www.tiobe.com/tiobe-index/programming-languages-definition/. Of course, this definition does not explicitly include ease of use or usefulness in a particular scenario, but serves as a mere indicator that R is widely used and has a large peer support base. Though there are other solutions available for interactive, web-based data analysis and visualization, R is free, open-source, and available for all major platforms (Windows, Mac, and Linux). It could thus enable the interested reader to apply the methodology to their own work and may help foster more widespread adoption of interactive meta-analyses. All of the software needed can easily be installed on a local computer (see Supplementary File 1).

Basic Structure of a Shiny App

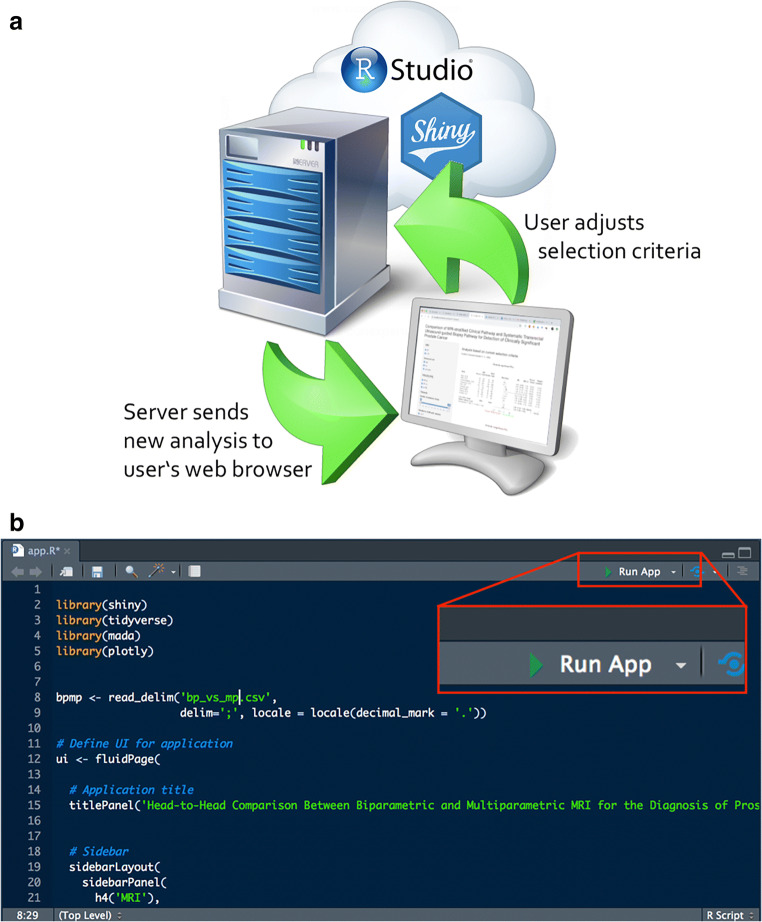

A shiny app consists essentially of two components: the front end (user interface, UI), which is the webpage that the user sees and interacts with, and the back end, which contains the instructions for the server on how to analyze and display the data and how to react to the user inputs (Fig. 1). These two parts can reside either in separate files (ui.R and server.R) or in the same file (app.R). The shiny app itself is written in R, which is then translated on the fly by the Shiny server into HTML, CSS, and JavaScript, and the output is updated with any new user input. In RStudio, the app can be launched by simply clicking the “Run App” button in the upper right corner of the code viewer (Fig. 1b).

Fig. 1.

a Schematic of RStudio/shiny running on a server (“in the cloud”), immediately processing the user’s selections and rendering the new analysis in real time. b The RStudio code window with the “Run App” button, which enables running a shiny app directly out of the R development environment

Systematic Review

To test our approach for interactive meta-analyses, we included the data from two recently published systematic reviews and meta-analyses [13, 14]. In the first review [14], the performance of multiparametric (mpMRI, T2w + DWI + DCE) versus biparametric (bpMRI, T2w + DWI) MRI in diagnosing prostate cancer was assessed.

A systematic search of the PubMed and Embase databases with relevant keywords (such as “prostate,” “MR,” “multiparametric,” “DCE,” “specificity,” etc.) identified a total of 24 diagnostic test accuracy studies with 2668 patients that met the inclusion criteria (large sample size, original research article, providing sufficient information to reconstruct 2 × 2 contingency tables).

All studies included compared the two MRI protocols (biparametric and multiparametric MRI) for prostate cancer diagnosis by utilizing histopathologic findings as the reference standard.

Data from these studies were then extracted based on predefined clinicopathologic, study, and MRI characteristics. Furthermore, the methodologic quality of studies was rated.

For the subsequent analysis, the head-to-head comparison between bpMRI and mpMRI was set as the primary outcome, while a further 26 subgroup analyses stratified by select clinicopathologic, study, and MRI variables were performed and deemed secondary outcomes [14].

Based on 2 × 2 contingency tables from single studies, pooled estimates of sensitivity and specificity for bpMRI and mpMRI were computed with hierarchical logistic regression modeling, including bivariate modeling and hierarchical summary receiver operating characteristic (HSROC) modeling. HSROC analyses model the variability between studies, making it possible to test for the theoretically expected non-independence of sensitivity and specificity between studies [15]. Results were displayed as HSROC curves, an extension of the classical ROC curve displaying the diagnostic performance as a function of sensitivity and specificity. Meta-regression analysis aided in comparing the diagnostic performance between bpMRI and mpMRI.
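As a minimal illustration of the input to such models, the following Python sketch derives per-study sensitivity and specificity from 2 × 2 contingency tables. The study names and counts are hypothetical, and the sketch deliberately stops before pooling: the bivariate and HSROC models described above require a dedicated hierarchical modeling package and are not reproduced here.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    """Counts of a 2 x 2 contingency table against the reference standard."""
    tp: int  # true positives
    fp: int  # false positives
    fn: int  # false negatives
    tn: int  # true negatives


def sensitivity(t: TwoByTwo) -> float:
    # Proportion of reference-standard positives that the test detects.
    return t.tp / (t.tp + t.fn)


def specificity(t: TwoByTwo) -> float:
    # Proportion of reference-standard negatives that the test rules out.
    return t.tn / (t.tn + t.fp)


# Hypothetical counts, for illustration only (not taken from any included study).
studies = {
    "Study A": TwoByTwo(tp=40, fp=10, fn=10, tn=90),
    "Study B": TwoByTwo(tp=30, fp=5, fn=20, tn=45),
}

for name, table in studies.items():
    print(name, round(sensitivity(table), 2), round(specificity(table), 2))
```

These per-study pairs correspond to the points that a forest plot or an HSROC plot would display before any pooled estimate is drawn.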

Concerning the second review [13], the utility of two pathways in detecting prostate cancers was compared by analyzing the results of recently published RCTs. To diagnose prostate cancers, patients can either be examined by magnetic resonance imaging followed by targeted biopsy of the prostate tissue of interest (“MRI-stratified pathway”) or patients can be diagnosed by means of systematic transrectal ultrasound-guided prostate biopsies (TRUS-Bx).

The PubMed, EMBASE, and Cochrane databases were systematically searched by applying the “Population/Intervention/Comparator/Outcomes/Study design” (PICOS) criteria and relevant keywords (e.g., prostate cancer, MRI, target biopsy). RCTs presenting clinically significant prostate cancer (csPCa) detection rates of both pathways in patients with clinically suspected prostate cancer were included. Of the initial 333 articles identified, 9 RCTs with 2908 patients were finally included in the analysis based on the following study exclusion criteria: studies with a very limited number of patients, studies reported as other publication types than RCTs, studies addressing different topics, studies providing limited information thus preventing extraction of cancer detection rates, and finally, studies with overlapping study populations.

The primary outcome was defined as the detection rates of csPCa of the MRI-stratified pathway and the TRUS-Bx pathway and their relative detection rate (detection rate of MRI-stratified pathway divided by detection rate of TRUS-Bx pathway). The comparison of the detection rate and relative detection rate of any prostate cancer (PCa) and clinically insignificant prostate cancers (cisPCa) of both pathways and subgroup analyses for csPCa stratified by select variables were considered secondary outcomes [13].
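The relative detection rate defined above can be sketched for a single trial as follows. The counts are hypothetical, and the 95% confidence interval uses the standard large-sample approximation on the log scale, which is an assumption of this sketch and not necessarily the method used in the original meta-analysis. Pooling across trials would additionally require a meta-analytic model, which is not shown.

```python
import math


def relative_detection_rate(events_mri: int, n_mri: int,
                            events_trus: int, n_trus: int,
                            z: float = 1.96):
    """Return (ratio, lower, upper) for one trial.

    ratio = detection rate of the MRI-stratified pathway divided by the
    detection rate of the TRUS-Bx pathway; the interval is a Wald-type
    95% confidence interval computed on the log scale.
    """
    rate_mri = events_mri / n_mri
    rate_trus = events_trus / n_trus
    ratio = rate_mri / rate_trus
    # Standard error of log(ratio) for two independent binomial samples.
    se_log = math.sqrt(1 / events_mri - 1 / n_mri + 1 / events_trus - 1 / n_trus)
    lower = math.exp(math.log(ratio) - z * se_log)
    upper = math.exp(math.log(ratio) + z * se_log)
    return ratio, lower, upper


# Hypothetical trial: 60 of 200 men with csPCa detected in the MRI-stratified
# arm versus 40 of 200 in the TRUS-Bx arm.
ratio, lower, upper = relative_detection_rate(60, 200, 40, 200)
print(f"{ratio:.2f} [{lower:.2f}; {upper:.2f}]")
```

A ratio above 1 favors the MRI-stratified pathway for the outcome in question; an interval that includes 1 is compatible with no difference between the pathways.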

iu-ma

On the iu-ma.org website, we deployed a simple HTML container page featuring a short introduction and conclusion on the topic, while the actual interactive part/shiny app is included via an inline frame.

We included the following filter criteria, which can be used in any arbitrary combination, in order to enable the user to explore different subgroups of the study population:

MRI field strength (1.5T vs. 3T)

Endorectal coil vs. body coil

DCE temporal resolution

Type of DCE readout

Readout scoring system (PI-RADS v1 vs. PI-RADS v2)

Number of patients in the study

Prospective vs. retrospective study design

Cancer location with respect to the zonal anatomy of the prostate

Suspected vs. biopsy-confirmed cancer prior to MRI

Reference standard (biopsy vs. prostatectomy)

Clinically significant PCa vs. all PCa

Analysis (per patient/lesion/sector)
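The logic of combining such filters can be sketched in a few lines of Python. This is not the app's source code (which is written in R and available in the repository cited in the Results); the records, field names, and the helper `filter_studies` are hypothetical and cover only three of the criteria listed above.

```python
# Hypothetical study records; field names and values are illustrative only.
STUDIES = [
    {"id": "S1", "field_strength": "3T", "endorectal_coil": False, "n_patients": 120},
    {"id": "S2", "field_strength": "1.5T", "endorectal_coil": True, "n_patients": 60},
    {"id": "S3", "field_strength": "3T", "endorectal_coil": True, "n_patients": 200},
    {"id": "S4", "field_strength": "3T", "endorectal_coil": False, "n_patients": 45},
]


def filter_studies(studies, field_strengths=None, endorectal_coil=None, min_patients=0):
    """Keep the studies that satisfy every active filter.

    A filter set to None is inactive, so any combination of criteria
    can be applied, mirroring the idea of freely combinable inputs.
    """
    selected = []
    for study in studies:
        if field_strengths is not None and study["field_strength"] not in field_strengths:
            continue
        if endorectal_coil is not None and study["endorectal_coil"] != endorectal_coil:
            continue
        if study["n_patients"] < min_patients:
            continue
        selected.append(study)
    return selected


# Example scenario: a center using only 3T MRI without an endorectal coil,
# restricted to studies with at least 50 patients.
subgroup = filter_studies(STUDIES, field_strengths={"3T"},
                          endorectal_coil=False, min_patients=50)
print([s["id"] for s in subgroup], sum(s["n_patients"] for s in subgroup))
```

Every downstream plot and table would then be recomputed from the selected subset only, which is why a narrow selection can leave very few studies.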

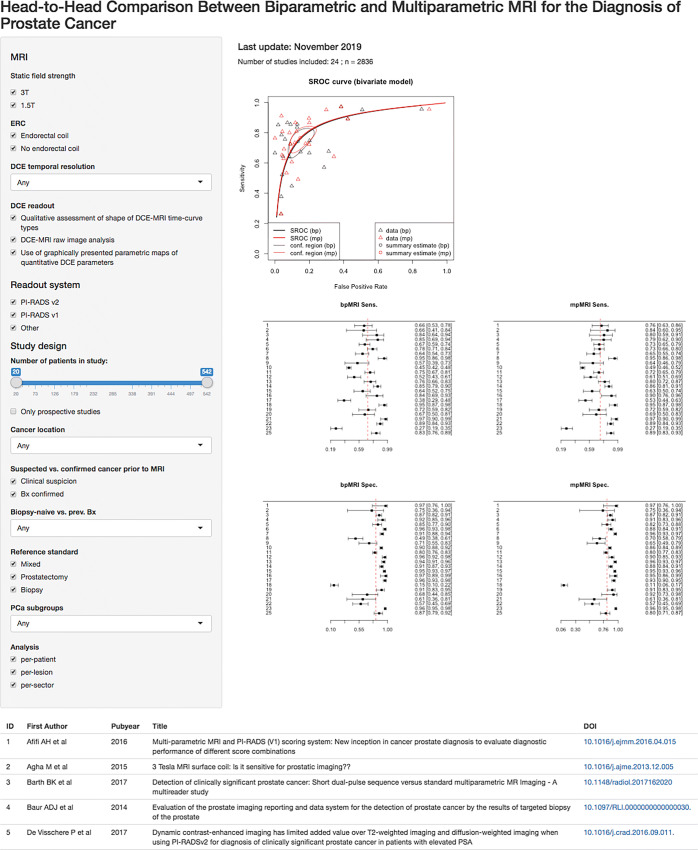

For mutually exclusive (binary) choices such as “clinically significant PCa,” we chose shiny’s selectInput; for non-mutually exclusive variables such as the MRI field strength, we used checkboxGroupInput. The study population selection was implemented with a sliderInput. The user inputs are displayed in a gray bar on the left-hand side of the page (screenshot in Fig. 2). On the right-hand side, we placed the HSROC curves and four forest plots featuring sensitivity and specificity (top and bottom row) of bp vs. mpMRI (left vs. right column). Below, we placed a table with all studies displayed and a Sankey diagram illustrating the study selection process. Sankey diagrams are flow diagrams in which the width of the arrows/lines is proportional to the number of articles included in the respective workflow. All of these elements are dynamic and are updated with every user input. In Fig. 3, we show the example of a user who would like to know whether these results are applicable to their center, where only 3T MRI without endorectal coil is used, with a high temporal DCE resolution and quantitative DCE readout. He or she would see that in the 3 studies (n = 233 patients) applicable to that scenario, the HSROC summary estimates are virtually identical for bp and mpMRI, as are the sensitivity and specificity estimates (in line with the overall conclusion).

Fig. 2.

Screenshot of the iu-ma on biparametric vs. multiparametric MRI in the detection of prostate cancer. The user selections/inputs are in the left gray sidebar, on the basis of which the graphs on the right and the table at the bottom are rendered by the application

Fig. 3.

Example of a subgroup analysis (3T + no ERC + quantitative DCE readout). Note that in comparison with Fig. 2, only three studies are displayed. The overall result, however, remains the same (similar performance of bp and mpMRI)

Due to the danger of discovering false positives when performing numerous subgroup analyses, we refrained from computing p values for the individual steps.

Results

We have successfully created an iu-ma with the live version available at http://www.iu-ma.org/ and the raw data and source code at https://github.com/iu-ma/bpmpmri. A screen capture video of its usage is shown online in the Supplementary File 2. For live display of the latest version, we make use of the service http://shinyapps.io (provided by RStudio Inc.), to which the app can be directly deployed from the RStudio command line. It is also possible to run a shiny server on a dedicated web server, the setup of which, however, is beyond the scope of this paper.

Biparametric vs. Multiparametric MRI

As described in the original paper, a pooled sensitivity and specificity of 0.74 and 0.9 for bpMRI and 0.76 and 0.89 for mpMRI were found (thus, no significant difference was detected between bpMRI and mpMRI). The HSROC AUC was 0.9 and identical between the two MRI protocols. Considerable heterogeneity to a similar extent was detected in both bpMRI and mpMRI. As for the analyses of the 26 subgroups, no significant differences in sensitivity, specificity, or heterogeneity between the MRI protocols were observed [14]. By conducting further subgroup analyses using the interactive tool on the iu-ma webpage, we could confirm this finding, even after including more studies, as we also observed similar diagnostic performance for bpMRI and mpMRI.

MR-Stratified Biopsy Pathway

In the original meta-analysis, the MRI-stratified pathway was found to detect significantly more csPCa than its competitor TRUS-Bx (relative detection rate of 1.45).

The detection rates for cisPCa did not differ significantly between both pathways; however, the MRI-stratified pathway was significantly more successful in detecting any form of prostate cancer (relative detection rate of 1.39). As for the subgroup analyses, the MRI-stratified pathway significantly outperformed the TRUS-Bx pathway when considering only biopsy-naïve (relative detection rate of 1.42) and prior negative biopsy patients (relative detection rate of 1.6). Furthermore, significantly better detection rates were also observed in many other subgroups (i.e., when only considering studies utilizing endorectal coils) [13].

Using the interactive tool on the iu-ma webpage, further extensive subgroup analyses can be performed conveniently, tailored to the needs of certain patients or clinicians. The default setting on the website implied that the MRI-stratified pathway was slightly better in detecting csPCa (risk ratio of 1.35, [0.92; 1.98]) while the TRUS-Bx pathway less often detected cisPCa (1.3, [0.93; 1.84]). By changing the default setting in one category while keeping the other settings constant, further insights showing the performance of subgroups in comparison with the default settings can be gained.

In studies performed on 3T scanners (5 studies with 1841 patients), the risk ratio for csPCa was 1.4 ([0.88; 2.23]) while the risk ratio for cisPCa was 1.19 ([0.9; 1.58]). Meanwhile, in studies performed on 1.5T scanners (2 studies with 355 patients), the risk ratio for csPCa was 1.26 ([0.58; 2.7]) while being 2.2 ([1.18; 4.10]) for cisPCa. Thus, it seems that 3T is more suitable for detecting both csPCa and cisPCa. In contrast, studies on 3T scanners showed less favorable results for the overall detection of PCa in comparison with 1.5T scanners (1.35, [0.93; 1.96] vs. 1.45, [0.79; 2.65]). However, it should be noted that the number of studies/patients at 1.5T is rather low, resulting in wide confidence intervals and potentially spurious differences.

Furthermore, in studies without concurrent systematic biopsies in the MRI-stratified pathway (2 studies with 712 patients), the risk ratio for the detection of csPCa increased (1.35, [0.92; 1.98] vs. 1.82, [1.11; 2.98]) and the risk ratio for the detection of cisPCa decreased (1.3, [0.93; 1.84] vs. 0.44, [0.29; 0.66]) in comparison with studies with biopsies (7 studies with 2196 patients), thus implying that the use of concurrent systematic biopsies in the MRI-stratified pathway is less advantageous. However, the use of biopsies seemed to improve detection rates for all forms of PCa (1.27, [0.73; 2.19] vs. 1.38, [1.03; 1.84]).

As for studies utilizing biopsy core-related information (3 studies with 591 patients), the subgroup analysis indicated that its use was less beneficial. The risk ratios for csPCa (0.95, [0.73; 1.23] vs. 1.93, [1.61; 2.3]) and for all PCa (1.1, [0.96; 1.27] vs. 1.71, [1.18; 2.47]) were lower and the risk ratio for cisPCa (1.48, [0.81; 2.7] vs. 1.13, [0.66; 1.92]) was higher in comparison with studies exclusively using the Gleason score (4 studies with 1605 patients).

Moreover, a subgroup analysis stratified by the PI-RADS version showed that the use of PI-RADS v1.0 (3 studies with 1527 patients) was superior in detecting cisPCa (1.6, [0.93; 2.77] vs. 1.43, [1.12; 1.84]) and all PCa (1.55, [1.07; 2.25] vs. 0.97, [0.82; 1.15]) while PI-RADS v2.0 (2 studies with 700 patients) outperformed v1.0 in detecting clinically significant cancers (1.34, [0.47; 3.85] vs. 0.46, [0.31; 0.68]).

Lastly, we found that MR/US fusion (3 studies with 887 patients) substantially decreased the detection rates of cisPCa (0.85, [0.27; 2.67] vs. 1.25, [0.96; 1.63]).

Discussion

Systematic reviews pool the information from several studies, leading to larger numbers of included patients. Meta-analyses represent a high level of evidence in EBM and allow for generalized inference. However, the heterogeneity of study populations and study designs may limit their applicability to individual patients. This may be especially true in radiological studies, where there are many possible variables related to patient characteristics, technical image acquisition, and standardized interpretation that are difficult to account for in a standard analysis. Although these can be investigated by subgroup evaluations, these are usually limited to a few general subgroups and do not allow for closer examination of various combinations, as the number of combinations may be too large when many variables are included (“combinatorial explosion”). In the present paper, we apply a recently proposed method [16] to present the results of systematic reviews and meta-analyses to radiological studies concerning prostate imaging. We hypothesize that by directly connecting the presentation (i.e., figures and tables) to the underlying data, this tool of visual data exploration may be useful to all stakeholders involved in patient care, i.e., not only radiologists but also referring physicians and patients themselves. Furthermore, the ability to easily update the underlying data table enables researchers to close the gap between the ongoing publication of new clinical studies and systematic summaries.

While the original meta-analyses put their main focus on the comparison between the MRI-stratified pathway and TRUS-Bx for PCa detection in general and within several subgroups, we were able to extend these subgroup analyses with the iu-ma webpage. The authors of the meta-analyses did not focus in particular on the difference between clinically significant and insignificant PCa. By using the iu-ma webpage, we could show that on the one hand, the MRI-stratified pathway is better in detecting csPCa, but on the other hand, also detects a higher rate of cisPCa. Therefore, patients undergoing the MRI-stratified pathway should be aware of the risk of detecting cisPCa.

In the underlying meta-analysis, no dedicated subgroup analysis was performed between studies performed on a 3T or 1.5T scanner. However, this is a very important comparison for institutions that have both scanners available and need to decide where patients should be scanned and for institutions that may think about buying a new scanner. As our subgroup analyses revealed that 3T scanners are more suitable for detecting both csPCa and cisPCa, this may also influence future guidelines and/or clinical practice.

Additionally, the applicability of PI-RADS v1.0 and PI-RADS v2.0 for the evaluation of prostate MRI is still under discussion [17]. Here, our tool could show that PI-RADS v1.0 outperforms PI-RADS v2.0 in detecting cisPCa while PI-RADS v2.0 detects more csPCa. Thus, we suggest using PI-RADS v2.0 as it decreases the detection rate of cisPCa while increasing the detection rate of csPCa.

As a further subgroup analysis, the impact of the image fusion method can be evaluated on our website. The current data suggest that MR/US fusion yields lower (i.e., more favorable) detection rates of cisPCa.

The present paper represents the first step in establishing this tool of visual data exploration of radiological meta-analyses. The next steps include expansion of the meta-analyses on our website to other clinical questions and organ systems. We believe that adding an interactive website may add greatly to most conventionally published meta-analyses. Once we have incorporated more meta-analyses about a wider range of topics into our repository, we plan to assess their impact in a more quantitative manner, e.g., with online usage statistics and questionnaires.

Our work has several limitations that need to be mentioned:

First, we only focused on the interactive display of meta-analyses, whereas most other types of studies (clinical, technical, …) could probably also benefit from additional interactive data display.

Second, for the sake of simplicity and user-friendliness, we only give the user the ability to filter the data according to various criteria but fix the types and number of graphs and tables that can be generated. The rationale is that the novice user may not be familiar with the different graphs and instruments of meta-analyses and may be overwhelmed when given too many options to manipulate the output, thus hindering the usability.

Third, the format requires users to have some basic understanding of biostatistics and the methods used for analysis (an in-depth reading of the underlying paper may suffice for this purpose). Since the user has the potential ability to perform many tests, providing p values for all calculated differences would bear a substantial danger of producing false positive results. Since the number of tests is not known a priori, this becomes impossible to correct. Furthermore, if the user applies very strict selection criteria including only a small number of studies, the power of the meta-analysis may become eroded.
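The scale of this problem can be illustrated with a short calculation. The sketch below assumes that all tests are independent and that every null hypothesis is true, a simplification that does not hold exactly for overlapping subgroups of the same studies; the function name is hypothetical.

```python
def familywise_error_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Probability of at least one false positive among n_tests
    independent tests, each performed at significance level alpha,
    when all null hypotheses are true."""
    return 1.0 - (1.0 - alpha) ** n_tests


# The error rate grows quickly with the number of subgroup comparisons.
for n_tests in (1, 5, 26, 100):
    print(n_tests, round(familywise_error_rate(n_tests), 3))
```

Under these assumptions, 26 uncorrected comparisons at the 0.05 level already carry a chance of roughly three in four of producing at least one false positive, and an interactive tool places no upper bound on the number of comparisons a user can make.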

Next, we focused on the programming language R and the software RStudio and shiny. There are other free (e.g., Python/bokeh) or commercial (e.g., Tableau) software solutions available which enable building interactive, graphical data analysis and could achieve similar results. However, since meta-analyses in epidemiology/medicine are often performed natively in R and the corresponding packages are established and well-validated, R was the natural choice to implement this idea.

Lastly, updating the underlying data table with new original studies cannot be performed fully automatically and still requires the manual work of a qualified researcher. However, the process can be facilitated and accelerated by automatic search alerts [18].

Conclusions

Interactive meta-analyses directly connect figures and tables with the underlying data, which facilitates updating the data as original studies are continuously published. We were able to demonstrate that detection rates of PCa were not different between bpMRI and mpMRI, even when analyzing multiple subgroups and including new studies. Moreover, multiple subgroup analyses confirmed the favorable influence of MRI biopsy stratification for multiple clinical scenarios.

Electronic Supplementary Material

(DOCX 26 kb)

Acknowledgments

The authors would like to thank the R core developer team and the R community.

Funding Information

This research was funded in part through the NIH/NCI Cancer Center Support Grant P30 CA008748. ASB was supported by the Peter Michael Foundation (USA), the Prof. Dr. Max Cloëtta Foundation (CH), medAlumni UZH (CH), and the Swiss Society of Radiology (CH). JK was supported by a grant of the European Society of Radiology (ESOR).

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1. Krumholz HM. The end of journals. Circ Cardiovasc Qual Outcomes. 2015;8:533–534. doi: 10.1161/CIRCOUTCOMES.115.002415.

- 2. Paats A, Alumäe T, Meister E, Fridolin I. Retrospective analysis of clinical performance of an Estonian speech recognition system for radiology: effects of different acoustic and language models. J Digit Imaging. 2018;31:615–621. doi: 10.1007/s10278-018-0085-8.

- 3. Denck J, Landschütz W, Nairz K, Heverhagen JT, Maier A, Rothgang E. Automated billing code retrieval from MRI scanner log data. J Digit Imaging. 2019;32:1103–1111. doi: 10.1007/s10278-019-00241-z.

- 4. Alkadi R, Taher F, El-baz A, Werghi N. A deep learning-based approach for the detection and localization of prostate cancer in T2 magnetic resonance images. J Digit Imaging. 2018;32:793–807. doi: 10.1007/s10278-018-0160-1.

- 5. Soher BJ, Dale BM, Merkle EM. A review of MR physics: 3T versus 1.5T. Magn Reson Imaging Clin N Am. 2007;15:277–290. doi: 10.1016/j.mric.2007.06.002.

- 6. Schimmöller L, Quentin M, Arsov C, Hiester A, Buchbender C, Rabenalt R, Albers P, Antoch G, Blondin D. MR-sequences for prostate cancer diagnostics: validation based on the PI-RADS scoring system and targeted MR-guided in-bore biopsy. Eur Radiol. 2014;24:2582–2589. doi: 10.1007/s00330-014-3276-9.

- 7. Hoeks CMA, Barentsz JO, Hambrock T, Yakar D, Somford DM, Heijmink SWTPJ, Scheenen TWJ, Vos PC, Huisman H, Van Oort IM, Witjes JA, Heerschap A, Fütterer JJ. Prostate cancer: Multiparametric MR imaging for detection, localization, and staging. Radiology. 2011;261:46–66. doi: 10.1148/radiol.11091822.

- 8. Arsov C, Rabenalt R, Blondin D, Quentin M, Hiester A, Godehardt E, Gabbert HE, Becker N, Antoch G, Albers P, Schimmöller L. Prospective randomized trial comparing magnetic resonance imaging (MRI)-guided in-bore biopsy to MRI-ultrasound fusion and transrectal ultrasound-guided prostate biopsy in patients with prior negative biopsies. Eur Urol. 2015;68:713–720. doi: 10.1016/j.eururo.2015.06.008.

- 9. Weinreb JC, Barentsz JO, Choyke PL, Cornud F, Haider MA, Macura KJ, Margolis D, Schnall MD, Shtern F, Tempany CM, Thoeny HC, Verma S. PI-RADS Prostate Imaging - Reporting and Data System: 2015, Version 2. Eur Urol. 2016;69:16–40. doi: 10.1016/j.eururo.2015.08.052.

- 10. Sackett DL. Evidence-based medicine. Semin Perinatol. 1997;21:3–5. doi: 10.1016/S0146-0005(97)80013-4.

- 11. Greenhalgh T: How to Read a Paper, 5th edition. BMJ Books, 2010

- 12. Moher D, Liberati A, Tetzlaff J, Altman DG, Altman DG, Antes G, Atkins D, Barbour V, Barrowman N, Berlin JA, Clark J, Clarke M, Cook D, D’Amico R, Deeks JJ, Devereaux PJ, Dickersin K, Egger M, Ernst E, Gøtzsche PC, Grimshaw J, Guyatt G, Higgins J, Ioannidis JPA, Kleijnen J, Lang T, Magrini N, McNamee D, Moja L, Mulrow C, Napoli M, Oxman A, Pham B, Rennie D, Sampson M, Schulz KF, Shekelle PG, Tovey D, Tugwell P, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. J Chin Integr Med. 2009;7:889–896. doi: 10.3736/jcim20090918.

- 13. Woo Sungmin, Suh Chong Hyun, Eastham James A., Zelefsky Michael J., Morris Michael J., Abida Wassim, Scher Howard I., Sidlow Robert, Becker Anton S., Wibmer Andreas G., Hricak Hedvig, Vargas Hebert Alberto. Comparison of Magnetic Resonance Imaging-stratified Clinical Pathways and Systematic Transrectal Ultrasound-guided Biopsy Pathway for the Detection of Clinically Significant Prostate Cancer: A Systematic Review and Meta-analysis of Randomized Controlled Trials. European Urology Oncology. 2019;2(6):605–616. doi: 10.1016/j.euo.2019.05.004.

- 14. Woo S, Suh CH, Kim SHSYSH, Cho JY, Kim SHSYSH, Moon MH. Head-to-head comparison between biparametric and multiparametric MRI for the diagnosis of prostate cancer: a systematic review and meta-analysis. Am J Roentgenol. 2018;211:W226–W241. doi: 10.2214/AJR.18.19880.

- 15. Harbord Roger M., Whiting Penny, Sterne Jonathan A.C., Egger Matthias, Deeks Jonathan J., Shang Aijing, Bachmann Lucas M. An empirical comparison of methods for meta-analysis of diagnostic accuracy showed hierarchical models are necessary. Journal of Clinical Epidemiology. 2008;61(11):1095–1103. doi: 10.1016/j.jclinepi.2007.09.013.

- 16. Elliott Julian H., Turner Tari, Clavisi Ornella, Thomas James, Higgins Julian P. T., Mavergames Chris, Gruen Russell L. Living Systematic Reviews: An Emerging Opportunity to Narrow the Evidence-Practice Gap. PLoS Medicine. 2014;11(2):e1001603. doi: 10.1371/journal.pmed.1001603.

- 17. Becker Anton S., Cornelius Alexander, Reiner Cäcilia S., Stocker Daniel, Ulbrich Erika J., Barth Borna K., Mortezavi Ashkan, Eberli Daniel, Donati Olivio F. Direct comparison of PI-RADS version 2 and version 1 regarding interreader agreement and diagnostic accuracy for the detection of clinically significant prostate cancer. European Journal of Radiology. 2017;94:58–63. doi: 10.1016/j.ejrad.2017.07.016.

- 18. Brown B: Keeping Current with the Literature, n.d. https://www.nihlibrary.nih.gov/resources/subject-guides/keeping-current (accessed April 8, 2019).
