Abstract

Coronavirus is still the leading cause of death worldwide. Because the number of cases grows daily, only a limited number of COVID-19 test kits are available in hospitals. It is therefore important to implement an automatic detection and classification system as a fast alternative diagnostic option to prevent COVID-19 from spreading among people. Medical image analysis is one of the most promising research areas; it supports diagnosis and decision making for a number of diseases such as coronavirus. This paper conducts a comparative study of recent deep learning models (VGG16, VGG19, DenseNet201, Inception_ResNet_V2, Inception_V3, Resnet50, and MobileNet_V2) for the detection and classification of coronavirus pneumonia. The experiments were conducted using a chest X-ray & CT dataset of 6087 images (2780 images of bacterial pneumonia, 1493 of coronavirus, 231 of Covid19, and 1583 normal), and confusion matrices are used to evaluate model performance. The results show that Inception_ResNet_V2 and DenseNet201 provide better results than the other models used in this work (92.18% accuracy for Inception_ResNet_V2 and 88.09% accuracy for DenseNet201).

Communicated by Ramaswamy H. Sarma

Keywords: Computer-aided diagnosis, coronavirus automatic detection, Covid-19, CT and X-ray images, pneumonia, deep learning

1. Introduction

First identified in the city of Wuhan, China, in late December 2019 (Aanouz et al., 2020; Elfiky & Azzam, 2020; Elmezayen et al., 2020; Enayatkhani et al., 2020; Fausto et al., 2020; Ghosh et al., 2020; Muralidharan et al., 2020; Phulen et al., 2020; Rajib et al., 2020; Rothan & Byrareddy, 2020; Rowan & Laffey, 2020; Salman et al., 2020; Sourav et al., 2020; Umesh et al., 2020), Covid19 is a respiratory disease caused by the new coronavirus SARS-CoV-2 (Severe Acute Respiratory Syndrome Coronavirus 2) (Abdelli et al., 2020; Rakesh et al., 2020; Rameez et al., 2020; World Health Organization, 2020). Covid19 is the name given by the World Health Organization (WHO) on 11 February 2020 (World Health Organization, 2020). It causes illnesses ranging from the common cold to more severe pathologies (Rowan & Laffey, 2020). The first people to contract the virus had visited a market in Wuhan, in China’s Hubei Province. The disease is therefore thought to have originated from an animal (zoonosis), but the origin has not been confirmed (Boopathi et al., 2020; CNHC, 2020; El Zowalaty & Järhult, 2020; Kandel et al., 2020; Manoj et al., 2020; Pant et al., 2020; Roosa et al., 2020; Sun et al., 2020; Wilder-Smith et al., 2020).

The period between infection and the appearance of the first Covid19 symptoms can extend to 15 days. Therefore, people who carry the virus without knowing it can infect others, which allows the virus to spread widely. In fact, within a few weeks of the first confirmed cases in Wuhan, Covid19 had not only spread across China but also crossed its borders (212 countries), and the number of people affected increased and claimed many victims. Indeed, on 11 March 2020, the World Health Organization declared COVID19 a pandemic (Djalante et al., 2020; Landry et al., 2020; Lee & Morling, 2020; Rowan & Laffey, 2020; Saurabh et al., 2020; Wahedi et al., 2020; World Health Organization, 2020). At the time of writing, the number of confirmed cases has reached 3,641,205, including 251,943 deaths and 1,192,948 recovered (Worldometers, 2020) (last updated: May 04, 2020, 23:45 GMT).

The main symptoms of Covid19 are fever (38 °C or higher), dry cough, difficulty breathing, tiredness, aches and pains, sore throat, and, for some people, diarrhea. Sudden loss of smell without nasal obstruction and total loss of taste have also been observed in patients. People who develop more severe forms experience respiratory difficulties, which can lead to hospitalization in intensive care and death (Landry et al., 2020; Sharifi-Razavi et al., 2020; Simcock et al., 2020; World Health Organization, 2020).

The way Covid19 is transmitted makes it a very dangerous disease. The disease can be transmitted by droplets (invisible secretions projected when talking, sneezing, or coughing). Close contact with a sick person is therefore considered necessary to transmit the disease: sharing the same place of residence, or direct contact within one meter when talking, coughing, or sneezing in the absence of protective measures. Another major vector of transmission is contact with unwashed hands soiled with droplets (Anwarul et al., 2020; Elfiky, 2020a; Enmozhi et al., 2020; Landry et al., 2020; Li, Guan, et al., 2020; Liu, Han, et al., 2020; Lu et al., 2020; Rowan & Laffey, 2020; Yang et al., 2020).

Pending a Covid19 vaccine, the World Health Organization advises that certain precautions be taken (Elkbuli et al., 2020; Li et al., 2020; World Health Organization, 2020). These include: frequent hand washing with soap or a hydroalcoholic solution; avoiding close contact, such as kissing or shaking hands, with people who are coughing or sneezing; covering the mouth with the crease of the elbow, or a disposable handkerchief, when coughing or sneezing; not touching the eyes, nose, or mouth; and wearing a mask in case of respiratory symptoms and fever. To limit the spread of Covid19, some countries have restricted movement and activities in cities, and others are under lockdown (Elfiky, 2020; Ghosh et al., 2020; Li et al., 2020).

The real-time polymerase chain reaction (RT-PCR) is the standard for detecting Covid19, but it is expensive and takes time to confirm patients (Huang et al., 2020). Medical image processing can help overcome this problem by confirming Covid19-positive patients. Chest X-ray and Computed Tomography (CT) are the most widely used image types in medical image processing (Liu et al., 2020; Ng et al., 2020), and several studies have used them to develop models that help radiologists predict the disease. In recent years, deep learning has also given excellent results in medical image analysis, which helps specialists make good decisions when diagnosing patients. Various studies have demonstrated the capacity of neural networks, particularly convolutional neural networks, to accurately recognize the presence of pneumonia (Gozes et al., 2020; Xu et al., 2020). In this study, we present a comparison of different Deep Convolutional Neural Network (DCNN) architectures (VGG16, VGG19, DenseNet201, Inception_ResNet_V2, Inception_V3, Resnet50, and MobileNet_V2) to automatically classify X-ray images into Coronavirus, Bacteria, and Normal.

The contributions of our paper are as follows: (1) we design fine-tuned versions of VGG16, VGG19, DenseNet201, Inception_ResNet_V2, Inception_V3, Resnet50, and MobileNet_V2; (2) to avoid over-fitting in the different models, we use weight decay and L2 regularizers; (3) the models are tested on the chest X-ray & CT dataset for multiclass classification.

The structure of this paper is as follows. Section 2 details the literature review, Section 3 describes the proposed method, and Section 4 presents the results obtained and their interpretation. The discussion is given in Section 5. The section “Conclusions and future directions” ends the paper and outlines a few upcoming tasks.

2. Related work

Since a vaccine has not yet been developed, the right measure to contain the epidemic is to isolate people who test positive. The difficulty lies in making a quick diagnosis that distinguishes positive patients from negative ones. In this context, several studies have proposed methods to identify abnormalities in chest X-ray and CT images. Gozes et al. (2020) proposed a model to differentiate coronavirus patients from healthy patients. The system produces a localization map of the lung abnormality together with measurements, and is split into two subsystems. Subsystem A: a 3D analysis detects nodules and small opacities using commercial off-the-shelf software, after which measurements and localization are provided. Subsystem B: the first step is the lung crop stage, in which the lung region of interest (ROI) is extracted using a lung segmentation module (U-net architecture). The second step is the detection of coronavirus abnormalities using the deep convolutional neural network model ResNet50. The third step is abnormality localization: if a new slice is identified as positive, the network-activation maps are extracted using the Grad-CAM technique. After combining the outputs of subsystems A and B, the authors added a Corona score calculated by a volumetric summation of the network-activation maps.

Narin et al. (2020) proposed an automatic deep learning-based method that uses X-ray images to predict Covid19. The method relies on three deep convolutional neural network architectures. The authors used a dataset of 50 X-ray images of Covid19 patients and 50 normal X-ray images, all resized to 224 × 224. To overcome the limited size of the dataset, they used transfer learning. The dataset was divided into two parts: 80% for training and 20% for testing. The developed DCNNs were based on pre-trained models (ResNet50, Inception_V3, and Inception_ResNet_V2) and distinguished Covid19 from normal X-ray images. The k-fold method with k = 5 was used for cross-validation. The results showed that the pre-trained ResNet50 model gave good results (accuracy of 98%).

Hemdan et al. (2020) proposed “COVIDX-Net”, a framework of deep learning classifiers that helps radiologists automatically identify Covid19. The framework classifies X-ray images as Covid19 positive or negative. The authors used seven DCNN architectures (VGG19, DenseNet201, ResNetV2, InceptionV3, InceptionResNetV2, Xception, and MobileNetV2). They used a dataset of 50 X-ray images split into two categories, normal and Covid19 positive (25 X-ray images each). The images were resized to 224 × 224 pixels; 80% of the images were used for training and 20% for testing. The results showed that the VGG19 and DenseNet201 architectures performed well, with F1 scores of 89% and 91% for normal and Covid19, respectively.

To distinguish Covid19 cases from other pneumonia (bacterial and viral) and normal cases, Farooq and Hafeez (2020) proposed a convolutional neural network (CNN) framework. They used the COVIDx dataset built by Wang and Wong (2020). The dataset contains 5941 chest radiography images collected from 2839 patients. In this work, they used a portion of the COVIDx dataset, divided into four sets: Covid19 (48 images), Bacterial (660), Viral (931), and Normal (1203 images). Training was performed in three stages, and the Cyclical Learning Rate was used at each stage to help select the optimal learning rate. The results show that the proposed COVID-ResNet reached a good identification accuracy of 96.23%, compared with 83.5% for COVID-Net.

Bhandary et al. (2020) reported a deep learning framework to classify lung abnormalities such as pneumonia from chest X-ray images and cancer from lung CT images. The framework is based on a Modified AlexNet (MAN) and comprises two models. (A) A MAN combined with a Support Vector Machine (SVM) distinguishes pneumonia images from normal images. This model showed good results (accuracy of 96.8%) compared with AlexNet, VGG16, VGG19, ResNet50, and MAN_Softmax. (B) For the second examination, lung CT images were used. The authors merged MAN with an Ensemble-Feature-Technique (EFT) to improve classification performance. After features were extracted from the images, Principal Component Analysis (PCA) was applied. Finally, to classify CT images as malignant or benign, the model was combined with SVM, k-Nearest Neighbors (k-NN), and Random Forest (RF). The results showed that MAN combined with SVM achieved good accuracy with and without EFT: 97.27% and 86.47%, respectively.

Zhang et al. (2020) presented a deep learning model that distinguishes Covid19 patients from healthy people using chest X-ray images. The model has three components. The first is the backbone network, an 18-layer residual convolutional neural network whose role is to extract high-level features from the chest X-ray image. The second is the classification head, which is fed with the features extracted by the backbone network and generates a classification score Pcls. The third is the anomaly detection head, which generates a scalar anomaly score Pano. After the classification score and the anomaly score are computed, the decision is made according to a threshold T. The obtained results showed that the sensitivity decreased as the value of the threshold T decreased (sensitivity of 96% for T = 0.15).

Xu et al. (2020) reported a method to distinguish COVID-19 from Influenza-A viral pneumonia and healthy cases using deep learning techniques. They used multiple CNNs to classify Computed Tomography (CT) images. The process can be summarized in four steps: 1) the images were pre-processed to extract the effective pulmonary regions; 2) a 3D CNN was used to segment multiple candidate image cubes; 3) an image classification model was used to classify the image patches as Covid19, Influenza-A, or normal; 4) an overall analysis report for one CT sample was calculated using the noisy-or Bayesian function. The VNET-IR-RPN model was used for segmentation, while a ResNet-18 model and a ResNet-18 model with a location-attention mechanism were used for classification. The experimental results show that the ResNet-18 model with the location-attention mechanism gave an overall accuracy of 86.7%.
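The noisy-or aggregation mentioned in step 4 can be illustrated with a short sketch. It shows the generic noisy-or rule only and is not claimed to be the exact procedure of Xu et al. (2020); the function name and the example probabilities are hypothetical.

```python
from math import prod


def noisy_or(patch_probabilities):
    """Probability that at least one patch is positive.

    Generic noisy-or rule: 1 - prod(1 - p_i), which assumes that the
    patches contribute independently.
    """
    return 1.0 - prod(1.0 - p for p in patch_probabilities)


# Hypothetical per-patch probabilities for one class in a single CT sample.
example_patches = [0.10, 0.40, 0.25]
sample_score = noisy_or(example_patches)
print(f"sample-level score: {sample_score:.3f}")
```

A single confident patch is enough to push the sample-level score up, which is why this rule is a natural fit when an infection may appear in only a few regions of a scan.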

Shan et al. (2020) reported a new deep learning model that segments and quantifies infection regions in CT scans of COVID-19 patients. The authors used the VB-Net neural network and a human-in-the-loop (HITL) approach to help radiologists refine the automatic annotation of each case. They then used evaluation metrics to assess the effectiveness of the model (volumes and percentage of infection in the whole lung). The CT images were divided into several batches. The images contoured manually by radiologists were fed to the segmentation network for training. The segmentation results were then manually corrected by radiologists and used as new data to feed the model. This process was repeated to build the model iteratively.

El Asnaoui et al. (2020) presented a comparison of recent DCNN architectures for automatic binary classification of pneumonia images based on fine-tuned versions of VGG16 (Simonyan & Zisserman, 2014; Zhang et al., 2019), VGG19 (Simonyan & Zisserman, 2014; Zhang et al., 2019), DenseNet201 (Huang et al., 2017), Inception_ResNet_V2 (Szegedy et al., 2016), Inception_V3 (Szegedy et al., 2015), Resnet50 (He et al., 2016), and MobileNet_V2 (Sandler et al., 2018). The proposed work was tested using the chest X-ray & CT dataset.

The study selection was designed to favor sensitivity over precision, to guarantee that no relevant studies were left out. To date, apart from a few studies, all work in this field focuses on binary classification. The main goal of this work is therefore to present a comparison of recent deep convolutional neural network architectures for automatic multiclass classification of X-ray and CT images into normal, bacteria, and coronavirus, in order to answer the following research questions (RQ): RQ1) Is there any DL technique that distinctly outperforms the other DL techniques? RQ2) Can DL be used for early screening of coronavirus from CT and X-ray images? RQ3) What diagnostic accuracy can DL attain based on CT and X-ray images? RQ4) Can DL assist in the efforts to accurately detect and track the progression or resolution of the coronavirus?

3. Materials and methodology

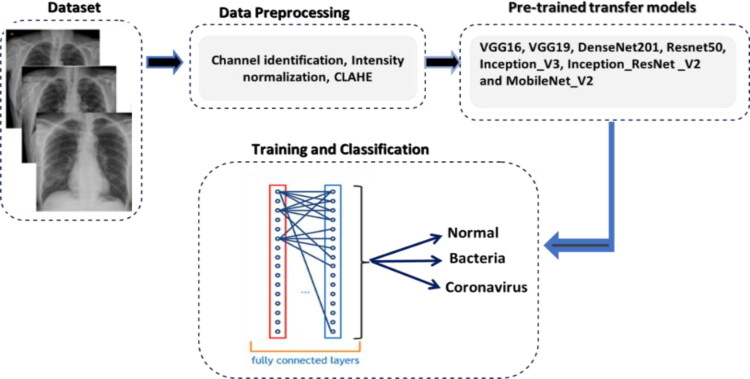

In this study, we built our contribution for automatic multiclass classification on two new publicly available image datasets (chest X-ray & CT dataset) (Cohen et al., 2020; Kermany et al., 2018). Figure 1 depicts the diagram of the main proposed methodology. As it is shown, the entire contribution is mainly divided into four steps: dataset, data pre-processing, pre-trained transfer models, and finally training and classification. The following sections provide in detail the steps of the present contribution.

Figure 1.

Block diagram of the proposed methodology.

3.1. Dataset

The present work uses two publicly available image datasets that contain X-ray and computed tomography (CT) images. The first dataset (Kermany et al., 2018), named chest X-ray & CT dataset, is composed of 5856 images in two categories (4273 pneumonia and 1583 normal). The second one, named Covid Chest X-ray Dataset (Cohen et al., 2020), contains 231 Covid19 chest X-ray images. We added the images of the second dataset to the first one to constitute our dataset, which is finally composed of 6087 images (jpeg format) in three classes (2780 bacterial pneumonia, 1724 coronavirus (1493 viral pneumonia, 231 covid19), and 1583 normal). Figure 2 depicts examples of chest X-rays in patients with pneumonia. The normal chest X-ray (Figure 2(a)) shows clear lungs with no zones of abnormal opacification. Figure 2(b) shows a focal lobar consolidation (white arrows), and Figure 2(c) shows a more diffuse “interstitial” pattern in both lungs (Kermany et al., 2018), while Figure 2(d) presents an image of a patient infected by covid19 (Cohen et al., 2020).

Figure 2.

Examples of Chest X-rays in patients with pneumonia.

3.2. Data preprocessing

The next stage is to pre-process input images using different pre-processing techniques. The motivation behind image pre-processing is to improve the quality of visual information of each input image (eliminate or decrease noise present in the original input image, improve image quality through increased contrast, delete the low or high frequencies, etc). In this study, we used intensity normalization and Contrast Limited Adaptive Histogram Equalization (CLAHE) (El Asnaoui et al., 2020).
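As an illustration of the intensity normalization step, the sketch below rescales a grayscale image to the range [0, 1]. Min-max scaling is an assumption here, since the text does not state which normalization was applied, and the function name and toy image are hypothetical.

```python
def min_max_normalize(image):
    """Rescale a 2D grayscale image (list of rows) to the range [0, 1]."""
    flat = [pixel for row in image for pixel in row]
    low, high = min(flat), max(flat)
    if high == low:
        # Constant image: avoid a division by zero.
        return [[0.0 for _ in row] for row in image]
    span = high - low
    return [[(pixel - low) / span for pixel in row] for row in image]


# Hypothetical 2 x 2 image with 8-bit intensities.
toy_image = [[0, 64], [128, 255]]
normalized = min_max_normalize(toy_image)
print(normalized)
```

For the contrast step, CLAHE is available in OpenCV through `cv2.createCLAHE`; it is left out of the sketch so that the example runs without third-party packages.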

For data splitting, the dataset was randomly split in this experiment with 80% of the images for training and 20% of the images for validation. We ensure that the images chosen for validation are not used during training in order to perform successfully the classification task.
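The random 80/20 split can be sketched as follows. This is a minimal illustration, not the authors' code: the function name, the seed, and the file names are hypothetical, and only the split ratio and the dataset size come from the text.

```python
import random


def split_train_validation(items, train_fraction=0.8, seed=42):
    """Shuffle the items and split them into disjoint training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical file names standing in for the 6087 images of the dataset.
image_ids = [f"img_{i:04d}.jpeg" for i in range(6087)]
train_ids, val_ids = split_train_validation(image_ids)

# No validation image may appear in the training set.
assert set(train_ids).isdisjoint(val_ids)
print(len(train_ids), len(val_ids))
```

Splitting a shuffled copy once, before any augmentation, keeps augmented variants of a validation image out of the training set.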

3.3. Pre-trained transfer models

In this study, we implemented automatic multiclass classification based on the VGG16, VGG19, DenseNet201, Inception_ResNet_V2, Inception_V3, Resnet50, and MobileNet_V2 models to classify chest X-ray images into the normal, bacteria, and coronavirus classes. These models are explained in El Asnaoui et al. (2020). Such deep learning models require a large amount of training data, which is not yet available in this field of application (El Asnaoui et al., 2020). Given the lack of large medical imaging datasets, and motivated by the success of deep learning in medical image processing, the present work applies transfer learning from models pre-trained on ImageNet to reduce training time and compensate for insufficient data.

Data augmentation is used for the training process after dataset pre-processing and splitting and has the goal to avoid the risk of over-fitting. Moreover, the strategies we used include geometric transforms such as rescaling, rotations, shifts, shears, zooms, and flips (El Asnaoui et al., 2020).
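The augmentation strategies listed above could be configured as in the sketch below. The keys are argument names of the Keras `ImageDataGenerator` class; the values are illustrative assumptions and not the settings used in this study, and the constant name is hypothetical.

```python
# Hypothetical augmentation settings. The keys are arguments of
# tensorflow.keras.preprocessing.image.ImageDataGenerator; the values are
# assumptions chosen for illustration only.
AUGMENTATION_KWARGS = {
    "rescale": 1.0 / 255,        # rescaling of pixel intensities
    "rotation_range": 15,        # random rotations, in degrees
    "width_shift_range": 0.1,    # horizontal shifts, fraction of width
    "height_shift_range": 0.1,   # vertical shifts, fraction of height
    "shear_range": 0.1,          # shear intensity
    "zoom_range": 0.1,           # random zoom
    "horizontal_flip": True,     # random left-right flips
}

# With TensorFlow installed, the generator would be created as:
#   from tensorflow.keras.preprocessing.image import ImageDataGenerator
#   train_generator = ImageDataGenerator(**AUGMENTATION_KWARGS)
print(sorted(AUGMENTATION_KWARGS))
```

Augmentation is applied to the training images only, so the validation images remain unmodified.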

3.4. Training and classification

After data pre-processing, splitting, and data augmentation techniques used, the training dataset size is increased and ready to be passed to the feature extraction step with the proposed models in order to extract the appropriate and pertinent features. The extracted features from each proposed model are flattened together to create the vectorized feature maps. The generated feature vector is passed to a multilayer perceptron to classify each image into corresponding classes. The Cyclical Learning Rate was used for helping to select the optimal learning rate (Smith, 2017).

4. Experiments

4.1. Experimental parameters

The experiments were performed with the following parameters. Python was used as the programming language, with Keras/TensorFlow as the deep learning backend. The training and validation steps were performed on an NVIDIA Tesla P40 with 24 GB of RAM. All images of the dataset were resized to 224 × 224 pixels, except for the Inception_V3 and Inception_Resnet_V2 models, for which they were resized to 299 × 299. To train the models, we set the batch size, number of epochs, and learning rate to 32, 300, and 0.00001, respectively. The learning rate follows the Cyclical Learning Rates policy (Smith, 2017) with these parameters: base_lr = 0.00001, max_lr = 0.001, step_size = 2000, mode = exp_range, and gamma = 0.99994. Adam with β1 = 0.9 and β2 = 0.999 is used for optimization. In addition, we employed weight decay to reduce over-fitting. A fully connected layer was trained with the Rectified Linear Unit (ReLU) activation. For fine-tuning, we modified the last dense layer of every model to output three classes (normal, bacteria, and coronavirus) instead of the 1000 classes used for ImageNet. Categorical_crossentropy was used as the loss function. The proposed deep transfer learning models were implemented on a computer with an Intel(R) Core(TM) i7-7700 CPU @ 3.60 GHz and 8 GB of RAM, running Microsoft Windows 10 Professional (64-bit).
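To make the learning rate schedule concrete, the sketch below evaluates a cyclical learning rate in exp_range mode with the parameters listed above. It follows the formulation of Smith (2017) as commonly implemented; whether it matches the authors' implementation in every detail is an assumption, and the function name is hypothetical.

```python
import math


def cyclical_lr(iteration, base_lr=1e-5, max_lr=1e-3, step_size=2000, gamma=0.99994):
    """Triangular cyclical learning rate whose amplitude decays as gamma**iteration."""
    cycle = math.floor(1 + iteration / (2 * step_size))
    # x is 1 at the start and end of a cycle and 0 at its midpoint.
    x = abs(iteration / step_size - 2 * cycle + 1)
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x) * gamma ** iteration


# The rate starts at base_lr, peaks after step_size iterations,
# and returns to base_lr after 2 * step_size iterations.
for it in (0, 1000, 2000, 3000, 4000):
    print(it, f"{cyclical_lr(it):.3e}")
```

Because gamma is slightly below 1, each successive peak is lower than the previous one, so the schedule explores large rates early and settles toward base_lr later in training.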

4.2. Performance metrics

The performance of the proposed classification model was evaluated based on accuracy, sensitivity, specificity, precision, and F1 score (Bhandary et al., 2020; Blum & Chawla, 2001). Given the number of false positives (FP), true positives (TP), false negatives (FN) and true negatives (TN), the parameters are mathematically defined as follows:

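$$
\begin{aligned}
\text{Accuracy} &= \frac{TP}{TP + TN + FP + FN}, &
\text{Sensitivity} &= \frac{TP}{TP + FN}, \\
\text{Specificity} &= \frac{TN}{TN + FP}, &
\text{Precision} &= \frac{TP}{TP + FP}, \\
\text{F1 score} &= \frac{2 \times \text{Precision} \times \text{Sensitivity}}{\text{Precision} + \text{Sensitivity}}
\end{aligned}
\qquad (1)
$$

Here the accuracy of a class is its share of correctly classified images among all evaluated images, which is how the Accuracy column of the result tables is computed; the per-class values therefore add up to the overall accuracy of a model.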
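As a worked check of these metrics, the sketch below recomputes one table row from its counts. The function and key names are hypothetical; the counts are those reported for the Normal class of DenseNet201 in Table 2. The per-class accuracy is taken as TP divided by the number of evaluated images, an assumption inferred from the Accuracy column of the result tables.

```python
def class_metrics(tp, tn, fn, fp):
    """Per-class metrics in percent; assumes all denominators are non-zero."""
    total = tp + tn + fn + fp
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {
        "accuracy": 100 * tp / total,  # class share of correct predictions
        "sensitivity": 100 * sensitivity,
        "specificity": 100 * specificity,
        "precision": 100 * precision,
        "f1": 100 * f1,
    }


# Counts of the Normal class for DenseNet201 (Table 2).
normal_densenet = class_metrics(tp=513, tn=1062, fn=22, fp=41)
for name, value in normal_densenet.items():
    print(f"{name}: {value:.2f}")
```

The computed values agree with the Normal row of Table 2 to within 0.01 percentage points; the small differences in the last digit suggest that the tables truncate rather than round.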

Moreover, the present study supports the use of confusion matrix analysis in validation (Ruuska et al., 2018), since it is robust to any data distribution and type of relationship, it provides a stringent evaluation of validity, and it gives extra information on the type and sources of errors. Before analyzing the confusion matrix of each model, let us first see how it is structured and define all the parameters and variables that can be extracted from it (Table 1).

Table 1.

Confusion matrix structure.

| | | Predicted | | |
|---|---|---|---|---|
| | | Bacteria | Coronavirus | Normal |
| Actual | Bacteria | Pbb | Pcb | Pnb |
| | Coronavirus | Pbc | Pcc | Pnc |
| | Normal | Pbn | Pcn | Pnn |

where:

Pbb: images of the Bacteria class correctly classified as Bacteria.

Pcb: images of the Bacteria class incorrectly classified as Coronavirus.

Pnb: images of the Bacteria class incorrectly classified as Normal.

Pbc: images of the Coronavirus class incorrectly classified as Bacteria.

Pcc: images of the Coronavirus class correctly classified as Coronavirus.

Pnc: images of the Coronavirus class incorrectly classified as Normal.

Pbn: images of the Normal class incorrectly classified as Bacteria.

Pcn: images of the Normal class incorrectly classified as Coronavirus.

Pnn: images of the Normal class correctly classified as Normal.

Using these parameters, we can define other variables:

True Positives (TP) and True Negatives (TN):

| Class | TP | TN |
|---|---|---|
| Bacteria | Pbb | Pcc+Pnc+Pcn+Pnn |
| Coronavirus | Pcc | Pbb+Pnb+Pbn+Pnn |
| Normal | Pnn | Pbb+Pcb+Pbc+Pcc |

False Positives (FP) and False Negatives (FN):

| Class | FP | FN |
|---|---|---|
| Bacteria | Pbc+Pbn | Pcb+Pnb |
| Coronavirus | Pcb+Pcn | Pbc+Pnc |
| Normal | Pnb+Pnc | Pbn+Pcn |
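The decomposition of a three-class confusion matrix into per-class TP, TN, FP, and FN can be sketched as follows. The function name and the example matrix are hypothetical; rows are actual classes and columns are predicted classes, as in Table 1.

```python
CLASSES = ["Bacteria", "Coronavirus", "Normal"]


def per_class_counts(matrix):
    """matrix[i][j] = number of images of actual class i predicted as class j."""
    total = sum(sum(row) for row in matrix)
    counts = {}
    for k, name in enumerate(CLASSES):
        tp = matrix[k][k]
        fn = sum(matrix[k]) - tp                  # actual k, predicted as another class
        fp = sum(row[k] for row in matrix) - tp   # predicted k, actually another class
        tn = total - tp - fn - fp                 # everything not involving class k
        counts[name] = {"TP": tp, "TN": tn, "FP": fp, "FN": fn}
    return counts


# Hypothetical confusion matrix, not taken from the experiments.
example_matrix = [
    [50, 3, 2],   # actual Bacteria
    [4, 40, 6],   # actual Coronavirus
    [1, 2, 45],   # actual Normal
]
example_counts = per_class_counts(example_matrix)
for name, values in example_counts.items():
    print(name, values)
```

For the Bacteria class, for instance, TN is the sum of the four cells that involve neither the Bacteria row nor the Bacteria column, which is the expression Pcc+Pnc+Pcn+Pnn given above.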

4.3. Multi-classification results

In this section, we present the multi-classification results followed by a brief discussion of the results given by each model.

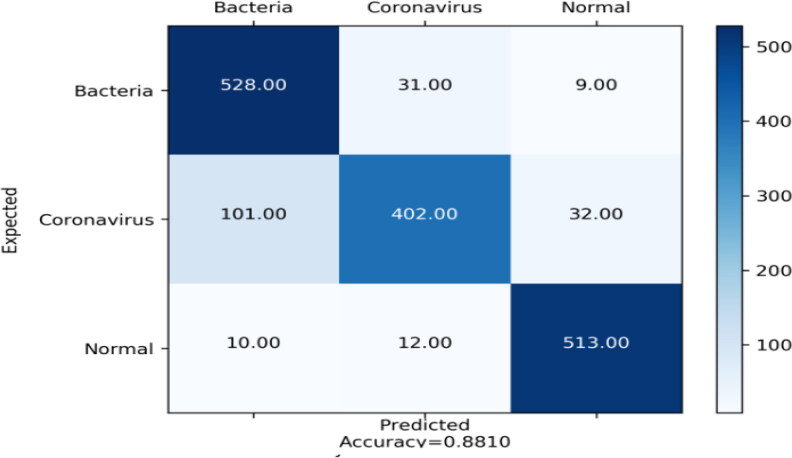

4.3.1. DenseNet201

Table 2 reports that, for DenseNet201, the Normal class was identified with good precision, sensitivity, and specificity (92.59%, 95.88%, and 96.28%, respectively), which means that the number of false positives was low, the number of false negatives was low, and the number of true negatives was high. The accuracy for this class is 31.31%, which is about a third of the model’s overall accuracy (Figure 3).

Table 2.

Evaluation metrics for DenseNet201.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 513 | 1062 | 22 | 41 | 31.31 | 95.88 | 96.28 | 92.59 | 94.21 |
| Bacteria | 528 | 959 | 40 | 111 | 32.23 | 92.95 | 89.62 | 82.62 | 87.48 |
| Coronavirus | 402 | 1060 | 133 | 43 | 24.54 | 75.14 | 96.10 | 90.33 | 82.04 |

Figure 3.

Confusion matrix of DenseNet201.

The Bacteria class (Table 2) was identified with a good sensitivity of 92.95% because the number of false negatives was low. Its specificity and precision were reasonable (89.62% and 82.62%), which means that the number of true negatives was relatively high and the number of false positives was relatively low. Its accuracy is 32.23%.

The Coronavirus class (Table 2) was distinguished well, with good precision and specificity (90.33% and 96.10%) and reasonable sensitivity (75.14%). These values can be explained by the low number of false positives, the high number of true negatives, and the relatively low number of false negatives, respectively. Its accuracy is 24.54%, which is slightly less than a third of the model’s overall accuracy.

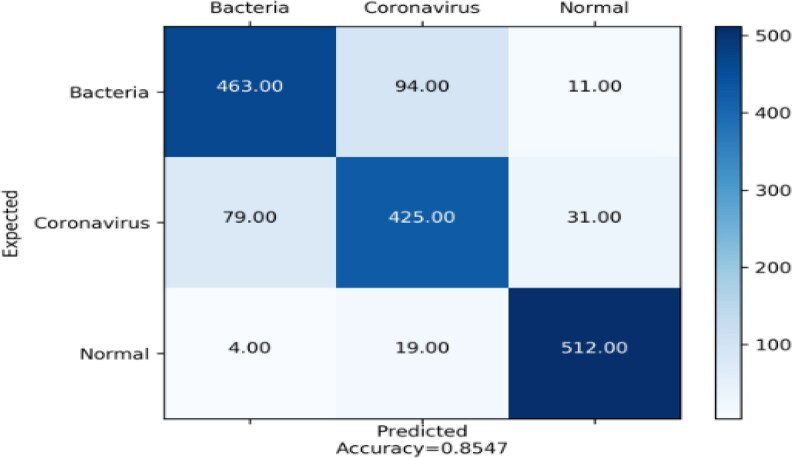

4.3.2. Inception_resnet_V2

For Inception_Resnet_V2 (Table 3), the Normal class was classified with good precision, sensitivity, and specificity. The precision of 94.40% means that the number of false positives was low. The sensitivity is 97.75% because the number of false negatives was low, while the specificity of 97.18% reflects the high number of true negatives. Finally, the accuracy is 31.92% (Figure 4).

Table 3.

Evaluation metric for Inception_Resnet_V2.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 523 | 1072 | 12 | 31 | 31.92 | 97.75 | 97.18 | 94.40 | 96.05 |
| Bacteria | 544 | 1002 | 24 | 68 | 33.21 | 95.77 | 93.64 | 88.88 | 92.20 |
| Coronavirus | 443 | 1074 | 92 | 29 | 27.04 | 82.80 | 97.37 | 93.85 | 87.98 |

Figure 4.

Confusion matrix of Inception_ResNet_V2.

The Bacteria class (Table 3) was detected with good specificity and sensitivity (93.64% and 95.77%) and with a reasonable precision of 88.88%. These values are due to the high number of true negatives, the low number of false negatives, and the relatively low number of false positives. Its accuracy is 33.21%.

The Coronavirus class (Table 3) was identified with good specificity and precision (97.37% and 93.85%) and with a moderate sensitivity of 82.80%. These values can be explained by the high number of true negatives and the low number of false positives (specificity and precision), and by the fairly low number of false negatives (sensitivity). Its accuracy is 27.04%.
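The link between the per-class accuracies and the overall accuracy of a model can be checked with a few lines. The true-positive counts and the total of 1638 evaluated images are read from Tables 2 and 3; the variable and function names are hypothetical.

```python
# True positives per class, copied from Tables 2 and 3.
TRUE_POSITIVES = {
    "DenseNet201": {"Normal": 513, "Bacteria": 528, "Coronavirus": 402},
    "Inception_ResNet_V2": {"Normal": 523, "Bacteria": 544, "Coronavirus": 443},
}
# TP + TN + FN + FP gives the same total in every row of both tables.
N_EVALUATED = 1638


def overall_accuracy(tp_by_class, total=N_EVALUATED):
    """Overall accuracy in percent: correctly classified images over all images."""
    return 100 * sum(tp_by_class.values()) / total


for model, tps in TRUE_POSITIVES.items():
    print(model, f"{overall_accuracy(tps):.2f}%")
```

Up to the last digit, these values match the 88.09% and 92.18% reported for DenseNet201 and Inception_ResNet_V2, and they equal the sum of the three per-class accuracies of each model.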

4.3.3. Inception_V3

For the Inception_V3 model (Table 4), the Normal class was distinguished well, since precision, sensitivity, and specificity reached good values (93.76%, 95.51%, and 96.91%). This can be explained by the low numbers of false positives and false negatives (precision and sensitivity) and the high number of true negatives (specificity). Its accuracy is 31.19%.

Table 4.

Evaluation metric for Inception_V3.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 511 | 1069 | 24 | 34 | 31.19 | 95.51 | 96.91 | 93.76 | 94.62 |
| Bacteria | 525 | 963 | 43 | 107 | 32.05 | 92.42 | 90.00 | 83.06 | 87.50 |
| Coronavirus | 406 | 1048 | 129 | 55 | 24.78 | 75.88 | 95.01 | 88.06 | 81.52 |

The Bacteria class (Table 4) was detected well, with a sensitivity of 92.42%, a specificity of 90.00%, and a tolerable precision of 83.06%. The sensitivity and specificity can be explained by the low number of false negatives and the high number of true negatives. The lower precision (83.06%), on the other hand, is due to the relatively high number of false positives, since precision does not depend on true negatives. Its accuracy is 32.05%.

The Coronavirus class (Table 4) was identified relatively well, with reasonable precision and sensitivity and good specificity (88.06%, 75.88%, and 95.01%, respectively). These values can be explained as follows: the number of false positives was relatively low (precision), the number of false negatives was fairly low (sensitivity), and the number of true negatives was high (specificity). Its accuracy is 24.78% (Figure 5).

Figure 5.

Confusion matrix of Inception_V3.

4.3.4. Mobilenet_V2

The results obtained by Mobilenet_V2 (Table 5) show that the Normal class was detected with good precision, sensitivity, and specificity (92.41%, 95.70%, and 96.19%). These values are due to the low numbers of false positives and false negatives (precision and sensitivity, respectively) and to the high number of true negatives (specificity). Its accuracy is 31.25%.

Table 5.

Evaluation metric for Mobilenet_V2.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 512 | 1061 | 23 | 42 | 31.25 | 95.70 | 96.19 | 92.41 | 94.03 |
| Bacteria | 463 | 987 | 105 | 83 | 28.26 | 81.51 | 92.24 | 84.79 | 83.12 |
| Coronavirus | 425 | 990 | 110 | 113 | 25.94 | 79.43 | 89.75 | 78.99 | 79.21 |

The Bacteria class (Table 5) was identified with acceptable precision and sensitivity (84.79% and 81.51%) and good specificity (92.24%). This means that the numbers of false positives and false negatives were low (precision and sensitivity), while the specificity is explained by the high number of true negatives. Its accuracy is 28.26% (Figure 6).

Figure 6.

Confusion matrix of Mobilenet_V2.

The Coronavirus class (Table 5) was detected relatively well, with reasonable precision, sensitivity, and specificity (78.99%, 79.43%, and 89.75%, respectively). These results can be explained by the fairly low numbers of false positives and false negatives (precision and sensitivity) and the acceptably high number of true negatives (specificity). Its accuracy is 25.94%.

4.3.5. Resnet50

For Resnet50 (Table 6), the Normal class was detected with good precision, sensitivity, and specificity (95.22%, 93.27%, and 97.73%). This can be explained by the low numbers of false positives and false negatives (precision and sensitivity), while the specificity is due to the high number of true negatives. Its accuracy is 30.46%.

Table 6.

Evaluation metric for Resnet50.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 499 | 1078 | 36 | 25 | 30.46 | 93.27 | 97.73 | 95.22 | 94.23 |
| Bacteria | 506 | 982 | 62 | 88 | 30.89 | 89.08 | 91.77 | 85.18 | 87.09 |
| Coronavirus | 429 | 1012 | 106 | 91 | 26.19 | 80.18 | 91.74 | 82.50 | 81.32 |

For the Bacteria class (Table 6), the good specificity (91.77%) results from the high number of true negatives. The sensitivity and precision (89.08% and 85.18%) were reasonable because the numbers of false negatives and false positives were low. The accuracy is 30.89%.

The Coronavirus class (Table 6) was distinguished relatively well, with reasonable precision and sensitivity (82.50% and 80.18%, respectively) and good specificity (91.74%). The numbers of false positives and false negatives were acceptably low, and the number of true negatives was high. The accuracy is 26.19% (Figure 7).

Figure 7.

Confusion matrix of Resnet50.
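The one-vs-rest counts in Table 6 can be derived from a three-class confusion matrix such as the one shown in Figure 7. The Python sketch below illustrates that reduction. The off-diagonal entries of `hypothetical_matrix` are an assumption: they were chosen only so that the row and column totals reproduce Table 6 and are not read from Figure 7. The function name is likewise illustrative.

```python
def one_vs_rest_counts(matrix, labels):
    """Derive TP, TN, FN, FP per class from a square confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    total = sum(sum(row) for row in matrix)
    counts = {}
    for i, label in enumerate(labels):
        tp = matrix[i][i]
        fn = sum(matrix[i]) - tp                  # rest of the true-class row
        fp = sum(row[i] for row in matrix) - tp   # rest of the predicted-class column
        tn = total - tp - fn - fp
        counts[label] = {"TP": tp, "TN": tn, "FN": fn, "FP": fp}
    return counts


labels = ["Normal", "Bacteria", "Coronavirus"]

# Hypothetical matrix: the diagonal comes from Table 6, the off-diagonal
# split is illustrative and only constrained to match the table's totals.
hypothetical_matrix = [
    [499, 2, 34],
    [5, 506, 57],
    [20, 86, 429],
]

counts = one_vs_rest_counts(hypothetical_matrix, labels)
for label, c in counts.items():
    print(label, c)
```

Several different matrices give the same one-vs-rest counts, so the per-class table alone does not determine which classes are confused with each other; that information is only available in the confusion matrix itself.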

4.3.6. VGG16

The results given by VGG16 (Table 7) show that the Normal class was detected with good sensitivity (92.71%), owing to the low number of false negatives (39). It was also distinguished with reasonable precision and specificity (77.01% and 86.58%), which reflect a moderate number of false positives (148) and a high number of true negatives. The accuracy is 30.28%.

Table 7.

Evaluation metric for VGG16.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 496 | 955 | 39 | 148 | 30.28 | 92.71 | 86.58 | 77.01 | 84.13 |
| Bacteria | 448 | 888 | 120 | 182 | 27.35 | 78.87 | 82.99 | 71.11 | 74.79 |
| Coronavirus | 282 | 1021 | 253 | 82 | 17.21 | 52.71 | 92.56 | 77.47 | 62.73 |

The Bacteria class (Table 7) was identified with acceptable precision, sensitivity, and specificity (71.11%, 78.87%, and 82.99%). These values reflect moderate numbers of false positives (182) and false negatives (120) and a high number of true negatives. The accuracy is 27.35%.

The Coronavirus class (Table 7) was determined with good specificity (92.56%), given the high number of true negatives, and with reasonable precision (77.47%), given the low number of false positives. However, its sensitivity was poor (52.71%) because the number of false negatives was high: 253 of the 535 coronavirus images were missed. The accuracy is 17.21% (Figure 8).

Figure 8.

Confusion matrix of VGG16.

4.3.7. VGG19

For VGG19 (Table 8), the Normal class was classified with good specificity (90.29%) and reasonable sensitivity and precision (85.23% and 80.99%). The number of true negatives was high, which explains the specificity, and the numbers of false negatives and false positives were fairly low, which explains the sensitivity and precision. The accuracy is 27.83%.

Table 8.

Evaluation metric for VGG19.

| Class | TP | TN | FN | FP | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|---|---|---|---|
| Normal | 456 | 996 | 79 | 107 | 27.83 | 85.23 | 90.29 | 80.99 | 83.06 |
| Bacteria | 475 | 798 | 93 | 272 | 28.99 | 83.62 | 74.57 | 63.58 | 72.24 |
| Coronavirus | 257 | 1032 | 278 | 71 | 15.68 | 48.03 | 93.56 | 78.35 | 59.55 |

The Bacteria class (Table 8) was identified reasonably well in terms of sensitivity (83.62%), owing to the low number of false negatives (93). Its precision (63.58%) and specificity (74.57%) were lower because the number of false positives was comparatively high (272). The accuracy is 28.99%.

Similarly, the Coronavirus class (Table 8) was detected with good specificity (93.56%), owing to the high number of true negatives, and with acceptable precision (78.35%), owing to the reasonably low number of false positives. However, its sensitivity was poor (48.03%) because the number of false negatives was high (278). The accuracy is 15.68% (Figure 9).

Figure 9.

Confusion matrix of VGG19.

4.4. Experimental comparisons

This subsection compares the experimental results of classifying X-ray images using the different networks. The experimental results will be compared in terms of training and testing time and metrics defined in Equation (1).

Table 9 summarizes the confusion-matrix performance of all models. The highest values were obtained by the pre-trained Inception_Resnet_V2 model: accuracy of 92.18%, sensitivity of 92.11%, specificity of 96.06%, precision of 92.38%, and F1 score of 92.07%. DenseNet201 also achieved good values (accuracy 88.09%, sensitivity 87.99%, specificity 94.00%, precision 88.52%, and F1 score 87.91%), as did Inception_V3 (accuracy 88.03%, sensitivity 87.94%, specificity 93.97%, precision 88.30%, and F1 score 87.88%) and Resnet50 (accuracy 87.54%, sensitivity 87.51%, specificity 93.75%, precision 87.63%, and F1 score 87.55%). Mobilenet_V2 attained an accuracy of 85.47%, sensitivity of 85.55%, specificity of 92.73%, precision of 85.40%, and F1 score of 85.45%. VGG16 produced low values (accuracy 74.84%, sensitivity 74.76%, specificity 87.37%, precision 75.20%, and F1 score 73.88%), and VGG19 achieved the lowest performance (accuracy 72.52%, sensitivity 72.29%, specificity 86.14%, precision 74.31%, and F1 score 71.62%). In conclusion, the Inception_Resnet_V2 architecture outperformed the other architectures on these metrics, followed by DenseNet201.

Table 9.

Evaluation metric for different models.

| Model | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | F1 Score (%) |
|---|---|---|---|---|---|
| Inception_Resnet_V2 | 92.18 | 92.11 | 96.06 | 92.38 | 92.07 |
| DenseNet201 | 88.09 | 87.99 | 94.00 | 88.52 | 87.91 |
| Resnet50 | 87.54 | 87.51 | 93.75 | 87.63 | 87.55 |
| Mobilenet_V2 | 85.47 | 85.55 | 92.73 | 85.40 | 85.45 |
| Inception_V3 | 88.03 | 87.94 | 93.97 | 88.30 | 87.88 |
| VGG16 | 74.84 | 74.76 | 87.37 | 75.20 | 73.88 |
| VGG19 | 72.52 | 72.29 | 86.14 | 74.31 | 71.62 |
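The model-level figures in Table 9 appear to follow from the per-class tables: the overall accuracy matches the sum of true positives divided by the number of test images, and the other metrics match the unweighted (macro) mean over the three classes. The Python sketch below checks this for Mobilenet_V2 using the counts in Table 5. The aggregation rule is an assumption inferred from the numbers, not a procedure stated in the text, and `overall_metrics` is an illustrative name.

```python
from statistics import mean


def overall_metrics(counts):
    """Aggregate one-vs-rest counts per class into model-level metrics (%)."""
    rows = list(counts.values())
    first = rows[0]
    total = first["TP"] + first["TN"] + first["FN"] + first["FP"]
    return {
        # Overall accuracy: correctly classified images over all images.
        "accuracy": 100 * sum(c["TP"] for c in rows) / total,
        # Remaining metrics: macro average over classes (assumed).
        "sensitivity": 100 * mean(c["TP"] / (c["TP"] + c["FN"]) for c in rows),
        "specificity": 100 * mean(c["TN"] / (c["TN"] + c["FP"]) for c in rows),
        "precision": 100 * mean(c["TP"] / (c["TP"] + c["FP"]) for c in rows),
        "f1": 100 * mean(
            2 * c["TP"] / (2 * c["TP"] + c["FP"] + c["FN"]) for c in rows
        ),
    }


# Counts for Mobilenet_V2, taken from Table 5.
mobilenet_v2 = {
    "Normal": {"TP": 512, "TN": 1061, "FN": 23, "FP": 42},
    "Bacteria": {"TP": 463, "TN": 987, "FN": 105, "FP": 83},
    "Coronavirus": {"TP": 425, "TN": 990, "FN": 110, "FP": 113},
}

overall = overall_metrics(mobilenet_v2)
for name, value in overall.items():
    print(f"{name}: {value:.2f}%")
```

Under this assumption the recomputed values agree with the Mobilenet_V2 row of Table 9 to within 0.01 percentage points.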

Table 10 reports the computational times, in seconds, of the models tested in this study. For Inception_Resnet_V2, the training and testing stages took 79 184.28 s and 262 s respectively. DenseNet201 required 68 859.73 s and 225 s, Resnet50 required 58 069.93 s and 194 s, Mobilenet_V2 required 58 693.21 s and 196 s, and Inception_V3 required 58 485.06 s and 193 s. VGG16 required 53 621.49 s and 181 s, while VGG19 required 53 493.08 s and 181 s.

Table 10.

Comparative computational time in seconds.

| Model | Training (s) | Testing (s) |
|---|---|---|
| Inception_Resnet_V2 | 79 184.28 | 262 |
| DenseNet201 | 68 859.73 | 225 |
| Resnet50 | 58 069.93 | 194 |
| Mobilenet_V2 | 58 693.21 | 196 |
| Inception_V3 | 58 485.06 | 193 |
| VGG16 | 53 621.49 | 181 |
| VGG19 | 53 493.08 | 181 |

5. Discussion

In the present work, we conducted a comparative study of the best-known deep learning architectures for detecting and classifying coronavirus pneumonia using CT and X-ray images.

From the tables above (Tables 2–8), we notice that the coronavirus class reaches low values in terms of accuracy, sensitivity, specificity, precision, and F1 score. The main limitation of the present work is therefore the limited number of coronavirus X-ray images available for training the deep learning models. To mitigate this issue, we used deep transfer learning techniques. Moreover, the Covid Chest X-ray Dataset (Cohen et al., 2020) contains a mixture of brain and chest images, which can decrease the accuracy and other metrics, whereas the images of the other classes are chest images only. In future work, we plan to extend this study with different models if more data become available.

We compared the pre-trained models according to accuracy, sensitivity, specificity, precision, F1 score, and training and testing times. As shown in Table 9, Inception_Resnet_V2 gave the best classification performance (92.18% accuracy), followed by DenseNet201 with 88.09% accuracy. By contrast, VGG16 and VGG19 performed worst among the DL architectures, with 74.84% and 72.52% accuracy respectively.

Moreover, Table 10 compares the deep learning models used in the experiments in terms of computational time. From this table, we observe that Inception_Resnet_V2, although it gives the best results, is the slowest model: it takes 79 184.28 s and 262 s in the training and testing steps respectively, followed by DenseNet201. In addition, we notice that Inception_V3 is fast and provides good results (88.03% accuracy). We conclude that practitioners must trade off accuracy against computation time when selecting a DL technique; in the medical field, however, accuracy remains the major selection criterion.
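One way to make this accuracy versus time trade-off explicit is to keep only the models that are not dominated, that is, models for which no other model is both at least as accurate and at least as fast to train. The Python sketch below applies such a Pareto filter to the accuracies of Table 9 and the training times of Table 10. This filter is an illustration added here, not a procedure used in the study, and the function name is hypothetical.

```python
# (accuracy in %, training time in s), taken from Tables 9 and 10.
results = {
    "Inception_Resnet_V2": (92.18, 79184.28),
    "DenseNet201": (88.09, 68859.73),
    "Resnet50": (87.54, 58069.93),
    "Mobilenet_V2": (85.47, 58693.21),
    "Inception_V3": (88.03, 58485.06),
    "VGG16": (74.84, 53621.49),
    "VGG19": (72.52, 53493.08),
}


def pareto_front(results):
    """Return models not dominated on (higher accuracy, lower training time)."""
    front = []
    for name, (acc, secs) in results.items():
        dominated = any(
            o_acc >= acc and o_secs <= secs and (o_acc > acc or o_secs < secs)
            for other, (o_acc, o_secs) in results.items()
            if other != name
        )
        if not dominated:
            front.append(name)
    return front


front = pareto_front(results)
for name in front:
    acc, secs = results[name]
    print(f"{name}: {acc:.2f}% accuracy, {secs / 3600:.1f} h training")
```

With these figures only Mobilenet_V2 is dominated, since Resnet50 and Inception_V3 are both more accurate and faster to train. The remaining models represent genuine trade-offs, so the final choice still depends on how much weight is given to accuracy.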

Consequently, we recommend the Inception_Resnet_V2 model (92.18% accuracy, 92.11% sensitivity, 96.06% specificity, 92.38% precision, and 92.07% F1 score), applied to X-ray and CT images, for identifying the health status of patients with respect to the coronavirus. We hope that the results of this study may serve as an initial step towards a sophisticated coronavirus detection system based on X-ray and CT images, in order to save as many lives as possible.

6. Conclusion and future work

In this work, we investigated automated methods for classifying chest X-ray & CT images into bacterial pneumonia, coronavirus, and normal classes using seven deep learning architectures (VGG16, VGG19, DenseNet201, Inception_ResNet_V2, Inception_V3, Resnet50, and MobileNet_V2). The main goal was to answer the following research questions: (RQ1) Is there any DL technique that distinctly outperforms the other DL techniques? (RQ2) Can DL be used for early screening of coronavirus from CT and X-ray images? (RQ3) What diagnostic accuracy can DL attain based on CT and X-ray images? (RQ4) Can DL assist in the efforts to accurately detect and track the progression or resolution of the coronavirus? To this end, the experiments were conducted using a chest X-ray & CT dataset, and their performance was evaluated using various performance metrics. The results show that Inception_Resnet_V2 provides better results than the other architectures considered in this work (accuracy higher than 92%). Given the high performance achieved by this model, we believe that these results may help doctors make decisions in clinical practice.

Ongoing work aims to develop a full deep learning system for coronavirus detection, segmentation, and classification. In addition, the performance may be improved by using more datasets and more sophisticated deep learning techniques such as You-Only-Look-Once (YOLO) (Al-Masni et al., 2018) and U-Net (Ronneberger et al., 2015), which was developed for biomedical image segmentation.

Acknowledgements

We thank the reviewer for his/her thorough review and highly appreciate the comments, corrections and suggestions that ensued, which significantly contributed to improving the quality of the publication.

Disclosure statement

We declare that we have no conflicts of interest to disclose.

Authors’ contributions

The experiments and the programming stage were carried out by Khalid El Asnaoui. All authors wrote the paper, and all approve this submission.

Materials

Chest X-ray & CT dataset (Kermany et al., 2018) available at https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia

Covid Chest X-ray Dataset (Cohen et al., 2020) available at https://github.com/ieee8023/covid-chestxray-dataset

References

- Aanouz I., Belhassan A., El Khatabi K., Lakhlifi T., El Idrissi M., & Bouachrine M. (2020). Moroccan medicinal plants as inhibitors of COVID-19: Computational investigations. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1758790

- Abdelli I., Hassani F., Brikci S. B., & Ghalem S. (2020). In silico study the inhibition of Angiotensin converting enzyme 2 receptor of COVID-19 by Ammoides verticillata components harvested from western Algeria. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1763199

- Al-Masni M. A., Al-Antari M. A., Park J.-M., Gi G., Kim T.-Y., Rivera P., Valarezo E., Choi M.-T., Han S.-M., & Kim T.-S. (2018). Simultaneous detection and classification of breast masses in digital mammograms via a deep learning YOLO-based CAD system. Computer Methods and Programs in Biomedicine, 157, 85–94. 10.1016/j.cmpb.2018.01.017

- Anwarul H., Bilal A. P., Arif H., Fikry A. Q., Farnoosh A., Falah M. A., Majid S., Hossein D., Behnam R., Masoumeh M., Koorosh S., Ali A. S., & Mojtaba F. (2020). A review on the cleavage priming of the spike protein on coronavirus by angiotensin-converting enzyme-2 and furin. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1754293

- Bhandary A., Prabhu G. A., Rajinikanth V., Thanaraj K. P., Satapathy S. C., Robbins D. E., Shasky C., Zhang Y.-D., Tavares J. M. R. S., & Raja N. S. M. (2020). Deep-learning framework to detect lung abnormality - A study with chest X-Ray and lung CT scan images. Pattern Recognition Letters, 129, 271–278. 10.1016/j.patrec.2019.11.013

- Blum A., & Chawla S. (2001). Learning from labeled and unlabeled data using graph mincuts. Proceedings of the Eighteenth International Conference on Machine Learning (pp. 19–26). 10.1184/R1/6606860.v1

- Boopathi S., Poma A. B., & Kolandaivel P. (2020). Novel 2019 Coronavirus structure, mechanism of action, antiviral drug promises and rule out against its treatment. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1758788

- Chinese National Health Commission (CNHC). (2020, March). Reported cases of 2019-nCoV. Retrieved March, 2020, from https://ncov.dxy.cn/ncovh5/view/pneumonia?from=groupmessage&isappinstalled=0

- Cohen J. P., Morrison P., & Dao L. (2020). COVID-19 image data collection. arXiv: 2003.11597. https://github.com/ieee8023/covid-chestxray-dataset

- Djalante R., Lassa J., Setiamarga D., Mahfud C., Sudjatma A., Indrawan M., Haryanto B., Sinapoy M. S., Haryanto I., Djalente S., Gunawan L. A., Anindito R., Warsilah H., & Surtiari G. A. (2020). Review and analysis of current responses to COVID-19 in Indonesia: Period of January to March 2020. Progress in Disaster Science, 6, 100091. 10.1016/j.pdisas.2020.100091

- El Asnaoui K., Chawki Y., & Idri A. (2020). Automated methods for detection and classification pneumonia based on X-ray images using deep learning. arXiv: 2003.14363.

- El Zowalaty M. E., & Järhult J. D. (2020). From SARS to COVID-19: A previously unknown SARS-related coronavirus (SARS-CoV-2) of pandemic potential infecting humans - Call for a One Health approach. One Health (Amsterdam, Netherlands), 9, 100124. 10.1016/j.onehlt.2020.100124

- Elfiky A. A. (2020a). SARS-CoV-2 RNA dependent RNA polymerase (RdRp) targeting: An in silico perspective. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1761882

- Elfiky A. A. (2020b). Natural products may interfere with SARS-CoV-2 attachment to the host cell. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1761881

- Elfiky A. A., & Azzam E. B. (2020). Novel guanosine derivatives against MERS CoV polymerase: An in silico perspective. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1758789

- Elkbuli A., Ehrlich H., & McKenney M. (2020). The effective use of telemedicine to save lives and maintain structure in a healthcare system: Current response to COVID-19. The American Journal of Emergency Medicine. 10.1016/j.ajem.2020.04.003

- Elmezayen A. D., Al-Obaidi A., Şahin A. T., & Yelekçi K. (2020). Drug repurposing for coronavirus (COVID-19): in silico screening of known drugs against coronavirus 3CL hydrolase and protease enzymes. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1758791

- Enayatkhani M., Hasaniazad M., Faezi S., Guklani H., Davoodian P., Ahmadi N., Einakian M. A., Karmostaji A., & Ahmadi K. (2020). Reverse vaccinology approach to design a novel multi-epitope vaccine candidate against COVID-19: An in silico study. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1756411

- Enmozhi S. K., Kavitha R., Irudhayasamy S., & Jerrine J. (2020). Andrographolide As a Potential Inhibitor of SARS-CoV-2 Main Protease: An In Silico Approach. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1760136

- Farooq M., & Hafeez A. (2020). COVID-ResNet: A deep learning framework for screening of COVID19 from radiographs. arXiv: 2003.14395.

- Fausto J., Hirano L., Lam D., Mehta A., Mills B., Owens D., Perry E., & Curtis J. R. (2020). Creating a palliative care inpatient response plan for COVID19 - The UW medicine experience. Journal of Pain and Symptom Management. 10.1016/j.jpainsymman.2020.03.025

- Ghosh A., Gupta R., & Misra A. (2020). Telemedicine for diabetes care in India during COVID19 pandemic and national lockdown period: Guidelines for physicians. Diabetes & Metabolic Syndrome, 14(4), 273–276. 10.1016/j.dsx.2020.04.001

- Gozes O., Frid-Adar M., Greenspan H., Browning P. D., Zhang H., Ji W., Bernheim A., & Siegel E. (2020). Rapid AI development cycle for the Coronavirus (COVID-19) pandemic: Initial results for automated detection & patient monitoring using deep learning CT image analysis. arXiv: 2003.05037.

- He K., Zhang X., Ren S., & Sun J. (2016). Deep residual learning for image recognition. Proceedings of the IEEE conference on computer vision and pattern recognition CVPR’16 (pp. 770–778). IEEE; 10.1109/CVPR.2016.90

- Hemdan E. D., Shouman M. A., & Karar M. E. (2020). COVIDX-Net: A framework of deep learning classifiers to diagnose COVID-19 in X-ray images. arXiv: 2003.11055.

- Huang G., Liu Z., Van Der Maaten L., & Weinberger K. Q. (2017). Densely connected convolutional networks [Paper presentation]. Proceeding of the IEEE Conference on Computer Vision and Pattern Recognition CVPR’17 (pp. 2261–2269). IEEE; 10.1109/CVPR.2017.243

- Huang P., Liu T., Huang L., Liu H., Lei M., Xu W., Hu X., Chen J., & Liu B. (2020). Use of chest CT in combination with negative RT-PCR assay for the 2019 novel coronavirus but high clinical suspicion. Radiology, 295(1), 22–23. 10.1148/radiol.2020200330

- Kandel N., Chungong S., Omaar A., & Xing J. (2020). Health security capacities in the context of COVID-19 outbreak: An analysis of international health regulations annual report data from 182 countries. The Lancet, 395(10229), 1047–1053. 10.1016/S0140-6736(20)30553-5

- Kermany D. S., Zhang K., & Goldbaum M. (2018). Labeled optical coherence tomography (OCT) and chest X-ray images for classification. Mendeley Data. 10.17632/rscbjbr9sj.2

- Landry M. D., Geddes L., Park Moseman A., Lefler J. P., Raman S. R., & Wijchen J. v. (2020). Early reflection on the global impact of COVID19, and implications for physiotherapy. Physiotherapy. 10.1016/j.physio.2020.03.003

- Lee A., & Morling J. (2020). COVID19 - The need for public health in a time of emergency. Public Health, 182, 188–189. 10.1016/j.puhe.2020.03.027

- Li J. P. O., Shantha J., Wong T. W., Wong E. Y., Mehta J., Lin H., Lin X., Strouthidis N. G., Park K. H., Fung A. T., McLeod S. D., Busin M., Parke D. W., Holland G. N., Chodosh J., Yeh S., & Ting D. S. W. (2020). Preparedness among ophthalmologists: During and beyond the COVID-19 pandemic. Ophthalmology, 127(5), 569–572. 10.1016/j.ophtha.2020.03.037

- Li Q., Guan X., Wu P., Wang X., Zhou L., Tong Y., Ren R., Leung K. S. M., Lau E. H. Y., Wong J. Y., Xing X., Xiang N., Wu Y., Li C., Chen Q., Li D., Liu T., Zhao J., Liu M., … Feng Z. (2020). Early transmission dynamics in Wuhan, China, of novel coronavirus–infected pneumonia. The New England Journal of Medicine, 382(13), 1199–1207. 10.1056/NEJMoa2001316

- Liu H., Liu F., Li J., Zhang T., Wang D., & Lan W. (2020). Clinical and CT imaging features of the COVID-19 pneumonia: Focus on pregnant women and children. Journal of Infection, 80(5), e7–e13. 10.1016/j.jinf.2020.03.007

- Liu R., Han H., Liu F., Lv Z., Wu K., Liu Y., Feng Y., & Zhu C. (2020). Positive rate of RT-PCR detection of SARS-CoV-2 infection in 4880 cases from one hospital in Wuhan, China, from Jan to Feb 2020. Clinica Chimica Acta; International Journal of Clinical Chemistry, 505, 172–175. 10.1016/j.cca.2020.03.009

- Lu C., Liu X., & Jia Z. (2020). 2019-nCoV transmission through the ocular surface must not be ignored. The Lancet, 395(10224), e39. 10.1016/S0140-6736(20)30313-5

- Manoj K. G., Sarojamma V., Ravindra D., Gayatri G., Lambodar B., & Ramakrishna V. (2020). In-silico approaches to detect inhibitors of the human severe acute respiratory syndrome coronavirus envelope protein ion channel. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1751300

- Muralidharan N., Sakthivel R., Velmurugan D., & Gromiha M. M. (2020). Computational studies of drug repurposing and synergism of lopinavir, oseltamivir and ritonavir binding with SARS-CoV-2 protease against COVID-19. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1752802

- Narin A., Kaya G., & Pamuk Z. (2020). Automatic detection of Coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks. arXiv: 2003.10849.

- Ng M. Y., Lee E. Y., Yang J., Yang F., Li X., Wang H., Lui M. M., Lo C. S. Y., Leung B., Khong P. L., Hui K. Y., Yuen K., & Kuo M. D. (2020). Imaging profile of the COVID-19 infection: Radiologic findings and literature review. Radiology: Cardiothoracic Imaging, 2(1), e200034. 10.1148/ryct.2020200034

- Pant S., Singh M., Ravichandiran V., Murty U. S. N., & Srivastava H. K. (2020). Peptide-like and small-molecule inhibitors against Covid-19. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1757510

- Phulen S., Nishant S., Manisha P., Pramod A., Hardeep K., Subodh K., Sanjay S., Harish K., Ajay P., Deba P. D., & Bikash M. (2020). In-silico homology assisted identification of inhibitor of RNA binding against 2019-nCoV N-protein (N terminal domain). Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1753580

- Rajib I., Rimon P., Archi S. P., Nizam U., Md Sajjadur R., Al Mamun A., Md Nayeem H., Md Ackas A., & Mohammad A. H. (2020). A molecular modeling approach to identify effective antiviral phytochemicals against the main protease of SARS-CoV-2. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1761883

- Rakesh S. J., Jagdale S. S., Bansode S. B., Shankar S. B., Tellis M. B., Pandya V. K., Chugh A., Giri A. P., & Kulkarni M. J. (2020). Discovery of potential multi-target-directed ligands by targeting host-specific SARS-CoV-2 structurally conserved main protease. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1760137

- Rameez J. K., Rajat K. J., Gizachew M. A., Monika J., Ekampreet S., Amita P., Rashmi P. S., Jayaraman M., & Amit K. S. (2020). Targeting SARS-CoV-2: A systematic drug repurposing approach to identify promising inhibitors against 3C-like proteinase and 2′-O-ribose methyltransferase. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1753577

- Ronneberger O., Fischer P., & Brox T. (2015). U-net: Convolutional networks for biomedical image segmentation [Paper presentation]. Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention (pp. 234–241). Springer; 10.1007/978-3-319-24574-4_28

- Roosa K., Lee Y., Luo R., Kirpich A., Rothenberg R., Hyman J. M., Yan P., & Chowell G. (2020). Real-time forecasts of the COVID-19 epidemic in China from February 5th to February 24th, 2020. Infectious Disease Modelling, 5, 256–263. 10.1016/j.idm.2020.02.002

- Rothan H. A., & Byrareddy S. N. (2020). The epidemiology and pathogenesis of coronavirus disease (COVID-19) outbreak. Journal of Autoimmunity, 109, 102433. 10.1016/j.jaut.2020.102433

- Rowan N. J., & Laffey J. G. (2020). Challenges and solutions for addressing critical shortage of supply chain for personal and protective equipment (PPE) arising from Coronavirus disease (COVID19) pandemic - Case study from the Republic of Ireland. The Science of the Total Environment, 725, 138532. 10.1016/j.scitotenv.2020.138532

- Ruuska S., Hämäläinen W., Kajava S., Mughal M., Matilainen P., & Mononen J. (2018). Evaluation of the confusion matrix method in the validation of an automated system for measuring feeding behaviour of cattle. Behavioural Processes, 148, 56–62. 10.1016/j.beproc.2018.01.004

- Salman A. K., Komal Z., Sajda A., Reaz U., & Zaheer U. H. (2020). Identification of chymotrypsin-like protease inhibitors of SARS-CoV-2 via integrated computational approach. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1751298

- Sandler M., Howard A., Zhu M., Zhmoginov A., & Chen L.-C. (2018). MobileNetV2: Inverted residuals and linear bottlenecks [Paper presentation]. Proceeding of the IEEE Conference on Computer Vision and Pattern Recognition CVPR’18 (pp. 4510–4520). IEEE; 10.1109/CVPR.2018.00474

- Saurabh K. S., Anshul S., Satyendra K. P., Shashikant S., Nilambari S. G., Rupali S. P., & Shailendra S. G. (2020). An in-silico evaluation of different Saikosaponins for their potency against SARS-CoV-2 using NSP15 and fusion spike glycoprotein as targets. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1762741

- Shan F., Gao Y., Wang Y., Shi W., Shi N., Han M., Xue Z., Shen D., & Shi Y. (2020). Lung infection quantification of COVID-19 in CT images with deep learning. arXiv: 2003.04655.

- Sharifi-Razavi A., Karimi N., & Rouhani N. (2020). COVID-19 and intracerebral haemorrhage: causative or coincidental? New Microbes and New Infections, 35, 100669. 10.1016/j.nmni.2020.100669

- Simcock R., Thomas T. V., Estes C., Filippi A. R., Katz M. A., Pereira I. J., & Saeed H. (2020). COVID-19: Global Radiation oncology's targeted response for pandemic preparedness. Clinical and Translational Radiation Oncology, 22, 55–68. 10.1016/j.ctro.2020.03.009

- Simonyan K., & Zisserman A. (2014). Very deep convolutional networks for large-scale image recognition. arXiv: 1409.1556.

- Smith L. N. (2017). Cyclical learning rates for training neural networks [Paper presentation]. Proceedings of the 2017 IEEE Winter Conference on Applications of Computer Vision WACV (pp. 464–472). IEEE; 10.1109/WACV.2017.58

- Sourav D., Sharat S., Sona L., & Atanu S. R. (2020). An investigation into the identification of potential inhibitors of SARS-CoV-2 main protease using molecular docking study. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1763201

- Sun J., He W.-T., Wang L., Lai A., Ji X., Zhai X., Li G., Suchard M. A., Tian J., Zhou J., Veit M., & Su S. (2020). COVID-19: Epidemiology, Evolution, and cross-disciplinary perspectives. Trends in Molecular Medicine, 26(5), 483–495. 10.1016/j.molmed.2020.02.008

- Szegedy C., Ioffe S., Vanhoucke V., & Alemi A. (2016). Inception-v4, inception-ResNet and the impact of residual connections on learning. arXiv: 1602.07261.

- Szegedy C., Vanhoucke V., Ioffe S., Shlens J., & Wojna Z. (2015). Rethinking the inception architecture for computer vision. arXiv: 1512.00567.

- Umesh D. K., Chandrabose S., Sanjeev K. S., & Vikash K. D. (2020). Identification of new anti-nCoV drug chemical compounds from Indian spices exploiting SARS-CoV-2 main protease as target. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1763202

- Wahedi H. M., Ahmad S., & Abbasi S. W. (2020). Stilbene-based natural compounds as promising drug candidates against COVID-19. Journal of Biomolecular Structure and Dynamics. 10.1080/07391102.2020.1762743

- Wang L., & Wong A. (2020). COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images. arXiv: 2003.09871v2.

- Wilder-Smith A., Chiew C. J., & Lee V. J. (2020). Can we contain the COVID-19 outbreak with the same measures as for SARS? The Lancet Infectious Diseases, 20(5), e102–e107. 10.1016/S1473-3099(20)30129-8

- World Health Organization (2020). Novel Coronavirus (2019-nCoV) Situation Report–28. Retrieved March 2020, from https://www.who.int/docs/default-source/coronaviruse/situation-reports/20200217-sitrep-28-covid-19.pdf?sfvrsn=a19cf2ad_2

- Worldometers (2020, April 21). https://www.worldometers.info/coronavirus/

- Xu X., Jiang X., Ma C., Du P., Li X., Lv S., Yu L., Chen Y., Su J., Lang G., Li Y., Zhao H., Xu K., Ruan L., & Wu W. (2020). Deep learning system to screen Coronavirus disease 2019 Pneumonia. arXiv: 2002.09334.

- Yang J., Zheng Y., Gou X., Pu K., Chen Z., Guo Q., Ji R., Wang H., Wang Y., & Zhou Y. (2020). Prevalence of comorbidities and its effects in coronavirus disease 2019 patients: A systematic review and meta-analysis. International Journal of Infectious Diseases, 94(94), 91–95. 10.1016/j.ijid.2020.03.017

- Zhang J., Xie Y., Li Y., Shen C., & Xia Y. (2020). COVID-19 screening on chest X-ray images using deep learning based anomaly detection. arXiv: 2003.12338.

- Zhang Q., Wang H., Yoon S. W., Won D., & Srihari K. (2019). Lung nodule diagnosis on 3D computed tomography images using deep convolutional neural networks. Procedia Manufacturing, 39, 363–370. 10.1016/j.promfg.2020.01.375