Supplemental Digital Content is available in the text.

Keywords: clinical computing, continuous predictive analytics monitoring, critical care, deterioration, intensive care unit transfer, predictive analytics

Abstract

Objectives:

Early detection of subacute potentially catastrophic illnesses using available data is a clinical imperative, and scores that report risk of imminent events in real time abound. Patients deteriorate for a variety of reasons, and it is unlikely that a single predictor such as an abnormal National Early Warning Score will detect all of them equally well. The objective of this study was to test the idea that the diversity of reasons for clinical deterioration leading to ICU transfer mandates multiple targeted predictive models.

Design:

Individual chart review to determine the clinical reason for ICU transfer; determination of relative risks of individual vital signs, laboratory tests and cardiorespiratory monitoring measures for prediction of each clinical reason for ICU transfer; and logistic regression modeling for the outcome of ICU transfer for a specific clinical reason.

Setting:

Cardiac medical-surgical ward; tertiary care academic hospital.

Patients:

Eight thousand one hundred eleven adult patients, 457 of whom were transferred to an ICU for clinical deterioration.

Interventions:

None.

Measurements and Main Results:

We calculated the contributing relative risks of individual vital signs, laboratory tests, and cardiorespiratory monitoring measures for prediction of each clinical reason for ICU transfer, and used logistic regression modeling to calculate receiver operating characteristic areas and relative risks for the outcome of ICU transfer for a specific clinical reason. The reasons for clinical deterioration leading to ICU transfer were varied, as were their predictors. For example, the three most common reasons (respiratory instability, infection and suspected sepsis, and heart failure requiring escalated therapy) had distinct signatures of illness. Statistical models trained to target specific reasons for ICU transfer performed better than one model targeting combined events.

Conclusions:

A single predictive model for clinical deterioration does not perform as well as having multiple models trained for the individual specific clinical events leading to ICU transfer.

Patients who deteriorate on the hospital ward and are emergently transferred to the ICU have poor outcomes (1–6). Early identification of subtly worsening patients might allow quicker treatment and improved outcome. To aid clinicians, early warning scores have been advanced that compare vital signs and laboratory tests to thresholds. The reception has been mixed (7, 8). Vital signs are often delayed, incorrect, or never measured (9, 10), and laboratory tests require blood draws and time. Nonetheless, these are the major (or only) inputs into popular track-and-trigger systems such as the National Early Warning Score (NEWS) and others (11–20). Thresholds for awarding risk points are based on clinical experience but suffer from lack of validation and the loss of information that forced dichotomization inevitably brings (21–24). Only some are trained on clinical events. For example, electronic cardiac arrest risk triage is trained for early detection of hospital patients with cardiac arrest (14), and the Rothman Index is trained to detect patients who will die in the next 12 months (15).

It is a natural exercise of clinicians to synthesize disparate data elements into a clinical picture of the patient. Since Knaus et al (25) introduced the Acute Physiology and Chronic Health Evaluation score in 1981, many more methods have been introduced that combine elements of clinical data to yield quantitative estimates of patients’ health status and risk. Although varied in inputs, targets, patient populations, and mathematical tools, most are single scores meant for universal use (26). This is not altogether unreasonable. For example, we found that large abrupt spikes in the risk estimated by a single model for ICU transfer had a positive predictive value of 25% for an imminent acute adverse event (27). But a single model is a limited approach: there are many paths of deterioration, and capturing all of them accurately at the same time is challenging.

Importantly, most systems ignore the wealth of information present in cardiorespiratory monitoring. Although many patients are continuously monitored, these data are bulky and require mathematical analysis. Nonetheless, they contribute as much as vital signs and laboratory tests to the detection of patients at risk of clinical deterioration and ICU transfer from a cardiac medical and surgical ward (28). We note, furthermore, that risk estimation for neonatal sepsis using advanced mathematical analysis of continuous cardiorespiratory monitoring alone saves lives (29).

Finally, all of these early detection systems present one score as an omniscient risk marker for all conditions and patients (26). Clinicians know, however, that there are many paths to clinical deterioration. For example, the most common forms of deterioration leading to ICU transfer are respiratory instability (27, 30–35), hemodynamic instability (30, 33, 34), sepsis (27, 36), bleeding (33, 36), neurologic decompensation (36), unplanned surgery (33), and acute renal failure or electrolyte abnormalities (36). The multiplicity of candidate culprit organ systems suggests that a single model is not likely to catch all the patients who are worsening (33, 34).

Here, we tested three approaches to predictive analytics monitoring. The first was untrained models that apply thresholds and cutoffs to vital signs; we represented this class with NEWS. The second was to train a universal predictive model on all patients who went to the ICU, using measured values of vital signs, laboratory tests, and continuous cardiorespiratory monitoring. This approach has the advantages of learning from continuous cardiorespiratory monitoring and from the full range of measured vital sign and laboratory test values, avoiding the problems of dichotomization by thresholds. The third was a set of models trained on patients who had specific reasons for ICU transfer identified by clinician review, which has the additional advantage of learning the signatures of specific target illnesses.
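The first, threshold-based approach can be made concrete with a short scoring sketch. This is a minimal Python sketch, assuming the NEWS2 scale-1 bands (SpO2 scale 1) published by the Royal College of Physicians (11); the study does not say which NEWS version or software it used, and `news2_score` and `_band` are hypothetical names. Each vital sign is mapped to 0 to 3 points by fixed bands and the points are summed, so patients just on either side of a band edge differ by a full point while patients anywhere inside a band are treated identically.

```python
def _band(value, upper_limits, points):
    """Return the points for the first band whose inclusive upper limit is >= value."""
    for upper, pts in zip(upper_limits, points):
        if value <= upper:
            return pts
    return points[-1]  # value exceeds every listed upper limit


def news2_score(resp_rate, spo2, on_oxygen, temp_c, systolic_bp, heart_rate, alert):
    """Aggregate NEWS2 (SpO2 scale 1) from one set of charted observations (assumed bands)."""
    score = 0
    score += _band(resp_rate, [8, 11, 20, 24], [3, 1, 0, 2, 3])        # breaths/min
    score += _band(spo2, [91, 93, 95], [3, 2, 1, 0])                   # percent
    score += 2 if on_oxygen else 0                                     # supplemental oxygen
    score += _band(systolic_bp, [90, 100, 110, 219], [3, 2, 1, 0, 3])  # mm Hg
    score += _band(heart_rate, [40, 50, 90, 110, 130], [3, 1, 0, 1, 2, 3])  # beats/min
    score += _band(temp_c, [35.0, 36.0, 38.0, 39.0], [3, 1, 0, 1, 2])  # degrees Celsius
    score += 0 if alert else 3  # new confusion, or response only to voice/pain, or unresponsive
    return score
```

For example, a patient breathing 22 breaths/min with SpO2 94% on room air, temperature 38.5°C, systolic pressure 105 mm Hg, heart rate 95 beats/min, and alert scores 2 + 1 + 1 + 1 + 1 = 6. The trained models in the second and third approaches instead take the measured values themselves as inputs.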

Approaches that might improve track-and-trigger systems include libraries of predictive models tailored to specific reasons for clinical deterioration in the widely varied venues of the modern hospital, risk estimation along the continuum of measured values rather than at threshold crossings, and analysis of continuous cardiorespiratory monitoring data in addition to vital signs and laboratory tests. Here we test these ideas.

MATERIALS AND METHODS

Study Design

For early detection of hospital patients at risk of clinical deterioration and the need for escalation to ICU care, we wished to test the idea that a single predictive analytics model using thresholds of vital signs and laboratory tests could be improved upon by a set of target-specific models that used continuous risk estimates and cardiorespiratory monitoring.

We examined the empiric risk profiles of individual vital signs, laboratory tests, and cardiorespiratory monitoring variables for seven clinical deterioration phenotypes. We built predictive logistic regression models, adjusted for repeated measures, for each phenotype as well as for their composite, and examined their performance in detecting the other deterioration phenotypes. The University of Virginia Institutional Review Board approved the study.

Study Population

We studied 8,111 consecutive admissions from October 2013 to September 2015 on a 73-bed adult acute care cardiac and cardiovascular surgery ward at the University of Virginia Hospital (34). We used an institutional electronic data warehouse to access the electronic medical record (EMR). Six patients who did not have complete continuous cardiorespiratory monitoring data were added to the original cohort (n = 8,105).

We reviewed the charts of the 457 patients who were transferred to the ICU because of clinical deterioration. Five clinical reviewers developed and implemented clinical definitions indicating reasons for deterioration and reviewed records for the 48 hours prior to and following ICU transfer. To evaluate inter-rater reliability, we calculated a weighted kappa coefficient on a nested subset of 50 events that were evaluated by all reviewers. The new clinical review was independent of our earlier work (34), which used a combined outcome of ICU transfer, urgent surgery, or unexpected death.
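The inter-rater reliability step can be sketched as a weighted kappa computed for every pair of reviewers over the nested set of events. This is a minimal sketch, assuming each reviewer's judgment for an event is encoded as an integer category code (for example, 0/1 for whether a given reason applies) and that linear disagreement weights were used; the paper specifies neither the encoding nor the weighting scheme, and `weighted_kappa` and `pairwise_kappas` are hypothetical names. With only two categories, linear and unweighted kappa coincide.

```python
import itertools

import numpy as np


def weighted_kappa(r1, r2, n_categories, weighting="linear"):
    """Cohen's kappa with disagreement weights for two raters' integer category codes."""
    observed = np.zeros((n_categories, n_categories))
    for a, b in zip(r1, r2):
        observed[a, b] += 1
    observed /= observed.sum()
    # Joint distribution expected by chance from each rater's marginal frequencies
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0))
    i, j = np.indices((n_categories, n_categories))
    if weighting == "linear":
        w = np.abs(i - j) / (n_categories - 1)
    elif weighting == "quadratic":
        w = ((i - j) / (n_categories - 1)) ** 2
    else:  # unweighted: every disagreement counts fully
        w = (i != j).astype(float)
    # kappa_w = 1 - (weighted observed disagreement) / (weighted chance disagreement)
    return 1.0 - (w * observed).sum() / (w * expected).sum()


def pairwise_kappas(ratings, n_categories, weighting="linear"):
    """Kappa for every pair of reviewers who rated the same nested set of events."""
    return {
        (a, b): weighted_kappa(ratings[a], ratings[b], n_categories, weighting)
        for a, b in itertools.combinations(sorted(ratings), 2)
    }
```

Reporting the smallest and largest pairwise values gives a range across reviewer pairs; the sketch assumes at least two categories and that the reviewers do not all use a single category for every event (otherwise chance disagreement is zero).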

Clinical and Continuous Cardiorespiratory Monitoring Data

We analyzed vital signs and laboratory tests recorded in the EMR and the continuous seven-lead electrocardiogram (ECG) signal that is standard practice. Vital signs and laboratory tests were sampled and held, that is, each value was carried forward until the next measurement. We calculated cardiorespiratory dynamics at 15-minute intervals over 30-minute windows.
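The alignment of intermittent and continuous data onto a common time grid can be sketched as follows. This is a minimal sketch, assuming times in minutes since admission, that "sampled and held" means last observation carried forward, and that each 30-minute window ends at a 15-minute grid point (so consecutive windows overlap by half). The default window function, a standard deviation, is only a placeholder for the actual cardiorespiratory measures (such as coefficient of sample entropy, detrended fluctuation analysis, or ECG-derived respiratory rate), whose algorithms are not reproduced here; `sample_and_hold` and `windowed_feature` are hypothetical names.

```python
import numpy as np


def sample_and_hold(obs_times, obs_values, grid_times):
    """Carry each charted value forward to every grid time until the next charted value."""
    obs_times = np.asarray(obs_times, float)  # assumed sorted ascending
    obs_values = np.asarray(obs_values, float)
    # Index of the last observation at or before each grid time (-1 if none yet)
    idx = np.searchsorted(obs_times, grid_times, side="right") - 1
    held = np.full(len(grid_times), np.nan)  # NaN until the first measurement exists
    valid = idx >= 0
    held[valid] = obs_values[idx[valid]]
    return held


def windowed_feature(sig_times, sig_values, grid_times, window=30.0, func=np.std):
    """Apply `func` to the signal samples in the trailing `window` minutes ending at each grid time."""
    sig_times = np.asarray(sig_times, float)
    sig_values = np.asarray(sig_values, float)
    out = np.full(len(grid_times), np.nan)
    for k, t in enumerate(grid_times):
        in_window = (sig_times > t - window) & (sig_times <= t)
        if in_window.any():
            out[k] = func(sig_values[in_window])
    return out


grid = np.arange(0, 121, 15.0)  # one row every 15 minutes for the first 2 hours
```

Every 15-minute row then carries the most recent charted vital signs and laboratory values alongside the monitoring measures computed from the preceding 30 minutes.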

Statistical Analyses

To estimate the relative risk of an event as a function of measured variables, we constructed predictiveness curves (40). In order to reduce bias due to repeated measures and missing data, we used a bootstrapping technique. We sampled one measurement within 12 hours before each event and one measurement from all nonevent patients at a random time during their stay. The distributions of time since admission were not significantly different for these two groups (data not shown). We calculated the relative risk of an event at each decile of the sampled variable, then interpolated the risk to 20 points evenly spaced in the range of the variable. We repeated this process of sampling, calculating relative risk, and interpolating 30 times. Finally, we averaged the 30 risk estimate curves to obtain a bootstrapped predictiveness curve at the 20 evenly spaced points, and we display the result as a heat map.
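The bootstrapped predictiveness curve described above can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions: each patient's candidate measurements are already collected into an array (those within 12 hours before the event for event patients, all of them for nonevent patients), drawing one measurement at random stands in for sampling a random time, relative risk is the event fraction in a decile divided by the overall event fraction (so 1 means average risk for the ward, as in the heat maps), and each decile is placed at the mean of its values before interpolation. The function names and data layout are hypothetical.

```python
import numpy as np


def relative_risk_by_decile(values, labels):
    """Event rate in each decile of `values` divided by the overall event rate (1 = average)."""
    order = np.argsort(values)
    v, y = values[order], labels[order]
    base_rate = y.mean()
    groups = np.array_split(np.arange(len(v)), 10)  # ten (nearly) equal-count groups
    centers = np.array([v[g].mean() for g in groups])  # where each decile sits on the axis
    rel_risk = np.array([y[g].mean() / base_rate for g in groups])
    return centers, rel_risk


def bootstrapped_predictiveness_curve(event_meas, nonevent_meas, n_rep=30, n_points=20, seed=0):
    """Average of `n_rep` resampled decile relative-risk curves on `n_points` evenly spaced values.

    event_meas: one array per event patient (measurements in the 12 h before the event).
    nonevent_meas: one array per nonevent patient (measurements from the whole stay).
    """
    rng = np.random.default_rng(seed)
    all_values = np.concatenate(list(event_meas) + list(nonevent_meas))
    grid = np.linspace(all_values.min(), all_values.max(), n_points)
    curves = []
    for _ in range(n_rep):
        # One measurement per patient, drawn at random
        ev = np.array([rng.choice(m) for m in event_meas])
        ne = np.array([rng.choice(m) for m in nonevent_meas])
        values = np.concatenate([ev, ne])
        labels = np.concatenate([np.ones(len(ev)), np.zeros(len(ne))])
        centers, rel_risk = relative_risk_by_decile(values, labels)
        curves.append(np.interp(grid, centers, rel_risk))  # held flat beyond the outer deciles
    return grid, np.mean(curves, axis=0)
```

Stacking the returned curves for each reason for transfer as rows of an image (for example with matplotlib's `imshow`) gives heat maps of the kind shown in the figures.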

To relate the predictors to the outcomes, we used multivariable logistic regression analysis adjusted for repeated measures. The output of the resulting regression expressions is the probability of ICU transfer in the next 12 hours. To test for the significance of differences between the outputs of predictive models, we calculated the area under the receiver operating characteristic (ROC) curves for NEWS, a model trained to target all the ICU transfers, and seven additional models, one for each reason for ICU transfer. The ROC curves report on discrimination between data belonging to patients with and without ICU transfer in the next 12 hours. For each reason for transfer, therefore, we calculated three ROC areas. For the group of seven specific reasons for ICU transfer, we tested the difference between NEWS and the outputs of the general model, and between the outputs of the general model and the outputs of the seven specifically targeted models using the paired t test.
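The outcome definition and one way of adjusting for repeated measures can be sketched as follows. The paper does not state how the repeated-measures adjustment was done; this sketch assumes a common choice, a logistic model fitted by Newton-Raphson with a cluster-robust (Huber-White sandwich) covariance that treats each patient as a cluster. It also assumes time is measured in hours and that a row is positive when ICU transfer follows within 12 hours. The coefficients are ordinary maximum-likelihood estimates; only their standard errors account for the correlation among a patient's many rows. All names are hypothetical.

```python
import numpy as np


def label_next_12h(times_h, transfer_time_h, horizon_h=12.0):
    """1.0 for each time point followed by ICU transfer within `horizon_h` hours, else 0.0."""
    times_h = np.asarray(times_h, float)
    if transfer_time_h is None:  # patient never transferred
        return np.zeros(len(times_h))
    lead = transfer_time_h - times_h
    return ((lead > 0) & (lead <= horizon_h)).astype(float)


def fit_logistic_cluster_robust(X, y, groups, n_iter=25):
    """Logistic regression by Newton-Raphson with patient-clustered (sandwich) standard errors."""
    X = np.column_stack([np.ones(len(y)), X])  # prepend an intercept column
    y, groups = np.asarray(y, float), np.asarray(groups)
    beta = np.zeros(X.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-X @ beta))
        hessian = X.T @ (X * (p * (1.0 - p))[:, None])
        beta = beta + np.linalg.solve(hessian, X.T @ (y - p))
    p = 1.0 / (1.0 + np.exp(-X @ beta))
    bread = np.linalg.inv(X.T @ (X * (p * (1.0 - p))[:, None]))
    scores = X * (y - p)[:, None]  # per-row score contributions
    meat = np.zeros_like(bread)
    for g in np.unique(groups):  # sum scores within each patient before squaring
        u = scores[groups == g].sum(axis=0)
        meat += np.outer(u, u)
    robust_cov = bread @ meat @ bread
    return beta, np.sqrt(np.diag(robust_cov))
```

The fitted probability for a row, 1 / (1 + exp(-x·beta)), is then the model's estimate of ICU transfer in the next 12 hours, and it is these outputs that are compared by ROC area.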

In model development, we adhered to the recommendations of the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) statement (41).

RESULTS

Study Population

The main admitting services were cardiology (49%) and cardiac surgery (19%); the remainder came equally from other medical and surgical services. The median age was 59 years (interquartile range, 55–75). The mortality rate was 0.4% for patients who did not require ICU transfer and 17% for those who did.

Appendix Figure 1 (Supplemental Digital Content 1, http://links.lww.com/CCX/A172) is an UpSet plot (42) of the numbers of patients with each combination of reasons for ICU transfer. The most common reason for transfer was respiratory instability alone, followed by respiratory instability with suspected sepsis. The kappa statistic ranged from 0.616 to 1.0, indicating moderate to excellent agreement among the reviewers.
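In its usual (exclusive) mode, an UpSet plot counts each patient once, under the exact combination of reasons recorded, which is why "respiratory instability alone" and "respiratory instability with suspected sepsis" appear as separate bars; the per-reason totals are shown separately. A minimal sketch of both counts, assuming each patient's chart-review result is stored as a set of reason labels (the labels, patients, and dictionary layout are hypothetical):

```python
from collections import Counter


def exclusive_intersections(reasons_by_patient):
    """Patients counted once, under their exact combination of reasons (UpSet intersection bars)."""
    return Counter(frozenset(reasons) for reasons in reasons_by_patient.values())


def set_sizes(reasons_by_patient):
    """Patients with each reason, whatever else they had (UpSet set-size bars)."""
    return Counter(reason for reasons in reasons_by_patient.values() for reason in reasons)


reviews = {  # hypothetical chart-review results
    "patient_a": {"respiratory instability"},
    "patient_b": {"respiratory instability", "suspected sepsis"},
    "patient_c": {"respiratory instability"},
    "patient_d": {"bleeding"},
}
```

Here respiratory instability has a set size of 3, but only 2 patients fall in the "alone" intersection.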

Relative Risks for ICU Admission

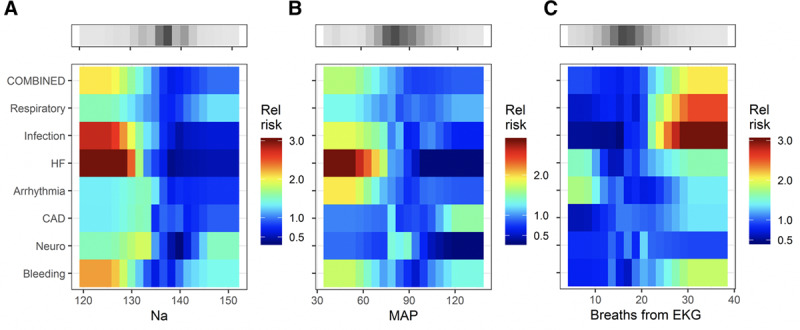

Figure 1 shows the relationship of risk (color) to reason for admission to ICU (row) and measured variable (column). Each of the three panels shows a set of predictiveness curves as heat maps for one kind of measurement: a laboratory test (Na) in Figure 1A, a vital sign (mean arterial pressure [MAP]) in Figure 1B, and a measure from continuous cardiorespiratory monitoring (breathing rate derived from the ECG [28]) in Figure 1C. The gray-scale bars at the top show the densities of the measurements.

Figure 1.

Heat maps that relate the relative risk (color bar; 1 means the risk is average for the ward) to the reason for ICU transfer (rows) and measured value of a predictor (abscissa) for a representative laboratory value (A, serum Na in mEq/L), vital sign (B, mean arterial pressure [MAP] in mm Hg), and cardiorespiratory measure from time series analysis of continuous cardiorespiratory monitoring (C, breaths per minute derived from the electrocardiogram [EKG]). The gray-scale bars show the density of the measurements. The figure may be interpreted as follows. The change in color from deep red to blue from left to right in the fourth row of (A) signifies that low serum Na concentration was associated with a high risk for ICU transfer for escalation of heart failure (HF) therapy. CAD = coronary artery disease, RCT = randomized control trial.

The major finding of this subset of the overall results is that serum Na and MAP, when low, signify impending ICU transfer for escalation of heart failure (HF) therapy, but do not portend ICU transfer for any of the other reasons. Figure 1C shows that a rise in the continuously measured respiratory rate identifies patients whose respiratory instability or infection progresses to the point of ICU transfer. These maps show the heterogeneity of patient characteristics but are not quantitative tests; for that purpose, we used logistic regression models.

The top rows of the colored matrices show the risk profiles of a predictive model trained on all of the events combined. Their narrower ranges of color show that the predictive utility of Na for HF, of MAP for HF, and of ECG-derived breathing rate for respiratory instability or infection is blunted. Thus, combining outcomes diminishes the ability of a multivariable statistical model to detect any individual clinical cause for ICU transfer.

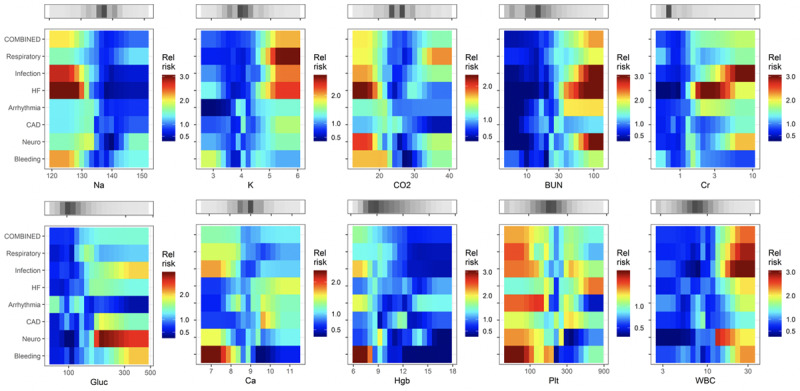

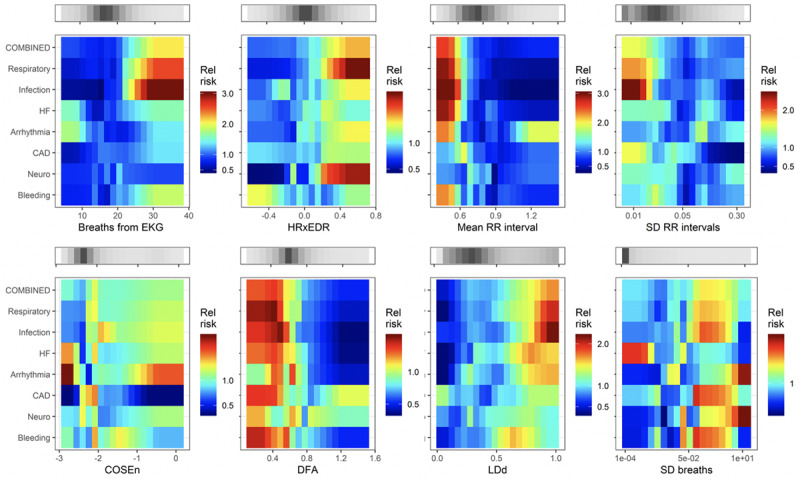

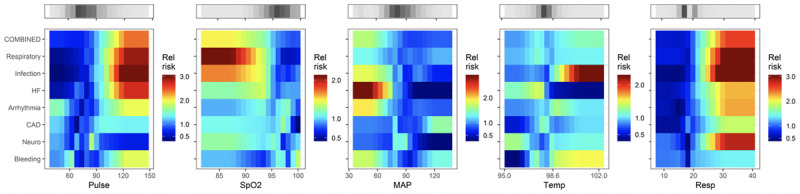

Figures 2–4 show the complete catalog of relationships between risk, clinical cause for ICU transfer, and measured variable. The finding is that the phenotypes of reasons for ICU transfer differ from each other. Consider bleeding (bottom row) as an example: it is uniquely marked by hypokalemia, hypocalcemia, thrombocytopenia and, of course, anemia. As a result, we would expect that a predictive model trained to detect bleeding would not perform well in detecting other reasons for ICU transfer, nor that models trained on other reasons for ICU transfer would do well at detecting bleeding.

Figure 2.

Heat maps that relate the relative risk of the reason for ICU transfer to the measured values of predictor laboratory values. BUN = blood urea nitrogen, CAD = coronary artery disease, Cr = creatinine, Hbg = hemoglobin, HF = heart failure, Plt = platelet.

Figure 3.

Heat maps that relate the relative risk of the reason for ICU transfer to the nurse-entered values of predictor vital signs. HF = heart failure, MAP = mean arterial pressure, SpO2 = oxygen saturation.

Figure 4.

Heat maps that relate the relative risk of the reason for ICU transfer to measured values of predictors derived from cardiorespiratory monitoring. CAD = coronary artery disease, COSEn = coefficient of sample entropy (37, 38), DFA = detrended fluctuation analysis (39), EKG = electrocardiogram, HF = heart failure, HRxEDR = cross-correlation of heart rate and breathing rate derived from electrocardiogram, LDd = local density score (34), RR = respiratory rate.

Statistical Models

We limited the number of predictor variables to one per 10 events. Vital signs figured in all eight of the trained models; laboratory tests and continuous cardiorespiratory monitoring measures each figured in six. The continuous cardiorespiratory monitoring measure most commonly included was ECG-derived respiratory rate (four models). The CAD model relied most on continuous cardiorespiratory monitoring: two of its three components (detrended fluctuation analysis and coefficient of sample entropy) were derived from it.

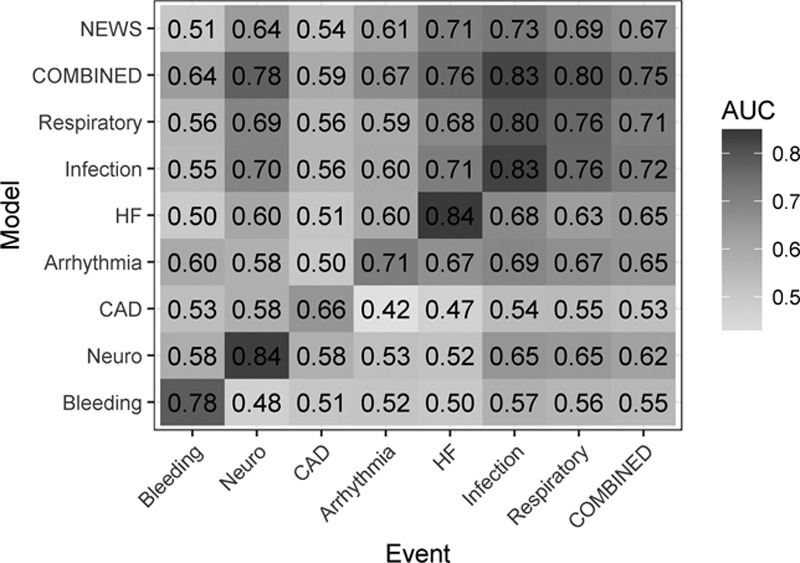

The ability of models to detect on- and off-target causes for ICU transfer is shown in Figure 5, a matrix of ROC areas that result when the model targeting the event in the rows is used for early diagnosis of the event listed in the columns. The diagonal from bottom left to top right shows the ROC areas of the models as trained for the target events; off-diagonal values give the values for detecting off-target events. For example, the model to detect bleeding has a ROC area of 0.78 (the value in the cell at the lower left of the matrix), but that model has no ROC area greater than 0.6 in detecting other reasons for ICU transfer (bottom row), and the ROC areas of models trained for other events (left-hand column) exceed 0.6 in only one case, the model trained on all events combined.
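A matrix like Figure 5 can be sketched by scoring every model's output against every event's labels. This is a minimal sketch, assuming each model's scores and each event's 0/1 labels are aligned to the same rows (the same patients and time points); the ROC area is computed as the Mann-Whitney rank-sum statistic with average ranks for ties, which equals the probability that a randomly chosen event row outscores a randomly chosen nonevent row. NEWS can be included simply as another entry in `model_scores`. The function names and dictionary layout are hypothetical.

```python
import numpy as np


def roc_auc(scores, labels):
    """ROC area via the Mann-Whitney rank-sum statistic; tied scores receive average ranks."""
    scores = np.asarray(scores, float)
    labels = np.asarray(labels, bool)
    _, inverse, counts = np.unique(scores, return_inverse=True, return_counts=True)
    avg_rank = np.cumsum(counts) - (counts - 1) / 2.0  # 1-based mean rank of each distinct score
    ranks = avg_rank[inverse]
    n_pos = labels.sum()
    n_neg = labels.size - n_pos
    return (ranks[labels].sum() - n_pos * (n_pos + 1) / 2.0) / (n_pos * n_neg)


def cross_auc_matrix(model_scores, event_labels):
    """Rows: models named by their training target; columns: the event each is asked to detect."""
    models, events = list(model_scores), list(event_labels)
    matrix = np.array([[roc_auc(model_scores[m], event_labels[e]) for e in events]
                       for m in models])
    return models, events, matrix
```

When rows and columns are listed in the same order, the diagonal holds on-target ROC areas and the off-diagonal cells hold off-target ones.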

Figure 5.

Receiver operating characteristic (ROC) curve areas for models trained for the events listed in rows, and tested for events arranged as columns. The top row is the performance of the National Early Warning Score (NEWS); the next to top row and the final column represent results of a model trained on all the ICU transfer events combined. The gray scale reflects the value of the ROC area. AUC = area under the curve, CAD = coronary artery disease, HF = heart failure.

Additionally, Figure 5 shows that ROC areas along the diagonal vary from 0.66 to 0.84, meaning some events are easier to detect than others. For example, models to detect ICU transfer due to infection or neurologic events have ROC areas greater than 0.8, while that for myocardial ischemia was less than 0.7. Although unstable coronary syndromes are reasonably common on this cardiac and cardiac surgery ward, we note that they are not particularly good candidates for early detection. Arguably, there is no consistent prodrome for the instability of a coronary plaque.

We can directly compare the general model trained on all events to the models targeting specific reasons for ICU transfer by comparing the values in the second row (ROC areas of the model targeting all events combined) with the values on the diagonal from lower left to upper right (ROC areas of the individual models). The combined event model performs about as well as the individually trained models for infection (0.83 vs 0.83) and respiratory instability (0.80 vs 0.76). These are the most common reasons for ICU transfer (Appendix Fig. 1, Supplemental Digital Content 1, http://links.lww.com/CCX/A172), which accounts for the finding.

The results of using the NEWS score to detect the individual or combined events are shown in the top row. The highest ROC area for NEWS was for infection, 0.73. Use of this single score for the entire population of combined events yielded a ROC area of 0.67. Of the models trained for individual classes of ICU transfer, whose ROC areas appear on the diagonal, only that for unstable coronary syndromes was lower (0.66).

The mean NEWS ROC area was 0.63, significantly lower than the mean ROC area of 0.72 of the general model trained on combined events (p < 0.01, paired t test), which was lower than the mean ROC area of 0.78 of the specifically targeted models (p < 0.05).
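Because each method is evaluated on the same seven reasons for transfer, the comparison is paired: the test is on the seven within-reason differences in ROC area, with 6 degrees of freedom. A minimal sketch of the statistic, where `paired_t` is a hypothetical name and the inputs are two equal-length sequences of ROC areas ordered by reason; the two-sided p-value comes from the t distribution with n - 1 degrees of freedom (for example, `scipy.stats.ttest_rel` computes the same statistic together with the p-value).

```python
import numpy as np


def paired_t(auc_a, auc_b):
    """Paired t statistic and degrees of freedom for ROC areas matched by reason for transfer."""
    d = np.asarray(auc_a, float) - np.asarray(auc_b, float)  # one difference per reason
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))  # mean difference over its standard error
    return t, n - 1
```

Pairing removes the reason-to-reason variation in difficulty (for example, infection being easier to detect than myocardial ischemia) from the comparison, which an unpaired test would not.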

DISCUSSION

We studied statistical models for early detection of subacute potentially catastrophic illnesses leading to ICU transfer from a cardiac and cardiac surgery ward. This is a high-profile clinical event—the mortality rose by more than 40-fold in those who deteriorated (34). We targeted specific reasons for clinical deterioration, used predictors that were continuous rather than thresholded, and included mathematical analyses of continuous cardiorespiratory monitoring data. Our major findings are that phenotypes vary among the myriad of clinical conditions that lead to ICU transfer, and that statistical models trained specifically performed better than a model trained on all events combined, which in turn performed better than the untrained NEWS score.

We used multivariable logistic regression models to test hypotheses about differences in the characteristics of endotypes of patients who deteriorated and required escalation to ICU care. The major hypothesis is that models trained on one endotype of patient do not necessarily perform well in detecting other kinds of patients. This directly tests the prevailing practice of using a single predictive model throughout the hospital. It is important to note that we use the statistical models here only for hypothesis testing, not to assess their clinical impact. We have not tested these models in a clinical setting, and a reasonable question is how to implement so many of them. We favor calculating all the model results for all the patients all the time and presenting the clinician with a summary statistic.
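One way the "all models, all patients, all the time" idea could be reduced to a single number is sketched below. This is purely illustrative: the paper does not specify the summary statistic, and this sketch assumes that each model's predicted probability is divided by that model's ward-average event rate (so 1 means average risk, as in the heat maps) and that the largest relative risk is reported together with the model that produced it. The function name, model names, and rates are hypothetical.

```python
def summarize_risk(model_probs, ward_rates):
    """Return the targeted model with the largest relative risk, its value, and all relative risks."""
    rel_risk = {name: p / ward_rates[name] for name, p in model_probs.items()}
    top = max(rel_risk, key=rel_risk.get)
    return top, rel_risk[top], rel_risk


# Hypothetical 12-hour probabilities from two targeted models for one patient
top, value, all_rr = summarize_risk(
    {"bleeding": 0.02, "suspected sepsis": 0.03},
    {"bleeding": 0.005, "suspected sepsis": 0.015},
)
```

Returning which model fired, and not only the number, keeps the clinical hint about the likely reason for deterioration that a single universal score discards; here the sepsis model has the higher raw probability, but the bleeding model has the higher relative risk.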

The need to look for multiple modes of critical illness was recently supported by the finding of four sepsis phenotypes with distinct responses and outcomes (43). We found different sepsis signatures in the medical as opposed to the surgical ICU (33). Thus, no single predictive model for sepsis seems adequate. Likewise, there are distinct respiratory deterioration phenotypes within chronic obstructive pulmonary disease and acute respiratory distress syndrome (44, 45). This heterogeneity of manifestations of illness, a point that resonates with bedside clinicians, argues against models that use the same thresholds for all situations. (An exception is sepsis in premature infants, where heart rate characteristics index monitoring identifies an abnormal phenotype that is common to many acute neonatal illnesses [46] and, in a large randomized controlled trial, was found to save lives [47].)

We emphasize the importance of continuous cardiorespiratory monitoring in early detection of subacute potentially catastrophic illnesses. We found distinct physiologic signatures for sepsis, hemorrhage leading to large unplanned transfusion and respiratory failure leading to urgent unplanned intubation. Display of risk estimates using two of the resulting statistical models based solely on cardiorespiratory monitoring (33) was associated with a 50% reduction in the rate of septic shock (48). Furthermore, cardiorespiratory monitoring data add to vital signs and laboratory tests in the population reported here (34). We propose that no scheme that omits cardiorespiratory monitoring data will perform as well as those that include it.

A strength of this study is the individual review of charts to identify reasons for ICU transfer. Clinicians recognize that illness presentations are complex, nuanced, and not well documented (49). Statistical models trained for one kind of deterioration performed poorly in detecting other kinds. For example, bleeding, a common form of deterioration on our mixed medical-surgical cardiac ward, was well detected by a model trained specifically for it, but no other model had reasonable performance; hemoglobin was, logically, a powerful predictor of ICU transfer for bleeding but not at all useful when myocardial ischemia was the problem; and coefficient of sample entropy, a detector of atrial fibrillation, was useful only for identifying patients transferred to the ICU for arrhythmia.

Here, we show large differences in signatures of illness and performance of statistical models in early identification of patients at risk of ICU transfer. The approach of Redfern et al (10) is typical of most efforts in clinical surveillance for early detection of subacute potentially catastrophic illnesses. We propose more precise methods: clinician review of events so that predictive models are more focused, addition of continuous cardiorespiratory monitoring, when it is available, to fill in the timeline between nurse visits and blood draws, and the use of predictive models that individually target the diagnoses that lead to ICU transfer in our patients.

Our results argue against a one-size-fits-all approach, no matter how Big the Data or how Deep the Learning. In this era of increasingly precise and personalized medicine, we propose “precision predictive analytics monitoring” as a more focused and clinically informed approach to early detection of subacute potentially catastrophic illnesses in the hospitalized patient.

Supplementary Material

Footnotes

Drs. Blackwell and Keim-Malpass contributed equally as first author.

Supplemental digital content is available for this article. Direct URL citations appear in the HTML and PDF versions of this article on the journal’s website (http://journals.lww.com/ccejournal).

Supported, in part, by a grant from the Frederick Thomas Advanced Medical Analytics Fund.

Dr. Moorman is Chief Medical Officer and shareholder and Dr. Clark is Chief Scientific Officer and shareholder in Advanced Medical Predictive Devices, Diagnostics, and Displays, Charlottesville, VA. The remaining authors have disclosed that they do not have any potential conflicts of interest.

REFERENCES

- 1.Escobar GJ, Gardner MN, Greene JD, et al. Risk-adjusting hospital mortality using a comprehensive electronic record in an integrated health care delivery system. Med Care. 2013;51:446–453. doi: 10.1097/MLR.0b013e3182881c8e. [DOI] [PubMed] [Google Scholar]

- 2.Rosenberg AL, Hofer TP, Hayward RA, et al. Who bounces back? Physiologic and other predictors of intensive care unit readmission. Crit Care Med. 2001;29:511–518. doi: 10.1097/00003246-200103000-00008. [DOI] [PubMed] [Google Scholar]

- 3.Delgado MK, Liu V, Pines JM, et al. Risk factors for unplanned transfer to intensive care within 24 hours of admission from the emergency department in an integrated healthcare system. J Hosp Med. 2013;8:13–19. doi: 10.1002/jhm.1979. [DOI] [PubMed] [Google Scholar]

- 4.Escobar GJ, Greene JD, Gardner MN, et al. Intra-hospital transfers to a higher level of care: Contribution to total hospital and intensive care unit (ICU) mortality and length of stay (LOS). J Hosp Med. 2011;6:74–80. doi: 10.1002/jhm.817. [DOI] [PubMed] [Google Scholar]

- 5.O’Callaghan DJ, Jayia P, Vaughan-Huxley E, et al. An observational study to determine the effect of delayed admission to the intensive care unit on patient outcome. Crit Care. 2012;16:R173. doi: 10.1186/cc11650. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Reese J, Deakyne SJ, Blanchard A, et al. Rate of preventable early unplanned intensive care unit transfer for direct admissions and emergency department admissions. Hosp Pediatr. 2015;5:27–34. doi: 10.1542/hpeds.2013-0102. [DOI] [PubMed] [Google Scholar]

- 7.Ginestra JC, Giannini HM, Schweickert WD, et al. Clinician perception of a machine learning-based early warning system designed to predict severe sepsis and septic shock. Crit Care Med. 2019;47:1477–1484. doi: 10.1097/CCM.0000000000003803. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.van Galen LS, Struik PW, Driesen BE, et al. Delayed recognition of deterioration of patients in general wards is mostly caused by human related monitoring failures: A root cause analysis of unplanned ICU admissions. PLoS One. 2016;11:e0161393. doi: 10.1371/journal.pone.0161393. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Redfern OC, Griffiths P, Maruotti A, et al. Missed Care Study Group. The association between nurse staffing levels and the timeliness of vital signs monitoring: A retrospective observational study in the UK. BMJ Open. 2019;9:e032157. doi: 10.1136/bmjopen-2019-032157. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Redfern OC, Pimentel MAF, Prytherch D, et al. Predicting in-hospital mortality and unanticipated admissions to the intensive care unit using routinely collected blood tests and vital signs: Development and validation of a multivariable model. Resuscitation. 2018;133:75–81. doi: 10.1016/j.resuscitation.2018.09.021. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.The Royal College of Physicians: The National Early Warning Score (NEWS) Thresholds and Triggers. 2017. Available at: https://www.rcplondon.ac.uk/projects/outputs/national-early-warning-score-news-2. Accessed April 27, 2020. [Google Scholar]

- 12.Smith ME, Chiovaro JC, O’Neil M, et al. Early warning system scores for clinical deterioration in hospitalized patients: A systematic review. Ann Am Thorac Soc. 2014;11:1454–1465. doi: 10.1513/AnnalsATS.201403-102OC. [DOI] [PubMed] [Google Scholar]

- 13.Churpek MM, Wendlandt B, Zadravecz FJ, et al. Association between intensive care unit transfer delay and hospital mortality: A multicenter investigation. J Hosp Med. 2016;11:757–762. doi: 10.1002/jhm.2630. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Churpek MM, Yuen TC, Park SY, et al. Derivation of a cardiac arrest prediction model using ward vital signs*. Crit Care Med. 2012;40:2102–2108. doi: 10.1097/CCM.0b013e318250aa5a. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Rothman MJ, Rothman SI, Beals J., 4th Development and validation of a continuous measure of patient condition using the electronic medical record. J Biomed Inform. 2013;46:837–848. doi: 10.1016/j.jbi.2013.06.011. [DOI] [PubMed] [Google Scholar]

- 16.Bittman J, Nijjar AP, Tam P, et al. Early warning scores to predict noncritical events overnight in hospitalized medical patients: A prospective case cohort study. J Patient Saf. 2017 Sep 12 doi: 10.1097/PTS.0000000000000292. [online ahead of print] [DOI] [PubMed] [Google Scholar]

- 17.Alam N, Hobbelink EL, van Tienhoven AJ, et al. The impact of the use of the Early Warning Score (EWS) on patient outcomes: A systematic review. Resuscitation. 2014;85:587–594. doi: 10.1016/j.resuscitation.2014.01.013. [DOI] [PubMed] [Google Scholar]

- 18.Bartkowiak B, Snyder AM, Benjamin A, et al. Validating the electronic cardiac arrest risk triage (eCART) score for risk stratification of surgical inpatients in the postoperative setting: Retrospective cohort study. Ann Surg. 2019;269:1059–1063. doi: 10.1097/SLA.0000000000002665. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Green M, Lander H, Snyder A, et al. Comparison of the between the flags calling criteria to the MEWS, NEWS and the electronic Cardiac Arrest Risk Triage (eCART) score for the identification of deteriorating ward patients. Resuscitation. 2018;123:86–91. doi: 10.1016/j.resuscitation.2017.10.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Rojas JC, Carey KA, Edelson DP, et al. Predicting intensive care unit readmission with machine learning using electronic health record data. Ann Am Thorac Soc. 2018;15:846–853. doi: 10.1513/AnnalsATS.201710-787OC. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Royston P, Altman DG, Sauerbrei W. Dichotomizing continuous predictors in multiple regression: A bad idea. Stat Med. 2006;25:127–141. doi: 10.1002/sim.2331. [DOI] [PubMed] [Google Scholar]

- 22.Wynants L, van Smeden M, McLernon DJ, et al. Topic Group ‘Evaluating diagnostic tests and prediction models’ of the STRATOS initiative. Three myths about risk thresholds for prediction models. BMC Med. 2019;17:192. doi: 10.1186/s12916-019-1425-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Cohen J, Criti F. The cost of dichotomization. Appl Psychol Meas. 1983;7:249–253. [Google Scholar]

- 24.Fedorov V, Mannino F, Zhang R. Consequences of dichotomization. Pharm Stat. 2009;8:50–61. doi: 10.1002/pst.331. [DOI] [PubMed] [Google Scholar]

- 25.Knaus WA, Zimmerman JE, Wagner DP, et al. APACHE-acute physiology and chronic health evaluation: A physiologically based classification system. Crit Care Med. 1981;9:591–597. doi: 10.1097/00003246-198108000-00008. [DOI] [PubMed] [Google Scholar]

- 26.Smith GB, Prytherch DR, Schmidt PE, et al. Review and performance evaluation of aggregate weighted ‘track and trigger’ systems. Resuscitation. 2008;77:170–179. doi: 10.1016/j.resuscitation.2007.12.004.

- 27.Keim-Malpass J, Kitzmiller RR, Skeeles-Worley A, et al. Advancing continuous predictive analytics monitoring: Moving from implementation to clinical action in a learning health system. Crit Care Nurs Clin North Am. 2018;30:273–287. doi: 10.1016/j.cnc.2018.02.009.

- 28.Moody GB, Mark RG, Zoccola A, et al. Derivation of respiratory signals from multi-lead ECGs. Comput Cardiol. 1985;12:113–116.

- 29.Moorman JR, Delos JB, Flower AA, et al. Cardiovascular oscillations at the bedside: Early diagnosis of neonatal sepsis using heart rate characteristics monitoring. Physiol Meas. 2011;32:1821–1832. doi: 10.1088/0967-3334/32/11/S08.

- 30.Bapoje SR, Gaudiani JL, Narayanan V, et al. Unplanned transfers to a medical intensive care unit: Causes and relationship to preventable errors in care. J Hosp Med. 2011;6:68–72. doi: 10.1002/jhm.812.

- 31.Cohen RI, Eichorn A, Motschwiller C, et al. Medical intensive care unit consults occurring within 48 hours of admission: A prospective study. J Crit Care. 2015;30:363–368. doi: 10.1016/j.jcrc.2014.11.001.

- 32.Hillman KM, Bristow PJ, Chey T, et al. Duration of life-threatening antecedents prior to intensive care admission. Intensive Care Med. 2002;28:1629–1634. doi: 10.1007/s00134-002-1496-y.

- 33.Moss TJ, Lake DE, Calland JF, et al. Signatures of subacute potentially catastrophic illness in the ICU: Model development and validation. Crit Care Med. 2016;44:1639–1648. doi: 10.1097/CCM.0000000000001738.

- 34.Moss TJ, Clark MT, Calland JF, et al. Cardiorespiratory dynamics measured from continuous ECG monitoring improves detection of deterioration in acute care patients: A retrospective cohort study. PLoS One. 2017;12:e0181448. doi: 10.1371/journal.pone.0181448.

- 35.Le Guen MP, Tobin AE, Reid D. Intensive care unit admission in patients following rapid response team activation: Call factors, patient characteristics and hospital outcomes. Anaesth Intensive Care. 2015;43:211–215. doi: 10.1177/0310057X1504300211.

- 36.Dahn CM, Manasco AT, Breaud AH, et al. A critical analysis of unplanned ICU transfer within 48 hours from ED admission as a quality measure. Am J Emerg Med. 2016;34:1505–1510. doi: 10.1016/j.ajem.2016.05.009.

- 37.Lake DE, Moorman JR. Accurate estimation of entropy in very short physiological time series: The problem of atrial fibrillation detection in implanted ventricular devices. Am J Physiol Heart Circ Physiol. 2011;300:H319–H325. doi: 10.1152/ajpheart.00561.2010.

- 38.Carrara M, Carozzi L, Moss TJ, et al. Classification of cardiac rhythm using heart rate dynamical measures: Validation in MIT-BIH databases. J Electrocardiol. 2015;48:943–946. doi: 10.1016/j.jelectrocard.2015.08.002.

- 39.Carrara M, Carozzi L, Moss TJ, et al. Heart rate dynamics distinguish among atrial fibrillation, normal sinus rhythm and sinus rhythm with frequent ectopy. Physiol Meas. 2015;36:1873–1888. doi: 10.1088/0967-3334/36/9/1873.

- 40.Pepe MS, Feng Z, Huang Y, et al. Integrating the predictiveness of a marker with its performance as a classifier. Am J Epidemiol. 2008;167:362–368. doi: 10.1093/aje/kwm305.

- 41.Collins GS, Reitsma JB, Altman DG, et al. TRIPOD Group. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): The TRIPOD statement. The TRIPOD Group. Circulation. 2015;131:211–219. doi: 10.1161/CIRCULATIONAHA.114.014508.

- 42.Lex A, Gehlenborg N, Strobelt H, et al. UpSet: Visualization of intersecting sets. IEEE Trans Vis Comput Graph. 2014;20:1983–1992. doi: 10.1109/TVCG.2014.2346248.

- 43.Seymour CW, Kennedy JN, Wang S, et al. Derivation, validation, and potential treatment implications of novel clinical phenotypes for sepsis. JAMA. 2019;321:2003–2017. doi: 10.1001/jama.2019.5791.

- 44.Lawler PR, Fan E. Heterogeneity and phenotypic stratification in acute respiratory distress syndrome. Lancet Respir Med. 2018;6:651–653. doi: 10.1016/S2213-2600(18)30287-X.

- 45.Augustin IML, Spruit MA, Houben-Wilke S, et al. The respiratory physiome: Clustering based on a comprehensive lung function assessment in patients with COPD. PLoS One. 2018;13:e0201593. doi: 10.1371/journal.pone.0201593.

- 46.Moss TJ, Lake DE, Moorman JR. Local dynamics of heart rate: Detection and prognostic implications. Physiol Meas. 2014;35:1929–1942. doi: 10.1088/0967-3334/35/10/1929.

- 47.Moorman JR, Carlo WA, Kattwinkel J, et al. Mortality reduction by heart rate characteristic monitoring in very low birth weight neonates: A randomized trial. J Pediatr. 2011;159:900–906.e1. doi: 10.1016/j.jpeds.2011.06.044.

- 48.Ruminski CM, Clark MT, Lake DE, et al. Impact of predictive analytics based on continuous cardiorespiratory monitoring in a surgical and trauma intensive care unit. J Clin Monit Comput. 2019;33:703–711. doi: 10.1007/s10877-018-0194-4.

- 49.Despins LA. Automated deterioration detection using electronic medical record data in intensive care unit patients: A systematic review. Comput Inform Nurs. 2018;36:323–330. doi: 10.1097/CIN.0000000000000430.

Associated Data
