Abstract

Background:

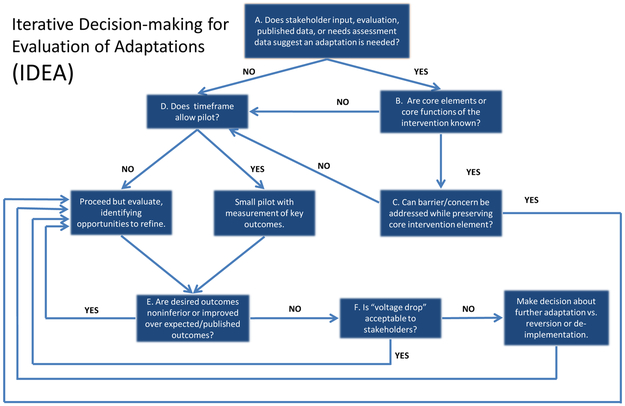

Evidence-based practices (EBPs) are frequently adapted to maximize outcomes while maintaining fidelity to core EBP elements. Many step-by-step frameworks for adapting EBPs have been developed, but these models may not account for common complexities in the adaptation process. In this paper we introduce the Iterative Decision-making for Evaluation of Adaptations (IDEA), a tool to guide adaptations that addresses these issues.

Framework Design and Use:

Adapting EBPs requires attending to key contingencies incorporated into the IDEA, including: the need for adaptations; fidelity to core EBP elements; the timeframe in which to make adaptations; the potential to collect pilot data; key clinical and implementation outcomes; and stakeholder viewpoints. We use two examples to illustrate application of the IDEA.

Conclusions:

The IDEA is a practical tool to guide EBP adaptation that incorporates important decision points and the dynamism of ongoing adaptation. Its use may help implementation scientists, clinicians, and administrators maximize EBP impact.

Keywords: adaptation, evidence-based practice, evidence-based healthcare, implementation, culture, fidelity, modification

Background

Implementation science aims to improve healthcare by maximizing the adoption, appropriate use, and sustainability of evidence-based practices (EBPs) in real-world clinical settings. In this context, there is ongoing debate regarding the role of modifications to EBPs. This debate also includes adaptations, defined as a planned, proactive type of modification. On the one hand, there is an understanding that EBPs must be delivered with some level of fidelity (i.e. in a manner that is consistent with the design or intent of the EBP; Eke, Neumann, Wilkes, & Jones, 2006; Kendall & Frank, 2018; McKleroy et al., 2006; Stirman et al., 2015). On the other hand, there is growing recognition that adaptations to EBPs are not only common (Aarons et al., 2012; Chambers & Norton, 2016; Wiltsey Stirman et al., 2013), but may be essential to maximizing clinical effectiveness in certain settings and populations (Chambers, Glasgow, & Stange, 2013; Iwelunmor et al., 2015; Marques et al., 2019; Stirman et al., 2012). For example, adaptations may be crucial to reducing health disparities, especially if the unmodified EBP would be ineffective or otherwise inappropriate for use with a historically underserved population (Bernal & Domenech Rodríguez, 2012; Cabassa & Baumann, 2013). Thus, adaptations to EBPs have gone from nuisances to be eliminated to important tools to be harnessed in the pursuit of effective healthcare.

With that said, fundamental uncertainties remain regarding how best to conceptualize adaptations in implementation science. In the literature to date, adaptation has commonly been described as a process or mechanism associated with successful implementation or sustainability (Stirman et al., 2012; Iwelunmor et al., 2016). Fostering appropriate adaptation, however, has also been identified as an implementation strategy (Aarons et al., 2012; Powell et al., 2015). Adaptability has also been described as a characteristic of an intervention that may be a determinant of implementation (Damschroder et al., 2009), as interventions that lend themselves to adaptation may be more likely to be adopted or sustained in differing contexts. This recognition has given rise to a number of modular EBPs that are, by design, easily adapted, at least in terms of specific clinical content (e.g. Bauer et al., 2016; Farchione et al., 2012). Other researchers have focused on identifying core functions that may be relevant across psychosocial EBPs, settings, or diagnoses (e.g. Kennedy & Barlow, 2018; Martin et al., 2018). Finally, it is possible to view adaptation as an implementation outcome, similar to the way that fidelity has been conceptualized (Proctor et al., 2011).

For our purposes in this manuscript, we adopt the view that adaptation of EBPs can be fruitfully viewed as an implementation strategy (Aarons et al., 2012; Powell et al., 2015). Through this lens, the practical question for implementation scientists and clinicians is how to select and make adaptations to EBPs that enhance their effectiveness. To that end, a fast-developing body of research has aimed to provide guidance for adapting EBPs (Bernal & Domenech Rodríguez, 2012; Bumbarger & Kerns, 2019; Ferrer-Wreder, Sundell, & Mansoory, 2012). A recent review suggested important similarities and differences across proposed frameworks for such adaptations (Escoffery et al., 2018). In deciding what components of an EBP require adaptation, some frameworks emphasized understanding and preserving core elements (e.g. Rolleri et al., 2014), whereas others prioritized improving the fit between the intervention and target population. In consolidating their review results, Escoffery and colleagues (2018) concluded that existing adaptation frameworks contained some combination of up to eleven distinct steps, including (but not limited to) selection of the EBP in question; consultation with experts and/or stakeholders; pilot testing of the modified EBP; and eventual full-scale implementation and testing.

Additional work related to cultural adaptations has emphasized the importance of specific steps in the adaptation process. For example, Cabassa and Baumann (2013) have noted the importance of incorporating input from diverse stakeholders including researchers, clients, clinicians, administrators, and community members. This input ideally takes the form of formative research methods (e.g. focus groups and in-depth qualitative interviews), incorporating questions about the EBP itself as well as the context in which it will be delivered. Furthermore, making cultural adaptations requires particular attention to the acceptability and feasibility of the adapted EBP, along with ecological validity and ongoing evaluation (Barrera Jr., Castro, Strycker, & Toobert, 2013).

In sum, existing models have outlined a series of steps for making adaptations to EBPs. These stage models, while useful, may by their nature struggle to incorporate the cyclical, interdependent nature of EBP implementation, and say little about the interactions among the different stages of the adaptation process itself. These interactions may be pivotal, however. For example, collecting pilot data may be crucial in the absence of stakeholder consensus regarding the need for a particular adaptation with a given population, but may be less important if such a consensus already exists. In the latter case, in fact, postponing the implementation of an adapted EBP while data on the effectiveness of these adaptations are collected might represent an unnecessary delay.

Given these complexities, a linear, phased approach to adaptation may not be feasible in the dynamic contexts into which EBPs are implemented (Chambers et al., 2013). Broad guidance to inform or document decision-making, rather than a prescribed set of steps, may be more appropriate and more responsive to the realities of the implementation process. We therefore set out to expand upon the existing literature on adaptations to EBPs by developing an adaptation decision-making framework that incorporates contingencies through a series of decision points. Although the framework currently takes the perspective of adaptations made by treatment developers, consideration of these contingencies will allow implementation scientists, administrators, and clinicians to tailor the adaptation process to the specific circumstances under which they are implementing a given EBP. By clearly stating the importance of stakeholders, we hope that other voices, including those of practitioners and of the people receiving the interventions, will be heard and considered during the decision-making process. The framework aims to support adaptations to EBPs made at a broader level; future research should examine how to support adaptations made day to day in treatment sessions. Below, we describe the key components of this framework, and discuss its potential utility for selecting, tracking, documenting, and evaluating adaptations to EBPs in healthcare.

Components of the IDEA

Our adaptation framework, entitled the Iterative Decision-making for Evaluation of Adaptations (IDEA), can be found in Figure 1. An initial assumption of the IDEA is that stakeholders have identified an EBP, and that the primary question is whether and how to adapt it for a given setting. In this section we describe our rationale for each decision point included in the framework, along with supporting literature.

Figure 1:

The Iterative Decision-making for Evaluation of Adaptations (IDEA)

A. Do clinical, stakeholder, or empirical data suggest that an adaptation to the EBP is needed?

The first decision point in the IDEA requires determining whether there are data indicating that adaptation to the EBP in question is needed for a given setting or population. The decision of whether to adapt may be made based on available evidence from published literature, evaluation data from other programs, the researchers’ own data, and stakeholders’ perspectives. If, for example, stakeholders make it clear that engagement will be poor without adaptation, then adaptation may be needed even in the absence of quantitative data on engagement or outcomes. Other factors that determine whether an intervention should be adapted include differences between the setting in which the intervention was originally tested and its new application in (a) the population served, (b) the target domain of the intervention, and (c) contextual factors (Bernal & Domenech Rodríguez, 2012; Cabassa & Baumann, 2013; Ferrer-Wreder et al., 2012; Lau, 2006).

Several existing frameworks provide guidance regarding how to approach each of these factors. For example, Aarons’ Dynamic Adaptation Process (DAP; Aarons et al., 2012) highlights a multi-step, stakeholder-driven process for determining the need for adaptations, and in turn for deciding what specific adaptations to make to a given EBP. Within the DAP, decisions about the adaptation are made by an implementation resource team comprising a variety of stakeholders, with attention to preserving the effective elements of the intervention. Intervention Mapping may also be useful in approaching this step, as highlighted in the IM Adapt systematic planning process (Highfield et al., 2015).

In the absence of initial data, theory, or stakeholder input suggesting the need for adaptation in a given setting or population, we propose piloting the EBP without adaptation, as the adaptation process itself can be time-consuming and may inadvertently reduce the effectiveness of the EBP if it is not undertaken in response to a perceived need. In that case, implementing the EBP as originally designed with a small segment of the population may be helpful if time permits. Ideally, data from this initial pilot can in turn inform the possible need for future adaptations. If there is at least moderately compelling evidence of the need for adaptation, then consideration of the core elements or functions of the EBP is required, as described immediately below.

B. Are core elements or core functions of the EBP known?

Consideration of core elements of the EBP is crucial for determining which, if any, of its components should be considered “off limits” for adaptation (Anyon et al., 2019). Ideally, fidelity to core elements will be maintained, with adaptations reserved for peripheral elements that are theoretically not crucial to the EBP’s beneficial effects. The CPCRN’s Adaptation Planning Tool (http://cpcrn.org/wp-content/uploads/2015/02/6bAdaptationPlanningTool.doc) suggests that there is a continuum for changes that may be made to the core elements (content, delivery mechanisms, or methods) of an intervention: ‘red light’ indicates changes that may be fidelity inconsistent and should therefore be avoided; ‘yellow light’ indicates changes that should be made cautiously; and ‘green light’ indicates changes that are fidelity consistent. Various adaptation coding systems include considerations of fidelity in valence ratings (e.g. Bishop et al., 2014; Wiltsey-Stirman, Baumann, & Miller, 2019).

In fact, consensus has been building that core elements should be conceptualized in terms of core functions that can take on varying forms (Kirk et al., 2018; Mittman, 2018). For instance, if education is a necessary component of an EBP, it can take the form of written materials, information delivered by peers, training by health workers, or a video. Similarly, if the function of an activity in a parenting intervention is to increase the amount of praise that a child receives, that function could be served by practicing through a game or a role play rather than by reading a handout and assigning practice at home.

Particularly if adaptation will affect an element of an EBP that is considered essential, considering alternative forms or means by which that element can be conveyed may make feasible a fidelity-consistent adaptation that preserves the core function. At such times, adaptation of this nature can lead to refinements of the intervention. For example, cognitive therapy originally employed written worksheets to allow individuals to practice the cognitive restructuring skills they were learning. Written materials can be a barrier for individuals who read and write at lower levels, experience certain forms of disability, or lack access to printed materials. An adaptation that evolved, and eventually became an accepted form of the intervention, was the mnemonic device “catch, check, change,” which allows individuals to remember and walk through a cognitive restructuring process without the need for writing (Creed, Reisweber, & Beck, 2011).

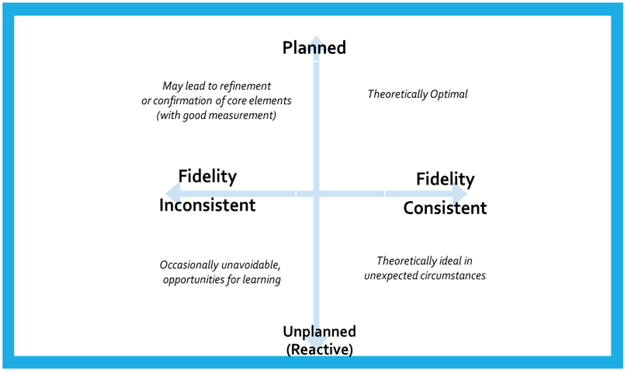

Figure 2 illustrates different types of adaptations that may occur in relation to their timing and their degree of fidelity to core treatment elements. When fidelity-inconsistent adaptations prove unavoidable, measurement is particularly important. Using careful data collection, we may occasionally learn that aspects of interventions that are thought to be necessary for effectiveness may not be as central as previously assumed (at least for certain settings or populations). For example, for an intervention that aims to improve diabetes outcomes, a module on medication adherence may be pivotal for some individuals or populations but unnecessary for others. Chambers and Norton (2016) advocate for a measurement system that allows conclusions about core elements to be drawn for specific populations. This approach is also consistent with the recent movement toward precision medicine (that is, the use of large datasets, or “big data,” to guide medical care for individuals), in the sense that careful measurement of the effectiveness of adapted EBPs for different populations can point toward which EBP elements or functions are truly required to maximize outcomes.

Figure 2:

2×2 grid demonstrating interplay between adaptation and fidelity

Ultimately, decisions about what types of adaptations are fidelity-consistent (preserve core elements or functions) or fidelity-inconsistent (change or remove core elements or functions) must be made in consultation with the existing literature, input from the intervention developer, guidance from other individuals with expertise in the intervention or target outcomes, and any available evaluation data. In the absence of data or theory regarding core elements or functions of the EBP, we ultimately recommend caution in making adaptations, as adaptations may inadvertently remove or dilute the very elements that are most crucial to its effectiveness. In that situation, a pilot study may be particularly useful (Step D below). If core elements or functions are known, however, then the next decision point must first be addressed.

C. Can limitations of the EBP for this setting or population be addressed while preserving core elements or functions?

If core elements or functions of the EBP are known, then they need to be considered alongside the concerns or barriers raised in Step A above to determine whether a proposed adaptation can address these concerns while maintaining fidelity to the EBP’s core elements or functions. Ideally, of course, adaptations will address key stakeholder concerns or issues uncovered in existing data while leaving core elements or functions intact (i.e. maintaining fidelity). If that is not the case, however (that is, if adaptations are deemed necessary for implementation despite not preserving core elements or functions), then we recommend particularly careful data collection. For example, consider the cognitive therapy worksheets described briefly in the previous section (Step B above). Although such worksheets were at one time considered a core element of cognitive behavioral therapy (CBT), they simply were not feasible for certain populations; careful data collection led to the understanding that the core function of such worksheets (i.e. cognitive restructuring) could be achieved in other ways (Creed, Reisweber, & Beck, 2011). In another example, an evidence-based parenting intervention had a manual with a minimum of 17 sessions addressing five of its core elements. While the length of this intervention was feasible in some contexts, in others it was not (e.g., Baumann et al., 2019). After conversations with the treatment developers about the feasibility of the intervention, the manual was shortened to ten sessions for a study. In a different context, only two of the core elements of the same intervention are being delivered in community settings. In this case, the treatment developer, community providers, and research team decided which core elements could “stand alone” in a two-session day for prevention purposes.

More broadly, gathering data to assess the impact of adaptations is important for informing decisions about alternative adaptations or strategies should the adapted EBP prove less than fully successful, before a large portion of the population participates in the intervention with suboptimal benefits. This data collection can take place within the context of a small pilot or a broader rollout, as described below.

D. Does the timeframe of the proposed rollout of the EBP allow for a pilot study that includes proposed adaptations?

At several points throughout the adaptation process we recommend collecting pilot data to determine whether the unadapted EBP (from Step A above) or adapted EBP (Steps B and C) is working as intended. We recognize, however, that not all clinical settings or policy contexts can accommodate the time needed to conduct a thorough pilot evaluation. If pilot studies can be conducted, then we strongly recommend them. If not, then it is crucial to embed whatever data collection is feasible into the actual rollout of the EBP in question.

Evaluation of implementation projects is a central challenge. Ongoing, cyclical tests of change, accompanied by careful evaluation, are strongly recommended regardless of the setting. Ideally, evaluation in the context of adaptations will include two components not typically present in traditional clinical studies: (a) clear description of what adaptations have been made to the EBP, and (b) evaluation of the implementation process itself. We describe each of these components below.

Describing Adaptations.

In the absence of a clear description of the adaptation(s) being made to an EBP in a given setting, it will be virtually impossible to determine the extent to which that adaptation was successful. A framework for cataloguing adaptations, the Framework for Reporting Adaptations and Modifications – Expanded (FRAME; Wiltsey-Stirman et al., 2019), was recently developed, building on earlier work (Stirman, Miller, Toder, & Calloway, 2013). The FRAME can be used to describe the nature of various types of EBP adaptations, including: (1) when and how in the implementation process the adaptation was made, (2) the extent to which the adaptation was planned vs. reactive, (3) decision-makers involved in determining the adaptation, (4) what specifically is adapted, (5) the level of delivery at which the adaptation is made, (6) the type or nature of adapted material, (7) consistency of the adaptation with fidelity considerations, and (8) the rationale behind the adaptation, including (a) the purpose for the adaptation and (b) relevant contextual factors.
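Because the FRAME dimensions listed above are fixed, each adaptation can be logged as one structured record, which makes adaptations easier to tally and compare across sites. The sketch below is a minimal illustration in Python under stated assumptions: the `AdaptationRecord` class, its field names, and the `Timing` and `FidelityConsistency` values are hypothetical and do not reproduce the FRAME's official coding options.

```python
from dataclasses import dataclass, field
from enum import Enum


class Timing(Enum):
    # Hypothetical, simplified labels for when the adaptation was made (dimension 1)
    PRE_IMPLEMENTATION = "pre-implementation"
    IMPLEMENTATION = "implementation"
    SUSTAINMENT = "sustainment"


class FidelityConsistency(Enum):
    # Relation of the adaptation to core elements or functions (dimension 7)
    CONSISTENT = "fidelity consistent"
    INCONSISTENT = "fidelity inconsistent"
    UNKNOWN = "unknown"


@dataclass
class AdaptationRecord:
    """One adaptation, described along the FRAME dimensions (illustrative fields)."""
    timing: Timing                    # (1) when in the implementation process
    planned: bool                     # (2) planned/proactive vs. reactive
    decision_makers: list[str]        # (3) who decided
    what_modified: str                # (4) e.g. "content"
    delivery_level: str               # (5) e.g. "clinic/unit"
    nature: list[str]                 # (6) e.g. "shortening/condensing"
    fidelity: FidelityConsistency     # (7) fidelity consistency
    goal: str                         # (8a) purpose of the adaptation
    contextual_reasons: list[str] = field(default_factory=list)  # (8b)

    def needs_close_monitoring(self) -> bool:
        # Fidelity-inconsistent or unclassified adaptations call for
        # particularly careful data collection.
        return self.fidelity is not FidelityConsistency.CONSISTENT


# Usage: a planned, pre-implementation content change made to improve feasibility
example = AdaptationRecord(
    timing=Timing.PRE_IMPLEMENTATION,
    planned=True,
    decision_makers=["researchers", "program leaders"],
    what_modified="content",
    delivery_level="clinic/unit",
    nature=["removing/skipping elements"],
    fidelity=FidelityConsistency.CONSISTENT,
    goal="improve feasibility",
    contextual_reasons=["available resources"],
)
```

A flat record like this trades nuance for comparability; free-text fields such as `goal` can still hold the narrative detail that stakeholders supply.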

Evaluating the implementation process itself.

If an EBP does not demonstrate anticipated clinical benefits in a given setting, it may not at first be apparent whether the fault lies with the EBP itself (including its adapted components) or with efforts to implement that EBP. For example, if clinicians are not appropriately trained in the use of a psychotherapy treatment manual that has been adapted to fit their clinical context, then the clinical effects of that manual may appear unexpectedly low. In that situation, it would be erroneous to simply conclude that the adaptation itself was the source of the poor performance of the treatment manual. Recent evidence suggests that both fidelity and adaptation may contribute to outcomes (Marques et al., 2019). Alternatively, an adaptation may not “hit the mark” and address the most central barriers to effectiveness. In other circumstances, an intervention may in fact not be as effective for some segments of the population as it is with others, and an alternative strategy may be warranted. Thus, careful evaluation of the implementation process itself is also crucial, and can be guided by any of several implementation evaluation frameworks (e.g. RE-AIM; Glasgow, Vogt, & Boles, 1999).

Assuming that adaptations have been clearly described, and that the adapted EBP was in fact implemented as intended, then attention can be turned to the clinical effectiveness of the adapted EBP, as described in the next section.

E. To what extent is the adapted EBP successful?

The definition of “successful” in this case (referring now to the clinical effectiveness of the adapted EBP, rather than the success of the strategy used to implement it) is likely to vary widely depending on the context. In some situations, success of the EBP may be defined in relation to previous data on its effectiveness in other clinical contexts, such as whether pre-post effect sizes on standardized self-report assessments fall within the range found in the original research or in other clinical settings, or whether individuals experience other desired outcomes at rates comparable to those in previous evaluations. In other situations, success of the EBP may also be defined in terms of stakeholder preferences, policy mandates, feasibility, acceptability, consumer engagement, or other clinical metrics.

Regardless of the specific metric for success chosen, if the adapted EBP is deemed successful, then it may be retained in that setting or population. We nonetheless advocate for continued data collection (connecting to the box in Figure 1 labeled “Proceed but evaluate”), as more comprehensive clinical data, updated research findings, or changes in stakeholders’ perspectives may suggest opportunities for refinement and further adaptation. In some cases, however, the EBP may fail to achieve the results expected based on previous research evidence, an outcome termed ‘voltage drop’ (Kilbourne, Neumann, Pincus, Bauer, & Stall, 2007). If voltage drop occurs, then additional questions must be considered, as described next.
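When success is defined against prior evidence, as in the first option described above, the comparison with earlier findings can be made explicit. The sketch below assumes a symptom measure on which lower scores are better, uses one common pre-post convention (mean change divided by the standard deviation of baseline scores), and labels any effect below a benchmark range as voltage drop. The function names, the scores, and the 0.8 to 1.5 benchmark range are invented for illustration and are not drawn from any study.

```python
from statistics import mean, stdev


def pre_post_effect_size(pre: list[float], post: list[float]) -> float:
    """Standardized pre-post change: mean improvement / SD of baseline scores.

    Assumes lower scores are better, so a positive value means improvement.
    This is one of several pre-post conventions, chosen here for simplicity.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need paired scores for at least two individuals")
    sd = stdev(pre)
    if sd == 0:
        raise ValueError("baseline scores have no variability")
    return mean(a - b for a, b in zip(pre, post)) / sd


def classify_against_benchmark(effect: float, low: float, high: float) -> str:
    """Compare an observed effect with the range reported in earlier research."""
    if effect < low:
        return "voltage drop"        # below expectations: consider Step F
    if effect > high:
        return "above benchmark"
    return "within benchmark"        # retain, but keep evaluating


# Usage with made-up scores and a hypothetical benchmark range
effect = pre_post_effect_size([60, 55, 70, 65, 50], [45, 50, 52, 55, 48])
status = classify_against_benchmark(effect, low=0.8, high=1.5)  # "within benchmark" (effect is about 1.26)
```

A single point estimate from a small sample is noisy, so in practice a confidence interval around the effect would be a more defensible input to the stakeholder discussion in Step F than a bare threshold.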

F. Is “voltage drop” acceptable to stakeholders?

If voltage drop occurs, at least two issues should be considered when deciding whether to stop, further adapt, or continue to evaluate the adapted intervention. First, consider whether further data are needed, and whether the drop in effectiveness is part of the adjustment of the new intervention to the context. For example, in scale-up studies, practitioners may still be learning to implement the intervention, a “work thought struggle” process that requires time and support to enhance the effectiveness of the intervention (Askeland et al., 2019; Forgatch & DeGarmo, 2011; Johnson et al., 2019; Sigmarsdóttir et al., 2019). Second, it is also crucial to determine whether key stakeholders are willing to continue the use of the less-than-fully-successful adapted EBP. From a public health perspective, widespread implementation of somewhat less effective interventions may be preferable to less accessible but more effective interventions, or to even less effective usual care (Martin, Murray, Darnell, & Dorsey, 2018). In cases in which (a) there are few data to point toward further refinement of the EBP, (b) there are no readily available alternatives to the adapted EBP, and/or (c) switching to a new intervention entirely would be impractical, the adapted EBP may represent the best option despite modest voltage drop. Opportunities to learn and refine during implementation may inform further adaptation.

There are some instances, however, in which voltage drop is unacceptable to stakeholders, or, even if acceptable to stakeholders, the data may indicate more harm than benefit from the adapted EBP. In these cases, de-implementation of the adapted EBP, and implementation of alternative strategies, may need to be considered (Helfrich, Hartmann, Parikh, & Au, 2019; McKay, Morshed, Brownson, Proctor, & Prusaczyk, 2018; Norton, Kennedy, & Chambers, 2017). Regardless of whether stakeholders further adapt the EBP, revert to the unadapted EBP, or adopt a new clinical intervention entirely, we recommend ongoing evaluation to identify opportunities for improvement.
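To make the branching among decision points A through F concrete, they can be sketched as code. This is our own simplified reading of the text above, not a reproduction of Figure 1: the yes/no inputs, the `Situation`, `plan`, and `next_step` names, and the action labels are all hypothetical, and real decisions rest on judgment and stakeholder input that no function captures. Because the IDEA is iterative, the result of `next_step` would feed into another pass through `plan`.

```python
from dataclasses import dataclass


@dataclass
class Situation:
    """Hypothetical yes/no answers to decision points A-D."""
    adaptation_needed: bool   # A: do data suggest adaptation is needed?
    core_known: bool          # B: are core elements or functions known?
    limits_addressable: bool  # C: can limitations be addressed while preserving them?
    pilot_feasible: bool      # D: does the timeframe allow a pilot?


def plan(s: Situation) -> list[str]:
    """Recommended actions for one pass through decision points A-D."""
    if not s.adaptation_needed:
        # A = no: try the EBP as designed and let data reveal any need to adapt
        steps = ["deliver EBP without adaptation"]
    elif not s.core_known:
        # B = no: adapt with caution, since core elements could be diluted
        steps = ["adapt cautiously (core elements/functions unknown)"]
    elif s.limits_addressable:
        # C = yes
        steps = ["make fidelity-consistent adaptation"]
    else:
        # C = no: adapt anyway, but measure carefully
        steps = ["make fidelity-inconsistent adaptation with careful data collection"]
    # D: pilot if time allows; otherwise build evaluation into the rollout
    steps.append("pilot study" if s.pilot_feasible else "embed data collection in rollout")
    return steps


def next_step(successful: bool, drop_acceptable: bool = False, net_harm: bool = False) -> str:
    """Decision points E-F, once outcome data are available."""
    if successful:
        return "retain; proceed but evaluate"                        # E = yes
    if drop_acceptable and not net_harm:
        return "continue despite voltage drop; refine"               # F = yes
    return "further adapt, revert, or de-implement; keep evaluating"  # F = no
```

Keeping each input as an explicit boolean makes every branch traceable to a lettered decision point, at the cost of hiding the deliberation that produces each answer.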

Application of the IDEA to a Recent Implementation Trial

In this section we demonstrate application of the IDEA to the Behavioral Health Interdisciplinary Program (BHIP) Enhancement Project. The project was jointly funded as research and quality improvement (Bauer et al., 2015; Bauer, Miller, et al., 2019; Bauer, Weaver, et al., 2019) by the US Department of Veterans Affairs (VA). The overarching goal of the BHIP Enhancement Project was to align care processes with the collaborative care model (CCM; Wagner, Austin, & Von Korff, 1996) in VA outpatient mental health teams, known as BHIPs, that consist of about 6-8 full-time clinical staff. The CCM, rather than representing a specific psychosocial treatment or medication regimen, is an evidence-based way to structure care for chronic health conditions. It incorporates six elements in the service of more continuous, anticipatory, and evidence-based care: work role redesign to support such care; enhanced patient self-management support; provider decision support; use of clinical information systems to track panel-level outcomes; linkage to community resources; and the support of health care leadership (Bodenheimer, Wagner, & Grumbach, 2002a, 2002b). Thus, for this project, the CCM was considered the EBP that was potentially in need of adaptation for the BHIP setting.

A. Do data suggest that an adaptation to the EBP is needed?

Findings from two meta-analyses (Miller et al., 2013; Woltmann et al., 2012) suggested that the CCM itself represented an effective way to structure mental health care in outpatient settings. However, results from these reviews, combined with stakeholder feedback from VA’s Office of Mental Health and Suicide Prevention, suggested that the CCM as traditionally delivered in randomized controlled trials would need to be adapted for BHIP-based clinical practice. First, many trials hired a dedicated care manager to engage in care coordination tasks such as administering phone-based symptom assessments, conducting outreach calls after treatment sessions, and maintaining a clinical registry (particularly relevant to the CCM elements of work role redesign and clinical information systems). Given resource constraints in many VA mental health clinics, however, it was unlikely that BHIP teams would be able to dedicate a staff member to serve this role in isolation. Instead, each BHIP team would need to consider how to accomplish the goals of these CCM elements without hiring additional staff. More broadly, stakeholder feedback suggested that adoption of the CCM would need to be highly attentive to local needs, priorities, and the resources available within BHIPs. Thus, a modular approach, in which each BHIP team considered every CCM element but prioritized the element(s) deemed most useful for improving its care practices, was seen as essential for successful CCM implementation. Using the FRAME (Wiltsey-Stirman et al., 2019), these adaptations would therefore be classified in the following ways:

When did adaptation occur? Pre-implementation

Were adaptations planned? Yes

Who participated in the decision to adapt? Researchers, with input from program leaders in the form of VA’s Office of Mental Health and Suicide Prevention

What was the goal? To improve feasibility

What was modified? Content

At what level of delivery was the adaptation made? Clinic/unit level, in that each BHIP team would be responsible for deciding how to prioritize the CCM elements, specifically in the absence of a dedicated care manager

What is the nature of the content modification? The nature would be determined by each individual site, but potential options could include shortening/condensing or lengthening/extending (depending on the time dedicated to each CCM element) and removing/skipping elements (given the absence of a care manager)

Reasons: Competing demands/mandates (for prioritizing some CCM elements more heavily than others); available resources (for absence of care manager role)

B, C: Are core elements or core functions of the EBP known? Can barriers be addressed while preserving core intervention elements?

A literature review (Miller et al., 2013) suggested that no single CCM element was essential, or superfluous, to the usefulness of CCM-based care. The study team therefore concluded that the modular approach described immediately above was reasonable (i.e. that requiring BHIP teams to consider each CCM element, but to focus on the element(s) deemed of highest importance, was likely to preserve the core of the CCM). Within the context of the FRAME (Wiltsey-Stirman et al., 2019), these changes were therefore considered fidelity consistent. A pilot study, however, was deemed necessary to determine whether this adapted approach to the CCM would be effective in the BHIP setting.

D. Does the timeframe of the proposed rollout of the EBP allow for a pilot study that includes proposed adaptations?

The study team sought, and received, pilot funding to implement the adapted CCM with one BHIP team (VA QUERI RRP #13-237). This study was conducted in 2013-2014.

E, F. To what extent is the adapted EBP successful? Is “voltage drop” acceptable to stakeholders?

Qualitative findings from the pilot study suggested that the adapted CCM was generally feasible and acceptable to the BHIP team members who participated. These results also suggested, however, that additional structure for the adapted CCM would be helpful in pursuing a broader rollout to more fully meet system needs. Accordingly, after completing the pilot study, the study team developed the BHIP-CCM Enhancement Guide, a workbook meant to guide BHIP teams through discussion of 27 clinical processes that could be aligned more closely with the six CCM elements.

The BHIP-CCM Enhancement Guide was then used to implement the adapted CCM in nine additional BHIP teams in the context of a larger implementation trial (VA QUERI QUE #15-289; Bauer et al., 2015; Bauer, Miller, et al., 2019; Bauer, Weaver, et al., 2019). That study demonstrated improvements in hospitalization rates for Veterans treated by CCM-enhanced teams, as well as improved mental health-related quality of life for patients with three or more mental health diagnoses.

At this stage, further rollouts of CCM-based BHIP care in VA are being considered (US Department of Veterans Affairs, 2019). Results from the implementation trial have, in turn, suggested further adaptations to increase both the sustainability of CCM-based care within BHIP teams after implementation support ends and its spread to additional BHIP teams within each VA medical center.

Hypothetical Application of the IDEA in a Non-Research Context

Adaptations in the BHIP-CCM Enhancement Project, described above, occurred primarily at the programmatic level. Below, we describe the application of the IDEA to the implementation of trauma-focused psychotherapy in a community clinic. Although hypothetical, this example is based on an amalgam of implementation projects in similar settings and is meant to illustrate how the IDEA can be applied in such cases.

A. Do clinical, stakeholder, or empirical data suggest that an adaptation to the EBP is needed?

Administrators in a community mental health agency, in consultation with their therapists, decided to implement a trauma-focused psychotherapy because of high rates of trauma exposure and PTSD among the population the agency serves. After attending a training and reviewing the manual and materials with their consumer advisory board, the therapists expressed concern that some of the terminology could carry negative connotations in the culture of their local population. They also worried that other terms were jargon that their clients would not find relatable, and that some of the clinical worksheets were too complex. Finally, the language and concepts of the materials needed to be translated into the language spoken by many of their clients.

B, C. Are core elements or core functions of the EBP known? Can barriers be addressed while preserving core intervention elements?

The therapists sought consultation from the trainer, who clarified the core functions and goals of the activities in question. Together, they revised the terminology to make it less “jargon-y” and to reduce the risk that key concepts would be misinterpreted. They also discussed ways to simplify the worksheets while preserving their core functions (e.g., supporting cognitive restructuring) and made the agreed-upon changes. The materials were then translated. These changes were brought back to the consumer advisory board, whose additional suggestions regarding metaphors and the clarity and simplicity of the worksheets were incorporated.

Using the FRAME (Wiltsey-Stirman et al., 2019), these adaptations would be classified as follows:

- When did adaptation occur? Pre-implementation
- Were adaptations planned? Yes
- Who participated in the decision to adapt? Therapists, consumer advisory board, expert trainer, and administrators (coalition of stakeholders)
- What was the goal? To improve fit, to address cultural factors
- What was modified? Content
- At what level of delivery was the adaptation made? Clinic level
- What is the nature of the content modification? Tailoring, changing materials
- Reasons: Recipient ethnicity, language, and literacy levels

D. Does the timeframe of the proposed rollout of the EBP allow for a pilot study that includes proposed adaptations?

Because the adaptations did not alter the core functions of the intervention, and because the consultation phase of the training allowed the therapists to try the adapted protocol with some of their clients, the therapists used the adapted materials during consultation and adjusted them as needed with their local consultant. They examined engagement and effectiveness using attendance data, client feedback, and weekly assessments.

E, F. To what extent is the adapted EBP successful? Is “voltage drop” acceptable to stakeholders?

The changes were well received, and evaluation of the first 10 cases suggested that outcomes were comparable in magnitude to those found in effectiveness studies and that dropout was low. However, the therapists provided additional feedback based on their experiences delivering the therapy and on their clients’ reactions to the material. As in Steps B and C, the therapists consulted with the trainer to determine whether their suggested changes (e.g., more culturally relevant examples and metaphors) could be made while preserving the intervention’s core functions. The agency then integrated these additional adaptations into the materials. It did not conduct a formal pilot, but continued to monitor engagement and outcomes to ensure that they were not compromised.

Conclusions

In this paper we introduce the Iterative Decision-making for Evaluation of Adaptations (IDEA), a pragmatic decision guide for adapting an EBP to contexts and circumstances that differ from those in which it was originally developed and tested. In contrast to adaptation frameworks that lay out a linear series of steps, the IDEA is designed as a recursive series of decision points that reflects the non-linear and dynamic contexts in which interventions are implemented, scaled up, and sustained. In conjunction with reporting frameworks such as the FRAME (Wiltsey-Stirman et al., 2019), it offers a way to consider and document the process of adaptation itself. We also illustrate how the IDEA can be applied to implementation projects, using the BHIP-CCM Enhancement Project (Bauer, Miller, et al., 2019) and a hypothetical community-based implementation of trauma-focused psychotherapy.

Maximizing the utility of the IDEA will require thorough evaluation of the success of adapted EBPs, which in turn is likely to require mixed quantitative and qualitative methods (Bumbarger & Kerns, 2019). As detailed in other work, quality improvement methodologies, benchmarking strategies, qualitative analyses, or mixed-methods and rapid quantitative and qualitative approaches may be appropriate for evaluation, depending on available resources (cf. Baumann, Cabassa, & Wiltsey Stirman, 2017; Bumbarger & Kerns, 2019). Qualitative approaches and clinical data (e.g., appointments kept) may allow assessment of factors such as satisfaction, whereas benchmarking strategies may be most appropriate for assessing impact on health outcomes.

Although our BHIP-CCM example illustrates the process of making decisions about adaptation, it is based on a funded trial and represents a “best case” that will not always be feasible outside the context of research. When funds are not available, the pilot and evaluation may need to be scaled back. A related limitation of the IDEA is that, while it emphasizes the importance of evaluation and piloting, the methods that can be employed during implementation in many contexts may not be sufficient to determine the impact of an adaptation with confidence. Efforts to pool information about adaptations to similar interventions may more rapidly expand our knowledge base about the types of adaptations that are likely to support implementation goals (Chambers & Norton, 2016). It is also important to recognize that adaptations may fail to compensate for key differences that are inherent in some contexts, and it is essential to distinguish contextual characteristics that contribute to poor fit or voltage drop from the success or failure of the attempted adaptation itself. When sufficient data are available, baseline target moderation (BTM) and baseline target moderated mediation (BTMM) may be important tools for addressing this limitation (Howe et al., 2019). However, insofar as the IDEA can serve as a heuristic to guide implementation outside a comprehensive research infrastructure, it may be more useful to stakeholders who need practical guidance for working through and documenting the adaptation process.

We acknowledge that adaptation decisions (and, indeed, implementation science decisions more broadly) are rarely clear-cut. Despite its iterative approach, the binary nature of the IDEA (i.e. its reliance on yes/no decision points) is a limitation in real-world clinical settings. It is possible, even likely, that different stakeholders will hold different views at each decision point. For example, frontline clinicians may favor shortening or simplifying an EBP to accommodate busy caseloads; patients may want modifications that incorporate valued cultural or religious viewpoints; researchers may be wary of adaptations that threaten “their” EBP or a study’s internal validity; and administrators may worry that a pilot phase will delay the rollout of a much-needed intervention. Ultimately, the extent to which the IDEA is useful for a given project will depend on the ability of stakeholders to reach consensus in the face of conflicting priorities and viewpoints. However, a clear decision process, combined with an emphasis on preserving core functions and rapidly integrating practice-level findings, may facilitate consensus and collaboration.

Furthermore, application of the IDEA relies heavily on the accuracy and relevance of the data used to proceed along each decision path. The IDEA is therefore intended to help stakeholders focus on key questions (e.g., What data suggest we should adapt this EBP? Is a pilot study warranted?), the answers to which will depend on the EBP, clinical setting, personnel, and resources available. For the same reason, the IDEA may be less useful for “on-the-fly” or impromptu modifications made by individual clinicians while face-to-face with patients, although with robust infrastructure for capturing and interpreting clinical data, more individualized adaptation and evaluation may also be feasible.

In sum, the IDEA is meant as a practical guide for conceptualizing and documenting adaptations of EBPs to new clinical contexts. Its use may help move the field forward by incorporating important decision points that acknowledge the complexity of the adaptation process itself. It has been designed to help implementation scientists and clinical decision-makers balance the need for adaptation with the need for fidelity in order to maximize EBP impact. We hope that future work, supported by careful documentation and practical, user-friendly data collection and synthesis, will allow for comprehensive evaluation of the framework.

Acknowledgments:

We would like to thank Alicia Williamson for her assistance in structuring and formatting Figure 1. Funding sources for this work include NIMH R01 MH 106506 (PI: Stirman) and QUERI QUE 15-289 (PI: Bauer; Co-I: Miller). We would also like to acknowledge the Implementation Research Institute (IRI; NIMH 5R25MH08091607), of which Dr. Miller is a current fellow, Dr. Wiltsey-Stirman is a past fellow, and Dr. Baumann is current faculty.

Footnotes

Conflict of Interest Statement: All authors indicate that they have no competing interests.

Contributor Information

Christopher J. Miller, VA Boston Healthcare System, Center for Healthcare Organization and Implementation Research (CHOIR), Harvard Medical School, Department of Psychiatry.

Shannon Wiltsey-Stirman, VA Palo Alto Healthcare System, National Center for PTSD, Dissemination and Training Division, Stanford University, Department of Psychiatry and Behavioral Sciences.

Ana A. Baumann, Washington University in St. Louis.

References

- Aarons GA, Green AE, Palinkas LA, Self-Brown S, Whitaker DJ, Lutzker JR, … Chaffin MJ (2012). Dynamic adaptation process to implement an evidence-based child maltreatment intervention. Implementation Science, 7(1), 32.

- Anyon Y, Roscoe J, Bender K, Kennedy H, Dechants J, Begun S, & Gallager C (2019). Reconciling Adaptation and Fidelity: Implications for Scaling Up High Quality Youth Programs. Journal of Primary Prevention, 40(1), 35–49.

- Askeland E, Forgatch MS, Apeland A, Reer M, & Grønlie AA (2019). Scaling up an empirically supported intervention with long-term outcomes: the nationwide implementation of Generation PMTO in Norway. Prevention Science, 1–11.

- Barrera M Jr, Castro FG, Strycker LA, & Toobert DJ (2013). Cultural adaptations of behavioral health interventions: A progress report. Journal of Consulting and Clinical Psychology, 81(2), 196.

- Bauer MS, Krawczyk L, Miller CJ, Abel E, Osser DN, Franz A, … Godleski LJT (2016). Team-based telecare for bipolar disorder. Telemedicine and eHealth, 22(10), 855–864.

- Bauer MS, Miller C, Kim B, Lew R, Weaver K, Coldwell C, … Stolzmann K (2015). Partnering with health system operations leadership to develop a controlled implementation trial. Implementation Science, 11(1), 22.

- Bauer MS, Miller CJ, Kim B, Lew R, Stolzmann K, Sullivan J, … Connolly S (2019). Effectiveness of Implementing a Collaborative Chronic Care Model for Clinician Teams on Patient Outcomes and Health Status in Mental Health: A Randomized Clinical Trial. JAMA Network Open, 2(3), e190230–e190230.

- Bauer MS, Weaver K, Kim B, Miller CJ, Lew R, Stolzmann K, … Elwy AR (2019). The Collaborative Chronic Care Model for mental health conditions from evidence synthesis to policy impact to scale-up and spread. Medical Care, 57, S221–S227.

- Baumann A, Cabassa LJ, & Wiltsey Stirman S (2017). Adaptation in dissemination and implementation science. Dissemination and Implementation Research in Health: Translating Science to Practice, 2, 286–300.

- Baumann AA, Rodríguez MMD, Wieling E, Parra-Cardona JR, Rains LA, & Forgatch MS (2019). Teaching GenerationPMTO, an evidence-based parent intervention, in a university setting using a blended learning strategy. Pilot and feasibility studies, 5(1), 91.

- Bernal GE, & Domenech Rodríguez MM (2012). Cultural adaptations: Tools for evidence-based practice with diverse populations: American Psychological Association.

- Bishop DC, Pankratz MM, Hansen WB, Albritton J, Albritton L, & Strack J (2014). Measuring fidelity and adaptation: reliability of a instrument for school-based prevention programs. Evaluation in the Health Professions, 37(2), 231–257.

- Bodenheimer T, Wagner EH, & Grumbach K (2002a). Improving primary care for patients with chronic illness. JAMA, 288(14), 1775–1779.

- Bodenheimer T, Wagner EH, & Grumbach K (2002b). Improving primary care for patients with chronic illness: the chronic care model, Part 2. JAMA, 288(15), 1909–1914.

- Bumbarger BK, & Kerns SE (2019). Introduction to the Special Issue: Measurement and Monitoring Systems and Frameworks for Assessing Implementation and Adaptation of Prevention Programs. Journal of Primary Prevention.

- Cabassa LJ, & Baumann AA (2013). A two-way street: bridging implementation science and cultural adaptations of mental health treatments. Implementation Science, 8(1), 90.

- Chambers DA, Glasgow RE, & Stange KC (2013). The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implementation Science, 8(1), 117.

- Chambers DA, & Norton WE (2016). The adaptome: advancing the science of intervention adaptation. American Journal of Preventive Medicine, 51(4), S124–S131.

- Creed TA, Reisweber J, & Beck AT (2011). Cognitive therapy for adolescents in school settings: Guilford Press.

- Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, & Lowery JC (2009). Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implementation Science, 4(1), 50.

- Eke AN, Neumann MS, Wilkes AL, & Jones PL (2006). Preparing effective behavioral interventions to be used by prevention providers: the role of researchers during HIV Prevention Research Trials. AIDS Education and Prevention, 18, 44–58.

- Escoffery C, Lebow-Skelley E, Udelson H, Böing EA, Wood R, Fernandez ME, & Mullen PD (2018). A scoping study of frameworks for adapting public health evidence-based interventions. Translational Behavioral Medicine, 9(1), 1–10.

- Farchione TJ, Fairholme CP, Ellard KK, Boisseau CL, Thompson-Hollands J, Carl JR, … Barlow DH (2012). Unified protocol for transdiagnostic treatment of emotional disorders: a randomized controlled trial. Behavior Therapy, 43(3), 666–678.

- Ferrer-Wreder L, Sundell K, & Mansoory S (2012). Tinkering with perfection: Theory development in the intervention cultural adaptation field. Paper presented at the Child & Youth Care Forum.

- Forgatch MS, & DeGarmo DS (2011). Sustaining fidelity following the nationwide PMTO™ implementation in Norway. Prevention Science, 12(3), 235–246.

- Glasgow RE, Vogt TM, & Boles SM (1999). Evaluating the public health impact of health promotion interventions: the RE-AIM framework. American Journal of Public Health, 89(9), 1322–1327.

- Helfrich CD, Hartmann CW, Parikh TJ, & Au DH (2019). Promoting health equity through de-implementation research. Ethnicity and Disease, 29(Suppl 1), 93–96.

- Highfield L, Hartman MA, Mullen PD, Rodriguez SA, Fernandez ME, & Bartholomew LK (2015). Intervention mapping to adapt evidence-based interventions for use in practice: increasing mammography among African American women. BioMed Research International, 2015.

- Howe G, Leijten P, Zhang J, Brincks A, Weeland J, & Rajas LM (2019). Abstract of Distinction: When Is It Time to Revise or Adapt Our Prevention Programs? Using Baseline Target Moderation to Assess Variation in Prevention Impact. Paper presented at the Society for Prevention Research 27th Annual Meeting, San Francisco, CA.

- Iwelunmor J, Blackstone S, Veira D, Nwaozuru U, Airhihenbuwa C, Munodawafa D, … Ogedegbe G (2015). Toward the sustainability of health interventions implemented in sub-Saharan Africa: a systematic review and conceptual framework. Implementation Science, 11(1), 43.

- Johnson JE, Stout RL, Miller TR, Zlotnick C, Cerbo LA, Andrade JT, … Wiltsey-Stirman S (2019). Randomized cost-effectiveness trial of group interpersonal psychotherapy (IPT) for prisoners with major depression. Journal of Consulting and Clinical Psychology.

- Kendall PC, & Frank HE (2018). Implementing evidence-based treatment protocols: Flexibility within fidelity. Clinical Psychology: Science and Practice, 25(4), e12271.

- Kennedy KA, & Barlow DH (2018). The unified protocol for transdiagnostic treatment of emotional disorders: An introduction. Applications of the Unified Protocol for Transdiagnostic Treatment of Emotional Disorders, 1–16.

- Kilbourne AM, Neumann MS, Pincus HA, Bauer MS, & Stall R (2007). Implementing evidence-based interventions in health care: application of the replicating effective programs framework. Implementation Science, 2(1), 42.

- Kirk MA, Haines E, Powell B, Rokoske F, Weinberger M, & Birken S (2018, December). Understanding core components: Recommendations from a case study for identifying, reporting, and using core components in adaptation. Poster presentation at the 11th Annual Conference on the Science of Dissemination and Implementation in Health, Washington, DC.

- Lau AS (2006). Making the case for selective and directed cultural adaptations of evidence-based treatments: examples from parent training. Clinical Psychology: Science and Practice, 13(4), 295–310.

- Marques L, Valentine SE, Kaysen D, Mackintosh M-A, De Silva D, Louise E, … Simon NM (2019). Provider fidelity and modifications to cognitive processing therapy in a diverse community health clinic: Associations with clinical change. Journal of Consulting and Clinical Psychology, 87(4), 357.

- Martin P, Murray LK, Darnell D, & Dorsey S (2018). Transdiagnostic treatment approaches for greater public health impact: Implementing principles of evidence-based mental health interventions. Clinical Psychology: Science and Practice, 25(4), e12270.

- McKay VR, Morshed AB, Brownson RC, Proctor EK, & Prusaczyk B (2018). Letting Go: Conceptualizing Intervention De-implementation in Public Health and Social Service Settings. American Journal of Community Psychology, 62(1-2), 189–202.

- McKleroy VS, Galbraith JS, Cummings B, Jones P, Harshbarger C, Collins C, … & ADAPT Team. (2006). Adapting evidence–based behavioral interventions for new settings and target populations. AIDS Education & Prevention, 18(supp), 59–73.

- Miller CJ, Grogan-Kaylor A, Perron BE, Kilbourne AM, Woltmann E, & Bauer MS (2013). Collaborative chronic care models for mental health conditions: cumulative meta-analysis and meta-regression to guide future research and implementation. Medical Care, 51(10), 922.

- Mittman B (2018). Evaluating complex interventions: Confronting and guiding (versus ignoring and suppressing) heterogeneity and adaptation. In Brown H & Smith JD (Eds.), Prevention Science Methods Group Webinar.

- Norton WE, Kennedy AE, & Chambers DA (2017). Studying de-implementation in health: an analysis of funded research grants. Implementation Science, 12(1), 144.

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, … Kirchner JE (2015). A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science, 10(1), 21.

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, … Hensley M (2011). Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76.

- Rolleri LA, Fuller TR, Firpo-Triplett R, Lesesne CA, Moore C, & Leeks KD (2014). Adaptation guidance for evidence-based teen pregnancy and STI/HIV prevention curricula: from development to practice. American Journal of Sexuality Education, 9(2), 135–154.

- Sigmarsdóttir M, Forgatch MS, Guðmundsdóttir EV, Thorlacius Ö, Svendsen GT, Tjaden J, & Gewirtz AH (2019). Implementing an Evidence-Based Intervention for Children in Europe: Evaluating the Full-Transfer Approach. Journal of Clinical Child and Adolescent Psychology, 48(sup1), S312–S325.

- Stirman SW, Gutner CA, Crits-Christoph P, Edmunds J, Evans AC, & Beidas RS (2015). Relationships between clinician-level attributes and fidelity-consistent and fidelity-inconsistent modifications to an evidence-based psychotherapy. Implementation Science, 10(1), 115.

- Stirman SW, Kimberly J, Cook N, Calloway A, Castro F, & Charns M (2012). The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implementation Science, 7(1), 17.

- Stirman SW, Miller CJ, Toder K, & Calloway A (2013). Development of a framework and coding system for modifications and adaptations of evidence-based interventions. Implementation Science, 8(1), 65.

- US Department of Veterans Affairs (2019). VA continues to pioneer new approaches for treating Veteran mental health conditions. Retrieved from https://www.va.gov/opa/pressrel/pressrelease.cfm?id=5217

- Wagner EH, Austin BT, & Von Korff M (1996). Organizing care for patients with chronic illness. Milbank Quarterly, 511–544.

- Wiltsey-Stirman S, Baumann A, & Miller CJ (2019). The FRAME: An expanded framework for reporting adaptations and modifications to evidence-based interventions. Implementation Science, in press.

- Wiltsey Stirman S, Calloway A, Toder K, Miller CJ, DeVito AK, Meisel SN, … Crits-Christoph P (2013). Community mental health provider modifications to cognitive therapy: implications for sustainability. Psychiatric Services, 64(10), 1056–1059.

- Woltmann E, Grogan-Kaylor A, Perron B, Georges H, Kilbourne AM, & Bauer MS (2012). Comparative effectiveness of collaborative chronic care models for mental health conditions across primary, specialty, and behavioral health care settings: systematic review and meta-analysis. American Journal of Psychiatry, 169(8), 790–804.