Abstract

Background

Wearable trackers for monitoring physical activity (PA) and total sleep time (TST) are increasingly popular. These devices are used not only by consumers to monitor their behavior but also by researchers to track the behavior of large samples and by health professionals to implement interventions aimed at health promotion and to remotely monitor patients. However, high costs and accuracy concerns may be barriers to widespread adoption.

Objective

This study aimed to investigate the concurrent validity of 6 low-cost activity trackers for measuring steps, moderate-to-vigorous physical activity (MVPA), and TST: Geonaut On Coach, iWown i5 Plus, MyKronoz ZeFit4, Nokia GO, VeryFit 2.0, and Xiaomi MiBand 2.

Methods

A free-living protocol was used in which 20 adults engaged in their usual daily activities and sleep. For 3 days and 3 nights, they simultaneously wore a low-cost tracker and a high-cost tracker (Fitbit Charge HR) on the nondominant wrist. Participants wore an ActiGraph GT3X+ accelerometer on the hip at daytime and a BodyMedia SenseWear device on the nondominant upper arm at nighttime. Validity was assessed by comparing each tracker with the ActiGraph GT3X+ and BodyMedia SenseWear using mean absolute percentage error scores, correlations, and Bland-Altman plots in IBM SPSS 24.0.

Results

Validity varied widely between trackers. Low-cost trackers showed moderate-to-strong correlations (Spearman r=0.53-0.91) and low-to-good agreement (intraclass correlation coefficient [ICC]=0.51-0.90) for measuring steps. Weak-to-moderate correlations (Spearman r=0.24-0.56) and low agreement (ICC=0.18-0.56) were shown for measuring MVPA. For measuring TST, the low-cost trackers showed weak-to-strong correlations (Spearman r=0.04-0.73) and low agreement (ICC=0.05-0.52). The Bland-Altman plots showed both overcounting and undercounting of steps, MVPA, and TST, depending on the low-cost tracker used. None of the trackers, including the high-cost Fitbit, showed high validity for measuring MVPA.

Conclusions

This study was the first to examine the concurrent validity of low-cost trackers. Validity was strongest for the measurement of steps; there was evidence of validity for the measurement of sleep in some trackers, and validity for the measurement of MVPA time was weak across all devices. Validity varied between devices, with Xiaomi having the highest validity for the measurement of steps and VeryFit performing relatively well across both the sleep and steps domains. Low-cost trackers hold promise for monitoring and measuring movement and sleep behaviors, both for consumers and researchers.

Keywords: fitness trackers, mobile phone, accelerometry, physical activity, sleep

Introduction

Background

Physical activity (PA) and sleep are modifiable determinants of morbidity and mortality among adults and specifically contribute to the development of diseases such as obesity, type 2 diabetes, cardiovascular diseases, low quality of life, and mental health problems [1-6]. Engaging in at least 30 min of moderate-to-vigorous physical activity (MVPA) per day, getting between 7 and 9 hours of total sleep time (TST) per night, and spending relatively more time on light PA rather than being sedentary are associated with beneficial health outcomes [1-6]. A large proportion of adults do not meet the guidelines for one or more of these behaviors [6,7]. PA and sleep are, together with time spent on sedentary behavior (SB), codependent behaviors: they are part of one 24-hour day, and time spent on one behavior will impact the time spent on at least one of the other behaviors. It is, therefore, recommended to target these behaviors together [8].

Successful health promotion interventions rely on behavior change techniques that address modifiable determinants of health behavior [9]. A behavior change technique reported as both effective [10] and highly appreciated by users [11,12] is self-monitoring of health behavior. Self-monitoring refers to keeping a record of the behavior that is performed [13]. Self-monitoring tools provide opportunities for self-management of health as well as for remote activity tracking by health care providers as part of a patient’s treatment regimen [14]. Subjective ways of self-monitoring, such as self-report using retrospective measures (eg, diaries and questionnaires), often come with high participant burden and reporting biases [14]. Self-reported sleep duration in sleep logs showed an overestimation in comparison with objective measurements, especially when sleep duration was below the recommended health norms [15]. Activity trackers conversely offer automated, objective, and convenient means for self-monitoring PA and sleep. This paper focused on self-monitoring via consumer-based activity trackers as intervention tools for PA and sleep, more specifically by investigating the validity of low-cost trackers. Such trackers rarely monitor SB [16,17], which is why SB, although important in 24-hour movement behaviors, falls outside the scope of this paper.

Activity trackers may include pedometers, smartphone-based accelerometers, and accelerometers in advanced electronic wearable trackers or in smartwatches. However, pedometers do not provide information on sleep, and smartphone-based accelerometers have shown lower accuracy when measuring PA compared with advanced electronic wearable trackers [18], making advanced electronic wearable trackers and smartwatches more suitable for accurately self-monitoring PA and sleep. Smartwatches (eg, Apple Watch) offer several functions apart from activity tracking, such as communication and entertainment, and are usually more expensive than advanced electronic wearable trackers (eg, Fitbit Charge). Advanced electronic wearable trackers (termed activity trackers hereafter) are usually wrist- or belt-worn, provide 24-hour self-monitoring, and often include real-time behavioral feedback or more detailed feedback shown after synchronization with other electronic devices (eg, tablet, smartphone, or PC) [19]. Several commercial activity trackers are available to the public and are increasingly integrated into effective intervention programs to improve activity behaviors [20,21].

There has been an increased interest by adults in activity trackers. For example, in Flanders, Belgium, 8% of adults owned an activity tracker (22% owned a type of wearable, including sports watches and smartwatches) in 2018 compared with only 2% (8% owned a type of wearable, including sports watches and smartwatches) owning one in 2015 [22]. Characteristics of activity trackers may impact their continued use and further adoption. Cost is likely to be a barrier to increased adoption of higher-end trackers [19,23]. Indeed, activity trackers appear to be used less among adults who are less educated, unemployed [19], and have a lower income [22]. Notably, unhealthy lifestyles such as insufficient PA [24] and insufficient sleep duration [25] are more prevalent among people of lower to medium socioeconomic status (SES) than among those of higher SES. Therefore, providing accurate, low-cost options to self-monitor PA and sleep in their daily lives is crucial for public health, as a lack of valid low-cost trackers may increase the health and digital divide between lower and higher income groups in the society. However, nonadoption of activity trackers in low SES populations can probably not only be attributed to the high cost of the devices but may also be a matter of priorities and affordances. Further research in this area is necessary. Having valid low-cost trackers not only plays a role in low SES populations but also in the general population; cost-effective solutions are needed for scaling up interventions in a public health context where financial resources are limited [26]. Having accurate, low-cost activity trackers can be expected to increase the feasibility of scaling up interventions that rely on activity trackers.

The unequal access to valid tools because of cost barriers is often studied within health literacy conceptual frameworks. Health literacy refers to having the ability and motivation to take responsibility for one’s own health [27]. Low health literacy has been associated with worse health outcomes [27], and improving access to tools that help people understand their own health behavior via self-monitoring and take responsibility for their own health may improve health literacy. There is increasing attention to expanding the health literacy model to electronic health (eHealth) literacy or digital health literacy, defined as the ability of people to use emerging technology tools to improve or enable health and health care [28]. Lower digital health literacy appears to be associated with a lower SES [29].

More specifically, the importance of the accuracy of low-cost trackers can also be understood from the technology acceptance model, which emphasizes that users need to trust a tool and perceive it as useful and easy to use before they are willing to adopt it [30].

When using activity trackers, their accuracy needs to be established to avoid counterproductive effects, such as falsely signaling that people are meeting guidelines and need not make any extra efforts, whereas, in fact, these people may not reach the sufficient sleep or PA levels [31]. Conversely, an underestimation of actual behavior can also cause people to get demotivated and to no longer make efforts to do better [32]. Accuracy of the tracker has also been cited by users as the trackers’ most important characteristic [19]. To effectively use wearable activity trackers for health self-management in daily life, accuracy needs to be assessed in free-living settings because laboratory-based validity studies tend to overestimate validity [18]. The validity to measure PA in free-living conditions has been examined for several activity trackers, such as Fitbit One, Zip, Ultra, Classic, Flex [16-18,33-38], Misfit Shine [18], and Withings Pulse [18]. In general, studies found the highest validity for Fitbit trackers [18]. Most validated trackers showed high correlations with an ActiGraph accelerometer for number of steps [16,39,40]. MVPA is less often studied and less accurately measured by activity trackers than step count [39]. Activity trackers showed moderate-to-strong correlations with ActiGraph accelerometers on MVPA, with Fitbit trackers and Withings Pulse showing the highest accuracy [39]. In addition, for TST, several wearable activity trackers currently on the market have been assessed for validity, including Fitbit (Flex and Charge HR) [41-43], Withings Pulse [39,41], Basis Health Tracker [41], Garmin [44], and Polar Loop [44]. Validity results for TST were very divergent, ranging from low to strong validity, with Fitbit again showing better validity [39,44]. 
The accuracy of PA and/or TST measurement depends on where the tracker is worn (eg, the wrist vs the hip) [33] and can be improved by combining accelerometry with heart rate measurement [45,46].

The cost of the trackers in the abovementioned published validation studies was often not reported, but their price in the current market (at the end of January 2019) ranged from €50 (US $56) to €130 (US $146) for an unused, basic model (with Misfit Flash as the exception at €42 [US $47]). Most trackers that are popular in the consumer market and that are reported on in scientific publications cost more than €50 (US $56) and commonly more than €100 (US $112) [14]. A recent industry report states that when spending less than US $50, users are likely to get a product of mediocre accuracy [47], although it is unclear whether this statement was empirically based. To our knowledge, only 2 studies have examined the validity of low-cost trackers. Wahl et al’s [48] study of the Polar Loop (price in June 2019 around €60 [US $67]), Beurer AS80 (price in June 2019 around €42 [US $47]), and Xiaomi Mi Band (price in June 2019 around €25 [US $28]) suggested that only the Mi Band had good validity for step count. However, this study was conducted in a laboratory and not in free-living conditions. In one other study, the validity of the Xiaomi Mi Band for measuring TST was evaluated relative to a manual switch-to-sleep-mode measurement, with positive results [49]. However, this study did not use an objective measurement tool for comparison. We are not aware of any validation studies of low-cost activity trackers against objective measurement methods conducted in free-living conditions, and many of the most commonly available low-cost trackers do not appear to have been validated in any form.

In summary, wearable activity trackers can be a useful tool in health promotion and remote treatment monitoring for PA and TST. However, high costs and accuracy concerns may be barriers to widespread adoption [50]. Assessing the validity of low-cost trackers may play a major role at the population level to encourage health behavior in the future and among low SES groups who are most at risk for poor health and in need of healthy behavior promotion. To enable activity self-monitoring in daily life, the accuracy of low-cost wearable activity trackers needs to be established in free-living conditions. Current validation studies have mainly focused on wearable activity trackers that cost above €50 (US $56).

Objectives

This study aimed to assess the validity of low-cost wearable activity trackers (≤€50 [US $56]) among adults for the objective measurement of PA and TST in daily life against free-living gold standards (ActiGraph GT3X+ accelerometer and BodyMedia SenseWear). This study was exploratory in nature and did not have firm hypotheses regarding the validity of specific low-cost trackers. However, it may be expected that trackers with heart rate monitoring are more accurate than those without heart rate monitoring, because heart rate measurement contributes to a more accurate estimate of intensity and energy expenditure, resulting in a more accurate discrimination between activity and nonactivity [45,46].

Methods

Participants and Procedure

A concurrent validity study among adults was designed in which each low-cost tracker was validated against a free-living reference standard for steps, active minutes (MVPA), and TST. A high-cost tracker (Fitbit Charge 2) was also validated against these gold standards, to compare with the validation outcomes for the low-cost trackers. For each participant, three 24-hour observation days were collected for each low-cost tracker. Power analyses (run in G*Power 3.1.9.2) suggested that to detect a 2-tailed significant correlation (H1) of 0.49 to 0.90 with 80% power (values based on the study by Brooke et al [44]), a sample size of between 6 and 29 was required.
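The sample size reasoning above can be approximated with the Fisher z transformation. The sketch below is illustrative only: the function name is hypothetical, the significance level of .05 is an assumption (it is not stated in the text), and the approximation is not the exact routine used by G*Power, so it yields figures close to, but not identical with, the reported range of 6 to 29.

```python
import math
from statistics import NormalDist


def n_for_correlation(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sample size to detect a correlation r (2-tailed test).

    Uses the Fisher z approximation: n = ((z_alpha + z_beta) / atanh(r))^2 + 3.
    alpha = 0.05 is an assumption; the paper only states 80% power.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, 2-tailed
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    n = ((z_alpha + z_beta) / math.atanh(r)) ** 2 + 3
    return math.ceil(n)


if __name__ == "__main__":
    for r in (0.49, 0.90):
        print(f"r={r}: approximately n={n_for_correlation(r)}")
```

Smaller expected correlations require larger samples, which is why the lower bound of the correlation range drives the upper bound of the required sample size.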

A total of 20 healthy participants aged between 18 and 65 years living in Flanders, Belgium, were recruited using convenience sampling. Inclusion criteria were having no current physical limitations, medical conditions, or psychiatric conditions that may impact movement or sleep. Descriptive information collected on participants consisted of age, sex, self-reported height and weight, and highest attained education. All participants read and signed an informed consent form. The Ethics Committee of the University Hospital of Ghent approved the study protocol (B670201731732).

Instruments

Convergent Measure

As this is a free-living study, the ActiGraph GT3X+ (ActiGraph) triaxial accelerometer was used as a reliable and valid reference for measuring step count [51-53] and MVPA [54,55]. The GT3X+ has been shown to be a valid measure of both step count compared with direct observation (percentage error <1.5% [52]; percentage error ≤1.1% [53]; and intraclass correlation coefficient [ICC]≥0.84 [51]) and MVPA compared with indirect calorimetry (r=0.88) [54]. Accelerometer data were initialized, downloaded, and processed using ActiLife version 5.5.5 software (ActiGraph). The Freedson Adult cut-points were applied to categorize PA measured by the ActiGraph accelerometer (sedentary activity=0-99 counts per min, light activity=100-1951 counts per min, moderate activity=1952-5723 counts per min, and vigorous activity ≥5724 counts per min) [54]. A 15-second epoch was used when downloading the data. The ActiGraph GT3X+ was fitted to the right side of the participants’ waist in accordance with the manufacturer’s instructions. Only days with valid ActiGraph data were included in the analysis. A valid day was defined as a 24-hour period in which at least 10 hours of wear time were recorded [56]. Nonwear time was defined as a run of zero counts lasting more than 60 min, with an allowance of 2 min of interruptions. Using this algorithm, the risk of misclassifying nonwear time as sedentary time was reduced [57].
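The cut-points and wear-time rules described above can be sketched as follows. This is not the ActiLife implementation: the function names are hypothetical, the input is assumed to be already aggregated to counts per minute (the study downloaded 15-second epochs), and the interruption allowance is simplified to "at most 2 consecutive nonzero minutes inside a zero-count run".

```python
from typing import List, Sequence


def classify_intensity(cpm: float) -> str:
    """Freedson adult cut-points (counts per minute) as quoted in the text."""
    if cpm <= 99:
        return "sedentary"
    if cpm <= 1951:
        return "light"
    if cpm <= 5723:
        return "moderate"
    return "vigorous"


def mvpa_minutes(counts_per_min: Sequence[float]) -> int:
    """Minutes at or above the moderate threshold (1952 counts per minute)."""
    return sum(1 for c in counts_per_min if c >= 1952)


def nonwear_mask(counts_per_min: Sequence[float],
                 min_run: int = 60,
                 max_interrupt: int = 2) -> List[bool]:
    """Flag minutes belonging to a zero-count run longer than min_run minutes.

    Simplifying assumption: a run may contain interruptions of up to
    max_interrupt consecutive nonzero minutes.
    """
    n = len(counts_per_min)
    mask = [False] * n
    i = 0
    while i < n:
        if counts_per_min[i] != 0:
            i += 1
            continue
        start, last_zero, interrupt = i, i, 0
        j = i + 1
        while j < n:
            if counts_per_min[j] == 0:
                interrupt = 0
                last_zero = j
            else:
                interrupt += 1
                if interrupt > max_interrupt:
                    break
            j += 1
        if last_zero - start + 1 > min_run:  # "more than 60 min"
            for k in range(start, last_zero + 1):
                mask[k] = True
        i = last_zero + 1
    return mask


def is_valid_day(counts_per_min: Sequence[float], min_wear_minutes: int = 600) -> bool:
    """A valid day has at least 10 hours (600 min) of wear time."""
    wear = sum(1 for flagged in nonwear_mask(counts_per_min) if not flagged)
    return wear >= min_wear_minutes
```

Separating nonwear detection from intensity classification keeps long zero-count runs from being counted as sedentary time, which is the misclassification the wear-time rule is meant to limit.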

The BodyMedia SenseWear (BodyMedia Inc) is a portable multisensor device that can provide information regarding the total energy expenditure, TST, circadian rhythm, and other activity metrics. In this study, the SenseWear was used as the reference for sleep duration. SenseWear has been validated as a measure of TST compared with polysomnography (r=0.83; SE of estimate 37.71) [58]. Data were analyzed in SenseWear Professional 8.1 software [59]. The SenseWear was placed over the triceps muscle on the nondominant arm between the acromion and olecranon processes, in accordance with the manufacturer’s instructions.

Low-Cost Activity Trackers

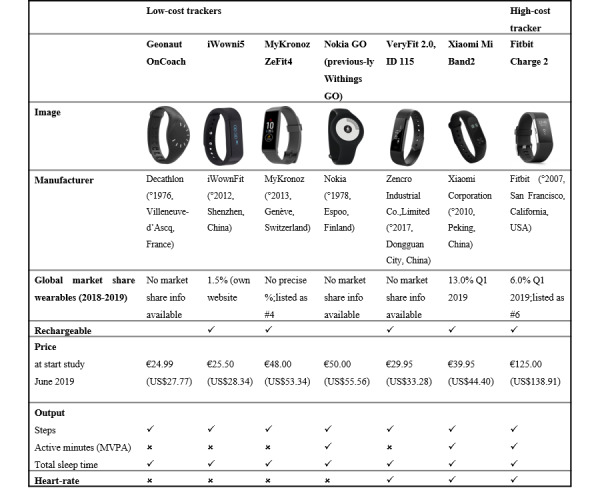

In total, 6 low-cost activity trackers were selected (Figure 1) based on their price at the time of the study (≤€50 [US $56]), their market share (eg, MyKronoz and Xiaomi), whether or not they included heart rate measurement, their output (steps, MVPA or active minutes, and TST), and their availability from popular web-based purchase sites in Europe, where the study was conducted. Furthermore, we tested the Fitbit Charge 2 to allow a comparison between the low-cost activity trackers and a validated high-cost activity tracker. Fitbit was selected as the high-cost activity tracker because it was one of the most popular activity trackers on the market at the start of the study and was already validated for measuring steps, MVPA, and TST [17]. All participants received a Wiko smartphone on loan (Lenny 3, Android 6.0 Marshmallow, price €99.99 [US $119.80] in June 2019) with which to pair the trackers, to cancel out any potential individual differences in smartphone pairing.

Figure 1.

Tracker characteristics.

All devices measured steps and TST. Only Xiaomi, Nokia, and also Fitbit used a specific variable that quantifies intensive forms of PA. These 3 devices reported active minutes with no further subdivision. As all the devices set a goal of 30 min PA per day (similar to the MVPA recommendations for adults), it was assumed that the measured variable corresponded to MVPA as measured by the ActiGraph. However, specific information regarding intensity cut-points is not publicly available. TST was used, excluding daytime naps, for comparison with the SenseWear that was only worn at night. Only Fitbit, VeryFit, and Xiaomi measured the heart rate. Data were extracted using the proprietary software for all devices, in the same fashion that a consumer would use the software, and were visually checked for outliers.

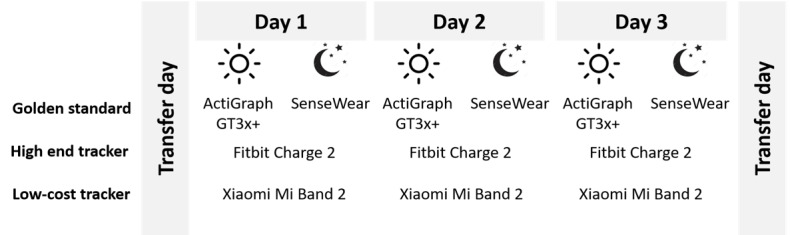

Free-Living Protocol

As it was not feasible or comfortable to wear all trackers at the same time, participants were instructed to wear one of the low-cost devices in combination with the Fitbit tracker on their nondominant wrist. They were also instructed to simultaneously wear the ActiGraph on their hip during daytime and the SenseWear on their upper arm at nighttime. Furthermore, the participants were provided with a diary to write down the time they put on and took off the devices. This way it could be checked that the devices were always worn simultaneously. If this was not the case, data of the device that was worn separately were deleted to avoid a mismatch of the measurements. Participants received the 6 low-cost trackers in a random order. The position of the low-cost and high-cost tracker on the nondominant wrist (first or second in distance from the wrist) was varied across days. Each tracker was worn for a period of 3 consecutive days and nights. A period of 3 days and 3 nights was chosen to balance between achieving sufficient data for the question under study without burdening the participants. Between 2 periods, a 1-day gap allowed for switching the devices. During daytime, the devices were worn during all waking hours, except during water-based activities. When participants went to bed, they were asked to remove the ActiGraph and put on the SenseWear instead. In Figure 2, a typical measurement period for one device is shown.
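The wear schedule described above (6 low-cost trackers in random order, 3 consecutive days each, with a 1-day gap between periods) can be sketched as follows. The text does not describe how the randomization was performed; the function name and the use of a per-participant seed are assumptions for illustration only.

```python
import random
from typing import List, Optional

TRACKERS = ["Geonaut", "iWown", "MyKronoz", "Nokia", "VeryFit", "Xiaomi"]


def build_schedule(seed: int,
                   days_per_tracker: int = 3,
                   gap_days: int = 1) -> List[Optional[str]]:
    """Return one entry per calendar day: a tracker name, or None for a gap day.

    The seed stands in for a participant-specific random order (hypothetical).
    """
    rng = random.Random(seed)
    order = TRACKERS[:]
    rng.shuffle(order)
    schedule: List[Optional[str]] = []
    for idx, tracker in enumerate(order):
        schedule.extend([tracker] * days_per_tracker)
        if idx < len(order) - 1:  # no gap after the final period
            schedule.extend([None] * gap_days)
    return schedule


if __name__ == "__main__":
    for day, tracker in enumerate(build_schedule(seed=1), start=1):
        print(day, tracker or "switch devices")
```

Under these assumptions, one participant's protocol spans 23 days: 18 measurement days plus 5 gap days for switching devices.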

Figure 2.

An example of the measurement protocol for one period.

PA or TST may differ between weekdays and weekend days. Although this study did not intend to explain differences in PA or TST but rather the degree of agreement between 2 measurements on any given day, a difference in how often a tracker was worn on one day of the week rather than another may influence validity results. For example, validity has been shown to be lower on days with a low or a high number of steps. Our study design controlled for this potential influence by randomly varying, across participants, the days on which a particular tracker was worn. Across all data points, we would then expect all days of the week to be relatively equally represented, as was the case in our study. The percentage of weekend days in total measurement days ranged between 25% and 33%. In addition, for each particular weekday, there were very few differences between trackers (2%-9% difference between the tracker with the lowest number of measurements on that day and the tracker with the highest number of measurements on that day).

Statistical Analysis

Analyses were performed using IBM SPSS Statistics version 24.0 (SPSS Inc). All analyses were performed at the daily measurement level, counting a measured day as the unit of analysis. Analyses consisted of measures of agreement, systematic differences, and bias and limits of agreement. Measures of agreement (equivalence testing) included (1) the Spearman correlation coefficient (r), used to examine the association between steps, active minutes, and TST measured by the trackers and by the convergent measure (also illustrated in scatter plots), and (2) the ICC (absolute agreement, 2-way random, single measures, 95% CI), which reflects the effect of individual differences on observed measures. As sleep and PA data were nonnormally distributed, the Spearman correlation, a nonparametric statistic, was used instead of the Pearson correlation. Measures of systematic differences included mean absolute percentage errors (MAPEs) of tracker measurements compared with those of the convergent measure. MAPEs were calculated with the following formula: (mean difference between the activity tracker and the convergent measure × 100)/mean of the gold standard. Bland-Altman plots with their associated limits of agreement were used to examine biases between measurements from the trackers and the convergent measure. The following cutoff values were used to interpret the Spearman correlation coefficient: <0.20=very weak, 0.20 to 0.39=weak, 0.40 to 0.59=moderate, 0.60 to 0.79=strong, and 0.80 to 1.0=very strong [60]. The cutoff values to interpret the ICC were as follows: <0.60=low, 0.60 to 0.75=moderate, 0.75 to 0.90=good, and >0.90=excellent [48].
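The MAPE formula, the Bland-Altman bias and limits of agreement, and the interpretation cutoffs can be illustrated with a short sketch. The study itself used SPSS; the function names here are hypothetical. Two assumptions are made: the "mean difference" in the MAPE formula is taken as the mean of absolute daily differences, and an ICC of exactly 0.75 (which the stated cutoffs assign to two categories) is labeled "good".

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def mape(tracker: Sequence[float], reference: Sequence[float]) -> float:
    """Mean absolute difference x 100 / mean of the reference measure.

    Assumption: absolute daily differences are averaged.
    """
    abs_diff = [abs(t - r) for t, r in zip(tracker, reference)]
    return mean(abs_diff) * 100 / mean(reference)


def bland_altman(tracker: Sequence[float],
                 reference: Sequence[float]) -> Tuple[float, float, float]:
    """Return (bias, lower limit, upper limit) with limits at bias +/- 1.96 SD."""
    diffs = [t - r for t, r in zip(tracker, reference)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


def interpret_spearman(r: float) -> str:
    """Cutoffs quoted in the text, applied to the magnitude of r."""
    r = abs(r)
    if r < 0.20:
        return "very weak"
    if r < 0.40:
        return "weak"
    if r < 0.60:
        return "moderate"
    if r < 0.80:
        return "strong"
    return "very strong"


def interpret_icc(icc: float) -> str:
    """Cutoffs quoted in the text; 0.75 itself is treated as 'good' (assumption)."""
    if icc < 0.60:
        return "low"
    if icc < 0.75:
        return "moderate"
    if icc <= 0.90:
        return "good"
    return "excellent"
```

A positive bias in this sketch means the tracker overcounts relative to the convergent measure, and a negative bias means it undercounts.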

A series of linear mixed effects models with restricted maximum likelihood estimation were used to examine the association between steps, MVPA minutes, and TST measured by the commercial trackers and convergent measures, accounting for the structure of the data (repeated measures clustered within participants). The pattern of results was similar to that obtained from the abovementioned analyses. Data are, therefore, presented in Multimedia Appendix 1.

Results

Descriptive Statistics

A total of 3 participants discontinued their participation in the study: one participant dropped out at the start of the study because of the combination of high perceived burden of the research protocol and a busy personal schedule, and consequently, no data were collected and analyzed from this participant; one participant was not able to meet the protocol toward the end of the study because of conflict with his/her work schedule; and one participant had to end participation because of an unexpected hospital admission (17/20, 85% retention rate). The average age of the analyzed sample of participants who started the study (n=19) was 37.6 (SD 13.4) years; of 19 participants, 13 were female. The sample was highly educated, with 17 participants having achieved a higher education degree (academic or nonacademic). Their average BMI was 23.5 (SD 4.4) kg/m2. Two participants were overweight (BMI of 25-30 kg/m2), and 2 participants were obese (BMI ≥30 kg/m2). The level of MVPA measured at baseline with the International Physical Activity Questionnaire varied from 10 to 351 min per day (SD 91) [61,62].

All participants owned a smartphone; 5 of 19 participants had previous experience with wearable trackers (n=3; Fitbit). As can be expected in a highly educated sample, they were all very familiar with digital tools and required little assistance in installation or usage. We did not expect any impact of participants’ experience on the validity measurements, as (1) these would not have a differential effect of any potential misuse between different trackers and (2) control procedures were put in place to prevent any misuse. Potential misuse could consist of a wrong placement of the tracker. Participants received a thorough briefing at the start of the study and a daily check-up of any issues to ensure any baseline differences in familiarity with digital tools were canceled out and to reduce the risk for misuse. No issues with misuse were noted.

Issue of Usability With Low-Cost Trackers

In total, each device was intended to be tested for 60 days. As one of the participants did not start, the maximum number of potential measurement days per tracker was reduced to 57. The number of days of available data varied per tracker because of dropouts at the end of the study by some participants and because of technical issues experienced with some trackers, which resulted in fewer days of available data.

Of 57 potential measurement days, VeryFit had 55 (96%) measured days for PA (lost days: 2 because of no data shown in the app) and 51 (89%) measured days for sleep (lost days: 3 because of participant noncompliance and 3 because of no data shown in the app). Of 57 potential measurement days, iWown had 52 (91%) measured days for PA (lost days: 4 because the tracker did not pair and 1 because of no data shown in the app) and 51 (89%) measured days for sleep (lost days: 4 because the tracker did not pair and 2 because of no data shown in the app). Xiaomi was not worn by 2 participants because of dropping out, reducing potential measurement days to 51. Of 51 potential measurement days, Xiaomi had 48 (94%) measured days for PA (lost days: 2 because of participant noncompliance and 1 because of no data shown in the app) and 44 (86%) measured days for sleep (lost days: 6 because of participant noncompliance and 1 because of no data shown in the app). Of 57 potential measurement days, Nokia had 49 (86%) measured days for PA (lost days: 8 because of no data shown in the app) and 46 (81%) measured days for sleep (lost days: 8 because of no data shown in the app and 3 because of participant noncompliance). MyKronoz was not worn by 3 participants because of dropping out, and one participant accidentally removed the data, reducing potential measurement days to 45. Of 45 potential measurement days, MyKronoz had 40 (89%) measured days for PA (lost days: 5 because of no data shown in the app) and 24 (53%) measured days for sleep (lost days: 11 because of no data shown in the app and 10 because of participant noncompliance). Of 57 potential measurement days, Geonaut had 37 (65%) measured days for PA (lost days: 12 because of no data shown in the app, 9 because of the device not pairing, and 5 because of participant noncompliance) and 30 (53%) measured days for sleep (lost days: 9 because of the device not pairing, 8 because of no data shown in the app, and 4 because of participant noncompliance).
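The data-completeness arithmetic above follows one pattern: potential days are 3 days per participant who wore the tracker, and measured days are the potential days minus the lost days. A minimal sketch, with hypothetical function and key names, using the Xiaomi PA and MyKronoz sleep figures reported above:

```python
from typing import Dict, Tuple

DAYS_PER_TRACKER = 3  # each tracker was worn for 3 consecutive days


def potential_days(participants: int) -> int:
    """Maximum number of measurement days for one tracker."""
    return participants * DAYS_PER_TRACKER


def completeness(potential: int, lost: Dict[str, int]) -> Tuple[int, int]:
    """Return (measured days, percentage of potential days, rounded)."""
    measured = potential - sum(lost.values())
    return measured, round(100 * measured / potential)


if __name__ == "__main__":
    # 20 recruited participants give 60 days; 19 who started give 57 days.
    print(potential_days(20), potential_days(19))
    # Xiaomi, PA: 51 potential days, 3 lost days in total.
    print(completeness(51, {"noncompliance": 2, "no_data_in_app": 1}))
    # MyKronoz, sleep: 45 potential days, 21 lost days in total.
    print(completeness(45, {"no_data_in_app": 11, "noncompliance": 10}))
```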

Participants were especially frustrated about a device not pairing, as this meant they had to reinstall the tracker and also lost their past activity history. Overall, VeryFit and Xiaomi showed little data loss because of usability problems, whereas Geonaut and MyKronoz in particular lost data because of usability problems. In general, more data were lost for sleep than for PA. Usable data in the analyses were further reduced because of technical issues experienced with the convergent measures, which resulted in fewer days of data for which comparisons could be made (the number of usable data points is shown in all tables).

Validity of Low-Cost Trackers

Physical Activity

Table 1 shows the mean number of steps and mean minutes of MVPA per day, with the corresponding standard deviations and ranges, for all trackers.

Table 1.

Mean steps and minutes of moderate-to-vigorous physical activity per day measured by the low-cost trackers, Fitbit, and ActiGraph.

| Tracker | Number of measured days | Mean (SD) | Range |
| --- | --- | --- | --- |
| Number of steps per day | | | |
| Geonaut | 37 | 8026 (4352) | 657-19,413 |
| iWown | 51 | 7668 (5169) | 259-22,759 |
| MyKronoz | 40 | 10,431 (4764) | 485-24,493 |
| Nokia | 50 | 5896 (3113) | 325-13,976 |
| VeryFit | 55 | 7320 (4481) | 649-22,628 |
| Xiaomi | 48 | 7317 (4535) | 369-20,866 |
| Fitbit | 307 | 9662 (4866) | 451-24,664 |
| ActiGraph | 316 | 8126 (4314) | 188-23,121 |
| Number of minutes of moderate-to-vigorous physical activity per day | | | |
| Nokia | 49 | 5 (12) | 0-52 |
| Xiaomi | 46 | 80 (48) | 0-190 |
| Fitbit | 305 | 45 (49) | 0-239 |
| ActiGraph | 328 | 41 (31) | 0-150 |

Agreement testing for steps diverged between the Spearman r coefficient and ICC (Table 2). All trackers, except iWown, showed strong (Nokia, Geonaut, VeryFit, and MyKronoz) to very strong (Xiaomi and Fitbit) agreement with the ActiGraph measurements based on the Spearman r coefficient (all above 0.60). On the basis of ICC, MyKronoz, iWown, and Nokia showed low agreement (ICC<0.60), whereas Geonaut had moderate and Xiaomi, Fitbit, and VeryFit had a good agreement with the ActiGraph measurements (ICC=0.75-0.90). These coefficients are in line with the interpretation of the MAPE scores, showing the largest mean deviation from the ActiGraph measurements for iWown (35.28%) and the smallest for the Xiaomi tracker (17.14%).

Table 2.

Correlation coefficients, intraclass correlation coefficients, associated 95% CI of the measurements, and mean absolute percentage error scores for measuring steps and moderate-to-vigorous physical activity.

| Tracker | n | Spearman r (95% CI) | Intraclass correlation coefficient | 95% CI | Mean absolute percentage error (%) |
| --- | --- | --- | --- | --- | --- |
| Steps | | | | | |
| Geonaut | 36 | 0.63a (0.31 to 0.87) | 0.68a | 0.46 to 0.82 | 24.63 |
| iWown | 50 | 0.53a (0.16 to 0.77) | 0.51a | 0.28 to 0.69 | 35.28 |
| MyKronoz | 38 | 0.77a (0.45 to 0.95) | 0.59a | 0.22 to 0.79 | 25.79 |
| Nokia | 50 | 0.77a (0.51 to 0.94) | 0.56a | 0.27 to 0.74 | 22.62 |
| VeryFit | 54 | 0.78a (0.61 to 0.89) | 0.82a | 0.62 to 0.91 | 24.87 |
| Xiaomi | 45 | 0.91a (0.81 to 0.97) | 0.90a | 0.77 to 0.95 | 17.14 |
| Fitbit | 300 | 0.91a (0.86 to 0.94) | 0.87a | 0.66 to 0.93 | 25.73 |
| Moderate-to-vigorous physical activity | | | | | |
| Nokia | 16 | 0.24 (−0.11 to 0.50) | 0.18 | −0.10 to 0.44 | 108.17 |
| Xiaomi | 45 | 0.26 (−0.08 to 0.54) | 0.15 | −0.08 to 0.39 | 293.29 |
| Fitbit | 298 | 0.56a (0.47 to 0.63) | 0.56a | 0.48 to 0.64 | 114.30 |

aP<.001.

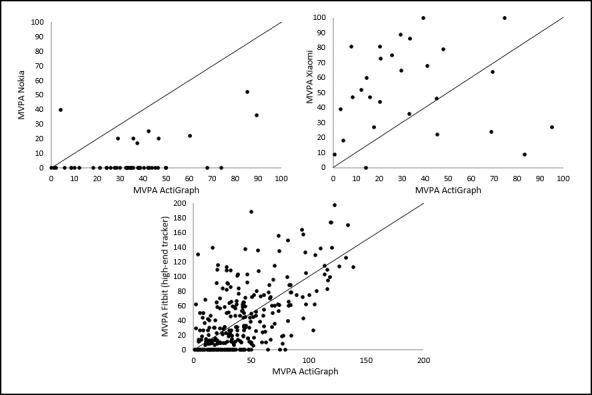

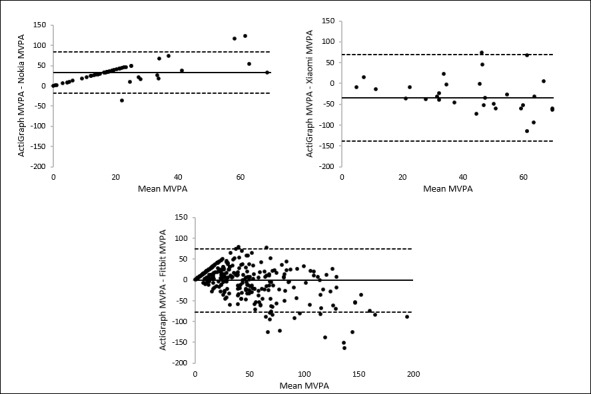

For measuring MVPA, correlations between the MVPA measurements of the trackers and the ActiGraph accelerometer were weak for Nokia and Xiaomi and moderate for Fitbit (Table 2). The ICC showed low agreement for MVPA between all 3 trackers and the ActiGraph accelerometer (ICC<0.60). The MAPE scores also indicate very large mean deviations from the ActiGraph measurements for MVPA (>100%), which confirms the low accuracy of the trackers for measuring MVPA.
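As an illustration of how a MAPE score is obtained, the following minimal Python sketch computes the mean absolute percentage error of tracker values against a reference device. The function name `mape`, the choice to skip days on which the reference value is 0, and the example numbers are assumptions made for illustration; they are not the study's SPSS procedure or data.

```python
def mape(reference, tracker):
    """Mean absolute percentage error of tracker values relative to reference values."""
    # Assumption: days with a reference value of 0 are skipped to avoid division by zero.
    pairs = [(r, t) for r, t in zip(reference, tracker) if r != 0]
    return 100.0 * sum(abs(t - r) / r for r, t in pairs) / len(pairs)


# Made-up daily step counts, for illustration only (not study data)
actigraph_steps = [8000, 10000, 12000]
tracker_steps = [7200, 11000, 12000]
print(round(mape(actigraph_steps, tracker_steps), 2))

# Made-up MVPA example: 10 reference minutes versus 40 tracker minutes
print(mape([10], [40]))
```

Because each error is expressed relative to the reference value, days with only a few reference MVPA minutes can produce percentage errors far above 100%, which is one way in which scores such as the MVPA values in Table 2 can arise.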

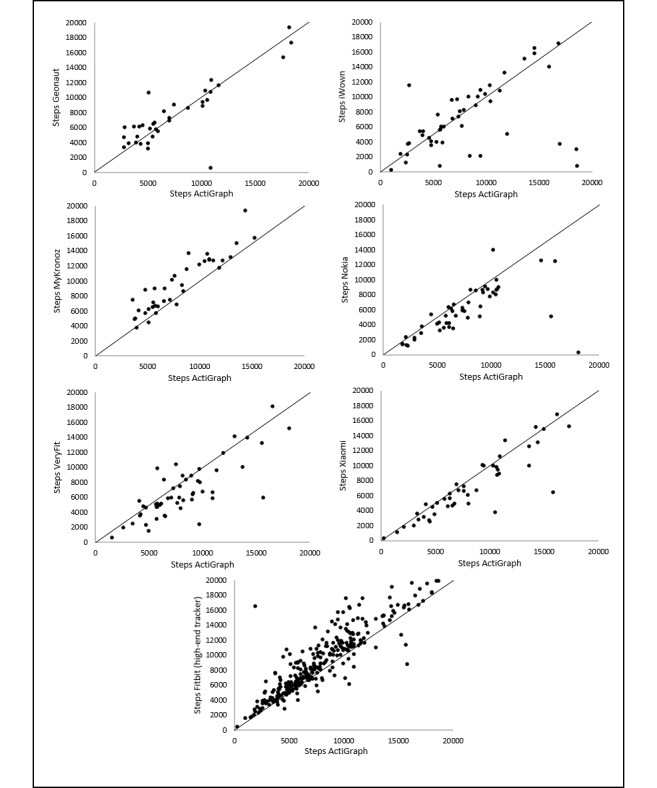

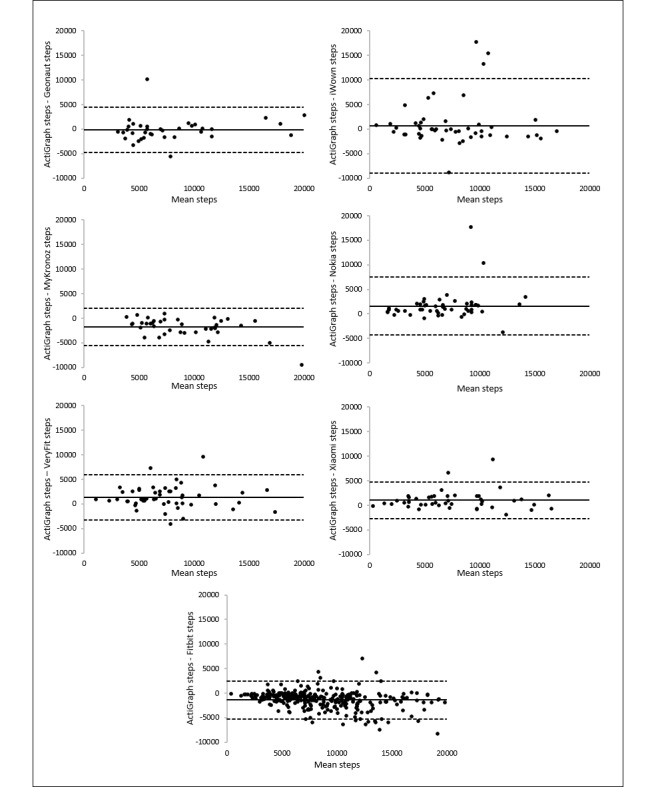

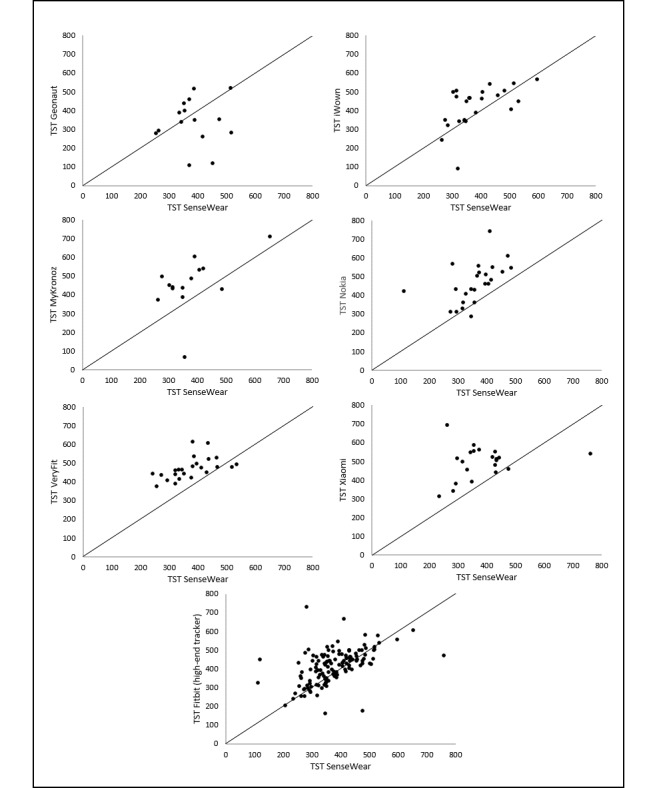

Correlations for steps and MVPA are illustrated in Figures 3 and 4. Scatter and deviation of the points around the line that reflects perfect agreement between the measurements are larger for MVPA than for steps. The largest scatter for measuring steps is found for iWown (Figure 3). The scatterplots allow a cautious statement about overestimation or underestimation by the trackers, based on the location of the data points relative to the line of perfect agreement. For Xiaomi, Nokia, and VeryFit, the majority of the data points are located below that line, indicating an underestimation of the number of steps. For iWown, MyKronoz, and Fitbit, the majority of the data points are located above the line, indicating an overestimation of the number of steps. For Geonaut, no clear underestimation or overestimation is visible. For all 3 trackers that measure MVPA, a large scatter was observed, with no obvious relation between the MVPA measurements of the trackers and those of the ActiGraph. For Nokia, an underestimation is visible, whereas for Xiaomi, an overestimation is visible. For Fitbit, no clear underestimation or overestimation is visible.

Figure 3.

Correlations between steps estimates per day from the trackers and the ActiGraph.

Figure 4.

Correlations between moderate-to-vigorous physical activity estimates per day from the trackers and the ActiGraph.

These findings are also visualized by using Bland-Altman plots. Bland-Altman plots were used to visualize the differences between the steps and MVPA measurements of the ActiGraph accelerometer and each tracker (y-axis) against the average number of steps or number of minutes of MVPA of the measurements of these 2 devices (x-axis). Mean differences with the ActiGraph accelerometer and the limits of agreement are presented in Table 3 (illustrated in Figures 5 and 6 for steps and MVPA, respectively). A positive value of the mean difference indicates an underestimation of the measurements of the tracker compared with the ActiGraph measurements, whereas a negative value indicates an overestimation. The systematic overestimation or underestimation (mean differences) and the range between the upper and lower limits of the agreement reflect the accuracy of the measurements of the tracker compared with the measurements of the ActiGraph accelerometer. The broader the range between the lower and the upper limit, the less accurate the measurements are.
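The quantities behind a Bland-Altman plot can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study's SPSS analysis: the function name `bland_altman` is hypothetical, the sample SD (n−1 denominator) is assumed, repeated days per participant are treated as independent, and the step counts are made up.

```python
from statistics import mean, stdev


def bland_altman(reference, tracker):
    """Return mean difference, lower limit, upper limit, and width of the limits of agreement."""
    # Differences are reference minus tracker, so a positive mean difference
    # indicates that the tracker underestimates relative to the reference.
    diffs = [r - t for r, t in zip(reference, tracker)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)  # assumption: sample SD of the differences
    lower, upper = bias - half_width, bias + half_width
    return bias, lower, upper, upper - lower


# Made-up daily step counts, for illustration only (not study data)
actigraph_steps = [10000, 8000, 12000, 9000]
tracker_steps = [9000, 7500, 10500, 8000]

bias, lower, upper, width = bland_altman(actigraph_steps, tracker_steps)
print(bias, round(lower, 1), round(upper, 1), round(width, 1))
```

In this sketch, the width is simply the upper limit minus the lower limit, which corresponds to the "Width of the limits of agreement" column in Table 3.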

Table 3.

Mean differences of activity measures with the ActiGraph accelerometer and limits of agreement of the activity trackers.

| Tracker | n | Mean difference (ActiGraph−Tracker) | Limits of agreement, range | Width of the limits of agreement |
| --- | --- | --- | --- | --- |
| Steps | | | | |
| Geonaut | 36 | −146 | −4802 to 4509 | 9311 |
| iWown | 50 | 638 | −8993 to 10,270 | 19,263 |
| MyKronoz | 38 | −1798 | −5563 to 1967 | 7530 |
| Nokia | 50 | 1609 | −4229 to 7447 | 11,676 |
| VeryFit | 54 | 1356 | −3276 to 5989 | 9265 |
| Xiaomi | 45 | 1011 | −2713 to 4737 | 7450 |
| Fitbit | 300 | −1369 | −5238 to 2499 | 7737 |
| Moderate-to-vigorous physical activity (minutes) | | | | |
| Nokia | 16 | 32.55 | −18.35 to 83.45 | 101.80 |
| Xiaomi | 45 | −35.14 | −138.96 to 68.68 | 207.64 |
| Fitbit | 298 | −1.27 | −77.07 to 74.52 | 151.59 |

Figure 5.

Bland-Altman plots of the trackers for measuring steps. The middle line shows the mean difference between the measurements of steps of the wearables and the ActiGraph (positive values indicate an underestimation by the wearable and negative values indicate an overestimation), and the dashed lines indicate the limits of agreement (mean difference ± 1.96×SD of the difference scores).

Figure 6.

Bland-Altman plots of the trackers for measuring moderate-to-vigorous physical activity. The middle line shows the mean difference between the measurements of moderate-to-vigorous physical activity of the wearables and the ActiGraph (positive values indicate an underestimation by the wearable, and negative values indicate an overestimation), and the dashed lines indicate the limits of agreement (mean difference ± 1.96×SD of the difference scores).

For measuring steps and MVPA, Table 3 and the plots (Figures 5 and 6) all showed wide limits of agreement. For steps, the Xiaomi tracker showed the narrowest limits (7450 steps), whereas iWown showed the broadest limits (19,263 steps). These results are in line with the validity findings based on the Spearman r, the ICC, and the MAPE score.

For MVPA, the ranges between the lower and upper limits of agreement are very large, indicating low measurement accuracy for all 3 trackers measuring MVPA. The Bland-Altman plots showed the broadest limits for Xiaomi (207.64 min) and the narrowest limits for Nokia (101.80 min).

Thus, several but not all low-cost trackers showed high accuracy in measuring steps. The Xiaomi tracker even outperformed the Fitbit tracker in measuring steps. However, none of the trackers showed good accuracy in measuring MVPA, including Fitbit, which nevertheless reached a slightly higher validity than the low-cost trackers in measuring MVPA.

Total Sleep Time

Table 4 reports the mean minutes of TST and corresponding standard deviations for all trackers.

Table 4.

Mean total sleep time per day measured by the low-cost trackers, Fitbit and SenseWear.

| Tracker | Number of measured days | Total sleep time (minutes), mean (SD) | Range (minutes) |
| --- | --- | --- | --- |
| Geonaut | 30 | 341 (123) | 110-589 |
| iWown | 52 | 421 (108) | 91-624 |
| MyKronoz | 24 | 457 (143) | 70-746 |
| Nokia | 46 | 464 (108) | 247-743 |
| VeryFit | 51 | 472 (59) | 193-614 |
| Xiaomi | 44 | 495 (87) | 285-695 |
| Fitbit | 287 | 414 (91) | 68-733 |
| SenseWear | 147 | 373 (83) | 112-653 |

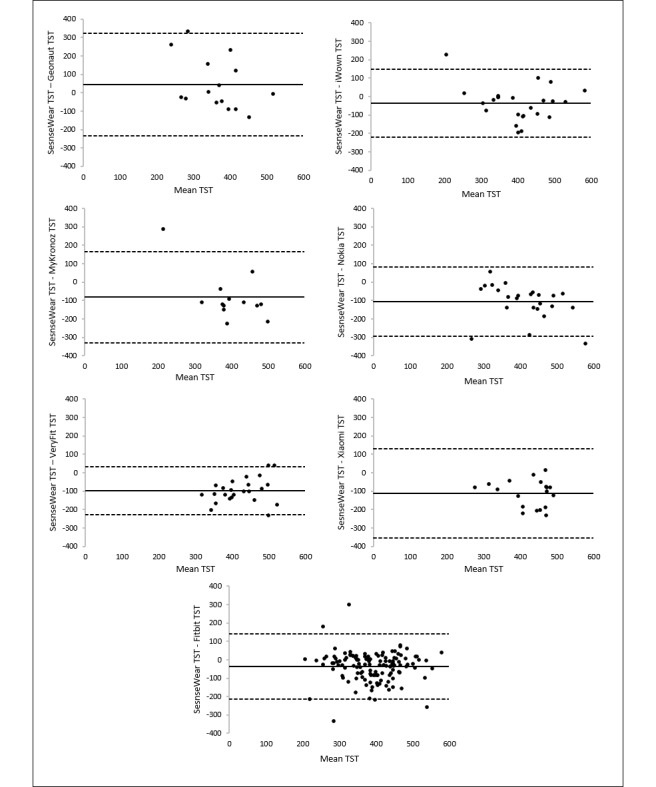

Spearman correlations between the TST measurements of the trackers and the TST measurements of the SenseWear armband show large diversity between trackers, ranging from very weak (Geonaut) to strong (VeryFit). The ICCs, however, indicate low agreement (ICC<0.60) between the measurements of all trackers and the measurements of the SenseWear. This could reflect a systematic underestimation or overestimation of TST by the trackers, which is not evident from the Spearman r coefficient. The MAPE scores of all trackers also indicate a large mean deviation from the SenseWear measurements for TST, ranging from 20.57% for Fitbit to 39.08% for Xiaomi. The correlation coefficients, ICC values, associated 95% CI, and MAPE scores for measuring TST are shown in Table 5.

Table 5.

Correlation coefficients, intraclass correlation coefficients, associated 95% CI of the measurements, and mean absolute percentage error scores for measuring total sleep time.

| Tracker | n | Spearman r (95% CI) | Intraclass correlation coefficient (ICC) | ICC 95% CI | Mean absolute percentage error (%) |
| --- | --- | --- | --- | --- | --- |
| Geonaut | 15 | 0.04 (−0.45 to 0.60) | 0.05 | −0.44 to 0.52 | 26.59 |
| iWown | 24 | 0.57a (0.19 to 0.84) | 0.52a | 0.18 to 0.76 | 21.33 |
| MyKronoz | 14 | 0.45 (−0.22 to 0.86) | 0.40a | −0.07 to 0.74 | 38.15 |
| Nokia | 14 | 0.66b (0.30 to 0.88) | 0.30a | −0.10 to 0.63 | 38.63 |
| VeryFit | 24 | 0.73b (0.48 to 0.83) | 0.26 | −0.11 to 0.61 | 30.73 |
| Xiaomi | 21 | 0.21 (−0.34 to 0.68) | 0.13 | −0.13 to 0.45 | 39.08 |
| Fitbit | 134 | 0.57b (0.40 to 0.69) | 0.46b | 0.28 to 0.60 | 20.57 |

aP<.05.

bP<.001.

The correlations for TST are also illustrated in Figure 7. This figure visualizes the large discrepancy between the Spearman correlation coefficient and the ICC, specifically evident for Nokia and VeryFit. Although a clear relation is visible between the measurements (Spearman r), almost all data points are above the line that represents the perfect agreement between the measurements. This indicates a systematic overestimation of the TST measurements of Nokia and VeryFit compared with the convergent measure. Figure 7 also shows the largest scatter for MyKronoz.
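The way a systematic offset can leave a rank correlation intact while lowering an agreement coefficient can be sketched as follows. This is a minimal illustration, not the study's analysis: the source does not state here which ICC form was used, so a two-way, single-measure, absolute-agreement ICC is assumed; the rank function ignores ties; all function names and sleep values are made up.

```python
from statistics import mean


def ranks(values):
    """Rank values from 1..n (assumption: no tied values)."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, index in enumerate(order, start=1):
        result[index] = rank
    return result


def spearman(x, y):
    """Spearman correlation computed as the Pearson correlation of the ranks."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    num = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    den = (sum((a - mx) ** 2 for a in rx) * sum((b - my) ** 2 for b in ry)) ** 0.5
    return num / den


def icc_absolute_agreement(x, y):
    """Two-way, single-measure, absolute-agreement ICC for two devices (assumed form)."""
    n, k = len(x), 2
    grand = (sum(x) + sum(y)) / (n * k)
    row_means = [(a + b) / 2 for a, b in zip(x, y)]
    col_means = [sum(x) / n, sum(y) / n]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((v - grand) ** 2 for v in list(x) + list(y))
    ss_error = ss_total - ss_rows - ss_cols
    msr = ss_rows / (n - 1)                # between-nights mean square
    msc = ss_cols / (k - 1)                # between-devices mean square
    mse = ss_error / ((n - 1) * (k - 1))   # residual mean square
    return (msr - mse) / (msr + (k - 1) * mse + k * (msc - mse) / n)


# Made-up total sleep time in minutes: the tracker reads roughly 100 min higher every night
reference_tst = [300, 330, 360, 390, 420, 450]
tracker_tst = [405, 425, 465, 485, 525, 545]

print(round(spearman(reference_tst, tracker_tst), 2))
print(round(icc_absolute_agreement(reference_tst, tracker_tst), 2))
```

In this made-up example, the ordering of nights is preserved perfectly, so the rank correlation is high, whereas the constant offset inflates the between-device variance and pulls the absolute-agreement ICC down.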

Figure 7.

Correlations between total sleep time estimates from the trackers and the SenseWear.

Bland-Altman plots for TST revealed the narrowest limits of agreement for VeryFit (263.39 min) and the broadest limits for Geonaut (558.25 min). These results are in line with the findings based on the Spearman r coefficient and the scatter of the data points. The mean differences with the SenseWear armband measurements and the limits of agreement are presented in Table 6 and illustrated in Figure 8.

Table 6.

Mean differences of total sleep time measures with the SenseWear and limits of agreement of the activity trackers.

| Tracker | n | Mean difference of total sleep time (SenseWear−Tracker) | Limits of agreement, range | Width of the limits of agreement (minutes) |
| --- | --- | --- | --- | --- |
| Geonaut | 15 | 44.93 | −234.19 to 324.06 | 558.25 |
| iWown | 24 | −36.79 | −221.22 to 147.63 | 368.85 |
| MyKronoz | 14 | −82.29 | −330.55 to 165.98 | 496.53 |
| Nokia | 24 | −106.46 | −293.72 to 80.80 | 374.52 |
| VeryFit | 24 | −97.63 | −229.32 to 34.07 | 263.39 |
| Xiaomi | 21 | −112.14 | −355.40 to 131.12 | 486.52 |
| Fitbit | 134 | −36.91 | −213.9 to 140.16 | 354.14 |

Figure 8.

Bland-Altman plots of the trackers for measuring total sleep time. The middle line shows the mean difference between the measurements of total sleep time of the wearables and the SenseWear (positive values indicate an underestimation by the wearable and negative values indicate an overestimation), and the dashed lines indicate the limits of agreement (mean difference ± 1.96×SD of the difference scores).

Thus, low-cost trackers showed low (eg, Geonaut and Xiaomi) to strong (eg, VeryFit) correlations for measuring TST, with some trackers such as VeryFit and Nokia systematically overestimating TST. Fitbit showed low (based on the ICC) to moderate (based on the Spearman r coefficient) validity for measuring TST. VeryFit outperformed Fitbit on the Spearman r coefficient and the width of the limits of agreement but not on the ICC or the MAPE score.

Discussion

Principal Findings

This study examined the validity of low-cost trackers (≤€50 [US $56]) for measuring adults’ steps, moderate-to-vigorous PA, and TST in free-living conditions. In general, the low-cost trackers were most accurate in the measurement of steps, somewhat accurate for the measurement of sleep, and lacked validity for the measurement of MVPA time. Validity ranged widely between the various low-cost trackers tested. The performance of the best of the low-cost trackers approached or even exceeded that of the Fitbit Charge HR (the high-cost comparison tracker), whereas the worst of the low-cost trackers had weak validity. Notably, VeryFit 2.0 performed relatively strongly across both sleep and steps domains, whereas the Xiaomi Mi Band 2 appeared to have the highest validity for the measurement of steps.

The finding that many of the low-cost trackers are accurate for measuring steps is promising, given that steps is the metric reported by users of trackers as being of most interest [63]. We found that the low-cost trackers were most accurate for measuring steps in comparison with sleep and minutes of MVPA. This order for validity (ie, measuring steps more accurately than measuring sleep and in turn more accurately than measuring MVPA) is consistent with findings for these metrics in high-cost trackers [39], although in our study, the trackers demonstrated weak-to-moderate validity for MVPA minutes (Spearman r ranged from 0.24 to 0.56), whereas previous research in high-cost trackers has suggested moderate-to-strong validity (eg, Ferguson et al’s [39] study of high-cost trackers reported Pearson r ranging from 0.52 to 0.91). It is possible that some of the differences between the reference values for MVPA derived from the ActiGraph accelerometers and the values recorded by the low-cost trackers originated from measurement error associated with the reference device. Furthermore, a possible explanation for the weak-to-moderate validity found in our study could be that the PA variables measured by the low-cost trackers were not explicitly identified as MVPA. However, because all devices had set a goal of 30-min PA per day (similar to the MVPA recommendations for adults), we assumed that the measured variable corresponded to MVPA as measured by the ActiGraph accelerometer. Nevertheless, specific information regarding the intensity cut-points of the algorithms was neither provided nor publicly available for these low-cost trackers. Therefore, the discrepancies in this study may be a result of both definitional and measurement problems (eg, algorithm sensitivity). In this regard, it would be very useful if manufacturers provided more insight into the cut-points and algorithms used to translate the raw data into useful information (such as steps and minutes of MVPA).

Although research-grade accelerometers are the closest we have to a gold standard for the measurement of MVPA in free-living conditions, the MVPA values derived from them can vary by an order of magnitude depending on parameters such as epoch length and cut-points [64]. Furthermore, wear position has an impact on the validity of MVPA. Studies comparing the validity of research-grade accelerometers at different body locations consistently show that the hip position is more accurate than the wrist [65]. Despite the recognized superior validity of hip-worn accelerometers and trackers, over the past 5 years or so, there has been a shift for both consumer trackers and research-grade accelerometers to increasingly be designed for wrist wear, presumably because of improved logistics, such as comfort and convenience. This clear shift in the market highlights that validity should not be considered the be-all and end-all. Issues such as usability, compliance, and adherence are also important, although they tend to receive less attention in the scientific literature.
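The dependence of MVPA minutes on the chosen cut-point can be illustrated with a short Python sketch. Everything in it is hypothetical: the function name `mvpa_minutes`, the two cut-point values, and the accelerometer counts are made up for illustration and do not correspond to the settings used in this study or to any published calibration.

```python
def mvpa_minutes(counts_per_minute, cut_point):
    """Count the 1-minute epochs at or above the chosen intensity cut-point."""
    return sum(1 for counts in counts_per_minute if counts >= cut_point)


# Made-up accelerometer counts for ten 1-minute epochs (illustration only)
epochs = [120, 900, 1600, 2100, 2600, 3100, 1800, 400, 2400, 5000]

# Two hypothetical cut-points; real values depend on the device and calibration study used
lenient_cut_point = 1500
strict_cut_point = 2500

print(mvpa_minutes(epochs, lenient_cut_point))
print(mvpa_minutes(epochs, strict_cut_point))
```

The same raw data yield different MVPA totals under the two thresholds, which is why undisclosed cut-points in consumer trackers make comparisons with a reference device difficult to interpret.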

Evidence for the validity of the low-cost trackers for the measurement of sleep duration was mixed. Some trackers performed quite strongly. For example, the top performing tracker, VeryFit 2.0, demonstrated a Spearman r of 0.73 for TST compared with the reference device (SenseWear), which was actually superior to the Fitbit Charge HR (r=0.57). However, the Bland-Altman analyses revealed that VeryFit 2.0 tended to overestimate sleep by around 1.5 hours per night compared with the reference device. If this overestimation was consistent, it could be argued that the data might still be useful for self-monitoring changes in sleep over time. However, the Bland-Altman 95% limits of agreement spanned a range of 263 min, suggesting that the extent of overestimation varied considerably across measurement occasions. It, therefore, seems questionable whether the sleep estimates derived from VeryFit 2.0 are accurate enough to help a user meaningfully monitor or change their sleeping patterns.
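As a worked check of these figures, the VeryFit values in Table 6 (mean difference −97.63 min, width of the limits of agreement 263.39 min) can be converted as follows. The SD of the differences is not reported directly; it is back-calculated here under the assumption that the limits are the mean difference ± 1.96×SD, as stated in the caption of Figure 8.

$$
\frac{97.63\ \text{min}}{60\ \text{min/h}} \approx 1.63\ \text{h}, \qquad
\text{SD}_{\text{diff}} \approx \frac{263.39}{2 \times 1.96} \approx 67.2\ \text{min}, \qquad
-97.63 \pm 1.96 \times 67.2 \approx (-229.3,\ 34.1)\ \text{min}
$$

Under this assumption, the recovered interval matches the reported limits of agreement (−229.32 to 34.07 min), so the overestimation on a given night could plausibly range from almost 4 hours to slightly below zero.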

The finding that low-cost trackers have strong validity for measuring steps and some validity for measuring sleep is likely to be of interest to public health researchers and clinicians alike. There is considerable interest in using activity trackers to intervene on lifestyle activities, with a recent meta-analysis finding positive evidence for short-term effectiveness but less evidence for sustained effects [66]. There is well-recognized usage attrition associated with activity trackers over time. For example, a 2017 study gave entry-level Fitbit trackers to 711 users and found that approximately 50% of participants had stopped using them at 6 months and 80% had stopped by 10 months [67]. The most common reasons for not using the Fitbit were technical failure or difficulty (57%), losing the device (13%), or forgetting to wear it (13%). Nonetheless, low-cost devices fill an important gap in the consumer market: they sit between high-cost activity trackers, which are prohibitively expensive to provide to clinical or research cohorts at scale (unless sizable funding is available), and traditional pedometers, which are likely to be less aesthetically pleasing and acceptable to wearers [19,63]. The findings of this study, which highlight the Xiaomi Mi Band 2 and VeryFit 2.0 devices as having acceptable validity, are therefore helpful. We bought the trackers as individual buyers on the consumer market. Researchers intending to use these in large-scale research cohorts may be able to purchase them in bulk at an even lower cost. Another promising feature of VeryFit 2.0 is that it has an application programming interface (API) that allows software developers to create custom software that can be integrated directly with the tracker (ie, data from the tracker can be sent automatically to the custom software). There is a growing trend for eHealth and mobile health research to use the Fitbit and Garmin APIs [68-70]. Therefore, validated low-cost trackers with APIs offer new data collection and intervention possibilities.

Our study included trackers with and without heart rate measurements. The trackers with the highest validity included heart rate measures, whereas those without showed lower validity. However, we cannot conclude from this study that the heart rate function increased validity. Studies testing the same model with and without the heart rate function, and assessing the validity of the heart rate measurement itself, would be needed to make this claim. The price of the included trackers ranged from around €25 (US $28) to approximately €50 (US $56). The prices of the most accurate models, VeryFit 2.0 and Xiaomi Mi Band 2, are in the middle of this range (€30-€40 [US $33-US $44]), which makes these two models attractive and accessible to the general public. Thus, price may not be the determining factor in the validity of the trackers: more expensive within this range is not necessarily better. Conversely, we cannot conclude that price plays no role or that trackers even less expensive than those included here (<€25 [US $28]) would also be valid. Indeed, a study on pedometers provided for free as gadgets with cereal boxes found that those were not valid [71].

The validity evidence from this study for low-cost devices measuring steps, MVPA, and TST was not unequivocally good across the devices, but validity is not the only consideration: user experience is also extremely important. A device that has high validity may not necessarily offer a positive user experience. Future research examining the user experience of low-cost trackers (eg, focusing on issues such as functionality, reliability, and ease of use, both of the device itself and its accompanying app) will be valuable. Our preliminary experiences suggest that the user experience of the low-cost trackers may be less positive than that of the high-cost trackers (eg, we tended to experience fewer technical issues with Fitbit trackers than with the other devices in this study). It can be assumed that the higher price of the high-cost trackers is partly determined by the investments made by the manufacturer to improve the user experience and to better develop the app supporting the tracker. Moreover, the low-cost activity trackers appear most valid for measuring steps. Pedometers that count steps are available at an even lower cost, but unlike activity trackers, they offer little additional functionality (eg, feedback, information, and social support) in an accompanying app and are considered less usable than activity trackers [72-74]. Further work to explore these issues more rigorously and in greater depth is warranted.

Strengths and Limitations

A strength of our study is that it is the first to scrutinize the validity of low-cost trackers, addressing an important gap in the scientific literature to date. Methodological strengths of the study are the relatively large number of devices that were tested using the same methodology (allowing a direct comparison of the devices’ performance), the testing of multiple metrics (steps, MVPA, and sleep), and the efforts made to minimize bias, for example, by randomizing the order in which participants wore the devices. Limitations included that our sample was relatively young and healthy. On the basis of previous literature, validity for measuring steps is likely to be somewhat lower in older and clinical populations (eg, people with obesity) [75]. As already noted, our reference devices were research-grade accelerometers with known validity limitations of their own. Therefore, our results represent convergent validity rather than criterion validity, and there is a risk that we may be underestimating the low-cost trackers’ true validity. A further limitation is that this is a fast-moving field with new devices continually entering and exiting the market. In particular, since our study started, the Xiaomi MiBand 2 has been replaced by its successor, the MiBand 3. Therefore, it would be beneficial for future research to continuously investigate the validity of new low-cost trackers and other emerging devices. Furthermore, insight into the algorithms and cutoffs used would be beneficial.

Conclusions

This study was the first to examine the validity of low-cost trackers. It found that validity was strongest for the measurement of steps; there was some evidence of validity for the measurement of sleep, whereas validity for the measurement of MVPA time was weak. Validity ranged between devices, with Xiaomi having the highest validity for the measurement of steps and VeryFit performing relatively strongly across both sleep and steps domains. The tested low-cost trackers hold promise for the cost-efficient measurement of movement behaviors. Further research investigating the user experience of low-cost devices and their accompanying apps is needed before these devices can be confidently recommended.

Abbreviations

- API: application programming interface

- eHealth: electronic health

- ICC: intraclass correlation coefficient

- MAPE: mean absolute percentage error

- MVPA: moderate-to-vigorous physical activity

- PA: physical activity

- SB: sedentary behavior

- SES: socioeconomic status

- TST: total sleep time

Appendix

Parameter estimates from linear mixed effects models examining the association between commercial trackers and ActiGraph (steps, moderate-to-vigorous physical activity) and SenseWear (total sleep time).

Footnotes

Conflicts of Interest: None declared.

References

- 1. Bassuk SS, Manson JE. Epidemiological evidence for the role of physical activity in reducing risk of type 2 diabetes and cardiovascular disease. J Appl Physiol (1985) 2005 Sep;99(3):1193–204. doi: 10.1152/japplphysiol.00160.2005. http://journals.physiology.org/doi/full/10.1152/japplphysiol.00160.2005?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed.

- 2. Cappuccio FP, Cooper D, D'Elia L, Strazzullo P, Miller MA. Sleep duration predicts cardiovascular outcomes: a systematic review and meta-analysis of prospective studies. Eur Heart J. 2011 Jun;32(12):1484–92. doi: 10.1093/eurheartj/ehr007.

- 3. Cappuccio FP, D'Elia L, Strazzullo P, Miller MA. Quantity and quality of sleep and incidence of type 2 diabetes: a systematic review and meta-analysis. Diabetes Care. 2010 Feb;33(2):414–20. doi: 10.2337/dc09-1124. http://europepmc.org/abstract/MED/19910503.

- 4. Guallar-Castillón P, Bayán-Bravo A, León-Muñoz LM, Balboa-Castillo T, López-García E, Gutierrez-Fisac JL, Rodríguez-Artalejo F. The association of major patterns of physical activity, sedentary behavior and sleep with health-related quality of life: a cohort study. Prev Med. 2014 Oct;67:248–54. doi: 10.1016/j.ypmed.2014.08.015.

- 5. Magee L, Hale L. Longitudinal associations between sleep duration and subsequent weight gain: a systematic review. Sleep Med Rev. 2012 Jun;16(3):231–41. doi: 10.1016/j.smrv.2011.05.005. http://europepmc.org/abstract/MED/21784678.

- 6. Maher CA, Mire E, Harrington DM, Staiano AE, Katzmarzyk PT. The independent and combined associations of physical activity and sedentary behavior with obesity in adults: NHANES 2003-06. Obesity (Silver Spring) 2013 Dec;21(12):E730–7. doi: 10.1002/oby.20430.

- 7. Cassidy S, Chau JY, Catt M, Bauman A, Trenell MI. Cross-sectional study of diet, physical activity, television viewing and sleep duration in 233,110 adults from the UK Biobank; the behavioural phenotype of cardiovascular disease and type 2 diabetes. BMJ Open. 2016 Mar 15;6(3):e010038. doi: 10.1136/bmjopen-2015-010038. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=27008686.

- 8. Chastin SF, Palarea-Albaladejo J, Dontje ML, Skelton DA. Combined effects of time spent in physical activity, sedentary behaviors and sleep on obesity and cardio-metabolic health markers: a novel compositional data analysis approach. PLoS One. 2015;10(10):e0139984. doi: 10.1371/journal.pone.0139984. http://dx.plos.org/10.1371/journal.pone.0139984.

- 9. Kok G, Gottlieb NH, Peters GY, Mullen PD, Parcel GS, Ruiter RA, Fernández ME, Markham C, Bartholomew LK. A taxonomy of behaviour change methods: an Intervention Mapping approach. Health Psychol Rev. 2016 Sep;10(3):297–312. doi: 10.1080/17437199.2015.1077155. http://europepmc.org/abstract/MED/26262912.

- 10. Michie S, Abraham C, Whittington C, McAteer J, Gupta S. Effective techniques in healthy eating and physical activity interventions: a meta-regression. Health Psychol. 2009 Nov;28(6):690–701. doi: 10.1037/a0016136.

- 11. Middelweerd A, van der Laan DM, van Stralen MM, Mollee JS, Stuij M, te Velde SJ, Brug J. What features do Dutch university students prefer in a smartphone application for promotion of physical activity? A qualitative approach. Int J Behav Nutr Phys Act. 2015 Mar 1;12:31. doi: 10.1186/s12966-015-0189-1. https://ijbnpa.biomedcentral.com/articles/10.1186/s12966-015-0189-1.

- 12. DeSmet A, de Bourdeaudhuij I, Chastin S, Crombez G, Maddison R, Cardon G. Adults' preferences for behavior change techniques and engagement features in a mobile app to promote 24-hour movement behaviors: cross-sectional survey study. JMIR Mhealth Uhealth. 2019 Dec 20;7(12):e15707. doi: 10.2196/15707. https://mhealth.jmir.org/2019/12/e15707/

- 13. Abraham C, Michie S. A taxonomy of behavior change techniques used in interventions. Health Psychol. 2008 May;27(3):379–87. doi: 10.1037/0278-6133.27.3.379.

- 14. Hickey AM, Freedson PS. Utility of consumer physical activity trackers as an intervention tool in cardiovascular disease prevention and treatment. Prog Cardiovasc Dis. 2016;58(6):613–9. doi: 10.1016/j.pcad.2016.02.006.

- 15. Lauderdale DS, Knutson KL, Yan LL, Liu K, Rathouz PJ. Self-reported and measured sleep duration: how similar are they? Epidemiology. 2008 Nov;19(6):838–45. doi: 10.1097/EDE.0b013e318187a7b0. http://europepmc.org/abstract/MED/18854708.

- 16. Gomersall SR, Ng N, Burton NW, Pavey TG, Gilson ND, Brown WJ. Estimating physical activity and sedentary behavior in a free-living context: a pragmatic comparison of consumer-based activity trackers and ActiGraph accelerometry. J Med Internet Res. 2016 Sep 7;18(9):e239. doi: 10.2196/jmir.5531. https://www.jmir.org/2016/9/e239/

- 17. Rosenberger ME, Buman MP, Haskell WL, McConnell MV, Carstensen LL. Twenty-four hours of sleep, sedentary behavior, and physical activity with nine wearable devices. Med Sci Sports Exerc. 2016 Mar;48(3):457–65. doi: 10.1249/MSS.0000000000000778. http://europepmc.org/abstract/MED/26484953.

- 18. Kooiman TJ, Dontje ML, Sprenger SR, Krijnen WP, van der Schans CP, de Groot M. Reliability and validity of ten consumer activity trackers. BMC Sports Sci Med Rehabil. 2015;7:24. doi: 10.1186/s13102-015-0018-5. https://bmcsportsscimedrehabil.biomedcentral.com/articles/10.1186/s13102-015-0018-5.

- 19. Alley S, Schoeppe S, Guertler D, Jennings C, Duncan MJ, Vandelanotte C. Interest and preferences for using advanced physical activity tracking devices: results of a national cross-sectional survey. BMJ Open. 2016 Jul 7;6(7):e011243. doi: 10.1136/bmjopen-2016-011243. http://bmjopen.bmj.com/cgi/pmidlookup?view=long&pmid=27388359.

- 20. Vandelanotte C, Duncan MJ, Maher CA, Schoeppe S, Rebar AL, Power DA, Short CE, Doran CM, Hayman MJ, Alley SJ. The effectiveness of a web-based computer-tailored physical activity intervention using Fitbit activity trackers: randomized trial. J Med Internet Res. 2018 Dec 18;20(12):e11321. doi: 10.2196/11321. https://www.jmir.org/2018/12/e11321/

- 21. Olsen HM, Brown WJ, Kolbe-Alexander T, Burton NW. A brief self-directed intervention to reduce office employees' sedentary behavior in a flexible workplace. J Occup Environ Med. 2018 Oct;60(10):954–9. doi: 10.1097/JOM.0000000000001389.

- 22. Vandendriessche K, de Marez L. Imec R&D. Leuven: imec; 2019. [2019-08-17]. [Digital Media Trends in Flanders] https://www.imec.be/static/476531-IMEC-Digimeter-Rapport%202020-WEB-f6fe6fa9efafc16bd174620ca1f90376.PDF?utm_source=website&utm_medium=button&utm_campaign=digimeter_rapport_2019.

- 23. Jia Y, Wang W, Wen D, Liang L, Gao L, Lei J. Perceived user preferences and usability evaluation of mainstream wearable devices for health monitoring. PeerJ. 2018;6:e5350. doi: 10.7717/peerj.5350.

- 24. Beenackers MA, Kamphuis CB, Giskes K, Brug J, Kunst AE, Burdorf A, van Lenthe FJ. Socioeconomic inequalities in occupational, leisure-time, and transport related physical activity among European adults: a systematic review. Int J Behav Nutr Phys Act. 2012 Sep 19;9:116. doi: 10.1186/1479-5868-9-116. https://ijbnpa.biomedcentral.com/articles/10.1186/1479-5868-9-116.

- 25. Lallukka T, Sares-Jäske L, Kronholm E, Sääksjärvi K, Lundqvist A, Partonen T, Rahkonen O, Knekt P. Sociodemographic and socioeconomic differences in sleep duration and insomnia-related symptoms in Finnish adults. BMC Public Health. 2012 Jul 28;12:565. doi: 10.1186/1471-2458-12-565. https://bmcpublichealth.biomedcentral.com/articles/10.1186/1471-2458-12-565.

- 26. Murray E, Hekler E, Andersson G, Collins L, Doherty A, Hollis C, Rivera DE, West R, Wyatt JC. Evaluating digital health interventions: key questions and approaches. Am J Prev Med. 2016 Nov;51(5):843–51. doi: 10.1016/j.amepre.2016.06.008. http://europepmc.org/abstract/MED/27745684.

- 27. Sørensen K, van den Broucke S, Fullam J, Doyle G, Pelikan J, Slonska Z, Brand H, (HLS-EU) Consortium Health Literacy Project European. Health literacy and public health: a systematic review and integration of definitions and models. BMC Public Health. 2012 Jan 25;12:80. doi: 10.1186/1471-2458-12-80. https://bmcpublichealth.biomedcentral.com/articles/10.1186/1471-2458-12-80.

- 28. van der Vaart R, Drossaert C. Development of the digital health literacy instrument: measuring a broad spectrum of Health 1.0 and Health 2.0 skills. J Med Internet Res. 2017 Jan 24;19(1):e27. doi: 10.2196/jmir.6709. https://www.jmir.org/2017/1/e27/

- 29. Neter E, Brainin E. eHealth literacy: extending the digital divide to the realm of health information. J Med Internet Res. 2012 Jan 27;14(1):e19. doi: 10.2196/jmir.1619. https://www.jmir.org/2012/1/e19/

- 30. Porter CE, Donthu N. Using the technology acceptance model to explain how attitudes determine internet usage: The role of perceived access barriers and demographics. J Bus Res. 2006 Sep;59(9):999–1007. doi: 10.1016/j.jbusres.2006.06.003.

- 31. Piwek L, Ellis DA, Andrews S, Joinson A. The rise of consumer health wearables: promises and barriers. PLoS Med. 2016 Feb;13(2):e1001953. doi: 10.1371/journal.pmed.1001953. http://dx.plos.org/10.1371/journal.pmed.1001953.

- 32. Kononova A, Li L, Kamp K, Bowen M, Rikard RV, Cotten S, Peng W. The use of wearable activity trackers among older adults: focus group study of tracker perceptions, motivators, and barriers in the maintenance stage of behavior change. JMIR Mhealth Uhealth. 2019 Apr 5;7(4):e9832. doi: 10.2196/mhealth.9832. https://mhealth.jmir.org/2019/4/e9832/

- 33. Evenson KR, Goto MM, Furberg RD. Systematic review of the validity and reliability of consumer-wearable activity trackers. Int J Behav Nutr Phys Act. 2015 Dec 18;12:159. doi: 10.1186/s12966-015-0314-1. https://ijbnpa.biomedcentral.com/articles/10.1186/s12966-015-0314-1.

- 34.Tully MA, McBride C, Heron L, Hunter RF. The validation of Fibit Zip physical activity monitor as a measure of free-living physical activity. BMC Res Notes. 2014 Dec 23;7:952. doi: 10.1186/1756-0500-7-952. https://bmcresnotes.biomedcentral.com/articles/10.1186/1756-0500-7-952. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Sasaki JE, Hickey A, Mavilia M, Tedesco J, John D, Keadle SK, Freedson PS. Validation of the Fitbit wireless activity tracker for prediction of energy expenditure. J Phys Act Health. 2015 Feb;12(2):149–54. doi: 10.1123/jpah.2012-0495. [DOI] [PubMed] [Google Scholar]

- 36.Sushames A, Edwards A, Thompson F, McDermott R, Gebel K. Validity and reliability of Fitbit Flex for step count, moderate to vigorous physical activity and activity energy expenditure. PLoS One. 2016;11(9):e0161224. doi: 10.1371/journal.pone.0161224. http://dx.plos.org/10.1371/journal.pone.0161224. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Nelson MB, Kaminsky LA, Dickin DC, Montoye AH. Validity of consumer-based physical activity monitors for specific activity types. Med Sci Sports Exerc. 2016 Aug;48(8):1619–28. doi: 10.1249/MSS.0000000000000933. [DOI] [PubMed] [Google Scholar]

- 38.Dominick GM, Winfree KN, Pohlig RT, Papas MA. Physical activity assessment between consumer- and research-grade accelerometers: a comparative study in free-living conditions. JMIR Mhealth Uhealth. 2016 Sep 19;4(3):e110. doi: 10.2196/mhealth.6281. https://mhealth.jmir.org/2016/3/e110/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Ferguson T, Rowlands AV, Olds T, Maher C. The validity of consumer-level, activity monitors in healthy adults worn in free-living conditions: a cross-sectional study. Int J Behav Nutr Phys Act. 2015 Mar 27;12:42. doi: 10.1186/s12966-015-0201-9. https://ijbnpa.biomedcentral.com/articles/10.1186/s12966-015-0201-9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Schneider M, Chau L. Validation of the Fitbit Zip for monitoring physical activity among free-living adolescents. BMC Res Notes. 2016 Sep 21;9(1):448. doi: 10.1186/s13104-016-2253-6. https://bmcresnotes.biomedcentral.com/articles/10.1186/s13104-016-2253-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Mantua J, Gravel N, Spencer R. Reliability of sleep measures from four personal health monitoring devices compared to research-based actigraphy and polysomnography. Sensors (Basel) 2016 May 5;16(5):pii: E646. doi: 10.3390/s16050646. http://www.mdpi.com/resolver?pii=s16050646. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42.de Zambotti M, Baker FC, Willoughby AR, Godino JG, Wing D, Patrick K, Colrain IM. Measures of sleep and cardiac functioning during sleep using a multi-sensory commercially-available wristband in adolescents. Physiol Behav. 2016 May 1;158:143–9. doi: 10.1016/j.physbeh.2016.03.006. http://europepmc.org/abstract/MED/26969518. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Dickinson DL, Cazier J, Cech T. A practical validation study of a commercial accelerometer using good and poor sleepers. Health Psychol Open. 2016 Jul;3(2):2055102916679012. doi: 10.1177/2055102916679012. http://europepmc.org/abstract/MED/28815052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Brooke SM, An H, Kang S, Noble JM, Berg KE, Lee J. Concurrent validity of wearable activity trackers under free-living conditions. J Strength Cond Res. 2017 Apr;31(4):1097–106. doi: 10.1519/JSC.0000000000001571. [DOI] [PubMed] [Google Scholar]

- 45.Brage S, Brage N, Franks PW, Ekelund U, Wong M, Andersen LB, Froberg K, Wareham NJ. Branched equation modeling of simultaneous accelerometry and heart rate monitoring improves estimate of directly measured physical activity energy expenditure. J Appl Physiol (1985) 2004 Jan;96(1):343–51. doi: 10.1152/japplphysiol.00703.2003. http://journals.physiology.org/doi/full/10.1152/japplphysiol.00703.2003?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub%3dpubmed. [DOI] [PubMed] [Google Scholar]

- 46.van de Water AT, Holmes A, Hurley D. Objective measurements of sleep for non-laboratory settings as alternatives to polysomnography--a systematic review. J Sleep Res. 2011 Mar;20(1 Pt 2):183–200. doi: 10.1111/j.1365-2869.2009.00814.x. doi: 10.1111/j.1365-2869.2009.00814.x. [DOI] [PubMed] [Google Scholar]

- 47.Colon A, Duffy J. PCMag. 2020. Feb 13, [2019-05-17]. The Best Fitness Trackers for 2020 https://www.pcmag.com/picks/the-best-fitness-trackers.

- 48.Wahl Y, Düking P, Droszez A, Wahl P, Mester J. Criterion-validity of commercially available physical activity tracker to estimate step count, covered distance and energy expenditure during sports conditions. Front Physiol. 2017;8:725. doi: 10.3389/fphys.2017.00725. doi: 10.3389/fphys.2017.00725. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Xie J, Wen D, Liang L, Jia Y, Gao L, Lei J. Evaluating the validity of current mainstream wearable devices in fitness tracking under various physical activities: comparative study. JMIR Mhealth Uhealth. 2018 Apr 12;6(4):e94. doi: 10.2196/mhealth.9754. https://mhealth.jmir.org/2018/4/e94/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Mackert M, Mabry-Flynn A, Champlin S, Donovan EE, Pounders K. Health literacy and health information technology adoption: the potential for a new digital divide. J Med Internet Res. 2016 Oct 4;18(10):e264. doi: 10.2196/jmir.6349. https://www.jmir.org/2016/10/e264/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Rowlands AV, Stone MR, Eston RG. Influence of speed and step frequency during walking and running on motion sensor output. Med Sci Sports Exerc. 2007 Apr;39(4):716–27. doi: 10.1249/mss.0b013e318031126c. [DOI] [PubMed] [Google Scholar]

- 52.Le Masurier GC, Lee SM, Tudor-Locke C. Motion sensor accuracy under controlled and free-living conditions. Med Sci Sports Exerc. 2004 May;36(5):905–10. doi: 10.1249/01.mss.0000126777.50188.73. [DOI] [PubMed] [Google Scholar]

- 53.Le Masurier GC, Tudor-Locke C. Comparison of pedometer and accelerometer accuracy under controlled conditions. Med Sci Sports Exerc. 2003 May;35(5):867–71. doi: 10.1249/01.MSS.0000064996.63632.10. [DOI] [PubMed] [Google Scholar]

- 54.Freedson PS, Melanson E, Sirard J. Calibration of the Computer Science and Applications, Inc accelerometer. Med Sci Sports Exerc. 1998 May;30(5):777–81. doi: 10.1097/00005768-199805000-00021. [DOI] [PubMed] [Google Scholar]

- 55.Santos-Lozano A, Santín-Medeiros F, Cardon G, Torres-Luque G, Bailón R, Bergmeir C, Ruiz JR, Lucia A, Garatachea N. Actigraph GT3X: validation and determination of physical activity intensity cut points. Int J Sports Med. 2013 Nov;34(11):975–82. doi: 10.1055/s-0033-1337945. [DOI] [PubMed] [Google Scholar]

- 56.Rich C, Geraci M, Griffiths L, Sera F, Dezateux C, Cortina-Borja M. Quality control methods in accelerometer data processing: defining minimum wear time. PLoS One. 2013;8(6):e67206. doi: 10.1371/journal.pone.0067206. http://dx.plos.org/10.1371/journal.pone.0067206. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Troiano RP, Berrigan D, Dodd KW, Mâsse LC, Tilert T, McDowell M. Physical activity in the United States measured by accelerometer. Med Sci Sports Exerc. 2008 Jan;40(1):181–8. doi: 10.1249/mss.0b013e31815a51b3. [DOI] [PubMed] [Google Scholar]

- 58.Shin M, Swan P, Chow CM. The validity of Actiwatch2 and SenseWear armband compared against polysomnography at different ambient temperature conditions. Sleep Sci. 2015;8(1):9–15. doi: 10.1016/j.slsci.2015.02.003. https://linkinghub.elsevier.com/retrieve/pii/S1984-0063(15)00009-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 59.Malavolti M, Pietrobelli A, Dugoni M, Poli M, Romagnoli E, de Cristofaro P, Battistini NC. A new device for measuring resting energy expenditure (REE) in healthy subjects. Nutr Metab Cardiovasc Dis. 2007 Jun;17(5):338–43. doi: 10.1016/j.numecd.2005.12.009. [DOI] [PubMed] [Google Scholar]

- 60.Landis JR, Koch GG. The measurement of observer agreement for categorical data. Biometrics. 1977 Mar;33(1):159–74. [PubMed] [Google Scholar]

- 61.Kim Y, Park I, Kang M. Convergent validity of the international physical activity questionnaire (IPAQ): meta-analysis. Public Health Nutr. 2013 Mar;16(3):440–52. doi: 10.1017/S1368980012002996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Craig CL, Marshall AL, Sjöström M, Bauman AE, Booth ML, Ainsworth BE, Pratt M, Ekelund U, Yngve A, Sallis JF, Oja P. International physical activity questionnaire: 12-country reliability and validity. Med Sci Sports Exerc. 2003 Aug;35(8):1381–95. doi: 10.1249/01.MSS.0000078924.61453.FB. [DOI] [PubMed] [Google Scholar]

- 63.Maher C, Ryan J, Ambrosi C, Edney S. Users' experiences of wearable activity trackers: a cross-sectional study. BMC Public Health. 2017 Nov 15;17(1):880. doi: 10.1186/s12889-017-4888-1. https://bmcpublichealth.biomedcentral.com/articles/10.1186/s12889-017-4888-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Banda JA, Haydel KF, Davila T, Desai M, Bryson S, Haskell WL, Matheson D, Robinson TN. Effects of varying epoch lengths, wear time algorithms, and activity cut-points on estimates of child sedentary behavior and physical activity from accelerometer data. PLoS One. 2016;11(3):e0150534. doi: 10.1371/journal.pone.0150534. http://dx.plos.org/10.1371/journal.pone.0150534. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Rosenberger ME, Haskell WL, Albinali F, Mota S, Nawyn J, Intille S. Estimating activity and sedentary behavior from an accelerometer on the hip or wrist. Med Sci Sports Exerc. 2013 May;45(5):964–75. doi: 10.1249/MSS.0b013e31827f0d9c. http://europepmc.org/abstract/MED/23247702. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Brickwood KJ, Watson G, O'Brien J, Williams AD. Consumer-based wearable activity trackers increase physical activity participation: systematic review and meta-analysis. JMIR Mhealth Uhealth. 2019 Apr 12;7(4):e11819. doi: 10.2196/11819. https://mhealth.jmir.org/2019/4/e11819/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Hermsen S, Moons J, Kerkhof P, Wiekens C, de Groot M. Determinants for sustained use of an activity tracker: observational study. JMIR Mhealth Uhealth. 2017 Oct 30;5(10):e164. doi: 10.2196/mhealth.7311. https://mhealth.jmir.org/2017/10/e164/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Shaw R, Levine E, Streicher M, Strawbridge E, Gierisch J, Pendergast J, Hale S, Reed S, McVay M, Simmons D, Yancy W, Bennett G, Voils C. Log2Lose: development and lessons learned from a mobile technology weight loss intervention. JMIR Mhealth Uhealth. 2019 Feb 13;7(2):e11972. doi: 10.2196/11972. https://mhealth.jmir.org/2019/2/e11972/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Krans M, van de Wiele L, Bullen N, Diamond M, van Dantzig S, de Ruyter B, van der Lans A. A group intervention to improve physical activity at the workplace. In: Oinas-Kukkonen H, Win K, Karapanos E, Karppinen P, Kyza E, editors. Persuasive Technology: Development of Persuasive and Behavior Change Support Systems. Cham: Springer; 2019. pp. 322–33. [Google Scholar]

- 70.Lynch BM, Nguyen NH, Moore MM, Reeves MM, Rosenberg DE, Boyle T, Vallance JK, Milton S, Friedenreich CM, English DR. A randomized controlled trial of a wearable technology-based intervention for increasing moderate to vigorous physical activity and reducing sedentary behavior in breast cancer survivors: The ACTIVATE Trial. Cancer. 2019 Aug 15;125(16):2846–55. doi: 10.1002/cncr.32143. [DOI] [PubMed] [Google Scholar]

- 71.de Cocker K, Cardon G, de Bourdeaudhuij I. Validity of the inexpensive Stepping Meter in counting steps in free living conditions: a pilot study. Br J Sports Med. 2006 Aug;40(8):714–6. doi: 10.1136/bjsm.2005.025296. http://europepmc.org/abstract/MED/16790485. [DOI] [PMC free article] [PubMed] [Google Scholar]