Abstract

Several studies have attempted to investigate how the brain codes emotional value when processing music of contrasting levels of dissonance; however, the lack of control over specific musical structural characteristics (i.e., dynamics, rhythm, melodic contour or instrumental timbre), which are known to affect perceived dissonance, rendered results difficult to interpret. To account for this, we used functional imaging with an optimized control of the musical structure to obtain a finer characterization of brain activity in response to tonal dissonance. Behavioral findings supported previous evidence for an association between increased dissonance and negative emotion. Results further demonstrated that the manipulation of tonal dissonance through systematically controlled changes in interval content elicited contrasting valence ratings but no significant effects on either arousal or potency. Neuroscientific findings showed an engagement of the left medial prefrontal cortex (mPFC) and the left rostral anterior cingulate cortex (ACC) while participants listened to dissonant compared to consonant music, converging with studies that have proposed a core role of these regions during conflict monitoring (detection and resolution), and in the appraisal of negative emotion and fear‐related information. Both the left and right primary auditory cortices showed stronger functional connectivity with the ACC during the dissonant portion of the task, implying a demand for greater information integration when processing negatively valenced musical stimuli. This study demonstrated that the systematic control of musical dissonance could be applied to isolate valence from the arousal dimension, facilitating a novel access to the neural representation of negative emotion.

Keywords: anterior cingulate cortex, emotion, fMRI, medial prefrontal cortex, music, tonal dissonance, valence

1. INTRODUCTION

Despite the growing empirical interest in unraveling the neural basis of music‐evoked emotions, our understanding of the brain mechanisms involved is still in its infancy. Both the recognition of emotions expressed by different types of music and the emotional states actually induced have been studied with neuroimaging [reviewed in: (Koelsch, 2010, 2014)]. However, even though affective neuroscientists understand the importance of controlling for variables that may confound evidence on emotion processes, only a few of these studies have ensured an adequate level of control over the musical variables. This has made it difficult to link brain activations with specific musical structural features. In the present study, we aimed to examine whether an optimized experimental control of the musical structure could allow a finer differentiation of brain activity in response to music‐evoked emotions. Specifically, the objective was to examine the allocation of distinct levels of tonal consonance/dissonance in the three‐dimensional affective space defined by valence, arousal and potency (Lang, Greenwald, Bradley, & Hamm, 1993; Russell, 1979; Smith & Ellsworth, 1985), and to investigate the neural mechanisms that underlie our emotional response to tonal dissonance (Bigand, Parncutt, & Lerdahl, 1996; Bigand & Parncutt, 1999; Lerdahl & Krumhansl, 2007) while strictly controlling for other variables within the musical structure.

The perspective taken in this investigation supports Leonard Meyer's influential work on “emotion and meaning in music” (Meyer, 1961) and his proposal of “a causal connection between the musical materials, their organization and the connotations evoked”. According to Meyer the confirmation, violation or suspension of musical expectations elicits emotions in the listener. Continuing Meyer's exploration, researchers began to examine associations between certain musical structural features and distinct physiological responses that are strongly connected with emotions (Gabrielsson & Juslin, 2003; Gomez & Danuser, 2007; Koelsch, 2010; Krumhansl, 1997; Sloboda, 1991). Empirical work on the perception of musical dissonance has shown that unexpected chords and increments in tonal dissonance have strong effects on perceived tension (Bigand & Parncutt, 1999; Bigand, Parncutt, & Lerdahl, 1996; Krumhansl, 1996; Lerdahl & Krumhansl, 2007), which has been linked to emotional experience during music listening (Steinbeis, Koelsch, & Sloboda, 2006). Neurophysiological studies have further reported an increased neural engagement of key regions linked to emotion processing when participants listen to dissonant compared to consonant music (Blood, Zatorre, Bermudez, & Evans, 1999; Gosselin et al., 2006; Koelsch, 2005; Koelsch, Fritz, Cramon, Müller, & Friederici, 2006).

In the present study, we employed functional magnetic resonance imaging (fMRI) to investigate how the brain codes emotional value when processing musical stimuli of contrasting levels of tonal dissonance, strictly controlled by changes in interval content (Bravo, 2013, 2014). We use the term “tonal dissonance” to refer to the effects of tonal function (Costère, 1954; Hindemith, 1984; Koechlin, 2001; Riemann, 2018; Schenker & Oster, 2001), sensory dissonance (von Helmholtz & Ellis, 1895) and horizontal motion (Ansermet, 2000) on perceived musical tension (Bigand et al., 1996; Bigand & Parncutt, 1999; Lerdahl & Krumhansl, 2007). Several studies have provided insights regarding the neural substrates of our emotional responses to dissonant music (Blood et al., 1999; Foss, Altschuler, & James, 2007; Gosselin et al., 2006; Green et al., 2008; Koelsch et al., 2006; Peretz, Blood, Penhune, & Zatorre, 2001) [reviewed in: (Bravo, Cross, Stamatakis, & Rohrmeier, 2017)]; however, the paradigms utilized did not control for specific musical structural features and/or other general variables, which are known to influence the response to music‐evoked emotions. Within the musical structure, music cognition research has shown that both horizontal motion (i.e., melody or contour) (Lerdahl & Krumhansl, 2007) and instrumental timbre (i.e., the quality of a musical sound that allows distinguishing different types of sound production in terms of musical instruments) (Barthet, Depalle, Kronland‐Martinet, & Ystad, 2010; Menon et al., 2002; Paraskeva & McAdams, 1997) can strongly influence the emotional appraisal of musical stimuli. 
Furthermore, musical expression (e.g., there is often a lifeless quality in computerized experimental musical sequences) (Koelsch et al., 2006) and degree of familiarity (i.e., participants may recognize certain musical excerpts but not others when listening to pre‐existing music) have also been found to impact on participants' response, affecting the emotional value of music (Aust et al., 2014; Jäncke, 2008; Peretz, Gaudreau, & Bonnel, 1998; Peretz & Zatorre, 2005) and/or decreasing the arousal potential of musical information (Schellenberg, Peretz, & Vieillard, 2008).

To control for these potential confounds, the following characteristics were considered during the construction of the experimental design in the present study. (a) The stimuli were purpose‐made and did not include pre‐existing music, unlike in previous studies (Koelsch et al., 2006; Koelsch, Rohrmeier, Torrecuso, & Jentschke, 2013; Mitterschiffthaler, Fu, Dalton, Andrew, & Williams, 2007). This strategy enables the assessment of whether brain areas linked to the appraisal of emotional stimuli would respond in the absence of prior expectations and memory associations influenced by familiarity (Jäncke, 2008; Schellenberg et al., 2008). (b) Unlike Blood et al. (1999), the musical materials were composed in the form of variations. This was done in order to control for two specific potentially confounding variables: horizontal motion (i.e., melody) and cadence type (i.e., harmonic progression used at the end of a section), both of which give musical phrases a distinctive profile, which in turn is known to affect perceived tonal tension (Bigand et al., 1996; Bigand & Parncutt, 1999; Krumhansl, 1997; Lerdahl & Krumhansl, 2007). (c) In contrast to the studies conducted by Koelsch et al. (2006), Koelsch, Rohrmeier, et al. (2013), and Koelsch et al. (2013), the music conditions differed only in terms of dissonance level controlled by interval content (Bravo, 2013), while all of the other parameters such as amplitude, tempo and, especially, instrumental timbre were deliberately kept equal across conditions. (d) The stimuli were realistic music unfolding through time (i.e., a chorale composed by the first author in the style of the romantic period) as opposed to other studies that have worked with single chords (Pallesen et al., 2005) or randomly arranged groups of dyads/triads (Foss et al., 2007).

Prior to the fMRI study, we conducted a behavioral experiment with an independent group of participants where we employed the same musical materials as in the fMRI study. The behavioral study aimed to analyse the distribution of the two contrasting experimental conditions (i.e., consonant and dissonant music) in the three‐dimensional affective space defined by valence, arousal and potency (Osgood, Suci, & Tannenbaum, 1967; Russell, 1979). Valence, arousal and potency are the three affective dimensions, which are widely considered to explain the fundamental variance of emotional responses (Lang et al., 1993; Russell, 1979; Smith & Ellsworth, 1985).

In the neuroscientific study, a passive music listening task was employed, as opposed to an emotional discrimination task, to examine whether the brain areas underlying participants' responses to dissonance would engage without an explicit instruction for cognitive processing of emotional responses. By adopting this approach the focus was oriented towards the neural substrates that could inform about the more unconscious or involuntary effects of dissonance, such as those exerted in audiovisual contexts [for other passive listening paradigms see: (Brown, Martinez, & Parsons, 2004; Menon et al., 2002)].

The present study tested four conceptually‐related experimental hypotheses: (i) whether different brain regions would be involved in the processing of musical stimuli with contrasting levels of dissonance; (ii) whether increased dissonance would recruit certain areas that are known to be implicated in the appraisal/evaluation of negative emotion: medial prefrontal cortex, rostral anterior cingulate cortex and amygdala (Anderson & Phelps, 2001; Etkin, Egner, & Kalisch, 2011; Mechias, Etkin, & Kalisch, 2010; Phelps, 2004); (iii) whether these evaluative regions would interact distinctively with other areas involved in processing negative emotions (withdrawal network): left insula, left fusiform and superior parietal and superior occipital cortices (Wager, Phan, Liberzon, & Taylor, 2003), and whether they would exert influences on occipitoparietal attentional pathways under the different music conditions; and finally, (iv) whether the auditory cortex plays a role in the emotional processing of negatively valenced auditory information. Functional connectivity analysis (Psycho–Physiological Interactions, PPI) was used to examine whether there was an interaction between the psychological state of the participants while experiencing the musical stimuli and the corresponding functional coupling between the above‐described regions of interest.

2. MATERIALS AND METHODS

2.1. Subjects

Twenty‐eight individuals (14 females, mean = 27.8 years, SD = 8.68) took part in the preliminary behavioral experiment, which was performed to evaluate the stimulus material. They reported no long‐term hearing impairment. All participants were right‐handed. The sample did not include any professional musician. Five participants (2 male, 3 female) reported having received informal musical training for less than three years. All subjects gave written informed consent. The study received ethical approval from the Music Faculty Research Ethics Committee (University of Cambridge), reference number 12/13.4.

For the fMRI experiment, data were obtained from sixteen healthy volunteers (eight females; mean = 29 years, SD = 5.16). Participants were right handed, native Spanish speakers born in Argentina (subjects were not screened by ethnicity but by home language), from Fundación Científica del Sur Centre community (radiology residents, radiographers and administrative personnel), with no history of neurological or psychiatric disease, or use of psychotropic medication. All participants gave written consent for taking part in the experiment. The study received ethical approval from the Music Faculty Research Ethics Committee (University of Cambridge), Reference Number 12/13.4, and from the Institutional Review Board of Fundación Científica del Sur (Buenos Aires, Argentina).

2.2. Stimulus material

The stimuli employed in the present study were based on musical materials created for a previous audiovisual experiment, which aimed to examine the effect of tonal dissonance on the emotional interpretation of visual information (Bravo, 2013). For the mentioned experiment, a choral piece, written in the form of musical variations, was made to sound more or less consonant or dissonant by modifying its harmonic structure (i.e., interval content manipulation) producing two otherwise‐identical versions of the same musical piece. The consonant condition consisted of a theme followed by three variations written in a romantic musical style. The dissonant condition was achieved by lowering by a semitone the second violin, viola and violoncello lines (of the consonant piece), while maintaining the other instruments (i.e., first violin and double bass) at their original pitch.

The level of tonal dissonance (Bigand et al., 1996; Bigand & Parncutt, 1999) was the manipulated variable, while other factors that are also known to contribute to the building and release of musical tension such as instrumental timbre, dynamics, rhythm, textural density and melodic contour were strictly controlled. The particular experimental transformation employed to create the contrasting conditions further allowed the level of consonance/dissonance to be uniform throughout a given category. The scores for the consonant and dissonant conditions were written in Sibelius (version 6.2, Avid, http://www.sibelius.com), exported as MIDI files (musical instrument digital interface), and played with the same virtual instruments (Strings audio samples from Sibelius 6.2 Library ‐ Sound Essentials) [further information about stimuli construction is available in: (Bravo, 2013)].

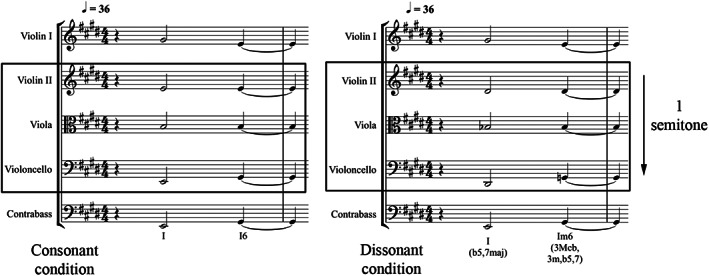

Table 1 summarizes interval class content (Forte, 1973; Temperley, 2010) for the first measure of the consonant and dissonant conditions (Figure 1), and portrays the comparative level of consonance/dissonance throughout a given category.

Table 1.

Interval class content for the first measure of the consonant and dissonant conditions. The model only considers interval classes (i.e., unordered pitch‐intervals measured in semitones). Each digit stands for the number of times an interval class appears in the set

| Interval class | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Consonant condition | 0 | 0 | 3 | 6 | 5 | 0 |
| Dissonant condition | 4 | 2 | 2 | 3 | 6 | 2 |

Figure 1.

First measure of the consonant (left) and dissonant (right) music conditions. The dissonant condition was obtained by lowering, by a semitone, the second violin, viola and violoncello lines, while keeping the other instruments (first violin and double bass) at their original pitch
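The interval‐content manipulation described above can be illustrated with a minimal sketch. It counts interval classes among the distinct pitch classes of a single chord and then lowers the three inner voices by a semitone. The chord voicing, the function name `interval_class_vector` and the voice ordering are assumptions made for illustration; they are not taken from the score, and the counting convention may differ from the one used to build Table 1.

```python
from itertools import combinations


def interval_class_vector(midi_pitches):
    """Count interval classes 1..6 among the distinct pitch classes of a chord."""
    pitch_classes = sorted({p % 12 for p in midi_pitches})
    vector = [0] * 6
    for a, b in combinations(pitch_classes, 2):
        semitones = (b - a) % 12
        # Fold intervals of 7..11 semitones onto interval classes 5..1.
        interval_class = min(semitones, 12 - semitones)
        vector[interval_class - 1] += 1
    return vector


# Hypothetical five-voice E major chord (NOT taken from the score), ordered as
# double bass, violoncello, viola, second violin, first violin (MIDI numbers).
consonant_chord = [40, 47, 56, 59, 64]

# Lower the three inner voices by one semitone; keep the outer voices fixed.
inner_voices = {1, 2, 3}
dissonant_chord = [
    pitch - 1 if index in inner_voices else pitch
    for index, pitch in enumerate(consonant_chord)
]

consonant_vector = interval_class_vector(consonant_chord)
dissonant_vector = interval_class_vector(dissonant_chord)
print(consonant_vector, dissonant_vector)
```

In this toy chord the transformation replaces a major triad with a sonority that contains a tritone, which is the kind of change in interval content the manipulation relies on.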

The consonant condition is primarily governed by collections of intervals considered to be consonant (thirds, fourths and fifths), whereas the dissonant condition is characterized by the presence of strongly dissonant intervals (major seconds, minor seconds, and tritones). For the present study, these musical variations were separated, with cut‐points located at the end of each musical phrase/variation (corresponding to the last chord of each system in Figure 2), resulting in one set of four consonant variations (consonant condition: variations C1, C2, C3, and C4) and another set of four dissonant variations (dissonant condition: variations D1, D2, D3, and D4). Each variation represented a 30 s music block, and consisted of 9 chord presentations (variations 1, 2, 3) or 10 chord presentations (variation 4), totaling 37 chord presentations per music condition. Although only one music block per category was found to be sufficient to elicit distinctive valence inferences between conditions at a behavioral level [pre‐test: significant differences observed when comparing variations C1 vs. D1; F (1, 27) = 23.637, p < .001], we designed the experiment to include the complete set of variations per condition, in order to reliably estimate the hemodynamic response function (HRF) and to show detectable differences between conditions in the neuroscientific setting.

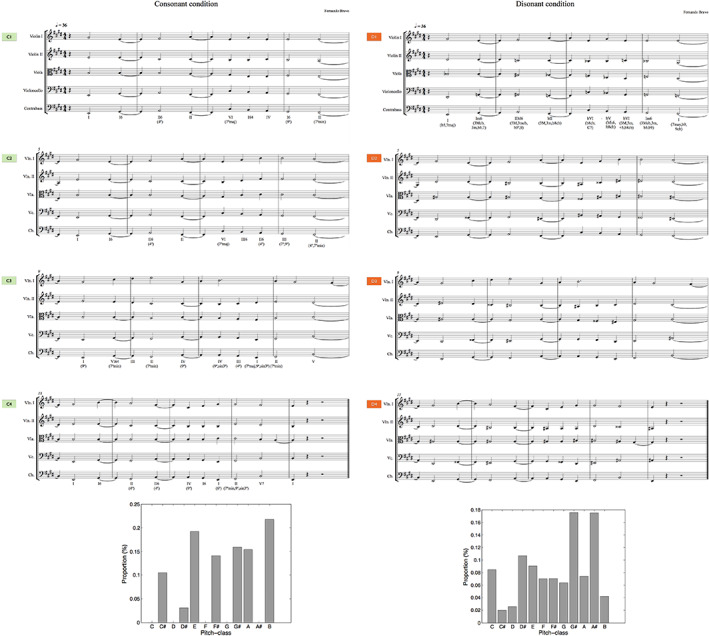

Figure 2.

(Top) The musical stimuli comprised eight music excerpts with a duration of 30 s each: four consonant variations (consonant condition: variations C1, C2, C3 and C4) and four dissonant variations (dissonant condition: variations D1, D2, D3 and D4). (Bottom) Key profile for the consonant (left) and dissonant (right) conditions [analysis conducted with MIDI Toolbox (Eerola & Toiviainen, 2004)]

These two sets of variations (Figure 2, top) were thus expected to produce contrasting emotional valence ratings, and no a priori assumption was made regarding their effect on affective arousal or potency. Figure 2 (bottom) shows the distribution of pitch‐classes for each condition employing the pcdist1 function [MIDI Toolbox for Matlab (Eerola & Toiviainen, 2004)] to calculate the pitch‐class distribution of the notematrix (the command plotdist was used to create the labeled bar graph of the distribution) (Eerola & Toiviainen, 2004). From the resulting graph, it can be inferred that the consonant condition clearly conveys an E major key content, while the dissonant condition, which spreads through the entire chromatic scale, articulates a much more ambiguous tonal center.
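As a rough illustration of what a pitch‐class distribution measures, the sketch below accumulates note durations per pitch class and normalizes them to sum to one. It is a simplified stand‐in written in Python, not the MIDI Toolbox implementation of pcdist1, and it does not reproduce any additional duration weighting that the toolbox may apply. The note list is hypothetical.

```python
def pitch_class_distribution(notes):
    """Return a 12-element, duration-weighted pitch-class distribution.

    notes: iterable of (midi_pitch, duration_in_seconds) pairs.
    """
    weights = [0.0] * 12
    for pitch, duration in notes:
        weights[pitch % 12] += duration
    total = sum(weights)
    if total == 0:
        return weights
    return [w / total for w in weights]


# Hypothetical notes outlining an E major sonority (pitch, duration in s).
example_notes = [(64, 2.0), (68, 1.0), (71, 1.0), (76, 1.0)]
distribution = pitch_class_distribution(example_notes)
print(distribution)
```

A distribution concentrated on a few diatonic pitch classes points to a clear key, whereas a nearly flat distribution across all twelve pitch classes corresponds to the ambiguous tonal center described for the dissonant condition.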

The musical stimuli employed in the behavioral and the fMRI experiments were the same. Each 30 s music excerpt (e.g., musical variation C1) was preceded by 12 s of neutral sound (neutral condition), taken from the study conducted by Eldar and collaborators (Eldar, Ganor, Admon, Bleich, & Hendler, 2007), where it was used to control for the effect of nonemotional auditory stimuli (i.e., neutral valence/arousal), and described as “simple monotonic tones” (created in Cubase VST 5 from Steinberg, Reaktor 3.0 and Kontakt 1.0 from Native Instruments Software Synthesis, GmbH, Berlin, Germany). The neutral sound was treated as “aural” baseline for the neuroimaging analysis. Trials were separated by 18 s of silence (rest condition). Rest blocks were utilized as “basic” baseline to identify sound encoding during task performance. All sound stimuli were processed using ProTools (Version 9, Avid, http://www.avid.com/protools); stimulus preparation included cutting and adding 300 ms raised‐cosine onset/offset ramps at the beginning/end of each sound block, as well as adjustment for loudness to an average sound level of −12.8 dB over all stimuli.
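The onset/offset ramps mentioned above can be sketched as follows. This is a minimal pure‐Python illustration of a raised‐cosine fade, assuming a mono signal stored as a list of floats; the function name and the sample rate used in the example are hypothetical, and the actual processing was carried out in ProTools.

```python
import math


def apply_raised_cosine_ramps(samples, sample_rate, ramp_seconds=0.3):
    """Fade a mono signal in and out with raised-cosine ramps."""
    n_ramp = int(round(ramp_seconds * sample_rate))
    # Never let the two ramps overlap on very short signals.
    n_ramp = min(n_ramp, len(samples) // 2)
    shaped = list(samples)
    for i in range(n_ramp):
        # Gain rises smoothly from 0 towards 1 across the ramp.
        gain = 0.5 * (1.0 - math.cos(math.pi * i / n_ramp))
        shaped[i] *= gain
        shaped[-1 - i] *= gain
    return shaped


# Example: one second of a constant signal at an assumed 1 kHz sample rate.
ramped = apply_raised_cosine_ramps([1.0] * 1000, sample_rate=1000)
```

The smooth gain curve avoids the audible clicks that an abrupt block onset or offset would otherwise introduce.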

2.3. Procedure

2.3.1. Behavioural experiment

Participants were tested individually in a sound‐insulated room. They were presented with the two sets of variations. Stimulus sequences were pseudo‐randomized for each participant individually. Participants were asked to report how they felt (personal reaction) while listening to each musical variation using judgments of valence, potency and arousal that were registered with the pencil‐and‐paper version of the 9‐point Self‐Assessment Manikin (SAM) scales (Lang et al., 1993). As in previous work (Bradley & Lang, 1994; Lang et al., 1993; Lang, Bradley, & Cuthbert, 1999), and to ensure an adequate understanding of the dimensions of valence, potency and arousal, before the experiment started subjects were given a list of words from the pertinent end of each semantic differential scale (e.g., for the valence dimension: happy, pleased, satisfied, contented, hopeful, relaxed vs. unhappy, annoyed, unsatisfied, despairing or bored) in order to identify the anchors of the SAM rating scales (similar instructions accompanied the three scales of valence, arousal and potency). Participants were instructed to evaluate the eight musical variations and also the neutral condition.

2.3.2. Functional magnetic resonance imaging (fMRI) experiment

During the fMRI scanning session, participants were presented with sets of four musical variations in pseudo‐randomized order. The ordering of each set was determined to ensure: a) an even starting level of consonance/dissonance (i.e., 2 sets starting with a consonant variation, and 2 sets starting with a dissonant variation), and b) an even distribution of consonance/dissonance level within each set (i.e., 2 sets with alternating consonance/dissonance level following each trial, and 2 sets with a balanced presentation of consonance/dissonance either in the middle or in the extremes of each set) [examples of pseudo‐randomized orderings employed: C1, D3, C4, D4 / D2, C2, D1, C3 / D3, C2, C1, D1 / C4, D2, D4, C3]. Stimulus presentation was grouped in series of rest (18 s) + neutral sound (12 s) + musical variation (30 s) (Figure 3).

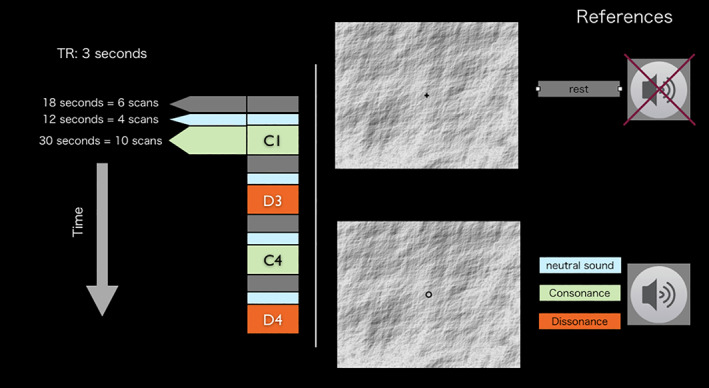

Figure 3.

Experimental Design: within a scanning session, each participant was presented with eight musical variations (four consonant and four dissonant) in pseudo‐randomised order. Each variation (30 s) was preceded by the neutral condition (12 s) and separated by rest (18 s)
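The block structure in Figure 3 can be written out as a simple timing schedule. The sketch below assumes one possible presentation order, formed by concatenating two of the example sets listed in the text; the function name `build_schedule` and the tuple layout are hypothetical, and the actual order differed across participants.

```python
REST_S, NEUTRAL_S, MUSIC_S = 18, 12, 30  # block durations in seconds


def build_schedule(order):
    """Return (condition, onset_s, duration_s) tuples for a list of variations."""
    schedule = []
    onset = 0
    for variation in order:
        blocks = (("rest", REST_S), ("neutral", NEUTRAL_S), (variation, MUSIC_S))
        for condition, duration in blocks:
            schedule.append((condition, onset, duration))
            onset += duration
    return schedule


# One assumed ordering covering all eight variations.
order = ["C1", "D3", "C4", "D4", "D2", "C2", "D1", "C3"]
schedule = build_schedule(order)
total_seconds = schedule[-1][1] + schedule[-1][2]

for condition, onset, duration in schedule[:3]:
    print(condition, onset, duration)
```

Each trial lasts 60 s under these durations, so eight trials span 480 s of task time.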

Participants were given the following instruction: “Please lie quietly with your eyes open and fixate on the cross. Relax, stay still and try not to think of anything in particular. When you see a circle, try to concentrate on the music” (Figure 3, right). All auditory stimuli were presented binaurally with a high‐quality MRI‐compatible headphone system (Etymotic ER30). Familiarity ratings were collected at the end of the fMRI experiment (i.e., outside the scanner). Participants were presented with the same stimuli employed in the experiment (sequences belonging to the two sound categories) in pseudo‐randomized order, and asked to rate their familiarity on a five‐point bipolar scale ranging from 1 (unfamiliar) to 5 (familiar).

2.4. MRI data acquisition

Scanning was performed with a 3.0 T system (General Electric, Signa). Prior to the functional magnetic resonance measurements, high‐resolution (1 × 1 × 1 mm) T1‐weighted anatomical images were acquired from each participant using three‐dimensional fast spoiled gradient‐echo (3D‐FSPGR) sequence. Continuous Echo Planar Imaging (EPI) with blood oxygenation level‐dependent (BOLD) contrast was utilized with a TE of 40 ms and a TR of 3,000 ms. The matrix acquired was 64 × 64 voxels (in plane resolution of 3 mm × 3 mm). Slice thickness was 4 mm with an interslice gap of 0.7 mm (35 slices, whole brain coverage).

2.5. fMRI data analysis

Data were processed using Statistical Parametric Mapping (SPM), version 12 (http://www.fil.ion.ucl.ac.uk/spm). Following correction for the temporal difference in acquisition between slices, EPI volumes were realigned and resliced to correct for within‐subject movement. A mean EPI volume was obtained during realignment and the structural MRI was coregistered with that mean volume. The coregistered structural scan was normalized to the Montreal Neurological Institute (MNI) T1 template (Friston et al., 1995). The same deformation parameters obtained from the structural image were applied to the realigned EPI volumes, which were resampled into MNI space with isotropic voxels of 3 × 3 × 3 mm. The normalized images were smoothed using a 3D Gaussian kernel with a filter size of 6 mm FWHM. A temporal high‐pass filter with a cut‐off period of 192 s was applied with the purpose of removing scanner‐attributable low‐frequency drifts in the fMRI time series [note: although SPM's default high‐pass cut‐off is set to 128 s, we increased the cut‐off period to 192 s, since this strategy has been proposed to increase the signal‐to‐noise ratio when using block lengths of more than 15 s of duration, as was the case for our study design (Henson, 2007)]. A blocked design was modeled using a canonical hemodynamic response function that emulates the early peak at 5 s and the subsequent undershoot (Friston, Zarahn, Josephs, Henson, & Dale, 1999). The design matrix for the first level analysis included the following four regressors: consonance, dissonance, neutral sound and rest. Parameter estimate images were generated. Seven contrast images per individual were calculated: dissonance > consonance, consonance > dissonance, dissonance > rest, consonance > rest, dissonance > neutral, consonance > neutral and neutral > rest.
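To make the block model concrete, the following sketch builds a double‐gamma response function and convolves it with a 30 s boxcar. It is an approximation written for illustration, not SPM's own code: the shape parameters (6 and 16) and the undershoot ratio (1/6) are assumed values of the kind commonly used for a canonical response, and the function names are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density, defined as 0 for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1)) * math.exp(-t / scale) / (math.gamma(shape) * scale ** shape)


def double_gamma_hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=6.0):
    """Early positive response minus a smaller, later undershoot."""
    return gamma_pdf(t, peak_shape) - gamma_pdf(t, undershoot_shape) / ratio


def block_regressor(onset, duration, n_scans, tr, dt=0.1):
    """Convolve a boxcar with the HRF on a fine grid, then sample once per TR."""
    n_fine = int(round(n_scans * tr / dt))
    start = int(round(onset / dt))
    stop = int(round((onset + duration) / dt))
    boxcar = [1.0 if start <= i < stop else 0.0 for i in range(n_fine)]
    kernel = [double_gamma_hrf(k * dt) for k in range(int(round(32 / dt)))]
    step = int(round(tr / dt))
    regressor = []
    for scan in range(n_scans):
        i = scan * step
        value = sum(boxcar[i - k] * kernel[k] for k in range(min(i + 1, len(kernel))))
        regressor.append(value * dt)
    return regressor


# Time of the HRF maximum on a 0.1 s grid, and one trial (rest + neutral + music).
peak_time = max(range(200), key=lambda i: double_gamma_hrf(i * 0.1)) * 0.1
music_regressor = block_regressor(onset=30, duration=30, n_scans=20, tr=3.0)
```

With these assumed parameters the response peaks close to 5 s, and the predicted signal for a 30 s music block rises to a plateau within the block.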

Second level group analyses were carried out using one‐sample t‐tests. The significant map for the group random effects analysis was thresholded at voxel level p < .001 uncorrected, with a cluster level threshold of p < .05 corrected using FWE (family wise error). Whole‐brain analyses were performed for the linear contrasts that compared sound conditions against the rest condition. The analysis of all the linear contrasts that comprised sound conditions between each other was restricted to regions of interest (i.e., small volume correction) that were defined based on a) meta‐analytic reviews (statistical summaries of empirical findings across studies) that have provided evidence supporting neural correlates for negative emotion processing and the appraisal of behaviorally relevant/threatening stimuli (Anderson & Phelps, 2001; Carretié, Albert, López‐Martín, & Tapia, 2009; Corbetta, Patel, & Shulman, 2008; Corbetta & Shulman, 2002; Etkin et al., 2011; Vytal & Hamann, 2010; Wager et al., 2003), and b) previous neuroscientific studies that have investigated the neural response to tasks involving the emotional evaluation of musical dissonance (Blood et al., 1999; Foss et al., 2007; Gosselin et al., 2006; Green et al., 2008; Koelsch et al., 2006). Although the findings from these studies only partially overlap (and in several cases contradict each other) [reviewed in: (Bravo et al., 2017)], they show evidence for a role of the parahippocampal cortex and medial prefrontal cortices (e.g., anterior cingulate cortex and medial prefrontal cortex) in the emotional evaluation of perceived degrees of dissonance. Therefore, three regions were targeted for small volume correction: medial prefrontal cortex, anterior cingulate cortex and the parahippocampal gyrus (Table 2). All ROIs were defined using anatomical masks of the described areas with WFU PickAtlas Toolbox (Maldjian, Laurienti, Kraft, & Burdette, 2003).

Table 2.

Regions of interest for fMRI analysis of linear contrasts that comprised sound conditions

| Region of interest | Motivation | References |
|---|---|---|
| Anterior cingulate cortex and medial prefrontal cortex | Neural correlates of emotional responses to dissonance; appraisal and expression of negative emotion | Green et al., 2008; Foss et al., 2007; Etkin et al., 2011; Wager et al., 2003; Anderson & Phelps, 2001; Carretié et al., 2009; Vytal & Hamann, 2010 |
| Parahippocampal gyrus | Neural correlates of emotional responses to dissonance | Blood et al., 1999; Koelsch et al., 2006; Gosselin et al., 2006 |

2.6. PPI analysis

Following the approach developed by Friston et al. (1997), functional connectivity was measured in terms of PPI. Seed ROIs in the left anterior cingulate cortex and in the left medial prefrontal cortex were selected on the basis of significantly activated clusters (at threshold level p < .001 voxel uncorrected, p < .05 cluster FWE‐corrected) from the subtractive analysis for the contrast dissonance vs. consonance. The group cluster peaks (MNI: −9 47 10, in the rostral anterior cingulate cortex; and MNI: −12 50 10, in the left medial prefrontal cortex) were used as points of reference to identify individual subject activation peaks that complied with the following two rules: a) they were within a 24 mm radius, and b) they were within the boundaries of the corresponding brain area [defined using the WFU PickAtlas toolbox: (Lancaster et al., 2000; Maldjian et al., 2003)].

After the identification of the relevant statistical peaks for each subject, spheres were defined around these peaks with a 6 mm radius, which were used as the seed regions of interest (ROIs) for the PPI analysis. This type of analysis is used to detect target regions for which the covariation of activity between seed and target regions is significantly different between experimental conditions of interest. At the first level, contrasts were calculated for each volunteer based on the interaction term between the contrast of interest (dissonance > consonance) and each seed ROI's activity timecourse (Friston et al., 1997). For each seed ROI, the contrast images from all subjects were used in voxel‐wise one‐sample t‐tests at the second level (at threshold level p < .001 voxel uncorrected, p < .05 cluster FWE‐corrected) to identify clusters of voxels for which the PPI effect was significant for the group.
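The interaction term at the heart of a PPI model can be sketched in a few lines. The example below is deliberately simplified: it multiplies a mean‐centered seed time course by a psychological vector coded +1 for dissonance, −1 for consonance and 0 elsewhere. SPM forms this product on an estimate of the underlying neural signal and reconvolves it with the HRF, a step omitted here. The function names and the toy data are hypothetical.

```python
def mean_center(values):
    """Subtract the mean so the interaction is not driven by the signal offset."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def ppi_regressors(seed_timecourse, psych_vector):
    """Return the three regressors of a basic PPI design."""
    if len(seed_timecourse) != len(psych_vector):
        raise ValueError("seed and psychological vectors must have equal length")
    physiological = mean_center(seed_timecourse)
    interaction = [s * p for s, p in zip(physiological, psych_vector)]
    return {
        "psychological": list(psych_vector),
        "physiological": physiological,
        "interaction": interaction,
    }


# Toy data: four scans, coded dissonance (+1), dissonance (+1),
# consonance (-1), and rest/neutral (0).
toy_seed = [1.0, 2.0, 3.0, 2.0]
toy_psych = [1, 1, -1, 0]
regressors = ppi_regressors(toy_seed, toy_psych)
```

A target voxel whose activity is explained by the interaction regressor, over and above the other two, shows coupling with the seed that differs between the dissonant and consonant conditions.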

2.7. Parametric modulation analysis

The musical structural manipulation employed (dissonance level controlled by interval content) entails effects not only on the emotional (valence) response, but also on tonalness level. Tonalness has been defined as “the degree to which a sonority evokes the sensation of a single pitched tone” (Parncutt, 1989) in the sense that sonorities with high tonalness evoke a clear perception of a tonal center (Krumhansl, 2001), while musical sequences with lower tonalness convey more unpredictable and equivocal tonal centers.

Low‐tonalness musical passages could themselves engage conflict detection/resolution brain mechanisms involved in the presumably automatic process through which listeners develop a sense of key in a musical sequence (Krumhansl & Kessler, 1982; Krumhansl & Toiviainen, 2001; Sloboda, 1976, 1986). In order to assess whether tonalness could also be an explanatory variable for the signal changes revealed in the comparison between dissonant and consonant variations, we used parametric modulation analysis and subsequently compared valence‐modulated effects with a model based on tonalness level. Previous behavioral studies have shown that the degree of tonalness correlates strongly with the percept of valence (Bravo, 2014; Bravo, Cross, Hawkins, et al., 2017; Bravo, Cross, Stamatakis, & Rohrmeier, 2017). Hence, this analysis could further allow us to dissociate the effects of tonalness and valence on brain activity.

We utilized a quantitative probabilistic framework, Temperley's Bayesian model of tonalness (Temperley, 2002, 2010), to estimate the tonalness level of each variation. Temperley (2010) suggested a way to calculate tonalness level, following a Bayesian “structure‐and‐surface” approach, as the overall probability of a pitch‐class set occurring in a tonal piece. For musical sequences with a length similar to those employed in this experiment, the approach takes the joint probability of a passage with its most probable analysis (the maximum value of P(structure ∩ surface)) as representative of the overall probability of the passage (Temperley, 2010). Because of the specific, uniform manipulation applied to the experimental stimuli, the model predicted a constant level within condition, turning tonalness into a categorical (two‐level) experimental variable. Table 3 shows the two sound conditions that were employed in this study, together with their respective tonalness values. Because the dissonant variations contained many chromatic pitch‐class sets, the model found no facilitated/compatible keys (even for individual segments), which resulted in a low tonalness value.

Table 3.

Tonalness values for the two sound conditions utilized in the experiment calculated using the Kostka‐Payne key‐profiles (Kostka, 2003; Kostka, Payne, & Almen, 2012)

| Condition | Pitch‐class set prime form | Tonalness |
|---|---|---|
| Consonant variations (C1, C2, C3, C4) | [0 1 3 5 6 8] | 0.00049 |
| Dissonant variations (D1, D2, D3, D4) | [0 1 2 3 4 5] | 0.000006 |

Parametric modulation makes it possible to model how brain activity varies across multiple levels of a behavioral or psychological variable of interest, enabling the estimation of brain‐performance relationships (Büchel, Holmes, Rees, & Friston, 1998). We used parametric modulation to test for a linear relationship between brain activity and the valence ratings for each variation, as collected in the behavioral study (ordered items from Table 4: D2 > D3 > D1 > D4 > C4 > C1 > C2 > C3). We estimated a GLM that modeled the activity in the respective column for each trial (i.e., each variation) modulated by a linear function of the parameter value. SPM12 splits the effect into two columns (an unmodulated effect and a parametric column). Single‐subject contrasts were calculated to test whether the valence‐modulated effect would fit the brain activity significantly better than the tonalness model. The resulting subject‐specific contrast maps were carried forward to group‐level analyses using one‐sample t tests.

Table 4.

(A) Individual and composite ratings for consonant and dissonant variations in the valence dimension [mean, standard deviation, and 95% confidence intervals showing adjusted values for repeated measures (Loftus & Masson, 1994)]. Consonant variations are named C1, C2, C3 and C4; dissonant variations are named D1, D2, D3 and D4. (B) Valence rating for each consonant variation (C1, C2, C3, C4) compared with all dissonant variations (D1, D2, D3, D4). Adjustment for multiple comparisons: Bonferroni.

(A) Valence ratings for consonant and dissonant variations

| Musical variation | Mean | Std. deviation | 95% CI (adjusted) |
|---|---|---|---|
| Consonant | | | |
| C1 | 4.25 | 1.295 | |
| C2 | 4.14 | 1.580 | |
| C3 | 4.11 | 1.663 | |
| C4 | 4.32 | 1.786 | |
| Composite‐rating cons. | 4.205 | 1.018 | [3.870, 4.534] |
| Dissonant | | | |
| D1 | 6.07 | 2.071 | |
| D2 | 6.36 | 1.638 | |
| D3 | 6.21 | 1.475 | |
| D4 | 5.86 | 1.580 | |
| Composite‐rating diss. | 6.125 | 1.139 | [5.712, 6.532] |

(B) Comparison of valence ratings between consonant and dissonant variations

| Musical variation | Mean diff. | Std. error | 95% CI | Sig. |
|---|---|---|---|---|
| C1 vs. | | | | |
| D1 | −1.821 | 0.375 | [−2.967, −0.676] | .000 |
| D2 | −2.107 | 0.274 | [−2.944, −1.270] | .000 |
| D3 | −1.964 | 0.306 | [−2.901, −1.028] | .000 |
| D4 | −1.607 | 0.372 | [−2.744, −0.470] | .002 |
| C2 vs. | | | | |
| D1 | −1.929 | 0.534 | [−3.562, −0.295] | .012 |
| D2 | −2.214 | 0.422 | [−3.505, −0.924] | .000 |
| D3 | −2.071 | 0.378 | [−3.226, −0.917] | .000 |
| D4 | −1.714 | 0.463 | [−3.128, −0.301] | .010 |
| C3 vs. | | | | |
| D1 | −1.964 | 0.489 | [−3.460, −0.469] | .004 |
| D2 | −2.250 | 0.462 | [−3.662, −0.838] | .000 |
| D3 | −2.107 | 0.403 | [−3.338, −0.876] | .000 |
| D4 | −1.750 | 0.470 | [−3.188, −0.312] | .009 |
| C4 vs. | | | | |
| D1 | −1.750 | 0.531 | [−3.373, −0.127] | .028 |
| D2 | −2.036 | 0.441 | [−3.383, −0.688] | .001 |
| D3 | −1.893 | 0.470 | [−3.328, −0.458] | .004 |
| D4 | −1.536 | 0.473 | [−2.981, −0.091] | .031 |

Note: For statistical tests see main text.

3. RESULTS

3.1. Behavioural results

Repeated measures ANOVAs were conducted to assess whether there were differences between the individual average ratings for the eight variations in each of the three affective dimensions [valence: 1 (positive) to 9 (negative), potency: 1 (low) to 9 (high), and arousal: 1 (high) to 9 (low)]. Mauchly's test of sphericity was not significant (p = .09 for valence ratings, p = .57 for arousal ratings and p = .66 for potency ratings), indicating that the data did not violate the sphericity assumption of the univariate approach to repeated measures analysis of variance. Multivariate tests showed that there were no significant differences either in the arousal dimension, Wilks' Lambda F (7, 21) = 1.442, p = .241, or in the potency dimension, Wilks' Lambda F (7, 21) = 2.321, p = .064. Results, however, indicated that participants did rate the eight variations differently in the valence dimension, Wilks' Lambda F (7, 21) = 11.515, p < .001. The means and standard deviations for the valence rating of each variation are presented in Table 4 (top).

As detailed above, multivariate results (based on the linear combination of the dependent variables) were not significant for the dimensions of potency and arousal; therefore, neither univariate tests nor composite ratings were examined for these specific dimensions. This result indicated that the specific musical structure manipulation employed did not affect participants' rating of affective potency and arousal.

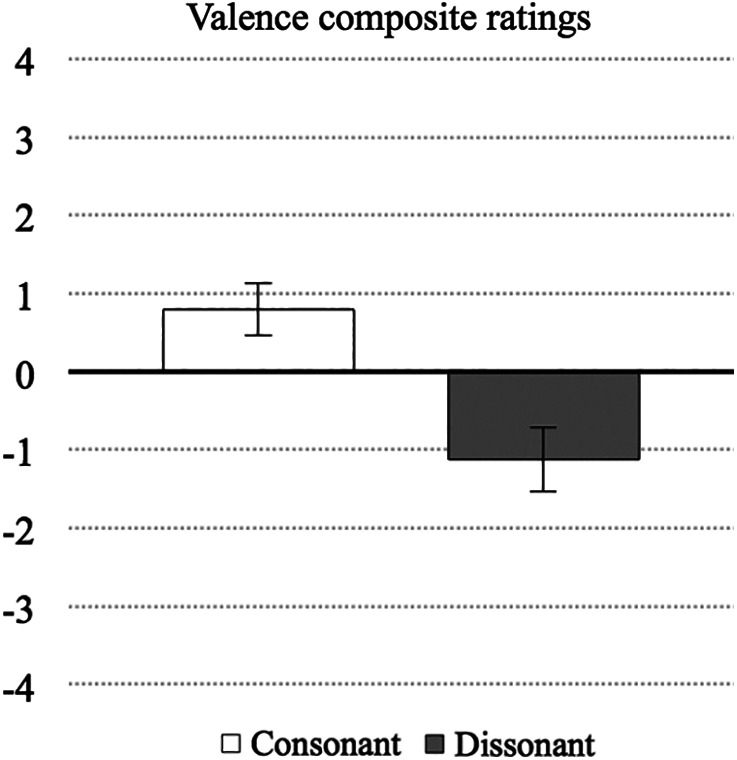

Since multivariate tests were significant for the valence dimension [Wilks' Lambda F (7, 21) = 11.51, p < .001], univariate tests and comparisons between composite ratings were evaluated. The overall F score does not indicate which pairs of valence ratings have significantly different means. Post hoc contrasts were therefore conducted to determine the nature of this effect, comparing all variations of contrasting levels of dissonance with each other (Bonferroni corrected). The univariate tests evidenced a significant effect of consonance/dissonance level on the valence dimension (Table 4, bottom). Composite‐ratings for the consonant and dissonant conditions in this dimension (i.e., mean of C1‐C2‐C3‐C4 and mean of D1‐D2‐D3‐D4) were computed to assess whether there would be a significant difference between the average valence ratings for all four consonant variations compared with the average valence rating for all four dissonant variations. A paired samples t test indicated that the valence composite‐rating for all four consonant variations was on average significantly more positive than the valence composite‐rating for all four dissonant variations, t (27) = 6.87, p < .001. Inspection of the composite‐ratings (shown in Table 4, top) indicates that the difference between means is 1.92 points on a 9‐point scale. After converting the negative and positive valence ratings to negative and positive numbers respectively, the ratings for the consonant and dissonant conditions are shown in Figure 4.
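The composite means can be checked with a short sketch that averages the per‐variation means listed in Table 4 (A). This reproduces only the descriptive values: with an equal number of ratings per variation, the mean of the four variation means equals the mean of the per‐participant composites. The paired t test itself requires the individual ratings, which are not reproduced here.

```python
from statistics import mean

# Per-variation mean valence ratings from Table 4 (A); 1 = positive, 9 = negative.
consonant = {"C1": 4.25, "C2": 4.14, "C3": 4.11, "C4": 4.32}
dissonant = {"D1": 6.07, "D2": 6.36, "D3": 6.21, "D4": 5.86}

composite_cons = mean(consonant.values())
composite_diss = mean(dissonant.values())
difference = composite_diss - composite_cons

print(round(composite_cons, 3), round(composite_diss, 3), round(difference, 2))
```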

Figure 4.

Valence ratings (y‐axis) for the consonant and dissonant music conditions (x‐axis). Error bars show 95% confidence intervals [adjusted for repeated measures following the method proposed by Loftus & Masson, 1994]

3.2. Neutral sound compared with the musical variations

Each music variation was preceded by a 12 s neutral sound category, which was utilized within the neuroimaging study as auditory baseline condition to verify basic response to auditory stimuli. Repeated measures ANOVAs were conducted to examine whether there were differences between the average ratings for the neutral condition compared with the ratings for the consonant and dissonant variations in all three emotional dimensions. Composite scores were used for this comparison. Mauchly's test of sphericity was not significant (p = .1 for valence, p = .12 for arousal, p = .30 for potency) indicating that the data did not violate the sphericity assumption. Results indicated that participants did rate the three conditions differently (valence: F (2, 54) = 15.84, p < .01; arousal: F (2, 54) = 17.93, p < .01; potency: F (2, 54) = 17.93, p = .01). Examination of the means for the ratings of the neutral condition (valence mean = 4.86, SD = 1.671; arousal mean = 7.250, SD = 1.602; potency mean = 3.820, SD = 1.906) compared with the composite‐ratings for the consonant and dissonant music variations, using simple within‐subjects contrasts (paired samples t test, corrected for multiple comparisons with Bonferroni adjustment), indicated a significant difference in the valence ratings between the dissonant and neutral conditions, t (27) = 9.92, p < .01, d[difference] = 1.26. A significant difference was also observed in the arousal ratings between the neutral and the consonant conditions, t (27) = 14.82, p < .01, d = 1.15; and between the neutral and dissonant conditions, t (27) = 26.29, p < .01, d = 2.12. In the potency dimension, a significant difference was found between the neutral condition and the consonant condition, t (27) = 7.77, p = .01, d = 1.19.

It is important to note that the statistical analysis indicated that the neutral sound induced neutral valence ratings (i.e., yielded an average value between the strong dissonant and the consonant conditions) when experienced in the context of the musical materials employed for the present experiment.

3.3. Ratings for individual variations compared within condition

The level of consonance/dissonance was intended to be uniform throughout a given condition. However, it was important to examine whether the transformation applied to create the contrasting conditions (i.e., interval content manipulation) could have a nonuniform impact on the different variations and, consequently, on the emotion experienced by the participants. This issue had particular relevance since these variations would be used in the neuroscientific experiment and, to answer our experimental questions, it was critical to confirm that variations corresponding to the same consonance/dissonance level would consistently induce comparable affective states. Confirming our assumption, no significant differences were found in the emotional ratings between variations belonging to the same level of consonance/dissonance (consonant variations: valence, F (2.8, 77.8) = 0.136, p = .933; arousal, F (2.7, 73.6) = 0.443, p = .705; potency, F (2.7, 75.2) = 0.727, p = .53; dissonant variations: valence, F (2.6, 70.5) = 0.592, p = .599; arousal, F (2.8, 76.2) = 0.191, p = .892; potency, F (2.4, 65.3) = 1.964, p = .14; comparisons conducted with Greenhouse–Geisser correction).

In conclusion, the statistical analyses demonstrated that the structural characteristics of the musical materials employed in the experiment induced contrasting valence ratings, and no significant effects on either the arousal or the potency dimension.

3.4. Functional MRI results

3.4.1. Main effects of sound and music compared to rest

For the general comparison of the neutral condition relative to the rest condition, we observed significant activations in the primary and secondary auditory cortex (AC) bilaterally. Similar patterns of brain response were found for the contrasts: dissonance > rest and consonance > rest. Table 5 and Figure 5 present the findings for these respective contrasts (thresholded at p < .001 for voxel‐level inference with a cluster‐level threshold of p < .05 FWE‐corrected for the whole brain volume). Overall, these results converge with previous evidence from studies that have employed passive listening paradigms involving harmonized chord progressions (Brown et al., 2004; Menon et al., 2002; Ohnishi et al., 2001), in which signal changes were observed bilaterally in primary and secondary auditory cortices when contrasted to silent baselines.

Table 5.

(A) Results (FWE‐corrected p < .05 for cluster‐level inference) of group General Linear Model for the contrasts: neutral > rest, consonance > rest, dissonance > rest, dissonance > consonance and consonance > dissonance. (B) Results of group psychophysiological interaction analysis (PPI) with seed voxels (sphere with a 6 mm radius) located around highest peak for each subject within the left rostral Anterior Cingulate Cortex and the left Medial Prefrontal Cortex, for the regions that significantly differed in the SPM contrast dissonance > consonance. The regions described showed stronger positive functional connectivity with the left rostral anterior cingulate cortex during the dissonant condition compared to the consonant condition

| Region | Peak MNI | Voxels | Max t‐value (z‐value) | Mean t (std.) | p‐value (FWE) |
|---|---|---|---|---|---|
| (A) Subtractive analysis | | | | | |
| Neutral > rest | | | | | |
| Temporal sup L | −54 −43 13 | 256 | 7.56 (4.79) | 4.73 (0.70) | <.0001 |
| Temporal mid L | | 158 | | 4.80 (0.75) | Within Cl. |
| Heschl L | | 19 | | 4.15 (0.34) | Within Cl. |
| Rolandic Oper L | | 18 | | 4.16 (0.33) | Within Cl. |
| SupraMarginal L | | 11 | | 4.07 (0.28) | Within Cl. |
| Temporal sup R | 60 −31 1 | 272 | 8.35 (4.72) | 5.17 (1.05) | <.0001 |
| Temporal mid R | | 113 | | 5.21 (1.13) | Within Cl. |
| Heschl R | | 14 | | 4.19 (0.35) | Within Cl. |
| Consonance > rest | | | | | |
| Temporal sup L | −51 −22 4 | 87 | 5.41 (3.97) | 4.36 (0.43) | <.0001 |
| Heschl L | | 22 | | 4.11 (0.32) | Within Cl. |
| Rolandic Oper L | | 4 | | 3.86 (0.07) | Within Cl. |
| Temporal mid L | | 5 | | 3.85 (0.06) | Within Cl. |
| Temporal sup R | 51 −16 1 | 83 | 4.94 (3.75) | 4.17 (0.32) | .007 |
| Heschl R | | 10 | | 3.96 (0.18) | Within Cl. |
| Dissonance > rest | | | | | |
| Temporal sup L | −18 −34 16 | 194 | 6.88 (4.56) | 4.51 (0.60) | <.0001 |
| Heschl L | | 40 | | 4.41 (0.49) | Within Cl. |
| Rolandic Oper L | | 13 | | 4.00 (0.15) | Within Cl. |
| Temporal mid L | | 15 | | 3.93 (0.15) | Within Cl. |
| SupraMarginal L | | 2 | | 4.00 (0.13) | Within Cl. |
| Temporal sup R | 60 −16 1 | 115 | 6.26 (4.33) | 4.39 (0.58) | .038 |
| Rolandic Oper R | | 13 | | 4.06 (0.18) | Within Cl. |
| Dissonance > consonance | | | | | |
| Anterior cingulate L | −9 47 10 | 5 | 4.70 (3.63) | 4.30 (0.27) | .047 |
| Medial prefrontal L | −12 50 10 | 17 | 4.53 (3.54) | 4.08 (0.23) | .022 |
| Parietal sup. L | −15 −64 43 | 7 | 4.70 (3.63) | 4.24 (0.32) | * uncorrected p < .001 |
| Occipital sup. R | 21 −73 37 | 1 | 4.91 (3.73) | 4.90 (0.18) | * uncorrected p < .001 |
| Cuneus R | 18 −73 37 | 1 | 5.13 (3.84) | 5.12 (0.18) | * uncorrected p < .001 |
| Temporal mid. L | −51 −49 −2 | 6 | 4.36 (3.45) | 4.01 (0.20) | * uncorrected p < .001 |
| Consonance > dissonance | | | | | |
| (no significant activations were observed) | | | | | |
| (B) Functional connectivity analysis | | | | | |
| Seed region (6 mm radius sphere) located around highest peak for each subject within the left rostral anterior cingulate cortex | | | | | |
| PPI – dissonance > consonance | | | | | |
| Temporal sup. L | −57 −16 4 | 16 | 4.80 (3.52) | 3.41 (0.29) | .047 |
| Heschl L | −60 −10 10 | 5 | 3.41 (2.89) | 3.15 (0.17) | Within Cl. |
| Temporal sup. R | 57 −4 4 | 23 | 4.42 (3.34) | 3.21 (0.22) | .034 |
| Heschl R | 54 −7 7 | 9 | 3.61 (3.01) | 3.19 (0.23) | Within Cl. |
| Seed region (6 mm radius sphere) located around highest peak for each subject within the left medial prefrontal cortex | | | | | |
| (no significant results were observed) | | | | | |

Note: * indicates significant results at uncorrected p < .001, but not significant at p < .05 FWE‐corrected for cluster‐level inference.

Abbreviations: L, left; R, right; within Cl., areas integrating the above detailed cluster‐level p‐value.

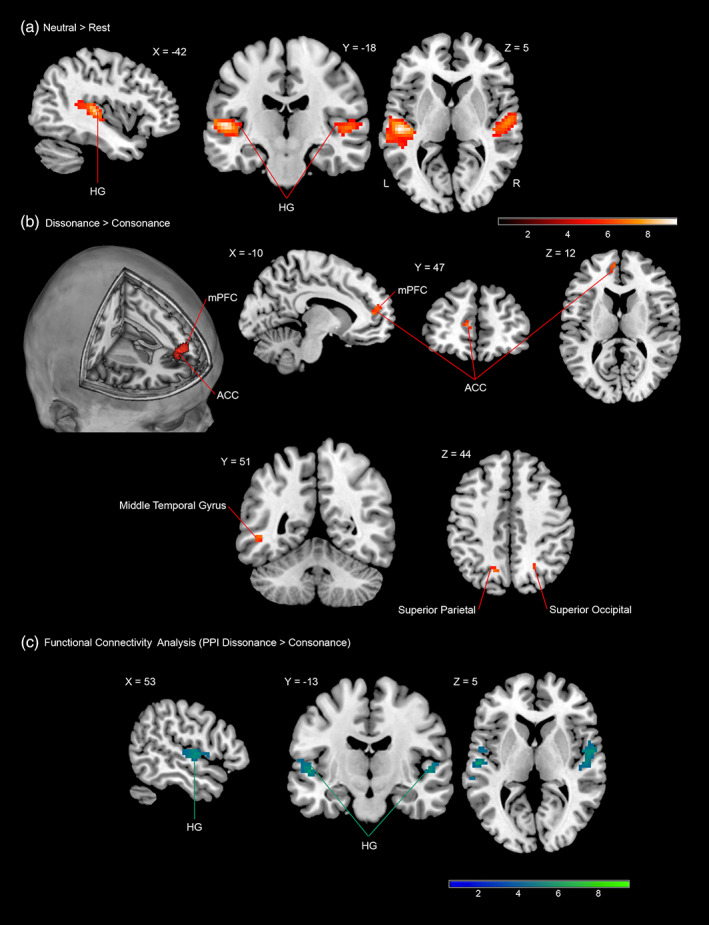

Figure 5.

fMRI results (FWE‐corrected p < .05 for cluster‐level inference). Coloured areas (red) reflect: (a) statistical parametric maps (SPM) of the direct contrast between the neutral condition and rest superimposed onto a standard brain in stereotactic MNI space. Similar patterns of brain response were found for the contrasts: dissonance > rest and consonance > rest; signal changes were observed bilaterally in primary and secondary auditory cortices including Heschl's gyri (HG) bilaterally (sagittal, coronal and axial views). (b) Statistical parametric maps showing voxels in the left medial prefrontal cortex (mPFC) and left rostral anterior cingulate cortex (ACC) in which the response was higher during dissonant music compared to consonant music (3D rendering sagittal oblique, sagittal, coronal and axial views). This contrast also evidenced signal differences (at uncorrected p < .001 for voxel‐level inference) in the left superior parietal, right superior occipital, and left middle temporal gyrus (coronal and axial views). (c) Blue colour identifies voxels in both the left and right primary auditory cortex, which exhibited stronger functional connectivity with seed voxels (6 mm sphere) located in the left rostral anterior cingulate cortex during the dissonant condition compared with the consonant condition

3.4.2. Comparison of interest: Dissonant music vs. consonant music

The statistical parametric maps for the contrast dissonance > consonance revealed significant activation in the left medial prefrontal cortex (mPFC) and in the left rostral anterior cingulate cortex (ACC) (Table 5 and Figure 5). The observation of left‐lateralized neural responses in medial prefrontal cortices (mPFC and ACC) during the dissonance condition supports previous studies that have reported activity within these brain structures being modulated by withdrawal‐related affects (i.e., emotions induced by unpleasant events) (Barrett & Wager, 2006; Wager et al., 2003). The findings are also in agreement with empirical evidence suggesting that the mPFC and the ACC could be fundamental elements of a brain network in charge of the appraisal and expression of negative emotion (Carretié et al., 2009; Carretié, Hinojosa, Mercado, & Tapia, 2005; Etkin et al., 2011).

The analysis of the contrast dissonance > consonance also evidenced signal differences (at uncorrected p < .001 for voxel‐level inference) in the left Superior Parietal, right Superior Occipital, right Cuneus and left Middle Temporal Gyrus (Table 5 and Figure 5); however, the functional significance of these results should be treated with caution since they only passed an uncorrected p < .001 threshold. It should be noted, however, that signal changes within these visual and attentional areas (i.e., left Parietal Superior, right Occipital Superior and right Cuneus) were only observed while participants listened to the dissonant portion of the task. These findings converge with previous empirical evidence that has reported activation of both spatial and nonspatial attentional pathways in response to negative stimuli or threat‐related signals (Cahill & McGaugh, 1995; Carretié et al., 2008; Carretié, Martín‐Loeches, Hinojosa, & Mercado, 2001; Dolcos & Cabeza, 2002; Dolcos, LaBar, & Cabeza, 2004; Kosslyn et al., 1996; Lang et al., 1998; Phelps, LaBar, & Spencer, 1997).

The results did not reveal any suprathreshold activations at the level of the parahippocampal gyrus (even after small volume correction). This suggests that the involvement of the parahippocampal gyrus in previous studies targeting musical dissonance could be mediating other functions, such as associative or contextual memory (Aminoff, Kveraga, & Bar, 2013; Bar, Aminoff, & Schacter, 2008; Bravo, Cross, Hawkins, et al., 2017), possibly linked to the cognitive processes initiated by the tasks themselves which participants were instructed to perform within these previous studies (e.g., emotion labeling/categorization) (Blood et al., 1999; Bravo, Cross, Hawkins, et al., 2017; Gosselin et al., 2006; Koelsch et al., 2006).

For the opposite contrast (consonance against dissonance), no significant activations were observed.

3.4.3. Familiarity ratings

Familiarity ratings obtained at the end of the fMRI experiment revealed no significant differences between the two music conditions (p = .723), which indicated that the musical materials in both conditions appeared to be similarly unfamiliar to all subjects.

3.4.4. PPI analysis

We tested whether the rostral ACC and the mPFC could exert influences on parietooccipital or sensory areas via reciprocal functional connections [e.g., regulation of attention (Bush, Luu, & Posner, 2000; Cardinal, Parkinson, Hall, & Everitt, 2002; Carretié et al., 2005; Carretié, Hinojosa, Martín‐Loeches, Mercado, & Tapia, 2004; Lane et al., 1998; Ploghaus et al., 1999; Posner, 1995; Turak, Louvel, Buser, & Lamarche, 2002)]. We employed a PPI analysis, defining a seed region (sphere with a 6 mm radius) around the highest activated peak for each subject within the ACC. The results did not show significant functional connectivity between the left rostral ACC and any occipitoparietal attentional cortices. However, both the left and right primary auditory cortex showed stronger functional connectivity with the left rostral ACC during the dissonant condition (compared with the consonant condition), indicating a potential modulatory influence of medial prefrontal areas on perceptual auditory cortices, and suggesting a demand for greater information integration while participants were listening to the dissonant musical variations.
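The logic of the PPI model can be illustrated with a minimal sketch: a psychological vector (dissonance coded +1, consonance −1), the seed time course, and their product as the interaction term of interest. This is a simplification under stated assumptions. The interaction is formed directly on the measured signal, whereas SPM forms it after deconvolving the seed signal to the neural level, and all data, names and effect sizes below are synthetic placeholders.

```python
import numpy as np

def ppi_design(seed_ts, condition_labels):
    """Columns: psychological, physiological, interaction (PPI), constant."""
    coding = {"dissonant": 1.0, "consonant": -1.0}  # other labels -> 0
    psych = np.array([coding.get(c, 0.0) for c in condition_labels])
    physio = np.asarray(seed_ts, dtype=float)
    physio = physio - physio.mean()   # centre the seed time course
    interaction = psych * physio      # simplified PPI term (no deconvolution)
    return np.column_stack([psych, physio, interaction, np.ones_like(physio)])

# Synthetic example: 80 scans cycling rest / dissonant / rest / consonant.
labels = (["rest"] * 10 + ["dissonant"] * 10 +
          ["rest"] * 10 + ["consonant"] * 10) * 2
rng = np.random.default_rng(0)
seed = rng.standard_normal(len(labels))   # stand-in for the ACC seed signal
X = ppi_design(seed, labels)

# Noise-free target whose coupling with the seed is stronger during dissonance.
target = 0.5 * X[:, 1] + 0.4 * X[:, 2]
beta, *_ = np.linalg.lstsq(X, target, rcond=None)
```

A positive weight on the interaction column (`beta[2]`) corresponds to stronger seed‐to‐target coupling during the dissonant than during the consonant condition, which is the pattern reported above for the auditory cortices.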

The statistical analysis did not show significant results for the PPI analysis based on the mPFC as seed region, within the experimental contrast of interest (dissonance vs. consonance). The results for this analysis are described in Table 5 and displayed in Figure 5.

3.4.5. Parametric modulation analysis

No significant suprathreshold clusters were found at the group level when comparing the model including the valence rating as parametric regressor with the tonalness model. These results indicate that there were no significant differences between the effects of tonalness level and those modulated by the valence ratings. It is important to note, however, that the small differences between the average valence ratings within condition may not have provided enough variability to model a distinctive interaction between trials and the valence variate, when compared to the tonalness model.

4. DISCUSSION

The present experiment involved participants listening to instrumental music, which was strictly controlled for relative degrees of consonance/dissonance. A behavioral experiment was first conducted to evaluate the distribution of the two contrasting conditions in the three‐dimensional affective space defined by valence, arousal and potency (Osgood et al., 1967; Russell, 1979). No significant differences between the two conditions were found in either the arousal or the potency dimension. However, the statistical analysis revealed that participants rated the music variations differently in terms of valence. A comparison between all variations of contrasting consonance/dissonance level with each other evidenced a significant effect of consonance/dissonance level on the valence ratings. The consonant variations consistently received more positively valenced judgments than the dissonant variations. Furthermore, no significant differences were found between variations belonging to the same condition in any of the three affective dimensions, supporting our assumption of a uniform affective impact within each music category.

Overall, these findings appear to indicate that the specific musical structure manipulation employed (i.e., consonance/dissonance level controlled by interval content) could be applied to isolate emotional valence from the arousal dimension, which many studies fail to dissociate (Carretié et al., 2009) and, more importantly, that it may facilitate the identification of the neural circuitry involved in the processing of negative emotion.

In the neuroimaging (fMRI) study, 16 subjects with no more than amateur musical training (i.e., less than 3 years) listened to the same musical materials as in the behavioral study. Since the main interest was centered on assessing the brain's unprompted response to music, a passive listening task was employed instead of an emotional discrimination task. This approach had the value of providing information about more subliminal functions of music, such as its role in audiovisual settings. In agreement with previous studies that have used passive listening paradigms containing harmonized musical passages (Brown et al., 2004; Menon et al., 2002; Ohnishi et al., 2001), signal changes in primary and secondary auditory cortices (bilaterally) were detected during the presentation of music or neutral sound compared to the rest condition. There was no evidence for supra‐threshold signal changes in the contrast consonance versus dissonance. While participants listened to dissonant compared to consonant music, activation was found within a cluster comprising the left medial prefrontal cortex and the left rostral anterior cingulate cortex. Brain responses to dissonance were also observed in attentional (parietal superior) and visual areas (occipital superior). To our knowledge, this is the first observation of responses in such areas during passive listening to strictly controlled musical dissonance.

4.1. Comparison with other neuroimaging studies employing musical dissonance in their paradigms (task‐related and instrumental‐timbre influences)

Two relevant neuroscientific studies should be mentioned, which have already explored the affective reactions elicited by consonance and dissonance (Blood et al., 1999; Koelsch et al., 2006). In the positron emission tomography (PET) study by Blood et al. (1999), dissonance level was controlled by presenting participants with a melody specifically composed for the study, which was manipulated through altering the harmonic structure of its accompanying chords. During the neuroimaging experiment, participants were instructed to respond to an emotional discrimination task (i.e., rating of emotional valence) following each of the six scans that every subject underwent. Increasing dissonance correlated with activity in right parahippocampal gyrus and right precuneus. Higher ratings of unpleasantness, which correlated with increasing dissonance, covaried with cerebral blood flow changes in right parahippocampal gyrus and left posterior cingulate. In the study by Koelsch et al. (2006), fMRI was employed to investigate the brain circuits mediating emotions with positive and negative valence elicited by consonant and permanently dissonant counterparts of classical music excerpts. Participants performed two tasks while being scanned: a rhythmic tapping task with the purpose of controlling participants' attention to the stimuli and an emotional discrimination task, in which participants had to indicate how pleasant or unpleasant they felt following each musical excerpt. During the presentation of unpleasant music (contrasted to pleasant music), activations were found in left hippocampus, left parahippocampal gyrus, right temporal pole, and left amygdala. When contrasting pleasant versus unpleasant music, they observed activations of the Heschl's gyrus, anterior superior insula, and left inferior frontal gyrus.

Our study builds on this work and tries to overcome certain limitations observed in these influential studies. In both cases, participants were instructed to perform specific tasks during the scanning sessions (i.e., not a passive listening task). This particular aspect of the experimental design should be taken into consideration when comparing results, since differences in the activated neural circuitry could be related to the cognitive processes initiated by the tasks themselves, and not only to the affective impact elicited by the musical structural characteristics (Brown et al., 2004).

Furthermore, in the fMRI experiment by Koelsch et al. (2006) the unpleasant stimuli were obtained by electronically manipulating naturalistic music taken from commercially available CDs. As stated by the authors: “for each pleasant stimulus, a new sound file was created in which the original (pleasant) excerpt was recorded simultaneously with two pitch shifted versions of the same excerpt, the pitch‐shifted versions being one tone above and a tritone below the original pitch”. It is important to note that, while the experiment carefully controlled for aspects such as dynamics, rhythmic structure and melodic contour, instrumental timbre differences can be recognized in an aural comparative inspection of pleasant versus unpleasant counterparts, and these are caused by the specific manipulation employed (i.e., merged audio files). Because the study did not use original stimuli but commercially available music, results observed in certain subtractive analyses could be interpreted not only as the reaction to different levels of dissonance, but also as brain mechanisms underlying personal associations to the sonically manipulated familiar music (e.g., instrumental tone color alteration). Empirical evidence has shown that musical tension can be induced by many factors, including instrumental timbre (Barthet et al., 2010; Menon et al., 2002; Paraskeva & McAdams, 1997). Accordingly, timbre may elicit specific neural responses that could be confounded with the dissonance processing circuitry, and therefore, harmonic transformation might not be the only explanatory variable for the findings reported in Koelsch et al. (2006). In the present study, instrumental timbre was controlled by employing identical sample‐based virtual instruments in the two contrasting conditions (i.e., the manipulation of the musical materials was performed at the MIDI level and not at the sound‐wave level); consequently, the instrumental tone color remained unmodified.

4.2. Neural circuitry involved in processing unpleasant events (ACC and mPFC involvement in the appraisal and expression of negative emotion)

The significant activations in the medial prefrontal cortex (mPFC) and rostral anterior cingulate cortex (ACC), which were present while participants listened to the dissonant condition compared to the consonant condition, exhibit an overlap with part of the neural circuitry involved in the reaction to unpleasant events (Carretié et al., 2005; Carretié et al., 2009). The mPFC and the ACC appear to be fundamental elements of a brain network that is in charge of evaluating the risks of a situation and deciding a response (Carretié et al., 2009; Etkin et al., 2011). Other components of this network are the amygdala and the anterior insula; however, signal changes were not observed, at a group level, in these other regions. As “evaluative” brain areas, the mPFC and the ACC share two important properties: they acquire direct information from sensory cortices or sensory nuclei, and they have direct access to effector regions. Specifically, the mPFC and the rostral ACC show anatomical projections to the amygdala, the periaqueductal grey and the hypothalamus, as well as to other regions of the limbic system, including its output structures (Beckmann, Johansen‐Berg, & Rushworth, 2009; Chiba, Kayahara, & Nakano, 2001; Etkin et al., 2011; Rempel‐Clower & Barbas, 1998). Furthermore, they are both strongly interconnected and can modulate the activity of one another (Cavada, Compañy, Tejedor, Cruz‐Rizzolo, & Reinoso‐Suárez, 2000; Morecraft, Geula, & Mesulam, 1992). Studies have shown that nonnegative events with an arousal value similar to that of negative events do not elicit activations in this brain circuit, and therefore, it has been proposed that the valence dimension, and the corresponding significance for the individual, might explain processes initiated in these evaluative regions (Carretié et al., 2009).

A number of recent human neuroimaging, animal electrophysiology, and human and animal lesion studies exploring the role of the ACC and mPFC in the processing of anxiety and fear, have reported an involvement of these regions in the appraisal and expression of negative emotion (Etkin et al., 2011; Hashemi et al., 2019; Mechias et al., 2010). Appraisal refers to evaluation of the meaning that a stimulus (internal or external) presents to an organism. Only stimuli that are appraised as motivationally significant will induce an emotional reaction. Empirical evidence during trace fear paradigms has indicated that the ACC is involved in making cue‐related fear memory associations and in the termination of fear behavior (“un‐freezing”) (Steenland, Li, & Zhuo, 2012). Appraisal may require slow and carefully controlled conscious processing; however, this process can also be automatic and occur under conditions of restricted awareness, focusing on basic stimulus dimensions such as affective valence. Studies have consistently found greater activation of medial prefrontal cortices in response to unconsciously perceived negatively valenced events compared to nonnegative unconscious stimulation (Carretié et al., 2005). In light of our findings, and considering that we utilized a passive listening task, the increased neural response observed in the mPFC and ACC during dissonance (compared to consonance) may provide further support for an automatic brain response to an impending threat; in this case, as symbolically implied by musical dissonance. Regardless of the fact that many response patterns are culturally learned and happen in a specific context, there is substantial evidence indicating that dissonant music induces unpleasant connotations in most ordinary western‐culture listeners (Blood et al., 1999; Costa, Bitti, & Bonfiglioli, 2000; Plomp & Levelt, 1965; Trainor & Heinmiller, 1998; Zentner & Kagan, 1996). 
As such, this connotative facet of musical dissonance has characteristically been used to shape the emotional comprehension of visual narratives in horror films, where it is often employed to signal threats or to negatively bias the spectators' attribution of mental states to the characters depicted in the films (Bravo, 2013, 2014).

4.3. The ACC role in conflict detection and conflict resolution mechanisms

The neuroscientific data analyses showed no significant differences when comparing valence‐modulated effects (parametric modulation) with a model based solely on tonalness level. These results may indicate that the activity found at the level of the ACC could also be initiated by conflict detection/resolution mechanisms involved in the listeners' demanding attempt to find a tonal center in the dissonant musical sequences used in our experiment (Etkin et al., 2011; Etkin, Egner, Peraza, Kandel, & Hirsch, 2006; Krumhansl & Kessler, 1982; Krumhansl & Toiviainen, 2001; Temperley, 2010).

Defined as “the degree to which a sonority evokes the sensation of a single pitched tone” (Parncutt, 1989), the notion of tonalness is central to Western music. Certain musical passages can evoke a clear perception of a tonal center (i.e., high tonalness) while others may communicate more unpredictable and equivocal tonal centers (i.e., low tonalness) (Krumhansl & Toiviainen, 2001; Lerdahl & Krumhansl, 2007; Temperley, 2010). From a music theoretical point of view, tonalness is intimately linked to distinctive musical structural features, including: melodic organization (Ansermet, 2000), the conventional use of harmonic sequences (Costère, 1954; Hindemith, 1984; Koechlin, 2001; Riemann, 2018; Schenker & Oster, 2001), and the tendencies for certain tones and harmonies to be “resolved” to others (Bigand et al., 1996; Bigand & Parncutt, 1999; Lerdahl & Krumhansl, 2007). From the perspective of experimental research in music cognition, the tonalness level of a musical passage entails hierarchical melodic and harmonic implications (Koelsch, Rohrmeier, et al., 2013; Krumhansl & Toiviainen, 2001; Lerdahl & Krumhansl, 2007) and can, consequently, induce either clear (unambiguous) or conflictive tonal expectations in the listeners (Huron, 2008; Lerdahl & Krumhansl, 2007; Meyer, 1961; Temperley, 2002).

Cognitive control denotes the ability to guide information processing and behavior in the service of a goal (Carter & van Veen, 2007). One monitoring function capable of regulating the extent to which cognitive control is engaged is thought to be the detection of conflict, which occurs when competing and mutually incompatible representations are concurrently active during task performance (Botvinick, Braver, Barch, Carter, & Cohen, 2001; Botvinick, Cohen, & Carter, 2004; Gruber & Goschke, 2004; van Veen & Carter, 2002, 2006). Historically, findings from EEG and functional neuroimaging studies led to the idea that the ACC could be the brain's conflict detection device (Bush et al., 2000, 1998). Engagement of the ACC has been reported in paradigms employing cognitively demanding tasks that contain stimulus‐response selection under competing streams of information (e.g., Stroop‐like tasks, verbal and motor response selection, divided‐attention tasks) (Bush et al., 1998). In the Counting Stroop task, sets of up to four vertically tiled words appear on the screen with an interval gap of 2 s. The task requires participants to report the number of words in each set. Neutral trials contain a single semantic category (e.g., “house” written three times), congruent trials include number words that are congruent with the correct response (e.g., “three” written three times) and interference trials comprise number words that are incongruent with the correct answer (e.g., “two” written three times). EEG and functional neuroimaging studies of the Stroop effect have revealed increased activation in the ACC during interference trials (Bush et al., 2000, 1998; MacLeod, 1991; MacLeod & MacDonald, 2000; Stroop, 1935).
Similar results have also been found in other cognitive tasks that manipulate conflict through the coactivation of incompatible response channels induced by conflicting information, such as the Eriksen flanker task (Eriksen & Eriksen, 1974; Eriksen & Schultz, 1979) and the Simon task (Ridderinkhof, 2002; Simon, 1969; Simon & Berbaum, 1990). Neuroimaging studies employing these tasks have almost invariably found dorsal ACC and dorsomedial prefrontal cortex activity during cognitive conflict detection processes, and specifically when comparing high‐ and low‐conflict trial types (Botvinick et al., 2001; Durston, Thomas, Worden, Yang, & Casey, 2002; Kerns et al., 2004; van Veen & Carter, 2005; van Veen, Cohen, Botvinick, Stenger, & Carter, 2001), whereas activity in the lateral prefrontal cortices was associated with the resolution of cognitive conflict (Botvinick, Nystrom, Fissell, Carter, & Cohen, 1999; Egner & Hirsch, 2005; Kerns et al., 2004).
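The three Counting Stroop trial types described above can be made concrete with a minimal sketch. It is purely illustrative and was not part of the present study: the function name `classify_trial` and the `NUMBER_WORDS` table are hypothetical, and the sketch covers only the cognitive version of the task, in which conflict arises between the meaning of a number word and the number of times it is displayed.

```python
# Illustrative only: hypothetical names, not taken from any published task code.
NUMBER_WORDS = {"one": 1, "two": 2, "three": 3, "four": 4}


def classify_trial(word: str, count: int) -> str:
    """Label a Counting Stroop trial.

    `word` is the word shown on screen and `count` is how many times it
    appears; the correct response is always `count`.
    """
    if not 1 <= count <= 4:
        raise ValueError("each set contains between one and four words")
    value = NUMBER_WORDS.get(word.lower())
    if value is None:
        # Word carries no numerical meaning, so it cannot compete with the count.
        return "neutral"
    # A number word either agrees with the count or conflicts with it.
    return "congruent" if value == count else "interference"


if __name__ == "__main__":
    for word, count in [("house", 3), ("three", 3), ("two", 3)]:
        print(word, count, classify_trial(word, count))
```

Under these assumptions, only the third example places the word meaning and the correct response in competition, which is the high‐conflict condition contrasted against the others in the studies cited above.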

Theoretically, conflict can occur anywhere within the information processing system. Although researchers had initially found the ACC engaged only during conflict between response representations (Milham et al., 2001; Nelson, Reuter‐Lorenz, Sylvester, Jonides, & Smith, 2003; van Veen et al., 2001), which suggested that the ACC could be selectively triggered by such conflicts, subsequent studies have shown that the ACC can also be engaged during conflicts between other types of semantic and conceptual representations (Badre & Wagner, 2004; van Veen & Carter, 2005; Weissman, Giesbrecht, Song, Mangun, & Woldorff, 2003). Music cognition research has suggested that low tonalness sequences could be inherently conflict‐demanding due to their tendency towards equivocal referential tonal centers (Bonin & Smilek, 2016; Huron, 2008; Johnson‐Laird, Kang, & Chang, 2012; Krumhansl & Kessler, 1982; Parncutt, 1989, 2011; Temperley, 2010), which in turn has been associated with the listeners' experience of increased musical tension (Bigand et al., 1996; Bigand & Parncutt, 1999; Blood et al., 1999; Bravo, Cross, Hawkins, et al., 2017; Lerdahl & Krumhansl, 2007; Schellenberg & Trehub, 1994; Temperley, 2010). We therefore believe that the enhanced activation of the rostral ACC (when comparing dissonant against consonant sequences) could also be explained in terms of conflict monitoring processes.

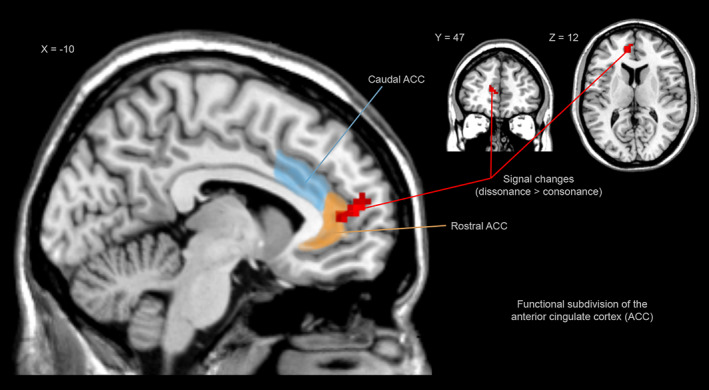

Interestingly, in contrast to the “dorsal” ACC findings during “cognitive” conflict monitoring, tasks involving “emotional” conflict processing, such as the processing of emotional distracters (Vuilleumier, Armony, Driver, & Dolan, 2001), and the processing of negatively valenced stimuli in emotional Stroop tasks (Bishop, Duncan, Brett, & Lawrence, 2004; Bush et al., 2000; Whalen et al., 1998) have consistently shown signal changes in the “rostral” anterior cingulate. Researchers have, consequently, proposed a functional division of the ACC in which caudal‐dorsal regions serve a variety of cognitive functions whereas rostral (or ventral) regions have been thought to play a role in the processing of emotional conflict (Bishop et al., 2004; Bush et al., 2000; Compton et al., 2003; Etkin et al., 2011; Whalen et al., 1998). This functional subdivision has also been reflected by distinct patterns of neural connectivity found in ventral compared to dorsal portions of the ACC (see aforementioned citations). For instance, studies using the “emotional” Counting Stroop task [which employs emotionally valenced words instead of number words for the interference trials (e.g., “murder” written three times) (Whalen, Bush, Shin, & Rauch, 2006)] have shown that different portions of the ACC can be recruited by manipulating the type of information (emotional vs. cognitive) that is being processed (Bush et al., 1998; Drevets & Raichle, 1998; Mayberg et al., 1999; Shulman et al., 1997; Whalen et al., 1998). The processing of cognitive information in high conflict situations has revealed increased activity in dorsal regions of the ACC (i.e., cognitive subdivision of the ACC according to Bush's theory), whereas processing affectively valenced information has been shown to activate the rostral‐ventral regions of the ACC (i.e., affective subdivision of the ACC) (Figure 6).
The fact that the ventral regions of the ACC were suppressed during cognitively demanding (but affectively neutral) parts of the Counting Stroop tasks and, conversely, that these ventral ACC regions were the only significant activations during the affective interference portion, has been interpreted as implying a role of the rostral ACC in the emotional processing of more complex stimuli, in particular, the evaluation of the emotional valence of words. Our findings seem consistent with those reported in emotional conflict paradigms (Figure 6) and appear to support a function of the rostral ACC in the monitoring of conflict also within affectively valenced musical information. Our results are also in line with studies tying rostral ACC activation to fear extinction (Phelps et al., 2004) and placebo anxiety reduction (Petrovic et al., 2005), processes deemed to demand “emotional” conflict resolution mechanisms (Etkin et al., 2006). As such, the rostral cingulate activation evidenced while participants listened to the dissonant (compared to consonant) sequences could further indicate a mechanism in which control over an emotional stimulus (i.e., tonally conflictive and negatively‐valenced musical sequence) is actively recruited in an attempt to diminish its effect or decrease its aversive perception (Blood et al., 1999; Koelsch et al., 2006; Petrovic et al., 2005; Price et al., 1999).

Figure 6.

According to the theory proposed by Bush and collaborators (Bush et al., 2000), the ACC presents a functional division in which caudal‐dorsal regions (blue colour) serve a variety of cognitive functions whereas rostral‐ventral regions are involved in emotional tasks (orange colour). Red colour indicates the fMRI results (sagittal view), showing stronger signals during dissonance (compared to consonance) in the left medial prefrontal cortex (mPFC) and in the left anterior cingulate cortex (ACC).

4.4. Emotional modulation of attention and perception