Abstract

Hypnosis and hypnotic suggestions are gradually gaining popularity within the consciousness community as established tools for the experimental manipulation of illusions of involuntariness, hallucinations and delusions. However, hypnosis is still far from being a widespread instrument; a crucial hindrance to taking it up is the amount of time needed to invest in identifying people high and low in responsiveness to suggestion. In this study, we introduced an online assessment of hypnotic response and estimated the extent to which the scores and psychometric properties of an online screening differ from an offline one. We propose that the online screening of hypnotic response is viable as it reduces the level of responsiveness only by a slight extent. The application of online screening may prompt researchers to run large-scale studies with more heterogeneous samples, which would help researchers to overcome some of the issues underlying the current replication crisis in psychology.

Introduction

Hypnosis and hypnotic suggestions have been shown to be useful experimental tools to test theories of cognitive neuroscience (Oakley & Halligan, 2013; Raz, 2011), especially theories related to consciousness (Cardeña, 2014; Terhune, Cleeremans, Raz, & Lynn, 2017). For instance, hypnotic suggestions can evoke changes in the feeling of voluntariness (Weitzenhoffer, 1974, 1980) or modify one's sense of agency (Haggard, Cartledge, Dafydd, & Oakley, 2004; Lush et al., 2017; Polito, Barnier, & Woody, 2013). Responses to suggestions frequently involve alterations in perception, such as the experience of positive and negative hallucinations or delusions (Kihlstrom, 1985; Oakley & Halligan, 2009). Moreover, hypnotic suggestions can be employed to simulate some properties of neurological and psychiatric conditions in healthy subjects (Barnier & McConkey, 2003; Oakley, 2006). Finally, correlations between hypnotisability and measures employed by consciousness researchers (e.g. the rubber hand illusion; the vicarious pain questionnaire; mirror touch synaesthesia) have recently been found (Lush et al., 2019). These correlations suggest that measures common in the consciousness literature are driven by hypnotic suggestibility. There is therefore an increasing need for an expansion of hypnosis research. Unfortunately, the successful application of hypnotic suggestions demands plenty of resources, making it impractical for researchers to run large-scale hypnosis-related studies. In order to conduct experiments involving hypnosis, researchers generally need to recruit from a specific subsample of people based on their tendency to respond to hypnotic suggestions. To achieve this, researchers run hypnosis screening sessions before recruitment, so that, for example, they can identify the participants at the lowest and highest end of the scale (low and highly hypnotisable people, respectively).
High and low hypnotisability are usually defined as the top and bottom 10–15% of screening scores (Barnier & McConkey, 2004; Anlló, Becchio & Sackur, 2017). Therefore, screening procedures are time-consuming; to identify a single highly suggestible participant for an experiment, one has to find, on average, ten people who are willing to undertake a screening that can last from 40 up to 90 min depending on the applied method.

The hypnosis screening procedure has moved through a long developmental process in which it has become more and more user friendly. Initially, the screening consisted of two steps, a preliminary group session applying the Harvard Group Scale of Hypnotic Susceptibility Form A (HGSHS:A; Shor & Orne, 1963) and an individual session using the Stanford Hypnotic Susceptibility Scale Form C (SHSS:C; Weitzenhoffer & Hilgard, 1962) conducted with only those scoring very high or low in the first session. The later development of a reliable group screening method, the Waterloo-Stanford Group Scale of Hypnotic Susceptibility (WSGC; Bowers, 1993), has drastically reduced the time required for screening as it allows researchers to screen up to a dozen people in about 90 min (although it was originally intended to act as a second screen after an HGSHS:A, a single screen with the WSGC is reliable enough to select subjects capable of later having compelling subjective responses to difficult suggestions, e.g. digit–colour synaesthesia, Anderson, Seth, Dienes, & Ward, 2014, or compelling objective reductions in Stroop interference to alexia (word blindness) suggestions, e.g. Parris, Dienes, Bate, & Gothard, 2014). Recently, the Sussex Waterloo Scale of Hypnotizability (SWASH; Lush, Moga, McLatchie & Dienes, 2018) was introduced, which is a modified version of the WSGC. The SWASH includes new items to measure the subjective experiences of the participants (compare also the Carleton University Responsiveness to Suggestion Scale [CURSS, Spanos, Radtke, Hodgins, Stam, & Bertrand, 1983], the Creative Imagination Scale [CIS, Wilson & Barber, 1978], and the Experiential Scale for the WSGC [Kirsch, Milling, & Burgess, 1988]). The length of the procedure was reduced to 40 min and it can be run with larger groups than the WSGC (Lush et al., 2018). Moreover, the dream and age regression suggestions were not included in the SWASH.
These highly personalised items of the WSGC can be risky by virtue of possibly triggering unpleasant memories or emotions (Cardeña & Terhune, 2009; Hilgard, 1974).

Nonetheless, the application of the least demanding methods (such as the SWASH, the CURSS or the CIS) still requires potential participants to attend a group session, which makes the screening procedure relatively time-consuming and limits the subject pools to psychology students, who are the easiest to incentivise to participate in a group screening on campuses. These two barriers to large-scale hypnosis studies could be overcome by employing fully automatised, online hypnosis screening procedures. In the last two decades, psychological science has witnessed growth in the application of online data collection for experimental purposes, paving the way for researchers to collect large samples in a short period of time (Reips, 2000; though it can come with its own problems, e.g. Dennis, Goodson & Pearson, 2018). In order to adapt the hypnosis screening procedure online, one needs to ensure that the non-“live” version can induce objective and subjective hypnotic responses similar to those induced by a “live” hypnotist. Indeed, suggestibility scores of participants are comparable when the hypnotic induction and suggestions are delivered by a pre-recorded audiotape and when they are delivered by an experimenter (Barber & Calverley, 1964; Fassler, Lynn, & Knox, 2008; Lush, Scott, Moga, & Dienes, 2019). These findings underpin the idea that the participants could easily undergo a hypnosis screening procedure in their own rooms by listening to a pre-recorded script and filling out the booklets online. Nevertheless, online data collection has its own perils, namely, the data acquired by online questionnaires might not be as reliable and the results might not be consistent with those of traditional data collection procedures (Krantz & Dalal, 2000).
Therefore, the reliability of new online questionnaires, such as the online version of a hypnosis screening procedure, needs to be tested even if there is evidence that the quality of the data and the findings of online-based studies can be similar to those obtained by traditional methods (Gosling, Vazire, Srivastava, & John, 2004; Buhrmester, Kwang, & Gosling, 2011).

In this project, our purpose is to explore the extent to which an online hypnotic screening procedure is reliable and consistent with an offline procedure. To this aim, we measured people's hypnotic suggestibility with the SWASH on two separate occasions and in two different environments. Henceforth, we call every type of data collection carried out in a controlled environment with the experimenter present an offline screening, whereas undertaking a hypnotic screening alone in one’s own room under one’s own control will be called online screening. In addition, we are interested in the extent to which the length of the delay between first and second screen can influence the reliability and the scores of hypnotic suggestibility. The question of the stability of hypnotic suggestibility over periods ranging from a few days to decades has inspired various research projects (e.g. Fassler, Lynn, & Knox, 2008; Lynn, Weekes, Matyi, & Neufeld, 1988; Piccione, Hilgard, & Zimbardo, 1989). To assess the stability of hypnotic suggestibility, we recruited half of the sample from the subject pool of 2016 and the other half from that of 2017; all of these participants had already received offline screening. Therefore, for some of the participants, the delay between the two screenings is not more than 6 months (short delay group), whereas for the others, it is at least one and a half years (long delay group). For practical reasons, the first screening was organised offline, in groups of 20–40 for all the participants, whereas the second screening was either an online screening or another offline one. By this method, we are able to estimate how strongly the type of the screening and the length of the delay can influence people's suggestibility scores; we can also assess their influence on the test–retest reliability and the validity of the screening.
Taken together, this project strives to explore whether a well-established offline screening procedure could be replaced for practical purposes by an online version, which could help consciousness researchers run more and larger hypnosis studies by drastically cutting the recruitment-related costs.

While responding to hypnotic suggestions, people tend to experience being in some form of trance or altered state (Kihlstrom, 2005; Kirsch, 2011). This experience is usually measured by subjective reports of depth of hypnosis (e.g. Hilgard & Tart, 1966), which is, interestingly, strongly associated with people's ability to respond to hypnotic suggestions (Wagstaff, Cole, & Brunas-Wagstaff, 2008). We investigate this link by assessing the strength of the relationship between hypnotic suggestibility scores and depth of hypnosis reports, and the extent to which the experimental manipulations described above can influence this relationship. We also aim to evaluate the extent to which depth of hypnosis is influenced by the type of data collection and the length of the delay between screens, to ensure that people experience comparable levels of hypnotic depth during online and offline screens.

In our analyses, we solely employed estimation procedures instead of testing the existence of differences with an inferential statistical tool such as the null-hypothesis significance test (Fisher, 1925; Neyman & Pearson, 1933) or the Bayes factor (e.g. Dienes, 2011; Rouder et al., 2009). Estimation is recommended over inferential statistics when the existence of a difference is established or it is not relevant (Jeffreys, 1961; Wagenmakers et al., 2018). The second point proves to be decisive for our case, since it is not necessary to test the existence of any investigated effect to answer our research questions. For instance, the core aim of the current project was to draw a conclusion about the applicability of online hypnosis screening by comparing the SWASH scores, the reliability and the validity of online and offline hypnosis screening. Imagine a scenario in which an inferential statistical tool demonstrates evidence for the difference between the offline and online groups in favour of the offline group in all aspects that assess the quality of the measurement. Importantly, this outcome per se cannot give a definite answer to our central question, as the mere fact that offline screening is significantly better than online screening neglects the question of the magnitude of the difference. To reject or accept the idea that online screening is viable, we need to know the extent to which the quality of offline and online screening differs so that we can decide whether the benefits of the online screen outweigh its costs. Further, the fact that the two types of screening will correlate cannot be in doubt; the question is simply the strength of the relationship between them.

To explore the range of plausible effect sizes, estimation methods, either from the Bayesian (Kruschke, 2010, 2013; Rouder, Lu, Speckman, Sun, & Jiang, 2005; Wagenmakers, Morey, & Lee, 2016) or from the frequentist school (Cumming, 2014) can be used. Here, we applied a Bayesian tool, estimation by calculating the 95% Bayesian Credibility Intervals, as this is the method that is appropriate to answer our research question; namely, how confident can we be that the true effect size lies within a specific interval (Wagenmakers et al., 2018). Only Credibility Intervals allow us to make claims such as that the true value of the effect size is probably not larger or smaller than a particular value.

Methods

Participants

Psychology students at the University of Sussex participated in an offline hypnosis screening as part of one of their modules during the first semester of their studies. We recruited psychology students who had started their BSc studies in 2016 or 2017 and who had provided their contact information in an offline hypnosis screening session. Both subject pools consisted of around 300 students and we randomly assigned half of them to each experimental group (experimental groups described below). Thus, we invited around 150 people for each group. We continued data collection until the end of the spring semester of 2018. In the second session, 73 students participated. However, we could not trace the data of two students back to their first session results and so we needed to exclude them from all of the analyses, leaving us with 71 participants in total. Twenty-six students attended the offline session (23 females, Mage = 19.7, SDage = 1.8) and 45 students completed the screening online (41 females, Mage = 21.0, SDage = 5.3).

We informed each participant about the nature of the study, and only those students who agreed to the terms and conditions of the study were able to attend. After finishing the experiment, the participants were debriefed and received a payment of £5 or course credit. The study was approved by the Ethical Committee of the University of Sussex (Sciences & Technology C-REC).

Materials

One of the authors produced the audio recording of the hypnosis procedure (induction and the suggestions); the length of this recording was 28 min. The questionnaire applied in the first session for data collection was created in MATLAB (MathWorks, 2016), whereas the questionnaire that was used in the second session was a PHP-based website. The PHP script, the materials and the documentation on how to install the software can be accessed at https://osf.io/6twdp/.

Measures

The measures introduced below were used on the first occasion of the data collection. The second occasion only included the assessment of hypnotic suggestibility measured by the SWASH, regardless of the type of the session (offline or online). Note that, although several questionnaires were administered along with the first screening, we only used the suggestibility scores of the participants in this project (see our research questions in the last paragraph of the Introduction).

SWASH

The hypnotisability of the students was measured by the SWASH. This scale is a modified version of the WSGC (Bowers, 1993) which contains 10 suggestions and corresponding items measuring objective suggestibility and the subjective experiences of the participants about each suggestion.

Data collection in 2016

As part of the first session in 2016, the following four questionnaires were administered: (a) Barratt Impulsiveness Scale (BIS-11), which consists of 30 items and measures people's tendency to behave impulsively (Patton & Stanford, 1995); (b) Free Will Inventory (FWI), which includes 29 items measuring people's beliefs about free will and their relationships with these beliefs (Nadelhoffer, Shepard, Nahmias, Sripada, & Ross, 2014); (c) Short Form of the Five Facet Mindfulness Questionnaire (FFMQ-SF), which is a 24-item scale assessing the mindfulness skills of individuals via self-report (Bohlmeijer, ten Klooster, Fledderus, Veehof, & Baer, 2011); (d) Dissociative Experiences Scale-II (DES-II), which is a 28-item self-report questionnaire developed by Bernstein and Putnam (1986).

Data collection in 2017

In 2017, we administered the following four measures in the first data collection session: (a) a 15-min-long breath counting exercise based on Study 2 of Levinson, Stoll, Kindy, Merry & Davidson (2014); (b) the Mindful Attention Awareness Scale (MAAS), consisting of 15 Likert scale items (Brown & Ryan, 2003); (c) the Schizotypal Personality Questionnaire-Brief (SPQ-B), which consists of dichotomous questions (Raine & Benishay, 1995); (d) the DES-II that was used in 2016.

Design

We employed a 2 × 2 × 2 mixed design. The within-subjects variable is the date of the data collection (first session vs. second session). The between-subjects independent variables are the form of the second hypnosis screening session (offline vs. online) and the length of the delay between the first and the second sessions (short delay [few months] vs. long delay [more than a year]).

Procedure

There were three forms of data collection: (1) group sessions at the university with the experimenter present (first, offline screen); (2) individual sessions in a small experimental room at the university with the experimenter present (second, offline screen); (3) individual sessions at home (second, online screen). All of the participants engaged in the first, offline, screen and later they were invited to attend a second screen that was either offline or online. The procedure of the screening was identical in each case and followed the steps below.

After providing informed consent, the participants had the opportunity to provide contact details for a database in case they were willing to participate in hypnosis-related research in the future. Next, they were asked to adjust the volume of their headphones until it was moderately loud by listening to a test tune. Before starting the hypnotic induction procedure, they were notified that the whole procedure would last about 45 min and that they should not take a break. By pressing the start button, participants started the recording of the hypnotic induction and suggestions. After the de-induction, participants were asked to fill out the SWASH response booklet, rating their response to each suggestion. Finally, the participants were thanked for attending and debriefed.

Data analysis

Data transformation

We computed the Objective and Subjective suggestibility scores of the participants as described in the SWASH manual (Lush et al., 2018) and then we doubled all subjective scores so that both the objective and subjective scores fell between 0 and 10. By taking the weighted average of these derived scores, we calculated the composite SWASH score of each participant, which was used in the majority of the analyses. For more details on the calculation of the SWASH scores, see Lush et al. (2018; manual available at https://osf.io/wujk8/). Given that the distributions of the objective, subjective and composite SWASH scores of the first screen were all fairly normal (see Fig. 1 in Preregistration), we assumed that the dataset of the second screen was also normally distributed. Therefore, we planned to use parametric methods to estimate the strength of correlation between continuous variables (Pearson's r).
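The transformation described above can be sketched as follows. This is a minimal illustration, not the preregistered script: the function name `composite_swash` is hypothetical, the inputs are assumed to be already-aggregated scores (objective on 0 to 10, subjective on 0 to 5 before doubling), and the equal weighting is an assumption. Equal weights are at least consistent with the group means in Table 1, where each composite mean is the average of the corresponding objective and subjective means.

```python
def composite_swash(objective, subjective_raw, w_objective=0.5):
    """Return (objective, doubled subjective, composite) SWASH scores.

    objective      -- objective score, assumed to lie in [0, 10]
    subjective_raw -- subjective score before doubling, assumed to lie in [0, 5]
    w_objective    -- weight of the objective score (0.5 is an assumption;
                      see the SWASH manual for the actual procedure)
    """
    if not 0 <= objective <= 10:
        raise ValueError("objective score must lie between 0 and 10")
    if not 0 <= subjective_raw <= 5:
        raise ValueError("raw subjective score must lie between 0 and 5")
    if not 0 <= w_objective <= 1:
        raise ValueError("weight must lie between 0 and 1")

    # Double the subjective score so both subscales share the 0-10 range.
    subjective = 2 * subjective_raw
    # Weighted average of the two rescaled subscales.
    composite = w_objective * objective + (1 - w_objective) * subjective
    return objective, subjective, composite


# Hypothetical participant: objective 4 out of 10, raw subjective 1.5 out of 5.
print(composite_swash(4, 1.5))
```

With the default weight, the hypothetical participant above gets a doubled subjective score of 3.0 and a composite of 3.5.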

Bayesian estimation

In this project, we estimated the population effect sizes and did not test hypotheses. Thus, here, we report the estimates (e.g. mean or correlation) and the 95% Bayesian credibility intervals (CI) applying a uniform prior distribution. Note that although the bounds of the CIs are numerically equal to the bounds of the confidence intervals (assuming a uniform prior), their interpretation is different (e.g. Morey, Hoekstra, Rouder, Lee, & Wagenmakers, 2016).
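As an illustration of how such an interval can be obtained for a correlation, the sketch below uses the common Fisher z approximation with a normal quantile. That the preregistered R script used exactly this computation is an assumption, and the function name `pearson_r_interval` is hypothetical. Under a uniform prior the resulting bounds would be read as a credibility interval, as explained above.

```python
from math import atanh, sqrt, tanh
from statistics import NormalDist


def pearson_r_interval(r, n, level=0.95):
    """Approximate interval for a Pearson correlation via Fisher's z.

    r     -- sample correlation, strictly between -1 and 1
    n     -- number of paired observations (must exceed 3)
    level -- coverage of the interval, e.g. 0.95
    """
    if not -1 < r < 1:
        raise ValueError("r must lie strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be larger than 3")

    z = atanh(r)                                 # Fisher z transform of r
    se = 1 / sqrt(n - 3)                         # approximate standard error of z
    crit = NormalDist().inv_cdf(0.5 + level / 2) # about 1.96 for level = 0.95
    # Build the interval on the z scale, then back-transform to the r scale.
    return tanh(z - crit * se), tanh(z + crit * se)


# r = .79 with n = 45, the values reported for the online group's
# objective-subjective correlation.
low, high = pearson_r_interval(0.79, 45)
print(f"[{low:.2f}, {high:.2f}]")
```

For r = .79 and n = 45 this gives approximately [0.65, 0.88], which agrees with the interval reported for the online group in the Validity section.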

Implementation of the preregistration

The design and research questions of this study were preregistered at osf.io/3abje. In order to ensure the reproducibility of the analysis and decrease analytic flexibility, we preregistered an analysis script, written in R (R Core Team, 2018), prior to data collection. The script includes all of the steps defined in the preregistration and an additional data simulation, which helped us test and debug the script. In this paper, we present the results of analyses that were preregistered in the above-mentioned R script and results of two additional, non-preregistered analyses: (1) test–retest reliability of SWASH scores; (2) correlation between SWASH and depth of hypnosis scores. We deviated from the analysis script in one aspect. The calculation of the 95% CIs of the differences between two correlations was incorrect in the original script due to an issue with back-transformation of Fisher's z values of difference scores to Pearson's r (e.g. Meng, Rosenthal & Rubin, 1992; Olkin & Finn, 1995). Therefore, we used the cocor R package (Diedenhofen & Musch, 2015), which is based on the approximation method of Zou (2007), to estimate the 95% CIs of the differences between correlations.
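The idea behind Zou's (2007) approach for two independent groups can be sketched as below. This is an illustration of the published formula as commonly stated, not a reproduction of the cocor package's code; the function name `zou_difference_interval` is hypothetical. The method avoids back-transforming a difference of Fisher z values and instead combines the distances between each correlation and its own interval bounds.

```python
from math import sqrt


def zou_difference_interval(r1, ci1, r2, ci2):
    """Interval for r1 - r2 from two independent groups (after Zou, 2007).

    r1, r2   -- the two correlations
    ci1, ci2 -- (lower, upper) interval bounds of r1 and r2, e.g. obtained
                with the Fisher z method
    Returns (difference, lower bound, upper bound).
    """
    l1, u1 = ci1
    l2, u2 = ci2
    diff = r1 - r2
    # The lower bound combines the lower margin of r1 with the upper margin of r2.
    lower = diff - sqrt((r1 - l1) ** 2 + (u2 - r2) ** 2)
    # The upper bound combines the upper margin of r1 with the lower margin of r2.
    upper = diff + sqrt((u1 - r1) ** 2 + (r2 - l2) ** 2)
    return diff, lower, upper


# Test-retest correlations of the composite score as reported in Table 2:
# offline r = .62 [.31, .81], online r = .74 [.57, .85].
diff, lower, upper = zou_difference_interval(0.62, (0.31, 0.81), 0.74, (0.57, 0.85))
print(f"{diff:.2f} [{lower:.2f}, {upper:.2f}]")
```

Fed with the rounded composite test–retest values from Table 2, the sketch yields a difference of about −0.12 with bounds of roughly −0.45 and 0.13 to 0.14, in line with the tabled interval up to rounding of the inputs.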

Results

SWASH scores

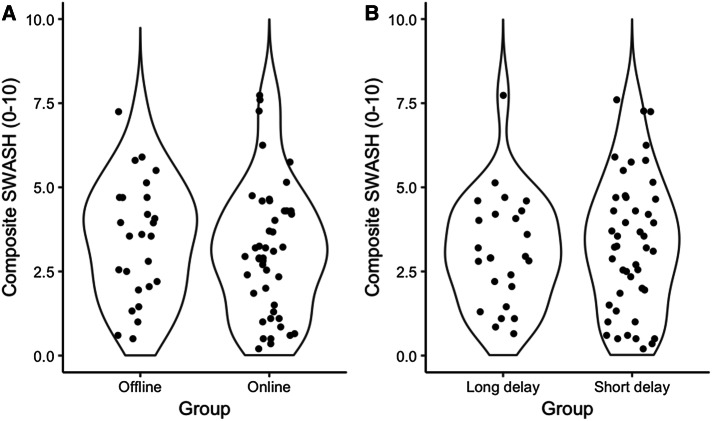

The mean of the composite SWASH scores in the offline group (M = 3.44) was only slightly larger than the mean of the online group (M = 3.13), rendering their difference negligible (Mdiff = 0.31, 95% CI [−0.59, 1.22]). Crucially, the difference between the groups is unlikely to be larger than 1.22. The difference between the offline and online groups is likely to be negligible or small for both the objective (Mdiff = 0.39, 95% CI [−0.58, 1.36]) and subjective subscales (Mdiff = 0.24, 95% CI [−0.72, 1.19]). Panel A of Fig. 1 demonstrates that the distribution of the composite SWASH scores of the offline group is akin to that of the online group. The density of the data is similar between the groups even around the right tail (top) of the distribution, indicating that a similar proportion of the participants scored high on the SWASH in the offline and online groups. The mean of the composite SWASH scores was comparable in the short (M = 3.32) and long delay groups (M = 3.10), and the plausible values of their differences vary around zero with a maximum difference of 1.10 (Mdiff = 0.21, 95% CI [−0.67, 1.10]). Table 1 presents the means and 95% CIs of all groups and comparisons with the composite, objective and subjective scores separately.

Fig. 1.

Violin plots depicting the distribution of composite SWASH scores of the second screens broken down either by the type of the screen (offline vs. online, a) or by the length of the delay (long vs. short delay, b). Each black dot indicates a composite SWASH score of a participant

Table 1.

The mean composite, objective and subjective SWASH Scores with 95% CIs broken down by the type of the second screen and the length of the delay

| Group | Composite | Objective | Subjective |
|---|---|---|---|
| Offline | 3.44 [2.73, 4.16] | 3.92 [3.17, 4.67] | 2.96 [2.19, 3.73] |
| Online | 3.13 [2.54, 3.71] | 3.53 [2.89, 4.17] | 2.72 [2.13, 3.32] |
| Difference (offline − online) | 0.31 [−0.59, 1.22] | 0.39 [−0.58, 1.36] | 0.24 [−0.72, 1.19] |
| Short delay | 3.32 [2.72, 3.91] | 3.89 [3.26, 4.52] | 2.74 [2.14, 3.35] |
| Long delay | 3.10 [2.42, 3.78] | 3.28 [2.54, 4.02] | 2.93 [2.19, 3.67] |
| Difference (short − long) | 0.21 [−0.67, 1.10] | 0.61 [−0.34, 1.57] | −0.19 [−1.12, 0.75] |

Values within the square brackets represent the 95% CIs. Data presented in this table are based solely on the second screen

Validity

The correlation between the objective and subjective subscales of the SWASH was strong for the offline screen (r = .78, 95% CI [0.56, 0.89]) as well as for the online screen (r = .79, 95% CI [0.65, 0.88]), indicating appropriate validity in this respect. The difference between the offline and online screen in terms of the strength of the correlation between the objective and subjective scales was close to zero (r = −.02, 95% CI [−0.25, 0.17]).

Test–retest reliability (non-preregistered)

The correlation between the first and the second screen scores was strong for the subjective subscale, but only moderate for the objective subscale, irrespective of the type of the screen. For the composite scores, the correlation was strong for both the online and the offline group, indicating adequate test–retest reliability of the SWASH. Interestingly, the test–retest reliability of the online group was possibly higher than that of the offline group, although only to a small extent (see Table 2 for rs and their 95% CIs). The correlation between the first and second screen scores was strong in the short delay group for the subscales as well as for the composite scores. However, the correlation was only moderate in the long delay group, implying that the test–retest reliability of the SWASH is influenced by the length of the delay between the screens to a weak-to-moderate extent. Table 2 presents the exact correlation values and their 95% CIs separately for the experimental groups.

Table 2.

Test–retest reliability of SWASH Scores broken down by type of screen and length of delay

| Group | Composite | Objective | Subjective |
|---|---|---|---|
| Offline | 0.62 [0.31, 0.81] | 0.43 [0.05, 0.70] | 0.69 [0.42, 0.85] |
| Online | 0.74 [0.57, 0.85] | 0.59 [0.35, 0.75] | 0.77 [0.61, 0.87] |
| Difference (offline − online) | −0.12 [−0.45, 0.14] | −0.16 [−0.57, 0.20] | −0.07 [−0.37, 0.15] |
| Short delay | 0.79 [0.65, 0.88] | 0.65 [0.44, 0.79] | 0.81 [0.68, 0.89] |
| Long delay | 0.55 [0.20, 0.78] | 0.37 [−0.03, 0.67] | 0.56 [0.21, 0.78] |
| Difference (short − long) | 0.24 [−0.02, 0.61] | 0.28 [−0.08, 0.70] | 0.25 [−0.01, 0.61] |

The correlation values are all Pearson’s rs and the 95% CIs are reported within the square brackets

Depth of hypnosis

Difference between the groups

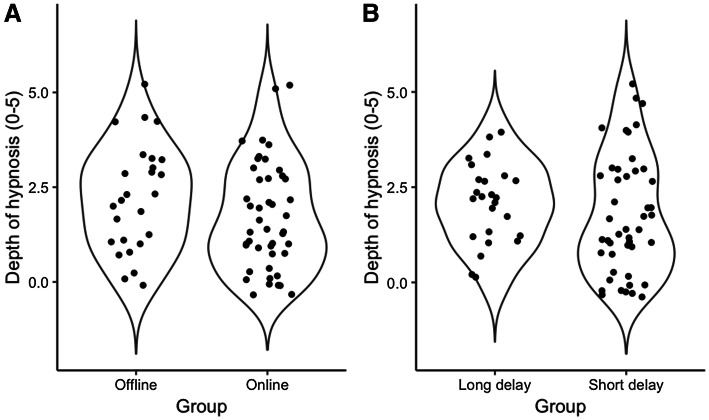

The participants reported somewhat higher depth of hypnosis scores in the offline (M = 2.15, 95% CI [1.61, 2.70]) than in the online (M = 1.73, 95% CI [1.31, 2.16]) group. Nonetheless, the difference between the groups is not substantial and the maximum plausible value of this difference is 1.10 (Mdiff = 0.42, 95% CI [−0.26, 1.10]). The mean of the depth of hypnosis scores in the short delay group (M = 1.80, 95% CI [1.35, 2.26]) differed only to a small extent from that in the long delay group (M = 2.04, 95% CI [1.59, 2.49]; Mdiff = −0.24, 95% CI [−0.87, 0.40]). Figure 2 portrays the distribution of the depth of hypnosis scores broken down by the type of the second screen (panel A) and the length of the delay between the first and second screen (panel B). The depth of hypnosis scores are similarly distributed in the offline and online groups.

Fig. 2.

Violin plots representing the distribution of depth of hypnosis scores separately for the offline and online screens (a), and for the short and long delay groups (b)

Correlation between SWASH and depth of hypnosis scores (non-preregistered)

The correlation between the SWASH and depth of hypnosis scores was strong for all but one measure in the online and for all in the offline screen group (all r ≥ .54). For the composite scores, the correlation in the offline group is unlikely to exceed that in the online group by more than 0.28 (see Table 3), rendering the difference between the two groups minimal. There was a strong correlation between the depth of hypnosis scores and all measures in the short delay group (all r ≥ .70), and the correlations were moderate to strong in the long delay group (all r ≥ .31). The difference between the two groups in the strength of the correlations was weak to moderate, and it was the highest for the objective scores. Table 3 shows all of the correlation values and their 95% CIs separately for the experimental groups and for all of the measures.

Table 3.

Correlation between SWASH and depth of hypnosis scores broken down by the type of screen and the length of delay

| Group | Composite | Objective | Subjective |
|---|---|---|---|
| Offline | 0.76 [0.53, 0.89] | 0.66 [0.37, 0.84] | 0.77 [0.54, 0.89] |
| Online | 0.70 [0.52, 0.83] | 0.54 [0.29, 0.72] | 0.81 [0.67, 0.89] |
| Difference (offline − online) | 0.06 [−0.21, 0.28] | 0.12 [−0.22, 0.43] | −0.04 [−0.28, 0.15] |
| Short delay | 0.79 [0.65, 0.88] | 0.70 [0.51, 0.82] | 0.83 [0.71, 0.90] |
| Long delay | 0.55 [0.20, 0.78] | 0.31 [−0.10, 0.63] | 0.70 [0.41, 0.86] |
| Difference (short − long) | 0.24 [−0.03, 0.60] | 0.38 [0.02, 0.81] | 0.13 [−0.07, 0.42] |

The correlation values are all Pearson’s rs and the 95% CIs are reported within the square brackets

Discussion

The purpose of the present study was to explore whether online hypnosis screening is feasible, as the adoption of this method could ease the recruitment-related costs of hypnosis research. To this aim, we estimated the extent to which offline and online hypnosis screening scores, measured by the SWASH, are comparable. The results revealed that the difference between offline and online groups was small to negligible in all aspects and, importantly, applying online rather than offline screening is unlikely to reduce the composite screening score by more than 1.22 and the objective score by more than 1.36 out of ten. To put these effect sizes in perspective, a recent meta-analysis of four studies investigating the influence of standard induction procedures on suggestibility found that, on average, people score 1.46 higher (out of ten) on scales assessing objective responses to suggestions if they have received an induction beforehand compared to no induction (Martin & Dienes, 2019). Moreover, the average SWASH score in the online group was comparable to the result of an earlier screen conducted in group sessions at the same university (Lush et al., 2018). Finally, it is not only the average scores in the online group that can be deemed acceptable; the distributions of SWASH scores were also similar in the offline and online groups, even at the positive end of the scale. This implies that some people can successfully respond to many suggestions when they undertake an online screening (see Fig. 1). None of this was obvious before the data were collected.

The correlation between objective and subjective scores was strong for both the offline and online groups; crucially, the correlation in the online group is unlikely to be smaller than 0.65. This indicates that the validity of the SWASH remained acceptable even with online data collection. Moreover, the strength of the correlation between the subjective and objective components of the SWASH found by Lush et al. (2018) was 0.70, which is consistent with our results. The strength of the correlation between SWASH scores of the first and second screens was medium in the offline and strong in the online group. The lower bound of the 95% CI in the online group was 0.57, implying that the test–retest reliability of the online measurement is adequate. These values are also appropriate in relative terms. For instance, Fassler et al. (2008) employed the CURSS, which, like the SWASH, has an objective and a subjective subscale, on two occasions, and the test–retest correlations were 0.59 and 0.77 for the objective and subjective components, respectively. These results are in line with the correlations found by us in the online group. Overall, the psychometric properties of online screening were excellent; the quality of data collected online was shown to be consistent with the quality of offline data gathered within this study and as part of earlier studies with the SWASH and other hypnosis screening tools.

Modern theories of hypnosis advocate the notion that all hypnosis is self-hypnosis, since the hypnotic subject is the one who actively responds to the suggestions and creates the requested experience (Kihlstrom, 2008; Raz, 2011). This does not mean, however, that the experimenter has no influence on the responsiveness of the subject. For instance, the presence of an experimenter can be helpful in building rapport and facilitating the responsiveness of the participants (e.g. Gfeller, Lynn, & Pribble, 1987). Nonetheless, the experimenter can also bias the responses of the subjects (e.g. Barber & Calverley, 1966; Troffer & Tart, 1964), and importantly, this level of bias can vary strongly across participants, as it is almost impossible to deliver the induction and suggestions in an identical way multiple times. Therefore, the application of fully automated screenings, such as the online version, can support the standardisation of the assessment of hypnotic suggestibility.

Introducing online hypnosis screening would markedly decrease the amount of time experimenters need to invest to find participants for their studies. However, to complete a screening procedure, the participants still need to spend 45–60 min without taking a break; otherwise, the data would not be usable for recruitment purposes. A substantial part of the screening is devoted to the standard hypnotic induction, which consists of various suggestions, mostly to relax; however, the responses to these suggestions are not assessed directly during the screening (e.g. Shor & Orne, 1963; Weitzenhoffer & Hilgard, 1962). Would it be feasible to exclude the standard induction from the screening procedure to save time for the participants? Cognitive theories of hypnosis, such as the cold control theory (Barnier, Dienes, & Mitchell, 2008; Dienes & Perner, 2007), emphasise the role of the feeling of involuntariness in differentiating hypnotic from non-hypnotic responses. This feeling is also known as the “classical suggestion effect” (Weitzenhoffer, 1974, 1980). Therefore, according to cold control theory, it is not the practice of induction but the feeling of involuntariness that serves as the demarcation criterion, and it is important to ensure with self-report measures that the participants experienced a reduction in the level of control over their own behaviour (e.g. Palfi, Parris, McLatchie, Kekecs, & Dienes, 2018).¹ From a practical perspective, it is important to bear in mind that the presence of a standard induction can increase responsiveness to the suggestions in the screening, on average, by 1.46 (Martin & Dienes, 2019) compared to the absence of the induction, and that the strength of the effect of an induction varies across suggestions (Terhune & Cardeña, 2016). Nonetheless, as argued earlier in this paper, a general reduction of responsiveness does not qualify as a decisive argument for retaining the induction procedure.
As long as the absence of the induction does not produce a floor effect or markedly alter the ranking of the suggestibility scores, the screening can be perfectly adequate for assessing individual differences in response. Indeed, there are existing attempts to assess responsiveness to suggestions without exposing the participants to an induction, such as the Barber Suggestibility Scale (Barber & Glass, 1962) and the CIS (Wilson & Barber, 1978). These scales can easily be administered in a context presented as a test of imagination, either applying motivational instructions in place of the induction or simply leaving the induction out. The existing evidence suggests that employing motivational instructions creates a level of responsiveness similar to that produced by the induction; however, the absence of the induction significantly reduces the level of responsiveness to suggestions (Barber & Wilson, 1978). Future research could explore the extent to which the exclusion or replacement of the induction in the SWASH would be feasible and assess whether it would be beneficial.
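The two conditions named above, no floor effect and a largely preserved ranking, could be checked along the following lines. This is a rough sketch under stated assumptions rather than an analysis from this study: the helper names (`floor_proportion`, `spearman`) and the toy scores are hypothetical, and a Spearman rank correlation is only one of several reasonable ways to quantify whether the ranking is preserved.

```python
import math


def average_ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x, y):
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def floor_proportion(scores, floor=0.0):
    """Share of participants scoring at (or below) the scale minimum."""
    return sum(s <= floor for s in scores) / len(scores)


# Hypothetical toy scores (0-10 scale) for the same five people,
# screened with and without an induction. Not data from the study.
with_induction = [2.0, 5.5, 3.0, 8.0, 6.5]
without_induction = [1.0, 4.0, 1.5, 6.5, 5.0]

print("rank agreement:", round(spearman(with_induction, without_induction), 2))
print("share at floor:", floor_proportion(without_induction))
```

In this toy case every score drops without the induction, yet the ordering of participants is unchanged and nobody reaches the scale minimum, which is the situation in which an induction-free screening would still separate people low and high in responsiveness.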

A secondary interest of the current study was to assess the extent to which the length of the delay between the first and second screening affects the outcome of the screen and the psychometric properties of the measurement tool. Repeated assessment of suggestibility can lower the suggestibility scores, for instance, if the delay between the two occasions is only a few days or weeks (Barber & Calverley, 1966; Fassler et al., 2008; Lynn et al., 1988). This reduction in suggestibility may be caused by boredom; the participants can become disengaged from the procedure because they find it repetitive (Barber & Calverley, 1966; Fassler et al., 2008). In our case, the short delay was a minimum of 5 months, and we found no indication of substantial differences between the short and long delay groups on the SWASH subscales. For instance, Fassler et al. (2008) found a difference of 0.77 on the objective scores between the first and second session,² but according to our data, the largest plausible difference is only 0.34. Nonetheless, the effect of boredom on the subjective scores observed by Fassler et al. (2008) was 1.05,³ which is compatible with our results as the lower bound of the difference on that measure was 1.12. Taken together, our data imply that the negative effect of boredom might wear off or become negligible after 5 months; however, more research is needed to settle this matter and identify the ideal amount of delay that can prevent boredom effects in repeated designs.
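The two values attributed to Fassler et al. (2008) above are rescaled to the 0–10 range of the SWASH; the footnotes give the raw differences as 0.54 on a 0–7 scale and 2.2 on a 0–21 scale. The short sketch below reproduces that arithmetic, assuming a simple proportional rescaling (the function name `rescale_difference` is introduced here for illustration only).

```python
def rescale_difference(raw_diff, source_max, target_max=10.0):
    """Proportionally rescale a score difference from a 0..source_max
    scale to a 0..target_max scale (both scales start at zero)."""
    if source_max <= 0:
        raise ValueError("source_max must be positive")
    return raw_diff * target_max / source_max


# Raw differences and source scale maxima as given in the footnotes.
objective = rescale_difference(0.54, 7)    # objective scores, 0-7 scale
subjective = rescale_difference(2.2, 21)   # subjective scores, 0-21 scale

print(round(objective, 2), round(subjective, 2))  # 0.77 1.05
```

Because both source scales start at zero, multiplying by the ratio of the scale maxima is sufficient; no offset is needed.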

We note that our sample was restricted to university students, which might preclude the generalisation of our findings, and crucially of the applicability of online hypnosis screening, to a wider population. Nonetheless, the problem of generalisability represents a universal issue in experimental hypnosis research. For instance, a meta-analysis of 27 studies investigating hypnotically induced analgesia found that, of the studies with non-clinical samples (N = 19), only one was run with people recruited from the local community, whereas all the other studies were run with students (Montgomery, Duhamel, & Redd, 2000). Recruiting from a wider population would not only increase the generalisability of the findings, but would also make it easier for researchers to run large-scale hypnosis studies, strengthening the replicability of the findings. Future research is needed to explore the extent to which online hypnosis screening can be used to recruit people from local communities.

Finally, the vast majority of our participants were female; hence, the gender imbalance in our sample might be another factor hindering the generalisability of our findings. Research on the link between gender and hypnotic suggestibility has provided ambiguous results, with some studies finding virtually no effect (Cooper & London, 1966; Dienes, Brown, Hutton, Kirsch, Mazzoni, & Wright, 2009; McConkey, Barnier, Maccallum, & Bishop, 1996) and some studies demonstrating a small effect size (Green, 2004; Green & Lynn, 2010; Morgan & Hilgard, 1973; Page & Green, 2007; Rudski, Marra, & Graham, 2004). Studies showing a small effect of gender consistently found that women score higher than men, which might be caused by a divergence in a personality trait that partly underlies suggestibility or by a difference between women and men in how they assess the difficulty of the suggestions (Rudski, Marra, & Graham, 2004). Nonetheless, these explanations are conjectures that have yet to be tested. With only seven men in the current data set, we can only speculate how much gender might moderate the difference between the online and the offline measurement of hypnotic suggestibility.

Conclusion

Altogether, the online assessment of hypnotic suggestibility appears to be feasible, and its benefits far outweigh its downsides. Although online screening might be less engaging than the traditional, offline measurement of suggestibility, and so can result in slightly lower suggestibility scores, our study suggests that the size of this negative effect lies within acceptable boundaries. Crucially, online hypnosis screening can support large-scale data collection with heterogeneous samples consisting of both student and non-student participants. Furthering our knowledge on the basis of small-sample studies comes with many risks (e.g. Loken & Gelman, 2017), but the relatively high cost of hypnosis screening procedures hinders researchers in the field from running well-powered studies. Therefore, we argue that the adoption of online hypnosis screening is beneficial and that it can help experimental hypnosis research to realise its full potential.

Acknowledgements

The preregistration, the data and the analysis script can be retrieved from https://osf.io/c46xa/. The authors declare no financial conflict of interest with the reported research. The project was not supported by any grant or financial funding. Bence Palfi and Peter Lush are grateful to the Dr Mortimer and Theresa Sackler Foundation, which supports the Sackler Centre for Consciousness Science.

Footnotes

¹ An operational definition of hypnosis necessitates the use of an induction to render suggestions hypnotic, and labels all suggestions given without a preceding induction as imaginative suggestions (Braffman & Kirsch, 1999; Kirsch, 1997; Kirsch & Braffman, 2001). This line of thinking would preclude us from omitting the induction if we want to measure hypnotic suggestibility.

² The reported raw difference was 0.54; however, we adjusted this value from a scale of 0–7 to the scale of the SWASH, which is 0–10.

³ The reported raw difference was 2.2; we adjusted this value as well, from a scale of 0–21 to the scale of the SWASH, in which values can vary between 0 and 10.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Anderson HP, Seth AK, Dienes Z, Ward J. Can grapheme-color synesthesia be induced by hypnosis? Frontiers in Human Neuroscience. 2014;8:220. doi: 10.3389/fnhum.2014.00220.

- Anlló H, Becchio J, Sackur J. French norms for the Harvard Group Scale of hypnotic susceptibility, form A. International Journal of Clinical and Experimental Hypnosis. 2017;65(2):241–255. doi: 10.1080/00207144.2017.1276369.

- Barber TX, Calverley DS. Comparative effects on “hypnotic-like” suggestibility of recorded and spoken suggestions. Journal of Consulting Psychology. 1964;28(4):384. doi: 10.1037/h0045217.

- Barber TX, Calverley DS. Toward a theory of hypnotic behavior: Experimental evaluation of Hull’s postulate that hypnotic susceptibility is a habit phenomenon. Journal of Personality. 1966;34(3):416–433. doi: 10.1111/j.1467-6494.1966.tb01724.x.

- Barber TX, Glass LB. Significant factors in hypnotic behavior. The Journal of Abnormal and Social Psychology. 1962;64(3):222. doi: 10.1037/h0041347.

- Barber TX, Wilson SC. The Barber suggestibility scale and the creative imagination scale: Experimental and clinical applications. American Journal of Clinical Hypnosis. 1978;21(2–3):84–108. doi: 10.1080/00029157.1978.10403966.

- Barnier AJ, Dienes Z, Mitchell CJ. How hypnosis happens: New cognitive theories of hypnotic responding. In: Heap M, Brown RJ, Oakley DA, editors. The Oxford handbook of hypnosis: Theory, research, and practice. London: Routledge; 2008. pp. 141–177.

- Barnier AJ, McConkey KM. Hypnosis, human nature, and complexity: Integrating neuroscience approaches into hypnosis research. International Journal of Clinical and Experimental Hypnosis. 2003;51(3):282–308. doi: 10.1076/iceh.51.3.282.15524.

- Barnier AJ, McConkey KM. Defining and identifying the highly hypnotizable person. The highly hypnotizable person: Theoretical, experimental and clinical issues. 2004;15:30–61.

- Bernstein EM, Putnam FW. Development, reliability, and validity of a dissociation scale. The Journal of Nervous and Mental Disease. 1986;174(12):727–735. doi: 10.1097/00005053-198612000-00004.

- Bohlmeijer E, ten Klooster PM, Fledderus M, Veehof M, Baer R. Psychometric properties of the five facet mindfulness questionnaire in depressed adults and development of a short form. Assessment. 2011;18(3):308–320. doi: 10.1177/1073191111408231.

- Bowers KS. The Waterloo-Stanford Group C (WSGC) scale of hypnotic susceptibility: Normative and comparative data. International Journal of Clinical and Experimental Hypnosis. 1993;41(1):35–46. doi: 10.1080/00207149308414536.

- Braffman W, Kirsch I. Imaginative suggestibility and hypnotizability: An empirical analysis. Journal of Personality and Social Psychology. 1999;77(3):578. doi: 10.1037//0022-3514.77.3.578.

- Brown KW, Ryan RM. The benefits of being present: Mindfulness and its role in psychological well-being. Journal of Personality and Social Psychology. 2003;84(4):822. doi: 10.1037/0022-3514.84.4.822.

- Buhrmester M, Kwang T, Gosling SD. Amazon’s Mechanical Turk: A new source of inexpensive, yet high-quality data? Perspectives on Psychological Science. 2011;6(1):3–5. doi: 10.1177/1745691610393980.

- Cardeña E. Hypnos and psyche: How hypnosis has contributed to the study of consciousness. Psychology of Consciousness: Theory, Research, and Practice. 2014;1(2):123.

- Cardeña E, Terhune DB. A note of caution on the Waterloo-Stanford Group Scale of Hypnotic Susceptibility: A brief communication. International Journal of Clinical and Experimental Hypnosis. 2009;57(2):222–226. doi: 10.1080/00207140802665484.

- Cooper LM, London P. Sex and hypnotic susceptibility in children. International Journal of Clinical and Experimental Hypnosis. 1966;14(1):55–60. doi: 10.1080/00207146608415894.

- Cumming G. The new statistics: Why and how. Psychological Science. 2014;25(1):7–29. doi: 10.1177/0956797613504966.

- Dennis SA, Goodson BM, Pearson C. Mturk workers’ use of low-cost “virtual private servers” to circumvent screening methods: A research note. 2018. doi: 10.2139/ssrn.3233954.

- Diedenhofen B, Musch J. cocor: A comprehensive solution for the statistical comparison of correlations. PloS One. 2015;10(4):e0121945. doi: 10.1371/journal.pone.0121945.

- Dienes Z. Bayesian versus orthodox statistics: Which side are you on? Perspectives on Psychological Science. 2011;6(3):274–290. doi: 10.1177/1745691611406920.

- Dienes Z, Brown E, Hutton S, Kirsch I, Mazzoni G, Wright DB. Hypnotic suggestibility, cognitive inhibition, and dissociation. Consciousness and Cognition. 2009;18(4):837–847. doi: 10.1016/j.concog.2009.07.009.

- Dienes Z, Perner J. Executive control without conscious awareness: The cold control theory of hypnosis. In: Jamieson G, editor. Hypnosis and conscious states: The cognitive neuroscience perspective. Oxford: Oxford University Press; 2007. pp. 293–314.

- Fassler O, Lynn SJ, Knox J. Is hypnotic suggestibility a stable trait? Consciousness and Cognition. 2008;17(1):240–253. doi: 10.1016/j.concog.2007.05.004.

- Fisher RA. Statistical methods for research workers. Edinburgh: Oliver & Boyd; 1925.

- Gfeller JD, Lynn SJ, Pribble WE. Enhancing hypnotic susceptibility: Interpersonal and rapport factors. Journal of Personality and Social Psychology. 1987;52(3):586. doi: 10.1037//0022-3514.52.3.586.

- Gosling SD, Vazire S, Srivastava S, John OP. Should we trust web-based studies? A comparative analysis of six preconceptions about internet questionnaires. American Psychologist. 2004;59(2):93. doi: 10.1037/0003-066X.59.2.93.

- Green JP. The five factor model of personality and hypnotizability: Little variance in common. Contemporary Hypnosis. 2004;21(4):161–168.

- Green JP, Lynn SJ. Hypnotic responsiveness: Expectancy, attitudes, fantasy proneness, absorption, and gender. International Journal of Clinical and Experimental Hypnosis. 2010;59(1):103–121. doi: 10.1080/00207144.2011.522914.

- Haggard P, Cartledge P, Dafydd M, Oakley DA. Anomalous control: When ‘free-will’ is not conscious. Consciousness and Cognition. 2004;13(3):646–654. doi: 10.1016/j.concog.2004.06.001.

- Hilgard ER, Tart CT. Responsiveness to suggestions following waking and imagination instructions and following induction of hypnosis. Journal of Abnormal Psychology. 1966;71(3):196. doi: 10.1037/h0023323.

- Hilgard JR. Sequelae to hypnosis. International Journal of Clinical and Experimental Hypnosis. 1974;22(4):281–298. doi: 10.1080/00207147408413008.

- Jeffreys H. Theory of probability. 3rd ed. Oxford: Oxford University Press; 1961.

- Kihlstrom JF. Hypnosis. Annual Review of Psychology. 1985;36(1):385–418. doi: 10.1146/annurev.ps.36.020185.002125.

- Kihlstrom JF. Is hypnosis an altered state of consciousness or what? Contemporary Hypnosis. 2005;22(1):34–38.

- Kihlstrom JF. The Oxford handbook of hypnosis: Theory, research, and practice. Oxford: Oxford University Press; 2008. The domain of hypnosis, revisited; pp. 21–52.

- Kirsch I. Suggestibility or hypnosis: What do our scales really measure? International Journal of Clinical and Experimental Hypnosis. 1997;45(3):212–225. doi: 10.1080/00207149708416124.

- Kirsch I. The altered state issue: Dead or alive? International Journal of Clinical and Experimental Hypnosis. 2011;59(3):350–362. doi: 10.1080/00207144.2011.570681.

- Kirsch I, Braffman W. Imaginative suggestibility and hypnotizability. Current Directions in Psychological Science. 2001;10(2):57–61.

- Kirsch I, Milling LS, Burgess C. Experiential scoring for the Waterloo-Stanford Group C scale. International Journal of Clinical and Experimental Hypnosis. 1998;46(3):269–279. doi: 10.1080/00207149808410007.

- Krantz JH, Dalal R. Validity of Web-based psychological research. In: Birnbaum MH, editor. Psychological experiments on the Internet. San Diego: Academic; 2000. pp. 35–60.

- Kruschke JK. Doing Bayesian data analysis: A tutorial with R and BUGS. Burlington: Academic; 2010.

- Kruschke JK. Bayesian estimation supersedes the t test. Journal of Experimental Psychology: General. 2013;142(2):573. doi: 10.1037/a0029146.

- Levinson DB, Stoll EL, Kindy SD, Merry HL, Davidson RJ. A mind you can count on: Validating breath counting as a behavioral measure of mindfulness. Frontiers in Psychology. 2014;5:1202. doi: 10.3389/fpsyg.2014.01202.

- Loken E, Gelman A. Measurement error and the replication crisis. Science. 2017;355(6325):584–585. doi: 10.1126/science.aal3618.

- Lush P, Caspar EA, Cleeremans A, Haggard P, Magalhães De Saldanha da Gama PA, Dienes Z. The power of suggestion: Posthypnotically induced changes in the temporal binding of intentional action outcomes. Psychological Science. 2017;28(5):661–669. doi: 10.1177/0956797616687015.

- Lush P, Moga G, McLatchie N, Dienes Z. The Sussex-Waterloo Scale of Hypnotizability (SWASH): Measuring capacity for altering conscious experience. Neuroscience of Consciousness. 2018;2018(1):niy006. doi: 10.1093/nc/niy006.

- Lush P, Scott RB, Anil S, Dienes Z. Is the rubber hand illusion a suggestion effect? 2019. (Manuscript in preparation).

- Lush P, Scott RB, Moga G, Dienes Z. Norms for a computerized version of the SWASH. 2019. (Manuscript in preparation).

- Lynn SJ, Weekes JR, Matyi CL, Neufeld V. Direct versus indirect suggestions, archaic involvement, and hypnotic experience. Journal of Abnormal Psychology. 1988;97(3):296. doi: 10.1037//0021-843x.97.3.296.

- Martin JR, Dienes Z. Bayes to the rescue: Does the type of hypnotic induction matter? Psychology of Consciousness. 2019. (In press).

- McConkey K, Barnier AJ, Maccallum FL, Bishop K. A normative and structural analysis of the HGSHS: A with a large Australian sample. Australian Journal of Clinical & Experimental Hypnosis. 1996;24(1):1–11.

- Meng XL, Rosenthal R, Rubin DB. Comparing correlated correlation coefficients. Psychological Bulletin. 1992;111:172–175.

- Montgomery GH, Duhamel KN, Redd WH. A meta-analysis of hypnotically induced analgesia: How effective is hypnosis? International Journal of Clinical and Experimental Hypnosis. 2000;48(2):138–153. doi: 10.1080/00207140008410045.

- Morey RD, Hoekstra R, Rouder JN, Lee MD, Wagenmakers EJ. The fallacy of placing confidence in confidence intervals. Psychonomic Bulletin & Review. 2016;23(1):103–123. doi: 10.3758/s13423-015-0947-8.

- Morgan AH, Hilgard ER. Age differences in susceptibility to hypnosis. International Journal of Clinical and Experimental Hypnosis. 1973;21(2):78–85.

- Nadelhoffer T, Shepard J, Nahmias E, Sripada C, Ross LT. The free will inventory: Measuring beliefs about agency and responsibility. Consciousness and Cognition. 2014;25:27–41. doi: 10.1016/j.concog.2014.01.006.

- Neyman J, Pearson ES. IX. On the problem of the most efficient tests of statistical hypotheses. Philosophical Transactions of the Royal Society of London. Series A. 1933;231(694–706):289–337.

- Oakley DA. Hypnosis as a tool in research: Experimental psychopathology. Contemporary Hypnosis. 2006;23(1):3–14.

- Oakley DA, Halligan PW. Hypnotic suggestion and cognitive neuroscience. Trends in Cognitive Sciences. 2009;13(6):264–270. doi: 10.1016/j.tics.2009.03.004.

- Oakley DA, Halligan PW. Hypnotic suggestion: Opportunities for cognitive neuroscience. Nature Reviews Neuroscience. 2013;14(8):565. doi: 10.1038/nrn3538.

- Olkin I, Finn JD. Correlations redux. Psychological Bulletin. 1995;118:155–164.

- Page RA, Green JP. An update on age, hypnotic suggestibility, and gender: A brief report. American Journal of Clinical Hypnosis. 2007;49(4):283–287. doi: 10.1080/00029157.2007.10524505.

- Palfi B, Parris BA, McLatchie N, Kekecs Z, Dienes Z. Can unconscious intentions be more effective than conscious intentions? Test of the role of metacognition in hypnotic response. Cortex. 2018. (Stage 1 Registered Report). https://osf.io/h6znt/.

- Parris BA, Dienes Z, Bate S, Gothard S. Oxytocin impedes the effect of the word blindness post-hypnotic suggestion on Stroop task performance. Social Cognitive and Affective Neuroscience. 2014;9(7):895–899. doi: 10.1093/scan/nst063.

- Patton JH, Stanford MS. Factor structure of the Barratt impulsiveness scale. Journal of Clinical Psychology. 1995;51(6):768–774. doi: 10.1002/1097-4679(199511)51:6<768::aid-jclp2270510607>3.0.co;2-1.

- Perry C, Laurence JR. Hypnotic depth and hypnotic susceptibility: A replicated finding. International Journal of Clinical and Experimental Hypnosis. 1980;28(3):272–280. doi: 10.1080/00207148008409852.

- Piccione C, Hilgard ER, Zimbardo PG. On the degree of stability of measured hypnotizability over a 25-year period. Journal of Personality and Social Psychology. 1989;56(2):289. doi: 10.1037//0022-3514.56.2.289.

- Polito V, Barnier AJ, Woody EZ. Developing the Sense of Agency Rating Scale (SOARS): An empirical measure of agency disruption in hypnosis. Consciousness and Cognition. 2013;22(3):684–696. doi: 10.1016/j.concog.2013.04.003.

- R Core Team. R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing; 2018.

- Raine A, Benishay D. The SPQ-B: A brief screening instrument for schizotypal personality disorder. Journal of Personality Disorders. 1995;9(4):346–355.

- Raz A. Hypnosis: A twilight zone of the top-down variety: Few have never heard of hypnosis but most know little about the potential of this mind–body regulation technique for advancing science. Trends in Cognitive Sciences. 2011;15(12):555–557. doi: 10.1016/j.tics.2011.10.002.

- Reips U-D. The Web experiment method: Advantages, disadvantages, and solutions. In: Birnbaum MH, editor. Psychological experiments on the Internet. San Diego: Academic; 2000. pp. 89–117.

- Rouder JN, Lu J, Speckman P, Sun D, Jiang Y. A hierarchical model for estimating response time distributions. Psychonomic Bulletin & Review. 2005;12(2):195–223. doi: 10.3758/bf03257252.

- Rouder JN, Speckman PL, Sun D, Morey RD, Iverson G. Bayesian t tests for accepting and rejecting the null hypothesis. Psychonomic Bulletin & Review. 2009;16(2):225–237. doi: 10.3758/PBR.16.2.225.

- Rudski JM, Marra LC, Graham KR. Sex differences on the HGSHS: A. International Journal of Clinical and Experimental Hypnosis. 2004;52(1):39–46. doi: 10.1076/iceh.52.1.39.23924.

- Shor RE, Orne EC. Norms on the Harvard Group scale of hypnotic susceptibility, form A. International Journal of Clinical and Experimental Hypnosis. 1963;11(1):39–47. doi: 10.1080/00207146308409226.

- Spanos NP, Radtke HL, Hodgins DC, Stam HJ, Bertrand LD. The Carleton University Responsiveness to Suggestion Scale: Normative data and psychometric properties. Psychological Reports. 1983;53(2):523–535. doi: 10.2466/pr0.1983.53.2.523.

- Terhune DB, Cardeña E. Nuances and uncertainties regarding hypnotic inductions: Toward a theoretically informed praxis. American Journal of Clinical Hypnosis. 2016;59(2):155–174. doi: 10.1080/00029157.2016.1201454.

- Terhune DB, Cleeremans A, Raz A, Lynn SJ. Hypnosis and top-down regulation of consciousness. Neuroscience and Biobehavioral Reviews. 2017;81:59–74. doi: 10.1016/j.neubiorev.2017.02.002.

- Troffer SA, Tart CT. Experimenter bias in hypnotist performance. Science. 1964;145(3638):1330–1331. doi: 10.1126/science.145.3638.1330.

- Wagenmakers EJ, Marsman M, Jamil T, Ly A, Verhagen J, Love J, et al. Bayesian inference for psychology. Part I: Theoretical advantages and practical ramifications. Psychonomic Bulletin & Review. 2018;25(1):35–57. doi: 10.3758/s13423-017-1343-3.

- Wagenmakers EJ, Morey RD, Lee MD. Bayesian benefits for the pragmatic researcher. Current Directions in Psychological Science. 2016;25(3):169–176.

- Wagstaff GF, Cole JC, Brunas-Wagstaff J. Measuring hypnotizability: The case for self-report depth scales and normative data for the Long Stanford Scale. International Journal of Clinical and Experimental Hypnosis. 2008;56(2):119–142. doi: 10.1080/00207140701849452.

- Weitzenhoffer AM. When is an “instruction” an “instruction”? International Journal of Clinical and Experimental Hypnosis. 1974;22(3):258–269. doi: 10.1080/00207147408413005.

- Weitzenhoffer AM. Hypnotic susceptibility revisited. American Journal of Clinical Hypnosis. 1980;22(3):130–146. doi: 10.1080/00029157.1980.10403217.

- Weitzenhoffer AM, Hilgard ER. Stanford hypnotic susceptibility scale, form C. Palo Alto: Consulting Psychologists Press; 1962.

- Wilson SC, Barber TX. The Creative Imagination Scale as a measure of hypnotic responsiveness: Applications to experimental and clinical hypnosis. American Journal of Clinical Hypnosis. 1978;20(4):235–249. doi: 10.1080/00029157.1978.10403940.

- Zou GY. Toward using confidence intervals to compare correlations. Psychological Methods. 2007;12(4):399–413. doi: 10.1037/1082-989X.12.4.399.