Abstract

Enzymes are proteins that can efficiently catalyze specific biochemical reactions, and they are widely present in the human body. Developing an efficient method to identify human enzymes is vital to select enzymes from the vast number of human proteins and to investigate their functions. Nevertheless, only a limited amount of research has been conducted on the classification of human enzymes and nonenzymes. In this work, we developed a support vector machine- (SVM-) based predictor to classify human enzymes using the amino acid composition (AAC), the composition of k-spaced amino acid pairs (CKSAAP), and selected informative amino acid pairs through the use of a feature selection technique. A training dataset including 1117 human enzymes and 2099 nonenzymes and a test dataset including 684 human enzymes and 1270 nonenzymes were constructed to train and test the proposed model. The results of jackknife cross-validation showed that the overall accuracy was 76.46% for the training set and 76.21% for the test set, which are higher than the 72.6% achieved in previous research. Furthermore, various feature extraction methods and mainstream classifiers were compared in this task, and informative feature parameters of k-spaced amino acid pairs were selected and compared. The results suggest that our classifier can be used in human enzyme identification effectively and efficiently and can help to understand their functions and develop new drugs.

1. Introduction

Enzymes, also known as biocatalysts, are proteins that can catalyze chemical reactions in living cells efficiently and specifically, and they play a key role in the survival of humans, other animals, and plants. Over the last few decades, enzymes in increasing numbers have been identified and have been found to have a variety of properties and play diverse roles in the survival, growth, and development of organisms.

Depending on the properties of the reaction catalyzed, enzymes are classified into six classes according to enzyme commission (EC) numbers [1]: oxidoreductases, transferases, hydrolases, lyases, isomerases, and ligases. Owing to the specificity of enzymes, i.e., an enzyme can only catalyze a specific chemical reaction in a cell, accurately classifying and predicting enzyme classes is of vital importance when searching for unknown enzymes and developing new drugs, including zymin.

The traditional approach to the identification of proteins through wet experimental methods has typically been time and resource intensive. With the development of protein sequencing technology and improvements in computing power, computational methods based on amino acid sequence data of peptides, especially machine learning methods, have been widely used to classify and predict the function of diverse classes of proteins [2–7].

Currently, several researchers have focused on developing methods that can be used for the identification of enzymes. Jensen et al. first predicted enzyme classes using sequence-based physicochemical features and an Artificial Neural Network (ANN) in 2002 [8]. Chou and Cai proposed the GO-PseAAC predictor, which combined gene ontology (GO) and Pseudo amino acid composition (PseAAC) as features to search for and used the nearest neighbor algorithm approach [9]. Later, Cai et al. first applied the SVM algorithm to enzyme classification [10] and combined functional domain composition (FunD) with PseAAC to predict the classes of enzymes [11, 12]. Furthermore, a predictor named EzyPred was developed by Shen and Chou that uses FunD and the Pseudo position-specific scoring matrix (PsePSSM) as features [13]. In 2009, Nasibov and Kandemir-Cavas classified enzymes by the K-nearest neighbor (KNN) method and the minimum distance-based predictor using AAC [14]. Concu et al. provided a distinctive method using the 3D structure rather than sequence information [15]. Qiu et al. developed a method based on PseAAC and discrete wavelet transform (WT) that was trained by the SVM algorithm [16]. Shi and Hu used low-frequency power spectral density and increment of diversity, combined with AAC and PseAAC, and built an SVM-based predictor [17]. In addition, Zou et al. introduced a multilabel learning method to identify multifunctional enzymes [18]. Later, a new method was put forward by Niu et al. that used a protein-protein network [19]. In recent years, deep-learning methods like convolutional neural networks were used for the classification of enzymes and achieved good results [20, 21].

All of these classification methods improved the classification performance based on previous research. Nevertheless, all of these researchers concentrated on classifying different types of enzymes, and very few methods have been developed to predict whether a protein is an enzyme or a nonenzyme. Wu et al. devoted themselves to this issue and designed an SVM-based method combining PseAAC with the rigidity [22], flexibility, and irreplaceability of amino acids to identify human enzyme classes. However, this method only reached an overall accuracy of 72.6% by 5-fold cross-validation using 372 features, and thus, the performance of this task needs to be further improved.

On the basis of the above research, in this work, we developed a new machine learning method to classify human enzymes and nonenzymes. First, we introduced a feature representation strategy based on AAC and the composition of k-spaced amino acid pairs (CKSAAP). Next, for features represented by the methods above, the feature selection technique based on analyses of variance (ANOVA) was applied to minimize the features we used and to improve its overall accuracy. Finally, the selected features were fed into the classifiers found from SVM for training. As a result, an accuracy of 76.46% and 76.21% by 6-fold cross-validation was achieved in the training set and test set, respectively, by using 40 feature parameters. Furthermore, the performances of different feature representation strategies under the SVM classifier and the performances of different classifiers were compared and discussed, and important feature parameters in this task were selected and compared.

2. Materials and Methods

2.1. Datasets

The training sequence data used in this study were first reported by Wu et al. [22] and were obtained from the Universal Protein Resource (UniProt), the protein database with the most abundant information and resources; the training sequence data were composed of data from three databases: Swiss-Prot, TrEMBL, and PIR-PSD [23]. Six subclasses of human enzymes and nonhuman enzymes can be filtered and downloaded for free. To ensure the correctness and representativeness of the training data, the following data preprocessing process was used: (1) Human enzyme sequences of enzymes whose function had not been experimentally verified and those labeled as fragments were eliminated. (2) Enzyme sequences containing ambiguous residues (“B,” “J,” “O,” “U,” “X,” and “Z”) were excluded. (3) The CD-HIT program was applied to remove highly similar enzyme sequences using 30% as the cutoff of sequence identity [24, 25].

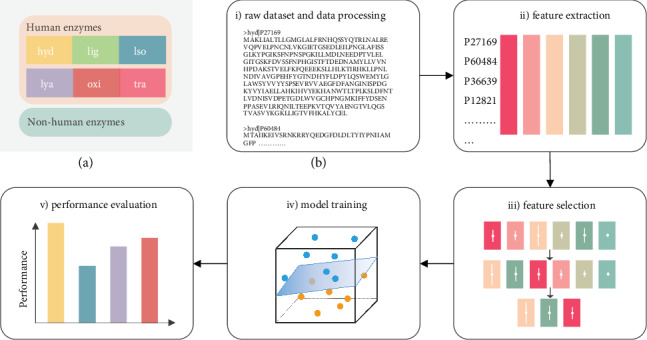

After the above data preprocessing steps were completed, 1117 human enzymes and 2099 nonhuman enzymes were selected as training sequences in the analysis. Among them, the human enzyme sequences consist of 6 subclasses, as shown in Figure 1(a), with the overall workflow in our study shown in Figure 1(b).

Figure 1.

Overall workflow. (a) The original sequence dataset used. The dataset consists of human enzymes and nonhuman enzymes. Among them, human enzymes consist of 6 subsets, which represent the catalytic effects on different types of biochemical reactions: oxidoreductases, transferases, hydrolases, lyases, isomerases, and ligases. (b) The workflow of our study. Raw protein sequences were first preprocessed and fed into a feature extraction process, and then, a three-step feature selection technique was used to reduce feature parameters. Last, the selected feature parameters were used to train an SVM-based model, and the performance of the model was evaluated by several evaluation indexes.

Furthermore, to evaluate the effect of the model more accurately, a set of test data was selected from the dataset used by Cai and Chou [11] and downloaded from UniProt [23]; these data included a total of 1954 sequences, including 684 enzymes and 1270 nonhuman enzyme sequences, respectively.

2.2. Feature Extraction

One of the most important steps in our method was to extract the feature vector of the selected sequences. Many works have focused on feature extraction of proteins. AAC [26, 27], dipeptide composition (DPC) [28, 29], Geary correction [30], composition-transition-distribution [10, 31, 32], PseAAC [33–37], and other feature extraction methods [38–40] have been proposed and widely applied to describe different kinds of protein primary sequences. Here, we presented and then applied AAC and CKSAAP to extract features.

The AAC encoding strategy calculates the frequency of each type of the 20 amino acids in a primary protein sequence [26], which can be formulated as follows:

| (1) |

where N(i) denotes the number of the amino acid types i (i.e., A, C, D, E, etc.) and L denotes the length of the sequence. This strategy obtains a 20-D feature vector for each primary sequence.

The CKSAAP encoding strategy reflects the short-range interaction of the sequence. The frequency of 400 amino acid pairs in k-space is calculated using this strategy [41]. The frequency can be defined as follows:

| (2) |

where N(i, j) denotes the number of the amino acid types i and j in k-space. L denotes the length of the sequence. This strategy obtains a 400-D feature vector for each primary sequence. Taking k = 1 as an example, there are 400 amino acid pairs in 1-space, i.e., A∗A, A∗C, A∗D, etc., where ∗ denotes other amino acids as the gap [42]. In this research, k = 0, 1, 2, 3, 4, and 5 are used to extract features and measure the comparative effectiveness. Therefore, the dipeptide composition (DPC) is the same descriptor as CKSAAP when k = 0 [43]. Moreover, in our work, features of sequences are extracted by the iFeature toolkit [44].

2.3. Feature Selection

Feature selection was utilized to optimize the prediction model and improve the accuracy of the human-enzyme classification task. In previous research, principal component analysis (PCA), the minimal redundancy maximal relevance (mRMR) algorithm [45, 46], the maximum relevance maximum distance (MRMD) algorithm [47], the genetic algorithm, etc., were proposed for feature selection and applied in protein classification. Here, ANOVA is used to select the most representative features.

ANOVA is an effective method used in statistics to test for a significant relationship between the selected variable and group variables [48, 49]. In our paper, ANOVA can be applied to measure the correlation between a selected feature and all features. The F statistic (F(δ)) of a feature δ is defined as follows:

| (3) |

where sMSB2(δ) and sMSW2(δ) represent the mean square between (MSB) and the mean square within (MSW), respectively, which can be interpreted as the sample variance between groups and the sample variance within groups. In the theory of statistics, F(δ) satisfies the F-distribution, which is used for the significance test. However, in our study, we only focused on the relative values of F(δ) to indicate the correlation between the feature and the overall size. Features with a larger F(δ) are selected because a larger F(δ) implies that they are more strongly related to the group features and more likely to contribute to the classification.

2.4. Support Vector Machine

The SVM algorithm is one of the most popular machine learning algorithms which has been successfully applied in many areas [50–58]. The SVM algorithm is based on statistical learning theory and is widely used in various domains. In the field of protein prediction, SVM has been applied to predicting protein category, secondary structure, physical and chemical properties, etc. and has achieved remarkable results [31, 59–63].

The core idea of SVM is to map the vectors from a low-dimension input space to a high-dimension Hilbert space, in which a linear separating hyperplane is constructed by a kernel function, and to try to maximize the margin among the support vectors of each class by adjusting the linear separating hyperplane. Usually, varieties of kernel functions can be used in SVM algorithms, including linear function, polynomial function, sigmoid function, and radial basis function (RBF). Previous research has shown that RBF performs much better than the other three kinds of kernel functions. Hence, RBF was used in our work as the kernel function [31, 59–63].

During the course of algorithm implementation, the open-source package libSVM supplied by Chang and Lin was used to implement the SVM algorithm [64]. Two parameters, c and γ, related to loss function and kernel function, respectively, were optimized by the method of gridding search using 6-fold cross-validation.

2.5. Performance Evaluation

Overfitting is an inevitable problem in machine learning. To reduce the influence of overfitting on model training, jackknife cross-validation or n-fold cross-validation is used to examine the power of the model on the training set [65]. The jackknife cross-validation method divides the training set into k subsets randomly, one of which is used to verify the accuracy of the model, and the other k‐1 subsets are used to train the model. This method can avoid overfitting by generalizing the model with k-times repetition and is widely used in the machine learning process of small sample size data.

The performance of each model can be measured in terms of accuracy (ACC), sensitivity (SE), and specificity (SP) [66–72]. A confusion matrix can be set up with the help of the classification results, which further classifies the classification results of a binary classifier into four categories: true positive (TP), true negative (TN), false positive (FP), and false negative (FN) [73, 74]. These metrics are usually adopted to evaluate prediction quality [75–89]. Based on this, the parameters above can be expressed as follows:

| (4) |

where ACC is used to evaluate the overall performance of the model and SE and SP are used to measure the predictive ability of the model for positive and negative cases. Higher values of these parameters represent a better prediction performance of the model.

In addition, the receiver operating characteristic (ROC) curve is applied to evaluate the performance of the model further [90–100]. ROC curves are used to illustrate the diagnostic ability of a binary classifier, which shows the changes of SP and SE with varied thresholds. The area under the ROC curve (AUC) can be used to determine which classifier performs better in a quantitative way. ROC curve analysis can reflect the real performance of the model, especially for an unbalanced dataset.

3. Results and Discussion

3.1. Comparison of Feature Extraction Methods

We first compared the performance of common feature extraction methods on the training set identified by the SVM classifier. Feature vectors with high dimensions were selected by ANOVA or mRMR methods, depending on which method could maximize accuracy. The features of the sequences were extracted by the iFeature toolkit [44] and were then selected and classified using MATLAB and libSVM. The accuracies of the various methods are shown in Supplementary Materials (available here), calculated by 6-fold cross-validation. We found that AAC and composition, transition, and distribution (CTD) descriptors can classify human enzymes accurately, with an accuracy from 74.4% to 75.9%, and that AAC can achieve the highest accuracy, which means the frequency of all 20 amino acids can provide the most useful information about human enzyme classification, and thus, more useful information can be added to AAC to improve the model's prediction performance.

Based on the above discussion, other descriptors can be added to AAC to improve the model. The results of the predicted accuracy using different added descriptors are shown in Table 1, where the feature selection technique in ANOVA and mRMR with higher accuracy was used. The control variable method is used to find the optimal feature extraction method. Specifically, the dimension used for feature selection is unchanged (30-D), and the performance of the SVM classifier under different feature extraction methods is compared to find the best feature extraction method for the identification of human enzymes. Based on the performance of the different descriptors on the training set, CKSAAP, which included not only information about the composition and sequence order but also information about the residue correlation, was determined to be the descriptor that can provide new valid information on the basis of AAC to improve the model performance.

Table 1.

Accuracy of models trained with various feature parameters added into AAC by 6-fold cross-validation.

| Feature parameters added into AAC | Feature selection method | Added number of features/total number of features | Accuracy |

|---|---|---|---|

| CTD-C [10] | mRMR | 20/39 | 75.1547% |

| CTriad [101] | mRMR | 30/343 | 71.0349% |

| DPC [28] | ANOVA | 30/400 | 75.5569% |

| DDE [28] | ANOVA | 30/400 | 67.0483% |

| TPC [26] | ANOVA | 30/8000 | 75.5569% |

| PseAAC [33] | ANOVA | 30/50 | 73.5075% |

| Geary [30] | mRMR | 30/240 | 75.8706% |

| CKSAAP (k = 0~5) | ANOVA | 30/2400 | 75.9282% |

| CKSAAP (k = 0) | ANOVA | 30/400 | 75.7776% |

| CKSAAP (k = 1) | ANOVA | 30/400 | 76.0885% |

| CKSAAP (k = 2) | ANOVA | 30/400 | 75.7147% |

| CKSAAP (k = 3) | ANOVA | 30/400 | 76.0878% |

| CKSAAP (k = 4) | ANOVA | 30/400 | 75.8708% |

| CKSAAP (k = 5) | ANOVA | 30/400 | 75.8701% |

3.2. Necessity of Feature Selection

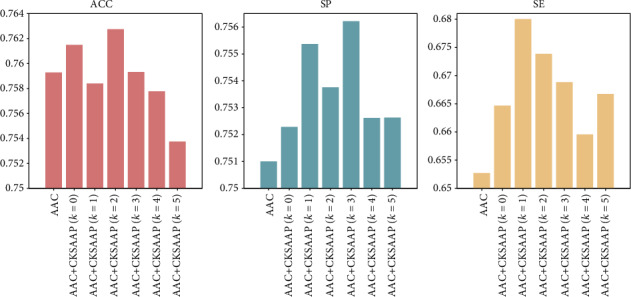

Then, the performance of our method, using the AAC and CKSAAP descriptors as features, was measured in different dimensions that were selected to determine whether the feature selection method should be used to reduce redundant information and further improve the performance of our model. We employed AAC alone and AAC and 6 types of CKSAAP together as the predictor to train the SVM model. The results are presented in Figure 2. Relative to SE, SP, the ACC model using all of the features of AAC and CKSAAP was not much improved compared to using AAC alone and was even decreased, in spite of features in CKSAAP that include useless information that influences the precision of our model. This result could lead to the conclusion that a feature selection technique is necessary to reduce redundant information and improve the precision of our model.

Figure 2.

Comparison of SVM models trained by AAC alone versus AAC plus 6 types of CKSAAP.

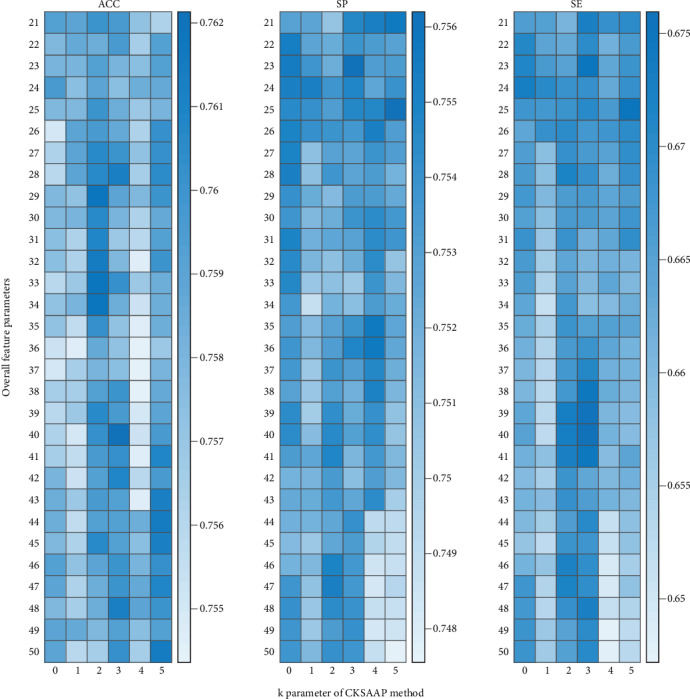

3.3. Selection of Significant Features

After determining the feature selection techniques necessary to improve the prediction accuracy of the model, the size of the significant features of the CKSAAP descriptors that we selected needed to be identified. We used ANOVA to select informative k-spaced amino acid pairs. The definite means are as follows: (1) Evaluate all of the amino acid pairs and sort them according to the difference between the two types of amino acids. (2) Each CKSAAP feature is sequentially added to the parameter subset with AAC according to the sorted order. (3) The SVM-based model is trained using the parameter subset. Then, all of the results are compared to find the best feature subset of the significant features we selected.

According to these methods, taking k = 3 as an example, the top 30 feature parameters of CKSAAP were selected and are shown as Figure 3, and the variance of 50 feature parameters in both the training and test sets are also shown. A∗∗∗A and L∗∗∗L have a large variance in both the training and test sets, foreshadowing that they contain more information.

Figure 3.

Results of the top 30 feature parameters of CKSAAP (k = 3). The radius of each point indicates the variance of the feature parameter in the training set or test set.

We used the top 30 feature parameters of CKSAAP from ANOVA added into the AAC parameters to train the model, change the value of k during feature extraction, and change the number of features added to AAC at the same time to select the model with the best performance, instead of only changing the feature extraction method, and the results are shown in Figure 4. We obtained a maximum accuracy when we used 20 AAC parameters and 20 CKSAAP parameters (k = 3) for 40 feature parameters overall. The c/γ values used in the SVM-based model are 1.1487 (20.2) and 147.0334 (27.2), respectively. The accuracy reached 76.2135%, and SP and SE reached 0.7530 and 0.6760, respectively, which are all higher than the accuracy achieved in past research. We also measured the performance of the above model by making predictions on the test set and obtained an overall accuracy of 76.4585%, which indicates that the SVM model we established performs well in the classification of human enzymes. The 20 informative 3-spaced amino acid pairs that are used in the model training stage are L∗∗∗L, P∗∗∗P, A∗∗∗A, S∗∗∗S, G∗∗∗G, E∗∗∗E, K∗∗∗K, R∗∗∗R, A∗∗∗L, Q∗∗∗Q, E∗∗∗K, L∗∗∗A, K∗∗∗E, A∗∗∗G, L∗∗∗G, G∗∗∗P, S∗∗∗L, E∗∗∗L, V∗∗∗L, and G∗∗∗L (∗ indicates the other characters between two amino acids, i.e., the space), which may play important roles in human enzymes.

Figure 4.

Results of ACC, SP, and SE of the model trained by 20 AAC parameters and 1–30 important CKSAAP parameters selected by the ANOVA technique.

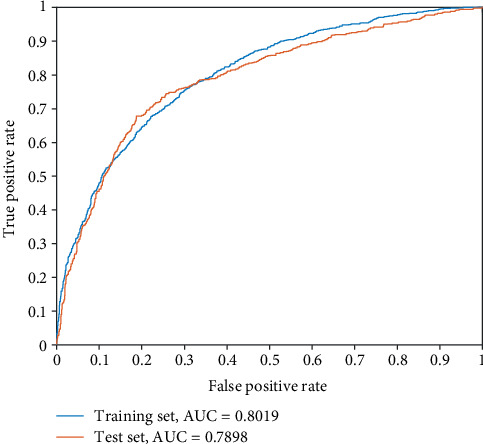

Furthermore, various mainstream classifiers, i.e., Naive Bayes, Random Forest, Logistic, K-nearest neighbor (KNN), and Ensembles for Boosting [102–105] are compared with our model in both the training set and the test set using 6-fold cross-validation in Table 2, and the result shows that the SVM-based classifier in our paper performs best. In addition, the ROC curve of our model performed well on both the training set and the test set, as shown in Figure 5, which confirms the classification effect of the model. The AUC reached 0.8019 and 0.7898 in the training set and the test set, respectively, demonstrating that our method for human-enzyme classification is effective and that more accurate classification results can now be obtained.

Table 2.

Comparison of the performance of various mainstream classifiers and the classifier implemented in our paper. ACC, SP, and SE of different classifiers on both the training set and the test set are compared.

| Classifiers | Training set | Test set | ||||

|---|---|---|---|---|---|---|

| ACC | SP | SE | ACC | SP | SE | |

| This work (SVM) | 76.2135% | 0.753 | 0.676 | 76.4585% | 0.762 | 0.657 |

| Naive Bayes | 61.0697% | 0.466 | 0.833 | 65.7625% | 0.507 | 0.794 |

| Random Forest | 74.3781% | 0.703 | 0.454 | 74.7691% | 0.710 | 0.472 |

| Logistic | 69.5274% | 0.598 | 0.374 | 68.4237% | 0.587 | 0.329 |

| KNN | 62.8420% | 0.474 | 0.646 | 63.0502% | 0.480 | 0.658 |

| Ensembles for Boosting | 69.6206% | 0.588 | 0.420 | 68.6796% | 0.573 | 0.411 |

Figure 5.

The ROC curves of our model on both the training set and test set, with AUCs of 0.8019 and 0.7898, respectively.

4. Conclusion

In this study, we proposed an effective and novel method to identify human enzymes using AAC and CKSAAP that is based on short-range interactions of amino acid pairs rather than the physicochemical properties of the sequences. By using ANOVA to select informative feature parameters, 20 amino acid pairs in 3-space are selected to add 20 residues and feed their frequency into an SVM classifier. The jackknife cross-validated accuracy was 76.46% in the training set, demonstrating that fewer feature parameters were used and a higher accuracy was reached compared to previous research. Moreover, we compared the performance of the model using different feature extraction methods, and the results showed that residue-frequency-based methods perform better than other methods, and a web server based on our method will be implemented in the future. In addition, some important feature parameters selected by ANOVA, e.g., A∗∗∗A and L∗∗∗L, may contain vital information in regard to the identification of human enzymes, which we hope to discuss more deeply in the future.

Acknowledgments

The work was supported by the National Natural Science Foundation of China (No. 61901103) and the Natural Science Foundation of Heilongjiang Province (No. LH2019F002).

Contributor Information

Ying Zhang, Email: zhangying_hmu@163.com.

Liran Juan, Email: lrjuan@hit.edu.cn.

Data Availability

In our experiment, the sequence data of the training set and the feature vectors of both the training set and the test set extracted by the iFeature toolkit are available online at https://github.com/Fu-Zhang/Identification-of-human-enzymes. The sequence data of the test set are available in the Supplementary Materials of Reference [11].

Conflicts of Interest

The authors have declared no competing interests.

Authors' Contributions

YZ and LJ conceived and designed the project. LZ and BD conducted experiments and analyzed the data. LZ and LJ wrote the paper. ZT and YZ revised the manuscript. All authors read and approved the final manuscript.

Supplementary Materials

Accuracy of model training with various feature extraction methods by 6-fold cross-validation. Two feature selection methods, ANOVA and mRMR, are used and the feature selection method with higher accuracy is selected and included in the table.

References

- 1.Nomenclature E, Webb E. San Diego, CA, USA: Academic Press; 1992. [Google Scholar]

- 2.Tan J.-X., Lv H., Wang F., Dao F.-Y., Chen W., Ding H. A survey for predicting enzyme family classes using machine learning methods. Current Drug Targets. 2019;20(5):540–550. doi: 10.2174/1389450119666181002143355. [DOI] [PubMed] [Google Scholar]

- 3.Xu L., Liang G., Wang L., Liao C. A novel hybrid sequence-based model for identifying anticancer peptides. Genes. 2018;9(3):p. 158. doi: 10.3390/genes9030158. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Liu B., Zhu Y., Yan K. Fold-LTR-TCP: protein fold recognition based on triadic closure principle. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz139. [DOI] [PubMed] [Google Scholar]

- 5.Wei L., Zou Q., Liao M., Lu H., Zhao Y. A novel machine learning method for cytokine-receptor interaction prediction. Combinatorial Chemistry & High Throughput Screening. 2016;19(2):144–152. doi: 10.2174/1386207319666151110122621. [DOI] [PubMed] [Google Scholar]

- 6.Wang X., Yu B., Ma A., Chen C., Liu B., Ma Q. Protein-protein interaction sites prediction by ensemble random forests with synthetic minority oversampling technique. Bioinformatics. 2019;35(14):2395–2402. doi: 10.1093/bioinformatics/bty995. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Qu K., Wei L., Zou Q. A review of DNA-binding proteins prediction methods. Current Bioinformatics. 2019;14(3):246–254. doi: 10.2174/1574893614666181212102030. [DOI] [Google Scholar]

- 8.Jensen L. J., Skovgaard M., Brunak S. Prediction of novel archaeal enzymes from sequence-derived features. Protein Science. 2002;11(12):2894–2898. doi: 10.1110/ps.0225102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chou K.-C., Cai Y.-D. Using GO-PseAA predictor to predict enzyme sub-class. Biochemical and Biophysical Research Communications. 2004;325(2):506–509. doi: 10.1016/j.bbrc.2004.10.058. [DOI] [PubMed] [Google Scholar]

- 10.Cai C. Z., Han L. Y., Ji Z. L., Chen Y. Z. Enzyme family classification by support vector machines. Proteins: Structure, Function, and Bioinformatics. 2004;55(1):66–76. doi: 10.1002/prot.20045. [DOI] [PubMed] [Google Scholar]

- 11.Cai Y.-D., Chou K.-C. Using functional domain composition to predict enzyme family classes. Journal of Proteome Research. 2005;4(1):109–111. doi: 10.1021/pr049835p. [DOI] [PubMed] [Google Scholar]

- 12.Cai Y.-D., Chou K.-C. Predicting enzyme subclass by functional domain composition and pseudo amino acid composition. Journal of Proteome Research. 2005;4(3):967–971. doi: 10.1021/pr0500399. [DOI] [PubMed] [Google Scholar]

- 13.Shen H.-B., Chou K.-C. EzyPred: a top-down approach for predicting enzyme functional classes and subclasses. Biochemical and Biophysical Research Communications. 2007;364(1):53–59. doi: 10.1016/j.bbrc.2007.09.098. [DOI] [PubMed] [Google Scholar]

- 14.Nasibov E., Kandemir-Cavas C. Efficiency analysis of KNN and minimum distance-based classifiers in enzyme family prediction. Computational Biology and Chemistry. 2009;33(6):461–464. doi: 10.1016/j.compbiolchem.2009.09.002. [DOI] [PubMed] [Google Scholar]

- 15.Concu R., Dea-Ayuela M. A., Perez-Montoto L. G., et al. Prediction of enzyme classes from 3D structure: a general model and examples of experimental-theoretic scoring of peptide mass fingerprints of Leishmania proteins. Journal of Proteome Research. 2009;8(9):4372–4382. doi: 10.1021/pr9003163. [DOI] [PubMed] [Google Scholar]

- 16.Qiu J.-D., Huang J.-H., Shi S.-P., Liang R.-P. Using the concept of Chou’s pseudo amino acid composition to predict enzyme family classes: an approach with support vector machine based on discrete wavelet transform. Protein and Peptide Letters. 2010;17(6):715–722. doi: 10.2174/092986610791190372. [DOI] [PubMed] [Google Scholar]

- 17.Shi R., Hu X. Predicting enzyme subclasses by using support vector machine with composite vectors. Protein and Peptide Letters. 2010;17(5):599–604. doi: 10.2174/092986610791112710. [DOI] [PubMed] [Google Scholar]

- 18.Zou Q., Chen W., Huang Y., Liu X., Jiang Y. Identifying multi-functional enzyme by hierarchical multi-label classifier. Journal of Computational and Theoretical Nanoscience. 2013;10(4):1038–1043. doi: 10.1166/jctn.2013.2804. [DOI] [Google Scholar]

- 19.Niu B., Lu Y., Lu J., et al. Prediction of Enzyme’s family based on protein-protein interaction network. Current Bioinformatics. 2015;10(1):16–21. doi: 10.2174/157489361001150309122016. [DOI] [Google Scholar]

- 20.Li Y., Wang S., Umarov R., et al. DEEPre: sequence-based enzyme EC number prediction by deep learning. Bioinformatics. 2018;34(5):760–769. doi: 10.1093/bioinformatics/btx680. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Memon S. A., Khan K. A., Naveed H. Enzyme function prediction using deep learning. Biophysical Journal. 2020;118(3, article 533a) doi: 10.1016/j.bpj.2019.11.2926. [DOI] [Google Scholar]

- 22.Wu Y., Tang H., Chen W., Lin H. Predicting human enzyme family classes by using pseudo amino acid composition. Current Proteomics. 2016;13(2):99–104. doi: 10.2174/157016461302160514003437. [DOI] [Google Scholar]

- 23.Consortium T. U. UniProt: a hub for protein information. Nucleic Acids Research. 2015;43(D1):D204–D212. doi: 10.1093/nar/gku989. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Fu L., Niu B., Zhu Z., Wu S., Li W. CD-HIT: accelerated for clustering the next-generation sequencing data. Bioinformatics. 2012;28(23):3150–3152. doi: 10.1093/bioinformatics/bts565. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Zou Q., Lin G., Jiang X., Liu X., Zeng X. Sequence clustering in bioinformatics: an empirical study. Briefings in Bioinformatics. 2018;21(1):1–10. doi: 10.1093/bib/bby090. [DOI] [PubMed] [Google Scholar]

- 26.Bhasin M., Raghava G. P. Classification of nuclear receptors based on amino acid composition and dipeptide composition. Journal of Biological Chemistry. 2004;279(22):23262–23266. doi: 10.1074/jbc.M401932200. [DOI] [PubMed] [Google Scholar]

- 27.Liu B. BioSeq-Analysis: a platform for DNA, RNA, and protein sequence analysis based on machine learning approaches. Briefings in Bioinformatics. 2019;20(4):1280–1294. doi: 10.1093/bib/bbx165. [DOI] [PubMed] [Google Scholar]

- 28.Saravanan V., Gautham N. Harnessing computational biology for exact linear B-cell epitope prediction: a novel amino acid composition-based feature descriptor. OMICS: A Journal of Integrative Biology. 2015;19(10):648–658. doi: 10.1089/omi.2015.0095. [DOI] [PubMed] [Google Scholar]

- 29.Tang H., Zhao Y. W., Zou P., et al. HBPred: a tool to identify growth hormone-binding proteins. International Journal of Biological Sciences. 2018;14(8):957–964. doi: 10.7150/ijbs.24174. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Sokal R. R., Thomson B. A. Population structure inferred by local spatial autocorrelation: an example from an Amerindian tribal population. American Journal of Physical Anthropology. 2006;129(1):121–131. doi: 10.1002/ajpa.20250. [DOI] [PubMed] [Google Scholar]

- 31.Cai C., Han L., Ji Z. L., Chen X., Chen Y. Z. SVM-Prot: web-based support vector machine software for functional classification of a protein from its primary sequence. Nucleic Acids Research. 2003;31(13):3692–3697. doi: 10.1093/nar/gkg600. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Dubchak I., Muchnik I., Holbrook S. R., Kim S.-H. Prediction of protein folding class using global description of amino acid sequence. Proceedings of the National Academy of Sciences of the United States of America. 1995;92(19):8700–8704. doi: 10.1073/pnas.92.19.8700. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Chou K. C. Prediction of protein cellular attributes using pseudo-amino acid composition. Proteins: Structure, Function, and Bioinformatics. 2001;43(3):246–255. doi: 10.1002/prot.1035. [DOI] [PubMed] [Google Scholar]

- 34.Chou K.-C. Using amphiphilic pseudo amino acid composition to predict enzyme subfamily classes. Bioinformatics. 2005;21(1):10–19. doi: 10.1093/bioinformatics/bth466. [DOI] [PubMed] [Google Scholar]

- 35.Shen Y., Tang J., Guo F. Identification of protein subcellular localization via integrating evolutionary and physicochemical information into Chou’s general PseAAC. Journal of Theoretical Biology. 2019;462:230–239. doi: 10.1016/j.jtbi.2018.11.012. [DOI] [PubMed] [Google Scholar]

- 36.Liu B., Gao X., Zhang H. BioSeq-Analysis2.0: an updated platform for analyzing DNA, RNA, and protein sequences at sequence level and residue level based on machine learning approaches. Nucleic Acids Research. 2019;47(20, article e127) doi: 10.1093/nar/gkz740. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Tang H., Chen W., Lin H. Identification of immunoglobulins using Chou’s pseudo amino acid composition with feature selection technique. Molecular BioSystems. 2016;12(4):1269–1275. doi: 10.1039/c5mb00883b. [DOI] [PubMed] [Google Scholar]

- 38.Zhu X.-J., Feng C.-Q., Lai H.-Y., Chen W., Hao L. Predicting protein structural classes for low-similarity sequences by evaluating different features. Knowledge-Based Systems. 2019;163:787–793. doi: 10.1016/j.knosys.2018.10.007. [DOI] [Google Scholar]

- 39.Yu B., Qiu W., Chen C., et al. SubMito-XGBoost: predicting protein submitochondrial localization by fusing multiple feature information and eXtreme gradient boosting. Bioinformatics. 2019;36 doi: 10.1093/bioinformatics/btz734. [DOI] [PubMed] [Google Scholar]

- 40.Zhao X., Jiao Q., Li H., et al. ECFS-DEA: an ensemble classifier-based feature selection for differential expression analysis on expression profiles. BMC Bioinformatics. 2020;21(1):p. 43. doi: 10.1186/s12859-020-3388-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Chen K., Jiang Y., Du L., Kurgan L. Prediction of integral membrane protein type by collocated hydrophobic amino acid pairs. Journal of Computational Chemistry. 2009;30(1):163–172. doi: 10.1002/jcc.21053. [DOI] [PubMed] [Google Scholar]

- 42.Chen K., Kurgan L., Rahbari M. Prediction of protein crystallization using collocation of amino acid pairs. Biochemical and Biophysical Research Communications. 2007;355(3):764–769. doi: 10.1016/j.bbrc.2007.02.040. [DOI] [PubMed] [Google Scholar]

- 43.Chen Z., Chen Y., Wang X., Wang C., Yan R., Zhang Z. Prediction of ubiquitination sites by using the composition of k-spaced amino acid pairs. PLoS One. 2011;6(7, article e22930) doi: 10.1371/journal.pone.0022930. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Chen Z., Zhao P., Li F., et al. iFeature: a python package and web server for features extraction and selection from protein and peptide sequences. Bioinformatics. 2018;34(14):2499–2502. doi: 10.1093/bioinformatics/bty140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Peng H., Long F., Ding C. Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Transactions on Pattern Analysis and Machine Intelligence. 2005;27(8):1226–1238. doi: 10.1109/TPAMI.2005.159. [DOI] [PubMed] [Google Scholar]

- 46.Wang S. P., Zhang Q., Lu J., Cai Y. D. Analysis and prediction of nitrated tyrosine sites with the mRMR method and support vector machine algorithm. Current Bioinformatics. 2018;13(1):3–13. doi: 10.2174/1574893611666160608075753. [DOI] [Google Scholar]

- 47.Zou Q., Zeng J., Cao L., Ji R. A novel features ranking metric with application to scalable visual and bioinformatics data classification. Neurocomputing. 2016;173:346–354. doi: 10.1016/j.neucom.2014.12.123. [DOI] [Google Scholar]

- 48.Ding H., Li D. Identification of mitochondrial proteins of malaria parasite using analysis of variance. Amino Acids. 2015;47(2):329–333. doi: 10.1007/s00726-014-1862-4. [DOI] [PubMed] [Google Scholar]

- 49.Yang W., Zhu X.-J., Huang J., Ding H., Lin H. A brief survey of machine learning methods in protein sub-Golgi localization. Current Bioinformatics. 2019;14:234–240. doi: 10.2174/1574893613666181113131415. [DOI] [Google Scholar]

- 50.Zhang X., Zou Q., Rodriguez-Paton A., Zeng X. Meta-path methods for prioritizing candidate disease miRNAs. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2019;16(1):283–291. doi: 10.1109/TCBB.2017.2776280. [DOI] [PubMed] [Google Scholar]

- 51.Zeng X., Liao Y., Liu Y., Zou Q. Prediction and validation of disease genes using HeteSim scores. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2017;14(3):687–695. doi: 10.1109/TCBB.2016.2520947. [DOI] [PubMed] [Google Scholar]

- 52.Hong Z., Zeng X., Wei L., Liu X. Identifying enhancer-promoter interactions with neural network based on pre-trained DNA vectors and attention mechanism. Bioinformatics. 2019;36 doi: 10.1093/bioinformatics/btz694. [DOI] [PubMed] [Google Scholar]

- 53.Tan J. X., Li S. H., Zhang Z. M., et al. Identification of hormone binding proteins based on machine learning methods. Mathematical Biosciences and Engineering. 2019;16(4):2466–2480. doi: 10.3934/mbe.2019123. [DOI] [PubMed] [Google Scholar]

- 54.Huo Y., Xin L., Kang C., Wang M., Ma Q., Yu B. SGL-SVM: a novel method for tumor classification via support vector machine with sparse group Lasso. Journal of Theoretical Biology. 2020;486 doi: 10.1016/j.jtbi.2019.110098. [DOI] [PubMed] [Google Scholar]

- 55.Wang Y., Shi F., Cao L., et al. Morphological segmentation analysis and texture-based support vector machines classification on mice liver fibrosis microscopic images. Current Bioinformatics. 2019;14(4):282–294. doi: 10.2174/1574893614666190304125221. [DOI] [Google Scholar]

- 56.Du X., Li X., Li W., Yan Y., Zhang Y. Identification and analysis of cancer diagnosis using probabilistic classification vector machines with feature selection. Current Bioinformatics. 2018;13(6):625–632. doi: 10.2174/1574893612666170405125637. [DOI] [Google Scholar]

- 57.Zhang N., Sa Y., Guo Y., Lin W., Wang P., Feng Y. Discriminating Ramos and Jurkat cells with image textures from diffraction imaging flow cytometry based on a support vector machine. Current Bioinformatics. 2018;13:50–56. doi: 10.2174/1574893611666160608102537. [DOI] [Google Scholar]

- 58.Jiang Q. H., Wang G. H., Jin S. L., Li Y., Wang Y. D. Predicting human microRNA-disease associations based on support vector machine. International Journal of Data Mining and Bioinformatics. 2013;8(3):282–293. doi: 10.1504/ijdmb.2013.056078. [DOI] [PubMed] [Google Scholar]

- 59.Xu L., Liang G., Liao C., Chen G.-D., Chang C.-C. An efficient classifier for Alzheimer’s disease genes identification. Molecules. 2018;23(12):p. 3140. doi: 10.3390/molecules23123140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Liu B., Li C., Yan K. DeepSVM-fold: protein fold recognition by combining support vector machines and pairwise sequence similarity scores generated by deep learning networks. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz098. [DOI] [PubMed] [Google Scholar]

- 61.Qiao Y., Xiong Y., Gao H., Zhu X., Chen P. Protein-protein interface hot spots prediction based on a hybrid feature selection strategy. BMC Bioinformatics. 2018;19(1):p. 14. doi: 10.1186/s12859-018-2009-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Cheng L., Yang H., Zhao H., et al. MetSigDis: a manually curated resource for the metabolic signatures of diseases. Briefings in Bioinformatics. 2019;20(1):203–209. doi: 10.1093/bib/bbx103. [DOI] [PubMed] [Google Scholar]

- 63.Cheng L., Zhuang H., Yang S., Jiang H., Wang S., Zhang J. Exposing the causal effect of C-reactive protein on the risk of type 2 diabetes mellitus: a Mendelian randomization study. Frontiers in Genetics. 2018;9:p. 657. doi: 10.3389/fgene.2018.00657. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Chang C.-C., Lin C.-J. LIBSVM. Acm Transactions on Intelligent Systems and Technology. 2011;2(3):1–27. doi: 10.1145/1961189.1961199. [DOI] [Google Scholar]

- 65.Ding H., Yang W., Tang H., et al. PHYPred: a tool for identifying bacteriophage enzymes and hydrolases. Virologica Sinica. 2016;31(4):350–352. doi: 10.1007/s12250-016-3740-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Zeng X., Zhu S., Liu X., Zhou Y., Nussinov R., Cheng F. deepDR: a network-based deep learning approach to in silico drug repositioning. Bioinformatics. 2019;35(24):5191–5198. doi: 10.1093/bioinformatics/btz418. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Xu H., Zeng W., Zeng X., Yen G. G. A polar-metric-based evolutionary algorithm. IEEE Transactions on Cybernetics. 2020:1–12. doi: 10.1109/TCYB.2020.2965230. [DOI] [PubMed] [Google Scholar]

- 68.Zeng X., Zhong Y., Lin W., Zou Q. Predicting disease-associated circular RNAs using deep forests combined with positive-unlabeled learning methods. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz080. [DOI] [PubMed] [Google Scholar]

- 69.Zeng X., Zhu S., Lu W., et al. Target identification among known drugs by deep learning from heterogeneous networks. Chemical Science. 2020 doi: 10.1039/c9sc04336e. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Chen C., Zhang Q., Ma Q., Yu B. LightGBM-PPI: predicting protein-protein interactions through LightGBM with multi-information fusion. Chemometrics and Intelligent Laboratory Systems. 2019;191:54–64. doi: 10.1016/j.chemolab.2019.06.003. [DOI] [Google Scholar]

- 71.Wang G., Wang Y., Feng W., et al. Transcription factor and microRNA regulation in androgen-dependent and -independent prostate cancer cells. BMC Genomics. 2008;9(Supplement 2):p. S22. doi: 10.1186/1471-2164-9-S2-S22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72.Wang G., Wang Y., Teng M., Zhang D., Li L., Liu Y. Signal transducers and activators of transcription-1 (STAT1) regulates microRNA transcription in interferon gamma-stimulated HeLa cells. PLoS One. 2010;5(7, article e11794) doi: 10.1371/journal.pone.0011794. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Zeng X., Wang W., Chen C., Yen G. G. A consensus community-based particle swarm optimization for dynamic community detection. IEEE Transactions on Cybernetics. 2019:1–12. doi: 10.1109/TCYB.2019.2938895. [DOI] [PubMed] [Google Scholar]

- 74.Liu X., Hong Z., Liu J., et al. Computational methods for identifying the critical nodes in biological networks. Briefings in Bioinformatics. 2020;21(2):486–497. doi: 10.1093/bib/bbz011. [DOI] [PubMed] [Google Scholar]

- 75.Shen C., Ding Y., Tang J., Jiang L., Guo F. LPI-KTASLP: prediction of lncRNA-protein interaction by semi-supervised link learning with multivariate information. IEEE Access. 2019;7:13486–13496. doi: 10.1109/ACCESS.2019.2894225. [DOI] [Google Scholar]

- 76.Ding Y., Tang J., Guo F. Identification of drug-side effect association via semi-supervised model and multiple kernel learning. IEEE Journal of Biomedical and Health Informatics. 2019;23(6):2619–2632. doi: 10.1109/JBHI.2018.2883834. [DOI] [PubMed] [Google Scholar]

- 77.Ding Y., Tang J., Guo F. Identification of drug-side effect association via multiple information integration with centered kernel alignment. Neurocomputing. 2019;325:211–224. doi: 10.1016/j.neucom.2018.10.028. [DOI] [Google Scholar]

- 78.Wei L., Wan S., Guo J., Wong K. K. A novel hierarchical selective ensemble classifier with bioinformatics application. Artificial Intelligence in Medicine. 2017;83:82–90. doi: 10.1016/j.artmed.2017.02.005. [DOI] [PubMed] [Google Scholar]

- 79.Wei L., Xing P., Zeng J., Chen J., Su R., Guo F. Improved prediction of protein-protein interactions using novel negative samples, features, and an ensemble classifier. Artificial Intelligence in Medicine. 2017;83:67–74. doi: 10.1016/j.artmed.2017.03.001. [DOI] [PubMed] [Google Scholar]

- 80.Liu B., Li K. iPromoter-2L2.0: identifying promoters and their types by combining smoothing cutting window algorithm and sequence-based features. Molecular Therapy-Nucleic Acids. 2019;18:80–87. doi: 10.1016/j.omtn.2019.08.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Zhu X., He J., Zhao S., Tao W., Xiong Y., Bi S. A comprehensive comparison and analysis of computational predictors for RNA N6-methyladenosine sites of Saccharomyces cerevisiae. Briefings in Functional Genomics. 2019;18(6):367–376. doi: 10.1093/bfgp/elz018. [DOI] [PubMed] [Google Scholar]

- 82.Shan X., Wang X., Li C. D., et al. Prediction of CYP450 enzyme-substrate selectivity based on the network-based label space division method. Journal of Chemical Information and Modeling. 2019;59(11):4577–4586. doi: 10.1021/acs.jcim.9b00749. [DOI] [PubMed] [Google Scholar]

- 83.Chu Y., Kaushik A. C., Wang X., et al. DTI-CDF: a cascade deep forest model towards the prediction of drug-target interactions based on hybrid features. Briefings in Bioinformatics. 2019 doi: 10.1093/bib/bbz152. [DOI] [PubMed] [Google Scholar]

- 84.He J., Fang T., Zhang Z., Huang B., Zhu X., Xiong Y. PseUI: pseudouridine sites identification based on RNA sequence information. BMC Bioinformatics. 2018;19(1):p. 306. doi: 10.1186/s12859-018-2321-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Cheng L., Zhao H., Wang P., et al. Computational methods for identifying similar diseases. Molecular Therapy - Nucleic Acids. 2019;18:590–604. doi: 10.1016/j.omtn.2019.09.019. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 86.Cheng L. Computational and biological methods for gene therapy. Current Gene Therapy. 2019;19(4):210–210. doi: 10.2174/156652321904191022113307. [DOI] [PubMed] [Google Scholar]

- 87.Zhang Z. Y., Yang Y. H., Ding H., Wang D., Chen W., Lin H. Design powerful predictor for mRNA subcellular location prediction in Homo sapiens. Briefings in Bioinformatics. 2020 doi: 10.1093/bib/bbz177. [DOI] [PubMed] [Google Scholar]

- 88.Wang G., Luo X., Wang J., et al. MeDReaders: a database for transcription factors that bind to methylated DNA. Nucleic Acids Research. 2018;46(D1):D146–D151. doi: 10.1093/nar/gkx1096. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Cheng L., Wang P., Tian R., et al. LncRNA2Target v2.0: a comprehensive database for target genes of lncRNAs in human and mouse. Nucleic Acids Research. 2019;47(D1):D140–D144. doi: 10.1093/nar/gky1051. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Hsieh F., Turnbull B. W. Nonparametric and semiparametric estimation of the receiver operating characteristic curve. Annals of Statistics. 1996;24(1):25–40. [Google Scholar]

- 91.Wei L., Hu J., Li F., Song J., Su R., Zou Q. Comparative analysis and prediction of quorum-sensing peptides using feature representation learning and machine learning algorithms. Briefings in Bioinformatics. 2018;18 doi: 10.1093/bib/bby107. [DOI] [PubMed] [Google Scholar]

- 92.Liu B., Zhu Y. ProtDec-LTR3.0: protein remote homology detection by incorporating profile-based features into Learning to Rank. IEEE ACCESS. 2019;7:102499–102507. doi: 10.1109/ACCESS.2019.2929363. [DOI] [Google Scholar]

- 93.Fang T., Zhang Z., Sun R., et al. RNAm5CPred: prediction of RNA 5-methylcytosine sites based on three different kinds of nucleotide composition. Molecular Therapy - Nucleic Acids. 2019;18:739–747. doi: 10.1016/j.omtn.2019.10.008. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 94.Xiong Y., Wang Q., Yang J., Zhu X., Wei D. Q. PredT4SE-stack: prediction of bacterial type IV secreted effectors from protein sequences using a stacked ensemble method. Frontiers in Microbiology. 2018;9:p. 2571. doi: 10.3389/fmicb.2018.02571. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 95.Cheng L., Hu Y., Sun J., Zhou M., Jiang Q. DincRNA: a comprehensive web-based bioinformatics toolkit for exploring disease associations and ncRNA function. Bioinformatics. 2018;34(11):1953–1956. doi: 10.1093/bioinformatics/bty002. [DOI] [PubMed] [Google Scholar]

- 96.Cheng L., Qi C., Zhuang H., Fu T., Zhang X. gutMDisorder: a comprehensive database for dysbiosis of the gut microbiota in disorders and interventions. Nucleic Acids Research. 2020;48(D1):D554–D560. doi: 10.1093/nar/gkz843. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Lai H. Y., Zhang Z. Y., Su Z. D., et al. iProEP: a computational predictor for predicting promoter. Molecular Therapy-Nucleic Acids. 2019;17:337–346. doi: 10.1016/j.omtn.2019.05.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 98.Sun X., Jin T., Chen C., Cui X., Ma Q., Yu B. RBPro-RF: use Chou’s 5-steps rule to predict RNA-binding proteins via random forest with elastic net. Chemometrics and Intelligent Laboratory Systems. 2020;197, article 103919 doi: 10.1016/j.chemolab.2019.103919. [DOI] [Google Scholar]

- 99.Zhao Y., Wang F., Juan L. MicroRNA promoter identification in Arabidopsis using multiple histone markers. BioMed Research International. 2015;2015:10. doi: 10.1155/2015/861402.861402 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Zhao Y., Wang F., Chen S., Wan J., Wang G. Methods of microRNA promoter prediction and transcription factor mediated regulatory network. BioMed Research International. 2017;2017:8. doi: 10.1155/2017/7049406.7049406 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 101.Shen J., Zhang J., Luo X., et al. Predicting protein-protein interactions based only on sequences information. Proceedings of the National Academy of Sciences of the United States of America. 2007;104(11):4337–4341. doi: 10.1073/pnas.0607879104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.Lv Z., Jin S., Ding H., Zou Q. A random forest sub-Golgi protein classifier optimized via dipeptide and amino acid composition features. Frontiers in Bioengineering and Biotechnology. 2019;7:p. 215. doi: 10.3389/fbioe.2019.00215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Ning Q., Ma Z., Zhao X. dForml(KNN)-PseAAC: Detecting formylation sites from protein sequences using K-nearest neighbor algorithm via Chou's 5-step rule and pseudo components. Journal of Theoretical Biology. 2019;470:43–49. doi: 10.1016/j.jtbi.2019.03.011. [DOI] [PubMed] [Google Scholar]

- 104.Hu H., Zhang L., Ai H., et al. HLPI-ensemble: prediction of human lncRNA-protein interactions based on ensemble strategy. RNA Biology. 2018;15(6):797–806. doi: 10.1080/15476286.2018.1457935. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105.Tagore S., Gorohovski A., Jensen L. J., Frenkel-Morgenstern M. ProtFus: a comprehensive method characterizing protein-protein interactions of fusion proteins. PLoS Computational Biology. 2019;15(8, article e1007239) doi: 10.1371/journal.pcbi.1007239. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Accuracy of model training with various feature extraction methods by 6-fold cross-validation. Two feature selection methods, ANOVA and mRMR, are used and the feature selection method with higher accuracy is selected and included in the table.

Data Availability Statement

In our experiment, the sequence data of the training set and the feature vectors of both the training set and the test set extracted by the iFeature toolkit are available online at https://github.com/Fu-Zhang/Identification-of-human-enzymes. The sequence data of the test set are available in the Supplementary Materials of Reference [11].