Abstract

Anticipating rewards has been shown to enhance memory formation. While substantial evidence implicates dopamine in this behavioral effect, the precise mechanisms remain ambiguous. Because dopamine nuclei have been associated with two distinct physiological signatures of reward prediction, we hypothesized two dissociable effects on memory formation. These two signatures are a phasic dopamine response immediately following a reward cue that encodes its expected value, and a sustained, ramping response that has been demonstrated during high reward uncertainty (Fiorillo, Tobler, and Schultz 2003). Here, we show in humans that the impact of reward anticipation on memory for an event depends on its timing relative to these physiological signatures. By manipulating reward probability (100%, 50%, or 0%) and the timing of the event to be encoded (just after the reward cue versus just before expected reward outcome), we demonstrated the predicted double dissociation: early during reward anticipation, memory formation was improved by increased expected reward value, whereas late during reward anticipation, memory formation was enhanced by reward uncertainty. Notably, while the memory benefits of high expected reward in the early interval were consolidation-dependent, the memory benefits of high uncertainty in the later interval were not. These findings support the view that expected reward benefits memory consolidation via phasic dopamine release. The novel finding of a distinct memory enhancement, temporally consistent with sustained anticipatory dopamine release, points toward new mechanisms of memory modulation by reward now ripe for further investigation.

Keywords: Memory, reward, uncertainty

Introduction

Episodic memory formation, an important component of learning, is enhanced during reward anticipation: Just as the desire to get an ‘A’, or to understand the world, can motivate individuals to remember information, the promise of money can motivate people to form new memories (Wittmann et al. 2005; Adcock et al. 2006; Gruber and Otten 2010) and even enhance memory for incidental events (Mather and Schoeke 2011; Murty and Adcock 2014). However, the mechanisms of memory enhancement during reward anticipation remain incompletely understood (for reviews see Shohamy and Adcock 2010; Miendlarzewska, Bavelier, and Schwartz 2016).

One proposed mechanism involves the neuromodulator dopamine, released during reward anticipation, which directly stabilizes long-term potentiation to support memory formation. In the dopaminergic midbrain, the ventral tegmental area (VTA) sends afferent projections to the hippocampus (Gasbarri et al. 1994; Gasbarri, Sulli, and Packard 1997), which is populated with dopamine receptors (Dawson et al. 1986; Camps et al. 1989; Bergson et al. 1995; Little, Carroll, and Cassin 1995; Ciliax et al. 2000; Khan et al. 2000; Lewis et al. 2001; Jiao, Paré, and Tejani-Butt 2003). Indeed, applying dopamine receptor antagonists in the hippocampus blocks memory formation for new, rewarding events (Bethus, Tse, and Morris 2010). Prior work has also shown that during reward anticipation, activation of the dopaminergic midbrain (Wittmann et al. 2005; Adcock et al. 2006) and increased midbrain connectivity with the hippocampus (Adcock et al. 2006) predict successful memory formation. However, this mechanism of memory enhancement is only one among many known cellular and network actions of dopamine. Even within the hippocampus, these models must be elaborated to incorporate knowledge about dopamine receptor distributions (see Shohamy and Adcock, 2010 for review) and multiple temporal profiles of dopamine neuronal responses.

More specifically, rapid phasic burst responses scale with the expected reward value of a reward or a cue predicting reward (Fiorillo, Tobler, and Schultz 2003; Tobler, Fiorillo, and Schultz 2005), whereas a slower, anticipatory sustained response has been reported to be associated with reward uncertainty (Fiorillo, Tobler, and Schultz 2003). In the hippocampus, particularly, dopamine receptors do not closely appose dopamine terminals (for review see Shohamy & Adcock, 2010) and thus phasic responses versus sustained responses are likely to differentially influence hippocampal dopamine receptors; this distinction is likely to exist in other cortical regions and mechanisms as well. Thus, in this study, we proposed that over several seconds of reward anticipation, phasic and sustained dopamine neuronal excitation should differentially modulate memory formation, and furthermore that we could characterize these distinct dopamine profiles using a behavioral paradigm in humans. Specifically, we hypothesized that for events immediately following a reward-predicting cue, phasic dopamine release would drive memory enhancements when expected reward value is high. On the other hand, for events closer to a potentially rewarding outcome, sustained dopamine release should drive memory enhancements when reward uncertainty is high.

Many of the effects of dopamine on long-term memory occur during consolidation; thus, to ensure that memory performance would reflect the consolidation-dependent mechanisms, our initial tests of these hypotheses examined retrieval after a 24-hour delay. However, dopamine has been implicated not only in enhancing both early- and late-phase long-term potentiation (Otmakhova and Lisman 1996; Lemon and Manahan-Vaughan 2006) but also in increasing neuronal replay (McNamara et al. 2014) and changing dynamic hippocampal physiology (Otmakhova and Lisman 1999; Martig and Mizumori 2011). While some of these mechanisms should be apparent only after a delay (i.e., 24 hours), other mechanisms could be apparent immediately. Thus, secondarily, we also sought to establish whether putatively phasic versus sustained dopaminergic influences on memory would be present only after a period that allowed for consolidation, or would also be evident immediately after encoding.

We set out to dissociate the putative influence of two distinct dopaminergic responses on memory formation during reward anticipation. To parse these effects, we designed a study in which we used overlearned abstract cues to indicate reward probability, establishing expected reward value independently from uncertainty. We further manipulated the epoch of encoding during reward anticipation: we presented trial-unique, incidental items either early (400ms after cue presentation), to capture a rapid dopamine response anticipated to scale with expected reward value, or late (3-3.6s after cue presentation), to capture a sustained dopamine response anticipated to scale with high reward uncertainty. Finally, in addition to testing memory following a 24-hour consolidation interval, we tested a second group 15 minutes-post encoding, to examine whether the effects on memory performance were all dependent on consolidation. We used an incidental memory task, as opposed to an intentional memory task, because we aimed to develop an experimental context that created distinct dopaminergic profiles: phasic and ramping dopamine responses. Had we told participants there would be a memory test for the items shown, motivational salience would then be attached to the items themselves, disrupting the distinct dopamine profiles we aimed to elicit. With this paradigm, we examined whether expected reward value and reward uncertainty yielded temporally and mechanistically distinct influences on memory formation, as would be predicted for these distinct triggers for dopamine release.

Methods

Subjects

Forty healthy young adult volunteers participated in the study. All participants provided informed consent, as approved by the Duke University Institutional Review Board. Data from additional participants were excluded due to failure to follow the instructions (n = 1), poor cue-outcome learning (n = 2), or computer error (n = 3). Individuals participated in one of two experiments: Experiment 1 (n = 20, 12 female, mean age = 27.45 ± 3.82 years) or Experiment 2 (n = 20, 12 female, mean age = 21.90 ± 3.23 years).

Design and Procedure

Reward Learning

The first phase of the experiment involved reward learning. During reward learning, participants were presented with abstract cues, all Tibetan characters, which predicted 100%, 50%, or 0% probability of subsequent monetary reward. Participants were instructed to try to learn the relationship between the cues and reward. They were presented with the cue (1s), a unique image of an everyday object (2s), then an image of either a dollar bill or a scrambled dollar bill (400ms), indicating a reward or no reward respectively. A jittered fixation-cross separated trials (1-8s). No motor contingency was required to earn the reward. Independent of performance, participants were paid a monetary bonus equal to the amount accumulated over the outcomes in one block of the task. Participants saw 40 trials per condition, distributed evenly over five blocks. Prior to the first block and following every block, participants were asked to rate their certainty of receiving reward following each cue along a sliding scale from “Certain: No Reward” to “Certain: Reward.” To be included in the analysis, participants had to meet a minimum learning criterion: rating the 100% reward cues as more associated with reward than the 0% cues, as assessed by average certainty score across all five blocks.

Incidental Encoding

In the second phase of the experiment, the abstract cues used in the reward learning phase were used to modulate incidental encoding; these cues predicted 100%, 50%, and 0% reward probability. Because the associations were deterministic, reward probabilities established expected reward value, with 100% higher than 50% and 0% rewarded cues. In contrast, 50% predictive cues established higher uncertainty relative to the 100% and 0% predictive cues.
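The dissociation between expected value and uncertainty across the three cue types can be illustrated with a short calculation. The sketch below is illustrative only: it assumes a fixed reward magnitude of one unit, and it uses Bernoulli variance and Shannon entropy as stand-in measures of uncertainty, neither of which is specified in the study. The function names are hypothetical.

```python
import math

# Assumption: reward magnitude is fixed at 1 unit for every cue.
# Variance and entropy are illustrative uncertainty measures, not the study's.

def expected_value(p, magnitude=1.0):
    """Expected reward for a cue that pays `magnitude` with probability p."""
    return p * magnitude

def outcome_variance(p, magnitude=1.0):
    """Variance of a binary reward outcome; peaks at p = 0.5."""
    return p * (1.0 - p) * magnitude ** 2

def outcome_entropy(p):
    """Shannon entropy (bits) of a binary outcome; zero when p is 0 or 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -(p * math.log2(p) + (1.0 - p) * math.log2(1.0 - p))

for p in (0.0, 0.5, 1.0):
    print(p, expected_value(p), outcome_variance(p), outcome_entropy(p))
```

Under these assumptions, expected value increases monotonically from the 0% to the 100% cue, whereas both uncertainty measures are maximal for the 50% cue and zero for the two certain cues.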

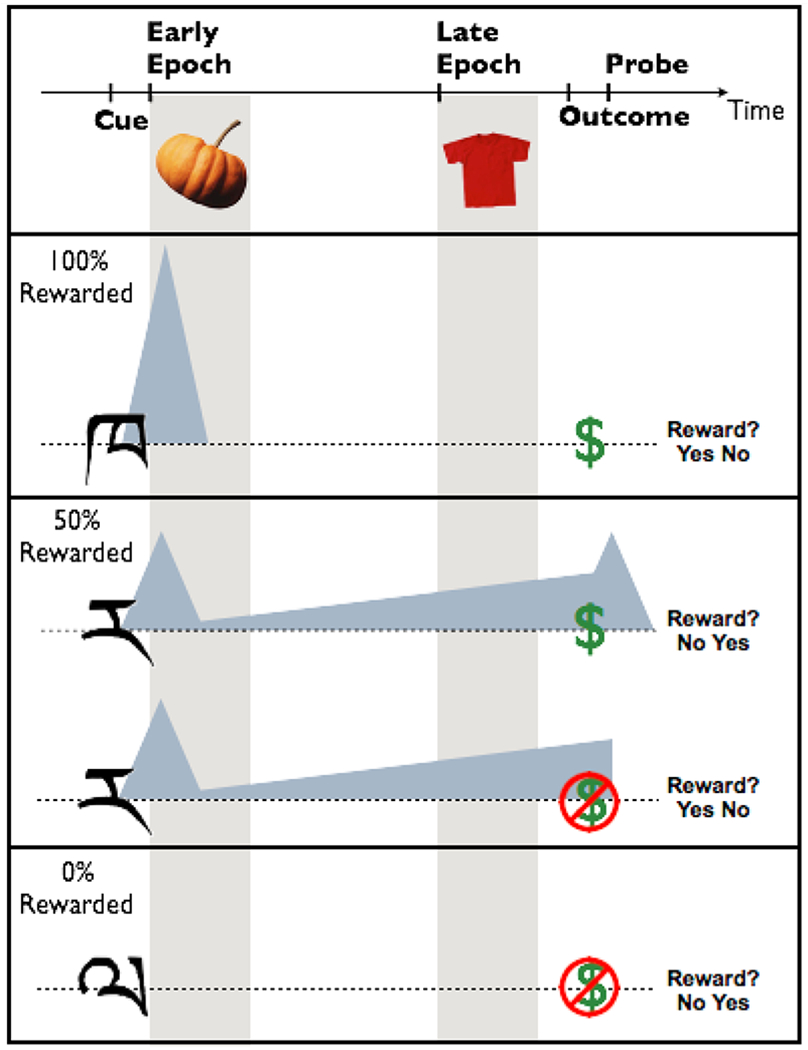

During the incidental encoding task, participants saw a cue (400ms), followed by a unique novel object (1s) either immediately after the cue (400ms post-cue onset, objects remained on the screen for 1s; object offset was 3.2s pre-outcome) or just prior to outcome (3-3.6s post-cue onset, objects remained on the screen for 1s; object offset was 0-0.6s pre-outcome). Note - object presentation and reward outcome did not overlap. These encoding epochs were chosen based on the timing of the phasic dopamine response [<500ms (Schultz, Dayan, and Montague 1997)] and the sustained ramping response [2s (Fiorillo, Tobler, and Schultz 2003); also 4-6s (Howe et al. 2013; Totah, Kim, and Moghaddam 2013)]. After the image offset and during the delay, a fixation cross was shown. A dollar bill or scrambled dollar bill, indicating a reward or no reward respectively (400ms), appeared 4.6 s after cue onset for all trials. After reward feedback, participants were presented with the probe question, “Did you receive a reward?” (1s). Participants were instructed to quickly and accurately make a “yes” or “no” button press. The exact motor component could not be anticipated since the yes/no, right/left location was random from trial-to-trial. A jittered fixation-cross separated trials (1-7s). In sum, there were six conditions in the design: three probabilities of reward (100%: high expected reward value/certain, 50%: medium expected reward value/uncertain, and 0%: no expected reward value/certain) crossed with the early or late encoding epochs (Figure 1). There were 20 trials per condition, evenly dispersed among five blocks.
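The trial timing described above can be checked arithmetically. The following sketch takes the durations stated in the text (400ms cue, 1s object, outcome at 4.6s after cue onset) and recomputes the interval between object offset and outcome onset for each epoch; the constant and function names are hypothetical.

```python
# All times in milliseconds, relative to cue onset (values taken from the text).
CUE_MS = 400               # cue duration
OBJECT_MS = 1000           # object duration
OUTCOME_ONSET_MS = 4600    # outcome onset on every trial

EARLY_ONSET_MS = CUE_MS                # early objects start at cue offset
LATE_ONSET_RANGE_MS = (3000, 3600)     # late objects start 3-3.6s post-cue onset

def pre_outcome_gap_ms(object_onset_ms):
    """Interval between object offset and outcome onset."""
    object_offset_ms = object_onset_ms + OBJECT_MS
    return OUTCOME_ONSET_MS - object_offset_ms

early_gap = pre_outcome_gap_ms(EARLY_ONSET_MS)
# The latest late onset gives the shortest gap, the earliest gives the longest.
late_gaps = (pre_outcome_gap_ms(LATE_ONSET_RANGE_MS[1]),
             pre_outcome_gap_ms(LATE_ONSET_RANGE_MS[0]))

print(early_gap)   # 3200
print(late_gaps)   # (0, 600)
```

These values match the stated offsets of 3.2s pre-outcome for the early epoch and 0-0.6s pre-outcome for the late epoch, and confirm that object presentation never extends past outcome onset.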

Figure 1.

The task was designed to dissociate two physiological profiles of a putative dopamine response during reward anticipation - a phasic response that occurs rapidly and scales with expected reward value (Early Epoch) and a sustained response that increases with uncertainty (Late Epoch). Shaded triangles indicate these dopamine profiles relative to the cue, encoding epochs, outcome, and reward probe events in each trial. Cues associated with 100%, 50%, or 0% reward probability were presented for 400ms. Incidental encoding objects were presented for 1s either immediately following the cue (400ms post-cue onset and 3.2s pre-outcome) or shortly before anticipated reward outcome (3-3.6s post-cue onset and 0-0.6s pre-outcome). Finally, a probe asking participants whether or not they received a reward, with the yes/no response mapping counterbalanced, followed for 1s.

Recognition Memory Test

Participants performed an old/new recognition memory test where they viewed 280 “new” objects and 280 “old” objects. Although “old” objects were from both the reward learning and incidental encoding phases, only the old objects from the incidental encoding phase were included in analyses to calculate memory performance. They rated their confidence by saying “Definitely Sure,” “Pretty Sure,” or “Just Guessing” for each memory judgment. When examining memory accuracy for trials participants labeled as “guesses” (one-sample t-tests within guesses: [hits – false alarms]/all responses) memory was significantly greater than chance. Because of this we included all trials (those labeled as guesses, medium confidence, and high confidence) in the analysis.

Experiment 1 – 24-Hour Retrieval

In Experiment 1, participants returned at the same time the next day to complete the recognition memory test, approximately 24 hours after encoding.

Experiment 2 – Immediate Retrieval

In Experiment 2, participants completed the recognition memory test 15 minutes after completing the encoding task.

Analysis

Memory performance for all analyses was calculated as a corrected hit rate ([hits – false alarms]/all responses). This study was a between-subjects design with specific hypotheses about how dopamine signaling would impact memory. To address our primary research question, we first examined the effects of reward probability (100%, 50%, 0%) and encoding epoch (early, late) in the 24-hour group. Then to examine if the observed effects were consolidation dependent, we examined memory in the Immediate group, comparing memory performance across groups using retrieval time (Immediate, 24-hour) as a between-subjects factor and reward probability (100%, 50%, 0%) as a within-subjects factor. All analyses were corrected for multiple comparisons, either by ANOVA or with sequential Bonferroni correction.
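As an illustration of the memory measure, the sketch below computes a corrected hit rate under one conventional reading of the formula: the hit rate for old items in a condition minus the false-alarm rate for new items. This reading is an assumption, since the text does not spell out the denominators, and the function name and example counts are hypothetical.

```python
def corrected_hit_rate(hits, n_old, false_alarms, n_new):
    """Hit rate minus false-alarm rate.

    Assumption: hits are normalized by the number of old items in the
    condition and false alarms by the number of new items at test.
    """
    if n_old <= 0 or n_new <= 0:
        raise ValueError("item counts must be positive")
    return hits / n_old - false_alarms / n_new

# Hypothetical counts for one participant in one condition:
# 12 of 20 old items recognized, 56 false alarms to 280 new items.
score = corrected_hit_rate(hits=12, n_old=20, false_alarms=56, n_new=280)
print(round(score, 3))
```

A score of zero under this measure corresponds to chance-level discrimination between old and new items.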

Within Experiments/Groups:

Repeated-measures ANOVA (3 x 2) was used to examine the effects of reward probability (100%, 50%, 0%) and encoding epoch (early, late) on subsequent memory performance. Any significant interaction between reward probability and encoding epoch was investigated further using post-hoc analyses. One-way repeated-measures ANOVAs with reward probability as a within-subjects factor, conducted separately at the early encoding (400ms post-cue onset) and late encoding (3-3.6s post-cue onset) epochs, were used to examine how reward probability related to memory formation at each encoding epoch during anticipation. Significant one-way ANOVAs prompted additional follow-up analyses: specifically, a test for a linear trend increasing with probability was used to examine how expected reward value related to memory in the early encoding epoch, and post-hoc t-tests were used to compare memory for certain (100% or 0%) versus uncertain (50%) trials in the late encoding epoch.
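The two follow-up questions (does memory increase linearly with reward probability, and is memory greater for uncertain than certain cues) can each be expressed as a within-subject contrast. The sketch below is one generic way to do this and may differ from the procedure actually used in the study, which reports a linear-trend post-test and pairwise t-tests. The contrast weights, function names, and toy data are all hypothetical.

```python
import math
import statistics

# Contrast weights over conditions ordered (0%, 50%, 100%).
LINEAR = (-1, 0, 1)         # expected-value trend
UNCERTAINTY = (-1, 2, -1)   # 50% versus the two certain conditions

def contrast_scores(data, weights):
    """One contrast score per participant (row of condition means)."""
    return [sum(w * x for w, x in zip(weights, row)) for row in data]

def one_sample_t(scores):
    """t statistic testing whether the mean contrast score differs from 0."""
    n = len(scores)
    return statistics.mean(scores) / (statistics.stdev(scores) / math.sqrt(n))

# Hypothetical corrected hit rates, one row per participant: (0%, 50%, 100%).
toy = [(0.20, 0.25, 0.30),
       (0.10, 0.20, 0.25),
       (0.30, 0.30, 0.45),
       (0.15, 0.25, 0.20)]

linear_scores = contrast_scores(toy, LINEAR)
uncertainty_scores = contrast_scores(toy, UNCERTAINTY)
print(one_sample_t(linear_scores))
print(one_sample_t(uncertainty_scores))
```

The resulting t statistics have n - 1 degrees of freedom; a positive value on the linear contrast indicates memory increasing with expected reward value, and a positive value on the uncertainty contrast indicates better memory for the 50% condition.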

To examine alternate explanations that variability in attention and task engagement at encoding could account for the subsequent memory performance across conditions, we examined performance on the reward probe. Specifically, we conducted 1-way ANOVAs and follow-up pairwise Student’s t-tests as well as tests for a linear trend to determine whether reaction time or accuracy for the reward probe varied as a function of reward probability or encoding epoch.

Finally, to test the alternate explanation that memory for items presented following the 50% probability cue may be influenced by reward outcome (rewarded, unrewarded), we completed two-tailed paired Student’s t-tests to examine whether there were differences in memory for rewarded versus unrewarded trials within that probability condition. These t-tests were conducted separately at the early and late encoding epochs.

Across Experiments:

Since both Experiment 1 (Group 1, 24 hour delay) and Experiment 2 (Group 2, 15 minute delay) revealed differences in memory performance for early versus late encoding epochs, we next tested whether the patterns at each encoding epoch significantly differed across groups (according to retrieval time). We thus first performed 3 x 2 ANOVAs with reward probability (100%, 50%, 0%) as a within-subjects factor and retrieval time (immediate, 24-hours) as a between-subjects factor. We conducted this analysis at both the early and late encoding epochs [Early epoch: reward probability (100%, 50%, 0%) x retrieval time (24 hour vs. Immediate); Late epoch: reward probability (100%, 50%, 0%) x retrieval time (24 hour vs. Immediate)]. A significant interaction between reward probability and retrieval time prompted post-hoc pairwise ANOVAs to examine whether the deltas between immediate and 24-hour retrieval were significantly different across reward probability conditions.

Lastly, we also performed a 2 (encoding epoch: early, late) x 3 (reward probability: 100%, 50%, 0%) x 2 (group: immediate, 24 hour) repeated measures ANOVA using encoding epoch and reward probability as within-subjects factors and group as a between-subjects factor to test for a 3-way interaction.

Results

Reward Learning

Participants in both groups successfully learned the meaning of the cues during the reward-learning phase. In the 24-hour memory group, participants in the final block reported the 100% probable cue as 99.46% (± 0.20 SEM) likely to predict reward, the 50% cue as 52.65% (± 3.39 SEM) likely to predict reward and the 0% cue as 2.29% (± 1.79 SEM) likely to predict reward. In the Immediate memory group, participants in the final block reported the 100% probable cue as 99.37% (± 0.43 SEM) likely to predict reward, the 50% cue as 55.26% (± 2.68 SEM) likely to predict reward and the 0% cue as 1.44% (± 1.07 SEM) likely to predict reward.

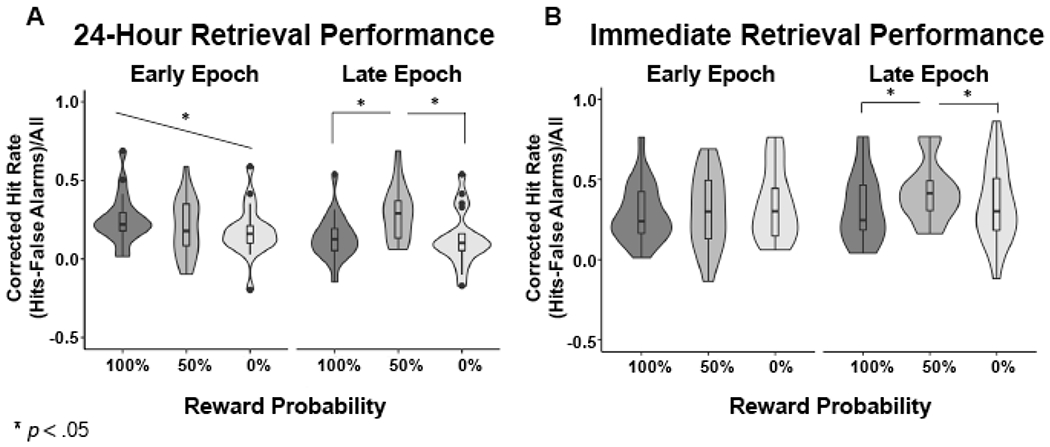

Experiment 1 – 24-Hour Retrieval Group

Because the aim of the study was to examine the memory effects of distinct temporal components of reward anticipation and relate those components to determinants of dopamine physiology, we completed a 3 x 2 repeated-measures, within-subjects ANOVA examining memory performance as a function of reward probability (100%, 50%, 0%) and encoding epoch (early, late). We found a main effect of reward probability (F(2,18) = 5.56, p = 0.01), no main effect of encoding epoch (F(1,19) = 2.57, p = 0.13), and a strong interaction between encoding epoch and reward probability (F(2,18) = 7.50, p = 0.004). Follow-up one-way ANOVAs examining the effect of reward probability on memory within early and late encoding epochs revealed a significant effect in the late epoch (F(2,19) = 13.25, p < 0.0001) and a trend-level effect in the early epoch (F(2,19) = 2.41, p = 0.10). Post-hoc tests to examine memory performance during the early encoding epoch revealed a significant linear trend such that memory scaled with increasing reward probability (Linear trend: R square = 0.03, p = 0.04). Thus, early during anticipation, memory performance linearly tracked expected reward value (Figure 2A). Post-hoc t-tests to examine memory performance during the late encoding epoch revealed greater memory following 50% cues compared to the 100% and 0% cues, with no difference in performance between 100% and 0% cues (100% vs. 50%: t(19) = 4.34, p = 0.0004; 50% vs. 0%: t(19) = 4.20, p = 0.0005; 100% vs. 0%: t(19) = 0.31, p = 0.76; corrected for multiple comparisons using the sequential Bonferroni technique (Holm 1979)). Thus, late in reward anticipation, greater reward uncertainty benefitted memory (Figure 2A).
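For readers unfamiliar with the sequential Bonferroni (Holm) technique, a minimal sketch follows. It is a generic implementation of Holm's step-down adjustment, not the authors' analysis code. The text does not state whether the reported p-values are raw or already adjusted; the example treats the three late-epoch values as raw inputs purely for illustration.

```python
def holm_adjust(p_values):
    """Holm step-down adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity so adjusted values never decrease with rank.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted

# Late-epoch pairwise comparisons as reported in the text
# (assumption: treated here as uncorrected values).
reported = {"100% vs 50%": 0.0004, "50% vs 0%": 0.0005, "100% vs 0%": 0.76}
adjusted = holm_adjust(list(reported.values()))

for label, p_adj in zip(reported, adjusted):
    print(label, round(p_adj, 4), p_adj < 0.05)
```

Under that assumption, both comparisons involving the 50% cue remain below 0.05 after adjustment, while the 100% versus 0% comparison does not.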

Figure 2.

Memory Performance for Early and Late Encoding Epoch at 24-Hour and Immediate Retrieval: A. In the 24-hour Retrieval group, Early Epoch memory linearly increased with expected reward value; Late Epoch memory was greatest for items encoded during reward uncertainty. B. In the Immediate Retrieval group, Early Epoch memory did not differ by expected reward value. As in the 24-hour Retrieval group, Late Epoch memory for the Immediate Retrieval group was greatest for items encoded during reward uncertainty.

It was also possible that the memory benefit we attributed to the uncertain anticipatory context could instead be explained by associations with reward outcomes. To investigate this alternative explanation, we performed t-tests between the rewarded and unrewarded uncertain trials, during both early and late epochs (corrected for multiple comparisons). We found no differences in memory as a function of reward outcome in either epoch (early, rewarded vs. unrewarded: t(19) = 0.10, p = 0.92; late, rewarded vs. unrewarded: t(19) = 1.09, p = 0.29).

Experiment 2 – Immediate Retrieval Group

Examining memory after a 24-hour retrieval delay ensured that performance reflected all the known consolidation-dependent mechanisms of dopamine on memory, but did not allow us to distinguish between effects acting at encoding versus consolidation. Thus, in Experiment 2, we recruited a new group of participants to complete an immediate retrieval test, 15 minutes after encoding. All analyses for Experiment 1 were repeated for Experiment 2. Analyses of immediate retrieval performance replicated effects of reward uncertainty on items presented late in the anticipation epoch; however, they did not show effects of reward probability on items presented early in the epoch, as follows:

A 3 x 2 repeated-measures, within-subjects ANOVA revealed a trend for a main effect of reward probability (F(2,18) = 3.33, p = 0.06) and a main effect of encoding epoch (F(1,19) = 8.008, p = 0.01), with memory greater at late than early encoding epochs (t(19) = 2.83, p = 0.01). Importantly, there was again a significant interaction between reward probability and encoding epoch (F(2,18) = 3.711, p = 0.04). Post-hoc one-way ANOVAs within early and late encoding epochs revealed a significant difference in memory for the late epoch (F(2,19) = 4.95, p = 0.01) but no difference for the early epoch (F(2,19) = 1.31, p = 0.28). Post-hoc t-tests to examine memory performance during the late encoding epoch revealed significantly greater memory following 50% cues relative to both 100% and 0% cues (100% vs. 50%: t(19) = 2.97, p = 0.008; 50% vs. 0%: t(19) = 2.57, p = 0.02), with no difference between the latter (100% vs. 0%: t(19) = 0.66, p = 0.52; corrected for multiple comparisons). The presence of the uncertainty effect at both immediate and 24-hour retrieval indicates that this effect was not dependent on consolidation (Figure 2B).

By contrast, the effect of expected reward value for items in the early encoding epoch was not present at immediate retrieval. Although the ANOVA demonstrated no significant difference in memory performance by reward probability, the test for a linear trend was an a priori analysis. We found no significant linear trend (R square = 0.01, p = 0.14). Thus, the influence of reward probability on memory for items presented early during reward anticipation was not present during immediate retrieval, and only appeared after 24 hours (Figure 2B).

As was the case in the 24-hour retrieval group, analyses for the immediate retrieval group revealed no effects of reward outcome on memory during uncertain trials (early, rewarded vs. unrewarded t(19) < 0.0001, p = 1.00; late, rewarded vs. unrewarded t(19) = 1.33, p = 0.20; corrected for multiple comparisons).

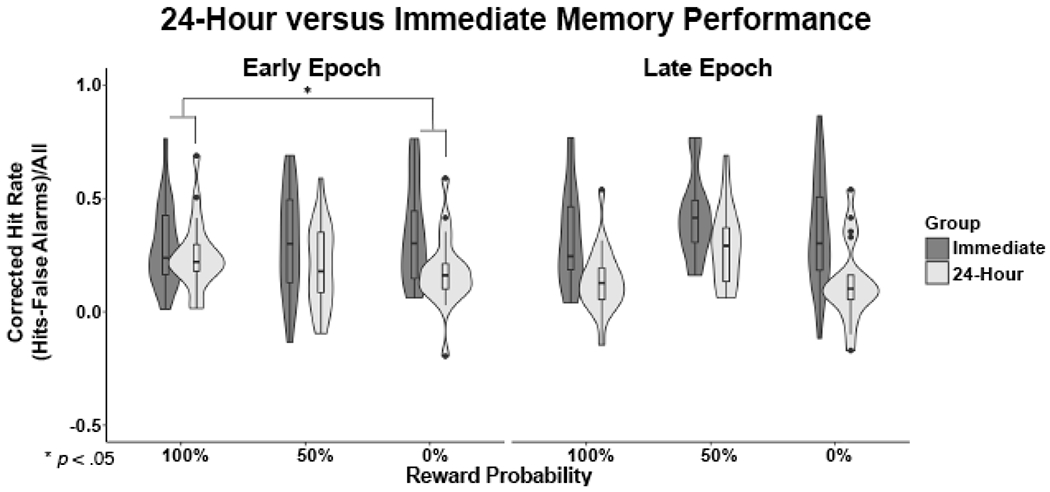

Contrasting 24-Hour and Immediate Memory Groups from Experiments 1 & 2:

To quantify whether memory patterns within early and late encoding epochs changed over a 24-hour period of consolidation, we ran two 3 x 2 repeated-measures ANOVAs, one per encoding epoch (early, late), with the within-subject factor reward probability (100%, 50%, 0%) and the between-subjects factor retrieval time (24-hour group, Immediate group) and looked for an interaction between the two. We found a significant interaction at the early epoch (F(2,37) = 4.281, p = 0.021) but not at the late epoch (F(2,37) = 1.826, p = 0.175). Follow-up pairwise ANOVAs revealed that the decrement in memory performance as a function of retrieval period (24-hour vs. immediate) was significantly greater for 0% than 100% reward (F(1,38) = 8.76, p = 0.005), with no other significant differences (all other ANOVAs: F(1,38) < 1.75, p > 0.19). After consolidation, memory for items encoded during the early epoch decreased more following 0% cues than following 100% cues. Thus, the relationship between memory and reward anticipation remained consistent from immediate to 24-hour retrieval for items at the late encoding epoch, but changed significantly across retrieval periods in the early encoding epoch (Figure 3).

Figure 3.

Retrieval Group by Expected Reward Value Interaction: Within the Early Epoch, there was a significant interaction between reward probability (100%, 50%, 0%) and retrieval group (Immediate, 24-hour). The difference between the 24-hour and Immediate Retrieval groups was smaller at 100% reward probability and greater at 0% reward probability. Thus, in the Early Epoch, the impact of reward value on memory is only evident after overnight consolidation.

To test whether the distinct patterns of results observed within the 24-hour retrieval group and the Immediate retrieval group would be supported by a 3-way interaction, we performed a 2 (encoding epoch: early, late) x 3 (reward probability: 100%, 50%, 0%) x 2 (group: immediate, 24-hour) repeated-measures ANOVA using encoding epoch and reward probability as within-subjects factors and group as a between-subjects factor.

As expected, we observed a significant main effect of reward probability (F(2,76) = 6.40, p = 0.003), no significant main effect of encoding epoch (F(1,38) = 1.50, p = 0.228), and a significant main effect of group (F(1,38) = 7.45, p = 0.01). In addition, we observed the following significant interactions: reward probability x encoding epoch (F(2,76) = 11.04, p < 0.001), reward probability x group (F(2,76) = 3.54, p = 0.034), and encoding epoch x group (F(1,38) = 10.33, p = 0.003). However, we did not observe a significant 3-way interaction (F(2,76) = 1.77, p = 0.178).

Post-hoc analyses following up on the main effect of reward probability revealed greater memory for the 50% reward condition compared to both the 100% and 0% conditions [50% vs. 0%: t(39) = 2.70, p = 0.01; 100% vs. 50%: t(39) = 3.11, p = 0.003, 100% vs. 0%: t(39) = 0.15, p = 0.88; corrected for multiple comparisons]. This result is qualified by the interaction between reward probability and encoding epoch as well as reward probability and group (see below).

As expected, post-hoc analyses following up on the main effect of group revealed better memory for the Immediate than 24 hour retrieval group (t(33.51) = 2.70, p = 0.011).

The significant interaction of reward probability x encoding epoch was driven by no differences in memory performance in the early epoch (all ps > 0.45), but greater memory performance in the uncertain condition compared to both the 100% and 0% condition during the late epoch [50% vs 100%: t(39) = 5.18, p < 0.001; 50% vs. 0%: t(39) = 4.75, p < 0.001; corrected for multiple comparisons].

The significant interaction of reward probability x group showed that within the 24 hour group there were differences in memory performance across all three reward probability levels with uncertainty having the strongest effect on memory performance (50% > 0%: t(19) = 3.34, p < 0.005; 50% > 100% t(19) = 1.97, p = 0.06; 100% > 0%: t(19) = 2.15, p = 0.04; note 50% vs. 100% and 100% vs. 0% do not survive correction for multiple comparisons). In the Immediate retrieval group there was significantly better memory for the uncertain cues compared to the 100% predictive cues (t(19) = 2.46, p = 0.02).

The significant interaction of encoding epoch x group was driven by significantly greater memory in the Late epoch compared to the Early epoch in the Immediate group (t(19) = 2.83, p = 0.01). There were no differences in memory that varied by encoding epoch in the 24 hour group (t(19) = 1.60, p = 0.13).

These results largely confirm findings from our individual group ANOVAs, with the exception that we expected to observe a significant 3-way interaction (reward probability x encoding epoch x group). The differences we observed across groups (linear trend in memory by reward probability in the early epoch only for the 24 hour group, and greater memory for the uncertain than certain reward probabilities in both groups) should be replicated in a larger sample.

Experiments 1 & 2: Confidence During Recognition Memory Test

To examine the degree to which participants’ self-reported confidence ratings for old images varied according to reward probability, encoding epoch, or group, we ran a repeated-measures ANOVA with reward probability (0%, 50%, 100%) and encoding epoch (early, late) as within-subjects factors and retrieval time (Immediate, 24-hour) as a between-subjects factor. As anticipated, average confidence levels for hits were higher in the Immediate vs. 24-hour retrieval group (F(1,38) = 13.796, p = .001). However, average confidence levels did not significantly differ as a function of reward probability (F(1.63,61.85) = .125, p = .841), encoding epoch (F(1,38) = .373, p = .545), or any interactions between the three factors (all ps > 0.147). Note that the non-integer degrees of freedom reflect Greenhouse-Geisser correction for a sphericity violation.

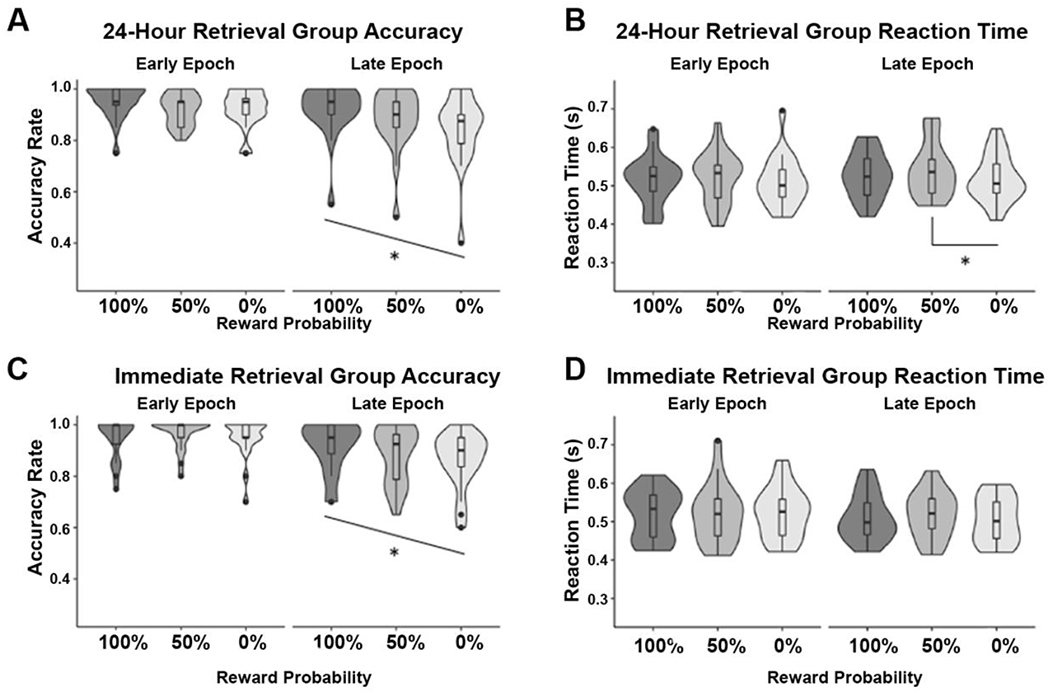

Experiments 1 & 2: Accuracy and Reaction Time During Encoding

To test the explanation that task engagement during encoding may explain the observed relationships between reward anticipation and memory, we examined whether the patterns of accuracy or reaction time for reward probes resembled subsequent memory performance across conditions. In both groups, one-way repeated-measures ANOVAs showed no accuracy differences in probe response by reward probability for trials with items presented in the early epoch (24-hour: F(2,19) = 2.37, p = 0.11; Immediate: F(2,19) = 1.42, p = 0.25; Figure 4A and 4C). However, probe accuracy on trials with items presented in the late epoch in both groups significantly differed with reward probability (24-hour: F(2,19) = 9.83, p = 0.0004; Immediate: F(2,19) = 9.58, p < 0.0001), and revealed significant linear trends, such that people performed more accurately as expected reward value increased (24-hour: R square = 0.06, p < 0.0001; Immediate: R square = 0.04, p = 0.004; Figure 4A and 4C).

Figure 4.

Accuracy and Reaction Times for Reward Probe at Encoding. 24-hour retrieval group: A. Unlike memory, accuracy for responding to the yes/no reward probe on trials with items presented in the early epoch did not differ by expected reward value. For trials with items in the late epoch, accuracy linearly increased with increasing expected reward value. B. Reaction times on trials with items presented in the early epoch did not differ by expected reward value. Reaction times on trials with items presented in the late epoch were slower for the 50% trials than the 0% trials. Immediate retrieval group: C. The pattern of accuracy for detecting the yes/no reward probe was similar to the 24-hour retrieval group. D. There were no significant differences in reaction time for any condition.

One-way repeated-measures ANOVAs of reaction time on trials with items presented in the late epoch revealed a significant difference at 24-hour but not Immediate retrieval (24-hour: F(2,19) = 4.25, p = 0.02; Immediate: F(2,19) = 1.97, p = 0.15; Figure 4B and 4D). Follow-up pairwise t-tests showed that the 24-hour effects were driven by slower reaction times for 50% rewarded trials relative to 0% rewarded trials (50% vs. 0%: t(19) = 3.84, p = 0.001; 100% vs. 50%: t(19) = 1.44, p = 0.17, 100% vs. 0%: t(19) = 1.18, p = 0.25; Figure 4B). There were no significant reaction time differences on trials with items presented in the early epoch (24-hour: F(2,19) = 1.12, p = 0.24; Immediate: F(2,19) = 0.15, p = 0.86; Figure 4B and 4D). Thus, reaction time and accuracy on the reward probe were modulated by reward probability, but not in a manner that explained the observed memory effects.

Discussion

Our findings demonstrate that the influence of reward anticipation on memory formation is temporally specific. In the early anticipation encoding epoch, 400ms after the presentation of the reward cue and temporally coincident with phasic dopamine responses, item memory scaled with expected reward value. During a late anticipation encoding epoch, just prior to a predicted outcome, memory was instead greatest for items presented during high uncertainty. The memory benefit for items presented just after cues for greater rewards was evident only after 24 hours, implying a mechanism requiring consolidation to modulate memory formation. The memory benefit for items presented just prior to uncertain outcomes, however, was evident both immediately and 24 hours after encoding, implying a distinct underlying mechanism that modulates memory formation at encoding.

Dopaminergic Accounts

While this is, to our knowledge, the first behavioral demonstration of dissociable contexts for encoding within reward anticipation, the results build on expectations generated from prior neuroimaging and physiological studies. Previous work using functional magnetic resonance imaging (fMRI) has demonstrated dissociable neural responses within the dopaminergic system for expected reward value and uncertainty (Preuschoff, Bossaerts, and Quartz 2006; Tobler et al. 2007), with one study demonstrating dissociable temporal patterns in the striatum: activation in the first second after a reward cue scaling with expected reward value and in the following seconds leading up to reward outcome scaling with uncertainty (Preuschoff, Bossaerts, and Quartz 2006). Physiologically, cues associated with greater expected reward value elicit greater phasic dopamine firing in the midbrain at latencies less than 400ms (Fiorillo, Tobler, and Schultz 2003; Tobler, Fiorillo, and Schultz 2005), whereas a sustained dopaminergic ramp has been shown to increase with greater reward uncertainty over a two second period of reward anticipation (Fiorillo, Tobler, and Schultz 2003). Because phasic dopamine would be predicted to benefit memory early in the reward anticipation period and sustained anticipatory dopamine to have an effect close to the reward outcome, the present study implies previously undescribed, functionally specific relationships between memory and phasic versus sustained dopamine.

Dissociable effects on memory are grounded in observations of other differential effects of dopaminergic firing modes. Phasic burst firing preferentially influences downstream targets via synaptic release, while sustained low-frequency activity results in extrasynaptic release (Floresco et al. 2003). Extracellular dopamine levels have been demonstrated not only to increase with increased tonic dopamine activity (Floresco et al. 2003), but also to exhibit sustained ramping lasting on the order of seconds (Roitman et al. 2004; Stuber et al. 2005; Howe et al. 2013). The mismatch between the distribution of dopamine receptors in the hippocampus and that of dopamine terminals indicates that phasic dopamine firing in the midbrain cannot be communicated to hippocampal synapses as a temporally precise signal (see Shohamy and Adcock 2010 for review). Optogenetic findings have also revealed that higher (simulating phasic) versus lower (simulating tonic, or possibly sustained) levels of dopamine release have differential influences on dopamine receptors in the hippocampus (Rosen, Cheung, and Siegelbaum 2015). Relationships between hippocampal dopamine release and hippocampal memory formation have yet to be demonstrated. While further work thus remains to elucidate phasic versus sustained (or tonic) dopamine effects on memory, the extant literature supports multiple dissociable mechanisms of dopaminergic influence at distinct timescales (Düzel et al. 2010; Shohamy and Adcock 2010).

The present observation of a 24-hour memory benefit following higher expected reward value early during reward anticipation is consistent with a previously described relationship in the literature between dopamine and consolidation-dependent memory effects. Expected reward value predicts phasic dopamine activity in the VTA (Schultz, Dayan, and Montague 1997; Schultz 1998; Fiorillo, Tobler, and Schultz 2003; Pan et al. 2005; Tobler, Fiorillo, and Schultz 2005; Cohen et al. 2012). Dopamine has been associated with enhancement of late-phase long-term potentiation (Lemon and Manahan-Vaughan 2006) and is a critical element in the synaptic-tagging and capture theory of memory consolidation (Sajikumar and Frey 2004; Lisman, Grace, and Duzel 2011; Redondo and Morris 2011). Additionally, optogenetically-induced burst firing of dopaminergic fibers results in increased hippocampal replay during post-learning sleep and increased memory (McNamara et al. 2014). Thus, previous work supports a relationship between dopamine and enhanced hippocampal memory consolidation. Our novel demonstration of a temporally-specific effect of expected reward value on memory during the early epoch of reward anticipation is consistent with phasic dopamine driving consolidation-dependent memory processes.

On the other hand, our observation of a memory benefit during high uncertainty just prior to reward outcome, evident immediately and persisting after consolidation, suggests a mechanism of memory enhancement that occurs at encoding. High reward uncertainty has been associated with sustained, ramping dopamine firing in the VTA (Fiorillo, Tobler, and Schultz 2003). As noted above, relationships between dopamine release and hippocampal memory formation have yet to be demonstrated. What has been shown, however, is that tonic dopamine has immediately observable effects on hippocampal physiology (Otmakhova and Lisman 1999; Martig and Mizumori 2011; Rosen, Cheung, and Siegelbaum 2015) and on the threshold for early long-term potentiation (Li et al. 2003), providing candidate mechanisms whereby sustained dopamine may contribute to memory at immediate retrieval, in the hippocampus and elsewhere. This hypothesis opens new avenues for future investigation.

By demonstrating and dissociating both immediate and consolidation-dependent memory benefits related to reward anticipation, our study takes an important first step towards reconciling conflicting patterns of findings in the memory literature. Prior rodent work has demonstrated the importance of consolidation for dopamine-dependent memory formation (Bethus, Tse, and Morris 2010; McNamara et al. 2014) and theoretical mechanisms of dopamine synaptic activity have emphasized consolidation (Lisman, Grace, and Duzel 2011; Redondo and Morris 2011). However, some reward anticipation effects on memory are not consolidation-dependent (Murty and Adcock 2014; Gruber, Gelman, and Ranganath 2014). Our data integrate across previous studies, suggesting that while phasic, synaptic dopamine effects may indeed be dependent on consolidation, effects of sustained and extrasynaptic dopamine may occur at encoding.

Alternative Accounts and Limitations

In the present study, we did not manipulate dopamine directly. It is thus possible that our effects, and in particular, the uncertainty benefit modulating encoding, were not dopaminergic in nature. Although prior studies using pharmacological manipulations have already contributed direct evidence that dopamine affects memory formation in humans (Knecht et al. 2004; Chowdhury et al. 2012), they have not been shown to selectively affect specific modes of dopamine firing, and indeed L-Dopa should enhance both. Our hypotheses were based on work showing sustained neuronal firing in the VTA scaling with greater uncertainty. It has been debated whether this signal represents sustained dopaminergic firing or represents an accumulation of phasic responses (Niv, Duff, and Dayan 2005). There is evidence that ramping activity in the VTA may be GABAergic in nature (Cohen et al. 2012). Other work, however, is consistent with a sustained signal that is actively maintained (Howe et al. 2013; Totah, Kim, and Moghaddam 2013; Lloyd and Dayan 2015; MacInnes et al. 2016; Murty, Ballard, and Adcock 2016). In addition, sustained dopamine release in efferent regions has been demonstrated scaling with reward proximity and reward magnitude (Howe et al. 2013); a response to uncertainty has yet to be experimentally examined in efferent regions. Other neurotransmitters, such as acetylcholine, offer additional potential mechanisms for enhanced memory formation; these are not mutually exclusive. The hippocampus in particular is densely populated with cholinergic receptors (Alkondon and Albuquerque 1993) and acetylcholine has been discussed as important for expected uncertainty (Yu and Dayan 2005; Sarter et al. 2014), which may be similar to the cued uncertainty in this study. Finally, under some behavioral contexts, hippocampal dopamine release appears to require neuronal activity within the locus coeruleus, implicating noradrenergic neurons (Smith and Greene 2012; Kempadoo et al. 2016; Takeuchi et al. 2016). The current work introduces possibilities for future experiments that disentangle the roles of specific neuromodulators in encoding during high reward uncertainty.

This behavioral project also lacks neural evidence of engagement of specific regions within the medial temporal lobe (MTL) and the rest of the brain. Our discussion and interpretation focus predominantly on the hippocampus given extensive evidence supporting its role in reward-related memory formation and the evidence for modulation of its physiology. However, there is also strong support for engagement of the perirhinal cortex during familiarity memory: indeed, research suggests the perirhinal cortex is critical for familiarity memory while the hippocampus is important for recollection (Diana, Yonelinas, and Ranganath 2007; Eichenbaum, Yonelinas, and Ranganath 2007; Suzuki and Naya 2014). We included all trial types in our analyses here: guesses, medium, and high confidence trials. Prior work relating these confidence ratings to memory processes suggests that our overall memory measure is likely to reflect the function of not only the hippocampus proper, but also the broader MTL including perirhinal cortex. In addition, when analyzing self-reported confidence data for old images (specifically hits), we observed significant group differences (greater confidence in the Immediate than 24-hour group), but no other significant effects. From prior literature (Lisman, Grace, and Duzel 2011; Shohamy and Adcock 2010) it might be predicted that participants would report highest confidence ratings for trials associated with highest reward (or uncertainty), particularly given that if individuals recollect an object it should be associated with high confidence. Notably, in this paradigm we did not use a remember/know assessment or otherwise specifically assess recollection. Furthermore, both recollection and familiarity can support high confidence, so that confidence alone should not be used as a measure of recollection (though typically highest confidence ratings are associated with recollection (Yonelinas et al. 2010)). Future iterations using a remember/know structure to assess recollection, fMRI, and more trials may permit analyzing guesses and low-confidence trials separately from high confidence trials. We would predict that guesses and low-confidence trials would recruit the perirhinal cortex, while high-confidence trials would recruit the hippocampus, allowing the test of additional, anatomical hypotheses about distinct mechanisms for the reward anticipation effects shown here.

Multiple alternative accounts were also considered as potential explanations of our memory findings. One intuitive possibility is that enhanced memory formation was a result of greater task engagement, specifically increased attention. Pearce and Hall introduced the notion of attention influencing learning of uncertain stimuli (Pearce and Hall 1980) and this theory has been corroborated by others showing increased attention to uncertain cues (Hogarth et al. 2008; Koenig et al. 2017). As such, attention may have a particularly strong influence on learning in the 50% reward condition (i.e., attention may increase towards the end of the trial). One measure we have for attention in the current paradigm is reaction time for responding to the reward probe; this may reflect encoding of the reward event but can only indirectly reflect attention prior to reward delivery. This limits the conclusions we can draw regarding the influence of attention during the cue and anticipation periods, which occur prior to reward delivery. At the time of the reward probe, in the Immediate Retrieval Group, there were no significant differences in reaction time whether objects were shown in the early or the late epoch. In the 24 Hour Retrieval Group, there were no differences in reaction time when objects occurred in the early epoch; when objects occurred in the late epoch, reaction time for 50% reward cues was significantly slower than for 0% reward cues but, importantly, no different from 100% reward cues. The best manner of assessing the influence of attention during encoding may be to include eye tracking and/or neuroimaging measures in future iterations of the task. Given the present data, it is difficult to quantify how much of our observed effects are due to attention, though we speculate it is playing an important role.

Another possible alternative was that the memory benefit for uncertainty in the late encoding epoch was due to a phasic dopaminergic response to reward delivery. However, there was no evidence for this relationship, as there was no memory difference for items presented prior to rewarded versus unrewarded outcomes on the uncertain trials.

Lastly, the effect of reward probability on information presented during the early epoch was not particularly strong, and the follow-up linear trend showing increasing memory with increasing reward probability explained a small proportion of the variance in the data. In addition, the differences we observed across groups were not robust to a 3-way interaction. As such, future experiments including more trials and a larger sample should replicate these results.

Conclusion

The present study builds on prior findings that reward anticipation modulates memory formation. Here we show that, within reward anticipation, there are distinct temporal contexts for encoding, with mechanistically distinct impact on memory outcomes. By mapping these distinct encoding contexts onto the putative physiological profiles for expected reward value and uncertainty, this work suggests a novel working model of dopaminergic influence on memory formation for future investigation: that whereas phasic dopamine release acts to facilitate memory consolidation, sustained dopamine release acts to benefit memory encoding. Integrating disparate findings, our proposed model paves the way for future research examining contextually-regulated mechanisms of reward-enhanced memory formation.

Acknowledgements

The authors would like to thank Vishnu Murty for helpful comments on this manuscript. This work was supported by a NIMH BRAINS award (R01MH094743) to R.A.A. and a KL2 award (TR002554) to K.C.D. The authors declare no competing financial interests.

References

- Adcock R. Alison, Thangavel Arul, Whitfield-Gabrieli Susan, Knutson Brian, and Gabrieli John D. E. 2006. “Reward-Motivated Learning: Mesolimbic Activation Precedes Memory Formation.” Neuron 50 (3): 507–17.

- Alkondon M, and Albuquerque EX. 1993. “Diversity of Nicotinic Acetylcholine Receptors in Rat Hippocampal Neurons. I. Pharmacological and Functional Evidence for Distinct Structural Subtypes.” The Journal of Pharmacology and Experimental Therapeutics 265 (3): 1455–73.

- Bergson C, Mrzljak L, Smiley JF, Pappy M, Levenson R, and Goldman-Rakic PS. 1995. “Regional, Cellular, and Subcellular Variations in the Distribution of D1 and D5 Dopamine Receptors in Primate Brain.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 15 (12): 7821–36.

- Bethus Ingrid, Tse Dorothy, and Morris Richard G. M. 2010. “Dopamine and Memory: Modulation of the Persistence of Memory for Novel Hippocampal NMDA Receptor-Dependent Paired Associates.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 30 (5): 1610–18.

- Camps M, Cortés R, Gueye B, Probst A, and Palacios JM. 1989. “Dopamine Receptors in Human Brain: Autoradiographic Distribution of D2 Sites.” Neuroscience 28 (2): 275–90.

- Chowdhury Rumana, Guitart-Masip Marc, Bunzeck Nico, Dolan Raymond J., and Düzel Emrah. 2012. “Dopamine Modulates Episodic Memory Persistence in Old Age.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 32 (41): 14193–204.

- Ciliax BJ, Nash N, Heilman C, Sunahara R, Hartney A, Tiberi M, Rye DB, Caron MG, Niznik HB, and Levey AI. 2000. “Dopamine D(5) Receptor Immunolocalization in Rat and Monkey Brain.” Synapse 37 (2): 125–45.

- Cohen Jeremiah Y., Haesler Sebastian, Vong Linh, Lowell Bradford B., and Uchida Naoshige. 2012. “Neuron-Type-Specific Signals for Reward and Punishment in the Ventral Tegmental Area.” Nature 482 (7383): 85–88.

- Dawson TM, Gehlert DR, McCabe RT, Barnett A, and Wamsley JK. 1986. “D-1 Dopamine Receptors in the Rat Brain: A Quantitative Autoradiographic Analysis.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 6 (8): 2352–65.

- Diana Rachel A., Yonelinas Andrew P., and Ranganath Charan. 2007. “Imaging Recollection and Familiarity in the Medial Temporal Lobe: A Three-Component Model.” Trends in Cognitive Sciences 11 (9): 379–86.

- Düzel Emrah, Bunzeck Nico, Guitart-Masip Marc, and Düzel Sandra. 2010. “NOvelty-Related Motivation of Anticipation and Exploration by Dopamine (NOMAD): Implications for Healthy Aging.” Neuroscience and Biobehavioral Reviews 34 (5): 660–69.

- Eichenbaum H, Yonelinas AP, and Ranganath C. 2007. “The Medial Temporal Lobe and Recognition Memory.” Annual Review of Neuroscience 30: 123–52.

- Fiorillo Christopher D., Tobler Philippe N., and Schultz Wolfram. 2003. “Discrete Coding of Reward Probability and Uncertainty by Dopamine Neurons.” Science 299 (5614): 1898–1902.

- Floresco Stan B., West Anthony R., Ash Brian, Moore Holly, and Grace Anthony A. 2003. “Afferent Modulation of Dopamine Neuron Firing Differentially Regulates Tonic and Phasic Dopamine Transmission.” Nature Neuroscience 6 (9): 968–73.

- Gasbarri A, Sulli A, and Packard MG. 1997. “The Dopaminergic Mesencephalic Projections to the Hippocampal Formation in the Rat.” Progress in Neuro-Psychopharmacology & Biological Psychiatry 21 (1): 1–22.

- Gasbarri A, Verney C, Innocenzi R, Campana E, and Pacitti C. 1994. “Mesolimbic Dopaminergic Neurons Innervating the Hippocampal Formation in the Rat: A Combined Retrograde Tracing and Immunohistochemical Study.” Brain Research 668 (1-2): 71–79.

- Gruber Matthias J., Gelman Bernard D., and Ranganath Charan. 2014. “States of Curiosity Modulate Hippocampus-Dependent Learning via the Dopaminergic Circuit.” Neuron 84 (2): 486–96.

- Gruber Matthias J., and Otten Leun J. 2010. “Voluntary Control over Prestimulus Activity Related to Encoding.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 30 (29): 9793–9800.

- Hogarth Lee, Dickinson Anthony, Austin Alison, Brown Craig, and Duka Theodora. 2008. “Attention and Expectation in Human Predictive Learning: The Role of Uncertainty.” Quarterly Journal of Experimental Psychology 61 (11): 1658–68.

- Holm Sture. 1979. “A Simple Sequentially Rejective Multiple Test Procedure.” Scandinavian Journal of Statistics, Theory and Applications 6 (2): 65–70.

- Howe Mark W., Tierney Patrick L., Sandberg Stefan G., Phillips Paul E. M., and Graybiel Ann M. 2013. “Prolonged Dopamine Signalling in Striatum Signals Proximity and Value of Distant Rewards.” Nature 500 (7464): 575–79.

- Jiao Xilu, Paré William P., and Tejani-Butt Shanaz. 2003. “Strain Differences in the Distribution of Dopamine Transporter Sites in Rat Brain.” Progress in Neuro-Psychopharmacology & Biological Psychiatry 27 (6): 913–19.

- Kempadoo Kimberly A., Mosharov Eugene V., Choi Se Joon, Sulzer David, and Kandel Eric R. 2016. “Dopamine Release from the Locus Coeruleus to the Dorsal Hippocampus Promotes Spatial Learning and Memory.” Proceedings of the National Academy of Sciences of the United States of America 113 (51): 14835–40.

- Khan ZU, Gutiérrez A, Martín R, Peñafiel A, Rivera A, and de la Calle A. 2000. “Dopamine D5 Receptors of Rat and Human Brain.” Neuroscience 100 (4): 689–99.

- Knecht Stefan, Breitenstein Caterina, Bushuven Stefan, Wailke Stefanie, Kamping Sandra, Flöel Agnes, Zwitserlood Pienie, and Ringelstein E. Bernd. 2004. “Levodopa: Faster and Better Word Learning in Normal Humans.” Annals of Neurology 56 (1): 20–26.

- Koenig Stephan, Kadel Hanna, Uengoer Metin, Schubö Anna, and Lachnit Harald. 2017. “Reward Draws the Eye, Uncertainty Holds the Eye: Associative Learning Modulates Distractor Interference in Visual Search.” Frontiers in Behavioral Neuroscience 11 (July): 128.

- Lemon Neal, and Manahan-Vaughan Denise. 2006. “Dopamine D1/D5 Receptors Gate the Acquisition of Novel Information through Hippocampal Long-Term Potentiation and Long-Term Depression.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 26 (29): 7723–29.

- Lewis DA, Melchitzky DS, Sesack SR, Whitehead RE, Auh S, and Sampson A. 2001. “Dopamine Transporter Immunoreactivity in Monkey Cerebral Cortex: Regional, Laminar, and Ultrastructural Localization.” The Journal of Comparative Neurology 432 (1): 119–36.

- Li Shaomin, Cullen William K., Anwyl Roger, and Rowan Michael J. 2003. “Dopamine-Dependent Facilitation of LTP Induction in Hippocampal CA1 by Exposure to Spatial Novelty.” Nature Neuroscience 6 (5): 526–31.

- Lisman John, Grace Anthony A., and Duzel Emrah. 2011. “A neoHebbian Framework for Episodic Memory; Role of Dopamine-Dependent Late LTP.” Trends in Neurosciences 34 (10): 536–47.

- Little KY, Carroll FI, and Cassin BJ. 1995. “Characterization and Localization of [125I] RTI-121 Binding Sites in Human Striatum and Medial Temporal Lobe.” Journal of Pharmacology and Experimental. http://jpet.aspetjournals.org/content/274/3/1473.short.

- Lloyd Kevin, and Dayan Peter. 2015. “Tamping Ramping: Algorithmic, Implementational, and Computational Explanations of Phasic Dopamine Signals in the Accumbens.” PLoS Computational Biology 11 (12): e1004622.

- MacInnes Jeff J., Dickerson Kathryn C., Chen Nan-Kuei, and Adcock R. Alison. 2016. “Cognitive Neurostimulation: Learning to Volitionally Sustain Ventral Tegmental Area Activation.” Neuron 89 (6): 1331–42.

- Martig Adria K., and Mizumori Sheri J. 2011. “Ventral Tegmental Area Disruption Selectively Affects CA1/CA2 but not CA3 Place Fields during a Differential Reward Working Memory Task.” Hippocampus 21 (2): 172–84.

- Mather Mara, and Schoeke Andrej. 2011. “Positive Outcomes Enhance Incidental Learning for Both Younger and Older Adults.” Frontiers in Neuroscience 5 (November): 129.

- McNamara Colin G., Tejero-Cantero Álvaro, Trouche Stéphanie, Campo-Urriza Natalia, and Dupret David. 2014. “Dopaminergic Neurons Promote Hippocampal Reactivation and Spatial Memory Persistence.” Nature Neuroscience 17 (12): 1658–60.

- Miendlarzewska Ewa A., Bavelier Daphne, and Schwartz Sophie. 2016. “Influence of Reward Motivation on Human Declarative Memory.” Neuroscience and Biobehavioral Reviews 61 (February): 156–76.

- Murty Vishnu P., and Adcock R. Alison. 2014. “Enriched Encoding: Reward Motivation Organizes Cortical Networks for Hippocampal Detection of Unexpected Events.” Cerebral Cortex 24 (8): 2160–68.

- Murty Vishnu P., Ballard Ian C., and Adcock R. Alison. 2016. “Hippocampus and Prefrontal Cortex Predict Distinct Timescales of Activation in the Human Ventral Tegmental Area.” Cerebral Cortex, January 10.1093/cercor/bhw005.

- Niv Yael, Duff Michael O., and Dayan Peter. 2005. “Dopamine, Uncertainty and TD Learning.” Behavioral and Brain Functions: BBF 1 (May): 6.

- Otmakhova NA, and Lisman JE. 1996. “D1/D5 Dopamine Receptor Activation Increases the Magnitude of Early Long-Term Potentiation at CA1 Hippocampal Synapses.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 16 (23): 7478–86.

- Otmakhova NA, and Lisman JE. 1999. “Dopamine Selectively Inhibits the Direct Cortical Pathway to the CA1 Hippocampal Region.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 19 (4): 1437–45.

- Pan Wei-Xing, Schmidt Robert, Wickens Jeffery R., and Hyland Brian I. 2005. “Dopamine Cells Respond to Predicted Events during Classical Conditioning: Evidence for Eligibility Traces in the Reward-Learning Network.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 25 (26): 6235–42.

- Pearce JM, and Hall G. 1980. “A Model for Pavlovian Learning: Variations in the Effectiveness of Conditioned but Not of Unconditioned Stimuli.” Psychological Review 87 (6): 532–52.

- Preuschoff Kerstin, Bossaerts Peter, and Quartz Steven R. 2006. “Neural Differentiation of Expected Reward and Risk in Human Subcortical Structures.” Neuron 51 (3): 381–90.

- Redondo Roger L., and Morris Richard G. M. 2011. “Making Memories Last: The Synaptic Tagging and Capture Hypothesis.” Nature Reviews. Neuroscience 12 (1): 17–30.

- Roitman Mitchell F., Stuber Garret D., Phillips Paul E. M., Wightman R. Mark, and Carelli Regina M. 2004. “Dopamine Operates as a Subsecond Modulator of Food Seeking.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 24 (6): 1265–71.

- Rosen Zev B., Cheung Stephanie, and Siegelbaum Steven A. 2015. “Midbrain Dopamine Neurons Bidirectionally Regulate CA3-CA1 Synaptic Drive.” Nature Neuroscience 18 (12): 1763–71.

- Sajikumar Sreedharan, and Frey Julietta U. 2004. “Late-Associativity, Synaptic Tagging, and the Role of Dopamine during LTP and LTD.” Neurobiology of Learning and Memory 82 (1): 12–25.

- Sarter Martin, Lustig Cindy, Howe William M., Gritton Howard, and Berry Anne S. 2014. “Deterministic Functions of Cortical Acetylcholine.” The European Journal of Neuroscience 39 (11): 1912–20.

- Schultz W. 1998. “Predictive Reward Signal of Dopamine Neurons.” Journal of Neurophysiology 80 (1): 1–27.

- Schultz W, Dayan P, and Montague PR. 1997. “A Neural Substrate of Prediction and Reward.” Science 275 (5306): 1593–99.

- Shohamy Daphna, and Adcock R. Alison. 2010. “Dopamine and Adaptive Memory.” Trends in Cognitive Sciences 14 (10): 464–72.

- Smith Caroline C., and Greene Robert W. 2012. “CNS Dopamine Transmission Mediated by Noradrenergic Innervation.” The Journal of Neuroscience: The Official Journal of the Society for Neuroscience 32 (18): 6072–80.

- Stuber Garret D., Roitman Mitchell F., Phillips Paul E. M., Carelli Regina M., and Wightman R. Mark. 2005. “Rapid Dopamine Signaling in the Nucleus Accumbens during Contingent and Noncontingent Cocaine Administration.” Neuropsychopharmacology: Official Publication of the American College of Neuropsychopharmacology 30 (5): 853–63.

- Suzuki Wendy A., and Naya Yuji. 2014. “The Perirhinal Cortex.” Annual Review of Neuroscience 37: 39–53.

- Takeuchi Tomonori, Duszkiewicz Adrian J., Sonneborn Alex, Spooner Patrick A., Yamasaki Miwako, Watanabe Masahiko, Smith Caroline C., et al. 2016. “Locus Coeruleus and Dopaminergic Consolidation of Everyday Memory.” Nature 537 (7620): 357–62.

- Tobler Philippe N., Fiorillo Christopher D., and Schultz Wolfram. 2005. “Adaptive Coding of Reward Value by Dopamine Neurons.” Science 307 (5715): 1642–45.

- Tobler Philippe N., O’Doherty John P., Dolan Raymond J., and Schultz Wolfram. 2007. “Reward Value Coding Distinct from Risk Attitude-Related Uncertainty Coding in Human Reward Systems.” Journal of Neurophysiology 97 (2): 1621–32.

- Totah Nelson K. B., Kim Yunbok, and Moghaddam Bita. 2013. “Distinct Prestimulus and Poststimulus Activation of VTA Neurons Correlates with Stimulus Detection.” Journal of Neurophysiology 110 (1): 75–85.

- Wittmann Bianca C., Schott Björn H., Guderian Sebastian, Frey Julietta U., Heinze Hans-Jochen, and Düzel Emrah. 2005. “Reward-Related FMRI Activation of Dopaminergic Midbrain Is Associated with Enhanced Hippocampus-Dependent Long-Term Memory Formation.” Neuron 45 (3): 459–67.

- Yonelinas Andrew P., Aly Mariam, Wang Wei-Chun, and Koen Joshua D. 2010. “Recollection and Familiarity: Examining Controversial Assumptions and New Directions.” Hippocampus 20 (11): 1178–94.

- Yu Angela J., and Dayan Peter. 2005. “Uncertainty, Neuromodulation, and Attention.” Neuron 46 (4): 681–92.