Significance

Flying insects are Earth’s most diverse taxonomic class. They exhibit remarkable abilities to locate resources while flying through complex 3D environments. However, we still have not deciphered how flying insects integrate multiple stimuli and extract depth to locate distant targets. Here, we assessed long-range search behavior in tethered flies using virtual reality. We first show that insects of multiple species can navigate to virtual 3D objects. We then show flies can employ perspective and motion parallax to distinguish size and distance amidst a complex background, and can incorporate directional airflow and odor to navigate toward distant targets. These results provide the foundation to elucidate search processes for pest management, pollination, and dispersal, and apply them to robotics and search algorithms.

Keywords: behavioral ecology, dispersal, foraging, multisensory integration, search algorithm

Abstract

The exemplary search capabilities of flying insects have established them as one of the most diverse taxa on Earth. However, we still lack the fundamental ability to quantify, represent, and predict trajectories under natural contexts to understand search and its applications. For example, flying insects have evolved in complex multimodal three-dimensional (3D) environments, but we do not yet understand which features of the natural world are used to locate distant objects. Here, we independently and dynamically manipulate 3D objects, airflow fields, and odor plumes in virtual reality over large spatial and temporal scales. We demonstrate that flies make use of features such as foreground segmentation, perspective, motion parallax, and integration of multiple modalities to navigate to objects in a complex 3D landscape while in flight. We first show that tethered flying insects of multiple species navigate to virtual 3D objects. Using the apple fly Rhagoletis pomonella, we then measure their reactive distance to objects and show that these flies use perspective and local parallax cues to distinguish and navigate to virtual objects of different sizes and distances. We also show that apple flies can orient in the absence of optic flow by using only directional airflow cues, and require simultaneous odor and directional airflow input for plume following to a host volatile blend. The elucidation of these features unlocks the opportunity to quantify parameters underlying insect behavior such as reactive space, optimal foraging, and dispersal, as well as develop strategies for pest management, pollination, robotics, and search algorithms.

Long-range search is essential for nearly all aspects of animal behavior from locating mates and food to population dispersal (1–5). Deciphering search behavior is important for understanding sensorimotor translation in the nervous system and its role in an organism’s survival. Translating the search process is useful for generating efficient algorithms for artificial intelligence, robotics, and internet search protocols, among others (6, 7). Search involves the discrimination of environmental cues, identification of relevant objects, and the subsequent active translation of the organism toward a particular resource or location (1, 3). These conditions demand a continuous and dynamic assessment of stimulus space with subsequent action to accurately locate distant targets. As a consequence, understanding proximate mechanisms of search behavior requires not only the evaluation of initial and final conditions of the search but also the quantitative measurement of stimulus context and behavioral response at all points of the search trajectory (3, 5).

Flying insects play an integral role in human and environmental ecosystems as pests, vectors, pollinators, and nutrient cyclers. While it is obvious that each of these scenarios requires insects to find objects of interest, it is unclear what features of the natural world are utilized for this purpose. Here, we define long-range behavior (1, 3, 4) as locating objects at distances extending three orders of magnitude of body size (e.g., >10 m for a 1-cm-long insect). At these scales, most insects cannot visually resolve many cues of interest such as fruits, flowers, leaves, or conspecifics, thus necessitating multimodal integration to localize the object of interest. Therefore, insects have been suggested to employ long-range optomotor anemotaxis (see SI Appendix, Table S1 for a glossary of keywords) in flight by integrating wide-field visual cues, wind direction for upwind orientation, and olfactory cues from the fine-scale structure of odor plumes (3, 8, 9). Insects are known to utilize wide-field cues such as horizon, slip, ground translation, and sky rotation to maintain stable flight (3, 10–13). Insects have been shown to make use of small-field cues such as perspective and motion parallax to localize objects while walking (14, 15) and measure distance traveled in flight (16, 17). However, we do not know if insects make use of these features to rapidly discriminate, localize, and navigate to objects amidst a complex three-dimensional (3D) landscape while in flight. For example, while difficult to achieve in stochastic real-world environments, it is possible that insects could utilize a form of image matching (18) to the two-dimensional (2D) pattern and odor identity of relevant objects during long-range search without employing 3D or odor plume features.

Deconstructing multimodal search behavior therefore necessitates the precise measurement of a flying insect’s response to objects under relevant ecological contexts that provide dynamic 3D visual scenery, windscapes, and odor flux over large spatial and temporal scales. The detection and response of flying insects to objects and multimodal cues in the natural world have been well-studied in pollinator systems, albeit at relatively short spatial scales (<2 m) (19–21). However, because of their small size, relatively high flight speeds, and large dispersal scales, where and when flying insects detect and respond to objects at the larger scales required for long-range behavior remains largely unquantified (3, 5). Essentially, we can either track the insect or the stimulus in nature, but not both simultaneously. For these reasons, such observations have been limited to confined areas (∼10 m2) (22) and nonecological (10, 23, 24) or unimodal (25, 26) conditions that cannot replicate the search process in its entirety.

Virtual reality (VR) technology provides an opportunity to precisely control stimulus delivery and modulate behavioral output. By using minimal stimulus features such as moving stripes, spots, and binary odor pulses, VR arenas have allowed us to understand sensorimotor coupling and integration mechanisms in the nervous system (10, 23–26). However, these arenas cannot be used to assess long-range search behavior, nor have they been applied to ecological questions to date (10, 23–26). For example, vertical bars, a commonly used 2D stimulus in most VRs, cannot be utilized to assess depth cues such as perspective and motion parallax (11, 23, 27–31). Existing arenas also lack the capability to simultaneously present dynamic visual scenery containing 3D objects in the presence of airflow fields and odor flux (10, 23–26) at large scales critical for the multimodal search behavior exhibited by flying insects in nature (3, 8, 18, 32).

Here, we assessed critical parameters of long-range search including reactive distance, perspective, motion parallax, anemotaxis, and plume following using a multimodal virtual reality (MultiMoVR) arena. Current technological limitations restrict the ability to produce arbitrary color spectra, analog odor and wind, and simultaneous comprehensive biomechanical feedback present in the real world. To account for these confounding variables, we present dynamic and controlled stimulus feedback to tethered animals using VR stimuli compared against observed real-world search behaviors to assess the efficacy of our technique. This required a model system whose ecology and multimodal preferences were well-studied and stereotyped for specific objects. We thus chose the apple fly Rhagoletis pomonella as our model system. R. pomonella are specialist insects with adults of both sexes using specific visual and odor cues of ripe fruit in the tree canopy to locate sites for mating and oviposition (22, 33). These multimodal cues are well-documented (22, 33), as is an ethogrammatic description of fly orientation behavior in the field (1, 22). To this end, we provided photorealistic scenes and perspective-accurate stimuli of 3D tree models along with grass and sky textures in a 1,025 × 1,025-m landscape, including directional airflow and odor. This landscape was presented with a periodic boundary condition such that as the animal approaches the end of the virtual landscape, it is seamlessly placed at the opposite side, so the animal can essentially translate infinitely in any direction. Using this arena, we show that multiple Dipteran species, including a North American pest (R. pomonella), tropical vector (Aedes aegypti), Asian species (Pselliophora laeta), and cosmopolitan pollinator (Eristalis tenax), can navigate toward virtual 3D objects in a complex environment. We then measure the reactive distance (19–21) of R. pomonella to objects during long-range search. We also show that R. pomonella utilizes motion parallax and perspective to discriminate virtual objects of varying sizes and distances in a complex 3D environment, respond to directional airflow based on velocity, and orient to directional odor flux in VR. We finally discuss how this evidence opens avenues of exploration for a variety of biological and technological applications.
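The periodic boundary condition described above can be illustrated with a minimal sketch. Only the 1,025-m side length comes from the text; the function names, the heading convention, and the time-stepping scheme are assumptions made for illustration and are not taken from the MultiMoVR software.

```python
import math

WORLD_SIZE_M = 1025.0  # side length of the square landscape, from the text


def wrap(coord, size=WORLD_SIZE_M):
    """Map a coordinate into [0, size) so the world repeats seamlessly."""
    wrapped = coord % size
    # Guard against float rounding: a tiny negative input can round up to `size`.
    return 0.0 if wrapped >= size else wrapped


def advance(x, y, heading_deg, speed_m_s, dt_s, size=WORLD_SIZE_M):
    """Move the virtual fly one time step, then apply the periodic boundary.

    Assumed convention (not from the source): heading 0 deg points along +y
    and increases clockwise.
    """
    heading = math.radians(heading_deg)
    x += speed_m_s * dt_s * math.sin(heading)
    y += speed_m_s * dt_s * math.cos(heading)
    return wrap(x, size), wrap(y, size)


if __name__ == "__main__":
    # A fly 1 m from the eastern edge, flying east at 2 m/s for 1 s,
    # reappears near the western edge of the landscape.
    print(advance(1024.0, 500.0, 90.0, 2.0, 1.0))
```

Because positions are wrapped on every step, the animal never reaches an edge, which is what allows effectively unbounded translation in any direction.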

Results

Localization of Virtual Ecologically Relevant Objects in a Complex Environment.

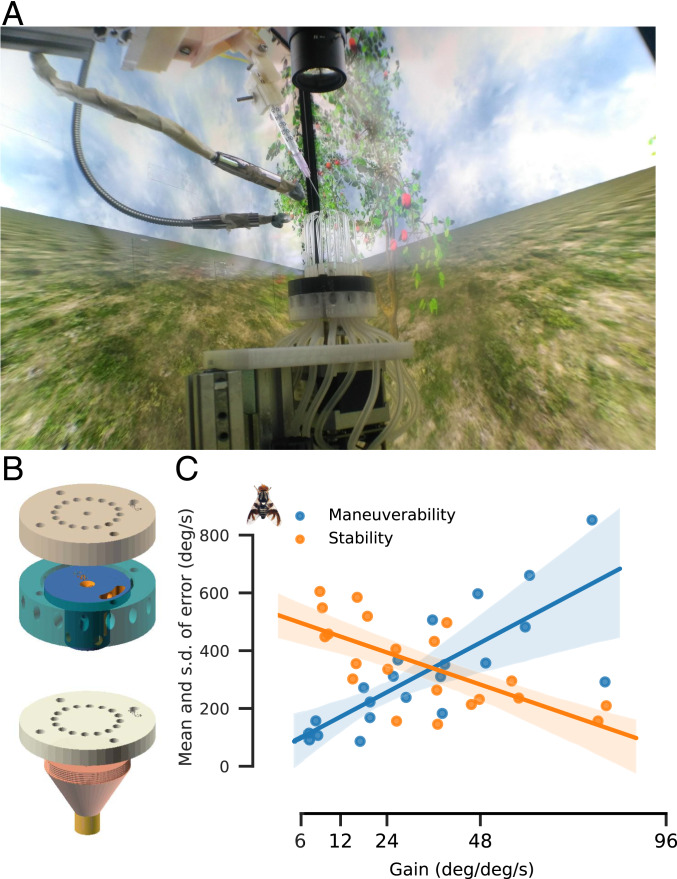

We built the VR system in a modular fashion to allow for different species and stimuli (Fig. 1 A and B; see SI Appendix, Methods, Fig. S1 A–D, and Tables S1 and S2, Movie S1, and ref. 34 for system description, calibration, software, bill of materials, and computer-aided design [CAD] files). For animals moving in fluids such as air, the relationship between biomechanics and translation is nondeterministic due to the stochasticity and nature of fluid dynamics. Thus, due to practical considerations, prior VR studies with flying insects (10, 11, 23, 24) selected gain values arbitrarily. However, gain values set limits on the ability of the animal to turn, compensate, and translate in response to stimuli (12), which results in over- or underrepresentation of the animal’s intended direction in space. To provide an objective method to measure gain for tethered flying insects in our arena, we measured the range of gain where stability (mean of response error, measured as impose − response) and maneuverability (SD of response error) of the insect’s virtual heading to externally imposed yaw rotations in our arena were comparable. We identified the optimal gain as the region where the ratios of these values were close to 1. For R. pomonella (apple fly), this region was around 36 deg⋅deg−1⋅s−1 (i.e., the world moved 36° for every degree of wingbeat amplitude difference over 1 s) (Fig. 1C and SI Appendix, Fig. S1C). For A. aegypti (yellow fever mosquito), this region was around 75 deg⋅deg−1⋅s−1 (SI Appendix, Fig. S1E). For males of P. laeta (crane fly), it was around 40 deg⋅deg−1⋅s−1 (SI Appendix, Fig. S1F). However, female crane flies showed little to no stabilization response (SI Appendix, Fig. S1G). Similarly, Daphnis nerii (oleander hawkmoth) also showed no stabilization response (SI Appendix, Fig. S1H). This is likely due to lower wingbeat frequencies of female crane flies (50 Hz for female vs. 80 Hz for male crane flies) and hawkmoths (36 Hz for both males and females).
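The gain selection criterion can be sketched as follows. This is an illustrative reading of the description above, not the authors' calibration code: the data are hypothetical, the function names are invented, and taking the absolute value of the mean error before forming the ratio is an assumption of this sketch.

```python
import statistics


def stability_and_maneuverability(imposed_deg, response_deg):
    """Return (mean error, SD of error), with error = impose - response."""
    errors = [i - r for i, r in zip(imposed_deg, response_deg)]
    return statistics.fmean(errors), statistics.stdev(errors)


def pick_gain(trials_by_gain):
    """Pick the gain whose |stability| / maneuverability ratio is closest to 1.

    Using the absolute value of the mean error is an assumption of this sketch.
    """
    def distance_from_one(gain):
        mean_err, sd_err = stability_and_maneuverability(*trials_by_gain[gain])
        return abs(abs(mean_err) / sd_err - 1.0)

    return min(trials_by_gain, key=distance_from_one)


# Hypothetical data: gain -> (imposed yaw, fly response), both in degrees.
example = {
    10: ([10, 20, 30], [8, 17, 29]),   # errors 2, 3, 1  -> ratio 2.0
    36: ([10, 20, 30], [10, 19, 28]),  # errors 0, 1, 2  -> ratio 1.0
    90: ([10, 20, 30], [14, 20, 25]),  # errors -4, 0, 5 -> ratio well below 1
}

if __name__ == "__main__":
    print(pick_gain(example))
```

In practice the ratio would be evaluated across a swept range of gains per species, and the "optimal" region is wherever the ratio stays near 1 rather than a single point.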

Fig. 1.

MultiMoVR arena. (A) The MultiMoVR arena is a 32-cm-wide, 60-cm-tall prism-shaped arena composed of three 165-Hz in-plane switching (IPS) monitors. The tethered insect is surrounded by capillaries that provide directional wind and odor. A photorealistic scene based on real-world scenery is wrapped around the three monitors. (B) Closed-loop wind and odor delivery system design with a revolver controlling the direction of wind and odor controlled by a 3/2 valve. (C) Error is defined as the difference between external impose and response by the insect (here R. pomonella). Stability is defined as the mean of the error, and maneuverability is defined as the SD of the error; 95% CI is indicated as shaded regions around the lines.

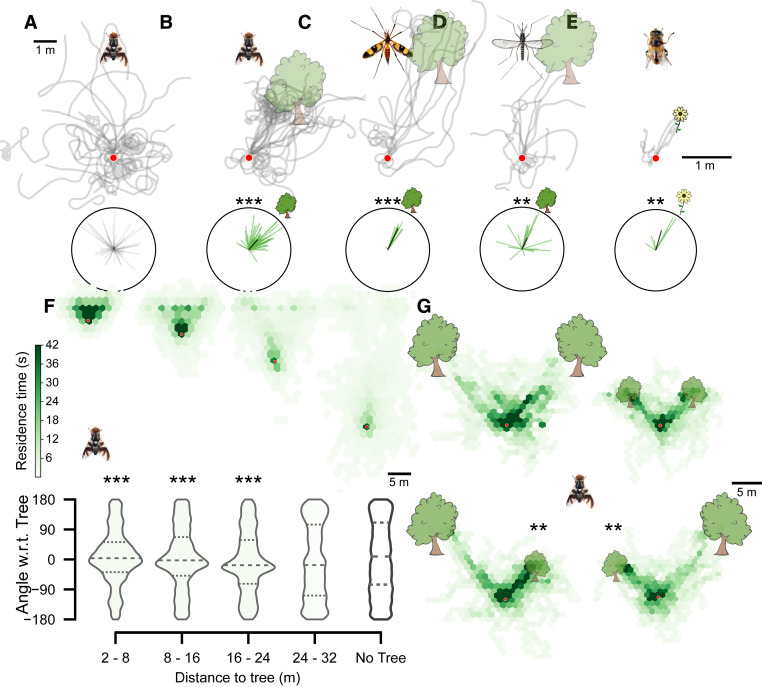

Using the aforementioned calculated gain, we first characterized the behavior of apple flies in the presence of virtual tree-like objects (Fig. 2 A and B and Movie S2). In a world with only grass and sky, the flies did not choose to translate in any particular virtual direction, indicating that they did not localize or navigate to any aspect of the scenery such as a cloud or a patch of grass (Fig. 2A; n = 11 flies, n = 103 trials, trial duration 15 s, Rayleigh test mean angle −17.83°, R = 0.062, z = 1.809, P = 0.634). This also indicates that static elements in the insect’s field of view such as bezels, capillaries, and the camera did not evoke any gaze fixation as evidenced by the lack of any specific direction in this landscape and as observed in previous VRs (10, 23–26). However, when provided a tree, flies fixated on and approached the tree, and exhibited stereotypical object avoidance/landing responses with rapid saccades (rapid body turns within the tree canopy) and foreleg extension when within <10 cm of elements in the canopy (35, 36) (Fig. 2B, SI Appendix, Fig. S2B, and Movie S2; n = 11 flies, n = 103 trials, trial duration 15 s, Rayleigh test mean angle 42.54°, R = 0.306, z = 1.809, P = 4.69e-07). This indicates that flying insects can distinguish, navigate, and respond to virtual 3D objects from the surrounding visual scenery. In a world with two identical trees, flies oriented and approached both trees equally, as described previously for field behavior (22) (SI Appendix, Fig. S2C; n = 11 flies, n = 103 trials, trial duration 15 s, Rayleigh test mean angle 4.692°, R = 0.22, z = 1.809, P = 0.634).
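For readers unfamiliar with the Rayleigh test used throughout these results, a minimal sketch of the standard statistic is given below. It assumes the textbook definitions (mean resultant length R, statistic z = nR², and the first-order approximation P ≈ exp(−z)); the z values reported in the text may follow a different definition, so this sketch is not meant to reproduce them.

```python
import math


def rayleigh_test(angles_deg):
    """Return (mean angle in deg, R, z, approximate P) for a sample of headings."""
    n = len(angles_deg)
    c = sum(math.cos(math.radians(a)) for a in angles_deg)
    s = sum(math.sin(math.radians(a)) for a in angles_deg)
    r = math.hypot(c, s) / n          # mean resultant length, between 0 and 1
    mean_angle = math.degrees(math.atan2(s, c))
    z = n * r * r                     # textbook Rayleigh statistic
    p = math.exp(-z)                  # first-order large-sample approximation
    return mean_angle, r, z, p


if __name__ == "__main__":
    # Hypothetical headings clustered around 40 deg: large R, small P.
    print(rayleigh_test([35, 42, 38, 45, 40, 41]))
    # Hypothetical headings spread evenly: R near 0, P near 1.
    print(rayleigh_test([0, 90, 180, 270]))
```

A small R with a large P, as in the grass-and-sky world, means headings were spread around the circle; a larger R with a small P, as with a single tree, means headings clustered around the mean angle.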

Fig. 2.

Response of tethered insects to virtual objects of varying sizes and distances. (A and B) Virtual trajectories of R. pomonella (apple fly) to worlds with no tree (A) and a tree on the right (B), at a 3-m virtual distance (n = 11 flies, n = 103 trials). (C) Virtual trajectories of P. laeta (crane fly) to a tree on the right, at a 4-m virtual distance (n = 6 flies, n = 15 trials). (D) Virtual trajectories of A. aegypti (yellow fever mosquito) to a tree on the right, at a 3-m virtual distance (n = 6 flies, n = 13 trials). (E) Virtual trajectories of E. tenax (hoverfly) to a 1-m-high flower on the right, at a 1-m virtual distance (n = 2 flies, n = 6 trials). All polar plots provide corresponding mean angles for each trajectory, with the black line indicating total mean. (F) Hexbinned plots of apple fly trajectories against trees placed at 3, 6, 12, and 24 m from the initial position (n = 20 flies, n = 129 trials). Violin plots indicate angles with respect to (w.r.t.) the tree at different distance bins. (G) Hexbinned plots of apple fly trajectories against large distant trees vs. small nearby trees that subtend identical visual angles at the initial position (n = 9 flies, n = 96 trials). **P < 0.01, ***P < 0.001. Rayleigh test for B and C and binomial test for D. Red circles indicate the starting position.

Along with a pest (apple fly), we also measured localization of virtual tree-like objects in a crane fly (P. laeta; Fig. 2C and SI Appendix, Fig. S2 D–F; n = 6 flies, n = 15 trials, trial duration 15 s, Rayleigh test mean angle −25.56°, R = 0.445, z = 1.666, P = 1.00e-05; Movie S3) and for a disease vector (male mosquito, A. aegypti; Fig. 2D and SI Appendix, Fig. S2 G–I; n = 6 flies, n = 13 trials, trial duration 15 s, Rayleigh test mean angle −24.19°, R = 0.390, z = 2.400, P = 0.005; Movie S3). We also used virtual flowers for a pollinator (hoverfly, E. tenax; Fig. 2E and SI Appendix, Fig. S2 J–L; n = 2 flies, n = 6 trials, trial duration 30 s, Rayleigh test mean angle −16.35°, R = 0.488, z = 1.429, P = 0.007; Movie S3). We show that all four species can distinguish, navigate, and respond to virtual 3D objects from the surrounding background. In addition, hoverflies are eponymous in their ability to hover over objects just before landing. To observe search behavior in the final moments before landing, we closed the loop for both heading and flight speed for hoverflies. We used the wing-beat amplitude sum scaled appropriately in closed loop (SI Appendix, Methods, hoverfly experiments) to control the virtual flight speed and preliminary results show the possibility of virtual hovering in our arena (last 20 s of Movie S3). Our experiments show that multiple insect species can search and navigate to 3D virtual objects in a complex landscape with ground and sky over large spatial scales.

Determination of Reactive Distance Using Perspective Cues.

In preliminary analyses, subtle differences in lighting, angle of the virtual sun, or perspective could cause changes in object preference, indicating that the 3D nature of the object might be important. For example, when two identical but asymmetrical 3D objects are placed equidistant from the observer in space, the objects will appear different from the observer’s viewpoint (SI Appendix, Fig. S2A). This phenomenon is fundamentally unique to 3D geometry and is impossible to recreate with identical 2D objects or even 3D objects that are symmetrical such as cylinders and spheres. To account for this and prevent side bias, all objects were mirror-flipped with respect to the vertical to present both trees from the same initial perspective to the insect. This requirement suggested that perspective was utilized by flies in our virtual arena.

To assess the effect of perspective regarding 3D objects, we assessed the response of apple flies to identical virtual trees at different distances. Distant trees were less likely to be fixated on or approached (Fig. 2F and SI Appendix, Fig. S2M; n = 20 flies, n = 129 trials, trial duration 10, 15, 30, and 60 s; Rayleigh test at 3, 6, 12, and 24 m and no tree conditions, respectively; mean angle −11.47°, −7.83°, −0.64°, 10.47°, −17.83°; R = 0.48, 0.27, 0.34, 0.19, 0.06; z = 2.785, 3.001, 2.694, 2.294, 1.809; P = 9.54e-11, 9.79e-07, 1.08e-07, 0.05, 0.634). Flies navigated to 16-m2 tree models up to a maximum distance of 24 m, as predicted by field data (37). As such, we were able to quantify the reactive distance of apple flies to their host during long-range search, which indicates the limits of their visually guided object localization.

Decoupling Size and Distance Using Motion Parallax.

To differentiate the effects of object size and distance, we also presented apple flies with two tree objects, one twice as big and twice as far as the other tree such that, at the starting location, the trees subtended the same angular size (Movie S4). The flies oriented and approached the smaller, closer tree more frequently than the larger, farther tree (Fig. 2G and SI Appendix, Fig. S2N; n = 9 flies, n = 96 trials, trial duration 30 s; binomial test for large vs. small and small vs. large trees, respectively; z = 3.022, 2.586; P = 0.001, 0.004). However, when both trees were equally far, they showed no preference for either tree (Fig. 2G and SI Appendix, Fig. S2N; n = 9 flies, n = 96 trials, trial duration 30 s; binomial test for small vs. small and large vs. large, respectively; z = 0.482, 0; P = 0.315, 0.5). This shows that apple flies can make use of both object size and distance information obtained from localized motion parallax cues to discriminate and navigate to objects for long-range search behavior. This indicates that complex visual processing is utilized for choosing targets in a complex world.
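The geometry behind this experiment can be checked with a short sketch. The tree sizes and distances below are hypothetical (the text specifies only that one tree is twice as big and twice as far), and the head-on approach is a simplification: the two trees subtend the same angle at the start, but they expand at different rates and shift by different amounts once the observer moves.

```python
import math


def angular_size_deg(size_m, distance_m):
    """Visual angle subtended by an object of a given size at a given distance."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))


def parallax_shift_deg(sideways_m, distance_m):
    """Bearing change of an object initially dead ahead after a sideways step."""
    return math.degrees(math.atan(sideways_m / distance_m))


# Hypothetical values: (size in m, starting distance in m).
SMALL_NEAR = (4.0, 6.0)
LARGE_FAR = (8.0, 12.0)

if __name__ == "__main__":
    # Identical angular size at the starting position.
    print(angular_size_deg(*SMALL_NEAR), angular_size_deg(*LARGE_FAR))
    # After approaching each tree head-on by 3 m, the near tree has expanded more.
    print(angular_size_deg(4.0, 3.0), angular_size_deg(8.0, 9.0))
    # A 1-m sideways displacement shifts the near tree's bearing more.
    print(parallax_shift_deg(1.0, 6.0), parallax_shift_deg(1.0, 12.0))
```

Either difference is available only to a moving observer, which is why equal starting angular size isolates motion-derived distance cues from static size cues.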

Orientation to Directional Airflow in the Absence of Optic Flow.

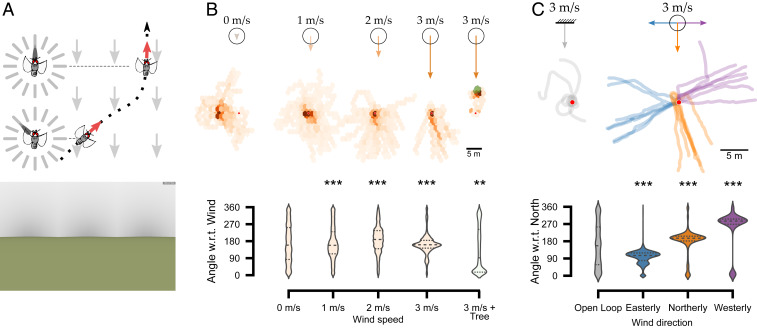

To assess if insects can make use of cues from directional airflow in the absence of optic flow, we characterized trajectories of apple flies placed in different wind tunnel-like airflow fields (here called “windfields”) and velocities in a visually featureless environment (zero optic flow; Fig. 3A). We use this term rather than “wind” to clarify that tethered insects are not advected and therefore do not experience the complete mechanosensory feedback they would experience in true wind. In closed loop, the airflow was presented to the animal from different capillaries depending on its orientation with respect to the global windfield (Fig. 3A). At zero airflow, flies did not orient in any particular direction (Fig. 3B and SI Appendix, Fig. S2O; n = 22 flies, n = 158 trials, trial duration 15 s, Rayleigh test mean angle −80.97°, R = 0.19, z = 2.551, P = 0.24). But, as windfield velocities increased, flies oriented more in the downwind direction as suggested by previous studies (31, 38) (Fig. 3B and SI Appendix, Fig. S2O; n = 22 flies, n = 158 trials, trial duration 15 s; Rayleigh test for 1, 2, and 3 m/s, respectively; mean angle 137.7°, 177.51°, −160.97°; R = 0.28, 0.43, 0.63; z = 2.551, 3.775, 2.785; P = 2.04e-08, 1.09e-05, 9.44e-07). To account for potential artifacts produced by mechanical deflections of the wing at high wind speed, we placed a tree upwind in this high-velocity windfield. In this scenario, flies flew upwind toward the tree, indicating that they could orient upwind at these windfield velocities given an appropriate visual context (i.e., tree-like object; Fig. 3B and SI Appendix, Fig. S2O; n = 22 flies, n = 158 trials, trial duration 15 s, Rayleigh test for 3 m/s + tree, mean angle −3.20°, R = 0.14, z = 2.004, P = 3.23e-03).

Fig. 3.

Response of apple flies to windscapes at large spatial and temporal scales. (A) Based on the windfield and the fly’s virtual position and heading, the appropriate set of capillaries continuously provides directional airflow cues. (A, Bottom) The visual scenery with zero optic flow. (B) Virtual trajectories in zero optic flow and different airflow velocities, 0, 1, 2, 3, and 3 m/s + tree (n = 22 flies, n = 158 trials). (B, Bottom) Violin plots of angle with respect to wind in different directional airflow speeds. (C) Virtual trajectories against zero optic flow and different windfields: open loop, easterly, northerly, and westerly winds at 3 m/s (n = 5 flies, n = 58 trials). (C, Bottom) Violin plots indicate angle with respect to north in different windscape directions. Red dots indicate the starting location. **P < 0.01, ***P < 0.001. Rayleigh test for B and C.

We also presented global windfields as either open-loop, northerly, easterly, or westerly. In the open-loop scenario, the airflow was always delivered from the front capillaries regardless of fly orientation and the flies displayed no preferred heading (Fig. 3C). But in a directional windfield such as easterly, where the wind blows from east to west, the flies oriented westward and maintained their downwind heading (Fig. 3C; n = 5 flies, n = 58 trials, trial duration 15 s; Rayleigh test for open-loop, easterly, northerly, and westerly windfields, respectively; mean angle 56.36°, 102.76°, −163.65°, −64.82°; R = 0.085, 0.892, 0.756, 0.862; z = 1.591, 1.512, 1.512, 1.511; P = 0.18, 0.1e-03, 7.5e-05, 0.12e-03). This indicates that the flies actively oriented using directional airflow cues provided by our virtual windscape even in the absence of optic flow.
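The difference between the open-loop and closed-loop windfield conditions can be sketched as a capillary selection rule. Everything specific here is an assumption for illustration: the capillary count, the indexing (0 in front, increasing clockwise), and the compass convention (0° = north) are not given in this section.

```python
N_CAPILLARIES = 8  # hypothetical count; not stated in this section


def capillary_index(wind_from_deg, heading_deg, n=N_CAPILLARIES):
    """Closed loop: pick the capillary nearest the wind's direction relative to the fly.

    Index 0 is directly in front of the fly; indices increase clockwise (assumed).
    """
    relative = (wind_from_deg - heading_deg) % 360.0
    return round(relative / (360.0 / n)) % n


def open_loop_index():
    """Open loop: airflow always comes from the front, whatever the heading."""
    return 0


if __name__ == "__main__":
    EASTERLY = 90.0  # wind blowing from the east toward the west
    print(capillary_index(EASTERLY, 90.0))   # fly heading east: headwind
    print(capillary_index(EASTERLY, 270.0))  # fly heading west: tailwind
    print(open_loop_index())
```

Under this rule a fly that turns westward in an easterly windfield receives airflow from behind, so maintaining a downwind heading requires the fly to use the direction of the airflow itself.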

Airflow and Odor Act Synergistically for Virtual Plume Following.

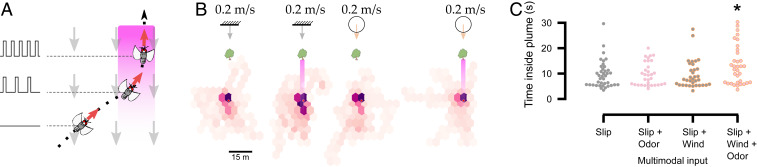

Finally, we tested apple flies in a wind tunnel-like odor flux and windscape. Several studies have shown that in real-world turbulent conditions, odorscapes exist in the form of plumes composed of intermittent packets of odor interspersed with clean air. Thus, odor is detected by flying insects as flux rather than a smooth concentration gradient, and insect antennae are flux detectors that respond to instantaneous changes in concentration rather than the absolute concentration at any moment (39, 40). To accommodate these requirements in our arena, we presented a known attractive fruit blend odor (41) for R. pomonella as intermittent pulses placed in virtual space over a 2-m-wide strip that increased in frequency as the flies approached the virtual odor source, rising from 1 to 10 Hz over 20 m (Fig. 4A). This simulated the punctate nature of odor information in an odor plume. We note that concentration could also be adjusted separately at a speed of up to 5 Hz through software control of the digital mass flow controllers as shown previously for this stimulus system (42). However, given that insects primarily respond to flux, for the purposes of these experiments we varied only pulse frequency and not concentration. To provide final target cues, we placed a tree far away, such that the visual cues alone would not elicit upwind flight (Fig. 2F). We then presented flies with four conditions. The slip condition provided only visual slip based on heading and airflow velocity with no change in airflow direction, meaning that airflow cues were in an open loop as described in the previous section. The slip and odor condition provided visual slip and increasing odor frequency only from the frontal capillaries, with the fly starting in the center of the odor strip but at a 45° angle. The slip and windfield condition provided visual slip and a global windfield at 0.2 m/s as described above. Finally, the slip, windfield, and odor condition provided all three parameters in a closed loop. Despite an initial cross-wind heading, flies remained significantly longer in the virtual odor plume region only when directional airflow + odor were combined (Fig. 4 B and C, SI Appendix, Fig. S2O, and Movie S5; n = 8 flies, n = 33 trials, Mann–Whitney U test with respect to the slip + windfield + odor condition, U = 619, P = 0.026). Decoupling airflow and odor stimuli showed that either stimulus alone did not result in significant time spent within the virtual odor plume over control (slip) conditions, as predicted by current anemotactic odor plume-tracking models (Fig. 4 B and C, SI Appendix, Fig. S2O, and Movie S5; n = 8 flies, n = 33 trials; Mann–Whitney U test with respect to slip for slip + odor and slip + windfield, respectively; U = 769, 735; P = 0.292, 0.254) (8). While both parameters are always coupled in nature, presenting airflow fields and odor flux in isolation allows us to quantify their relative contribution to upwind optomotor anemotaxis. Our results suggest that both wind direction and odor flux must be present simultaneously for flying insects to orient within odor plumes.
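The virtual odor strip described above can be sketched as a position-dependent pulse frequency. The 2-m width, the 1 to 10 Hz range, and the 20-m length come from the text; the linear ramp, the zero frequency outside the strip, and the function name are assumptions made for illustration.

```python
STRIP_HALF_WIDTH_M = 1.0   # 2-m-wide strip, from the text
PLUME_LENGTH_M = 20.0      # frequency ramps over 20 m, from the text
F_FAR_HZ = 1.0             # pulse frequency at the far end of the strip
F_NEAR_HZ = 10.0           # pulse frequency at the odor source


def pulse_frequency_hz(distance_to_source_m, lateral_offset_m):
    """Odor pulse frequency at a virtual position.

    Assumptions of this sketch: a linear ramp with distance, and no odor
    (0 Hz) outside the strip or beyond its 20-m length.
    """
    if abs(lateral_offset_m) > STRIP_HALF_WIDTH_M:
        return 0.0
    if not 0.0 <= distance_to_source_m <= PLUME_LENGTH_M:
        return 0.0
    fraction = distance_to_source_m / PLUME_LENGTH_M
    return F_NEAR_HZ - (F_NEAR_HZ - F_FAR_HZ) * fraction


if __name__ == "__main__":
    for d in (20.0, 10.0, 0.0):
        print(d, pulse_frequency_hz(d, 0.0))
    print(pulse_frequency_hz(10.0, 1.5))  # outside the strip
```

Time spent inside the plume region, the measure compared across the four conditions, then corresponds to the time during which this function returns a nonzero frequency.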

Fig. 4.

Response of apple flies to odor flux at large spatial and temporal scales. (A) Based on the fly’s virtual position and odorfield, an odor pulse is released at the specified frequency. (B) Hexbinned plots of trajectories for visual slip, slip + odor, slip + windfield, and slip + windfield + odor (n = 8 flies, n = 33 trials). Pink gradient represents odorfield. (C) Swarm plots indicate time inside plume regions. Red dots indicate the starting location. *P < 0.05, Mann–Whitney U test.

Discussion

Here we show using virtual reality that flying insects with limited computational capabilities make use of several features such as foreground segmentation, perspective, motion parallax, and integration of multiple modalities to rapidly discriminate, localize, and navigate to objects amidst a complex 3D landscape while in flight. From an algorithmic and robotics perspective, current state-of-the-art algorithms still cannot accomplish this task due to the complexity of environment, camera motion, and computational demands (7). Our results provide a template for future application of these features that are inherent to flying insects.

First, we determined that multiple species including Tephritid fruit flies (R. pomonella), mosquitoes (A. aegypti), crane flies (P. laeta), and hoverflies (E. tenax) could distinguish objects from complex visual scenery over 600 m2. Each species approached objects and exhibited close-range behaviors such as object avoidance/landing responses including, in the case of hoverflies, hovering behavior over the virtual object itself. These behaviors indicate that multiple species can visually localize and navigate to 3D virtual objects in a large complex scene (Fig. 2). We note that flying insects might potentially approach any isolated stationary object simply for the sake of landing and conserving energy, and the behavioral response to virtual objects has been shown in other studies (10, 23–26). However, our experiments using the apple fly (R. pomonella) show that tethered flying insects in VR perform figure–ground discrimination, tease apart the objects in the foreground amidst a complex background from a moving frame of reference, and navigate toward a preferred target while accounting for orientation, object size and distance, self-motion, slip, airflow, and odor cues.

To assess behavior in these complex virtual environments, it was important to calibrate system gain to ensure that test insects were able to change direction to orient to multiple objects and stimuli. Interestingly, the arbitrary gain values used in previous studies have been much lower than the values we have calculated here (36 to 75 vs. 1 to 5 deg⋅deg−1⋅s−1) (11, 31). Low gain severely limits the range of angular velocities and maneuvers insects can reliably perform. For example, the maximum angular velocity with such low gains is roughly 50 to 250 deg/s, while flies turning in free flight have been measured at 1,500 deg/s (12), which was attained in this study with the calculated gains. Thus, for analyses assessing navigation to objects with a calibrated ground and sky as shown here, we suggest that gain be calculated for each species of interest. We note that our method to calculate gain was not effective in insects with wingbeat frequencies of 50 Hz or lower, such as moths (D. nerii) and female crane flies (P. laeta). This is primarily due to the camera-tracking paradigm, which needs at least one full wingbeat cycle to measure wingbeat amplitude difference, so lower wingbeat frequencies add considerable latency to the system. Also, insects with lower wingbeat frequencies are likely to modulate flight parameters in ways other than wingbeat amplitude difference, including changes in angle of attack, abdomen position (24), and midstroke manipulation. Using a static torque sensor and/or tracking abdomen position in a closed loop would alleviate some of these challenges (24).
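The angular velocity limits quoted above follow from the definition of gain as yaw rate per degree of wingbeat amplitude difference. The sketch below makes the arithmetic explicit; the 50° maximum wingbeat amplitude difference is not stated in the text and is inferred here from the quoted 50 to 250 deg/s range at gains of 1 to 5, so it should be read as an assumption.

```python
ASSUMED_MAX_WBA_DIFF_DEG = 50.0  # inferred from 50-250 deg/s at gains of 1-5


def yaw_rate_deg_s(gain_deg_per_deg_s, wba_diff_deg):
    """Virtual yaw rate produced by a given wingbeat amplitude difference."""
    return gain_deg_per_deg_s * wba_diff_deg


if __name__ == "__main__":
    FREE_FLIGHT_TURN_DEG_S = 1500.0  # free-flight turn rate cited in the text
    for gain in (1, 5, 36, 75):
        rate = yaw_rate_deg_s(gain, ASSUMED_MAX_WBA_DIFF_DEG)
        print(gain, rate, rate >= FREE_FLIGHT_TURN_DEG_S)
```

Under this assumption, gains of 1 to 5 cap turning well below free-flight rates, whereas the calibrated gains of 36 to 75 leave enough headroom to reach them.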

Using this calculated gain, we show that R. pomonella flies can approach tree-like objects (4 × 4 × 4 m) from up to roughly 24 m away and prefer to approach closer objects even if they are smaller. While 2D patterns can be used to measure visual acuity, these stimuli cannot measure how far the animal will localize and navigate to an object of interest. This value is a critical parameter for ecological models such as reactive space, optimal foraging, dispersal rates, and several other contexts (2–6, 8, 27–29, 43), but is difficult to measure empirically without being able to track the insect in space and time. For example, determining the localization limits of insects can be used to optimize pest management strategies such as trap placement, increase pollination efficiency with better crop planning, and enhance repellent strategies for vectors.

Navigational models using landmarks and cognitive maps (32) presume the use of motion parallax to distinguish aspects of the surrounding landscape. By presenting multiple objects of different sizes and distances, we show that local motion parallax is actually used by flying insects to discriminate and localize 3D virtual objects amidst a complex background. To date, most of these parameters have been assessed in walking insects, where stimulus space is calibrated and insect trajectory can be monitored (14, 15). While similar models are postulated for flying insects such as honey bees, the precise use of these features previously remained elusive due to the inability to dynamically manipulate the landscape in space and time (16, 17). We note that motion parallax causes differences in expansion rates and also causes differential optic flow based on distance. Which of these mechanisms are being utilized in the observed response requires further study.

Additionally, we found that R. pomonella flies could utilize windfield cues for orientation in the absence of optic flow, which has important implications for understanding navigation and migration of flying insects, particularly at high altitudes (44, 45) (Fig. 3 B and C). Studies with migrating insects and marine species suggest that organisms advected by flow can measure the flow velocity of the medium indirectly by measuring the curl (46) and jerks (45) of the eddies due to turbulence. Although the mechanosensory input that a tethered insect receives will differ from a free-flying individual, our study demonstrates the intrinsic ability of R. pomonella to modulate behavior in response to airflow speed and direction. While these results do not negate the use of optomotor anemotaxis, they provide a putative mechanism as to how flying insects could navigate in the absence of optic flow, such as high-altitude and nighttime migrations. For example, flight mills, vertically facing radar, and harmonic radar tracking are commonly used techniques to understand migration and dispersal but cannot simultaneously measure the precise location of the insect and the sensory stimuli it is receiving at that moment (44, 45, 47, 48). In addition, the inability to dynamically manipulate these stimuli limits the ability to assess the effects of wind direction, wind speed, visual slip, landmarks, and horizon on individual behavior. These aspects are fundamental parameters in determining distance and direction of migration patterns, and have important implications for population-level movement and dispersal of pests, predators, and disease vectors and their management (44, 47).

Finally, we show that R. pomonella flies can respond to intermittently pulsed odor stimuli as predicted by current plume-following models, and show how our system can decouple each component of chemically mediated optomotor anemotaxis (8, 9, 49, 50). Wind tunnels are commonly used in studying plume-following behavior but cannot easily be utilized to assess and decouple the effects of dynamic wind and odor cues as examined here (1–3, 8, 38, 49, 50). Deconstructing the strategies and algorithms underlying plume-following behavior is a long-standing problem in biology and engineering applications, and has been hampered by an inability to fully quantify stimulus and response (51). Decoupling vision, wind, and odor in plume following provides important insights into the relative importance of each of these modalities in a behavior used in almost every aspect of insect life.

We note that our experiment here tested a maximum of two objects and a single directed airflow and odor flux. In natural contexts, search behavior generally involves missing or occluded cues, clutter, and ambiguous and confounding stimuli (5, 27–30, 43). Our experiments here nevertheless demonstrate prerequisites for search behavior including figure–ground discrimination, discrimination of objects in the foreground amidst a complex background, and navigation to targets while accounting for orientation, self-motion, slip, wind, and odor cues. Future studies can include multiple or confounding targets and stimuli, deconstruct the 3D aspects of targets and their surrounding environment required for object localization, or examine the spatial and temporal structures needed for plume following and assess the impact of missing and occluded stimuli on the search process. The insights gained in the current and future studies utilizing multimodal VR can help better understand the physiological mechanisms underlying foraging, navigation, dispersal, and mate choice in Dipterans and potentially other taxa as well.

Materials and Methods

Implementation of MultiMoVR.

A detailed system description can be found in SI Appendix, Methods. A partial list and bill of materials can be found in SI Appendix, Table S2. A detailed assembly guide and instruction manual can be found in ref. 34. The entire assembly is supported using a 1-m-cubic frame composed of 20 × 20-mm generic aluminum profile extrusions. High–refresh-rate gaming monitors (Asus Rog PG279Q; AsusTek Computer) provide visual input. The insect was placed in the geometric center of the arena using a 3D printed manipulator (52). Airflow and odor were delivered through one of the 16 capillaries surrounding the insect from a custom-built delivery system (Fig. 1B and SI Appendix, Methods, Windscape). In closed-loop systems, a fraction of the output is fed back to the input and this scaling factor is commonly known as “gain.” To estimate optimal gain range for measuring long-range search, we devised a gain sweep protocol to measure the stability and maneuverability of the insect (SI Appendix, Methods, Optimal Gain Determination).
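
The role of gain in the closed loop can be sketched as a single update step. This is an illustrative sketch only, not the MultiMoVR implementation: it assumes that yaw rate is gain times the measured wingbeat amplitude difference, that a positive difference turns the insect clockwise, that heading 0° points north (+y), and that forward speed is constant. The function name `closed_loop_step` and all parameter names are hypothetical.

```python
import math


def closed_loop_step(x, y, heading_deg, wba_diff_deg, gain, speed, dt):
    """Advance the virtual insect by one time step of length dt (seconds).

    Assumptions (not from the source): yaw rate = gain * wba_diff_deg,
    heading 0 deg = north (+y), angles increase clockwise, constant speed.
    """
    yaw_rate = gain * wba_diff_deg                      # deg/s
    heading_deg = (heading_deg + yaw_rate * dt) % 360.0
    theta = math.radians(heading_deg)
    x += speed * dt * math.sin(theta)                   # east component
    y += speed * dt * math.cos(theta)                   # north component
    return x, y, heading_deg


# Hypothetical example: 1 deg amplitude difference, gain 36, 10-ms time step.
print(closed_loop_step(0.0, 0.0, 0.0, 1.0, 36.0, 1.0, 0.01))
```

With a higher gain, the same amplitude difference rotates the virtual world faster, which is why the gain range has to be matched to each species.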

Experimental Protocols.

Visual assay.

To determine if insects approached virtual visual objects, the VR was initialized with four worlds: virtual object on the left, object on the right, no object, and objects on both sides. The gain was set based on a species’ optimal range as calculated above, with 1 m/s translational velocity and reset time. The reset time was scaled to provide enough time for the insect to reach a faraway object, that is, twice the line-of-sight time taken to the virtual object. The insect was placed in the next virtual world and reinitialized after each reset event (SI Appendix, Methods, VR Initialization). Every world was repeated 10 times for each insect or until it stopped flying. The distance assay was repeated with the insect placed at different initial positions to measure response to perspective and spatial scaling in VR.
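
The reset-time rule described above amounts to a one-line calculation. The following is a minimal sketch, assuming straight-line distance from the start position to the object and a constant translational velocity; the function name `reset_time` and the example coordinates are hypothetical.

```python
import math


def reset_time(start_xy, object_xy, speed_m_per_s):
    """Reset time in seconds: twice the line-of-sight travel time to the object."""
    distance = math.hypot(object_xy[0] - start_xy[0], object_xy[1] - start_xy[1])
    return 2.0 * distance / speed_m_per_s


# Hypothetical example: object 24 m straight ahead, 1 m/s translational velocity.
print(reset_time((0.0, 0.0), (0.0, 24.0), 1.0))  # 48.0
```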

Windscape assay.

To measure if insects could make use of directional airflow cues provided using the windscape, VR was initialized with four worlds: open-loop windfield, easterly, westerly, and northerly. In the open windfield case, regardless of the insect’s position or heading, the insect always received directional airflow from a single capillary at the front of the insect. In the other three cases, based on the insect’s position and heading, the directional airflow was altered to provide that particular windscape at the current insect position and orientation (Fig. 3A). This entire assay was repeated at different airflow velocities.
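
The mapping from windscape to active capillary can be sketched as follows. This is a hypothetical illustration, not the published control code: it assumes the 16 capillaries are evenly spaced around the insect, that capillary 0 sits directly in front with indices increasing clockwise, that "easterly" means wind blowing from the east, and that the windfield is uniform so only heading matters. The names `WIND_FROM_DEG` and `active_capillary` are invented for this example.

```python
N_CAPILLARIES = 16

# Assumption: meteorological convention, i.e. the direction the wind comes from.
WIND_FROM_DEG = {"northerly": 0.0, "easterly": 90.0, "westerly": 270.0}


def active_capillary(world, heading_deg):
    """Index of the capillary that should deliver airflow.

    Assumptions (not from the source): capillary 0 is directly in front of
    the insect and indices increase clockwise when viewed from above.
    """
    if world == "open":
        return 0  # open loop: airflow always from the front
    relative = (WIND_FROM_DEG[world] - heading_deg) % 360.0
    step = 360.0 / N_CAPILLARIES  # 22.5 deg between capillaries
    return int(round(relative / step)) % N_CAPILLARIES


print(active_capillary("easterly", 0.0))   # insect faces north, wind on its right
print(active_capillary("easterly", 90.0))  # insect faces into the wind
```

In this sketch an insect that turns into the wind brings the active capillary back to the front position, which is the closed-loop property the assay relies on.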

Odor assay.

To assess response to odor, the VR was initialized with an open windfield (see above) and no odor, open windfield and odor, closed windfield and no odor, or closed windfield and odor. In the odor worlds, the odor was given as a 2-m-wide rectangular strip with a gradient of increasing packet frequency based on the insect’s position, starting at 1-Hz frequency and increasing to 10 Hz over 25 m with 50-ms pulse length (Fig. 4A).
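
The position-dependent odor pulsing can be sketched as a simple function. This is a minimal sketch under stated assumptions: the gradient is taken to be linear, the strip is centered on x = 0, and packet frequency increases along +y. The text gives only the end points (1 Hz and 10 Hz), the 25-m length, the 2-m width, and the 50-ms pulse length. The function names are hypothetical.

```python
STRIP_WIDTH_M = 2.0
F_START_HZ = 1.0
F_END_HZ = 10.0
GRADIENT_LENGTH_M = 25.0
PULSE_LENGTH_S = 0.050


def packet_frequency(x, y):
    """Odor packet frequency (Hz) at position (x, y); 0 outside the strip.

    Assumptions (not from the source): linear gradient, strip centered on
    x = 0, frequency increasing with y.
    """
    inside = abs(x) <= STRIP_WIDTH_M / 2 and 0.0 <= y <= GRADIENT_LENGTH_M
    if not inside:
        return 0.0
    return F_START_HZ + (F_END_HZ - F_START_HZ) * y / GRADIENT_LENGTH_M


def duty_cycle(frequency_hz):
    """Fraction of time the odor valve is open at a given packet frequency."""
    return frequency_hz * PULSE_LENGTH_S


for y in (0.0, 12.5, 25.0):
    f = packet_frequency(0.0, y)
    print(f"y = {y:4.1f} m: {f:.1f} Hz, duty cycle {duty_cycle(f):.2f}")
```

With 50-ms pulses, the 10-Hz end of the gradient corresponds to odor being present half of the time.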

Statistics.

Trajectories were analyzed based upon their orientation vectors. Displayed polar plots represent the spread and directionality of the orientation data. Violin plots represent the histogram of the orientation data from the entire trajectory. Depending on the assay, we used absolute heading (orientation with respect to north), angle with respect to the virtual object, or angle with respect to the wind. This put the relevant “target” at 0°. We used a Rayleigh test to check for directionality in the data. For the motion parallax data, we measured the number of trajectories that reached the virtual object and performed a binomial test between the two choices. For the odor assay, we compared the duration of in-plume traversals of different conditions with respect to slip alone using a one-sided Mann–Whitney U test. Where appropriate, 95% CIs are reported. Each of these plots and analyses was performed with custom Python scripts making use of Pandas (https://pandas.pydata.org/), SciPy (https://www.scipy.org/), and Seaborn (https://seaborn.pydata.org) graphing pipelines.
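
Two of the tests named above can be sketched with the Python standard library alone. This is an illustrative sketch, not the analysis scripts used in the study: the Rayleigh p-value uses a common closed-form approximation, the binomial test is an exact two-sided test against a 50:50 choice, and the input values are made up for demonstration. The function names are hypothetical.

```python
import math


def rayleigh_test(angles_deg):
    """Mean resultant length and approximate Rayleigh p-value for angles in degrees."""
    n = len(angles_deg)
    c = sum(math.cos(math.radians(a)) for a in angles_deg)
    s = sum(math.sin(math.radians(a)) for a in angles_deg)
    r_n = math.hypot(c, s)  # resultant vector length
    p = math.exp(math.sqrt(1 + 4 * n + 4 * (n * n - r_n * r_n)) - (1 + 2 * n))
    return r_n / n, min(p, 1.0)


def binomial_two_sided(k, n, p=0.5):
    """Exact two-sided binomial p-value for k successes in n trials."""
    pmf = [math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    cutoff = pmf[k] * (1 + 1e-7)  # tolerance for floating-point ties
    return min(1.0, sum(q for q in pmf if q <= cutoff))


# Made-up headings clustered around the target at 0 deg.
headings = [350, 5, 10, 355, 0, 15, 345, 8, 2, 358]
print(rayleigh_test(headings))

# Made-up choice counts: 16 of 20 trajectories reached the closer object.
print(binomial_two_sided(16, 20))
```

For the in-plume duration comparison, SciPy's `scipy.stats.mannwhitneyu` accepts an `alternative` argument for a one-sided test.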

Data Availability.

All data including design files and a software installation guide for the MultiMoVR setup as well as the raw trajectory data from this study can be found in SI Appendix and at the Dryad Digital Repository (34).

Acknowledgments

We thank Sriram Narayan, Dora Babu, Sree Subha Ramaswamy, Umesh Mohan, Vaibhav Sinha, and Abin Ghosh for help in constructing the MultiMoVR hardware and software. We thank Hinal Kharva and Cheyenne Tait for collecting and maintaining the apple flies, Subaharan Kesavan for providing the mosquitoes, Polyfly for the hoverflies, and Allan Joy for the hawkmoths. We thank Tim C. Pearce, Holger Krapp, Mukund Thattai, Sanjay Sane, and Upinder Bhalla for helpful discussions. P.K.K. was supported by the National Centre for Biological Sciences, Tata Institute of Fundamental Research. M.R. was supported by Hochschule Bremen. S.B.O. was supported by a Ramanujan Fellowship (Science and Engineering Research Board India), a grant from Microsoft Research, and the Department of Atomic Energy, Government of India, under project no. 12-R&D-TFR-5.04-0800.

Footnotes

The authors declare no competing interest.

This article is a PNAS Direct Submission.

Data deposition: The raw trajectory data reported in this study have been deposited in the Dryad Digital Repository, https://doi.org/10.5061/dryad.jq2bvq85s.

This article contains supporting information online at https://www.pnas.org/lookup/suppl/doi:10.1073/pnas.1912124117/-/DCSupplemental.

References

- 1. Roitberg B. D., Search dynamics in fruit-parasitic insects. J. Insect Physiol. 31, 865–872 (1985).

- 2. Baker K. L., et al., Algorithms for olfactory search across species. J. Neurosci. 38, 9383–9389 (2018).

- 3. Dickinson M. H., Death Valley, Drosophila, and the Devonian toolkit. Annu. Rev. Entomol. 59, 51–72 (2014).

- 4. Coyne J. A., Bryant S. H., Turelli M., Long-distance migration of Drosophila. 2. Presence in desolate sites and dispersal near a desert oasis. Am. Nat. 129, 847–861 (1987).

- 5. Hein A. M., Carrara F., Brumley D. R., Stocker R., Levin S. A., Natural search algorithms as a bridge between organisms, evolution, and ecology. Proc. Natl. Acad. Sci. U.S.A. 113, 9413–9420 (2016).

- 6. Mathews Z., et al., “Insect-like mapless navigation based on head direction cells and contextual learning using chemo-visual sensors” in 2009 IEEE/RSJ International Conference on Intelligent Robots and Systems, (IEEE, 2009), pp. 2243–2250.

- 7. Yazdi M., Bouwmans T., New trends on moving object detection in video images captured by a moving camera: A survey. Comput. Sci. Rev. 28, 157–177 (2018).

- 8. Cardé R. T., Willis M. A., Navigational strategies used by insects to find distant, wind-borne sources of odor. J. Chem. Ecol. 34, 854–866 (2008).

- 9. Murlis J., Elkinton J. S., Cardé R. T., Odor plumes and how insects use them. Annu. Rev. Entomol. 37, 505–532 (1992).

- 10. Fry S. N., Rohrseitz N., Straw A. D., Dickinson M. H., TrackFly: Virtual reality for a behavioral system analysis in free-flying fruit flies. J. Neurosci. Methods 171, 110–117 (2008).

- 11. Warren T. L., Weir P. T., Dickinson M. H., Flying Drosophila melanogaster maintain arbitrary but stable headings relative to the angle of polarized light. J. Exp. Biol. 221, jeb177550 (2018).

- 12. Tammero L. F., Dickinson M. H., The influence of visual landscape on the free flight behavior of the fruit fly Drosophila melanogaster. J. Exp. Biol. 205, 327–343 (2002).

- 13. Straw A. D., Lee S., Dickinson M. H., Visual control of altitude in flying Drosophila. Curr. Biol. 20, 1550–1556 (2010).

- 14. Nityananda V., et al., A novel form of stereo vision in the praying mantis. Curr. Biol. 28, 588–593.e4 (2018).

- 15. Kral K., Poteser M., Motion parallax as a source of distance information in locusts and mantids. J. Insect Behav. 10, 145–163 (1997).

- 16. Preiss R., Motion parallax and figural properties of depth control flight speed in an insect. Biol. Cybern. 57, 1–9 (1987).

- 17. Lehrer M., Srinivasan M. V., Zhang S. W., Horridge G. A., Motion cues provide the bee’s visual world with a third dimension. Nature 332, 356–357 (1988).

- 18. Collett T. S., Collett M., Memory use in insect visual navigation. Nat. Rev. Neurosci. 3, 542–552 (2002).

- 19. Spaethe J., Tautz J., Chittka L., Visual constraints in foraging bumblebees: Flower size and color affect search time and flight behavior. Proc. Natl. Acad. Sci. U.S.A. 98, 3898–3903 (2001).

- 20. Raguso R. A., Willis M. A., Synergy between visual and olfactory cues in nectar feeding by naïve hawkmoths, Manduca sexta. Anim. Behav. 64, 685–695 (2002).

- 21. Lihoreau M., Ings T. C., Chittka L., Reynolds A. M., Signatures of a globally optimal searching strategy in the three-dimensional foraging flights of bumblebees. Sci. Rep. 6, 30401 (2016).

- 22. Moerickei V., Prokopy R. J., Berlocher S., Visual stimuli eliciting attraction of Rhagoletis pomonella (Diptera: Tephritidae) flies to trees. Entomol. Exp. Appl. 18, 497–507 (1975).

- 23. Dombeck D. A., Reiser M. B., Real neuroscience in virtual worlds. Curr. Opin. Neurobiol. 22, 3–10 (2012).

- 24. Gray J. R., Pawlowski V., Willis M. A., A method for recording behavior and multineuronal CNS activity from tethered insects flying in virtual space. J. Neurosci. Methods 120, 211–223 (2002).

- 25. Stowers J. R., et al., Virtual reality for freely moving animals. Nat. Methods 14, 995–1002 (2017).

- 26. Peckmezian T., Taylor P. W., A virtual reality paradigm for the study of visually mediated behaviour and cognition in spiders. Anim. Behav. 107, 87–95 (2015).

- 27. Rieke F., Spikes: Exploring the Neural Code, (MIT Press, 1999).

- 28. Gibson J. J., The Ecological Approach to Visual Perception: Classic Edition, (Psychology Press, 2014).

- 29. Lewicki M. S., Olshausen B. A., Surlykke A., Moss C. F., Scene analysis in the natural environment. Front. Psychol. 5, 199 (2014).

- 30. Marr D., Vision: A Computational Investigation into the Human Representation and Processing of Visual Information, (Henry Holt and Co., 1982).

- 31. Currier T. A., Nagel K. I., Multisensory control of orientation in tethered flying Drosophila. Curr. Biol. 28, 3533–3546.e6 (2018).

- 32. Gaudry Q., Nagel K. I., Wilson R. I., Smelling on the fly: Sensory cues and strategies for olfactory navigation in Drosophila. Curr. Opin. Neurobiol. 22, 216–222 (2012).

- 33. Aluja M., Prokopy R. J., Host search behaviour by Rhagoletis pomonella flies: Inter-tree movement patterns in response to wind-borne fruit volatiles under field conditions. Physiol. Entomol. 17, 1–8 (1992).

- 34. Kaushik P. K., Renz M., Olsson S. B., Characterizing long-range search behavior in Diptera using complex 3D virtual environments. Dryad. Available at 10.5061/dryad.jq2bvq85s. Deposited 27 November 2019.

- 35. van Breugel F., Dickinson M. H., The visual control of landing and obstacle avoidance in the fruit fly Drosophila melanogaster. J. Exp. Biol. 215, 1783–1798 (2012).

- 36. Frasnelli E., Hempel de Ibarra N., Stewart F. J., The dominant role of visual motion cues in bumblebee flight control revealed through virtual reality. Front. Physiol. 9, 1038 (2018).

- 37. Green T. A., Prokopy R. J., Hosmer D. W., Distance of response to host tree models by female apple maggot flies, Rhagoletis pomonella (Walsh) (Diptera: Tephritidae): Interaction of visual and olfactory stimuli. J. Chem. Ecol. 20, 2393–2413 (1994).

- 38. Aluja M., Prokopy R. J., Buonaccorsi J. P., Cardé R. T., Wind tunnel assays of olfactory responses of female Rhagoletis pomonella flies to apple volatiles: Effect of wind speed and odour release rate. Entomol. Exp. Appl. 68, 99–108 (1993).

- 39. Kaissling K. E., Flux detectors versus concentration detectors: Two types of chemoreceptors. Chem. Senses 23, 99–111 (1998).

- 40. Olsson S. B., Hansson B. S., A flux capacitor for moth pheromones. Chem. Senses 37, 295–298 (2012).

- 41. Sim S. B., et al., A field test for host fruit odour discrimination and avoidance behaviour for Rhagoletis pomonella flies in the western United States. J. Evol. Biol. 25, 961–971 (2012).

- 42. Olsson S. B., et al., A novel multicomponent stimulus device for use in olfactory experiments. J. Neurosci. Methods 195, 1–9 (2011).

- 43. Harris L. R., Jenkin M. R. M., Vision in 3D Environments, (Cambridge University Press, 2011).

- 44. Hu G., et al., Mass seasonal bioflows of high-flying insect migrants. Science 354, 1584–1587 (2016).

- 45. Reynolds A. M., Reynolds D. R., Sane S. P., Hu G., Chapman J. W., Orientation in high-flying migrant insects in relation to flows: Mechanisms and strategies. Philos. Trans. R. Soc. Lond. B Biol. Sci. 371, 20150392 (2016).

- 46. Oteiza P., Odstrcil I., Lauder G., Portugues R., Engert F., A novel mechanism for mechanosensory-based rheotaxis in larval zebrafish. Nature 547, 445–448 (2017). Erratum in: Nature 549, 292 (2017).

- 47. Minter M., et al., The tethered flight technique as a tool for studying life-history strategies associated with migration in insects. Ecol. Entomol. 43, 397–411 (2018).

- 48. Woodgate J. L., Makinson J. C., Lim K. S., Reynolds A. M., Chittka L., Life-long radar tracking of bumblebees. PLoS One 11, e0160333 (2016).

- 49. Vickers N. J., Baker T. C., Latencies of behavioral response to interception of filaments of sex pheromone and clean air influence flight track shape in Heliothis virescens (F.) males. J. Comp. Physiol. A 178, 831–847 (1996).

- 50. Saxena N., Natesan D., Sane S. P., Odor source localization in complex visual environments by fruit flies. J. Exp. Biol. 221, jeb172023 (2018).

- 51. Celani A., Villermaux E., Vergassola M., Odor landscapes in turbulent environments. Phys. Rev. X 4, 41015 (2014).

- 52. Baden T., et al., Open Labware: 3-D printing your own lab equipment. PLoS Biol. 13, e1002086 (2015).
