Abstract

A systematic review was conducted to identify determinants (barriers and facilitators) of implementing evidence-based psychosocial interventions for children and youth who experience emotional or behavioral difficulties due to trauma exposure. Determinants were coded, abstracted, and synthesized using Aarons and colleagues’ (2011) Exploration, Preparation, Implementation, and Sustainment framework. Twenty-three articles were included, all of which examined implementation of Trauma-Focused Cognitive Behavioral Therapy or Cognitive-Behavioral Intervention for Trauma in Schools. This review identified multilevel and multiphase determinants that can be addressed by implementation strategies to improve implementation and clinical outcomes, and suggests how future studies might address gaps in the evidence base.

Keywords: Implementation, Trauma-Focused Interventions, Children, Youth, Mental Health

Exposure to potentially traumatic events is highly prevalent among children and youth (i.e., individuals aged 2-17) (Copeland et al. 2007, McLaughlin et al. 2013, Finkelhor et al. 2009, Hillis et al. 2016). For example, global prevalence estimates of violent victimization (e.g., physical, emotional, or sexual violence) are at least 50%, with 1 billion children and youth experiencing past-year violent victimization (Hillis et al. 2016). Only 6-20% of these youth experience symptoms that meet criteria for a formal diagnosis of Post-Traumatic Stress Disorder (PTSD); however, many others report a broad array of emotional, behavioral, and functional difficulties that seriously impede the attainment of normal developmental milestones and, if not proactively addressed, can endure into adulthood (Felitti et al. 1998, Anda et al. 2006, Kahana et al. 2006, Grasso et al. 2015). Furthermore, disparities exist in youth trauma outcomes, with rates of PTSD diagnosis as high as 50% in under-resourced communities (Horowitz, McKay, and Marshall 2005).

Fortunately, a number of psychosocial interventions have proven effective for treating trauma-related difficulties experienced by children and youth. Systematic reviews have indicated that certain trauma-focused interventions, most notably those involving cognitive-behavioral therapy (CBT), are linked with positive outcomes (e.g., pre- to post-treatment decline in post-traumatic stress and other trauma-related symptoms) (Silverman et al. 2008, Gillies et al. 2012, Dorsey et al. 2017). In general, these evidence-based trauma-focused interventions involve some common elements that can be difficult to deliver, such as psychoeducation, management of stress-related symptoms, trauma narration (gradual exposure), imaginal or in vivo exposure to trauma reminders, and cognitive restructuring of maladaptive thoughts (Amaya-Jackson and DeRosa 2007, Dorsey, Briggs, and Woods 2011). Descriptive information and research evidence for trauma-focused interventions are summarized on several websites (e.g., those maintained by the National Child Traumatic Stress Network [NCTSN; https://www.nctsn.org/] and the California Evidence-Based Clearinghouse for Child Welfare [CEBC; http://www.cebc4cw.org/]). Much like other effective practices in child and adolescent behavioral health (Garland et al. 2010, Kohl, Schurer, and Bellamy 2009, Raghavan et al. 2010, Zima et al. 2005), effective trauma interventions are underutilized, and even when organizations and systems adopt them, implementation challenges can limit their effectiveness (Allen and Johnson 2012, Powell, Hausmann-Stabile, and McMillen 2013).

A thorough understanding of the factors that facilitate or impede effective implementation and the attainment of key implementation outcomes (e.g., adoption, fidelity, penetration, and sustainment) is needed to improve child and family outcomes and optimize the public health impact of trauma-focused interventions. A number of studies have sought to understand barriers and facilitators to implementing evidence-based interventions (Addis, Wade, and Hatgis 1999, Raghavan et al. 2007, Cook, Biyanova, and Coyne 2009, Forsner et al. 2010, Rapp et al. 2010, Stein et al. 2013, Beidas, Stewart, et al. 2016, Powell, Hausmann-Stabile, and McMillen 2013, Powell, Mandell, et al. 2017, Powell et al. 2013), and several conceptual frameworks in the field of implementation science have proposed an array of potential barriers and facilitators across levels (e.g., intervention, individual, team, organization, system, policy) and phases of implementation (e.g., exploration, preparation, implementation, and sustainment) (Aarons, Hurlburt, and Horwitz 2011, Cane, O’Connor, and Michie 2012, Damschroder et al. 2009, Flottorp et al. 2013). These empirical and conceptual contributions highlight targets for implementation strategies that can promote the effective integration of evidence-based interventions into community settings (Powell et al. 2015). However, there has not yet been a systematic assessment of determinants for implementing evidence-based psychosocial interventions to address trauma-related symptoms in children, youth, and families.

Purpose and Contribution of this Review

The aim of the current study is to systematically review the literature to summarize empirical studies that identify determinants (i.e., barriers and facilitators) of implementing evidence-based psychosocial interventions that address trauma-related symptoms in children, youth, and families. The purpose of this review is twofold. First, it is intended to inform efforts to implement trauma-focused interventions in community settings by helping relevant stakeholders to anticipate and address barriers and leverage facilitators to improve implementation and clinical outcomes. Second, this review will inform a research agenda on the implementation of trauma-focused interventions for children, youth, and families by summarizing current knowledge of barriers and facilitators at different levels and across phases of implementation, and by suggesting how future studies might address gaps in the current evidence base.

Guiding Conceptual Frameworks

An increasing number of conceptual frameworks are available to guide implementation research and practice (Strifler et al. 2018, Tabak et al. 2012). These frameworks serve three main purposes: 1) to facilitate the identification of potential determinants of implementation; 2) to outline processes by which these determinants may be addressed; and 3) to suggest implementation outcomes (Proctor et al. 2011) that serve as indicators of implementation success, proximal indicators of implementation processes, and key intermediate outcomes in relation to service system or clinical outcomes in effectiveness and quality of care research (Nilsen 2015). In this review, we draw upon two frameworks that together serve these three purposes.

The Exploration, Preparation, Implementation, and Sustainment (EPIS) framework (Aarons, Hurlburt, and Horwitz 2011) was selected to guide the assessment of determinants and implementation processes, because it was developed to inform implementation efforts in public service sectors (e.g., public mental health and child welfare services) and has been used frequently within the field of child and adolescent mental health as well as other formal health care settings (Moullin et al. 2019). The EPIS model provides useful guidance for identifying key determinants and processes within the course of an implementation effort, as it specifies determinants that are internal and external to an organization (inner context and outer context) across the different phases of implementation (exploration, preparation, implementation, and sustainment). EPIS also acknowledges the recursive nature of implementation processes, as organizations and systems may reach one phase (e.g., implementation or sustainment) and then return to a prior phase (e.g., to explore need for clinical intervention adaptation or new services) (Becan et al. 2018). Accordingly, we used EPIS to identify determinants of implementing evidence-based, trauma-focused interventions across the four phases of implementation.

The Implementation Outcomes Framework (Proctor et al. 2011) outlines eight key intermediate outcomes that can serve as indicators of implementation success: acceptability, appropriateness, feasibility, adoption, penetration, fidelity, costs, and sustainment. As we identified determinants in relevant articles, we sought to ensure that each determinant had an explicit or implicit connection to one or more of these implementation outcomes. For example, clinicians’ previous negative experiences with an intervention may reduce acceptability and adoption of that intervention.

Methods

The methods described here were pre-registered on PROSPERO, an international database of protocols for systematic reviews in health and social care (Powell, Patel, and Haley 2017).

Data Sources and Searches

We searched CINAHL, MEDLINE (via PubMed), and PsycINFO using terms related to trauma, children and youth, psychosocial interventions, and implementation (Appendix I) to identify English-language peer-reviewed journal articles published prior to May 17, 2017 that present original research related to the implementation of evidence-based trauma-focused interventions primarily targeting children and youth.

Study Selection

Titles and abstracts of identified articles were independently reviewed by two members of the study team, and full texts of potentially relevant articles were retrieved. If the reviewers disagreed about the potential relevance of an article, we took a conservative approach and retrieved the full text for review. We also hand-searched the reference lists of articles excluded by both reviewers that appeared likely to cite relevant studies (e.g., systematic reviews) and retrieved relevant full texts. Full texts of potentially relevant studies were independently reviewed by two members of the study team. At this level of review, conflicts were resolved through discussion until consensus was reached.

This review focused on interventions for children and youth experiencing emotional or behavioral difficulties related to trauma that were identified as well-established by Dorsey et al. (2017). Criteria for well-established interventions included efficacy demonstrated in at least two independent research settings by two independent research teams, showing either 1) statistically significant superiority to pill placebo, psychological placebo, or another active treatment, or 2) equivalence to an already established treatment, as well as various methodological criteria (i.e., randomized controlled design; treatment manuals or equivalent used; treated specified problems for a population meeting inclusion criteria; reliable and valid outcome measures used; appropriate analyses used with sufficient sample size to detect effects).

Studies of well-established interventions were included if they related an implementation determinant to an implementation outcome (e.g., staffing or funding’s impact on feasibility or sustainability). Determinants were identified according to the EPIS model (Aarons, Hurlburt, and Horwitz 2011). Outcomes of interest were those included in Proctor et al.’s (2011) taxonomy of implementation outcomes. Specific inclusion criteria are listed in Table 1; inclusion criteria were intentionally broad with respect to study design and research methods to ensure that we could characterize the level of evidence for specific determinants of implementing trauma-focused interventions.

Table 1.

Inclusion criteria.

| Domain | Inclusion criteria |
|---|---|
| Publication type | Published original research |
| Population | • Studies of interventions targeting individuals <19 years of age who have experienced trauma; mixed populations including some individuals ≥19 years of age were considered acceptable • Studies of providers of trauma-focused care |
| Intervention | • Individual cognitive behavioral therapy (CBT), including prolonged exposure therapy (PE), cognitive behavioral writing therapy (CBWT), prolonged exposure for adolescents (PE-A), narrative exposure therapy for the treatment of traumatized children and adolescents (KidNET), individual project loss and survival team (Project LAST), and modified CBT (m-CBT) • Individual CBT with parent involvement, including trauma-focused CBT (TF-CBT), risk reduction through family treatment (RRFT), game-based CBT individual model (GB-CBT-IM), and TF-CBT with trauma narrative (TF-CBT-TN) • Group CBT, including group-based trauma and grief component therapy (TGCT), cognitive behavioral intervention for trauma in schools (CBITS), support for students exposed to trauma (SSET), group-based project loss and survival team (Project LAST with one individual session), group TF-CBT, and grief and trauma intervention with coping skills (GTI-C) and with trauma/loss narrative (GTI-CN) |
| Comparator | Any; none required |
| Outcomes | Any implementation determinant related to an implementation outcome. Outer context determinants: • Sociopolitical context • Funding • Client advocacy • Interorganizational networks • Intervention developers • External leadership • Public-academic collaboration • External fidelity monitoring and support • Other. Inner context determinants: • Organizational characteristics • Individual adopter characteristics • Internal leadership • Innovation-values fit • Internal fidelity monitoring and support • Staffing • Other. Implementation outcomes: • Adoption • Appropriateness • Feasibility • Fidelity • Cost • Penetration • Sustainability |
| Timing/context | Any; no restrictions |
| Study design | Any effectiveness or implementation study, excluding systematic reviews |

Data Extraction & Analysis

Data analysis was driven by a primarily deductive approach guided by qualitative content analysis as described by Forman and Damschroder, which unfolds over three phases: immersion, reduction, and interpretation (Forman and Damschroder 2007). In the immersion phase, researchers engaged with the data, reading and re-reading included articles to obtain a sense of “the whole.”

In the reduction phase, the results sections of the included studies were coded based on the EPIS framework (Aarons, Hurlburt, and Horwitz 2011) using Dedoose mixed methods analysis software (version 7.6.22) to extract relevant data for analysis. Three modifications were made to EPIS for the purposes of this review, with approval from the framework’s developer (G.A.): (1) fidelity monitoring and support could be coded for the inner or outer context, (2) any factor could be coded for any phase of implementation, and (3) implementation determinants that were identified but that did not fit into the factors specified by EPIS were coded as ‘other.’ Each excerpt was coded for the relevant phase of implementation and at least one determinant; a single excerpt could be coded for multiple determinants. A subset of included articles was selected for pilot data abstraction and coding by the research team, and the results of this pilot round were discussed to ensure interrater agreement and minimize conflicts. For the remaining included articles, initial data abstraction and coding were verified by a second researcher, with any conflicts resolved through discussion until consensus was reached.

After applying the codebook to all included articles, implementation determinants coded as ‘other’ were further classified using an inductive approach. The emergent factors were compared to implementation determinants included in the Consolidated Framework for Implementation Research (CFIR) and defined accordingly (Damschroder et al. 2009). All excerpts were then rearranged into code reports to facilitate in-depth exploration of each phase (exploration, preparation, implementation, and sustainment), level (inner and outer context), and construct within the EPIS framework (and its extensions via inductive coding).
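Because each excerpt carries exactly one phase but may carry several determinant codes, the counts reported in the Results ("N excerpts from M articles" and phase percentages) are tallies over multiply coded excerpts, and determinant totals within a context can exceed that context's excerpt count. The Python sketch below illustrates this bookkeeping under stated assumptions: the `Excerpt` record layout and the helper names `determinant_counts` and `phase_shares` are hypothetical, not a Dedoose export format or the procedure the authors used, and the data are toy records.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Excerpt:
    """One coded excerpt (hypothetical record layout for illustration)."""
    article: str  # citation of the source article
    phase: str    # exactly one EPIS phase per excerpt
    codes: tuple  # one or more (context, determinant) pairs


def determinant_counts(excerpts):
    """Map (context, determinant) -> (number of excerpts, number of distinct articles)."""
    n_excerpts = Counter()
    articles = defaultdict(set)
    for ex in excerpts:
        for code in set(ex.codes):  # count each code at most once per excerpt
            n_excerpts[code] += 1
            articles[code].add(ex.article)
    return {code: (n_excerpts[code], len(articles[code])) for code in n_excerpts}


def phase_shares(excerpts, context):
    """Rounded percentage of a context's excerpts falling in each phase."""
    subset = [ex for ex in excerpts if any(ctx == context for ctx, _ in ex.codes)]
    counts = Counter(ex.phase for ex in subset)
    return {phase: round(100 * n / len(subset)) for phase, n in counts.items()}


# Toy data only; these are not excerpts from the included studies.
# Three outer-context excerpts carry four outer-context codes between them.
sample = [
    Excerpt("Article A", "implementation", (("outer", "fidelity monitoring and support"),)),
    Excerpt("Article A", "implementation", (("outer", "fidelity monitoring and support"),
                                            ("outer", "funding"))),
    Excerpt("Article B", "preparation", (("outer", "funding"),)),
    Excerpt("Article B", "sustainment", (("inner", "staffing"),)),
]

print(determinant_counts(sample))
print(phase_shares(sample, "outer"))
```

Counting distinct articles with a set per code mirrors how a determinant can appear in many excerpts from the same article.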

Finally, during the interpretation phase, descriptive and interpretive summaries of the data were written that included the main points from the report, sample quotations, and an interpretive narrative.

Quality Assessment

The quality of included studies was assessed using the Mixed Methods Appraisal Tool (MMAT), which provides a single scoring guide across qualitative, quantitative, and mixed methods studies (Pluye et al. 2011). Quality assessment was conducted based on coded data rather than overall study data (e.g., if we only coded qualitative findings from a mixed methods study because there was no quantitative data related to implementation, we only assessed the qualitative aspects of the study design). When multiple methods (i.e., qualitative and quantitative) were used to collect relevant data but use of the methods was independent and not considered “mixed,” quality assessment scores were based on items for qualitative and appropriate quantitative components without incorporating items for mixed methods studies (Palinkas et al. 2011). Single studies represented in multiple published articles were considered together and assigned a single quality score. Possible quality scores include 25%, 50%, 75%, or 100%, with studies meeting all relevant methodological requirements receiving 100% (total scores for included studies are reported in Table 2, and whether studies met relevant methodological requirements is reported in Appendix II). Each study was initially assessed by one researcher, with all quality assessment scores then verified by a second researcher. Conflicts were resolved through discussion until consensus was reached.
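Assuming the 2011 MMAT convention that a qualitative or quantitative component is appraised against four criteria and scored as the share of criteria met, the 25%-100% scale can be sketched as below. The text does not state how separately appraised qualitative and quantitative components were combined into one score; the sketch takes the lowest component score, mirroring the MMAT's weakest-component rule for mixed methods studies. That combination rule and the function names `component_score` and `study_score` are assumptions for illustration.

```python
def component_score(criteria_met):
    """Percentage of one MMAT component's four criteria that a study meets."""
    if len(criteria_met) != 4:
        raise ValueError("expected four criteria for a qualitative or quantitative component")
    return 25 * sum(bool(c) for c in criteria_met)


def study_score(components):
    """Overall score from the components whose data were coded.

    ASSUMPTION: when several components are appraised separately (without the
    mixed methods items), the overall score is the lowest component score.
    """
    if not components:
        raise ValueError("at least one coded component is required")
    return min(component_score(c) for c in components.values())


# Hypothetical appraisal: the qualitative component meets all four criteria,
# the quantitative descriptive component meets two of four.
example = {
    "qualitative": [True, True, True, True],
    "quantitative descriptive": [True, False, True, False],
}
print(study_score(example))  # prints 50
```

Taking the minimum is a conservative choice: a study cannot score higher than its weakest appraised component.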

Table 2.

Characteristics of included studies.

| Study | Setting | Intervention | Method of collection for relevant data | E | P | I | S | MMAT score |

|---|---|---|---|---|---|---|---|---|

| Allen 2012a | Community-based mental health, private practices (US) | TF-CBT | Survey of clinicians | ● | 50% | |||

| Allen 2012b | ● | |||||||

| Allen 2014 | Community-based mental health (US) | TF-CBT | Survey of clinicians | ● | 75% | |||

| Beidas 2016 | Community-based mental health (US) | TF-CBT | Survey of clinicians, administrative data | ● | ○ | ● | 50% | |

| Cohen 2016 | Residential treatment facilities (US) | TF-CBT | Survey of clinicians, fidelity checklist | ○● | 25% | |||

| Dorsey 2014 | Child welfare offices (US) | TF-CBT | Interviews of caregivers | ○ | 75% | |||

| Ebert 2012 | Community-based mental health (US) | TF-CBT | Survey of clinicians, administrators, and supervisors | ○● | 75% | |||

| Gleacher 2011* | Community-based mental health (US) | TF-CBT | Survey of clinicians | ● | ○ | 25% | ||

| Hanson 2014 | Not specified (US) | TF-CBT | Interviews of intervention trainers | ○● | 50% | |||

| Hoagwood 2007 | Community-based mental health, hospital clinics, schools (US) | TF-CBT | Case study | ○● | ○● | 25% | ||

| Jensen-Doss 2008 | Community-based mental health (US) | TF-CBT | Survey of clinicians, chart review | ● | ● | 50% | ||

| Lang 2015ⱡ | Community-based mental health (US) | TF-CBT | Survey of clients, clinicians, staff; focus groups with clinicians, administrators | ● | ○● | ● | 50% | |

| Morsette 2012* | Schools (US) | CBITS | Survey of clinicians | ● | ● | 50% | ||

| Murray 2013a | Community-based mental health (Zambia) | TF-CBT | Case study; interviews of clients, clinicians | ○● | ● | ○● | 75% | |

| Murray 2013b | ● | ○● | ||||||

| Murray 2014 | ● | ○● | ||||||

| Nadeem 2011 | Schools (US) | CBITS | Case study | ○● | ○● | ○● | 50% | |

| Nadeem 2016 | Schools (US) | CBITS | Interviews of clinicians, district staff | ○● | 75% | |||

| Sabalauskas 2014* | Community-based mental health (US) | TF-CBT | Survey of clinicians | ○ | 25% | |||

| Self-Brown 2016 ⱡ | Community-based mental health (US) | TF-CBT | Survey and interviews of caregivers | ○ | 75% | |||

| Sigel 2013 | Community-based mental health (US) | TF-CBT | Survey of clinicians | ○● | 50% | |||

| Wenocur 2016 | Homeless shelter (US) | TF-CBT | Case study | ● | ○ | ○● | 50% | |

| Woods-Jaeger 2017 | Community-based mental health (Kenya, Tanzania) | TF-CBT | Interviews of clinicians | ● | 100% |

○ = Excerpt(s) coded reflect outer context factors.

● = Excerpt(s) coded reflect inner context factors.

* Qualitative and quantitative data were collected, but only qualitative or only quantitative data were coded as relevant. Quality assessment is thus based on MMAT items for the qualitative or appropriate quantitative component only.

ⱡ Multiple methods (i.e., qualitative and quantitative) were used to collect relevant data, but use of the methods was not considered “mixed.” Quality assessment is thus based on MMAT items for qualitative and appropriate quantitative components and does not incorporate MMAT items for mixed methods studies.

CBITS = Cognitive Behavioral Intervention for Trauma in Schools. E = Exploration phase. I = Implementation phase. MMAT = Mixed Methods Appraisal Tool. P = Preparation phase. S = Sustainment phase. TF-CBT = Trauma-focused Cognitive Behavioral Therapy. US = United States.

Results

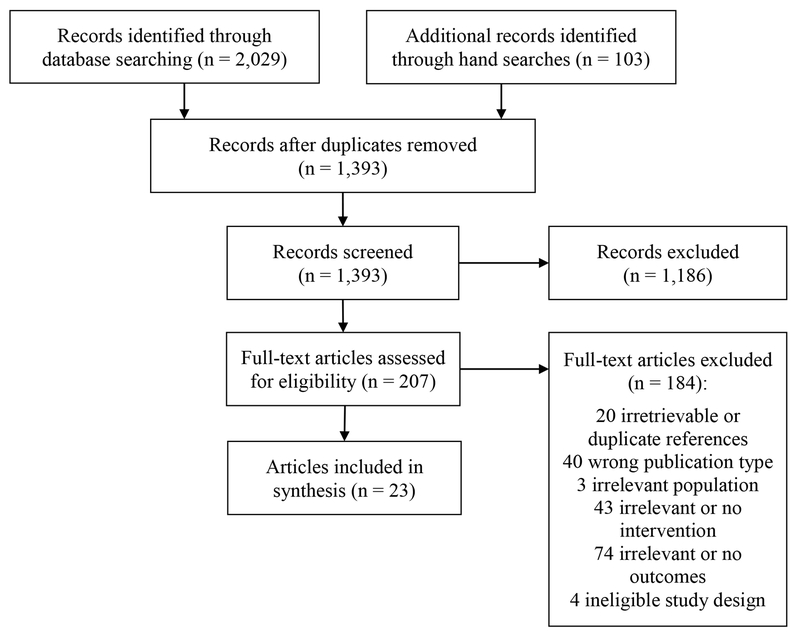

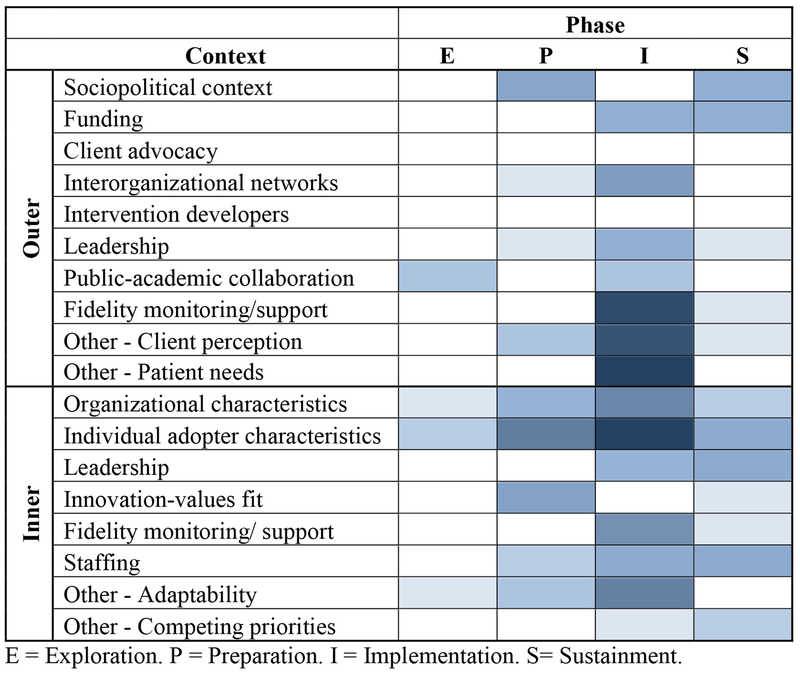

After initial screening (n = 1,393) and full-text review (n = 207), 23 articles were included for data abstraction and coding (Figure 1). Table 2 lists the characteristics of the included studies. Most studies assessed implementation determinants in community-based mental health settings within the United States; four studies of trauma-focused care implemented outside the United States were included. All included studies examined the implementation of either Trauma-Focused Cognitive Behavioral Therapy (TF-CBT; n = 20) or Cognitive Behavioral Intervention for Trauma in Schools (CBITS; n = 3). Most studies focused on determinants during the implementation phase, followed by the preparation phase; determinants in the exploration and sustainment phases were examined less often (Figure 2). We limit our discussion of results to the eight most commonly coded determinants. The quality of the included studies based on the MMAT varied, with four studies rated at 25%, ten at 50%, eight at 75%, and one at 100%. Where study quality was rated low, this was largely the result of incomplete reporting of methods rather than of methods deemed inadequate (Appendix II).
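The score distribution reported above is counted per article: articles reporting the same study share a single score, so the 23 articles correspond to fewer scored studies. The Python sketch below reproduces that tally from the MMAT column of Table 2. Treating the blank rows for Allen 2012b, Murray 2013b, and Murray 2014 as belonging to the study listed above them is an interpretation of the table layout, and the helper name `article_distribution` is hypothetical.

```python
from collections import Counter

# MMAT scores transcribed from Table 2, keyed by the article(s) reporting each study.
# Grouping of Allen 2012a/b and Murray 2013a/2013b/2014 is an interpretation of the
# blank rows in the table.
study_scores = {
    ("Allen 2012a", "Allen 2012b"): 50,
    ("Allen 2014",): 75,
    ("Beidas 2016",): 50,
    ("Cohen 2016",): 25,
    ("Dorsey 2014",): 75,
    ("Ebert 2012",): 75,
    ("Gleacher 2011",): 25,
    ("Hanson 2014",): 50,
    ("Hoagwood 2007",): 25,
    ("Jensen-Doss 2008",): 50,
    ("Lang 2015",): 50,
    ("Morsette 2012",): 50,
    ("Murray 2013a", "Murray 2013b", "Murray 2014"): 75,
    ("Nadeem 2011",): 50,
    ("Nadeem 2016",): 75,
    ("Sabalauskas 2014",): 25,
    ("Self-Brown 2016",): 75,
    ("Sigel 2013",): 50,
    ("Wenocur 2016",): 50,
    ("Woods-Jaeger 2017",): 100,
}


def article_distribution(scores):
    """Count articles (not studies) at each MMAT score."""
    dist = Counter()
    for articles, score in scores.items():
        dist[score] += len(articles)  # every article inherits its study's score
    return dict(sorted(dist.items()))


print(len(study_scores), "studies;", sum(len(a) for a in study_scores), "articles")
# prints: 20 studies; 23 articles
print(article_distribution(study_scores))
# prints: {25: 4, 50: 10, 75: 8, 100: 1}
```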

Figure 1. PRISMA flow diagram.

Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) flow diagram. Our search identified 2,029 records, of which 23 articles were included.

Figure 2. Density of codes by EPIS phase and factor.

Coding density by factor for each phase of implementation. Darker shades indicate a higher density of coding.

Outer Context

A total of 70 excerpts from 19 of the included articles were coded for outer context implementation determinants. Most of these were discussed as part of the implementation phase (74%), followed by the sustainment (13%) and preparation (10%) phases. We summarize findings regarding external fidelity monitoring and support and two determinants derived from the outer context ‘other’ category (client perception, and patient needs and resources). The remaining excerpts were coded for sociopolitical context (7 excerpts from 3 articles), interorganizational networks (6 excerpts from 3 articles), funding (6 excerpts from 6 articles), external leadership (5 excerpts from 2 articles), and public-academic collaboration (4 excerpts from 2 articles). No excerpts were coded for client advocacy or intervention developers.

Fidelity monitoring and support.

This code was applied to excerpts exploring how external supports targeting clinicians’ knowledge of, and fidelity to, the intervention related to implementation outcomes. Ultimately, 15 excerpts from 7 articles were coded as outer context fidelity monitoring and support (Cohen et al. 2016, Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Gleacher et al. 2011, Lang et al. 2015, Morsette et al. 2012, Nadeem et al. 2011, Sabalauskas, Ortolani, and McCall 2014).

Data collected from clinicians, staff, and administrators revealed that logistical and clinical supports, hosted in external training and learning collaborative environments by groups other than the intervention developers, generally facilitated implementation. Initial training alone was found to be insufficient in one study, with clinicians and staff expressing a desire for ongoing training and oversight (Sabalauskas, Ortolani, and McCall 2014). This was echoed by participants in another study, who reported periodic consultation and site visits as some of the most important components of their learning collaborative experience (Lang et al. 2015). One study found that Plan-Do-Study-Act (PDSA) cycles were particularly useful for administrators and supervisors, and less so for clinicians (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012). Improvement metrics (e.g., supervision time spent on TF-CBT, adherence to the treatment model) were useful to administrators and senior leaders, but not to supervisors and clinicians (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Lang et al. 2015). For clinicians, one study found intervention checklists to be helpful (Nadeem et al. 2011). Although such approaches for fidelity monitoring and support were generally found to facilitate implementation, the overall time and resources required for various stakeholders to engage in ongoing external supports may serve as a barrier to maximizing their benefits (Gleacher et al. 2011).

Client perception.

Excerpts coded as outer context other were further categorized as client perception when stakeholder beliefs about a specific intervention or clinical treatment more generally were related to an implementation outcome, such as the appropriateness of the intervention. Ultimately, 15 excerpts from 8 articles were coded as client perception (Dorsey, Conover, and Revillion Cox 2014, Hanson et al. 2014, Murray, Familiar, et al. 2013, Murray et al. 2014, Nadeem et al. 2011, Nadeem and Ringle 2016, Self-Brown et al. 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016).

Studies of caregivers found that care-seeking and continued engagement in treatment were influenced by previous experiences accessing mental health services and by fit between the family and clinician (Dorsey, Conover, and Revillion Cox 2014, Self-Brown et al. 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016). In one study, caregivers’ perceptions of the appropriateness of the evidence-based practice (EBP) influenced their decision to initiate treatment (Murray, Familiar, et al. 2013). National TF-CBT trainers also raised concerns about caregivers’ perceptions influencing engagement in treatment (Hanson et al. 2014). In studies of CBITS implementation, engaging parents prior to implementation was considered critical to successful delivery of care in schools, but lack of parent engagement in treatment remained a barrier (Nadeem et al. 2011, Nadeem and Ringle 2016). In Zambia, clinicians attributed poor TF-CBT session attendance to families’ familiarity with, and preference for, briefer treatments consisting of fewer sessions (Murray et al. 2014).

Patient needs and resources.

Excerpts coded as outer context other were further categorized as patient needs and resources when patient or caregiver characteristics affected treatment engagement and were related to an implementation outcome, such as fidelity to the intervention. Ultimately, 15 excerpts from 8 articles were coded for patient needs and resources (Dorsey, Conover, and Revillion Cox 2014, Hoagwood et al. 2007, Murray, Dorsey, et al. 2013, Murray, Familiar, et al. 2013, Murray et al. 2014, Self-Brown et al. 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016, Woods-Jaeger et al. 2017).

Multiple studies reported logistical barriers that influenced caregiver engagement in treatment, such as limited availability of appointment times and inconvenient appointment locations that were incompatible with caregiver schedules and access to transportation (Dorsey, Conover, and Revillion Cox 2014, Murray et al. 2014, Self-Brown et al. 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016). In Zambia, similar logistical barriers were addressed in several ways: shortened sessions were still offered to clients who arrived late, while fewer, longer sessions could be scheduled for clients who had to travel further distances (Murray, Dorsey, et al. 2013). Limited financial resources also influenced engagement in treatment (Murray, Familiar, et al. 2013, Murray et al. 2014, Woods-Jaeger et al. 2017, Self-Brown et al. 2016). Lay counselors in Kenya and Tanzania reported noticing that their clients were distracted by hunger and recognized the benefits of having a referral network with organizations that could help address economic needs outside of treatment; one counselor also offered snacks to clients prior to their sessions (Woods-Jaeger et al. 2017). One study reported deviating from manual-based interventions to address other patient needs, such as client comorbidities and family crises (Hoagwood et al. 2007).

Inner Context

A total of 80 excerpts from 20 of the included articles were coded for inner context implementation determinants. Just over half of these were discussed as part of the implementation phase (53%), followed by the preparation (24%) and sustainment (20%) phases. We summarize findings regarding organizational characteristics, individual adopter characteristics, internal fidelity monitoring and support, staffing, and one determinant derived from the inner context ‘other’ category (adaptability). The remaining excerpts were coded for internal leadership (7 excerpts from 4 articles), innovation-values fit (5 excerpts from 3 articles), and other (3 excerpts from 2 articles).

Organizational characteristics.

This code was applied to excerpts exploring how organizational characteristics, such as structure, climate, receptive context, absorptive capacity, and readiness for change, related to implementation outcomes. Ultimately, 13 excerpts from 8 articles were coded for organizational characteristics (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Gleacher et al. 2011, Jensen-Doss, Cusack, and de Arellano 2008, Lang et al. 2015, Murray, Familiar, et al. 2013, Nadeem et al. 2011, Nadeem and Ringle 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016).

Barriers related to absorptive capacity (especially the organization’s ability to use new knowledge) and receptive context (most often the organization’s ability to minimize competing demands) were common (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Jensen-Doss, Cusack, and de Arellano 2008, Lang et al. 2015, Murray, Familiar, et al. 2013, Nadeem et al. 2011, Nadeem and Ringle 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016). Clinicians reported that time demands to attend training, to meet productivity requirements, and to incorporate new approaches to assessment and treatment were barriers to implementation and sustainment (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Jensen-Doss, Cusack, and de Arellano 2008, Lang et al. 2015). Two studies suggested that insufficient organizational capacity to meet patient demand led to long waiting lists and reduced treatment initiation and completion (Murray, Familiar, et al. 2013, Wenocur, Parkinson-Sidorski, and Snyder 2016). Perceived organizational capacity to implement change was sometimes used as a screening criterion for selecting organizations to participate in implementation efforts (Gleacher et al. 2011). A study of scaling up a school-based intervention suggested that organizational culture and climate were critical to implementation success. In particular, leadership support to build staff buy-in for the EBP, dedicated time, and physical space to support the new practice facilitated successful implementation (Nadeem et al. 2011). In one study, having an implementation team composed of individuals within the organization was considered one of the most important facilitators of implementation (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012).

Individual adopter characteristics.

This code was applied to excerpts exploring the relationship between implementation outcomes and individuals’ goals, perceived need for change, and attitudes towards the intervention within organizations. Ultimately, 29 excerpts from 14 articles were coded for individual adopter characteristics (Allen, Gharagozloo, and Johnson 2012, Allen and Johnson 2012, Allen, Wilson, and Armstrong 2014, Beidas, Adams, et al. 2016, Cohen et al. 2016, Hanson et al. 2014, Hoagwood et al. 2007, Jensen-Doss, Cusack, and de Arellano 2008, Lang et al. 2015, Morsette et al. 2012, Murray et al. 2014, Nadeem et al. 2011, Nadeem and Ringle 2016, Sigel et al. 2013).

Individuals’ attitudes towards the innovation and perceived need for change influenced decisions to adopt the innovation and undergo training, as well as participation in training, in several studies (Nadeem et al. 2011, Murray et al. 2014, Sigel et al. 2013). In one international study, a strong belief that the innovation was appropriate yet flexible enough to fit the local context drove adoption (Murray et al. 2014). In a study examining the implementation of CBITS over a four-year period, clinicians who held positive attitudes about the EBP because of positive clinical experiences or improved patient outcomes were more likely to sustain the practice (Nadeem and Ringle 2016). Several studies suggested that clinician and supervisor buy-in improved with training and as clinicians and supervisors gained experience with the treatment (Hoagwood et al. 2007, Morsette et al. 2012, Jensen-Doss, Cusack, and de Arellano 2008, Beidas, Adams, et al. 2016, Lang et al. 2015, Allen, Wilson, and Armstrong 2014).

In a study examining perceived implementation challenges from the perspective of 19 national TF-CBT trainers, trainers expressed concerns that clinicians’ beliefs about the intervention and level of skill affected implementation fidelity (Hanson et al. 2014). Further, one study observed implementation challenges related to supervisors’ negative perceptions of protocols and clinicians’ attitudes towards manualized treatment (Hoagwood et al. 2007). Clinicians’ orientation prior to training, or their prior experience with the intervention, may influence their perceptions of the intervention’s value, their buy-in, and implementation fidelity (Jensen-Doss, Cusack, and de Arellano 2008, Allen, Gharagozloo, and Johnson 2012). While one study found no association between clinicians’ professional discipline, age, or years of experience and implementation of all TF-CBT components, another reported that fully licensed clinicians trained in TF-CBT were more likely than non-licensed providers to complete the model with fidelity (Allen, Gharagozloo, and Johnson 2012, Cohen et al. 2016).

Fidelity monitoring and support.

This code was applied to excerpts exploring the relationship between implementation outcomes and internal supports targeting clinicians’ knowledge of, and fidelity to, the intervention. Ultimately, 8 excerpts from 6 articles were coded for inner context fidelity monitoring and support (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Hoagwood et al. 2007, Murray, Familiar, et al. 2013, Murray et al. 2014, Nadeem et al. 2011, Nadeem and Ringle 2016).

Having an internal fidelity support system in place facilitated clinician buy-in and increased the acceptability of the EBP (Hoagwood et al. 2007, Nadeem et al. 2011, Nadeem and Ringle 2016, Murray, Familiar, et al. 2013). One study used clinical outcome data to secure continued financial support for program sustainability and, later, program expansion (Nadeem et al.). Supportive coaching, supervision, and monitoring of clinical outcome data positively affected EBP fidelity (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Murray, Familiar, et al. 2013, Murray et al. 2014).

Staffing.

This code was applied to excerpts exploring the relationship between hiring, retaining, or replacing employees and implementation outcomes. Ultimately, 10 excerpts from 7 articles were coded for staffing (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Hoagwood et al. 2007, Lang et al. 2015, Murray, Familiar, et al. 2013, Nadeem et al. 2011, Nadeem and Ringle 2016, Wenocur, Parkinson-Sidorski, and Snyder 2016).

Employee turnover and the organizational restructuring required to deliver trauma-focused interventions were common challenges to implementation (Ebert, Amaya-Jackson, Markiewicz, Kisiel, et al. 2012, Murray, Familiar, et al. 2013, Lang et al. 2015). In Zambia, community volunteers who assessed and referred potential clients were not formally contracted and would sometimes stop working or become unreachable (Murray, Familiar, et al. 2013). A study in the United States found that funding issues contributed to employee turnover (Hoagwood et al. 2007). Senior leaders in one study noted that turnover was particularly concerning with regard to the loss of investment in training (Lang et al. 2015). One study found that clinicians who changed schools, added a school to their caseload, or experienced a change in school administration did not continue offering the intervention (Nadeem and Ringle 2016). Other studies noted that organizations needed to hire and train more clinicians as demand for treatment increased: a homeless shelter that implemented TF-CBT planned to hire additional clinicians, while a group that implemented CBITS in schools required the schools to begin providing their own clinicians (Wenocur, Parkinson-Sidorski, and Snyder 2016, Nadeem et al. 2011).

Adaptability.

Excerpts coded under the inner context “other” category were further categorized as adaptability when stakeholders’ perceptions of an intervention’s ability to be modified to meet local needs were related to an implementation outcome, such as adoption of the intervention. Ultimately, 11 excerpts from 5 articles were coded as adaptability (Morsette et al. 2012, Murray, Dorsey, et al. 2013, Murray et al. 2014, Nadeem et al. 2011, Woods-Jaeger et al. 2017).

Three studies discussed the need for cultural adaptations to evidence-based trauma-focused care. In Zambia, TF-CBT was selected for implementation by a group of stakeholders who believed the core components were appropriate for the local culture but that examples, activities, and similar materials needed to be modified to be more relevant (Murray, Dorsey, et al. 2013). Lay counselors who were trained in the intervention reported liking both its structure and its flexibility (Murray et al. 2014). This sentiment was echoed by lay counselors in a study of TF-CBT implementation in Kenya and Tanzania, who stressed the importance of connecting the skills taught to cultural norms (Woods-Jaeger et al. 2017). Other modifications to the delivery of TF-CBT included engaging more family members and sending text messages to remind and encourage clients to stay engaged (Murray, Dorsey, et al. 2013). In the United States, CBITS was adapted for American Indian youth; specifically, tribal elders and healers were invited to participate in the initial treatment session by presenting American Indian perspectives on trauma and in the final treatment session by conducting ceremonies based on traditional healing practices (Morsette et al. 2012). Another study of CBITS, conducted in Louisiana after Hurricane Katrina, identified contextual modifications as paramount to successful implementation; these included addressing both the broader mental health needs and the limited resources and capacity of a community recovering from disaster (Nadeem et al. 2011).

Discussion

To our knowledge, this study is the first to systematically review the empirical literature on determinants of implementing evidence-based, trauma-focused interventions for children and youth. Systematic reviews of determinants are increasingly common, as they are a means of consolidating the literature in a specific clinical area and alerting stakeholders to potential determinants that they may face in implementation research or practice (Barnett et al. 2018, Pomey et al. 2013, Tricco et al. 2015, Vest et al. 2010). Understanding the contexts in which services are provided is fundamental to improving the quality of trauma-focused care. As noted by Hoagwood and Kolko (2009), “It is difficult and perhaps foolhardy to try to improve what you do not understand. Implementation of effective services in the absence of knowledge about the contexts of their delivery is likely to be impractical, inefficient, and costly” (p. 35). Given the high rates at which children and youth are exposed to trauma (Copeland et al. 2007, McLaughlin et al. 2013, Finkelhor et al. 2009, Hillis et al. 2016), the availability of evidence-based interventions to address trauma-related symptoms (Dorsey et al. 2017), and the scope of efforts to disseminate and implement trauma-focused interventions nationally (Amaya-Jackson et al. 2018, Ebert, Amaya-Jackson, Markiewicz, and Fairbank 2012), it is critical to take stock of what we currently know about implementation determinants.

Although few studies had the explicit objective of assessing determinants of implementing trauma-focused interventions and their impact on implementation outcomes, the results of this systematic review highlight the complexity of implementation, with important determinants identified at multiple levels and phases of implementation. Each of the determinants identified is a potential target for implementation strategies (Baker et al. 2015, Powell, Beidas, et al. 2017, Powell et al. 2015), though some are likely to be more malleable than others, and some may be more readily addressed by certain types of stakeholders. For example, some client-level determinants, such as financial insecurity, may be more difficult for clinicians and organizations to address, and determinants related to the financing of EBPs might be best addressed by policymakers and system leaders. Indeed, effective implementation and sustainment are more likely to be achieved where strong system-level financing strategies are in place (Jaramillo et al. 2018). While there is some evidence that implementation strategies prospectively tailored to address determinants are more effective than those that are not tailored (Baker et al. 2015), there is also evidence that a one-time assessment of determinants prior to an implementation effort may not be sufficient, as determinants are likely to change throughout the implementation process (Wensing 2017). The multilevel, multiphase determinants identified in this review underscore the importance of an ongoing approach to assessing determinants and suggest that implementation strategies may need to be adaptively tailored throughout implementation (Powell et al. 2019). Thus, efforts to prepare organizational leaders and clinicians to apply implementation strategies that match the needs of their organizations are essential (Amaya-Jackson et al. 2018, Powell et al. in press).

The preponderance of evidence for the influence of implementation determinants in this review is descriptive and based upon qualitative data. Qualitative methods are particularly well-suited to capturing contextual factors (QUALRIS 2018) and the exploratory nature of many of these studies is consistent with the developmental stage of implementation science (Chambers 2012). However, it is also important to move beyond lists of potential determinants and to seek a more robust understanding of causality in the field (Lewis, Klasnja, et al. 2018, Williams and Beidas 2018). To develop a richer understanding of how determinants interact to promote or inhibit implementation, it is recommended that future studies 1) engage a wide range of stakeholders to ensure that their vantage points are represented (Chambers and Azrin 2013); 2) apply well-established conceptual frameworks and theories that can promote comparability across studies (Birken et al. 2017, Proctor et al. 2012); 3) use psychometrically and pragmatically strong measures of implementation determinants (Glasgow and Riley 2013, Lewis, Mettert, et al. 2018, Powell, Stanick, et al. 2017, Stanick et al. 2018); and 4) leverage methods that can capture complexity and elucidate causal pathways through which determinants operate to influence implementation and clinical outcomes, including mixed methods (Aarons et al. 2012, Palinkas et al. 2011) and systems science approaches (Hovmand 2014, Zimmerman et al. 2016, Burke et al. 2015).

Consistent with prior research using the EPIS framework, few studies examined the exploration and sustainment phases (Novins et al. 2013, Moullin et al. 2019). This lack of focus on the earliest and latest phases of implementation is problematic, as we have much to learn about the factors that influence clinicians’, organizations’, and systems’ readiness to implement innovations (Weiner, Amick, and Lee 2008, Weiner 2009) as well as their ability to sustain them over time (Schell et al. 2013, Luke et al. 2014). One way to encourage research on the earlier and later phases of implementation is to use process models and measures, such as the EPIS model and the Stages of Implementation Completion measure, that explicitly address all phases of implementation (Aarons, Hurlburt, and Horwitz 2011, Saldana 2014). NCCTS has articulated implementation science-informed elements of its learning collaborative across the phases of the EPIS model, encouraging attention to each phase from exploration to (planning for) sustainment (Amaya-Jackson et al. 2018). Similarly, NCTSN has developed functional and translational products that address the need to focus on all phases of implementation, such as a guide for senior leaders that encourages consideration of fidelity and sustainment in the early phases of implementation (Landsverk 2012, NCTSN 2015, 2017, Agosti et al. 2016).

Finally, while nearly half of the excerpts focused on client-level determinants, these determinants are not represented in detail in many of the leading implementation determinant frameworks (Nilsen 2015), such as EPIS (Aarons, Hurlburt, and Horwitz 2011) and CFIR (Damschroder et al. 2009). Implementation science has primarily focused on provider- and organizational-level change, while client-level determinants have largely been the focus of clinical intervention developers. There is an opportunity for implementation researchers and practitioners to assess and address client-level determinants more thoroughly, integrate those factors into prevailing conceptual frameworks, and draw more deliberately and consistently upon the existing body of client-engagement research (McKay and Bannon Jr 2004, Gopalan et al. 2010).

Limitations & Strengths

A few limitations are worth noting. First, given the quantity, quality, and nature of the included studies, we can say little about which determinants influenced specific implementation outcomes. Aggregating findings across studies and linking determinants more precisely to implementation outcomes may be facilitated by coalescing around common conceptual frameworks and theories, as well as by improving methods for assessing and prioritizing determinants (as described above). Second, for efficiency, data extraction and quality assessment were not performed independently by two researchers; instead, one researcher coded each study and a second reviewed the coding to verify accuracy of interpretation. Third, our approach to quality assessment may not have been optimal for every study design included; we chose it because it is flexible and yields a single metric for comparing studies of heterogeneous designs. Finally, it is important to reiterate that the low quality ratings for many studies were due to incomplete reporting and therefore may or may not reflect methodological shortcomings.

Despite its limitations, this study employed a rigorous review approach: it adhered to a pre-registered protocol, involved a systematic search of the literature that built upon previous reviews of evidence-based psychosocial treatments for trauma, and relied upon a theory-driven approach guided by widely used determinant and process frameworks (Aarons, Hurlburt, and Horwitz 2011, Moullin et al. 2019, Proctor et al. 2011).

Conclusion

This study represents the first systematic review of determinants of implementing evidence-based psychosocial interventions for children and youth who experience symptoms as a result of trauma exposure. It advances the field by presenting multilevel and multiphase targets for intervention, allowing stakeholders engaging in implementation efforts to anticipate potential challenges and leverage points. Furthermore, this review suggests that, although the assessment of implementation determinants has almost become passé, we have much to learn about how to pragmatically assess and prioritize them; how they interact to influence implementation and clinical outcomes; and how we can design, select, and tailor implementation strategies to address them.

Supplementary Material

Footnotes

- Preliminary results were presented at the 4th Biennial Society for Implementation Research Collaboration conference in September 2017 in Seattle, Washington.

- Unpublished findings were presented at the 11th Annual Conference on the Science of Dissemination and Implementation in Health in December 2018 in Washington, D.C.

Conflict of Interest: The authors declare that they have no conflict of interest.

Ethical Approval: This article does not contain any studies with human participants performed by any of the authors.

Informed Consent: This article did not involve the collection of primary data; thus, informed consent was not relevant.

Contributor Information

Byron J. Powell, Brown School, Washington University in St. Louis.

Sheila V. Patel, Department of Health Policy and Management, University of North Carolina at Chapel Hill Gillings School of Global Public Health and RTI International.

Amber D. Haley, Department of Health Policy and Management, University of North Carolina at Chapel Hill Gillings School of Global Public Health and RTI International.

Emily R. Haines, Department of Health Policy and Management, University of North Carolina at Chapel Hill Gillings School of Global Public Health and RTI International.

Kathleen E. Knocke, Department of Health Policy and Management, University of North Carolina at Chapel Hill Gillings School of Global Public Health.

Shira Chandler, Department of Health Policy and Management, University of North Carolina at Chapel Hill Gillings School of Global Public Health.

Colleen Cary Katz, Silberman School of Social Work, Hunter College, City University of New York.

Heather Pane Seifert, Center for Child and Family Health.

George Ake, III, Duke University School of Medicine.

Lisa Amaya-Jackson, Center for Child and Family Health and Duke University School of Medicine.

Gregory A. Aarons, Child and Adolescent Services Research Center, University of California at San Diego School of Medicine.

References

- Aarons Gregory A, Fettes Danielle L, Sommerfeld David H, and Palinkas Lawrence A. 2012. “Mixed methods for implementation research: application to evidence-based practice implementation and staff turnover in community-based organizations providing child welfare services.” Child maltreatment 17 (1):67–79. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Aarons Gregory A, Hurlburt Michael, and Horwitz Sarah McCue. 2011. “Advancing a conceptual model of evidence-based practice implementation in public service sectors.” Administration and Policy in Mental Health and Mental Health Services Research 38 (1) :4–23. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Addis Michael E, Wade Wendy A, and Hatgis Christina. 1999. “Barriers to dissemination of evidence- based practices: Addressing practitioners’ concerns about manual- based psychotherapies.” Clinical Psychology: Science and Practice 6 (4):430–441. [Google Scholar]

- Agosti J, Ake GS, Amaya-Jackson L, Pane-Seifert H, Alvord A, Tise N, Fixen A, and Spencer JH. 2016. A guide for senior leadership in implementation collaboratives. Los Angeles, CA and Durham, NC: National Child traumatic Stress Network. [Google Scholar]

- Allen B, Gharagozloo L, and Johnson JC. 2012. “Clinician knowledge and utilization of empirically-supported treatments for maltreated children.” Child Maltreat 17 (1): 11–21. doi: 10.1177/1077559511426333. [DOI] [PubMed] [Google Scholar]

- Allen B, and Johnson JC. 2012. “Utilization and implementation of trauma-focused cognitive-behavioral therapy for the treatment of maltreated children.” Child Maltreat 17 (1):80–5. doi: 10.1177/1077559511418220. [DOI] [PubMed] [Google Scholar]

- Allen B, Wilson KL, and Armstrong NE. 2014. “Changing Clinicians’ Beliefs About Treatment for Children Experiencing Trauma: The Impact of Intensive Training in an Evidence-Based, Trauma-Focused Treatment.” Psychological Trauma-Theory Research Practice and Policy 6 (4):384–389. doi: 10.1037/a0036533. [DOI] [Google Scholar]

- Amaya-Jackson Lisa, Hagele Dana, Sideris John, Potter Donna, Ernestine C Briggs Leila Keen, Robert A Murphy Shannon Dorsey, Patchett Vanessa, and Ake George S. 2018. “Pilot to policy: statewide dissemination and implementation of evidence-based treatment for traumatized youth.” BMC health services research 18 (1):589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Amaya- Jackson Lisa, and DeRosa Ruth R. 2007. “Treatment considerations for clinicians in applying evidence- based practice to complex presentations in child trauma.” Journal of Traumatic Stress: Official Publication of The International Society for Traumatic Stress Studies 20 (4):379–390. [DOI] [PubMed] [Google Scholar]

- Anda RF, Felitti VJ, Bremner JD, Walker JD, Whitfield Ch., Perry BD, Dube Sh. R., and Giles WH. 2006. “The enduring effects of abuse and related adverse experiences in childhood.” European Archives of Psychiatry and Clinical Neuroscience 256 (3):174–186. doi: 10.1007/s00406-005-0624-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baker Richard, Camosso- Stefinovic Janette, Gillies Clare, Shaw Elizabeth J, Cheater Francine, Flottorp Signe, Robertson Noelle, Wensing Michel, Fiander Michelle, and Eccles Martin P. 2015. “Tailored interventions to address determinants of practice.” Cochrane Database of Systematic Reviews (4). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Barnett Miya L, Araceli Gonzalez, Jeanne Miranda, Chavira Denise A, and Lau Anna S. 2018. “Mobilizing community health workers to address mental health disparities for underserved populations: a systematic review.” Administration and Policy in Mental Health and Mental Health Services Research 45 (2): 195–211. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Becan Jennifer E, Bartkowski John P, Knight Danica K, Wiley Tisha RA, Ralph DiClemente, Lori Ducharme, Welsh Wayne N, Diana Bowser, Kathryn McCollister, and Matthew Hiller. 2018. “A model for rigorously applying the Exploration, Preparation, Implementation, Sustainment (EPIS) framework in the design and measurement of a large scale collaborative multi-site study.” Health & justice 6 (1):9. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beidas RS, Adams DR, Kratz HE, Jackson K, Berkowitz S, Zinny A, Cliggitt LP, DeWitt KL, Skriner L, and Evans A Jr. 2016. “Lessons learned while building a trauma-informed public behavioral health system in the City of Philadelphia.” Eval Program Plann 59:21–32. doi: 10.1016/j.evalprogplan.2016.07.004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beidas Rinad S, Stewart Rebecca E, Adams Danielle R, Fernandez Tara, Lustbader Susanna, Powell Byron J, Aarons Gregory A, Hoagwood Kimberly E, Evans Arthur C, and Hurford Matthew O. 2016. “A multi-level examination of stakeholder perspectives of implementation of evidence-based practices in a large urban publicly-funded mental health system.” Administration and Policy in Mental Health and Mental Health Services Research 43 (6):893–908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Birken Sarah A, Powell Byron J, Shea Christopher M, Haines Emily R, Kirk M Alexis, Leeman Jennifer, Rohweder Catherine, Damschroder Laura, and Presseau Justin. 2017. “Criteria for selecting implementation science theories and frameworks: results from an international survey.” Implementation Science 12 (1):124. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Burke Jessica G, Lich Kristen Hassmiller, Neal Jennifer Watling, Meissner Helen I, Yonas Michael, and Mabry Patricia L. 2015. “Enhancing dissemination and implementation research using systems science methods.” International journal of behavioral medicine 22 (3):283–291. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cane James, O’Connor Denise, and Michie Susan. 2012. “Validation of the theoretical domains framework for use in behaviour change and implementation research.” Implementation science 7 (1):37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chambers DA 2012. “Forward” In Dissemination and implementation research in health: Translating science to practice, vii–x. New York, NY: Oxford University Press. [Google Scholar]

- Chambers DA, and Azrin ST. 2013. “Partnership: a fundamental component of dissemination and implementation research.” Psychiatric Services 64 (6):509–511. [DOI] [PubMed] [Google Scholar]

- Cohen JA, Mannarino AP, Jankowski K, Rosenberg S, Kodya S, and Wolford GL 2nd. 2016. “A Randomized Implementation Study of Trauma-Focused Cognitive Behavioral Therapy for Adjudicated Teens in Residential Treatment Facilities.” Child Maltreat 21 (2) : 156–67. doi: 10.1177/1077559515624775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cook JM, Biyanova T, and Coyne JC. 2009. “Barriers to adoption of new treatments: an internet study of practicing community psychotherapists.” Adm Policy Ment Health 36 (2):83–90. doi: 10.1007/s10488-008-0198-3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Copeland WE, Keeler G, Angold A, and Costello EJ. 2007. “Traumatic events and posttraumatic stress in childhood.” Arch Gen Psychiatry 64 (5):577–84. doi: 10.1001/archpsyc.64.5.577. [DOI] [PubMed] [Google Scholar]

- Damschroder Laura J, Aron David C, Keith Rosalind E, Kirsh Susan R, Alexander Jeffery A, and Lowery Julie C. 2009. “Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science.” Implementation science 4 (1):50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorsey S, Briggs EC, and Woods BA. 2011. “Cognitive-behavioral treatment for posttraumatic stress disorder in children and adolescents.” Child Adolesc Psychiatr Clin N Am 20 (2):255–69. doi: 10.1016/j.chc.2011.01.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorsey S, Conover KL, and Revillion Cox J. 2014. “Improving foster parent engagement: using qualitative methods to guide tailoring of evidence-based engagement strategies.” J Clin Child Adolesc Psychol 43 (6):877–89. doi: 10.1080/15374416.2013.876643. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dorsey S, McLaughlin KA, Kerns SEU, Harrison JP, Lambert HK, Briggs EC, Revillion Cox J, and Amaya-Jackson L. 2017. “Evidence Base Update for Psychosocial Treatments for Children and Adolescents Exposed to Traumatic Events.” J Clin Child Adolesc Psychol 46 (3):303–330. doi: 10.1080/15374416.2016.1220309. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ebert L, Amaya-Jackson L, Markiewicz JM, Kisiel C, and Fairbank JA. 2012. “Use of the breakthrough series collaborative to support broad and sustained use of evidence-based trauma treatment for children in community practice settings.” Adm Policy Ment Health 39 (3):187–99. doi: 10.1007/s10488-011-0347-y. [DOI] [PubMed] [Google Scholar]

- Ebert Lori, Amaya-Jackson Lisa, Markiewicz Jan, and Fairbank J. 2012. “Development and application of the NCCTS learning collaborative model for the implementation of evidence-based child trauma treatment.” Dissemination and implementation of evidence-based psychological interventions :97–123. [Google Scholar]

- Felitti Vincent J. M. D., Facp, Anda Robert F. M. D., Ms, Nordenberg Dale M. D., Williamson David F. M. S., PhD, Spitz Alison M. M. S., Edwards Valerie B. A., Koss Mary P. PhD, Marks James S. M. D., and Mph. 1998. “Relationship of Childhood Abuse and Household Dysfunction to Many of the Leading Causes of Death in Adults: The Adverse Childhood Experiences (ACE) Study.” American Journal of Preventive Medicine 14 (4):245–258. doi: 10.1016/S0749-3797(98)00017-8. [DOI] [PubMed] [Google Scholar]

- Finkelhor D, Turner H, Ormrod R, and Hamby SL. 2009. “Violence, abuse, and crime exposure in a national sample of children and youth.” Pediatrics 124 (5):1411–23. doi: 10.1542/peds.2009-0467. [DOI] [PubMed] [Google Scholar]

- Flottorp Signe A, Oxman Andrew D, Krause Jane, Musila Nyokabi R, Wensing Michel, Godycki-Cwirko Maciek, Baker Richard, and Eccles Martin P. 2013. “A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice.” Implementation Science 8 (1):35. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Forman Jane, and Damschroder Laura. 2007. “Qualitative content analysis” In Empirical methods for bioethics: A primer, 39–62. Emerald Group Publishing Limited. [Google Scholar]

- Forsner T, Hansson J, Brommels M, Wistedt AA, and Forsell Y. 2010. “Implementing clinical guidelines in psychiatry: a qualitative study of perceived facilitators and barriers.” BMC Psychiatry 10:8. doi: 10.1186/1471-244x-10-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Garland Ann F, Lauren Brookman-Frazee, Hurlburt Michael S, Accurso Erin C, Zoffness Rachel J, Haine-Schlagel Rachel, and Ganger William. 2010. “Mental health care for children with disruptive behavior problems: A view inside therapists’ offices.” Psychiatric Services 61 (8):788–795. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gillies D, Taylor F, Gray C, O’Brien L, and D’Abrew N. 2012. “Psychological therapies for the treatment of post-traumatic stress disorder in children and adolescents.” Cochrane Database Syst Rev 12:Cd006726. doi: 10.1002/14651858.CD006726.pub2. [DOI] [PubMed] [Google Scholar]

- Glasgow Russell E, and Riley William T. 2013. “Pragmatic measures: what they are and why we need them.” American Journal of Preventive Medicine 45 (2):237–243. [DOI] [PubMed] [Google Scholar]

- Gleacher AA, Nadeem E, Moy AJ, Whited AL, Albano AM, Radigan M, Wang R, Chassman J, Myrhol-Clarke B, and Hoagwood KE. 2011. “Statewide CBT Training for Clinicians and Supervisors Treating Youth: The New York State Evidence Based Treatment Dissemination Center.” J Emot Behav Disord 19 (3):182–192. doi: 10.1177/1063426610367793. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gopalan Geetha, Goldstein Leah, Klingenstein Kathryn, Sicher Carolyn, Blake Clair, and McKay Mary M. 2010. “Engaging families into child mental health treatment: Updates and special considerations.” Journal of the Canadian Academy of Child and Adolescent Psychiatry / Journal de l’Académie canadienne de psychiatrie de l’enfant et de l’adolescent 19 (3):182–196. [PMC free article] [PubMed] [Google Scholar]

- Grasso Damion J, Dierkhising Carly B, Branson Christopher E, Ford Julian D, and Lee Robert. 2015. “Developmental patterns of adverse childhood experiences and current symptoms and impairment in youth referred for trauma-specific services.” Journal of abnormal child psychology 44 (5):871–886. [DOI] [PubMed] [Google Scholar]

- Hanson RF, Gros KS, Davidson TM, Barr S, Cohen J, Deblinger E, Mannarino AP, and Ruggiero KJ. 2014. “National trainers’ perspectives on challenges to implementation of an empirically-supported mental health treatment.” Adm Policy Ment Health 41 (4):522–34. doi: 10.1007/s10488-013-0492-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hillis S, Mercy J, Amobi A, and Kress H. 2016. “Global Prevalence of Past-year Violence Against Children: A Systematic Review and Minimum Estimates.” Pediatrics 137 (3):e20154079. doi: 10.1542/peds.2015-4079.

- Hoagwood Kimberly Eaton, Vogel Juliet M., Levitt Jessica Mass, D’Amico Peter J., Paisner Wendy I., and Kaplan Sandra J. 2007. “Implementing an evidence-based trauma treatment in a state system after September 11: The CATS project.” Journal of the American Academy of Child & Adolescent Psychiatry 46 (6):773–779. doi: 10.1097/chi.0b013e3180413def.

- Hoagwood Kimberly, and Kolko David J. 2009. “Introduction to the special section on practice contexts: A glimpse into the nether world of public mental health services for children and families.” Springer.

- Horowitz Karyn, McKay Mary, and Marshall Randall. 2005. “Community violence and urban families: Experiences, effects, and directions for intervention.” American Journal of Orthopsychiatry 75 (3):356–368.

- Hovmand Peter S. 2014. Community Based System Dynamics. Springer.

- Jaramillo Elise Trott, Willging Cathleen E, Green Amy E, Gunderson Lara M, Fettes Danielle L, and Aarons Gregory A. 2018. “‘Creative Financing’: Funding Evidence-Based Interventions in Human Service Systems.” The Journal of Behavioral Health Services & Research: 1–18.

- Jensen-Doss A, Cusack KJ, and de Arellano MA. 2008. “Workshop-based training in trauma-focused CBT: an in-depth analysis of impact on provider practices.” Community Ment Health J 44 (4):227–44. doi: 10.1007/s10597-007-9121-8.

- Kahana Shoshana Y, Feeny Norah C, Youngstrom Eric A, and Drotar Dennis. 2006. “Posttraumatic stress in youth experiencing illnesses and injuries: An exploratory meta-analysis.” Traumatology 12 (2):148–161.

- Kohl PL, Schurer J, and Bellamy JL. 2009. “The State of Parent Training: Program Offerings and Empirical Support.” Fam Soc 90 (3):248–254. doi: 10.1606/1044-3894.3894.

- Landsverk J. 2012. “Methods development in dissemination & implementation: Implications for implementing and sustaining interventions in child welfare and child mental health.” [Webinar]. UCLA-Duke National Center for Child Traumatic Stress, accessed February 23. https://learn.nctsn.org/course/view.php?id=285.

- Lang JM, Franks RP, Epstein C, Stover C, and Oliver JA. 2015. “Statewide dissemination of an evidence-based practice using Breakthrough Series Collaboratives.” Children and Youth Services Review 55:201–209. doi: 10.1016/j.childyouth.2015.06.005.

- Lewis Cara C, Klasnja Predrag, Powell Byron, Tuzzio Leah, Jones Salene, Walsh-Bailey Callie, and Weiner Bryan. 2018. “From classification to causality: Advancing understanding of mechanisms of change in implementation science.” Frontiers in Public Health 6:136.

- Lewis Cara C, Mettert Kayne D, Dorsey Caitlin N, Martinez Ruben G, Weiner Bryan J, Nolen Elspeth, Stanick Cameo, Halko Heather, and Powell Byron J. 2018. “An updated protocol for a systematic review of implementation-related measures.” Systematic Reviews 7 (1):66.

- Luke Douglas A, Calhoun Annaliese, Robichaux Christopher B, Elliott Michael B, and Moreland-Russell Sarah. 2014. “The Program Sustainability Assessment Tool: A New Instrument for Public Health Programs.” Preventing Chronic Disease 11.

- McKay Mary M., and Bannon William M. Jr. 2004. “Engaging families in child mental health services.” Child and Adolescent Psychiatric Clinics of North America 13 (4):905–921. doi: 10.1016/j.chc.2004.04.001.

- McLaughlin KA, Koenen KC, Hill ED, Petukhova M, Sampson NA, Zaslavsky AM, and Kessler RC. 2013. “Trauma exposure and posttraumatic stress disorder in a national sample of adolescents.” J Am Acad Child Adolesc Psychiatry 52 (8):815–830.e14. doi: 10.1016/j.jaac.2013.05.011.

- Morsette Aaron, van den Pol Richard, Schuldberg David, Swaney Gyda, and Stolle Darrell. 2012. “Cognitive behavioral treatment for trauma symptoms in American Indian youth: Preliminary findings and issues in evidence-based practice and reservation culture.” Advances in School Mental Health Promotion 5 (1):51–62.

- Moullin JC, Dickson KS, Stadnick NA, Rabin B, and Aarons GA. 2019. “Systematic review of the Exploration, Preparation, Implementation, Sustainment (EPIS) framework.” Implement Sci 14 (1):1. doi: 10.1186/s13012-018-0842-6.

- Murray LK, Dorsey S, Skavenski S, Kasoma M, Imasiku M, Bolton P, Bass J, and Cohen JA. 2013. “Identification, modification, and implementation of an evidence-based psychotherapy for children in a low-income country: the use of TF-CBT in Zambia.” Int J Ment Health Syst 7 (1):24. doi: 10.1186/1752-4458-7-24.

- Murray LK, Familiar I, Skavenski S, Jere E, Cohen J, Imasiku M, Mayeya J, Bass JK, and Bolton P. 2013. “An evaluation of trauma focused cognitive behavioral therapy for children in Zambia.” Child Abuse Negl 37 (12):1175–85. doi: 10.1016/j.chiabu.2013.04.017.

- Murray LK, Skavenski S, Michalopoulos LM, Bolton PA, Bass JK, Familiar I, Imasiku M, and Cohen J. 2014. “Counselor and client perspectives of Trauma-focused Cognitive Behavioral Therapy for children in Zambia: a qualitative study.” J Clin Child Adolesc Psychol 43 (6):902–14. doi: 10.1080/15374416.2013.859079.

- Nadeem E, Jaycox LH, Kataoka SH, Langley AK, and Stein BD. 2011. “Going to Scale: Experiences Implementing a School-Based Trauma Intervention.” School Psych Rev 40 (4):549–68.

- Nadeem E, and Ringle VA. 2016. “De-adoption of an Evidence-Based Trauma Intervention in Schools: A Retrospective Report from an Urban School District.” School Mental Health 8 (1):132–143. doi: 10.1007/s12310-016-9179-y.

- NCTSN. 2015. “Implementation summit on evidence based treatments and trauma informed practices: Plenary, products and resources.” UCLA-Duke National Center for Child Traumatic Stress, accessed February 23. https://learn.nctsn.org/course/view.php?id=443.

- NCTSN. 2017. “Learning series on implementation: Foundational concepts, readiness preparation, leadership and sustainability.” UCLA-Duke National Center for Child Traumatic Stress, accessed February 23. https://learn.nctsn.org/course/view.php?id=466&section=0.

- Nilsen P. 2015. “Making sense of implementation theories, models and frameworks.” Implement Sci 10:53. doi: 10.1186/s13012-015-0242-0.

- Novins Douglas K, Green Amy E, Legha Rupinder K, and Aarons Gregory A. 2013. “Dissemination and implementation of evidence-based practices for child and adolescent mental health: A systematic review.” Journal of the American Academy of Child & Adolescent Psychiatry 52 (10):1009–1025.e18.

- Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, and Landsverk J. 2011. “Mixed Method Designs in Implementation Research.” Adm Policy Ment Health 38 (1):44–53. doi: 10.1007/s10488-010-0314-z.

- Pluye P, Robert E, Cargo M, Bartlett G, O’Cathain A, Griffiths F, Boardman F, Gagnon MP, and Rousseau MC. 2011. “A mixed methods appraisal tool for systematic mixed studies reviews: version 2011.” Department of Family Medicine, McGill University, accessed June 1. http://mixedmethodsappraisaltoolpublic.pbworks.com.

- Pomey Marie-Pascale, Forest Pierre-Gerlier, Sanmartin Claudia, DeCoster Carolyn, Clavel Nathalie, Warren Elaine, Drew Madeleine, and Noseworthy Tom. 2013. “Toward systematic reviews to understand the determinants of wait time management success to help decision-makers and managers better manage wait times.” Implementation Science 8 (1):61.

- Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, and Mandell DS. 2017. “Methods to improve the selection and tailoring of implementation strategies.” The Journal of Behavioral Health Services & Research 44 (2):177–194.

- Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, McHugh SM, and Weiner BJ. 2019. “Enhancing the impact of implementation strategies in healthcare: A research agenda.” Front Public Health 7 (3):1–9.

- Powell BJ, Hausmann-Stabile C, and McMillen JC. 2013. “Mental health clinicians’ experiences of implementing evidence-based treatments.” J Evid Based Soc Work 10 (5):396–409. doi: 10.1080/15433714.2012.664062.

- Powell BJ, Patel SV, and Haley AD. 2017. “A systematic review of barriers and facilitators to implementing trauma-focused interventions for children and youth.” In PROSPERO International Prospective Register of Systematic Reviews. England: Centre for Reviews and Dissemination, University of York.

- Powell BJ, Haley AD, Patel SV, Amaya-Jackson L, Glienke B, Blythe M, Lengnick-Hall R, McCrary S, Beidas RS, Lewis CC, Aarons GA, Wells KB, Saldana L, McKay MM, and Weinberger M. In press. “Improving the implementation and sustainment of evidence-based practices in community mental health organizations: A study protocol for a matched-pair cluster randomized pilot study of the Collaborative Organizational Approach to Selecting and Tailoring Implementation Strategies (COASTIS).” Implementation Science Communications.

- Powell Byron J, Mandell David S, Hadley Trevor R, Rubin Ronnie M, Evans Arthur C, Hurford Matthew O, and Beidas Rinad S. 2017. “Are general and strategic measures of organizational context and leadership associated with knowledge and attitudes toward evidence-based practices in public behavioral health settings? A cross-sectional observational study.” Implementation Science 12 (1):64.

- Powell Byron J, McMillen J Curtis, Hawley Kristin M, and Proctor Enola K. 2013. “Mental health clinicians’ motivation to invest in training: Results from a practice-based research network survey.” Psychiatric Services 64 (8):816–818.

- Powell Byron J, Stanick Cameo F, Halko Heather M, Dorsey Caitlin N, Weiner Bryan J, Barwick Melanie A, Damschroder Laura J, Wensing Michel, Wolfenden Luke, and Lewis Cara C. 2017. “Toward criteria for pragmatic measurement in implementation research and practice: a stakeholder-driven approach using concept mapping.” Implementation Science 12 (1):118.

- Powell Byron J, Waltz Thomas J, Chinman Matthew J, Damschroder Laura J, Smith Jeffrey L, Matthieu Monica M, Proctor Enola K, and Kirchner JoAnn E. 2015. “A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project.” Implementation Science 10 (1):21.

- Proctor EK, Powell BJ, Baumann AA, Hamilton AM, and Santens RL. 2012. “Writing implementation research grant proposals: ten key ingredients.” Implementation Science 7 (1):96.

- Proctor EK, Silmere H, Raghavan R, Hovmand P, Aarons GA, Bunger A, Griffey R, and Hensley M. 2011. “Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda.” Administration and Policy in Mental Health and Mental Health Services Research 38 (2):65–76.

- QUALRIS. 2018. Qualitative research in implementation science. Bethesda, MD: National Cancer Institute.

- Raghavan R, Inkelas M, Franke T, and Halfon N. 2007. “Administrative barriers to the adoption of high-quality mental health services for children in foster care: a national study.” Adm Policy Ment Health 34 (3):191–201. doi: 10.1007/s10488-006-0095-6.

- Raghavan Ramesh, Inoue Megumi, Ettner Susan L, Hamilton Barton H, and Landsverk John. 2010. “A preliminary analysis of the receipt of mental health services consistent with national standards among children in the child welfare system.” American Journal of Public Health 100 (4):742–749.

- Rapp CA, Etzel-Wise D, Marty D, Coffman M, Carlson L, Asher D, Callaghan J, and Holter M. 2010. “Barriers to evidence-based practice implementation: results of a qualitative study.” Community Ment Health J 46 (2):112–8. doi: 10.1007/s10597-009-9238-z.

- Sabalauskas Kara L., Ortolani Charles L., and McCall Matthew J. 2014. “Moving from Pathology to Possibility: Integrating Strengths-Based Interventions in Child Welfare Provision.” Child Care in Practice 20 (1):120–134.

- Saldana Lisa. 2014. “The stages of implementation completion for evidence-based practice: protocol for a mixed methods study.” Implementation Science 9 (1):43.

- Schell Sarah F, Luke Douglas A, Schooley Michael W, Elliott Michael B, Herbers Stephanie H, Mueller Nancy B, and Bunger Alicia C. 2013. “Public health program capacity for sustainability: a new framework.” Implementation Science 8 (1):1.

- Self-Brown S, Tiwari A, Lai B, Roby S, and Kinnish K. 2016. “Impact of Caregiver Factors on Youth Service Utilization of Trauma-Focused Cognitive Behavioral Therapy in a Community Setting.” Journal of Child and Family Studies 25 (6):1871–1879. doi: 10.1007/s10826-015-0354-9.