Abstract

The identification of sea turtle behaviours is a prerequisite to predicting the activities and time-budget of these animals in their natural habitat over the long term. However, this is hampered by a lack of reliable methods that enable the detection and monitoring of certain key behaviours such as feeding. This study proposes a combined approach that automatically identifies the different behaviours of free-ranging sea turtles through the use of animal-borne multi-sensor recorders (accelerometer, gyroscope and time-depth recorder), validated by animal-borne video-recorder data. We show here that the combination of supervised learning algorithms and multi-signal analysis tools can provide accurate inferences of the behaviours expressed, including feeding and scratching behaviours that are of crucial ecological interest for sea turtles. Our procedure uses multi-sensor miniaturized loggers that can be deployed on free-ranging animals with minimal disturbance. It provides an easily adaptable and replicable approach for the long-term automatic identification of the different activities and determination of time-budgets in sea turtles. This approach should also be applicable to a broad range of other species and could significantly contribute to the conservation of endangered species by providing detailed knowledge of key animal activities such as feeding, travelling and resting.

Keywords: supervised learning algorithms, accelerometer, sea turtle, animal-borne camera, behavioural classification, marine ecology

1. Introduction

It is essential to assess the feeding behaviours of free-ranging animals in order to estimate their time budgets, and thus understand how these animals maximize their fitness [1,2]. However, investigating the foraging behaviour of sea turtles in their natural environment remains a significant challenge, as it is impossible to obtain long-term behavioural data through visual observations alone. Although some studies have provided relevant information on sea turtle diet through post-mortem stomach content analysis or the deployment of animal-borne video-recorders [3–5], the proportion of time that sea turtles allocate to feeding activities in the long term remains unknown. Time-depth recorders (TDR) have been used to record the dive profiles and durations of free-ranging sea turtles and have provided insights into their underwater activities [6–8]. However, a number of authors have underlined the limits of focusing on dive profile, as foraging activity cannot be distinguished from transit or resting phases [9,10]. The joint use of TDR and video-recorders revealed that the typical dive types described in [11,12] could not be associated with specific activities such as travelling, resting or foraging [13,14].

Devices combining miniaturized tri-axial accelerometers and TDR have been described as a powerful tool to improve the identification of fine-scale behaviours in animals that cannot easily be monitored by visual observation [15–17]. Such devices have been deployed to study the behaviour and dive patterns of loggerheads (Caretta caretta, [18]), green turtles (Chelonia mydas, [19]) and leatherbacks (Dermochelys coriacea, [20]) during the inter-nesting period. However, the acceleration signals used in these studies to identify the in-water behaviours of sea turtles were not validated against simultaneous visual observations, which may have led to misidentifications and significant biases in the interpretation of the data.

A new approach was therefore necessary to reliably identify the underwater behaviours of free-ranging sea turtles without using direct visual observation (which is usually impossible) or video recordings, which are limited to short-term studies (a few hours) because of their high power consumption. Accelerometers permit the identification of feeding activity and time budget in marine animals such as seals and penguins by recording head movements that are likely to correspond to prey captures [21–23]. For the same purpose, accelerometers have been placed on the beak [24–27] or the top of the head [28] of sea turtles to record beak-openings and capture attempts. However, placing the device in this way is likely to result in a significant disturbance for the individuals and cannot be considered for long-term use (up to several weeks). It was therefore crucial to develop a protocol for the long-term recording and identification of sea turtle feeding activities that minimizes disturbance to the animals while making optimal use of the subtle variations in data acquired by loggers that are mounted on the carapace rather than the head.

Further work is needed to validate the identification of sea turtle underwater behaviours from data acquired by animal-borne sensors. In particular, before attempting to provide new insights into the at-sea behaviours of sea turtles in natural conditions, one needs to identify these behaviours automatically and correctly, including those that are hard to detect but play a key role, such as feeding, from data acquired in a way that minimizes disturbance to the equipped animal. The aim of our study is therefore to develop a new approach that fulfils this need. Accordingly, we use the behavioural results obtained for the turtles only to illustrate the output of our approach, without attempting to interpret them biologically. Although sea turtle behaviours have mainly been inferred from combined acceleration and depth data, a gyroscope (which records angular velocity) can provide further relevant information for remote behavioural identification [29–31]. We therefore deployed loggers combining an accelerometer, a gyroscope and a TDR on the carapace of free-ranging immature green turtles. The logger device also housed a video-recorder, which provided visual evidence to validate the logger-based interpretation of behaviours, since our approach ultimately aims to infer behaviours from the loggers alone. Surface behaviours were identified separately from depth data. We tested a set of methods, including automatic segmentation and supervised learning algorithms, to infer diving behaviours from the signals provided by the accelerometer, gyroscope and TDR. The validity of our approach was assessed using confusion matrices and by comparing the inferred activity budgets with those obtained from video recordings.

2. Material and methods

2.1. Data collection from free-ranging green turtles

The fieldwork was carried out from February 2018 to May 2019 in Grande Anse d'Arlet (14°50′ N, 61°09′ W), Martinique, France. We deployed CATS (Customized Animal Tracking Solutions, Germany) devices on free-ranging immature green turtles for periods ranging from several hours to several days. A CATS device comprises a video-recorder (1920 × 1080 pixels at 30 frames s−1) combined with a tri-axial accelerometer, a tri-axial gyroscope and a TDR (electronic supplementary material, figure S1). At maximum battery capacity, a device could record up to 18 h of video footage and 48 h of the other data. The devices were programmed to record acceleration and angular velocity (gyroscope) at 20 or 50 Hz depending on the recording capacity of the logger; the 50 Hz data were then resampled to 20 Hz by linear interpolation to homogenize the dataset. Depth was recorded at 1 Hz using a pressure sensor with a range of 0 to 2000 m and an accuracy of 0.2 m.
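The resampling step can be sketched as follows. This is a minimal Python illustration, not the authors' R code: it assumes a uniformly sampled signal whose first sample is at t = 0, and the function name `resample_linear` is hypothetical.

```python
import math


def resample_linear(values, fs_in, fs_out):
    """Resample a uniformly sampled signal from fs_in to fs_out (Hz) by linear interpolation.

    Samples are assumed to start at t = 0; output samples are placed at t = k / fs_out
    for every k whose time stamp falls within the original record.
    """
    n_in = len(values)
    if n_in == 0:
        return []
    # Number of output samples whose time stamp does not exceed the last input sample.
    n_out = math.floor((n_in - 1) * fs_out / fs_in + 1e-9) + 1
    out = []
    for k in range(n_out):
        pos = k * fs_in / fs_out          # fractional index into the input signal
        i = min(int(pos), n_in - 1)
        if i == n_in - 1:                 # last input sample: nothing to interpolate towards
            out.append(float(values[i]))
            continue
        frac = pos - i
        out.append(values[i] + frac * (values[i + 1] - values[i]))
    return out


# Example: one second of a 50 Hz ramp becomes 21 samples at 20 Hz.
acc_50hz = [0.1 * i for i in range(51)]
acc_20hz = resample_linear(acc_50hz, fs_in=50, fs_out=20)
print(len(acc_20hz))  # 21
```

This sketch interpolates directly and applies no anti-aliasing low-pass filter before downsampling.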

The relatively shallow depths of the area allowed free divers to capture the turtles by hand, as described in Nivière et al. [32]. Once an individual had been caught, it was placed on a boat and identified by scanning its passive integrated transponder (PIT), or tagged with a new PIT if it had not previously been tagged. It was then weighed and its carapace length was measured (electronic supplementary material, table S1). The device was attached to the carapace using four suction cups. Air was manually expelled from the cups, which were held in place by a galvanic timed-release system. Once seawater had dissolved the releases, the positive buoyancy of the device (23.3 × 13.5 × 4 cm, 0.785 kg) caused it to detach several hours after deployment. Devices were located using a goniometer (RXG-134, CLS, France) tracking an Argos SPOT-363A tag (MK10, Wildlife Computers, Redmond, WA, USA) glued to the CATS device. Instruments were deployed on 37 individuals, but complete datasets including video, acceleration, gyroscope and depth values were recovered for only 13 individuals (electronic supplementary material, table S1).

2.2. Processing of video data and behavioural labelling

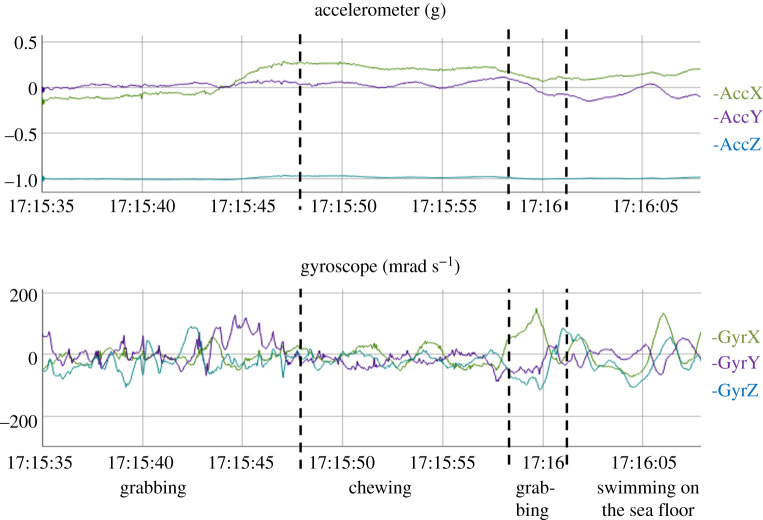

The video footage was watched to identify the various behaviours and determine their start and end times to the nearest 0.1 s. The acceleration, angular velocity and depth data corresponding to each behavioural phase were visualized using R software (version 3.5.3) and the package rblt (figures 1 and 2; [33]). The 46 behaviours identified were clustered into categories according to their similarities (the definitions of the various behaviours are available in electronic supplementary material, table S2). We retained the seven most frequently expressed categories for the multi-sensor signals, namely ‘Breathing’, ‘Feeding’, ‘Gliding’, ‘Swimming’, ‘Resting’, ‘Scratching’ and ‘Staying at the surface’. All other behaviours were very infrequent and were grouped into an eighth category labelled ‘Other’.

Figure 1.

Raw acceleration, gyroscope and depth profiles for several behaviours expressed by turtle #12.

Figure 2.

Raw acceleration and gyroscope signals obtained for the feeding behaviours expressed by turtle #6. The definitions of the behaviours are available in electronic supplementary material, table S2.

2.3. Analysis of the angular velocity and acceleration data

The device was installed on the carapace in a tilted position along the longitudinal axis to obtain video images of the head. This biased the acceleration and angular speed values along the surge (i.e. back-to-front) and heave (bottom-to-top) body axes, which therefore had to be corrected (see R-script in electronic supplementary material). The static acceleration vector (i.e. the component due to gravity) was obtained by separately averaging the acceleration values (ax, ay and az) along the surge, sway (right-to-left) and heave axes, respectively, over a centred running window of Δt = 2 s, the smallest window for which the norm of the static vector remained close to 1 g (9.81 m s−2) for almost all measurements. The dynamic body acceleration was then computed as $\mathrm{DBA} = \lVert \mathbf{d} \rVert = \sqrt{d_x^2 + d_y^2 + d_z^2}$, where $\mathbf{d} = (d_x, d_y, d_z)$ is the dynamic acceleration vector, i.e. the raw acceleration minus the static acceleration [34]. Similarly, the rotational activity was computed as $\mathrm{RA} = \lVert \mathbf{g} \rVert = \sqrt{g_x^2 + g_y^2 + g_z^2}$, where $\mathbf{g} = (g_x, g_y, g_z)$ is the angular velocity (gx, gy and gz correspond, respectively, to the roll, pitch and yaw rates provided by the gyroscope).
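The computations above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' R script: signals are assumed to be already resampled to 20 Hz, the 2 s centred window is approximated by 41 samples (20 on each side, truncated at the record edges), and the tilt correction is shown as a rotation about the sway axis by a known angle `tilt_rad`, a hypothetical parameter, since the exact correction used is given only in the electronic supplementary material. All function names are illustrative.

```python
import math

FS = 20  # sampling frequency (Hz) after resampling


def rotate_about_sway(ax, az, tilt_rad):
    """Hypothetical tilt correction: rotate surge (x) and heave (z) values about the sway (y) axis."""
    c, s = math.cos(tilt_rad), math.sin(tilt_rad)
    x_corr = [c * x + s * z for x, z in zip(ax, az)]
    z_corr = [-s * x + c * z for x, z in zip(ax, az)]
    return x_corr, z_corr


def centred_running_mean(values, half_width):
    """Mean over a centred window of 2 * half_width + 1 samples, truncated at the edges."""
    prefix = [0.0]
    for v in values:
        prefix.append(prefix[-1] + v)
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(0, i - half_width), min(n, i + half_width + 1)
        out.append((prefix[hi] - prefix[lo]) / (hi - lo))
    return out


def dba_and_ra(ax, ay, az, gx, gy, gz, window_s=2.0, fs=FS):
    """Return the static acceleration (one list per axis), DBA and RA for each sample."""
    half = int(window_s * fs / 2)  # 20 samples on each side for a 2 s window at 20 Hz
    static = [centred_running_mean(a, half) for a in (ax, ay, az)]
    # Dynamic acceleration = raw acceleration minus the static (gravity) component.
    dynamic = [[a - s for a, s in zip(raw, st)] for raw, st in zip((ax, ay, az), static)]
    dba = [math.sqrt(dx * dx + dy * dy + dz * dz) for dx, dy, dz in zip(*dynamic)]
    # Rotational activity = norm of the angular velocity vector.
    ra = [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(gx, gy, gz)]
    return static, dba, ra
```

A motionless turtle with a level sensor would give DBA close to 0 g and a static vector of norm close to 1 g; checking that norm over a record is one way to apply the window-size criterion described above.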

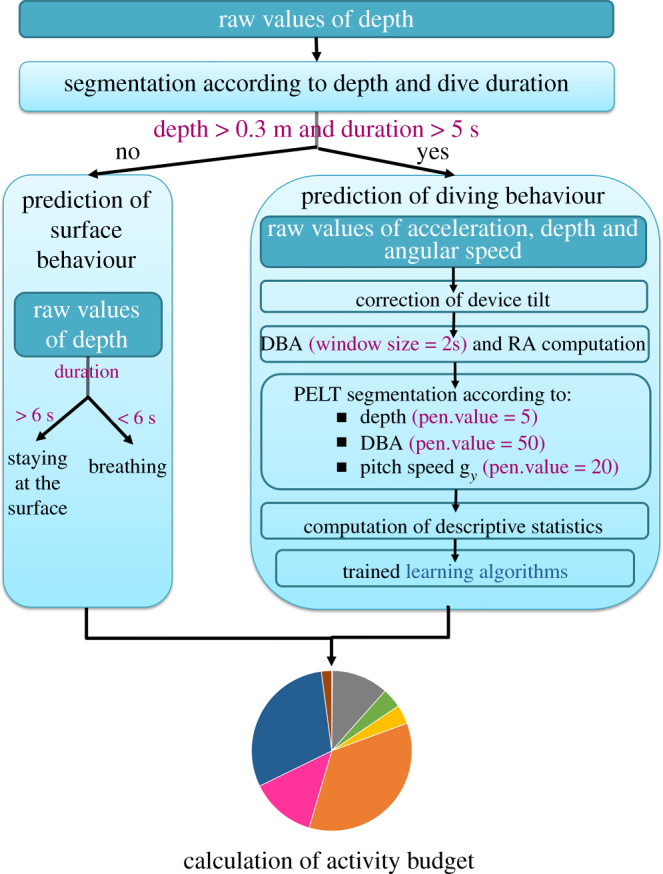

2.4. Segmentation of the multi-sensor dataset

The automatic identification of the labelled behaviours from the multi-sensor signals required the segmentation of the dataset into homogeneous behavioural bouts. We began by using the depth data to distinguish dives, defined as periods during which depth exceeded 0.3 m for at least 5 s, from surface periods. We attributed each surface period to either ‘Breathing’ or ‘Staying at the surface’, depending on whether the turtle remained at the surface for less or more than 6 s, respectively. We then distinguished between the various possible diving behaviours using a changepoint algorithm, the pruned exact linear time (PELT) algorithm (R package changepoint; [35]), in which the ‘pen.value’ parameter, i.e. the additional penalty added to the cost function for each additional partition of the data, can be adjusted manually. We tested different values and retained those giving the best balance between obtaining homogeneous behavioural bouts and limiting over-segmentation. We first detected depth changes over 3 s of each dive (function cpt.mean, penalty = ‘Manual’, pen.value = 5) to obtain segments, which were labelled as ‘ascending’, ‘descending’ or ‘flat’ depending on whether the vertical speed was greater than 0.1 m s−1, less than −0.1 m s−1 or between these two values, respectively. The ascending and descending segments were further segmented based on the mean and variance of DBA (function cpt.meanvar, penalty = ‘Manual’, pen.value = 50) in order to distinguish between their swimming and gliding phases. The green turtle is a grazing herbivore that mainly feeds on seagrass and algae [36]. The head movements occurring during feeding are easily detected by gyroscopes and/or accelerometers fixed directly on the head, but are rarely detected when these sensors are placed on the carapace.
We did, however, note that the carapace tended to display pitch oscillations when the turtle pulled on the seagrass, an activity that we hereafter refer to as ‘Grabbing’ (figure 2). Accordingly, we further segmented the ‘flat’ segments based on the variance of gy (angular speed in the animal's sagittal plane; function cpt.var, penalty = ‘Manual’, pen.value = 20) to pinpoint this behaviour. Each segment was then labelled either with the behavioural category expressed for at least 3/5 of its duration, or as ‘Transition’ if several behaviours were involved and none of them occurred for 3/5 of the bout. Thus, the overall procedure classified the multi-sensor signals into nine categories: surface behaviours (Breathing and Staying at the surface), which were identified from depth data alone, and diving behaviours (Feeding, Gliding, Resting, Scratching and Swimming) together with ‘Other’ and ‘Transition’, for which supervised learning algorithms were required.
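The depth-based rules and the final 3/5 labelling rule can be sketched as follows. This is a minimal Python illustration with hypothetical function names, assuming depth sampled at 1 Hz (so that a run of n samples lasts n s) and depth measured positive downwards. The vertical speed is therefore computed as positive when the turtle rises, the sign convention implied by labelling speeds above 0.1 m s−1 as ‘ascending’. A surface period of exactly 6 s is assigned to ‘Staying at the surface’ here, a boundary case the text does not specify.

```python
from collections import Counter


def runs(flags):
    """Split a sequence into (value, start, end) runs of identical values; end is exclusive."""
    out, start = [], 0
    for i in range(1, len(flags) + 1):
        if i == len(flags) or flags[i] != flags[start]:
            out.append((flags[start], start, i))
            start = i
    return out


def label_depth_periods(depth, fs=1.0, dive_depth=0.3, min_dive_s=5, breath_max_s=6):
    """Split a depth record into 'Dive', 'Breathing' and 'Staying at the surface' periods."""
    is_dive = [False] * len(depth)
    for deep, s, e in runs([d > dive_depth for d in depth]):
        if deep and (e - s) / fs >= min_dive_s:  # deeper than 0.3 m for at least 5 s
            is_dive[s:e] = [True] * (e - s)
    periods = []
    for dive, s, e in runs(is_dive):
        if dive:
            label = "Dive"
        elif (e - s) / fs < breath_max_s:           # short surfacing
            label = "Breathing"
        else:
            label = "Staying at the surface"
        periods.append((label, s, e))
    return periods


def vertical_label(depth_start, depth_end, duration_s, threshold=0.1):
    """Label a dive segment from its mean vertical speed (positive when rising)."""
    speed = (depth_start - depth_end) / duration_s
    if speed > threshold:
        return "ascending"
    if speed < -threshold:
        return "descending"
    return "flat"


def segment_label(frame_labels):
    """Behaviour covering at least 3/5 of the segment, otherwise 'Transition'."""
    behaviour, n = Counter(frame_labels).most_common(1)[0]
    return behaviour if 5 * n >= 3 * len(frame_labels) else "Transition"


# A 3 s excursion to 2 m is too short to count as a dive and is merged into the surface period.
depth = [0.0] * 3 + [1.0] * 10 + [0.1] * 8 + [2.0] * 3 + [0.0] * 2
print(label_depth_periods(depth))
# [('Breathing', 0, 3), ('Dive', 3, 13), ('Staying at the surface', 13, 26)]
```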

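For readers unfamiliar with PELT, its recursion can be sketched in Python for the simplest case, a change in mean. This is not the changepoint package's implementation: it assumes a squared-error cost (the sum of squared deviations from each segment's mean), a minimum segment length of one sample, and a hypothetical function name `pelt_mean`. Penalty values tuned in R (e.g. pen.value = 5) may not transfer directly if the package scales its cost differently, and the cpt.meanvar and cpt.var steps would need different cost functions.

```python
def pelt_mean(y, penalty):
    """PELT (Killick et al. 2012) for changes in mean with a manual penalty.

    Returns the end index (exclusive) of every segment; the last entry is len(y).
    """
    n = len(y)
    s1, s2 = [0.0], [0.0]  # prefix sums of y and y**2
    for v in y:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def cost(a, b):
        """Sum of squared deviations of y[a:b] from its mean."""
        return s2[b] - s2[a] - (s1[b] - s1[a]) ** 2 / (b - a)

    F = [-penalty] + [0.0] * n  # F[t]: optimal penalised cost of y[:t]
    last = [0] * (n + 1)        # last[t]: start of the final segment in the optimum for y[:t]
    candidates = [0]
    for t in range(1, n + 1):
        scored = [(F[tau] + cost(tau, t) + penalty, tau) for tau in candidates]
        F[t], last[t] = min(scored)
        # Pruning: a candidate whose unpenalised cost already exceeds F[t] can never be optimal later.
        candidates = [tau for total, tau in scored if total - penalty <= F[t]] + [t]
    ends, t = [], n
    while t > 0:
        ends.append(t)
        t = last[t]
    return ends[::-1]


series = [2.0] * 10 + [5.0] * 10
print(pelt_mean(series, penalty=5.0))  # [10, 20]
```

Raising the penalty yields fewer segments: with `penalty=1000.0` the same kind of series is returned as a single segment, which mirrors the trade-off between homogeneous bouts and over-segmentation described above.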
2.5. Identification of the diving behaviours by supervised learning algorithms

We trained five supervised machine learning algorithms, namely (i) classification and regression trees (CART), (ii) random forest (RF), (iii) extreme gradient boosting (EGB), (iv) support vector machine (SVM) and (v) linear discriminant analysis (LDA), to associate the seven diving behaviour categories with the corresponding patterns of the input variables. These are among the most commonly used classifiers in behaviour recognition and are considered relevant for ecological studies [17,37,38]. They were applied to our data using the R packages rpart [39] for CART, randomForest [40] for RF (n = 300, mtry = 14), xgboost [41] for EGB (num_class = 7, eta = 0.3, max_depth = 3), e1071 [42] for SVM and MASS [43] for LDA.

For each segment, the algorithms were fed with four descriptive statistics (mean, minimum, maximum and variance) computed for the three linear acceleration values (ax, ay and az), the three angular speed values (gx, gy and gz), DBA and RA. We also included the difference between the last and first depth values, and the duration of each segment. ‘Feeding’ was characterized by high-frequency oscillations, particularly in pitch speed (figure 2) but also, less obviously, in roll speed and surge/sway accelerations, which enabled us to distinguish this behaviour from the others. We characterized these oscillations as follows: (i) we smoothed the high-frequency values of gx, gy, ax and ay (after correction for the inclination) using a centred running mean over a 1 s window; (ii) for each of these four variables, we computed the differences d between the raw values and their running mean; (iii) we computed the mean m(d) of these differences over the whole segment; (iv) we computed the mean and the maximum of the squared deviations (d − m(d))² and added these two parameters to the list of variables used to feed the algorithms, giving 42 variables per segment (4 statistics × 8 signals, plus the depth difference and duration, plus 2 oscillation parameters × 4 signals). Such a large set is likely to contain many correlated variables. However, machine learning algorithms are less sensitive than classical regression methods to correlations among explanatory variables. Nevertheless, to simplify interpretation, we looked for a reduced set of variables that might reach the same accuracy as the full set, but did not find a convincing one. As the focus was on predictive performance rather than interpretability (as is usually the case in machine learning), we kept all 42 variables.
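The feature set described above can be sketched as follows, which also makes the count of 42 variables explicit. This is a hypothetical Python illustration rather than the authors' R code: it assumes segments sampled at 20 Hz and already corrected for the tilt, approximates the 1 s running mean with a centred 21-sample window, and uses the sample (n − 1) variance; function and key names are illustrative.

```python
from statistics import fmean, variance

FS = 20  # Hz


def centred_running_mean(values, half_width):
    """Mean over a centred window of 2 * half_width + 1 samples, truncated at the edges."""
    n = len(values)
    return [fmean(values[max(0, i - half_width): min(n, i + half_width + 1)]) for i in range(n)]


def summary_stats(x):
    """Mean, minimum, maximum and (sample) variance of one signal within a segment."""
    return [fmean(x), min(x), max(x), variance(x) if len(x) > 1 else 0.0]


def oscillation_stats(x, fs=FS):
    """Mean and maximum of (d - m(d))**2, where d = raw - 1 s centred running mean."""
    smooth = centred_running_mean(x, fs // 2)  # about 1 s window (fs + 1 samples)
    d = [v - s for v, s in zip(x, smooth)]
    m_d = fmean(d)
    sq = [(v - m_d) ** 2 for v in d]
    return [fmean(sq), max(sq)]


SIGNALS = ("ax", "ay", "az", "gx", "gy", "gz", "DBA", "RA")
OSCILLATION_SIGNALS = ("gx", "gy", "ax", "ay")


def segment_features(seg, depth_first, depth_last, duration_s):
    """Build the 42-variable feature vector for one segment.

    seg maps each name in SIGNALS to the list of samples of that signal within the segment.
    """
    feats = []
    for name in SIGNALS:
        feats += summary_stats(seg[name])               # 8 signals x 4 statistics = 32
    feats += [depth_last - depth_first, duration_s]     # 32 + 2 = 34
    for name in OSCILLATION_SIGNALS:
        feats += oscillation_stats(seg[name])           # 34 + 4 signals x 2 = 42
    return feats
```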

2.6. Validation of the automatic behavioural inferences

To estimate the ability of our procedure to correctly infer sea turtle behaviours from acceleration, angular velocity and depth data, we repeatedly split the sample of 13 individuals into approximately 2/3 : 1/3 subsets, with nine individuals used for training and the remaining four used for validation. Of the 715 possible combinations, we retained the 376 in which ‘Feeding’ and ‘Scratching’ were not under-represented in the training dataset, i.e. those in which the training dataset contained more than 60% of all feeding and scratching segments (i.e. 898 and 714 segments, respectively). Even so, the numbers of ‘Feeding’ and ‘Scratching’ segments remained much lower than those attributed to ‘Resting’ and ‘Swimming’ (11 168 and 10 293 segments, respectively). As an unbalanced training dataset can hinder the performance of supervised learning algorithms [44], we set an upper limit of 1000 segments per behaviour in the training dataset; for the over-represented categories, these segments were randomly selected at each training trial.
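The split-and-filter procedure can be sketched as follows. This is a hypothetical Python illustration of the logic only: `counts` would hold the per-individual numbers of segments per behaviour, which are not reproduced here, so the sketch cannot recover the 376 retained splits. Random under-sampling relies on Python's `random.sample`.

```python
import random
from itertools import combinations
from math import comb


def candidate_splits(individuals, n_train=9):
    """All ways of choosing n_train individuals for training; the rest are used for testing."""
    return list(combinations(individuals, n_train))


def keeps_rare_behaviours(train_ids, counts, rare=("Feeding", "Scratching"), min_share=0.6):
    """True if the training individuals hold more than min_share of every rare behaviour's segments.

    counts[individual][behaviour] is the number of segments of that behaviour.
    """
    for behaviour in rare:
        total = sum(c.get(behaviour, 0) for c in counts.values())
        in_train = sum(counts[i].get(behaviour, 0) for i in train_ids)
        if in_train <= min_share * total:
            return False
    return True


def cap_per_behaviour(segments, cap=1000, rng=random):
    """Randomly keep at most `cap` (behaviour, features) segments per behaviour."""
    by_behaviour = {}
    for seg in segments:
        by_behaviour.setdefault(seg[0], []).append(seg)
    kept = []
    for segs in by_behaviour.values():
        kept += segs if len(segs) <= cap else rng.sample(segs, cap)
    return kept


splits = candidate_splits(range(1, 14))
print(len(splits), comb(13, 9))  # 715 715
```

Enumerating all C(13, 9) = 715 splits and filtering them, rather than drawing random splits, guarantees that every admissible split is evaluated exactly once.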

For each trial, we evaluated the efficiency of the different methods by computing the number of well-identified behaviours (true positive, TP, and true negative, TN) and of behaviours considered to be misclassified (false negative, FN, and false positive, FP) into a confusion matrix. We calculated three indicators for each behaviour: (i) ‘Sensitivity’ = TP/(TP + FN), also called true positive rate, hit rate or recall, measures the ability of a method to detect the target behaviour among other behaviours; (ii) ‘Precision’ = TP/(TP + FP), also called positive predictive value, measures the ability of a method to correctly identify the target behaviour; and (iii) ‘Specificity’ = TN/(TN + FP), also called selectivity or true negative rate, measures the ability of a method to avoid wrongly considering other behaviours as the target behaviour. We also computed ‘Accuracy’ = (TP + TN)/(TP + TN + FP + FN), which measures the ability of a method to correctly identify all behaviours as a whole.
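The four indicators can be computed from paired observed and predicted labels as follows. This is a minimal Python sketch with a hypothetical function name that treats each behaviour in a one-versus-rest fashion; returning NaN for undefined ratios (zero denominators) is a convention chosen here, not one taken from the study.

```python
def one_vs_rest_metrics(y_true, y_pred, behaviour):
    """Sensitivity, precision, specificity and accuracy for one target behaviour."""
    tp = fp = fn = tn = 0
    for t, p in zip(y_true, y_pred):
        if t == behaviour and p == behaviour:
            tp += 1
        elif t == behaviour:
            fn += 1
        elif p == behaviour:
            fp += 1
        else:
            tn += 1

    def ratio(num, den):
        return num / den if den else float("nan")

    return {
        "sensitivity": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
        "specificity": ratio(tn, tn + fp),
        "accuracy": ratio(tp + tn, tp + tn + fp + fn),
    }


y_true = ["Feeding", "Feeding", "Scratching", "Resting", "Resting"]
y_pred = ["Feeding", "Scratching", "Scratching", "Resting", "Feeding"]
print(one_vs_rest_metrics(y_true, y_pred, "Feeding"))
```

In this toy example, one feeding segment is detected, one is missed and one resting segment is wrongly labelled as feeding, so sensitivity and precision are both 0.5.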

Furthermore, to potentially improve performance and/or reduce the variance of behavioural inferences, we also used ‘Ensemble Methods’ [45,46], which combine the results obtained with the five supervised machine learning algorithms. We tested two such methods. The first was the ‘Voting Ensemble’ (VE), which retained the most frequently predicted behaviour. The second was a ‘Weighted Sum’ (WS), in which weights based on ‘Precision’ were given to the different predicted behaviours (weighting based on Sensitivity and Specificity was also tested but gave poor results). To determine the best method for automatically identifying the diving behaviours, and particularly feeding, we used ANOVA to compare the mean global accuracy of the seven classifiers (CART, SVM, LDA, RF, EGB, VE and WS) over the 376 combinations. As the ANOVA revealed significant effects, we ran pairwise comparisons of mean performance using Tukey's HSD test.
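The two ensemble rules can be sketched as follows. This is a hypothetical Python illustration: the text specifies only that WS weights are based on ‘Precision’, so the sketch assumes that each predicted label receives, as its weight, the precision of the base classifier that predicted it for that label, estimated beforehand (how and on which data is an assumption here).

```python
from collections import Counter, defaultdict


def voting_ensemble(predictions):
    """Most frequent label among the base classifiers (ties: first label encountered)."""
    return Counter(predictions).most_common(1)[0][0]


def weighted_sum(predictions, precision):
    """Precision-weighted vote (assumed weighting scheme).

    predictions[k] is the label predicted by base classifier k for one segment;
    precision[k][label] is classifier k's precision for that label, estimated beforehand.
    """
    scores = defaultdict(float)
    for k, label in enumerate(predictions):
        scores[label] += precision[k].get(label, 0.0)
    return max(scores, key=scores.get)


# Three hypothetical classifiers: a plain vote picks 'Resting', the weighted sum picks 'Feeding'.
preds = ["Feeding", "Resting", "Resting"]
prec = [{"Feeding": 0.9}, {"Resting": 0.3}, {"Resting": 0.4}]
print(voting_ensemble(preds), weighted_sum(preds, prec))  # Resting Feeding
```

The toy case shows why precision weighting can help with rare behaviours: a single classifier that is reliable for ‘Feeding’ can outweigh two less precise votes for another category.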

Finally, the individual activity budgets were inferred by computing the proportion of time spent in the surface behaviours (Breathing and Staying at the surface), inferred from depth data, and in the diving behaviours (Feeding, Gliding, Other, Resting, Scratching and Swimming), inferred using the best classifier (figure 3). The inferred activity budgets were then compared with those obtained from the video recordings.
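Once every segment carries a label, the activity budget is a duration-weighted proportion. A minimal Python sketch with hypothetical function names follows; as a check, applying it to the pooled observed totals reported in table 1 reproduces that table's ‘relative importance’ column. The per-individual averaging used for table 2 is not reproduced here.

```python
from collections import defaultdict


def activity_budget(segments):
    """Percentage of total time spent in each behaviour; segments are (label, duration_s) pairs."""
    totals = defaultdict(float)
    for label, duration in segments:
        totals[label] += duration
    grand_total = sum(totals.values())
    return {label: 100.0 * t / grand_total for label, t in totals.items()}


def budget_differences(predicted, observed):
    """Predicted minus observed percentage of time, per behaviour (missing labels count as 0)."""
    labels = set(predicted) | set(observed)
    return {b: predicted.get(b, 0.0) - observed.get(b, 0.0) for b in labels}


# Observed totals (s) from table 1, pooled over the 13 turtles.
observed_s = [("breathing", 1226), ("feeding", 6281), ("gliding", 8075), ("resting", 116590),
              ("scratching", 4496), ("staying at the surface", 18465),
              ("swimming", 80178), ("other", 2887)]
budget = activity_budget(observed_s)
print(round(budget["resting"], 2), round(budget["swimming"], 2))  # 48.95 33.66
```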

Figure 3.

Workflow of automatic behavioural identification using acceleration, angular speed and depth data, as adapted to the green turtle. The hyper-parameters set specifically for green turtle data are highlighted in pink. Applying this workflow to other marine species would require identifying the optimal hyper-parameter values for each species.

3. Results

A total of 66.2 h of video was recorded, with a maximum of 14.6 h for one individual (table 1). The seven specific behavioural categories retained for the analysis (Breathing, Feeding, Gliding, Resting, Scratching, Staying at the surface and Swimming) represented 99% of the total duration. Feeding was observed in all but the two shortest deployments, and accounted for at most 8% of an individual's recording time. Jellyfish capture was observed only occasionally, in three individuals, and represented only 0.1% of the total feeding duration of the 13 individuals; the rest of the feeding time of these three individuals, and all the feeding time of the others, consisted of grazing on seagrass. ‘Scratching’ was particularly frequent in one turtle, accounting for 13% of its observation time.

Table 1.

Total duration (seconds) of the observed sequences of behavioural categories for the 13 free-ranging immature green turtles.

| behaviour | #1 | #2 | #3 | #4 | #5 | #6 | #7 | #8 | #9 | #10 | #11 | #12 | #13 | total | relative importance (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| breathing | 36 | 301 | 37 | 20 | 66 | 87 | 89 | 6 | 57 | 27 | 132 | 75 | 293 | 1226 | 0.51 |
| feeding | — | 1499 | 162 | 540 | 152 | 1955 | 70 | — | 1030 | 661 | 6 | 28 | 178 | 6281 | 2.64 |
| gliding | — | 896 | 366 | 524 | 211 | 284 | 1054 | 102 | 1257 | 129 | 609 | 372 | 2271 | 8075 | 3.39 |
| resting | — | 10 134 | 7747 | 4760 | 5807 | 11 302 | 19 502 | 711 | 7190 | 602 | 17 579 | 3814 | 27 441 | 116 590 | 48.95 |
| scratching | — | 512 | 574 | 1789 | 8 | 903 | 136 | — | 64 | 21 | 177 | 94 | 218 | 4496 | 1.89 |
| staying at the surface | — | 898 | 1396 | 1546 | 1394 | 2541 | 2955 | 573 | 1032 | 818 | 1485 | 582 | 3246 | 18 465 | 7.75 |
| swimming | 5279 | 6522 | 3801 | 4082 | 6421 | 6005 | 4800 | 2026 | 6354 | 5493 | 7739 | 2760 | 18 895 | 80 178 | 33.66 |
| other | — | 258 | 169 | 283 | 148 | 818 | 136 | 45 | 261 | 209 | 233 | 140 | 188 | 2887 | 1.21 |

The seven classifiers identified the five specific behavioural categories on which we focused (Feeding, Gliding, Resting, Scratching and Swimming) and two additional categories, ‘Transition’ and ‘Other’, with a global accuracy ranging from 0.91 to 0.95. The highest accuracy was obtained with WS and the lowest with SVM. The Tukey HSD test indicated that the accuracies of RF, VE and EGB were not significantly different (0.935, 0.932 and 0.932, respectively). All classifiers identified the behavioural categories with a low false positive rate (less than 0.1 for the best classifiers; figure 4). Few segments were wrongly identified as ‘Feeding’ with the WS method, which thus had the lowest false positive rate of all classifiers for this behaviour. For all seven classifiers, the highest true positive rates were obtained for the ‘Scratching’ category despite its low occurrence in the dataset, meaning that this behaviour was relatively well identified when it occurred.

Figure 4.

True positive rate versus false positive rate obtained with the seven classifiers for the seven diving categories. The symbols show the mean values obtained from the 376 combinations of splitting the sample of 13 individuals into two sub-groups (one of nine individuals for learning and one of four individuals for testing).

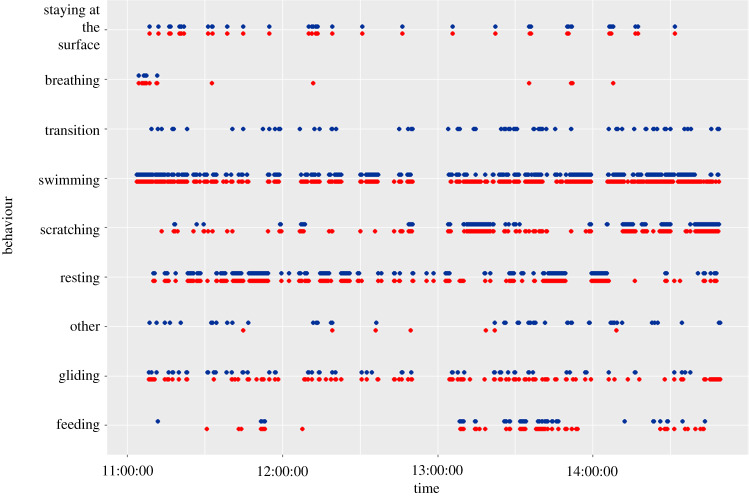

The activity budgets, representing the percentage of the total mean time allocated to each behavioural category, showed similar proportions in the predictions and the observations (figures 5 and 6). This result highlights the ability of our method and the WS model to predict the behaviours of immature green turtles in natural conditions. The main differences between the observed and predicted activity budgets concerned ‘Resting’ and ‘Swimming’ (figures 5 and 6). These differences were small, representing less than 3% of the total observed time (table 2). ‘Feeding’ and ‘Scratching’ were under-represented in our models, and the differences between their predicted and actual durations represented roughly 1% of the total observed time. Because these behaviours were expressed only rarely by some individuals, even small prediction errors translated into large percentage differences relative to the observed duration of the behaviour. The results obtained for each individual are available in electronic supplementary material, table S3. ‘Transition’, which had a very low true positive rate, accounted on average for 0.2% of the total observation time in the predictions. Thus, the overall procedure was able to reliably infer the seven most frequently expressed behaviours of the immature green turtles.

Figure 5.

Pie chart of the actual (determined from the video) versus predicted mean durations of the various behaviours displayed by three free-ranging immature green turtles. The predicted durations of the diving behaviours were obtained using the WS classifier.

Figure 6.

Comparison of the nine main inferred behavioural categories (in red) and of the actually observed ones (in blue) for a few hours for immature green turtle #1. The predicted occurrences of the diving behaviours were obtained using the WS classifier.

Table 2.

Predicted versus observed average duration of each behaviour for the 13 immature green turtles. The percentage differences are expressed with respect to the total recorded video duration of each individual or to the time during which the behaviour in question was expressed. The predicted durations of the diving behaviours were obtained using the WS method, and the surface behaviours were predicted from depth values.

| behaviour | predicted (s) | observed (s) | difference (s) | difference (% of total time) | difference (% of behaviour time) |
|---|---|---|---|---|---|
| breathing | 99 | 94 | 32ᵃ | 0.2ᵃ | 46.9 |
| feeding | 432 | 497 | 207 | 1.1 | 180.1 |
| gliding | 936 | 651 | 326 | 1.0 | 39.1 |
| other | 92 | 235 | 143 | 0.8 | 60.2 |
| resting | 9175 | 9640 | 747ᵇ | 2.7ᵇ | 6.6 |
| scratching | 437 | 354 | 118 | 0.7 | 311.8ᵇ |
| staying at the surface | 1435 | 1477 | 151 | 0.8 | 10.8 |
| swimming | 6206 | 6256 | 441 | 2.4 | 6.9ᵃ |
| transition | 48 | — | 48 | 0.2ᵃ | — |

ᵃThe lowest difference obtained among the nine behavioural categories.

ᵇThe highest difference obtained among the nine behavioural categories.

4. Discussion

This is the first study to validate the use of acceleration, gyroscope and TDR signals for inferring free-ranging green turtle behaviours. In previous studies, carapace-mounted accelerometers were used to describe swimming behaviours and buoyancy regulation in sea turtles [19,20,47,48] in specific contexts where signals associated with ‘Swimming’ and ‘Gliding’ could be visually identified, or to estimate sea turtle activity levels in terms of DBA [18,49]. The possibility of relying on accelerometers and other carapace-mounted sensors such as TDRs and gyroscopes to infer the behaviours of free-ranging sea turtles had not been explored in detail until now owing to the lack of a validation process, which is critically important for this kind of approach [50]. The validation process described in the present study has enabled us to develop an overall procedure that reliably infers the seven most commonly expressed behaviours of the free-ranging green turtle (namely ‘Breathing’, ‘Feeding’, ‘Gliding’, ‘Resting’, ‘Scratching’, ‘Staying at the surface’ and ‘Swimming’), and thus to infer the fine-scale activity budgets of animals whose populations are currently under anthropogenic pressures that jeopardize their future [51,52]. This inference is essential if we wish to compare how these animals allocate their time between different activities according to natural and anthropogenic pressures such as available resources, environmental changes or tourism. When combined with GPS data, this protocol may help to identify the areas where sea turtles concentrate their activities and thus help to delineate protected areas in order to limit human disturbance.

We tested seven classifiers (LDA, SVM, CART, RF, EGB, VE and WS) to compare their strengths and weaknesses in automatic behavioural identification based on TDR, acceleration and gyroscope data. The classifiers identified the seven behavioural classes with a global accuracy ranging from 0.91 to 0.95, which is comparable to the accuracy reached in other similar studies [17,53,54]. The WS classifier performed better than the base and VE classifiers, indicating that assigning precision-based weights to the base classifier predictions improved the behavioural classification. The decrease in the false positive rate for rare behaviours that we observed with an ensemble method has also been reported by Brewster et al. [37]. Ensemble methods are mainly used because they reduce the variance of behaviour classification [53,55] and thus increase global accuracy. However, they involve a higher computational cost and require reliably tuned base learners.

The use of supervised machine learning to automatically identify behaviours from data provided by animal-borne loggers has become common [17,50,56]. Indeed, fast personal computers and free, user-friendly computing libraries have made it easy to apply these ‘black box’ algorithms to huge amounts of data. Machine learning has thus proved to be a very powerful tool for identifying behaviours with well-characterized signals, such as locomotion [56–58] and resting [59–61]. However, it appears to be rather inefficient for behaviours with confusing signal characteristics, for example feeding and grooming in pumas [62], pecking in plovers [63] or foraging in fur seals [64]. Although one might expect that feeding machine-learning algorithms with big data would provide the most accurate predictive rules [16,65,66], Wilson et al. [67] showed that a classification method based on a good understanding and careful examination of the acceleration signal actually outperforms non-optimized machine learning in both computational time and accuracy. Accordingly, the mixed approach developed in this study used simple rules based on one or a few parameters where these proved effective, and fed machine-learning algorithms with derived signals specifically designed to pinpoint hard-to-detect behaviours. This allowed us to identify key behaviours such as feeding and scratching, which had previously been either misidentified or not identified at all owing to the lack of discriminative features in the raw acceleration and/or gyroscope data recorded by loggers fixed to the turtle's carapace. Although our choice of derived signals makes our approach specific to sea turtles, the principle can be applied to numerous species provided the different signals are carefully examined before the study.

When carrying out automatic behavioural identification from multi-sensor data using supervised learning algorithms, one of the main difficulties is segmenting the data into homogeneous segments that are representative of the various behavioural categories. To date, most studies have divided multi-sensor data into fixed-time segments [68–70] or used a sliding window of fixed length [38,71]. However, several studies testing the size of the window showed that it influences classification accuracy and the identification of short behaviours [53,72–74]. Indeed, an individual can express both short and long behaviours, such as burst swimming in lemon sharks or prey capture in Adélie penguins compared with normal swimming [37,75]. While long fixed segments dramatically increase the proportion of inhomogeneous segments, short segments may prevent the detection of certain key signals such as low-frequency oscillations. A hierarchical, tailored segmentation procedure therefore seems a more judicious choice. It consists of first splitting the data into groups based on signals that are easily interpretable in a dichotomous way (depth was used for this in our study). A changepoint algorithm can then be used to achieve a more specific segmentation based on other signals, with a possible ad hoc adjustment of the contrast required to decide whether two successive values belong to the same segment (such as the manual penalty of the PELT algorithm). In this paper, we demonstrate this approach for the green turtle (figure 3, R-script in electronic supplementary material), but there is no reason why it could not easily be adapted to other species.
This will certainly necessitate the identification of the optimal hyper-parameters as well as the informative signals for the segmentation according to the species, but the approach of combining automated segmentation and machine learning methods with well-thought-out descriptive variables should apply as well.
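The two-level procedure described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the R-script used in this study. A depth threshold provides the dichotomous first split (surface vs. submerged), and within each block an exact PELT search [35] looks for changes in the mean of a single other signal, using a squared-error cost and a manually chosen penalty. The threshold, the choice of signal, the cost function and the penalty value are all hypothetical. In practice they would be tuned per species, and a multivariate cost could be used instead.

```python
def pelt_mean(x, penalty):
    """PELT (Killick et al. 2012) for changes in mean with a squared-error cost.

    Returns change-point indices c such that each segment is x[c_prev:c].
    """
    n = len(x)
    # Prefix sums give each segment cost in O(1).
    s1, s2 = [0.0], [0.0]
    for v in x:
        s1.append(s1[-1] + v)
        s2.append(s2[-1] + v * v)

    def cost(a, b):  # sum of squared deviations from the mean of x[a:b]
        return s2[b] - s2[a] - (s1[b] - s1[a]) ** 2 / (b - a)

    F = [0.0] * (n + 1)
    F[0] = -penalty
    prev = [0] * (n + 1)
    candidates = [0]
    for t in range(1, n + 1):
        scored = [(F[tau] + cost(tau, t) + penalty, tau) for tau in candidates]
        F[t], prev[t] = min(scored)
        # Pruning: drop candidates that can never again be optimal (K = 0 for this cost).
        candidates = [tau for c, tau in scored if c - penalty <= F[t]] + [t]

    cps, t = [], n
    while t > 0:  # backtrack through the optimal last change points
        t = prev[t]
        if t > 0:
            cps.append(t)
    return sorted(cps)


def depth_blocks(depth, threshold):
    """Split the record into runs lying entirely shallower or deeper than threshold."""
    blocks, start = [], 0
    for i in range(1, len(depth) + 1):
        if i == len(depth) or (depth[i] < threshold) != (depth[start] < threshold):
            blocks.append((start, i, depth[start] < threshold))
            start = i
    return blocks


def hierarchical_segmentation(depth, signal, threshold, penalty):
    """Level 1: depth dichotomy. Level 2: PELT on another signal within each block."""
    segments = []
    for a, b, shallow in depth_blocks(depth, threshold):
        bounds = [a] + [a + c for c in pelt_mean(signal[a:b], penalty)] + [b]
        label = "surface" if shallow else "submerged"
        segments.extend((s, e, label) for s, e in zip(bounds, bounds[1:]))
    return segments
```

Raising `penalty` yields fewer, longer segments and lowering it yields more, shorter ones. This is one way to realize the ad hoc contrast adjustment described above.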

The proposed approach thus offers promising prospects for inferring the behaviours of animals that cannot easily be observed in the wild, through the automatic analysis of large amounts of raw data acquired over long periods by miniaturized (low-disturbance) loggers such as high-frequency tri-axial accelerometers and gyroscopes. It provides a number of adaptable principles that enable the efficient use of machine-learning algorithms to automatically identify fine-scale behaviours in sea turtles, and may be applied to a wide range of species. The automated and reliable identification of the various behaviours permits rapid inference of the time budget of the animals under study. Knowing how much time animals dedicate to activities such as feeding, travelling and resting is relevant to understanding how individuals attempt to maximize their fitness in a given environment. This approach could therefore become a key tool for understanding the ecology of endangered species and contribute significantly to their conservation.
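As a simple illustration of the time-budget step: once each segment carries a predicted behaviour label, the time budget is the summed duration of each behaviour divided by the total recorded duration. The sketch below assumes that segments are given as sample-index intervals (end exclusive) recorded at a constant sampling frequency. The segment values in the usage line are invented for illustration and are not results from this study.

```python
from collections import defaultdict


def time_budget(segments, fs):
    """Seconds and share of total time per behaviour.

    segments: iterable of (start_index, end_index, behaviour), end exclusive
    fs: sampling frequency (Hz), assumed constant
    """
    seconds = defaultdict(float)
    for start, end, behaviour in segments:
        seconds[behaviour] += (end - start) / fs
    total = sum(seconds.values())
    return {b: (s, s / total) for b, s in seconds.items()}


# Invented example at 20 Hz:
# time_budget([(0, 600, "resting"), (600, 800, "feeding"), (800, 1000, "travelling")], fs=20)
# -> {'resting': (30.0, 0.6), 'feeding': (10.0, 0.2), 'travelling': (10.0, 0.2)}
```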

Supplementary Material

Acknowledgements

This study was carried out within the framework of the Plan National d'Action Tortues Marines de Martinique et Guyane Française. The authors also appreciate the support of the ANTIDOT project (Pépinière Interdisciplinaire Guyane, Mission pour l'Interdisciplinarité, CNRS). The authors thank the DEAL Martinique and Guyane, the CNES, the ODE Martinique, the ONCFS Martinique and Guyane, the ONEMA Martinique and Guyane, the SMPE Martinique and Guyane, the ONF Martinique, the PNR Martinique, the Surfrider Foundation, Carbet des Sciences, Plongée-Passion, the Collège Cassien Sainte-Claire and the Collège Petit Manoir for their technical support and field assistance. We are also grateful to the numerous volunteers and free divers for their participation in the field operations. Results obtained in this paper were computed on the vo.grand-est.fr virtual organization of the EGI Infrastructure through IPHC resources. We thank EGI, France Grilles and the IPHC Computing team for providing the technical support, computing and storage facilities. We are also grateful to the three anonymous reviewers for their helpful corrections and comments.

Ethics

This study meets the legal requirements of the countries in which the work was carried out and follows all institutional guidelines. The protocol was approved by the ‘Conseil National de la Protection de la Nature’ (http://www.avis-biodiversite.developpement-durable.gouv.fr/bienvenue-sur-le-site-du-cnpn-et-du-cnb-a1.html), and the French Ministry for Ecology, Sustainable Development and Energy (permit no. 2013154-0037), which acts as an ethics committee in Martinique. The fieldwork was carried out in strict accordance with the recommendations of the Prefecture of Martinique in order to minimize the disturbance of animals (authorization no. 201710-0005).

Data accessibility

The R-script to visualize the raw acceleration, gyroscope and depth profiles associated with the observed behaviours of the immature green turtles has been uploaded as part of the electronic supplementary material. The same is true for the R-script to automatically identify sea turtle behaviour from the labelled data. The datasets containing the acceleration, gyroscope and depth recordings of the 13 immature green turtles, as well as their observed behaviours, are available within the Dryad Digital Repository: https://doi.org/10.5061/dryad.hhmgqnkd9 [76].

Authors' contributions

D.C., H.D. and S.R. contributed to the conception and design of the study. L.J., D.C., J.M., F.S., P.L., J.G., D.E., C.H., Y.L.M., G.H., A.A., S.R., N.L., C.F., C.M., T.M., L.A., G.C., F.L., N.A. and A.B. contributed to data acquisition. L.J., S.G. and S.B. performed the acceleration data analysis, and L.J. and V.P.B. performed the statistical analysis. L.J. wrote the first draft of the manuscript, and S.B., V.P.B., F.S. and D.C. contributed critically to subsequent versions.

Competing interests

We declare we have no competing interests.

Funding

This study was co-financed by the FEDER Martinique (European Union, Conventions 2012/DEAL/0010/4-4/31882 and 2014/DEAL/0008/4-4/32947), DEAL Martinique (Conventions 2012/DEAL/0010/4-4/31882 and 2014/DEAL/0008/4-4/32947), the ODE Martinique (Convention 014-03-2015), the CNRS (Subvention Mission pour l'Interdisciplinarité), the ERDF fund (Convention CNRS-EDF-juillet2013) and the Fondation de France (Subvention Fondation Ars Cuttoli Paul Appell). Lorène Jeantet's PhD scholarship was supported by DEAL Guyane and CNES Guyane.

References

- 1. Stephens DW, Krebs JR. 1987. Foraging theory. Princeton, NJ: Princeton University Press.

- 2. Stephens DW, Brown JS, Ydenberg RC. 2007. Foraging: behavior and ecology. Chicago, IL: University of Chicago Press.

- 3. Colman LP, Sampaio CLS, Weber MI, de Castilhos JC. 2014. Diet of olive ridley sea turtles, Lepidochelys olivacea, in the waters of Sergipe, Brazil. Chelonian Conserv. Biol. 13, 266–271. (doi:10.2744/CCB-1061.1)

- 4. Arthur K, O'Neil J, Limpus CJ, Abernathy K, Marshall G. 2007. Using animal-borne imaging to assess green turtle (Chelonia mydas) foraging ecology in Moreton Bay, Australia. Mar. Technol. 41, 9–13. (doi:10.4031/002533207787441953)

- 5. Wildermann NE, Barrios-Garrido H. 2013. First report of Callinectes sapidus (Decapoda: Portunidae) in the diet of Lepidochelys olivacea. Chelonian Conserv. Biol. 11, 265–268. (doi:10.2744/ccb-0934.1)

- 6. Lennox RJ, et al. 2017. Envisioning the future of aquatic animal tracking: technology, science, and application. Bioscience 67, 884–896. (doi:10.1093/biosci/bix098)

- 7. Hussey NE, et al. 2015. Aquatic animal telemetry: a panoramic window into the underwater world. Science 348, 1255642. (doi:10.1126/science.1255642)

- 8. Houghton JDR, Broderick AC, Godley BJ, Metcalfe JD, Hays GC. 2002. Diving behaviour during the internesting interval for loggerhead turtles Caretta caretta nesting in Cyprus. Mar. Ecol. Prog. Ser. 227, 63–70. (doi:10.3354/meps227063)

- 9. Chambault P, de Thoisy B, Kelle L, Berzins R, Bonola M, Delvaux H, Le Maho Y, Chevallier D. 2016. Inter-nesting behavioural adjustments of green turtles to an estuarine habitat in French Guiana. Mar. Ecol. Prog. Ser. 555, 235–248. (doi:10.3354/meps11813)

- 10. Chambault P, et al. 2016. The influence of oceanographic features on the foraging behavior of the olive ridley sea turtle Lepidochelys olivacea along the Guiana coast. Prog. Oceanogr. 142, 58–71. (doi:10.1016/j.pocean.2016.01.006)

- 11. Hays GC, Adams CR, Broderick AC, Godley BJ, Lucas DJ, Metcalfe JD, Prior AA. 1999. The diving behaviour of green turtles at Ascension Island. Anim. Behav. 59, 577–586. (doi:10.1006/anbe.1999.1326)

- 12. Hochscheid S, Godley BJ, Broderick AC, Wilson RP. 1999. Reptilian diving: highly variable dive patterns in the green turtle Chelonia mydas. Mar. Ecol. Prog. Ser. 185, 101–112. (doi:10.3354/meps185101)

- 13. Seminoff JA, Jones TT, Marshall GJ. 2006. Underwater behaviour of green turtles monitored with video-time-depth recorders: what's missing from dive profiles? Mar. Ecol. Prog. Ser. 322, 269–280. (doi:10.3354/meps322269)

- 14. Thomson JA, Heithaus MR, Dill LM. 2011. Informing the interpretation of dive profiles using animal-borne video: a marine turtle case study. J. Exp. Mar. Biol. Ecol. 410, 12–20. (doi:10.1016/j.jembe.2011.10.002)

- 15. Laich AGG, Wilson RP, Quintana F, Shepard ELC. 2010. Identification of imperial cormorant Phalacrocorax atriceps behaviour using accelerometers. Endanger. Species Res. 10, 29–37. (doi:10.3354/esr00091)

- 16. Graf PM, Wilson RP, Qasem L, Hackländer K, Rosell F. 2015. The use of acceleration to code for animal behaviours; a case study in free-ranging Eurasian beavers Castor fiber. PLoS ONE 10, 1–18. (doi:10.1371/journal.pone.0136751)

- 17. Nathan R, Spiegel O, Fortmann-Roe S, Harel R, Wikelski M, Getz WM. 2012. Using tri-axial acceleration data to identify behavioral modes of free-ranging animals: general concepts and tools illustrated for griffon vultures. J. Exp. Biol. 215, 986–996. (doi:10.1242/jeb.058602)

- 18. Fossette S, Schofield G, Lilley MKS, Gleiss AC, Hays GC. 2012. Acceleration data reveal the energy management strategy of a marine ectotherm during reproduction. Funct. Ecol. 26, 324–333. (doi:10.1111/j.1365-2435.2011.01960.x)

- 19. Yasuda T, Arai N. 2009. Changes in flipper beat frequency, body angle and swimming speed of female green turtles Chelonia mydas. Mar. Ecol. Prog. Ser. 386, 275–286. (doi:10.3354/meps08084)

- 20. Fossette S, Gleiss AC, Myers AE, Garner S, Liebsch N, Whitney NM, Hays GC, Wilson RP, Lutcavage ME. 2010. Behaviour and buoyancy regulation in the deepest-diving reptile: the leatherback turtle. J. Exp. Biol. 213, 4074–4083. (doi:10.1242/jeb.048207)

- 21. Viviant M, Trites AW, Rosen DAS, Monestiez P. 2009. Prey capture attempts can be detected in Steller sea lions and other marine predators using accelerometers. Polar Biol. 33, 713–719. (doi:10.1007/s00300-009-0750-y)

- 22. Watanabe YY, Ito M, Takahashi A. 2014. Testing optimal foraging theory in a penguin-krill system. Proc. R. Soc. B 281, 20132376. (doi:10.1098/rspb.2013.2376)

- 23. Gallon S, Bailleul F, Charrassin JB, Guinet C, Bost CA, Handrich Y, Hindell M. 2013. Identifying foraging events in deep diving southern elephant seals, Mirounga leonina, using acceleration data loggers. Deep Sea Res. Part II Top. Stud. Oceanogr. 88–89, 14–22. (doi:10.1016/j.dsr2.2012.09.002)

- 24. Fossette S, Gaspar P, Handrich Y, Le Maho Y, Georges JY. 2008. Dive and beak movement patterns in leatherback turtles Dermochelys coriacea during internesting intervals in French Guiana. J. Anim. Ecol. 77, 236–246. (doi:10.1111/j.1365-2656.2007.01344.x)

- 25. Hochscheid S, Maffucci F, Bentivegna F, Wilson RP. 2005. Gulps, wheezes, and sniffs: how measurement of beak movement in sea turtles can elucidate their behaviour and ecology. J. Exp. Mar. Biol. Ecol. 316, 45–53. (doi:10.1016/j.jembe.2004.10.004)

- 26. Myers AE, Hays GC. 2006. Do leatherback turtles Dermochelys coriacea forage during the breeding season? A combination of data-logging devices provide new insights. Mar. Ecol. Prog. Ser. 322, 259–267. (doi:10.3354/meps322259)

- 27. Okuyama J, Kawabata Y, Naito Y, Arai N, Kobayashi M. 2009. Monitoring beak movements with an acceleration datalogger: a useful technique for assessing the feeding and breathing behaviors of sea turtles. Endanger. Species Res. 10, 39–45. (doi:10.3354/esr00215)

- 28. Okuyama J, et al. 2013. Ethogram of immature green turtles: behavioral strategies for somatic growth in large marine herbivores. PLoS ONE 8, e65783. (doi:10.1371/journal.pone.0065783)

- 29. Wilson M, Tucker AD, Beedholm K, Mann DA. 2017. Changes of loggerhead turtle (Caretta caretta) dive behavior associated with tropical storm passage during the inter-nesting period. J. Exp. Biol. 220, 3432–3441. (doi:10.1242/jeb.162644)

- 30. Tyson RB, Piniak WED, Domit C, Mann D, Hall M, Nowacek DP, Fuentes MMPB. 2017. Novel bio-logging tool for studying fine-scale behaviors of marine turtles in response to sound. Front. Mar. Sci. 4, 219. (doi:10.3389/fmars.2017.00219)

- 31. Noda T, Okuyama J, Koizumi T, Arai N, Kobayashi M. 2012. Monitoring attitude and dynamic acceleration of free-moving aquatic animals using a gyroscope. Aquat. Biol. 16, 265–276. (doi:10.3354/ab00448)

- 32. Nivière M, et al. 2018. Identification of marine key areas across the Caribbean to ensure the conservation of the critically endangered hawksbill turtle. Biol. Conserv. 223, 170–180. (doi:10.1016/j.biocon.2018.05.002)

- 33. Geiger S. 2019. Package ‘rblt’. See https://cran.r-project.org/web/packages/rblt/rblt.pdf.

- 34. Wilson RP, et al. 2020. Estimates for energy expenditure in free-living animals using acceleration proxies: a reappraisal. J. Anim. Ecol. 89, 161–172. (doi:10.1111/1365-2656.13040)

- 35. Killick R, Fearnhead P, Eckley IA. 2012. Optimal detection of changepoints with a linear computational cost. J. Am. Stat. Assoc. 107, 1590–1598. (doi:10.1080/01621459.2012.737745)

- 36. Reich KJ, Bjorndal KA, Bolten AB. 2007. The ‘lost years’ of green turtles: using stable isotopes to study cryptic lifestages. Biol. Lett. 3, 712–714. (doi:10.1098/rsbl.2007.0394)

- 37. Brewster LR, Dale JJ, Guttridge TL, Gruber SH, Hansell AC, Elliott M, Cowx IG, Whitney NM, Gleiss AC. 2018. Development and application of a machine learning algorithm for classification of elasmobranch behaviour from accelerometry data. Mar. Biol. 165, 62. (doi:10.1007/s00227-018-3318-y)

- 38. Ladds MA, Thompson AP, Slip DJ, Hocking DP, Harcourt RG. 2016. Seeing it all: evaluating supervised machine learning methods for the classification of diverse otariid behaviours. PLoS ONE 11, 1–17. (doi:10.1371/journal.pone.0166898)

- 39. Therneau T, Atkinson B. 2018. rpart: Recursive Partitioning and Regression Trees. R package, version 4.1-13. See https://CRAN.R-project.org/package=rpart.

- 40. Liaw A, Wiener M. 2002. Classification and regression by randomForest. R News 2, 18–22.

- 41. Chen T, et al. 2018. xgboost: Extreme Gradient Boosting. R package, version 0.71.2. See https://CRAN.R-project.org/package=xgboost.

- 42. Meyer D, Dimitriadou E, Hornik K, Weingessel A, Leisch F. 2017. e1071: Misc Functions of the Department of Statistics, Probability Theory Group (Formerly: E1071). R package, version 1.6-8. See https://CRAN.R-project.org/package=e1071.

- 43. Venables W, Ripley B. 2002. Modern applied statistics with S, 4th edn. New York, NY: Springer. See http://www.stats.ox.ac.uk/pub/MASS4.

- 44. Japkowicz N. 2000. The class imbalance problem: significance and strategies. In Proc. of the 2000 Int. Conf. on Artificial Intelligence (IC-AI'2000): Special Track on Inductive Learning, Las Vegas, NV, pp. 111–117. (CiteSeerX 10.1.1.35.1693)

- 45. Opitz DW, Maclin R. 1999. Popular ensemble methods: an empirical study. J. Artif. Intell. Res. 11, 169–198. (doi:10.1613/jair.614)

- 46. Rokach L. 2010. Ensemble-based classifiers. Artif. Intell. Rev. 33, 1–39. (doi:10.1007/s10462-009-9124-7)

- 47. Hays GC, Marshall GJ, Seminoff JA. 2007. Flipper beat frequency and amplitude changes in diving green turtles, Chelonia mydas. Mar. Biol. 150, 1003–1009. (doi:10.1007/s00227-006-0412-3)

- 48. Okuyama J, Kataoka K, Kobayashi M, Abe O, Yoseda K, Arai N. 2012. The regularity of dive performance in sea turtles: a new perspective from precise activity data. Anim. Behav. 84, 349–359. (doi:10.1016/j.anbehav.2012.04.033)

- 49. Enstipp MR, Ballorain K, Ciccione S, Narazaki T, Sato K, Georges JY. 2016. Energy expenditure of adult green turtles (Chelonia mydas) at their foraging grounds and during simulated oceanic migration. Funct. Ecol. 30, 1810–1825. (doi:10.1111/1365-2435.12667)

- 50. Brown DD, Kays R, Wikelski M, Wilson R, Klimley AP. 2013. Observing the unwatchable through acceleration logging of animal behavior. Anim. Biotelemetry 1, 20. (doi:10.1186/2050-3385-1-20)

- 51. Koch V, Nichols WJ, Peckham H, De La Toba V. 2006. Estimates of sea turtle mortality from poaching and bycatch in Bahía Magdalena, Baja California Sur, Mexico. Biol. Conserv. 128, 327–334. (doi:10.1016/j.biocon.2005.09.038)

- 52. Wallace BP, Kot CY, Dimatteo AD, Lee T, Crowder LB, Lewison RL. 2013. Impacts of fisheries bycatch on marine turtle populations worldwide: toward conservation and research priorities. Ecosphere 4, 1–49. (doi:10.1890/ES12-00388.1)

- 53. Ladds MA, Thompson AP, Kadar JP, Slip D, Hocking D, Harcourt R. 2017. Super machine learning: improving accuracy and reducing variance of behaviour classification from accelerometry. Anim. Biotelemetry 5, 1–10. (doi:10.1186/s40317-017-0123-1)

- 54. Ellis K, Godbole S, Marshall S, Lanckriet G, Staudenmayer J, Kerr J. 2014. Identifying active travel behaviors in challenging environments using GPS, accelerometers, and machine learning algorithms. Front. Public Health 2, 36. (doi:10.3389/fpubh.2014.00036)

- 55. Ali KM, Pazzani MJ. 1996. Error reduction through learning multiple descriptions. Mach. Learn. 24, 173–202. (doi:10.1007/bf00058611)

- 56. Resheff YS, Rotics S, Harel R, Spiegel O, Nathan R. 2014. AcceleRater: a web application for supervised learning of behavioral modes from acceleration measurements. Mov. Ecol. 2, 27. (doi:10.1186/s40462-014-0027-0)

- 57. Shepard ELC, et al. 2008. Identification of animal movement patterns using tri-axial accelerometry. Endanger. Species Res. 10, 47–60. (doi:10.3354/esr00084)

- 58. Yoda K, Sato K, Niizuma Y, Kurita M, Naito Y. 1999. Precise monitoring of porpoising behaviour of Adélie penguins determined using acceleration data loggers. J. Exp. Biol. 202, 3121–3126.

- 59. Moreau M, Siebert S, Buerkert A, Schlecht E. 2009. Use of a tri-axial accelerometer for automated recording and classification of goats' grazing behaviour. Appl. Anim. Behav. Sci. 119, 158–170. (doi:10.1016/j.applanim.2009.04.008)

- 60. Jeantet L, et al. 2018. Combined use of two supervised learning algorithms to model sea turtle behaviours from tri-axial acceleration data. J. Exp. Biol. 221, jeb177378. (doi:10.1242/jeb.177378)

- 61. Shuert CR, Pomeroy PP, Twiss SD. 2018. Assessing the utility and limitations of accelerometers and machine learning approaches in classifying behaviour during lactation in a phocid seal. Anim. Biotelemetry 6, 14. (doi:10.1186/s40317-018-0158-y)

- 62. Wang Y, Nickel B, Rutishauser M, Bryce CM, Williams TM, Elkaim G, Wilmers CC. 2015. Movement, resting, and attack behaviors of wild pumas are revealed by tri-axial accelerometer measurements. Mov. Ecol. 3, 1–12. (doi:10.1186/s40462-015-0030-0)

- 63. Bom RA, Bouten W, Piersma T, Oosterbeek K, van Gils JA. 2014. Optimizing acceleration-based ethograms: the use of variable-time versus fixed-time segmentation. Mov. Ecol. 2, 6. (doi:10.1186/2051-3933-2-6)

- 64. Ladds MA, Salton M, Hocking DP, McIntosh RR, Thompson AP, Slip DJ, Harcourt RG. 2018. Using accelerometers to develop time-energy budgets of wild fur seals from captive surrogates. PeerJ 6, e5814. (doi:10.7717/peerj.5814)

- 65. Bidder OR, Campbell HA, Gómez-Laich A, Urgé P, Walker J, Cai Y, Gao L, Quintana F, Wilson RP. 2014. Love thy neighbour: automatic animal behavioural classification of acceleration data using the k-nearest neighbour algorithm. PLoS ONE 9, e88609. (doi:10.1371/journal.pone.0088609)

- 66. Campbell HA, Gao L, Bidder OR, Hunter J, Franklin CE. 2013. Creating a behavioural classification module for acceleration data: using a captive surrogate for difficult to observe species. J. Exp. Biol. 216, 4501–4506. (doi:10.1242/jeb.089805)

- 67. Wilson RP, et al. 2018. Give the machine a hand: a Boolean time-based decision-tree template for rapidly finding animal behaviours in multi-sensor data. Methods Ecol. Evol. 2018, 1–10. (doi:10.1111/2041-210X.13069)

- 68. Lagarde F, Guillon M, Dubroca L, Bonnet X, Ben Kaddour K, Slimani T, El mouden EH. 2008. Slowness and acceleration: a new method to quantify the activity budget of chelonians. Anim. Behav. 75, 319–329. (doi:10.1016/j.anbehav.2007.01.010)

- 69. Martiskainen P, Järvinen M, Skön J-P, Tiirikainen J, Kolehmainen M, Mononen J. 2009. Cow behaviour pattern recognition using a three-dimensional accelerometer and support vector machines. Appl. Anim. Behav. Sci. 119, 32–38. (doi:10.1016/j.applanim.2009.03.005)

- 70. Shamoun-Baranes J, Bom R, van Loon EE, Ens BJ, Oosterbeek K, Bouten W. 2012. From sensor data to animal behaviour: an oystercatcher example. PLoS ONE 7, e37997. (doi:10.1371/journal.pone.0037997)

- 71. McClune DW, Marks NJ, Wilson RP, Houghton JDR, Montgomery IW, McGowan NE, Gormley E, Scantlebury M. 2014. Tri-axial accelerometers quantify behaviour in the Eurasian badger (Meles meles): towards an automated interpretation of field data. Anim. Biotelemetry 2, 1–6. (doi:10.1186/2050-3385-2-5)

- 72. Lush L, Wilson RP, Holton MD, Hopkins P, Marsden KA, Chadwick DR, King AJ. 2018. Classification of sheep urination events using accelerometers to aid improved measurements of livestock contributions to nitrous oxide emissions. Comput. Electron. Agric. 150, 170–177. (doi:10.1016/j.compag.2018.04.018)

- 73. Robert B, White BJ, Renter DG, Larson RL. 2009. Evaluation of three-dimensional accelerometers to monitor and classify behavior patterns in cattle. Comput. Electron. Agric. 67, 80–84. (doi:10.1016/j.compag.2009.03.002)

- 74. Allik A, Pilt K, Karai D, Fridolin I, Leier M, Jervan G. 2019. Optimization of physical activity recognition for real-time wearable systems: effect of window length, sampling frequency and number of features. Appl. Sci. 9, 4833. (doi:10.3390/app9224833)

- 75. Watanabe YY, Takahashi A. 2013. Linking animal-borne video to accelerometers reveals prey capture variability. Proc. Natl Acad. Sci. USA 110, 2199–2204. (doi:10.1073/pnas.1216244110)

- 76. Jeantet L, et al. 2020. Raw acceleration, gyroscope and depth profiles associated with the observed behaviours of free-ranging immature green turtles in Martinique, v2. Dryad Digital Repository. (doi:10.5061/dryad.hhmgqnkd9)
