Abstract

Background

In an era of shared decision making, patient expectations for education have increased. Ideal resources would offer accurate information, digital delivery and interaction. Mobile applications have potential to fulfill these requirements. The purpose of this study was to demonstrate adoption of a patient education application (app: http://bit.ly/traumaapp) at multiple sites with disparate locations and varied populations.

Methods

A trauma patient education application was developed at one trauma center and subsequently released at three new trauma centers. The app contains information regarding treatment and recovery and was customized with provider information for each institution. Each center was provided with promotional materials, and each had strategies to inform providers and patients about the app. Data regarding utilization were collected. Patients were surveyed about usage and recommendations.

Results

Over the 16-month study period, the app was downloaded 844 times, of which 593 (70%) were in the metropolitan regions of the study centers. The three new centers had 380, 89 and 31 downloads, while the original center had 93 downloads. 36% of sessions were greater than 2 min, while 41% were less than a few seconds. The percentage of those surveyed who used the app ranged from 14.3% to 44.0%, for a weighted average of 36.8%. The mean patient willingness to recommend the app was 3.3 on a 5-point Likert scale. However, the distribution was bimodal: 60% of patients rated the app 4 or 5, while 32% rated it 1 or 2.

Discussion

The adoption of a trauma patient education app was successful at four centers with disparate patient populations. The majority of patients were likely to recommend the app. Variations in implementation strategies resulted in different rates of download. Integration of the app into patient education by providers is associated with more downloads.

Level of evidence

Level III care management.

Keywords: multiple trauma; education, medical; outcome assessment, health care

Background

In the last century, our expectations for patient comprehension have increased. Patients are expected to give informed consent, participate in shared decision making and care for themselves earlier after discharge. In order to participate in shared decision making, patients must understand their treatment options and the consequences of each option.1 Informed consent for medical research raises the bar by requiring a higher level of understanding that goes beyond typical medical care.2 In addition, the push to decrease length of stay has led to more complex discharge instructions.3 This makes comprehension of discharge instructions vital to patients’ ability to adhere to medical recommendations and correlates directly with patient-reported outcomes.4 Cumulatively, these responsibilities represent a substantial challenge for physicians.5

Research suggests that we have not lived up to this challenge, leaving patients with a poor understanding of consent, discharge instructions and postoperative care.6–8 There are myriad reasons for this. Physicians now have less time to spend with patients, and that time is dominated by the demands of electronic medical records.9 Informed consent documents are dense and difficult to read: they are treated as legal documents that must be signed and placed in the chart rather than as the basis for discussion with the patient.10 11

Although the consent document is a legal form, we have not done much better with educational materials explicitly aimed at patients. Specifically, the reading level of many patient education resources is too high. This has been shown across medicine.12–17 When patients do not understand resources provided to them, they may turn to the internet.18 Resources that patients find on the internet are often outdated, inaccurate and incomplete; frequently, they consist of promotional material.19 Modern physicians are capable of developing and implementing more effective educational materials.20

Educational resources are essential to successful patient engagement and recovery.21 Effective patient-centered mobile applications (apps) have the potential to augment physician success in educating patients.22 Mobile apps share the basic premise of other educational materials and should facilitate enhanced discussion with patients.23 Content must cover relevant information, including explanations of the illness or injury, treatments, procedures and recovery. Text should be comprehensible at the sixth to eighth grade level.24 Relevant images and videos should be included to facilitate discussion and improve comprehension, adherence and the informed consent process.25–28 Furthermore, apps are infinitely scalable, widely accessible and can be seamlessly updated following distribution. In this study, we hypothesize that a free, open-access app for trauma patient education, initially developed at a single institution, can be successfully scaled to three new institutions with distinct patient populations.

Methods

App content

Content for this app (http://bit.ly/traumaapp) was developed by fellowship-trained trauma and critical care, orthopedic trauma and spine surgeons at a level 1 trauma center.29 Text was created at the sixth grade reading level. Complementary images were included. The content covers common traumatic injuries. This includes upper extremity, lower extremity, pelvis, spine, chest, abdomen and head injuries. Content for each injury included background, non-surgical management, surgical management and recovery. Additional content included information on providers and various services to promote recovery after trauma. The cost of developing the app included $3000 for design of the app and initial population of content as well as $800 for usage of the web platform. An annual renewal fee of $800 is also anticipated.
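The reading-level target mentioned above can be checked programmatically. The following is a minimal sketch, assuming the Flesch-Kincaid grade formula and a rough vowel-group syllable heuristic; the text does not state which readability tool was used for the app, and the function names and sample sentences here are hypothetical.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): count groups of consecutive vowels."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Treat a trailing silent 'e' (as in "bone") as non-syllabic.
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade: 0.39*(words/sentence) + 11.8*(syllables/word) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Hypothetical sample sentences, not taken from the app.
plain = "Your leg bone is broken. A cast will keep it still."
dense = "Intramedullary fixation necessitates perioperative anticoagulation and postoperative rehabilitation."

print(f"plain: grade {flesch_kincaid_grade(plain):.1f}")
print(f"dense: grade {flesch_kincaid_grade(dense):.1f}")
```

Because the syllable counter is only a heuristic, scores from this sketch are best used to compare drafts against each other rather than as exact grade levels.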

Multicenter implementation

After piloting the app at the originating level 1 trauma center, three additional sites were added in October 2018. Each site was provided with portable document format versions of posters and flyers that could be used on the floor and in the outpatient clinic. Physicians, residents and nurses at each site were made aware of the app, and app usage occurred organically.

Google ads campaign

A plain text Google ads campaign was targeted in a 20 mile radius of a level 1 trauma center in an urban geographic region distinct from the study locations. Ads were shown to people in this area who searched for keywords such as ‘trauma’, ‘broken femur’ and ‘head injury’. The ad campaign lasted 14 days.

App downloads and usage

Data were collected through the app as well as in a survey. Data from the app stores included downloads, location, frequently used features, page views and time in app. The outcome ‘Downloads’ was defined as the number of app downloads per time period. The time period was adjustable by days, weeks or months. Location was defined using a map provided by the app platform, which showed location with granularity to the level of a city. Frequently used features were defined as the pages in the app that were used most frequently and were cumulative over the selected time frame. Page views were defined as unique visits to each section of the app and were cumulative over the selected time frame. Time spent in app was defined as the duration in minutes of each session, that is, each time a user opened the app. Time was recorded in 20 s blocks for sessions below 1 min, then as 1–2 min, 2–5 min and greater than 5 min. The ratio of views to downloads and usage was collected from the app platform.
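The session-time categories described above can be sketched as a small binning function. This is an illustration only: the function name, the bucket labels and the handling of exact boundaries (half-open intervals) are assumptions, since the app platform's own implementation is not described.

```python
from collections import Counter


def session_bucket(seconds: float) -> str:
    """Map a session duration in seconds to a reporting bucket.

    Assumed convention: each bucket includes its lower bound and
    excludes its upper bound.
    """
    if seconds < 0:
        raise ValueError("duration cannot be negative")
    if seconds < 60:
        start = int(seconds // 20) * 20  # 20 s blocks below 1 min
        return f"{start}-{start + 20} s"
    if seconds < 120:
        return "1-2 min"
    if seconds < 300:
        return "2-5 min"
    return ">5 min"


# Hypothetical session durations in seconds, for illustration only.
durations = [3, 5, 45, 90, 150, 200, 400]
summary = Counter(session_bucket(d) for d in durations)
for bucket, n in summary.items():
    print(f"{bucket}: {n}")
```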

Satisfaction and quality improvement survey

Each site was provided with the same survey regarding patient and caregiver experience with the app (online supplementary appendix 1). Informed consent was waived by the IRB. Age range, gender, primary language, relationship to patient, satisfaction with care, likelihood to recover, usage, likelihood to recommend the app and desired improvements were gathered from the survey.

tsaco-2020-000452supp001.pdf (23.9KB, pdf)

Results

App analytics and multicenter implementation

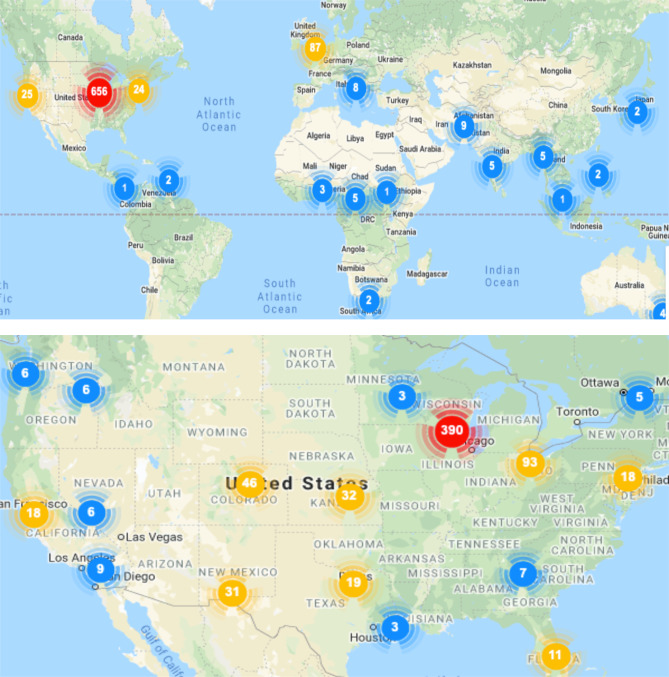

From initiation of the new sites to this analysis (14 October 2018–29 January 2020), the app was downloaded 844 times from the Google Play and Apple App stores. Of these 844 downloads, 593 (70%) were in the metropolitan regions of the level 1 trauma centers where this study was conducted (figure 1). The three new centers had 380, 89 and 31 downloads, while the original center had 93 downloads during this period. During the 16-month study period, the app was used by between 0.7% and 9.8% of all trauma admissions, as estimated from yearly average trauma admissions for each trauma center: 31/3985=0.7% (MetroHealth), 93/6188=1.5% (UW Health), 87/5333=1.6% (El Paso) and 380/3860=9.8% (King’s).

Figure 1.

Location of downloads (October 2018–January 2020). Figure 1A depicts a world map, while figure 1B shows usage within the USA. Over 16 months, the app was downloaded 844 times globally, of which 593 (70%) were in the metropolitan areas of the level 1 trauma centers where this study was conducted.
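The admission-based usage estimates above are simple ratios of downloads to yearly average trauma admissions. The sketch below recomputes them from the per-site figures listed in the text. The variable and function names are hypothetical, and because the denominators are yearly averages while downloads accrued over 16 months, the results are approximations that may differ from the reported values in the last digit depending on rounding.

```python
# (downloads, yearly average trauma admissions) as listed in the per-site breakdown.
sites = {
    "MetroHealth": (31, 3985),
    "UW Health": (93, 6188),
    "El Paso": (87, 5333),
    "King's": (380, 3860),
}


def usage_percent(downloads: int, admissions: int) -> float:
    """Downloads as a percentage of yearly average admissions."""
    return 100 * downloads / admissions


rates = {name: usage_percent(d, a) for name, (d, a) in sites.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.2f}%")

# Share of all downloads that came from the study centers' metropolitan regions.
metro_share = 100 * 593 / 844
print(f"Metropolitan share: {metro_share:.1f}%")
```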

Google ads campaign

A Google ads campaign was used as a control for the effect of physician recommendation on download likelihood. Ads were targeted in a 20 mile radius of an urban geographic region distinct from the study locations. In this location, an ad linked to the trauma app website was shown to 2447 people who searched for terms such as ‘trauma’, ‘broken femur’ and ‘head injury’. Of the 2447 people who were shown the ad, 21 (0.9%) clicked on it and none downloaded the app.

App usage and page views

User sessions (individual uses of the app) were split in a bimodal distribution between those that used the app for greater than 2 min and those that used it for less than a few seconds. Before the addition of the three new centers, 35% of sessions were greater than 2 min, while 42% of sessions were less than a few seconds. This changed to 36% and 41%, respectively, after the addition of the new centers. Overall, there were 8550 page views within the study period, with variable spikes in usage during single days. As a percentage of total page views, patients visited ‘Your Injury’ (10%), ‘Broken bones’ (8.3%), ‘Lower Leg (tibia and fibula)’ (8.3%), ‘Your Recovery Timeline’ (6.7%) and ‘After Surgery’ (6.7%) most often.

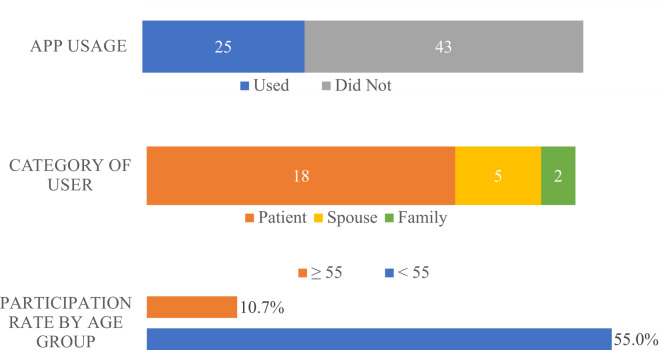

Satisfaction and quality improvement survey

The following results are from patient and caregiver surveys (figure 2). Surveys were IRB approved and obtained at three of four sites. Patient and caregiver surveys were collected from 68 participants who had been offered the app as part of their care. The majority (73.5%) of surveys were collected at the primary center. Of the patients and caregivers surveyed, 25 of 68 (37%) used the app. Of the 25 people surveyed who did use the app, 72% were patients, while 20% were spouses and 8% were other family members. The difference in app participation between men (36%) and women (38%) was not statistically significant (p=0.91). Participation was lower among those aged 55 years or older (10.7% vs 55.0%, p<0.001). The mean patient willingness to recommend the app was 3.3±1.5 on a 5-point Likert scale. However, the distribution was bimodal: 15 of 25 respondents (60%) rated the app 4 or 5, while 8 of 25 (32%) rated it 1 or 2.

Figure 2.

Patient and caregiver surveys were collected from patients who had been offered the app during their hospital care.
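The age comparison in the survey results can be illustrated with a 2×2 chi-square test. This is a sketch under stated assumptions: the text does not name the statistical test used, and the cell counts below (3 of 28 users aged 55 years or older, 22 of 40 users among younger respondents) are inferred from the reported percentages and totals rather than stated in the text. No continuity correction is applied.

```python
import math


def chi_square_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square (1 degree of freedom) for the table [[a, b], [c, d]].

    Returns the statistic and its p value. With 1 degree of freedom,
    the upper tail probability equals erfc(sqrt(chi2 / 2)).
    """
    n = a + b + c + d
    chi2 = n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Inferred counts (assumption): rows are age groups, columns are users / non-users.
older_users, older_nonusers = 3, 25      # 3/28 is about 10.7%
younger_users, younger_nonusers = 22, 18  # 22/40 is 55.0%

chi2, p_value = chi_square_2x2(older_users, older_nonusers, younger_users, younger_nonusers)
print(f"chi-square = {chi2:.2f}, p = {p_value:.4f}")
```

Under these inferred counts the p value falls below 0.001, which is consistent with the reported result, although a different test or a continuity correction would give a somewhat different value.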

Discussion

Our study demonstrates that an educational app developed at one center can be adopted at three new level 1 trauma centers with varying patient populations. The app was developed by surgeons to assist in educating patients about their injury, non-operative and operative treatment options, and recovery. The app is written at a sixth grade reading level and contains relevant images. Within 1 year, three new level 1 trauma centers had success in implementing the app in their practice. This is evidenced by the growth in the number of downloads, length of use and overall page views of the app in the location of the new trauma centers during the period of this study. None of the US sites are in the same state, and one site is in the UK. Despite the differences in geography and population, the simple language and images in the app were adaptable to each site. Flexible wording such as ‘many’ and ‘some’ allowed surgeons to have variation in clinical practices without conflict with the app content. No site reported issues with the standard of care or range of treatment options presented in the app.

Delivery of content through an app had several other advantages. There were no additional costs associated with the additional downloads at the new centers. In addition, improvements were made in real time. For example, one center found that patients with broken hips who were discussing surgical options wanted images of an intramedullary nail as well as a sliding hip screw and hemiarthroplasty. Those images were added within a few days. Polytrauma patients in multiple centers requested information explaining who the caregivers in the ICU were. This section was developed and added during the course of the study. Scapula and talus fracture content was developed and added. Additional improvements such as pediatric content and Spanish translation have been suggested and are ongoing. The ability to improve and update the app in real time enhances physicians’ ability to use the app with their patients and to meet the needs of patients and their families.

In 1 year, one of the new centers had 380 downloads. That is nearly 10 SD above the mean of the other three centers for the same period. While the original center and two of the new centers each had one surgeon who championed the app successfully, at the center with 380 downloads, the app was adopted by the entire trauma orthopedic and general surgery teams. Use was driven by the trauma nurse practitioners, who began recommending it to every patient. This is a great example of the team environment in modern healthcare. When more healthcare professionals encourage patients to use educational resources, patients are more likely to download the app.
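The SD comparison above can be reproduced approximately from the download counts reported in the Results. This is a minimal sketch: the text does not state whether a sample or population SD was used, so both are shown, and the variable and function names are hypothetical.

```python
from statistics import mean, pstdev, stdev

# Download counts for the other three centers, as reported in the Results.
other_centers = [93, 89, 31]
top_center = 380


def sd_above_mean(value, sample, spread):
    """Number of SDs by which value exceeds the mean of sample."""
    return (value - mean(sample)) / spread(sample)


z_sample = sd_above_mean(top_center, other_centers, stdev)       # n - 1 denominator
z_population = sd_above_mean(top_center, other_centers, pstdev)  # n denominator

print(f"Sample SD: {z_sample:.1f} SD above the mean")
print(f"Population SD: {z_population:.1f} SD above the mean")
```

With only three comparison centers, the two conventions bracket the figure quoted in the text, so the estimate is best read as an order of magnitude rather than a precise statistic.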

The influence of a physician or physician extender recommendation of the app was also evident in the results of the Google ads campaign. Over 2000 people who were searching for information related to trauma saw an ad similar to the flyer used with patients in clinic. However, of these, only 21 (0.9%) clicked through to the app home page, and none chose to download the app. Meanwhile, survey data indicated that over one-third of patients who were offered the app by a healthcare provider downloaded and used the app. The educational resources that physicians recommend are important to patients.

The digital nature of smartphone apps provides instantaneous collection of information about usage patterns. This feedback is not possible with traditional paper educational materials. We know that patients viewed thousands of pages of educational material over 12 months and that one-third of the time they spent over 2 min looking at these pages. The pages are simply written, and 2 min is adequate time to take in the intended message. By contrast, tracking usage of paper education materials would require surveying patients who were provided such materials, a process subject to considerable recall bias and inaccuracy.30 31 With no feasible way to monitor the usage of paper educational materials, we do not know if patients tend to engage more with electronic materials. However, the high volume of downloads and page views indicates that patients are using this resource.

Survey results supplemented data from the app with information about characteristics of users from the 68 patients and caregivers who completed the questionnaires. Survey data revealed that the app was used by patients (72%) and frequently by spouses (20%) and other family members (8%). As expected, participation was greater among younger patients. This trend is likely due to comfort and familiarity with technology. While a majority (60%) of patients said they would recommend the app, responses on the Likert scale clustered at the extremes; in the future, a yes or no format may improve survey accuracy.

This study has weaknesses that we would like to address in subsequent investigations. We did not measure changes in patient comprehension. We collected a relatively small sample of surveys, and most were from only one institution. In the future, we plan to measure patient recollection and comprehension of discharge instructions and determine whether usage of the app makes an impact.

The development of patient education materials that successfully improve physicians’ ability to engage patients in their care is critical to the improvement of that care. Although it seems a daunting task, big initiatives do not necessarily require large budgets. This paper shows that a simple educational app designed by a small team for orthopedic trauma patients can be successful in multiple centers with hundreds of patients. Furthermore, adoption of a new educational app is enhanced through a team approach.

Footnotes

Presented at: Annual Meeting of the Society of Military Orthopaedic Surgeons, Palm Beach Gardens, Florida, 18 December 2019.

Contributors: BRC: study development, implementation, enrollment, data collection, data analysis, manuscript writing and literature review. MB: study development, implementation and manuscript critical revision. MN: implementation, enrollment, data analysis and manuscript critical revision. NS: implementation, enrollment, data collection and manuscript critical revision. PW: implementation, data analysis and manuscript critical revision. AV: implementation, data collection and manuscript critical revision. HAV: study design, implementation, enrollment, data analysis, literature review, manuscript drafting and critical revision.

Funding: This study was IRB approved. Funding to develop the app was provided through the MetroHealth Foundation Nash Endowment for Orthopaedic Education. No benefits in any form have been received or will be received from a commercial party related directly or indirectly to the subject of this article. All of the devices in this manuscript are FDA approved.

Map disclaimer: The depiction of boundaries on this map does not imply the expression of any opinion whatsoever on the part of BMJ (or any member of its group) concerning the legal status of any country, territory, jurisdiction or area or of its authorities. This map is provided without any warranty of any kind, either express or implied.

Competing interests: Funding to develop the app was provided through the MetroHealth Foundation Nash Endowment for Orthopaedic Education.

Patient consent for publication: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data availability statement: Data are available on reasonable request.

References

- 1. McKneally MF, Ignagni E, Martin DK, D'Cruz J. The leap to trust: perspective of cholecystectomy patients on informed decision making and consent. J Am Coll Surg 2004;199:51–7. doi:10.1016/j.jamcollsurg.2004.02.021

- 2. Paasche-Orlow MK, Taylor HA, Brancati FL. Readability standards for informed-consent forms as compared with actual readability. N Engl J Med 2003;348:721–6. doi:10.1056/NEJMsa021212

- 3. Jones D, Musselman R, Pearsall E, McKenzie M, Huang H, McLeod RS. Ready to go home? Patients' experiences of the discharge process in an enhanced recovery after surgery (ERAS) program for colorectal surgery. J Gastrointest Surg 2017;21:1865–78. doi:10.1007/s11605-017-3573-0

- 4. Rossi MJ, Brand JC, Provencher MT, Lubowitz JH. The expectation game: patient comprehension is a determinant of outcome. Arthroscopy 2015;31:2283–4. doi:10.1016/j.arthro.2015.09.005

- 5. Demiris G, Afrin LB, Speedie S, Courtney KL, Sondhi M, Vimarlund V, Lovis C, Goossen W, Lynch C. Patient-centered applications: use of information technology to promote disease management and wellness. A white paper by the AMIA Knowledge in Motion Working Group. J Am Med Inform Assoc 2008;15:8–13. doi:10.1197/jamia.M2492

- 6. Falagas ME, Korbila IP, Giannopoulou KP, Kondilis BK, Peppas G. Informed consent: how much and what do patients understand? Am J Surg 2009;198:420–35. doi:10.1016/j.amjsurg.2009.02.010

- 7. Lin MJ, Tirosh AG, Landry A. Examining patient comprehension of emergency department discharge instructions: who says they understand when they do not? Intern Emerg Med 2015;10:993–1002. doi:10.1007/s11739-015-1311-8

- 8. Kadakia RJ, Tsahakis JM, Issar NM, Archer KR, Jahangir AA, Sethi MK, Obremskey WT, Mir HR. Health literacy in an orthopedic trauma patient population: a cross-sectional survey of patient comprehension. J Orthop Trauma 2013;27:467–71. doi:10.1097/BOT.0b013e3182793338

- 9. Mauksch LB, Dugdale DC, Dodson S, Epstein R. Relationship, communication, and efficiency in the medical encounter: creating a clinical model from a literature review. Arch Intern Med 2008;168:1387–95. doi:10.1001/archinte.168.13.1387

- 10. Eltorai AEM, Naqvi SS, Ghanian S, Eberson CP, Weiss A-PC, Born CT, Daniels AH. Readability of invasive procedure consent forms. Clin Transl Sci 2015;8:830–3. doi:10.1111/cts.12364

- 11. Grady C. Enduring and emerging challenges of informed consent. N Engl J Med 2015;372:855–62. doi:10.1056/NEJMra1411250

- 12. Eltorai AEM, Ghanian S, Adams CA, Born CT, Daniels AH. Readability of patient education materials on the American Association for Surgery of Trauma website. Arch Trauma Res 2014;3:e18161. doi:10.5812/atr.18161

- 13. Eltorai AEM, Cheatham M, Naqvi SS, Marthi S, Dang V, Palumbo MA, Daniels AH. Is the readability of spine-related patient education material improving?: an assessment of subspecialty websites. Spine 2016;41:1041–8. doi:10.1097/BRS.0000000000001446

- 14. Roberts H, Zhang D, Dyer GSM. The readability of AAOS patient education materials: evaluating the progress since 2008. J Bone Joint Surg Am 2016;98:e70. doi:10.2106/JBJS.15.00658

- 15. Fahimuddin FZ, Sidhu S, Agrawal A. Reading level of online patient education materials from major obstetrics and gynecology societies. Obstet Gynecol 2019;133:987–93. doi:10.1097/AOG.0000000000003214

- 16. Basch CH, Fera J, Ethan D, Garcia P, Perin D, Basch CE. Readability of online material related to skin cancer. Public Health 2018;163:137–40. doi:10.1016/j.puhe.2018.07.009

- 17. LÖ S, Sönmez MG, Ayrancı MK, Gül M. Evaluation of the readability of informed consent forms used for emergency procedures. Dis Emerg Med J 2018;3:51–5.

- 18. Xiao N, Sharman R, Rao HR, Upadhyaya S. Factors influencing online health information search: an empirical analysis of a national cancer-related survey. Decis Support Syst 2014;57:417–27. doi:10.1016/j.dss.2012.10.047

- 19. Kumar N, Pandey A, Venkatraman A, Garg N. Are video sharing web sites a useful source of information on hypertension? J Am Soc Hypertens 2014;8:481–90. doi:10.1016/j.jash.2014.05.001

- 20. Rudd RE. Improving Americans' health literacy. N Engl J Med 2010;363:2283–5. doi:10.1056/NEJMp1008755

- 21. Nishimura A, Carey J, Erwin PJ, Tilburt JC, Murad MH, McCormick JB. Improving understanding in the research informed consent process: a systematic review of 54 interventions tested in randomized control trials. BMC Med Ethics 2013;14:28. doi:10.1186/1472-6939-14-28

- 22. Demiris G, Afrin LB, Speedie S, Courtney KL, Sondhi M, Vimarlund V, Lovis C, Goossen W, Lynch C. Patient-centered applications: use of information technology to promote disease management and wellness. A white paper by the AMIA Knowledge in Motion Working Group. J Am Med Inform Assoc 2008;15:8–13. doi:10.1197/jamia.M2492

- 23. Schenker Y, Fernandez A, Sudore R, Schillinger D. Interventions to improve patient comprehension in informed consent for medical and surgical procedures: a systematic review. Med Decis Making 2011;31:151–73. doi:10.1177/0272989X10364247

- 24. Rudd RE. Improving Americans' health literacy. N Engl J Med 2010;363:2283–5. doi:10.1056/NEJMp1008755

- 25. Houts PS, Doak CC, Doak LG, Loscalzo MJ. The role of pictures in improving health communication: a review of research on attention, comprehension, recall, and adherence. Patient Educ Couns 2006;61:173–90. doi:10.1016/j.pec.2005.05.004

- 26. Chelf JH, Agre P, Axelrod A, Cheney L, Cole DD, Conrad K, Hooper S, Liu I, Mercurio A, Stepan K, et al. Cancer-related patient education: an overview of the last decade of evaluation and research. Oncol Nurs Forum 2001;28:1139–47.

- 27. Davis SA, Carpenter DM, Blalock SJ, Budenz DL, Lee C, Muir KW, Robin AL, Sleath B. A randomized controlled trial of an online educational video intervention to improve glaucoma eye drop technique. Patient Educ Couns 2019;102:937–43. doi:10.1016/j.pec.2018.12.019

- 28. Grady C, Cummings SR, Rowbotham MC, McConnell MV, Ashley EA, Kang G. Informed consent. N Engl J Med 2017;376:856–67. doi:10.1056/NEJMra1603773

- 29. Childs BR, Swetz A, Andres BA, Breslin MA, Hendrickson SB, Moore TA, VP H, Vallier HA. Enhancing trauma patient experience through education and engagement: development of a mobile application. J Am Acad Orthop Surg. Submitted for publication 2019.

- 30. Lovalekar M, Abt JP, Sell TC, Lephart SM, Pletcher E, Beals K. Accuracy of recall of musculoskeletal injuries in elite military personnel: a cross-sectional study. BMJ Open 2017;7:e017434. doi:10.1136/bmjopen-2017-017434

- 31. Gabbe BJ, Finch CF, Bennell KL, Wajswelner H. How valid is a self reported 12 month sports injury history? Br J Sports Med 2003;37:545–7. doi:10.1136/bjsm.37.6.545
