Abstract

Medical educators have not reached widespread agreement on core content for a U.S. medical school curriculum. As a first step toward addressing this, five U.S. medical schools formed the Robert Wood Johnson Foundation Reimagining Medical Education collaborative to define, create, implement, and freely share core content for a foundational medical school course on microbiology and immunology. This proof-of-concept project involved delivery of core content to preclinical medical students through online videos and class-time interactions between students and facilitators. A flexible, modular design allowed four of the medical schools to successfully implement the content modules in diverse curricular settings. Compared with the prior year, student satisfaction ratings after implementation were comparable or showed a statistically significant improvement. Students who took this course at a time point in their training similar to when the USMLE Step 1 reference group took Step 1 earned equivalent scores on National Board of Medical Examiners–Customized Assessment Services microbiology exam items. Exam scores for three schools ranged from 0.82 to 0.84, compared with 0.81 for the national reference group; exam scores were 0.70 at the fourth school, where students took the exam in their first quarter, two years earlier than the reference group. This project demonstrates that core content for a foundational medical school course can be defined, created, and used by multiple medical schools without compromising student satisfaction or knowledge. This project offers one approach to collaboratively defining core content and designing curricular resources for preclinical medical school education that can be shared.

Medical educators in the United States have not yet reached widespread agreement on core content for a national medical school curriculum. Most national efforts have focused on defining the end goals of the curriculum (e.g., competencies and attributes) rather than on defining the body of knowledge itself.1,2 As a result, educators at each institution spend much of their time choosing what content to teach3 and how to design their courses.4 Having hundreds of instructors from across the country working independently to choose content and create course materials is inefficient and redundant. If we could collectively define core content and develop shared instructional materials for teaching that content instead, faculty would have more time to focus on improving the quality of teaching and content delivery.

As a first step toward addressing this issue, we embarked on an externally funded proof-of-concept project, the Robert Wood Johnson Foundation Reimagining Medical Education collaborative. We wanted to test the feasibility of multiple medical schools (1) agreeing on the core microbiology and immunology content that medical students should learn in their preclinical education and (2) based on this agreement, collaboratively creating flexible core curricular components to be implemented at the schools and freely shared with others. Our hope was to show others who are interested in this idea that developing shared core content for foundational medical school courses and sharing these resources could be a more efficient and effective way to approach curriculum development and delivery.5,6

Project Description

Project goals

Our specific goals as a multi-institution collaborative were to (1) agree on and define the core content for a microbiology and immunology course for preclinical medical students, (2) create and deliver the content at multiple medical schools, and (3) evaluate students’ satisfaction and knowledge with the new content modules.

Defining core content

Stanford University School of Medicine (Stanford) led this project, which was partially funded by the Robert Wood Johnson Foundation. The other collaborating schools were Duke University School of Medicine (Duke); University of California, San Francisco, School of Medicine (UCSF); University of Washington School of Medicine (UW); and University of Michigan Medical School (UM). All schools contributed to defining the core content and overall curriculum strategy; however, UM did not participate in creating or implementing the course because it was in the midst of curriculum restructuring.

Broad decisions about course goals and objectives, curricular content, educational strategies, and the roles and responsibilities of project collaborators (see below) were made at an initial in-person meeting in March 2014. A total of 35 faculty members, staff, and students from the five collaborating schools actively participated in this meeting, of whom 14 had MDs, 1 had an MD/PhD, and 1 had a PhD. Five microbiology course directors, 2 immunology course directors, and 4 senior medical education deans from the five schools also participated in the meeting. All those who participated in the meeting were considered project collaborators.

Preparation for the consensus-building process used at the meeting involved several steps. First, senior medical education leaders from the five collaborating schools agreed on overarching guiding principles for defining core content, which emphasized teaching of foundational principles and concepts rather than testable facts (see Supplemental Digital Appendix 1 at http://links.lww.com/ACADMED/A645). Course directors at Stanford then created an educational framework to help learners and educators apply fundamental concepts of microbiology and immunology to the clinical presentation of infectious diseases.7 After cross-referencing microbiology and immunology content in the existing curricula at the five collaborating schools with a comprehensive list of medical microbiology core knowledge objectives,8 we created a list of 72 major topic areas with accompanying learning objectives. Lastly, Stanford course directors drafted 74 patient vignettes that incorporated “teachable” microbiology and immunology concepts.7

At the in-person meeting, decision making was approached via alternating time for idea generation in small working groups with time for discussion and synthesis within the larger group to arrive at a final agreement. First, overall course objectives that emphasized microbiology and immunology concepts relevant to clinical medicine and public health were developed (see Supplemental Digital Appendix 2 at http://links.lww.com/ACADMED/A645). Then, the group discussed and narrowed the list of 72 major topic areas down to 66. In small working groups, faculty matched the topic areas to an appropriate patient vignette. For example, groups matched topic areas such as “infectious diarrhea” and “vaccines” to a patient vignette about a six-month-old girl with rotavirus gastroenteritis. Patient vignettes that integrated multiple topic areas were retained. Through an iterative and collaborative process, 35 patient vignettes were selected.

Creating and organizing core content

To enhance learning and retention, the project collaborators planned a flipped-classroom, interactive in-class learning approach, and to organize the content, we created a set of learning modules. Each module included four linked components: (1) a patient-centered narrative “springboard” video; (2) a set of short content videos providing factual information; (3) in-class, interactive learning sessions, and resources for these sessions, that emphasize links between the basic science and clinical presentations; and (4) tools for formative and summative assessments. The intended audience for the course was preclinical medical students; however, the modules were purposefully designed to be self-contained and flexible to allow for a variety of implementation options.

Faculty teams at each of the four participating schools were responsible for developing at least 8 learning modules. Each module was reviewed by faculty and students from a second participating school before final production. We created 29 microbiology learning modules that included 29 patient-centered videos, 145 content videos, and facilitator guides for 19 interactive sessions. We created 5 immunology learning modules that included 5 patient-centered videos, 62 content videos, and facilitator guides for 8 interactive sessions. Across all participating schools, 20 faculty members, 21 students, and 13 staff participated in creating or reviewing content and managing the project.

Patient-centered springboard videos.

Each module began with a patient story to emphasize the clinical relevance of the content and create a memorable context on which to build factual knowledge.9 For stylistic consistency, one faculty member produced and narrated all patient-centered videos.7

Content videos.

We presented content videos as narrated, voice-over PowerPoint presentations that used agreed-on guidelines (i.e., specific learning objectives, a standardized slide template and style, and video duration of less than 10 minutes). The content videos were produced and narrated by multiple faculty members. Feedback from student focus groups helped refine stylistic practices, including abundant use of illustrations and graphics, conversational narration, and highlighting key terms.

Interactive sessions.

We designed learning sessions to help students apply microbiology and immunology concepts addressed in the content videos through a variety of in-class, interactive experiences.10,11 We developed detailed facilitator guides for these sessions that allowed flexibility so that faculty could modify the activities on the basis of institution-specific resources and local faculty expertise. Interactive sessions could be conducted by 1 to 2 faculty members in a large-group lecture hall or by multiple facilitators with smaller groups of 8 to 10 students.

Tools for assessment.

We created both formative and summative learner assessments. For formative assessment, we used multiple-choice-question quizzes to test factual knowledge after students watched the springboard and content videos and to test students’ ability to apply their knowledge to specific clinical situations after the interactive sessions. We also developed a cumulative final exam composed of 60 microbiology questions selected from the National Board of Medical Examiners–Customized Assessment Services (NBME-CAS) to use as a summative assessment. The specific questions we used, agreed on by faculty across the participating schools, were selected for their quality and alignment with our core content. This standardized method of assessment allowed us to compare our outcomes across institutions and against a national reference group.

Delivery of Core Content and Implementation

Project-level delivery

The four participating schools delivered the shared core content modules (n = 34) to their 597 preclinical medical students in 2015–2016. The sequence and selection of core content were implemented differently at each of the schools (Table 1). Two schools (Stanford and UW) used the modules as the basis of a new microbiology or microbiology and immunology course, replacing prior lecture-based courses. Two schools (Duke and UCSF) integrated the modules into existing courses, which already included some flipped-classroom sessions. Three schools (UW, Duke, and Stanford) used all of the microbiology modules, while one school (UCSF) used a subset of the modules (24%) in combination with its existing microbiology curriculum. Two schools (Duke and UW) also used the immunology modules in their microbiology and immunology course, while the other two schools (Stanford and UCSF) had separate immunology courses, which did not use the immunology modules. All schools had an organ-systems-based curriculum and were encouraging more active learning and student–faculty interaction in class.

Table 1.

Comparison of Course Delivery and Implementation

| Course delivery variable | UW | Duke | UCSF | Stanford |
|---|---|---|---|---|
| Number of students | 246 | 117 | 141 | 93 |
| Percentage of modules (n = 29) used | 100% | 100% | 24% | 100% |
| Delivery timeline | Single term | Single term | Single term | Multiple terms |
| Timing in preclinical curriculum | Beginning | End | Middle | Middle to end |
| Classroom seating | Stadium seating | Stadium seating | Stadium seating | Flat classroom |
| Attendance policy | Required | Required | Optional | Optional |

Abbreviations: UW indicates University of Washington School of Medicine; Duke, Duke University School of Medicine; UCSF, University of California, San Francisco, School of Medicine; Stanford, Stanford University School of Medicine.

From a multi-institution collaboration to define core content and design flexible components for a foundational medical school curriculum. The course delivered was a microbiology course for preclinical medical students (2015–2016).

From the beginning of the project, we sought engagement of key stakeholders at each of the participating schools to facilitate implementation, with senior deans of medical education, curriculum deans, course directors, faculty, medical students, evaluation staff, and educational technology staff all participating in and contributing to creating the shared content. At the time of implementation, course directors explained to their students the project goals and how the core content was defined and created.

School-level delivery

The flexible design of the curriculum made it possible for each school to vary its implementation to meet local learner needs and curricular structure.

At Duke, course leaders integrated the microbiology and immunology modules into a larger course. They used the full set of modules and adapted a subset of the interactive sessions into class time that was already used for team-based activities.

UW delivered the microbiology and immunology modules in the context of an entire curriculum redesign, which had commenced prior to the beginning of this project. UW was the only school to introduce the content during the beginning of medical school (i.e., in the first quarter), as dictated by the medical school curriculum redesign. UW was also unique because its students are taught at six separate sites across five states. Thus, faculty at the Seattle campus provided background and orientation about the modules to teaching faculty at remote sites.

Stanford created a new microbiology and infectious diseases course that used all of the microbiology modules and delivered the content over multiple terms. Stanford structured interactive sessions in a flat classroom with multiple tables of 8 to 10 students and 1 to 2 facilitators. More than 90 facilitators participated, including clinical and basic science faculty, clinical residents and fellows, and postdoctoral scientists, spanning the fields of infectious diseases, microbiology, emergency medicine, general pediatrics, otolaryngology, and cardiology. Stanford’s immunology course was separate from the microbiology course, so the immunology modules were not used.

UCSF integrated a portion of the new microbiology modules into its existing microbiology course, choosing modules that improved specific, targeted topic areas. In the sessions, faculty enhanced interactivity within the large lecture hall setting through polling activities and think–pair–share exercises. UCSF’s immunology course was separate from the microbiology course, so the immunology modules were not used.

Evaluation Methods

Evaluation of student satisfaction and student knowledge focused on implementation of microbiology modules only, since the immunology modules were not delivered at all four participating schools.

Student satisfaction

All participating schools were asked to integrate common questions into their online student surveys, including Likert-scale items and open-ended short-answer questions that gave students an opportunity to share written comments. At each school, an independent-samples Student t test was used to compare student ratings before and after implementation of the modules. Significance was set at P < .05.
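To make the before-and-after comparison concrete, the following is a minimal sketch of a pooled-variance (Student) independent-samples t test applied to two sets of Likert ratings. It is an illustration only, not the analysis code the schools used: the function names are hypothetical, the two-sided P value is obtained by numerically integrating the t density with Simpson's rule so that the sketch needs only the Python standard library, and the example ratings are made-up placeholders rather than study data.

```python
import math
from statistics import mean, variance


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))
    return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t, df, steps=10_000):
    """Two-sided P value: 1 - 2 * (integral of the t density from 0 to |t|).

    The integral is approximated with Simpson's rule (steps must be even).
    """
    upper = abs(t)
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3
    return max(0.0, 1 - 2 * area)


def student_t_test(before, after):
    """Pooled-variance independent-samples t test; returns (t, df, P)."""
    n1, n2 = len(before), len(after)
    df = n1 + n2 - 2
    # Pooled variance assumes both cohorts share one underlying variance.
    sp2 = ((n1 - 1) * variance(before) + (n2 - 1) * variance(after)) / df
    t = (mean(before) - mean(after)) / math.sqrt(sp2 * (1 / n1 + 1 / n2))
    return t, df, two_sided_p(t, df)
```

With the hypothetical ratings `[4, 5, 4, 5, 5]` and `[3, 4, 4, 3, 4]`, the sketch gives t ≈ 2.89 on 8 degrees of freedom (two-sided P ≈ .02). In practice, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=True)` computes the same pooled-variance test.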

We collected student comments using open-ended survey questions (see Supplemental Digital Appendix 3 at http://links.lww.com/ACADMED/A645) as part of each participating school’s standard end-of-course evaluation process and analyzed the data using inductive coding and thematic analysis.12 Two coders (Heeju Jang, PhD, and M.S.) created a preliminary codebook to capture student feedback on each component of the curriculum (e.g., springboard videos, content videos, interactive sessions). The codebook was iteratively refined by testing it on a subset of student survey comments. The same two coders then used the final codebook to double-code all student comments (n = 1,262), resolving discrepancies through discussion until they reached 100% agreement. One investigator (J.N.B.) conducted thematic analysis to produce a list of major themes and representative quotes, which were presented to and verified by the rest of the authors (see Tables 2 and 3).

Table 2.

Themes and Representative Quotes of Positive Student Feedback

| Curriculum component | Theme | Representative quote |
|---|---|---|
| Overall curriculum | Effective curricular model | “I did like the flipped classroom because I was able to explore the information ahead of time, and the case-based learning in the interactive sessions really solidified the key concepts.” |
| | Appreciation for faculty dedication, effort, support, and enthusiasm | “Dr. [X] and Dr. [Y] were so excited and knowledgeable about their topics that it made me want to learn and do well.” |
| | Convenient and flexible access to content | “The RWJF modules were more effective than traditional lectures … because it was possible to take breaks between them in order to maintain better attention, or to speed up the lectures in order to get through the material faster.” |
| Patient-centered springboard videos | Compelling and engaging | “The springboard videos were amazing. They are engaging, interesting, … and provide a different way to learn. I loved them.” |
| | Clinical narratives provided a conceptual framework for synthesizing and remembering information | “I liked the springboard videos. I felt that having a patient to compare the microbiology modules to aided in my learning. During the final, I actually remembered back to the patients presented in these videos and their symptoms.” |
| Content videos | Excellent content, well-organized, with logical sequence | “I found the videos extremely helpful. It’s great to have something preformed for you that has all the information you need in a logical, organized manner.” |
| | Succinct, with appropriate length and level of detail | “The modules were well paced, clear, [and] concise.” |
| Interactive sessions | Opportunity to think critically about the material and consolidate information | “The interactive sessions are phenomenal, and I think they really reinforce what I learned in the content videos and also make me think about the material we are learning in even more detail.” |
| | Opportunity to apply knowledge to clinical context | “I felt like I was at a clinic discussing a case while learning in a classroom setting! I particularly like experts discussing how they approach medical diagnosis and what is actually done in clinical care.” |
| | Opportunity to interact with experts or faculty and peers | “Strengths [of the interactive sessions] were the absolutely incredible faculty involvement and dedication (and ability to have one-on-one interactions) as well as the collaborative nature.” |

Abbreviation: RWJF indicates Robert Wood Johnson Foundation.

Feedback is from a microbiology course delivered to preclinical medical students (2015–2016) as part of a multi-institution collaboration to define core content and design flexible components for a foundational medical school curriculum.

Table 3.

Themes and Representative Quotes of Critical Student Feedback

| Curriculum component | Themes | Representative quote |
|---|---|---|
| Overall curriculum | Prefer traditional lectures or direct instruction | “I much prefer lecture-style learning.… I find it very difficult to retain information from watching videos and attending interactive sessions. Whether I attend or not, I end up doing the same amount of relearning while preparing for the exam.” |
| | Organization and/or sequencing need improvement | “I found this course considerably fragmented.… The class could benefit from more structure and a greater focus on what is important.” |
| Patient-centered springboard videos | Little value added to understanding and retention | “I unfortunately don’t find the springboard videos to be very useful for my learning. I think they are interesting and well done; I’m just not sure how much they contribute to my overall understanding of the material.” |
| Content videos | Too lengthy or repetitive, with excessive content | “Overall I felt that the videos were too detailed in their content … not good for long-term retention.” |
| | Inconsistency in style and/or depth | “The difference in style and depth between modules was sometimes confusing.” |
| Interactive sessions | Low yield due to poor pacing and organization, unfocused discussion, or lack of coordination with videos | “These interactive sessions were not usually very helpful. A significant amount of time was used covering material that was not directly applicable, and the pace was often too slow.… We needed more guidance to direct our focus.” |
| | Inconsistent facilitation quality | “The one problem with [interactive] sessions is [that] the variation in instructors could make the session amazing or merely okay.” |
| | Logistical problems (e.g., inappropriate physical setting, group size, noise level) | “This style of teaching will not work well in a large lecture hall. We need small classrooms if you want this to work.” |

Feedback is from a microbiology course delivered to preclinical medical students (2015–2016) as part of a multi-institution collaboration to define core content and design flexible components for a foundational medical school curriculum.

Student knowledge

Each participating school assessed student knowledge according to local norms, using the multiple-choice-question quizzes created by the project collaborators. One school (Stanford) also used short-answer questions to evaluate student knowledge. To measure knowledge acquisition with validated, standardized test items, NBME-CAS questions were used by all participating schools. Stanford, Duke, and UW used scores on these NBME-CAS questions as a component of their graded final exam. Fifty UCSF students voluntarily took the NBME-CAS exam, but their scores did not impact their course grades.

Evaluation Results

Student satisfaction

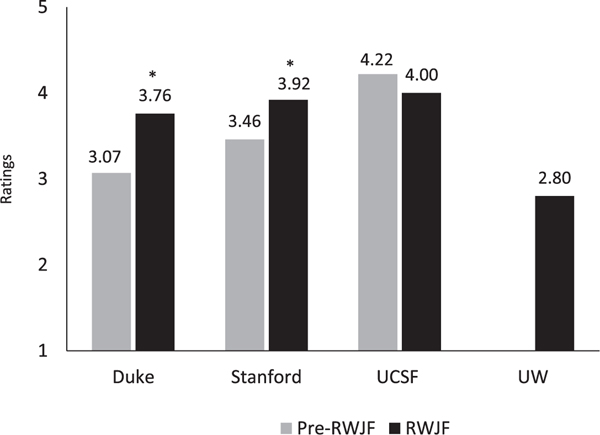

Microbiology course learner satisfaction ratings at all four participating schools were positive and, at the three schools with prior-year data, generally consistent with the institutions’ course ratings in prior years (Figure 1). At Duke, mean course ratings showed a statistically significant improvement (P = .02) following the introduction of the modules. No statistically significant difference was found at UCSF, although a slight decrease was observed. At Stanford, ratings improved significantly in the quarter with the largest amount of microbiology content (i.e., quarter two, P = .03); no significant difference was found in the other quarter (quarter four). Comparison data for UW were not available given the dramatic change in the overall curricular structure.

Figure 1.

Data show learner satisfaction ratings (on a five-point Likert scale, where 1 = poor and 5 = excellent) for microbiology courses using the microbiology modules developed by the RWJF collaborative (2015–2016) compared with the institutions’ microbiology course ratings for the prior year. UW used a six-point scale, which was adjusted to five points by collapsing two categories (poor and very poor) into one. UW did not have pre-RWJF data available for comparison purposes because of curricular restructuring. Asterisks indicate significance; Duke’s (P = .02) and Stanford’s (P = .03) RWJF student satisfaction ratings were significantly higher than their pre-RWJF ratings. RWJF modules at Duke were not evaluated separately on end-of-course surveys; data shown reflect ratings for the body and disease course in which the RWJF modules were used. Ratings for Stanford show the mean rating for quarter two, which was one of the quarters in which the RWJF modules were used. The mean Stanford RWJF rating for quarter four (3.46, data not shown) was not significantly different compared with the mean pre-RWJF quarter two rating. Abbreviations: RWJF indicates Robert Wood Johnson Foundation; UW, University of Washington School of Medicine; Duke, Duke University School of Medicine; Stanford, Stanford University School of Medicine; UCSF, University of California, San Francisco, School of Medicine.

Positive themes from student feedback included finding the curricular model effective; appreciating the flexible, organized, and succinct delivery of content; and valuing faculty commitment and enthusiasm. Critical themes included preferring traditional lectures; desiring better overall curriculum organization or sequencing; and finding the videos excessive in length or detail, or inconsistent in depth or style. Further details are provided in Tables 2 and 3.

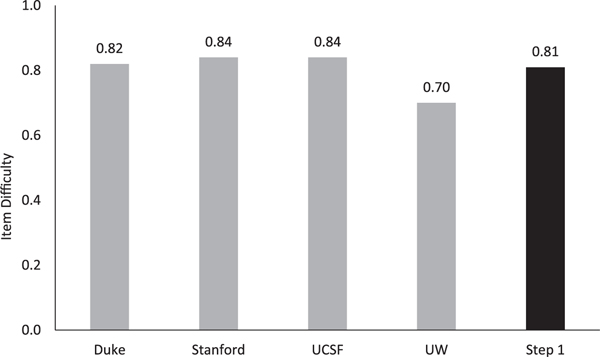

Student knowledge

On the NBME-CAS microbiology exam items, students who had been taught using the modules demonstrated learning outcomes comparable to those of the United States Medical Licensing Examination (USMLE) Step 1 reference group (first-time test takers from Liaison Committee on Medical Education–accredited schools, who had completed most of their required preclinical course work). Students at Duke, UCSF, and Stanford scored slightly above the reference group average (range: 0.82–0.84 vs. 0.81). At UW, where students took the NBME-CAS exam in their first quarter of medical school, about two years earlier than the reference group took Step 1, scores were below the reference group average (0.70; Figure 2).

Figure 2.

Data show performance on the NBME-CAS microbiology exam items (n = 60) by students who were taught with the microbiology modules developed by the RWJF collaborative (2015–2016) compared with past Step 1 test takers (i.e., Step 1 reference group). Higher item difficulty values correspond with a greater number of students answering the items correctly. The item difficulty value shown for the Step 1 reference group (black bar) is based on performance of first-time takers from LCME-accredited schools. Abbreviations: NBME-CAS indicates National Board of Medical Examiners–Customized Assessment Services; RWJF, Robert Wood Johnson Foundation; Step 1, United States Medical Licensing Examination Step 1; LCME, Liaison Committee on Medical Education; Duke, Duke University School of Medicine; Stanford, Stanford University School of Medicine; UCSF, University of California, San Francisco, School of Medicine; UW, University of Washington School of Medicine.
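The item difficulty values in Figure 2 follow the classical definition: the proportion of examinees who answer an item correctly, so higher values mean easier items. The sketch below shows how a group's per-item and overall values could be computed from a 0/1 response matrix. It assumes, since the source does not say so explicitly, that a group's reported value is the unweighted mean across the 60 items; the function names and the example matrix are hypothetical.

```python
def item_difficulties(responses):
    """Per-item proportion correct.

    responses[s][i] is 1 if student s answered item i correctly, else 0.
    """
    n_students = len(responses)
    n_items = len(responses[0])
    return [sum(row[i] for row in responses) / n_students for i in range(n_items)]


def group_difficulty(responses):
    """Unweighted mean of the per-item proportions correct (assumed summary)."""
    diffs = item_difficulties(responses)
    return sum(diffs) / len(diffs)


# Hypothetical data: 4 students, 3 items.
example = [[1, 1, 0], [1, 0, 0], [1, 1, 1], [0, 1, 0]]
```

For the hypothetical matrix, the per-item values are 0.75, 0.75, and 0.25, and the group value is about 0.58. With complete data, the mean across items equals each student's proportion correct averaged across students, so the summary does not depend on which of the two averages is taken first.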

Sharing Content

Project collaborators agreed to share the core content modules with other domestic and international medical schools. As of June 2018, after presenting the project and study results at four regional and national conferences, 24 U.S. and 6 international medical schools have expressed interest in using the modules. Seventeen institutions have agreed to provide feedback to help improve future iterations. The YouTube channel13 hosting the springboard and content videos has attracted viewers from 219 countries and has amassed 484,342 views, 6,886 subscribers, 4,948 shares, and 4,550 likes.

Discussion

We demonstrated that it is possible for multiple medical schools to define core content for a foundational medical school course; create flexible, modular curricular components; and implement them across multiple uniquely structured curricula. Evaluation and assessment data suggest that our collaborative microbiology modules achieved knowledge acquisition and learner satisfaction outcomes comparable to the microbiology courses that were previously used at each participating school. We have also demonstrated that there is interest in sharing curricular components, as our YouTube channel13 has received views from over 200 countries.

We began this project in March 2014 and completed it in June 2016. Production of all springboard and content videos took 15 months. Completing the project in this short time frame speaks to the commitment of the project collaborators, but the compressed schedule also produced lessons learned alongside its successes. Although we succeeded in bringing 20 faculty members to agreement on core content, in large part because of extensive preparation with the project collaborators before the meeting, formal consensus methods, such as the Delphi method, could be considered for future endeavors.

Iterative feedback was a key strategy of our development process. For content video production, we gathered peer and student feedback on learning objectives and video production style and provided templates and guidelines in pursuit of quality and consistency. Nonetheless, some heterogeneity remained given that faculty narrators applied different approaches to teaching the content. This led to some student dissatisfaction. In contrast, the patient-centered springboard videos, which were all narrated by one creator, were received positively by most learners. For future projects, a single oversight role and a single narrator for content videos may better unify the modules.

Survey results suggest that the length and timing of the course (i.e., the participating school’s curricular structure) influenced how the curriculum was perceived by students. UW students who took a single-term 4-week microbiology and immunology course in the first quarter of medical school reported less satisfaction than second-year students at Duke who took a single-term 12-week microbiology and immunology course, even though both schools used all of the modules (see Figure 1 and Table 1). UW students also reported more difficulty absorbing the content and raised concerns that the course covered too much content, particularly given the limited amount of class time. For future users of the modules, these experiences are important lessons in adapting the modules to the learner stage and curricular structure. Given UW students’ early stage of training, we were also not surprised that they earned lower scores on the relevant NBME-CAS exam items than the (more experienced) students from the three other participating schools and the national reference group.

Although the initial investment of faculty and staff time and resources required to define, create, and implement our shared core content was substantial, we anticipate that this up-front investment will see greater returns over time as the original four participating schools continue to use the materials. The project fulfilled each school’s larger goal of transitioning content delivery from traditional lectures to online videos, reserving class time for active learning. For other schools, we hope that sharing this core content will enable faculty to focus on high-quality instruction and dynamic engagement with students.

A key benefit of our project was the establishment of a multi-institution group made up of forward-thinking educators and engaged students who continue to share ideas. Collaborative work enhanced the quality of our educational materials because it allowed for diverse voices and an iterative process of revising materials on the basis of feedback from faculty members, staff, and students from multiple institutions. In contrast, “siloed” course directors at a single institution have little or no input or accountability from faculty peers and only retrospective accountability from students. This benefit of collaboration in itself may be a compelling reason to pursue similar large-scale collaborations for the creation of higher-quality educational resources in the future.

Conclusion

Our project demonstrates that faculty members, staff, and students from multiple medical schools can collaboratively agree on core content, create teaching materials together, and implement the shared content in their unique curricular structures. Initial results from this proof-of-concept project suggest that collaboratively defining core content and developing curricular components can be a more efficient and effective model for delivering medical education. Drawing on the diverse experiences and training of multiple faculty, staff, and student contributors may enable a more rigorous approach to selecting core content. Gathering iterative feedback from diverse project collaborators may lead to greater accountability and higher-quality work. In addition, sharing core content reduces redundant work, which allows faculty to focus on improving the quality of teaching. We hope that our successes and lessons learned will help inform future endeavors. We view our proof-of-concept project as a first step in exploring whether widespread agreement on core content can be built for one foundational course, and we hope it can inform larger, long-term efforts to establish such agreement for all foundational medical school courses.

Supplementary Material

Acknowledgments:

The authors wish to thank the following contributors: For contributing to study design, data collection, or data analysis, Alyssa Bogetz, Heeju Jang, Sylvia Merrell, Katy Nandagopol, Irina M. Russell, Jessica Whittemore, and Justin Hudgins. For designing or implementing the curriculum, Michael Gunn, John Lynch, Jacob Rosenberg, Troy Torgerson, and Matt Velkey. For providing technical support or guidance, Andrew Baek, Joe Benfield, Christian Burke, Michael Campion, Michael Halaas, Sharon Kaiser, John Loftin, Chandler Mayfield, Michael McAuliffe, Clinton Miller, Erika Noble, Jason Reep, Kevin Souza, and Jamie Tsui. For providing institutional support for curriculum implementation, Ed Buckley, Erika Goldstein, Colleen Grochowski, Catherine Lucey, and Michael Ryan.

Funding/Support: The Robert Wood Johnson Foundation, Michael W. Painter, and the Burke Family Foundation provided financial funding, support, or guidance.

Footnotes

Other disclosures: None reported.

Ethical approval: The institutional review boards at all participating schools approved the study or determined the research to be exempt.

Previous presentations: Some of the information presented in this article was presented at the Association of American Medical Colleges (AAMC) Annual Meeting, November 6–10, 2015, Baltimore, Maryland; at the Western Group on Education Affairs (WGEA) Annual Meeting, April 14–16, 2016, Tucson, Arizona; at the AAMC Annual Meeting, November 11–15, 2016, Seattle, Washington; and at IDWeek (Infectious Diseases Society of America), October 26–30, 2016, New Orleans, Louisiana.

The authors have informed the journal that they agree that both S.F. Chen and J. Deitz completed the intellectual and other work typical of the first author.

Supplemental digital content for this article is available at http://links.lww.com/ACADMED/A645.

Contributor Information

Sharon F. Chen, clinical associate professor of pediatrics, Stanford University School of Medicine, Stanford, California.

Jennifer Deitz, assistant dean, Stanford Continuing Studies, Stanford University, Stanford, California. At the time of the study and writing, she was director of research and evaluation, Office of Medical Education, Stanford University School of Medicine, Stanford, California.

Jason N. Batten, fourth-year medical student, Stanford University School of Medicine, Stanford, California. At the time of the study and writing, he was a second-year medical student.

Jennifer DeCoste-Lopez, senior pediatric resident, Lucile Packard Children’s Hospital, Stanford Children’s Health, Palo Alto, California.

Maya Adam, director of health education outreach, Stanford Center for Health Education, Stanford University, Stanford, California.

J. Andrew Alspaugh, professor of medicine and of molecular genetics and microbiology, Duke University School of Medicine, Durham, North Carolina.

Manuel R. Amieva, associate professor of pediatrics and of microbiology and immunology, Stanford University School of Medicine, Stanford, California.

Pauline Becker, senior project manager, Information Resources & Technology, Educational Technology Department, Stanford University School of Medicine, Stanford, California.

Bryn Boslett, assistant professor of medicine, University of California, San Francisco, School of Medicine, San Francisco, California.

Jan Carline, professor of biomedical informatics and medical education, University of Washington School of Medicine, Seattle, Washington.

Peter Chin-Hong, professor of medicine, University of California, San Francisco, School of Medicine, San Francisco, California.

Deborah L. Engle, assistant dean for assessment and evaluation, Office of Curricular Affairs, Duke University School of Medicine, Durham, North Carolina.

Kristen N. Hayward, associate professor of pediatrics, University of Washington School of Medicine, Seattle, Washington.

Andrew Nevins, clinical associate professor of medicine, Stanford University School of Medicine, Stanford, California.

Aarti Porwal, managing director, Stanford Center for Health Education, Stanford University, Stanford, California.

Paul S. Pottinger, associate professor of medicine, University of Washington School of Medicine, Seattle, Washington.

Brian S. Schwartz, associate professor of medicine, University of California, San Francisco, School of Medicine, San Francisco, California.

Sherilyn Smith, professor of pediatrics, University of Washington School of Medicine, Seattle, Washington.

Mohamed Sow, curriculum program manager, Student Affairs, Office of Medical Education, Stanford University School of Medicine, Stanford, California.

Arianne Teherani, professor of medicine and education scientist, Center for Faculty Educators, University of California, San Francisco, School of Medicine, San Francisco, California.

Charles G. Prober, senior associate vice provost for health education and professor of pediatrics and of microbiology and immunology, Stanford Center for Health Education, Stanford University, Stanford, California.

References

1. Brown DR, Warren JB, Hyderi A, et al; AAMC Core Entrustable Professional Activities for Entering Residency Entrustment Concept Group. Finding a path to entrustment in undergraduate medical education: A progress report from the AAMC Core Entrustable Professional Activities for Entering Residency Entrustment Concept Group. Acad Med. 2017;92:774–779.

2. Skochelak SE, Stack SJ. Creating the medical schools of the future. Acad Med. 2017;92:16–19.

3. Dexter J, Koshland G, Waer A, Anderson D. Mapping a curriculum database to the USMLE Step 1 content outline. Med Teach. 2012;34:e666–e675.

4. Melber DJ, Teherani A, Schwartz BS. A comprehensive survey of preclinical microbiology curricula among US medical schools. Clin Infect Dis. 2016;63:164–168.

5. Le TT, Prober CG. A proposal for a shared medical school curricular ecosystem. Acad Med. 2018;93:1125–1128.

6. Prober CG, Khan S. Medical education reimagined: A call to action. Acad Med. 2013;88:1407–1410.

7. Adam M, Chen SF, Amieva M, et al. The use of short, animated, patient-centered springboard videos to underscore the clinical relevance of preclinical medical student education. Acad Med. 2017;92:961–965.

8. Booth SJ, Burges G, Justemen L, Knoop F. Design and implementation of core knowledge objectives for medical microbiology and immunology. J Int Assoc Med Sci Educ. 2009;19:100–138.

9. Hurwitz B, Charon R. A narrative future for health care. Lancet. 2013;381:1886–1887.

10. Freeman S, Eddy SL, McDonough M, et al. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci U S A. 2014;111:8410–8415.

11. Irby DM, Cooke M, O’Brien BC. Calls for reform of medical education by the Carnegie Foundation for the Advancement of Teaching: 1910 and 2010. Acad Med. 2010;85:220–227.

12. Miles MB, Huberman AM, Saldaña J. Qualitative Data Analysis. Thousand Oaks, CA: Sage; 2013.

13. YouTube. RWJF microbiology, immunology & infectious diseases. https://www.youtube.com/channel/UCD_hEu7G_yN5X29fbEf7XgA/playlists. Accessed January 15, 2019.
