Summary

Animals actively interact with their environment to gather sensory information. There is conflicting evidence about how mice use vision to sample their environment. During head restraint, mice make rapid eye movements coupled between the eyes, similar to conjugate saccadic eye movements in humans. However, when mice are free to move their heads, eye movements are more complex and often non-conjugate, with the eyes moving in opposite directions. We combined head and eye tracking in freely moving mice and found that both observations are explained by two types of eye-head coupling, associated with vestibular mechanisms. The first type comprised non-conjugate eye movements, which compensate for head tilt changes to maintain a similar visual field relative to the horizontal ground plane. The second type comprised conjugate eye movements coupled to head yaw rotation, which produce a “saccade and fixate” gaze pattern. During head-initiated saccades, the eyes moved together in the head direction, but during subsequent fixation, they moved in the opposite direction to the head to compensate for head rotation. This saccade and fixate pattern resembles that of humans, who use eye movements (with or without head movement) to rapidly shift gaze, but in mice it relies on combined head and eye movements. Both couplings were maintained during social interactions and visually guided object tracking. Even in head-restrained mice, eye movements were invariably associated with attempted head motion. Our results reveal that mice combine head and eye movements to sample their environment and highlight similarities and differences between eye movements in mice and humans.

Keywords: eye movement, head movement, gaze, pupil, vision, oculomotor system, vestibular system, natural behavior

Highlights

• Head and eye tracking in freely moving mice reveals two types of eye-head coupling
• Eye coupling to head tilt aligns gaze to the horizontal plane
• Eye coupling to head yaw rotation produces a “saccade and fixate” gaze pattern
• Eye movements in head-restrained mice are related to attempted head rotation

Meyer et al. track head and eyes in freely moving mice and find two distinct types of eye-head coupling. Eye coupling to head tilt aligns gaze to the horizontal plane, while eye coupling to yaw head rotation produces a “saccade and fixate” gaze pattern. Even in head-restrained mice, eye movements are linked to attempted head rotation.

Introduction

During natural behaviors, animals actively sample their sensory environment [1, 2]. For example, humans use a limited and highly structured set of head and eye movements (see [3] and references therein) to shift their gaze (eye in head + head in space) to selectively extract relevant information during visually guided behaviors, like making a cup of tea [4] or a peanut butter sandwich [5]. Revealing the precise patterns of these visual orienting behaviors is essential to understand the function of vision in humans and other animals [6, 7] and to investigate the underlying neural mechanisms.

The mouse has emerged as a major model organism in vision research, due to the availability of genetic tools to dissect neural circuits and model human disease. This has yielded detailed insights into the circuitry and response properties of early visual pathways in mice (see [8] for a recent review). Mice use vision during natural behaviors, such as threat detection [9] and prey capture [10]. They can also be trained on standard visual paradigms similar to those used in humans and non-human primates, including visual detection and discrimination tasks, with or without head restraint [11, 12, 13]. However, very little is known about how visual orienting behaviors support vision in mice. Vision in mice is typically studied in head-restrained animals to facilitate neural recordings and experimental control of visual input. Until recently, it has not been feasible to simultaneously measure movement of the head and eyes in freely behaving mice. The aim of our study was therefore to determine how head and eye movements contribute to visually guided behaviors in mice.

There is conflicting evidence about the role of eye movements in mice. Mice have laterally facing eyes with a large field of view of approximately 280° extending in front of, above, below, and behind the animal’s head [8, 14, 15, 16, 17]. The binocular field, where the two monocular fields overlap, is narrow, spanning only approximately 40°–50°. In contrast to humans, mice have no fovea and appear to lack other pronounced retinal specializations for high-resolution vision [18, 19]. Despite this, multiple studies have found that head-restrained mice move their eyes [17, 20, 21, 22, 23]; these eye movements are rapid and conjugate, i.e., both eyes move together in the same direction, with an average magnitude of 10°–20° and peak velocities that can exceed 1,000°/s. While these saccade-like eye movements shift the visual field by only a relatively small amount (about 5%), mainly in the horizontal direction [20], it has been suggested that they resemble exploratory saccades in humans [17, 20].

However, studies in freely moving mice [23, 24] and rats [25] have found much more complex eye movement patterns, which are often non-conjugate, i.e., the two eyes move in opposite directions. These non-conjugate eye movements were systematically coupled to changes in the orientation of the animal’s head with respect to the horizontal plane (head tilt) [23, 25, 26]. Eye movements in response to static tilt changes are associated with the otolith organs, which sense head acceleration, including gravity, and are referred to as “tilt otolith-ocular” [26, 27] or “ocular countertilt” reflexes [28]. While the precise function of this eye-head coupling is still unclear (but see [25, 29]), it appears to be largely compensatory and has been suggested to stabilize the visual field with respect to the ground [23, 26].

We previously observed that freely moving mice rarely make saccades in the absence of head motion [23]. We therefore reasoned that eye movements might additionally serve to shift and stabilize gaze during combined eye and head movements, as in higher vertebrates, including humans, primates, cats, and rabbits [30]. These eye movements have been referred to as “saccade and fixate” eye movements. They depend on the semicircular canals, whose receptor cells sense head rotation, and the underlying reflex is referred to as the angular vestibulo-ocular reflex (aVOR) [28, 31, 32]. The aVOR produces eye movements that counteract rotational head movements; these are interspersed with fast resetting saccadic eye movements that shift the gaze (also known as the quick phase of nystagmus). At the same time, compensation for changes in head orientation could approximately maintain the same view of the visual environment with respect to the horizontal ground plane. This would be consistent with our previous observation that head orientation accounts for most of the variability along the vertical eye axis but fails to account for a substantial fraction of the variability along the horizontal eye axis, along which saccadic eye movements mainly occur in mice [23].

To investigate the relation between eye and head movements, we used a system that we recently developed for tracking eye positions together with head tilt and head rotations in freely moving mice [23]. We show that eye movements in freely moving mice can be decomposed into non-conjugate head-tilt-related eye movements and conjugate eye movements along the horizontal eye axis. Non-conjugate changes in eye position during head tilt stabilize the gaze of the two eyes relative to the horizontal plane. In contrast, conjugate horizontal eye movements produce a saccade and fixate gaze pattern that is closely linked to rotational head movements around the yaw axis. Eye movements during the saccade and the fixate phases were strongly coupled to the head but in different rotation directions, and this coupling was preserved when animals were engaged in a novel visually guided tracking task. Finally, eye movements in head-restrained mice always occurred during attempted head movements, and the direction of the attempted head movement was consistent with that of combined eye-head gaze shifts in freely moving animals.

To summarize, our results resolve the apparent discrepancy between eye movement patterns in head-restrained and freely moving mice. Eye movements in mice consist of two distinct, separable types: non-conjugate, head-tilt-related eye movements and conjugate eye movements along the horizontal eye axis. Importantly, gaze shifts in mice rely on combined head and eye movements with a similar saccade and fixate pattern as in other higher vertebrates, including humans.

Results

Eye Movements in Freely Moving Mice, Head-Restrained Mice, and Humans

To investigate how mice use their head and eyes to explore the environment, we tracked the positions of both eyes together with head motion in freely moving mice using a previously developed head-mounted system [23]. The system includes two head-mounted cameras combined with an inertial measurement unit (IMU) sensor (Figure 1A). The IMU provides information about head tilt and head rotation while the cameras measure the positions of the eyes relative to the eye axis in the head coordinate frame.

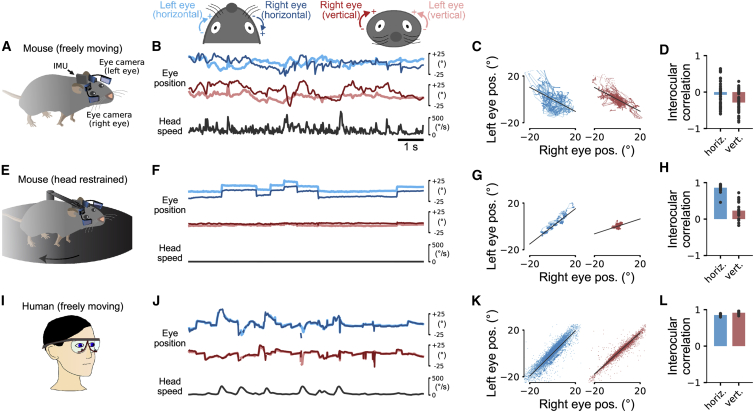

Figure 1.

Eye Movements in Freely Moving Mice, Head-Restrained Mice, and Humans

(A) Tracking eye and head motion in a freely moving mouse. Videos of each eye are recorded using miniature cameras and infrared (IR) mirrors mounted on an implant with a custom holder. Each eye is illuminated by two IR light sources attached to the holder. The mirrors reflect only IR light and allow visible light to pass so that the animal’s vision is not obstructed. Head motion and orientation are measured using an inertial measurement unit (IMU).

(B) Eye coordinate systems used in this study (top). A 10-s example segment showing horizontal and vertical position of both eyes and head speed (magnitude of angular head velocity) in an unrestrained, spontaneously behaving mouse (bottom).

(C) Horizontal (left) and vertical (right) eye positions for the whole recording of the data in (B) (10 min). Eye positions were negatively correlated between the two eyes (solid black line).

(D) On average, interocular correlations were small and negative. Mean ± SEM.

(E) Eye tracking in a head-restrained mouse on a running disk using the same technique as in (A).

(F–H) The same as in (B)–(D) but for a head-restrained mouse. In contrast to the freely moving condition, eye movements mostly occurred in the horizontal direction and were tightly coupled between the eyes.

(I) Tracking eye and head movement in freely moving humans, using goggles with integrated eye-tracking cameras and IR illumination.

(J–L) The same as in (B)–(D) but for humans walking through the environment. In humans, interocular correlations are high for both horizontal and vertical eye positions, indicating strong coupling between the two eyes. Note that the lines in (J) for left and right eye positions closely overlap.

Timescale in (F) and (J) is the same as (B). See also Video S1.

We defined the horizontal eye coordinate system in mice and humans such that clockwise rotations yield more positive positions in each eye (Figure 1B, top diagram). For the left eye, horizontal eye positions closer to the nose have more positive values, while for the right eye, horizontal eye positions farther from the nose are more positive (Figure 1B, top left diagram). In the vertical direction (Figure 1B, top right diagram), eye positions closer to the top of the eye are more positive for both eyes. With this coordinate system, conjugate eye movements (typical in humans) produce positive interocular correlations of horizontal and vertical eye positions, whereas non-conjugate eye movements produce negative ones.
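To make this sign convention concrete, here is a minimal Python sketch (assuming NumPy; the traces are synthetic, not recorded data, and the function name `interocular_correlation` is hypothetical). A shared rotation signal that appears with the same sign in both eyes (conjugate) gives an interocular correlation near +1, whereas vergence toward the nose, which is CW in the left eye but CCW in the right eye, gives a correlation near −1.

```python
import numpy as np


def interocular_correlation(left, right):
    """Pearson correlation between left- and right-eye horizontal positions.

    Both traces are assumed to follow the CW-positive convention: nasal is
    positive for the left eye and temporal is positive for the right eye.
    """
    return float(np.corrcoef(np.asarray(left, float), np.asarray(right, float))[0, 1])


rng = np.random.default_rng(0)
n = 1000
rotation = rng.standard_normal(n)  # synthetic shared rotation signal (deg)

# Conjugate: both eyes rotate CW (or CCW) together, so the signal has the
# same sign in both eyes under this convention.
r_conjugate = interocular_correlation(rotation, rotation + 0.1 * rng.standard_normal(n))

# Vergence: both eyes move toward the nose, i.e., the left eye rotates CW
# (positive) while the right eye rotates CCW (negative).
r_vergence = interocular_correlation(rotation, -rotation + 0.1 * rng.standard_normal(n))

print(f"conjugate r = {r_conjugate:.2f}, vergence r = {r_vergence:.2f}")
```

Real recordings mix both kinds of movement, which is why raw interocular correlations in freely moving mice can end up close to zero.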

Eye movements in mice freely exploring an environment showed large horizontal and vertical displacements of the two eyes (Figure 1B, bottom). On average, these displacements were weakly and negatively correlated across the two eyes (Figures 1C and 1D; r = −0.07 ± 0.05 horizontal; r = −0.29 ± 0.04 vertical; n = 47 recordings in 5 mice, 10 min each). In contrast, when the same mice were head-restrained (i.e., the head was fixed but the mice were free to run on a wheel; Figure 1E), both eyes showed saccade-like eye movements, preferentially in the horizontal direction (Figure 1F), with high interocular correlations (Figures 1G and 1H; r = 0.86 ± 0.02 horizontal and r = 0.23 ± 0.05 vertical eye positions; n = 22 recordings from 3 mice, 10 min each). Thus, eye movement patterns differed substantially between freely moving and head-restrained mice.

For comparison, we also recorded eye movements in humans walking around an environment using commercially available, head-mounted eye-tracking goggles (Figure 1I). Eye positions were strongly correlated between the two eyes (Figures 1J–1L; interocular correlations r = 0.85 ± 0.01 horizontal and r = 0.92 ± 0.01 vertical; n = 10 recordings from 5 subjects, recording time 427 ± 200 s). Thus, eye movements in freely moving mice differed substantially from those in humans, whereas head-restrained mice made saccade-like eye movements that were strongly coupled across the two eyes, similar to those in humans. An obvious difference between the two conditions in mice was that the animals moved their heads extensively during free exploration, which was not possible during head restraint (Figures 1B and 1F). Coupling of the eyes to head motion could therefore explain the observed differences.

Head-Tilt-Related Changes in Eye Position Stabilize Gaze Relative to the Horizontal Plane in Freely Moving Mice

We first analyzed the effect of head tilt on eye position in freely moving mice. Previous results in head-restrained [26, 33] and freely moving mice [23] showed that average eye position systematically varies with the tilt of the head (combined pitch and roll). To confirm this effect in our data, we computed average eye position separately during either pitch or roll of the head (Figures 2A–2D). Head pitch had an effect on both horizontal and vertical eye position (Figures 2A and 2B), whereas roll predominantly affected vertical eye position (Figures 2C and 2D). During upward (positive) head pitch, both eyes turn downward and inward toward the nose (Figure 2B, top). In contrast, during downward (negative) head pitch, the opposite happens: both eyes turn upward and outward toward the ears (Figure 2B, bottom). During positive roll (lowering the right side of the head relative to the left side), the right eye moves upward and the left eye moves downward (Figure 2D, top); the opposite happens during negative head roll (Figure 2D, bottom).
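Averaging eye position separately as a function of head pitch (or roll) amounts to binning samples by head angle and averaging within each bin. The Python sketch below illustrates this under stated assumptions: NumPy, synthetic data generated with hypothetical linear gains (upward pitch moves the left eye down and nasally), 10° bins, and a hypothetical helper `binned_mean`; none of these are values or code from this study.

```python
import numpy as np


def binned_mean(x, y, bin_edges):
    """Mean and SEM of y within bins of x (NaN where a bin has too few samples)."""
    x = np.asarray(x, float)
    y = np.asarray(y, float)
    idx = np.digitize(x, bin_edges) - 1  # bin index; out-of-range -> -1 or n_bins
    n_bins = len(bin_edges) - 1
    means = np.full(n_bins, np.nan)
    sems = np.full(n_bins, np.nan)
    for b in range(n_bins):
        vals = y[idx == b]
        if vals.size >= 1:
            means[b] = vals.mean()
        if vals.size >= 2:
            sems[b] = vals.std(ddof=1) / np.sqrt(vals.size)
    return means, sems


# Synthetic left-eye data with hypothetical gains: upward pitch moves the eye
# down (vertical decreases) and nasally (horizontal increases, CW-positive).
rng = np.random.default_rng(1)
pitch = rng.uniform(-60.0, 60.0, 10_000)  # deg
vertical = -0.5 * pitch + 2.0 * rng.standard_normal(pitch.size)
horizontal = 0.3 * pitch + 2.0 * rng.standard_normal(pitch.size)

edges = np.arange(-60.0, 61.0, 10.0)  # 10-deg pitch bins
centers = 0.5 * (edges[:-1] + edges[1:])
v_mean, v_sem = binned_mean(pitch, vertical, edges)
h_mean, h_sem = binned_mean(pitch, horizontal, edges)
for c, v, h in zip(centers, v_mean, h_mean):
    print(f"pitch {c:+5.0f} deg: vertical {v:+6.1f}, horizontal {h:+6.1f}")
```

In practice, the per-mouse curves would then be averaged across animals to obtain means ± SEM as in Figures 2A and 2C.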

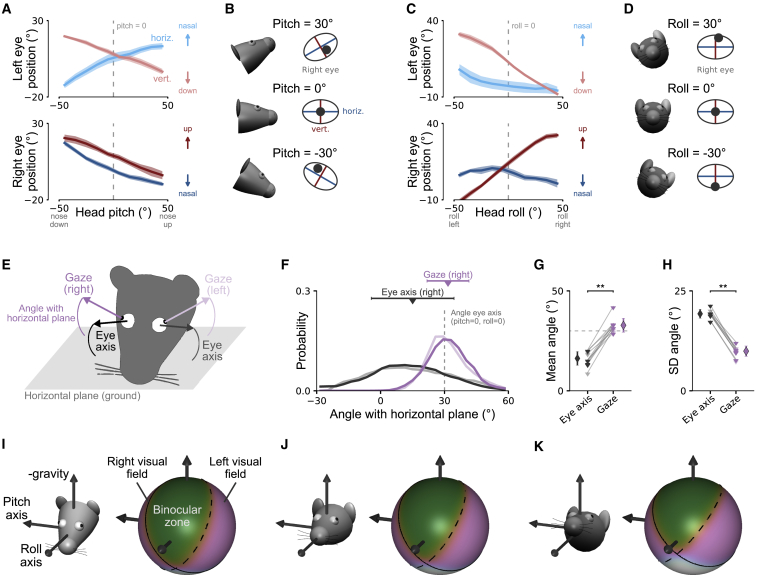

Figure 2.

Head-Tilt-Related Changes in Eye Position Stabilize Gaze Relative to the Horizontal Plane in Freely Moving Mice

(A) Horizontal (blue lines) and vertical eye position (red lines) as a function of head pitch for the left (top) and right eye (bottom) for freely moving mice. Plots show means ± SEM across 5 mice. Arrows indicate directions of eye position change in the eye coordinate system. Dashed vertical line shows pitch = 0°. Same data as in Figure 1D.

(B) Illustration of the systematic dependence of horizontal and vertical eye position on head pitch for different pitch values. For illustration, the intersection of the horizontal and vertical eye axes is aligned with the average eye position for pitch = 0° (dashed line in A).

(C and D) The same as in (A) and (B) but as a function of head roll.

(E) Illustration of eye axes fixed in a head-centered reference frame (black arrow) and gaze axes (center of pupil rotating in head; violet arrow) for left and right eyes. Angles of axes are relative to horizontal plane (ground; gray area).

(F) Distributions of angles of eye axes (black/gray lines) and gaze axes (violet lines) with the horizontal plane for one example mouse. Negative angles indicate an axis pointing downward (toward the ground), whereas positive angles indicate an upward-pointing axis. For reference, the angle of the eye axis for pitch = 0° and roll = 0° is shown (dashed gray line). Triangles and bars indicate circular mean and standard deviation of distributions, respectively. Same color scheme as in (E).

(G) Circular mean angles for left and right eye in 5 mice. Eye axis angle is as shown in (F) (dashed gray line). Same color scheme as in (E). Wilcoxon signed-rank test, **p < 0.01.

(H) Circular standard deviation of angles for the same data. Diamonds represent mean and standard deviation across mice (left and right eye). Same data as in (A) and (C).

(I) Visual field coverage for negative head pitch (−45° ≤ pitch ≤ −15°) for the example mouse in (F). Data are shown in a laboratory reference frame with average head pitch indicated by mouse head (relative to horizontal ground plane; left). Solid and dashed black lines indicate iso-contours (probability of part of visual field in monocular field = 0.5) for the left and right visual field, respectively. Green area, binocular zone where both monocular visual fields overlap; violet areas, monocular visual fields.

(J) The same as in (I) but for approximately level head (−15° ≤ pitch ≤ +15°).

(K) The same as in (I) but for upward pitch (+15° ≤ pitch ≤ +45°).

The observed effect of head tilt on eye position is consistent with a stabilization scheme [23, 26] that influences the position of the eyes with regard to gravity (based on vestibular input) [26, 33]. One potential function of changes in eye position could be alignment of the visual field with the horizontal plane (ground). To directly test this, we calculated the angle between the gaze (i.e., the vector determined by the center of the pupil rotating in the head) and the horizontal plane (Figure 2E). The calculation of gaze angles involved a geometric model of the eye axes in the head because of misalignment of the eye and the head (pitch and roll) axes (Figure S1A; STAR Methods). Gaze angles for both eyes were typically positive (i.e., pointing slightly upward from the horizontal plane) and tightly centered around the angle of the eye axis when the animal kept its head straight (pitch = 0° and roll = 0°; Figures 2F–2H; gaze angle mean = 32.8° ± 3.5°; SD = 9.9° ± 1.3°). In contrast, the angle of the eye axis, defined as the origin of the eye coordinate system fixed in a head-centered reference frame (Figures 2B and 2D), showed a much wider distribution with the axis frequently pointing toward the ground (Figures 2F–2H; eye axis angle mean = 16.2° ± 3.5°; SD = 19.3° ± 1.1°; p = 0.002, gaze versus eye axis mean; p = 0.002, gaze versus eye axis SD; Wilcoxon signed-rank tests, n = 10 [5 mice, left and right eye]).
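The geometric step behind Figure 2E can be sketched as rotating a head-fixed axis into the world frame and taking the arcsine of its vertical component. The Python sketch below is illustrative only: it assumes NumPy, a head frame with x forward, y left, and z up, roll applied before pitch, and a hypothetical left-eye axis direction (60° lateral, 20° up); the actual model of the eye axes in the head is described in the STAR Methods and may differ. It also shows one common definition of the circular mean and circular standard deviation (sqrt(−2 ln R), with R the mean resultant length) for summarizing angle distributions. Computing gaze angles would additionally require rotating the axis by the measured eye-in-head position, which is omitted here.

```python
import numpy as np


def head_to_world(pitch_deg, roll_deg):
    """Rotation matrix from a head-fixed frame (x forward, y left, z up) to the world.

    Assumed conventions (illustrative only): positive pitch raises the nose,
    positive roll lowers the right side of the head, and roll is applied
    before pitch.
    """
    p, r = np.deg2rad(pitch_deg), np.deg2rad(roll_deg)
    pitch = np.array([[np.cos(p), 0.0, -np.sin(p)],
                      [0.0, 1.0, 0.0],
                      [np.sin(p), 0.0, np.cos(p)]])
    roll = np.array([[1.0, 0.0, 0.0],
                     [0.0, np.cos(r), -np.sin(r)],
                     [0.0, np.sin(r), np.cos(r)]])
    return pitch @ roll


def head_vector(azimuth_deg, elevation_deg):
    """Unit vector in the head frame; azimuth is measured from the nose toward the left."""
    az, el = np.deg2rad(azimuth_deg), np.deg2rad(elevation_deg)
    return np.array([np.cos(el) * np.cos(az), np.cos(el) * np.sin(az), np.sin(el)])


def angle_to_ground(v_head, pitch_deg, roll_deg):
    """Signed angle (deg) between a head-fixed axis and the horizontal plane."""
    z = (head_to_world(pitch_deg, roll_deg) @ v_head)[2]
    return float(np.rad2deg(np.arcsin(np.clip(z, -1.0, 1.0))))


def circular_mean_deg(angles_deg):
    """Circular mean (deg): direction of the mean resultant vector."""
    return float(np.rad2deg(np.angle(np.mean(np.exp(1j * np.deg2rad(angles_deg))))))


def circular_std_deg(angles_deg):
    """Circular SD (deg) defined as sqrt(-2 ln R), R = mean resultant length."""
    R = min(1.0, float(np.abs(np.mean(np.exp(1j * np.deg2rad(angles_deg))))))
    return float(np.rad2deg(np.sqrt(max(0.0, -2.0 * np.log(R)))))


# Hypothetical left-eye axis (60 deg lateral, 20 deg up) and synthetic head tilts.
left_eye_axis = head_vector(60.0, 20.0)
rng = np.random.default_rng(2)
pitches = rng.normal(0.0, 20.0, 2000)
rolls = rng.normal(0.0, 10.0, 2000)
axis_angles = [angle_to_ground(left_eye_axis, p, r) for p, r in zip(pitches, rolls)]
print(f"eye-axis angle: mean {circular_mean_deg(axis_angles):.1f} deg, "
      f"SD {circular_std_deg(axis_angles):.1f} deg")
```

Circular rather than linear statistics are used so that angles near ±180° would be averaged correctly; for the narrow distributions here the two give nearly identical values.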

To further illustrate the effect of tilt compensation on visual field stabilization, we mapped visual field coverage for different head pitch values (Figures 2I–2K; STAR Methods). Monocular visual fields of the two eyes and the binocular zone were largely similar across changes in head pitch. This suggests that one function of this head-tilt-related eye-head coupling in the mouse could be stabilization of the visual field relative to the horizontal plane.

Horizontal Eye Movements Not Explained by Head Tilt Are Conjugate across the Two Eyes

Next, we investigated whether eye movements that were not explained by head tilt showed properties of the saccade-like eye movements observed in head-restrained mice. To separate the head-tilt-related component from the component not explained by head tilt, we used the accelerometer signals of the head-mounted IMU to measure head pitch and roll and used these to predict horizontal and vertical eye positions for each eye with nonlinear regression models (Figure 3A; see also [23] and STAR Methods). Most of the variance in eye position could be explained by head pitch and roll (Figure 3B). Model predictions were significantly more accurate for vertical than for horizontal eye positions (r² = 0.86 ± 0.01 vertical; r² = 0.62 ± 0.02 horizontal; p = 1 × 10⁻¹⁶; Wilcoxon signed-rank test; n = 47 recordings in 5 animals, 10 min each).
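The cross-validated explained variance in Figure 3B can be illustrated with a simple stand-in model. The nonlinear regression models used in this study are described in the STAR Methods; the Python sketch below instead fits a cubic polynomial in head pitch and roll by least squares (a swapped-in technique, chosen only for illustration) and evaluates it on held-out contiguous blocks of time, which limits leakage from temporal autocorrelation. NumPy is assumed, the data are synthetic, and the function names are hypothetical.

```python
import numpy as np


def poly_features(pitch_deg, roll_deg, degree=3):
    """Monomials pitch**i * roll**j with i + j <= degree (includes an intercept)."""
    p = np.asarray(pitch_deg, float) / 45.0  # rescale for numerical conditioning
    r = np.asarray(roll_deg, float) / 45.0
    cols = [p**i * r**j for i in range(degree + 1) for j in range(degree + 1 - i)]
    return np.column_stack(cols)


def cv_explained_variance(pitch_deg, roll_deg, eye_deg, n_folds=5, degree=3):
    """Cross-validated explained variance of eye position predicted from head tilt.

    Folds are contiguous blocks in time; each block is predicted by a model
    fitted to the remaining blocks. Returns (r2, out-of-fold predictions).
    """
    eye = np.asarray(eye_deg, float)
    X = poly_features(pitch_deg, roll_deg, degree)
    pred = np.empty_like(eye)
    for test_idx in np.array_split(np.arange(eye.size), n_folds):
        train = np.ones(eye.size, dtype=bool)
        train[test_idx] = False
        coef, *_ = np.linalg.lstsq(X[train], eye[train], rcond=None)
        pred[test_idx] = X[test_idx] @ coef
    r2 = 1.0 - np.var(eye - pred) / np.var(eye)
    return float(r2), pred


# Synthetic data (hypothetical gains): vertical position depends strongly on
# tilt; horizontal position also contains a tilt-independent conjugate part.
rng = np.random.default_rng(3)
n = 5000
pitch = rng.normal(0.0, 20.0, n)
roll = rng.normal(0.0, 10.0, n)
vertical = -0.5 * pitch + 0.3 * roll + 0.002 * pitch**2 + 2.0 * rng.standard_normal(n)
horizontal = 0.3 * pitch + 6.0 * rng.standard_normal(n)

r2_vertical, _ = cv_explained_variance(pitch, roll, vertical)
r2_horizontal, _ = cv_explained_variance(pitch, roll, horizontal)
print(f"r2 vertical = {r2_vertical:.2f}, r2 horizontal = {r2_horizontal:.2f}")
```

The out-of-fold predictions returned by `cv_explained_variance` are what the prediction errors (measured minus predicted position) would be computed from.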

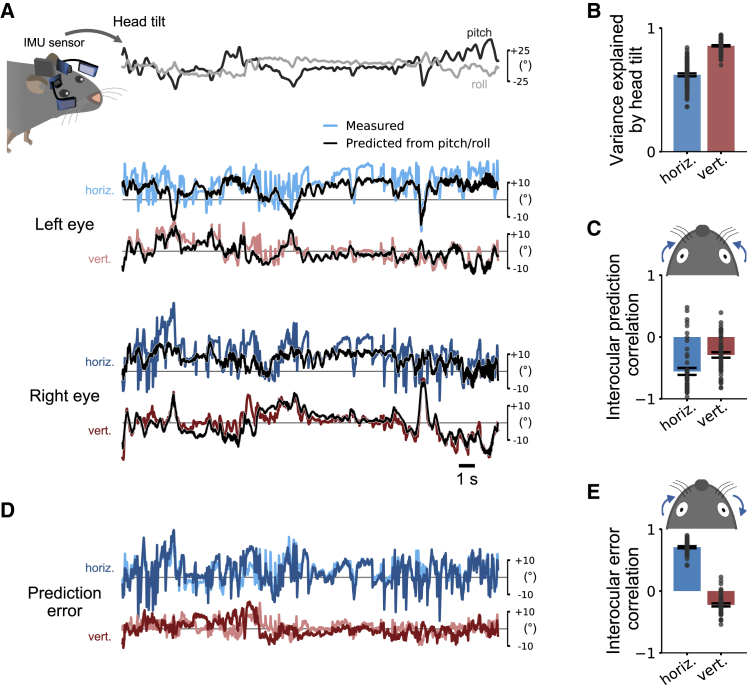

Figure 3.

Horizontal Eye Movements Not Explained by Head Tilt Are Conjugate across the Two Eyes

(A) Top: head tilt was measured using the IMU sensor attached to the animal’s head. Eye positions were measured using the head-mounted camera system. Nonlinear regression models were used to predict horizontal and vertical eye positions from head pitch and roll for each eye. Bottom: measured (colored lines) and predicted (black lines) horizontal and vertical eye positions for both eyes.

(B) Cross-validated explained variance along the horizontal (horiz.) and vertical (vert.) eye axes (n = 47 recordings from 5 mice, 10 min each). Head tilt explained 86% of the variance in vertical but only 62% in horizontal eye position. Recordings for each eye axis pooled across eyes and mice. Mean ± SEM.

(C) Interocular correlation of the eye movements that were predictable by head pitch and roll (i.e., the predictions of independent models for the two eyes as shown in A). Strong negative correlation for horizontal eye movements indicates convergence and divergence across eyes. Blue arrows show horizontal convergence. Mean ± SEM. Same data as in (B).

(D) Prediction errors for the eye position traces in (A) showed strong co-fluctuations in horizontal, but not vertical, eye direction.

(E) Interocular correlation of the eye movements that were not predictable by head pitch and roll (i.e., the prediction errors of independent models for the two eyes as shown in D). There was a strong positive correlation for horizontal eye movements, suggesting that conjugate eye movements occurred during head-free behavior and were not explained by head tilt. Arrows show coupling for left eye rotating in nasal direction. Mean ± SEM. Same data as in (B).

We wondered whether the lower predictability of horizontal eye position by head tilt compared to vertical eye position (Figure 3B) might be due to the inclusion of conjugate eye movements similar to those observed in head-restrained mice. Changes in head pitch have been shown to be associated with convergent horizontal eye movements, i.e., both eyes rotate toward the nasal edge when the head pitches up, and divergent eye movements, i.e., both eyes rotate toward the temporal edge when the head pitches down [23, 25, 26]. As a consequence, horizontal pupil positions will be negatively correlated across the two eyes. In contrast, conjugate eye movements, such as those observed in head-restrained mice, will result in positive interocular correlations: both eyes rotate either clockwise (CW) or counter-clockwise (CCW), i.e., one eye moves toward the nasal edge while the other moves away from it. We therefore reasoned that, if conjugate eye movements occur in freely moving mice, the components of eye position that are not predicted by head tilt should show positive rather than negative interocular correlations.

Indeed, consideration of head tilt succeeded in separating two different types of horizontal eye movement: positions predicted by models based on head pitch and roll were consistent with convergent/divergent horizontal eye movements, i.e., both eyes tended to move together toward the nasal or temporal edge (interocular correlation r = −0.56 ± 0.06; Figure 3C); those not predicted by head tilt (prediction error; Figure 3D) were positively correlated, implying conjugate horizontal eye movements (interocular correlation r = 0.71 ± 0.02; Figure 3E). Thus, conjugate horizontal eye movements, potentially resembling those observed in head-restrained mice, are also part of the natural repertoire in freely moving mice and co-occur with non-conjugate eye movements.
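The logic of this separation can be checked on synthetic data. In the Python sketch below (NumPy assumed; all gains and noise levels are hypothetical), each eye’s horizontal position is the sum of a pitch-driven vergence term with opposite signs in the two eyes and a shared conjugate term. Fitting a separate model per eye (here an in-sample linear fit in pitch, a simplification of the cross-validated nonlinear models used in the study) yields predictions that are negatively correlated across the eyes and residuals that are positively correlated, while the raw traces are only weakly correlated.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 5000
pitch = rng.normal(0.0, 20.0, n)            # synthetic head pitch (deg)
conjugate = 5.0 * rng.standard_normal(n)    # shared CW/CCW rotation (deg)

# CW-positive convention: pitch-driven vergence has opposite signs in the
# two eyes, whereas the conjugate component has the same sign.
left = 0.3 * pitch + conjugate + rng.standard_normal(n)
right = -0.3 * pitch + conjugate + rng.standard_normal(n)


def fit_predict(x, y, degree=1):
    """Least-squares polynomial fit of y on x; returns in-sample predictions."""
    return np.polyval(np.polyfit(x, y, degree), x)


def corr(a, b):
    """Pearson correlation coefficient of two 1-D arrays."""
    return float(np.corrcoef(a, b)[0, 1])


pred_left, pred_right = fit_predict(pitch, left), fit_predict(pitch, right)
r_raw = corr(left, right)
r_predicted = corr(pred_left, pred_right)
r_residual = corr(left - pred_left, right - pred_right)
print(f"raw {r_raw:+.2f}, tilt-predicted {r_predicted:+.2f}, residual {r_residual:+.2f}")
```

The weak raw correlation arises because the negatively correlated vergence term and the positively correlated conjugate term partially cancel.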

Rapid Saccadic Conjugate Horizontal Eye Movements in Freely Moving Mice

We next determined whether the conjugate horizontal eye movements in freely moving mice comprised rapid saccade-like horizontal eye movements similar to those in head-restrained mice (Figures 1E–1H). Viewed on a fast timescale, the two eyes often showed brief, strong co-fluctuations (Figure 4A, top) with velocities frequently exceeding 800°/s (Figure 4A, middle). Saccades were defined as rapid high-velocity eye movements and detected using a speed threshold (including movement in horizontal and vertical eye direction; STAR Methods) [17, 20]. These saccadic eye movements were not only strongly coupled across the two eyes but also conjugate (Figure 4B), similar to saccades in head-restrained animals: in 94% (9,703/10,331) of all saccades detected in both eyes, the two eyes moved in the same direction (CW or CCW). Saccades in freely moving mice were therefore qualitatively similar to those in head-restrained mice.
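Speed-threshold saccade detection and the conjugacy count can be sketched as follows. This Python sketch assumes NumPy; the frame rate (100 Hz), the threshold (300°/s), the requirement that both eyes exceed the threshold, and all function names are hypothetical choices, since the actual detection parameters are given in the STAR Methods. The synthetic trace contains 20 saccades, 2 of which are deliberately non-conjugate.

```python
import numpy as np

FS = 100.0            # hypothetical camera frame rate (Hz)
SPEED_THRESH = 300.0  # hypothetical speed threshold (deg/s)


def eye_speed(h, v, fs=FS):
    """Eye speed (deg/s) combining horizontal and vertical velocity."""
    return np.hypot(np.gradient(h), np.gradient(v)) * fs


def find_runs(mask):
    """(start, stop) index pairs of contiguous True runs; stop is exclusive."""
    edges = np.diff(np.concatenate(([0], mask.astype(int), [0])))
    return list(zip(np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)))


def detect_saccades(hl, vl, hr, vr, fs=FS, thresh=SPEED_THRESH):
    """Saccades = runs of samples in which both eyes exceed the speed threshold.

    Returns a list of (start, stop, dh_left, dh_right), where dh is the
    horizontal displacement across the event (CW positive in each eye).
    """
    mask = (eye_speed(hl, vl, fs) > thresh) & (eye_speed(hr, vr, fs) > thresh)
    n = len(hl)
    events = []
    for start, stop in find_runs(mask):
        before, after = max(start - 1, 0), min(stop, n - 1)
        events.append((start, stop, hl[after] - hl[before], hr[after] - hr[before]))
    return events


def conjugate_fraction(events):
    """Fraction of saccades in which both eyes rotate in the same direction."""
    same = [np.sign(dl) == np.sign(dr) for _, _, dl, dr in events]
    return float(np.mean(same)) if same else float("nan")


# Synthetic traces: slow drift plus noise, with 20 saccades (3-sample ramps).
rng = np.random.default_rng(2)
n = 4400
t = np.arange(n)
drift = 5.0 * np.sin(2 * np.pi * 0.2 * t / FS)
hl = drift + 0.05 * rng.standard_normal(n)
hr = drift + 0.05 * rng.standard_normal(n)
vl = 0.05 * rng.standard_normal(n)
vr = 0.05 * rng.standard_normal(n)
for k, t0 in enumerate(range(200, 4200, 200)):
    amp = rng.uniform(12.0, 20.0) * rng.choice([-1.0, 1.0])
    ramp = np.clip((t - t0) / 3.0, 0.0, 1.0)
    hl += amp * ramp
    hr += (-amp if k in (3, 11) else amp) * ramp  # two non-conjugate events
events = detect_saccades(hl, vl, hr, vr)
print(len(events), conjugate_fraction(events))
```

Because the CW-positive convention is used for both eyes, "same direction" reduces to a sign comparison of the horizontal displacements.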

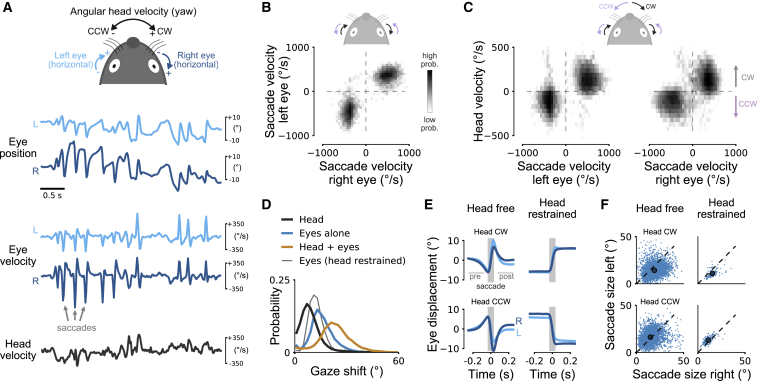

Figure 4.

Rapid Saccadic Conjugate Horizontal Eye Movements in Freely Moving Mice

(A) Schema for representing horizontal head and eye rotation axes (top). Examples of eye position (top traces), angular eye velocity (middle), and angular head velocity (bottom) in a freely moving mouse. High-velocity peaks during saccades coordinated between the two eyes are visible. Left eye is in light blue; right eye is in dark blue. Positive and negative head velocities indicate clockwise (CW) and counterclockwise (CCW) head rotation, respectively.

(B) Log-scaled joint distribution of horizontal saccade velocity for the left and right eyes. 94% of saccades had the same sign for both eyes (10,331 saccades detected in both eyes).

(C) Log-scaled joint distribution of horizontal saccade velocity for the left and right eyes and angular head velocity. Most saccades occurred during head rotations with eye rotations in the same direction as the head; same data as in Figure 1B.

(D) Gaze shift magnitudes during saccades in freely moving mice: eyes and head together in orange, eyes alone in blue, head alone in dark gray, and, for comparison, gaze shift in head-restrained mice in thin gray line.

(E) Average saccade profiles in freely moving (left) and head-restrained (right) mice. Saccades for CW (top) and CCW (bottom) head rotations (shaded gray area) were preceded and followed by a counter eye movement (“pre” and “post”) in freely moving mice, but not in head-restrained mice. Means ± SEM (smaller than line width).

(F) Saccade sizes for CW and CCW head rotations in head-free mice (left). On average, saccades were larger for temporal-to-nasal than for nasal-to-temporal saccades. Dots indicate average saccade sizes. Saccades in head-restrained mice (right) are shown for comparison, with the same asymmetry in average saccade sizes as in head-free mice.

See also Figure S2.

In many species, including humans [34], cats [35, 36], and rabbits [37], horizontal eye movements are often linked to rotations of the head. We therefore tested whether this is also true in mice. During saccades, eyes and head rotated in the same direction (i.e., CW or CCW; Figure 4C), similar to the pattern observed in freely moving humans (Figures S2A and S2B). We compared the gaze shifts produced by combined eye and head movements with those produced by the eyes or the head alone (head rotation obtained by integrating the yaw velocity signal from the head-mounted gyroscope). Eye or head movements alone shifted gaze by 15.8° ± 7.0° and 9.4° ± 6.5°, respectively (Figure 4D). Together, eye and head movements shifted gaze by 23.3° ± 9.6°. Thus, both eye and head motion contributed substantially to saccade-related gaze shifts in freely moving mice, although the eyes contributed more than the head in 83% of all saccades. Saccades in head-restrained mice were considerably smaller (12.3° ± 4.5°; 582 saccades from 3 mice) than gaze shifts or even eye saccades alone in head-free mice (p = 1.9 × 10⁻¹⁶, “Head + eyes” versus “Eyes (head restrained)”; p = 1.8 × 10⁻¹⁶, “Eyes alone” versus “Eyes (head restrained)”; Wilcoxon rank-sum test, Bonferroni correction).
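Because the gyroscope reports angular velocity, head yaw displacement during a saccade has to be obtained by integration before it can be added to the eye displacement. A minimal Python sketch, assuming NumPy, a known sampling rate, and that eye and head angles share the CW-positive sign convention used here; the trapezoidal rule, the function names, and the numbers in the example are illustrative choices, not the study’s pipeline.

```python
import numpy as np


def integrate_yaw(yaw_velocity_dps, fs):
    """Head yaw angle (deg) from gyroscope yaw velocity (deg/s), trapezoidal rule."""
    v = np.asarray(yaw_velocity_dps, float)
    increments = 0.5 * (v[1:] + v[:-1]) / fs
    return np.concatenate(([0.0], np.cumsum(increments)))


def gaze_shift_components(eye_h_deg, yaw_velocity_dps, start, stop, fs):
    """Eye, head, and combined gaze displacement (deg) between samples start and stop.

    Assumes eye and head angles share the same CW-positive sign convention,
    so that gaze (eye in head + head in space) is their sum.
    """
    head = integrate_yaw(yaw_velocity_dps, fs)
    d_eye = float(eye_h_deg[stop] - eye_h_deg[start])
    d_head = float(head[stop] - head[start])
    return d_eye, d_head, d_eye + d_head


# Synthetic example (hypothetical values): the head turns CW at 100 deg/s for
# 0.1 s while the eye makes a 15-deg CW saccade, sampled at 100 Hz.
fs = 100.0
yaw_velocity = np.zeros(40)
yaw_velocity[10:20] = 100.0
eye_h = np.zeros(40)
eye_h[15:] = 15.0
d_eye, d_head, d_gaze = gaze_shift_components(eye_h, yaw_velocity, 5, 25, fs)
print(f"eye {d_eye:.1f} deg + head {d_head:.1f} deg = gaze {d_gaze:.1f} deg")
```

Integration over short windows like this keeps gyroscope drift negligible; over long recordings, integrated yaw accumulates drift and would need separate handling.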

Gaze shifts in many animals are often accompanied by periods of gaze stabilization [7], and we wondered whether a similar pattern could be observed in mice. To test this, we computed average displacements of both eyes aligned to saccade onset (Figure 4E, left). These traces revealed two distinct features. First, saccades were preceded and followed by slower counter movements of the eyes (eye displacement in the direction opposite to the saccade in Figure 4E, left). This suggests that eye movements in freely moving mice not only support gaze shifts but also help to stabilize the image of the surroundings just before and after saccades during head rotation. The absence of counter movements in head-restrained mice (Figure 4E, right) further supports this hypothesis. Second, although the temporal profile of saccades in both eyes was similar, saccades were larger for temporal-to-nasal than for nasal-to-temporal movement (Figures 4E and 4F, left; p = 1.1 × 10⁻¹⁶, CW left versus right eye; p = 6.5 × 10⁻¹⁶, CCW left versus right eye; Wilcoxon signed-rank tests; data pooled across mice; see STAR Methods for results in single mice). Consistent with previous studies [20, 22], we found a similar pattern in head-restrained mice (Figures 4E and 4F, right; p = 5.9 × 10⁻¹⁸, CW left versus right eye; p = 4 × 10⁻¹⁸, CCW left versus right eye; Wilcoxon signed-rank tests), suggesting that saccades in head-restrained and freely moving mice share similar mechanisms. At the same time, this degree of asymmetry in saccade sizes and velocities between the two eyes (Figure S2E) represents a major difference between mice and humans [38]: saccades away from the nose “recenter” the eye (to its average position), whereas saccades toward the nose move the eye beyond the “center” (in the direction of the head turn; Figure S2F).
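Saccade-aligned average traces are event-triggered averages. The Python sketch below assumes NumPy, sample indices of saccade onsets, at least two usable events, and a hypothetical function name; expressing each snippet relative to its value at onset turns absolute position into displacement. The synthetic trace (slow counter-rotation interrupted by saccades) uses made-up numbers.

```python
import numpy as np


def event_triggered_average(trace, onsets, pre, post, baseline=True):
    """Mean and SEM of trace[onset - pre : onset + post] across events.

    Events whose window extends beyond the trace are skipped; at least two
    usable events are needed for the SEM. With baseline=True each snippet is
    expressed relative to its value at onset, so the average shows
    displacement rather than absolute position.
    """
    trace = np.asarray(trace, float)
    snippets = []
    for t0 in onsets:
        if t0 - pre < 0 or t0 + post > trace.size:
            continue
        snip = trace[t0 - pre:t0 + post].copy()
        if baseline:
            snip -= trace[t0]
        snippets.append(snip)
    snippets = np.asarray(snippets)
    mean = snippets.mean(axis=0)
    sem = snippets.std(axis=0, ddof=1) / np.sqrt(snippets.shape[0])
    return mean, sem


# Synthetic eye trace (hypothetical numbers, 100 Hz): a slow counter-rotation
# of -20 deg/s interrupted by +15-deg saccades every 100 samples.
fs = 100.0
t = np.arange(2000)
onsets = np.arange(50, 2000, 100)
eye = -20.0 * t / fs + 15.0 * np.searchsorted(onsets, t, side="right")
mean, sem = event_triggered_average(eye, onsets, pre=20, post=40)
```

In the resulting average, the slope before and after the jump at index `pre` reflects the counter movement, which is the feature compared between freely moving and head-restrained conditions.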

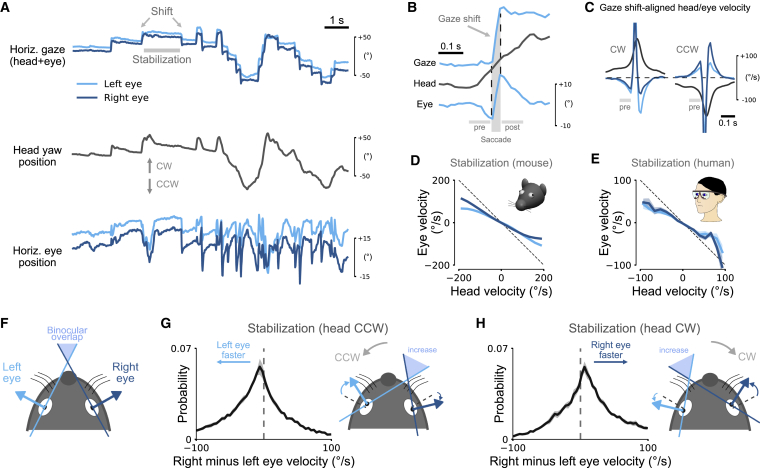

Head and Eyes Contribute to a Saccade and Fixate Gaze Pattern

Gaze in mice therefore involves saccade and fixate periods: during saccades, eye and head rotate together (gaze shift), and these shifts are preceded and followed by gaze stabilization, during which the eyes counter-rotate. We further investigated these patterns during natural behavior by comparing horizontal angular head position, computed by integrating yaw velocity, with horizontal eye positions measured using the head-mounted cameras.

Head position varied smoothly, with large excursions of several hundred degrees in CW and CCW directions (Figure 5A, middle). In contrast, eye positions appeared jerky, with both saccadic and slower movements of smaller amplitude than the head rotations (Figure 5A, bottom). Combining head and eye positions to compute gaze (eye in head + head in space) revealed a step-like gaze pattern consisting of gaze shifts and periods during which the image of the external world was approximately stable (Figures 5A, top, S3A, and S3B). This pattern implies that mice view their surroundings as a sequence of stable images interrupted by rapid gaze shifts (1 to 2 shifts per second; Figure S3), similar to humans, whose stable view is only briefly interrupted by rapid saccadic eye movements.
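How a smooth head trace and a jerky eye trace can sum to a step-like gaze trace is easiest to see in an idealized toy example. The Python sketch below assumes NumPy and hypothetical numbers: the head turns at a constant 60°/s, the eye counter-rotates at exactly −60°/s (perfect compensation, which is more idealized than what mice show) and resets with a 30° saccade every 0.5 s. The function names and the 100°/s gaze-velocity threshold used to count gaze shifts are illustrative assumptions.

```python
import numpy as np


def gaze_trace(head_yaw_deg, eye_h_deg):
    """Gaze in space = head in space + eye in head (both CW-positive, deg)."""
    return np.asarray(head_yaw_deg, float) + np.asarray(eye_h_deg, float)


def gaze_shift_rate(gaze_deg, fs, speed_thresh=100.0):
    """Gaze shifts per second: onsets of runs where |gaze velocity| > threshold."""
    above = np.abs(np.gradient(np.asarray(gaze_deg, float))) * fs > speed_thresh
    n_onsets = int(above[0]) + int(np.count_nonzero(above[1:] & ~above[:-1]))
    return n_onsets / (above.size / fs)


# Idealized toy example (hypothetical numbers, 100 Hz, 10 s).
fs = 100.0
t = np.arange(1000)
head = 60.0 * t / fs                            # smooth head rotation
eye = -60.0 * t / fs + 30.0 * (t // 50)         # counter-rotation + saccades
gaze = gaze_trace(head, eye)                    # staircase: 30-deg steps
print(f"{gaze_shift_rate(gaze, fs):.1f} gaze shifts per second")
```

With partial compensation, gaze would instead drift slowly during each fixation, so the steps would be tilted rather than flat.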

Figure 5.

Head and Eyes Contribute to a “Saccade and Fixate” Gaze Pattern

(A) Horizontal positions of the two eyes (bottom), angular head yaw position (middle), and gaze (head + eye, top) during a 12-s segment in a freely moving mouse, selected to highlight the saccade and fixate pattern. Small-amplitude, jerky eye movements and large-amplitude, smooth head movements combine to produce the saccade and fixate gaze pattern.

(B) Magnified traces for a single gaze shift from the recording in (A). Head movement is accompanied by an initial counter-rotation of the eye before the gaze saccade. Vertical and horizontal gray bars indicate saccade and pre/post periods, respectively.

(C) Gaze shift-aligned head and eye velocity traces for clockwise (CW, left) and counter-clockwise (CCW, right) gaze shifts. 44,396 gaze shifts from 5 mice (22,226 CW and 22,170 CCW); mean ± SEM (smaller than line width).

(D) Relation between horizontal eye and head velocity during stabilization periods (example period marked in A). Eye movements between saccadic gaze shifts counteract head rotations; mean ± SEM (smaller than line width). Dashed line indicates complete offsetting counter-rotation; same data as in Figure 1C.

(E) The same as in (D) but for humans wearing head-mounted eye goggles.

(F) Illustration of monocular left and right visual field (about 180°) and horizontal binocular overlap.

(G) Left: distribution of the difference between right and left eye velocity during stabilizing eye movements (CCW head rotation); mean ± SEM for 5 mice. Right: illustration of the consequence of asymmetric nasal-to-temporal and temporal-to-nasal eye velocity on binocular overlap (an increase relative to the setting shown in F). At the same time, gaze stabilization is enhanced for the left eye during a leftward turn.

(H) The same as in (G) but for CW head rotations. Gaze stabilization is enhanced for the right eye when the mouse turns to the right.

See also Figure S3.

We looked more closely at the precise sequence of head and eye movements during saccade and fixate gaze shifts. Figure 5B shows a close-up of an example gaze shift. Consistent with the average saccade traces (Figure 4E), there were three distinct phases of eye motion: an initial eye movement that counteracted the start of the head movement; the saccadic eye movement responsible for the rapid onset of the gaze shift; and a final “compensatory” phase, in which the eye rotated approximately equal and opposite to the head to ensure gaze stability. This three-phase pattern was consistent across a large number of gaze shifts (22,226 CW and 22,170 CCW; Figure 5C). This uniformity suggests that head, and not eye, movement is the driver of gaze shifts in freely moving mice.
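Gaze shift-aligned averages such as those in Figure 5C can be computed by detecting gaze shifts and averaging head and eye velocity in a window around each onset. The sketch below is an assumption-laden illustration, not the procedure from the STAR Methods: it marks a gaze shift at the first sample of each run in which absolute gaze velocity exceeds a threshold, takes the direction from the sign of the velocity (which sign means CW is an assumed convention), and averages only windows that fit entirely inside the recording. The threshold and window lengths are hypothetical parameters.

```python
def detect_gaze_shifts(gaze_velocity, threshold):
    """Return (onset_index, direction) for each run where |gaze velocity| > threshold.

    direction is +1 for positive and -1 for negative velocity at onset; mapping these
    to CW/CCW depends on the (assumed) sign convention of the recording.
    """
    events = []
    inside_run = False
    for i, v in enumerate(gaze_velocity):
        above = abs(v) > threshold
        if above and not inside_run:
            events.append((i, 1 if v > 0 else -1))
        inside_run = above
    return events


def event_triggered_average(trace, onsets, pre, post):
    """Average trace[onset - pre : onset + post] across onsets whose window is complete."""
    windows = [trace[i - pre:i + post] for i in onsets
               if i - pre >= 0 and i + post <= len(trace)]
    if not windows:
        return []
    return [sum(w[k] for w in windows) / len(windows) for k in range(pre + post)]


# Toy traces (hypothetical values, deg/s)
gaze_vel = [0, 0, 300, 300, 0, 0, -300, 0, 0]
head_vel = [0, 1, 2, 3, 4, 5, 6, 7, 8]
shifts = detect_gaze_shifts(gaze_vel, threshold=100)
positive_onsets = [i for i, d in shifts if d > 0]
avg_head = event_triggered_average(head_vel, [i for i, _ in shifts], pre=1, post=2)
```

Separate averages for the two directions follow by passing only the onsets with `d > 0` or `d < 0`, and the same averaging applies to each eye's velocity trace.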

We turned our attention to the conjugate movements of the eyes during the fixate phase and asked to what extent they counteracted head yaw rotations to stabilize retinal images. To investigate this, we compared angular velocities of eye and head between gaze shifts. The eyes typically moved in the direction opposite to the head (Figure 5D), consistent with the expected effects of the aVOR. This relation was approximately linear, and we quantified the mean absolute deviation (MAD) from full counter-rotation (dashed line in Figure 5D) and the gain (defined here as the negative slope of a line fitted to the data using linear regression). Across the measured velocity range, the MAD was 41.46 ± 7.82°/s with a gain of 0.53 ± 0.06. We repeated the same analysis for our human eye-tracking data and found remarkably similar values (Figure 5E; MAD 25.29 ± 4.74°/s; gain 0.59 ± 0.16), although mice showed a slightly lower degree of image stabilization than humans. Changes in head tilt (Figure 3) had only a small and statistically insignificant impact on these parameters (Figures S3C–S3E), suggesting that head tilt-related visual field stabilization and gaze stabilization during the fixate phase of the saccade and fixate pattern act largely independently of each other in freely moving mice.
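The two stabilization measures defined above can be written down directly. In this minimal sketch, full counter-rotation means eye velocity = −head velocity, so the deviation of each sample is |eye + head|, and the gain is the negated least-squares slope of eye on head velocity. Computing the MAD over individual samples is an assumption; the paper may have averaged within head-velocity bins before computing it, and the function name is hypothetical.

```python
def stabilization_metrics(head_velocity, eye_velocity):
    """Return (MAD, gain) for paired horizontal head and eye velocities (deg/s).

    MAD: mean absolute deviation from full counter-rotation (eye = -head).
    gain: negative slope of the least-squares line fitted to eye ~ head.
    """
    n = len(head_velocity)
    if n != len(eye_velocity) or n < 2:
        raise ValueError("need at least two paired samples")
    # Full counter-rotation means eye + head == 0, so |eye + head| is the deviation.
    mad = sum(abs(e + h) for h, e in zip(head_velocity, eye_velocity)) / n
    mean_h = sum(head_velocity) / n
    mean_e = sum(eye_velocity) / n
    cov = sum((h - mean_h) * (e - mean_e) for h, e in zip(head_velocity, eye_velocity))
    var = sum((h - mean_h) ** 2 for h in head_velocity)
    if var == 0:
        raise ValueError("head velocity must vary to estimate a slope")
    return mad, -cov / var


head = [-100.0, -50.0, 0.0, 50.0, 100.0]
eye = [-0.5 * h for h in head]  # eyes offset only half of the head rotation
mad, gain = stabilization_metrics(head, eye)
```

For this toy input the gain is 0.5 and the MAD is 30°/s; perfect counter-rotation (`eye = [-h for h in head]`) gives a MAD of 0 and a gain of 1.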

Finally, we investigated the effect of the asymmetric nasal-to-temporal and temporal-to-nasal eye movements on horizontal gaze. Mice have a small frontal region of binocular overlap (about 40°–50°; Figure 5F), and we wondered to what extent the two eyes remained aligned with each other during gaze stabilization periods (Figure 5A). Previous work in rats has suggested that alignment can change with head tilt [25]. Our data further suggest that alignment can also change during horizontal gaze shifts, in the absence of changes in head tilt (Figure S3C). To quantify the degree of alignment, we computed the difference in horizontal eye velocity between the right and left eyes for periods during which the head was approximately upright (head pitch and roll magnitude < 10°). If the two eyes were closely aligned, the distribution of velocity differences would be tightly centered on 0°/s. Instead, the distributions for CCW and CW head rotations had a rather wide spread and were shifted and skewed toward the eye that moved temporal to nasal (Figures 5G and 5H; median absolute deviation: 31.42°/s CCW, 29.53°/s CW; median: 14.70°/s CCW, −10.87°/s CW; skewness: −0.53 CCW, 1.09 CW). This suggests that, when mice stabilize gaze during head yaw rotations, the width of the binocular overlap varies, even for horizontal eye movements that are largely conjugate. Thus, in contrast to humans, freely moving mice do not appear to have a stable basis for continuous stereoscopic depth perception using disparity. At the same time, the eye facing the direction of the head turn showed enhanced gaze stabilization during the fixate phase (as illustrated by the angles between the left and right horizontal gaze arrows and the initial gaze direction in Figures 5G and 5H).
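The summary statistics for the binocular velocity differences can be sketched as follows. This is a minimal illustration under stated assumptions: the difference is right minus left eye velocity, samples are kept only when |pitch| and |roll| are both below 10°, the median absolute deviation is unscaled, and skewness is the moment-based (biased) Fisher-Pearson coefficient, which is also the default of `scipy.stats.skew`. Whether the paper used exactly these estimators is not stated, and the function name is hypothetical.

```python
import statistics


def binocular_velocity_difference_stats(right_vel, left_vel, pitch, roll, max_tilt=10.0):
    """Summarize right-minus-left horizontal eye velocity (deg/s) while the head is upright.

    Samples are kept only when |pitch| < max_tilt and |roll| < max_tilt (degrees).
    Skewness is the moment-based (biased) Fisher-Pearson coefficient m3 / m2**1.5.
    """
    diffs = [rv - lv for rv, lv, p, q in zip(right_vel, left_vel, pitch, roll)
             if abs(p) < max_tilt and abs(q) < max_tilt]
    if len(diffs) < 3:
        raise ValueError("too few upright samples")
    median = statistics.median(diffs)
    # Median absolute deviation around the median (unscaled)
    mad = statistics.median([abs(d - median) for d in diffs])
    mean = statistics.mean(diffs)
    m2 = sum((d - mean) ** 2 for d in diffs) / len(diffs)
    m3 = sum((d - mean) ** 3 for d in diffs) / len(diffs)
    skewness = m3 / m2 ** 1.5 if m2 > 0 else 0.0
    return {"n": len(diffs), "median": median, "median_abs_dev": mad, "skewness": skewness}


# Hypothetical samples; the last one is excluded because head pitch is 15 degrees.
stats = binocular_velocity_difference_stats(
    right_vel=[10, 20, 30, 40, 100, 1000], left_vel=[0] * 6,
    pitch=[0, 0, 0, 0, 0, 15], roll=[0] * 6)
```

A distribution of differences centered on 0°/s with a small spread would indicate tightly yoked eyes; a shifted, skewed, or wide distribution indicates that binocular overlap changes during the movement.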

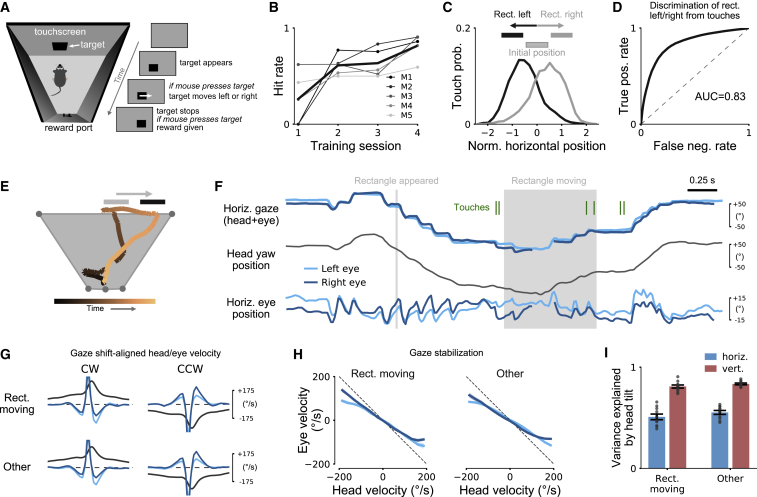

Both Types of Eye-Head Coupling Are Preserved during Visually Guided Behaviors

All of the above measurements in unrestrained mice were made while the mice freely explored a circular or rectangular environment. We wondered whether the observed gaze pattern was preserved when mice interacted with behaviorally salient sensory stimuli or engaged in visually guided behaviors. In humans and other animals, the presence of relevant stimuli can alter gaze shift patterns, for example, during foraging [30]. To test whether this was also true in mice, we performed two different experiments.

In the first experiment, a male mouse with the head-mounted camera system initially explored an empty environment. A second male mouse was then placed in the same environment and social interactions were monitored, allowing us to compare gaze shift patterns before and during the presence of the other mouse (Video S3). There were no discernible differences in any type of eye-head coupling between the two conditions (Figure S4). However, while social interactions may depend on visual input, particularly during approach [39, 40], a wide range of additional, non-visual inputs might be used during social behaviors [41]. For example, the supplemental video (Video S3) shows that the mouse closed its eyes for much of the time that its head was close to the intruder mouse, even when the interaction involved rapid chasing around the box.

Eye camera images (top) together with images of an external camera (bottom) during mouse social interaction. The male mouse with the head-mounted cameras had not encountered the other male mouse (without cameras) before.

We therefore designed a visually guided task that resembles some aspects of typical mouse behavior (detection, approach, and tracking) but, in contrast to natural behaviors, relies exclusively on vision. The visual target was a black rectangle appearing on an LCD display (Figure 6A; Video S4). The mouse could only solve the task by using the visual information on the display, allowing us to isolate the effect of salient visual input. The rectangle randomly appeared at one of two locations and, once touched by the mouse, moved randomly to the left or right. The mouse had to press the rectangle again within 2 s after it stopped moving to receive a drop of soy milk reward at the other end of the box. Touches were detected with an infrared (IR) touchscreen mounted on top of the display as previously described [13, 42, 43].
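The trial rule described above (a second press within 2 s after the rectangle stops moving) can be expressed as a small scoring function. This is a hypothetical sketch, not the task controller used in the study: it assumes that only the horizontal extent of the rectangle at its final position is checked, that boundaries are inclusive, and that touches made while the rectangle is still moving are simply ignored.

```python
RESPONSE_WINDOW_S = 2.0  # response window after the rectangle stops moving (from the text)


def classify_trial(stop_time, final_rect, touches, window=RESPONSE_WINDOW_S):
    """Return "hit" if any touch lands on the final rectangle within `window` s of the stop.

    stop_time: time (s) at which the rectangle stopped moving.
    final_rect: (x_min, x_max) horizontal extent of the rectangle at its final position.
    touches: iterable of (time_s, x) touchscreen events.
    Assumption: touches before stop_time (during movement) do not count.
    """
    x_min, x_max = final_rect
    for t, x in touches:
        if stop_time <= t <= stop_time + window and x_min <= x <= x_max:
            return "hit"
    return "miss"


example = classify_trial(stop_time=1.0, final_rect=(10, 20), touches=[(0.5, 15), (2.5, 15)])
```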

Figure 6.

Both Types of Eye-Head Coupling Are Preserved during Visually Guided Behaviors

(A) Visually guided tracking task. Mice pressed a black rectangle that appeared on an IR touchscreen. The rectangle then moved randomly for different distances to the left or right, and the mouse had to press the rectangle again once the rectangle stopped moving to get a reward at the other end of the box. Mice were first pretrained to press rectangles appearing on the screen with a single touch and then to press the rectangle for a second time after it had shifted to a new position.

(B) Learning of the final version of the task, in which the initial and final positions of the rectangle were non-overlapping. Thin lines show hit rates (fraction correct) for individual mice (n = 5); the thick black line shows the average hit rate across mice. 211.1 ± 198.9 trials per session.

(C) Distribution of touchscreen touches for rectangle moving left (black line) or right (gray line). Touch positions are normalized by rectangle position and width. Extent of rectangles is shown above. Data are for 4,221 trials from 5 mice.

(D) Receiver operating characteristic (ROC) curve for discrimination of left/right rectangle movement based on touchscreen touches for the data shown in (C). The area under the curve was 0.83.

(E) Example trial of mouse performing the task. Overhead view of head position with color indicating trial time as in color bar below. Gray and black rectangles show initial and final rectangle positions, respectively.

(F) Gaze (top), head yaw (middle), and eye (bottom) positions for the trial in (E). Rectangle appearance and movement period are marked by gray areas. Green lines indicate time points when the mouse is touching the rectangle.

(G) Eye-head coupling during gaze shifts was preserved during rectangle tracking compared to a baseline condition (“Other”; without visual stimulus). Means ± SEM (smaller than line width).

(H) Relation between head and eye velocity during gaze-stabilization periods. Means ± SEM (typically smaller than line width).

(I) Cross-validated explained variance (mean ± SEM) of models trained on head pitch/roll for the baseline condition (“Other”; without visual stimulus).
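The discrimination analysis in Figures 6C and 6D can be sketched as follows. As assumptions not stated in the legend, touch positions are normalized as (x − rectangle center) / rectangle width, and the area under the ROC curve is computed with the Mann-Whitney formulation: the probability that a touch from a right-moving trial lies further right than a touch from a left-moving trial, with ties counted as one half. This equals the area under the empirical ROC curve. The function names are hypothetical.

```python
def normalize_touch(x, rect_center, rect_width):
    """Touch position relative to the rectangle center, in units of rectangle width."""
    return (x - rect_center) / rect_width


def roc_auc(scores_pos, scores_neg):
    """Mann-Whitney estimate of the ROC area: P(pos > neg) + 0.5 * P(pos == neg)."""
    if not scores_pos or not scores_neg:
        raise ValueError("both classes need at least one score")
    wins = 0.0
    for p in scores_pos:
        for q in scores_neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


# Hypothetical normalized touches for right-moving and left-moving trials
right_trials = [normalize_touch(x, 100.0, 20.0) for x in (112.0, 125.0, 104.0)]
left_trials = [normalize_touch(x, 100.0, 20.0) for x in (85.0, 96.0, 108.0)]
auc = roc_auc(right_trials, left_trials)
```

The pairwise loop is quadratic in the number of trials, which is still fast for a few thousand trials; a rank-based computation would scale better.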

Food-restricted mice learned within 3–5 days (Figure 6B) to reliably track the object moving left or right (Figures 6C and 6D). Simultaneous tracking of head and eye movements during behavior enabled us to determine whether eye-head coupling changed when animals tracked a relevant visual object (Figures 6E and 6F). The pattern of gaze shifts during the visual tracking task was similar to that in a baseline condition without visual stimulus (“Other”; Figures 6F–6H; permutation tests, all p > 0.13; STAR Methods). There was no significant change in gaze shift frequency between the two conditions (3.0 ± 0.9 gaze shifts per second for “Rectangle moving” versus 3.1 ± 1.1 gaze shifts per second for “Other”; Wilcoxon rank-sum test; p = 0.87). Moreover, the coupling of eye position to changes in head tilt was preserved: computational models trained on the baseline condition predicted horizontal and vertical eye position from head tilt equally well during stimulus tracking (Figure 6I; p = 0.32 horizontal; p = 0.23 vertical; Wilcoxon signed-rank test; n = 10 [5 mice, left and right eye]).
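The train-on-baseline, test-on-tracking comparison of head tilt models can be illustrated with an ordinary least-squares model of eye position on head pitch and roll. The text does not specify the model class, so linear regression is a swapped-in assumption, as is the definition of explained variance as 1 − Var(y − ŷ)/Var(y). The sketch also omits the cross-validation within the baseline condition. All names and data values are hypothetical.

```python
import statistics


def fit_linear(features, targets):
    """Ordinary least squares for targets ~ w0 + w1*f1 + ... via the normal equations."""
    rows = [[1.0, *f] for f in features]
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y]
    aug = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
           + [sum(r[i] * t for r, t in zip(rows, targets))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda row: abs(aug[row][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("design matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def predict(weights, features):
    return [weights[0] + sum(w * x for w, x in zip(weights[1:], f)) for f in features]


def explained_variance(y_true, y_pred):
    """1 - Var(residual) / Var(target)."""
    residual = [t - p for t, p in zip(y_true, y_pred)]
    return 1.0 - statistics.pvariance(residual) / statistics.pvariance(y_true)


def toy_eye_position(pitch, roll):
    # Hypothetical linear eye-head tilt coupling (degrees)
    return 2.0 + 0.5 * pitch - 0.3 * roll


baseline = [(p, r) for p in (-20.0, 0.0, 20.0) for r in (-10.0, 0.0, 10.0)]
tracking = [(5.0, 3.0), (-7.0, 2.0), (12.0, -8.0)]
weights = fit_linear(baseline, [toy_eye_position(p, r) for p, r in baseline])
ev = explained_variance([toy_eye_position(p, r) for p, r in tracking],
                        predict(weights, tracking))
```

If the coupling were altered during tracking, the baseline-trained model would explain less variance on the tracking samples than on held-out baseline samples.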

In sum, both types of eye-head coupling appeared to be maintained when mice were engaged in visually guided behaviors. How the mice moved their heads during the visual tracking task, however, became highly structured and differed substantially from the patterns observed during free exploration (Figures S4D and S4E).

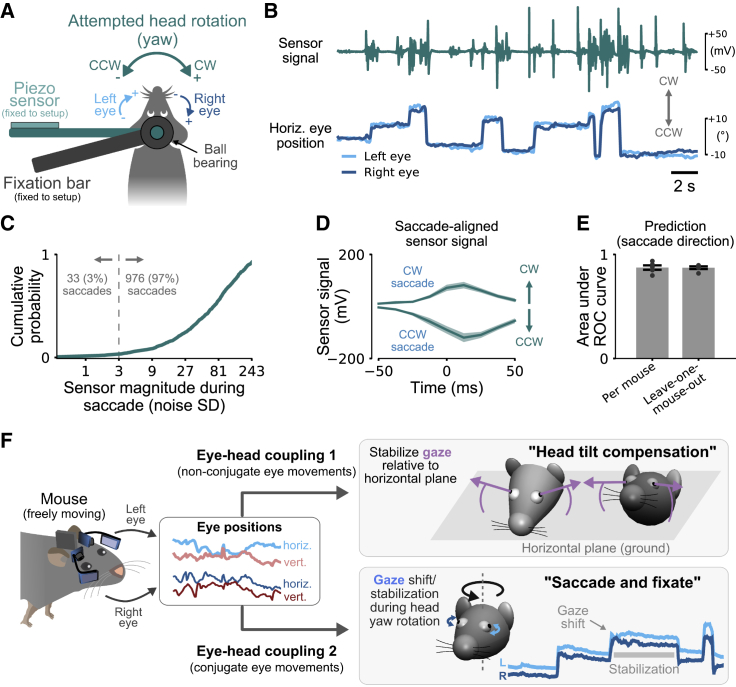

Saccades in Head-Restrained Mice Occur during Head Rotation Attempts

Our data suggest that the main role of saccades in mice is to shift gaze during head rotations. We therefore reasoned that saccades observed in head-restrained mice might be linked to the attempt of mice to rotate their heads. To directly test this, we designed an experiment that allowed us to measure eye movements in head-restrained mice along with head rotation attempts without actual motion of the head (Figure 7A). This excluded movement-related signals, such as visual or vestibular input, that could themselves drive eye movements [31, 44].

Figure 7.

Saccades in Head-Restrained Mice Occur during Head Rotation Attempts

(A) Measurement of attempted head rotations in a head-restrained mouse. A fixation bar (dark gray) is attached to the animal’s head post via a ball bearing. A second bar connected to the animal’s head post is free to rotate about the yaw axis. The end of this bar is attached to a non-elastic piezoelectric sensor that measures attempted head motion (in the absence of actual head rotation). The animal’s body was restrained by two plastic side plates and a cover above the animal (not shown).

(B) Sensor output signal (top) and simultaneously measured horizontal eye positions of both eyes (bottom). Gray arrows indicate CW and CCW directions of sensor signal (head) and eye movements.

(C) Sensor signal magnitude during saccades normalized by the standard deviation (SD) of the sensor noise (measured without mouse attached). For 97% of all 1,009 saccades in five mice, the sensor magnitude was larger than 3 noise standard deviations (dashed gray line).

(D) Saccade-aligned sensor trace for CW and CCW saccade directions. Average sensor deflections were in the same direction as the saccades. Mean ± SEM. Same data as in (C).

(E) Cross-validated prediction performance of saccade directions based on sensor data. Predictions were performed by training a linear classifier using the sensor signals around the saccades (−50 ms to +50 ms; 9 equally spaced time points). “Per mouse,” 5-fold cross-validation for each mouse separately; “Leave-one-mouse-out,” saccade direction of a given mouse is predicted using a classifier trained on the data of the other mice. Mean ± SEM. Same data as in (C).

(F) Schematic summary of the two types of eye-head coupling identified in this study in freely moving mice.

Mice made spontaneous saccades with amplitudes comparable to previous studies [17, 23] (Figure 7B; Video S5; horizontal saccade size 12.2° ± 5.1°; 1,009 saccades in 5 mice; all values for left eye). During 97% of all saccades, the sensor used to measure attempted head motion showed fluctuations clearly discernible from baseline (Figure 7C; sensor signal magnitude ≥ 3 sensor noise SDs). This indicated that head-restrained mice indeed attempted to rotate their heads about the yaw axis during the saccades. The converse, however, did not hold: not every head movement attempt resulted in a saccade (Figure 7B; Video S5).
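The 3-SD criterion above can be sketched as follows. The definition of “sensor signal magnitude” used here is an assumption: the peak absolute deviation of the sensor signal from the mean of a noise recording (made without a mouse attached) within a window around each saccade, divided by the standard deviation of that noise recording. The window itself and the function names are hypothetical.

```python
import statistics


def normalized_sensor_magnitudes(saccade_windows, noise_trace):
    """Peak |sensor - noise mean| in each saccade window, in units of the noise SD.

    saccade_windows: list of sensor-sample lists, one per saccade (window choice assumed).
    noise_trace: sensor samples recorded without a mouse attached.
    """
    noise_mean = statistics.mean(noise_trace)
    noise_sd = statistics.stdev(noise_trace)
    return [max(abs(x - noise_mean) for x in window) / noise_sd for window in saccade_windows]


def fraction_at_or_above(values, threshold=3.0):
    """Fraction of values >= threshold (3 noise SDs in the text)."""
    return sum(v >= threshold for v in values) / len(values)


noise = [-1.0, 1.0, -1.0, 1.0]      # toy noise recording
windows = [[0.0, 5.0], [0.0, 0.5]]  # toy sensor windows around two saccades
mags = normalized_sensor_magnitudes(windows, noise)
frac = fraction_at_or_above(mags)
```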

Eye images (top), extracted horizontal eye positions (middle) and simultaneously recorded head movement signal (bottom) for the 50 s segment shown in Figure 7B.

We wondered whether these head rotation attempts reflected the same saccade and fixate eye-head coupling observed in freely moving mice (Figure 4C). If so, head rotation and horizontal saccade directions should be identical (CW or CCW). To test this, we first aligned sensor signals to either CW or CCW saccades (Figure 7D). Consistent with our findings in freely moving mice, average sensor traces indicated that head rotation attempts were in the same direction as the ocular saccade. To test whether this was also true for single saccades, we trained a linear classifier to predict horizontal saccade direction from the head sensor signal measured around the time of each saccade (9 time points from 50 ms before to 50 ms after the saccade). For each mouse, rotational head motion sensor signals were highly predictive of saccade direction (Figure 7E; area under the ROC curve 0.87 ± 0.02, 5-fold cross-validation; 5 mice). We also tested whether these predictions resulted from patterns that were consistent across mice, as suggested by the eye-head coupling in freely moving mice. Predicting saccade direction for each mouse using a classifier trained on data from all other mice (“Leave-one-mouse-out”) resulted in the same high prediction performance (Figure 7E; area under ROC curve 0.87 ± 0.01), indicating that saccades in head-restrained mice occur during head motion patterns that are similar across mice.
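The leave-one-mouse-out evaluation described above can be sketched with any linear classifier. The paper does not name the classifier, so L2-regularized logistic regression trained by batch gradient descent is a swapped-in choice here. The data are synthetic, with 9 features per saccade standing in for the 9 sensor samples from −50 to +50 ms, and the learning rate, epoch count, penalty, and label coding (1 = CW, 0 = CCW) are hypothetical.

```python
import math
import random


def _sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.1, epochs=300, l2=1e-3):
    """Batch gradient descent on L2-regularized logistic loss; y holds 0/1 labels."""
    d, n = len(X[0]), len(X)
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        gw, gb = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            err = _sigmoid(b + sum(wj * xj for wj, xj in zip(w, xi))) - yi
            gw = [g + err * xj for g, xj in zip(gw, xi)]
            gb += err
        w = [wj - lr * (g / n + l2 * wj) for wj, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b


def decision_values(model, X):
    w, b = model
    return [b + sum(wj * xj for wj, xj in zip(w, xi)) for xi in X]


def roc_auc(scores, labels):
    """Mann-Whitney estimate of the area under the ROC curve (ties count one half)."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    if not pos or not neg:
        raise ValueError("both classes are required")
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


def leave_one_mouse_out(data):
    """data: {mouse_id: (X, y)}. Returns {mouse_id: AUC of a classifier trained on the others}."""
    aucs = {}
    for held_out in data:
        X_train = [x for m, (X, _) in data.items() if m != held_out for x in X]
        y_train = [t for m, (_, y) in data.items() if m != held_out for t in y]
        model = train_logistic(X_train, y_train)
        X_test, y_test = data[held_out]
        aucs[held_out] = roc_auc(decision_values(model, X_test), y_test)
    return aucs


rng = random.Random(0)


def fake_mouse(n=40):
    # Synthetic sensor windows: CW saccades deflect the sensor positively (assumption)
    X, y = [], []
    for i in range(n):
        label = i % 2
        sign = 1.0 if label else -1.0
        X.append([sign + rng.gauss(0.0, 0.3) for _ in range(9)])
        y.append(label)
    return X, y


data = {m: fake_mouse() for m in ("m1", "m2", "m3")}
aucs = leave_one_mouse_out(data)
```

The per-mouse 5-fold variant follows the same pattern, splitting each mouse's saccades into folds instead of holding out whole animals.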

Finally, we tested whether head sensor signals were also predictive of the size of the saccades. We used Bayesian linear regression to predict changes in eye position during saccades (i.e., direction and size) from head sensor traces (Figure S5). Predictions were far above chance level (r2 = 0.33 ± 0.03), even for leave-one-mouse-out cross-validation (r2 = 0.33 ± 0.05).
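Bayesian linear regression with a Gaussian prior has a closed-form posterior. With prior w ~ N(0, I/α) and Gaussian noise of precision β, the posterior mean solves (αI + βXᵀX) w = βXᵀy, which is equivalent to ridge regression with penalty α/β. The minimal sketch below uses fixed α and β, which is an assumption: the text does not state how the hyperparameters were set (scikit-learn's `BayesianRidge`, for example, estimates both precisions from the data). Features and targets are centered so that the intercept is not shrunk, the targets stand in for signed saccadic eye displacement, and all data values are hypothetical.

```python
import statistics


def _solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        x[i] = (M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))) / M[i][i]
    return x


def fit_bayes_linear(X, y, alpha=1.0, beta=1.0):
    """Posterior mean weights for prior w ~ N(0, I/alpha) and noise precision beta."""
    x_mean = [statistics.mean(col) for col in zip(*X)]
    y_mean = statistics.mean(y)
    Xc = [[v - m for v, m in zip(row, x_mean)] for row in X]
    yc = [t - y_mean for t in y]
    d = len(x_mean)
    A = [[beta * sum(r[i] * r[j] for r in Xc) + (alpha if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    rhs = [beta * sum(r[i] * t for r, t in zip(Xc, yc)) for i in range(d)]
    return _solve(A, rhs), x_mean, y_mean


def predict(model, X):
    w, x_mean, y_mean = model
    return [y_mean + sum(wi * (v - m) for wi, v, m in zip(w, row, x_mean)) for row in X]


def r_squared(y_true, y_pred):
    mean = statistics.mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical sensor features (two samples per saccade) and eye displacements
X_train = [[a, b] for a in range(-3, 4) for b in range(-3, 4)]
y_train = [3 * a - 2 * b + 1 for a, b in X_train]
X_test = [[0.5, 1.5], [2.5, -1.0], [-1.5, -2.5]]
y_test = [3 * a - 2 * b + 1 for a, b in X_test]
model = fit_bayes_linear(X_train, y_train, alpha=1e-6, beta=1.0)
r2 = r_squared(y_test, predict(model, X_test))
```

Because the target is the signed change in eye position, a single model captures both saccade direction (sign) and size (magnitude).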

In summary, not only were saccades in head-restrained mice linked to head motion attempts, but the patterns were also strongly predictive of saccade direction and size. This shows that rotational eye-head coupling is maintained during head restraint and suggests that saccades in head-restrained animals might not serve active visual exploration independent of the head.

Discussion

We have shown that eye movements in freely moving mice consist of two dissociable types (Figure 7F; Table S1). By simultaneously tracking eye and head movements in freely behaving mice [23], we find that both types are invariably coupled to the head. The first type, “head tilt compensation,” is consistent with the effects of the otolith-ocular reflex. We show that, in freely moving mice, it serves to approximately maintain the same visual field relative to the horizontal ground plane by systematically changing eye position depending on the tilt of the animal’s head. The second type, the saccade and fixate gaze pattern, is consistent with the effects of the angular vestibulo-ocular reflex and enables gaze stabilization and gaze shifts during reorienting head yaw rotations. Both types of eye-head coupling are consistent across a wide range of behaviors and are maintained unmodified, for example, during visually guided behaviors. This link is so strong that it persists despite attempts to frustrate it: saccadic eye movements in head-restrained mice are associated with attempted head rotation, just as eye movements in freely moving animals are associated with actual head yaw rotation. These results thereby resolve the seemingly contradictory findings of conjugate eye movements in head-restrained mice [17, 20, 45] and complex combinations of conjugate and disconjugate eye movements in freely moving mice [23, 24] by separating eye movements into two types of eye-head coupling, each with its own linkage. In freely behaving mice, our results enable decomposition of the complex eye movement patterns into these two distinct and predictable types, with the saccade and fixate type further decomposed into two phases: gaze shift (saccade) and gaze stabilization (fixate). This helps to clarify which aspects of visual behaviors in humans and non-human primates can be studied in the mouse, which has become a prominent model animal in vision research in recent years. Our data highlight five major aspects of mouse eye movements relevant for this comparison, which we discuss in the next five sections.

Head Tilt Compensation Eye Movements

We found that the average position of the mouse eye strongly depends on the tilt of the head, consistent with previous work in mice [23, 26, 32] and rats [25]. Head tilt stabilization has been reported in the absence of visual input (darkness) [23, 26, 33], suggesting that concomitant changes in eye position are typically driven by gravity-dominated vestibular rather than visual input [26, 33]. A distinct characteristic of this head tilt-related eye-head coupling is that the two eyes are non-conjugate, typically converging or diverging from each other when an animal is pitching or rolling its head, a behavior that is different from humans and non-human primates. As a consequence, the mouse maintains a relatively narrow range of angles between the ground plane and its gaze, much narrower than the range of angles between the ground and the axis of the eye in its head reference frame. This compensatory process has also been observed in head-restrained mice and has been suggested to reflect preferred alignment of specific parts of the visual field with specific locations on the retina [26].

Here, we focused on changes in horizontal and vertical eye position, because they are the main determinants of the visual field [26]. Future studies could extend the results to measurements of eye torsion, although torsion in mice is difficult to estimate non-invasively with video-based methods because there is little retinal structure to provide a reference [26] (but see [25] for eye torsion measurement in rats). For ground-dwelling animals, such as the mouse, the horizon typically divides the world into the ground, where, for example, food and mates are likely to be found, and an upper visual field covering the sky, a region where aerial predators might appear [9]. Indeed, recent evidence suggests that dorsal-ventral gradients in color [46, 47] and contrast [48] sensitivity map onto the ground-observing dorsal and sky-observing ventral retina, respectively. This agrees with our finding that the head tilt compensation system keeps these parts of the retina approximately aligned with the horizontal plane, regardless of other aspects of behavior or the presence of a salient stimulus, as in our visual tracking task. At the same time, due to the arrangement of the eyes in the head, the same eye-head coupling may also help to ensure continuous coverage of a large fraction of the animal’s visual field by the two eyes, as reported for rats [25].

Finally, rodents do not rely solely on vision for sensory exploration of the immediate environment but also on sniffing and whisking [49], both of which are coordinated with rapid rhythmical head movements [50]. The head might therefore act as a common reference frame to coordinate information from the different senses.

Saccade and Fixate Movements

Horizontal gaze involves fixations in which gaze is approximately kept still, interspersed with saccades to rapidly change gaze direction together with the head. This saccade and fixate pattern is observed in many vertebrates, including humans, and enables both stable fixation and rapid gaze shifting with minimal retinal blur [30]. In a classic paper, Walls argued that the origin of eye movements lies in the need to keep an object fixed on the retina, not in the need to scan the surroundings [51]. Indeed, we show that a major aspect of mouse eye movements is the stabilization of retinal stimulation during head rotation. Gaze shifting saccades were typically coupled to head rotations, and mice made about 1 to 2 gaze shifts per second, similar to humans [52]. We considered the possibility that these horizontal gaze movements reflected overt visual attention. The fact that they did not vary in number or property between spontaneous locomotion in an open field and a visual tracking task would seem to militate against this hypothesis. Thus, in the mouse, changes in overt visual attention behavior appear to be mediated by changes in head movements directed to the visual stimulus with eye movements that follow. A difference between mice and primates is therefore that gaze shifts in mice rely on combined head and eye movements, while primates can also shift their gaze in the absence of head movement [53].

Ocular Saccades in Head-Restrained Mice

The possibility that saccades observed in head-restrained mice would normally be associated with head movements during natural behaviors has been suggested previously [20]. Correlations between neck muscle activity and eye movements have been shown in different animals, including rabbits [37], cats [54, 55], and primates [53, 56], while the degree to which the coupling is compulsory appears to vary across species (for example, it appears stronger in cats than in primates) [36]. Our data suggest that the coupling in mice is very strong: even in head-restrained mice, saccades are invariably associated with attempted head motions. These findings have implications for neural recording experiments in head-restrained animals. If eye movements in these preparations are coupled to and preceded by head movements, this must be taken into account when searching for neural correlates of eye movements. Correlations may in fact be with other aspects of movement, for example, neck proprioceptive or muscle activity, or head-movement corollary discharge signals. Experiments demonstrating changes in the timing of neural signals relative to eye movements might simply reflect shifts in the correlation from eye movements to other, head-related movements.

Strength and Consistency of Eye-Head Coupling in the Mouse across Behaviors

We found that eye-head coupling appears to be relatively consistent across behaviors in the mouse. In other species, including cats and humans, the sequence and contributions of head and eye movements during gaze shifts can strongly depend on the task and the nature of the sensory stimulus [36]. We therefore considered the possibility that eye movements in mice are fundamentally different during visually guided tasks compared to baseline conditions. Previous studies demonstrated that freely moving mice rely on vision during a variety of naturalistic visually guided tasks, including social behavior [39, 40], prey detection and capture [10], and threat detection [9]. We compared eye movements during baseline conditions, during social behavior (which also relies on other sensory modalities; see [41] for a recent review), and during a task that could only be solved using vision that captures aspects of visual detection, approach, and tracking in naturalistic mouse behaviors. Our results indicate that, during these three conditions, both types of eye-head coupling are maintained; further, there was no evidence that saccades lead the head during gaze shifts toward a visual target as in humans and non-human primates [7, 30, 36, 53]. This suggests that, in the mouse, these patterns are less flexible and potentially hardwired for a wide range of visual tasks. There may be good reasons why the mouse gaze system shifts both the eyes and head as a general rule. As mentioned above, the mouse already has a large field of view, which is only shifted by a small amount (∼5%) by eye movements alone. Moreover, similar to other animals, like cats [35, 36, 52] and marmosets [57], mice may be able to rely more heavily on head movement to shift gaze, because they can move the head much faster than monkeys and humans with bigger heads that need to overcome much larger inertial forces. 
Finally, relying as much as possible on head movements as opposed to eye-in-head movements at the behavioral level reduces the computational burden on the brain to compute this early-stage egocentric transformation as it seeks to integrate information from the different sensory modalities in the construction of an allocentric representation of the world.

Asymmetry in Horizontal Gaze Movements between the Two Eyes

We discovered a substantial asymmetry in horizontal nasal-temporal and temporal-nasal eye movements in freely moving mice. Thus, similar to head-restrained mice, saccades or stabilizing eye movements along the horizontal eye axis occur simultaneously but with unequal amplitude in the two eyes [20, 22, 58]. Because conjugate binocular eye movements typically co-occur with head rotation, the asymmetry might be related to a selective bias for processing visual information in the eye that is on the side of the animal’s heading direction (for example, causing improved compensation for head rotation in the left eye compared to the right eye during leftward turns) [59]. In any case, without closely yoked eyes, it is not clear how the mouse, a lateral-eyed animal with a narrow binocular field, uses the two eyes to measure distance by disparity during self-motion. Even in the absence of horizontal head and eye movements and the asymmetry noted above, changes in the position of the two eyes as a consequence of head tilt could potentially perturb ocular alignment critical for binocular depth perception [25]. Despite this, there is evidence for neural representations of binocular disparities in mouse visual cortex [60, 61]. Future experiments could investigate the link between the neural representations of binocular disparity, binocular gaze, and visual behaviors in freely moving mice.

Brain Mechanisms of Coordinated Head and Eye Movements

The decomposition of head and eye movements into distinct and independent types has important implications for studying the underlying neural mechanisms. It legitimates the mouse as a useful model to study gaze stabilization and shifting, a prominent feature of visual orienting behavior in humans and other primates.

The vestibular pathways conveying information about head tilt and head rotation are known to be crucial for gaze stabilization (see [62] for a recent review), while the superior colliculus is a key structure for controlling head and eye movements in primates [63, 64] and rodents [21, 65, 66]. Previous experimental and computational work has shown how the vestibular system and superior colliculus are part of an extensive network, including areas in the brainstem and cerebellum, that enables coordinated head and eye movements (e.g., [36, 67, 68, 69]).

A major challenge remains to characterize the precise contributions of these different areas during complex natural behaviors, when animals need to take into account multiple sensory signals, depending on their behavioral goals. Studying the neural signals during natural visual orienting behavior will require carefully designed experiments to disentangle eye- [21, 22] and head motion-related [65] components and to understand their integration with visual, motor, vestibular, and proprioceptive signals [62, 70]. Advanced techniques for detailed tracking of head and eye movement [23, 71] and virtual reality for visual stimulus control in freely behaving mice [72, 73] can now be combined with powerful tools to measure and manipulate neural activity of specific cell types and projection neurons [74]. This provides a unique opportunity to establish the neural circuit computations that underlie the different types of eye-head coupling and their impact on processing of visual input when mice are actively sampling their surroundings.

STAR★Methods

Key Resources Table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Experimental Models: Organisms/Strains | | |
| Mouse: C57BL/6J | Charles River | Strain code: 027 |
| Software and Algorithms | | |
| Python 3.6 | https://www.python.org/ | RRID: SCR_008394 |
| Open Ephys plugin-GUI | http://www.open-ephys.org | https://github.com/open-ephys/plugin-GUI |
| Custom camera software | [23] | https://github.com/arnefmeyer/RPiCameraPlugin |
| Custom IMU software | [23] | https://github.com/arnefmeyer/IMUReaderPlugin |
| DeepLabCut | [75] | https://github.com/AlexEMG/DeepLabCut |
| R Project for Statistical Computing (v3.5.1) | https://www.r-project.org/ | RRID: SCR_001905 |
| rpy2 Python package (v3.1) | https://github.com/rpy2/rpy2 | N/A |
| Data extraction and analysis code | This paper | N/A |
| Other | | |
| Open Ephys acquisition board | Open Ephys | http://www.open-ephys.org/acq-board/ |
| Head-mounted camera system | [23] | https://open-ephys.org/mousecam |
| Custom IMU sensor board (MPU-9250) | Champalimaud Foundation Hardware Platform, Lisbon, Portugal | N/A |
| Miniature connectors for cameras and IMU | Preci-dip | Cat#853-87-008-10-001101; Cat#852-10-008-10-001101 |
| External tracking cameras | Arducam | Cat#B0036 |
| Raspberry Pi 3 Model B+ for behavioral setup | Farnell | Cat#2842228 |
| Teensy 3.2 microcontroller | Farnell | Cat#SC13983 |
| LCD display with integrated touch screen | Nexio | Product#NEX121 |
| IR beam break detector | TT Electronics | Product#OPB815WZ |
| Pinch valve | NResearch | Product#161P011 |
| Pinch valve driver | NResearch | Product#CDS-V01 |
| Ball bearing for torque measurement | NSK | Product#608ZZ |
| Piezo for torque measurement | RS Components | Cat#724-3162 |
| Tobii Pro Glasses 2 (human eye tracking) | Tobii | https://www.tobiipro.com/product-listing/tobii-pro-glasses-2/ |

Resource Availability

Lead Contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Arne F. Meyer (a1.meyer@donders.ru.nl).

Materials Availability

This study did not generate new unique reagents.

Data and Code Availability

Code for camera and IMU data acquisition and plugins for controlling the camera (https://github.com/arnefmeyer/RPiCameraPlugin) and IMU (https://github.com/arnefmeyer/IMUReaderPlugin) have been made freely available. Instructions for construction of the eye and head tracking system are publicly available, together with code for extraction of head pitch and roll from accelerometer signals [23]. Pupil tracking code and example data have been made available at https://github.com/arnefmeyer/meyer-et-al_currbiol_2020.

Experimental Model and Subject Details

Experiments were performed on six male C57BL/6J mice (Charles River). After surgical implantation (see Surgical Procedures), mice were individually housed on a 12-h reversed light-dark cycle (lights off at 12.00 noon).

For the behavioral object tracking experiments, mice had free access to water, but were food deprived to maintain at least 85 percent of their free-feeding body weight (typically 2-3 g of standard food pellets per animal per day). During the other experiments, water and food were available ad libitum. All experiments were performed in healthy mice that had not been used for any previous procedures. All experimental procedures were carried out in accordance with a UK Home Office Project License approved under the United Kingdom Animals (Scientific Procedures) Act of 1986.

We also collected eye and head tracking data in 5 human subjects (2 females and 3 males, aged 26–51 years). All subjects gave written consent, and the study was approved by the local ethics committee of the Department of Psychology at the University of Cambridge.

Method Details

Surgical procedures

Mice aged 44–49 days were anaesthetized with 1%–2% isoflurane in oxygen and received an analgesic injection (Carprofen, 5 mg/kg IP). Ophthalmic ointment (Alcon, UK) was applied to the eyes, and sterile saline (0.1 ml) was injected subcutaneously as needed to maintain hydration. A circular piece of scalp was removed and the underlying skull was cleaned and dried. A custom machined aluminum head-plate was cemented onto the skull using dental adhesive (Superbond C&B, Sun Medical, Japan). Three miniature female connectors (853-87-008-10-001101, Preci-Dip, Switzerland) were fixed to the implant with dental adhesive to enable stable connection of two cameras and an inertial measurement unit (IMU) sensor during experimental sessions. The positions and angles of the two eye tracking cameras and IR mirrors were adjusted using a stereotaxic instrument (Model 963, Kopf Instruments, USA) to align the view of each camera with the eye axis. Additionally, the pitch and roll axes of the IMU sensor were aligned to coincide with the plane spanned by the horizontal eye axes. Mice were allowed to recover from surgery for at least five days and were handled before the experiments began.

Eye and head tracking in mice

The custom head-mounted eye and head tracking system has been described in detail previously [23]. Briefly, we used commercially available camera modules (1937, Adafruit, USA; infrared filter removed), one for each eye. Each camera was inserted into a custom 3D printed camera holder that contained a 21G cannula (Coopers Needle Works, UK) to position and hold a 7 mm square IR mirror (Calflex-X NIR-Blocking Filter, Optics Balzers, Germany) and two IR LEDs (VSMB2943GX01, Vishay, USA) to illuminate the camera’s field of view. The camera holder was attached to the connectors on the animal’s head-plate using a miniature connector (852-10-008-10-001101, Preci-Dip, Switzerland). The mirror position was adjusted during surgery (see Surgical Procedures) and fixed permanently using a thin layer of strong epoxy resin (Araldite Rapid, Araldite, UK) after verifying correct positioning in the head-restrained awake mouse. Camera data were acquired using single-board computers (Raspberry Pi 3 model B, Raspberry Pi Foundation, UK), one for each eye camera, and controlled using a custom plugin [23] for the open-ephys recording system (http://www.open-ephys.org) [76]. For all recordings, camera images were 640 × 480 pixels per frame at 60 Hz.

Head rotation and head tilt (pitch and roll) were measured using a calibrated IMU sensor (MPU-9250, InvenSense, USA) mounted on a custom miniature circuit board with an integrated lightweight cable (Champalimaud Foundation Hardware Platform, Lisbon, Portugal). The total weight of the assembly was less than 0.5 g (including the suspended part of the cable). Sensor data were acquired at 190 Hz using a microcontroller (Teensy 3.2, PJRC, USA) and recorded along with the camera data using a custom open-ephys plugin (see Data and Code Availability). Precise synchronization of IMU and camera data was ensured by hardware trigger signals that were generated by the microcontroller and recorded by the open-ephys recording system. The delay of the IMU system was measured by comparing accelerometer signals recorded with the IMU to those of an analog accelerometer (ADXL335, Analog Devices, USA) recorded directly with the recording system, in a separate set of experiments not included in the analysis. The delay was constant (5 ms) with minimal jitter (< 1 ms) and was compensated for prior to the analysis. Head pitch and roll were extracted from accelerometer signals as described previously [23].
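As a rough illustration of the two post-processing steps above, the sketch below shifts IMU timestamps by the measured constant delay of 5 ms and estimates static head pitch and roll from the gravity component of the accelerometer signal. The axis convention (x forward, y left, z up) and the arctangent tilt formulas are generic assumptions chosen for illustration; they are not necessarily the extraction procedure described in [23].

```python
import numpy as np

# Constant IMU delay measured against an analog accelerometer (5 ms, from the text).
IMU_DELAY_S = 0.005


def compensate_delay(timestamps_s, delay_s=IMU_DELAY_S):
    """Shift IMU sample times earlier by the known, constant acquisition delay."""
    return np.asarray(timestamps_s, dtype=float) - delay_s


def tilt_from_accelerometer(acc):
    """Estimate head pitch and roll (degrees) from accelerometer samples.

    acc: array of shape (n_samples, 3) with columns (ax, ay, az), assumed to be
    dominated by gravity. Assumed sensor axes (illustrative only): x forward,
    y left, z up, so a level head reads approximately (0, 0, +1 g).
    """
    acc = np.asarray(acc, dtype=float)
    ax, ay, az = acc[:, 0], acc[:, 1], acc[:, 2]
    # Positive pitch = nose up under the assumed axes.
    pitch = np.degrees(np.arctan2(ax, np.hypot(ay, az)))
    # Positive roll = right-hand rotation about the forward x axis (left side up).
    roll = np.degrees(np.arctan2(ay, az))
    return pitch, roll
```

Because these formulas assume that gravity dominates the accelerometer signal, they become less reliable during fast head accelerations.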

All experiments were conducted in a custom double-walled, sound-shielded anechoic chamber [23]. Animals were accustomed to handling and gentle restraint over two to three days before they were head-restrained and placed on a custom circular running disk (20 cm diameter, mounted on a rotary encoder). After animals were head-restrained, the camera holders and the IMU sensor were connected to the miniature connectors on the animal’s head-plate (see Surgical Procedures). Power to the infrared light-emitting diodes attached to the camera holders for eye illumination was provided by the IMU sensor.

Extraction of pupil positions from camera images

For each eye, we tracked the position of the pupil, defined as its center, together with the nasal and temporal eye corners. Tracking of the eye corners allowed us to automatically align the horizontal eye axis, even in the presence of potential camera image movement (which typically occurs in less than 1% of all frames, as shown previously [23]), and to exclude eye blinks. First, about 50–100 randomly selected frames for both eyes (out of typically 70,000–210,000 frames in total) were labeled manually for each recording day. The labeled data were used to train a deep convolutional network via transfer learning using freely available code (https://github.com/AlexEMG/DeepLabCut). The structure and training of the network have been described in detail elsewhere [75]. The trained network was then used to extract pupil position and eye corners from all video frames independently for each eye. The network predicts the probability that a labeled part, e.g., the pupil center, is located at a particular pixel. We used the pixel location with the maximum probability for each labeled part and included in the analysis only parts with probability . For our freely moving mouse experiments, this resulted in successful tracking of all labeled parts in 95% (1711098/1804120) of frames for the left eye and 96% (1725879/1805361) of frames for the right eye. Video S1 shows examples of tracked pupil center and eye corners for both eyes in a freely moving or head-restrained mouse.

Video S1. Eye movements measured using two head-mounted cameras in the same mouse when it was freely moving or head-restrained. No stimuli or visual feedback were provided during the head-restrained recording. Dots indicate tracked locations of the pupil center (blue), nasal eye corner (orange), and temporal eye corner (green).
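The peak-picking and probability-threshold step described above can be sketched as follows, assuming that the network output for each labeled part is available as a 2-D score map and that per-frame peak probabilities are stored as an (n_frames, n_parts) array. The helper names are hypothetical, and the threshold is left as a required argument because its value is not reproduced here. This is a generic illustration, not the DeepLabCut API.

```python
import numpy as np


def peak_location(scoremap):
    """Row, column and probability of the most likely pixel in a 2-D score map."""
    scoremap = np.asarray(scoremap, dtype=float)
    row, col = np.unravel_index(np.argmax(scoremap), scoremap.shape)
    return int(row), int(col), float(scoremap[row, col])


def valid_frames(likelihood, threshold):
    """Boolean mask of frames in which every labeled part exceeds the threshold.

    likelihood: array (n_frames, n_parts), e.g. columns for pupil center,
    nasal corner, and temporal corner. threshold: caller-supplied minimum
    probability (hypothetical parameter; the study's value is not given here).
    """
    likelihood = np.asarray(likelihood, dtype=float)
    return np.all(likelihood > threshold, axis=1)


def tracking_rate(likelihood, threshold):
    """Number of fully tracked frames, total frames, and their ratio."""
    mask = valid_frames(likelihood, threshold)
    return int(mask.sum()), int(mask.size), float(mask.mean())
```

Applied to the counts reported above, the left-eye fraction is 1711098/1804120 ≈ 0.948 and the right-eye fraction is 1725879/1805361 ≈ 0.956.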

The horizontal eye axis was defined along the line connecting the nasal and temporal eye corners; the vertical eye axis was orthogonal to this line. The origin of the eye coordinate system (Figures 2B, 2D, and 2E) was defined as the midpoint between the nasal and temporal eye corners. Pixel coordinates in the 2D video plane were converted to angular eye positions using a model-based approach developed for the C57BL/6J mouse line used in this study [45].
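A minimal sketch of the corner-aligned eye coordinate system, in pixels, before conversion to angles. It assumes per-frame pixel coordinates for the pupil center and both eye corners. The direction of the horizontal axis (temporal to nasal) and the sign of the vertical axis are illustrative choices made here, and the model-based conversion to angular positions [45] is not reproduced.

```python
import numpy as np


def pupil_in_eye_frame(pupil, nasal, temporal):
    """Express pupil positions in a corner-aligned eye coordinate frame (pixels).

    All inputs: arrays (n_frames, 2) of image (x, y) pixel coordinates.
    Origin: midpoint between the nasal and temporal corners.
    Horizontal axis: unit vector from temporal to nasal corner (assumed direction).
    Vertical axis: horizontal axis rotated by +90 degrees in the image plane.
    """
    pupil, nasal, temporal = (np.asarray(a, dtype=float) for a in (pupil, nasal, temporal))
    origin = 0.5 * (nasal + temporal)
    h = nasal - temporal
    h = h / np.linalg.norm(h, axis=1, keepdims=True)
    v = np.stack([-h[:, 1], h[:, 0]], axis=1)  # h rotated by +90 degrees
    d = pupil - origin
    # Project the pupil offset onto the two eye axes.
    return np.stack([np.sum(d * h, axis=1), np.sum(d * v, axis=1)], axis=1)
```

Because the frame is rebuilt from the corners on every frame, a small shift or rotation of the camera image moves the corners and the pupil together and leaves the output unchanged. Note that image y typically points downward, so the sign of the vertical coordinate depends on camera orientation.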

For the analysis, extracted eye position traces were smoothed using a 3-point Gaussian window with coefficients (0.072, 0.855, 0.072). Eye velocity was computed from the smoothed eye position traces. For analyses that involved comparing the positions of the two eyes (Figures 1D, 1H, 1L, 3C, and 3E), eye position traces were first mapped onto a common, uniform time base (sampling frequency 60 Hz) using linear interpolation. The same approach was used to align eye data with head pitch and roll or head angular velocity traces.
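The smoothing, velocity, and resampling steps can be sketched with numpy as below. Padding the edges by repeating the first and last samples and using `np.gradient` for the derivative are assumptions made for this sketch; the text does not specify how edges or the derivative were handled.

```python
import numpy as np

# 3-point Gaussian smoothing window quoted in the text (coefficients sum to 0.999).
KERNEL = np.array([0.072, 0.855, 0.072])


def smooth(x):
    """Smooth a 1-D eye-position trace; edges are padded by repeating end samples."""
    padded = np.pad(np.asarray(x, dtype=float), 1, mode="edge")
    return np.convolve(padded, KERNEL, mode="valid")  # same length as x


def velocity(x_smooth, t):
    """Angular velocity (deg/s) from a smoothed position trace (deg) and times (s)."""
    return np.gradient(x_smooth, t)


def common_timebase(t_a, t_b, fs=60.0):
    """Uniform time base (default 60 Hz) spanning the overlap of two recordings."""
    start = max(t_a[0], t_b[0])
    stop = min(t_a[-1], t_b[-1])
    return np.arange(start, stop, 1.0 / fs)


def resample(t, x, t_new):
    """Linearly interpolate samples x(t) onto t_new (t must be increasing)."""
    return np.interp(t_new, t, x)
```

Restricting the common time base to the overlap of both recordings avoids extrapolating either eye's trace; invalid (e.g., blink) samples would need to be removed before interpolation.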

For the experiments in freely moving humans, eye position and head motion were recorded using a commercially available head-mounted eye tracker with an integrated IMU sensor (Tobii Pro Glasses 2, Tobii Pro, Sweden). Subjects were instructed to freely explore a hallway and to walk back and forth multiple times. Data were acquired at 100 Hz and analyzed offline. Before each recording, the eye tracker was calibrated using the supplied calibration routine. Angular horizontal and vertical eye positions were computed from the output of the tracker’s 3D eye model as the angle between the gaze vector and the forward-pointing vector of the wearable eye tracker. Care was taken to ensure that the eye tracker was aligned with the frontal plane, such that the origin of the angular eye coordinate system (in tracker coordinates) coincided with the eyes pointing straight ahead (in head coordinates). Eye coordinates were defined analogously to those in mice (Figure 1B): horizontal eye position increases for clockwise eye movements (rotation axis pointing downward), whereas vertical eye position increases for upward eye movements.
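For illustration, horizontal and vertical eye angles can be derived from a 3D gaze direction as below. The head-fixed frame (x right, y up, z straight ahead) is a hypothetical convention chosen for this sketch and should be checked against the tracker's documented coordinate system. The signs follow the convention in the text: horizontal is positive for clockwise rotation seen from above (i.e., rightward), and vertical is positive upward.

```python
import numpy as np


def gaze_to_angles(gaze):
    """Horizontal and vertical eye position (degrees) from 3-D gaze directions.

    gaze: array (n_samples, 3) in a head-fixed frame assumed here to be
    x right, y up, z straight ahead (hypothetical; check the tracker's axes).
    Vectors need not be normalized.
    """
    g = np.asarray(gaze, dtype=float)
    x, y, z = g[:, 0], g[:, 1], g[:, 2]
    # Rightward gaze (clockwise seen from above) gives positive horizontal angles.
    horizontal = np.degrees(np.arctan2(x, z))
    # Upward gaze gives positive vertical angles.
    vertical = np.degrees(np.arctan2(y, np.hypot(x, z)))
    return horizontal, vertical
```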

Calculation of gaze angle relative to the horizontal plane

Calculation of the angle between the gaze and the horizontal plane required transformations between reference frames. The first was the eye-centered reference frame (“eye in orbit”), represented by horizontal and vertical angular eye coordinates relative to the eye axis, i.e., the origin of the eye coordinate system (see Extraction of Pupil Positions from Camera Images). The position of the eye axis was defined in a second, head-centered reference frame (“eye in head”). We used an existing geometric model of the eye axes in the head for C57BL/6J mice [20], with the position of the left or right eye axes at azimuth (relative to midline) and elevation (relative to the animal’s head plate). The third reference frame was the position of the head in the laboratory environment. Because the angle between the gaze and the horizontal plane is invariant under rotations about the vertical axis (perpendicular to the horizontal plane) and under translations parallel to the horizontal plane, the transformation from a head-centered to a ground-centered reference frame was determined by head pitch and roll alone (“head in space”; measured using the head-mounted accelerometer).

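The transformation between the different reference frames was implemented by multiplication of 3D rotation matrices in the order defined above (“eye in orbit” first, “head in space” last; see also Figure S1A):

$$R = R_{\text{head}}(\rho, \phi) \cdot R_{\text{axis}}(\alpha, \beta) \cdot R_{\text{eye}}(\theta_h, \theta_v) \qquad \text{(Equation 1)}$$

where $\cdot$ denotes matrix multiplication. Each of these matrices is composed of elemental rotations about the axes of a Cartesian coordinate system (using the right-hand rule), with axes defined in Figure S1A:

$$R_x(\gamma) = \begin{pmatrix} 1 & 0 & 0 \\ 0 & \cos\gamma & -\sin\gamma \\ 0 & \sin\gamma & \cos\gamma \end{pmatrix} \qquad \text{(Equation 2)}$$

$$R_y(\gamma) = \begin{pmatrix} \cos\gamma & 0 & \sin\gamma \\ 0 & 1 & 0 \\ -\sin\gamma & 0 & \cos\gamma \end{pmatrix} \qquad \text{(Equation 3)}$$

$$R_z(\gamma) = \begin{pmatrix} \cos\gamma & -\sin\gamma & 0 \\ \sin\gamma & \cos\gamma & 0 \\ 0 & 0 & 1 \end{pmatrix} \qquad \text{(Equation 4)}$$

where $\theta_h$ and $\theta_v$ denote the current horizontal and vertical angular eye position, $\alpha$ and $\beta$ the azimuth and elevation of the eye axis in the animal’s head, $\rho$ and $\phi$ the current head roll and pitch angles, respectively, and $\gamma$ a generic rotation angle. Thus, the gaze vector, defined as the eye position in space, was computed as

$$\mathbf{g} = R \cdot \mathbf{e} \qquad \text{(Equation 5)}$$

where $\mathbf{e}$ is a unit vector along the eye axis. The gaze angle was defined as the angle between $\mathbf{g}$ and the horizontal plane (spanned by the pitch and roll axes in Figure S1A). Similarly, the angle between the eye axis and the horizontal plane was computed by setting $\theta_h = 0$ and $\theta_v = 0$.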
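The chain of rotations in Equations 1–5 can be sketched in numpy as below. The mapping of angles to axes is an assumption made for illustration (x = roll axis pointing forward, y = pitch axis pointing left, z = vertical; horizontal angles about z, vertical angles and pitch about y, roll about x), as are the sign choices. These make positive vertical eye position, elevation, and pitch tilt the line of sight upward, and positive horizontal eye position clockwise when seen from above. The gaze angle is taken as the elevation of the unit gaze vector, arcsin(g_z).

```python
import numpy as np


def rot_x(a):
    """Right-hand elemental rotation about x by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])


def rot_y(a):
    """Right-hand elemental rotation about y by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def rot_z(a):
    """Right-hand elemental rotation about z by angle a (radians)."""
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])


def gaze_angle(theta_h, theta_v, azimuth, elevation, roll, pitch):
    """Angle (degrees) between the gaze vector and the horizontal plane.

    All inputs in degrees. Axis assignment and signs are illustrative
    assumptions: x forward (roll axis), y left (pitch axis), z up.
    """
    th, tv, az, el, ro, pt = np.radians([theta_h, theta_v, azimuth, elevation, roll, pitch])
    R_eye = rot_z(-th) @ rot_y(-tv)    # eye in orbit (clockwise-positive horizontal)
    R_axis = rot_z(az) @ rot_y(-el)    # eye axis in head
    R_head = rot_x(ro) @ rot_y(-pt)    # head in space (tilt only; nose-up pitch > 0)
    e = np.array([1.0, 0.0, 0.0])      # unit vector along the eye axis
    g = R_head @ R_axis @ R_eye @ e    # gaze vector, applied right to left
    return float(np.degrees(np.arcsin(np.clip(g[2], -1.0, 1.0))))
```

For example, a 10° upward eye rotation combined with a 10° nose-down head pitch yields a gaze angle of about 0°, illustrating how opposing eye and head tilt can keep gaze aligned with the horizontal plane.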

The resulting transformation was implemented in Python using the numpy and scipy packages [77]. Video S2 shows an example of the resulting gaze, eye axis, and head tilt vectors together with eye camera frames. Head or eye orientations that could not be uniquely described by the rotation matrices (“gimbal lock”) are extremely rare in mice [65] and were excluded from the analysis. We also tested whether the choice of the specific geometric eye axis model affected our results by using a different model ( azimuth, elevation; [26]). This model gave quantitatively similar results (Figures S1B–S1D).

Video S2. Left: eye images of the two head-mounted cameras (top) and an external camera showing the mouse exploring a rectangular environment (bottom). Right: orientation of the head (thick green arrow), eye axes (thin white arrows), and gaze vectors (purple lines) relative to the horizontal plane for the mouse shown in the video. Gaze vectors are kept at a similar angle to the horizontal plane while the mouse goes through a wide range of head tilts. Blue and red arrows indicate the horizontal and vertical axes of the eye coordinate system, respectively.

Calculation of monocular visual fields and binocular zone

To calculate the monocular visual field for each eye, we assumed that each monocular visual field subtends approximately of visual angle [60] and that the distance between the eye centers is 1 cm. Consequently, the part of visual space covered by each eye lies on one side of a plane going through the center of that eye, with normal vector given by the gaze vector in Equation 5. A grid of points equally spaced in spherical coordinates (spacing ) was used to determine visual field coverage, with the animal placed at the center of the sphere. For each tracked eye position, we determined whether a grid point was “visible” to the eye, i.e., whether the vector from the eye center to the grid point had a positive projection onto the plane’s normal vector. The resulting grid counts were normalized by the frame count, such that a point in visual space covered on every frame has a value of 1 and a point covered on half of the frames has a value of 0.5. This procedure was performed for each eye separately. The iso-contours for the left and right visual fields in Figures 2I–2K were computed using the find_contours function in the scikit-image Python package (version 0.16.1; contour level 0.5). For the visual field covered by the two eyes, including the binocular zone, the normalized grid counts for both eyes were summed (spherical plots in Figures 2I–2K).
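A sketch of the half-space visibility test and frame normalization described above. The grid spacing and sphere radius are hypothetical parameters (the spacing used in the study is not reproduced here). The eye centers are placed ±0.5 cm along an assumed left-pointing y axis to match the 1 cm separation, and the gaze vectors are assumed to be unit vectors from Equation 5 expressed in the same frame (x forward, y left, z up).

```python
import numpy as np

EYE_SEPARATION_CM = 1.0  # distance between eye centers, from the text
LEFT_EYE = np.array([0.0, EYE_SEPARATION_CM / 2, 0.0])    # assumed: y points left
RIGHT_EYE = np.array([0.0, -EYE_SEPARATION_CM / 2, 0.0])


def sphere_grid(spacing_deg, radius_cm):
    """Points on a sphere centred on the animal, equally spaced in azimuth/elevation.

    spacing_deg and radius_cm are hypothetical parameters of this sketch.
    Returns azimuths (deg), elevations (deg) and points of shape (n_az, n_el, 3).
    """
    az = np.arange(-180.0, 180.0, spacing_deg)
    el = np.arange(-90.0, 90.0 + 1e-9, spacing_deg)
    AZ, EL = np.meshgrid(np.radians(az), np.radians(el), indexing="ij")
    pts = radius_cm * np.stack(
        [np.cos(EL) * np.cos(AZ), np.cos(EL) * np.sin(AZ), np.sin(EL)], axis=-1)
    return az, el, pts


def coverage(gaze, eye_center, pts):
    """Fraction of frames in which each grid point lies in the eye's visible half-space.

    gaze: (n_frames, 3) gaze vectors; eye_center: (3,); pts: (n_az, n_el, 3).
    A point counts as visible on a frame if its offset from the eye center has a
    positive projection onto that frame's gaze vector (the plane normal).
    """
    rel = pts - np.asarray(eye_center, dtype=float)                     # (n_az, n_el, 3)
    proj = np.einsum("fk,aek->fae", np.asarray(gaze, dtype=float), rel)  # (n_frames, n_az, n_el)
    return (proj > 0).mean(axis=0)                                      # values in [0, 1]
```

Summing the left- and right-eye maps, e.g. `coverage(g_left, LEFT_EYE, pts) + coverage(g_right, RIGHT_EYE, pts)`, gives values between 0 and 2, where 2 marks points seen by both eyes on every frame. Iso-contours at level 0.5 could then be extracted from each single-eye map, for example with `skimage.measure.find_contours`. For long recordings, the frames-by-grid projection array should be processed in chunks to limit memory use.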

Prediction of eye position using head tilt