Abstract

High-precision indoor localization plays a vital role in various places. In recent years, visual inertial odometry (VIO) system has achieved outstanding progress in the field of indoor localization. However, it is easily affected by poor lighting and featureless environments. For this problem, we propose an indoor localization algorithm based on VIO system and three-dimensional (3D) map matching. The 3D map matching is to add height matching on the basis of previous two-dimensional (2D) matching so that the algorithm has more universal applicability. Firstly, the conditional random field model is established. Secondly, an indoor three-dimensional digital map is used as a priori information. Thirdly, the pose and position information output by the VIO system are used as the observation information of the conditional random field (CRF). Finally, the optimal states sequence is obtained and employed as the feedback information to correct the trajectory of VIO system. Experimental results show that our algorithm can effectively improve the positioning accuracy of VIO system in the indoor area of poor lighting and featureless.

Keywords: visual inertial odometry, indoor localization, conditional random field, map matching, three-dimensional

1. Introduction

With the development of society, high-precision positioning has become indispensable in many places. In the unobstructed outdoor environment, we can use the high-precision Global Positioning System (GPS) [1,2,3] for positioning. However, in the indoor environment, the GPS positioning performance is poor due to the GPS signals being blocked and reflected by the building. Therefore, traditional indoor positioning mostly uses inertial measurement units [4,5], Wi-Fi [6,7], ultrawideband (UWB) [8], and Bluetooth [9,10] for positioning. However, unlike GPS, none of these methods have been shown to be most suitable for the majority of applications. When inertial sensors are used for positioning, error correction algorithms must be used to assist the positioning, such as zero velocity update algorithm [11] and gyro drift measurement technology [12]. Nevertheless, inertial sensors will still produce large cumulative error after a period of time. The Wi-Fi positioning and UWB technology need to set up the corresponding infrastructure indoors, and the signal is also very susceptible to interference, resulting in large deviations in positioning. For Bluetooth indoor positioning technology, Bluetooth Low Energy (BLE) technology has been used in recent years. In a study [9], the author introduces a wireless BLE Single Input Multiple Output (SIMO) system. The algorithm simplifies the deployment procedures in corridors. In another study [10], a Pedestrian Dead Reckoning (PDR) based indoor positioning system with iBeacon calibrations is proposed. The system can be implemented in resource-limited embedded device. However, BLE technology requires multiple iBeacon devices to be installed indoors, and the Bluetooth signal is also vulnerable to interference leading to inaccurate positioning. In addition, the later device maintenance is also a problem.

Visual inertial odometry is a well-known technology that uses cameras and inertial measurement unit (IMU) to estimate the position and motion trajectory of pedestrians or robots. It combines the information of vision and IMU to obtain higher accuracy location information than single vision and single IMU [13]. It can be used on micro aerial vehicles to achieve positioning and navigation [14]. It can also be used to implement the robot simultaneous localization and mapping (SLAM) function [15]. The use of VIO technology for indoor positioning has attracted the attention of many scholars in recent years [16,17,18]. However, one of the drawbacks of VIO indoor positioning is that in the place where indoor poor lighting and featureless environments, enough feature points cannot be extracted from the images taken by camera. Therefore, effective matching between two images cannot be carried out and accurate positioning cannot be obtained. Study [19] proposed to extract the point and line features in the image. Compared with point features, lines provide significantly more geometrical structure information on the environment. This algorithm has a certain effect, but in an environment with few texture features, such as white wall, the effect is not obvious. So, we considered using other algorithm to correct the positioning accuracy of VIO in these areas.

Many researchers have been paying attention to the application of map matching algorithm in indoor localization. The map matching algorithm proposed in [20,21] adopts particle filtering algorithm. The advantage of particle filtering algorithm is that it can be used in nonlinear non-Gaussian systems. But the disadvantage is also obvious, the computational workload of particle filtering is related to the number of particles. Once the number of particles increases, the computational workload will also increase rapidly, which will bring great computational burden. Studies [22,23] adopt a map matching algorithm based on hidden Markov model (HMM), but this method assumes that the observations are independent of each other, which is not suitable for our system. Recently, with the research of conditional random field model algorithm, it has made significant progress in map matching. In 2014, Xiao and Zhuoling from the University of Oxford applied the conditional random field (CRF) model to map matching to improve the inertial localization [24]. Study [25] has proved that compared with other algorithms, the CRF model can capture a variety of constraints relationships between observations, with more universal applicability and higher accuracy. In 2016, Study [26] proposes an offline map matching algorithm designed for indoor localization systems based on CRF. The algorithm uses loose coupling between the localization system and the proposed map matching technique. In 2017, we combined Microelectromechanical system (MEMS) with 2D map matching algorithm for indoor positioning and achieved good results [27].

Therefore, we propose an indoor positioning algorithm that combines 3D map matching based on a CRF and the VIO system, and uses the optimal matching states sequence to correct the VIO trajectory. First of all, we need to obtain the high-precision indoor map and digitize it to get a usable digital map, and then pre-process the states of the map. The coordinates of each point are vectorized and stored by using evenly spaced points to cover the whole indoor area. In order to match the indoor height information, we also set the states on the stairs. Finally, we remove the states of those unreachable areas based on the structure of the indoor map, which can reduce mismatches and the trajectories pass through walls. Our main contributions as follows:

An algorithm based on 3D map matching and VIO system is proposed for indoor localization.

The conditional random field model of 3D map matching algorithm is established.

According to the different walking characteristics of pedestrian in plane and stair stage, different state definition methods are adopted.

In order to verify the reliability of our algorithm, we have carried out multiple experiments and compared the performance of our system with only single VIO system. Experiments show that our algorithm can achieve more accurate and robust positioning effects than a single VIO system in indoor environment with insufficient light and texture.

The remainder of this paper is organized as follows. In Section 2, we will introduce the system overview. In Section 3, we will introduce the map matching algorithm based on CRF model. Section 4 gives the details and results of our experiment. Finally, Section 5 is the summary of the full text and the prospect of the future work.

2. System Overview

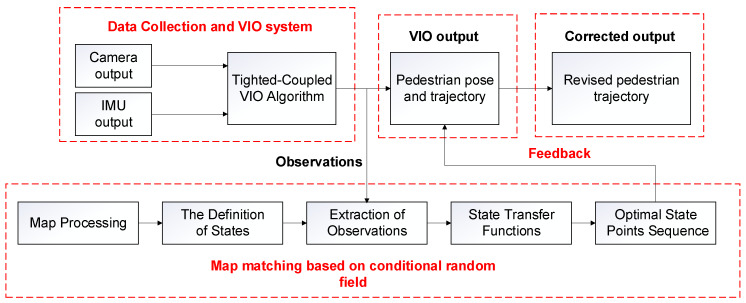

The overall framework of our approach is shown in Figure 1. The system is mainly divided into the following three parts: the data collection and VIO system, map matching based on conditional random field model and the feedback. The first part is the data collection and VIO system, we use IMU and camera to collect data. The IMU consists of a three-axis gyroscope and an accelerometer, which can collect acceleration and angular velocity information at each moment. The camera can capture the image information at each time. Then we transmit the collected data to the computer for the tightly-coupled VIO algorithm, which can obtain the pedestrian trajectory and pose at each moment. The second part is the map matching based on conditional random field, which uses the conditional random field to fuse the pose of the pedestrian at each moment obtained by the VIO system with the data of the map matching system to obtain an accurate matching trajectory. The third part is the feedback part. By taking the matching result as the feedback, the pose of the pedestrian at each moment of the VIO system are corrected to obtain the final corrected position trajectory.

Figure 1.

The framework of the proposed algorithm.

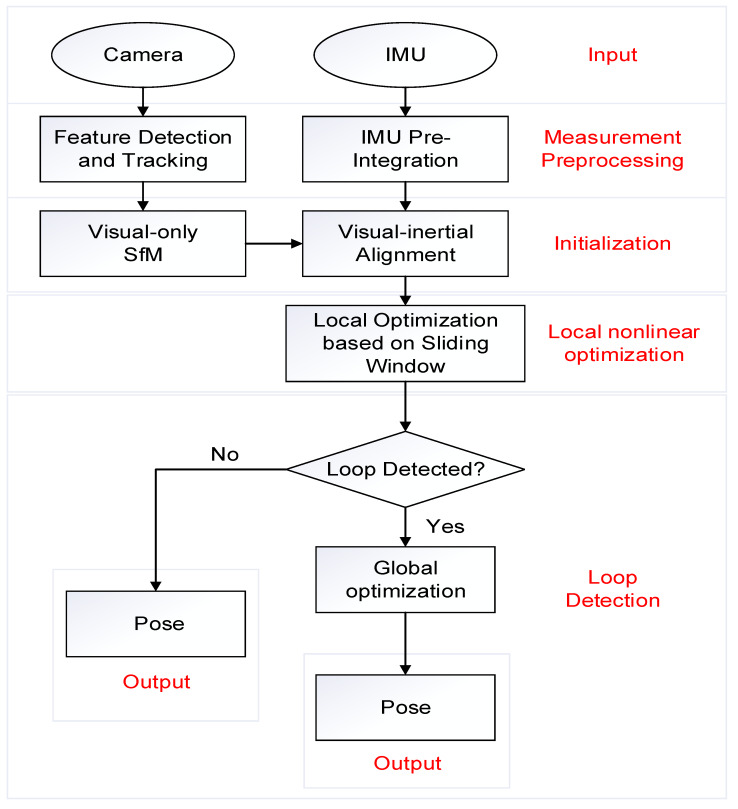

The VIO system we use is the vins-mono open source system proposed by Tong Qin et al. in 2017 [28]. It is the state-of-the-art tightly-coupled VIO system based on nonlinear optimization. The whole approach mainly includes four parts: IMU pre-integration, initialization, local nonlinear optimization, and loop detection. Figure 2 is the VIO algorithm flow chart. Firstly, the camera and IMU are used to collect data, extract feature points from the pictures taken by the camera and track the feature points, and perform IMU pre-integration on the data collected by the IMU. Secondly, Structure from Motion (SfM) algorithm is used to perform visual pose estimation, and then it is aligned with IMU pre-integration to solve the initialization parameters. After that, local nonlinear optimization is performed to optimize the state vectors in a sliding window. Finally, the loop detection will be judged. If there is no loop occurs, the pose will be output directly, otherwise, the global optimization will be performed, and then the pose will be output.

Figure 2.

The flow chart of the visual inertial odometry (VIO) algorithm.

3. Indoor 3D Map Matching Algorithm Based on CRF

We establish conditional random field model of indoor 3D map, and then estimate the optimal states sequence through observation information and model parameters. The optimal states sequence is used to correct the VIO trajectory.

3.1. Linear-Chain Conditional Random Field

The conditional random field algorithm is a conditional probability distribution model of output random variables when given a set of input random variables [29]. A special form of CRF is the random field model of linear chain. In this model, the output variables are modeled as a sequence, when given the input , the probability of the output has the following form:

| (1) |

where is local state transfer function and is corresponding weight. is the normalization factor and its expression is:

| (2) |

We can use and to represent the weight vector and the global state transfer function vector.

| (3) |

Therefore, Equation (1) can be rewritten as:

| (4) |

where:

| (5) |

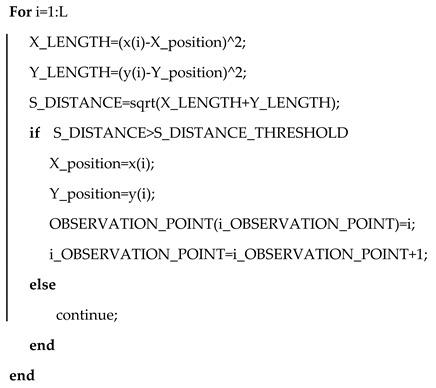

3.2. Map Pre-Processing

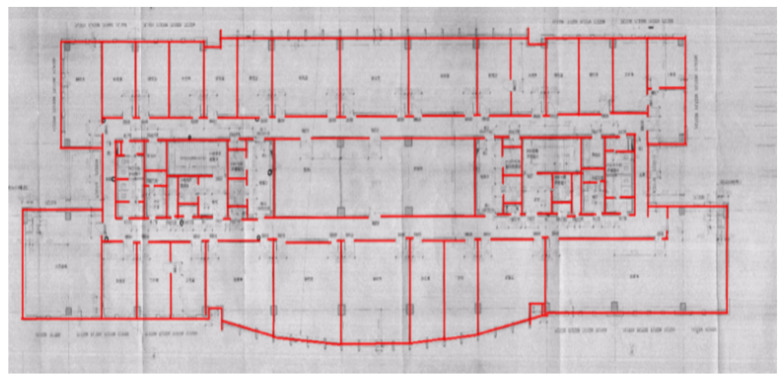

We need to process the indoor map before the experiment to obtain the discrete state of each moment. The indoor map is image format, which is not suitable for direct processing, so the software MapInfo is first used to convert the indoor maps from image format into digital format. The digital information of the indoor structure is obtained according to the calibration, projection, and description, and the digital information format is the endpoint coordinates of each line segment. The experiment site and map used are science building of Beijing University of Technology. The processed digital map is shown in Figure 3.

Figure 3.

Digital format map.

3.3. The Definition of States

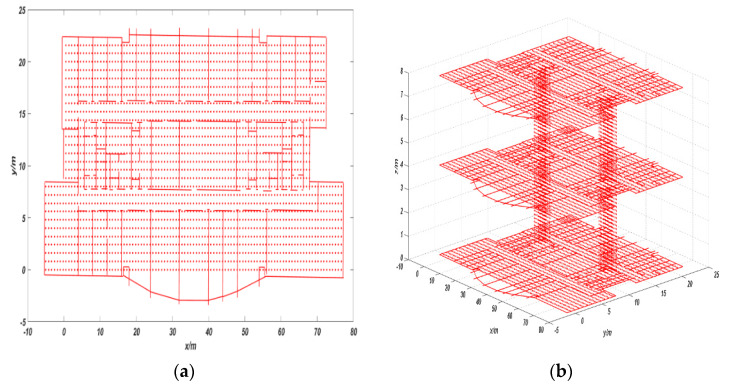

We use equal-sized square cells to divide the map and the vertices of each cell are the possible hidden states. The side length of each square cell is set as 0.8 m. At the same time, in order to achieve height matching, we use cuboids of equal size to divide the stair area between two floors. The height of cuboids is 0.16 m, which is equal to the height of a step, and the length and width of cuboids are 0.8 m, which is equal to the side length of the cell used before. The vertices of each cuboid are the possible hidden states and each state stores the corresponding coordinate information in the map coordinate system.

In order to improve the matching accuracy, we removed the states of those unreachable areas according to the specific situation of the indoor map. For example, if the vertices of the cells and cuboids in the map are too close to indoor impassable areas (such as walls, pillars, etc.), we will delete this state. Meanwhile, there are some constraints between the propagation of states. When the edge between two cell vertices passes through a previously unreachable area, the state cannot transfer directly. The processed map with states is shown in Figure 4, where, the red points represent the states, and the red lines represent the indoor structure.

Figure 4.

(a) Two-dimensional map with states and (b) three-dimensional map with states.

3.4. Extraction of Observations

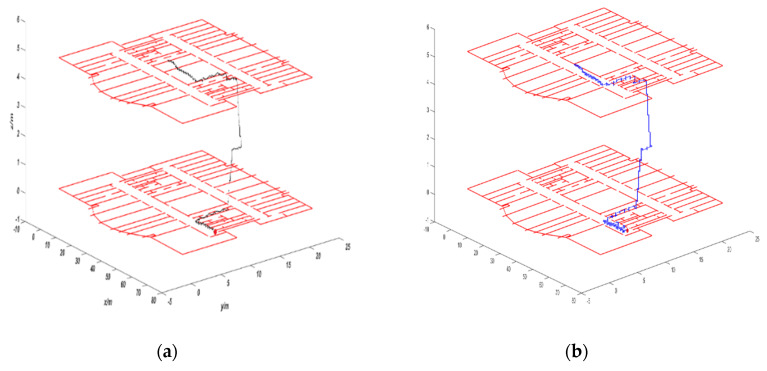

Pedestrians have different state characteristics when walking on the plane and walking on the stairs. Therefore, the extraction of observations should be divided into different cases. In the corridor 2D plane phase, we decided to extract the VIO output position information at the current moment as the observations when the pedestrian walking distance reached a fixed threshold. The threshold is set at 0.8 m, which is equal to the minimum distance between the two states. Its process is shown in Algorithm 1.

| Algorithm 1. Extraction of Observations. |

|

In the stairs phase, the change in height is involved. At the same time, when pedestrian walk on the stairs, each step will stay on the steps for a period of time, during which the speed is 0. So, we set that when the pedestrian’s height value changes at 0.16 m and the speed is 0, we extract the VIO output at the current moment as the observation. 0.16 m is the height of the cuboid set before, which is also the height of a step.

| (6) |

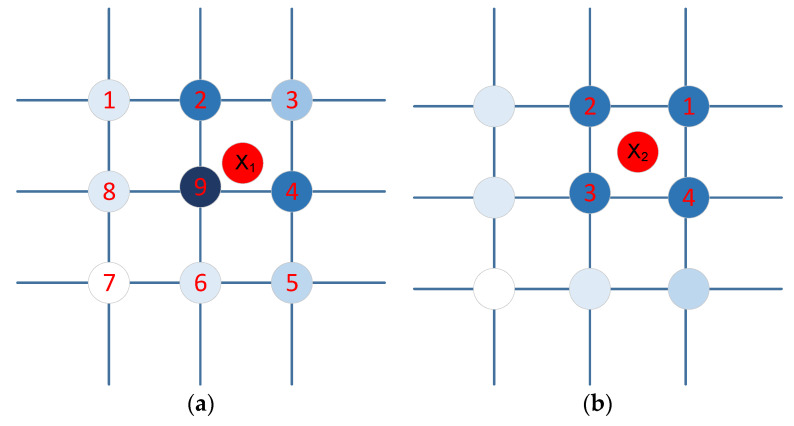

3.5. Establishment of State Transfer Function

The state transfer functions represent the support degree of the observation for the transition between consecutive states. When an observation matches a state point, an obvious rule is that the smaller the distance to this state point, the greater the probability of this observation at this state point, and vice versa. As shown in Figure 5a, the red point is an observation value output by VIO, and the remaining points are the state points on the map. During the process of matching the state points, the dark color state points in the figure below indicate the points with higher matching probability, while the light color state points in the figure below indicate the points with a low matching probability. Another factor that affects matching is the heading information. As shown in Figure 5b, the four state points (1,2,3,4) around the observation value are the same distance from . At this time, we can use the information of heading information. The specific method is shown in Table 1.

Figure 5.

Match selection between observation values and state points: (a) based on distance and (b) based on heading.

Table 1.

Match selection based on heading.

| Range of Heading | States Choice |

|---|---|

| 1 | |

| 2 | |

| 3 | |

| 4 |

Therefore, the state transfer function can be established based on distance and orientation. In the plane phase, it is the matching of two-dimensional state points. We use the distance and orientation between the pose information output by the VIO system at the current moment and the pose information output by the VIO system at the previous moment as two features to establish state transfer functions.

The first state transfer function is established based on the position information of the VIO system at the current moment and the previous moment:

| (7) |

where is the Euclidean distance between two consecutive observations, is the Euclidean distance between two consecutive state points, and is the variance of the distance in the observation data.

In the second state transfer function, we select the orientation information between the position output by the current VIO system and the position by the previous VIO system as a feature to establish state transfer function:

| (8) |

where is the orientation of two consecutive observations, is the orientation between two consecutive state points, and is the variance of the orientation in the observation data.

In the stair phase, we need to add the one-dimensional state points matching for the height. Each step of the pedestrian will correspond to a real height value. We can use the error between the height value matched by each step of the pedestrian and the height value output by VIO as a height matched feature to establish the state transfer functions:

| (9) |

where is the height value of best match at time t, is the height value of the VIO output at time t, is the height error variance, and is the average of all the errors before time t.

Therefore, the Equation (4) can be written as:

| (10) |

3.6. Optimal State Points Sequence

For the solution of Equation (10), we adopt the Viterbi algorithm [30]. When the observation value sequence is given, Viterbi algorithm can dynamically solve the optimal state points sequence that is most likely to produce the currently given observation value sequence. The solution steps of Viterbi algorithm are as follows:

(1) Initialization: Compute the non-normalized probability of the first position for all states, where m is the number of states.

| (11) |

(2) Recursion: Iterate through each state from front to back, find the maximum value of the non-normalized probability of each state at position , and record the state sequence label with the highest probability.

| (12) |

| (13) |

(3) When , we obtain the maximum value of the non-normalized probability and the terminal of the optimal state points sequence.

| (14) |

| (15) |

(4) Calculate the final state points output sequence.

| (16) |

(5) Finally, the optimal sequence of state points is as follows:

| (17) |

4. Experimental Results and Analysis

4.1. Implementation Details

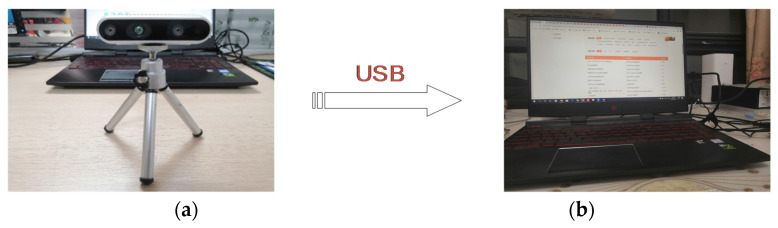

In order to verify the performance of our algorithm, we carried out experimental verification at the Science Building of Beijing University of Technology. The hardware we use includes an Intel RealSense D435i camera and a computer. The diagram is shown in Figure 6. The camera is internally integrated with a Bosch BMI055 six-axis inertial sensor, so it can be used to collect image information and IMU information. The online temporal calibration method is used to synchronize the time between the camera and the IMU to ensure that the data collected by the two can correspond [31]. The frame rate of the camera is set to 30 fps, and the frequency of the IMU is set to 650 Hz. The camera transmits data to the computer via a Universal Serial Bus, and then carries out the VIO algorithm and map matching algorithm in the computer, and finally outputs the optimal trajectory of the pedestrian. The model of the computer we use is Hewlett-Packard OMEN 4 (the processor of the computer is Intel Core i5-8300H). All software is implemented in C++/MATLAB language.

Figure 6.

Hardware connection diagram: (a) Intel RealSense D435i camera; (b) Hewlett-Packard OMEN 4 Laptop.

4.2. Two-Dimensional Map Matching Experiment

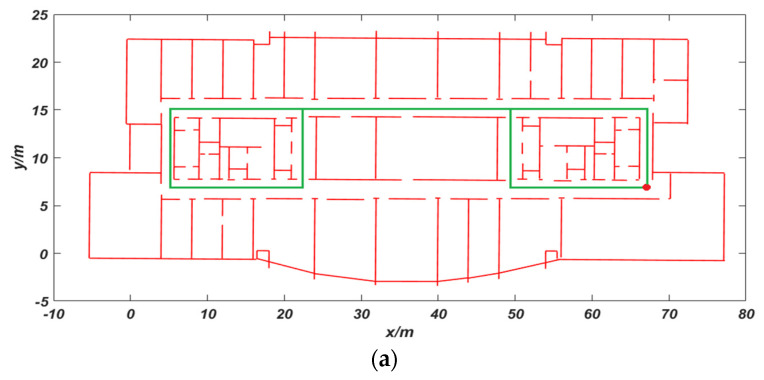

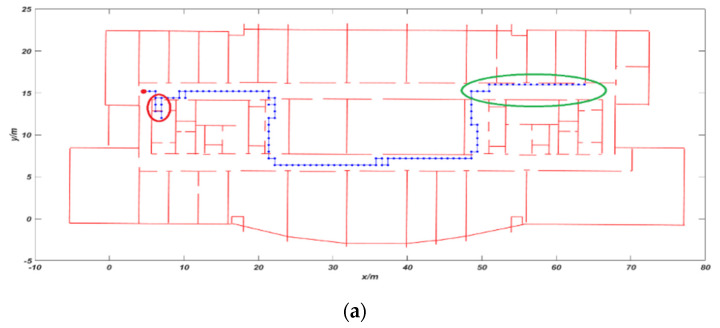

The first and second experiments were performed on the ninth floor of Science Building at Beijing University of Technology. The tester is a woman with a height of 1.63 m. She started from the red dot position, walked around the trajectory as shown in the Figure 7a, and eventually returned to the starting position. The average walking speed is 0.8 m/s. In Figure 7b, the black trajectory is the positioning trajectory of VIO. The red circle area is to enter the room. It can be seen that the trajectory of VIO is accurate at first, but the positioning accuracy of the green circle in the figure starts to decrease. After that, the pedestrian trajectory showed the serious phenomenon of passing through the wall. The main reason for the failure in positioning is that the surrounding environment of the aisle here is dominated by white walls and lacks visual valid texture information. The total distance of the experiment is 156 m, the mismatch rate reached 12.8%, and the cumulative error reached 4.26 m. The cumulative error is the Euclidean distance between the preset end point and the actual end point. The mismatch rate is the ratio between the mismatched state point and the actual total matched state point in the whole walking process. The blue trajectory in the Figure 7c is the VIO trajectory after using CRF feedback. The mismatch rate was reduced to 2.3% and the cumulative error was reduced to 0.61 m. The modified trajectory is more consistent with the indoor movement of pedestrians and basically corrects the phenomenon of the trajectory passing through the wall.

Figure 7.

Comparison of trajectory: (a) preset trajectory; (b) VIO trajectory; and (c) trajectory using the conditional random field (CRF) algorithm.

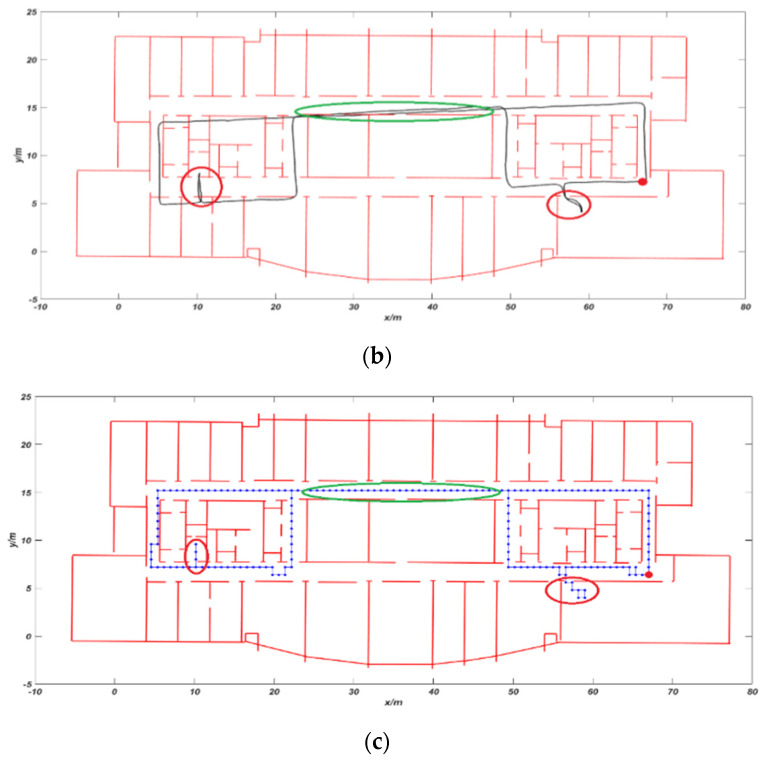

The second experiment is to verify the effect on the area with poor light. The tester is a man with a height of 1.78 m and the average walking speed is 1.2 m/s. As shown in Figure 8, the red dot is used as the starting position, the red circle area is entering the room and the green circle area will dim indoor lights. In Figure 8a, the VIO trajectory began to drift in the green area, the positioning error gradually increased, and eventually passed through the wall. At this time, we cannot correctly locate the tester. The total distance of the experiment is 102.8 m, the cumulative error is 2.62 m, and the mismatch rate reached 8%. The blue trajectory in Figure 8b is the VIO trajectory after using CRF feedback. It can be seen that our algorithm can solve the phenomenon of passing through walls and the accuracy of VIO trajectory has been significantly improved. The mismatch rate was reduced to 2% and the cumulative error was reduced to 0.43 m.

Figure 8.

Comparison of trajectory: (a) VIO trajectory and (b) trajectory using CRF algorithm.

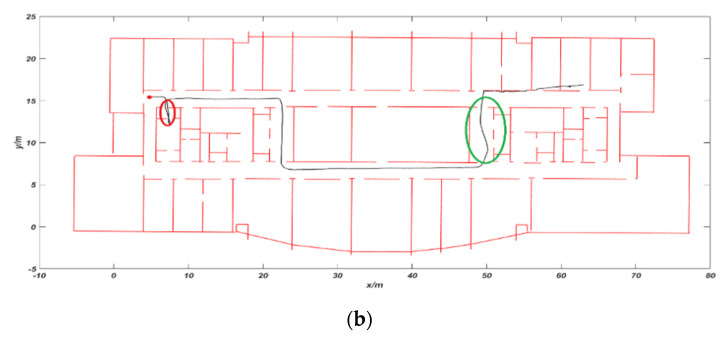

4.3. Three-Dimensional Map Matching Experiment

The third experiment was carried out on the ninth and 10th floors of Science Building in Beijing University of Technology. The tester is a man with a height of 1.7 5 m and the average walking speed is 1.0 m/s. The tester starts from the red dot on the ninth floor and go up the stairs to the 10th floor. The total distance is 59.2 m. Figure 9a is the trajectory of VIO output. As can be seen from the figure, after the floor changes, the height values of VIO trajectory have obvious drift phenomenon. The main reason is that the surrounding environment of the stair is mainly white walls, the texture information is relatively missing, and the maximum height error reaches 1.32 m. Figure 9b is the VIO trajectory corrected using the CRF model. The trajectory of the corrected VIO is significantly improved, and the cumulative height error is reduced to 0.2 6 m. Compared with the map matching algorithm in the study [25], we added the data of camera, so we achieved better accuracy. In study [26], the experiment was only carried out in the corridor and did not enter the room. In the study [27], the experiment was only performed on the same floor, and the 3D map matching is not realized.

Figure 9.

Comparison of trajectory: (a) VIO trajectory and (b) trajectory using CRF algorithm.

5. Conclusions

In this paper, in order to improve VIO positioning accuracy in indoor environments where insufficient light and featureless, we propose an algorithm based on the combination of VIO system and 3D map matching for indoor positioning. By establishing a conditional random field model, the values output by the VIO system are input into the CRF model as observation information, and the optimal state points sequence is solved. This is used as feedback value to correct the trajectory of the VIO to improve the accuracy of indoor positioning. We conducted experiments in the indoor areas with insufficient light and lack of texture, and finally verified the effectiveness of our algorithm. Our algorithm is not only applicable to the VIO system used in this paper, but also to other VIO system. Although the algorithm proposed in this paper depends on indoor map, it has some limitations. However, it provides an effective way to improve the accuracy of VIO indoor positioning. In order to make the proposed algorithm more general and practical, the future work will be focus on map construction.

Author Contributions

Conceptualization, J.Z. and M.R.; methodology, J.Z. and M.R.; investigation, J.Z., M.R., J.M., and Y.M.; software, J.Z. and J.M.; visualization, J.Z.; writing—original draft preparation, J.Z.; writing—review and editing, J.Z., M.R., and P.W.; supervision, M.R. and P.W. All authors read and agreed to the published version of the manuscript.

Funding

This research was supported by the China Scholarship Council.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- 1.Chen H.W., Wang H.S., Chang Y.T. A new coarse-time GPS positioning algorithm using combined Doppler and code-phase measurements. GPS Solut. 2013;18:541–551. doi: 10.1007/s10291-013-0350-8. [DOI] [Google Scholar]

- 2.Kim Y.K., Choi S.H., Lee J.M. Enhanced outdoor localization of multi-GPS/INS fusion system using Mahalanobis Distance; Proceedings of the 2013 10th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI); Jeju, Korea. 30 October–2 November 2013. [Google Scholar]

- 3.Capezio F., Sgorbissa A., Zaccaria R. GPS-based localization for a surveillance UGV in outdoor areas Robot Motion and Control; Proceedings of the Fifth International Workshop on Robot Motion and Control; Dymaczewo, Poland. 23–25 June 2005. [Google Scholar]

- 4.Li T., Wang J., Chen Z. Research of indoor localization based on inertial navigation technology; Proceedings of the 2016 Chinese Control and Decision Conference (CCDC); Yinchuan, China. 28–30 May 2016. [Google Scholar]

- 5.Hoflinger F., Zhang R., Reindl L.M. Indoor-localization system using a Micro-Inertial Measurement Unit (IMU); Proceedings of the 2012 European Frequency and Time Forum (EFTF); Gothenburg, Sweden. 23–27 April 2012. [Google Scholar]

- 6.Yang C., Shao H. Wi-Fi-Based indoor positioning. IEEE Commun. Mag. 2015;53:150–157. doi: 10.1109/MCOM.2015.7060497. [DOI] [Google Scholar]

- 7.Zhuang Y., Syed Z., Li Y. Evaluation of Two Wi-Fi Positioning Systems Based on Autonomous Crowdsourcing of Handheld Devices for Indoor Navigation. IEEE Trans. Mob. Comput. 2016;15:1982–1995. doi: 10.1109/TMC.2015.2451641. [DOI] [Google Scholar]

- 8.Vinicchayakul W., Promwong S. Improvement of fingerprinting technique for UWB indoor localization; Proceedings of the 2014 4th Joint International Conference on Information and Communication Technology, Electronic and Electrical Engineering (JICTEE); Chiang Rai, Thailand. 5–8 March 2014. [Google Scholar]

- 9.Rozum S., Kufa J., Polak L. Bluetooth Low Power Portable Indoor Positioning System Using SIMO Approach; Proceedings of the 42nd International Conference on Telecommunications and Signal Processing (TSP); Budapest, Hungary. 1–3 July 2019; pp. 228–231. [Google Scholar]

- 10.Shao Y.H., Jing W.L., Min C.Y., Jeng S.L. A PDR-Based Indoor Positioning System in a Nursing Cart with iBeacon-Based Calibration; Proceedings of the 2019 IEEE 90th Vehicular Technology Conference (VTC2019-Fall); Honolulu, HI, USA. 22–25 September 2019. [Google Scholar]

- 11.Wang Y., Chernyshoff A., Shkel A.M. Error Analysis of ZUPT-Aided Pedestrian Inertial Navigation; Proceedings of the 2018 International Conference on Indoor Positioning and Indoor Navigation (IPIN); Nantes, France. 24–27 September 2018. [Google Scholar]

- 12.Izmailov E.A., Kukhtievitch S.E., Tikhomirov V.V. Analysis of laser gyro drift components. Gyroscopy Navig. 2016;7:24–28. doi: 10.1134/S2075108716010053. [DOI] [Google Scholar]

- 13.Perlin V.E., Johnson D.B., Rohde M.M., Karlsen R.E. Fusion of Visual Odometry and Inertial Data for Enhanced Real-time Egomotion Estimation; Proceedings of the Unmanned Systems Technology XIII; Orlando, FL, USA. 27–29 April 2011. [Google Scholar]

- 14.Sun K., Mohta K., Pfrommer B., Watterson M., Liu S., Mulgaonkar Y., Taylor C.J., Kumar V. Robust Stereo Visual Inertial Odometry for Fast Autonomous Flight. IEEE Robot. Autom. Lett. 2018;3:965–972. doi: 10.1109/LRA.2018.2793349. [DOI] [Google Scholar]

- 15.Liu T., Lin H.Y., Lin W.Y. InertialNet: Toward Robust SLAM via Visual Inertial Measurement; Proceedings of the IEEE Intelligent Transportation Systems Conference (IEEE-ITSC); Auckland, New Zealand. 27–30 October 2019. [Google Scholar]

- 16.Fusco G., Coughlan J.M. Indoor Localization Using Computer Vision and Visual-Inertial Odometry; Proceedings of the 16th International Conference on Computers Helping People with Special Needs (ICCHP); Linz, Austria. 11–13 July 2018; [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Fu Q., Yu H.S., Lai L.H., Wang J.W., Peng X. A Robust RGB-D SLAM System with Points and Lines for Low Texture Indoor Environments. IEEE Sens. J. 2019;19:9908–9920. doi: 10.1109/JSEN.2019.2927405. [DOI] [Google Scholar]

- 18.Gupta A., Yilmaz A. Indoor Positioning using Visual and Inertial Sensors; Proceedings of the 2016 IEEE Sensors; Orlando, FL, USA. 30 October–3 November 2016; [DOI] [Google Scholar]

- 19.He Y.J., Zhao J., Guo Y., He W.H., Yuan K. PL-VIO: Tightly-Coupled Monocular Visual-Inertial Odometry Using Point and Line Features. Sensors. 2018;18:1159. doi: 10.3390/s18041159. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Mokhtari K.E., Reboul S., Choquel J.B. Indoor localization by particle map matching; Proceedings of the 2016 4th IEEE International Colloquium on Information Science and Technology (Cist); Tangier, Morocco. 24–26 October 2016. [Google Scholar]

- 21.Evennou F., Marx F., Novakov E. Map-aided indoor mobile positioning system using particle filter; Proceedings of the IEEE Wireless Communications & Networking Conference; New Orleans, LA, USA. 13–17 March 2005. [Google Scholar]

- 22.Lamy-Perbal S., Guenard N., Boukallel M. A HMM map-matching approach enhancing indoor positioning performances of an inertial measurement system; Proceedings of the 2015 International Conference on Indoor Positioning and Indoor Navigation (IPIN); Banff, AB, Canada. 13–16 October 2015. [Google Scholar]

- 23.Min L., Mei L.I., Xiaoyu X.U. An Improved Map Matching Algorithm Based on HMM Model. Acta Sci. Nat. Univ. 2018;54:1235–1241. [Google Scholar]

- 24.Xiao Z.L., Wen H.K., Markham A. Lightweight map matching for indoor localisation using conditional random fields; Proceedings of the 13th International Symposium on Information Processing in Sensor Networks (IPSN-14); Berlin, Germany. 15–17 April 2014; Piscataway, NJ, USA: IEEE; 2014. [Google Scholar]

- 25.Xiao Z.L., Wen H.K., Markham A., Trigoni N. Indoor Tracking Using Undirected Graphical Models. IEEE Trans. Mob. Comput. 2015;14:2286–2301. doi: 10.1109/TMC.2015.2398431. [DOI] [Google Scholar]

- 26.Safaa B., Alfonso B., Luis E.D., Enrique Q., Ikram B. Conditional Random Field-Based Offline Map Matching for Indoor Environments. Sensors. 2016;16:1302. doi: 10.3390/s16081302. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Ren M., Guo H., Shi J., Meng J. Indoor Pedestrian Navigation Based on Conditional Random Field Algorithm. Micromachines. 2017;8:320. doi: 10.3390/mi8110320. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Tong Q., Peiliang L., Shaojie S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2017;34:1004–1020. [Google Scholar]

- 29.Lafferty J., McCallum A., Pereira F.C. Conditional random fields: Probabilistic models for segmenting and labeling sequence data; Proceedings of the Eighteenth International Conference on Machine Learning; Williamstown, MA, USA. 28 June–1 July 2001. [Google Scholar]

- 30.Ryan M.S., Nudd G.R. The Viterbi Algorithm. Proc. IEEE. 1993;61:268–278. [Google Scholar]

- 31.Qin T., Shen S.J. Online Temporal Calibration for Monocular Visual-Inertial Systems; Proceedings of the 25th IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS); Madrid, Spain. 1–5 October 2018. [Google Scholar]