Abstract

Background

Evidence-based best practices are the cornerstone to guide optimal cardiopulmonary arrest resuscitation care. Adherence to the American Heart Association (AHA) guidelines for cardiopulmonary resuscitation (CPR) optimizes the management of critically ill patients and increases their chances of survival after cardiac arrest. Despite advances in resuscitation science and survival improvement over the last decades, only approximately 38% of children survive to hospital discharge after in-hospital cardiac arrest and only 6%-20% after out-of-hospital cardiac arrest.

Objective

We investigated whether a mobile app developed as a guide to support and drive CPR providers in real time through interactive pediatric advanced life support (PALS) algorithms would increase adherence to AHA guidelines and reduce the time to initiation of critical life-saving maneuvers compared to the use of PALS pocket reference cards.

Methods

This study was a randomized controlled trial conducted during a simulation-based pediatric cardiac arrest scenario caused by pulseless ventricular tachycardia (pVT). A total of 26 pediatric residents were randomized into two groups. The primary outcome was the elapsed time in seconds in each allocation group from the onset of pVT to the first defibrillation attempt. Secondary outcomes were time elapsed to (1) initiation of chest compression, (2) subsequent defibrillation attempts, and (3) administration of drugs, including the time intervals between defibrillation attempts and drug doses, shock doses, and the number of shocks. All outcomes were assessed for deviation from AHA guidelines.

Results

Mean time to the first defibrillation attempt was significantly shorter among residents using the app (121.4 sec, 95% CI 105.3-137.5) than among those using PALS pocket cards (211.5 sec, 95% CI 162.5-260.6; P<.001). With the app, 11 of 13 (85%) residents initiated chest compressions within 60 seconds of the onset of pVT, and 12 of 13 (92%) successfully defibrillated within 180 seconds. Time to all subsequent defibrillation attempts was also reduced with the app. Adherence to the 2018 AHA pVT algorithm, defined as following all CPR sequences of action in a stepwise fashion until return of spontaneous circulation, improved by approximately 70 percentage points with the app (92% vs 23%; P=.001). The pVT rhythm was recognized correctly in 51 of 52 (98%) opportunities among residents using the app compared with only 19 of 52 (37%) among those using PALS cards (P<.001). Time to epinephrine injection did not differ significantly between groups. Among a total of 78 opportunities, incorrect shock or drug doses occurred in 14% (11/78) of cases among those using the cards, compared with 1% (1/78; P=.005) when using the app.

Conclusions

Use of the mobile app was associated with a shorter time to first and subsequent defibrillation attempts, fewer medication and defibrillation dose errors, and improved adherence to AHA recommendations compared with the use of PALS pocket cards.

Keywords: biomedical technologies, mobile apps, emergency medicine, pediatrics, resuscitation, guideline adherence

Introduction

Pediatric cardiac arrest is a high-risk, low-frequency event associated with death or severe neurological sequelae in survivors. It requires immediate recognition and care by skilled health providers. Recent studies show that pediatric in-hospital cardiac arrest (p-IHCA) affects 7100-8300 children per year in the United States [1], and 14% of these events occur in pediatric emergency departments (PEDs) [2]. Pediatric out-of-hospital cardiac arrest (p-OHCA) accounts for a further 7037 children brought to US PEDs by emergency medical services each year [3]. Despite advances in resuscitation science and improvements in survival over the last decades, survival remains low: only approximately 38% of children survive to hospital discharge after p-IHCA, and 6%-20% after p-OHCA [3,4]. Evidence-based best practices are the cornerstone of optimal cardiopulmonary arrest resuscitation care. High-quality cardiopulmonary resuscitation (CPR), performed according to the American Heart Association (AHA) life-support guidelines, is associated with successful return of spontaneous circulation (ROSC), improved survival to hospital discharge, and good neurological outcomes [5]. Deviation from recommended procedures is associated with a reduced likelihood of survival from cardiac arrest [6].

While adherence to AHA guidelines in emergency departments has been described for adults, there are limited data for PEDs [7]. AHA guidance for managing pediatric CPR is available to pediatric emergency physicians on pocket reference cards. Unfortunately, health care providers frequently do not perform resuscitation according to guidelines, despite cognitive aids [8] and AHA life-support training courses, such as basic life support (BLS) and pediatric advanced life support (PALS). Suboptimal CPR quality is still commonly encountered for both adult and pediatric patients [9].

New resuscitation strategies using information technologies and devices to improve both in- and out-of-hospital CPR have been assessed for their ability to ensure adherence to AHA guidelines [10-16]. Nevertheless, research in this area remains scarce, especially in pediatrics, and studies assessing the impact of information technology on p-IHCA management and pediatric CPR outcomes are needed. In a previous randomized trial, we found that adapting PALS algorithms to Google Glass improved adherence, reducing errors and deviations in defibrillation doses by 53% compared with the use of pocket reference cards [17]. However, the glasses did not shorten the time to the first defibrillation attempt, nor did they improve adherence to AHA guidelines for other critical resuscitation endpoints in terms of timing and drug-dose delivery. The complexity of interacting while wearing the glasses, the system's limited ability to situate the current action within the overall resuscitation process, and their small size were major limitations to their potential use in p-IHCA. We therefore developed a new mobile app, the Guiding Pad app, from the ground up and designed it specifically for tablets. It is intended to guide and support CPR providers in real time through interactive PALS algorithms enhanced with patient-centered cognitive aids.

Our objective was to investigate, in a simulated model, whether this app would increase adherence to AHA guidelines by reducing deviation and time to initiation of critical life-saving maneuvers during pediatric CPR compared to the use of PALS pocket reference cards.

Methods

Study Design

We conducted a prospective, randomized controlled trial in a tertiary PED (>33,000 consultations/year) with two parallel groups of voluntary pediatric residents. We compared time to the first defibrillation attempt and other critical resuscitation endpoints using a tablet app (Guiding Pad, group A) or AHA PALS conventional pocket reference cards (group B) during a standardized simulation-based pediatric cardiac arrest scenario. No changes were made to the app during the study.

The trial received a declaration of no objection by Swissethics and the Geneva Cantonal Ethics Committee, as the purpose of the study was to examine the effect of the intervention on health care providers. For the same reason, and according to the International Committee of Medical Journal Editors, a trial registration number was not required. The trial was conducted according to the principles of the Declaration of Helsinki and Good Clinical Practice guidelines, and in accordance with the CONSORT-EHEALTH (Consolidated Standards of Reporting Trials of Electronic and Mobile Health Applications and Online TeleHealth) guidelines (see Multimedia Appendix 1) [18] and the Reporting Guidelines for Health Care Simulation Research [19].

Participants

Any physician performing a residency in pediatrics was eligible. Shift-working residents were randomly recruited on the day of the study using an alphabetical list to avoid preparation bias. Included participants received a standardized 5-minute introduction course on the use of the tablet app. As BLS training is a requirement for residents at our institution, all participants had completed this course before study entry. Participation in a simulation in the past month was an exclusion criterion to avoid a recent training effect. Study participants were not involved in the study design, choice of outcome measures, or execution of the study. No participant was asked for advice on the interpretation or writing of the study results. Participants were informed of the results after completion of the study.

Randomization and Blinding

Residents were randomized using a single constant 1:1 allocation ratio determined with the web-based randomization software Sealed Envelope [20]. Written informed consent was obtained from each participant after full information disclosure prior to study participation. Blinding to the purpose of the study was maintained during recruitment to minimize preparation bias. Allocation concealment was managed with the software and was not released until the participant started the scenario.

The Guiding Pad App

Unlike in adults, cardiac arrest in children without prior cardiac disease is mainly due to asystole (40%) and pulseless electrical activity (24%) [2]. As shockable rhythms, namely ventricular fibrillation and pulseless ventricular tachycardia (pVT), have been identified in 27% of p-IHCA cases [21], we chose the pVT algorithm because we considered that it offered a greater opportunity to assess the multistep resuscitative skills set out in the AHA guidelines. The app was developed at Geneva University Hospitals using Angular, version 8, a development framework created by Google to build mobile and web apps. Computer scientists, senior pediatric emergency physicians, and ergonomists adapted the AHA PALS algorithms for tablets following a user-centered, ergonomics-driven approach. The numerous steps of the AHA PALS algorithms were split into stages, and each stage transposed to the tablet paralleled the informational content of one resuscitation step of the original algorithm. The resulting algorithms followed the same progression and sequence of actions as the PALS pocket references. For instance, the complete pVT algorithm was designed to be as concise as possible without hindering proper progression along it. The first step is a quick pediatric assessment triangle to recognize cardiac arrest and initiate CPR, which includes entering the patient's weight or age. The app then displays an initial prompt on whether a pulse is present, followed by the possible cardiac arrhythmias. Once an arrhythmia is selected, the screen splits into two main sections.

The first section, on the left-hand side of the screen, displays an overview of the entire algorithm, allowing users to situate the stepwise resuscitation progress along that algorithm in real time. The current step of the resuscitation process is outlined by a blinking red line, so the current position within the algorithm is immediately apparent, and each action already performed turns grey. Users can nevertheless navigate back and forth through the algorithm at any time to select any resuscitation step if needed.
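To make this navigation model concrete, the Python sketch below represents an algorithm as an ordered list of stages with a current position, a set of completed stages, and free back-and-forth navigation. It is a hypothetical illustration only: the class name, method names, and stage labels are our assumptions, and the actual app was built with Angular, not Python.

```python
from dataclasses import dataclass, field


@dataclass
class AlgorithmTracker:
    """Hypothetical model of stepwise progress through one PALS algorithm."""

    stages: list[str]
    current: int = 0                                   # index of the active stage
    completed: set[int] = field(default_factory=set)   # stages shown in grey

    def validate_current(self) -> None:
        """Mark the active stage as done and move to the next one, if any."""
        self.completed.add(self.current)
        if self.current < len(self.stages) - 1:
            self.current += 1

    def go_to(self, index: int) -> None:
        """Jump back or forward to any stage at any time."""
        if not 0 <= index < len(self.stages):
            raise IndexError(f"no stage at index {index}")
        self.current = index

    def status(self, index: int) -> str:
        """How one stage would be drawn in the left-hand overview."""
        if index == self.current:
            return "current"   # outlined by the blinking red line
        return "done" if index in self.completed else "pending"


# Illustrative stage labels, not the app's actual wording.
tracker = AlgorithmTracker(["Start CPR", "Rhythm shockable?", "Shock", "CPR 2 min"])
tracker.validate_current()
tracker.validate_current()
print([tracker.status(i) for i in range(4)])  # ['done', 'done', 'current', 'pending']
```

Keeping the completed set separate from the current index lets a user jump back to an earlier stage without losing the record of what has already been done.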

The second section on the right-hand side of the screen displays the following elements:

A color-coded title allowing direct identification of each step in progress.

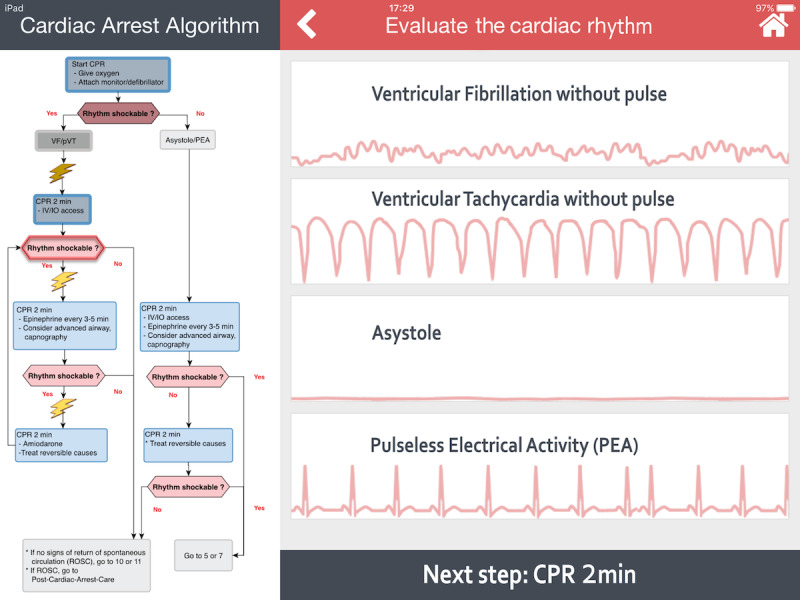

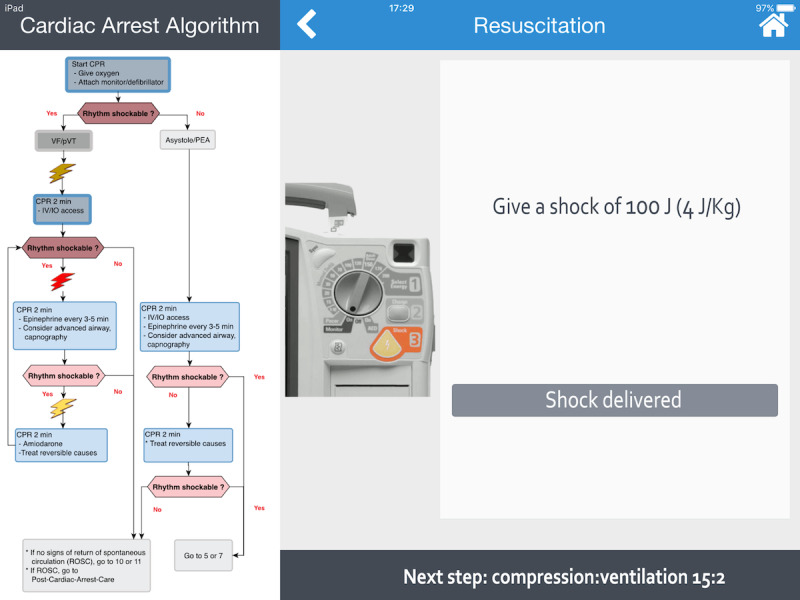

Cognitive aids helping with decision making, such as a distinctive illustration of cardiac rhythms (see Figure 1) or the shock dose to deliver with a picture of a manual defibrillator (Philips HeartStart MRx Biphasic Defibrillator, Philips Medical Systems) (see Figure 2).

A detailed and clickable list of actions to perform in a stepwise manner.

A footer to preview the next step.

Figure 1.

Screenshot of the Guiding Pad app. The left-hand side of the screen displays the American Heart Association (AHA) pediatric advanced life support (PALS) ventricular fibrillation (VF) and pulseless ventricular tachycardia (pVT) cardiac arrest algorithm. The current step of the resuscitation process (eg, determining whether the arrhythmia is shockable) is outlined by a blinking red line. Actions already accomplished are shaded. At the top right-hand side of the screen, a color-coded title indicates the current step in progress. Below it, four pulseless dysrhythmias are displayed, and the provider selects the one being observed. At the bottom right-hand side, a footer helps the provider anticipate the next cardiopulmonary resuscitation (CPR) step. IO: intraosseous; IV: intravenous.

Figure 2.

Screenshot of the Guiding Pad app. The left-hand side of the screen displays the American Heart Association (AHA) pediatric advanced life support (PALS) ventricular fibrillation (VF) and pulseless ventricular tachycardia (pVT) cardiac arrest algorithm. The current step (eg, defibrillation) of the resuscitation process is displayed with a red lightning bolt. Past actions already accomplished are shown as shaded. On the right-hand side of the screen, the weight-based shock dose to deliver is displayed with a picture of a manual defibrillator (Philips HeartStart MRx Biphasic Defibrillator). Once delivered, clicking the “Shock delivered” button validates the action and allows the user to proceed to the next step. At the bottom right-hand side, a footer helps to anticipate the next compression:ventilation step. CPR: cardiopulmonary resuscitation; IO: intraosseous; IV: intravenous; PEA: pulseless electrical activity.

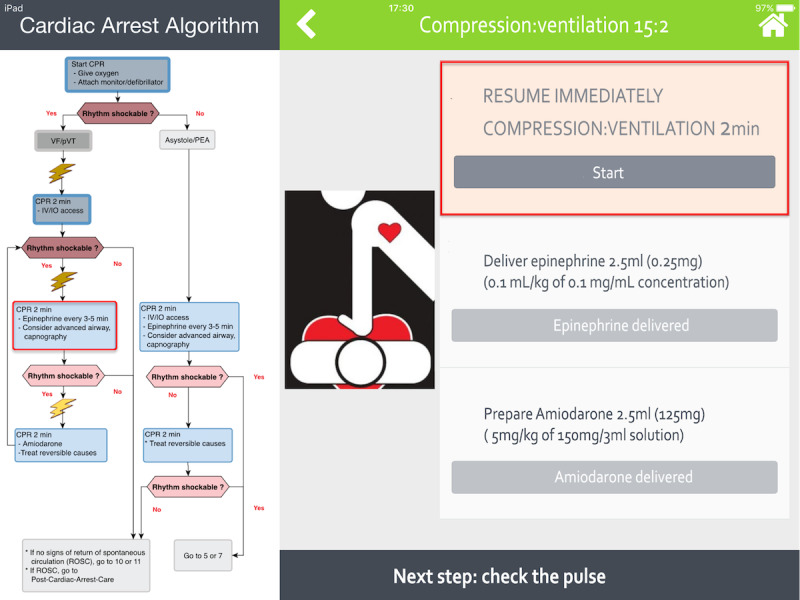

Each action prompts the provider either to make a choice (eg, choose the correct arrhythmia among several options) or to validate an action to be executed (eg, a drug-dose administration), which is brought to the provider's attention by a red-box warning (see Figure 3). Weight-based drug doses are calculated automatically by a built-in engine already used in another evidence-based app that was assessed in a multicenter randomized controlled trial of in-hospital emergency drug delivery [22]. Shock doses for the Philips HeartStart MRx Biphasic Defibrillator are calculated automatically from the patient's weight or age. Each chest compression-ventilation cycle is timed by a countdown clock displayed on the screen. If the rhythm changes, the user can easily navigate to the other PALS algorithms (bradycardia, supraventricular tachycardia with poor perfusion, etc) at any time. All actions performed by the provider are automatically saved in log files that can be retrieved at any time for debriefing or medicolegal purposes.
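The format of these log files is not described. Assuming, hypothetically, one JSON record per line with an ISO 8601 timestamp (`t`) and an action label (`action`), a debriefing script could recover elapsed times such as the delay from pVT onset to each shock. The field names, action labels, and placeholder date below are all assumptions. Note that in this study, outcomes were recorded by two investigators rather than taken from the app logs (see Methods of Measurement and Data Collection).

```python
import json
from datetime import datetime


def parse_log(lines):
    """Parse hypothetical JSON-lines records into chronological (timestamp, action) tuples."""
    events = []
    for line in lines:
        if line.strip():
            record = json.loads(line)
            events.append((datetime.fromisoformat(record["t"]), record["action"]))
    return sorted(events, key=lambda e: e[0])


def seconds_to_nth(events, start_action, target_action, n=1):
    """Seconds from the first `start_action` to the n-th `target_action`.

    Returns None when the target occurred fewer than n times, mirroring how
    unaccomplished actions were left blank in the study.
    """
    start = next((t for t, a in events if a == start_action), None)
    if start is None:
        raise ValueError(f"no {start_action!r} event in log")
    hits = [t for t, a in events if a == target_action]
    if len(hits) < n:
        return None
    return (hits[n - 1] - start).total_seconds()


# Hypothetical log excerpt; the date is a placeholder.
log_lines = [
    '{"t": "2000-01-01T10:00:00", "action": "pVT onset"}',
    '{"t": "2000-01-01T10:00:30", "action": "compressions started"}',
    '{"t": "2000-01-01T10:02:01", "action": "shock delivered"}',
    '{"t": "2000-01-01T10:04:20", "action": "shock delivered"}',
]
events = parse_log(log_lines)
print(seconds_to_nth(events, "pVT onset", "shock delivered"))       # 121.0
print(seconds_to_nth(events, "pVT onset", "shock delivered", n=2))  # 260.0
```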

Figure 3.

Screenshot of the Guiding Pad app. The left-hand side of the screen displays the American Heart Association (AHA) pediatric advanced life support (PALS) ventricular fibrillation (VF) and pulseless ventricular tachycardia (pVT) cardiac arrest algorithm. On the right-hand side of the screen, the sequence of actions to be taken is displayed step by step to facilitate accurate progression along the algorithm. The current action (eg, resuming compression and ventilation) is brought to the attention of the provider by a red-box warning and requires validation by a simple click. Once it is completed, the next actions are to deliver the weight-based epinephrine dose automatically calculated by the app and then to prepare amiodarone. The next step, shown at the bottom right-hand side, is to check the pulse. CPR: cardiopulmonary resuscitation; IO: intraosseous; IV: intravenous; PEA: pulseless electrical activity.

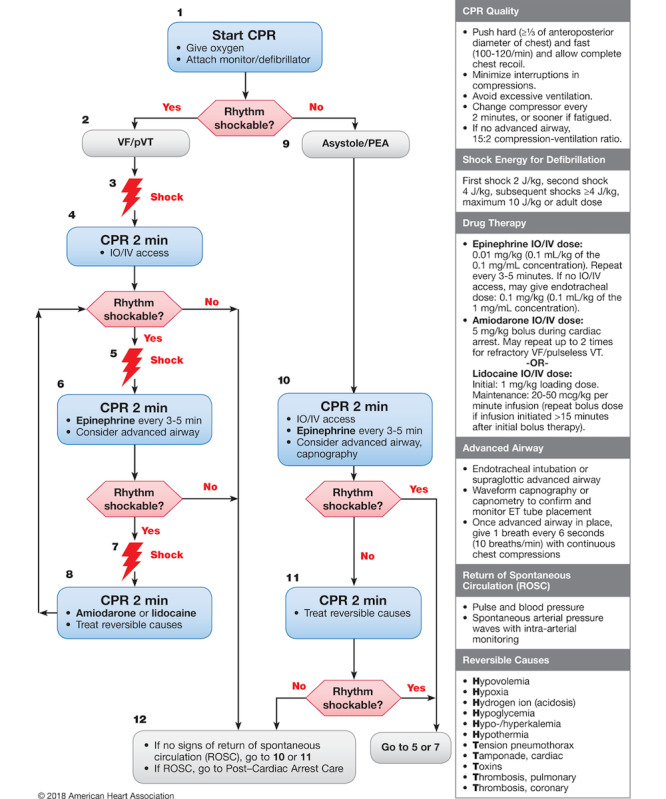

Procedures

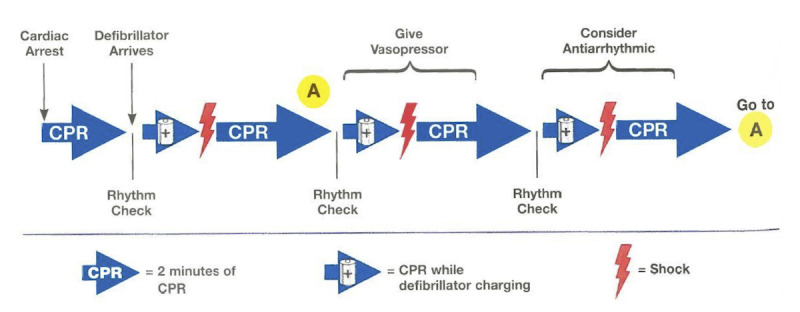

On the day of participation, each resident completed an anonymous survey on basic demographic information, length of clinical experience, and PALS training. After random allocation, each participant received a standardized 5-minute training session on how to use the app. Participants were then asked to perform a 15-minute, highly realistic, scripted CPR scenario on a high-fidelity manikin (SimJunior; Laerdal Medical). The scenario was standardized to strictly follow the 2018 AHA pediatric pVT algorithm (see Figure 4) and was performed on the same high-fidelity manikin, already primed with vital signs appropriate for the scenario (see Multimedia Appendix 2). It was conducted in situ in the PED shock room to increase realism, allowing participants to use real resources in the actual environment where they would be expected to handle cardiac arrest. All participants in group B were given PALS pocket reference cards to hold throughout the entire scenario; whether they consulted them was left to their discretion, as in real-life settings. No interactions occurred between participants and investigators. The simulation involved the participating resident and a resuscitation team of three study team members (ie, a PED registered nurse and two medical students) who assisted with drug preparation, chest compression, and bag-valve-mask ventilation. Study team members had no role in decision making to achieve ROSC. A PALS instructor (ie, a pediatric emergency physician) who was not a member of the resuscitation team operated the simulator. To be consistent with the 2018 AHA pediatric cardiac arrest algorithm [23] and to standardize the scenario, defibrillation doses of 2 Joules per kg for the first attempt and 4 Joules per kg for the second, third, and fourth attempts were expected (see Figure 4).
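Because the expected energy follows directly from the patient's weight and the attempt number, it can be written as a one-line rule. The sketch below encodes only the rule stated above (2 J/kg for the first attempt, 4 J/kg for attempts 2-4); the function name and the 20-kg example weight are hypothetical, and rounding to the energy settings offered by a particular defibrillator is not modeled.

```python
def expected_shock_joules(weight_kg: float, attempt: int) -> float:
    """Energy (J) expected for a given defibrillation attempt in this scenario.

    Encodes only the rule stated in the text: 2 J/kg for attempt 1 and
    4 J/kg for attempts 2-4. Device-specific energy steps are not modeled.
    """
    if weight_kg <= 0:
        raise ValueError("weight must be positive")
    if not 1 <= attempt <= 4:
        raise ValueError("the scenario defines attempts 1 to 4 only")
    joules_per_kg = 2.0 if attempt == 1 else 4.0
    return joules_per_kg * weight_kg


# Hypothetical 20-kg patient; not the scenario's actual weight.
print([expected_shock_joules(20, k) for k in range(1, 5)])  # [40.0, 80.0, 80.0, 80.0]
```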

Figure 4.

American Heart Association (AHA) pediatric cardiac arrest algorithm: 2018 update (Duff et al, 2018). CPR: cardiopulmonary resuscitation; ET: endotracheal tube; IO: intraosseous; IV: intravenous; PEA: pulseless electrical activity; pVT: pulseless ventricular tachycardia; VF: ventricular fibrillation.

Epinephrine and amiodarone had to be given just before or just after the second and third shock attempts, respectively (see Figure 5). ROSC, demonstrated by a palpable pulse and signs of regaining consciousness, marked the end of the scenario.
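Checking this timing requirement against a recorded sequence of actions can be automated. The sketch below assumes, as our own operationalization rather than the authors' scoring rule, that "just before or just after the n-th shock" means the first administration falls after shock n-1 and before shock n+1; the function name and action labels are hypothetical.

```python
def given_around_shock(events, drug, shock_number, shock_label="shock"):
    """Check that `drug` was given just before or just after shock `shock_number`.

    Assumed operationalization: the first administration of `drug` must fall
    after shock (shock_number - 1) and before shock (shock_number + 1).
    `events` is a chronological list of hypothetical action labels.
    """
    shocks = [i for i, e in enumerate(events) if e == shock_label]
    if drug not in events:
        return False                      # drug never given
    drug_pos = events.index(drug)
    if len(shocks) < shock_number - 1:
        return False                      # preceding shock never delivered
    lower = shocks[shock_number - 2] if shock_number >= 2 else -1
    upper = shocks[shock_number] if len(shocks) > shock_number else len(events)
    return lower < drug_pos < upper


sequence = ["cpr", "shock", "cpr", "epinephrine", "shock",
            "cpr", "shock", "amiodarone", "cpr", "shock"]
print(given_around_shock(sequence, "epinephrine", 2))  # True
print(given_around_shock(sequence, "amiodarone", 3))   # True
print(given_around_shock(sequence, "amiodarone", 2))   # False (too late)
```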

Figure 5.

Summary of the ventricular fibrillation and pulseless ventricular tachycardia (pVT) cardiac arrest sequence. This original illustration is from the eBook edition of the Pediatric Advanced Life Support (PALS) Instructor Manual, published by the American Heart Association (AHA), 2015. CPR: cardiopulmonary resuscitation.

Outcomes

The primary outcome was the delay (in seconds) in each allocation group from the end of the clinical statement given by the study investigator to the first defibrillation attempt, as the expected survival advantage from early CPR can be significantly reduced by a subsequent delay in defibrillation [24,25]. Secondary outcomes were the delay (in seconds) to initiation of chest compression; time to subsequent defibrillation attempts; time to administration of epinephrine and amiodarone; time intervals (in seconds) between defibrillation attempts and drug doses; shock doses; number of shocks; and perceived stress and satisfaction scores after completion of the scenario, measured by a questionnaire using 10-point Likert scales (see Multimedia Appendix 3). The AHA recommends five cycles of chest compression (approximately 2 minutes) between defibrillation attempts. The time participants spent performing chest compressions in each compression cycle was defined as the hands-on time and was measured in seconds with a chronometer. All these outcomes were assessed for deviation from AHA guidelines.

Methods of Measurement and Data Collection

All actions (ie, primary and secondary outcomes) performed by the resident during the scenario were recorded independently by two trained investigators who were blinded to each other's records, allowing accurate assessment of the timing and sequencing of actions and minimizing assessment bias. In case of disagreement, a third independent evaluator helped reach a consensus. Data were manually retrieved and entered into a Microsoft Excel spreadsheet, version 16 (Microsoft Corporation). Unaccomplished actions were left blank, and no time was assigned. Residents' privacy was preserved, and only the study investigators had access to the data.

Statistical Analysis

Power calculations were based on the detection of a 30-second decrease in time to the first defibrillation attempt between the two independent groups. A previous study has shown a mean time to first defibrillation of 92 seconds with an SD of 23 seconds [26]. Assuming a similar SD in each group of our study, 10 participants per group had to be recruited to provide the trial with 80% power at a two-sided alpha level of .05. To prevent a potential loss of power due to misspecification of assumptions, 13 participants were recruited per group, giving a total sample size of 26 participants.
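The stated sample size can be checked with the usual normal-approximation formula for comparing two means, n = 2((z_{1-α/2} + z_{1-β})σ/δ)² per group. The sketch below assumes that formula; the paper does not say which method or software was used, and a calculation based on the t distribution typically returns a slightly larger n.

```python
import math
from statistics import NormalDist


def n_per_group(delta: float, sd: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Per-group sample size to detect a mean difference `delta` with a two-sided
    test, assuming equal SDs and equal group sizes (normal approximation)."""
    z = NormalDist()                      # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)    # about 1.96 for alpha = .05
    z_beta = z.inv_cdf(power)             # about 0.84 for 80% power
    n = 2 * ((z_alpha + z_beta) * sd / delta) ** 2
    return math.ceil(n)


print(n_per_group(delta=30, sd=23))  # prints 10 (unrounded value is about 9.2)
```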

For the primary analysis, we first evaluated the time elapsed between the onset of pVT and the first defibrillation attempt. The Shapiro-Wilk test was used to assess the normality of the parameters. As most of the continuous variables were normally distributed, means and SDs with their 95% CIs were reported; nonnormally distributed variables were analyzed using a Mann-Whitney test. Frequencies were reported as percentages. We used t tests to compare independent groups; no paired data were compared. Kaplan-Meier curves for the time elapsed between the onset of pVT and the first defibrillation attempt were estimated and compared using the log-rank (Mantel-Cox) test for bivariate survival analysis.
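The Kaplan-Meier estimator multiplies, at each time t_i at which the action occurs, the conditional probability of not yet having performed it: S(t) = Π(1 - d_i/n_i), where d_i is the number of residents performing the action at t_i and n_i is the number still "at risk" just before t_i. A minimal standard-library sketch follows. Treating an action never performed as censored at the end of the scenario is our assumption, the example times are illustrative rather than study data, and the log-rank comparison itself is not implemented here.

```python
def kaplan_meier(times, observed):
    """Kaplan-Meier estimate of S(t), the probability that the action has not
    yet occurred by time t.

    times:    seconds to the action, or to censoring
    observed: True if the action occurred, False if censored
    Returns (t, S(t)) at each distinct time at which the action occurred.
    """
    if len(times) != len(observed):
        raise ValueError("times and observed must have the same length")
    data = sorted(zip(times, observed))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        events = removed = 0
        # Group all subjects sharing this time (ties).
        while i < len(data) and data[i][0] == t:
            events += int(data[i][1])
            removed += 1
            i += 1
        if events:
            survival *= 1 - events / at_risk
            curve.append((t, survival))
        at_risk -= removed   # both events and censored subjects leave the risk set
    return curve


curve = kaplan_meier([60, 90, 90, 120, 150], [True, True, True, False, True])
```

Here S(t) is about 0.8 at 60 s, 0.4 at 90 s, and 0 at 150 s; the censored observation at 120 s does not lower S(t) but shrinks the risk set for later times.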

For the secondary analysis, we evaluated the time elapsed from the onset of pVT to subsequent defibrillation attempts and to the delivery of both drugs. For normally distributed variables, means and SDs with 95% CIs were reported; nonnormally distributed variables were analyzed using a Mann-Whitney test. Frequencies were reported as percentages. We used t tests to compare independent groups; no paired data were compared. Kaplan-Meier curves for the time elapsed between the onset of pVT and subsequent defibrillation attempts and delivery of both drugs were also estimated and compared using the log-rank (Mantel-Cox) test for bivariate survival analysis. Errors in chest compression-ventilation cycles were measured as the percentage deviation of the observed cycle duration (in seconds) from the 2-minute duration recommended by the AHA. Incorrect defibrillation or drug doses were measured as the deviation of the energy delivered (in Joules) or of the drug dose (in milliliters) from AHA recommendations. A chi-square test was used to assess the relationship between absolute errors in defibrillation and drug doses expressed as categorical variables. Incorrect defibrillation mode was also measured. Absolute deviations were also analyzed: the mean (SD) difference in deviation obtained with each method was reported with a 95% CI, and interventions were compared using a t test for unpaired data. Mean differences were reported by randomized group. Univariate linear regression analyses with 95% CIs were performed to assess whether time to initiation of chest compression, defibrillation attempts, and drug delivery were associated with the number of postgraduate years or with prior resuscitation experience as a provider in real-life and simulated environments. Perceived stress and satisfaction scores derived from the Likert-scale questionnaire were summarized as means and SDs using descriptive statistics.
A P value less than .05 was considered significant.
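The two error measures defined above reduce to simple arithmetic. In the sketch below, the function names are hypothetical, and the percentage deviation is assumed to be signed (positive when a cycle lasts longer than 2 minutes), since the sign convention is not stated; for doses, both the signed and the absolute deviation are returned.

```python
def cycle_deviation_pct(observed_s: float, recommended_s: float = 120.0) -> float:
    """Signed % deviation of one compression-ventilation cycle from 2 minutes
    (positive when the cycle lasted longer than recommended)."""
    return 100.0 * (observed_s - recommended_s) / recommended_s


def dose_deviation(delivered: float, expected: float) -> tuple[float, float]:
    """Signed and absolute deviation of a delivered dose (J or mL) from the
    recommended amount, in the same unit."""
    diff = delivered - expected
    return diff, abs(diff)


print(cycle_deviation_pct(135))   # 12.5 (a 135-second cycle)
print(dose_deviation(50, 40))     # (10, 10): 50 J delivered where 40 J was expected
```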

Interrater reliability was assessed by two observers who independently evaluated each resident's performance. Interrater reliability for shock- and drug-dose errors was calculated using the Cohen kappa coefficient. As the remaining outcomes were continuous variables, the Bland-Altman method was used to plot, for each outcome, the difference between the values reported by the two observers against their mean. The limits of agreement were defined as the mean difference ± 1.96 SDs of the measurement differences. The null hypothesis that there was no difference, on average, between the two reviewers was tested using a t test, and the mean difference was reported with its 95% CI. Additionally, intraclass correlation coefficients for times to each critical endpoint were assessed, assuming that the raters were a sample from a larger population of possible raters. GraphPad Prism, version 8 (GraphPad Software), and SPSS, version 15.0 (SPSS Inc), were used to create figures and to perform descriptive and statistical analyses.
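For readers who want to reproduce these agreement statistics, a standard-library sketch of the Cohen kappa, κ = (p_o - p_e)/(1 - p_e), and of the Bland-Altman bias with 95% limits of agreement follows. The function names and example labels are illustrative assumptions, not study data, and the intraclass correlation coefficient is not covered.

```python
from collections import Counter
from statistics import mean, stdev


def cohen_kappa(r1, r2):
    """Cohen kappa for two raters' categorical labels of the same items."""
    if len(r1) != len(r2) or not r1:
        raise ValueError("need two equal-length, non-empty rating lists")
    n = len(r1)
    p_obs = sum(a == b for a, b in zip(r1, r2)) / n                   # observed agreement
    c1, c2 = Counter(r1), Counter(r2)
    p_exp = sum(c1[k] * c2[k] for k in c1.keys() | c2.keys()) / n**2  # chance agreement
    if p_exp == 1:
        # Both raters used one identical category: kappa is undefined.
        return float("nan")
    return (p_obs - p_exp) / (1 - p_exp)


def bland_altman(a, b):
    """Bias (mean of a - b) and 95% limits of agreement (bias +/- 1.96 SD)."""
    diffs = [x - y for x, y in zip(a, b)]
    bias = mean(diffs)
    sd = stdev(diffs)   # sample SD; needs at least two pairs
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


print(cohen_kappa(["error", "ok", "ok", "error"], ["error", "ok", "ok", "ok"]))  # 0.5
```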

Results

Study Participants

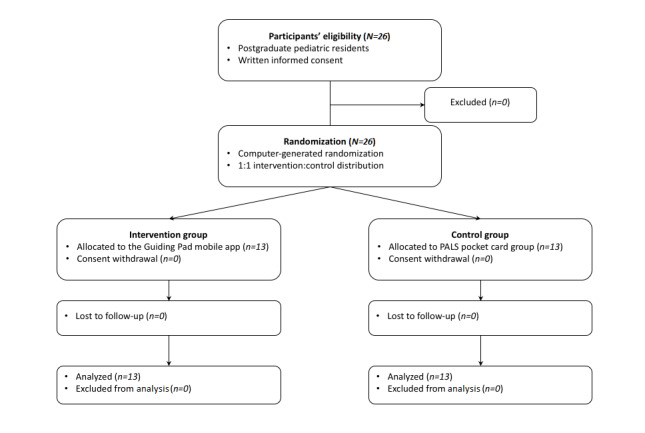

From August 30 to October 17, 2019, 26 pediatric residents were assessed for eligibility and randomly assigned to either the Guiding Pad app group (group A; n=13) or the PALS pocket card group (group B; n=13), with no dropouts or missing data (see Figure 6). Baseline characteristics were balanced between the two groups (see Table 1). In particular, there was no statistically significant difference in age between the randomization arms. We observed perfect interrater agreement for the scoring of the pVT scenario (see Table S1 in Multimedia Appendix 4, Table S2 in Multimedia Appendix 5, and Figure S1 in Multimedia Appendix 6).

Figure 6.

Trial flowchart. PALS: pediatric advanced life support.

Table 1.

Participants’ demographics and clinical characteristics.

| Demographics and clinical characteristics | Guiding Pad arm (n=13) | PALS^a pocket cards arm (n=13) |
| --- | --- | --- |
| Age in years^b, mean (SD) | 31.2 (5.5) | 29.0 (2.2) |
| Sex (female), n (%) | 9 (69) | 10 (77) |
| Years of residency, mean (SD) | 3.7 (2.3) | 3.5 (0.9) |
| Number of basic life support providers among residents, n (%) | 13 (100) | 13 (100) |
| Number of PALS providers among residents, n (%) | 9 (69) | 11 (85) |
| Level of self-confidence^c in following American Heart Association guidelines, mean (SD) | 2.9 (1.1) | 3.5 (0.7) |
| Number of residents having been enrolled in more than five resuscitations in the past, n (%) | 4 (31) | 7 (54) |
| Prior simulation-based resuscitations, mean (SD); total | 6.5 (6.2); 84 | 4.8 (3.5); 62 |
| Prior real-world cardiopulmonary resuscitations, mean (SD); total | 9.2 (21.4); 120 | 7.8 (7.3); 102 |
| Prior use of a manual defibrillator in either real-world or simulated environments, n (%) | 10 (77) | 9 (69) |
| Months since last manual-mode defibrillator use in either real-world or simulated environments, mean (SD) | 9.5 (10.2) | 13.9 (17.8) |

^a PALS: pediatric advanced life support.

^b The age difference between the randomization arms was not statistically significant.

^c Self-confidence was measured on a scale of 1 (not confident) to 5 (very confident).

Time to Critical Resuscitation Endpoint

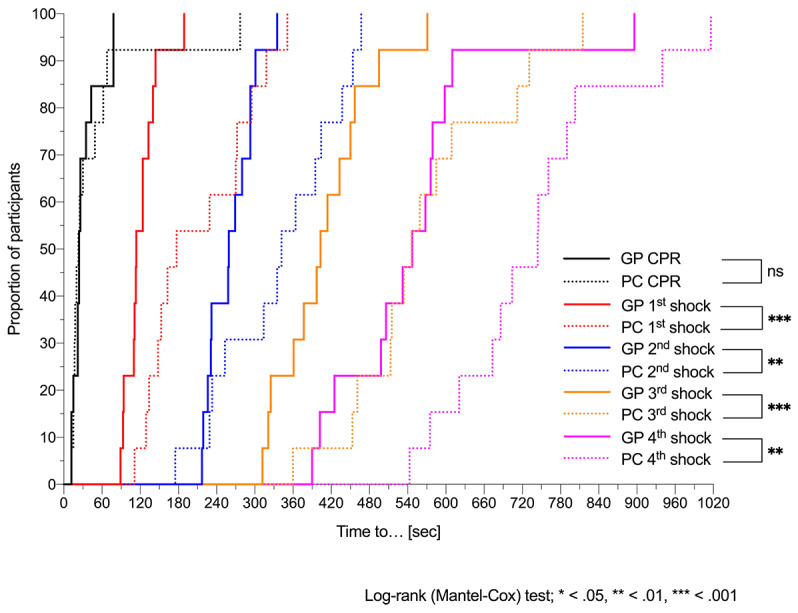

Using the Guiding Pad app, 11 residents out of 13 (85%) initiated chest compressions within 60 seconds of the onset of pVT (9/13, 69%, within 30 sec), and 12 out of 13 (92%) successfully defibrillated within 180 seconds (see Figure 7). Mean time elapsed between the onset of pVT and first defibrillation attempt was 121.4 seconds (SD 26.7).

Figure 7.

Time to cardiopulmonary resuscitation (CPR) and defibrillation attempts. Kaplan-Meier curves of the time elapsed between the onset of simulated pulseless ventricular tachycardia (pVT) and initiation of chest compression (ie, CPR) and each of the first, second, third, and fourth defibrillation attempts for residents using the Guiding Pad (GP) app vs conventional pediatric advanced life support (PALS) pocket cards (PCs). Log-rank (Mantel-Cox) test comparing curves: χ2=0.0 and P=.97 for CPR; χ2=13.9 and P<.001 for the first defibrillation attempt; χ2=8.9 and P=.003 for the second defibrillation attempt; χ2=13.3 and P<.001 for the third defibrillation attempt; and χ2=9.5 and P=.002 for the fourth defibrillation attempt. ns: not significant.

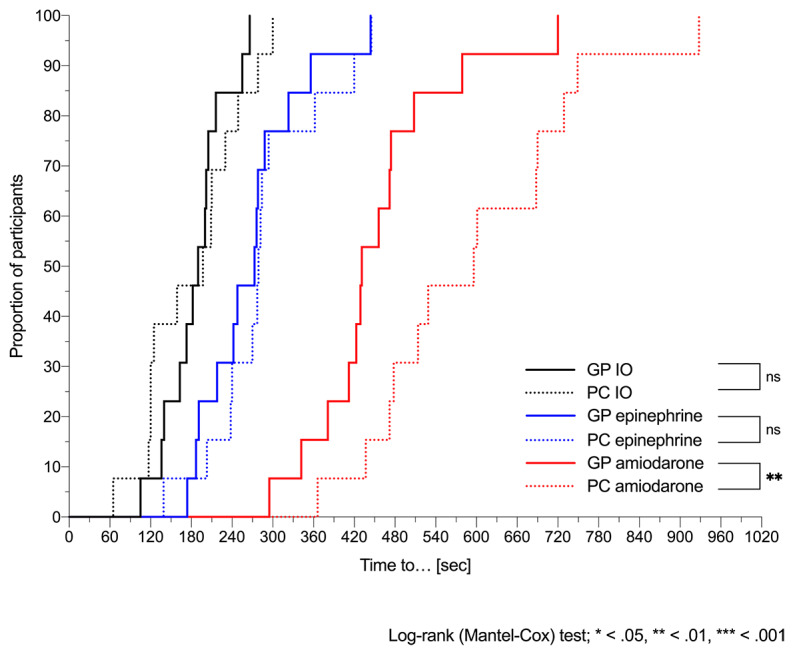

With PALS pocket cards, 10 of 13 residents (77%) started compressions within 60 seconds, 1 (8%) started compressions 277 seconds after the onset of pVT, and 6 (46%) failed to discharge the defibrillator within 180 seconds. Mean time from initiation of chest compression to the first shock was significantly shorter for residents using the app (89.3 sec) than for those using the PALS pocket cards (163 sec; P=.002). Mean times to other critical resuscitation endpoints are summarized in Table 2. All defibrillation attempts, as well as amiodarone administration, were delivered significantly earlier in group A than in group B. However, the app did not shorten the time to intraosseous access or epinephrine delivery (see Table 2 and Figure 8). In both groups, we compared the mean time to the first defibrillation attempt between residents with and without previous defibrillation experience in either real-world or simulated environments, but with our small sample size we found no significant difference (with the app: 124.1 vs 112.3 sec, P=.53; with the pocket cards: 268.8 vs 186.1 sec, P=.09).

Table 2.

Mean time to critical resuscitation endpoints.

| Outcome | Guiding Pad app (n=13), mean (SD)^a, seconds | Guiding Pad app, 95% CI | PALS^b pocket cards (n=13), mean (SD)^a, seconds | PALS pocket cards, 95% CI | Time difference^c, seconds | P value |
| --- | --- | --- | --- | --- | --- | --- |
| Start chest compression | 32.1 (22.1) | 18.7-45.4 | 48.5 (71.1) | 5.6-91.5 | 16.5 | .91^d |
| First defibrillation attempt | 121.4 (26.7) | 105.3-137.5 | 211.5 (81.2) | 162.5-260.6 | 90.1 | <.001 |
| Intraosseous route | 187.2 (45.4) | 159.7-214.6 | 183.0 (71.3) | 139.9-226.1 | 4.2 | .86 |
| Second defibrillation attempt | 262.5 (36.7) | 240.3-284.7 | 338.6 (93.8) | 282.0-395.3 | 76.1 | .01 |
| Epinephrine | 269.1 (74.8) | 223.8-314.3 | 287.2 (82.9) | 237.1-337.2 | 18.1 | .56 |
| Third defibrillation attempt | 408.9 (74.1) | 364.1-453.7 | 568.7 (124.4) | 493.5-643.9 | 159.8 | <.001 |
| Amiodarone | 455.5 (106.9) | 391.0-520.1 | 598.2 (154.7) | 504.7-691.7 | 142.7 | .01 |
| Fourth defibrillation attempt | 548.2 (127.6) | 471.1-625.4 | 738.5 (132.9) | 658.2-818.9 | 190.3 | <.001^d |

^a The mean time in each allocation group refers to the delay in seconds from the end of the clinical statement given by the study investigator to each critical resuscitation endpoint.

^b PALS: pediatric advanced life support.

^c Time difference is the absolute difference between the mean outcome of the PALS pocket cards group and that of the Guiding Pad app group.

^d Mann-Whitney test.
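The confidence intervals in Table 2 appear consistent with a t-based interval, mean ± t(0.975, n-1) × SD/√n, with n=13 per group. The sketch below hard-codes t(0.975, 12) ≈ 2.1788 as an assumption and reproduces the Guiding Pad interval for the first defibrillation attempt (105.3-137.5). It also computes the two-sample t statistic from summary data; with equal group sizes, the pooled (Student) and unpooled (Welch) statistics coincide and differ only in their degrees of freedom. Which variant the authors used is not stated.

```python
import math

# Assumed critical value: 97.5th percentile of Student's t with 12 df (n = 13).
# With SciPy available, scipy.stats.t.ppf(0.975, 12) returns about 2.1788.
T_975_DF12 = 2.1788


def mean_ci(mean: float, sd: float, n: int, t_crit: float = T_975_DF12):
    """Two-sided 95% CI for a mean from summary statistics."""
    half_width = t_crit * sd / math.sqrt(n)
    return mean - half_width, mean + half_width


def two_sample_t(m1, s1, n1, m2, s2, n2):
    """t statistic for the difference in means (group 2 minus group 1).

    Uses the unpooled standard error; with n1 == n2 this equals the pooled
    Student statistic, so only the degrees of freedom differ.
    """
    return (m2 - m1) / math.sqrt(s1**2 / n1 + s2**2 / n2)


lo, hi = mean_ci(121.4, 26.7, 13)             # Guiding Pad, first defibrillation
print(f"{lo:.1f}-{hi:.1f}")                    # prints "105.3-137.5"
t = two_sample_t(121.4, 26.7, 13, 211.5, 81.2, 13)
print(f"t = {t:.2f}")                          # prints "t = 3.80"
```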

Figure 8.

Time to intraosseous (IO) route and drug delivery. Kaplan-Meier curves of time elapsed between the onset of simulated pulseless ventricular tachycardia (pVT) and the IO insertion, epinephrine, and amiodarone delivery for residents using the Guiding Pad (GP) app vs conventional pediatric advanced life support (PALS) pocket cards (PCs). Log-rank (Mantel-Cox) test comparing curves: χ2=0.4 and P=.55 for the IO route; χ2=0.6 and P=.44 for epinephrine; and χ2=7.5 and P=.006 for amiodarone. ns: not significant.

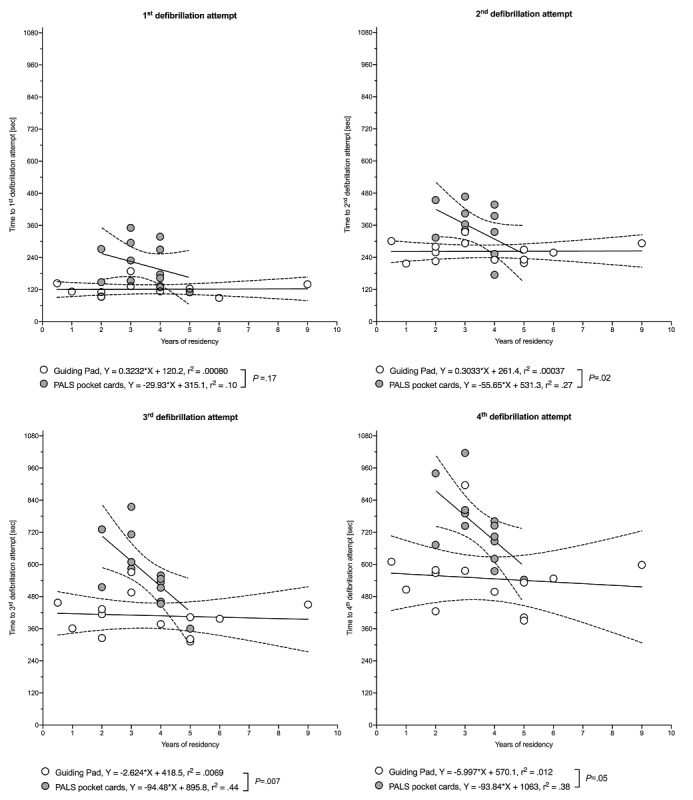

At the time of the study, 24 of 26 participants (92%) were residents with more than one year of pediatric training (ie, postgraduate years). In a simple linear regression model, using the app was associated with a significant or borderline significant reduction in time to defibrillation attempts regardless of postgraduate year, and with less dispersion of times around the mean defibrillation time than using the pocket cards (see Figure 9).
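The simple linear regressions summarized in the regression figures fit time = a + b × postgraduate years by ordinary least squares and report r². A standard-library sketch follows; the function name is hypothetical, and the numbers are illustrative rather than study data.

```python
import math


def simple_linear_regression(x, y):
    """Ordinary least squares fit of y = a + b*x; returns (a, b, r_squared)."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need at least two paired observations")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0:
        raise ValueError("x must vary to estimate a slope")
    b = sxy / sxx
    a = my - b * mx
    # r^2 is undefined when y is constant; report NaN in that case.
    r2 = sxy**2 / (sxx * syy) if syy else math.nan
    return a, b, r2


# Illustrative numbers only (years of residency vs seconds to first shock).
years = [1, 2, 3, 4, 5]
seconds = [250, 230, 200, 190, 160]
a, b, r2 = simple_linear_regression(years, seconds)
print(f"{a:.1f} {b:.1f} {r2:.2f}")  # prints "272.0 -22.0 0.98"
```

A negative slope, as in this made-up example, corresponds to an inverse association between postgraduate years and time to defibrillation.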

Figure 9.

Association between time to defibrillation attempts and years of residency. Data are shown as a regression line (solid) with 95% CI (dashed lines). P values and r2 values are based on simple linear regression analysis. White (Guiding Pad app) and grey (pediatric advanced life support [PALS] pocket cards) open circles denote each individual value.

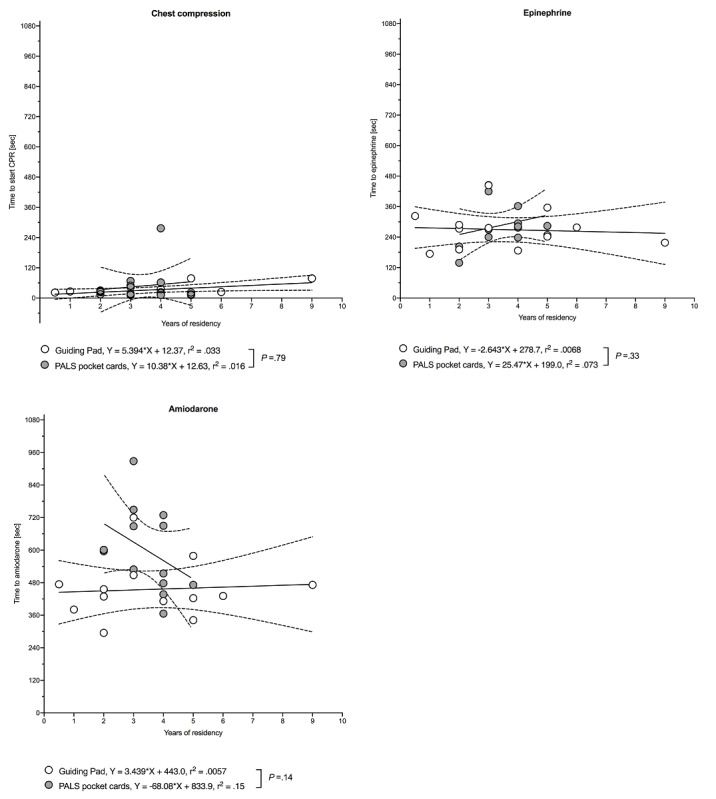

In group B, time to defibrillation attempts was inversely associated with the number of postgraduate years. In both groups, we observed no correlation between the time to initiation of chest compression or time to drug delivery and postgraduate years (see Figure 10). Moreover, we observed no relationship between previous CPR experience expressed as the number of prior CPR attempts on either a patient or a manikin and times to initiation of CPR, defibrillation attempts, or drug delivery (see Figure S2 in Multimedia Appendix 7 and Figure S3 in Multimedia Appendix 8).

Figure 10.

Association between time to chest compression or drug delivery and years of residency. Data are shown as a regression line (solid) with 95% CI (dashed lines). P values and r2 values are based on simple linear regression analysis. White (Guiding Pad app) and grey (pediatric advanced life support [PALS] pocket cards) open circles denote each individual value. CPR: cardiopulmonary resuscitation.

Errors and Deviations From the AHA pVT Algorithm

Errors and deviations from the AHA pVT algorithm are summarized in Table 3 and in Table S1 in Multimedia Appendix 4. The entire pVT algorithm was followed correctly in a stepwise fashion until ROSC by 12 out of 13 (92%) residents in group A and by only 3 out of 13 (23%) residents in group B (P=.001) (see Table 4). Importantly, the pVT rhythm was recognized correctly in 51 out of 52 opportunities (98%) by residents using the app, but in only 19 out of 52 opportunities (37%) by those using the pocket cards (P<.001).

Table 3.

Errors and deviations from the American Heart Association (AHA) pulseless ventricular tachycardia (pVT) algorithm with respect to critical resuscitation endpoints.

| Critical resuscitation endpoint | AHA recommended dose | Guiding Pad app (n=13): dose, mean (SD) | Guiding Pad app (n=13): 95% CI | Guiding Pad app (n=13): dose range | PALSa pocket cards (n=13): dose, mean (SD) | PALSa pocket cards (n=13): 95% CI | PALSa pocket cards (n=13): dose range |
|---|---|---|---|---|---|---|---|
| First defibrillation attempt (J/kg) | 2.00 | 2.00 (0) | 2.00-2.00 | 2.00-2.00 | 1.97 (0.76) | 1.51-2.43 | 0.60-4.00 |
| Second defibrillation attempt (J/kg) | 4.00 | 3.85 (0.55) | 3.51-4.18 | 2.00-4.00 | 3.62 (0.96) | 3.04-4.20 | 1.00-4.00 |
| Epinephrine 0.1 mg/mL (mL/kg) | 0.10 | 0.10 (0) | 0.10-0.10 | 0.10-0.10 | 0.09 (0.03) | 0.08-0.11 | 0.001-0.10 |
| Third defibrillation attempt (J/kg) | 4.00 | 4.15 (0.55) | 3.82-4.49 | 4.00-6.00 | 3.87 (1.57) | 2.92-4.82 | 0.52-6.00 |
| Amiodarone (mL/kg) | 0.10 | 0.10 (0) | 0.10-0.10 | 0.10-0.10 | 0.10 (0.01) | 0.10-0.10 | 0.10-0.14 |
| Fourth defibrillation attempt (J/kg) | 4.00 | 4.31 (1.11) | 3.64-4.98 | 4.00-8.00 | 5.45 (1.67) | 4.44-6.46 | 4.00-8.00 |

aPALS: pediatric advanced life support.

Table 4.

Errors and deviations from the American Heart Association (AHA) pulseless ventricular tachycardia (pVT) algorithm with respect to defibrillation and drug factors.

| Defibrillation and drug factors | Guiding Pad app (n=13), n (%) | PALSa pocket cards (n=13), n (%) |
|---|---|---|
| Correct number of shocks (N=52) | 51 (98) | 51 (98) |
| Error in shock or drug doses (N=78) | 1 (1) | 11 (14)b |
| pVT rhythm recognition (N=52) | 51 (98) | 19 (37)c |
| Correct AHA sequence (n=13) | 12 (92) | 3 (23)d |

aPALS: pediatric advanced life support.

bDifference between Guiding Pad app and PALS pocket cards groups: P=.005 (Fisher exact test).

cDifference between Guiding Pad app and PALS pocket cards groups: P<.001 (Fisher exact test).

dDifference between Guiding Pad app and PALS pocket cards groups: P=.001 (Fisher exact test).
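The P values in the footnotes to Table 4 can be checked from the reported counts alone. The following Python sketch is an illustration under stated assumptions, not the authors' analysis code: it assumes the conventional two-sided Fisher exact test, which sums the hypergeometric probabilities of all 2×2 tables with the same margins that are no more likely than the observed table, and `fisher_exact_two_sided` is a hypothetical helper written with the standard library only.

```python
from math import comb


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]].

    Rows are the two groups, columns are outcome present / absent. All tables
    with the observed margins are enumerated, and the hypergeometric
    probabilities of those no more likely than the observed table are summed.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Probability that group 1 holds x of the col1 "present" outcomes.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_observed = prob(a)
    x_min, x_max = max(0, col1 - row2), min(row1, col1)
    # A tiny relative tolerance keeps tables whose probability ties the observed one.
    return sum(p for p in map(prob, range(x_min, x_max + 1)) if p <= p_observed * (1 + 1e-7))


if __name__ == "__main__":
    # Counts from Table 4, as (app yes, app no, cards yes, cards no).
    print(fisher_exact_two_sided(12, 1, 3, 10))   # correct AHA sequence
    print(fisher_exact_two_sided(51, 1, 19, 33))  # pVT rhythm recognition
    print(fisher_exact_two_sided(1, 77, 11, 67))  # shock or drug dose errors
```

With the Table 4 counts, the sketch gives values that round to the reported P=.001 (correct AHA sequence), P<.001 (pVT rhythm recognition), and P=.005 (dose errors). In practice, `scipy.stats.fisher_exact` computes the same two-sided test; the hand-rolled version only makes the definition explicit.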

In group A, 1 defibrillation dose error out of 52 opportunities (2%) was committed during the whole scenario. This resident delivered a second asynchronous shock at half the recommended energy dose (2 J/kg instead of 4 J/kg). Because he alternated between following the app and relying on his own CPR experience, he also failed to comply with the algorithm and gave a mistimed 5 mg/kg dose of amiodarone 3 minutes after an unnecessary additional (2 J/kg) second defibrillation attempt. By comparison, 8 out of 52 defibrillation doses (15%) were erroneous during the whole scenario in group B (P<.03): 3 at the first defibrillation attempt (doses ranged from 0.6 to 4 J/kg instead of 2 J/kg), 2 at the second attempt (1.0 to 2 J/kg instead of 4 J/kg), and 3 at the third attempt (0.52 to 2 J/kg instead of 4 to 10 J/kg) (see Table 3). In addition, 2 of the 13 residents in group B (15%) wrongly used synchronized shocks at the first, second, or third attempt. In group A, the mean energy dose of the first defibrillation attempt was strictly in accordance with the recommendations, whereas the mean doses of the second, third, and fourth defibrillation attempts deviated from the AHA recommendations by 0.15 J/kg (95% CI of discrepancy: –0.49 to 0.18, P=.34), 0.15 J/kg (95% CI of discrepancy: –0.18 to 0.49, P=.34), and 0.31 J/kg (95% CI of discrepancy: –0.36 to 0.98, P=.34), respectively. In group B, the mean doses of all four defibrillation attempts deviated from the AHA recommendations by 0.03 J/kg (95% CI of discrepancy: –0.49 to 0.43; P=.89), 0.38 J/kg (95% CI of discrepancy: –0.97 to 0.20; P=.17), 0.13 J/kg (95% CI of discrepancy: –1.08 to 0.82; P=.77), and 1.45 J/kg (95% CI of discrepancy: 0.44-2.46; P=.009), respectively.
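The deviations and 95% CIs in this paragraph are consistent with a one-sample t-test of each group's mean energy dose against the AHA recommended value, using the summary statistics in Table 3; the text does not name the test, so this is an assumption. The Python sketch below recomputes one such row from the mean, SD, and n alone. To stay within the standard library, it evaluates Student's t distribution with the classical closed-form series for integer degrees of freedom and finds the critical value by bisection; `deviation_from_target`, `t_abs_cdf`, and `t_critical` are hypothetical helper names.

```python
import math


def t_abs_cdf(t: float, df: int) -> float:
    """P(|T| < t) for Student's t with integer df >= 1, via the closed-form series."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    t = abs(t)
    theta = math.atan(t / math.sqrt(df))
    s, c = math.sin(theta), math.cos(theta)
    if df % 2 == 0:
        # Even df: sin(theta) * (1 + (1/2)c^2 + (1*3)/(2*4)c^4 + ... up to c^(df-2))
        term = total = 1.0
        for k in range(1, df // 2):
            term *= c * c * (2 * k - 1) / (2 * k)
            total += term
        return s * total
    if df == 1:
        return 2.0 * theta / math.pi
    # Odd df >= 3: (2/pi) * (theta + sin(theta) * (c + (2/3)c^3 + ... up to c^(df-2)))
    term = total = c
    for k in range(2, (df + 1) // 2):
        term *= c * c * (2 * k - 2) / (2 * k - 1)
        total += term
    return 2.0 / math.pi * (theta + s * total)


def t_critical(df: int, level: float = 0.95) -> float:
    """Two-sided critical value t* such that P(|T| < t*) = level (bisection)."""
    lo, hi = 0.0, 1.0
    while t_abs_cdf(hi, df) < level:  # widen the bracket until it contains t*
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if t_abs_cdf(mid, df) < level:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def deviation_from_target(mean: float, sd: float, n: int, target: float, level: float = 0.95):
    """One-sample t-test of a group mean against a recommended value.

    Returns (mean - target, (ci_low, ci_high), two-sided P value).
    """
    if sd <= 0 or n < 2:
        raise ValueError("need sd > 0 and n >= 2")
    df = n - 1
    diff = mean - target
    se = sd / math.sqrt(n)
    half_width = t_critical(df, level) * se
    p_value = 1.0 - t_abs_cdf(diff / se, df)
    return diff, (diff - half_width, diff + half_width), p_value


if __name__ == "__main__":
    # Group B, fourth defibrillation attempt (Table 3): 5.45 (1.67) J/kg, n=13, AHA 4 J/kg
    diff, (low, high), p = deviation_from_target(5.45, 1.67, 13, 4.00)
    print(f"deviation {diff:.2f} J/kg, 95% CI {low:.2f} to {high:.2f}, P={p:.3f}")
```

For the fourth defibrillation attempt in group B, this reproduces the reported deviation of 1.45 J/kg (95% CI 0.44-2.46; P=.009). Because Table 3 reports rounded means and SDs, intervals recomputed for other rows can differ from the published ones in the last digit; `scipy.stats.t` would be the usual library choice for the distribution functions.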

In group A, epinephrine doses were given according to AHA recommendations. In group B, however, epinephrine was delivered on four occasions more than 2 minutes before the first shock (three times) or the second shock (one time), and one dose was 100-fold lower than recommended. Regarding amiodarone, among card users, one resident wrongly ordered the drug before the first shock, another after the fourth shock, a third at 1.4 times the recommended dose, and one resident even ordered a double dose before the fourth shock.

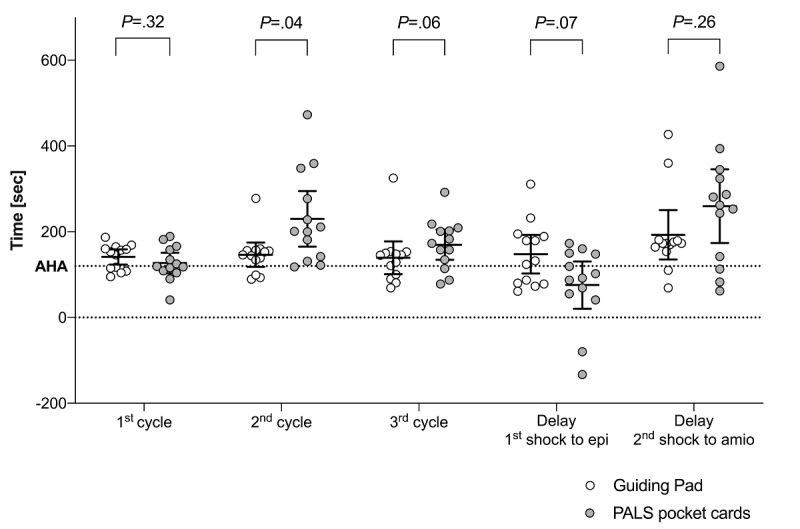

Figure 11 summarizes the hands-on time spent in each cycle of chest compression in both groups. With the Guiding Pad app, the mean durations of the first, second, and third cycles of chest compression between defibrillation attempts deviated from the AHA recommendation by 21.15 seconds (95% CI of discrepancy: 3.35-38.95; P=.02), 26.38 seconds (95% CI of discrepancy: –1.98 to 54.75; P=.07), and 19.30 seconds (95% CI of discrepancy: –18.88 to 57.49; P=.29), respectively. In group B, the mean durations of the first, second, and third cycles deviated from the AHA recommendation by 7.08 seconds (95% CI of discrepancy: –17.16 to 31.31; P=.54), 110.10 seconds (95% CI of discrepancy: 45.25-174.9; P=.003), and 49.85 seconds (95% CI of discrepancy: 14.58-85.11; P=.01), respectively. Mean delays between the first shock and epinephrine were 147.7 seconds (95% CI 102.6-192.7) for app users and 75.6 seconds (95% CI 20.5-130.7) for pocket card users, and mean delays between the second shock and amiodarone were 193.0 seconds (95% CI 135.2-250.8) and 259.6 seconds (95% CI 173.6-345.6), respectively (see Figure 11).

Figure 11.

Time spent in seconds by cycles of chest compression and between defibrillation attempts and drug delivery during simulated pulseless ventricular tachycardia (pVT) scenarios. Solid horizontal lines denote mean and 95% CI. White (Guiding Pad app) and grey (pediatric advanced life support [PALS] pocket cards) open circles denote each individual value. The horizontal dashed line denotes the 120-sec American Heart Association (AHA) recommendation for a complete cycle. Delays between the first shock and epinephrine (epi) delivery, and between the second shock and amiodarone (amio) delivery, are expressed as the time to drug delivery minus the time to defibrillation attempt, by resident and by allocation group. A negative time point denotes a drug given before the expected defibrillation attempt.

The questionnaire evaluating perceived stress and satisfaction scores was completed and returned by all participants. Participants in groups A and B rated the overall perceived stress before the scenario with mean scores of 5.3 (95% CI 4.0-6.6) and 5.1 (95% CI 3.9-6.3), respectively (P=.78). During the scenario, stress did not change significantly among app users (mean score 4.8, 95% CI 3.4-6.2; P=.55), whereas it increased significantly among residents relying on the PALS pocket cards (mean score 6.8, 95% CI 5.9-7.8; P=.01) and was higher than among app users (P=.01). Satisfaction tended to be greater among residents using the app (mean score 7.5, 95% CI 6.5-8.5) than among those using pocket cards (mean score 5.9, 95% CI 4.4-7.4) (P=.07).

Discussion

Principal Findings

In this randomized controlled trial, we report a reduced time to all defibrillation attempts and an improved adherence of approximately 70% to all CPR sequences of action outlined by the 2018 AHA pVT guidelines with the mobile app Guiding Pad compared with the PALS pocket reference cards among pediatric residents leading simulated CPR. Of note, this result was observed irrespective of residents’ previous years of experience or prior CPR knowledge. Interindividual variance was also reduced with the app, suggesting a worthwhile benefit of its use by residents with various experience levels. To our knowledge, this is the first study to investigate the benefit of a mobile device app to improve the performance and adherence of pediatric residents to AHA resuscitation guidelines.

Standards of care acknowledge that a prompt defibrillation attempt is an important determinant of survival after cardiac arrest [27]. As outlined by Topjian, a shorter duration of CPR is associated with higher rates of survival to discharge, supporting the concept of rapid recognition, prompt chest compression, and defibrillation as soon as possible [25]. During the first 15 minutes of CPR, survival and a favorable neurological outcome decrease linearly by 2.1% and 1.2% per minute, respectively [28]. Delays in initiating CPR have a detrimental effect on patient outcome, regardless of the quality of resuscitation [29]. Therefore, the AHA recommends that pulseless patients of any age receive immediate CPR, starting with chest compressions, followed by defibrillation within 180 seconds of the onset of a shockable rhythm. In our study, approximately 80% of residents in both allocation groups started compressions within 60 seconds of the onset of pVT. Importantly, the mean time from initiation of chest compression to the first shock was almost halved with the app compared with the PALS pocket cards. Among residents using the app, 92% (12/13) defibrillated successfully within 180 seconds of pVT onset, whereas 46% (6/13) of PALS pocket card users failed to discharge the defibrillator within 180 seconds. This is consistent with the results of Hunt et al, who observed that despite the availability of AHA recommendations, 66% of pediatric residents failed to start compressions within 60 seconds of the onset of a simulated pVT, 33% never started compressions, only 54% successfully defibrillated within 180 seconds, and 7% never discharged the defibrillator [30]. A more recent study among first-year pediatric residents showed a median time of 50 seconds to the initiation of CPR and 282 seconds to the first defibrillation [31].
Most alarmingly, the pVT rhythm in our trial was misidentified in almost two-thirds of opportunities (33/52) by residents holding the PALS reference cards in their hands. This could negatively affect patient outcome, as choosing the wrong electrical therapy, drugs, or algorithm in real life might impede the correct management of critically ill children and jeopardize their chance of survival.

Current AHA resuscitation guidelines emphasize 2 minutes of chest compressions between defibrillation attempts as optimal care for persistent pVT or ventricular fibrillation in children [32,33]. In this study, app users deviated less from this recommendation, a difference that reached statistical significance for the second and third cycles of chest compression. Moreover, following the first and second shocks and a 2-minute period of five cycles of CPR after each shock, antiarrhythmic drugs should be administered if the patient remains in cardiac arrest, with the aim of increasing the success of subsequent defibrillation attempts [32]. In this trial, both groups accurately administered epinephrine and amiodarone doses, with the exception of a 100-fold epinephrine underdose and a 1.4-fold amiodarone overdose in group B. On average, app users correctly respected a complete 2-minute cycle of chest compression-ventilation before administering epinephrine after the first shock. Conversely, and contrary to current AHA guidelines, pocket card users administered the drug too close to the first shock, possibly explaining the absence of a significant difference in time to epinephrine administration between groups, despite a significantly longer delay to deliver the first shock in group B. Owing to further delays, amiodarone was delivered significantly later, by more than 2 minutes, among residents not using the app.

Prompt defibrillation is crucial for the termination of ventricular fibrillation or pVT in order to achieve ROSC [33]. The AHA 2018 guidelines recommend treating pVT or ventricular fibrillation in children with an initial dose of 2 J/kg [23]. For subsequent shocks, a dose of 4 J/kg is recommended, although higher energy levels may be considered up to an adult dose, if not exceeding 10 J/kg. In this trial, residents using the PALS pocket cards were more prone to deviate from defibrillation doses than those using the app. In a total of 52 defibrillation attempts, they deviated in 36% of cases. These deviations were reduced to 6% when using the app. It would be interesting in further studies to determine whether this would translate into fewer deviations in shock doses in real life.
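The energy-dose rule described in this paragraph can be expressed as a simple range check per attempt. The Python sketch below is illustrative only: it is neither clinical software nor the Guiding Pad implementation, `recommended_shock_range` and `check_shock_dose` are hypothetical names, and it applies only the 10 J/kg ceiling, ignoring the weight-dependent adult-dose limit. It flags the kinds of dose errors reported in the Results, such as a first shock at 4 J/kg or a second shock at 2 J/kg.

```python
from typing import Tuple


def recommended_shock_range(attempt: int) -> Tuple[float, float]:
    """Return the (minimum, maximum) energy in J/kg for a 1-based attempt number."""
    if attempt < 1:
        raise ValueError("attempt numbers start at 1")
    if attempt == 1:
        return (2.0, 2.0)  # initial shock: 2 J/kg
    # Subsequent shocks: 4 J/kg, higher energy allowed but not above 10 J/kg
    # (the weight-dependent adult-dose ceiling is deliberately not modeled here).
    return (4.0, 10.0)


def check_shock_dose(attempt: int, dose_j_per_kg: float, tol: float = 1e-9) -> str:
    """Classify a delivered energy dose against the range for its attempt."""
    low, high = recommended_shock_range(attempt)
    if dose_j_per_kg < low - tol:
        return "underdosed"
    if dose_j_per_kg > high + tol:
        return "overdosed"
    return "within recommendation"


if __name__ == "__main__":
    # Example doses of the kind described in the Results.
    for attempt, dose in [(1, 2.0), (1, 4.0), (2, 2.0), (3, 6.0)]:
        print(attempt, dose, check_shock_dose(attempt, dose))
```

Note that this check treats, for example, a 6 J/kg third shock as acceptable, matching the error definition used in the Results rather than any departure from exactly 4 J/kg.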

While the app in this study offered better adherence to AHA resuscitation recommendations than conventional PALS pocket cards, we also found that it provided a relative advantage over the Google Glass-based app dedicated to the same purpose [17]. The small built-in screen of the glasses was a limiting factor reported by residents, as it prevented the algorithms from being displayed in full. Usability issues were also observed, with inopportune and time-consuming back-and-forth navigation through the algorithms. In this study, displaying the entire algorithm on the larger screen of a tablet, together with stepwise patient-centered guidance, appeared to improve adherence to AHA guidelines and speed up the performance of skills, thus allowing residents to better manage simulated CPR. It would be interesting in further studies to assess this assumption with certified emergency physicians or paramedics in simulated and real-life in- or out-of-hospital environments. Given the observed deviations from recommended resuscitation procedures, it might also be advisable to assess in further studies the educational impact of this app on the upstream training of rescuers’ p-IHCA technical skills.

Limitations

Our study has some limitations. First, it was conducted during a resuscitation simulation–based scenario rather than tested in real-life situations. However, high-fidelity simulation is an essential method to teach resuscitation skills and technologies that cannot be practiced during real CPR, as the diversity among patients and their diseases makes such studies difficult to standardize in critical situations. The low occurrence of p-IHCA also limits the implementation of randomized trials in real life [34]. Moreover, standardizing the scenario and the environment helped to avoid effect modifiers by limiting the influence of undesired variables on the outcomes. Realism was achieved, as reflected by the stress level experienced by participants, who considered the simulation to be as stressful as real CPR situations. Second, the 5-minute app training was delivered just before the scenario. In real life, the interval between training and actual use would probably be months. However, training with the app months before the study would have unblinded participants to its purpose and could have created a preparation bias. Third, the sample size limited stratified analyses to estimate the impact of PALS certification on the outcomes, but a recent study observed that improved adherence to AHA recommendations was not directly associated with PALS-trained providers [7]. Finally, we acknowledge that our findings might not be generalizable to providers with extensive CPR experience, such as pediatric emergency physicians. As only residents were assessed in this trial, further studies would be valuable to assess this assumption.

Conclusions

A PALS-based mobile app designed for tablets to interactively support residents during pediatric CPR contributed to a shorter time to first and subsequent defibrillation attempts, fewer medication and defibrillation dose errors, as well as a better adherence to AHA recommendations, compared with the conventional PALS pocket reference cards. Taken together, our results suggest that residents are not accurately following AHA recommendations during pediatric CPR when only supported by PALS pocket cards. A next step would be to determine, in real-life studies, whether this mobile app might benefit patients by improving the adherence and performance of residents to meet AHA resuscitation requirements in clinical practice.

Acknowledgments

We thank the residents for their contributions to the trial and Rosemary Sudan for providing editorial assistance. Geneva University Hospitals is the owner of the Guiding Pad app, which was not available at the time of submission on Google Play or the Apple App Store. This trial had financial support from the private foundation of Geneva University Hospitals (fund No. QS2-25). The funder of the study had no role in study design, data collection, data analysis, data interpretation, or writing of the report.

Abbreviations

- AHA: American Heart Association
- BLS: basic life support
- CONSORT-EHEALTH: Consolidated Standards of Reporting Trials of Electronic and Mobile Health Applications and Online TeleHealth
- CPR: cardiopulmonary resuscitation
- PALS: pediatric advanced life support
- PED: pediatric emergency department
- p-IHCA: pediatric in-hospital cardiac arrest
- p-OHCA: pediatric out-of-hospital cardiac arrest
- pVT: pulseless ventricular tachycardia
- ROSC: return of spontaneous circulation

Appendix

Multimedia Appendix 1: CONSORT-eHEALTH V1.6.2.

Multimedia Appendix 2: The pulseless ventricular tachycardia (pVT) resuscitation scenario.

Multimedia Appendix 3: Questionnaire for secondary outcomes, using 10-point Likert scales.

Multimedia Appendix 4: Interrater agreement on outcome analyses.

Multimedia Appendix 5: Bland and Altman analysis and intraclass correlation coefficient (ICC) on outcome analyses.

Multimedia Appendix 6: Bland and Altman analysis of pulseless ventricular tachycardia (pVT) algorithm review.

Multimedia Appendix 7: Association between time to defibrillation attempts and number of prior cardiopulmonary resuscitation (CPR) attempts.

Multimedia Appendix 8: Association between time to chest compression or drug delivery and number of prior cardiopulmonary resuscitation (CPR) attempts.

Footnotes

Authors' Contributions: JNS was responsible for the literature search and reading of articles, writing of the manuscript, preparation of figures and tables, and statistical analysis. JNS, LL, SM, and AC were responsible for data collection. FE was responsible for the development of the project software. JNS, LL, SM, AC, and FE were responsible for the concept and design of the study and critical review of manuscript content. All authors have contributed to, seen, and approved the final submitted version of the manuscript; had full access to all the data, including statistical reports and tables, in the study; and can take responsibility for the integrity of the data and the accuracy of the data analysis. The corresponding author (JNS) confirms that he had full access to the participants’ data and had final responsibility for the decision to submit. He further affirms that the manuscript is an honest, accurate, and transparent account of the study being reported, that no important aspects of the study have been omitted, and that any deviations from the study plan have been explained.

Conflicts of Interest: None declared.

Editorial Notice: This randomized study was not registered. The editor granted an exception as ICMJE does not require a registration if the purpose of the study is to examine the effect only on health care providers rather than patients. However, readers are advised to carefully assess the validity of any potential explicit or implicit claims related to primary outcomes or effectiveness, as retrospective registration does not prevent authors from changing their outcome measures retrospectively.

References

- 1. Holmberg MJ, Ross CE, Fitzmaurice GM, Chan PS, Duval-Arnould J, Grossestreuer AV, Yankama T, Donnino MW, Andersen LW, American Heart Association’s Get With The Guidelines–Resuscitation Investigators. Annual incidence of adult and pediatric in-hospital cardiac arrest in the United States. Circ Cardiovasc Qual Outcomes. 2019 Jul 09;12(7):e005580.

- 2. Nadkarni VM, Larkin GL, Peberdy MA, Carey SM, Kaye W, Mancini ME, Nichol G, Lane-Truitt T, Potts J, Ornato JP, Berg RA, National Registry of Cardiopulmonary Resuscitation Investigators. First documented rhythm and clinical outcome from in-hospital cardiac arrest among children and adults. JAMA. 2006 Jan 04;295(1):50–57. doi: 10.1001/jama.295.1.50.

- 3. Benjamin EJ, Virani SS, Callaway CW, Chamberlain AM, Chang AR, Cheng S, Chiuve SE, Cushman M, Delling FN, Deo R, de Ferranti SD, Ferguson JF, Fornage M, Gillespie C, Isasi CR, Jiménez MC, Jordan LC, Judd SE, Lackland D, Lichtman JH, Lisabeth L, Liu S, Longenecker CT, Lutsey PL, Mackey JS, Matchar DB, Matsushita K, Mussolino ME, Nasir K, O'Flaherty M, Palaniappan LP, Pandey A, Pandey DK, Reeves MJ, Ritchey MD, Rodriguez CJ, Roth GA, Rosamond WD, Sampson UK, Satou GM, Shah SH, Spartano NL, Tirschwell DL, Tsao CW, Voeks JH, Willey JZ, Wilkins JT, Wu JH, Alger HM, Wong SS, Muntner P, American Heart Association Council on Epidemiology and Prevention Statistics Committee and Stroke Statistics Subcommittee. Heart disease and stroke statistics-2018 update: A report from the American Heart Association. Circulation. 2018 Mar 20;137(12):e67–e492. doi: 10.1161/CIR.0000000000000558.

- 4. López-Herce J, Del Castillo J, Matamoros M, Cañadas S, Rodriguez-Calvo A, Cecchetti C, Rodriguez-Núñez A, Alvarez AC, Iberoamerican Pediatric Cardiac Arrest Study Network RIBEPCI. Factors associated with mortality in pediatric in-hospital cardiac arrest: A prospective multicenter multinational observational study. Intensive Care Med. 2013 Feb;39(2):309–318. doi: 10.1007/s00134-012-2709-7.

- 5. Cheng A, Nadkarni VM, Mancini MB, Hunt EA, Sinz EH, Merchant RM, Donoghue A, Duff JP, Eppich W, Auerbach M, Bigham BL, Blewer AL, Chan PS, Bhanji F, American Heart Association Education Science Investigators; on behalf of the American Heart Association Education Science and Programs Committee, Council on Cardiopulmonary, Critical Care, Perioperative and Resuscitation; Council on Cardiovascular and Stroke Nursing; Council on Quality of Care and Outcomes Research. Resuscitation education science: Educational strategies to improve outcomes from cardiac arrest: A scientific statement from the American Heart Association. Circulation. 2018 Aug 07;138(6):e82–e122. doi: 10.1161/CIR.0000000000000583.

- 6. Hunt EA, Duval-Arnould JM, Nelson-McMillan KL, Bradshaw JH, Diener-West M, Perretta JS, Shilkofski NA. Pediatric resident resuscitation skills improve after "rapid cycle deliberate practice" training. Resuscitation. 2014 Jul;85(7):945–951. doi: 10.1016/j.resuscitation.2014.02.025.

- 7. Auerbach M, Brown L, Whitfill T, Baird J, Abulebda K, Bhatnagar A, Lutfi R, Gawel M, Walsh B, Tay K, Lavoie M, Nadkarni V, Dudas R, Kessler D, Katznelson J, Ganghadaran S, Hamilton MF. Adherence to pediatric cardiac arrest guidelines across a spectrum of fifty emergency departments: A prospective, in situ, simulation-based study. Acad Emerg Med. 2018 Dec;25(12):1396–1408. doi: 10.1111/acem.13564.

- 8. Nelson McMillan K, Rosen MA, Shilkofski NA, Bradshaw JH, Saliski M, Hunt EA. Cognitive aids do not prompt initiation of cardiopulmonary resuscitation in simulated pediatric cardiopulmonary arrests. Simul Healthc. 2018 Feb;13(1):41–46. doi: 10.1097/SIH.0000000000000297.

- 9. Meaney PA, Bobrow BJ, Mancini ME, Christenson J, de Caen AR, Bhanji F, Abella BS, Kleinman ME, Edelson DP, Berg RA, Aufderheide TP, Menon V, Leary M, CPR Quality Summit Investigators, the American Heart Association Emergency Cardiovascular Care Committee, and the Council on Cardiopulmonary, Critical Care, Perioperative and Resuscitation. Cardiopulmonary resuscitation quality: [Corrected] Improving cardiac resuscitation outcomes both inside and outside the hospital: A consensus statement from the American Heart Association. Circulation. 2013 Jul 23;128(4):417–435. doi: 10.1161/CIR.0b013e31829d8654.

- 10. Field LC, McEvoy MD, Smalley JC, Clark CA, McEvoy MB, Rieke H, Nietert PJ, Furse CM. Use of an electronic decision support tool improves management of simulated in-hospital cardiac arrest. Resuscitation. 2014 Jan;85(1):138–142. doi: 10.1016/j.resuscitation.2013.09.013. http://europepmc.org/abstract/MED/24056391.

- 11. Low D, Clark N, Soar J, Padkin A, Stoneham A, Perkins GD, Nolan J. A randomised control trial to determine if use of the iResus© application on a smart phone improves the performance of an advanced life support provider in a simulated medical emergency. Anaesthesia. 2011 Apr;66(4):255–262. doi: 10.1111/j.1365-2044.2011.06649.x.

- 12. Merchant RM, Abella BS, Abotsi EJ, Smith TM, Long JA, Trudeau ME, Leary M, Groeneveld PW, Becker LB, Asch DA. Cell phone cardiopulmonary resuscitation: Audio instructions when needed by lay rescuers: A randomized, controlled trial. Ann Emerg Med. 2010 Jun;55(6):538–543.e1. doi: 10.1016/j.annemergmed.2010.01.020.

- 13. Semeraro F, Frisoli A, Loconsole C, Bannò F, Tammaro G, Imbriaco G, Marchetti L, Cerchiari EL. Motion detection technology as a tool for cardiopulmonary resuscitation (CPR) quality training: A randomised crossover mannequin pilot study. Resuscitation. 2013 Apr;84(4):501–507. doi: 10.1016/j.resuscitation.2012.12.006.

- 14. Pérez Alonso N, Pardo Rios M, Juguera Rodriguez L, Vera Catalan T, Segura Melgarejo F, Lopez Ayuso B, Martí Nez Riquelme C, Lasheras Velasco J. Randomised clinical simulation designed to evaluate the effect of telemedicine using Google Glass on cardiopulmonary resuscitation (CPR). Emerg Med J. 2017 Nov;34(11):734–738. doi: 10.1136/emermed-2016-205998.

- 15. Sondheim SE, Devlin J, Seward WH, Bernard AW, Feinn RS, Cone DC. Recording out-of-hospital cardiac arrest treatment via a mobile smartphone application: A feasibility simulation study. Prehosp Emerg Care. 2019;23(2):284–289. doi: 10.1080/10903127.2018.1490838.

- 16. Drummond D, Arnaud C, Guedj R, Duguet A, de Suremain N, Petit A. Google Glass for residents dealing with pediatric cardiopulmonary arrest: A randomized, controlled, simulation-based study. Pediatr Crit Care Med. 2017 Feb;18(2):120–127. doi: 10.1097/PCC.0000000000000977.

- 17. Siebert JN, Ehrler F, Gervaix A, Haddad K, Lacroix L, Schrurs P, Sahin A, Lovis C, Manzano S. Adherence to AHA guidelines when adapted for augmented reality glasses for assisted pediatric cardiopulmonary resuscitation: A randomized controlled trial. J Med Internet Res. 2017 May 29;19(5):e183. doi: 10.2196/jmir.7379. https://www.jmir.org/2017/5/e183/

- 18. Eysenbach G, CONSORT-EHEALTH Group. CONSORT-EHEALTH: Improving and standardizing evaluation reports of web-based and mobile health interventions. J Med Internet Res. 2011 Dec 31;13(4):e126. doi: 10.2196/jmir.1923. https://www.jmir.org/2011/4/e126/

- 19. Cheng A, Kessler D, Mackinnon R, Chang TP, Nadkarni VM, Hunt EA, Duval-Arnould J, Lin Y, Cook DA, Pusic M, Hui J, Moher D, Egger M, Auerbach M, International Network for Simulation-based Pediatric Innovation, Research, and Education (INSPIRE) Reporting Guidelines Investigators. Reporting guidelines for health care simulation research: Extensions to the CONSORT and STROBE statements. Simul Healthc. 2016 Aug;11(4):238–248. doi: 10.1097/SIH.0000000000000150.

- 20. Sealed Envelope. [2020-05-11]. https://www.sealedenvelope.com/

- 21. Samson RA, Nadkarni VM, Meaney PA, Carey SM, Berg MD, Berg RA, American Heart Association National Registry of CPR Investigators. Outcomes of in-hospital ventricular fibrillation in children. N Engl J Med. 2006 Jun 01;354(22):2328–2339. doi: 10.1056/NEJMoa052917.

- 22. Siebert JN, Ehrler F, Combescure C, Lovis C, Haddad K, Hugon F, Luterbacher F, Lacroix L, Gervaix A, Manzano S, PedAMINES Trial Group. A mobile device application to reduce medication errors and time to drug delivery during simulated paediatric cardiopulmonary resuscitation: A multicentre, randomised, controlled, crossover trial. Lancet Child Adolesc Health. 2019 May;3(5):303–311. doi: 10.1016/S2352-4642(19)30003-3.

- 23. Duff JP, Topjian A, Berg MD, Chan M, Haskell SE, Joyner BL, Lasa JJ, Ley SJ, Raymond TT, Sutton RM, Hazinski MF, Atkins DL. 2018 American Heart Association focused update on pediatric advanced life support: An update to the American Heart Association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2018 Dec 04;138(23):e731–e739. doi: 10.1161/CIR.0000000000000612.

- 24. Bircher NG, Chan PS, Xu Y, American Heart Association’s Get With The Guidelines–Resuscitation Investigators. Delays in cardiopulmonary resuscitation, defibrillation, and epinephrine administration all decrease survival in in-hospital cardiac arrest. Anesthesiology. 2019 Mar;130(3):414–422. doi: 10.1097/ALN.0000000000002563.

- 25.Topjian A. Shorter time to defibrillation in pediatric CPR: Children are not small adults, but shock them like they are. JAMA Netw Open. 2018 Sep 07;1(5):e182653. doi: 10.1001/jamanetworkopen.2018.2653. https://jamanetwork.com/journals/jamanetworkopen/fullarticle/10.1001/jamanetworkopen.2018.2653. [DOI] [PubMed] [Google Scholar]

- 26.Dilley SJ, Weiland TJ, O'Brien R, Cunningham NJ, Van Dijk JE, Mahoney RM, Williams MJ. Use of a checklist during observation of a simulated cardiac arrest scenario does not improve time to CPR and defibrillation over observation alone for subsequent scenarios. Teach Learn Med. 2015;27(1):71–79. doi: 10.1080/10401334.2014.979182. [DOI] [PubMed] [Google Scholar]

- 27.Chan PS, Krumholz HM, Nichol G, Nallamothu BK, American Heart Association National Registry of Cardiopulmonary Resuscitation Investigators Delayed time to defibrillation after in-hospital cardiac arrest. N Engl J Med. 2008 Jan 03;358(1):9–17. doi: 10.1056/NEJMoa0706467. [DOI] [PubMed] [Google Scholar]

- 28.Matos RI, Watson RS, Nadkarni VM, Huang H, Berg RA, Meaney PA, Carroll CL, Berens RJ, Praestgaard A, Weissfeld L, Spinella PC, American Heart Association’s Get With The Guidelines–Resuscitation (Formerly the National Registry of Cardiopulmonary Resuscitation) Investigators. Duration of cardiopulmonary resuscitation and illness category impact survival and neurologic outcomes for in-hospital pediatric cardiac arrests. Circulation. 2013 Jan 29;127(4):442–451. doi: 10.1161/CIRCULATIONAHA.112.125625.

- 29.Ross JC, Trainor JL, Eppich WJ, Adler MD. Impact of simulation training on time to initiation of cardiopulmonary resuscitation for first-year pediatrics residents. J Grad Med Educ. 2013 Dec;5(4):613–619. doi: 10.4300/JGME-D-12-00343.1. http://europepmc.org/abstract/MED/24455010.

- 30.Hunt EA, Vera K, Diener-West M, Haggerty JA, Nelson KL, Shaffner DH, Pronovost PJ. Delays and errors in cardiopulmonary resuscitation and defibrillation by pediatric residents during simulated cardiopulmonary arrests. Resuscitation. 2009 Jul;80(7):819–825. doi: 10.1016/j.resuscitation.2009.03.020.

- 31.Jeffers J, Eppich W, Trainor J, Mobley B, Adler M. Development and evaluation of a learning intervention targeting first-year resident defibrillation skills. Pediatr Emerg Care. 2016 Apr;32(4):210–216. doi: 10.1097/PEC.0000000000000765.

- 32.de Caen AR, Berg MD, Chameides L, Gooden CK, Hickey RW, Scott HF, Sutton RM, Tijssen JA, Topjian A, van der Jagt ÉW, Schexnayder SM, Samson RA. Part 12: Pediatric Advanced Life Support: 2015 American Heart Association guidelines update for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2015 Nov 03;132(18 Suppl 2):S526–S542. doi: 10.1161/CIR.0000000000000266. http://europepmc.org/abstract/MED/26473000.

- 33.Kleinman ME, Chameides L, Schexnayder SM, Samson RA, Hazinski MF, Atkins DL, Berg MD, de Caen AR, Fink EL, Freid EB, Hickey RW, Marino BS, Nadkarni VM, Proctor LT, Qureshi FA, Sartorelli K, Topjian A, van der Jagt EW, Zaritsky AL. Part 14: Pediatric Advanced Life Support: 2010 American Heart Association guidelines for cardiopulmonary resuscitation and emergency cardiovascular care. Circulation. 2010 Nov 02;122(18 Suppl 3):S876–S908. doi: 10.1161/CIRCULATIONAHA.110.971101.

- 34.Cheng A, Auerbach M, Hunt EA, Chang TP, Pusic M, Nadkarni V, Kessler D. Designing and conducting simulation-based research. Pediatrics. 2014 Jun;133(6):1091–1101. doi: 10.1542/peds.2013-3267.

Supplementary Materials

CONSORT-eHEALTH V1.6.2.

The pulseless ventricular tachycardia (pVT) resuscitation scenario.

Questionnaire for secondary outcomes, using 10-point Likert scales.

Interrater agreement on outcome analyses.

Bland and Altman analysis and intraclass correlation coefficient (ICC) on outcome analyses.

Bland and Altman analysis of pulseless ventricular tachycardia (pVT) algorithm review.

Association between time to defibrillation attempts and number of prior cardiopulmonary resuscitation (CPR) attempts.

Association between time to chest compression or drug delivery and number of prior cardiopulmonary resuscitation (CPR) attempts.