Abstract

Purpose:

Brain-computer interface (BCI) techniques may provide computer access for individuals with severe physical impairments. However, the relatively hidden nature of BCI control obscures how BCI systems work behind the scenes, making it difficult to understand how electroencephalography (EEG) records the BCI related brain signals, what brain signals are recorded by EEG, and why these signals are targeted for BCI control. Furthermore, in the field of speech-language-hearing, signals targeted for BCI application have been of primary interest to clinicians and researchers in the area of augmentative and alternative communication (AAC). However, signals utilized for BCI control reflect sensory, cognitive and motor processes, which are of interest to a range of related disciplines including speech science.

Method:

This tutorial was developed by a multidisciplinary team emphasizing primary and secondary BCI-AAC related signals of interest to speech-language-hearing.

Results:

An overview of BCI-AAC related signals is provided, discussing 1) how BCI signals are recorded via EEG, 2) what signals are targeted for non-invasive BCI control, including the P300, sensorimotor rhythms, steady state evoked potentials, contingent negative variation, and the N400, and 3) why these signals are targeted. During tutorial creation, attention was given to supporting EEG and BCI understanding for those without an engineering background.

Conclusion:

Tutorials highlighting how BCI-AAC signals are elicited and recorded can help increase interest and familiarity with EEG and BCI techniques and provide a framework for understanding key principles behind BCI-AAC design and implementation.

Keywords: Brain-computer interface, BCI, electroencephalography, EEG, augmentative and alternative communication, AAC, event-related potential, steady state evoked potentials, sensorimotor rhythm, contingent negative variation, P300, N400

Electroencephalography (EEG) techniques non-invasively record brain activity at the level of the scalp via electrodes placed in a cap. The application of EEG techniques allows investigators to understand what happens in the brain when someone completes different tasks such as those related to movement, speech perception, language processing, and cognitive processes, in addition to exploring differences in brain activity demonstrated for those with varying physical, cognitive, and/or sensory impairments. One area of rapidly expanding EEG research focuses on building technologies around the recorded brain signals with the aim of providing computer control for those with severe physical impairments who may find current methods of computer access ineffective or inefficient. Currently, all computer access methods require some form of physical movement for access (e.g., eye movement for eye-gaze systems). However, brain-computer interface (BCI) technology seeks to translate the recorded brain activity into computer control, circumventing the necessity for an individual to possess a reliable form of motor movement for computer access. Consequently, BCI technology may serve as a computer access method, which allows individuals with severe physical impairments to utilize computer systems for varying applications such as augmentative and alternative communication (BCI-AAC) control (e.g., Brumberg, Pitt, Mantie-Kozlowski, & Burnison, 2018). Therefore, in the field of speech-language-hearing, signals targeted for BCI application have been of primary interest to clinicians and researchers in the areas of AAC and assistive technology. Further, the signals utilized for BCI control are applicable to a range of fields related to speech-language-hearing, including speech science to better understand the sensory, motor and cognitive processes of speech.

High technology techniques for AAC access such as eye-gaze can sometimes be complex for individuals who use AAC to understand (McCord, & Soto, 2004). However, as existing AAC access methods require some form of physical movement, it is possible to make basic associations between an action (e.g., eye movement or switch activation) and device control. In contrast, the relatively covert or hidden nature of BCI control obscures how these systems work for those who are not directly involved in BCI research and signal processing developments. Specifically, it may be unclear how EEG records the BCI related brain signals, what brain signals are being recorded by EEG, and why these signals are targeted for BCI control. Understanding the how, what, and why behind BCI is an important foundation for professionals looking to implement BCI technology and is necessary to comprehend how EEG signals are acquired and the rationales behind BCI designs that are tailored to elicit a specific target EEG signal such as the P300 for BCI control. Furthermore, while there are resources reviewing BCI techniques (e.g., Wolpaw, Birbaumer, McFarland, Pfurtscheller & Vaughan, 2002; Brumberg, Pitt, Mantie-Kozlowski, & Burnison, 2018; Akcakaya et al., 2014), there are limited works available focused on educating clinical and research professionals, along with other BCI stakeholders (e.g., clients, family, and caregivers) about the preliminary processes governing how EEG signals are recorded, why a given signal is suitable for BCI use, and how this impacts interface design. These foundations may not be fully intuitive for individuals without a background in science, engineering or BCI development, impeding the involvement of clinical professionals and stakeholders in the BCI process. 
This lack of background knowledge in BCI processes may decrease stakeholders’ comfort and familiarity with high technology-based AAC and BCI applications (e.g., Baxter, Enderby, Evans, & Judge, 2012; Blain-Moraes, Schaff, Gruis, Huggins, & Wren, 2012), possibly increasing their anxiety (Jeunet, 2016), and ultimately impeding the translation of BCI research into clinical practice by limiting stakeholder involvement. Therefore, by demystifying the processes behind BCI and EEG technology (Jeunet, 2016), this tutorial aims to provide an EEG and BCI overview regarding 1) how EEG records BCI related signals, 2) what EEG signals are targeted for BCI control and development, and 3) why these signals are targeted.

Methods

A multidisciplinary team including a BCI-AAC developer, a neuroscientist with experience in BCI development and EEG data collection and analyses, and three speech-language pathologists (two with experience in the clinical translation of BCI-AAC technology, and the other with experience in neuroscience and EEG-based research) identified foundational principles of EEG function. In addition, the multidisciplinary team identified major paradigms for discussion that include auditory and visually elicited EEG signals primarily used for direct BCI control (i.e., auditory and visual P300, steady state evoked potentials, sensorimotor modulations, and the contingent negative variation), along with a secondary signal, the N400, which to date has largely been utilized to improve P300-based BCI accuracy. Tutorial sections were informed via recent literature on EEG-based BCI research and experience, with an emphasis on research related to the field of speech-language-hearing and speech science. To outline the how, what, and why of BCI technology, the tutorial is split into two sections, with section 1 discussing how brain signals are recorded via EEG, and section 2 discussing what primary and secondary signals are being recorded, in addition to why these signals are targeted for BCI applications.

How EEG Signals are Recorded

To understand the utility of BCI for AAC applications, it is important to gain a fundamental knowledge about why EEG is used for BCI-AAC applications, along with an understanding of how the EEG system records the targeted BCI signals. Therefore, in the following section, we will outline the basic foundations necessary for understanding the use of EEG in a BCI-AAC context, including the suitability of EEG for BCI-AAC applications, a description of the EEG system, and the underlying brain activity the system records.

EEG for BCI Primer

In contrast to invasive brain recording methods such as electrocorticography, which require surgery for the electrode array to be placed on or within the brain’s cortex (e.g., Brumberg & Guenther 2010), EEG non-invasively measures brain activity at the scalp via electrodes placed in a cap, which the individual wears during EEG recordings. Therefore, while EEG provides decreased signal to noise ratios in comparison to invasive methods (e.g., Brumberg & Guenther 2010), it provides a practical alternative to record brain signals used for BCI-AAC control without requiring invasive surgery. A primary reason EEG is used for BCI control is its high temporal resolution, which allows for the measurement of brain activity from one millisecond to the next. As many aspects of attention and perception appear to operate on a scale of tens of milliseconds, this high temporal resolution allows a range of neurological signals that may be used for BCI-AAC control to be identified in the EEG signal, such as the P300, steady state evoked potentials, and those involved in motor processes (e.g., Brumberg, Pitt, Mantie-Kozlowski, & Burnison, 2018; Akcakaya et al., 2014). These varied brain signals can be elicited via paradigms that do not require overt physical movements, making them ideal candidates for communication device control for those without functional motor movements, or those who find precise movements (e.g., eye movements) highly fatiguing.

Traditionally, brain signals used in BCI-AAC applications are recorded with EEG systems using silver chloride or tin electrodes, which require the application of electrolyte to provide a conductive path between the scalp and the recording electrode, lowering electrical impedance. Different BCI-AAC systems utilize varied numbers of electrodes depending on the specific BCI system and the targeted signal. Research-based BCI applications may use 64 electrode locations or more (e.g., Brumberg, Burnison & Pitt, 2016). However, more commercial BCI systems may use fewer electrode locations (e.g., 8 electrode locations; Guger et al., 2009) to limit setup burdens. To understand the brain areas involved in the generation of BCI signals (e.g., visual, auditory, sensorimotor), it is important to understand the basic foundations of how electrode locations are identified. Furthermore, the electrode location of primary interest for a target BCI signal may influence BCI-AAC assessment criteria, as visually elicited BCI signals such as the visual P300 and the steady state visually evoked response are commonly recorded on posterior recording electrodes (e.g., P, O and PO locations; Combaz et al., 2013), which may be impeded by a wheelchair headrest (e.g., Pitt & Brumberg, 2018a). EEG electrode locations are traditionally identified using the 10–20 system, which describes different electrode recording locations by using letters and numbers to identify each electrode’s adjacent brain area and lateralization. Regarding underlying brain areas, electrodes identified with the letter F are located over frontal areas of the brain, C over central areas, T over temporal areas, P over parietal areas, and O over occipital areas at the back of the head. Even numbered electrode locations indicate right side lateralization, odd numbers indicate left side lateralization, and Z or zero refers to electrodes placed along the midline (e.g., Teplan, 2002).
For instance, electrode locations CZ, C3, and C4 refer to the centrally located sensorimotor areas of the brain found over midline (CZ), left lateral (C3) and right lateral (C4) locations. For a full review of the 10–20 and other placement systems, see Jurcak, Tsuzuki & Dan (2007).
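
As an illustration of the naming convention described above, the following Python sketch decodes a simple 10–20 style label into its region letters and lateralization. The function name `describe_electrode` and the `REGIONS` table are hypothetical names introduced here for illustration only. The sketch assumes labels built from the five letters listed above, so other labels (e.g., Fp1) are deliberately rejected rather than guessed at.

```python
import re

# Region letters as listed in the text; combined prefixes such as "PO"
# are decoded letter by letter (illustrative assumption).
REGIONS = {"F": "frontal", "C": "central", "T": "temporal",
           "P": "parietal", "O": "occipital"}

def describe_electrode(label):
    """Return (regions, side) for a simple 10-20 style label such as 'C3'."""
    match = re.fullmatch(r"([FCTPO]+)([Zz]|\d+)", label)
    if match is None:
        raise ValueError(f"unrecognized electrode label: {label!r}")
    letters, suffix = match.groups()
    regions = [REGIONS[ch] for ch in letters]
    if suffix.upper() in ("Z", "0"):
        side = "midline"      # Z or zero marks the midline
    elif int(suffix) % 2 == 0:
        side = "right"        # even numbers: right hemisphere
    else:
        side = "left"         # odd numbers: left hemisphere
    return regions, side

for name in ("CZ", "C3", "C4", "PO8"):
    print(name, describe_electrode(name))
```

Under these assumptions, `describe_electrode("C3")` returns `(['central'], 'left')`, matching the left lateral sensorimotor location described in the text.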

Ultimately, the EEG techniques described above measure the summed electrical activity of thousands to millions of neurons. When neurons in the brain communicate, they release neurotransmitters across the space between them, called the synapse. The neurotransmitter released from the presynaptic neuron then binds with receptors on the other side of the synapse, which are known as postsynaptic receptors. The released neurotransmitter that binds to these postsynaptic receptors located on the neuron’s dendrites will result in either postsynaptic inhibition or excitation. With sufficient excitation, an action potential will be generated, propagating the signal along the axon to other neurons. The electrical activity associated with individual action potentials is not sufficient for observation using scalp EEG, but the voltages of the postsynaptic potentials of cortical pyramidal cells, when summed together during synchronous firing, become large enough to be recorded by EEG.

What Brain Signals are Recorded by EEG, and Why They are Targeted for BCI-AAC Control

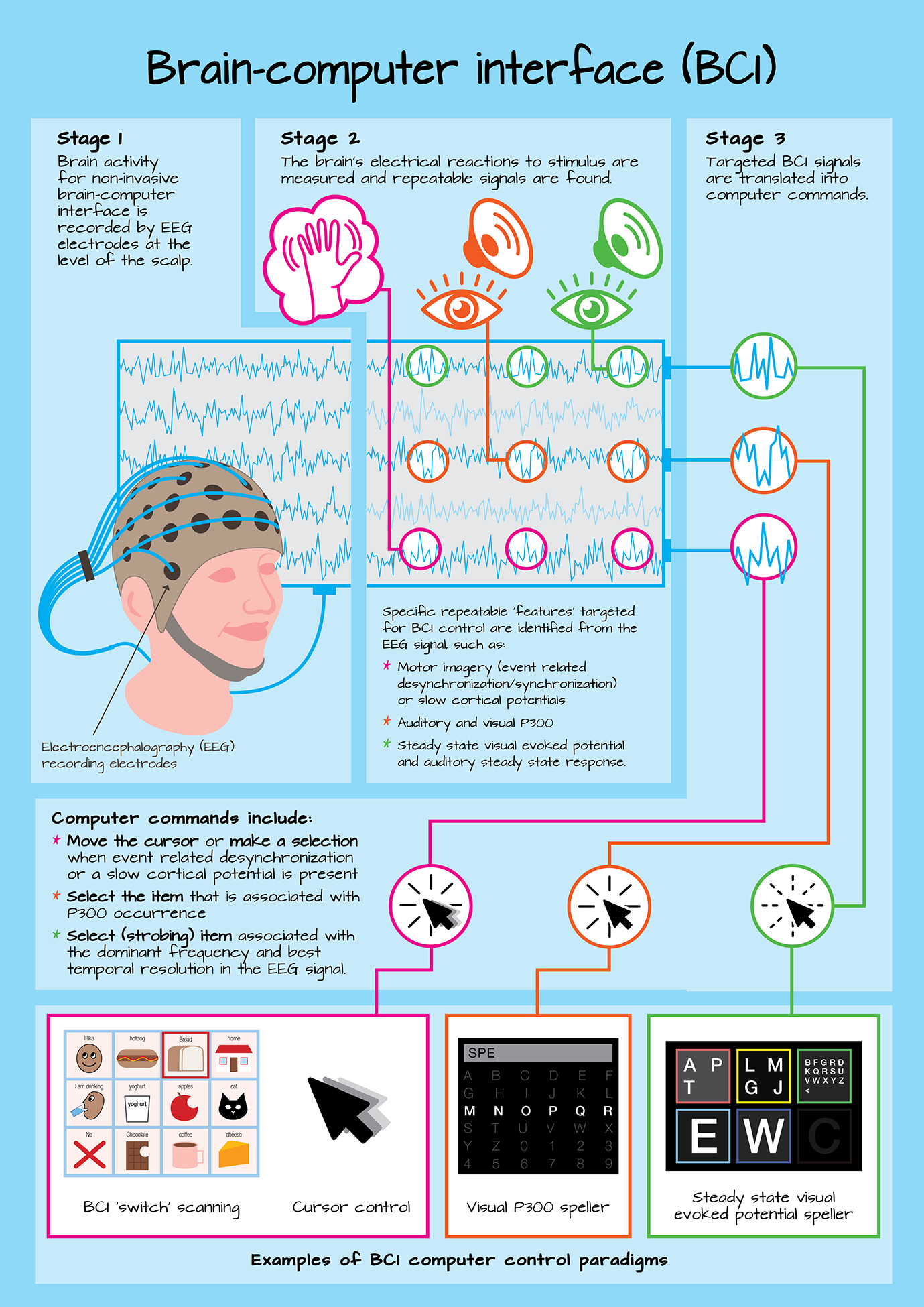

A variety of EEG paradigms are used to elicit brain signals related to BCI control and development, and each targeted signal is related to different sensory, cognitive, motor and language processes, each having its own application to BCI. The following section provides a review of the primary signals used for direct BCI-AAC control (i.e., auditory and visual P300, steady state evoked potentials, sensorimotor modulations, and the contingent negative variation), along with a secondary signal, the N400, which to date has largely been utilized to improve P300-based BCI accuracy. Further, to inform BCI-AAC assessment, fundamental factors influencing signal production and BCI performance will be noted. A diagram highlighting different brain signals and how they are utilized for BCI control is provided in figure 1. In greater detail, stage 1 of figure 1 reflects the EEG recording of brain signals via the EEG electrodes placed in a fabric cap, as described in the How portion of this tutorial. Stage 2 reflects the second portion of this tutorial, outlining what primary signals are utilized for BCI control, including steady state evoked potentials (top line of the EEG output), P300 (second line of the EEG output), and sensorimotor rhythm (bottom line of the EEG output). Stage 3 then reflects the final tutorial portion, outlining why the primary BCI signals are targeted for communication device control (e.g., the interface item associated with the occurrence of the P300 may be identified for selection), along with examples of graphical interfaces for BCI-AAC. The N400 is not directly included in this figure since its primary role in BCI to date is to support increased performance for grid-based P300 BCI devices.

Figure 1.

A schematic outlining the basic stages of BCI operation, including how the BCI signal is recorded (stage 1), what signals are targeted for BCI control (stage 2), and why they are targeted for BCI control (stage 3), along with examples of different BCI control paradigms.

Primary Signals related to BCI-AAC control

Auditory and visual P300 event-related potential.

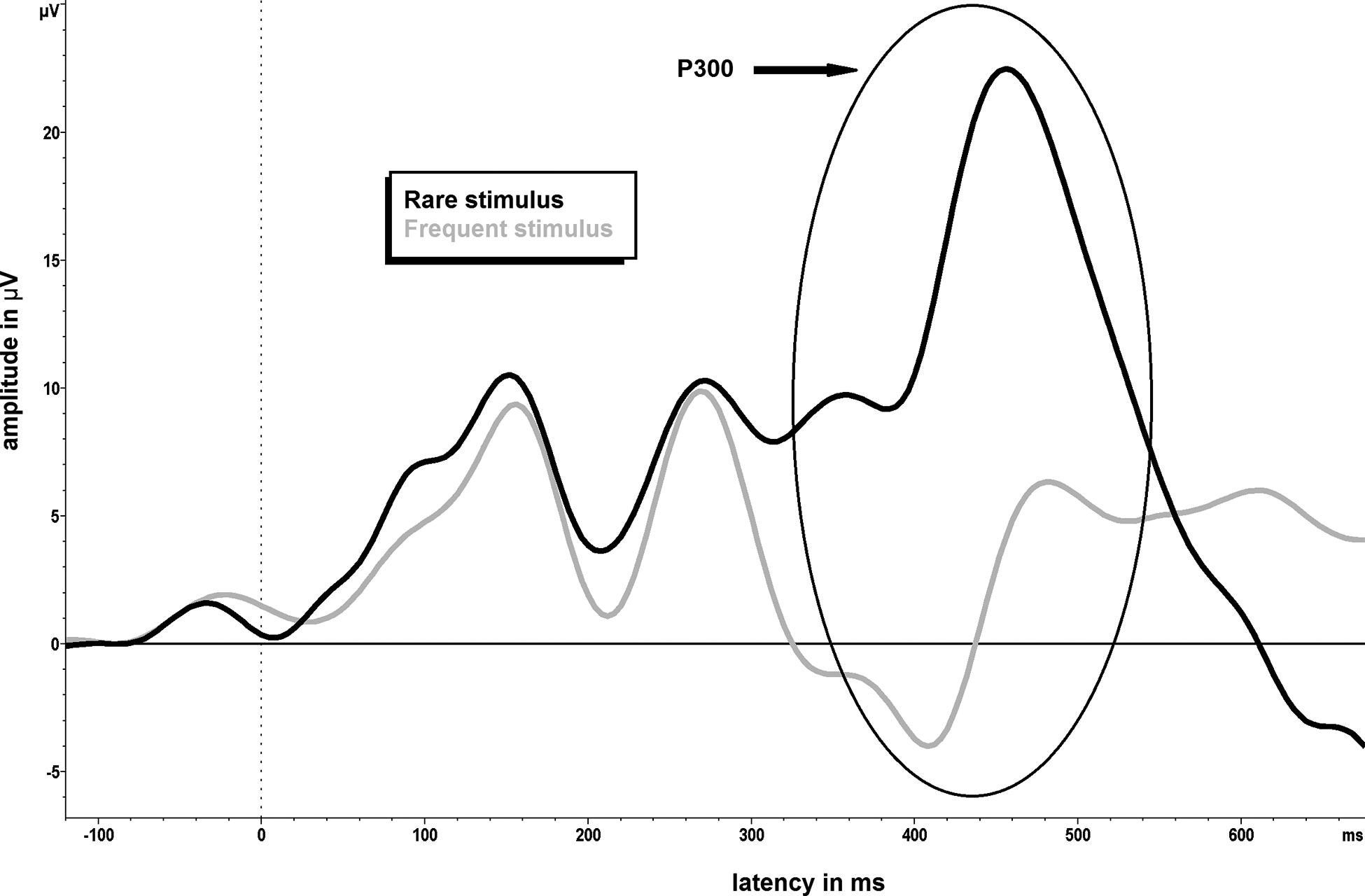

The P300 event-related potential (ERP) is a very popular signal targeted for BCI-AAC control due to its relatively large amplitude and ability to be elicited through auditory, visual and tactile sensory modalities (e.g., Guger et al., 2009). ERPs are small voltage changes recorded by EEG over time, which are generated in response to specific events or stimuli (e.g., onset of a visual or auditory stimulus; Luck, 2014). Increased ERP deflections in the EEG signal, either positive or negative, are associated with increased neural activity, with decreased latency representing a shorter time for allocation of associated cortical resources (e.g., Polich et al., 1983). As with most ERPs, the P300 signal name reflects the polarity and timing of the EEG voltage deflection after the initial stimulus onset or event. Specifically, the P300 is a positive going deflection in the EEG signal reaching its peak amplitude at a latency of approximately 300 ms (figure 2), though its latency may vary from around 250–500 ms depending upon factors such as an individual’s age, stimulus modality (i.e., auditory, visual, and tactile presentations), and other task conditions (Polich, 2007).

Figure 2.

The P300 event-related potential, during which a positive going EEG deflection is noted at approximately 300 ms.

The P300 ERP has continued to receive a lot of attention in the EEG literature since its discovery by Sutton, Braren, Zubin, & John (1965), and is elicited through tasks that require conscious discrimination of a target stimulus. Specifically, the P300 is commonly elicited through an oddball presentation paradigm. During an oddball presentation paradigm, the individual is presented with a series of stimuli that include a frequently appearing ‘background’ stimulus intermixed with an infrequent or novel stimulus known as the ‘oddball’ (e.g., Donchin et al., 1978). For instance, an individual may be required to listen for a less frequent high-pitched tone that occurs in a repeating train of more frequent, low-pitched, standard tones, or count the number of times a target grid item is visually highlighted amongst illumination of all other grid items. Through this paradigm, a P300 ERP occurs following identification of each high tone or target grid item presentation, with P300 amplitude increasing as the probability of identifying the target decreases (e.g., Duncan‐Johnson & Donchin, 1977). However, decreasing the probability of target stimulus presentation to increase P300 amplitudes creates a tradeoff in experimental designs, as fewer rare/target stimuli will be presented in a given experimental time window. Therefore, stimulus probability must be balanced with obtaining sufficient target trials for the P300 ERP to be resolved over background noise.

The neural mechanisms governing P300 production are thought to reflect information processing, the allocation of attentional resources, and memory access or encoding during context updating (e.g., Donchin & Coles, 1988; Polich, 2007). In more detail, during the oddball paradigm, the early subcomponent of the P300 ERP (also known as the P3a) is thought to reflect attention driven processes that, along with memory access, help discriminate whether the current stimulus is different (novel) in comparison to the ones preceding it. If the stimulus is found to be different, the ‘full’ P300 ERP is produced, due to revision of the individual’s underlying mental representation and memory storage eliciting the later P3b subcomponent (e.g., Donchin, 1981; Polich, 2007). How the P300 signal specifically reflects these attention and memory related processes is still somewhat unclear, but the P300 may represent the inhibition of extraneous mental activity to facilitate enhancement of task related cognitive processes (see Polich, 2007 for review).

Why is the P300 targeted for BCI control?

For BCI applications, the P300 interface is designed to elicit the P300 via the oddball paradigm using either a serial or grid-based presentation. For example, to access a grid-based P300 BCI system (e.g., Donchin, Spencer, & Wijesinghe, 2000), the individual decides on the communication item they wish to select before the presentation begins. The undesired items serve as the frequent stimulus, and the desired item as the novel/infrequent stimulus. The P300 display then randomly highlights all grid items (e.g., by turning them from grey to white or a color; e.g., Ikegami, Takano, Saeki, & Kansaku, 2011), while the individual focuses their attention on the target item they wish to select (e.g., Donchin et al., 2000). When the desired item is presented, a larger P300 is elicited in comparison to the other stimuli, and after a few presentations of each grid item, the averaged ERPs for each stimulus are compared. The BCI then identifies the item associated with the largest P300 occurrence and selects that communication item. In contrast to the grid layout, items may also be presented in a rapid serial visual presentation format (e.g., Oken et al., 2014) where communication items are presented one-at-a-time in a randomized fashion from a single visual field (e.g., central). The utilization of an overt attention strategy, where the individual focuses their gaze on the item they wish to select, has been shown to increase P300-based BCI outcomes in contrast to a covert attention strategy, where an individual focuses on an item in their periphery (Brunner et al., 2010). Therefore, the rapid serial visual presentation paradigm is ideal for individuals with oculomotor difficulties who may struggle to attend to a desired stimulus placed in a grid format (e.g., Pitt & Brumberg, 2018a), as items can be placed according to oculomotor abilities.
P300-based BCI interfaces are designed to elicit the maximum P300 amplitude and shortest latency for the stimulus of choice by manipulating fundamentals of the oddball paradigm such as matrix size and interstimulus interval (e.g., Sellers, Krusienski, McFarland, Vaughan, & Wolpaw, 2006), and presentation rate (McFarland, Sarnacki, Townsend, Vaughan, & Wolpaw, 2011). The time needed to select the appropriate letter may also be reduced by utilizing language models to guide stimulus presentation (e.g., Oken et al., 2014). It is also relevant to interface design to understand that ERP amplitudes are very small (i.e., microvolts) and thus are easily obscured in the EEG signal by noise from varying sources such as electrical interference and muscle movements (e.g., Luck, 2014; Fisch, 2000). Therefore, to improve the signal to noise ratio, the oddball target and frequent stimulus are commonly presented on multiple occasions, with each item in the grid being flashed more than once before the BCI makes a selection. Thus, it is the amplitude and latency of the averaged ERP waveform that is used by the BCI to assess the neural P300 response. While an increased number of trials improves the quality of the P300 recordings, the time it takes for the BCI to make an item selection is increased, slowing overall communication rate.
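
The averaging and selection steps described above can be sketched in Python as follows. This is a minimal illustration on simulated data, not the method of any cited system: the helper names (`average_epochs`, `window_mean`, `select_item`, `simulate_epoch`), the 100 Hz sampling rate, the 5 microvolt P300 bump, the noise level, and the fixed 250–500 ms scoring window are all assumptions made for the example. Practical P300 BCIs typically rely on trained classifiers rather than a simple window mean.

```python
import math
import random

def average_epochs(epochs):
    """Average equal-length epochs sample by sample to form an ERP."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]

def window_mean(erp, fs, start_ms, end_ms):
    """Mean ERP amplitude between start_ms and end_ms after stimulus onset."""
    first = int(start_ms * fs / 1000)
    last = int(end_ms * fs / 1000)
    segment = erp[first:last]
    return sum(segment) / len(segment)

def select_item(epochs_by_item, fs, start_ms=250, end_ms=500):
    """Return the item whose averaged ERP is largest in the scoring window."""
    scores = {item: window_mean(average_epochs(epochs), fs, start_ms, end_ms)
              for item, epochs in epochs_by_item.items()}
    return max(scores, key=scores.get), scores

def simulate_epoch(rng, fs, is_target, n_samples=80, noise_sd=5.0):
    """Hypothetical epoch (microvolts): noise, plus a bump near 300 ms for targets."""
    epoch = []
    for i in range(n_samples):
        t_ms = 1000 * i / fs
        bump = 5.0 * math.exp(-((t_ms - 300) ** 2) / (2 * 50 ** 2)) if is_target else 0.0
        epoch.append(bump + rng.gauss(0, noise_sd))
    return epoch

rng = random.Random(0)   # fixed seed so the simulation is repeatable
fs = 100                 # assumed sampling rate in Hz
items = ["A", "B", "C", "D", "E", "F"]
target = "D"             # the item the simulated user attends to
epochs_by_item = {item: [simulate_epoch(rng, fs, item == target) for _ in range(15)]
                  for item in items}
selected, scores = select_item(epochs_by_item, fs)
print("selected item:", selected)
```

In this sketch each item is flashed 15 times. Lowering that count shortens the time per selection but leaves more noise in each averaged waveform, which mirrors the speed and accuracy tradeoff noted above.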

To date, visual and auditory P300 BCI systems have received the most attention, with visually-based devices currently involved in home use by individuals with ALS (e.g., Wolpaw et al., 2018). A recent meta-analysis of BCI performance accuracies for individuals with ALS revealed that the pooled accuracy of visually-based P300 BCI devices across fifteen studies was 72.94%, with a 95% confidence interval ranging from 64.26% to 81.62% (Marchetti & Priftis, 2015). Further, a recent longitudinal investigation by Wolpaw et al. (2018) demonstrated successful use of a visual P300 BCI system for individuals with ALS, with 14 participants, from an original cohort of 39, progressing to independent at-home BCI use, and with 7/8 of the remaining participants electing to keep the BCI for continued use at the end of the 18 month study period. However, it should be noted that only five people withdrew from the study due to limitations in the BCI system or preferences for another device, with the primary reason for withdrawal being changes in health-related factors. Auditory-based P300 devices are a less frequently utilized form of BCI technology in comparison to their visual counterparts, and therefore performance for individuals with neuromotor disorders is less clear. Nevertheless, initial performance may be decreased for auditory-based P300 devices in comparison to their visual counterparts, due to difficulties with auditory attention, and an increased cognitive load associated with mapping of the grid-based system into the auditory domain (e.g., translating rows and columns of the visual grid into a number system; Kübler et al., 2009). However, the effects of long term BCI training on auditory P300 BCI performance require further investigation.
It is also clinically relevant to note that BCI performance accuracies for individuals with neuromotor impairments are variable for P300 devices, as with other BCI techniques, and an individual’s unique profile can influence BCI outcomes (e.g., Fried-Oken, Mooney, Peters, & Oken, 2013; Pitt & Brumberg, 2018a). For instance, improved P300 BCI performance is associated with unimpaired selective attention and working memory skills, along with positioning factors that help ensure posterior recording electrodes are unimpeded. Therefore, similar to existing AAC procedures, an individual’s unique current and future profile and environment should be considered in BCI assessment (see Pitt & Brumberg, 2018a for a review).

Auditory and visual steady state evoked potentials.

Similar to the P300, steady state evoked potentials allow for computer access via sensory stimulation. However, in contrast to the P300, which reflects voltage changes over time, steady state evoked potential-based BCI control is achieved via evaluation of task-related frequency components in the EEG signal. More specifically, steady state visual evoked potentials (SSVEPs) utilize rhythmic brain oscillations that are modulated by a driving visual stimulus repeating at a fixed rate, such as a flickering light or strobing icon (e.g., Regan, 1966). During SSVEP paradigms the individual attends to a constant SSVEP stimulus, which causes synchronous neural firing that follows the presentation rate of the visual stimulus (Horwitz et al., 2017). This synchronous firing produces a robust response with stable amplitude and phase over time (Regan, 1966). The SSVEP is periodic in nature, with stimulation frequencies commonly between 8–30 Hz. For example, in an SSVEP paradigm where multiple stimuli are presented simultaneously at different stimulation frequencies, the stimulation frequency that the individual is attending to induces a greater magnitude of neural synchrony (Müller-Putz, Scherer, Brauneis, & Pfurtscheller, 2005). This synchronous neural firing increases the energy present in the target frequency band, when evaluated via a time-frequency analysis, along with increasing its temporal resolution (Lin, Zhang, Wu, & Gao, 2007) in comparison to the other non-target stimuli over posterior recording electrodes. The different stimuli are typically presented at different locations in the visual field to allow for discrete attention to one stimulus/frequency.

Paralleling the SSVEP, the auditory steady state response (ASSR) is an auditory evoked potential in response to periodically presented auditory stimuli such as a string of clicks, or amplitude-modulated tones between 20 Hz and 100 Hz (Cohen, Rickards & Clark, 1991; Hill & Schölkopf, 2012). The ASSR can be localized to primary and secondary auditory cortex (Liégeois-Chauvel, Lorenzi, Trébuchon, Régis, & Chauvel, 2004), with rhythmic brain oscillations in auditory cortex being modulated by the frequency of the driving input stimulus.

Why are visual and auditory steady state evoked potentials targeted for BCI control?

While BCI applications of the ASSR are still in the early stages, SSVEP has an established history for BCI application due to its high signal to noise ratio (Srinivasan, Bibi, & Nunez, 2006). For instance, in a four-choice SSVEP display, item one may flicker at 8 Hz, item two at 9 Hz, item three at 10 Hz, and item four at 11 Hz. In this SSVEP paradigm, the frequency of the item associated with the target demonstrates increased neural synchrony and temporal correlation in the EEG signal in comparison to the non-target items. Therefore, the BCI decoding algorithm selects the target item by identifying the stimulus associated with the largest magnitude of response during the trial. With the SSVEP Shuffle Speller (Higger et al., 2017), the individual selects different boxes of letters, each flickering at its own specific frequency. Through a language model, selections are made until the individual has only one letter left to select. Furthermore, SSVEP-based BCIs can allow access to graphical interfaces presenting a large array of items for selection such as a full keyboard layout with 30 selection options (e.g., Hwang et al., 2012). In the full keyboard paradigm, each letter and symbol flickers at a slightly different rate (e.g., letter A flickers at 7 Hz, B at 7.8 Hz, through Z, at 6 Hz) with the most similar stimulation frequencies (e.g., 7 and 7.1 Hz) being spread across the keyboard display to avoid overlap of adjacent stimulus frequencies.
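
The four-choice decoding step described above can be sketched in Python as follows. This is a simplified illustration under stated assumptions rather than the algorithm of any cited system: the function names `power_at` and `detect_ssvep`, the 250 Hz sampling rate, the 4 second trial, and the simulated signal amplitudes are all hypothetical. The sketch measures signal power at each candidate flicker frequency by projecting the signal onto a sine and cosine at that frequency, then selects the frequency with the largest power.

```python
import math
import random

def power_at(signal, fs, freq):
    """Power of `signal` at `freq` (Hz), via projection onto a sine and cosine."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * i / fs) for i, x in enumerate(signal))
    return (re * re + im * im) / (n * n)

def detect_ssvep(signal, fs, candidate_freqs):
    """Return the candidate frequency with the largest power, plus all powers."""
    powers = {f: power_at(signal, fs, f) for f in candidate_freqs}
    return max(powers, key=powers.get), powers

# Simulated posterior-electrode trial (assumed values): the attended 10 Hz
# item drives a larger response than the unattended 8, 9, and 11 Hz items.
rng = random.Random(1)
fs = 250                      # assumed sampling rate in Hz
n_samples = 4 * fs            # assumed 4 second trial
amplitudes = {8: 0.5, 9: 0.5, 10: 2.0, 11: 0.5}
signal = [sum(a * math.sin(2 * math.pi * f * i / fs) for f, a in amplitudes.items())
          + rng.gauss(0, 1.0)
          for i in range(n_samples)]

detected, powers = detect_ssvep(signal, fs, [8, 9, 10, 11])
print("detected frequency (Hz):", detected)
```

Here the item flickering at the detected frequency would be treated as the intended selection. Published SSVEP decoders often use more robust multi-channel methods, so this single-channel power comparison should be read only as a conceptual sketch.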

For ASSR-based BCIs, individuals are instructed to attend to a specific frequency-tagged sound stream coming from a specific location (Kim et al., 2011). Similar to the SSVEP, the sound stream to which the individual is attending can be identified by the BCI algorithm through increased ASSR frequency amplitude, resulting in item selection. For instance, Hill et al. (2014) investigated an ASSR-based BCI paradigm for making yes and no selections, utilizing auditory attention to one of two amplitude modulated ‘beeping’ sound streams of 768 Hz to the right ear, and 512 Hz to the left ear.

Recent studies are beginning to support the feasibility of SSVEP use by individuals with neuromotor disorders (e.g., Hwang et al., 2017), with reported performance accuracies such as ≥70% (Combaz et al., 2013), and 76.99% (Hwang et al., 2017). However, SSVEP-based BCI performance is variable (e.g., 18.75% to 73% for individuals with various neuromotor disorders; Brumberg, Nguyen, Pitt, & Lorenz, 2018) and is influenced by an individual’s unique profile. Similar to P300-based BCIs, SSVEP performance is supported by factors such as an individual’s oculomotor strengths, which allow for utilization of overt attention strategies (Brumberg, Nguyen, Pitt, & Lorenz, 2018; Peters et al., 2018). However, SSVEP items may be arranged to support oculomotor strengths to improve BCI accuracy (e.g., Brumberg, Nguyen, Pitt, & Lorenz, 2018). Further, positioning factors, such as pressure from a wheelchair headrest, and uncontrolled neck and muscle movements may impede posterior electrode recordings, which decreases SSVEP BCI performance below the aforementioned levels (Daly et al., 2013). Finally, in addition to cognitive factors such as attention, an individual’s history of seizures is an important consideration when evaluating SSVEP-based BCI use, due to the rapidly flickering stimuli (e.g., Pitt & Brumberg, 2018b). In comparison to SSVEP, ASSR devices are an emerging BCI technique. Therefore, the utility of ASSR-based BCIs for individuals with neuromotor disorders is currently unclear, but under investigation.

Sensorimotor modulations.

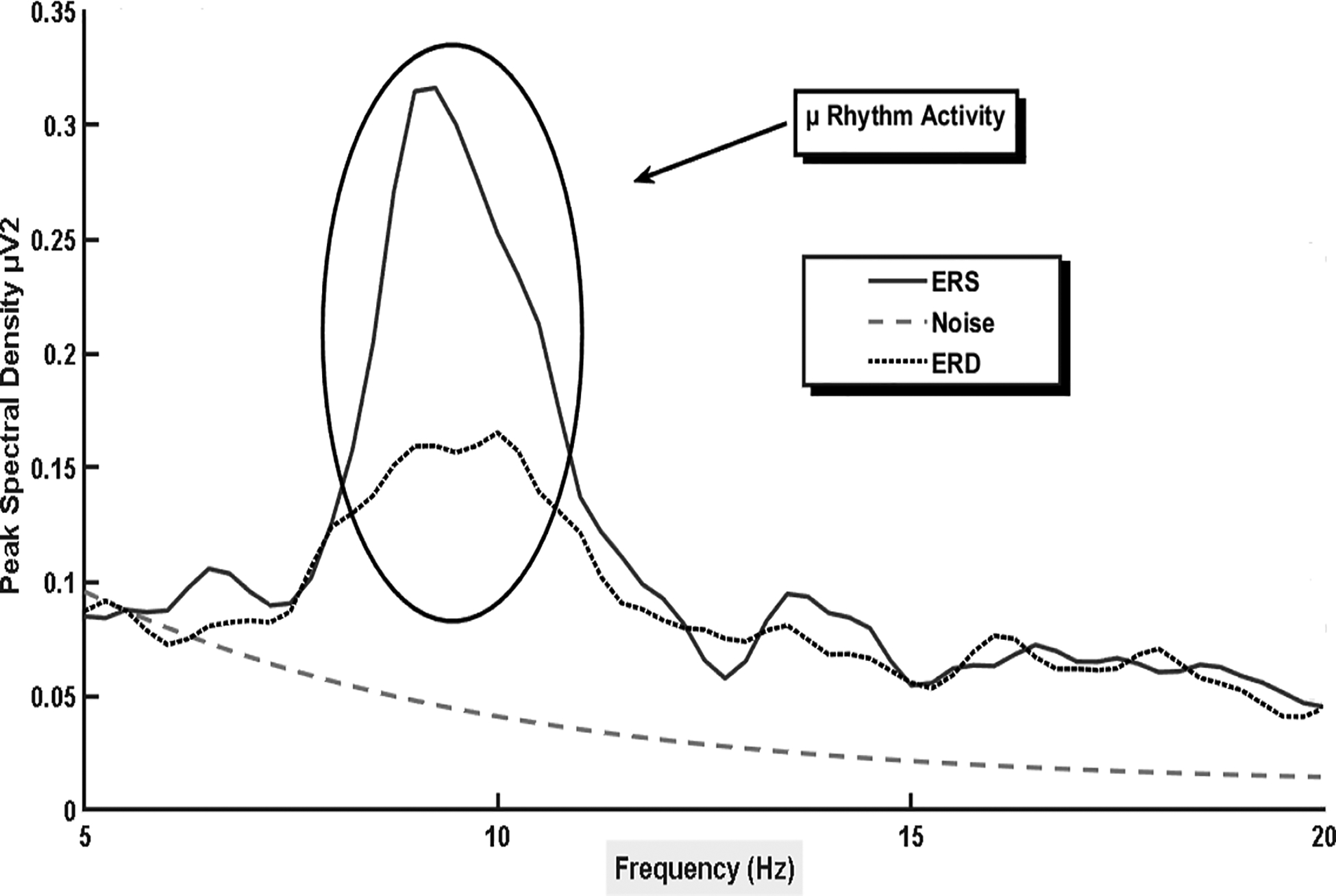

Similar to steady state evoked potentials such as the SSVEP, sensorimotor-based BCI control is obtained through time-frequency analysis of the energy levels present in different frequency bands. However, rather than influencing the synchrony of neural oscillations through external sensory stimulation (e.g., a flickering stimulus), sensorimotor oscillations are modulated by tasks such as physical or imagined movements, during which an individual mentally recreates an action without physical execution. Brain oscillations or rhythms refer to the repetitive, synchronous, electrical activity generated by neurons. When the brain is relaxed or at rest it is described as being in an idling state, and in this state, a large number of neurons produce synchronized rhythmic activity between ~8–12 Hz to possibly govern inhibitory and excitatory cortical processes to manage energy use (e.g., Pfurtscheller et al., 2006, 1999; Pfurtscheller & Neuper, 2009; Neuper & Pfurtscheller, 2001). However, when an individual becomes engaged in processing cognitive, sensory and/or motor-based information, ~8–12 Hz neuronal synchrony decreases, as neurons begin firing at different rates to accomplish the given task. Therefore, tasks such as a physical or imagined movement, and cognitive tasks such as word association and arithmetic (e.g., mental subtraction; Friedrich, Scherer, & Neuper, 2012) may be used to modulate the energy levels (i.e., power) of different frequency bands within the EEG signal including alpha (~8–12 Hz), which is called mu when measured over sensorimotor areas (Kuhlman, 1978), beta (~18–26 Hz), and gamma (> 35 Hz). In more detail, each rhythm is identified by its own scalp location and frequency range, and when the brain is relaxed or at rest, synchronous neural firing causes an increase in alpha band power known as event-related synchronization (ERS; figure 3).
However, a time-frequency analysis reveals that when neuronal activity becomes desynchronized due to task related engagement of cortical areas, a decrease in alpha band power is noted in the EEG signal. When this neuronal desynchronization occurs following an event (e.g., presentation of an external stimulus prompting motor (imagery) task performance), it is known as event-related desynchronization (ERD; Pfurtscheller & Da Silva, 1999; see figure 3).
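The band-power comparison described above can be illustrated with a short sketch. The Python below is a minimal, hypothetical example and not the analysis used in any of the cited studies: it estimates mean 8–12 Hz power with a plain discrete Fourier transform and expresses task-period power as a percentage change from the rest period, a relative measure commonly used to quantify ERD and ERS. The function names, the 128 Hz sampling rate, and the synthetic sine-wave "EEG" are all assumptions made for illustration.

```python
import math

def band_power(signal, fs, low_hz, high_hz):
    """Mean spectral power of `signal` between low_hz and high_hz (plain DFT)."""
    n = len(signal)
    powers = []
    for k in range(1, n // 2):
        freq = k * fs / n
        if low_hz <= freq <= high_hz:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(signal))
            powers.append((re * re + im * im) / n ** 2)
    return sum(powers) / len(powers)

def erd_percent(reference, task, fs, low_hz=8.0, high_hz=12.0):
    """Relative band-power change: negative = desynchronization (ERD),
    positive = synchronization (ERS)."""
    ref_power = band_power(reference, fs, low_hz, high_hz)
    task_power = band_power(task, fs, low_hz, high_hz)
    return 100.0 * (task_power - ref_power) / ref_power

def sine(freq_hz, amplitude, fs, seconds):
    """Synthetic stand-in for an EEG rhythm (illustration only)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs)
            for i in range(int(fs * seconds))]

fs = 128                            # assumed sampling rate in Hz
rest = sine(10, 2.0, fs, 1.0)       # strong 10 Hz rhythm at rest
imagery = sine(10, 1.0, fs, 1.0)    # amplitude halved during imagined movement
change = erd_percent(rest, imagery, fs)
print(round(change, 1))  # -75.0
```

Because power scales with the square of amplitude, halving the amplitude of the 10 Hz rhythm yields a 75% drop in band power, which the sketch reports as a negative (desynchronization) value. Real EEG would additionally require artifact handling, windowing, and averaging over trials.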

Figure 3.

The sensorimotor rhythm recorded over the right hemisphere (electrode location C4) during imagined movement of the left hand. The larger peak denotes increased mu band power at rest (event-related synchronization), while the lower peak demonstrates event-related desynchronization during imagined task performance.

Sensorimotor-based BCI research has focused on providing BCI control via imagined actions, as imagined movements engage primary sensorimotor areas of the brain associated with neuromuscular function in a manner similar to physical movement (e.g., Pfurtscheller & Da Silva, 1999; Neuper, Scherer, Reiner & Pfurtscheller, 2005). However, a variety of tasks may potentially be utilized to produce general alpha desynchronization, including cognitive tasks such as word association and mental subtraction (Friedrich, Scherer, & Neuper, 2012; Scherer et al., 2015). Regarding the performance of physical and imagined movements, as the nervous system is organized to provide contralateral motor control (i.e., a right-hand movement is controlled by hand motor areas in the left hemisphere), electrodes over left sensorimotor cortex (e.g., C3) show increased desynchronization for right hand tasks, and right-sided electrodes (e.g., C4) show increased desynchronization for left hand tasks. However, for tasks where motor control areas are located closer to the brain’s midline (e.g., foot motor areas), a more central sensorimotor modulation is noted (e.g., Pfurtscheller, Brunner, Schlögl, & Da Silva, 2006). Sensorimotor desynchronization associated with task performance does not occur in isolation, however, and is accompanied by synchronization of cortical areas that are not directly involved in task completion (Suffczynski, 1999). For example, during right hand motor imagery an ipsilateral synchronization (ERS) is present in the EEG signal in conjunction with the contralateral desynchronization (e.g., Pfurtscheller & Neuper, 1997). Furthermore, during feet or tongue imagery, mu-band power increases over hand motor areas (Pfurtscheller et al., 2006).
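As a rough illustration of this contralateral pattern, the hypothetical Python sketch below compares the percentage change in mu-band power at C3 and C4 (negative values indicating desynchronization, positive values indicating synchronization) and guesses which hand was imagined. The function name, the 5-point decision margin, and the example values are assumptions for illustration only; practical BCIs typically rely on trained classifiers and more electrodes.

```python
def imagined_hand(change_c3, change_c4, margin=5.0):
    """Guess the imagined hand from mu-band power change (%) at C3 and C4.

    Negative values mean desynchronization relative to rest.
    `margin` is an assumed minimum difference before a decision is made.
    """
    if change_c3 < change_c4 - margin:
        return "right"      # stronger desynchronization over left hemisphere (C3)
    if change_c4 < change_c3 - margin:
        return "left"       # stronger desynchronization over right hemisphere (C4)
    return "undecided"      # no clear lateralization

# Contralateral desynchronization with ipsilateral synchronization
print(imagined_hand(-40.0, 10.0))   # right
print(imagined_hand(12.0, -35.0))   # left
print(imagined_hand(-20.0, -22.0))  # undecided
```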

Why are sensorimotor modulations targeted for BCI control?

For some individuals the sensorimotor rhythm may not be recordable via EEG (e.g., Blankertz et al., 2010), possibly due to anatomical variability in the shape and position of motor cortex (e.g., Morash, Bai, Furlani, Lin & Hallet, 2008). However, when present, since changes in the sensorimotor rhythms during performance of imagined movements parallel those of physical execution, they are a viable target to provide BCI control, as no physical motor skills are required for sensorimotor-based access to communication. In a BCI context, changes in the sensorimotor rhythm can be detected by the BCI during a single trial containing physical or imagined movements and translated into computer control to access a range of BCI paradigms. These paradigms are not reliant upon visual presentation paradigms that incorporate flashing stimuli such as the P300 and SSVEP. For instance, neuronal desynchronization can be detected by the BCI when an individual imagines performing an action following highlighting of a communication item they want to select. The presence of this event-related desynchronization can trigger the BCI to make a selection, similar to switch access during scanning-based AAC paradigms (Scherer et al., 2015; Friedrich et al., 2009). Furthermore, when prompted, an individual can imagine performing a specific action (e.g., imagined right or left-hand movements) to select different groups of letters until a single item remains for selection (e.g., Obermaier, Muller, & Pfurtscheller 2003). Changes in the sensorimotor rhythm can also be interpreted by the BCI in real-time to provide cursor-like computer control (e.g., Wolpaw & McFarland, 2004) in which imagining different movements results in changes in cursor position. This cursor control method could therefore allow access to a range of paradigms including selection of letters or words placed in different onscreen locations (e.g., Vaughan et al., 2006; Kübler et al., 2005).
Extending the idea of cursor control to speech processing, cursor-based BCI paradigms may be used to provide real-time control of a 2D formant speech synthesizer (Brumberg, Pitt, & Burnison, 2018; Brumberg & Pitt, 2019). It should also be considered that, in addition to motor actions and imagery, different tasks such as mental subtraction, word association, and mental rotation can create levels of desynchronization detectable by the EEG and may therefore have utility for BCI control. For example, while further research is still needed, a task other than motor imagery may yield BCI success for an individual with a lesion impairing motor cortex following stroke (see Friedrich et al., 2012 for a review).
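The letter-group selection approach mentioned above (choosing between groups of letters until a single item remains) can be sketched as a repeated halving of the item set. The Python below is a hypothetical simulation, not the cited authors' implementation: it assumes a two-class BCI whose "left" and "right" imagery decisions are always decoded correctly, and the function name and alphabet layout are illustrative.

```python
import math

def select_by_halving(items, target):
    """Split the items in two on each step; 'left' imagery keeps the first
    half and 'right' imagery keeps the second, until one item remains."""
    choices = []
    remaining = list(items)
    while len(remaining) > 1:
        mid = (len(remaining) + 1) // 2
        left, right = remaining[:mid], remaining[mid:]
        if target in left:
            choices.append("left")
            remaining = left
        else:
            choices.append("right")
            remaining = right
    return remaining[0], choices

alphabet = list("ABCDEFGHIJKLMNOPQRSTUVWXYZ")
letter, choices = select_by_halving(alphabet, "H")
print(letter, choices)  # H ['left', 'right', 'left', 'left', 'left']

# With perfect decoding, no letter needs more than ceil(log2(26)) = 5 decisions.
max_steps = max(len(select_by_halving(alphabet, c)[1]) for c in alphabet)
print(max_steps, math.ceil(math.log2(len(alphabet))))  # 5 5
```

The sketch shows why binary selection is slow compared with direct selection: each letter requires several consecutive correct imagery decisions, so decoding errors compound across steps.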

Pooled sensorimotor BCI accuracies are reported as 70.04% across four studies with a 95% confidence interval ranging from 52.22% to 87.85%. However, these results need to be interpreted in light of participant heterogeneity, and differences in BCI design across studies (Marchetti & Priftis, 2015). Furthermore, while sensorimotor BCIs utilizing auditory feedback may be associated with decreased initial performance, with training they may begin to approximate the performance levels of their visual counterparts (Nijboer et al., 2008). Regarding individual profile variability, Kasahara et al. (2012) found that desynchronization was dampened for individuals with ALS, especially for those with increased bulbar involvement. Therefore, the magnitude of the desynchronization used for BCI control may not be solely dependent upon the number or activation of surviving neural cells but also upon additional factors such as an individual’s ability to recall a motor action from memory, level of fatigue, and ability to concentrate. Thus, in addition to neurophysiological measures such as amplitude of the sensorimotor rhythm (Blankertz et al., 2010), sensorimotor BCI performance may be impacted by a range of cognitive (e.g., attention; Geronimo, Simmons, & Schiff, 2016), psychological (e.g., motivation and confidence levels; Nijboer et al., 2010), and motor imagery (e.g., Neuper et al., 2005) related factors. However, additional research is needed to identify the effects of longitudinal training on sensorimotor BCI performance (e.g., Daly et al., 2014).

The contingent negative variation.

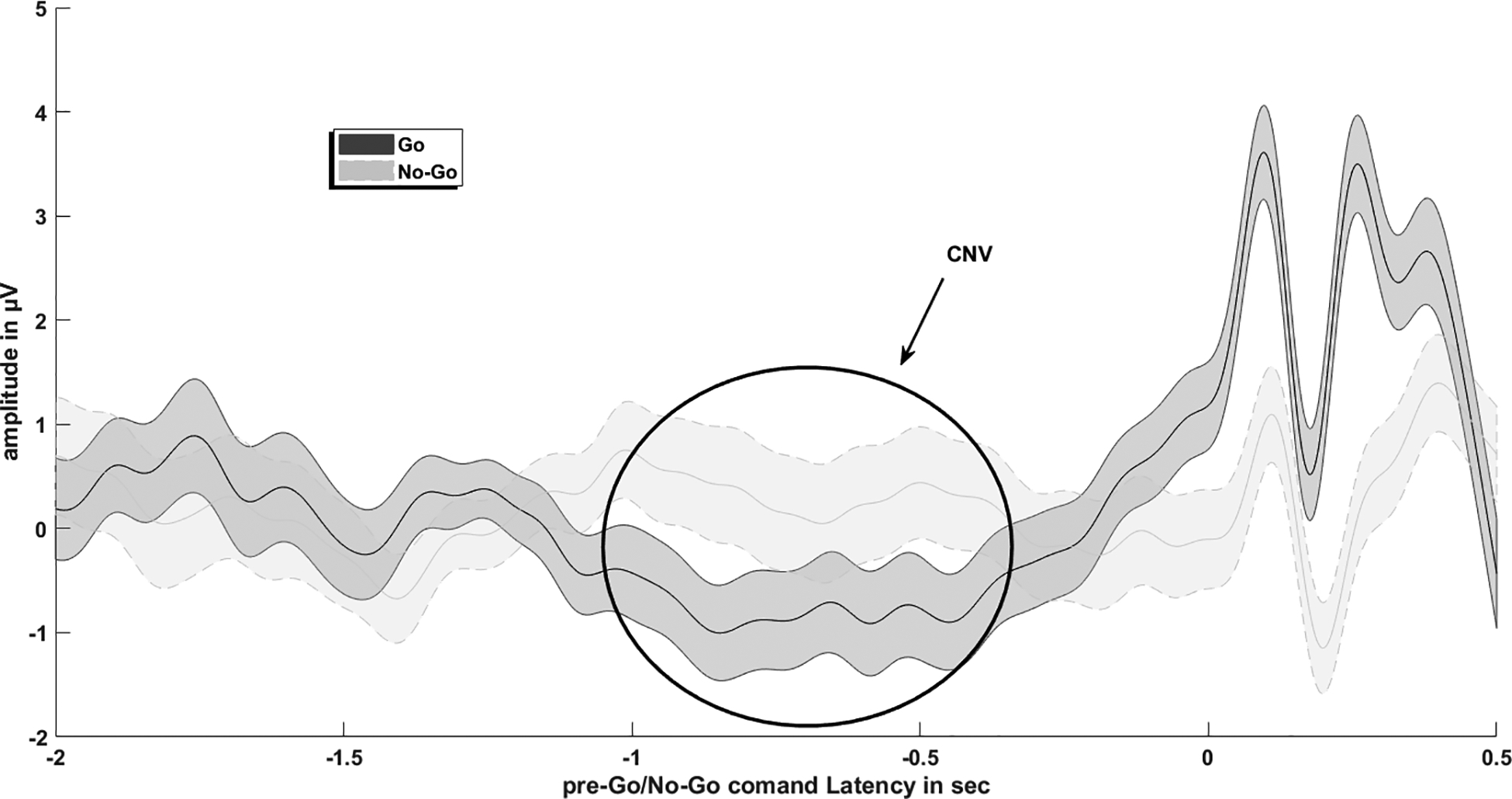

Similar to the sensorimotor rhythms, physical motor abilities are not required for an individual to learn voluntary control of slow cortical potentials, such as the contingent negative variation (CNV) and Bereitschaftspotential, making them suitable for BCI application. In the context of BCI, voluntary control of slow cortical potentials can be learned through feedback in operant conditioning paradigms (e.g., the position of an onscreen cursor changes in relation to slow cortical potential amplitudes; Neumann & Birbaumer, 2003). The CNV is a slow cortical potential (<1 Hz; e.g., Brumberg, Pitt, Mantie-Kozlowski, & Burnison, 2018) that was first described by Walter, Cooper, Aldridge, McCallum, & Winter (1964) and is characterized as an EEG signal with a negative going amplitude associated with one’s degree of cortical arousal during attentional anticipation, response preparation, and information processing (e.g., Nagai et al., 2004; Segalowitz & Davies, 2004; figure 4). The CNV contains both cognitive and motor components and is commonly elicited during go/no-go response paradigms during which the CNV increases in negativity between a warning cue (stimulus 1), and an imperative stimulus (stimulus 2), which prompts the participant to complete an action (e.g., to press a button). For instance, during a typical CNV paradigm the first stimulus will inform the participant whether they will or will not perform a set action when the second stimulus is presented. During trials where stimulus 1 prompts the participant to prepare to perform the action upon presentation of stimulus 2 (known as ‘go trials’), an increasing negative drift is present in the EEG signal prior to task onset in comparison to ‘no-go’ trials where stimulus 1 prompts the participant to remain at rest for the trial duration (e.g., Taylor, Gavin, & Davies, 2016). However, stimulus 1 may also serve as a general ‘prepare for action’ prompt, with stimulus 2 instructing the participant to go or not to go.
During response preparation between stimuli 1 and 2, the CNV may be divided into two phases, an early orienting phase known as the O-wave, and a later expectancy and preparation phase known as the E-wave (e.g., Taylor et al., 2016; Brunner et al., 2015), both of which are influenced by cognitive and motor factors (e.g., Lukhanina, Burenok, Mel’nik, & Berezetskaya, 2006). Specifically, the O-wave is greatest at midline frontal electrodes and is associated with arousal and processing of stimulus characteristics such as intensity (e.g., Nagai et al., 2004) in addition to cognitive processes associated with categorical judgement (e.g., to ‘go’, or ‘no-go’; Cui et al., 2000), and task maintenance and rehearsal (Brunner et al., 2015). In contrast, the late E-wave is associated with task setting (i.e., planning how to respond to the second stimulus), and the degree of sustained attentional efforts (Brunner et al., 2015; Cui et al., 2000). Furthermore, the late CNV is thought to reflect motor preparation to a greater degree than the early stage (Nagai et al., 2004; Cui et al., 2000), being influenced by motor factors such as task complexity (Cui et al., 2000). For neurotypical adults, a range of brain areas associated with cognitive-sensory-motor processes are associated with CNV generation (e.g., Nagai et al., 2004), with maturation of the frontal lobe attentional system as a possible contributing factor (Segalowitz & Davies, 2004) to why CNV amplitudes increase in negativity during development and into young adulthood (Taylor et al., 2016; Segalowitz & Davies, 2004). Currently, the neural mechanisms underlying the CNV are still not fully understood (Nagai et al., 2004; Cui et al., 2000), and may vary depending upon the task and stimulus characteristics (Segalowitz & Davies, 2004).
The contingent negative variation shares a close relationship with another negative going EEG potential indicating motor ‘readiness’ known as the Bereitschaftspotential (Deecke, Grözinger & Kornhuber, 1976). The Bereitschaftspotential is commonly studied during self-initiated movements (e.g., finger flexion and extension), which the individual performs at their own pace without external cues (e.g., Cui et al., 2000). Conversely, the CNV is time-locked to stimulus presentations, requiring increased levels of attention (Nagai et al., 2004).
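The go versus no-go contrast described above is typically examined by averaging many stimulus-locked trials and measuring the mean amplitude in a window shortly before stimulus 2. The Python sketch below illustrates that idea on synthetic data only: the trial generator, the linear −10 µV drift, the noise level, the window boundaries, and all function names are assumptions for illustration, not values taken from the cited studies.

```python
import random

def average_epochs(epochs):
    """Sample-by-sample average across equally long trials."""
    n = len(epochs)
    return [sum(samples) / n for samples in zip(*epochs)]

def baseline_correct(epoch, baseline_samples):
    """Subtract the mean of the pre-stimulus-1 interval from every sample."""
    baseline = sum(epoch[:baseline_samples]) / baseline_samples
    return [v - baseline for v in epoch]

def mean_amplitude(epoch, start, stop):
    window = epoch[start:stop]
    return sum(window) / len(window)

def synthetic_trial(rng, drift_uv, n_baseline=20, n_interval=100):
    """Hypothetical trial: flat baseline, then a linear drift that reaches
    drift_uv at stimulus 2, with Gaussian noise added to every sample."""
    flat = [rng.gauss(0.0, 1.0) for _ in range(n_baseline)]
    ramp = [drift_uv * (i + 1) / n_interval + rng.gauss(0.0, 1.0)
            for i in range(n_interval)]
    return flat + ramp

rng = random.Random(0)
go = [synthetic_trial(rng, drift_uv=-10.0) for _ in range(40)]
no_go = [synthetic_trial(rng, drift_uv=0.0) for _ in range(40)]

go_avg = baseline_correct(average_epochs(go), 20)
no_go_avg = baseline_correct(average_epochs(no_go), 20)

# Late window: the last 30 samples before stimulus 2
late_go = mean_amplitude(go_avg, 90, 120)
late_no_go = mean_amplitude(no_go_avg, 90, 120)
print(late_go < late_no_go)  # True
```

Averaging reduces trial-to-trial noise so that the slow negative drift on go trials stands out against the flat no-go average.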

Figure 4.

The contingent negative variation, showing a negative going EEG deflection prior to task onset.

Why is the CNV targeted for BCI control?

Traditionally, cortical arousal associated with slow cortical potentials such as the CNV or Bereitschaftspotential is used for control of operant conditioning-based BCI devices (Neumann & Birbaumer, 2003). To control operant conditioning-based BCIs such as the Thought Translation Device (Kübler et al., 1999; Birbaumer et al., 2000), the individual learns to voluntarily control their slow cortical potentials through feedback (e.g., of cursor movement). For example, in comparison to a baseline period, a negative going slow cortical potential amplitude during a single trial of active BCI control (reflecting increased cortical excitation) may move a cursor up, whereas a positive slow cortical potential amplitude (reflecting decreased cortical arousal) may move the cursor down (e.g., Wolpaw & Boulay, 2009; Kübler et al., 1999). Similar to sensorimotor-based BCIs, this cursor control mechanism may be used for communication output, or environmental control (Kübler et al., 1999; Neumann & Birbaumer, 2003). Furthermore, paralleling sensorimotor-based BCIs, mastery of slow cortical potential devices may take extended training times (e.g., Neumann & Birbaumer, 2003). Finally, while research is still in the early stages, the CNV may serve to provide switch-based access to commercial AAC scanning paradigms in which CNV occurrence prior to performance of an imagined movement triggers a BCI ‘switch’ selection of the currently highlighted icon during item scanning (Brumberg et al., 2016).
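The cursor mapping described above (a negative shift relative to baseline moves the cursor up, a positive shift moves it down) can be written as a very small function. The Python below is a hypothetical sketch and not the Thought Translation Device algorithm: the gain, the 1 µV dead zone, and the example amplitudes are assumptions chosen for illustration.

```python
def mean(values):
    return sum(values) / len(values)

def cursor_step(trial_uv, baseline_uv, gain=1.0, dead_zone_uv=1.0):
    """Vertical cursor step from slow cortical potential amplitudes (in µV).

    Positive return values move the cursor up, negative values move it down.
    Shifts smaller than the assumed dead zone leave the cursor in place.
    """
    shift = mean(trial_uv) - mean(baseline_uv)
    if abs(shift) < dead_zone_uv:
        return 0.0
    return -gain * shift   # negative shift (increased excitation) -> up

baseline = [0.0, 0.5, -0.5]
up = cursor_step([-6.0, -8.0, -7.0], baseline)
down = cursor_step([4.0, 5.0, 6.0], baseline)
hold = cursor_step([0.2, -0.3, 0.4], baseline)
print(up, down, hold)  # 7.0 -5.0 0.0
```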

Across six studies the pooled classification accuracy of individuals with ALS for slow cortical potential-based BCI control was 72.94%, with a 95% confidence interval ranging from 67.32% to 83.36% (Marchetti & Priftis, 2015). Furthermore, single session BCI performance for an individual with advanced ALS was 62.2% when utilizing CNV-based access to a commercial AAC display (Brumberg et al., 2016). Variability in BCI performance for slow cortical potential-based BCIs may be due in part to individual differences in CNV manifestation. For instance, for individuals with mild spastic cerebral palsy the late CNV may be relatively preserved (Hakkarainen, Pirilä, Kaartinen, & Meere, 2012). However, individuals with spinal amyotrophic lateral sclerosis may present with CNV amplitudes that are increased (Hanagasi et al., 2002) or similar to neurotypical peers (Mannarelli et al., 2014), while individuals with bulbar amyotrophic lateral sclerosis may be more likely to present with decreased CNV amplitudes, possibly due to cognitive impairments (Mannarelli et al., 2014).

Secondary Signal Related to BCI control

The N400 event-related potential.

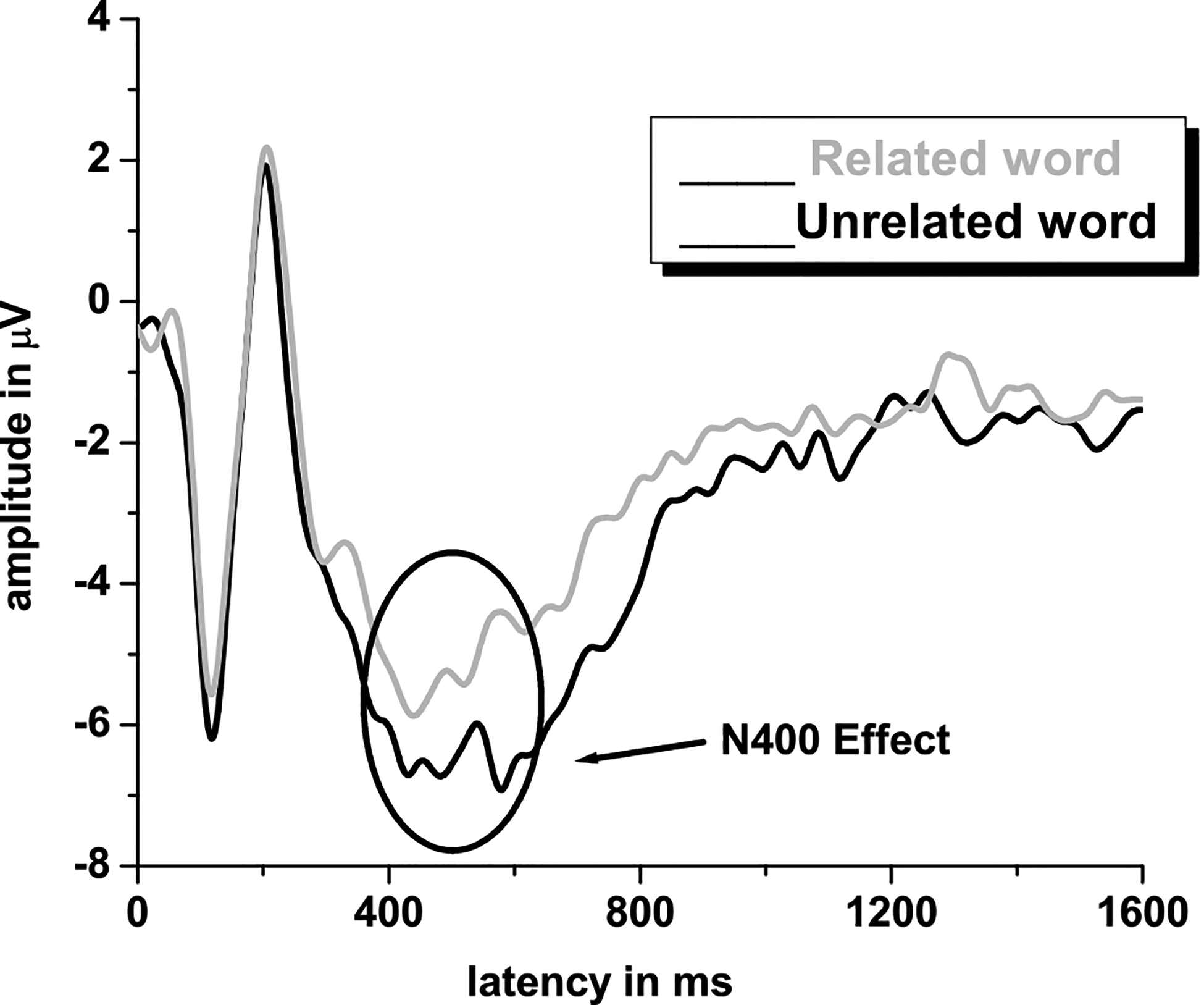

As many studies evaluating the N400 ERP (figure 5) are primarily language-based, it may be surprising to see it discussed in the context of BCI. However, the N400 can be elicited via pictures and is sensitive to congruency and processing efforts, which may be useful in a BCI application. The N400 was originally characterized by Marta Kutas and Steven Hillyard in 1980 as a reaction to an unexpected and/or inappropriate word at the end of a sentence. Kutas and Hillyard’s (1980a; 1980b; 1980c; 1982; 1983; 1984) initial series of experiments compared congruent control sentences, which were grammatically correct with valid word endings (e.g., “I shaved off my mustache and beard”), with experimental sentences such as “I shaved off my mustache and eyebrows” (congruent and valid but low probability); “I shaved off my mustache and city” (semantically anomalous); “I shaved off my mustache and [a pictorial representation]” (novel, uninterpretable); “I shaved off my mustache and BEARD” (congruent but physically unexpected with capital letters); “I shaved off my mustache and [line drawing of a beard]” (also congruent but physically unexpected); “I shaved off my mustache and [line drawing of a city]” (semantically anomalous and physically unexpected) (examples cited from Kutas & Federmeier, 2009). The N400 waveform is thus defined as a slow, negative deflection below the prestimulus baseline, occurring anywhere between 200 milliseconds and 600 milliseconds, and typically peaking around 400 milliseconds. The amplitude of the N400 is more sensitive to stimulus change than its latency. The variation in N400 amplitude is called the N400 effect, and a larger N400 amplitude is expected in response to semantically incongruent versus semantically congruent stimuli. This increase in N400 amplitude reflects the greater neural resources needed to process the incongruent stimulus (Kutas & Federmeier, 2011).
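To make the measurement concrete, the N400 effect is often summarized as the difference in mean amplitude between incongruent and congruent conditions within the 200–600 ms window. The Python sketch below illustrates this on synthetic waveforms only: the Gaussian-shaped deflection, the −1 µV and −5 µV peak amplitudes, the 250 Hz sampling rate, and the function names are all assumptions for illustration and do not reproduce data from the cited studies.

```python
import math

def time_axis(fs, start_ms, stop_ms):
    """Sample times in milliseconds relative to stimulus onset."""
    step = 1000.0 / fs
    n = int((stop_ms - start_ms) / step)
    return [start_ms + i * step for i in range(n)]

def synthetic_erp(times_ms, peak_uv, peak_ms=400.0, width_ms=80.0):
    """Hypothetical averaged waveform: one Gaussian deflection near 400 ms."""
    return [peak_uv * math.exp(-((t - peak_ms) ** 2) / (2 * width_ms ** 2))
            for t in times_ms]

def mean_in_window(times_ms, erp, lo_ms=200.0, hi_ms=600.0):
    values = [v for t, v in zip(times_ms, erp) if lo_ms <= t <= hi_ms]
    return sum(values) / len(values)

def peak_latency(times_ms, erp, lo_ms=200.0, hi_ms=600.0):
    """Time of the most negative sample inside the window."""
    candidates = [(v, t) for t, v in zip(times_ms, erp) if lo_ms <= t <= hi_ms]
    return min(candidates)[1]

times = time_axis(250, -100, 800)                # 4 ms per sample
congruent = synthetic_erp(times, peak_uv=-1.0)   # small negativity
incongruent = synthetic_erp(times, peak_uv=-5.0) # larger negativity

n400_effect = mean_in_window(times, incongruent) - mean_in_window(times, congruent)
latency = peak_latency(times, incongruent)
print(n400_effect < 0, latency)  # True 400.0
```

A negative difference indicates a larger (more negative) N400 for the incongruent condition, while the peak latency stays at 400 ms in both synthetic conditions, mirroring the point that amplitude rather than latency carries most of the effect.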

Figure 5.

The N400 event-related potential, characterized by a negative going EEG deflection at approximately 400 ms.

Since Kutas and Hillyard’s initial study, the N400 has been shown to be sensitive to varied manipulations, including cloze probability (the number of possible sentence endings), sentence and discourse congruity, repetition, semantic priming, lexical association, concreteness and semantic richness, word frequency, orthographic neighborhood size, and several more. The N400 is especially sensitive to semantic processing, and several linguistic and psycholinguistic accounts of how semantic context influences the N400 component during word processing have been proposed. For instance, Plante, Van Petten & Senkfor (2000) have shown that the N400 amplitude is smaller if the eliciting word is semantically related rather than unrelated to the preceding word, in both visual and auditory modalities. However, Neville, Coffey, Holcomb, & Tallal (1993) suggest that N400 amplitudes may vary depending upon the target word position within the sentence. Essentially, they showed that words earlier in the sentence elicited a larger N400 than later words because the later words can possibly benefit from the preceding context. N400 effects are seen following the presentation of auditory and visual stimuli as well as signs in American Sign Language (Neville, Coffey, Lawson, Fischer, Emmorey & Bellugi, 1997). Thus, it appears that the N400 is relatively independent of the sensory modality of the linguistic input. Overall, the current literature suggests that the N400 reflects semantic/lexical processing of a given linguistic stimulus, and that the priming effects can be interpreted as evidence of variance or modulation in semantic processing (Mehta & Jerger, 2014).

The N400 has also been elicited in studies associated with attention (Mehta, Jerger, Jerger and Martin, 2009), and the N400 has utility for measuring the amount of cognitive load required for an individual during semantic memory retrieval. This is because the ability to process the information from target stimuli is highly dependent on one’s ability to recall previous relevant information from any of the multimodal channels such as images or sounds. This difficulty, or cognitive load, is associated with memory representations and cues from previous content priming the meaningful probe stimulus (Federmeier and Kutas, 2001; Lau et al., 2008; Van Petten and Luka, 2006). Therefore, when a difficult stimulus requires more effort to process, and thus imposes a greater cognitive load, the N400 amplitude deflection is larger than when the stimulus is easy to process.

Why is the N400 targeted for BCI control?

As the N400 is primarily produced by incongruency, in the absence of a motor response, future research may wish to explore the N400 and incongruency-based paradigms as the foundation for gaze-independent audio-visual BCI systems (e.g., Xie et al., 2018). However, currently in the field of BCI the N400 is largely discussed in the context of supporting improved outcomes for P300-based BCI devices, and N400 elicitation directly influences how the visual displays for grid-based P300 BCI devices are designed. Specifically, efforts to improve P300 BCI accuracy have focused on eliciting the N400 ERP alongside the P300, and elicitation of the N400 may contribute to improved P300-based BCI accuracies even if it possibly cancels out some of the P300 ERP activity (Kaufmann, Schulz, Grünzinger & Kübler, 2011). The N400 is commonly elicited through the P300 ‘face flash’ paradigm during which all items within the P300 grid are highlighted by toggling between a picture of a face and the letter. In the face flash paradigm, the N400 may reflect similar components to the classical N400, as non-linguistic information can also elicit N400 activity through access of semantic memory (Eimer, 2000). However, whether the N400 in the face flash paradigm reflects face specific semantic memory processes and attentional factors involved in face identification (Eimer, 2000) or reflects more general responses to stimuli processing and P300 paradigm characteristics is unclear (e.g., Kellicut-Jones & Sellers, 2018). Therefore, while the N400 is not currently a common signal that is directly decoded for BCI performance, individuals involved in the BCI-AAC process should remain aware of its utility and impact on P300-based BCI design. Further research is warranted to fully understand the role of the N400 in P300 paradigms for various populations.

Discussion and Conclusion

Non-invasive EEG has high temporal resolution and, without the need for invasive surgery, can be used to record brain signals in the absence of physical movement. Therefore, EEG methods are a viable option in recording brain activity underlying BCI-based access to communication. However, the process of how EEG captures brain activity, and how this recorded neural activity is translated into BCI control, can be opaque for those not involved in BCI research. A lack of understanding of the basic principles governing how BCIs function may decrease the comfort of stakeholders in implementing and using BCI technology to access communication. Therefore, this tutorial provided the basic foundations regarding how EEG signals are recorded, popular EEG signals targeted for BCI development, and how these EEG signals are utilized for BCI applications to help facilitate interest in and familiarity with EEG-based BCI-AAC techniques for a range of individuals, and ultimately support the translation of BCI-AAC into clinical practice. Based upon the information provided in this tutorial it is clear that BCIs do not read an individual’s ‘thoughts’, but instead translate brain activity related to cognitive-sensory-motor processes into device control. This translation process is similar to existing AAC methods such as eye gaze, where a non-speech task (e.g., eye movements and fixations) is translated into item selections. A review of current levels of BCI performance was also provided, to help demonstrate recent advances in the field of BCI.
However, while future work must continue to focus on the development of new decoding algorithms to increase BCI performances above those outlined in this tutorial, along with identifying how to decrease the high levels of workload associated with BCI use (e.g., Fager, Fried-Oken, Jakobs & Beukelman, 2019), it is important for researchers to remain aware of how BCI will ultimately be implemented in the clinical setting (Pitt, Brumberg, & Pitt, 2019), along with procedures providing at home and caregiver support (e.g., Wolpaw et al., 2018). Thus, to help account for possible BCI performance variations, and highlight how BCI is not a ‘one size fits all’ method, cognitive-sensory-motor and medical (e.g., history of seizures) factors influencing BCI outcomes were also described. However, while the foundations are laid for considering BCI in the context of existing clinical procedures such as feature matching (e.g., Pitt & Brumberg, 2018a), much of the clinical groundwork for personalized BCI intervention remains unlaid, and further multidisciplinary research is needed to identify how person-centered factors influence BCI performance and develop clinical guidelines for BCI intervention (Pitt et al., 2019). Therefore, it is hoped future work may build upon this tutorial to enhance multidisciplinary involvement in BCI-AAC by helping overcome procedural and language barriers between disciplines in efforts to ensure that BCI-AAC devices are developed and implemented with a maximally person-centered focus.

Acknowledgments

The authors would like to thank Chavis Lickvar-Armstrong and Adrienne Pitt for input on the development of this tutorial, and Anthony Pitt for creation of the BCI schematic in figure 1.

Funding

This work was supported in part by the Texas Woman’s University Woodcock Institute Research Grant, the Nebraska Tobacco Settlement Biomedical Research Development Fund, and the National Institutes of Health (NIDCD R01-DC016343-01A1).

Footnotes

Conflict of Interest Statement

There are no relevant conflicts of interest.

Contributor Information

Kevin M. Pitt, Department of Special Education and Communication Disorders, University of Nebraska-Lincoln, Lincoln, NE

Jonathan S. Brumberg, Department of Speech-Language-Hearing: Sciences & Disorders, University of Kansas, Lawrence, KS

Jeremy D. Burnison, Department of Scientific Support, Brain Vision LLC, Morrisville, NC

Jyutika Mehta, Department of Communication Sciences & Disorders, Texas Woman’s University, Denton, TX.

Juhi Kidwai, Department of Speech-Language-Hearing: Sciences & Disorders, University of Kansas, Lawrence, KS.

References

- Akcakaya M, Peters B, Moghadamfalahi M, Mooney AR, Orhan U, Oken B, … Fried-Oken M (2014). Noninvasive brain-computer interfaces for augmentative and alternative communication. IEEE Reviews in Biomedical Engineering, 7, 31–49.

- Baxter S, Enderby P, Evans P, & Judge S (2012). Barriers and facilitators to the use of high-technology augmentative and alternative communication devices: a systematic review and qualitative synthesis. International Journal of Language & Communication Disorders, 47(2), 115–129.

- Birbaumer N, Kubler A, Ghanayim N, Hinterberger T, Perelmouter J, Kaiser J, … & Flor H (2000). The thought translation device (TTD) for completely paralyzed patients. IEEE Transactions on rehabilitation Engineering, 8(2), 190–193.

- Blain-Moraes S, Schaff R, Gruis KL, Huggins JE, & Wren PA (2012). Barriers to and mediators of brain-computer interface user acceptance: focus group findings. Ergonomics, 55(5), 516–525.

- Blankertz B, Sannelli C, Halder S, Hammer EM, Kübler A, Müller KR, … & Dickhaus T (2010). Neurophysiological predictor of SMR-based BCI performance. Neuroimage, 51(4), 1303–1309.

- Brumberg JS, Burnison JD, & Pitt KM (2016). Using motor imagery to control brain–computer interfaces for communication. In Schmorrow DD & Fidopiastis CM (Eds.), Foundations of augmented cognition: Neuroergonomics and operational neuroscience (pp. 14–25). Cham, Switzerland: Springer.

- Brumberg JS, & Guenther FH (2010). Development of speech prostheses: current status and recent advances. Expert review of medical devices, 7(5), 667–679.

- Brumberg JS, & Pitt KM (2019). Motor induced suppression of the N100 ERP during motor-imagery control of a speech synthesizer brain-computer interface. Journal of Speech, Language and Hearing Research, 62, 2133–2140.

- Brumberg JS, Pitt KM, Mantie-Kozlowski A, & Burnison JD (2018). Brain-Computer Interfaces for Augmentative and Alternative Communication: A Tutorial. American Journal of Speech Language Pathology, 27(1), 1–12.

- Brumberg JS, Pitt KM, & Burnison JD (2018). A Non-Invasive Brain-Computer Interface for Real-Time Speech Synthesis: The Importance of Multimodal Feedback. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 26(4), 874–881.

- Brumberg JS, Nguyen A, Pitt KM, & Lorenz S (2018). Examining sensory ability, feature matching and assessment-based adaptation for a brain–computer interface using the steady-state visually evoked potential. Disability and Rehabilitation: Assistive Technology, 14(3), 241–249.

- Brunner JF, Olsen A, Aasen IE, Løhaugen GC, Håberg AK, & Kropotov J (2015). Neuropsychological parameters indexing executive processes are associated with independent components of ERPs. Neuropsychologia, 66, 144–156.

- Brunner P, Joshi S, Briskin S, Wolpaw JR, Bischof H, & Schalk G (2010). Does the ‘P300’ speller depend on eye gaze? Journal of neural engineering, 7(5), 056013.

- Cohen LT, Rickards FW, & Clark GM (1991). A comparison of steady state evoked potentials to modulated tones in awake and sleeping humans. Journal of Acoustical Society of America, 90(5), 2467–79.

- Combaz A, Chatelle C, Robben A, Vanhoof G, Goeleven A, Thijs V, … & Laureys S (2013). A comparison of two spelling brain-computer interfaces based on visual P3 and SSVEP in locked-in syndrome. PloS one, 8(9), e73691.

- Cui RQ, Egkher A, Huter D, Lang W, Lindinger G, & Deecke L (2000). High resolution spatiotemporal analysis of the contingent negative variation in simple or complex motor tasks and a non-motor task. Clinical Neurophysiology, 111(10), 1847–1859.

- Daly I, Billinger M, Laparra-Hernández J, Aloise F, García ML, Faller J, … & Müller-Putz G (2013). On the control of brain-computer interfaces by users with cerebral palsy. Clinical Neurophysiology, 124(9), 1787–1797.

- Daly I, Faller J, Scherer R, Sweeney-Reed CM, Nasuto SJ, Billinger M, & Müller-Putz GR (2014). Exploration of the neural correlates of cerebral palsy for sensorimotor BCI control. Frontiers in Neuroengineering, 7:20.

- Deecke L, Grözinger B, & Kornhuber HH (1976). Voluntary finger movement in man: Cerebral potentials and theory. Biological cybernetics, 23(2), 99–119.

- Donchin E (1981). Surprise!… surprise? Psychophysiology, 18(5), 493–513.

- Donchin E, & Coles MG (1988). Is the P300 component a manifestation of context updating? Behavioral and brain sciences, 11(3), 357–374.

- Donchin E, Spencer KM, & Wijesinghe R (2000). The mental prosthesis: assessing the speed of a P300-based brain-computer interface. IEEE transactions on rehabilitation engineering, 8(2), 174–179.

- Duncan‐Johnson CC, & Donchin E (1977). On quantifying surprise: The variation of event‐related potentials with subjective probability. Psychophysiology, 14(5), 456–467.

- Eimer M (2000). Event-related brain potentials distinguish processing stages involved in face perception and recognition. Clinical neurophysiology, 111(4), 694–705.

- Fager S, Fried-Oken M, Jakobs T, & Beukelman DR (2019). New and emerging access technologies for adults with complex communication needs and severe motor impairments: State of the science. Augmentative and Alternative Communication, 1–13.

- Federmeier K, & Kutas M (2001). Meaning and modality: influences of context, semantic memory organization, and perceptual predictability on picture processing. Journal of Experimental Psychology: Learning, Memory, and Cognition, 27(1), 202–224. [PubMed] [Google Scholar]

- Fisch B (2000). Basic Principles of Digital and Analog EEG. USA: Elsevier Health Sciences. [Google Scholar]

- Fried-Oken M, Mooney A, Peters B, & Oken B (2013). A clinical screening protocol for the RSVP Keyboard brain-computer interface. Disability and Rehabiitation: Assistive Technology, 10(1), 11–18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friedrich EV, McFarland DJ, Neuper C, Vaughan TM, Brunner P, & Wolpaw JR (2009). A scanning protocol for a sensorimotor rhythm-based brain-computer interface. Biological Psychology, 80(2), 169–175. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friedrich EV, Scherer R, & Neuper C (2012). The effect of distinct mental strategies on classification performance for brain–computer interfaces. International Journal of Psychophysiology, 84(1), 86–94. [DOI] [PubMed] [Google Scholar]

- Geronimo A, Simmons Z, & Schiff SJ (2016). Performance predictors of brain–computer interfaces in patients with amyotrophic lateral sclerosis. Journal of neural engineering, 13(2), 026002.

- Geronimo AM, & Simmons Z (2017). The P300 ‘face’ speller is resistant to cognitive decline in ALS. Brain-Computer Interfaces, 4(4), 225–235.

- Guger C, Daban S, Sellers E, Holzner C, Krausz G, Carabalona R, … & Edlinger G (2009). How many people are able to control a P300-based brain–computer interface (BCI)? Neuroscience letters, 462(1), 94–98.

- Hakkarainen E, Pirilä S, Kaartinen J, & Meere JJVD (2012). Stimulus evaluation, event preparation, and motor action planning in young patients with mild spastic cerebral palsy: an event-related brain potential study. Journal of child neurology, 27(4), 465–470.

- Hanagasi HA, Gurvit IH, Ermutlu N, Kaptanoglu G, Karamursel S, Idrisoglu HA, … Demiralp T (2002). Cognitive impairment in amyotrophic lateral sclerosis: evidence from neuropsychological investigation and event-related potentials. Cognitive brain research, 14(2), 234–244.

- Higger M, Quivira F, Akcakaya M, Moghadamfalahi M, Nezamfar H, Cetin M, & Erdogmus D (2017). Recursive Bayesian Coding for BCIs. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 25(6), 704–714.

- Hill NJ, Ricci E, Haider S, McCane LM, Heckman S, Wolpaw JR, & Vaughan TM (2014). A practical, intuitive brain-computer interface for communicating ‘yes’ or ‘no’ by listening. Journal of neural engineering, 11(3), 035003.

- Hill NJ, & Schölkopf B (2012). An online brain-computer interface based on shifting attention to concurrent streams of auditory stimuli. Journal of neural engineering, 9(2), 026011.

- Horwitz A, Thomsen MD, Wiegand I, Horwitz H, Klemp M, Nikolic M, … & Benedek K (2017). Visual steady state in relation to age and cognitive function. PloS one, 12(2), e0171859.

- Hwang HJ, Han CH, Lim JH, Kim YW, Choi SI, An KO, … & Im CH (2017). Clinical feasibility of brain‐computer interface based on steady‐state visual evoked potential in patients with locked‐in syndrome: Case studies. Psychophysiology, 54(3), 444–451.

- Hwang HJ, Lim JH, Jung YJ, Choi H, Lee SW, & Im CH (2012). Development of an SSVEP-based BCI spelling system adopting a QWERTY-style LED keyboard. Journal of neuroscience methods, 208(1), 59–65.

- Ikegami S, Takano K, Saeki N, & Kansaku K (2011). Operation of a P300-based brain–computer interface by individuals with cervical spinal cord injury. Clinical Neurophysiology, 122(5), 991–996.

- Jenson D, Harkrider AW, Thornton D, Bowers AL, & Saltuklaroglu T (2015). Auditory cortical deactivation during speech production and following speech perception: an EEG of the temporal dynamics of the auditory alpha rhythm. Frontiers in human neuroscience, 9:534.

- Jeunet C, N’Kaoua B, & Lotte F (2016). Advances in user-training for mental-imagery-based BCI control: Psychological and cognitive factors and their neural correlates. In Progress in brain research (Vol. 228, pp. 223–235). Elsevier.

- Jurcak V, Tsuzuki D, & Dan I (2007). 10/20, 10/10, and 10/5 systems revisited: their validity as relative head-surface-based positioning systems. Neuroimage, 34(4), 1600–1611.

- Jasper H (1958). The ten-twenty electrode system of the International Federation. Electroencephalography and Clinical Neurophysiology, 52(3), 3–6.

- Kasahara T, Terasaki K, Ogawa Y, Ushiba J, Aramaki H, & Masakado Y (2012). The correlation between motor impairments and event-related desynchronization during motor imagery in ALS patients. BMC neuroscience, 13:66.

- Kaufmann T, Schulz SM, Grünzinger C, & Kübler A (2011). Flashing characters with famous faces improves ERP-based brain–computer interface performance. Journal of neural engineering, 8(5), 056016.

- Kellicut-Jones MR, & Sellers EW (2018). P300 brain-computer interface: comparing faces to size matched non-face stimuli. Brain-Computer Interfaces, 5(1), 30–39.

- Kim DW, Hwang HJ, Lim JH, Lee YH, Jung KY, & Im CH (2011). Classification of selective attention to auditory stimuli: Toward vision-free brain–computer interfacing. Journal of Neuroscience Methods, 197, 180–185.

- Kirschstein T, & Kohling R (2009). What is the Source of the EEG? Clinical EEG and Neuroscience, 40(3), 146–149.

- Kübler A, Furdea A, Halder S, Hammer EM, Nijboer F, & Kotchoubey B (2009). A brain–computer interface controlled auditory event‐related potential (P300) spelling system for locked‐in patients. Annals of the New York Academy of Sciences, 1157(1), 90–100.

- Kübler A, Kotchoubey B, Hinterberger T, Ghanayim N, Perelmouter J, Schauer M, … & Birbaumer N (1999). The thought translation device: a neurophysiological approach to communication in total motor paralysis. Experimental Brain Research, 124(2), 223–232.

- Kübler A, Nijboer F, Mellinger J, Vaughan TM, Pawelzik H, Schalk G, … & Wolpaw JR (2005). Patients with ALS can use sensorimotor rhythms to operate a brain-computer interface. Neurology, 64(10), 1775–1777.

- Kuhlman WN (1978). Functional topography of the human mu rhythm. Electroencephalography and clinical neurophysiology, 44(1), 83–93.

- Kutas M & Hillyard S (1980a). Event-related brain potentials to semantically inappropriate and surprisingly large words. Biological Psychology, 11, 99–116.

- Kutas M & Hillyard S (1980b). Reading between the lines: Event-related brain potentials during natural sentence processing. Brain and Language, 11(2), 354–373.

- Kutas M & Hillyard S (1980c). Reading senseless sentences: Brain potentials reflect semantic incongruity. Science, 207, 203–205.

- Kutas M & Hillyard S (1982). The lateral distribution of event-related potentials during sentence processing. Neuropsychologia, 20(5), 579–590.

- Kutas M & Hillyard S (1983). Event-related brain potentials to grammatical errors and semantic anomalies. Memory and Cognition, 11(5), 539–550.

- Kutas M & Hillyard S (1984). Brain potentials reflect word expectancy and semantic association during reading. Nature, 307, 161–163.

- Kutas M & Federmeier K (2009). N400. Scholarpedia, 4(10), 7790.

- Kutas M & Federmeier K (2011). Thirty years and counting: Finding meaning in the N400 component of the event-related brain potential (ERP). Annual Review of Psychology, 62, 621–647.

- Lau E, Phillips C, & Poeppel D (2008). A cortical network for semantics: (de)constructing the N400. Nature Reviews Neuroscience, 9(12), 920–933.

- Lin Z, Zhang C, Wu W, & Gao X (2007). Frequency recognition based on canonical correlation analysis for SSVEP-Based BCIs. IEEE Transactions on Biomedical Engineering, 54(6), 1172–1176.

- Liégeois-Chauvel C, Lorenzi C, Trébuchon A, Régis J, & Chauvel P (2004). Temporal envelope processing in the human left and right auditory cortices. Cerebral Cortex, 14(7), 731–740.

- Lotte F (2014). A tutorial on EEG signal-processing techniques for mental-state recognition in brain–computer interfaces. In Guide to Brain-Computer Music Interfacing (pp. 133–161). Springer, London.

- Luck S (2014). An Introduction to the Event-Related Potential Technique, Second Edition. MIT Press.

- Lukhanina EP, Burenok YA, Mel’nik NA, & Berezetskaya NM (2006). Two phases of the contingent negative variation in humans: association with motor and mental functions. Neuroscience and behavioral physiology, 36(4), 359–365.

- Mannarelli D, Pauletti C, Locuratolo N, Vanacore N, Frasca V, Trebbastoni A, … & Fattapposta F (2014). Attentional processing in bulbar- and spinal-onset amyotrophic lateral sclerosis: insights from event-related potentials. Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration, 15(1–2), 30–38.

- Marchetti M, & Priftis K (2015). Brain–computer interfaces in amyotrophic lateral sclerosis: A metanalysis. Clinical Neurophysiology, 126(6), 1255–1263.

- McCord MS, & Soto G (2004). Perceptions of AAC: An ethnographic investigation of Mexican-American families. Augmentative and Alternative Communication, 20(4), 209–227.

- McFarland DJ, Sarnacki WA, Townsend G, Vaughan T, & Wolpaw JR (2011). The P300-based brain–computer interface (BCI): effects of stimulus rate. Clinical neurophysiology, 122(4), 731–737.

- Mehta J, Jerger S, Jerger J, & Martin J (2009). Electrophysiological Correlates of Word Comprehension: ERP and ICA Analysis. International Journal of Audiology, 48(1), 1–11.

- Mehta J & Jerger J (2014). Semantic priming effect across life span: an ERP study. International Journal of Audiology, 53(4), 235–242.

- Milton J, Small SL, & Solodkin A (2008). Imaging motor imagery: methodological issues related to expertise. Methods, 45(4), 336–341.

- Morash V, Bai O, Furlani S, Lin P, & Hallett M (2008). Classifying EEG signals preceding right hand, left hand, tongue, and right foot movements and motor imageries. Clinical neurophysiology, 119(11), 2570–2578.

- Müller-Putz GR, Scherer R, Brauneis C, & Pfurtscheller G (2005). Steady-state visual evoked potential (SSVEP)-based communication: Impact of harmonic frequency components. Journal of Neural Engineering, 2(4), 123–130.

- Nagai Y, Critchley HD, Featherstone E, Fenwick PBC, Trimble MR, & Dolan RJ (2004). Brain activity relating to the contingent negative variation: an fMRI investigation. Neuroimage, 21(4), 1232–1241.

- Neumann N, & Birbaumer N (2003). Predictors of successful self control during brain-computer communication. Journal of Neurology, Neurosurgery & Psychiatry, 74(8), 1117–1121.

- Neuper C, & Pfurtscheller G (2001). Event-related dynamics of cortical rhythms: frequency-specific features and functional correlates. International journal of psychophysiology, 43(1), 41–58.

- Neuper C, Scherer R, Reiner M, & Pfurtscheller G (2005). Imagery of motor actions: Differential effects of kinesthetic and visual–motor mode of imagery in single-trial EEG. Cognitive brain research, 25(3), 668–677.

- Neville H, Coffey S, Holcomb P, & Tallal P (1993). The neurobiology of sensory and language processing in language impaired children. Journal of Cognitive Neuroscience, 5, 235–253.

- Neville H, Coffey S, Lawson D, Fischer A, Emmorey K, & Bellugi U (1997). Neural systems mediating American sign language: effects of sensory experience and age of acquisition. Brain and Language, 57, 285–308.

- Nijboer F, Furdea A, Gunst I, Mellinger J, McFarland DJ, Birbaumer N, & Kübler A (2008). An auditory brain–computer interface (BCI). Journal of neuroscience methods, 167(1), 43–50.

- Obermaier B, Muller GR, & Pfurtscheller G (2003). “Virtual keyboard” controlled by spontaneous EEG activity. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 11(4), 422–426.

- Oken BS, Orhan U, Roark B, Erdogmus D, Fowler A, Mooney A, … & Fried-Oken MB (2014). Brain–computer interface with language model–electroencephalography fusion for locked-in syndrome. Neurorehabilitation and neural repair, 28(4), 387–394.

- Pfurtscheller G, Brunner C, Schlögl A, & Da Silva FL (2006). Mu rhythm (de)synchronization and EEG single-trial classification of different motor imagery tasks. NeuroImage, 31(1), 153–159.

- Pfurtscheller G, & Da Silva FL (1999). Event-related EEG/MEG synchronization and desynchronization: basic principles. Clinical neurophysiology, 110(11), 1842–1857.

- Pfurtscheller G, & Neuper C (2009). Dynamics of sensorimotor oscillations in a motor task. In Brain-Computer Interfaces (pp. 47–64). Springer, Berlin, Heidelberg.

- Pfurtscheller G, & Neuper C (1997). Motor imagery activates primary sensorimotor area in humans. Neuroscience Letters, 239(2–3), 65–68.

- Peters B, Higger M, Quivira F, Bedrick S, Dudy S, Eddy B, … & Erdogmus D (2018). Effects of simulated visual acuity and ocular motility impairments on SSVEP brain-computer interface performance: an experiment with Shuffle Speller. Brain-Computer Interfaces, 5(2–3), 58–72.

- Pitt K, & Brumberg J (2018a). A Screening Protocol Incorporating Brain-Computer Interface Feature Matching Considerations for Augmentative and Alternative Communication. Assistive Technology, 1–15.

- Pitt KM, & Brumberg JS (2018b). Guidelines for Feature Matching Assessment of Brain-Computer Interfaces for Augmentative and Alternative Communication. American Journal of Speech-Language Pathology, 27(3), 950–964.