Abstract

In low- and middle-income countries (LMICs), e-learning for medical education may alleviate the burden of severe health worker shortages and deliver affordable access to high quality medical education. However, diverse challenges in infrastructure and adoption are encountered when implementing e-learning within medical education in particular. Understanding what constitutes successful e-learning is an important first step for determining its effectiveness. The objective of this study was to systematically review e-learning interventions for medical education in LMICs, focusing on their evaluation and assessment methods.

Nine databases were searched for publications from January 2007 to June 2017. We included 52 studies with a total of 12,294 participants. Most e-learning interventions were pilot studies (73%), which mainly employed summative assessments of study participants (83%) and evaluated the e-learning intervention with questionnaires (45%). Study designs, evaluation and assessment methods showed considerable variation, as did the study quality, evaluation periods, outcome and effectiveness measures. Included studies mainly utilized subjective measures and custom-built evaluation frameworks, which resulted in both low comparability and poor validity. The majority of studies concluded that their own e-learning intervention was effective, thus indicating potential benefits of e-learning for LMICs. However, MERSQI and NOS ratings revealed the low quality of the studies' evidence for comparability, evaluation instrument validity, study outcomes and participant blinding. Many e-learning interventions were small-scale and conducted as short-term pilots. More rigorous evaluation methods for e-learning implementations in LMICs are needed to understand the strengths and shortcomings of e-learning for medical education in low-resource contexts. Valid and reliable evaluations are the foundation to guide and improve e-learning interventions, increase their sustainability, alleviate shortages in health care workers and improve the quality of medical care in LMICs.

Keywords: e-learning, Country-specific developments, Evaluation methodologies, Evaluation of CAL systems, Post-secondary education, Medical education

Highlights

• Most e-learning evaluations in low- and middle-income countries produce weak evidence.
• Predominantly learner characteristics are evaluated with qualitative methods.
• A multitude of outcome measures and evaluation methods are employed.
• Evaluations following multi-dimensional approaches enable comprehensive insights.
• Generating strong scientific evidence is key to implementing e-learning successfully.

1. Introduction

To achieve the 2030 Sustainable Development Goals (SDGs) for health–specifically, goal 3 (Good Health and Well-Being) and goal 4 (Quality Education)–low- and middle-income countries (LMICs) need effective and affordable education strategies to address critical health worker shortages (Al-Shorbaji, Majeed, & Wheeler, 2015, p. 156; Bollinger et al., 2013). This is particularly crucial considering the significant challenges most LMICs face with limited health care budgets (World Health Organization, 2014), insufficient qualified health workers (Mills, 2014), limited access to equipment and infrastructure, and a lack of continuing professional development and continuing medical education programs (Mack, Golnik, Murray, & Filipe, 2017). Training facilities and resources for health workers in LMICs are insufficient, and a substantial number of the few trained professionals are subject to “brain drain” when regularly recruited to high-income countries (Aluttis, Bishaw, & Frank, 2014). E-learning interventions for medical education could be of great benefit for targeting good health and well-being and quality education, especially in low-resource settings (Al-Shorbaji, Atun, Car, Majeed, & Wheeler, 2015; Dawson, Walker, Campbell, & Egede, 2016; Liu et al., 2016; World Health Organization, 2016a,b). Current technological progress in hardware, software and affordable internet connectivity enables broader technology usage in low-resource settings than a few years ago (Mack et al., 2017). The question seems to be not “if”, but rather “how” technology will be most beneficial in LMICs. The Lancet Global Commissioners for the Education of Health Professionals for the 21st Century stated, “The effect of electronic learning (e-learning) is likely to be revolutionary, although how precisely it will revamp professional education remains unknown” (Frenk et al., 2010, p. 1944).
One potential target for medical e-learning could be to strengthen practical training for health workers and continuing medical education, since both are currently inadequate in quantity and quality in LMICs (Al-Shorbaji, Atun, Car, Majeed, & Wheeler, 2015, p. 156; Asongu & Le Roux, 2017; Childs, Blenkinsopp, Hall, & Walton, 2005; Mack et al., 2017; World Health Organization, 2016b). Currently, low-resource contexts are faced with a lack of infrastructure, i.e., insufficient classrooms, lecture halls and dorms, and medical educators, which leads to a very limited capacity to enroll and educate students (Frenk et al., 2010). Infrastructure upscaling needs substantial investment, and staff upscaling requires a substantial investment in training. E-learning offers the possibility to quickly scale up training without the need for simultaneous resource-intensive infrastructure upscaling. E-learning may bridge distances in rural areas by giving access to up-to-date information in medical education, and may reduce the need to teach theory-based, on-site classes where there are a limited number of medical teachers. E-learning could strengthen the quantity and quality of medical education in LMICs through blended learning approaches wherein, for example, e-learning covers the theoretical and procedural training and face-to-face training covers practical skills. Since e-learning, once implemented, can be adapted and extended to new technological advancements such as artificial intelligence, its potential for training health care workers in LMICs could be groundbreaking. Yet, sound scientific evidence on the effect of e-learning for medical education in LMICs, including its strengths and shortcomings, is limited (Frehywot et al., 2013).

2. Background

Current systematic reviews of e-learning for medical education mainly focus on high-income countries (Al-Shorbaji et al., 2015, p. 156; Liu et al., 2016; Nicoll, MacRury, Van Woerden, & Smyth, 2018). A systematic review by the World Health Organization (WHO) (Al-Shorbaji et al., 2015, p. 156) examined global e-learning and blended learning methods and their effect on knowledge, skills, attitudes and satisfaction in comparison to other methods (traditional, alternative e-learning/blended learning, no intervention). Al-Shorbaji et al. (2015, p. 156) highlighted that only five studies have been conducted in LMICs, making their findings potentially inapplicable for low-resource contexts because the students' educational backgrounds may differ and have an impact on their perceptions and overall e-learning experiences.

Frehywot et al. (2013) focused their review on medical education in general in LMICs (not e-learning specifically) and summarized the current scope of literature for low-resource contexts. They described respective approaches to incorporating e-learning in a low-resource context and subsequent evaluation, and found significant variation, but a majority of blended learning approaches (Frehywot et al., 2013). The effectiveness of e-learning was mainly evaluated by comparing e-learning to other learning approaches, such as traditional teaching methods, or by evaluating students' and teachers' attitudes (Frehywot et al., 2013). A more recent systematic review by Nicoll et al. (2018), which did not incorporate low-resource contexts, examined evaluation and assessment tools as well as frameworks of technology-enhanced learning approaches (specifically, digitally mediated and blended approaches) for continuing education of healthcare professionals. Overall, the authors identified a lack of standard evaluation tools for technology-enhanced health care education programs, thus pointing to the limited validity of the evaluation results (Nicoll et al., 2018). Focusing specifically on the assessment of knowledge, Liu et al. (2016) conducted a meta-analysis comparing the effect of blended learning on health professional knowledge acquisition and found that blended learning may have a neutral to positive effect compared to non-blended instruction (pure e-learning), as well as a largely positive effect compared to no intervention in this context. Based on the results of the two studies, there are indications that e-learning may be beneficial for medical education, but there is very limited evidence for its impact in LMICs (Al-Shorbaji et al., 2015, p. 156; Frehywot et al., 2013; Frenk et al., 2010).
Furthermore, the validity of e-learning evaluation results is limited due to the heterogeneity of e-learning interventions and evaluation approaches employed, making comparability and meta-synthesis difficult (Clunie, Morris, Joynes, & Pickering, 2017; Nicoll et al., 2018). Currently, there are a limited number of available studies that consider the context of LMICs, where e-learning could be an enabling factor to ameliorate the current critical scarcity of health workers. Tailored evaluation methods for assessing e-learning in the context of medical education in LMICs have not been addressed in current literature. Therefore, this review addresses the knowledge gap in evaluation methods for e-learning interventions for medical education in low-resource contexts. We systematically reviewed e-learning evaluation approaches for medical education, especially in low-resource contexts, to document their current e-learning implementation and effectiveness, and to distill lessons learned. In particular, we systematically reviewed the evaluation methods and outcome measures used for asynchronous medical e-learning, since poor internet connections in these settings do not allow for synchronous e-learning.

Guiding questions for this study were: (a) “How has medical e-learning been implemented in low-resource contexts?”, (b) “What e-learning evaluation and assessment methods have been employed in the included studies?”, (c) “Which outcome measures have been evaluated?”, and (d) “How has success for e-learning interventions been defined?”

3. Methods

We conducted a systematic literature review “to collate all evidence that fits pre-specified eligibility criteria” on e-learning in low-resourced settings (Higgins & Green, 2011, p. 1:1). Systematic reviews aim to “minimize bias by using explicit, systematic methods” (Higgins & Green, 2011, p. 1:1) and by gathering, synthesizing and reviewing study findings (Jesson, Matheson, & Lacey, 2011). We conducted the systematic review in line with the Cochrane Handbook for Systematic Reviews of Interventions (Higgins & Green, 2011). The review was undertaken in distinct stages: identifying inclusion and exclusion criteria, choosing data sources and search strategies, performing quality assessments of included studies, coding data and summarizing results, and conducting data analysis. The details of these stages are described in the following sub-sections.

Furthermore, the PICOS (population, intervention, comparison, outcome, study design) framework guided search term development for the systematic review and was the foundation for study inclusion and exclusion criteria (see Table 1) (Moher et al., 2009). The review was reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) checklist (Moher et al., 2009) (see Appendix A).

Table 1.

Inclusion criteria according to the PICOS framework.

| Parameter | Description |
|---|---|
| Population | Health care professionals comprising medical students and medical doctors taking part in an educational endeavor taking place in LMICs. |
| Intervention | Studies that have evaluated asynchronous e-learning for medical education. |
| Comparison | Studies may have no comparison or comparator. |
| Outcome | Studies must include (i) evaluation methods used for asynchronous e-learning for medical education and (ii) outcome measures within their e-learning intervention. |
| Studies | All study types that were published in English between 2007 and 2018 in peer-reviewed journals or as conference papers. Opinion papers, commentaries, editorial notes, systematic reviews and meta-analyses were excluded. |

3.1. Data sources

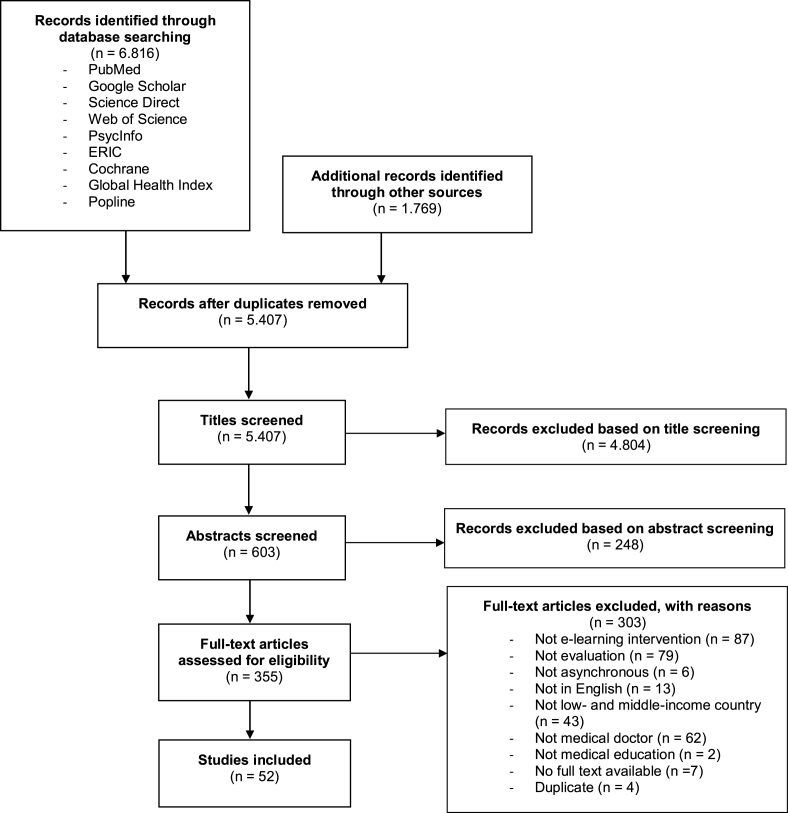

Peer-reviewed literature and grey literature were searched in the following databases: Cochrane Library, ERIC, PsycInfo, PubMed, Science Direct, Web of Science, 3ie, Cabi, Eldis, Global Health Index, Global Index Medicus, GreyLit.org, World Bank IMF, Medbox, OAIster WorldCat, OpenGrey, Popline, SciDevNet, CRD and USAID. The search strategy and terms followed the PICOS framework (see Appendix B). Only results from the following nine databases were included since the others yielded no results: PubMed, Google Scholar, Science Direct, Web of Science, PsycInfo, ERIC, Cochrane, Global Health Index, Popline (see Fig. 1).

Fig. 1.

PRISMA flowchart (Moher et al., 2009) showing study selection process.

3.2. Search strategy

Peer-reviewed studies and grey literature were included only if they were available in English; participants were medical students and/or medical doctors (excluding dentists, nurses and other health workers); e-learning was asynchronous; and the study was conducted in LMICs according to May 2017 World Bank classifications (World Bank, 2017). The selected population was limited to medical doctors and medical students since they provide the highest level of care in national health systems and have been found to be the predominant groups that currently utilize e-learning for medical education (Frehywot et al., 2013). The search was not restricted to a particular study design since the literature pre-screening indicated a heterogeneity of study designs including randomized controlled trials (RCTs), case studies, cross-sectional studies, and observational studies. Asynchronous e-learning was defined as content delivered offline, e.g., self-paced e-learning modules, and did not include live video conferencing or live virtual classrooms, which are examples of synchronous e-learning and not included in this review (Ruggeri, Farrington, & Brayne, 2013). We only included studies conducted after 2007 to allow for comparable e-learning technologies used in medical education. Commentaries, editorial notes, systematic reviews, meta-analyses and opinion articles were all excluded.

3.3. Study selection

Of the initial 8,585 identified references, 5,407 unique references remained after duplicate removal (see Fig. 1 for PRISMA flowchart showing detailed numbers of studies available at each stage). Three researchers (SB, DG, MMJ) independently screened all titles after randomly dividing the articles into three groups. If inclusion was uncertain, papers were left for the title or abstract review stages. An additional 5,052 records were excluded after title and abstract screening. References were randomly distributed for abstract screening among the aforementioned three researchers. If there was disagreement or insufficient information during abstract screening, we left the respective references for full-text screening and randomly distributed them again among the three researchers. After examining the full texts of the remaining 355 studies, 52 eligible full-text studies were identified and retained for the review. No additional relevant articles were found after reviewing the references of the included full-text records (included studies are listed in Appendix C). Conflicts that arose during study selection were discussed within the research group until mutual consensus was reached.
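The screening counts reported above can be cross-checked with simple arithmetic. The following is a minimal sketch that uses only the figures stated in this section; the variable names are illustrative, and the derived counts (duplicates removed, full-text exclusions) are computed here rather than quoted from the text.

```python
# Counts reported in Section 3.3 (variable names are illustrative).
identified = 8585          # references found by the database search
unique = 5407              # remaining after duplicate removal
excluded_screening = 5052  # excluded after title and abstract screening
included = 52              # studies retained after full-text review

# Derived counts for each stage of the selection process.
duplicates_removed = identified - unique
full_text_assessed = unique - excluded_screening
excluded_full_text = full_text_assessed - included

print(duplicates_removed)   # 3178
print(full_text_assessed)   # 355, matching the number stated in the text
print(excluded_full_text)   # 303
```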

3.4. Quality assessment

We reviewed and rated included studies on three quality scores: Medical Education Research Study Quality Instrument (MERSQI) (Cook & Reed, 2015; Reed, Beckman, et al., 2008), Newcastle-Ottawa Scale (NOS) (Stang, 2010), and Newcastle-Ottawa Scale-Education (NOS-E) (Cook & Reed, 2015). The objective was to use these frameworks to determine the quality of the included studies, their various study designs (credibility), and compare the quality measures in order to rate the adequacy of the e-learning intervention (see Appendix C). Domain items of the NOS-E framework include the representativeness of the intervention group, selection and comparability of the comparison group, study retention and assessment blinding. The NOS framework items include the representativeness of exposed cohort, selection of the non-exposed cohort, ascertainment of exposure, demonstration that an outcome of interest was not present at the start of the study, comparability of cohorts based on the design or analysis, outcome assessment, adequate follow-up for outcomes to occur and adequate cohort follow-up. The MERSQI framework items include study design, sampling, type of data, the validity of evaluation instrument scores, data analysis, and outcome. The median was the threshold for categorizing the studies as low or high quality (Cook et al., 2011). We calculated interrater reliability based on the intraclass correlation coefficient (ICC) employing a two-way mixed effects model with mean of k (k = 3) raters according to absolute agreement (see Appendix H for ICC ratings). ICCs and their respective confidence intervals were calculated for MERSQI, NOS, and NOS-E scores and were interpreted following the categorization scheme described in Landis & Koch (1977).
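To illustrate what an average-measures, absolute-agreement ICC computes, the sketch below derives the point estimate from the two-way ANOVA mean squares in its commonly given form, (MS_rows − MS_error) / (MS_rows + (MS_cols − MS_error) / n). It is an assumption that this matches the software output used in the review; the function name `icc_a_k` and the example ratings are hypothetical, and confidence intervals are not computed.

```python
def icc_a_k(scores):
    """ICC point estimate for absolute agreement, mean of k raters.

    scores: list of rows, one row per rated study, one column per rater.
    """
    n = len(scores)       # number of rated studies
    k = len(scores[0])    # number of raters
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares (studies = rows, raters = columns).
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    return (ms_rows - ms_error) / (ms_rows + (ms_cols - ms_error) / n)


# Hypothetical ratings (not study data): rater 2 scores every study
# exactly one point higher than rater 1.
biased = [[1, 2], [2, 3], [3, 4]]
print(icc_a_k(biased))
```

Because the coefficient measures absolute agreement, a constant offset between raters lowers the value even though the raters rank the studies identically; with identical ratings across raters the estimate equals 1.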

3.5. Data coding

Included studies were randomly split among three researchers (SB, DG, MMJ) for data coding and extraction. Pertinent data from studies were coded using the qualitative data analysis computer software NVivo and later cleaned using a cloud-based spreadsheet to allow for simultaneous document edits. The researchers independently extracted data and information according to 29 aspects: author name, title of publication, year of publication, country of intervention, institution, objective, study design, study cohort, study limitations, stage of training, type of evaluation, type of intervention, timing of evaluation, duration of evaluation, framework used, evaluation methods, course content, course delivery method, sampling size for evaluation, response rate to the evaluation, units of outcome measurement, data analysis methods, outcome measures, challenges, overall results, conclusions, success reported (by studies), outlook, and recommendations.

3.6. Data analysis

Studies were classified as quantitative, qualitative, or mixed methods and whether they utilized subjective or objective measures based on definitions by David and Sutton (2004) (see Table 2). Furthermore, evaluation methods of included studies were categorized into six groups, following the main evaluation methods for e-learning as discussed in Phillips, McNaught, and Kennedy (2012): (a) questionnaire, (b) document review, (c) interviews, (d) focus groups, (e) system log data and (f) knowledge testing (see Appendix D for classification criteria, and Appendix E for assessment and evaluation methods).

Table 2.

Overview of terminology definitions.

| Term | Definition |
|---|---|
| assessment | The systematic collection of individual learner achievements, also of groups, with regard to knowledge, ability and advancement, usually measured with marks or percentages (Gibbs, Brigden, & Hellenberg, 2006) |
| asynchronous | Content delivery that occurs at a different time than the student receives it and thus may be carried out while the student is offline (Wagner, Hassanein, & Head, 2008) |
| blended learning | E-learning supplemented by face-to-face training (Moore et al., 2011), or vice versa |
| content type | Category of media used for an e-learning intervention (e.g. PowerPoints, videos, audio) |
| delivery method | E-learning delivery techniques and characteristics (e.g., via mobile devices, computer-lab in school) |
| diagnostic assessment | Pre-assessment to determine user knowledge and skills prior to the intervention (Black, 1993) |
| distance learning | Provision of access to learning for those who are located geographically separated from the instructor (Moore et al., 2011) |
| document review | Evaluation method using audit and analysis of design documents or documents produced by learners as they use an e-learning environment (Phillips et al., 2012) |
| e-learning | The use of computer technology to deliver training including technology-supported learning either online, offline or both (Moore et al., 2011) |
| evaluation | The measurement of educational processes, content and adequacy for interventions, activities, programs, curricula (Gibbs et al., 2006) |
| evaluation timing | Point in time when the e-learning evaluation is completed (prior, during, or post intervention) |
| formative assessment | The continuous, often informal communication between teacher and learner/s to provide feedback on strengths, weaknesses and potential improvements, generally more descriptive and qualitative (Gibbs et al., 2006) |
| interactive content | E-learning content that makes use of multimedia content, such as text, graphics, audio and/or video and provides options for navigation, interaction and communication (Hofstetter, 2001) |
| podcasts | “A video and/or audio file made available in digital format for download over the Internet” (Merriam-Webster, 2018) |
| static content | E-learning content with limited interactivity and content engagement for learners (Zhang & Zhou, 2003) |
| summative assessment | The end-point of an assessment, often formal, whose character is more numeric and quantitative (Gibbs et al., 2006) |
| units of measurement | The manner or scale in which the intervention outcome is measured, such as a Likert-scale |

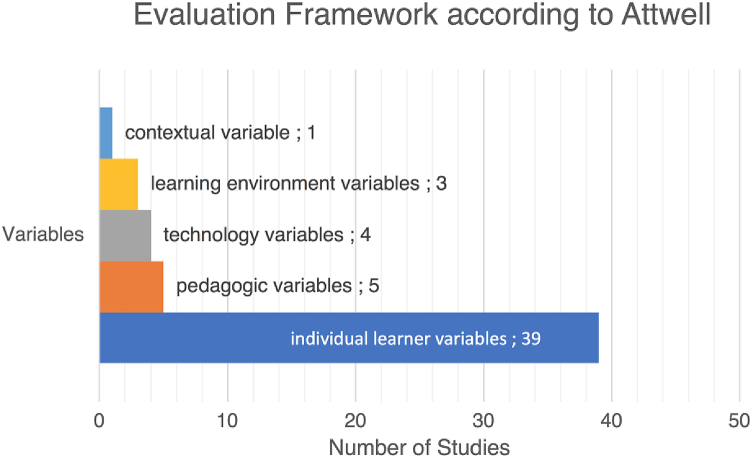

Attwell's (2006) five levels were used to categorize the effectiveness of e-learning implementations:

• individual learner variables (physical characteristics, learning history, learner attitude, and learner motivation);
• learning environment variables (immediate learning environment, organizational or institutional environment, and the subject environment);
• contextual variables (socio-economic factors, political context, cultural background, and geographic location);
• technology variables (hardware, software, connectivity, media, and mode of delivery);
• pedagogic variables (level and nature of learner support systems, accessibility issues, methodologies, flexibility, learner autonomy, selection and recruitment, assessment and examination, accreditation, and certification).

An intervention was determined to be effective based on the study's description and was categorized as (a) successful, (b) not successful or (c) non-applicable (no report on the success of the intervention).

Furthermore, studies were classified as cross-sectional if they gathered information from participants at one point in time (i.e., surveys), or experimental if they included non-controlled studies, one self-controlled group, or two or more groups with a control and intervention group. Finally, the included studies were categorized as pilots or fully implemented studies.

4. Results

4.1. Synthesis of findings

A total of 52 articles published from January 2007 to June 2017 were included in this review (see summary of extracted data in Appendix F). The number of participants within the studies ranged from 3 (Abdollahi, Sheikhbahaei, Meysamie, Bakhshandeh, & Hosseinzadeh, 2014) to 2,897 (Kotb, Elmahdy, Khalifa, El-Deen, & Lotfi, 2015). The duration of the interventions ranged from five days (Florescu, Mullen, Nguyen, Sanders, & Vu, 2015) to six months (Walsh & De Villiers, 2015), and the average duration of an e-learning intervention was 12 weeks. Detailed study characteristics and features of the employed e-learning system and evaluation methods are shown in Table 3 and Table 4, respectively, and are presented in more detail in the following paragraphs.

Table 3.

Study characteristics of 52 included studies describing an e-learning program evaluation that was implemented in a low- and middle-income country (Jan 2007–June 2017).

| Study characteristics | Number of participants, n (%) | Number of interventions, n (%) |
|---|---|---|
| Total | 12,294 (100%) | 52 (100%) |
| Study design | | |
| Cross-sectional or not controlled | 7,172 (58%) | 27 (52%) |
| Experimental (1 group, self-controlled) | 2,388 (19%) | 7 (13%) |
| Experimental (2 or 3 groups, intervention and control) | 2,734 (23%) | 18 (35%) |
| Randomized | 938 (8%) | 11 (22%) |
| Non-randomized | 11,356 (92%) | 41 (78%) |
| Objective (b) | 519 (4%) | 11 (21%) |
| Subjective (b) | 2,779 (23%) | 19 (37%) |
| Mix of subjective and objective (b) | 8,996 (73%) | 22 (42%) |
| Qualitative | 3,106 (25%) | 4 (8%) |
| Quantitative | 8,844 (72%) | 39 (75%) |
| Mixed methods | 344 (3%) | 9 (17%) |
| Country income groups | | |
| Low-income country | N/A (a) | 1 (2%) |
| Lower-middle-income country | 4,200 (34%) | 17 (33%) |
| Upper-middle-income country | 7,890 (64%) | 33 (63%) |
| All of the above combined | 204 (2%) | 1 (2%) |
| Geographical location (b) | | |
| Africa | 6,498 (51%) | 12 (22%) |
| Asia | 1,726 (14%) | 24 (44%) |
| Europe | 204 (2%) | 3 (6%) |
| South America | 4,322 (33%) | 15 (28%) |
| Cohort/target group (b) | | |
| Medical students (undergraduate) | 9,311 (72%) | 34 (61%) |
| Medical students and Residents | 71 (1%) | 1 (2%) |
| Medical students, Residents and Physicians | 798 (6%) | 2 (4%) |
| Residents (postgraduate) | 310 (2%) | 5 (9%) |
| Residents and Physicians | 276 (2%) | 3 (5%) |
| Physicians (work-experienced) | 2,185 (17%) | 7 (13%) |

Characteristics described in more than one study are indicated. The number of participants and number of e-learning interventions are depicted in absolute numbers and the percentage is based on the absolute numbers of participants and studies for each individual characteristic.

(a) N/A: number of participants not explicitly stated in the study.

(b) More than one option possible for each study.

Table 4.

Features of the e-learning system, and characteristics of assessment and evaluation methods of 52 included studies on LMICs e-learning program evaluation (Jan 2007–June 2017).

| Features of the e-learning system | Number of participants, n (%) | Number of e-learning interventions, n (%) |
|---|---|---|
| Stage of implementation (a) | | |
| pilot | 4,690 (34%) | 38 (72%) |
| implemented | 9,611 (66%) | 16 (28%) |
| E-learning access | | |
| online | 9,458 (77%) | 38 (73%) |
| offline | 300 (2%) | 4 (8%) |
| online and offline | 2,536 (20%) | 10 (20%) |
| Format of e-learning delivery | | |
| mobile-based | 334 (3%) | 5 (9%) |
| computer-based | 9,301 (76%) | 39 (75%) |
| mobile- and computer-based | 2,236 (18%) | 3 (6%) |
| unspecified | 423 (3%) | 5 (10%) |

| Assessment and evaluation | Number of participants, n (%) | Number of e-learning interventions, n (%) |
|---|---|---|
| Assessment and evaluation method (a, b) | | |
| questionnaire | 12,140 (44.6%) | 46 (47%) |
| knowledge testing | 8,732 (32%) | 35 (35%) |
| document review | 43 (0.2%) | 1 (1%) |
| interviews | 110 (0.4%) | 3 (3%) |
| focus groups | 228 (0.8%) | 6 (6%) |
| system log data | 6,001 (22%) | 8 (8%) |
| Assessment type (a) | | |
| Diagnostic | 2,187 (14%) | 30 (35%) |
| Formative | 2,569 (17%) | 8 (9%) |
| Summative | 10,339 (69%) | 48 (56%) |
| Number of assessment and evaluation methods employed | | |
| 1 method type | 1,133 (9%) | 19 (37%) |
| 2 method types | 4,495 (37%) | 12 (23%) |
| 3 method types | 5,933 (48%) | 16 (31%) |
| 4 method types | 438 (4%) | 4 (8%) |
| 5 method types | 295 (2%) | 1 (2%) |
| Quality score (c) | | |
| MERSQI ≥ 10.625 | 2,025 (17%) | 26 (50%) |
| MERSQI < 10.625 | 10,269 (83%) | 26 (50%) |
| NOS-E ≥ 2.5 | 6,550 (53%) | 33 (64%) |
| NOS-E < 2.5 | 5,774 (47%) | 19 (36%) |
| NOS ≥ 5 | 5,408 (44%) | 32 (62%) |
| NOS < 5 | 6,886 (56%) | 20 (38%) |

| Learning outcomes (assessment) | Number of participants, n (%) | Number of e-learning interventions, n (%) |
|---|---|---|
| Outcome measurement (a, d) | | |
| 1. knowledge | 6,618 | 31 (16%) |
| 2. satisfaction | 6,613 | 20 (10%) |
| 3. usage | 8,680 | 17 (9%) |
| 4. perceptions | 7,681 | 14 (7%) |
| 5. attitude | 963 | 10 (5%) |
| 6. opinion | 2,127 | 10 (5%) |
| 7. skills | 335 | 9 (5%) |
| 8. usefulness | 2,348 | 8 (4%) |
| 9. demographics | 2,513 | 7 (4%) |
| 10. ease of use | 333 | 4 (2%) |
| 11. usability | 156 | 4 (2%) |
| 12. acceptance | 289 | 4 (2%) |
| 13. experience | 330 | 3 (2%) |
| 14. self-perceived confidence | 48 | 3 (2%) |
| 15. learning relevance | 243 | 3 (2%) |
| Response rate (to assessment) | | |
| 100% | 665 (5%) | 14 (27%) |
| 80–99% | 2,809 (23%) | 16 (31%) |
| 50–79% | 2,672 (22%) | 7 (13%) |
| below 50% | 3,806 (31%) | 5 (10%) |
| NA (e) | 2,342 (19%) | 10 (19%) |
| Success reported | | |
| Yes | 10,349 (84%) | 45 (87%) |
| No | 1,184 (10%) | 5 (10%) |
| NA (e) | 761 (6%) | 2 (4%) |

Characteristics described in more than one study are indicated. The number of participants and the number of e-learning interventions are depicted in absolute numbers and the percentage is based on the absolute numbers of participants and studies for each individual characteristic.

(a) More than one option possible for each study.

(b) Participant numbers separately counted for each option.

(c) Scores between raters averaged.

(d) Measurement outcomes only included if study n ≥ 3; for a detailed list, see Appendix I.

(e) N/A: number of participants not explicitly stated in study.

4.2. Implementation of E-learning interventions: results of individual studies

4.2.1. Overview of included studies

The majority of the 52 included studies focused on e-learning for medical undergraduates (65%), while others targeted continuing medical education and professional development for physicians (see Table 3 for detailed study characteristics). Almost all of the studies (92%) made use of a summative assessment method, which was most often used in combination with a diagnostic (baseline) assessment prior to the intervention (58%). A few studies (15%) also made use of formative assessments during the intervention. The included studies covered a total of 25 countries, whereby almost two-thirds of the studies were from upper-middle-income countries (UMICs) (63%), followed by studies from lower-middle-income countries (33%) and only one study from a low-income country (LIC). We found that the majority of studies (73%) involved e-learning in pilots, and only 31% of the studies had progressed beyond the pilot stage. Most e-learning approaches were tailored for online access (delivery mode not specified) (73%), while a small number specified mobile online access, such as via smartphones (10%), and a few were based on offline approaches (8%).

4.2.2. Study design

Cross-sectional study designs with no control group were the most common (52%). Experimental designs were mainly implemented with two or three groups for the intervention and control (35%) or a single self-controlled group (13%). Non-random assignment to intervention or control group was predominant (79%), although a few studies did randomize group assignments (21%). Most studies (75%) had used quantitative methods, while a few studies used mixed-methods (17%) or a fully qualitative approach (8%). Most of the quantitative studies (79%) were based on subjective measures. In general, the effectiveness of the quantitative methods was measured by study participants' subjective self-reported data (perception) (40%), objective data (17%) or a mix of both (25%).
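The shares quoted in this subsection follow from the intervention counts in Table 3 divided by the 52 included studies. The sketch below reproduces that arithmetic; the dictionary labels and the helper `percent_of_studies` are illustrative, and rounding to the nearest whole percent is an assumption about how the figures were derived.

```python
TOTAL_STUDIES = 52

# Intervention counts taken from Table 3 (labels shortened for illustration).
counts = {
    "cross-sectional or not controlled": 27,
    "experimental, one self-controlled group": 7,
    "experimental, two or three groups": 18,
    "randomized": 11,
    "non-randomized": 41,
    "quantitative": 39,
    "mixed methods": 9,
    "qualitative": 4,
}


def percent_of_studies(count, total=TOTAL_STUDIES):
    """Share of included studies, rounded to the nearest whole percent."""
    return round(100 * count / total)


shares = {label: percent_of_studies(n) for label, n in counts.items()}
for label, share in shares.items():
    print(f"{label}: {share}%")
```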

4.2.3. E-learning contents

The scope of the content covered 35 preclinical and clinical subjects (see Appendix F for details). The most frequently taught e-learning topic was pediatrics (10%), followed by anatomy (6%) and emergency medicine (6%).

In several studies, static content was offered in PowerPoint presentations alongside video content visualizing medical procedures and examinations, and audio-visual content, such as enhanced podcasts showing presentation slides with recorded audio or animation. Content included digital textbooks, scientific papers, lecture notes, original drawings, and links to relevant websites. The interactive e-learning segments covered assignments with case scenarios and questions, self-tests with peer feedback, quizzes including photo quizzes, crosswords, matching puzzles, online-based question and answer sessions, as well as recorded video-conferencing sessions made available as asynchronous e-learning materials.

4.3. Data analysis

The data analysis of e-learning studies generally included inferential statistics (58%), while 42% of studies included descriptive statistics. Inferential statistics were reported alone in just under one-third of the studies (29%) or in combination with descriptive statistics (30%); a solely descriptive approach was rarely reported (12%). Some studies did not describe their data analysis at all or used no statistical tests (29%). The most frequent analysis method was a t-test (46%), reported as a two-sample t-test (23%), a paired two-sample t-test (15%), or an unpaired two-sample t-test (8%). Studies mentioned using a t-test to compare the performance of two groups to determine the significance of the effect of the intervention (Bediang et al., 2013), or to compare pre-test to post-test scores (Chang et al., 2012; Vieira et al., 2017), grades (Gazibara et al., 2015), cognitive load in both learning environments (Grangeia et al., 2016) or mean scores of experimental and control groups (Tomaz, Mamede, Filho, Roriz Filho, & van der Molen, 2015). A chi-square test was used in a few studies (12%), while other data analysis methods included the Wilcoxon-Mann-Whitney test (6%), Levene's test (4%), linear regression (4%), variance analysis (4%), Spearman's rank-order correlation (2%), Bonferroni's correction (2%), and the Kruskal-Wallis test (2%).
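To make the distinction between the paired and unpaired t-tests mentioned above concrete, the following Python sketch computes both test statistics from first principles. It is an illustration only: the function names `paired_t` and `unpaired_t` and all scores are hypothetical and are not taken from any included study, and the unpaired variant assumes equal variances (Student's pooled form), which individual studies may not have used.

```python
import math
import statistics


def paired_t(pre, post):
    """Paired t statistic for pre-/post-test scores of the same participants.

    Returns (t, degrees_of_freedom).
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must have the same length")
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    mean_diff = statistics.mean(diffs)
    sd_diff = statistics.stdev(diffs)  # sample standard deviation
    return mean_diff / (sd_diff / math.sqrt(n)), n - 1


def unpaired_t(group_a, group_b):
    """Student's two-sample t statistic with pooled variance.

    Assumes independent groups with equal variances.
    Returns (t, degrees_of_freedom).
    """
    na, nb = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)
    var_b = statistics.variance(group_b)
    pooled = ((na - 1) * var_a + (nb - 1) * var_b) / (na + nb - 2)
    std_err = math.sqrt(pooled * (1 / na + 1 / nb))
    t = (statistics.mean(group_a) - statistics.mean(group_b)) / std_err
    return t, na + nb - 2


# Hypothetical knowledge-test scores, for illustration only.
pre_test = [10, 12, 9, 14, 11]
post_test = [13, 15, 11, 15, 14]
intervention = [14, 15, 13, 16, 12]
control = [11, 12, 10, 13, 9]

print(paired_t(pre_test, post_test))      # same learners before and after
print(unpaired_t(intervention, control))  # two independent groups
```

Converting either statistic into a p-value additionally requires the t distribution with the returned degrees of freedom, which is usually obtained from a statistics package rather than computed by hand.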

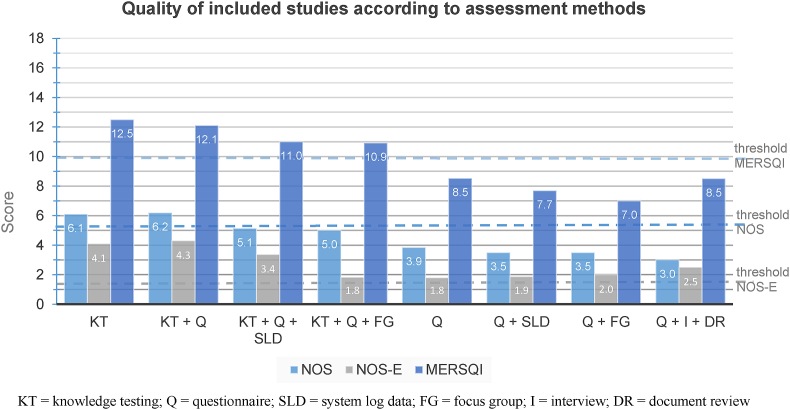

4.3.1. Study quality

The quality scores of studies varied, with a highest MERSQI score of 15.5/18 points and a lowest of 5.75 (MERSQI scores: SD = 2.26, mean = 10.67) (see Fig. 2 and Appendix C and J for details). In total, 50% of the studies were below the median MERSQI score of 10.625. The highest NOS score was 8.5/9 points, and the lowest 2.5/9 (NOS scores: SD = 1.57, mean = 5.19), and 38% of the studies had a score below the median. For the NOS-E quality scale for education, three studies achieved the maximum rating of six points and one study the minimum score of 0.5 points (SD = 1.54, mean = 3.22), and 36% of studies were below the NOS-E median of 2.5 points.

Fig. 2.

Study quality according to NOS, NOS-E and MERSQI quality assessment tools of the various evaluation methods for e-learning intervention in the included studies.

Studies that employed knowledge testing for assessment received the highest average quality ratings (MERSQI mean = 12.1, NOS mean = 6.2, and NOS-E mean = 4.3), as did studies that exclusively assessed knowledge (MERSQI mean = 12.5; NOS mean = 6.1; NOS-E mean = 4.1). The lowest average ratings were found for studies that used only a questionnaire for evaluation of the e-learning (MERSQI mean = 8.53; NOS mean = 3.85; NOS-E mean = 1.8), a questionnaire in combination with system log data for usage (MERSQI mean = 7.69; NOS mean = 3.5; NOS-E mean = 1.9), or a questionnaire in combination with a focus group (MERSQI mean = 7; NOS mean = 3.5; NOS-E mean = 2).

Overall, NOS-E and NOS scores showed similar distributions since 63% of studies were above the NOS-E and 62% above the NOS quality threshold. ICC estimates and their 95% confidence intervals were calculated based on a single-measurement, absolute-agreement, two-way mixed-effects model, since multiple raters assessed the study quality. NOS-E scores (ICC = 0.675, CI 0.49–0.79) were shown to be more reliable with a fair to good agreement (Landis & Koch, 1977), as compared to NOS (ICC = 0.37, CI 0.11–0.58) and MERSQI (ICC = 0.25, CI -0.01 to 0.49), which was indicative of poor reliability below 0.4 (see Appendix H for detailed ICC estimates).
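For readers unfamiliar with this ICC form, the sketch below shows how a single-measurement, absolute-agreement, two-way ICC point estimate can be computed from a studies-by-raters table of scores, using the standard mean-square formulation. It is a minimal illustration under stated assumptions: the function name `icc_absolute_single` and the ratings are hypothetical, the number of raters is chosen arbitrarily, and the confidence intervals reported above are not reproduced here.

```python
def icc_absolute_single(ratings):
    """Two-way, absolute-agreement, single-measurement ICC.

    `ratings` is a list of rows, one per rated study, each row holding
    one score per rater (all rows must have the same length).
    """
    n = len(ratings)     # number of rated studies
    k = len(ratings[0])  # number of raters
    grand = sum(sum(row) for row in ratings) / (n * k)
    row_means = [sum(row) / k for row in ratings]
    col_means = [sum(row[j] for row in ratings) / n for j in range(k)]

    # Sums of squares for studies (rows), raters (columns) and residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in ratings for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))

    # Absolute agreement penalizes systematic differences between raters
    # through the (k / n) * (ms_cols - ms_error) term in the denominator.
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + (k / n) * (ms_cols - ms_error)
    )


# Hypothetical quality scores from two raters for five studies.
example = [[10.5, 11.0], [8.0, 9.5], [13.0, 12.5], [7.5, 7.0], [15.0, 14.0]]
print(round(icc_absolute_single(example), 3))
```

Because this form measures absolute agreement, a rater who consistently scores one point higher than another lowers the estimate even when both rank the studies identically.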

4.3.2. Technology and e-learning delivery instruments

Most study participants accessed e-learning with computers (75%), although a minority of studies reported on the usage of mobile devices (10%) or both computers and mobile devices (5%). Some studies did not report the e-learning delivery mode (10%). A few studies provided electronic devices for e-learning access to study participants (8%); however, in most studies (92%), students used their own computer or mobile device.

Moodle was the predominant learning management system used in studies (29%), although not all authors were explicit about their learning management system. Other learning management system software applications included social media services such as Facebook (Sabouni et al., 2017), YouTube (Sabouni et al., 2017; Vieira et al., 2017), iTunes U (Florescu et al., 2015), GoogleDocs (Bandhu & Raje, 2014), GoogleSites (Gaikwad & Tankhiwale, 2014) and Blackboard (Martínez & Tuesca, 2014). Some studies custom built web-based e-learning platforms (Adanu et al., 2010; Davids, Chikte, & Halperin, 2011; de Sena, Fabricio, Lopes, & da Silva, 2013; Fontelo, Faustorilla, Gavino, & Marcelo, 2012; Kotb et al., 2015; Varghese, Faith, & Jacob, 2012). Self-developed mobile device apps were also used (Kulier et al., 2012; Martínez & Tuesca, 2014).

4.4. Assessment and evaluation methods

4.4.1. Assessment methods

Assessment of included studies largely followed a summative design (92%) (see Table 3, Table 5). The predominant assessment method in studies that reported their assessment method (n = 43) was knowledge testing (58%) and skills assessment (33%) using a pre- and post-test design (19%) (see Appendix E for detailed description of assessment and evaluation methods and outcome measures). Overall, the majority of studies (71%) reported a 50% and above response rate for assessments.

Table 5.

Outcome measures categorized by the evaluation and assessment methods.

| Methods | Outcome measure | Number of e-learning interventions (n) |
|---|---|---|
| **Evaluation methods** | | |
| document review | content fit | 1 |
| focus group | needs | 2 |
| | utility | 1 |
| | opinion | 2 |
| | usage | 1 |
| | usefulness | 1 |
| | learning relevance | 1 |
| interview | learning relevance | 2 |
| | interaction | 1 |
| | attitude | 1 |
| | perspective | 1 |
| | improvements | 1 |
| system log data | usage | 6 |
| | technical problems | 1 |
| | demographics | 1 |
| | participation | 2 |
| | access date | 1 |
| | activity | 1 |
| | performance | 1 |
| questionnaire | access date | 1 |
| | access speed | 1 |
| | access to technology | 4 |
| | activity | 1 |
| | attitude | 6 |
| | cognitive load | 1 |
| | computer literacy | 1 |
| | content fit | 4 |
| | demographics | 5 |
| | ease of questions | 1 |
| | ease of use | 5 |
| | educational environment | 1 |
| | effectiveness | 3 |
| | efficacy | 1 |
| | efficiency | 1 |
| | expectations | 1 |
| | experience | 3 |
| | improvements | 1 |
| | infrastructure | 1 |
| | interaction | 3 |
| | learning approaches | 1 |
| | learning relevance | 2 |
| | motivation | 3 |
| | opinion | 7 |
| | participation | 2 |
| | perceived benefits | 1 |
| | perceived learning gains | 1 |
| | perceptions | 10 |
| | performance | 2 |
| | playability | 1 |
| | preferences | 1 |
| | quality of learning materials | 1 |
| | recommendable | 1 |
| | satisfaction | 11 |
| | self-evaluation | 1 |
| | self-regulation | 1 |
| | service quality | 1 |
| | suitability | 1 |
| | teaching quality | 1 |
| | teaching strategies | 1 |
| | technical problems | 1 |
| | technology acceptance | 1 |
| | time spent to perform task | 1 |
| | usability | 3 |
| | usage | 9 |
| | usefulness | 3 |
| | user-friendliness | 1 |
| **Assessment methods** | | |
| pre-post-testing | skills | 10 |
| | knowledge | 29 |
| system log data | knowledge | 1 |
| questionnaire | affected behavior | 1 |
| | self-assessment | 1 |
| | self-perceived skills | 1 |
| | self-reported behavior | 1 |

4.4.2. Evaluation methods

Most of the studies (90%) used up to three methods (e.g., one method, two methods combined or three methods combined) to evaluate the e-learning implementation, and a minority used four or more evaluation methods (10%). The principal evaluation method was a questionnaire and most questionnaires were either designed by the study team or not specified.

A few studies based their questionnaires on validated frameworks, such as the System Usability Scale (Davids et al., 2011; Diehl, de Souza, Gordan, Esteves, & Coelho, 2015; Pusponegoro, Soebadi, & Surya, 2015), Berlin questionnaire (Sabouni et al., 2017), the course experience questionnaire (CEQ), a teaching performance indicator for higher education (Abdelhai et al., 2012; Ramsden, 1991), or adapted other validated questionnaires (Diehl et al., 2015; Grangeia et al., 2016; Pusponegoro et al., 2015; Rohwer, Young, & Van Schalkwyk, 2013). Other evaluation methods included the following: document review (Rohwer et al., 2013), interviews (De Villiers & Walsh, 2015; Rohwer et al., 2013; Wu, Wang, Johnson, & Grotzer, 2014), focus groups (De Villiers & Walsh, 2015; Gaikwad & Tankhiwale, 2014; Kaliyadan et al., 2010; Newberry, Kennedy, & Greene, 2016; Rajapakse, Fernando, Rubasinghe, & Gurusinghe, 2009), or system log data (Heydarpour et al., 2013; Kotb et al., 2015; Masters & Gibbs, 2007; Patil et al., 2016; Pino, Mora, Diaz, Guarnizo, & Jaimes, 2017; Seluakumaran, Jusof, Ismail, & Husain, 2011; Kukolja Taradi et al., 2008; Walsh & De Villiers, 2015).

4.4.3. Outcome measures

Outcome measures were defined according to the evaluation methods. We assigned outcome measures for all included studies; the most frequent outcome measures, in order of frequency, were: knowledge (n = 31), satisfaction (n = 20), usage (n = 17), perceptions (n = 14), attitude (n = 10), opinion (n = 10), skills (n = 9), usefulness (n = 8), and demographics (n = 7) (see Table 4, Table 5, and Appendix E for a detailed overview of outcome measures).

Knowledge assessment was mainly conducted using multiple-choice questions (33%) (Abdelhai et al., 2012; Chang et al., 2012; de Sena et al., 2013; Farokhi, Zarifsanaiey, Haghighi, & Mehrabi, 2016; Gaikwad & Tankhiwale, 2014; Hemmati et al., 2013; Kaliyadan et al., 2010; Khoshbaten et al., 2014; Kulatunga, Marasinghe, Karunathilake, & Dissanayake, 2013; Kulier et al., 2012; Martínez & Tuesca, 2014; Muñoz, Ortiz, González, López, & Blobel, 2010; Pino et al., 2017; Sabouni et al., 2017; Sánchez-Mendiola et al., 2012; Tomaz et al., 2015; Vieira et al., 2017), or multiple-choice questions mixed with other question types, e.g., extended matching questions (Abdelhai et al., 2012). The number of multiple-choice questions ranged from 5 to 108 in de Sena et al. (2013) and Chang et al. (2012), respectively. Other studies using multiple-choice questions had 10 questions (Gaikwad & Tankhiwale, 2014; Muñoz et al., 2010), 15 questions (Sabouni et al., 2017), 20 questions (Hemmati et al., 2013; Kulatunga et al., 2013; Pusponegoro et al., 2015), 21 questions (Khoshbaten et al., 2014), 24 questions (Farokhi et al., 2016), 30 questions (Martínez & Tuesca, 2014; Mojtahedzadeh et al., 2014), and 70 questions (Sánchez-Mendiola et al., 2012). Most studies followed a pre- and post-design to assess knowledge (n = 21), while a few studies assessed knowledge solely as summative (n = 8).

Skills were assessed in 10 studies (19%) (Abdollahi et al., 2014; Bustam, Azhar, Veriah, Arumugam, & Loch, 2014; Heydarpour et al., 2013; Khoshbaten et al., 2014; Kulier et al., 2012; O'Donovan, Ahn, Nelson, Kagan, & Burke, 2016; Sabouni et al., 2017; Sánchez-Mendiola et al., 2012; Seluakumaran et al., 2011; Tomaz et al., 2015). Medical skills were assessed with objective structured clinical examinations (OSCEs) (8%) (Grangeia et al., 2016; Kulier et al., 2012; O'Donovan et al., 2016; Tomaz et al., 2015), by blinded experts rating the student's echocardiography images (6%) (Bustam et al., 2014; Florescu et al., 2015), by hands-on tissue ratings evaluated for inter-observer agreement according to the Gleason grade score (Abdollahi et al., 2014), or by blinded experts grading a rhomboid flap skin model (de Sena et al., 2013). Skills were assessed after the e-learning intervention (6%) (Bediang et al., 2013; Bustam et al., 2014) or in a pre- and post-test design (13%). Evidence-based practice was evaluated in one study with a validated outcome measure based on a five-point scale covering seven domains: knowledge and learning materials, learner support, general relationships and support, institutional focus on evidence-based medicine (EBM), education training and supervision, EBM application opportunities, and affirmation of EBM environment (Kulier et al., 2012).

The outcome measures of perception, opinion, attitude, demographics, ease of use, satisfaction and learning relevance were predominantly evaluated with questionnaires. Other methods were used sporadically, e.g., focus groups for opinion (De Villiers & Walsh, 2015; Gaikwad & Tankhiwale, 2014), interviews for attitude (De Villiers & Walsh, 2015) and learning relevance (De Villiers & Walsh, 2015; Wu et al., 2014), and system log data for demographics (Hornos et al., 2013). Student satisfaction was predominantly evaluated subjectively (21%).

Insights on actual usage were derived with system log data (Heydarpour et al., 2013; Hornos et al., 2013; Kotb et al., 2015; Masters & Gibbs, 2007; Patil et al., 2016; Pino et al., 2017; Seluakumaran et al., 2011; Kukolja Taradi et al., 2008; Walsh & De Villiers, 2015), or based on the number of podcast downloads (Heydarpour et al., 2013). Study participants' self-reported usage was documented with questionnaires (Bandhu & Raje, 2014; Carvalho et al., 2012; Kotb et al., 2015; Varghese et al., 2012). In one study, the participants' e-learning usage was discussed in a focus group (De Villiers & Walsh, 2015).

Usability was evaluated using the System Usability Scale in three studies (Diehl et al., 2015; Pusponegoro et al., 2015; Rohwer et al., 2013), and one study extended the evaluation by giving a score based on video recordings of the participants' e-learning sessions (Diehl et al., 2015). Another study queried participants with questionnaires about the ease of navigation, esthetic quality of interface and information readability (Muñoz et al., 2010). To evaluate the students' experiences, the CEQ was used as a performance indicator of teaching quality in higher education (Abdelhai et al., 2012).
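As background on how a System Usability Scale result is typically derived, the following Python sketch applies the standard scoring rule for the ten-item questionnaire: odd-numbered (positively worded) items contribute the response minus one, even-numbered (negatively worded) items contribute five minus the response, and the sum is scaled to a range of 0 to 100. The function name `sus_score` and the sample responses are hypothetical, and the included studies may have used adapted versions of the instrument.

```python
def sus_score(responses):
    """Score one participant's System Usability Scale questionnaire.

    `responses` holds ten integers from 1 (strongly disagree) to
    5 (strongly agree), in item order. Returns a score from 0 to 100.
    """
    if len(responses) != 10:
        raise ValueError("the SUS has exactly ten items")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")

    total = 0
    for item, response in enumerate(responses, start=1):
        if item % 2 == 1:
            total += response - 1  # positively worded item
        else:
            total += 5 - response  # negatively worded item
    return total * 2.5


# Hypothetical responses from a single participant, for illustration only.
print(sus_score([4, 2, 5, 1, 4, 2, 4, 2, 5, 1]))
```

A study-level result would then usually be reported as the mean of the individual participants' scores.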

Cognitive load was evaluated with a questionnaire using a seven-point Likert scale from minimum to maximum (Grangeia et al., 2016). Examples of questions included, “How much mental effort was necessary to accomplish virtual rounds?” and “How much mental effort was necessary to accomplish real medical rounds?” These questions were further refined into intrinsic (“How difficult was the online course content?”), extrinsic (“How difficult it was to navigate on our platform?”), and germane load (“How much concentration did you maintain during our course activities?”) (Grangeia et al., 2016).

Following Attwell's (2006) evaluation framework, most evaluation variables corresponded to individual learner variables (n = 47), followed by pedagogic variables (n = 5), technology variables (n = 2), learning environment variables (n = 2), and contextual variables (n = 1) (see Fig. 3 and Appendix G for more details).

Fig. 3.

Outcome measures of included studies according to the variables of Attwell's evaluation framework.

4.5. Effectiveness of E-learning

The majority of studies (both quantitative and qualitative) reported effective e-learning (83%) with effectiveness predominantly based on a subjective measure (49%) (Abdelhai et al., 2012; Adanu et al., 2010; Bandhu & Raje, 2014; Davids et al., 2011; de Fátima Wardenski et al., 2012; De Villiers & Walsh, 2015; Diehl et al., 2015; Fontelo et al., 2012; Gazibara et al., 2015; Grangeia et al., 2016; Hemmati et al., 2013; Hornos et al., 2013; Karakuş, Duran, Yavuz, Altintop, & Çalişkan, 2014; Kotb et al., 2015; Kulatunga et al., 2013; Rajapakse et al., 2009; Reis, Ikari, Taha-Neto, Gugliotta, & Denardi, 2015; Sánchez-Mendiola et al., 2012; Tayyab & Naseem, 2013; Varghese et al., 2012; Walsh & De Villiers, 2015). Just over one-fifth of the studies (21%) based e-learning effectiveness on objective measures (Abdelhai et al., 2012; Bediang et al., 2013; Bustam et al., 2014; Chang et al., 2012; Farokhi et al., 2016; Kulier et al., 2012; O'Donovan et al., 2016; Suebnukarn & Haddawy, 2007; Vieira et al., 2017). A number of studies (30%) reported effectiveness based on subjective and objective measures (de Sena et al., 2013; Gaikwad & Tankhiwale, 2014; Mojtahedzadeh et al., 2014; Muñoz et al., 2010; Newberry et al., 2016; Pino et al., 2017; Pusponegoro et al., 2015; Rohwer et al., 2013; Sabouni et al., 2017; Seluakumaran et al., 2011; Silva, Souza, Silva Filho, Medeiros, & Criado, 2011; Tomaz et al., 2015) (see Appendix I for details of categorization by subjective and objective measures).

A few studies (10%) found no difference between e-learning and face-to-face learning with regard to knowledge and skills acquisition (Florescu et al., 2015; Khoshbaten et al., 2014; Martínez & Tuesca, 2014; Kukolja Taradi et al., 2008). One study reported negative results for e-learning since the study participants preferred learning with textbooks rather than mobile devices (Martínez & Tuesca, 2014). Study participants' positive feedback and attitude were found to contradict their actual low e-learning usage in one study (Patil et al., 2016).

5. Discussion

5.1. Primary results

We identified and reviewed 52 studies on e-learning for medical education in LMICs. Most of these studies were from UMICs in Asia, South America or Africa, while only a few were from lower-middle-income countries and only one was from an LIC, a pattern similarly reported by Frehywot et al. (2013). This may indicate that few e-learning studies have been published or conducted over the past 10 years in LICs. Most studies were found to focus primarily on the study participant as the learner, and only used individual learner variables for evaluation of the intervention instead of considering other aspects of a holistic e-learning evaluation, such as pedagogy, technology, context and learning environment (Attwell, 2006).

5.1.1. How was e-learning implemented in interventions?

Some implementations approached medical e-learning innovatively by re-purposing social media platforms for e-learning, and re-using technologies like podcasts for medical education, which may be appropriate for cultures in sub-Saharan Africa (Croft, 2002). Approaches to diversify e-learning contents are worth investigating since they have the potential to foster a more engaging e-learning environment. Podcasts, for example, have a low entry barrier, and smartphones currently allow for easy recording of high-quality, digital audio. However, in the majority of studies, we found that predominantly online-based e-learning was being implemented. Almost none of the studies had accounted for the challenges and costs of online access, nor had they provided potentially costly technical devices for e-learning access. For medically underserved rural areas in LMICs, where there often is no reliable internet connectivity or electricity, offline-based solutions that bridge connectivity challenges are potentially important enabling factors for students to benefit from e-learning (Barteit, Jahn et al., 2019; Barteit, Neuhann et al., 2019). Blended learning that reduces face-to-face contact time may provide an approach for low-resource settings to bridge the lack of infrastructure by partly outsourcing learning as self-directed and outside the actual classroom (Graham, Woodfield, & Harrison, 2013). Furthermore, e-learning implementations in low-resource contexts may benefit from minimizing resources needed for e-learning, such as for content development and technical equipment. The development of e-learning materials in particular constitutes a bottleneck since most low-resource countries are faced with a lack of medical teachers (Frenk et al., 2010).
To decrease the workload of medical teachers for e-learning content development, students may, for example, contribute e-learning contents themselves by creating step-by-step, peer-reviewed video guides on medical procedures, like measuring blood pressure. In addition, when preparing the step-by-step video guides, students review local guidelines and learn the procedure as per the current guideline. Furthermore, the sustainability of technical devices in low-resource countries is key and “should be carefully balanced within the given setting” (Barteit, Jahn, et al., 2019), as most low-resource countries are constrained in their access to electricity and Internet, and face different climatic environments, like hot temperatures and dust. Another key aspect for low-resource contexts is to consider whether the technical device can be repaired in the country and maintained by local ICT staff, as this is often not the case, especially with imported technical devices.

5.1.2. What e-learning evaluation and assessment methods were employed in the studies?

A highly prevalent evaluation method was to assess student knowledge with multiple-choice tests, although a small number of studies assessed skills with hands-on assignments. It appears that questionnaire-based evaluations and pre- and post-test assessments of knowledge focusing on the study participants are the go-to methods for e-learning evaluation in LMICs. However, as Attwell (2006) and others (Kirkpatrick, 1975; Lahti, Hätönen, & Välimäki, 2014; Pickering & Joynes, 2016) have argued, effective adaptation of e-learning implementations requires more complex study designs and evaluation methods. For instance, assessment methods should address the actual knowledge and skills transferred via e-learning. Overall, some studies identified shortcomings, e.g., Martínez and Tuesca (2014) who stated they “had several limitations, including the low sample size, the lack of a control group” (p. 404) or Sabouni et al. (2017) who identified the limitation that their e-learning “course did not have a significant effect on students' attitude toward [EBM] [which] may be explained by our questions not comprehensively assessing [EBM] attitude” (p. 5). Especially in low-resource contexts, many technology-enhanced interventions, like e-learning, never progress past the pilot stage, which has been coined pilotitis (Huang, Blaschke, & Lucas, 2017). A comprehensive evaluation framework (Kirkpatrick, 1975; Lahti et al., 2014; Pickering & Joynes, 2016) may lead to valuable insights into how to best implement e-learning into the local educational infrastructure.

5.1.3. What outcome was evaluated?

We found that a total of five methods (questionnaire, document review, interviews, focus group discussions, system log data) were used to evaluate a wide spectrum of outcome measures (n = 52) and most corresponded to an individual level of assessment. This finding highlighted the lack of a standard and common ground for medical education research (Cook, Beckman, & Bordage, 2007). Only very few studies extended beyond the individual level to gain insight into the complex ecosystem that defines effective e-learning implementation in LMICs. For example, Rohwer et al. (2013) discussed tutors' perceptions of study participants and medical e-learning in groups, thus potentially adding a further layer of insight into their intervention. Taking the cultural context into consideration is central to avoiding frustration and providing a supportive learning environment (Meier, 2007). Especially when e-learning materials have not been adapted to the respective LMIC context, tutors have been shown to help “students adapt to the style of the material and to make a course developed in another country both culturally and pedagogically relevant” (Selinger, 2004, p. 1).

5.1.4. How was success of e-learning defined?

In line with the predominance of subjective evaluation of the learner's perspective, many studies defined e-learning effectiveness based on subjective measures, such as perception, satisfaction, opinion, and student feedback. Patil et al. (2016) reported that subjective feedback from study participants contradicted the actual e-learning usage, since the study participants' feedback was positive, although their actual usage was quite low. The literature highlights the limitation of using subjective evaluation measures since the “scope of evaluation is typically limited to user enjoyment and satisfaction” (Ruggeri et al., 2013, p. 315).

5.2. Quality of included studies

Most studies did not produce scientifically sound evidence to conclusively determine e-learning effectiveness for medical education since only four studies featured RCTs. Other methodological approaches further weakened the significance of the studies' results, such as not using a comparison group or randomizing the control group to collect baseline data. Other weak studies made use of unvalidated methods, such as self-developed questionnaires. We found that almost all of the studies evaluated rather small-scale e-learning pilots. Thus, many studies received ratings below the set threshold of all quality measures (NOS, NOS-E, MERSQI). Poor quality studies in medical education have been previously reported (Cook et al., 2007). Al-Shorbaji et al. (2015, p. 156) found “important methodological flaws, that may have biased the findings” of their systematic review on e-learning and blended learning interventions (p. 18). A potential underlying reason for the overall low quality of included e-learning studies may be the limited available funding for medical education research (Reed et al., 2008).

However, there were some studies, such as Kulier et al. (2012), that received overall strong quality ratings since the study design and reporting followed the routine procedures for RCTs. Based on our findings, the facilitation of RCTs seems recommendable, since they may generate significant insights for the design and implementation of e-learning interventions for medical education, especially in LMICs. However, RCTs are challenging to conduct in the highly regulated and applied field of medical education (Davis, Crabb, Rogers, Zamora, & Khan, 2008). Alternatively, strong causal research designs could be used, such as quasi-experimental studies (Briz-Ponce, Juanes-Méndez, García-Peñalvo, & Pereira, 2016; Campbell & Stanley, 1966) or alternately, multidimensional evaluation frameworks (Attwell, 2006; Kirkpatrick, 1975; Lahti et al., 2014; Pickering & Joynes, 2016). A strong study design is key for identifying the weaknesses and strengths of interventions for medical education and adapting e-learning to the realities of the local setting (Frenk et al., 2010).

For most low-resource contexts, the overarching objective of e-learning is to increase medical education opportunities in quantity and quality to address much-needed health worker capacity, while at the same time decreasing the workload of overburdened medical teachers (Frehywot et al., 2013). Our findings underline that the majority of studies included in this review described and evaluated e-learning implementations in a pilot stage. The low number of publications of e-learning interventions in LMICs may impede the development of best practice standards for e-learning implementation in low-resource contexts. This limitation has already been identified as a recurring phenomenon in e-learning and has been termed “pilot-itis” (Barteit, Jahn, et al., 2019; Franz-Vasdeki, Pratt, Newsome, & Germann, 2015; Huang et al., 2017; Oliver, 2014). Although the World Health Organization has reported that the number of pilot interventions has decreased and large-scale interventions have increased (World Health Organization, 2016a), our findings may be indicative of the continued implementation of pilot projects that are ultimately abandoned as unscalable and cannot be translated into sustainable implementation beyond the pilot phase. Yet, sustainability is a pertinent aspect of an e-learning implementation, specifically in LMICs. Failed e-learning implementation blocks resources and slows down the overall development process, and people may simultaneously lose their trust in the usefulness of technologies for medical education and its potentially “revolutionary effect” in low-resource contexts. The main weaknesses for the sustainability of e-learning interventions have been identified and include inadequate training of all involved parties, poor technological support, unmet expectations and inadequate allotment of financial resources (Labrique, Vasudevan, Kochi, Fabricant, & Mehl, 2013).
However, in our systematic review we only found a few studies that considered these aspects in their evaluation. In fact, many e-learning interventions do not account for a system-wide approach (Huang et al., 2017), thus decreasing the efficiency and sustainability of e-learning since challenges are not identified in time and potentially result in failure. Sustainable e-learning models that minimize the need for resources are necessary for low-resource contexts in particular (Barteit, Jahn et al., 2019). These include offline-based approaches that reduce the cost of internet usage or bottom-up approaches for content creation, such as student-created e-learning content, which alleviate overburdened senior medical teaching staff and promote student learning and engagement in a pedagogical way.

5.3. Limitations

A limitation of this study was the possibility that some sources were missed due to failure to locate articles within the searched databases or an unjustified exclusion during the screening process. However, selection bias was minimized by using a range of keywords to select the studies, a wide date range of included studies, multiple databases, and three researchers for screening and manually searching reference lists.

Due to the heterogeneity of the included studies, a meta-analysis was not conducted.

We focused our review on medical students and medical doctors and excluded interventions targeting other health workers. However, there were many other studies including nurses, midwives or dentists that may have provided relevant evaluation methods for e-learning medical education in LMICs that were not included in this review. There were evaluation methods for medical education that were not included in the discussion section; however, they may be worthy of consideration during further analysis of the results of this study. Furthermore, we did not investigate the usage and delineation of the terminology of distance learning, blended learning and e-learning used in included studies.

6. Conclusions

This systematic review focused on e-learning for medical education implemented in LMICs and summarized the evaluation and assessment methods used to measure e-learning effectiveness. Our findings indicate that e-learning in LMICs has not met its expected potential. Although most studies have praised and implemented e-learning for its many advantages (e.g., adaptability, diversity and economic benefit), there are ongoing limitations that prevent it from reaching its full potential in LMICs. The limited success in e-learning implementation may be partly due to restricted financial resources and limited evaluation and assessment approaches rooted in an overemphasis on evaluating individual learner perceptions and knowledge, while not considering other features that may potentially inform and guide a strong e-learning system. E-learning interventions are mainly implemented as small-scale pilot projects, which may indicate that educational institutions and organizations are not giving e-learning a strong mandate as an educational method, but rather see it as a technological commodity of modern times with a short shelf-life. Based on our results, to unfold the potential of e-learning for medical education, institutions and organizations need to embrace and fund e-learning beyond pilot stages and consider a wider picture of e-learning including evaluations that expand their scope beyond the individual learner and provide transparent evaluation designs. Some studies in this review highlighted the potential and effectiveness of e-learning in LMIC settings. To contribute further to the mitigation of the health worker crisis, especially in LICs, innovative and adapted e-learning implementations may increase the quality and quantity of medical education while at the same time decreasing the workload of involved medical teachers. Thus, medical e-learning has the potential to play a central role in contributing to achieving the SDGs on health for LMICs.
Progress to that end requires a combined effort and strong commitment from all stakeholders involved, including those at the national level and, potentially, international aid agencies. Such commitment is the foundation for unfolding e-learning's potential and expanding the impact of this innovative teaching tool.

Funding

This work was supported by the Else Kröner-Fresenius-Stiftung [2016_HA67].

Declaration of competing interest

None declared.

Acknowledgements

SB, DG, MMJ conducted the systematic literature review. SB wrote the draft manuscript with support from FN, DG and MMJ. The manuscript was reviewed by SB, DG, MMJ, TB, AJ and FN.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.compedu.2019.103726.

Abbreviations

- CEQ: course experience questionnaire

- ICC: intraclass correlation coefficient

- LICs: low-income countries

- LMICs: low- and middle-income countries

- MERSQI: Medical Education Research Study Quality Instrument

- NOS: Newcastle-Ottawa Scale

- NOS-E: Newcastle-Ottawa Scale-Education

- OSCE: objective structured clinical examination

- PICOS: patient, intervention, comparison, outcome and studies

- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

- RCTs: randomized-controlled trials

- SDGs: sustainable development goals

- UMICs: upper-middle-income countries

References

- Abdelhai R., Yassin S., Ahmad M.F., Fors U.G.H.H. An e-learning reproductive health module to support improved student learning and interaction: A prospective interventional study at a medical school in Egypt. BMC Medical Education. 2012;12(1):11. doi: 10.1186/1472-6920-12-11.

- Abdollahi A., Sheikhbahaei S., Meysamie A., Bakhshandeh M., Hosseinzadeh H. Inter-observer reproducibility before and after web-based education in the Gleason grading of the prostate adenocarcinoma among the Iranian pathologists. Acta Medica Iranica. 2014;52(5):370. Retrieved from http://acta.tums.ac.ir/index.php/acta/article/view/4614

- Adanu R., Adu-Sarkodie Y., Opare-Sem O., Nkyekyer K., Donkor P., Lawson A. Electronic learning and open educational resources in the health sciences in Ghana. Ghana Medical Journal. 2010;44(4):159–162. doi: 10.4314/gmj.v44i4.68910. Retrieved from https://www.ncbi.nlm.nih.gov/pubmed/21416051

- Al-Shorbaji N., Atun R., Car J., Majeed A., Wheeler E. World Health Organisation; 2015. eLearning for undergraduate health professional education. A systematic review informing a radical transformation of health workforce development. Retrieved from http://whoeducationguidelines.org/sites/default/files/uploads/eLearning-healthprof-report.pdf

- Aluttis C., Bishaw T., Frank M.W. The workforce for health in a globalized context – global shortages and international migration. Global Health Action. 2014;7(1):23611. doi: 10.3402/gha.v7.23611.

- Asongu S.A., Le Roux S. Enhancing ICT for inclusive human development in Sub-Saharan Africa. Technological Forecasting and Social Change. 2017;118:44–54. doi: 10.1016/j.techfore.2017.01.026.

- Attwell G. Evaluating e-learning - a guide to the evaluation of e-learning. Evaluate Europe Handbook Series. 2006;2:5–46.

- Bandhu S.D., Raje S. Experiences with e-learning in ophthalmology. Indian Journal of Ophthalmology. 2014;62(7):792–794. doi: 10.4103/0301-4738.138297.

- Barteit S., Jahn A., Banda S.S., Bärnighausen T., Bowa A., Chileshe G. E-learning for medical education in sub-Saharan Africa and low-resource settings: Viewpoint. Journal of Medical Internet Research. 2019;21(1):e12449. doi: 10.2196/12449.

- Barteit S., Neuhann F., Bärnighausen T., Lüders S., Malunga G., Chileshe G. Perspectives of nonphysician clinical students and medical lecturers on tablet-based health care practice support for medical education in Zambia, Africa: Qualitative study. JMIR Mhealth Uhealth. 2019;7(1). doi: 10.2196/12637.

- Bediang G., Franck C., Raetzo M.-A., Doell J., Ba M., Kamga Y. Developing clinical skills using a virtual patient simulator in a resource-limited setting. Studies in Health Technology and Informatics. 2013;192:102–106. doi: 10.3233/978-1-61499-289-9-102.

- Black P.J. 1993. Formative and summative assessment by teachers.

- Bollinger R., Chang L., Jafari R., O'Callaghan T., Ngatia P., Settle D. Leveraging information technology to bridge the health workforce gap. Bulletin of the World Health Organization. 2013;91:890–892. doi: 10.2471/BLT.13.118737.

- Briz-Ponce L., Juanes-Méndez J.A., García-Peñalvo F.J., Pereira A. Effects of mobile learning in medical education: A counterfactual evaluation. Journal of Medical Systems. 2016;40(6):136. doi: 10.1007/s10916-016-0487-4.

- Bustam A., Azhar M.N., Veriah R.S., Arumugam K., Loch A. Performance of emergency physicians in point-of-care echocardiography following limited training. Emergency Medicine Journal. 2014;31(5):369–373. doi: 10.1136/emermed-2012-201789.

- Campbell D.T., Stanley J.C. Houghton Mifflin Company; 1966. Experimental and quasi-experimental designs for research.

- Carvalho K. M. de, Iyeyasu J.N., Castro S.M., da C. e, Monteiro G.B.M., Zimmermann A. Experience with an internet-based course for ophthalmology residents. Revista Brasileira de Educação Médica. 2012;36(1):63–67. doi: 10.1590/S0100-55022012000100009.

- Chang L.W., Kadam D.B., Sangle S., Narayanan S., Borse R.T., McKenzie-White J. Evaluation of a multimodal, distance learning HIV management course for clinical care providers in India. Journal of the International Association of Physicians in AIDS Care. 2012;11(5):277–282. doi: 10.1177/1545109712451330.

- Childs S., Blenkinsopp E., Hall A., Walton G. Effective e‐learning for health professionals and students—barriers and their solutions. A systematic review of the literature—findings from the HeXL project. Health Information and Libraries Journal. 2005;22(s2):20–32. doi: 10.1111/j.1470-3327.2005.00614.x.

- Clunie L., Morris N.P., Joynes V.C.T., Pickering J.D. How comprehensive are research studies investigating the efficacy of technology-enhanced learning resources in anatomy education? A systematic review. Anatomical Sciences Education. 2017;00.

- Cook D.A., Beckman T.J., Bordage G. Quality of reporting of experimental studies in medical education: A systematic review. Medical Education. 2007;41(8):737–745. doi: 10.1111/j.1365-2923.2007.02777.x.

- Cook D.A., Hatala R., Brydges R., Zendejas B., Szostek J.H., Wang A.T. Technology-enhanced simulation for health professions education: A systematic review and meta-analysis. Journal of the American Medical Association. 2011;306(9):978–988. doi: 10.1001/jama.2011.1234.

- Cook D.A., Reed D.A. Appraising the quality of medical education research methods: The Medical Education Research Study Quality Instrument and the Newcastle-Ottawa Scale-Education. Academic Medicine. 2015;90(8):1067–1076. doi: 10.1097/ACM.0000000000000786.

- Croft A. Singing under a tree: Does oral culture help lower primary teachers be learner-centred? International Journal of Educational Development. 2002;22(3–4):321–337. doi: 10.1016/S0738-0593(01)00063-3.

- Davids M.R., Chikte U.M.E., Halperin M.L. Development and evaluation of a multimedia e-learning resource for electrolyte and acid-base disorders. Advances in Physiology Education. 2011;35(3):295–306. doi: 10.1152/advan.00127.2010.

- David M., Sutton C.D. Sage; 2004. Social research: The basics.

- Davis J., Crabb S., Rogers E., Zamora J., Khan K. Computer-based teaching is as good as face to face lecture-based teaching of evidence based medicine: A randomized controlled trial. Medical Teacher. 2008;30(3):302–307. doi: 10.1080/01421590701784349.

- Dawson A.Z., Walker R.J., Campbell J.A., Egede L.E. Effective strategies for global health training programs: A systematic review of training outcomes in low and middle income countries. Global Journal of Health Science. 2016;8(11):119–138. doi: 10.5539/gjhs.v7n2p119.

- De Villiers M., Walsh S. How podcasts influence medical students' learning–a descriptive qualitative study. African Journal of Health Professions Education. 2015;7(1):130–133.

- Diehl L.A., de Souza R.M., Gordan P.A., Esteves R.Z., Coelho I.C.M. User assessment of “InsuOnLine,” a game to fight clinical inertia in diabetes: A pilot study. Games for Health Journal. 2015;4(5):335–343. doi: 10.1089/g4h.2014.0111.

- Farokhi M.R., Zarifsanaiey N., Haghighi F., Mehrabi M. E-Learning or in-person approaches in continuous medical education: A comparative study. The IIOAB Journal. 2016;7(2):472–476.

- de Fátima Wardenski R., de Espíndola M.B., Struchiner M., Giannella T.R. Blended learning in biochemistry education: Analysis of medical students' perceptions. Biochemistry and Molecular Biology Education. 2012;40(4):222–228. doi: 10.1002/bmb.20618.

- Florescu C.C., Mullen J.A., Nguyen V.M., Sanders B.E., Vu P.Q.P. Evaluating didactic methods for training medical students in the use of bedside ultrasound for clinical practice at a faculty of medicine in Romania. Journal of Ultrasound in Medicine. 2015. doi: 10.7863/ultra.14.09028.

- Fontelo P., Faustorilla J., Gavino A., Marcelo A. Digital pathology – implementation challenges in low-resource countries. Analytical Cellular Pathology. 2012;35(1):31–36. doi: 10.3233/ACP-2011-0024.

- Franz-Vasdeki J., Pratt B.A., Newsome M., Germann S. Taking mHealth solutions to scale: Enabling environments and successful implementation. Journal of Mobile Technology in Medicine. 2015;4(1):35–38. doi: 10.7309/jmtm.4.1.8.

- Frehywot S., Vovides Y., Talib Z., Mikhail N., Ross H., Wohltjen H. E-learning in medical education in resource constrained low- and middle-income countries. BMC Human Resources for Health. 2013;11(4). doi: 10.1186/1478-4491-11-4.

- Frenk J., Chen L., Bhutta Z.A., Cohen J., Crisp N., Evans T. Health professionals for a new century: Transforming education to strengthen health systems in an interdependent world. The Lancet. 2010;376:1923–1958. doi: 10.1016/S0140-6736(10)61854-5.

- Gaikwad N., Tankhiwale S. Interactive e-learning module in pharmacology: A pilot project at a rural medical college in India. Perspectives on Medical Education. 2014;3(1):15–30. doi: 10.1007/s40037-013-0081-0.

- Gazibara T., Marusic V., Maric G., Zaric M., Vujcic I., Kisic-Tepavcevic D. Introducing e-learning in epidemiology course for undergraduate medical students at the faculty of medicine, University of Belgrade: A pilot study. Journal of Medical Systems. 2015;39(10):121. doi: 10.1007/s10916-015-0302-7.

- Gibbs T., Brigden D., Hellenberg D. Assessment and evaluation in medical education. South African Family Practice. 2006;48(1):5–7. doi: 10.1080/20786204.2006.10873311.

- Graham C.R., Woodfield W., Harrison J.B. A framework for institutional adoption and implementation of blended learning in higher education. Internet and Higher Education. 2013;18:4–14. doi: 10.1016/j.iheduc.2012.09.003.