Abstract

This document provides guidance for communicators on how to communicate the various expressions of uncertainty described in EFSA's document: ‘Guidance on uncertainty analysis in scientific assessments’. It also contains specific guidance for assessors on how best to report the various expressions of uncertainty. The document provides a template for identifying expressions of uncertainty in scientific assessments and locating the specific guidance for each expression. The guidance is structured according to EFSA's three broadly defined categories of target audience: ‘entry’, ‘informed’ and ‘technical’ levels. Communicators should use the guidance for entry and informed audiences, while assessors should use the guidance for the technical level. The guidance was formulated using evidence from the scientific literature, grey literature and two EFSA research studies, or based on judgement and reasoning where evidence was incomplete or missing. The limitations of the evidence sources inform the recommendations for further research on uncertainty communication.

Keywords: uncertainty communication, risk communication, transparency, probability, likelihood

Short abstract

This publication is linked to the following EFSA Supporting Publications article: http://onlinelibrary.wiley.com/doi/10.2903/sp.efsa.2019.EN-1540/full

Summary

The European Food Safety Authority (EFSA) requested its Scientific Committee and Emerging Risk Unit (SCER) and its Communication Unit (COM) to establish a working group to develop practical guidance for EFSA communicators on how to communicate the various expressions of uncertainty resulting from the uncertainty analyses (e.g. qualitative, quantitative) described in EFSA's document: ‘Guidance on uncertainty analysis in scientific assessments’ (EFSA Scientific Committee, 2018a; henceforth, ‘Uncertainty Analysis GD’).

The European Union (EU) Food Law identifies the target audiences of risk communication on EU food and feed safety: ‘risk assessors, risk managers, consumers, feed and food businesses, the academic community and other interested parties’. EFSA tailors its communication messages according to the expected scientific literacy of these audiences and their varying interests, splitting them into three broad categories – ‘entry’, ‘informed’ and ‘technical’ levels. EFSA delivers layered content to these audiences through a mixture of channels (meetings, media, website, social media, journal) and formats (scientific opinions, news stories, multimedia, posts) depending on their information needs and behaviours.

The application of EFSA's Uncertainty Analysis GD can result in eight main types of expressions of uncertainty: (1) unqualified conclusions with no expression of uncertainty; (2) description of a source of uncertainty; (3) qualitative description of the direction and/or magnitude of uncertainty; (4) inconclusive assessment; (5) a precise probability; (6) an approximate probability; (7) a probability distribution; and (8) a two‐dimensional probability distribution. In this communication guidance document (henceforth, ‘Uncertainty Communication GD’), these eight expressions provide the framework for the discussion of evidence sources and the guidance on communication of uncertainty.
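Anyone building a checklist or form around this framework may find it helpful to represent the eight expression types as a simple enumeration. The sketch below is hypothetical and not an EFSA tool: the names `UncertaintyExpression` and `is_probabilistic` are illustrative. It keeps the numbering used above and flags the four types (5 to 8) that express uncertainty as a probability.

```python
from enum import IntEnum


class UncertaintyExpression(IntEnum):
    """The eight main expression types, numbered as in the text.

    Member names are illustrative (hypothetical), not EFSA terminology.
    """
    UNQUALIFIED_CONCLUSION = 1           # no expression of uncertainty
    SOURCE_DESCRIPTION = 2               # description of a source of uncertainty
    QUALITATIVE_DIRECTION_MAGNITUDE = 3  # qualitative direction and/or magnitude
    INCONCLUSIVE = 4                     # inconclusive assessment
    PRECISE_PROBABILITY = 5
    APPROXIMATE_PROBABILITY = 6
    PROBABILITY_DISTRIBUTION = 7
    TWO_D_PROBABILITY_DISTRIBUTION = 8


def is_probabilistic(expr: UncertaintyExpression) -> bool:
    """True for the expressions that quantify uncertainty as probability (5-8)."""
    return expr >= UncertaintyExpression.PRECISE_PROBABILITY


if __name__ == "__main__":
    for expr in UncertaintyExpression:
        print(expr.value, expr.name, is_probabilistic(expr))
```

Using `IntEnum` keeps the numbering from the text, so the split between non-probabilistic (1 to 4) and probabilistic (5 to 8) expressions reduces to a single comparison.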

This Uncertainty Communication GD is primarily aimed at communicators at EFSA, but could be applied by risk communicators in other institutions that provide scientific advice. It should be used as a supporting document alongside the EFSA handbook: When food is cooking up a storm – proven recipes for risk communications (EFSA, 2017; henceforth, EFSA Risk Communication Handbook), which is a practical guide for food safety risk communicators.

This document contains general and specific guidance for communication to audiences within the three levels: guidance for the entry and informed levels is addressed to communicators producing supporting communications (e.g. news stories), while the guidance for the technical level is addressed to assessors producing scientific outputs (e.g. opinions, reports). The guidance consists of clear instructions, precautions, examples and choices to consider. The general guidance should be used for all uncertainty communication. Use of the specific guidance is case‐by‐case; an easy‐to‐use form is the main tool provided for identifying the specific guidance applicable to the eight different uncertainty expression types.

The general and specific guidance was developed from an analysis of key evidence sources, including selected academic literature, extracts from similar frameworks or guidance documents of other national or international advisory bodies, and the results of EFSA's own target audience research studies.

Although the available evidence on the best ways to communicate the uncertainty expressions was limited overall, the expert analysis of selected academic literature provided a useful starting point. Six of the eight expressions of uncertainty resulting from uncertainty analyses were identified in the selected papers, either as formulated by the authors themselves or as interpreted and drafted by the working group; the two exceptions were ‘unqualified conclusion with no expression of uncertainty’ and ‘inconclusive assessment’. The recommendations from this literature were applied to and tested on real examples of EFSA scientific assessments, to draft messages and select visual aids for communicating the related uncertainties. However, because the available evidence does not address every aspect of communicating uncertainty, some guidance in the current document is based on judgement and reasoning.

EFSA commissioned a focus group study in 2016 (Etienne et al., 2018) and carried out its own follow‐up multilingual online survey in 2017 (EFSA, 2018), which together formed the early development phase of the Uncertainty Communication GD. The studies provided indications of target audiences’ perspectives on the usefulness of uncertainty information, of the impact of language, culture and professional background, and of audiences’ understanding of and/or preferences for messages describing four types of expressions of uncertainty. Both studies have limitations in their design and execution but, considered cautiously, they are useful sources of insights directly from EFSA's key target audiences.

How uncertainties should be conveyed to enable non‐scientists to make informed decisions is still an under‐researched field. Experience gained during the implementation of this Uncertainty Communication GD itself will provide new insights into the best way to communicate different expressions of uncertainty in scientific assessments. EFSA therefore intends to review and, if needed, update the Uncertainty Communication GD over the next five years.

Future research should address how various subjective probabilities could be communicated to laypeople so that they understand the information. Additional research should examine how well test subjects understand uncertainty communication and whether various communication formats result in different decisions. All such research should also involve decision‐makers in the risk management domain, because these stakeholders may reach different conclusions depending on how uncertainty is communicated.

1. Introduction

1.1. Background

The European Food Safety Authority's (EFSA) Guidance document on uncertainty analysis in scientific assessment (EFSA Scientific Committee, 2018a; henceforth, ‘Uncertainty Analysis GD’) defines ‘uncertainty’ as a general term referring to all types of limitations in available knowledge that affect the range and probability of possible answers to an assessment question.

Available knowledge refers here to the knowledge (evidence, data, etc.) available to assessors at the time the assessment is conducted and within the time and resources agreed for the assessment. Sometimes ‘uncertainty’ is used to refer to a source of uncertainty (see separate definition), and sometimes to its impact on the conclusion of an assessment. In science and statistics, we are familiar with concepts such as measurement uncertainty and sampling uncertainty, and with the fact that weaknesses in the methodological quality of studies used in assessments can be important sources of uncertainty. Uncertainties in how evidence is used and combined in an assessment (e.g. model uncertainty, or uncertainty in weighing different and sometimes conflicting lines of evidence in a reasoned argument) are also important sources of uncertainty. General types of uncertainty that are common in EFSA assessments are outlined in Section 8 of its ‘Scientific opinion on the principles and methods behind EFSA's guidance on uncertainty analysis in scientific assessment’ (EFSA Scientific Committee, 2018b). In that opinion, the Scientific Committee recommended closer interaction between assessors and decision‐makers both during the assessment process and when communicating the conclusions. Understanding the type and degree of uncertainty identified in an assessment helps to characterise the level of risk to the recipients and is therefore essential for informed decision‐making (EFSA Scientific Committee, 2018b). Communicating these uncertainties helps decision‐makers to understand the range and likelihood of possible consequences.

During the development of the Uncertainty Analysis GD, the Scientific Committee reviewed literature on the communication of uncertainty information as a basis for developing a common approach for EFSA's communications on uncertainty to different target audiences, including decision‐makers and the general public. That initial review indicated that the literature is equivocal about the most effective strategies to communicate scientific uncertainties and that, on the whole, there is a lack of empirical data in the literature on which to base a working model. In EFSA's own publication on best practice in risk communication ‘When food is cooking up a storm – proven recipes for risk communications’ (EFSA, 2017), which was developed with national partners, the advice to communicators is only of a general nature.

Therefore, the Scientific Committee recommended that EFSA should initiate research activities to explore best practices and develop further guidance in areas where this would benefit implementation of the Uncertainty Analysis GD, and the communication of uncertainties in scientific assessments targeted at different audiences. This would allow EFSA to identify how changes could be made to its current communications practices in relation to uncertainties and to tailor key messages to specific target audience needs.

As EFSA completed its research activities on communication of uncertainties to different target audiences (EFSA, 2018; Etienne et al., 2018), the Scientific Committee proposed to develop a separate Guidance document on communication of uncertainty in scientific assessments (henceforth ‘Uncertainty Communication GD’). The Scientific Committee considered that the significance of the research results and the different purpose, scope and target of the communication methodology warranted a stand‐alone document for communication practitioners. The Uncertainty Communication GD is a companion document to the Uncertainty Analysis GD.

1.2. Terms of Reference as provided by the requestor

The European Food Safety Authority (EFSA) asked its Scientific Committee and Emerging Risk Unit (SCER) and its Communication Unit (COM) to establish a working group to develop guidance on how to communicate uncertainty on the basis of EFSA's Uncertainty Analysis GD.

The Scientific Committee advised that, to carry out this work, experts in the social sciences (e.g. psychology, risk communication, uncertainty communication and public perceptions) should join its working group on uncertainty. The working group had the following three objectives:

1. Develop practical guidance for EFSA communicators on how to communicate the various expressions of uncertainty resulting from the uncertainty analyses (e.g. qualitative, quantitative) described in EFSA's Uncertainty Analysis GD, and in the ‘Scientific opinion on the principles and methods behind EFSA's guidance on uncertainty analysis in scientific assessment’ (EFSA Scientific Committee, 2018b), with the aim of bridging risk assessors and the different EFSA target audiences.

2. Advise assessors on the ways in which uncertainties are reported in EFSA assessments in relation to the need to communicate.

3. Advise EFSA on its current communication approach for dealing with uncertainty as described in the EFSA Risk Communication Handbook (EFSA, 2017).

1.3. Interpretation of the Terms of Reference

The working group developed the following work plan to reach the three objectives of the Terms of Reference:

1. Develop practical guidance for EFSA communicators on how to communicate the various expressions of uncertainty resulting from the uncertainty analyses (e.g. qualitative, quantitative) described in EFSA's Uncertainty Analysis GD and in the ‘Scientific opinion on the principles and methods behind EFSA's guidance on uncertainty analysis in scientific assessment’ (EFSA Scientific Committee, 2018b). The following tasks were planned to reach objective 1:
   – Identify review papers on risk communication and relate them to uncertainty communication.
   – Perform a literature search on uncertainty communication and review the resulting literature.
   – Complement the literature with the insights gained from the EFSA research projects on communication of uncertainty from 2016 and 2017.
   – Perform an online search on approaches to communicating uncertainty by relevant national and international organisations.
   – Identify a representative set of outputs and case studies on which EFSA might communicate, and map the different sensitivities of topics dealt with by EFSA, target audiences, different types of assessment and expressions of uncertainty (e.g. probabilities, quantitative, qualitative), linking them to the methods described in the Uncertainty Analysis GD.
   – Consider whether different communications on uncertainty are needed for EFSA's defined target audiences and/or whether new categories are required.
   – Develop a practical communications approach and supporting tools for communications practitioners who are required to communicate scientific uncertainties to different target audiences. Use the literature review and the results of EFSA's target audience research activities conducted in 2016 and 2017 to inform the approach.
   – Draft this Guidance document on communication of uncertainty for consultation, and follow up on feedback from the consultation activities with a report and input for finalising the Guidance document.
   – Consult EFSA's risk communication partners in the European Union (EU) Member States, the EU institutions and other interested parties (e.g. other EU agencies, international organisations, non‐EU countries) before finalising the Uncertainty Communication GD.

2. Advise assessors on the ways in which uncertainties are reported in EFSA assessments in relation to the need to communicate. The following tasks were identified to reach objective 2:
   – Based on the results of objective 1, consider whether there are additional requirements and/or recommendations (e.g. terminology, data format, graphics) that Panels should be aware of when drafting their opinions and especially the conclusions of their assessments.

3. Advise EFSA on its current communication approach for dealing with uncertainty as described in the EFSA Risk Communication Handbook (EFSA, 2017). The following tasks were identified to reach objective 3:
   – Review the relevant section of the EFSA Risk Communication Handbook.
   – Identify key examples of past communication challenges in which uncertainty was a decisive issue to determine the impact of a new approach to uncertainty communication.

1.4. Scope, audience and use

The Terms of Reference require guidance for EFSA on how to communicate uncertainty on the basis of its Uncertainty Analysis GD. This Uncertainty Communication GD provides a practical framework for communicating uncertainties in scientific assessments. It does not provide a template for EFSA's risk communication activities, as these take place within a well‐defined legal framework. However, a short description of EFSA's risk communication role, strategies and target audiences follows (Section 1.5) as background and context.

1.4.1. Audience for this guidance

This Uncertainty Communication GD is primarily aimed at risk communicators at EFSA, but it may also be helpful to risk communicators at other institutions that provide scientific advice. It does not replace the current EFSA Risk Communication Handbook (EFSA, 2017), which describes the overall framework and practical approaches for risk communication at EFSA, but supports and complements that publication. In addition to guidance for communicators, this document contains some guidance for assessors on how best to report the various expressions of uncertainty resulting from their uncertainty analyses so that they can support effective uncertainty communication.

1.4.2. Structure of the guidance document

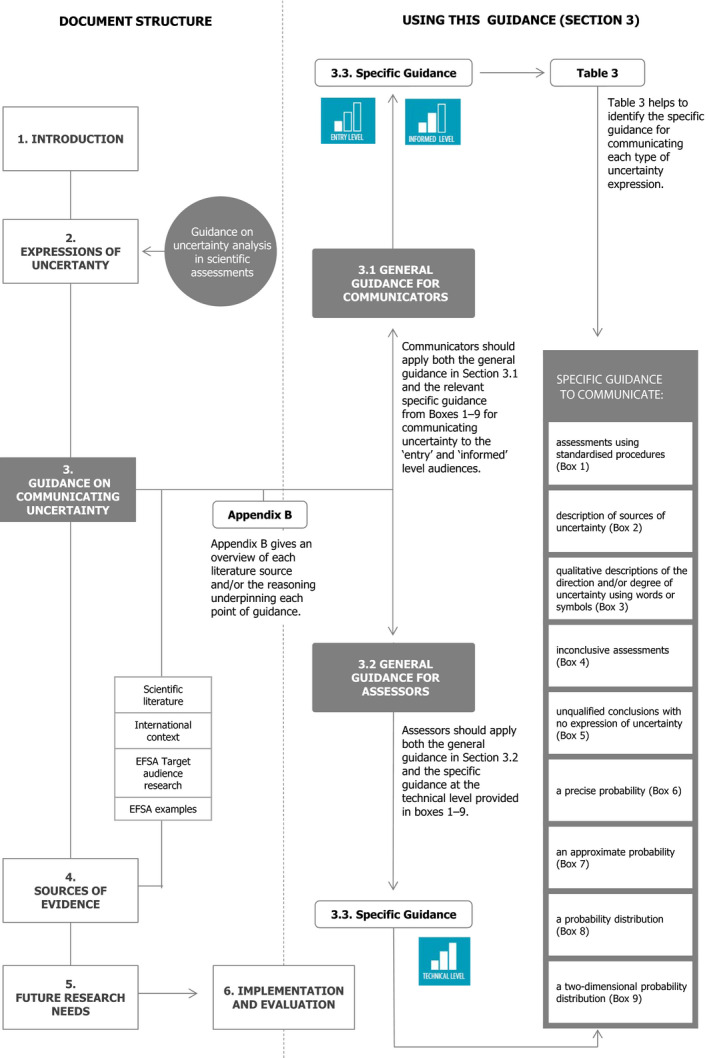

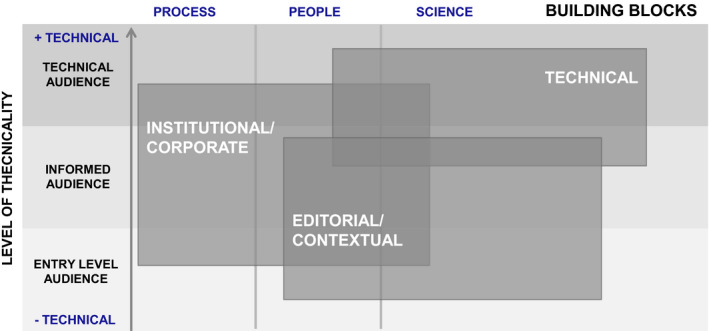

This Uncertainty Communication GD first explains EFSA's three broad communication target audiences (Section 1.5.2) and the eight different possible expressions of uncertainty as described in the Uncertainty Analysis GD (Section 2). As shown in Figure 1, risk communicators looking for straightforward instructions on how best to communicate the various expressions of uncertainty should go to Section 3. The guidance for the entry‐ and informed‐level audiences is addressed to communicators, while the guidance for the technical level is addressed to assessors and advises them on the information to provide in their assessments that is needed for communication. Section 4 describes the evidence sources that contributed to the development of the guidance. Additional reasoning is summarised in Appendix B. Section 5 provides recommendations for further research and Section 6 outlines EFSA's plans for implementing the approach in its working practices and for its subsequent evaluation.

Figure 1.

Visual map of the structure and how to use this Uncertainty Communication GD

1.5. Risk communication at EFSA

EFSA's science communications role within the EU food safety system is discussed in this section. For terminology, the Uncertainty Analysis GD refers to ‘scientific assessment’ rather than ‘risk assessment’ to recognise that the Uncertainty Analysis GD is applicable to all of EFSA's scientific advisory work, not solely its risk assessments. Notwithstanding this, most of the following section refers to EFSA's ‘risk communication’ role because this is the terminology used in the relevant legal texts establishing EFSA. However, this Uncertainty Communication GD applies to all of EFSA's ‘science communication’ activities and so is titled in full: ‘Guidance on communication of uncertainty in scientific assessments’.

1.5.1. EFSA's risk communication role and strategies

Under the EU Food Law, Regulation (EC) No 178/2002, by which EFSA was founded, EFSA is ‘an independent scientific source of advice, information and risk communication to improve consumer confidence’. The Regulation defines risk communication as ‘the interactive exchange of information and opinions throughout the risk analysis process as regards hazards and risks, risk‐related factors and risk perceptions’. As stated by Codex (2018), risk communication should include a ‘transparent explanation of the risk assessment policy and of the assessment of risk, including the uncertainty’.

EFSA is charged with communicating the results of its work in the fields within its mission (food and feed safety, animal and plant health, nutrition) and with explaining its risk assessment findings. The European Commission is responsible for communicating its risk management decisions and the basis for them (i.e. scientific and/or other considerations). EU Member States are also charged with public communication on food and feed safety and risk. Given these overlapping roles, the Regulation also requires that EFSA collaborate closely with the Commission and the Member States to ‘promote the necessary consistency in the risk communication processes’. Risk management measures, if needed, usually take further time to agree and implement after an assessment is completed. Public perceptions of the related risks may be affected by this timescale, which calls for linked communication throughout the entire public information campaign. Critically, this also provides opportunities for feedback and dialogue with representatives of consumers, producers and other interested parties, which is also required under the Food Law.

With a clear mandate to communicate its scientific assessment results ‘on its own initiative’, EFSA follows a communications strategy based on guidelines in its EFSA Risk Communication Handbook (EFSA, 2017) that was developed together with the European Commission and members of EFSA's Communications Experts Network, which comprises communications representatives of EU national competent authorities on food and feed safety. The guidelines provide a framework to assist decision‐making about appropriate communication approaches in a wide variety of situations that can occur when assessing and communicating on risks related to food safety in Europe.

EFSA publishes over 500 scientific assessments and reports annually, but only about one‐tenth of these are accompanied by supporting communications (e.g. press releases/interviews, FAQs, videos, briefings). EFSA's communicators weigh up several factors in selecting assessments for supporting communication and the mix of communication approaches and formats to employ for each. Broadly, these include:

significance of the scientific results (e.g. routine vs new findings);

nature of the risk (e.g. emerging, possible, identified or confirmed);

potential public/animal health/environmental impact (e.g. there is a safety concern);

public perception and anticipated reactions/sensitivity of subject area;

legislative and market contexts (e.g. a request for authorisation);

urgency of request (e.g. in an outbreak situation, or a long‐term review);

institutional risk (national, European, international contexts).

The existence of one or more of the above factors in relation to an upcoming assessment or other activity is a potential trigger for such supporting communications. The communicator analyses the key results of the assessment or report, discusses key messages and supporting points with the assessors (scientific officers, EFSA Panel or working group members), agreeing a communications plan in the process. The plan identifies the rationale for communicating, the key messages and supporting points, and also defines the target audiences for the communication.
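The screening rule described above (one or more factors present makes an assessment a potential trigger) can be sketched as a small record that a communicator fills in per assessment. This is a hypothetical illustration, not an EFSA system: the factor labels, `AssessmentScreen` and `is_potential_trigger` are invented names, and the check only marks a candidate, since the communicator still weighs the factors and agrees a plan with the assessors.

```python
from dataclasses import dataclass, field

# Hypothetical short labels for the seven factors listed above
# (illustrative only, not EFSA terminology).
FACTORS = frozenset({
    "significance_of_results",
    "nature_of_risk",
    "potential_impact",
    "public_perception",
    "legislative_market_context",
    "urgency",
    "institutional_risk",
})


@dataclass
class AssessmentScreen:
    """Record of which factors a communicator has flagged for one assessment."""
    title: str
    flagged: set = field(default_factory=set)

    def __post_init__(self) -> None:
        # Reject labels that are not among the seven listed factors.
        unknown = self.flagged - FACTORS
        if unknown:
            raise ValueError(f"unknown factors: {sorted(unknown)}")

    def is_potential_trigger(self) -> bool:
        # One or more flagged factors makes the assessment a candidate for
        # supporting communications; it does not settle the decision.
        return len(self.flagged) > 0


screen = AssessmentScreen("example assessment", {"urgency", "public_perception"})
print(screen.is_potential_trigger())  # True
```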

1.5.2. EFSA's target audiences

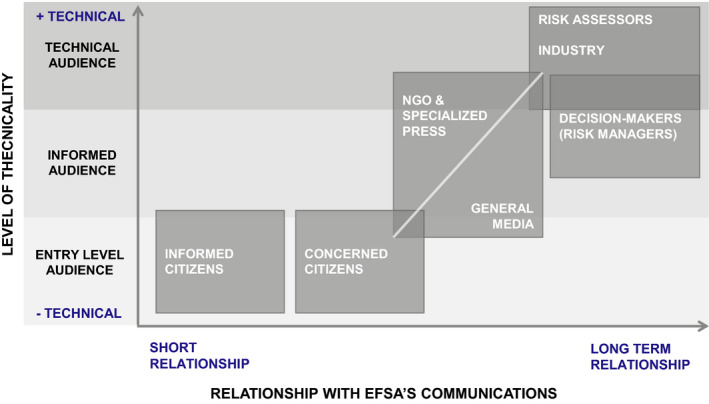

The EU Food Law identifies the target audiences of risk communication on EU food and feed safety as ‘risk assessors, risk managers, consumers, feed and food businesses, the academic community and other interested parties’. For EFSA's risk communication, the potential audience is therefore 500 million people residing in the European Union. Their interest, knowledge and concerns about food and food safety vary widely. Language, tradition, culture, age and education (e.g. scientific literacy) are among the variables that affect their understanding of messages about food safety‐related assessments.

Communicating directly to everyone is unrealistic given this diversity and complexity. Therefore, in the EU food safety system, many players share responsibility for communicating about food risks (and benefits) to consumers, food chain operators and other interested parties. EFSA cooperates with these other players: its partners in the EU Member States, EU institutions and ‘stakeholder’ groups (e.g. consumer organisations and public health professionals) to further disseminate the outcomes of its scientific assessments. To enable this, EFSA tailors its communication messages in layers to the expected scientific literacy and interests of these audiences and targets them through a mixture of channels (meetings, the media, website, social media, scientific journal) and formats (scientific opinions, news stories, multimedia, tweets).

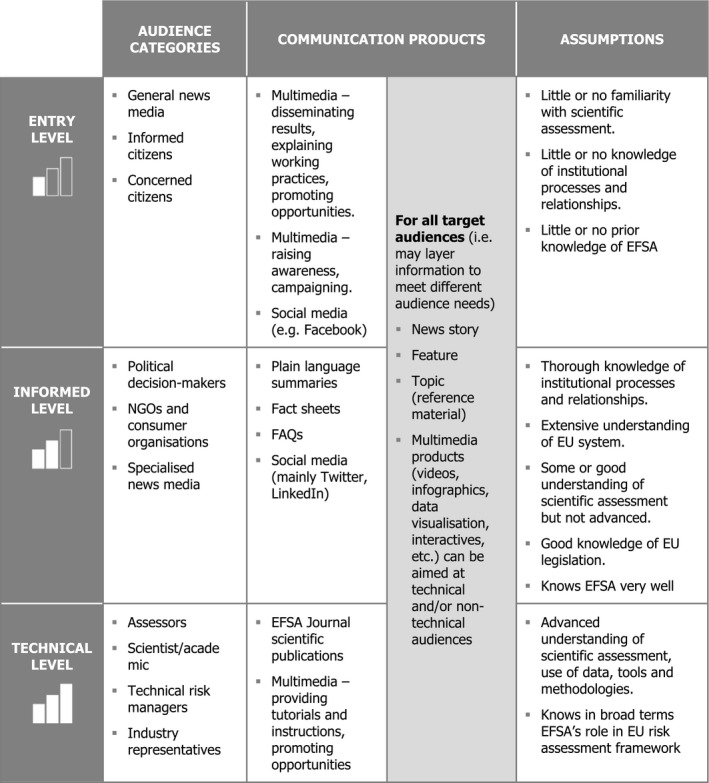

In devising its external communications, EFSA follows an approach for mapping and targeting these audiences (see Table 1) that was codified internally in 2014–2015 for the redesign of EFSA's corporate website. It was subsequently adapted to other communication channels and formats; more detailed segmentation of the audiences is possible for specific types of communication (e.g. to attract participants to events on specialist topics). The approach was based on the analysis of extensive user‐centred research involving interviews, online surveys, analytics data (web metrics, media pick up) and external expertise, as well as the frameworks that guide EFSA's work: EU Food Law, EFSA's strategic documents and plans (see Annex A for further details).

Table 1.

Mapping EFSA's target audiences for external communications (2015)

The table shows key target audience groups for EFSA that were identified through this research: decision‐makers, assessors, industry, non‐governmental organisations (NGOs)/specialised media, general media, and informed/concerned citizens. It also clusters them according to their scientific literacy and temporal relationship with EFSA's communications into three broad categories – ‘entry’, ‘informed’ and ‘technical’ levels. EFSA generally seeks to layer its communications content to improve accessibility for users in these broad audience categories. Certain audiences may generally prefer different communications products and channels, and the content can be tailored accordingly. The layering of information can also be within a single communication output. For example, a news story headline and introductory paragraphs are worded to be understandable to ‘entry’ level users while subsequent paragraphs may provide more details for ‘informed’ and ‘technical’ audiences.
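The layering within a single output, as in the news story example above, can be modelled as an ordered list of content layers, each tagged with the audience level it is written for. This is a minimal sketch under a stated assumption: readers at a higher level are taken to read the more accessible layers too, so a reader's view is every layer at or below their level. The names `AudienceLevel`, `Layer` and `layers_for` and the placeholder layer texts are hypothetical.

```python
from dataclasses import dataclass
from enum import IntEnum


class AudienceLevel(IntEnum):
    # Ordered so that a higher value means greater expected scientific literacy.
    ENTRY = 1
    INFORMED = 2
    TECHNICAL = 3


@dataclass(frozen=True)
class Layer:
    text: str
    level: AudienceLevel  # audience level the layer is worded for


def layers_for(story: list, reader: AudienceLevel) -> list:
    """Return, in order, the layers worded at or below the reader's level.

    Assumption (hypothetical model): higher-level readers also read the
    more accessible layers that precede the detailed ones.
    """
    return [layer for layer in story if layer.level <= reader]


story = [
    Layer("Headline", AudienceLevel.ENTRY),
    Layer("Introductory paragraphs", AudienceLevel.ENTRY),
    Layer("More details", AudienceLevel.INFORMED),
    Layer("Technical details", AudienceLevel.TECHNICAL),
]
print([layer.text for layer in layers_for(story, AudienceLevel.INFORMED)])
# ['Headline', 'Introductory paragraphs', 'More details']
```

Keeping the layers in reading order means the entry-level content always comes first, matching the headline-then-details structure described in the text.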

The mapping of target audiences and the strategy for content development come with important caveats. There is much diversity within the target audiences and considerable overlap between them in terms of the assumptions made about them (e.g. their scientific literacy) and the communication products they may use when informing themselves about EFSA. For example, industry representatives have widely varying degrees of scientific literacy, and users in all categories have personal preferences that may guide them in selecting one format over another. Nevertheless, this approach has proved practical and was already in use at EFSA, making it a functioning framework for the purposes of the Uncertainty Communication GD. Therefore, these groups of target audiences will be used as a parameter in structuring the guidance in Section 3. Communicators who are not involved in developing or further disseminating EFSA's communication activities, but who wish to use this guidance, could probably adapt this approach easily to the characteristics of their own target audiences.

1.6. Uncertainty communication

Communicating uncertainty aims to increase the transparency of the scientific assessment process and to provide risk managers with a more informed evidence base by reporting on the strengths and weaknesses of the evidence (EFSA Scientific Committee, 2018b). However, several factors require consideration in developing strategies for uncertainty communication. Aversion to ambiguity has been observed in many studies (e.g. Ellsberg, 1961). People tend to prefer a risky option (e.g. a 30% chance of a gain) over an ambiguous option (e.g. a 20–40% chance of a gain). This aversion does not imply, however, that uncertainty should not be communicated. Transparent and open communication requires that uncertainty is communicated. Furthermore, studies suggest that people prefer to be openly informed about uncertainty associated with scientific findings (Frewer et al., 2002; Miles and Frewer, 2011).
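The ambiguity-aversion example above can be checked with simple arithmetic. As a sketch, assuming (hypothetically) that a person's belief about the ambiguous option is spread evenly across the 20–40% range, its average chance of a gain equals the 30% of the risky option. A preference for the risky option therefore cannot be explained by a difference in the expected chance of a gain alone. Exact fractions are used to avoid floating-point rounding.

```python
from fractions import Fraction


def midpoint(low: Fraction, high: Fraction) -> Fraction:
    """Mean of a belief spread evenly (uniformly) over [low, high]."""
    return (low + high) / 2


# Risky option: a single stated chance of a gain.
risky = Fraction(30, 100)

# Ambiguous option: only a range is stated; the even spread is an assumption.
ambiguous_low, ambiguous_high = Fraction(20, 100), Fraction(40, 100)
ambiguous_mean = midpoint(ambiguous_low, ambiguous_high)

print(risky == ambiguous_mean)  # True
```

The same equality holds for any belief that is symmetric around the middle of the range, not only an even spread.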

The willingness to receive information about uncertainty also depends on education and age, with young and highly educated people wanting more details about uncertainty than less educated groups (Lofstedt et al., 2017). Additionally, beliefs about science interact with the level of scientific uncertainty, with high uncertainty being more influential on the willingness to act for people considering science as debate than for people understanding science as a search for absolute truth (Rabinovich and Morton, 2012).

To understand how and when uncertainty could best be formulated in qualitative terms, Frewer et al. (2002) tested the reception of 10 uncertainty statements, finding that people wanted to be provided with information on uncertainty as soon as it was identified and in full. Miles and Frewer (2011) tested the communication of eight types of uncertainty: uncertainty about who is affected, about past and future states, measurement uncertainty, uncertainty due to scientific disagreement, uncertainty from extrapolation from animals to humans, about the size of the risk and about how to reduce the risk. Their results differed across the five food hazards studied and confirmed higher risk perceptions in the presence of uncertainty for technological risks (genetically modified organisms, pesticides) than for natural risks (BSE, fat diets, Salmonella).

Frewer et al. (2002) found that uncertainty associated with the scientific process was more readily accepted than uncertainty due to lack of action by the government. This suggests that communication of uncertainty is less likely to cause public alarm if it is accompanied by information on what actions the relevant authorities are taking to address that uncertainty. However, such actions are risk management measures, which are outside the remit of EFSA (see Section 1.5.1). Therefore, when EFSA communicates on a risk it has assessed and includes information on uncertainty, consideration should be given to coordinating with the Commission on what can be said about any measures aimed at addressing the uncertainty.

1.6.1. EFSA's context

Transparency is one of EFSA's basic principles (EFSA, 2003) and has important implications for risk communication. A transparent approach to explaining how an organisation works, its governance and how it makes its decisions, is intended to build trust. Communications should clearly convey the most important areas of uncertainty in the scientific assessment, whether and how these can be addressed by the assessors and decision‐makers, and the implications of these remaining uncertainties for public health (EFSA, 2017).

It is important to distinguish between the understanding (comprehension) of EFSA's message by the audience, and the audience's perception of risk and uncertainty after receiving the message. Article 40 of EFSA's founding Regulation requires that EFSA ‘shall ensure that the public and any interested parties are rapidly given objective, reliable and easily accessible information, in particular for the results of its work’. This implies that EFSA's communications should be designed to ensure the results of its work (including its assessment of risk and uncertainty) are correctly understood by its audience, which is therefore the objective of this guidance. How people perceive risk and uncertainty themselves, after receiving EFSA's communications, will be influenced by many factors including their own prior beliefs, their stake in the issues involved, and their values. While authorities may consider it appropriate to communicate options for managing risk and uncertainty, this is outside EFSA's remit. This has implications for the review of the literature and the guidance, as most studies have focused on the perceptions of subjects after receiving information on risk and uncertainty, rather than their understanding of the information as it was communicated (see Section 4).

1.6.2. International context

Many national and international risk communication guidance documents include only general rather than specific considerations about communication of uncertainty (Bloom et al., 1993; NRC, 2003; Fischhoff et al., 2012; EEA, 2013; NASEM, 2017). A few national and international guidance documents on uncertainty analysis include a section with suggestions on how to communicate the findings of uncertainty analysis most effectively (Wardekker et al., 2013; Mastrandrea et al., 2010; BfR, 2015). Some of these suggestions restate basic principles of good risk communication in the context of uncertainty, such as the need for transparent reporting of, for example, lack of sufficient knowledge, shared assumptions, or the criteria by which evidence is included or dismissed.

Specific guidance on how to communicate uncertainty has rarely been developed. Exceptions include the ‘Guidance for uncertainty assessment and communication’ by the Dutch environmental assessment agency (Wardekker et al., 2013) and ‘Environmental decisions in the face of uncertainty’ by the Institute of Medicine in the USA (IOM, 2013). Both provide general recommendations for communicating uncertainty, along with more specific recommendations on addressing different target audiences and on how to present the uncertainty. However, they remain general in nature and do not provide detailed guidance on the different expressions of uncertainty that may result from applying EFSA's Uncertainty Analysis GD (see next section).

2. Expressions of uncertainty

Messages about uncertainty need to be based on information that is provided in EFSA's scientific assessments. Therefore, this section describes the main types of expressions of uncertainty that are expected to result from uncertainty analyses following EFSA's Uncertainty Analysis GD. Section 3 then provides guidance on communicating each type of uncertainty expression, based on the different sources of evidence that contributed to the guidance and additional reasoning that is summarised in Appendix B.

The Uncertainty Analysis GD contains several options for carrying out an uncertainty analysis. Table 2 lists the types of expressions of uncertainty that are normally produced when uncertainty analysis is conducted by following those options. It does not include expressions that are discouraged by the Uncertainty Analysis GD, e.g. a range or bound for a quantity of interest that is presented without a specified probability and therefore lacks a defined meaning. For conciseness, Table 2 and Sections 3 and 4 also do not include other expressions that may be generated by some more specialised methods included in the Uncertainty Analysis GD, such as probability boxes (Ferson et al., 2004) and the outputs of some methods for sensitivity analysis (Annex B17 of EFSA Scientific Committee, 2018b).

Table 2.

Types of expressions of uncertainty produced by uncertainty analysis when following the Uncertainty Analysis GD (EFSA Scientific Committee, 2018a) and included in the conclusion, summary or abstract of a scientific assessment. The same assessment may produce one or more of these expressions.

| Type of uncertainty expression | Description |
|---|---|
| Unqualified conclusion, with no expression of uncertainty | This occurs in two situations: (1) standard assessment procedures in which only the standard uncertainties are present; (2) other contexts in which legislation or decision‐makers require an unqualified conclusion |
| Description of a source of uncertainty | Verbal description of a source or cause of uncertainty. In some areas of EFSA's work, there are standard terminologies for describing some types of uncertainties, but often descriptions are specific to the assessment in hand (EFSA Scientific Committee, 2018b) |
| Qualitative description of the direction and/or magnitude of uncertainty using words or symbols | Words or an ordinal scale describing how much a source of uncertainty affects the assessment or its conclusion (e.g. low, medium or high uncertainty; conservative, very conservative or non‐conservative; unlikely, likely or very likely; or symbols indicating the direction and magnitude of uncertainty: −−−, −−, −, +, ++, +++). Because the meaning of such expressions is ambiguous, EFSA's Uncertainty Analysis GD recommends that they should not be used unless they are accompanied by a quantitative definition (EFSA Scientific Committee, 2018a) |
| Inconclusive assessment | This occurs in two situations: |
| A precise probability | A single number (in EFSA outputs: a percentage between 0% and 100%) quantifying the likelihood of either a specified outcome or conclusion, or a specified range of values for a quantity of interest. Note that the term ‘precise’ is used here to refer to how the probability is expressed, as a single number, and does not imply that it is actually known with absolute precision, which is not possible |
| An approximate probability | Any range of probabilities (e.g. 10–20% probability) providing an approximate quantification of likelihood for either a specified outcome or conclusion, or a specified range of values for a quantity of interest. The probability ranges used in EFSA's approximate probability scale (see Table 4) are examples of approximate probability expressions. Assessors are not restricted to the ranges in the approximate probability scale and should use whatever ranges best reflect their judgement of the uncertainty (EFSA Scientific Committee, 2018a) |
| A probability distribution | A graph showing probabilities for different values of an uncertain quantity that has a single true value (e.g. the average exposure for a population). The graph can be plotted in various formats, most commonly a probability density function (PDF), cumulative distribution function (CDF) or complementary cumulative distribution function (CCDF) (see Section 4.1.4.2) |
| A two‐dimensional probability distribution | In this guidance, the term ‘two‐dimensional (or 2D) probability distribution’ refers to a distribution that quantifies the uncertainty of a quantity that is variable, i.e. takes multiple true values (e.g. the exposure of different individuals in a population). This is most often plotted as a CDF or CCDF representing the median estimate of the variability, with confidence or probability intervals quantifying the uncertainty around the CDF or CCDF |

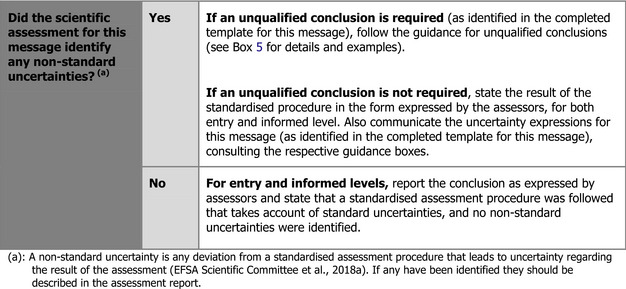

It is currently expected that, in many assessments, the conclusion, summary and abstract of the assessment will not contain expressions of uncertainty. This may arise in two types of situation, as indicated in the description for ‘unqualified conclusions’ in Table 2.

The first type of situation arises in standard assessment procedures, which are most commonly used in the assessment of regulated products. A standard procedure is an assessment methodology prepared for routine use in a specified type of assessment (e.g. acute or chronic risk for a specified class of chemicals). Standard assessment procedures include standard provisions (e.g. uncertainty factors) for addressing ‘standard uncertainties’, i.e. uncertainties that routinely occur in that type of assessment, and their conclusions are expressed in a standard form (e.g. ‘no health concern’, ‘health concern’, etc.) (EFSA Scientific Committee, 2018a,b). Provided only the standard uncertainties are present, the standard conclusion can be reported and communicated without qualification.

The second situation relates to other contexts in which legislation or decision‐makers require an unqualified conclusion (EFSA Scientific Committee, 2018a,b). This may apply to standard procedures in which non‐standard uncertainties are present, and to some types of non‐standard (case‐specific) assessments. In both cases, the assessment conclusion will have been based on an analysis of the uncertainties that are present but will be reported in an unqualified manner, which should then also be the primary message in communication. If the uncertainties are reported in the body of the assessment or an annex, then they may also be referred to in supporting parts of the communication but should not be included in the primary message.

When non‐standard uncertainties are present, the Uncertainty Analysis GD recommends that assessors quantify the combined impact of as many of the uncertainties they identify as possible, for reasons explained in detail by EFSA Scientific Committee (2018b). However, in some assessments, the assessors will be unable to include all the identified uncertainties in their quantitative expression of overall uncertainty, which will then be accompanied by a qualitative description of the unquantified uncertainties.

The Uncertainty Analysis GD also includes a form of uncertainty expression called a ‘probability bound’. Note that a probability bound is expressed either as a ‘precise probability’ or as an ‘approximate probability’, and should be communicated accordingly. Precise and approximate probabilities are addressed separately in the present guidance because they have different implications for communication.

Note also that the Uncertainty Analysis GD quantifies uncertainty using probability expressed as a percentage (0–100%), which leads to potential for confusion when the uncertainty refers to a quantity that is itself a percentage, e.g. a 10% probability that 10% of people have exposures above a reference dose. This and other potential sources of misunderstanding are addressed by the general guidance in Section 3.1.
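The ‘10% probability that 10% of people have exposures above a reference dose’ case is the kind of result produced by a two‐dimensional probability distribution (Table 2). The minimal Python sketch below illustrates how such a result can arise from a two‐dimensional Monte Carlo simulation, in which an outer loop samples uncertain parameters and an inner loop samples variability between individuals. It assumes, purely for illustration, a lognormal exposure distribution with hypothetical uncertain parameters and a reference dose of 1 (arbitrary units); none of these values comes from an EFSA assessment. The result summarises a single point of the CCDF: the fraction of people above the reference dose, with a 95% probability interval.

```python
import random
import statistics


def two_dimensional_mc(n_uncertainty=200, n_variability=1000, reference_dose=1.0, seed=1):
    """Hypothetical 2D Monte Carlo sketch.

    Outer loop: uncertainty about the parameters of the exposure distribution.
    Inner loop: variability in exposure between individuals.
    Returns (median, lower, upper) for the fraction of individuals whose
    exposure exceeds the reference dose, with a 95% probability interval.
    """
    rng = random.Random(seed)
    fractions = []
    for _ in range(n_uncertainty):
        # Assumed (illustrative) uncertain parameters of a lognormal distribution
        mu = rng.gauss(-1.0, 0.2)
        sigma = rng.uniform(0.4, 0.6)
        exposures = [rng.lognormvariate(mu, sigma) for _ in range(n_variability)]
        # Variability: share of simulated individuals above the reference dose
        fractions.append(sum(e > reference_dose for e in exposures) / n_variability)
    # 39 cut points at 2.5% steps: first is the 2.5th, last the 97.5th percentile
    cuts = statistics.quantiles(fractions, n=40)
    return statistics.median(fractions), cuts[0], cuts[-1]


median, lower, upper = two_dimensional_mc()
# Express the variable quantity as a frequency and the uncertainty as % certainty
print(f"The experts considered it 95% certain that between {round(lower * 1000)} and "
      f"{round(upper * 1000)} in 1,000 people exceed the reference dose "
      f"(median estimate: {round(median * 1000)} in 1,000).")
```

Using frequencies for the variable quantity (people out of 1,000) and % certainty for the uncertainty keeps the two kinds of percentage apart, in line with the guidance in Section 3.1.4.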

3. Guidance on communicating uncertainty

This section contains practical guidance for communicators and some guidance for assessors on providing information in their assessments that is needed for communication. It gives straightforward instructions, practical tips and examples, as well as further choices to consider. It is presented as general guidance and specific guidance: the general guidance applies universally to all communication of uncertainty in EFSA scientific assessments; the specific guidance is structured to address and communicate the different expressions of uncertainty described in Section 2.

The guidance is based, as far as possible, on the scientific literature and author recommendations described in Section 4. The available evidence does not address every aspect of communicating uncertainty, however, so some general and specific guidance is based on judgement and reasoning. This guidance was formulated following the testing and interpretation of concrete EFSA examples, which are listed in Section 4.4. For transparency, the literature source and/or detailed reasoning underpinning each point of guidance is summarised in Appendix B.

This guidance complements the EFSA Risk Communication Handbook (EFSA, 2017), which details general best practice in science communication: e.g. use plain language as far as possible, explain technical terms when they are unavoidable, and provide links to more detailed information (e.g. factsheets, videos, FAQs or EFSA's scientific outputs) for interested readers.

3.1. General guidance for communicators

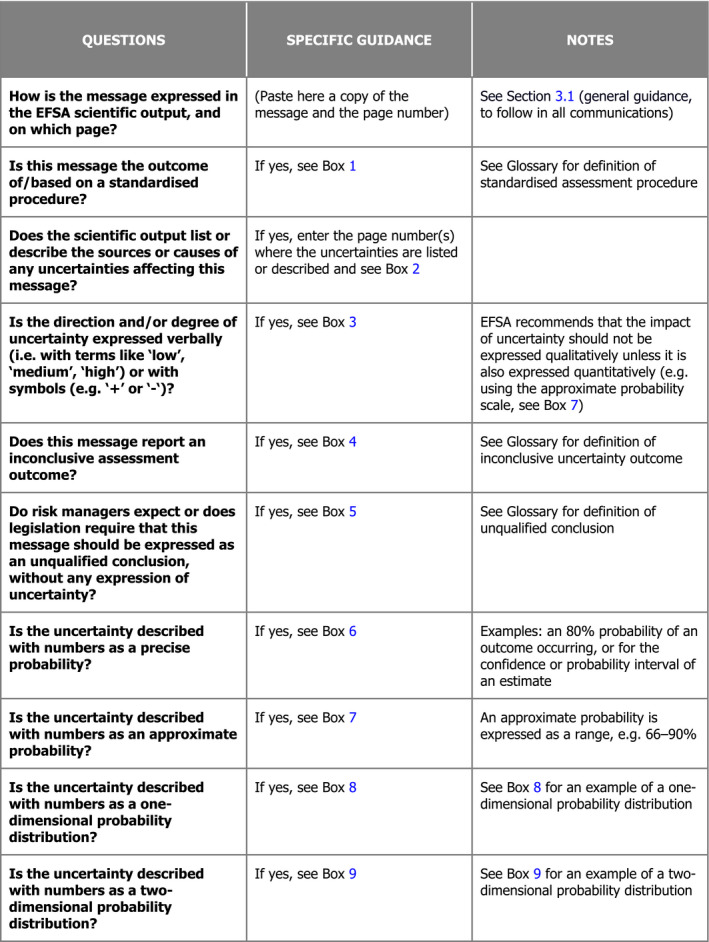

Follow all the general guidance below for communicating uncertainty to the ‘entry’ and ‘informed’ level audiences. Apply it together with the specific guidance related to the different uncertainty expressions (described in Section 2). Complete the template in Table 3 to find the specific guidance, contained in separate boxes (Section 3.3), that is relevant for your communication.

Table 3.

Template for identifying messages with associated uncertainty expressions, and specific guidance for their communication

3.1.1. Alignment with the assessment

Be consistent with the degree of certainty or uncertainty the assessors give to their scientific conclusions in all parts of your communication, including entry‐ and informed‐level material and any accompanying titles or headlines. Avoid using any forms of expression that would imply more or less certainty than expressed in the assessment.

State clearly what the message and uncertainty information refer to, e.g. a specific event, outcome or quantity, and the population, geographic region and time period for which it has been assessed. Indicate if the outcome has any particular importance (e.g. exposure exceeding a reference dose such as a tolerable intake).

State clearly if the conclusion described in your message or the assessment of its uncertainty is subject to any conditions or assumptions (e.g. ‘this assessment assumes consumers comply fully with the proposed dietary advice, and would change if compliance was partial’).

3.1.2. Describing uncertainty with words

If numbers are provided in the scientific output to express uncertainty, do not replace them with words – follow the guidance in the following sections.

If the scientific output includes words that describe a degree of uncertainty or probability (e.g. low uncertainty, unlikely, high probability), use exactly the same wording in your communications. Only alter the wording (e.g. if you consider it too technical or unclear) after checking the rewording with the assessors to ensure it still conveys the intended message.

3.1.3. Describing uncertainty with numbers

When assessors quantify uncertainty using probability, always communicate it numerically and do not convert it into words (e.g. likely, unlikely).

Refer to uncertainty as percentage certainty (e.g. ‘the experts considered it 90% certain that…’) rather than percentage probability, as this helps to make clear that it is an expert judgement and not a measure of frequency. The word ‘certainty’ is preferred to ‘confidence’ because the latter has different connotations, including a special technical meaning when used in the term ‘confidence interval’.

At the entry level, frame the message positively as % certainty for the outcome, conclusion or range of values that EFSA considers more likely. This is important because expressing, e.g., 5% probability as 5% certainty is misleading, since there is then a 95% probability that the outcome will not occur. This is better expressed as 95% certainty of non‐occurrence. At the informed level, repeat the certainty statement from the entry level and explain clearly the main reasons why the outcome might occur. Then, give the main reasons why the outcome might not occur.
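The positive framing rule amounts to a simple conversion, shown in the minimal Python sketch below. The function name and sentence template are hypothetical, and the sketch assumes a single precise probability for one outcome; explaining the reasons at the informed level still has to be written by hand.

```python
def frame_as_certainty(p_outcome: float, outcome: str, non_outcome: str) -> str:
    """Express a subjective probability as % certainty for whichever
    alternative the assessors consider more likely (positive framing)."""
    if not 0.0 <= p_outcome <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p_outcome >= 0.5:
        # The outcome itself is the more likely alternative
        return f"The experts considered it {round(100 * p_outcome)}% certain that {outcome}."
    # Otherwise report certainty of non-occurrence (e.g. 5% -> 95% certain it will not occur)
    return f"The experts considered it {round(100 * (1 - p_outcome))}% certain that {non_outcome}."


print(frame_as_certainty(0.05, "the hazard exists", "the hazard does not exist"))
# The experts considered it 95% certain that the hazard does not exist.
```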

If the assessors provide a verbal expression as well as probability (e.g. when using the ‘approximate probability scale’ (APS), see Table 4), always communicate the probability first (expressed as % certainty) and the words second. For example, ‘the experts considered it 66–90% certain (likely) that…’ should be preferred to ‘the experts considered it likely (66–90% certain) that…’. This is because, in English, people interpret numeric and verbal probabilities more consistently in this order. This may be different in other languages where word order is more flexible or follows other conventions.

When communicating a range for an uncertain quantity, always accompany it with an expression of probability that the quantity lies in this range, e.g. ‘exposure was estimated to be between 5 and 20 mg/kg bw per day, with 95% certainty’. Without this, the meaning of the range is ambiguous. Also consider indicating which values within the range are more likely, e.g. by providing a central estimate (see the next point), to counter the tendency for people to focus excessively on the upper or lower bound.

Do not use the expression ‘best estimate’, rather use ‘central estimate’ and make clear at the informed level which type of central estimate it refers to, e.g. a mean or median estimate or the most likely value (mode). The meaning of ‘best’ is ambiguous and might lead people to focus excessively on that value.
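The two preceding points can be illustrated with a minimal Python sketch that turns uncertainty samples (e.g. from a Monte Carlo analysis) into a range with its probability plus a named central estimate. The samples are hypothetical (a lognormal distribution with median 10 mg/kg bw per day), and the median is used as the central estimate only as an example; a mean or mode would need to be named accordingly. Values are rounded to two significant figures, consistent with the advice for assessors in Section 3.2.

```python
import math
import random


def percentile(sorted_values, q):
    """Percentile by linear interpolation between order statistics (0 <= q <= 1)."""
    pos = q * (len(sorted_values) - 1)
    i = int(pos)
    j = min(i + 1, len(sorted_values) - 1)
    return sorted_values[i] + (pos - i) * (sorted_values[j] - sorted_values[i])


def summarise(samples, certainty=0.95):
    """Return (lower, median, upper) for a central probability interval."""
    values = sorted(samples)
    tail = (1 - certainty) / 2
    return percentile(values, tail), percentile(values, 0.5), percentile(values, 1 - tail)


def describe(lower, median, upper, certainty=0.95, unit="mg/kg bw per day"):
    """Range with its probability, plus a named central estimate (2 significant figures)."""
    return (f"Exposure was estimated to be between {lower:.2g} and {upper:.2g} {unit}, "
            f"with {round(100 * certainty)}% certainty; the median estimate was {median:.2g} {unit}.")


# Hypothetical uncertainty samples for average exposure: lognormal with median 10
rng = random.Random(42)
samples = [rng.lognormvariate(math.log(10), 0.35) for _ in range(10_000)]
print(describe(*summarise(samples)))
```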

Communicate the quantities that are part of the message – do not require the audience to infer them. For example, if you have to communicate a range, communicate the upper and lower bounds explicitly and do not require the audience to derive them by looking at a graph.

Link, when relevant, to general FAQs for explanation of all commonly used ways of communicating uncertainty. They should explain probabilities, approximate probabilities and the terms in EFSA's APS (e.g. ‘likely’) and also any visualisations that might be used in communication (e.g. box plots, see Box 8 below).

Table 4.

Approximate probability scale recommended for harmonised use in EFSA to express uncertainty about questions or quantities of interest

| Probability term | Subjective probability range | Additional options | |
|---|---|---|---|
| Almost certain | 99–100% | More likely than not: > 50% | Unable to give any probability: range is 0–100%. Report as ‘inconclusive’, ‘cannot conclude’, or ‘unknown’ |
| Extremely likely | 95–99% | | |
| Very likely | 90–95% | | |
| Likely | 66–90% | | |
| About as likely as not | 33–66% | | |
| Unlikely | 10–33% | | |
| Very unlikely | 5–10% | | |
| Extremely unlikely | 1–5% | | |
| Almost impossible | 0–1% | | |

This table was adapted from a similar scale used by the Intergovernmental Panel on Climate Change. Additional details and guidance on use can be found in EFSA Scientific Committee (2018b).
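The ordering rule in Section 3.1.3 (numeric probability first, verbal term second) can be applied mechanically once the scale in Table 4 is available as a lookup. The minimal Python sketch below is a hypothetical helper, not an EFSA tool. It assumes that the conclusion passed in is already the alternative the assessors consider more likely (positive framing), so in practice the lower rows of the scale would rarely be used, and it does not cover the ‘additional options’ column.

```python
# Approximate probability scale (APS) from Table 4: (verbal term, lower %, upper %)
APS = [
    ("almost certain", 99, 100),
    ("extremely likely", 95, 99),
    ("very likely", 90, 95),
    ("likely", 66, 90),
    ("about as likely as not", 33, 66),
    ("unlikely", 10, 33),
    ("very unlikely", 5, 10),
    ("extremely unlikely", 1, 5),
    ("almost impossible", 0, 1),
]


def aps_statement(lower: int, upper: int, conclusion: str) -> str:
    """Report a probability range as % certainty, numeric first, verbal APS term second."""
    for term, lo, hi in APS:
        if (lo, hi) == (lower, upper):
            return f"The experts considered it {lower}–{upper}% certain ({term}) that {conclusion}."
    # Assessors may use ranges that are not on the APS; report these numerically only
    return f"The experts considered it {lower}–{upper}% certain that {conclusion}."


print(aps_statement(66, 90, "exposure exceeds the reference dose"))
# The experts considered it 66–90% certain (likely) that exposure exceeds the reference dose.
```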

3.1.4. Precautions when using numbers

Avoid using hedging words such as ‘about’, ‘approximately’, ‘up to’, etc. to qualify numerical expressions. People interpret the meaning of these words differently. Warning: if you choose to use hedging words anyway – e.g. saying ‘about 80% certain’ in spoken communication if ‘66–90% certain’ seems less natural – be aware that people interpret the implied range around 80% differently. This means that at least some of your audience will understand incorrectly what the assessors intended.

Try to word the communication so that it makes clear that expressions of % certainty are the consensus judgement of the experts involved for the probability of a conclusion being correct, and do not refer to the percentage of experts who support the conclusion.

Avoid using percentages for the outcome of interest (e.g. a proportion of something, or the incidence or risk of an outcome or effect), to avoid confusion with percentages quantifying uncertainty. Where possible, use frequencies (e.g. 1 in 20) to express incidence. Example: ‘The Panel was 90% certain that at most 3 in 10,000 sheep would be affected by the virus.’
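Converting an incidence from a proportion to a frequency is a one‐line calculation; the minimal Python sketch below reproduces the sheep example. The helper name and the default denominator of 10,000 are assumptions for illustration: the denominator is a communication choice and should be large enough that the count does not round to zero.

```python
def as_frequency(proportion: float, denominator: int = 10_000) -> str:
    """Express a proportion (0-1) as a natural frequency such as '3 in 10,000'."""
    if not 0.0 <= proportion <= 1.0:
        raise ValueError("proportion must be between 0 and 1")
    return f"{round(proportion * denominator):,} in {denominator:,}"


print(f"The Panel was 90% certain that at most {as_frequency(0.0003)} sheep "
      "would be affected by the virus.")
# The Panel was 90% certain that at most 3 in 10,000 sheep would be affected by the virus.
```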

People are generally more familiar with the use of probability for expressing variability, frequency, incidence or risk. Therefore, if you use probability to express uncertainty, use wordings that avoid people misinterpreting it. For example, if communicating EFSA's percentage probability that a hazard exists, either use % certainty as recommended in Section 3.1.3, or explain clearly that the probability refers to uncertainty about whether the hazard exists, and not to the percentage of people who will be affected.

Ranges are often used to represent variability, e.g. the range of intakes in a population. Therefore, state clearly when ranges represent uncertainty. Also, people are more familiar with the use of graphs (e.g. box plots) to represent variability, so explain clearly when they represent uncertainty, or both variability and uncertainty (see Boxes 8 and 9 respectively).

3.1.5. Describing sources of uncertainty

Provide information on sources of uncertainty at the informed level, when this is included in the assessment report, as it helps recipients to understand why uncertainty is present (see Box 2 for guidance on this).

Make clear that all uncertainties referred to in the communication have been taken into account in the overall conclusion of the assessment, to avoid any impression that the conclusion is undermined by the uncertainties. For example, if the conclusion is ‘no safety concern’, then it must be made clear that this is the final conclusion of the assessors after considering the uncertainties.

3.1.6. Addressing the uncertainties

Communicate information about options for addressing uncertainty, especially if the uncertainty is substantial or might cause concern.

- If the assessment evaluates any risk management options for dealing with uncertainty (e.g. precautionary action), present these as options. Do not imply any preference between options or recommend particular options as in most cases this would involve risk management considerations (e.g. affordability, feasibility, proportionality), which are outside EFSA's remit.
- If the assessment specifies any options or requirements for further data or analysis aimed at reducing uncertainty, communicate these as follows:
  - At the entry level, mention that the assessors identified aspects in which further data are needed; listing the most important of these is optional.
  - At the informed level, briefly list the options or requirements and differentiate formal requirements for applicants (i.e. linked to market authorisations) from other non‐statutory options outside EFSA's explicit remit, e.g. proposals for future research.

3.2. General guidance for assessors

Follow the guidance below for expressing uncertainties in scientific assessments. Apply it together with the specific guidance for each of the eight types of uncertainty expression, given in Section 3.3.

Assessors should make clear whether scientific conclusions relate to real world conditions and outcomes or to specific conditions and/or assumptions. When the conclusion is based on the result of a model or statistical analysis, remember to consider uncertainties not quantified within the model or analysis, including uncertainties about the assumptions of the model or analysis and any extrapolation from it to the real quantity or question of interest. This should be carried out as part of characterising overall uncertainty, which is one of the steps in the Uncertainty Analysis GD.

Do not express more precision than is justified by the scientific assessment. In particular, do not express quantities or probabilities with more significant figures than is justified – usually one or two.

When using probability to express uncertainty, always express it numerically. If you also provide a verbal expression (e.g. when using the APS), then report the numeric expression first and the verbal expression second, as this has been shown to improve the consistency of interpretation by recipients. If you cannot provide a numeric expression of probability, state explicitly that the probability cannot be assessed.

When estimating the probability, frequency or incidence of outcomes, express them as frequencies (e.g. 10 per 1,000), as this makes it easier for people to understand and use them. This is especially true if probabilities are conditional and the audience needs to infer unconditional probabilities for outcomes of interest to them (e.g. infer the probability that they have a disease, given a positive test, from three different pieces of information: the probability of a positive test for someone with the disease, the probability of a positive test for someone without it, and the base rate of the disease in the population), as it has been shown that people can do this more reliably using frequencies. Using frequencies to quantify variability also reduces the risk of confusion that can arise if probability is used to quantify both variability and uncertainty.
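The disease‐test inference can be made concrete by converting the conditional probabilities into expected counts in a hypothetical population, as in the minimal Python sketch below. All input values (1% base rate, 90% probability of a positive test with the disease, 9% without it) are illustrative assumptions, not data from any study or assessment.

```python
def natural_frequencies(base_rate, p_pos_if_disease, p_pos_if_healthy, population=1_000):
    """Convert conditional probabilities into expected counts in a
    hypothetical population, the format people handle more reliably."""
    diseased = base_rate * population
    true_positives = p_pos_if_disease * diseased
    false_positives = p_pos_if_healthy * (population - diseased)
    return true_positives, false_positives


# Hypothetical test: 1% base rate, 90% detection rate, 9% false-positive rate
tp, fp = natural_frequencies(0.01, 0.90, 0.09)
print(f"Of 1,000 people, {tp:.0f} with the disease and {fp:.0f} without it test positive, "
      f"so {tp:.0f} of the {tp + fp:.0f} people who test positive have the disease.")
# Of 1,000 people, 9 with the disease and 89 without it test positive, so 9 of the 98 people who test positive have the disease.
```

Stated this way, the answer (9 of 98, i.e. below 10%) can be read off directly instead of being derived from three conditional probabilities.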

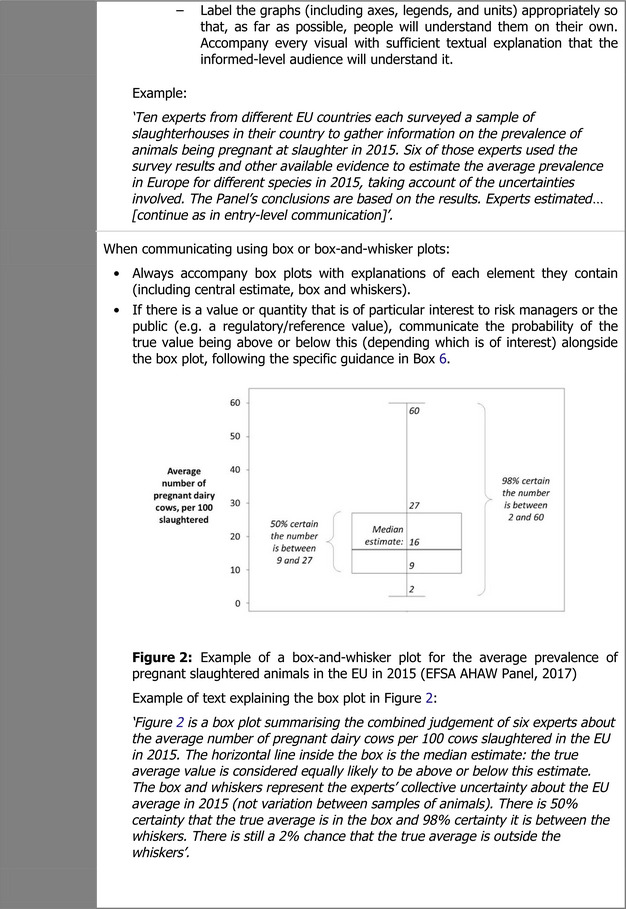

When reporting a range for a quantitative estimate, always accompany it with a precise or approximate probability for that range. Also indicate which values within the range are more likely if this might be important for understanding and decision‐making. Presenting a central estimate as well as the range will indicate whether the distribution is skewed to one side. If more detail on the shape of the distribution is needed, consider including quartiles or a box plot (see Box 8).

When giving an approximate probability, always provide a lower and upper bound (e.g. 66–90%). When the probability refers to a range of values of a quantity of interest, give both a lower and upper bound for the range when both are available from the assessment (e.g. a confidence interval from a statistical analysis). When using assessment methods that produce one‐sided bounds, e.g. probability bounds analysis, consider also providing a probability for a second value of the quantity, to reduce the tendency for people to anchor on a single value if only one is mentioned. For example, if presenting a probability for exposure exceeding a safe dose, consider also assessing and presenting the probability of exceeding a specified multiple of the safe dose (e.g. 2× or 5×). Similarly, if presenting a probability for any (≥ 1) infected animals entering the EU, consider also assessing the probability of the true number being greater than a higher number of interest.
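Where the uncertainty about exposure is available as samples (e.g. from a Monte Carlo analysis), probabilities for exceeding several multiples of the safe dose can be computed together, as in the minimal Python sketch below. The lognormal samples, the safe dose of 1 (arbitrary units) and the multiples 1×, 2× and 5× are illustrative assumptions only.

```python
import random


def exceedance_probabilities(samples, safe_dose, multiples=(1, 2, 5)):
    """Probability (share of uncertainty samples) that the uncertain
    quantity exceeds each specified multiple of the safe dose."""
    n = len(samples)
    return {m: sum(s > m * safe_dose for s in samples) / n for m in multiples}


# Hypothetical uncertainty about a population's average exposure (arbitrary units)
rng = random.Random(7)
samples = [rng.lognormvariate(0.0, 0.8) for _ in range(20_000)]
for multiple, p in exceedance_probabilities(samples, safe_dose=1.0).items():
    print(f"P(exposure > {multiple}x safe dose) = {p:.0%}")
```

Reporting the second and third values gives the audience a sense of how quickly the probability falls off above the safe dose, instead of anchoring on the first figure alone.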

A clear and concise summary of the outcome of the uncertainty analysis should be included in the abstract of the scientific assessment where it is easily accessible to communicators. This should include the overall impact of uncertainty on the conclusions and the major sources of uncertainty. More detailed information on the uncertainty analysis should be provided in the summary and main text of the assessment report.

3.3. Specific guidance

This section contains specific guidance for communicating different uncertainty expressions as listed in Table 2 to different audiences. Communicators should use the specific guidance for the entry and informed levels together with the general guidance in Section 3.1. The specific guidance at the technical level provided in Boxes 1–9 refers to tasks for assessors to support the communication process, and should be applied in conjunction with the general guidance in Section 3.2 of this document and in the Uncertainty Analysis GD. Assessors should use this guidance to inform how information is reported in their assessments.

Communicators should take the following steps for each communication:

1. Examine the scientific output to identify proposed messages and supporting points for communication according to the EFSA Risk Communication Handbook.
2. Consult the appropriate assessor (scientific officer, head of unit, working group chair, etc.) to confirm the selection and validate the accuracy of messages in line with the standard operating procedure (EFSA, 2015).
3. For messages that refer to scientific conclusions, either ask the assessor to fill in the template in Table 3 or complete it yourself with their input. Answer all the questions in the template for each of these messages.
4. The completed template tells you which types of uncertainty expression are associated with each message. It also directs you to the specific guidance for communicating each type of expression (Boxes 1–9). One or more types of uncertainty expression might be used in a single message (e.g. description of some sources of uncertainty and an approximate probability for the conclusion). Always consider them together.
5. Craft entry‐level and informed‐level communications for each message, applying both the general guidance in Section 3.1 and the relevant specific guidance from Boxes 1–9.
6. Integrate the crafted communications into a coherent narrative in the chosen format (e.g. news story). Material for the entry and informed levels may be used in a single communication, e.g. top‐line messages followed by more details lower down. If published separately, link the entry level to more detailed material (e.g. FAQs, the scientific output).

Box 1: Guidance for communicating assessments using standardised procedures

Box 2: Guidance for communicating a description of sources of uncertainty

Box 3: Guidance for communicating qualitative descriptions of the direction and/or degree of uncertainty using words or symbols(a)

Box 4: Guidance for communicating inconclusive assessments

Box 5: Guidance for communicating unqualified conclusions with no expression of uncertainty

Box 6: Guidance for communicating a precise probability

Box 7: Guidance for communicating an approximate probability

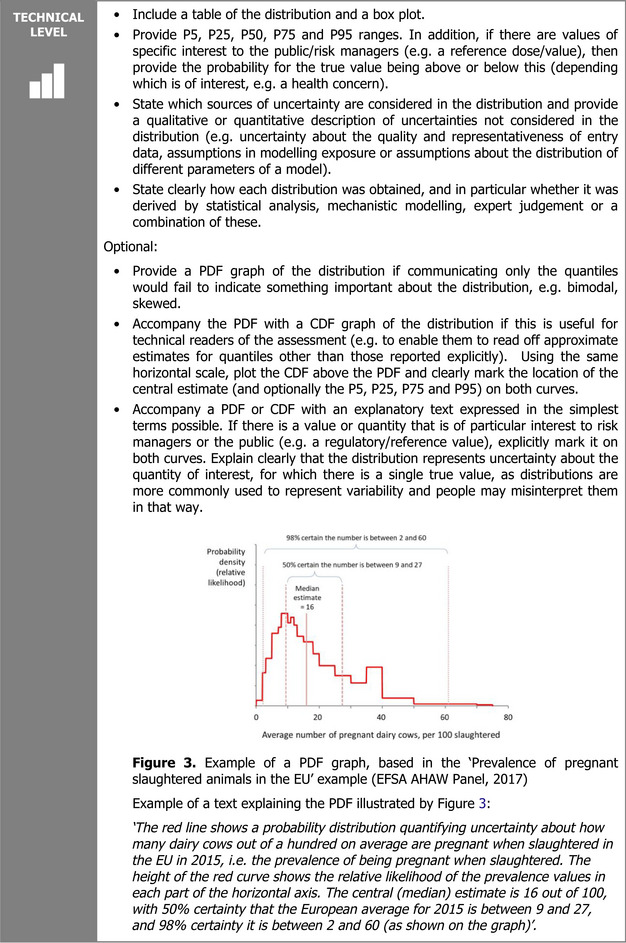

Box 8: Guidance for communicating a probability distribution

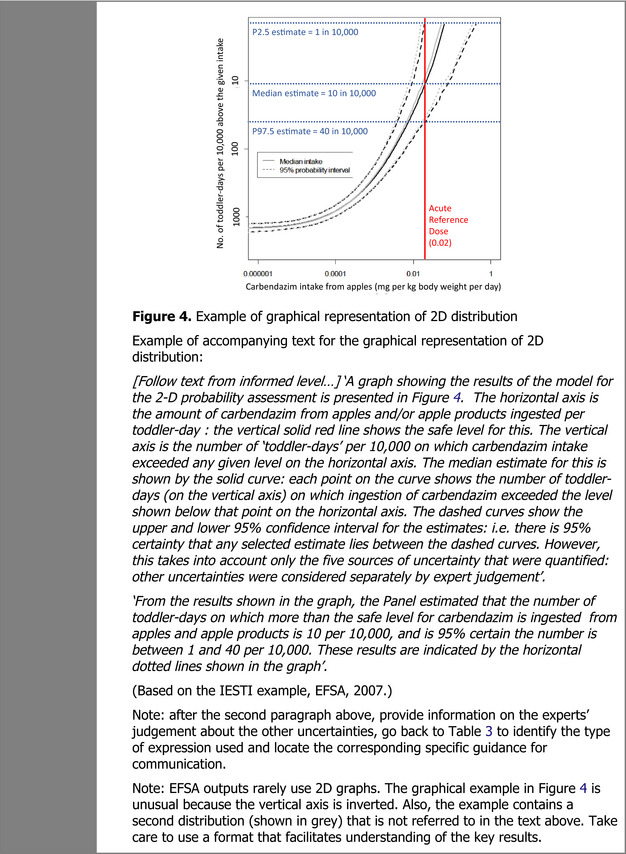

Box 9: Guidance for communicating a two‐dimensional probability distribution

4. Sources of evidence for the guidance

This section describes the key sources of evidence on which the guidance in Section 3 is based. These evidence sources include an expert analysis of selected academic literature including direct author recommendations, quotes and interpretation (Section 4.1), the results of research commissioned or carried out by EFSA to inform the development of this Uncertainty Communication GD (Section 4.2) and extracts from frameworks or guidance documents similar in scope and purpose to this Uncertainty Communication GD from other national and international advisory bodies (Section 4.3). It also includes a description of the concrete EFSA examples, which were used to formulate additional guidance with expert judgement and reasoning (Section 4.4).

For transparency, Appendix B provides a comprehensive overview of each literature source and/or the reasoning underpinning each point of guidance described in Section 3.

This section concludes that the available evidence does not address every aspect of communicating uncertainty and therefore feeds directly into the ‘Further research needs’ described in Section 5.

4.1. Scientific literature

An expert analysis of key papers on communicating uncertainty follows below. The criteria used to select the key papers are described first.

4.1.1. Scope of literature search

An initial list of 37 references was drafted based on:

- working group members’ knowledge of the literature;
- references included in a major recent review paper on risk and uncertainty communication (Spiegelhalter, 2017);
- references already available at EFSA from different sources.

This list was used for a literature search to identify current publications that reported primary research on communicating uncertainties. However, owing to the ubiquitous appearance of the search terms ‘communication’ and ‘uncertainty’ in scientific literature, the usual search strategies were not specific enough to narrow the results to a manageable number.

A list of criteria for including papers in the literature review was drafted collectively by the working group. Studies were included if they met all of the following criteria:

- they provide guidance on how uncertainty should be communicated (best practice);
- they include experimental data or are literature reviews on uncertainty communication;
- they provide recommendations for uncertainty communication;
- they cover at least one of the possible expressions of uncertainty from the Uncertainty Analysis GD.

The relevance of the subject area was also considered, with studies on food safety/public health/environment being preferred.

Studies essentially arguing about the importance of communicating uncertainty were excluded, as they were considered to be of low relevance for the practical needs of the Communication GD.

Application of these criteria by the working group resulted in 25 of the 37 papers proposed initially being considered in the first round of the literature study. These papers were read in full and further relevant references were identified from them. This list of 25 papers was then cross‐checked against a review paper by van der Bles (2018), which is currently in preparation and which also provided inspiration for structuring our literature review and recommendations. Additional references were suggested during the public consultation. In total, 57 papers were included in the literature review.

4.1.2. Relevance of the scientific literature included

This review does not include a large set of risk communication studies because they examined how best to communicate risks (e.g. the risk of having cancer given a positive test result (Gigerenzer and Hoffrage, 1995)) and are less relevant to the communication of uncertainty. Although both risk and uncertainty are often expressed in terms of probability, probabilities for risk either quantify the frequency of an adverse outcome in a population, or are frequentist estimates of the probability of the outcome for an individual member of the population. Probabilities for uncertainty quantify the likelihood of single events, for example the likelihood that the true frequency of the adverse outcome is within a specified interval. EFSA's Uncertainty Analysis GD uses subjective probabilities for this purpose. There is considerable variation among the studies included in this review as to how they dealt with uncertainty and it is often not explicitly stated whether the authors focused on how to communicate frequentist or Bayesian expressions of uncertainty. There seemed to be a lack of research that focused on the communication of subjective probabilities, which is the type of uncertainty expression used in assessments following EFSA's Uncertainty Analysis GD.

However, the distinction between relative and absolute risks is important for both risk and uncertainty communication (Gigerenzer et al., 2007). Although relative risk information is widely used for communicating risks, it is not an effective strategy when the target audience consists of laypeople (Siegrist and Hartmann, 2017). Presenting relative risk information produces larger and more persuasive numbers than expressing the same information in absolute terms. A simple example demonstrates this: if a given treatment reduces the risk of an adverse outcome from 8% to 4%, the risk decreases by 50% in relative terms but by only 4 percentage points in absolute terms. Research suggests that relative risk information increases the likelihood that people misinterpret it and display a biased reaction (Malenka et al., 1993). Providing both relative and absolute risk information is not advisable, because experiments suggest that relative risk reduction information influences people's preferences even when absolute risk reduction information is also presented (Gyrd‐Hansen et al., 2003). These results imply that absolute rather than relative numbers should always be used for the communication of risks and uncertainties.
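The arithmetic behind the 8% to 4% example can be made explicit with a short Python sketch. The function name `risk_reduction` and its output keys are illustrative choices of our own; risks are entered as proportions, and the absolute reduction is reported in percentage points to avoid the ambiguity of simply writing ‘4%’.

```python
def risk_reduction(baseline: float, treated: float) -> dict:
    """Compare absolute and relative reductions for two risks given as proportions (0 to 1)."""
    absolute = baseline - treated    # absolute risk reduction, as a proportion
    relative = absolute / baseline   # relative risk reduction, as a fraction of the baseline risk
    return {
        "absolute_pp": round(absolute * 100, 6),   # expressed in percentage points
        "relative_pct": round(relative * 100, 6),  # expressed as percent of the baseline risk
    }


result = risk_reduction(0.08, 0.04)
print(result)  # absolute reduction of 4 percentage points, relative reduction of 50%
```

The same change yields a number more than ten times larger when expressed in relative terms, which illustrates why relative figures tend to appear more persuasive.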

A body of literature recommends communicating with frequencies rather than probabilities (e.g. Gigerenzer and Hoffrage, 1995; Gigerenzer et al., 2007). However, many of the studies supporting this recommendation involved tasks that required subjects to make inferences by combining conditional probabilities with base rates. EFSA assessments and communications generally provide direct estimates of the outcomes of interest to the audience, and hence do not require them to deduce these from conditional probabilities. Moreover, applying EFSA's guidance on uncertainty analysis results not in probabilities representing frequency but in subjective probabilities expressing uncertainty about single outcomes or events, and it is unclear whether these would be better understood if expressed as frequencies. A study by Joslyn and Nichols (2009), in which the tasks did not require conditional inferences, found that subjects understood weather forecast information better when it was expressed in a probability format rather than a frequency format. Furthermore, expressing single‐event probabilities as frequencies would require framing each assessment in terms of a hypothetical population or reference class of similar cases (e.g. other chemical risks with evidence and risk similar to the one under assessment), which increases the complexity of the communication and therefore poses different challenges to understanding.
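To illustrate the extra framing that a frequency format requires, the Python sketch below renders the same subjective probability in both formats. The function names, the wording templates and the reference class of 100 ‘similar cases’ are hypothetical choices for illustration only; they are not formats prescribed by EFSA's guidance.

```python
def probability_format(p: float, event: str) -> str:
    """Single-event probability, the form of expression produced by the uncertainty analysis."""
    return f"{p:.0%} probability that {event}"


def frequency_format(p: float, event: str, reference_class: str, n: int = 100) -> str:
    """The same judgement re-expressed as a frequency over a hypothetical reference class."""
    k = round(p * n)  # number of cases out of n, rounded to a whole number
    return f"in about {k} out of {n} {reference_class}, {event}"


event = "the estimate is below the threshold"  # hypothetical event
print(probability_format(0.7, event))
print(frequency_format(0.7, event, "similar assessments"))
```

The frequency version only makes sense once the reader accepts the invented reference class (‘similar assessments’), which is the additional complexity noted above.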

Most of the reviewed studies presented uncertain information to subjects and then asked them to express their response to the information. The form of response that was requested varied between studies, but in nearly every study it was explicit or implicit that the requested response referred to the subject's own interpretation or perception after receiving the information, and/or what actions they might take or wish to be taken in response to it. Only one study (Ibrekk and Morgan, 1987) was identified in which the participants were explicitly asked ‘what the forecast says’. In some further studies (e.g. Edwards et al., 2012), it was ambiguous whether the subjects were being asked for their understanding (comprehension) of the communication or for their own opinion, having seen the communication. The former is more relevant for this guidance as its purpose is to ensure that EFSA's communications are understood (see Section 1.6.1). This varying relevance has been taken into account when using the findings from the literature (reviewed below) to inform this Guidance (Section 3).

Whereas many of the selected studies investigated the communication of uncertainty expressed as a range for a quantity of interest, only a few of them accompanied the ranges with confidence levels or probabilities.

The subjects in the reviewed studies were mostly selected to represent the common ‘layperson’ and were usually convenience samples chosen for the experimental setting. Students formed the largest pool of subjects, followed by people recruited in shopping malls, in parks and at cinema exits, via advertisements on university networks or campuses, via the Decision Research web panel subject pool, and/or via Amazon Mechanical Turk.

Only four studies (Patt and Dessai, 2005; Wardekker et al., 2008; Pappenberger et al., 2013; Beck et al., 2016) included as experimental subjects the clearly identifiable target audiences with whom EFSA interacts on a regular basis, namely decision‐makers, policy advisers and stakeholder representative groups such as NGOs, consumer groups or industry associations.

4.1.3. General findings

In a study of climate change, Visschers (2018) found that respondents distinguished between different types of uncertainty: ambiguity in research, measurement uncertainty and uncertainty about future impacts. Similarly, Wardekker et al. (2008) reported a preference among experts and users of uncertainty information at the science–policy interface for communication of the different types and sources of uncertainty. Together, these results indicate that communicating the different sources of uncertainty alongside the aggregated uncertainty might be welcomed.

4.1.4. Scientific literature contributing to the specific guidance

The scientific literature that contributes to the specific guidance in Section 3.3 is sorted by the expressions of uncertainty that may be produced when following the EFSA Uncertainty Analysis GD.

4.1.4.1. Ranges

Ranges can refer to a quantity of interest or to a probability used to express uncertainty (Section 2). Most of the literature reviewed studied ranges for a quantity of interest (e.g. the 95th percentile of a population), sometimes accompanied by a probability for the range. Some papers studied communication of ranges for probabilities describing uncertainty on the occurrence of an event. The parameters examined in the studies were diverse and are reviewed below in six categories:

influence on risk perception,3

effectiveness of communication leading to accurate understanding of the uncertainty information,

impact on decision‐making (of forms of uncertainty communication, use of uncertainty information in taking decisions, ambiguity aversion,4 ability to take a decision),

effects on emotions (e.g. worry),

role of source credibility on risk perception,

focus on the end values of the range.

Although not all of these effects relate to the specific needs of this Uncertainty Communication GD, they may be valuable for deriving further recommendations for uncertainty communication.

The influence of uncertainty communication on risk perceptions is by far the most frequent parameter investigated, followed by effectiveness.

Influence on risk perception

Kuhn (2000), Dieckmann et al. (2010) and Han et al. (2011) found no significant difference in the magnitude of risk perception associated with communication of a probability range (i.e. a probability with associated uncertainty expressed by giving a range: x% to y%) as compared with communicating a precise probability (i.e. a point estimate for the probability without expressing associated uncertainty: x%). Han et al. (2011) provided both uncertainty information and comparative risk information. They found that uncertainty information moderated the increase in risk perception that was observed when comparative risk information was provided in addition to individual risk information (i.e. the individual risk compared to the average risk in a population). For the hypothetical situation of a terrorist attack, tested by Dieckmann et al. (2010), there was no difference in perceived harm with or without uncertainty information.

In the experiments of Dieckmann et al. (2010), uncertainty information was presented as scenarios that included probabilities and narrative information, the latter adding context and an explanatory story in addition to describing the logic and evidence used to generate the assessment. Risk perception increased when the probability increased, and also when the narrative was provided. The effect of increased probability was the same whether it was presented as a point probability or a range, and whether or not it was accompanied by narrative information.

Johnson and Slovic (1995) reported an increase in risk perception when uncertainty was communicated as probability ranges rather than as point estimates. More generally, low risk estimates were deemed more ‘preliminary’, whether or not uncertainty was communicated. In Kuhn (2000), when no mention was made of uncertainty, or when the ends of the probability range were explained as the conclusions of two different sources with opposing biases, people's environmental attitudes predicted their risk perception. However, environmental attitudes predicted risk perception less well when the probability range was centred on the best estimate. The average level of risk perception did not differ between point estimates and uncertainty communication. Viscusi et al. (1991) emphasised that the number of people who are influenced by the most adverse or the most favourable uncertainty estimates is quite constant.

Overall, although influence on risk perception is relevant to risk management, the main consideration for this Communication GD is the impact of uncertainty information on accurate understanding.

Effectiveness in terms of accurate understanding of uncertainty information

Dieckmann et al. (2015) presented subjects with ranges for various quantities, but no information on the underlying distribution. Subjects were then asked to choose between three options for the underlying distribution: uniform, central values more likely or extremes more likely. Distributional perceptions were similar whether the range referred to a quantity (e.g. temperature) or to the probability of an event occurring. Without clues about the form of the distribution, people tended, in relatively balanced proportions, either to consider all the values in a range as equally probable (uniform distribution) or to perceive the central values as more likely (particularly the more numerate subjects). A small percentage perceived a U‐shaped distribution. Providing a best estimate and a confidence level with the range reduced the perceptions of both uniform and U‐shaped distributions. Further studies by the same team (Dieckmann et al., 2017) confirmed that perceptions of the distribution underlying numerical ranges were affected by the motivations and worldviews of the recipients. This ‘motivated reasoning effect’ remained after controlling for numeracy and fluid intelligence, but was attenuated (in one instance described as ‘eliminated’) when the correct interpretation of the distribution was made clear through the use of a graph. In other words, people interpret uncertainty information in a motivated way when the form of the distribution is left open to them, but not when the correct distribution is made clear.
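Why a bare range leaves room for such different readings can be shown numerically. The Python sketch below takes a range rescaled to the unit interval and computes, for each of the three shapes offered to subjects, the probability that the true value lies in the central half of the range. The specific density forms (a symmetric triangular density for ‘central values more likely’ and an arcsine density, i.e. Beta(0.5, 0.5), for ‘extremes more likely’) are our own illustrative assumptions, not the distributions used by Dieckmann et al.

```python
import math

# Cumulative distribution functions on [0, 1]; a reported range [low, high]
# is assumed to have been rescaled to [0, 1] before these are applied.


def cdf_uniform(x: float) -> float:
    """All values in the range equally likely."""
    return x


def cdf_central(x: float) -> float:
    """Symmetric triangular density peaking at the midpoint: central values more likely."""
    return 2 * x * x if x <= 0.5 else 1 - 2 * (1 - x) ** 2


def cdf_u_shaped(x: float) -> float:
    """Arcsine density, Beta(0.5, 0.5): values near the ends more likely."""
    return (2 / math.pi) * math.asin(math.sqrt(x))


def middle_half_mass(cdf) -> float:
    """Probability that the value lies in the central half of the range."""
    return cdf(0.75) - cdf(0.25)


shapes = [
    ("uniform", cdf_uniform),
    ("central values more likely", cdf_central),
    ("extremes more likely", cdf_u_shaped),
]
for name, cdf in shapes:
    print(f"{name}: {middle_half_mass(cdf):.2f}")
```

Under these assumptions the same range implies a probability of 0.50, 0.75 or about 0.33 for the central half, which is consistent with the finding that adding a best estimate and a confidence level, or a graph of the distribution, narrows the scope for divergent interpretations.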

Impact on decision‐making

Participants in the experiments of Dieckmann et al. (2010) thought that at higher levels of probability the probability range was more useful for decision‐making than the point probability, whereas at a lower probability the point estimate was more useful. However, the magnitude of the difference was not provided and the authors stated that the reasons for it were unclear.

Kuhn (1997) showed that for the low to high range condition (e.g. 10–30%), vague options are more likely to be preferred when decision problems are framed negatively (i.e. losses) than when they are framed positively (i.e. gains). Morton et al. (2011) showed that communicating a probability range combined with a positive framing (the possibility of losses not materialising) increased individuals' intentions to behave in an environmentally friendly way to limit the effects of climate change.

Hohle and Teigen (2018) studied the impact of probability statements specifying only a lower or an upper bound. They compared how research participants perceived climate‐related forecasts communicated with lower‐bound (‘more than x% chance’) versus upper‐bound (‘less than x% chance’) probability ranges. They found that ‘more than’ statements direct listeners’ attention towards the possible occurrence of the event, whereas ‘less than’ statements direct attention towards its non‐occurrence, accompanied by negative explanations of why the event might not happen. Furthermore, most people estimated the probability of the event to be rather close to the stated bound, even when the range allowed by that bound was very large (e.g. 30–100% for ‘over 30%’). A second finding was that bounds were perceived as indicating the forecaster's belief in either an increasing (‘more than’) or a decreasing (‘less than’) trend over time (Teigen, 2008; Hohle and Teigen, 2018). Similarly, Hoorens and Bruckmüller (2015) found a ‘more–less’ asymmetry, showing that ‘more than’ statements seem to be easier to process cognitively than ‘less than’ statements. For this reason, people use ‘more than’ statements more frequently, agree with them more, believe them more readily and like them better.