Abstract

Goosegrass is a problematic weed species in Florida vegetable plasticulture production. To reduce costs associated with goosegrass control, a post-emergence precision applicator is under development for use atop the planting beds. To facilitate in situ goosegrass detection and spraying, the tiny version of You Only Look Once Version 3 (YOLOv3-tiny) was evaluated as a potential detector. Two annotation techniques were evaluated: (1) annotation of the entire plant (EP) and (2) annotation of partial sections of the leaf blade (LB). For goosegrass detection in strawberry, the F-score was 0.75 and 0.85 for the EP- and LB-derived networks, respectively. For goosegrass detection in tomato, the F-score was 0.56 and 0.65 for the EP- and LB-derived networks, respectively. Compared with the EP-derived networks, the LB-derived networks increased recall at the cost of precision. Within the context of production and precision spraying, the LB annotation method gave superior results, ensuring that more targets were sprayed at the cost of some over-spraying of false targets. The developed network provides online, real-time, in situ detection capability for weed management field applications such as precision spraying and autonomous scouts.

Subject terms: Ecological modelling, Plant sciences

Introduction

Goosegrass [Eleusine indica (L.) Gaertn.] is an invasive and problematic weed with a nearly worldwide distribution, including North and South America, Africa, Europe, Australia, and Southeast Asia1. Goosegrass infests many agroecosystems, including turfgrass2,3, rice4, and fruiting vegetable crops5. In Florida, goosegrass is a problematic weed in many major horticultural crops, including strawberry [Fragaria × ananassa (Weston) Duchesne ex Rozier (pro sp.) [chiloensis × virginiana]], bell pepper (Capsicum annuum L.), tomato (Solanum lycopersicum L.), and cucurbit (Cucurbitaceae) production. While goosegrass interference has not been extensively studied in horticultural crops, it has been shown to reduce cotton (Gossypium hirsutum L.) yield in the field6 and to interfere with greenhouse-grown corn (Zea mays L.)7.

In Florida, many broadleaf horticultural crops are produced using a plasticulture system. This system includes raised beds covered in plastic mulch, with drip irrigation installed to provide nutrients and moisture. Weeds within this system occur primarily in the planting holes or between the rows, except for purple nutsedge (Cyperus rotundus L.) and yellow nutsedge (Cyperus esculentus L.), which penetrate the plastic mulch and emerge through it.

Within vegetable horticulture, the prevalent post-emergence options for goosegrass control are hand weeding and herbicides. Broad-spectrum herbicides such as paraquat and glyphosate are widely used for pre-plant burndown and within row middles. Consequently, both goosegrass and American black nightshade (Solanum americanum Mill.) have developed paraquat resistance8,9, and ragweed parthenium (Parthenium hysterophorus L.) has developed glyphosate resistance10. For weed control atop the bed during the cropping cycle, WSSA Group 1 herbicides are the most common post-emergence chemical control option. Group 1 herbicides are also increasingly used in herbicide mixtures for grass control in row middles, depending on weed pressure and the resistance issues faced.

Implementing precision technology in spraying equipment is a viable option to reduce production costs associated with weed management. Goosegrass and other grass species are excellent targets for precision application of Group 1 herbicides in a variety of broadleaf crops. A prototype precision sprayer was developed to simultaneously detect and spray weeds in plasticulture production in Florida. Briefly, the system was a modified plot sprayer with a digital camera sensor, a controller running an artificial intelligence detector, and solenoid-controlled nozzles. The preferred detector for this system is a convolutional neural network.

Machine vision-based weed detection is typically conducted using either multispectral/hyperspectral or RGB imagery, the latter being more desirable in terms of economic cost and practical adoption by producers11. Recent advances in graphics processing units permit training and deploying deep convolutional neural networks as detectors12. Deep convolutional neural network frameworks have been reviewed elsewhere12,13. Briefly, these networks take inspiration from the visual cortex and contain layers for feature extraction, convolution, pooling, activation functions, and class labeling14. They rely on pattern recognition via filters within the convolutional layers for detection and classification15. Convolutional neural networks for weed detection have been employed in several crops, including turfgrass16,17, wheat18, and strawberry19. For horticultural plasticulture row middles, a convolutional neural network has been developed to detect grasses among broadleaves and sedges20. Within broader agriculture, deep neural network applications include strawberry yield prediction21, sweet pepper (Capsicum annuum L.) and cantaloupe (Cucumis melo var. cantalupo Ser.) fruit detection22, and detection of tomato pests and diseases23.
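To make the filter-and-pool idea concrete, the following is a minimal, dependency-free Python sketch of one convolution, ReLU activation, and 2 × 2 max-pooling step. The toy image and the hand-set vertical-edge filter are illustrative assumptions; in a trained network such as YOLOv3-tiny, filter weights are learned from annotated images rather than specified by hand.

```python
# Illustrative only: a hand-written vertical-edge filter applied to a toy
# 6 x 6 "image" (left half dark, right half bright). Nothing here is taken
# from YOLOv3-tiny; real networks learn their filter weights in training.

def conv2d_valid(image, kernel):
    """2D cross-correlation with no padding and stride 1 (as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + r][j + c] * kernel[r][c]
                for r in range(kh) for c in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(fmap):
    """Element-wise activation: negative responses are set to zero."""
    return [[max(0, v) for v in row] for row in fmap]

def max_pool(fmap, size=2):
    """Non-overlapping max pooling (stride equal to the window size)."""
    return [
        [
            max(fmap[i + r][j + c] for r in range(size) for c in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
vertical_edge = [[-1, 0, 1]] * 3

features = relu(conv2d_valid(image, vertical_edge))
pooled = max_pool(features)
print(features[0])  # strong response only where dark meets bright
print(pooled)
```

Reversing the filter sign (`[[1, 0, -1]] * 3`) yields only negative responses at this edge, which the ReLU step sets to zero; this is one way an activation function suppresses features a filter is not tuned to.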

With the widespread registration of Group 1 herbicides in broadleaf crops and the wide distribution of goosegrass, a successful detection network would have far-reaching implications for conventional horticulture. Developing a multi-crop, within-crop grass detection network poses several challenges, including the availability of training images, the difficulty of collecting images given the patchy distribution of weeds, and the diverse backgrounds of several crops as the negative space. Additionally, the within-crop growth habit of goosegrass, and of grassy weeds generally, complicates bounding box-based network training. Goosegrass has a tufted habit with stems that are erect to spreading and up to 85 cm tall, and leaves that are 5 cm to 35 cm long and 3 mm to 5 mm wide24. In strawberry production, goosegrass leaves have been observed to penetrate through the crop canopy, to grow prostrate along the plastic, or to grow in planting holes where strawberry plants have died. In tomato production, goosegrass plants typically grow at the base of the tomato plants, which are vertically staked for fresh-market production. The study objectives were to (1) develop a network with utility in multiple broadleaf crops, starting with strawberry and tomato plasticulture, (2) evaluate small annotation boxes placed along the leaf-blade length for goosegrass detection compared with boxes encompassing the entire plant habit, and (3) evaluate a piecemeal oversampling technique.

Results

For strawberry production, the entire plant annotation method (EP) (precision = 0.93; recall = 0.88; F-score = 0.90; accuracy = 0.82) produced a better overall YOLOv3-tiny training fit than the leaf-blade annotation method (LB) (precision = 0.39; recall = 0.55; F-score = 0.46; accuracy = 0.30) (Table 1). Training also converged in fewer iterations for EP than for LB (data not shown). This was expected, since EP produced fewer and larger bounding boxes with a static location. Labeling goosegrass leaf blades with narrow squares resulted in “ground truth fluidity”, with consequently longer training time and reduced fit.

Table 1.

Training accuracy for network assessment of two convolutional neural networks trained to detect goosegrass developed in Balm, FL, USA in 2018a.

| Measure | Leaf-blade annotation | Entire plant annotation |
|---|---|---|
| True positives | 1495 | 77 |
| False positives | 2367 | 6 |
| False negatives | 1202 | 11 |
| Average IoU (%) | 25.10 | 76.12 |

aThe detection threshold was 0.25, i.e. 25% intersection over union (IoU) between the predicted and ground-truth bounding boxes.

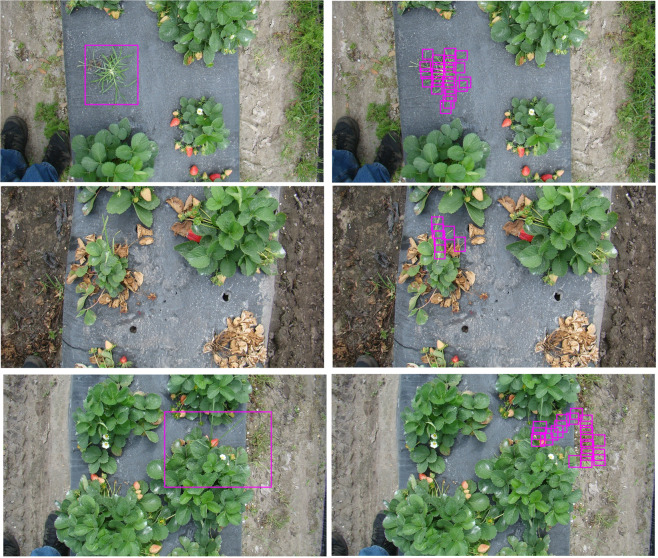

While the EP network appeared more successful in training, it performed worse in testing. For goosegrass detection in strawberry, the LB network (precision = 0.87; recall = 0.84; F-score = 0.85; accuracy = 0.74) outperformed the EP network (precision = 0.93; recall = 0.62; F-score = 0.75; accuracy = 0.60) in overall F-score and accuracy (Table 2). The EP method demonstrated high precision but tended to miss targets (Fig. 1). The annotation method had no apparent effect on iteration time (Table 3). Compared with the EP network, the LB network increased recall substantially at the expense of some precision, resulting in the highest F-score.

Table 2.

Pooled relevant binary classification categories and neural network accuracy measures for goosegrass (Eleusine indica) detection in tomato (Solanum lycopersicum) and strawberry (Fragaria × ananassa) using two annotation methods on digital photography acquired in Central Florida, USA, in 2018 and 2019a.

| Measure | Strawberry EPb | Strawberry LBc | Tomato EP | Tomato LB |
|---|---|---|---|---|
| True positives | 43 | 58 | 10 | 17 |
| False positives | 3 | 9 | 3 | 12 |
| False negatives | 26 | 11 | 13 | 6 |

aThe neural network was the tiny version of the state-of-the-art object detection convolutional neural network You Only Look Once Version 3 (Redmon and Farhadi 2018).

bEP = Entire plant annotation method. This refers to using a single, large square box to identify goosegrass within digital images.

cLB = Leaf-blade annotation method. This refers to using multiple, small square boxes placed along leaf blades and inflorescence to identify goosegrass within digital images.

Figure 1.

Examples of YOLOv3-tiny network detection of goosegrass (Eleusine indica) growing in competition with strawberry (Fragaria × ananassa) using either entire plant (left) or leaf blade (right) annotation techniques in Central FL, USA in 2018.

Table 3.

Impact of annotation style on testing iteration time for goosegrass (Eleusine indica) detection in strawberry (Fragaria × ananassa) and tomato (Solanum lycopersicum) production using a convolutional neural network developed at Balm, FL, USA in 2018a.

| Annotation style | Crop | Mean iteration time (s image−1) | Sample size | Standard error | Confidence intervalb |
|---|---|---|---|---|---|
| LBb | Strawberry | 0.008067 | 62 | 0.000263 | 0.007542, 0.008593 |
| EPc | Strawberry | 0.008125 | 62 | 0.000250 | 0.007625, 0.008624 |
| LB | Tomato | 0.011399 | 47 | 0.003664 | 0.004027, 0.018771 |
| EP | Tomato | 0.007601 | 47 | 0.000328 | 0.006941, 0.008261 |

aThe neural network was the tiny version of the state-of-the-art object detection convolutional neural network You Only Look Once Version 3 (Redmon and Farhadi 2018).

bLB = Leaf-blade annotation method. This refers to using multiple, small square boxes placed along leaf blades and inflorescence to identify goosegrass within digital images.

cEP = Entire plant annotation method. This refers to using a single, large square box to identify goosegrass within digital images.
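Table 3 does not state the confidence level. The reported bounds are reproduced, to within rounding, by mean ± t × SE using a two-sided 95% Student's t critical value with n − 1 degrees of freedom, as the following Python sketch shows; this suggests, but does not confirm, that the intervals are 95% t-intervals. The critical values (about 1.99962 for 61 df and 2.01290 for 46 df) are hard-coded to keep the sketch dependency-free.

```python
# Two-sided 95% Student's t critical values, keyed by degrees of freedom.
# Assumption: the Table 3 intervals are mean +/- t * SE with df = n - 1.
T_975 = {61: 1.99962, 46: 2.01290}

def t_interval(mean, se, n):
    """Return (lower, upper) = mean -/+ t(0.975, n - 1) * SE."""
    half_width = T_975[n - 1] * se
    return mean - half_width, mean + half_width

# (annotation, crop, mean s per image, n, SE, reported interval) from Table 3
rows = [
    ("LB", "Strawberry", 0.008067, 62, 0.000263, (0.007542, 0.008593)),
    ("EP", "Strawberry", 0.008125, 62, 0.000250, (0.007625, 0.008624)),
    ("LB", "Tomato", 0.011399, 47, 0.003664, (0.004027, 0.018771)),
    ("EP", "Tomato", 0.007601, 47, 0.000328, (0.006941, 0.008261)),
]

for style, crop, mean, n, se, reported in rows:
    lo, hi = t_interval(mean, se, n)
    print(f"{style} {crop}: ({lo:.6f}, {hi:.6f}) vs reported {reported}")
```

The much wider interval for LB in tomato reflects its larger standard error, and it overlaps the EP interval for the same crop.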

For goosegrass detection in tomato, the EP network (precision = 0.77; recall = 0.43; F-score = 0.56; accuracy = 0.38) had higher precision but missed many plants (Table 2, Fig. 2). Comparatively, the LB network (precision = 0.59; recall = 0.74; F-score = 0.65; accuracy = 0.49) had lower precision but higher recall. The LB-derived network resulted in the highest overall F-score and accuracy for goosegrass detection in tomato.

Figure 2.

Examples of YOLOv3-tiny network detection of goosegrass (Eleusine indica) growing in competition with tomatoes (Solanum lycopersicum) using either entire plant (left) or leaf blade (right) annotation techniques in Balm, FL, USA in 2019.

Discussion

Detection in strawberry production demonstrated suitable identification of goosegrass. For images taken within tomato production, success was limited (Table 2). This was most likely because training images of goosegrass growing with the crop were available for strawberry production but were scarce for tomato (Table 4). Although attempts were made to match image acquisition angles and growth stages for goosegrass and tomato grown in isolation, the lack of additional training images showing the target and background together was likely detrimental. This could be due to the degree of actual overlap between the crop and weed in competition, an altered growth habit of the weed in competition, or natural variability in tomato growth habit, any of which may require additional training images to overcome.

For detection in both tomato and strawberry, the LB network outperformed the EP network in terms of recall, F-score, and accuracy. The EP networks had consistently higher precision but lower recall. This was likely a consequence of annotating the entire plant habit, which increased the variability between targets and reduced the number of potential targets for training. Similar precision-recall trade-offs in neural networks have been noted elsewhere, including in polyp detection25. For precision spraying, the EP network would miss many plants but would typically spray only goosegrass. Comparatively, the LB network would spray goosegrass more consistently, with some degree of over-spraying of undesirable targets. A DetectNet-based convolutional neural network for weed detection in occluded winter wheat achieved 87% precision and 46% recall18. Comparatively, a You Only Look Once-based object detection convolutional neural network for detecting weeds in winter wheat images achieved 76% precision and 60% recall26. Detection of Carolina geranium in strawberry using DetectNet and leaf-level annotation resulted in 99% precision and 78% recall19. The present results for goosegrass detection in strawberry were broadly comparable in overall accuracy to those of similar studies using convolutional neural networks alone, but detection in tomato may require further sampling.

Results indicate the potential for a unified network for use across multiple crops. Additional opportunities for multi-crop precision-spraying networks include Group 2 herbicides in vegetable plasticulture, Group 4 herbicides in cereals, and Groups 9 and 10 herbicides in the associated genetically modified crops. While the results of the piecemeal image methodology for tomato were limited, network desensitization for additional crops did provide some benefit. Existing goosegrass networks could be expanded to additional crops, which should reduce the number of training images needed.

Several grass species infest vegetable fields. Because the network did not classify tropical signalgrass [Urochloa distachya (L.) T.Q. Nguyen] as goosegrass (data not shown), additional classes, or grouping of multiple grass species into a single category20, are likely necessary. A similar network (YOLOv3) was trained to detect broadleaf species that were not previously part of the training dataset20, so this option may be feasible but requires further study. If this approach is pursued, care should be taken to avoid class imbalances, which negatively affect network performance27,28.

Network performance with limited datasets may be improved by combining convolutional neural networks with traditional machine learning systems such as support vector machines, as demonstrated with black nightshade (Solanum nigrum L.) and velvetleaf (Abutilon theophrasti Medik.) in tomato and cotton (Gossypium hirsutum L.)29. Integrating segmentation techniques with neural networks has previously been successful and may help improve precision and recall30,31. For example, a weed detection system using blob segmentation and a convolutional neural network achieved 89% weed detection accuracy32. For some weed management scenarios, resources such as DeepWeeds and CropDeep could be used for pre-training or supplementing datasets33,34. k-means pre-training may also improve detection; it improved the accuracy of an image classification convolutional neural network by 2%, to 93%35.

The developed networks demonstrated goosegrass detection in two broadleaf crops within vegetable plasticulture production. The LB annotation technique provided superior results for goosegrass detection in strawberry production (F-score = 0.85) compared with the EP annotation technique (F-score = 0.75). Supplementing the model mostly with images of tomato and goosegrass grown in isolation produced moderate results in tomato, where the LB annotation technique provided better detection (F-score = 0.65) than the EP technique (F-score = 0.56). The results demonstrate that the piecemeal technique alone does not provide adequate detection for field-level evaluation but may represent a suitable oversampling strategy for supplementing datasets. The developed network provides online, real-time, in situ detection capability for weed management field applications such as precision spraying and autonomous scouts.

Methods

Images were acquired with either a Sony digital camera (DSC-HX1, Sony Cyber-shot Digital Still Camera, Sony, Minato, Tokyo, Japan) or a Nikon digital camera (D3400 with AF-P DX NIKKOR 18-55 mm f/3.5-5.6G VR lens, Nikon Inc., Melville, NY). Training images were taken at the Gulf Coast Research and Education Center (GCREC) in Balm, FL (27.76°N, 82.22°W) and the Strawberry Growers Association (SGA) field site in Dover, FL (28.02°N, 82.23°W). Images were acquired from the perspective of the camera on the modified plot sprayer (T-30G-6, Bellspray, Inc., Opelousas, LA).

Training data (Training 1, Table 4) were acquired during the strawberry growing season at GCREC and SGA, in tandem with a previous study19. Strawberry plants were transplanted on October 10, 2017, and October 16, 2017, at GCREC and SGA, respectively. Because goosegrass emergence at GCREC was limited, a piecemeal solution was undertaken and several datasets were acquired. Training images of tomato and goosegrass were acquired separately within a plasticulture setting. A training dataset was developed for goosegrass competing with tomato (Training 2, Table 4). Goosegrass was also grown in isolation (Training 3, Table 4), with seedlings transplanted on March 12, 2018, and May 15, 2018. Images of tomato plants alone were collected for network desensitization (Training 4, Table 4). After preliminary testing, additional images of purple nutsedge at the 3-leaf stage, before blooming, were collected for network desensitization (Desensitization, Table 4).

Table 4.

Training, desensitization, and testing dataset specifications for developing a convolutional neural network to detect goosegrass (Eleusine indica) in Florida strawberry (Fragaria × ananassa) and tomato (Solanum lycopersicum) production.

| Dataset Type | Species | Image No. | Date | Location |
|---|---|---|---|---|
| Training 1 | Strawberry, goosegrass | 954 | 11 Dec 2017 to 23 Feb 2018 | GCREC, SGA |
| Training 2 | Tomato, goosegrass | 28 | 1 May 2018 to 8 May 2018 | GCREC |
| Training 3 | Goosegrass | 516 | 18 Mar 2018 to 29 May 2018 | GCREC |
| Training 4 | Tomato | 94 | 4 Oct 2018 | GCREC |
| Desensitization | Purple nutsedge | 138 | 11 Mar 2019 | GCREC |
| Testing 1 | Strawberry, goosegrass | 43 | 23 Feb 2018 | Commercial farms |
| Testing 2 | Strawberry, goosegrass | 7 | 17 Dec 2018 | GCREC |
| Testing 3 | Tomato, goosegrass | 60 | 10 Apr 2019 | GCREC |
| Testing 4 | Tomato, goosegrass | 27 | 4 Oct 2018 | GCREC |
| Testing 5 | Goosegrass | 12 | 14 Mar 2019 | GCREC |

Abbreviations: GCREC = Gulf Coast Research and Education Center at Balm, FL; SGA = Strawberry Growers Association field site in Dover, FL.

Five datasets were acquired for network testing to address each crop objective and provide sufficient samples. Images were collected at two commercial strawberry farms (27.93°N, 82.10°W, and 27.98°N, 82.10°W) (Testing 1, Table 4) and supplemented with images from GCREC (Testing 2, Table 4). Images were collected approximately 134 and 136 days after strawberry transplanting at the commercial farms and 60 days after transplanting at GCREC. For testing images in tomato production (Testing 3, Table 4), goosegrass seedlings (approximately 5-leaf stage) were transplanted into planting holes containing tomato plants that had been transplanted on March 4, 2019. The tomato data were supplemented with additional tomato images (Testing 4, Table 4) to evaluate the network’s ability to discriminate goosegrass from another grass species. A fifth dataset included goosegrass growing in isolation (Testing 5, Table 4).

The image resolution of the Nikon digital camera was 4000 × 3000 pixels. Nikon images were resized to 1280 × 853 pixels and cropped to 1280 × 720 pixels (720p) using IrfanView (Version 4.50, Irfan Skiljan, Jajce, Bosnia). The Sony digital camera image resolution was 1920 × 1080 pixels, and these images were resized to 720p. Training images were annotated in two ways using custom software compiled with Lazarus (https://www.lazarus-ide.org/). The EP annotation method used a single bounding box to encompass the entire plant habit. The LB annotation method used smaller bounding boxes along the leaf blade to reduce the overall variability of the target, an approach previously used to improve detection by restricting annotation to individual Carolina geranium (Geranium carolinianum L.) leaves19. Because of goosegrass leaf shape and the range of possible leaf angles, a square bounding box drawn around an entire leaf would include substantial background noise. Instead, multiple small square bounding boxes, approximately as wide as the leaf, were placed along the length of each leaf. Examples of each method, with the corresponding bounding box output, are shown in Figs. 1 and 2. Bounding box annotation was preferred over pixel-wise annotation because of increased accuracy and reduced annotation time22.
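The LB placement rule can be sketched in a few lines. This hypothetical Python helper (`leaf_blade_boxes` is an illustrative name, not part of the authors' Lazarus software) assumes a leaf blade traced as a polyline of pixel coordinates and places square boxes, with a side length approximately equal to the leaf width, at evenly spaced centres along it; boxes are clipped to a 720p frame.

```python
import math

def leaf_blade_boxes(polyline, side, img_w=1280, img_h=720):
    """Hypothetical LB helper: square boxes of `side` pixels centred every
    `side` pixels along a traced leaf blade [(x, y), ...].
    Returns integer (x0, y0, x1, y1) boxes clipped to the image."""
    centres = [polyline[0]]
    carry = 0.0  # distance walked since the last centre was placed
    for (xa, ya), (xb, yb) in zip(polyline, polyline[1:]):
        seg = math.hypot(xb - xa, yb - ya)
        d = side - carry  # position of the next centre along this segment
        last = None
        while d <= seg:
            t = d / seg
            centres.append((xa + t * (xb - xa), ya + t * (yb - ya)))
            last = d
            d += side
        # Carry the leftover distance into the next segment.
        carry = carry + seg if last is None else seg - last

    half = side / 2
    boxes = []
    for cx, cy in centres:
        boxes.append((
            round(max(0.0, cx - half)), round(max(0.0, cy - half)),
            round(min(float(img_w), cx + half)), round(min(float(img_h), cy + half)),
        ))
    return boxes

# A straight, horizontal leaf 60 px long and about 20 px wide
print(leaf_blade_boxes([(100, 200), (160, 200)], side=20))
```

Spacing the centres one box width apart keeps adjacent boxes edge to edge, so the leaf is covered with little overlap and little background, which is the intent of the LB method as described.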

The convolutional neural network used was the tiny version of You Only Look Once Version 3 (YOLOv3-tiny)36. YOLOv3-tiny was selected for implementation in a prototype precision sprayer developed for in situ spraying of grasses in horticultural crops, including strawberry and tomato plasticulture. Because the sprayer has a 50 cm distance between the camera and the solenoid-controlled nozzles, image processing speed was a priority. YOLOv3-tiny was therefore chosen as a state-of-the-art object detection network combining fast iteration speed with the capacity to be implemented on the controller.
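The 50 cm camera-to-nozzle offset sets a simple timing budget. The Python sketch below assumes illustrative ground speeds (the source does not give an operating speed) and uses the slowest mean per-image iteration time from Table 3; it ignores image capture, controller, and solenoid delays, so the distance travelled during inference is only a rough lower bound on the total.

```python
CAMERA_TO_NOZZLE_M = 0.50  # camera-to-nozzle distance stated for the sprayer

def time_budget_s(speed_m_s, offset_m=CAMERA_TO_NOZZLE_M):
    """Seconds between a plant passing the camera and reaching the nozzles."""
    return offset_m / speed_m_s

def travel_during_inference_m(speed_m_s, inference_s):
    """Metres the sprayer moves while one image is being processed."""
    return speed_m_s * inference_s

SLOWEST_MEAN_INFERENCE_S = 0.011399  # LB network in tomato, Table 3

for speed_kmh in (3.0, 5.0, 8.0):  # assumed, illustrative ground speeds
    v = speed_kmh / 3.6            # km/h -> m/s
    budget = time_budget_s(v)
    moved_cm = 100 * travel_during_inference_m(v, SLOWEST_MEAN_INFERENCE_S)
    print(f"{speed_kmh:.0f} km/h: {budget:.3f} s budget, "
          f"{moved_cm:.1f} cm travelled during inference")
```

Under these assumptions, inference consumes only a small fraction of the available budget, which is consistent with prioritizing a fast detector such as YOLOv3-tiny.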

YOLOv3-tiny feature extraction is achieved with the convolutional Darknet-19 backbone36,37. Darknet-19 was derived for YOLOv2 and consists of 19 convolutional layers, mostly using 3 × 3 filters with 1 × 1 filters between them to compress features, and 5 max-pooling layers38. Localization is achieved by dividing the image into a grid, predicting multiple bounding boxes within each grid cell, and using regression to resolve spatially separated predictions39. Bounding box classification permits multiple classification categories and multi-labeling of predictions37,39, which is particularly useful for mixed weed communities.
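As an illustration of grid-based localization, the Python sketch below converts a pixel bounding box into the plain-text label line used by Darknet (`<class> <x_centre> <y_centre> <width> <height>`, each normalized to the image width or height) and finds the grid cell that contains the box centre. The grid sizes 13 and 26 correspond to the default 416 × 416 YOLOv3-tiny input with strides of 32 and 16; the input size used in this study is not stated, so these values are assumptions, and letterboxing is ignored. Function names are illustrative.

```python
def to_darknet_label(box, img_w, img_h, class_id=0):
    """Pixel box (x0, y0, x1, y1) -> Darknet label line
    '<class> <x_centre> <y_centre> <width> <height>', normalized to 0-1."""
    x0, y0, x1, y1 = box
    xc = (x0 + x1) / 2 / img_w
    yc = (y0 + y1) / 2 / img_h
    w = (x1 - x0) / img_w
    h = (y1 - y0) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"

def responsible_cell(box, img_w, img_h, grid):
    """(row, col) of the grid cell containing the box centre; in YOLO, that
    cell's predictions are trained to localize the object."""
    x0, y0, x1, y1 = box
    col = min(int((x0 + x1) / 2 / img_w * grid), grid - 1)
    row = min(int((y0 + y1) / 2 / img_h * grid), grid - 1)
    return row, col

box = (90, 190, 110, 210)  # one hypothetical LB box in a 1280 x 720 image
print(to_darknet_label(box, 1280, 720))  # 0 0.078125 0.277778 0.015625 0.027778
for grid in (13, 26):                    # assumed YOLOv3-tiny grid sizes
    print(grid, responsible_cell(box, 1280, 720, grid))
```

Because the label coordinates are normalized, the same annotation file serves any network input size; only the grid cell that becomes responsible for the box changes.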

YOLOv3-tiny was trained and tested using the Darknet framework40 and pre-trained on the COCO dataset41. The YOLOv3-tiny configuration includes augmentation parameters that alter input images to reduce the opportunity for overtraining on irrelevant features. These parameters included color alteration (exposure, hue, and saturation), flipping, cropping, and resizing. Training continued until either the average loss stopped decreasing or the validation accuracy (recall or precision) stopped increasing. During training, 10% of the available images were randomly selected as the validation dataset.
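The random 10% hold-out can be sketched in Python as below. The file names and the fixed seed are illustrative assumptions; the seed simply makes the split reproducible, and `split_train_valid` is a hypothetical helper name.

```python
import random

def split_train_valid(image_paths, valid_fraction=0.10, seed=0):
    """Randomly hold out `valid_fraction` of the images for validation.
    Returns (train_paths, valid_paths)."""
    paths = sorted(image_paths)         # fixed starting order
    random.Random(seed).shuffle(paths)  # seeded shuffle -> reproducible split
    n_valid = max(1, round(len(paths) * valid_fraction))
    return paths[n_valid:], paths[:n_valid]

# Hypothetical file names; 954 is the Training 1 image count in Table 4.
images = [f"img_{i:04d}.jpg" for i in range(954)]
train, valid = split_train_valid(images)
print(len(train), len(valid))
```

In Darknet, such lists are typically written to text files (one image path per line) and referenced from the `.data` file through its `train` and `valid` entries.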

To assess network effectiveness, the classification output of the networks derived from both annotation methods was pooled and sorted into binary classification categories: true positives (tp), false positives (fp), and false negatives (fn). A tp occurred when the network correctly identified the target, an fp when the network predicted a target that was not present, and an fn when the network failed to detect a true target. Precision, recall, F-score, and accuracy were used to evaluate the effectiveness of the network in predicting targets12. Precision measures the effectiveness of the network in correctly identifying its target and was calculated as39,40:

$$\text{Precision} = \frac{tp}{tp + fp} \tag{1}$$

Recall evaluates the effectiveness of the network in target detection and was calculated as42,43:

$$\text{Recall} = \frac{tp}{tp + fn} \tag{2}$$

The F-score is the harmonic mean of precision and recall. It gives an overall performance measure that accounts for both fp and fn, and is calculated as42:

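$$F\text{-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \tag{3}$$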

For comparison purposes, the testing network accuracy was calculated as:

$$\text{Accuracy} = \frac{tp}{tp + fp + fn} \tag{4}$$
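Equations (1) to (4) can be checked directly against the pooled counts. The following Python sketch recomputes the testing measures from the Table 2 counts and reproduces the values reported in the Results to two decimal places; `detection_metrics` is an illustrative helper name, not code from the study.

```python
def detection_metrics(tp, fp, fn):
    """Precision, recall, F-score and accuracy as in Eqs. (1)-(4)."""
    precision = tp / (tp + fp)                               # Eq. (1)
    recall = tp / (tp + fn)                                  # Eq. (2)
    f_score = 2 * precision * recall / (precision + recall)  # Eq. (3)
    accuracy = tp / (tp + fp + fn)                           # Eq. (4), no tn term
    return {"precision": precision, "recall": recall,
            "f_score": f_score, "accuracy": accuracy}

# Pooled testing counts (tp, fp, fn) from Table 2
table2 = {
    ("Strawberry", "EP"): (43, 3, 26),
    ("Strawberry", "LB"): (58, 9, 11),
    ("Tomato", "EP"): (10, 3, 13),
    ("Tomato", "LB"): (17, 12, 6),
}

for (crop, method), counts in table2.items():
    m = detection_metrics(*counts)
    print(crop, method, {k: round(v, 2) for k, v in m.items()})
```

Equation (3) reduces to 2tp/(2tp + fp + fn). Equation (4) has no true-negative term, which suits object detection, where true negatives are not naturally countable. Applied to the Table 1 training counts (for example, 1495, 2367, and 1202 for LB), the same function reproduces the training measures reported for strawberry.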

To validate the network training fit, the Darknet “map” command was used. This command used an intersection over union (IoU) threshold of 0.25 to compare predicted bounding boxes with ground-truth annotations, and was included to evaluate the effect of the annotation method on overall training. For accuracy assessment of the testing datasets, a separate approach was taken to reflect precision sprayer requirements. For both annotation methods, if any part of the plant fell within a predicted bounding box, it was considered a hit (IoU > 0), and additional predicted bounding boxes on the same plant were ignored. This method prioritized detection of some part of the goosegrass plant and relies on the controller software to compensate by increasing the sprayed area if necessary.
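The two matching rules differ mainly in the overlap threshold. A minimal Python sketch, assuming axis-aligned boxes given as (x0, y0, x1, y1) pixel corners and each plant represented by its list of ground-truth boxes (one box for EP, several for LB), is shown below. `iou` and `score_image` are illustrative names, and this is a reconstruction of the testing rule as described, not the authors' code.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0

def score_image(plants, predictions):
    """Testing rule as described: a plant is a hit (tp) if any predicted box
    overlaps any of its ground-truth boxes (IoU > 0); extra boxes on the same
    plant are ignored; boxes touching no plant are fp; missed plants are fn."""
    def touches(plant, pred):
        return any(iou(g, pred) > 0 for g in plant)

    tp = sum(1 for plant in plants if any(touches(plant, q) for q in predictions))
    fp = sum(1 for q in predictions if not any(touches(p, q) for p in plants))
    fn = len(plants) - tp
    return tp, fp, fn

plants = [
    [(100, 100, 200, 200)],                        # EP-style: one box
    [(400, 400, 420, 420), (420, 410, 440, 430)],  # LB-style: leaf-blade boxes
]
predictions = [(150, 150, 260, 260), (190, 190, 210, 210), (600, 600, 650, 650)]
print(score_image(plants, predictions))            # (1, 1, 1)
print(iou((0, 0, 10, 10), (5, 0, 15, 10)))         # about 0.33, above 0.25
```

Under the testing rule, the two overlapping boxes on the first plant count as a single hit, the unmatched box is one fp, and the second plant is one fn; under the 0.25 training threshold, only boxes with substantial overlap would match.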

Acknowledgements

This study was supported by the Florida Strawberry Research and Education Foundation in the United States.

Author contributions

S.S. and N.B. developed the experimental premise, design, and procedures. S.S. and A.S. processed images and trained neural networks. S.S. wrote the manuscript text. All authors reviewed the manuscript.

Competing interests

The authors received funding support from the Florida Strawberry Research and Education Foundation in the United States. The authors declare no further competing interests.

Footnotes

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Secretariat, G. Eleusine indica (L.) Gaertn. GBIF Backbone Taxonomy. Checklist dataset, 10.15468/39omei, Accessed 24 April 2019 (2017).

- 2.Masin R, Zuin MC, Archer DW, Forcella F, Zanin G. WeedTurf: a predictive model to aid control of annual summer weeds in turf. Weed Sci. 2005;53:193–201. doi: 10.1614/WS-04-066R1. [DOI] [Google Scholar]

- 3.Nishimoto RK, McCarty LB. Fluctuating temperature and light influence seed germination of goosegrass (Eleusine indica) Weed Sci. 1997;45:426–429. doi: 10.1017/S0043174500093103. [DOI] [Google Scholar]

- 4.Chauhan BS, Johnson DE. Germination ecology of goosegrass (Eleusine indica): An important grass weed of rainfed rice. Weed Sci. 2008;56:699–706. doi: 10.1614/WS-08-048.1. [DOI] [Google Scholar]

- 5.Webster, T. M. Weed survey – southern states 2014. Vegetable, fruit and nut crop subsection. In Proceedings of the Southern Weed Science Society 67th Annual Meeting, 288. (Southern Weed Science Society, 2014).

- 6.Xiao-yan M, Han-wen W, Wei-li J, Ya-jie M, Yan M. Goosegrass (Eleusine indica) density effects on cotton (Gossypium hirsutum) J. Integr. Agr. 2015;14:1778–1785. doi: 10.1016/S2095-3119(15)61058-9. [DOI] [Google Scholar]

- 7.Wandscheer ACD, Rizzardi MA, Reichert M. Competitive ability of corn in coexistence with goosegrass. Planta Daninha. 2013;31:281–289. doi: 10.1590/S0100-83582013000200005. [DOI] [Google Scholar]

- 8.Bewick TA, Kostewicz SR, Stall WM, Shilling DG, Smith K. Interaction of cupric hydroxide, paraquat, and biotype of American black nightshade (Solanum americanum) Weed Sci. 1990;38:634–638. doi: 10.1017/S0043174500051626. [DOI] [Google Scholar]

- 9.Buker RS, Steed ST, Stall WM. Confirmation and control of a paraquat-tolerant goosegrass (Eleusine indica) biotype. Weed Technol. 2002;16:309–313. doi: 10.1614/0890-037X(2002)016[0309:CACOAP]2.0.CO;2. [DOI] [Google Scholar]

- 10.Fernandez JV, Odero DC, MacDonald GE, Ferrell J, Gettys LA. Confirmation, characterization, and management of glyphosate-resistant ragweed parthenium (Parthenium hysterophorus L.) in the Everglades agricultural area of south Florida. Weed Technol. 2015;29:233–242. doi: 10.1614/WT-D-14-00113.1. [DOI] [Google Scholar]

- 11.Fennimore SA, Slaughter DC, Siemens MC, Leon RG, Saber MN. Technology for automation of weed control in specialty crops. Weed Technology. 2016;30:823–837. doi: 10.1614/WT-D-16-00070.1. [DOI] [Google Scholar]

- 12.Schmidhuber J. Deep learning in neural networks: An overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003. [DOI] [PubMed] [Google Scholar]

- 13.Dyrmann M, Karstoft H, Midtiby HS. Plant species classification using deep convolutional neural network. Biosyst. Eng. 2016;151:72–80. doi: 10.1016/j.biosystemseng.2016.08.024. [DOI] [Google Scholar]

- 14.Ball JE, Anderson DT, Chan CS. A comprehensive survey of deep learning in remote sensing: theories, tools and challenges for the community. J. Appl. Remote Sens. 2017;11:042609. doi: 10.1117/1.JRS.11.042609. [DOI] [Google Scholar]

- 15.Gu J, et al. Recent advances in convolutional neural networks. Pattern Recogn. 2017;77:354–377. doi: 10.1016/j.patcog.2017.10.013. [DOI] [Google Scholar]

- 16.Yu J, Sharpe SM, Schumann AW, Boyd NS. Deep learning for image-based weed detection in turfgrass. Eur. J. Agr. 2019;104:78–84. doi: 10.1016/j.eja.2019.01.004. [DOI] [Google Scholar]

- 17.Yu J, Sharpe SM, Schumann AW, Boyd NS. Detection of broadleaf weeds growing in turfgrass with convolutional neural networks. Pest Manag. Sci. 2019;75:2211–2218. doi: 10.1002/ps.5349. [DOI] [PubMed] [Google Scholar]

- 18.Dyrmann M, Jørgensen RN, Midtiby HS. RoboWeedSupport - Detection of weed locations in leaf occluded cereal crops using a fully convolutional neural network. In J. A. Taylor, D. Cammarano, A. Prashar, & H. A (Eds.), Proceedings of the 11th European Conference on Precision Agriculture. Adv. Anim. Biosci. 2017;8:842–847. doi: 10.1017/S2040470017000206. [DOI] [Google Scholar]

- 19.Sharpe SM, Schumann AW, Boyd NS. Detection of Carolina geranium (Geranium carolinianum) growing in competition with strawberry using convolutional neural networks. Weed Sci. 2019;67:239–245. doi: 10.1017/wsc.2018.66. [DOI] [Google Scholar]

- 20.Sharpe SM, Schumann AW, Yu J, Boyd NS. Vegetation detection and discrimination within vegetable plasticulture row middles using a convolutional neural network. Precis. Agric. 2019;21:264–277. doi: 10.1007/s11119-019-09666-6. [DOI] [Google Scholar]

- 21.Chen Y, et al. Strawberry yield prediction based on a deep neural network using high-resolution aerial orthoimages. Remote Sens. 2019;11:1584. doi: 10.3390/rs11131584. [DOI] [Google Scholar]

- 22.Sa I, et al. DeepFruits: A fruit detection system using deep neural networks. Sensors. 2016;16:1222. doi: 10.3390/s16081222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Fuentes AF, Yoon S, Lee J, Park DS. High-performance deep neural network-based tomato plant diseases and pests diagnosis system with refinement filter bank. Front. Plant Sci. 2018;9:1162. doi: 10.3389/fpls.2018.01162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Bryson, C., & DeFelice, M. Weeds of the South. Athens: University of Georgia Press. 467 p. (2009).

- 25.Urban G, et al. Deep learning localizes and identifies polyps in real time with 96% accuracy in screening colonoscopy. Gastroenterology. 2018;155:1069–1078.e8. doi: 10.1053/j.gastro.2018.06.037. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Dyrmann, M., Skovsen, S., Laursen, M. S. & Jørgensen, R. N. Using a fully convolutional neural network for detecting locations of weeds in images from cereal fields. In The 14th International Conference on Precision Agriculture, 1–7. (International Society of Precision Agriculture, 2018).

- 27. Blaschko, M. B., Vedaldi, A., & Zisserman, A. Simultaneous object detection and ranking with weak supervision. In J. Lafferty, C. Williams, J. Shawe-Taylor, R. Zemel, & A. Culotta (Eds.), Advances in Neural Information Processing Systems 23. Vancouver, BC, Canada, http://papers.nips.cc/paper/4105-simultaneous-object-detection-and-ranking-with-weak-supervision.pdf Accessed 29 April 2019 (2010).

- 28. Buda M, Maki A, Mazurowski MA. A systematic study of the class imbalance problem in convolutional neural networks. Neural Netw. 2018;106:249–259. doi: 10.1016/j.neunet.2018.07.011.

- 29. Espejo-Garcia B, Mylonas N, Athanasakos L, Fountas S, Vasilakoglou I. Towards weed identification assistance through transfer learning. Comput. Electron. Agric. 2020;171:105306. doi: 10.1016/j.compag.2020.105306.

- 30. McCool C, Perez T, Upcroft B. Mixtures of lightweight deep convolutional neural networks: Applied to agricultural robotics. IEEE Robot. Autom. Lett. 2017;2:1344–1351. doi: 10.1109/LRA.2017.2667039.

- 31. Vioix JB, Douzals JP, Truchetet F, Assémat L, Guillemin JP. Spatial and spectral methods for weed detection and localization. EURASIP J. Appl. Signal Process. 2002;200:679–685. doi: 10.1155/S1110865702204072.

- 32. Milioto, A., Lottes, P. & Stachniss, C. Real-time blob-wise sugar beet vs weeds classification for monitoring fields using convolutional neural networks. In ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, 41–48. (International Society for Photogrammetry and Remote Sensing, 2017).

- 33. Olsen A, et al. DeepWeeds: A Multiclass Weed Species Image Dataset for Deep Learning. Sci. Rep. 2019;9:1–12. doi: 10.1038/s41598-018-38343-3.

- 34. Zheng YY, et al. CropDeep: The crop vision dataset for deep-learning-based classification and detection in precision agriculture. Sensors. 2019;19:1058. doi: 10.3390/s19051058.

- 35. Tang J, et al. Weed identification based on K-means feature learning combined with convolutional neural network. Comput. Electron. Agric. 2017;135:63–70. doi: 10.1016/j.compag.2017.01.001.

- 36. Redmon, J. & Farhadi, A. YOLOv3: An incremental improvement. arXiv preprint, 1804.02767, https://arxiv.org/abs/1804.02767 (2018).

- 37. He W, Huang Z, Wei Z, Li C, Guo B. TF-YOLO: An improved incremental network for real-time object detection. Appl. Sci. 2019;9:3225. doi: 10.3390/app9163225.

- 38. Redmon, J. & Farhadi, A. YOLO9000: better, faster, stronger. In Proceedings of the IEEE conference on computer vision and pattern recognition, 7263–7271. (Institute of Electrical and Electronics Engineers, 2017).

- 39. Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, 779–788. (Institute of Electrical and Electronics Engineers, 2016).

- 40. Redmon, J. Darknet: Open source neural networks in C (2013–2016), http://pjreddie.com/darknet/ (2016).

- 41. Lin, T.-Y. et al. Microsoft COCO: Common objects in context. In Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 740–755, 10.1007/978-3-319-10602-1_48 (European Conference on Computer Vision, 2014).

- 42. Sokolova M, Lapalme G. A systematic analysis of performance measures for classification tasks. Inf. Process. Manag. 2009;45:427–437. doi: 10.1016/j.ipm.2009.03.002.

- 43. Hoiem, D., Chodpathumwan, Y. & Dai, Q. Diagnosing error in object detectors. In A. Fitzgibbon, S. Lazebnik, P. Perona, Y. Sato, & C. Schmid (Eds.), Computer Vision–ECCV 2012 (pp. 340–353). Berlin, Heidelberg: Springer Berlin Heidelberg. 10.1007/978-3-642-33712-3_25 (2012).