Saionz et al. show that the first 3 months after an occipital stroke are characterized by a gradual – not sudden – loss of visual perceptual abilities. Training in the subacute period stops this degradation, and is more efficient at eliciting recovery than identical training in the chronic phase.

Keywords: hemianopia, vision, perceptual learning, training, rehabilitation

Abstract

Stroke damage to the primary visual cortex (V1) causes a loss of vision known as hemianopia or cortically-induced blindness. While perimetric visual field improvements can occur spontaneously in the first few months post-stroke, by 6 months post-stroke the deficit is considered chronic and permanent. Despite evidence from sensorimotor stroke showing that early injury responses heighten neuroplastic potential, visual rehabilitation research has to date focused on patients with chronic cortically-induced blindness. Consequently, little is known about the functional properties of the post-stroke visual system in the subacute period, or whether these properties can be harnessed to enhance visual recovery. Here, for the first time, we show that ‘conscious’ visual discrimination abilities are often preserved inside subacute, perimetrically-defined blind fields, but that they disappear by ∼6 months post-stroke. Complementing this discovery, we show that training initiated subacutely can recover global motion discrimination and integration, as well as luminance detection perimetry, just as it does in chronic cortically-induced blindness. However, subacute recovery was attained six times faster; it also generalized to deeper, untrained regions of the blind field and to other (untrained) aspects of motion perception, preventing their degradation upon reaching the chronic period. In contrast, untrained subacutes exhibited spontaneous improvements in luminance detection perimetry, but spontaneous recovery of motion discriminations was never observed. Thus, in cortically-induced blindness, the early post-stroke period appears to be characterized by a gradual, rather than sudden, loss of visual processing. Subacute training stops this degradation and is far more efficient at eliciting recovery than identical training in the chronic period. Finally, spontaneous visual improvements in subacutes were restricted to luminance detection; discrimination abilities only recovered following deliberate training. Our findings suggest that after V1 damage, rather than waiting for vision to stabilize, early training interventions may be key to maximizing the system’s potential for recovery.

Introduction

The saying ‘time is brain’ after stroke may be true, but if that stroke affects primary visual cortex (V1), the urgency seems to be lost. Such strokes cause a dramatic, contralesional loss of vision known as hemianopia or cortically-induced blindness (Zhang et al., 2006; Pollock et al., 2019). Our current understanding of visual plasticity after occipital strokes or other damage is largely informed by natural history studies that show limited spontaneous recovery early on, with stabilization of deficits by 6 months post-stroke (Gray et al., 1989; Tiel and Kolmel, 1991; Zhang et al., 2006; Zihl, 2010). By that time, patients with cortically-induced blindness are considered ‘chronic’ and exhibit profound visual field defects in both detection and discrimination contralateral to the V1 damage (Hess and Pointer, 1989; Townend et al., 2007; Huxlin et al., 2009; Das et al., 2014; Cavanaugh and Huxlin, 2017). In fact, research into post-stroke visual function and plasticity has focused on patients in this chronic phase (Melnick et al., 2016), precisely because of the stability of their visual field defects. However, therapeutically, this approach runs counter to practice in sensorimotor stroke, where it has been shown that early injury responses heighten neuroplastic potential (Kwakkel et al., 2002; Rossini et al., 2003; Krakauer, 2006; Bavelier et al., 2010; Hensch and Bilimoria, 2012; Seitz and Donnan, 2015; Winstein et al., 2016; Bernhardt et al., 2017a).

Although some have argued that the visual system is not capable of functional recovery in the chronic phase post-stroke (Horton, 2005a, b; Reinhard et al., 2005), multiple studies, from several groups worldwide, have shown that gaze-contingent visual training with stimuli presented repetitively inside the perimetrically-defined blind field can lead to localized visual recovery, both on the trained tasks and on visual perimetry measured using clinical tests (Sahraie et al., 2006, 2010b; Raninen et al., 2007; Huxlin et al., 2009; Das et al., 2014; Cavanaugh et al., 2015; Melnick et al., 2016; Cavanaugh and Huxlin, 2017). However, the training required to attain such recovery is intense and lengthy (Huxlin et al., 2009; Das et al., 2014; Melnick et al., 2016), and recovered vision appears to be low-contrast and coarser than normal (Huxlin et al., 2009; Das et al., 2014; Cavanaugh et al., 2015). It is currently unknown why visual recovery in chronic cortically-induced blindness is so difficult, partial, and spatially restricted. Possible explanations include that V1 damage kills a large portion of cells selective for basic visual attributes such as orientation and direction, and that it causes a shift in the excitation/inhibition balance in residual visual circuitry towards excessive inhibition (Spolidoro et al., 2009). Excessive intracortical inhibition can limit plasticity and raise the threshold for activation of relevant circuits. These factors may explain why training that starts more than 6 months post-stroke is arduous and why recovery is incomplete.

Thus, while much remains to be done to improve rehabilitation strategies for the increasingly large population of chronic sufferers of cortically-induced blindness (Pollock et al., 2019), the situation is much worse for acute and subacute cortically-induced blindness. Indeed, this group of early post-stroke patients has been almost completely ignored in the vision science literature. There are no published, detailed assessments of visual function within cortically-blind fields in the first few weeks after stroke, nor do we know anything about the potential for training-induced recovery during this early post-stroke period. This is in marked contrast to sensorimotor stroke, which has been investigated as soon as 5 days after ischaemic damage, with current treatment guidelines advocating early rehabilitation to facilitate greater, faster recovery (Kwakkel et al., 2002; Krakauer, 2006; Winstein et al., 2016; Bernhardt et al., 2017a). In addition to the resolution of stroke-associated inflammation and swelling, this early period is characterized by a shift in the excitation/inhibition balance towards excitation, upregulation of growth and injury response factors (especially brain-derived neurotrophic factor), changes in neurotransmitter modulation (especially GABA, glutamate and acetylcholine), and even re-emergence of a critical-period-like state (Rossini et al., 2003; Bavelier et al., 2010; Hensch and Bilimoria, 2012; Seitz and Donnan, 2015). These cellular and environmental changes could underlie the spontaneous recovery of luminance detection perimetry observed in the first few months after visual cortical strokes (Zhang et al., 2006; Townend et al., 2007; Çelebisoy et al., 2011; Shin et al., 2017). Moreover, structural barriers to plasticity have yet to form, in particular myelin-related proteins that inhibit axonal sprouting, perineuronal nets of chondroitin sulfate proteoglycans, and fibrotic tissue (Bavelier et al., 2010; Hensch and Bilimoria, 2012). Thus, after characterizing visual function in subacute cortically-blind fields, the second question asked here is whether this post-stroke ‘critical period’ could be recruited to attain greater and faster recovery of visual functions in the blind field.

Materials and methods

Study design

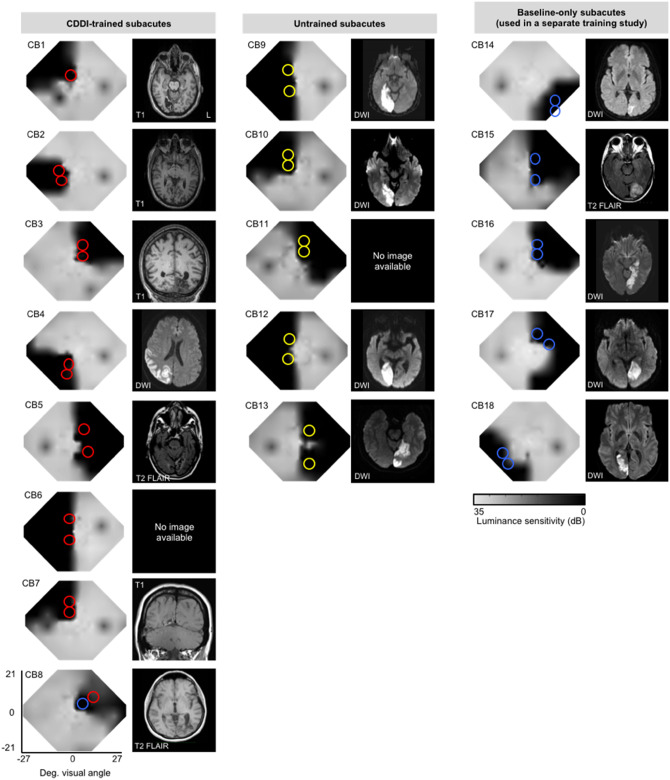

Here, our first goal was to measure the basic properties of vision in subacute, cortically-blinded visual fields, contrasting them with vision in chronic, cortically-blinded visual fields. For this, we recruited 18 subacute patients with cortically-induced blindness [mean ± standard deviation (SD) = 7.7 ± 3.4 weeks post-stroke, range 2.0–12.7 weeks], and 14 adults with chronic cortically-induced blindness (33.4 ± 54.5 months post-stroke, range 5–226 months). Subject demographics are detailed in Table 1. Brain scans illustrating individual lesions and visual field defects are presented in Figs 1 and 2. In each participant, visual field deficits were first estimated from Humphrey visual perimetry, as previously described (Cavanaugh and Huxlin, 2017), and served as a starting point to precisely map the position of the blind field border and select training locations. This mapping was done with a coarse (left/right) global direction discrimination and integration (CDDI) task and a fine direction discrimination (FDD) task, both using random dot stimuli (Huxlin et al., 2009; Das et al., 2014; Cavanaugh et al., 2015; Cavanaugh and Huxlin, 2017). Suitable training locations were picked from these test results according to previously-published criteria (Huxlin et al., 2009; Das et al., 2014; Cavanaugh et al., 2015) and are shown to scale, with coloured circles superimposed on composite Humphrey visual field maps in Figs 1 and 2. At each training location in the blind field, luminance contrast sensitivity functions were also estimated while subjects performed a static non-flickering orientation discrimination task and, separately, a direction discrimination task. Finally, intact field performance was also collected in each participant: for each task, performance was measured at locations mirror-symmetric to the blind field locations selected for initial training. This was essential to provide a patient-specific internal control for ‘normal’ performance on each task.

Table 1.

Participant demographics, testing and training parameters

| Subject | Gender | Age (years) | Time post-stroke | Training type |
|---|---|---|---|---|
| Patients with subacute cortically-induced blindness | | | | |
| CB1 | Female | 49 | 11.1 weeks | CDDI |
| CB2 | Male | 67 | 7.1 weeks | CDDI |
| CB3 | Male | 70 | 9.0 weeks | CDDI |
| CB4 | Male | 61 | 7.3 weeks | CDDI |
| CB5 | Male | 74 | 4.6 weeks | CDDI |
| CB6 | Female | 44 | 11.3 weeks | CDDI |
| CB7 | Female | 39 | 12.7 weeks | CDDI |
| CB8 | Female | 66 | 11.1 weeks | CDDI |
| CB9 | Male | 58 | 10.1 weeks | Untrained |
| CB10 | Male | 69 | 2.4 weeks | Untrained |
| CB11 | Male | 69 | 9.9 weeks | Untrained |
| CB12 | Male | 77 | 11.1 weeks | Untrained |
| CB13 | Male | 70 | 5.9 weeks | Untrained |
| CB14 | Male | 47 | 2.4 weeks | Other |
| CB15 | Female | 69 | 6.7 weeks | Other |
| CB16 | Female | 27 | 8.1 weeks | Other |
| CB17 | Male | 49 | 6.0 weeks | Other |
| CB18 | Male | 47 | 2.0 weeks | Other |
| Patients with chronic cortically-induced blindness | | | | |
| CB19 | Male | 57 | 11 months | CDDI |
| CB20 | Male | 40 | 21 months | CDDI |
| CB21 | Female | 36 | 9 months | CDDI |
| CB22 | Female | 54 | 7 months | CDDI |
| CB23 | Female | 59 | 29 months | CDDI |
| CB24 | Male | 63 | 5 months | CDDI |
| CB25 | Male | 70 | 11 months | CDDI |
| CB26 | Male | 63 | 226 months | CDDI |
| CB27 | Male | 61 | 36 months | CDDI |
| CB28 | Male | 17 | 17 months | CDDI |
| CB29 | Female | 53 | 24 months | CDDI |
| CB30 | Female | 64 | 7 months | CDDI |
| CB31 | Female | 76 | 31 months | CDDI |
| CB32 | Male | 80 | 33 months | CDDI |

Patients CB14–18 underwent ‘other’ types of training and their outcomes are part of a different study.
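As an arithmetic check on the summary statistics quoted in the Study design, the short sketch below recomputes the time post-stroke from the values transcribed from Table 1. The means and ranges match the text (7.7 weeks, 2.0–12.7 weeks; 33.4 months, 5–226 months). The source does not state whether sample or population SDs were reported, so the SD computed here with `statistics.stdev` (sample SD) is illustrative and may not match the chronic value exactly.

```python
from statistics import mean, stdev

# Time post-stroke transcribed from Table 1:
# subacutes in weeks, chronics in months, as in the table.
subacute_weeks = {
    "CB1": 11.1, "CB2": 7.1, "CB3": 9.0, "CB4": 7.3, "CB5": 4.6,
    "CB6": 11.3, "CB7": 12.7, "CB8": 11.1, "CB9": 10.1, "CB10": 2.4,
    "CB11": 9.9, "CB12": 11.1, "CB13": 5.9, "CB14": 2.4, "CB15": 6.7,
    "CB16": 8.1, "CB17": 6.0, "CB18": 2.0,
}
chronic_months = {
    "CB19": 11, "CB20": 21, "CB21": 9, "CB22": 7, "CB23": 29,
    "CB24": 5, "CB25": 11, "CB26": 226, "CB27": 36, "CB28": 17,
    "CB29": 24, "CB30": 7, "CB31": 31, "CB32": 33,
}


def summarize(values):
    """Return (mean, sample SD, min, max), mean and SD rounded to 0.1."""
    vals = list(values)
    return (round(mean(vals), 1), round(stdev(vals), 1), min(vals), max(vals))


subacute_summary = summarize(subacute_weeks.values())
chronic_summary = summarize(chronic_months.values())
print("Subacute (weeks):", subacute_summary)
print("Chronic (months):", chronic_summary)
```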

Figure 1.

Baseline Humphrey visual field composite maps, MRIs and testing/training locations in subacute participants. Grey scale denoting Humphrey-derived visual sensitivity is provided under the right-most column. MRI type [T1, diffusion-weighted imaging (DWI), T2-weighted fluid-attenuated inversion recovery (T2-FLAIR)] is indicated on radiographic images, which are shown according to radiographic convention (left brain hemisphere on image right). Red circles = CDDI training locations; yellow circles = putative training locations in untrained controls, which were only pre- and post-tested; blue circles = locations tested at baseline in subacutes who were used in a separate training study (designated ‘other’ training type in Table 1).

Participants

All participants had sustained V1 damage in adulthood, confirmed by neuroimaging and accompanied by contralesional homonymous visual field defects. Additional eligibility criteria included reliable visual fields at recruitment as measured by Humphrey perimetry (see below), and stable, accurate fixation during in-lab, psychophysical, gaze-contingent testing enforced with an eye tracker (see below). Participants were excluded if they had ocular disease (e.g. cataracts, retinal disease, glaucoma), any neurological or cognitive impairment that would interfere with proper training, or hemi-spatial neglect. All participants wore their best optical correction (glasses or contact lenses). Procedures were conducted in accordance with the Declaration of Helsinki; written informed consent was obtained from each participant, and participation was voluntary at all times. This study was approved by the Research Subjects Review Board at the University of Rochester (#RSRB00021951).

Perimetric mapping of visual field defects

Perimetry was conducted using the Humphrey Field Analyzer II-i750 (Zeiss Humphrey Systems, Carl Zeiss Meditec). Both the 24-2 and the 10-2 test patterns were run in each eye, using strict quality-control criteria, as previously described (Cavanaugh and Huxlin, 2017). All tests were performed at the University of Rochester Flaum Eye Institute by the same ophthalmic technician, with fixation controlled using the system’s eye tracker and gaze/blind spot automated controls, visual acuity corrected to 20/20, a white size III stimulus, and a background luminance of 11.3 cd/m².

The results of the four tests (24-2 and 10-2 in each eye) were interpolated in MATLAB (Mathworks) to create a composite visual field map for each patient, as previously described (Cavanaugh and Huxlin, 2017) and illustrated in Figs 1, 2 and 3A and B. First, luminance detection thresholds at locations tested in both eyes were averaged across the two eyes. Next, natural-neighbour interpolation at 0.1° resolution was applied between non-overlapping test locations across the four tests, creating composite visual fields of 121 tested locations and 161 398 interpolated datapoints, subtending an area of 1616 deg². To determine changes over time, difference maps were generated by subtracting the initial composite visual field map from the subsequent map; change (areas improved or worsened) was defined as visual field locations whose sensitivity differed by at least 6 dB, a conservative criterion set at twice the measurement error of the Humphrey test (Zeiss Humphrey Systems, Carl Zeiss Meditec).
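The difference-map step can be sketched as a point-by-point comparison of two composite maps sampled on the same grid. This is a minimal illustration, not the authors' MATLAB code: it assumes the maps are already interpolated (SciPy's `griddata`, for example, offers nearest, linear and cubic methods but not natural-neighbour, so that step is omitted), and it assumes a 0.1° grid, which is what the reported 161 398 points over 1616 deg² imply (about 0.01 deg² per point). The function and constant names are hypothetical.

```python
GRID_SPACING_DEG = 0.1                   # assumed spacing of the interpolated grid
POINT_AREA_DEG2 = GRID_SPACING_DEG ** 2  # ~0.01 deg^2 per interpolated point
CHANGE_CRITERION_DB = 6.0                # twice the Humphrey measurement error


def change_areas(initial_map, followup_map):
    """Return (improved, worsened) areas in deg^2 between two composite maps.

    Both maps are equal-sized 2D lists of sensitivities (dB) on the same grid.
    """
    improved = worsened = 0
    for row_initial, row_follow in zip(initial_map, followup_map):
        for before, after in zip(row_initial, row_follow):
            diff = after - before  # subsequent map minus initial map
            if diff >= CHANGE_CRITERION_DB:
                improved += 1
            elif diff <= -CHANGE_CRITERION_DB:
                worsened += 1
    return improved * POINT_AREA_DEG2, worsened * POINT_AREA_DEG2


# Sanity check on the reported grid density: 1616 deg^2 over 161 398 points
print(1616 / 161398)  # about 0.0100 deg^2 per point
```

Counting grid points and multiplying by the area per point keeps the change measure in deg², which is directly comparable across patients as long as every map uses the same grid.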

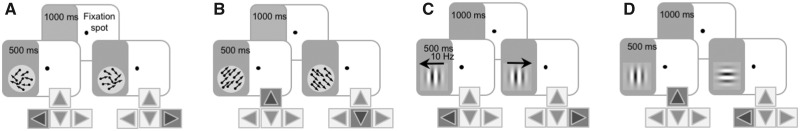

Figure 3.

Measuring and retraining vision in subacute and chronic stroke. Trial sequences for psychophysical tasks measuring (A) CDDI, (B) FDD, (C) contrast sensitivity for direction and (D) static orientation discrimination.

In addition to areas of change across visual field maps, the following measures were collected for each visual field: pattern deviation (Humphrey-derived metric for the deviation from the age-corrected population mean at each Humphrey visual field test location), perimetric mean deviation (Humphrey-derived metric comparing the overall field of vision to an age-matched normal hill of vision), and total deficit area [defined as regions ≤10 dB of sensitivity, per the standard definition of legal blindness (Social Security Administration, 2019)].
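Of these measures, total deficit area is simple to reproduce from an interpolated map; pattern deviation and perimetric mean deviation rely on Humphrey's age-corrected normative data and are not sketched here. The snippet below is a minimal sketch assuming the same hypothetical 0.1° grid (0.01 deg² per point) and counting every point at or below 10 dB.

```python
LEGAL_BLINDNESS_DB = 10.0  # sensitivity at or below this counts as deficit


def total_deficit_area(sensitivity_map, point_area_deg2=0.01):
    """Area (deg^2) of grid points with sensitivity <= 10 dB.

    point_area_deg2 assumes a 0.1 deg grid (0.1 * 0.1 deg^2 per point).
    """
    n_deficit = sum(
        1 for row in sensitivity_map for value in row if value <= LEGAL_BLINDNESS_DB
    )
    return n_deficit * point_area_deg2
```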

After Humphrey perimetry, each subject underwent psychophysical mapping of the blind field border as previously described (Huxlin et al., 2009; Das et al., 2014). In brief, training locations were selected as sites where performance on an FDD task (see below for more details) first declined to chance (50–60% correct) during mapping. Two initial training locations were identified in each subject, including subjects subsequently assigned to the untrained group. Following their selection, baseline measures of performance on all four of the discrimination tasks described below (see ‘Baseline measurements of visual discrimination performance’ section) were collected at each putative training location.
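The selection rule for a training location can be expressed as a scan along a mapping path. This is a hypothetical sketch: it assumes locations are visited in order from the intact field towards the blind field and treats anything at or below 60% correct as chance, per the 50–60% band in the text.

```python
CHANCE_UPPER = 0.60  # 50-60% correct is treated as chance in these 2AFC tasks


def first_chance_location(mapping_results):
    """Return the first location at which FDD performance falls to chance.

    mapping_results: (location, proportion_correct) pairs ordered from the
    intact field towards the blind field (the ordering is an assumption).
    """
    for location, prop_correct in mapping_results:
        if prop_correct <= CHANCE_UPPER:
            return location
    return None  # no chance-level location found along this path
```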

Apparatus and eye tracking for in-lab psychophysical measures

Visual discrimination tasks were performed on a Mac Pro computer with stimuli displayed on an HP CRT monitor (HP 7217A, 48.5 × 31.5 cm screen size, 1024 × 640 resolution, 120 Hz frame rate). The monitor’s luminance was calibrated using a ColorCal II automatic calibration system (Cambridge Research Systems) and the resulting gamma-fit linearized lookup table implemented in MATLAB. A viewing distance of 42 cm was ensured using a chin/forehead rest. Eye position was monitored using an Eyelink 1000 eye tracker (SR Research Ltd.) with a sampling frequency of 1000 Hz and accuracy within 0.25°. All tasks and training were conducted using MATLAB (Mathworks) and Psychtoolbox (Pelli, 1997).
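The display parameters above fix the conversion between degrees of visual angle and pixels, and between stimulus durations and video frames. The sketch below derives both from the stated screen width (48.5 cm over 1024 pixels), the 42 cm viewing distance and the 120 Hz frame rate. It is an approximation for the screen centre and uses the horizontal pixel pitch only; the stated height (31.5 cm over 640 pixels) implies slightly non-square pixels.

```python
import math

SCREEN_WIDTH_CM = 48.5
SCREEN_WIDTH_PX = 1024
VIEWING_DISTANCE_CM = 42.0
FRAME_RATE_HZ = 120


def pixels_per_degree():
    """Horizontal pixels subtended by 1 deg of visual angle at screen centre."""
    cm_per_px = SCREEN_WIDTH_CM / SCREEN_WIDTH_PX
    cm_per_deg = 2 * VIEWING_DISTANCE_CM * math.tan(math.radians(0.5))
    return cm_per_deg / cm_per_px


def frames_for(duration_ms):
    """Number of video frames closest to a stimulus duration."""
    return round(duration_ms * FRAME_RATE_HZ / 1000)


ppd = pixels_per_degree()
aperture_px = 5 * ppd  # diameter of the 5 deg stimulus aperture
print(f"{ppd:.2f} px/deg, 5 deg aperture = {aperture_px:.1f} px")
print(frames_for(500), "frames for a 500 ms stimulus")
```

At roughly 15.5 pixels per degree, the 5° aperture spans about 77 pixels, and a 500 ms stimulus lasts 60 frames at 120 Hz.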

Baseline measurements of visual discrimination performance

A battery of two-alternative, forced choice (2AFC) tasks was used to assess visual discrimination performance in-lab, at recruitment, after putative training locations were selected. In each task, trials were initiated in a gaze-contingent manner: participants began by fixating on a central spot for 1000 ms before a stimulus appeared, accompanied by a tone. If eye movements deviated beyond the 2° × 2° fixation window centred on the fixation spot during the course of stimulus presentation, the trial was aborted and excluded, and a replacement trial was added to the session. Participants indicated their perception of the different stimuli (see below) via keyboard. Auditory feedback was provided to differentiate correct and incorrect responses. Following each test, participants were also asked to describe the appearance of the stimuli in as much detail as possible, or to report if they were unable to sense them at all.
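The gaze-contingent abort-and-replace logic can be sketched as a trial queue. The callbacks `get_gaze_trace` and `get_response` are hypothetical stand-ins for eye-tracker and keyboard I/O, and requeuing the same trial specification as its replacement is an assumption; the source says only that a replacement trial was added to the session. The `max_aborts` guard is an added safety measure, not part of the described protocol.

```python
from collections import deque

FIX_HALF_WIDTH_DEG = 1.0  # 2 deg x 2 deg window centred on the fixation spot


def gaze_in_window(x_deg, y_deg):
    """True if a gaze sample (deg, relative to fixation) lies inside the window."""
    return abs(x_deg) <= FIX_HALF_WIDTH_DEG and abs(y_deg) <= FIX_HALF_WIDTH_DEG


def run_session(trials, get_gaze_trace, get_response, max_aborts=1000):
    """Run a gaze-contingent session.

    get_gaze_trace(trial): gaze samples recorded during stimulus presentation
    get_response(trial): True if the 2AFC response was correct
    """
    queue = deque(trials)
    completed, aborts = [], 0
    while queue:
        trial = queue.popleft()
        if all(gaze_in_window(x, y) for x, y in get_gaze_trace(trial)):
            completed.append((trial, get_response(trial)))
        else:
            aborts += 1  # the aborted trial is excluded from analysis...
            if aborts > max_aborts:
                raise RuntimeError("too many fixation breaks")
            queue.append(trial)  # ...and a replacement is queued at the end
    return completed, aborts
```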

Coarse direction discrimination and integration task

After participants initiated a trial through stable fixation for 1000 ms, a random dot stimulus appeared for 500 ms in a 5° diameter circular aperture (Fig. 3A). The stimulus consisted of black dots moving on a mid-grey background (dot lifetime: 250 ms, speed: 10 deg/s, density: 3 dots/deg2). Dots moved globally in a range of directions distributed uniformly around the leftward or rightward vectors (Huxlin et al., 2009; Das et al., 2014). Participants responded whether the global direction of motion was left- or rightward. Task difficulty was adjusted using a 3:1 staircase, increasing dot direction range from 0° to 360° in 40° steps (Huxlin et al., 2009; Das et al., 2014). In-lab sessions consisted of 100 trials per visual field location. Session performance was fit using a Weibull psychometric function with a threshold criterion of 75% correct to calculate direction range thresholds. Direction range thresholds were then normalized to the maximum range of directions in which dots could move (360°), and expressed as a percentage using the following formula: CDDI threshold (%) = [360° − direction range threshold] / 360° × 100. For ease of analysis, when participants performed at chance (50–60% correct for a given session), the CDDI threshold was set to 100%.
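The CDDI staircase and threshold conversion can be sketched as follows. Because a wider direction range is harder, three consecutive correct responses widen the range by one 40° step and any error narrows it by one step, clamped to 0–360°. The starting range of 0° and the class name are assumptions for illustration; the Weibull fit that turns a session into a direction range threshold is not shown here.

```python
STEP_DEG = 40
MAX_RANGE_DEG = 360


class ThreeToOneStaircase:
    """Three-correct-harder / one-incorrect-easier staircase on direction range."""

    def __init__(self, start_range=0):
        self.range_deg = start_range  # assumed start at the easiest level
        self._streak = 0

    def update(self, correct):
        if correct:
            self._streak += 1
            if self._streak == 3:
                self.range_deg = min(MAX_RANGE_DEG, self.range_deg + STEP_DEG)
                self._streak = 0
        else:
            self._streak = 0
            self.range_deg = max(0, self.range_deg - STEP_DEG)
        return self.range_deg


def cddi_threshold_percent(direction_range_threshold, at_chance=False):
    """CDDI threshold (%) = (360 - direction range threshold) / 360 * 100."""
    if at_chance:
        return 100.0  # chance-level sessions are set to 100%
    return (MAX_RANGE_DEG - direction_range_threshold) / MAX_RANGE_DEG * 100
```

With this convention, lower CDDI percentages mean better performance: a direction range threshold of 270° gives 25%, and chance performance maps to 100%.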

Fine direction discrimination task

Random dot stimuli were presented in which black dots (same parameters as in CDDI task) moved on a mid-grey background within a 5° circular aperture, for 500 ms (Fig. 3B). Dots moved almost uniformly (2° direction range) leftwards or rightwards, angled upward or downward relative to the stimulus horizontal meridian. Participants indicated if the motion direction was up or down. Task difficulty was adjusted using a 3:1 staircase, which decreased angle of motion from the horizontal meridian from 90° (easiest) to 1° in log steps. Each test session consisted of 100 trials at a given location. Session performance was fit using a Weibull function with a threshold criterion of 75% correct to calculate FDD thresholds. At chance performance (50–60% correct), the FDD threshold was set to 90°.
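Fitting a session with a Weibull function and reading off the 75% correct point can be sketched with a maximum-likelihood grid search. The 2AFC form below (50% guess rate, no lapse term) is one common parameterization and an assumption, as are the grid ranges; at 75% correct the Weibull inverts to threshold = α(ln 2)^(1/β). The 90° chance rule follows the text, with chance taken as overall session performance at or below 60% correct.

```python
import math


def weibull_2afc(x, alpha, beta):
    """Probability correct in 2AFC: 50% guessing floor rising to 100%."""
    return 0.5 + 0.5 * (1.0 - math.exp(-((x / alpha) ** beta)))


def fit_weibull(levels, n_correct, n_total):
    """Maximum-likelihood grid search over alpha (deg) and beta."""
    alphas = [10 ** (i / 200) for i in range(392)]  # ~1 to ~90 deg, log-spaced
    betas = [b / 10 for b in range(5, 61)]          # 0.5 to 6.0
    best_ll, best_alpha, best_beta = -math.inf, None, None
    for a in alphas:
        for b in betas:
            ll = 0.0
            for x, k, n in zip(levels, n_correct, n_total):
                # Clip so log() never sees exactly 0 or 1
                p = min(max(weibull_2afc(x, a, b), 1e-9), 1.0 - 1e-9)
                ll += k * math.log(p) + (n - k) * math.log(1.0 - p)
            if ll > best_ll:
                best_ll, best_alpha, best_beta = ll, a, b
    return best_alpha, best_beta


def threshold_at(alpha, beta, criterion=0.75):
    """Invert the 2AFC Weibull at `criterion` proportion correct."""
    g = (criterion - 0.5) / 0.5  # rescale to the 0..1 range above guessing
    return alpha * (-math.log(1.0 - g)) ** (1.0 / beta)


def fdd_threshold(levels, n_correct, n_total, chance_upper=0.60):
    """FDD threshold in degrees; sessions at chance are assigned 90 deg."""
    if sum(n_correct) / sum(n_total) <= chance_upper:
        return 90.0
    alpha, beta = fit_weibull(levels, n_correct, n_total)
    return threshold_at(alpha, beta)
```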

Contrast sensitivity functions for orientation and direction discrimination

Contrast sensitivity functions were measured using the quick contrast sensitivity function (Lesmes et al., 2010), a widely used Bayesian method to measure the entire contrast sensitivity function across multiple spatial frequencies (0.1–10 cycles/deg) in just 100 trials, with a test-retest reliability of 0.94 in clinical populations (Hou et al., 2010). In this method, the shape of the contrast sensitivity function is expressed as a truncated log-parabola defined by four parameters: peak sensitivity, peak spatial frequency, bandwidth, and low-frequency truncation level. In the motion quick contrast sensitivity function (Fig. 3C), participants performed a 2AFC left- versus rightward direction discrimination task of a drifting, vertically-oriented Gabor (5° diameter, sigma 1°, 250 ms on/off ramps). Velocity varied as a function of spatial frequency to ensure a temporal frequency of 10 Hz (Lesmes et al., 2010). In our static quick contrast sensitivity function task (Fig. 3D), participants had to discriminate horizontal from vertical orientation of a non-flickering Gabor patch (5° diameter, sigma 1°, 250 ms on/off ramps).
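The four-parameter truncated log-parabola can be written as a small function, together with the speed needed to hold temporal frequency at 10 Hz (speed = temporal frequency / spatial frequency). The sketch follows the form commonly cited in the qCSF literature, with κ = log10(2) and β′ = log10(2β). Parameter names are hypothetical, and the exact form should be checked against Lesmes et al. (2010) before use. It does not implement the Bayesian stimulus selection of the qCSF procedure itself.

```python
import math


def log_parabola_csf(freq, peak_gain, peak_freq, bandwidth_oct, truncation):
    """Contrast sensitivity at `freq` (cycles/deg), truncated log-parabola.

    peak_gain: peak sensitivity (gamma_max, linear units)
    peak_freq: spatial frequency of the peak (f_max, cycles/deg)
    bandwidth_oct: full bandwidth at half height in octaves (beta, > 0.5)
    truncation: low-frequency truncation level (delta, log10 units)
    """
    if bandwidth_oct <= 0.5:
        raise ValueError("bandwidth must exceed 0.5 octaves in this form")
    kappa = math.log10(2)
    beta_prime = math.log10(2 * bandwidth_oct)
    log_peak = math.log10(peak_gain)
    log_s = log_peak - kappa * (
        (math.log10(freq) - math.log10(peak_freq)) / (beta_prime / 2)
    ) ** 2
    # Below the peak, sensitivity plateaus at log_peak - truncation
    if freq < peak_freq and log_s < log_peak - truncation:
        log_s = log_peak - truncation
    return 10 ** log_s


def drift_speed(spatial_freq, temporal_freq_hz=10.0):
    """Drift speed (deg/s) that keeps the Gabor at the given temporal frequency."""
    return temporal_freq_hz / spatial_freq
```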

Visual training in cortically-blind fields

Eight of the subacutes and all 14 patients with chronic cortically-induced blindness were assigned to train at home on the CDDI task (Fig. 3A); five of the subacute participants were chosen to remain untrained until the start of the chronic period. How subacutes were assigned to trained and untrained groups is detailed in the ‘Results’ section, because the rationale only becomes clear from our baseline findings.

Participants used custom MATLAB-based software on their personal computers and displays to train daily at home. They were supplied with chin/forehead rests and instructed to position them such that their eyes were 42 cm away from their displays during training. They performed 300 trials per training location per day, at least 5 days per week, and emailed their auto-generated data log files back to the laboratory for analysis weekly. Session thresholds were calculated by fitting a Weibull function with a threshold criterion of 75% correct performance. Direction range thresholds were converted to CDDI thresholds as described above. Once performance reached levels comparable to equivalent intact field locations, training moved 1° deeper into the blind field along the x-axis (Cartesian coordinate space). Although home-training was performed without an eye tracker, patients were instructed to fixate whenever a fixation spot was present and warned that inadequate fixation would prevent recovery. Once subacute participants reached the chronic period, they were brought back to Rochester and performance at all home-trained locations was verified in-lab with on-line fixation control using the Eyelink 1000 eye tracker (SR Research). Chronic participants’ performance post-training was similarly verified in lab with eye tracking.
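The progression rule for home training can be sketched as a per-session update of the training location. The source does not define "comparable" performance numerically, so the `tolerance` below is a hypothetical choice; "deeper" is assumed to mean further from the vertical meridian, that is, more negative x in a left blind hemifield and more positive x in a right one.

```python
def next_training_location(x_deg, y_deg, blind_side, blind_cddi, intact_cddi,
                           tolerance=5.0):
    """Advance 1 deg deeper into the blind field once performance recovers.

    CDDI thresholds are percentages (lower is better). Performance counts as
    'comparable' when the blind-field threshold is within `tolerance`
    percentage points of the intact-field threshold (hypothetical criterion).
    """
    if blind_cddi <= intact_cddi + tolerance:
        # Assumed: deeper means further from the vertical meridian along x
        step = -1.0 if blind_side == "left" else 1.0
        return x_deg + step, y_deg
    return x_deg, y_deg
```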

Statistical analyses

To evaluate differences in CDDI and FDD thresholds when three or more groups were compared, inter-group differences were tested with a one- or two-way ANOVA, followed by Tukey’s post hoc tests where appropriate. When only two groups were compared, a two-tailed Student’s t-test was performed. Repeated-measures statistics were used whenever appropriate. P < 0.05 was considered statistically significant.

Because of the adaptive nature of the quick contrast sensitivity function procedure, a bootstrap method (Efron and Tibshirani, 1994) was used to determine statistically significant changes to the contrast sensitivity function across experimental conditions and groups. Specifically, the quick contrast sensitivity function procedure generates an updated prior space for each of four contrast sensitivity function parameters on each successive trial. To generate a bootstrapped distribution of contrast sensitivity function parameters (and, by extension, contrast sensitivities at each spatial frequency), we performed the following procedure: for each experimental run, we generated 2000 contrast sensitivity functions by running the quick contrast sensitivity function procedure 2000 times using 100 trials randomly sampled with replacement from the data collected at that location. To compute P-values for comparisons of contrast sensitivity functions, we compiled difference distributions for the comparisons in question for each model parameter and for contrast sensitivities at each tested spatial frequency, with P-values determined by the proportion of samples that ‘crossed’ zero. To estimate a floor for a case of no visual sensitivity, we simulated 10 000 quick contrast sensitivity functions using random responses. In this simulation, the 97.5th percentile peak contrast sensitivity was 2.55. For actual patient data, any sample with peak sensitivity <2.55 was considered at chance level performance, and thus set to zero. This ensured that we used a conservative criterion for determining whether patients exhibited contrast sensitivity in their blind field. Then, using bootstrap samples, we obtained 95% confidence intervals (CIs) for contrast sensitivity function parameters and contrast sensitivities for individual spatial frequencies, as well as associated P-values.
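The bootstrap logic can be sketched in a few lines. For brevity, this sketch swaps in a simple estimator (proportion correct) in place of rerunning the full quick contrast sensitivity function procedure on each resample. It also reads "proportion of samples that crossed zero" as the smaller fraction of differences falling on either side of zero, which is one possible interpretation; the source does not specify one- or two-tailed testing. All function names are hypothetical.

```python
import random

CHANCE_PEAK_CS = 2.55  # 97.5th percentile from the random-response simulation


def apply_chance_floor(peak_cs):
    """Treat peak sensitivities below the simulated chance level as zero."""
    return 0.0 if peak_cs < CHANCE_PEAK_CS else peak_cs


def bootstrap(trials, estimator, n_boot=2000, rng=None):
    """Resample trials with replacement and re-estimate n_boot times."""
    rng = rng or random.Random()
    n = len(trials)
    return [estimator([rng.choice(trials) for _ in range(n)]) for _ in range(n_boot)]


def compare(boot_a, boot_b, ci=0.95):
    """P-value and percentile CI for the difference distribution A - B."""
    diffs = sorted(a - b for a, b in zip(boot_a, boot_b))
    n = len(diffs)
    # Smaller fraction of differences on either side of zero
    p = min(sum(d <= 0 for d in diffs), sum(d >= 0 for d in diffs)) / n
    lo_idx = int((1 - ci) / 2 * n)
    hi_idx = int((1 + ci) / 2 * n) - 1
    return p, (diffs[lo_idx], diffs[hi_idx])
```

In a full implementation, `estimator` would refit the contrast sensitivity function to each resampled set of 100 trials and return a parameter such as peak sensitivity, passed through `apply_chance_floor`.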

Data availability

All de-identified data are available from the authors upon request.

Results

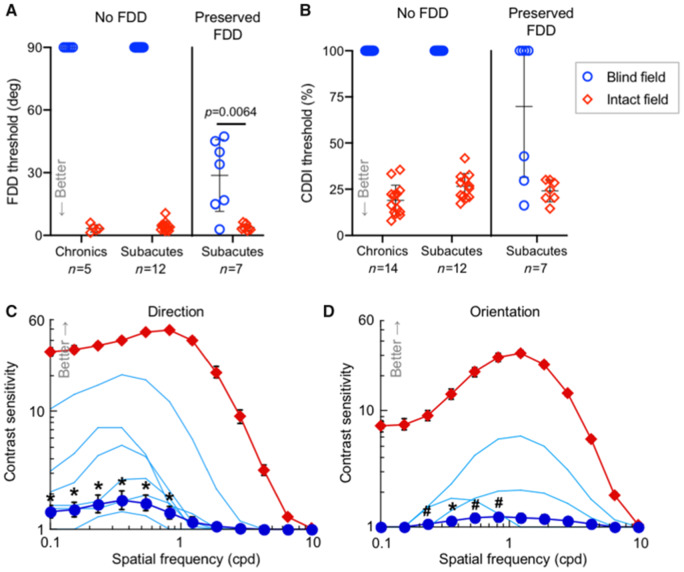

Preserved motion discriminations in subacute but not chronic blind fields

Consistent with prior observations, within their perimetrically-defined blind fields, our patients with chronic cortically-induced blindness failed to discriminate opposite motion directions (Das et al., 2014; Cavanaugh et al., 2015, 2019) or to effectively integrate across motion directions (Huxlin et al., 2009; Das et al., 2014; Cavanaugh and Huxlin, 2017) – tasks that elicited normal, threshold levels of performance at corresponding locations within their intact visual hemifields (Fig. 4A and B). Subjects verbally reported detecting that visual stimuli had been briefly presented within their blind fields, and while most could describe a sense of motion, they could not discriminate the global direction information contained in these stimuli above chance-level performance (50–60% correct in these 2AFC tasks).

Figure 4.

Preserved visual discrimination abilities in subacute but not chronic cortically-blind fields. (A) Plot of individual baseline FDD thresholds at blind-field training locations and corresponding, intact-field locations in patients with chronic and subacute cortically-induced blindness. Bars indicate means ± SD. Baseline FDD was unmeasurable in all chronics and two-thirds of subacutes, but measurable in one-third of subacutes. Patient CB15 is included in both subacute categories because of this hemianope’s ability to discriminate FDD in one blind-field quadrant but not the other, illustrating heterogeneity of perception across cortically-blind fields. As a group, subacutes’ baseline FDD thresholds were better than chronics’ [one-sample t-test versus mean of 90°, t(18) = 3.08, P = 0.0064]. However, subacutes with preserved FDD had worse thresholds than in their own intact hemifields [paired t-test, t(6) = 4.09, P = 0.0064]. (B) Plot of baseline CDDI at blind- and corresponding intact-field locations in patients with chronic and subacute cortically-induced blindness, stratified by preservation of blind-field FDD. Three subacutes with preserved blind-field FDD had measurable CDDI thresholds, a phenomenon never observed in chronics. (C) Baseline contrast sensitivity functions for direction discrimination in the blind and intact fields of subacutes (datapoints = mean ± SEM); light blue lines denote contrast sensitivity functions of participants with preserved blind-field sensitivity (significant in n = 5, P < 0.005; in the sixth subject, P = 0.16, see ‘Materials and methods’ section for bootstrap analysis). Group t-tests were performed at each spatial frequency: *P < 0.05, #P < 0.10. There were significant effects for peak contrast sensitivity [t(14) = 2.38, P = 0.016] and total area under the contrast sensitivity function [t(14) = 2.10, P = 0.027]. (D) Baseline contrast sensitivity functions for orientation discrimination in subacutes. Labelling conventions as in C. Statistics for peak contrast sensitivity and area under the contrast sensitivity function: t(13) = 1.5, P = 0.079 and t(13) = 1.62, P = 0.065, respectively.

In contrast, when performing the same tasks, just over a third of subacutes could still discriminate relatively small direction differences at multiple locations in their blind fields, albeit with FDD thresholds usually poorer than at equivalent locations in their own intact fields (29 ± 17° versus 4 ± 2°; Fig. 4A). Of these subacutes, 43% could also integrate motion direction in their blind field, with CDDI thresholds approaching their own intact-field levels (Fig. 4B). When performance exceeded chance, participants always reported subjective awareness of the stimulus and a clear sensation of motion (in a direction above or below the horizontal meridian for the FDD task, and left- or rightward for the CDDI task). In contrast, subacutes who performed at chance on the FDD task in their blind field also performed at chance when asked to integrate motion direction into a discriminable percept (CDDI task). These participants could usually detect appearance and disappearance of the visual stimuli but, like chronic patients, were unable to reliably identify the global direction of motion they contained.

Even more surprising than the preserved global motion discrimination measured with high-contrast random dot stimuli was the finding that all of the subacutes with preserved FDD thresholds also had preserved contrast sensitivity for opposite direction discrimination of small Gabor patches in their blind field (Fig. 4C). However, at the same blind-field locations, preserved contrast sensitivity for the orientation discrimination of static, non-flickering Gabors was only observed in three of these participants (Fig. 4D). As with the random dot stimuli, where participants had preserved contrast sensitivity, they reported sensation of the stimulus and its direction/orientation. To our knowledge, even partial preservation of luminance contrast sensitivity in perimetrically-defined blind fields has never been described in the literature on this patient population. That contrast sensitivity should be preserved is also somewhat surprising, since it was measured within perimetrically-defined blind fields, and Humphrey perimetry is, in essence, a measure of luminance contrast detection. However, there are key differences between Humphrey stimuli and those used to measure contrast sensitivity in the present experiments: the Humphrey’s spots of light are much smaller than the 5° diameter Gabor patches in our psychophysical tests, and Humphrey perimetry presents luminance increments in these small spots relative to a bright background. As such, the larger Gabors used to measure contrast sensitivity may have been more detectable by invoking spatial summation (Nakayama and Loomis, 1974; Allman et al., 1985; Tadin et al., 2003), which would be expected to improve perceptual performance.

Finally, that some patients with subacute cortically-induced blindness possess measurable contrast sensitivity functions in their blind fields stands in stark contrast to the lack of such functions in patients with chronic cortically-induced blindness. Over more than a decade of testing, we and others have consistently found luminance contrast sensitivity, prior to training interventions, to be unmeasurable in chronic patients (Hess and Pointer, 1989; Huxlin et al., 2009; Das et al., 2014).

In sum, we report here the discovery of preserved direction discrimination, direction integration abilities and even luminance contrast sensitivity (strongest for direction discrimination) in the perimetrically-defined blind fields of a significant proportion of subacute participants <3 months post-stroke. Given that measurable thresholds are never seen in the blind fields of untrained patients with chronic cortically-induced blindness, we posit that some subacute patients retain functionality of key visual circuits, which are then lost by the chronic period. This highlights the highly modular nature of visual processing, which allows even extensive brain damage to occur in some people without eradicating all visual function, at least initially. Why these functions were not preserved in all subacutes remains to be determined in what we hope will be numerous future studies of this hemianopic subpopulation.

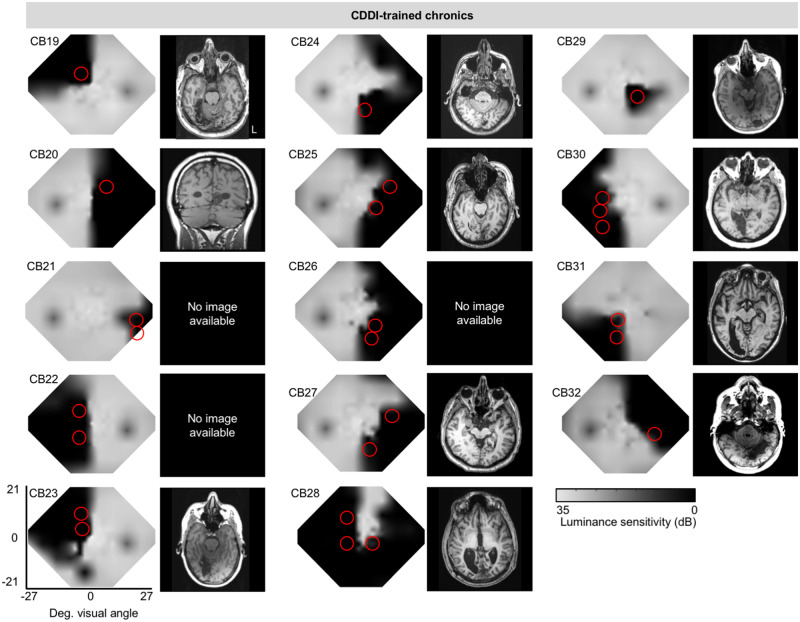

Effect of coarse direction discrimination and integration training

We next turned our attention to the as-yet unaddressed question of how subacute patients with cortically-induced blindness respond to visual training. To ensure a fairer comparison with patients with chronic cortically-induced blindness, who have no preserved discrimination abilities in their blind field at baseline (Huxlin et al., 2009; Das et al., 2014; Cavanaugh et al., 2015), we sub-selected subacutes with deficits in global motion perception in their blind field (Patients CB1–13; Fig. 1). In the order they were enrolled, these subacutes were alternately assigned to either CDDI training or no training until five individuals had been enrolled in each group. Subsequently-enrolled subacutes (n = 3) were directed into the training group, for a total of five untrained (Fig. 1, yellow circles) and eight trained patients with cortically-induced blindness (Fig. 1, red circles). Training continued until subacutes entered the chronic post-stroke period; trained subacutes thus returned for in-lab testing with eye-tracker-enforced, gaze-contingent stimulus presentation after 4.8 ± 1.1 months of training, at ∼7.0 ± 0.9 months post-stroke. Untrained subacutes were recalled when they reached 7.9 ± 3.7 months post-stroke. Subacute data were compared with previously-published data (Cavanaugh and Huxlin, 2017) from 14 CDDI-trained patients with chronic cortically-induced blindness (Table 1 and Fig. 2). Importantly, daily in-home training on the CDDI task spanned a comparable number of sessions in the subacute and chronic groups (subacutes: 125 ± 46 sessions; chronics: 129 ± 82 sessions).

Figure 2.

Baseline Humphrey visual field composite maps, structural (T1) MRI and training locations in chronic participants. Grey scale denoting Humphrey-derived visual sensitivity is shown under the right-most column. MRIs are shown according to radiographic convention, with the left brain hemisphere on the image right (L). Red circles = CDDI training locations. Data from these chronic subjects were previously published (Cavanaugh and Huxlin, 2017).

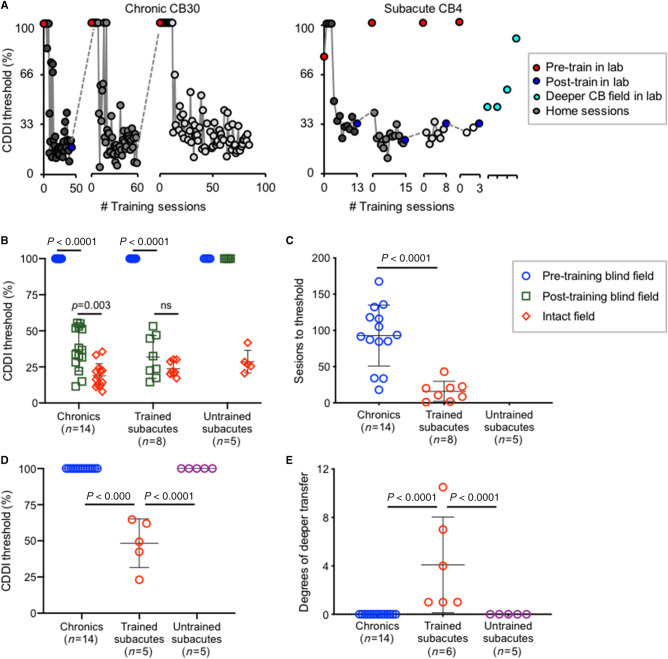

Post-training psychophysical tests revealed that training improved CDDI thresholds comparably across all trained participants, both subacute and chronic (Fig. 5A and B). However, subacutes recovered significantly faster than chronics, reaching normal, stable CDDI thresholds in only 16 ± 14 training sessions per blind-field location, compared to 93 ± 42 sessions in chronics (Fig. 5C). Additionally, patients with subacute cortically-induced blindness exhibited generalization of CDDI recovery to untrained blind-field locations (Fig. 5A, right panel, D and E), something never seen in patients with chronic cortically-induced blindness (Fig. 5A, left panel, D and E) (Huxlin et al., 2009; Das et al., 2014). By the end of training, subacute and chronic participants had regained global motion discrimination on average 4° and 0° deeper into the blind field than the deepest trained location, respectively (Fig. 5E).
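As a quick check on the session comparison, the t statistic reported for Fig. 5C can be recomputed from the group summaries given in the text. This is a minimal sketch that assumes the ± values are standard deviations across participants and that the groups are the 8 trained subacutes and 14 trained chronics described above (which yields the 20 degrees of freedom in the Fig. 5C caption); `pooled_t_from_summary` is a hypothetical helper, not the analysis code used in the study.

```python
import math


def pooled_t_from_summary(mean1: float, sd1: float, n1: int,
                          mean2: float, sd2: float, n2: int) -> tuple[float, int]:
    """Student's (equal-variance) two-sample t statistic from group summaries.

    Returns (t, degrees of freedom); t is positive when mean2 > mean1.
    """
    df = n1 + n2 - 2
    # Pooled variance weights each group's variance by its own degrees of freedom.
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean2 - mean1) / standard_error, df


# Sessions to reach normal CDDI thresholds (mean, SD) as reported in the text;
# group sizes assumed to be 8 trained subacutes and 14 trained chronics.
t, df = pooled_t_from_summary(16, 14, 8, 93, 42, 14)
print(f"t({df}) = {t:.2f}")  # t(20) = 4.98
print(f"ratio of means = {93 / 16:.1f}")  # ratio of means = 5.8
```

The ratio of mean session counts (about 5.8) is what underlies describing subacute recovery as roughly six times faster.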

Figure 5.

Trained subacutes recover direction integration faster and deeper than chronics. (A) Training data for representative patients with chronic and subacute cortically-induced blindness. (B) Plot of individual CDDI thresholds at training locations pre- and post-training. Bars indicate mean ± SD. Two-way repeated measures ANOVA for group (chronics, trained subacutes, untrained subacutes) across locations (pre-training blind field, post-training blind field, intact field) was significant: F(4,48) = 37.11, P < 0.0001. Post hoc Tukey’s multiple comparisons tests within group are shown on graph. (C) Plot of the number of training sessions to reach normal CDDI thresholds in the blind field. Bars indicate mean ± SD. Chronics required significantly more training sessions than trained subacutes: unpaired t-test t(20) = 4.98, P < 0.0001. (D) Plot of initial CDDI threshold at a location 1° deeper into the blind field than the trained/tested location. Bars indicate mean ± SD. Only trained subacutes had measurable thresholds deeper than the trained blind-field location. (E) Plot of the distance, in degrees of visual angle, by which the random dot stimulus could be moved deeper into the blind field than the last training/testing location while still yielding a measurable CDDI threshold. All trained subacutes had measurable CDDI thresholds deeper into the blind field, something never observed in chronics or untrained subacutes. Bars indicate mean ± SD. Two trained subacutes were not included because their recovery extended beyond the range over which we could measure depth in the blind field. One-way ANOVA across groups F(2,22) = 10.69, P < 0.001.

Another surprising outcome of this first experiment was that untrained subacutes exhibited no spontaneous recovery of CDDI thresholds in their blind field; they remained unable to integrate motion direction at all pretested blind-field locations (Fig. 5B), as well as at locations deeper inside their blind field (Fig. 5E).

Generalization to untrained tasks

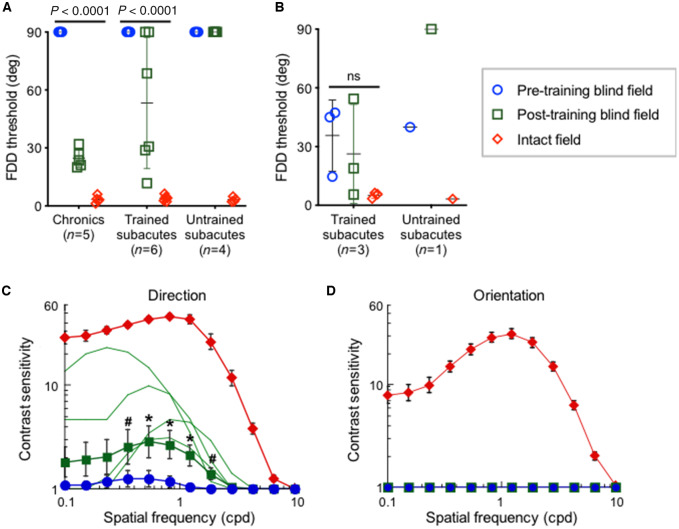

Fine direction discrimination

Subacutes selected for CDDI training fell into two categories: those with and those without preserved FDD thresholds. We therefore considered outcomes in these two subgroups separately. Among subacutes with no baseline preservation of FDD in the blind field, CDDI training transferred to and improved FDD thresholds in four of six participants (Fig. 6A). All trained chronics (who never show preserved FDD at baseline) also exhibited transfer of learning to FDD, and they attained better FDD thresholds than subacutes. Given that longer training tends to enhance learning transfer (Dosher and Lu, 2009), this better outcome in chronics could be related to subacutes spending less time training at each blind-field location because of their faster learning rate. Nonetheless, consistent with our prior studies (Das et al., 2014; Cavanaugh et al., 2015, 2019), neither subacutes nor chronics reached intact-field FDD thresholds after CDDI training. Notably, untrained subacutes exhibited no spontaneous recovery of FDD thresholds (Fig. 6A). Finally, in subacutes with preserved blind-field FDD thresholds at baseline, CDDI training maintained but did not further enhance FDD thresholds (Fig. 6B). Blind-field CDDI training in the subacute period may therefore have been critical for preserving existing FDD performance. Untrained subacute CB10 provided further evidence: CB10 could discriminate fine direction differences in the blind field at baseline, but by 6 months post-stroke, absent any training, this ability was lost and CB10 performed like a typical untrained chronic patient (Das et al., 2014; Cavanaugh et al., 2015).

Figure 6.

Subacute training on CDDI improves FDD thresholds and motion contrast sensitivity functions at trained, blind-field locations. (A) Plot of FDD thresholds in participants without baseline FDD, before and after CDDI training. Labelling conventions as in Fig. 4B. CDDI training improved FDD thresholds in most cases, whereas untrained subacutes never improved. A 3 (participant type) × 3 (visual field location) repeated measures ANOVA showed a main effect of participant [F(2,12) = 9.715, P = 0.0031], visual field location [F(1.007,12.09) = 168.6, P < 0.0001, Geisser-Greenhouse ε = 0.5036], and a significant interaction between the two [F(4,24) = 9.629, P < 0.0001]. Mean recovered FDD thresholds were better in chronic than subacute trained participants (Tukey’s multiple comparisons test: P < 0.01). (B) Plot of FDD thresholds in participants with preserved baseline FDD, before and after CDDI training. No enhancements in FDD thresholds were noted [one-way repeated measures ANOVA: F(2,8) = 3.81, P = 0.12]. When left untrained, FDD thresholds worsened to chance in the one subacute participant in this group. (C) Post-training contrast sensitivity functions for direction in the blind and intact fields of trained subacutes. Labelling conventions as in Fig. 3C except for light green lines denoting individual, post-training contrast sensitivity functions. CDDI training improved contrast sensitivity for motion direction across multiple spatial frequencies in four of seven subacutes (n = 4, P < 0.01, see ‘Materials and methods’ section for bootstrap analysis). Group t-tests were performed at each spatial frequency, with *P < 0.05, #P < 0.10. There were significant effects for peak contrast sensitivity [t(6) = 2.45, P = 0.025] and area under the contrast sensitivity function [t(6) = 2.28, P = 0.032]. (D) Post-training contrast sensitivity functions for orientation in subacute participants showing no improvements after CDDI training (P > 0.2). Labelling conventions as in C.
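For F tests with 2 numerator degrees of freedom, such as the between-group main effect in panel A, the p-value can be checked by hand, because the F survival function then has the closed form P(F > f) = (1 + 2f/d2)^(-d2/2). The sketch below is illustrative and the helper name is hypothetical; the shortcut does not apply to tests with other numerator degrees of freedom, such as the Geisser-Greenhouse-corrected location effect.

```python
def f_survival_df1_2(f_stat: float, df2: float) -> float:
    """Upper-tail p-value P(F > f_stat) for an F distribution with (2, df2) degrees of freedom.

    With 2 numerator degrees of freedom the survival function reduces to
    (1 + 2 * f / df2) ** (-df2 / 2), so no special-function library is needed.
    """
    if f_stat < 0 or df2 <= 0:
        raise ValueError("f_stat must be >= 0 and df2 > 0")
    return (1.0 + 2.0 * f_stat / df2) ** (-df2 / 2.0)


# Main effect of participant type reported in panel A: F(2,12) = 9.715.
print(f"P = {f_survival_df1_2(9.715, 12):.4f}")  # P = 0.0031
```

For arbitrary degrees of freedom, `scipy.stats.f.sf(f, dfn, dfd)` returns the same upper-tail probability.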

Luminance contrast sensitivity

CDDI training in the subacute period improved luminance contrast sensitivity for direction discrimination, but not for orientation discrimination of static (non-flickering) Gabors. Among subacutes selected for CDDI training, none had measurable baseline contrast sensitivity for static orientation discrimination, and only one had measurable baseline contrast sensitivity for direction discrimination. After CDDI training, four of seven participants tested achieved measurable motion contrast sensitivity functions (Fig. 6C), but orientation contrast sensitivity functions remained flat (Fig. 6D). Untrained subacutes failed to improve on either measure, with both contrast sensitivity functions remaining flat. In this study, improvement in motion contrast sensitivity thus occurred only with CDDI training.
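The Fig. 6C caption reports peak contrast sensitivity and area under the contrast sensitivity function, but this section does not say how these were computed. One common convention, assumed here rather than taken from the study, integrates log10 sensitivity over log10 spatial frequency with the trapezoidal rule and treats an unmeasurable threshold (100% contrast, i.e. sensitivity 1) as contributing zero. `log_csf_summary` is a hypothetical helper.

```python
import math
from typing import Sequence


def log_csf_summary(spatial_freqs: Sequence[float],
                    sensitivities: Sequence[float]) -> tuple[float, float]:
    """Return (peak log10 sensitivity, area under the log-log CSF).

    spatial_freqs: cycles per degree, strictly increasing.
    sensitivities: contrast sensitivity (1 / threshold contrast). Values <= 1
    (threshold at or above 100% contrast) are floored to log10(1) = 0.
    """
    if len(spatial_freqs) != len(sensitivities) or len(spatial_freqs) < 2:
        raise ValueError("need at least two matched (frequency, sensitivity) points")
    log_sf = [math.log10(f) for f in spatial_freqs]
    # Flooring at 0 means unmeasurable points add no area.
    log_cs = [max(math.log10(s), 0.0) for s in sensitivities]
    area = 0.0
    for i in range(1, len(log_sf)):
        width = log_sf[i] - log_sf[i - 1]
        if width <= 0:
            raise ValueError("spatial frequencies must be strictly increasing")
        # Trapezoid between neighbouring points in log-log coordinates.
        area += 0.5 * width * (log_cs[i] + log_cs[i - 1])
    return max(log_cs), area


print(log_csf_summary([1.0, 10.0], [10.0, 10.0]))  # (1.0, 1.0)
print(log_csf_summary([0.5, 1.0, 2.0], [1.0, 1.0, 1.0]))  # (0.0, 0.0)
```

A function that is flat at sensitivity 1, like the unmeasurable baseline functions described above, yields zero peak and zero area; under this convention, a measurable post-training function is one with positive area.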

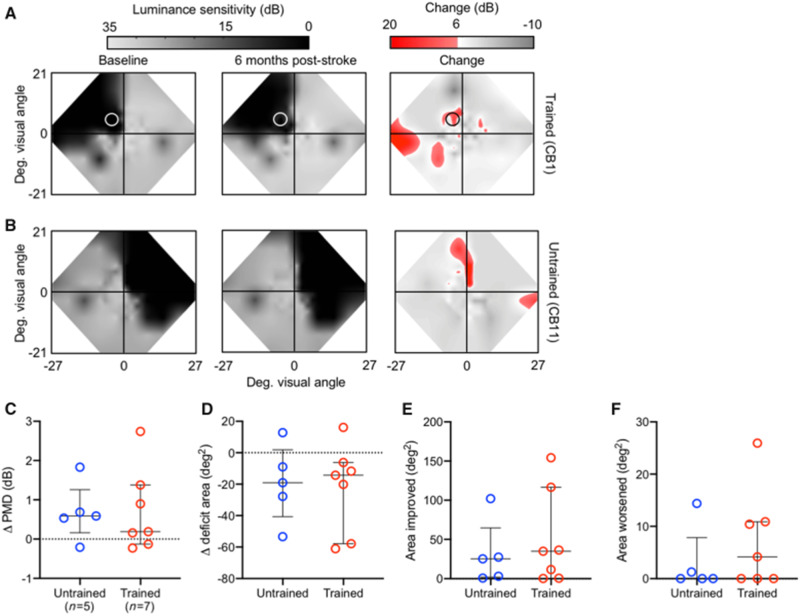

Humphrey perimetry

An unexpected finding in the present study was that CDDI-trained and untrained subacutes exhibited similar changes in Humphrey perimetry (Fig. 7A and B). No significant differences were observed between these two groups using four separate metrics: (i) perimetric mean deviation (PMD; Fig. 7C); (ii) area of the deficit encompassed by the 24-2 Humphrey (Fig. 7D); (iii) area of the 24-2 Humphrey that improved by >6 dB (Fig. 7E); and (iv) area of the 24-2 Humphrey that lost >6 dB of sensitivity (Fig. 7F). The absence of any additional, training-related improvement in Humphrey perimetry in trained subacutes is consistent with their lack of improvement in blind-field static contrast sensitivity, and illustrates a clear dissociation between training-dependent recovery of motion discriminations and spontaneous, training-independent improvements in luminance detection perimetry.
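Metrics (iii) and (iv) count visual field area whose sensitivity changed by more than 6 dB. The sketch below is a simplification that assumes each 24-2 test point stands for one 6° × 6° cell (the spacing of the 24-2 grid). The published method (Cavanaugh and Huxlin, 2017) may interpolate between points, which would be needed to resolve changes smaller than one cell, such as the ∼25 deg² reported in the Discussion. `change_areas` and the sample values are hypothetical.

```python
from typing import Sequence

# Assumption: each 24-2 test point represents one 6 x 6 degree cell of visual field.
CELL_AREA_DEG2 = 6.0 * 6.0


def change_areas(baseline_db: Sequence[float], followup_db: Sequence[float],
                 threshold_db: float = 6.0) -> tuple[float, float]:
    """Return (area improved, area worsened) in deg^2, counting changes strictly > threshold_db."""
    if len(baseline_db) != len(followup_db):
        raise ValueError("baseline and follow-up must cover the same test points")
    improved = sum(1 for b, f in zip(baseline_db, followup_db) if f - b > threshold_db)
    worsened = sum(1 for b, f in zip(baseline_db, followup_db) if b - f > threshold_db)
    return improved * CELL_AREA_DEG2, worsened * CELL_AREA_DEG2


# Hypothetical sensitivities (dB) at four matched test points.
print(change_areas([0, 10, 20, 30], [10, 10, 12, 30]))  # (36.0, 36.0)
```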

Figure 7.

Subacute CDDI training improves Humphrey perimetry similarly to spontaneous recovery. (A) Composite visual field maps of representative trained subacute participant (Patient CB1) at baseline and post-training, along with a map of the net change in visual sensitivity (red shading), with a threshold for change of 6 dB. Training location indicated by a white circle. (B) Composite visual field maps of representative untrained subacute participant (Patient CB11) at baseline and follow-up, along with a map of net change in visual sensitivity. (C) Plot of changes in the Humphrey-derived perimetric mean deviation (PMD) of individual patients with subacute cortically-induced blindness who were untrained versus CDDI-trained. The PMD is the overall difference in sensitivity between the tested and expected hill of vision for an age-corrected, normal population. Bars indicate means ± SD. No significant differences were observed between groups (independent Student’s t-test: P > 0.05). (D) Plot of change in visual deficit area in the same participants as in C, computed from Humphrey perimetry as previously described (Cavanaugh and Huxlin, 2017). No significant differences were observed between trained and untrained subacutes (independent Student’s t-test: P > 0.05). (E) Plot of the area of the Humphrey visual field that improves by >6 dB (Cavanaugh and Huxlin, 2017) in the same participants as in C and D. No significant differences were observed between trained and untrained subacutes (independent Student’s t-test: P > 0.05). (F) Plot of the area of the Humphrey visual field that worsens by >6 dB (Cavanaugh and Huxlin, 2017) in the same participants as in C–E. No significant differences were observed between trained and untrained subacutes (independent Student’s t-test: P > 0.05).

Discussion

The present study represents the first systematic assessment of visual discrimination abilities within perimetrically-defined, cortically-blinded fields in 18 subacute occipital stroke patients. It appears that—unlike patients with chronic cortically-induced blindness—subacute patients often retain global direction discrimination abilities, as well as luminance contrast sensitivity for direction. Moreover, the residual, conscious visual processing in perimetrically-blind, subacute visual fields disappears by the chronic period. That the preserved vision is consciously accessible to the patients was evident both because of their verbal reports, accurately describing the visual stimuli presented in their blind fields, and because their performance on the 2AFC tasks used here far exceeded chance levels or discrimination performance levels expected for blindsight (Sahraie et al., 2010a, 2013), despite a relatively short stimulus presentation (Weiskrantz et al., 1974) and, in some cases, varying stimulus contrast (Weiskrantz et al., 1995).
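The statement that performance far exceeded chance on the 2AFC tasks can be made concrete with a one-sided exact binomial test. This section does not state the criterion the study used, so the sketch below is illustrative only: it asks how likely a given number of correct responses in a fixed block of two-alternative trials would be if the participant were guessing (chance = 0.5). `p_above_chance` and the trial counts are hypothetical.

```python
from math import comb


def p_above_chance(correct: int, trials: int, chance: float = 0.5) -> float:
    """One-sided exact binomial test: P(X >= correct) if the observer were guessing."""
    if not 0 <= correct <= trials:
        raise ValueError("correct must lie between 0 and trials")
    # Sum the binomial probabilities of every outcome at least as good as observed.
    return sum(comb(trials, k) * chance ** k * (1 - chance) ** (trials - k)
               for k in range(correct, trials + 1))


# Hypothetical block of 100 two-alternative trials.
print(f"80/100 correct: P = {p_above_chance(80, 100):.1e}")
print(f"55/100 correct: P = {p_above_chance(55, 100):.2f}")
```

With 80 of 100 trials correct, the p-value is far below 0.001, whereas 55 of 100 cannot be distinguished from guessing at conventional significance levels.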

Substrates of preserved vision and mechanisms of vision restoration

In chronic cortically-induced blindness, it has been hypothesized that training-induced restoration of visual motion capacities could be mediated by ‘alternative’ visual pathways that convey visual information from the retina to the dorsal lateral geniculate nucleus (dLGN) of the thalamus, the superior colliculus and the pulvinar, and thence to the middle temporal area (MT) and other extrastriate areas (Sincich et al., 2004; Schmid et al., 2010; Ajina et al., 2015a; Ajina and Bridge, 2017). Indeed, Riddoch’s early observations (Riddoch, 1917; Zeki and Ffytche, 1998) showed that patients with V1 lesions can retain conscious sensation of motion, a capacity associated with strong activity in MT (Zeki and Ffytche, 1998). In chronic patients with blindsight, these pathways have been suggested to involve primarily koniocellular, rather than parvo- or magnocellular, projections because of their characteristic spatial frequency and contrast preferences (Ajina et al., 2015a; Ajina and Bridge, 2019). Animal models also suggest that this class of neurons and its retinal input may be more resistant to trans-synaptic retrograde degeneration after adult-onset V1 damage (Cowey et al., 1989; Yu et al., 2018).

In contrast, preserved vision in subacute cortically-blind fields could be mediated by spared regions of V1 (Fendrich et al., 1992; Wessinger et al., 1997; Das and Huxlin, 2010; Papanikolaou et al., 2014; Barbot et al., 2017), which may fall quiescent by the chronic period if they are not deliberately engaged. The progressive silencing of these networks may result from trans-synaptic retrograde degeneration coupled with a sort of ‘visual disuse atrophy’, whereby weak surviving connections are pruned and/or down-weighted over time as patients learn to ignore the less salient visual information within corresponding regions of their blind fields. A V1 substrate for this vision is consistent with the relative preservation of contrast sensitivity within perimetric blind fields, as the contrast sensitivity functions measured here more closely reflect the contrast responses of V1 than of MT neurons (Albrecht and Hamilton, 1982; Boynton et al., 1999; Niemeyer et al., 2017); MT neurons saturate at low levels of contrast (Tootell et al., 1995; Ajina et al., 2015b) and shift to higher spatial frequencies as contrast increases (Pawar et al., 2019). Here, we saw motion contrast sensitivity functions with best sensitivity at low, not high, spatial frequencies.
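The point about contrast saturation can be illustrated with the hyperbolic-ratio (Naka-Rushton) function commonly used to describe neuronal contrast response functions, R(c) = Rmax * c^n / (c^n + c50^n). The parameter values below are hypothetical, chosen only so that the "MT-like" curve has a lower semi-saturation contrast (c50) than the "V1-like" curve; they are not fits to the data cited above.

```python
def naka_rushton(contrast: float, r_max: float, c50: float, n: float) -> float:
    """Hyperbolic-ratio contrast response: r_max * c**n / (c**n + c50**n)."""
    if contrast < 0 or c50 <= 0:
        raise ValueError("contrast must be >= 0 and c50 > 0")
    cn = contrast ** n
    return r_max * cn / (cn + c50 ** n)


# Hypothetical parameters: both units share r_max = 1 and exponent n = 2,
# but the MT-like unit half-saturates at 5% contrast and the V1-like unit at 30%.
for c in (0.02, 0.05, 0.1, 0.3, 1.0):
    mt_like = naka_rushton(c, 1.0, 0.05, 2.0)
    v1_like = naka_rushton(c, 1.0, 0.3, 2.0)
    print(f"contrast {c:>4}: MT-like {mt_like:.2f}  V1-like {v1_like:.2f}")
```

With these illustrative values, the MT-like response already reaches 80% of its maximum at 10% contrast, while the V1-like response is still at 10% of its maximum and continues to grow across higher contrasts.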

Over a matter of months after stroke, without intervention, the substrates of this initially-preserved subacute vision appear to be lost. Chronic cortically-blind patients may thus differ from subacutes not only in how permissive the environment around their lesion is for plasticity (Rossini et al., 2003; Bavelier et al., 2010; Hensch and Bilimoria, 2012; Seitz and Donnan, 2015), but also in the availability of neuronal substrates to perform targeted visual discriminations. But while the natural course of cortically-induced blindness is for any residual vision in subacutes to disappear by the chronic phase after stroke, training appears both to prevent loss of remaining visual abilities and to strengthen them. Here, too, the course of vision training in subacutes (versus chronics) points towards greater involvement of residual V1 circuits in the former. Performance in subacute blind fields more closely resembles that in V1-intact controls in terms of the faster timescale of perceptual learning on the CDDI task (Levi et al., 2015), the ability to transfer learning to untrained locations (Larcombe et al., 2017), and the ability to transfer learning/recovery to untrained tasks, including motion contrast sensitivity (Ajina et al., 2015b; Levi et al., 2015). CDDI training also improved fine direction discrimination in subacutes around a motion axis orthogonal to the trained one (since the FDD task involved global motion discrimination along the vertical axis). Such transfer, both to untrained directional axes and to FDD thresholds, was previously reported for patients with chronic cortically-induced blindness who trained with CDDI (Das et al., 2014; Cavanaugh et al., 2015). However, the subacutes trained on CDDI in the present study did not exhibit transfer to FDD as consistently as chronics. As mentioned earlier, generalization of learning may have been suboptimal in the presently-tested subacutes because their faster learning rates meant less time spent training at each blind-field location (Dosher and Lu, 2009).

Dissociation between visual detection (perimetry) and discriminations

An important dissociation in the present results pertained to the visual behaviour of untrained participants. As predicted, the five untrained subacutes showed measurable spontaneous improvements in their Humphrey visual fields (∼0.5 dB increase in PMD, over ∼25 deg²), largely located around the borders of the blind fields, as previously reported for chronic patients (Cavanaugh and Huxlin, 2017). However, the same untrained subacutes did not recover visual discrimination abilities (CDDI, FDD or contrast sensitivity) at any of the pretested blind-field locations, even when these locations lay within perimetrically-defined border regions that exhibited spontaneously-improved Humphrey sensitivity (Fig. 6A). The dissociation between spontaneous recovery of luminance detection (i.e. Humphrey sensitivity) and of discrimination performance points towards major differences in the sensitivity of these forms of visual assessment, in the kinds of stimuli and tasks needed to induce each form of recovery, and in the neural mechanisms underlying them. It has been postulated that subacute visual field defects recover spontaneously as oedema and inflammation surrounding the lesion resolve, essentially unmasking networks that were dormant but not destroyed by the stroke (Rossini et al., 2003; Bavelier et al., 2010; Hensch and Bilimoria, 2012; Seitz and Donnan, 2015). However, the need for deliberate training to recover discrimination performance in subacute blind fields suggests that resolution of oedema and inflammation is not sufficient for more complex aspects of visual processing and perception to recover. Unfortunately, our pool of untrained patients was too small to ascertain whether those with more subjective blind-field motion sensation early after brain injury can anticipate greater spontaneous field recovery (Riddoch, 1917).

Those who trained on our motion discrimination task exhibited similar amounts of perimetric field recovery to those who remained untrained until 6–7 months post-stroke. Yet, with training, residual vision could be leveraged to restore discrimination abilities. This dissociation between improvements on discrimination tasks (not spontaneous) and on clinical perimetry (spontaneous) suggests a need to develop more comprehensive clinical tests that go beyond perimetry. As Riddoch (1917) himself demonstrated with his discovery of a dissociation between vision for moving and static stimuli in V1-damaged patients, more comprehensive clinical visual tests are key to assessing the true extent and depth of visual impairments after occipital strokes. Such tests would also be better suited than current methods to tracking patient outcomes, because they would capture more of the complexity of visual perception, a function that relies on both discrimination and detection across multiple modalities.

Research challenges and steps needed for clinical implementation

Because so little was known about visual properties in subacute patients with cortically-induced blindness, the present study was designed as a non-blinded laboratory experiment. Our early discovery, as we began testing these patients, that a significant proportion had preserved visual abilities in their blind field was entirely unexpected, and required that we alter study goals as the data emerged. As such, our work suffers from limitations associated with the lack of blinding of investigators and participants, and with only partial randomization of a subset of the subacute patients into trained and untrained groups. Using the knowledge gained, future studies can now incorporate a more balanced, less biased design, as well as a larger sample size, to evaluate and contrast the efficacy of rehabilitation in subacute and chronic stroke patients.

Another limitation of this work, and indeed a major challenge in all rehabilitation research, is the inherent heterogeneity of stroke patients. Stroke damage, even when restricted to a single vascular territory, is highly variable, as are patient characteristics such as age and comorbidities. We attempted to control for these factors as much as possible by limiting our study to isolated occipital strokes in patients with otherwise healthy neurological and ophthalmological backgrounds. Nonetheless, variability remained in lesion size, location of the visual field deficit, and extent of undamaged extrastriate visual cortical areas (Figs 1 and 2). Some of these variables may explain why only some, and not all, subacute participants had preserved visual discrimination abilities in their perimetrically-defined blind fields. Moreover, although all participants had verifiable V1 damage, and training locations were selected and monitored using standard criteria, variability in training outcomes may also relate to these individual differences. Other patient-specific factors could also contribute to differences in recovery. Demographic factors included in Table 1, such as age and gender, were not significantly associated with relative preservation of baseline visual discriminations, recovery of visual discriminations, or recovery of the Humphrey perimetric visual field. Our sample size precluded analysis of the effects of stroke etiology or acute post-stroke intervention [e.g. receipt of tissue plasminogen activator (tPA) or thrombectomy]; larger future studies may examine the impact of these and other clinical factors.

Another limitation of the present study is that we could not identify when, during their first 6 months after stroke, subacutes lost visual discrimination abilities, or whether these abilities were lost together or sequentially. Static orientation discrimination appears to have been affected before motion discriminations: only 3 of 18 subacute patients tested here had measurable contrast sensitivity for orientation discrimination, less than half of those who had preserved contrast sensitivity for moving stimuli. Although we did not measure other form discriminations (e.g. shape), we speculate that those abilities are also lost earlier than motion-related discriminations. An intriguing possibility is that staged, targeted treatment paradigms could be designed to retrain static form earlier than motion perception after V1 stroke. More complex training stimuli and tasks could also conceivably be designed to engage form, motion and other visual modalities simultaneously during rehabilitation.

Finally, as the field of stroke rehabilitation advances, efforts towards clinical implementation will be aided by development of additional metrics and biomarkers to further stratify patients’ recovery potential (Bernhardt et al., 2017b). Moreover, while the present study used a global motion training program because it was previously identified as highly effective in patients with chronic cortically-induced blindness (Huxlin et al., 2009; Das et al., 2014; Cavanaugh and Huxlin, 2017), we have yet to determine which training stimuli and paradigms are best suited to treat patients with subacute cortically-induced blindness; ongoing work is investigating this question, as well as the use of adjuvants such as non-invasive brain stimulation, which may further augment training (Herpich et al., 2019). Clinical translation will ultimately depend on all these determinations, as well as on the development of services to teach patients how to train properly (especially with accurate fixation), while automatically monitoring their progress and customizing their training locations as needed. Supervised sessions may further extend the clinical utility of training to patients with cognitive or attentional deficits who would otherwise be unable to engage with the program independently.

Conclusions

Subacute blind fields retain, for a short time, a surprisingly large and robust range of visual discrimination abilities. Absent intervention, these are all lost by 6 months post-stroke, possibly as a functional consequence of trans-synaptic retrograde degeneration and progressive ‘disuse’ of the blind field. Yet, through early training during the subacute post-stroke period, discrimination abilities can be both retained and harnessed to recover some of the already-lost visual perception in the blind field. Compared to chronic participants, subacutes trained faster and with greater spatial generalization of learning across the blind field; these are significant advantages for treating this patient group early rather than waiting until they become chronic. Fundamentally, our findings challenge the notion that cortically-blinded fields are a barren sensory domain, and posit that preserved visual abilities indicate rich sensory information processing that temporarily circumvents the permanently-damaged regions of cortex. Thus, after damage to the adult primary visual cortex, judicious, early visual training may be critical both to prevent degradation and to enhance restoration of preserved perceptual abilities. For the first time, we can now conclusively say that just as for sensorimotor stroke, ‘time is VISION’ after an occipital stroke.

Acknowledgements

The authors wish to thank Terrance Schaefer, who performed Humphrey visual field tests presented here. We also thank Drs Shobha Boghani, Steven Feldon, Alexander Hartmann, Ronald Plotnick, Zoe Williams (Flaum Eye Institute); Ania Busza and Bogachan Sahin (Department of Neurology) for patient referrals. Some of the data in chronic patients analysed here were collected by Anasuya Das and Matthew Cavanaugh as part of two prior studies.

Funding

The present study was funded by the National Institutes of Health (EY027314 and EY021209, T32 EY007125 to Center for Visual Science, T32 GM007356 to the Medical Scientist Training Program, TL1 TR002000 to the Translational Biomedical Sciences Program, a pilot grant from University of Rochester CTSA award UL1 TR002001 to E.L.S.), and by an unrestricted grant from the Research to Prevent Blindness (RPB) Foundation to the Flaum Eye Institute. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests

K.R.H. is co-inventor on US Patent No. 7,549,743 and has founder’s equity in Envision Solutions LLC, which has licensed this patent from the University of Rochester. The University of Rochester also possesses equity in Envision Solutions LLC. The remaining authors have no competing interests.

Glossary

- CDDI = coarse direction discrimination and integration
- FDD = fine direction discrimination
- V1 = primary visual cortex

References

- Ajina S, Bridge H. Blindsight and unconscious vision: what they teach us about the human visual system. Neuroscientist 2017; 23: 529–41.

- Ajina S, Bridge H. Subcortical pathways to extrastriate visual cortex underlie residual vision following bilateral damage to V1. Neuropsychologia 2019; 128: 140–9.

- Ajina S, Pestilli F, Rokem A, Kennard C, Bridge H. Human blindsight is mediated by an intact geniculo-extrastriate pathway. Elife 2015a; 4: 1–23.

- Ajina S, Rees G, Kennard C, Bridge H. Abnormal contrast responses in the extrastriate cortex of blindsight patients. J Neurosci 2015b; 35: 8201–13.

- Albrecht DG, Hamilton DB. Striate cortex of monkey and cat: contrast response function. J Neurophysiol 1982; 48: 217–37.

- Allman J, Miezin F, McGuinness E. Stimulus specific responses from beyond the classical receptive field: neurophysiological mechanisms for local-global comparisons in visual neurons. Annu Rev Neurosci 1985; 8: 407–30.

- Barbot A, Melnick M, Cavanaugh MR, Das A, Merriam E, Heeger D, et al. Pre-training cortical activity preserved after V1 damage predicts sites of training-induced visual recovery. J Vis 2017; 17: 17.

- Bavelier D, Levi DM, Li RW, Dan Y, Hensch TK. Removing brakes on adult brain plasticity: from molecular to behavioral interventions. J Neurosci 2010; 30: 14964–71.

- Bernhardt J, Godecke E, Johnson L, Langhorne P. Early rehabilitation after stroke. Curr Opin Neurol 2017a; 30: 48–54.

- Bernhardt J, Hayward KS, Kwakkel G, Ward NS, Wolf SL, Borschmann K, et al. Agreed definitions and a shared vision for new standards in stroke recovery research: the stroke recovery and rehabilitation roundtable taskforce. Neurorehabil Neural Repair 2017b; 31: 793–9.

- Boynton GM, Demb JB, Glover GH, Heeger DJ.. Neuronal basis of contrast discrimination. Vision Res 1999; 39: 257–69. [DOI] [PubMed] [Google Scholar]

- Cavanaugh MR, Barbot A, Carrasco M, Huxlin KR. Feature-based attention potentiates recovery of fine direction discrimination in cortically blind patients. Neuropsychologia 2019; 128: 315–24.

- Cavanaugh MR, Huxlin KR. Visual discrimination training improves Humphrey perimetry in chronic cortically induced blindness. Neurology 2017; 88: 1–9.

- Cavanaugh MR, Zhang R, Melnick MD, Roberts M, Carrasco M, Huxlin KR. Visual recovery in cortical blindness is limited by high internal noise. J Vis 2015; 15: 1–18.

- Çelebisoy M, Çelebisoy N, Bayam E, Köse T. Recovery of visual-field defects after occipital lobe infarction: a perimetric study. J Neurol Neurosurg Psychiatry 2011; 82: 695–702.

- Cowey A, Stoerig P, Perry VH. Transneuronal retrograde degeneration of retinal ganglion cells after damage to striate cortex in macaque monkeys: selective loss of Pβ cells. Neuroscience 1989; 29: 65–80.

- Das A, Huxlin KR. New approaches to visual rehabilitation for cortical blindness: outcomes and putative mechanisms. Neuroscientist 2010; 16: 374–87.

- Das A, Tadin D, Huxlin KR. Beyond blindsight: properties of visual relearning in cortically blind fields. J Neurosci 2014; 34: 11652–64.

- Dosher BA, Lu ZL. Hebbian reweighting on stable representations in perceptual learning. Learn Percept 2009; 1: 37–58.

- Efron B, Tibshirani RJ. An introduction to the bootstrap. Boca Raton, Florida: CRC Press; 1994.

- Fendrich R, Wessinger CM, Gazzaniga MS. Residual vision in a scotoma: implications for blindsight. Science 1992; 258: 1489–91.

- Gray C, French J, Bates D, Cartlidge N, Venables G, James O. Recovery of visual fields in acute stroke: homonymous hemianopia associated with adverse prognosis. Age Ageing 1989; 18: 419–21.

- Hensch TK, Bilimoria PM. Re-opening windows: manipulating critical periods for brain development. Cerebrum 2012; 2012: 11.

- Herpich F, Melnick MD, Agosta S, Huxlin KR, Tadin D, Battelli L. Boosting learning efficacy with noninvasive brain stimulation in intact and brain-damaged humans. J Neurosci 2019; 39: 5551–61.

- Hess RF, Pointer J. Spatial and temporal contrast sensitivity in hemianopia. Brain 1989; 112: 871–94.

- Horton J. Disappointing results from Nova Vision’s visual restoration therapy. Br J Ophthalmol 2005a; 89: 1–2.

- Horton J. Vision restoration therapy: confounded by eye movements. Br J Ophthalmol 2005b; 89: 792–4.

- Hou F, Huang CB, Lesmes L, Feng LX, Tao L, Zhou YF, et al. qCSF in clinical application: efficient characterization and classification of contrast sensitivity functions in amblyopia. Invest Ophthalmol Vis Sci 2010; 51: 5365–77.

- Huxlin KR, Martin T, Kelly K, Riley M, Friedman DI, Burgin WS, et al. Perceptual relearning of complex visual motion after V1 damage in humans. J Neurosci 2009; 29: 3981–91.

- Krakauer JW. Motor learning: its relevance to stroke recovery and neurorehabilitation. Curr Opin Neurol 2006; 19: 84–90.

- Kwakkel G, Kollen BJ, Wagenaar RC. Long term effects of intensity of upper and lower limb training after stroke: a randomised trial. J Neurol Neurosurg Psychiatry 2002; 72: 473–9.

- Larcombe SJ, Kennard C, Bridge H. Time course influences transfer of visual perceptual learning across spatial location. Vision Res 2017; 135: 26–33.

- Lesmes LA, Lu Z-L, Baek J, Albright TD. Bayesian adaptive estimation of the contrast sensitivity function: the quick CSF method. J Vis 2010; 10: 1–21.

- Levi A, Shaked D, Tadin D, Huxlin KR. Is improved contrast sensitivity a natural consequence of visual training? J Vis 2015; 14: 1158.

- Melnick MD, Tadin D, Huxlin KR. Relearning to see in cortical blindness. Neuroscientist 2016; 22: 199–212.

- Nakayama K, Loomis J. Optical velocity patterns, velocity sensitive neurons, and space perception: a hypothesis. Perception 1974; 3: 63–80.

- Niemeyer JE, Paradiso MA. Contrast sensitivity, V1 neural activity, and natural vision. J Neurophysiol 2017; 117: 492–508.

- Papanikolaou A, Keliris G, Papageorgiou T, Shao Y, Krapp E, Papageorgiou E, et al. Population receptive field analysis of the primary visual cortex complements perimetry in patients with homonymous visual field defects. Proc Natl Acad Sci U S A 2014; 111: E1656–65.

- Pawar AS, Gepshtein S, Savel’ev S, Albright TD. Mechanisms of spatiotemporal selectivity in cortical area MT. Neuron 2019; 101: 514–27.e2.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spat Vis 1997; 10: 437–42.

- Pollock A, Hazelton C, Rowe FJ, Jonuscheit S, Kernohan A, Angilley J, et al. Interventions for visual field defects in people with stroke. Cochrane Database Syst Rev 2019; 5: CD008388.

- Raninen A, Vanni S, Hyvarinen L, Nasanen R. Temporal sensitivity in a hemianopic visual field can be improved by long-term training using flicker stimulation. J Neurol Neurosurg Psychiatry 2007; 78: 66–73.

- Reinhard J, Schreiber A, Schiefer U, Kasten E, Sabel B, Kenkel S, et al. Does visual restitution training change absolute homonymous visual field defects? A fundus controlled study. Br J Ophthalmol 2005; 89: 30–5.

- Riddoch G. On the relative perceptions of movement and a stationary object in certain visual disturbances due to occipital injuries. Proc R Soc Med 1917; 10: 13–34.

- Rossini PM, Calautti C, Pauri F, Baron J-C. Post-stroke plastic reorganisation in the adult brain. Lancet Neurol 2003; 2: 493–502.

- Sahraie A, Hibbard PB, Trevethan CT, Ritchie KL, Weiskrantz L. Consciousness of the first order in blindsight. Proc Natl Acad Sci U S A 2010; 107: 21217–22.

- Sahraie A, MacLeod MJ, Trevethan CT, Robson SE, Olson JA, Callaghan P, et al. Improved detection following neuro-eye therapy in patients with post-geniculate brain damage. Exp Brain Res 2010; 206: 25–34.

- Sahraie A, Trevethan CT, MacLeod MJ, Murray AD, Olson JA, Weiskrantz L. Increased sensitivity after repeated stimulation of residual spatial channels in blindsight. Proc Natl Acad Sci U S A 2006; 103: 14971–6.

- Sahraie A, Trevethan CT, MacLeod MJ, Weiskrantz L, Hunt AR. The continuum of detection and awareness of visual stimuli within the blindfield: from blindsight to the sighted-sight. Invest Ophthalmol Vis Sci 2013; 54: 3579–85.

- Schmid MC, Mrowka SW, Turchi J, Saunders RC, Wilke M, Peters AJ, et al. Blindsight depends on the lateral geniculate nucleus. Nature 2010; 466: 373–7.

- Seitz RJ, Donnan GA. Recovery potential after acute stroke. Front Neurol 2015; 6: 1–13.

- Shin H-Y, Kim SH, Lee MY, Kim SY, Kee YC. Late emergence of macular sparing in a stroke patient. Medicine (Baltimore) 2017; 96: e7567.

- Sincich LC, Park KF, Wohlgemuth MJ, Horton JC. Bypassing V1: a direct geniculate input to area MT. Nat Neurosci 2004; 7: 1123–8.

- Social Security Administration. Medical/Professional Relations. Disability Evaluation Under Social Security: 2.00 Special Senses and Speech - Adult. https://www.ssa.gov/disability/professionals/bluebook/2.00-SpecialSensesandSpeech-Adult.htm (10 January 2019, date last accessed).

- Spolidoro M, Sale A, Berardi N, Maffei L. Plasticity in the adult brain: lessons from the visual system. Exp Brain Res 2009; 192: 335–41.

- Tadin D, Lappin JS, Gilroy LA, Blake R. Perceptual consequences of centre-surround antagonism in visual motion processing. Nature 2003; 424: 312–5.

- Tiel K, Kolmel H. Patterns of recovery from homonymous hemianopia subsequent to infarction in the distribution of the posterior cerebral artery. J Neuro-Ophthalmol 1991; 11: 33–9.

- Tootell RB, Reppas JB, Kwong KK, Malach R, Born RT, Brady TJ, et al. Functional analysis of human MT and related visual cortical areas using magnetic resonance imaging. J Neurosci 1995; 15: 3215–30.

- Townend BS, Sturm JW, Petsoglou C, O’Leary B, Whyte S, Crimmins D. Perimetric homonymous visual field loss post-stroke. J Clin Neurosci 2007; 14: 754–6.

- Weiskrantz L, Barbur JL, Sahraie A. Parameters affecting conscious versus unconscious visual discrimination with damage to the visual cortex (V1). Proc Natl Acad Sci U S A 1995; 92: 6122–6.

- Weiskrantz L, Warrington EK, Sanders M, Marshall J. Visual capacity in the hemianopic field following a restricted occipital ablation the relationship between attention and visual experience. Brain 1974; 97: 709–28.

- Wessinger CM, Fendrich R, Gazzaniga MS. Islands of residual vision in hemianopic patients. J Cogn Neurosci 1997; 9: 203–21.

- Winstein CJ, Stein J, Arena R, Bates B, Cherney LR, Cramer SC, et al. Guidelines for adult stroke rehabilitation and recovery: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke 2016; 47: e98–e169.

- Yu H-H, Atapour N, Chaplin TA, Worthy KH, Rosa MG. Robust visual responses and normal retinotopy in primate lateral geniculate nucleus following long-term lesions of striate cortex. J Neurosci 2018; 38: 3955–70.

- Zeki S, Ffytche DH. The Riddoch syndrome: insights into the neurobiology of conscious vision. Brain 1998; 121: 25–45.

- Zhang X, Kedar S, Lynn MJ, Newman NJ, Biousse V. Natural history of homonymous hemianopia. Neurology 2006; 66: 901–5.

- Zihl J. Rehabilitation of visual disorders after brain injury. 2nd edn. London: Psychology Press; 2010.


Data Availability Statement

All de-identified data are available from the authors upon request.