Abstract

The perception of infant emotionality, one aspect of temperament, starts to form in infancy, yet the underlying mechanisms of how infant emotionality affects adult neural dynamics remain unclear. We used a social reward task with probabilistic visual and auditory feedback (infant laughter or crying) to train 47 nulliparous women to perceive the emotional style of six different infants. Using functional neuroimaging, we subsequently measured brain activity while participants were tested on the learned emotionality of the six infants. We characterized the elicited patterns of dynamic functional brain connectivity using Leading Eigenvector Dynamics Analysis and found significant activity in a brain network linking the orbitofrontal cortex with the amygdala and hippocampus, where the probability of occurrence significantly correlated with the valence of the learned infant emotional disposition. In other words, seeing infants with neutral face expressions after having interacted and learned their various degrees of positive and negative emotional dispositions proportionally increased the activity in a brain network previously shown to be involved in pleasure, emotion, and memory. These findings provide novel neuroimaging insights into how the perception of happy versus sad infant emotionality shapes adult brain networks.

Keywords: emotion, infant emotionality, infant temperament, network dynamics, parent–infant interaction

Introduction

“The smile is the shortest distance between two persons”.

-Victor Borge.

An individual’s temperament refers to individual differences in several biobehavioral domains, spanning activity, emotionality, attention, and self-regulation (Rothbart and Bates 2006; Shiner et al. 2012; Nolvi et al. 2016). It is not a trait itself, but rather a rubric for a group of related traits (Goldsmith et al. 1987). The characteristics that comprise temperament are thought to be relatively stable over time and consistent across situations (Sanson et al. 2004), but they also develop in interactions with the social environment (Lee and Bates 1985). Emotionality is one aspect of infant temperament, which is often measured on a scale ranging from clear fussing and crying, to neutral, to predominantly smiles and laughter (Pauli-Pott et al. 2004). In order to have optimal and adaptive social behavior, we utilize knowledge from previous experiences of individuals, such as emotionality, to make predictions about the future and minimize the cost of surprise (Friston et al. 2006; Brown and Brüne 2012). Therefore, learning about an individual’s predominant emotional dispositions is a key part of human social interaction, in particular parent–infant interaction (Stark et al. 2019).

Infant emotionality has a measurable effect upon early mother–infant bonding. While positive infant emotionality (measured by infant smiling or laughter) relates to better mother–infant bonding, negative infant emotionality (measured by infant distress) relates to lower quality of bonding, while controlling for maternal symptoms of both depression and anxiety (Nolvi et al. 2016). Infant emotionality may also influence the way in which parents respond to their infant. For instance, irritable children who cry frequently may elicit feelings of irritation in parents and subsequent withdrawal of contact (Putnam et al. 2002). One study in the Netherlands reported that 5.6% of parents in their sample recounted smothering, slapping, or shaking their baby due to crying, particularly when they judged the crying to be “excessive” (Reijneveld et al. 2004). Positive or ‘cute’ temperamental factors such as smiling or babbling may conversely elicit interaction and proximity (Kringelbach et al. 2016).

Infants attract our attention (Kringelbach et al. 2016). The unique and instantly recognizable facial configuration of infants is pleasing and rewarding, and an instinctive reaction of adults upon seeing an infant is to smile (Hildebrandt and Fitzgerald 1978). The infant face has a measurable impact upon our perceptions and behavior. Adults prefer infant faces to adult faces (Brosch et al. 2007; Parsons, Young, Kumari, et al. 2011) and infant cues spur us to action—both men and women will expend extra effort to look at cute infant faces for longer (Parsons, Young, Kumari, et al. 2011; Hahn et al. 2013). Even seeing an infant face briefly before a simple motor task promotes faster reaction times and more sustained engagement with the task (Proverbio et al. 2011). Infant visual cues therefore seem to be one of the most basic but powerful forces shaping our perceptions and behavior (Kringelbach et al. 2016). Importantly, this behavioral impact of the infant face must be linked to changes in brain activity and in fact the infant face has been shown to elicit brain activity on a very fast timescale (<130 ms) in a network including the orbitofrontal cortex (Kringelbach et al. 2008; Parsons et al. 2013; Young et al. 2016), which may mobilize the perceiver to ready themselves for providing care.

Still, our perception of cuteness and subsequent behavior is dynamic and is strongly influenced by context, such as previous interactions mediated by valenced social signals including smiles, laughter, distress, and crying. Of all human relationships, the bond between caregiver and child is arguably the strongest. For caregivers, learning about their infant’s emotional state helps them to predict how the infant approaches and reacts to the world. Some infants may smile, laugh, and babble contentedly more frequently than others, indicating a positive disposition. On the other hand, all infants cry to signal need, but infants differ from each other in how frequently and intensely they cry. Infants with a temperament characterized by negative disposition cry more often and tend to react to stressors with a high degree of emotionality, including anger, irritability, fear, or sadness (Rothbart et al. 1994).

We were interested in measuring the underlying brain networks for learning of infant emotionality and used our probabilistic social reward task, which allows participants to learn that infants have different emotional dispositions (through varying levels of probabilistic positive and negative feedback) (Parsons, Young, Bhandari, et al. 2014; Parsons, Young, Craske, et al. 2014). In the learning phase, participants learn over time, through trial and error, that a given infant is more or less likely to smile and laugh. We have shown that this can significantly shift the perception of cuteness and motivation to view an infant, so that those infants with more positive emotionality are perceived as ‘cuter’ than before the task (Parsons et al. 2014). This demonstrates that the perception of the emotionality dimension of temperament can be changed through a simple behavioral task that shifts the intrinsic reward value of infants.

Here, we investigated the brain networks underlying learning of infant emotional dispositions. In particular, we were interested in capturing the specific functional network (FN) involved in the perception of the learned infant emotional disposition following the successive presentation, inside the MRI scanner, of pictures of infants with neutral facial expressions, whose emotional disposition was previously learned. In order to achieve this, we used a recent neuroimaging analysis method, the Leading Eigenvector Dynamics Analysis (LEiDA; Cabral et al. 2017; Figueroa et al. 2019; Lord et al. 2019), which allows us to detect, at a single-TR (repetition time) resolution, the occurrence of FN from functional MRI (fMRI) data. In this approach, FNs are defined as recurrent BOLD phase-locking patterns, which can be captured with low-dimensionality by considering only the relative phase of BOLD signals (i.e., how all BOLD phases project into their leading eigenvector at each discrete time point). Previous implementations of the LEiDA method have revealed that the probabilities of occurrence of different FN (and their corresponding switching profiles) can show significant differences between participant groups, but these measures were computed over entire resting-state fMRI sessions (Cabral et al. 2017; Figueroa et al. 2019).

Here, for the first time, we make use of the high temporal resolution of LEiDA and apply it to a task paradigm, in order to evaluate whether the occurrence of specific FNs at a precise time after the stimulus relates to the learned infant emotionality. This advanced method allows for an unbiased way to investigate learning of infant emotional dispositions and, in particular, to identify the brain networks linked to infant emotionality along a positive–negative (happy versus sad) gradient.

Materials and Methods

Participants

We analyzed neuroimaging data from 47 female participants included in our previous study (Riem et al. 2017) (mean age 19.62 years old, SD = 2.12, all undergraduate students from the Department of Child and Family Studies, Leiden University, >95% born in The Netherlands). None of the participants had children. All participants were screened for MRI contraindications, childhood experiences, psychiatric, or neurological disorders, problems with hearing, pregnancy, and alcohol and drug abuse. The participants gave written informed consent, and permission for the study was obtained from the Leiden University Medical Centre Ethics Committee and from the Leiden Institute for Brain and Cognition Ethics Committee.

Procedure

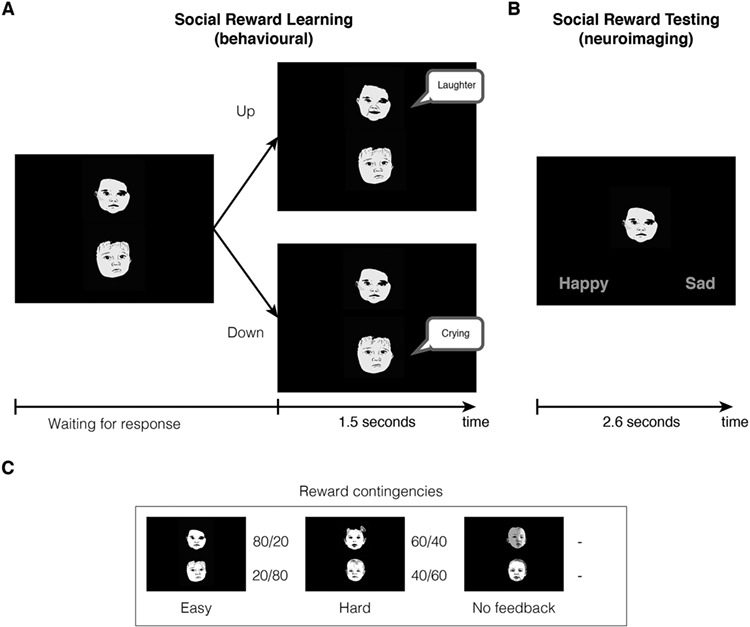

The elements of the study used in this analysis consisted of the learning and test phase of a variation of the original probabilistic social reward task (Parsons et al. 2014) shown in Figure 1 (Riem et al. 2017). The 47 participants came to Leiden University Medical Center for the experiment.

Figure 1.

Overview of the social reward task. (A) Participants were trained outside of the scanner to associate six different infant faces with different emotional dispositions. They were presented with two infant faces and had to choose the top face (pressing “up”) or the bottom face (pressing “down”). They were then exposed to feedback for the chosen face, either positive (smile and laughter) or negative (sad expression and crying). Bottom panel shows the different contingencies for each of the six faces: 80% happy, 20% happy (the easy pair), 60% happy, and 40% happy (the hard pair), and two with no feedback. (B) The testing phase was then administered inside the scanner, where participants stated the predominant emotional disposition of each face, happy or sad (Riem et al. 2017). (C) Illustration of each of the reward contingencies for the six face stimuli. Please note that the faces shown in the figures are not the ones used in the experiment but rather hand drawings of nonexistent infant faces to depict the learning and test phases of the probabilistic social reward task.

Learning Phase

First, participants were trained to learn the emotional dispositions of the infants in the social reward task (Parsons et al. 2014), which was constructed using previous widely used learning paradigms (Kringelbach and Rolls 2003; Frank et al. 2004). There were six different infants that varied in their probability of being happy or sad. Faces were presented in pairs. The easy-to-learn pair consisted of a happy infant, which laughed in 80% of trials and cried in the remaining 20%, presented together with a sad infant that laughed in 20% of trials and cried in the remaining 80%. In the difficult-to-learn pair, the happy infant laughed 60% of the time while the sad infant laughed only 40% of the time. There was also a neutral pair where no feedback was given, which participants were told to expect.

The learning phase consisted of two blocks of 60 trials per participant, with each pair of faces being presented 40 times in total (20 times per block). Trials were randomly ordered in each session, as was the order of the blocks. The emotional disposition of the babies (happy, sad, or neutral) was also randomized between participants.
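The block structure and feedback contingencies described above can be illustrated with a short simulation. This is a minimal sketch and not the E-Prime implementation used in the study: the names `PAIRS`, `make_block`, and `draw_feedback` are hypothetical, and the fixed random seed is only there to make the example reproducible.

```python
import random

# Probability of happy feedback for the (happier, sadder) infant in each pair.
# None marks the neutral pair, for which no feedback was given.
PAIRS = {
    "easy": (0.8, 0.2),
    "hard": (0.6, 0.4),
    "neutral": (None, None),
}

def make_block(rng, reps_per_pair=20):
    """Return one randomly ordered block: each pair shown reps_per_pair times."""
    trials = [name for name in PAIRS for _ in range(reps_per_pair)]
    rng.shuffle(trials)
    return trials

def draw_feedback(rng, p_happy):
    """Sample the feedback shown after a choice (hypothetical helper)."""
    if p_happy is None:
        return "none"
    return "happy" if rng.random() < p_happy else "sad"

rng = random.Random(0)  # assumed seed, for reproducibility only
block = make_block(rng)
first_pair = block[0]
p_chosen = PAIRS[first_pair][0]  # suppose the participant picks the happier infant
feedback = draw_feedback(rng, p_chosen)
```

Two such blocks give 120 learning trials, with each pair presented 40 times in total.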

Participants were presented with one pair of babies at a time, both showing a neutral emotional expression (see Fig. 1). They selected the ‘up’ key or the ‘down’ key on a keyboard to choose one of the two baby faces (the upper neutral face or the lower neutral face) and this selection prompted feedback on the selected baby’s emotional disposition. On pressing the key, visual feedback for the selected face was presented immediately for 1.5 s accompanied by a 1.5 s vocalization. In the happy condition, they would see the baby smiling and they would hear a happy vocalization. In the sad condition, participants would see a sad facial expression and hear a baby cry. There was a 500 ms gap between the end of the feedback and the next trial beginning, during which a red fixation cross was presented in the center of the screen.

Participants were instructed to discover the emotional disposition of the infant by listening to the vocalizations and viewing the infant’s facial expressions. By means of repeated trials, they could infer how often the baby cried or laughed and decide which one was the happier or the sadder of the two. Participants were told for one block, “In each pair of faces, there is one happy and one sad baby. Like in real life, the happy baby will not always be happy and the sad baby will not always be sad. In each set, your task is to find the happiest baby, the one who smiles most often, and continue to always select this baby even if this baby may sometimes appear sad.” In the other counterbalanced block, participants were instructed to find the saddest baby.

Testing Phase

The second stage of the experiment was the fMRI procedure, where participant learning of the infant emotionality was tested. Participants were briefed on the fMRI procedure and paradigm. It has previously been established that the participants can discriminate between the six infant faces with high accuracy (Parsons et al. 2014; Riem et al. 2017). While being scanned, participants were presented with the six infant faces, all of which had neutral facial expressions. Each neutral infant face was presented in the center of the screen, accompanied by the words ‘happy’ and ‘sad.’ Participants were tasked with indicating whether they believed the baby to be happy or sad, based upon the previous training phase, using their right hand to button press. Each face was presented 20 times, for up to 2.6 s, in random order (120 presentations in total). The button press terminated the trial and continued to the next trial, so the task was self-paced. Interstimulus intervals were jittered and calculated using Optseq (https://surfer.nmr.mgh.harvard.edu/optseq/). All tasks were programmed and performed using E-Prime software.

Stimuli

All infant facial images and vocalizations were the same as those used in Parsons et al. (2014) and Bhandari et al. (2014). Each of the six babies was aged 3–12 months old, and had a corresponding image for smiling, crying, and neutral conditions. An independent sample of adult females (n = 40) was asked to rate the faces from a larger set of 13 stimuli (Kringelbach et al. 2008) as “male,” “female,” or “cannot tell.” The results were then used to select six faces that represented two perceived as female, two as male, and two with ambiguous ratings (Parsons et al. 2014). All images were in grayscale, and were equally sized (300 × 300 pixels), as well as being matched for luminosity.

There were 12 vocalizations: 6 of crying infants, and 6 of laughing infants. Adults unambiguously categorized these as such (Young et al. 2012), and they were taken from a larger database of sounds, the Oxford Vocal (OxVoc) Sounds Database, which is a validated set of nonacted affective sounds from human infants, adults, and domestic animals (Parsons, Young, Craske, et al. 2014; Young et al. 2017). All vocalizations were 1.5 s long, free from background noise, and matched for the characteristics of the sounds. Headphones were used to present the vocalizations to participants during the training phase of the social reward task.

Data acquisition with fMRI

All scanning was performed with a standard whole-head coil on a 3-T Philips Achieva TX MRI system (Philips Medical Systems, Best, The Netherlands) in the Leiden University Medical Center. During fMRI, there were a total of 298 T2*-weighted whole-brain echoplanar images acquired (repetition time = 2.2 s; echo time = 30 ms, flip angle = 80°, 38 transverse slices, voxel size 2.75 × 2.75 × 2.75 mm [+10% interslice gap]). Following the fMRI scan, a T1-weighted anatomic scan was acquired (flip angle = 8°, 140 slices, voxel size 0.875 × 0.875 × 1.2 mm).

Preprocessing

The preprocessing of the neuroimaging data was carried out in FSL5.0 (www.fmrib.ox.ac.uk/fsl) using high-pass temporal filtering (100 s high-pass filter), motion correction, brain extraction, and finding the linear registration from the EPI images to standard MNI space via the participant’s T1-weighted images. We used this registration matrix to parcellate according to the AAL parcellation (Tzourio-Mazoyer et al. 2002) and generated the average BOLD signal time series for each AAL90 region (cortical and subcortical but not cerebellum regions) by computing the mean over all voxel time-series for each region. We also created participant-specific vectors with the onset of each stimulus presentation for use in the main data analysis.

Data analysis

Transient Functional Networks

To assess the FN activated at each instance of time, we applied LEiDA, a data-driven method that focuses on the connectivity patterns captured by the leading eigenvector of the BOLD phase-coherence matrices over time (Cabral et al. 2017).

First, we obtained a time-resolved matrix of functional connectivity, dFC, with size NxNxT, where N = 90 is the number of AAL90 brain areas and T = 280 is the total number of recording frames in each scan (timeseries), using the following equation:

$$dFC(n,p,t) = \cos\big(\theta(n,t) - \theta(p,t)\big)$$

where θ(n,t) is the phase of the BOLD signal in area n at time t obtained using the Hilbert transform. The first and last epochs of each scan were removed to account for the boundary distortions associated with the Hilbert transform. dFC(n,p,t) is positive if two areas n and p have synchronized BOLD signals at time t (phase shift <90°), and dFC(n,p,t) is negative if the BOLD signals of areas n and p are more than 90° out of phase at time t.
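As an illustration of the phase-coherence computation, the sketch below extracts instantaneous phases with an FFT-based Hilbert transform and takes the cosine of pairwise phase differences, which is positive for phase shifts below 90° and negative above 90°, as described. It is a toy example under stated assumptions, not the analysis code used in the study: the function names are hypothetical, the discrete Fourier transform is written naively in pure Python for self-containment, and the three synthetic signals are pure cosines with known phase offsets rather than BOLD data.

```python
import cmath
import math

def analytic_phase(signal):
    """Instantaneous phase of a real signal via the discrete Hilbert transform."""
    n = len(signal)
    spectrum = [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]
    # Keep DC (and Nyquist for even n), double positive frequencies,
    # and discard negative frequencies to obtain the analytic signal.
    weights = [0.0] * n
    weights[0] = 1.0
    for k in range(1, (n + 1) // 2):
        weights[k] = 2.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
    analytic = [
        sum(weights[k] * spectrum[k] * cmath.exp(2j * math.pi * k * t / n)
            for k in range(n)) / n
        for t in range(n)
    ]
    return [cmath.phase(z) for z in analytic]

def phase_coherence(phases, t):
    """N x N matrix of cos(theta_n - theta_p) at time index t."""
    n_areas = len(phases)
    return [
        [math.cos(phases[n][t] - phases[p][t]) for p in range(n_areas)]
        for n in range(n_areas)
    ]

# Three toy "areas": a reference, one shifted by 60 degrees, one in antiphase.
n_frames = 16
offsets = [0.0, math.pi / 3, math.pi]
signals = [
    [math.cos(2 * math.pi * 2 * t / n_frames + phi) for t in range(n_frames)]
    for phi in offsets
]
phases = [analytic_phase(s) for s in signals]
dfc_t5 = phase_coherence(phases, 5)
```

In this toy case the entry for areas 0 and 1 is positive (60° apart) and the entry for areas 0 and 2 is negative (180° apart), matching the sign rule in the text.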

To assess instantaneous patterns of functional connectivity, LEiDA considers only the leading eigenvector V1(t) of each dFC(t). This simultaneously reduces the dimensionality of the data (one 1 × N vector at a time instead of a N × N matrix at a time) and acts as a denoising procedure since the leading eigenvector V1(t) captures only the dominant pattern of connectivity of the dFC(t) at time t (Cabral et al. 2017). This vector contains N elements (each representing one brain area) and their sign (positive or negative) serves to separate brain areas into communities according to their BOLD-phase relationship. Since V and −V represent the same state, we use a convention ensuring that most elements are negative. When all elements of V1(t) have the same sign, it means all BOLD signals are evolving in the same direction (within a range of 90°) and are hence considered to be following a single global mode (Newman 2006). If instead V1(t) has elements of different signs (i.e., positive and negative), it means the BOLD signals can be divided according to their phase into two modes/communities, where one subset of brain areas become coherent forming a FN, which is phase shifted by more than 90° with respect to the other brain areas. Conveniently, FNs can be represented in cortical space, by plotting links between the smaller subset of areas, whose BOLD signal is coherent and phase-shifted from the rest of the brain.
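The leading-eigenvector step and its sign convention can be sketched as follows. This is an illustrative example only: power iteration is swapped in here as a simple, dependency-free way to obtain the leading eigenvector (the study does not state which eigensolver was used), the function name and starting vector are assumptions, and the phases are made-up values for four areas.

```python
import math

def leading_eigenvector(matrix, iterations=200):
    """Leading eigenvector by power iteration, flipped so most entries are negative."""
    n = len(matrix)
    # Slightly non-uniform start vector (an arbitrary choice for this sketch).
    v = [1.0 + 0.01 * i for i in range(n)]
    for _ in range(iterations):
        w = [sum(matrix[i][j] * v[j] for j in range(n)) for i in range(n)]
        norm = math.sqrt(sum(x * x for x in w))
        v = [x / norm for x in w]
    # V and -V describe the same state: enforce "most elements negative".
    if sum(1 for x in v if x > 0) > n / 2:
        v = [-x for x in v]
    return v

def coherence_matrix(phases):
    """Phase-coherence matrix cos(theta_n - theta_p) for one time point."""
    return [[math.cos(a - b) for b in phases] for a in phases]

# Three areas nearly in phase, one area shifted by more than 90 degrees.
v1 = leading_eigenvector(coherence_matrix([0.0, 0.1, 0.2, 3.0]))
network = [i for i, x in enumerate(v1) if x > 0]  # the smaller, phase-shifted subset

# All areas within 90 degrees of each other: a single global mode.
v_global = leading_eigenvector(coherence_matrix([0.0, 0.2, 0.4, 0.6]))
```

With this convention, the positive entries of the vector pick out the smaller community that has detached from the rest of the brain, and a vector with no positive entries corresponds to the global mode.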

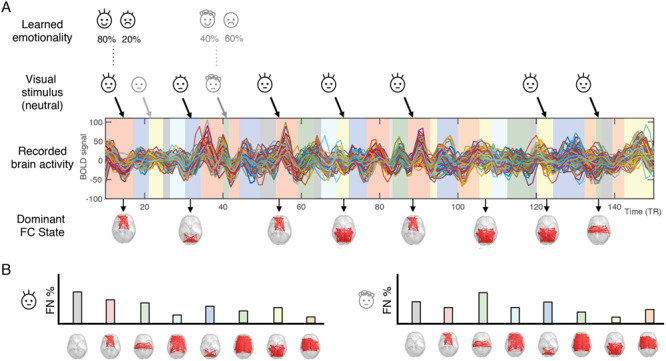

To detect a discrete number of recurrent FC states, we applied k-means clustering to all leading eigenvectors V1(t) across all 47 participants (47 × 278 = 13 066 leading eigenvectors in total). The clustering divides the sample into k clusters (each representing a recurrent FC state), with higher k resulting in more fine-grained network configurations. Although there is no consensus regarding the number of FC states revealed by fMRI (and whether FC states can be discretized in the first place), we can explore which partition of the sample allows for a better detection of FN associated with learning of infant emotionality. As such, we varied k (number of clusters) from 2 to 20, and for each k, obtained a repertoire of k FC states. Subsequently, for each FC state, we evaluated whether its probability of occurrence 2TR (TR stands for repetition time) after the neutral face presentation correlated with the happy–sad gradient of learned infant emotionality (using Pearson correlation and associated P-values) (see Fig. 2 for an overview of the whole analysis process).
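The final step, relating the occurrence of an FC state 2 TR after stimulus onset to the learned emotionality, can be sketched as below. The example assumes that clustering has already assigned one state label to each TR and that stimulus onsets are expressed as TR indices. All data are made up for illustration, the function names are hypothetical, and only the Pearson coefficient is computed (the associated P-value is omitted).

```python
from collections import defaultdict
from math import sqrt

def occupancy_after_onset(labels, onsets, faces, state, lag=2):
    """Per face, the fraction of presentations followed `lag` TRs later by `state`."""
    hits = defaultdict(int)
    counts = defaultdict(int)
    for onset, face in zip(onsets, faces):
        t = onset + lag
        if t < len(labels):  # skip presentations too close to the end of the scan
            counts[face] += 1
            hits[face] += int(labels[t] == state)
    return {face: hits[face] / counts[face] for face in counts}

def pearson_r(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)

# Toy data: one FC-state label per TR, and three faces shown twice each.
labels = [0, 0, 1, 0, 1, 1, 0, 0, 0, 0, 0, 0]
onsets = [0, 2, 3, 6, 7, 9]               # onset TR index of each presentation
faces = ["A", "A", "B", "B", "C", "C"]    # which face was presented
scores = {"A": 0.8, "B": 0.5, "C": 0.2}   # learned probability of smiling

prob = occupancy_after_onset(labels, onsets, faces, state=1)
order = sorted(scores)
r = pearson_r([scores[f] for f in order], [prob[f] for f in order])
```

In the toy data, state 1 follows face A on both presentations, face B on one of two, and face C on neither, so its occupancy rises linearly with the emotionality score.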

Figure 2.

Schematic illustration of the LEiDA methodology used to analyze the fMRI data. (A) First, we applied LEiDA to the fMRI data and clustered the FC patterns into a given number, k, of FC states, assigning one of these FC states to each TR (represented by shaded colored bars under the BOLD signals). Then, for each infant face, we detected the FC state that was active 2TR after stimulus presentation (to account for the hemodynamic response time). (B) For each infant face and for each participant, we obtained a probability distribution of the FC states, which we subsequently correlated with the happy–sad gradient given by the probability of smiling in the training phase.

Results

We used LEiDA methodology to investigate the dynamics of brain networks involved in learning of infant emotionality arising from the probabilistic social reward task (for more details on task, see Fig. 1 and Methods). This allowed us to investigate the probability of occurrence of each state of functional connectivity linked to the positive emotionality score of the infant faces, that is, the probability of smiling and laughter for each of the six infants in the training phase (80/20, 60/40, and 50/50) (see Fig. 2 and Methods for an overview of the data analysis).

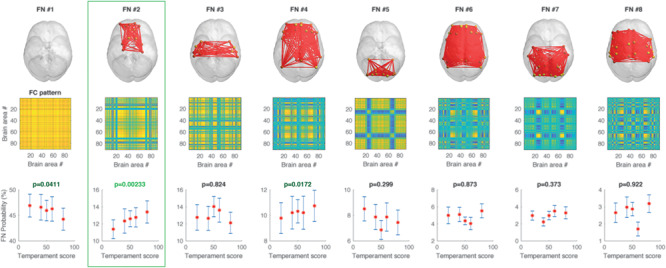

Figure 3 shows the repertoire of FC states (for k = 8) that recurrently emerged over time in the group of 47 participants during the entire fMRI recording sessions, and where the FC states are sorted according to their overall probability of occurrence. As can be seen in Figure 3, the most prevalent pattern of functional connectivity [FN#1] corresponds to periods where all the BOLD signals are aligned (within a 90° angle), representing a slow global mode of BOLD activity. When this state is dominant, the associated FC pattern (shown in matrix format in Fig. 3B) shows only positive values. This global mode of BOLD connectivity is consistent with previous reports of a global modulation of BOLD signals in the resting-state. Given its putative neurophysiological value, we opted not to regress it out (Murphy and Fox 2017).

Figure 3.

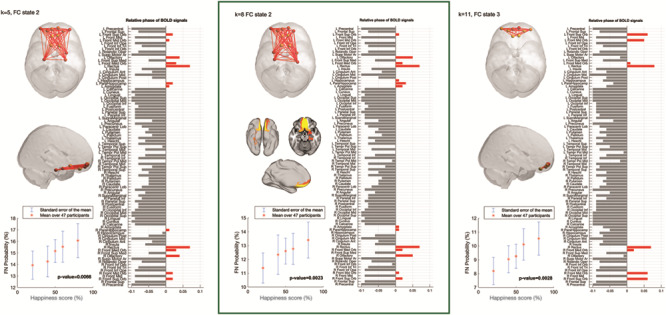

Repertoire of FN states assessed with LEiDA and association to learned emotionality (for k = 8). The results show that FN#2 is significantly correlated with positive emotional disposition scores for the six infants (P < 0.002, highlighted in green box, see row of probabilities), suggesting that this network is important for learning of infant emotionality. The brain network contains regions including the orbitofrontal cortex, amygdala, and hippocampus. The error bars represent the standard error of the mean across all 47 participants. These results are obtained when the dynamic FC is clustered into 8 FC states.

In the remaining seven FC states, we find different subsets of brain regions (FN#2–8) that transiently but consistently desynchronize together from the global mode of BOLD activity. Figure 3 shows each FN in brain space, by plotting red links between the areas that shift away from the global mode (with this convention, the global mode network FN#1 shows no links). This representation in cortical space reveals that each FN state involves functionally different sets of brain areas. For each of the FN, we computed the probability of being active 2 TR after the presentation of each neutral infant face (allowing for the hemodynamic lag). Since each infant face has an associated emotionality score (80%, 60%, 50%, 40%, 20% probability of smiling and laughing), we correlate this probability with the corresponding emotionality score and obtain an associated P-value (Fig. 3, lower row), revealing the significance of each FN in predicting the emotionality of the infants. As can be seen, most of the FNs do not encode the emotionality, but FN#2 is clearly significantly linked to the degree of overall happiness (P < 0.002) and includes regions of the orbitofrontal cortex, amygdala, parahippocampus, and hippocampus.

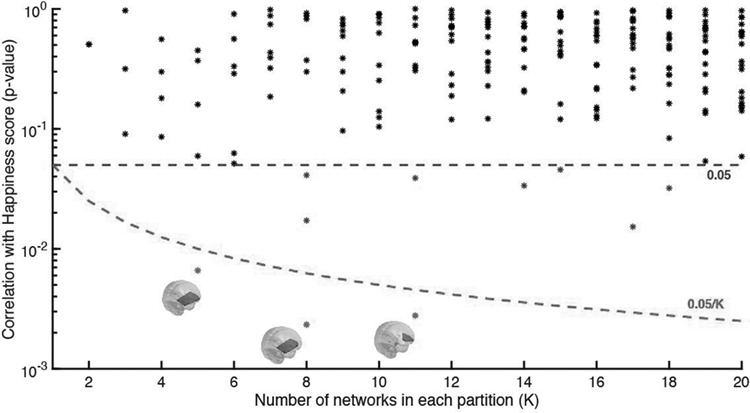

We investigated the robustness of this novel finding by investigating the results over a wide range of clusters k between 2 and 20, given that the spatial configuration of the FNs depends on the number of clusters determined in the k-means algorithm, with a higher number of networks generally resulting in more fine-grained (and often less symmetric) networks. Figure 4 shows for each solution with k FNs, the P-value associated with the most significant result. Since a higher number of clusters increases the probability of false positives, we correct the significance threshold as 0.05/k (green dashed line). We find that the partitions into 5, 8, and 11 FC states each return a very similar FN, whose probability of occurrence significantly correlates with the infant emotionality after correcting by k.

Figure 4.

Significance of correlation between FN and infant emotionality over the range of k-means clustering solutions explored. We verified which partition models detected a FN whose probability of occurrence 2 TRs after the presentation of an infant neutral face correlated with its learned emotionality. The figure shows the P-values obtained for all the networks compared. All the P-values represented as dots are above the 0.05 standard threshold (upper dashed line), meaning that no relation was found between the occurrence of the corresponding networks and the infants’ emotionality. To account for the family-wise error rate when performing multiple hypothesis tests, we corrected the standard threshold by the number of independent hypotheses tested in each partition model (0.05/K, lower dashed line). As can be seen, for a number of cluster sizes (K = 5, 8, 11), we detect a FN whose probability of occurrence significantly correlates with the learned infants’ emotionality (P < 0.01), with statistical significance surviving correction for multiple comparisons.

Figure 5 shows the robustness of the emotionality learning FN for the three different k values (k = 5, 8, 11). As can be seen, the regions involved in this FN are remarkably similar for different k values (compare the red lines) and significantly correlated with infant emotionality (P < 0.007 for k = 5; P < 0.002 for k = 8; and P < 0.003 for k = 11). This confirms the robustness of the result of finding a brain network encoding learning of infant emotionality, involving regions such as the orbitofrontal cortex, parahippocampus, hippocampus, and amygdala.
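The correction by k can be checked with simple arithmetic: each partition into k FC states is tested against a threshold of 0.05/k. The sketch below applies this to the upper bounds on the P-values quoted above. The variable and function names are illustrative, and the exact P-values are not reproduced here, only the reported bounds.

```python
ALPHA = 0.05

# Upper bounds on the P-values reported for the three partitions (k: bound).
reported_bounds = {5: 0.007, 8: 0.002, 11: 0.003}

def corrected_threshold(k, alpha=ALPHA):
    """Significance threshold after dividing alpha by the number of FC states."""
    return alpha / k

thresholds = {k: corrected_threshold(k) for k in reported_bounds}
survives = {k: bound < thresholds[k] for k, bound in reported_bounds.items()}
```

The thresholds are 0.01 for k = 5, 0.00625 for k = 8, and roughly 0.0045 for k = 11, so each reported bound lies below its corrected threshold.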

Figure 5.

Functional brain networks associated with emotionality learning. The probability of the networks (red links) being active 2TR after stimulus onset increased as the learned emotionality of the infant showed a more positive disposition. In more detail, these networks are considered to be active when the BOLD signals of these areas (red bars) become coherent and phase shifted by more than 90° with respect to the BOLD signals in the rest of the brain (gray bars). An emotionality learning network was found to show significant correlation with learned emotional dispositions over the 47 participants. This solution for k = 8 (middle) returned the most significant functional network (FN#2) (P = 0.0023, uncorrected, P = 0.0184 after correcting by the number of clusters). This emotionality learning network includes not only the orbitofrontal cortex (which is known to be involved in pleasure and emotion), but also the amygdala (involved in emotional processing) and the hippocampus and parahippocampus (involved in memory). Attesting to the robustness of the results, the other two networks found to relate significantly with the infants’ learned emotionality (for k = 5 and k = 11), also include the orbitofrontal cortex, with differences arising in the number of output states constrained by K.

Discussion

An infant’s temperament is partially comprised of individual differences in their emotionality—whether they are predominantly happy, signaled by smiles and laughter, or sad, signaled by crying and distress cues. We investigated the functional brain networks underlying learning of infant emotionality using neuroimaging in healthy adult participants. We used a probabilistic social reward task allowing participants to learn, through trial and error, the emotional disposition of a group of six infants (Parsons et al. 2014; Riem et al. 2017). Through this interactive learning task, the participants learned the probability of each infant showing a positive disposition by smiling and laughing. We have previously shown that this probabilistic social reward task can reliably shift the way infant cuteness is perceived and the motivation to view individual infant faces (Parsons et al. 2014), such that infants previously judged less cute become significantly cuter if they display a positive emotional disposition during the short social reward task. Here, we scanned 47 participants in the testing phase of the social reward task after they had learned the experimentally established infant emotionality. This allowed us to compute the underlying changes in dynamic functional brain connectivity associated with each infant emotional disposition using a novel LEiDA methodology (Cabral et al. 2017).

Our results revealed for the first time a significant brain network exhibiting time-varying activity that significantly correlated with the experimentally established infant emotional disposition, that is, more activity when seeing the infants with most positive emotionality (80% and 60% probability of smiling and laughing) and much less activity when seeing the infants with the most negative emotionality (20% and 40% probabilities of smiling and laughing). Importantly, these experimentally established infant emotionality values were different from the initial cuteness ratings and desire to view the infant face (Parsons et al. 2014), suggesting that this brain network is not encoding simply the cuteness of an infant but this emotional aspect of the learned infant temperament.

Revealing the brain networks engaged in learning about infant emotionality is important given that positive, cute infant cues such as smiles and laughter promote caregiver proximity and care vital for the infant’s survival (Kringelbach et al. 2016). This should be seen in context of the development of three main “parental capacities,” which apply to all caregivers (Parsons et al. 2010; Stein et al. 2014). The first parental capacity is the ability to focus attention on the infant’s emotional cues and respond contingently and responsively, which predicts later cognitive development (Murray et al. 1996). The second key parental capacity is emotional scaffolding, which is the ability to perceive changes in emotion and stress in the infant and support them to regulate their emotions, especially when the infant is distressed. The third key parental capacity is sensitivity to an infant’s attachment behaviors, such as eye contact, and to respond appropriately. Previous research has shown that the antecedents to these capacities, particularly attentional focus, are found even in the brain processing of nonparents (Kringelbach et al. 2008; Young et al. 2016). Here we demonstrate for the first time the brain networks involved for nonparents in learning about infant emotional dispositions, which are essential for the ability to perceive emotional state, provide emotional scaffolding in instances such as crying, and to hone sensitivity to an infant’s attachment behaviors (Bornstein 2014). A future endeavor for this work is to explore how learning of infant emotional dispositions affects the brain of new parents, perhaps also exploring own-infant versus other-infant processing.

We have identified the brain network encoding learning of infant emotionality, which consists of the orbitofrontal cortex, hippocampus, parahippocampus, and amygdala (see Figs. 3 and 5). These regions are known to be structurally connected, for example, via the uncinate fasciculus (Von Der Heide et al. 2013). Perhaps the most important region in this emotionality-encoding network is the orbitofrontal cortex: a large heterogeneous brain region with many functions, which has primarily been implicated in emotion and hedonic processing (Kringelbach and Rolls 2004; Kringelbach 2005; Kringelbach and Berridge 2009). It has a specific role in processing the valence of primary reinforcers including face perception, as patients with lesions to the orbitofrontal cortex struggle to identify emotional facial expressions (Hornak et al. 1996). Similarly, face-selective patches have been found in the orbitofrontal cortex, primarily in electrophysiology studies using primates (O'Scalaidhe et al. 1997). Previous work has associated infant faces with fast activity in the OFC at around 130 ms (Kringelbach et al. 2008; Parsons et al. 2013). The orbitofrontal cortex has also been implicated in the fast processing (<130 ms) of infant auditory stimuli (Young et al. 2016). This processing is present in men and women, parents and nonparents, and has been theorized to comprise a universal “caregiving instinct” (Lorenz 1943) that may prepare the individual to provide care to the infant by coordinating responsiveness and readiness for sociality (Kringelbach et al. 2016). Importantly, when an infant face is altered, as in the case of cleft lip, where infants are rated as much less cute than healthy infants (Parsons, Young, Parsons, et al. 2011b), the rapid activity in the orbitofrontal cortex is significantly diminished, suggesting that the configuration of the infant face is vital for the perception of a biologically significant infant (Parsons et al. 2013). Perhaps, as our current study suggests, positive emotional cues such as laughter and smiles could help to shift individuals’ perception of these infants so that they are perceived as cuter, facilitating subsequent caregiving.

The amygdala has been shown to be involved in the processing of emotional stimuli (LeDoux and Phelps 2000) and particularly in the recognition of facial emotions (Adolphs 2002). For many years the literature seemed to suggest that the amygdala was mainly involved in processing negative emotions including facial expressions denoting threat (fear or anger), a view mainly driven by findings in rodents (LeDoux and Phelps 2000). Yet, amygdala activity has also been found for positive stimuli including faces (Yang et al. 2002; Fitzgerald et al. 2006). As a result, Pessoa and Adolphs (2010) proposed that the role of the amygdala in visual processing is to coordinate cortical networks during the evaluation of the biological significance of visual stimuli with an affective dimension, like a conductor with an orchestra. Interestingly, it has been proposed that some of the roles of the amygdala seen in rodents have been taken over by the orbitofrontal cortex over the course of evolution (Rolls 1999).

Previous research has also shown that the detection of biological significance is linked to emotional memory networks, which include the orbitofrontal cortex, amygdala, parahippocampus, and hippocampus (Berridge and Kringelbach 2015; Kringelbach and Berridge 2017). Both human and animal research has shown how the amygdala often works in concert with hippocampal regions to lay down emotionally valenced episodic memories (Phelps 2004; Stark et al. 2015). There is some evidence to suggest that emotional cues are more easily memorized and recalled (Kensinger 2009), which would suggest that imbuing an infant face with an emotional disposition might strengthen the memory and aid recall. An interesting follow-up would be to explore valence in greater detail, specifically whether happy or sad emotionality leads to better recall.

Thus, given their roles in processing emotional behaviors, the interaction between the orbitofrontal cortex and the amygdala with memory systems mediated by the hippocampal regions could signal to the attentional systems to dynamically update the reward value of infants and help guide subsequent caregiving. Here, it is important to stress the role of the network rather than the role of individual brain regions. Due to the instantaneous nature of the patterns detected with LEiDA, we were able to detect a specific set of regions whose probability of synchronizing their BOLD signal phases relates to the learned emotional dispositions associated with the neutral infant faces. Importantly, since successive stimuli were presented <10 s apart, conventional sliding-window analysis used for the evaluation of dynamic functional connectivity would have failed to capture the emotional specificity associated with each face (Preti et al. 2017). Recently, other methodological approaches focusing on BOLD coactivation patterns have been proposed to analyze BOLD connectivity dynamics at high temporal resolution (Tagliazucchi et al. 2012; Liu and Duyn 2013; Karahanoglu and Van De Ville 2015). However, coactivation approaches (in their variant forms) are only sensitive to simultaneity in the data, whereas phase-coherence techniques can, by definition, capture temporally delayed relationships, which may explain why the LEiDA method appears more sensitive in detecting meaningful functional subsystems. We are thus able to expand our previous categorical neuroimaging analysis, which suggested increased amygdala connectivity with frontal regions and the visual cortex during the perception of infants with a happy disposition (Riem et al. 2017). Crucially, however, such categorical analyses rarely provide insights into the spatiotemporal dynamics of network activity. In the longer term, combining these sophisticated unsupervised data analysis methods with whole-brain computational modeling has the potential to show the causal influence of each of the regions in the emotionality-learning network identified here (Deco and Kringelbach 2014; Deco et al. 2018).
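As a minimal illustration of the phase-coherence idea discussed above, the following Python sketch computes, at each time point, the leading eigenvector of the instantaneous phase-coherence matrix of a set of regional signals. It assumes band-pass filtered regional BOLD time series as input. The function name `leading_eigenvectors`, the sign convention, and the synthetic demo signals are assumptions made for illustration; this is not the analysis pipeline used in the present study, and the full LEiDA procedure (Cabral et al. 2017) goes on to cluster such vectors into recurrent states, a step omitted here.

```python
import numpy as np
from scipy.signal import hilbert


def leading_eigenvectors(bold):
    """Leading eigenvector of the phase-coherence matrix at each time point.

    bold: array of shape (n_regions, n_timepoints), assumed band-pass filtered.
    Returns an array of shape (n_timepoints, n_regions).
    """
    # Demean each regional signal, then take the phase of its analytic signal.
    centered = bold - bold.mean(axis=1, keepdims=True)
    phases = np.angle(hilbert(centered, axis=1))

    n_regions, n_timepoints = phases.shape
    vectors = np.empty((n_timepoints, n_regions))
    for t in range(n_timepoints):
        # Pairwise phase coherence at time t: cosine of the phase difference.
        coherence = np.cos(phases[:, t][:, None] - phases[:, t][None, :])
        # eigh sorts eigenvalues in ascending order, so the last column leads.
        _, eigvecs = np.linalg.eigh(coherence)
        v1 = eigvecs[:, -1]
        # Resolve the arbitrary sign so that most elements are negative
        # (an illustrative convention, assumed here).
        if np.sum(v1 > 0) > n_regions / 2:
            v1 = -v1
        vectors[t] = v1
    return vectors


# Synthetic demo (hypothetical data): three regions oscillate in phase
# and two regions oscillate in anti-phase with them.
n = np.arange(200)
wave = np.sin(2 * np.pi * 0.05 * n)
bold_demo = np.vstack([wave, 2 * wave, 0.5 * wave, -wave, -1.5 * wave])
v1_demo = leading_eigenvectors(bold_demo)
```

In this demo, the sign pattern of each eigenvector separates the three in-phase regions from the two anti-phase regions at every time point, which is the sense in which a single vector per time point can summarize an instantaneous pattern of phase alignment.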

Another proposed role of the orbitofrontal–amygdala–hippocampus network would be to provide top–down predictions to sensory regions when processing the neutral infant faces. Previous work has shown that one proposed function of the OFC in visual processing is to integrate perceptual representations with top–down expectations activated by contextual or associative detail (Bar et al. 2006). This view is consistent with the concept that the brain is not a passive organ, but is constantly predicting incoming proximate sensory information based upon memories of past experiences (Vuust et al. 2018). In addition, a recent study found that the hippocampus encodes the identity of a visual stimulus based upon associative predictions from auditory cues (Kok and Turk-Browne 2018). If this network is providing a prediction of the infant’s emotional disposition despite the neutral face presented during scanning, this could provide evidence of how contextual and trait-related social information is integrated into visual perception.

Finally, it is interesting to consider how the present research may be adapted to explore further the processing of infant emotionality in psychiatric disorders. Research in depressed patients has shown that they are less accurate at discriminating happy facial expressions (Gur et al. 1992; Surguladze et al. 2004; Dai et al. 2016), which is thought to underlie some of the impaired interpersonal functioning in depression. This interpersonal functioning is vital to the parent–infant relationship, as is sensitivity to infant cues that signal their affective state and also their needs. Research has found impairments in precise, controlled psychomotor performance in adults with depression, and mothers with postnatal depression also show less affective touching than healthy mothers (Young et al. 2015). Given that the brain networks responding to infant cues are crucial in triggering behavioral responsivity, it would be of considerable interest to test whether caregivers with depression show altered brain networks for learning of infant emotionality from facial and vocal cues. Our learning paradigm may also be usefully incorporated in broader interventions, such as the “Video-feedback Intervention to promote Positive Parenting” approach (Juffer et al. 2017), emphasizing attention to positive emotional signals of the infant in order to more systematically change parental perceptions of their infants’ negative emotionality and trigger less harsh and more sensitive parental interactions.

Funding

This work was supported by a Medical Research Council Studentship awarded to EAS; a European Research Council Consolidator Grant to MLK (CAREGIVING, no. 615539); a Wellcome Trust Grant (No. 090139) to AS; funding from the Portuguese Foundation for Science and Technology (CEECIND/03325/2017), Portugal, to JC; and a research award from the Netherlands Organization for Scientific Research (SPINOZA prize), the Gravitation program of the Dutch Ministry of Education, Culture, and Science and the Netherlands Organization for Scientific Research (NWO grant number 024.001.003) to MHvIJ.

Notes

We are grateful to Marian Bakermans-Kranenburg, Christine Parsons, and Katie Young for valuable contributions to task design and this manuscript. Conflicts of interest: The authors declare no conflicts of interest.

References

- Adolphs R. 2002. Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav Cogn Neurosci Rev. 1:21–61.

- Bar M, Kassam KS, Ghuman AS, Boshyan J, Schmid AM, Dale AM, et al. 2006. Top-down facilitation of visual recognition. Proc Natl Acad Sci U S A. 103(2):449–454.

- Berridge KC, Kringelbach ML. 2015. Pleasure systems in the brain. Neuron. 86:646–664.

- Bhandari R, Veen R, Parsons CE, Young KS, Voorthuis A, Bakermans-Kranenburg MJ, Stein A, Kringelbach ML, Van IJzendoorn MH. 2014. Effects of intranasal oxytocin administration on memory for infant cues: moderation by childhood emotional maltreatment. Soc Neurosci. 9(5):536–547.

- Bornstein MH. 2014. Human Infancy … and the Rest of the Lifespan. Annu Rev Psychol. 65(1):121–158.

- Brosch T, Sander D, Scherer KR. 2007. That baby caught my eye... Attention capture by infant faces. Emotion. 7(3):685–689.

- Brown EC, Brüne M. 2012. The role of prediction in social neuroscience. Front Hum Neurosci. 24(6):147.

- Cabral J, Vidaurre D, Marques P, Magalhães R, Moreira PS, Soares JM, et al. 2017. Cognitive performance in healthy older adults relates to spontaneous switching between states of functional connectivity during rest. Sci Rep. 7(1):5135.

- Dai Q, Wei J, Shu X, Feng Z. 2016. Negativity bias for sad faces in depression: an event-related potential study. Clin Neurophysiol. 127(12):3552–3560.

- Deco G, Cruzat J, Cabral J, Knudsen GM, Carhart-Harris RL, Whybrow PC, et al. 2018. Whole-brain multimodal neuroimaging model using serotonin receptor maps explains non-linear functional effects of LSD. Curr Biol. 28(19):3065–3074.

- Deco G, Kringelbach ML. 2014. Great expectations: using whole-brain computational connectomics for understanding neuropsychiatric disorders. Neuron. 84:892–905.

- Figueroa CA, Cabral J, Mocking RJT, Rapuano KM, Hartevelt TJ, Deco G, Expert P, Schene AH, Kringelbach ML, Ruhé HG. 2019. Altered ability to access a clinically relevant control network in patients remitted from major depressive disorder. Hum Brain Mapp. 40(9):2771–2786.

- Fitzgerald DA, Angstadt M, Jelsone LM, Nathan PJ, Phan KL. 2006. Beyond threat: amygdala reactivity across multiple expressions of facial affect. NeuroImage. 30:1441–1448.

- Frank MJ, Seeberger LC, O'Reilly RC. 2004. By carrot or by stick: cognitive reinforcement learning in parkinsonism. Science. 306(5703):1940–1943.

- Friston K, Kilner J, Harrison L. 2006. A free energy principle for the brain. J Physiol Paris. 100(1–3):70–87.

- Goldsmith HH, Buss AH, Plomin R, Rothbart MK, Thomas A, Chess S, et al. 1987. Roundtable: what is temperament? Child Dev. 58(2):505–529.

- Gur RC, Erwin RJ, Gur RE, Zwil AS, Heimberg C, Kraemer HC. 1992. Facial emotion discrimination: II. Behavioral findings in depression. Psychiatry Res. 42(3):241–251.

- Hahn AC, Xiao DK, Sprengelmeyer R, Perrett DI. 2013. Gender differences in the incentive salience of adult and infant faces. Q J Exp Psychol. 66(1):200–208.

- Hildebrandt KA, Fitzgerald HE. 1978. Adults' responses to infants varying in perceived cuteness. Behav Process. 3(2):159–172.

- Hornak J, Rolls ET, Wade D. 1996. Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia. 34:247–261.

- Juffer F, Bakermans-Kranenburg MJ, Van IJzendoorn MH. 2017. Pairing attachment theory and social learning theory in video-feedback intervention to promote positive parenting. Curr Opin Psychol. 15:189–194.

- Karahanoglu FI, Van De Ville D. 2015. Transient brain activity disentangles fMRI resting-state dynamics in terms of spatially and temporally overlapping networks. Nat Commun. 6:7751.

- Kensinger EA. 2009. Remembering the details: effects of emotion. Emot Rev. 1(2):99–113.

- Kok P, Turk-Browne NB. 2018. Associative prediction of visual shape in the hippocampus. J Neurosci. 38(31):6888–6899.

- Kringelbach ML. 2005. The orbitofrontal cortex: linking reward to hedonic experience. Nat Rev Neurosci. 6(9):691–702.

- Kringelbach ML, Berridge KC. 2009. Towards a functional neuroanatomy of pleasure and happiness. Trends Cogn Sci. 13(11):479–487.

- Kringelbach ML, Berridge KC. 2017. The affective core of emotion: linking pleasure, subjective well-being and optimal metastability in the brain. Emot Rev. 9(3):191–199.

- Kringelbach ML, Lehtonen A, Squire S, Harvey AG, Craske MG, Holliday IE, et al. 2008. A specific and rapid neural signature for parental instinct. PLoS One. 3(2):e1664. doi: 10.1371/journal.pone.0001664.

- Kringelbach ML, Rolls ET. 2003. Neural correlates of rapid context-dependent reversal learning in a simple model of human social interaction. NeuroImage. 20(2):1371–1383.

- Kringelbach ML, Rolls ET. 2004. The functional neuroanatomy of the human orbitofrontal cortex: evidence from neuroimaging and neuropsychology. Prog Neurobiol. 72(5):341–372.

- Kringelbach ML, Stark EA, Alexander C, Bornstein MH, Stein A. 2016. On cuteness: unlocking the parental brain and beyond. Trends Cogn Sci. 20(7):545–558.

- LeDoux JE, Phelps EA. 2000. Emotional networks in the brain. In: Lewis M, Haviland-Jones JM, editors. Handbook of emotions. New York: Guilford, pp. 157–172.

- Lee CL, Bates JE. 1985. Mother-child interaction at age two years and perceived difficult temperament. Child Dev. 56(5):1314–1325.

- Liu X, Duyn JH. 2013. Time-varying functional network information extracted from brief instances of spontaneous brain activity. Proc Natl Acad Sci U S A. 110(11):4392–4397.

- Lord L-D, Expert P, Atasoy S, Roseman L, Rapuano K, Lambiotte R, et al. 2019. Dynamical exploration of the repertoire of brain networks at rest is modulated by psilocybin. NeuroImage. 199:127–142.

- Lorenz K. 1943. Die angeborenen Formen Möglicher Erfahrung. [innate forms of potential experience]. Z Tierpsychol. 5:235–519.

- Murphy K, Fox MD. 2017. Towards a consensus regarding global signal regression for resting state functional connectivity MRI. NeuroImage. 154:169–173.

- Murray L, Hipwell A, Hooper R, Stein A, Cooper P. 1996. The cognitive development of 5-year-old children of postnatally depressed mothers. J Child Psychol Psychiatry. 37(8):927–935.

- Newman ME. 2006. Finding community structure in networks using the eigenvectors of matrices. Phys Rev E Stat Nonlinear Soft Matter Phys. 74(3 Pt 2):036104.

- Nolvi S, Karlsson L, Bridgett DJ, Pajulo M, Tolvanen M, Karlsson H. 2016. Maternal postnatal psychiatric symptoms and infant temperament affect early mother-infant bonding. Infant Behav Dev. 43:13–23.

- O'Scalaidhe SP, Wilson FA, Goldman-Rakic PS. 1997. Areal segregation of face-processing neurons in prefrontal cortex. Science. 278:1135–1138.

- Parsons CE, Young KS, Bhandari R, IJzendoorn M, Bakermans-Kranenburg MJ, Stein A, Kringelbach ML. 2014. The Bonnie baby: experimentally manipulated temperament affects perceived cuteness and motivation to view infant faces. Dev Sci. 17(2):257–269.

- Parsons CE, Young KS, Craske MG, Stein AL, Kringelbach ML. 2014. Introducing the Oxford vocal (OxVoc) sounds database: a validated set of non-acted affective sounds from human infants, adults, and domestic animals. Front Psychol. 5:562.

- Parsons CE, Young KS, Kumari N, Stein A, Kringelbach ML. 2011. The motivational salience of infant faces is similar for men and women. PLoS One. 6(5):e20632.

- Parsons CE, Young KS, Mohseni H, Woolrich MW, Thomsen KR, Joensson M, et al. 2013. Minor structural abnormalities in the infant face disrupt neural processing: a unique window into early caregiving responses. Soc Neurosci. 8(4):268–274.

- Parsons CE, Young KS, Murray L, Stein A, Kringelbach ML. 2010. The functional neuroanatomy of the evolving parent-infant relationship. Prog Neurobiol. 91:220–241.

- Parsons CE, Young KS, Parsons E, Dean A, Murray L, Goodacre T, et al. 2011b. The effect of cleft lip on adults' responses to faces: cross-species findings. PLoS One. 6(10):e25897.

- Pauli-Pott U, Mertesacker B, Beckmann D. 2004. Predicting the development of infant emotionality from maternal characteristics. Dev Psychopathol. 16(1):19–42.

- Pessoa L, Adolphs R. 2010. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat Rev Neurosci. 11(11):773–783.

- Phelps EA. 2004. Human emotion and memory: interactions of the amygdala and hippocampal complex. Curr Opin Neurobiol. 14(2):198–202.

- Preti MG, Bolton TAW, Van De Ville D. 2017. The dynamic functional connectome: state-of-the-art and perspectives. NeuroImage. 160:41–54.

- Proverbio AM, Riva F, Zani A, Martin E. 2011. Is it a baby? Perceived age affects brain processing of faces differently in women and men. J Cogn Neurosci. 23(11):3197–3208.

- Putnam SP, Sanson AV, Rothbart MK. 2002. Child temperament and parenting. In: Bornstein MH, editor. Handbook of Parenting. Mahwah (NJ): Lawrence Erlbaum Associates.

- Reijneveld SA, Wal MF, Brugman E, Hira Sing RA, Verloove-Vanhorick SP. 2004. Infant crying and abuse. Lancet. 364(9442):1340–1342.

- Riem MME, Van IJzendoorn MH, Parsons CE, Young KS, De Carli P, Kringelbach ML, Bakermans-Kranenburg MJ. 2017. Experimental manipulation of infant temperament affects amygdala functional connectivity. Cogn Affect Behav Neurosci. 17(4):858–868.

- Rolls ET. 1999. The brain and emotion. Oxford: Oxford University Press.

- Rothbart MK, Ahadi SA, Hershey KL. 1994. Temperament and social behavior in childhood. Merrill-Palmer Q. 40(1):21–39.

- Rothbart MK, Bates JE. 2006. Temperament. In: Eisenberg N, Damon W, editors. Handbook of child psychology, social, emotional, and personality development. 6th ed. Vol 3. New York: Wiley, pp. 99–166.

- Sanson A, Hemphill SA, Smart D. 2004. Connections between temperament and social development: a review. Soc Dev. 13(1):142–170.

- Shiner RL, Buss KA, McClowry SG, Putnam SP, Saudino KJ, Zentner M. 2012. What is temperament now? Assessing progress in temperament research on the twenty-fifth anniversary of Goldsmith et al. (1987). Child Dev Perspect. 6(4):436–444.

- Stark EA, Parsons CE, Hartevelt TJ, Charquero-Ballester M, McManners H, Stein A, Kringelbach ML. 2015. Post-traumatic stress influences the brain even in the absence of symptoms: a systematic, quantitative meta-analysis of neuroimaging studies. Neurosci Biobehav Rev. 56:207–221.

- Stark EA, Stein A, Young KS, Parsons CE, Kringelbach ML. 2019. Neurobiology of parenting. In: Bornstein MH, editor. Handbook of parenting.

- Stein A, Pearson RM, Goodman SH, Rapa E, Rahman A, McCallum M, et al. 2014. Effects of perinatal mental disorders on the fetus and child. Lancet. 384(9956):1800–1819.

- Surguladze SA, Young AW, Senior C, Brébion G, Travis MJ, Phillips ML. 2004. Recognition accuracy and response bias to happy and sad facial expressions in patients with major depression. Neuropsychology. 18(2):212.

- Tagliazucchi E, Balenzuela P, Fraiman D, Chialvo DR. 2012. Criticality in large-scale brain FMRI dynamics unveiled by a novel point process analysis. Front Physiol. 3:15.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, et al. 2002. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 15(1):273–289.

- Von Der Heide RJ, Skipper LM, Klobusicky E, Olson IR. 2013. Dissecting the uncinate fasciculus: disorders, controversies and a hypothesis. Brain. 136(6):1692–1707.

- Vuust P, Witek MAG, Dietz M, Kringelbach ML. 2018. Now you hear it: a novel predictive coding model for understanding rhythmic incongruity. Ann N Y Acad Sci. in press.

- Yang TT, Menon V, Eliez S, Blasey C, White CD, Reid AJ, et al. 2002. Amygdalar activation associated with positive and negative facial expressions. Neuroreport. 13(14):1737–1741.

- Young KS, Parsons CE, LeBeau RT, Tabak BA, Sewart AR, Stein A, et al. 2017. Sensing emotion in voices: negativity bias and gender differences in a validation study of the Oxford vocal ('OxVoc') sounds database. Psychol Assess. 29(8):967–977.

- Young KS, Parsons CE, Stein A, Kringelbach ML. 2012. Interpreting infant vocal distress: the ameliorative effect of musical training in depression. Emotion. 12(6):1200–1205.

- Young KS, Parsons CE, Stein A, Kringelbach ML. 2015. Motion and emotion: depression reduces psychomotor performance and alters affective movements in caregiving interactions. Front Behav Neurosci. 9:26.

- Young KS, Parsons CE, Stevner A, Woolrich MW, Jegindø E-M, Hartevelt TJ, et al. 2016. Evidence for a caregiving instinct: rapid differentiation of infant from adult vocalisations using magnetoencephalography. Cereb Cortex. 26(3):1309–1321.