1. Introduction

Accurately measuring pain in humans and rodents is essential to unravel the neurobiology of pain and to discover effective pain therapeutics. However, given its inherently subjective nature, pain is nearly impossible to assess objectively. In the clinic, patients can describe their pain experience using questionnaires and pain scales [8,13,20], but self-reporting can be unreliable because of psychological and social influences or because some patients have difficulty verbalizing their experience (eg, infants, toddlers, and those with neurodevelopmental disorders) [7,25]. At the bench, these challenges are even more daunting because researchers must rely on rodent behavior to measure pain or pain relief. Given this, there is a growing realization among pain researchers, clinicians, and funding entities that traditional approaches to assessing pain in rodents may be flawed. Importantly, these flaws may have contributed to the failure of several drugs that initially showed promise as analgesics, and they point toward inconsistencies in our understanding of basic pain neurobiology [5,17,28].

This has prompted the field to seek new and more reliable ways to measure pain in rodents. In parallel, behavioral neuroscientists across several fields are developing new tools to improve their own behavioral assays. In this review, we describe some of these efforts and make the case for their wide adoption by the pain research field to speed the translation of basic science findings to the clinic.

2. Subsecond analysis of acute pain assays aids in quantifying the sensory experience

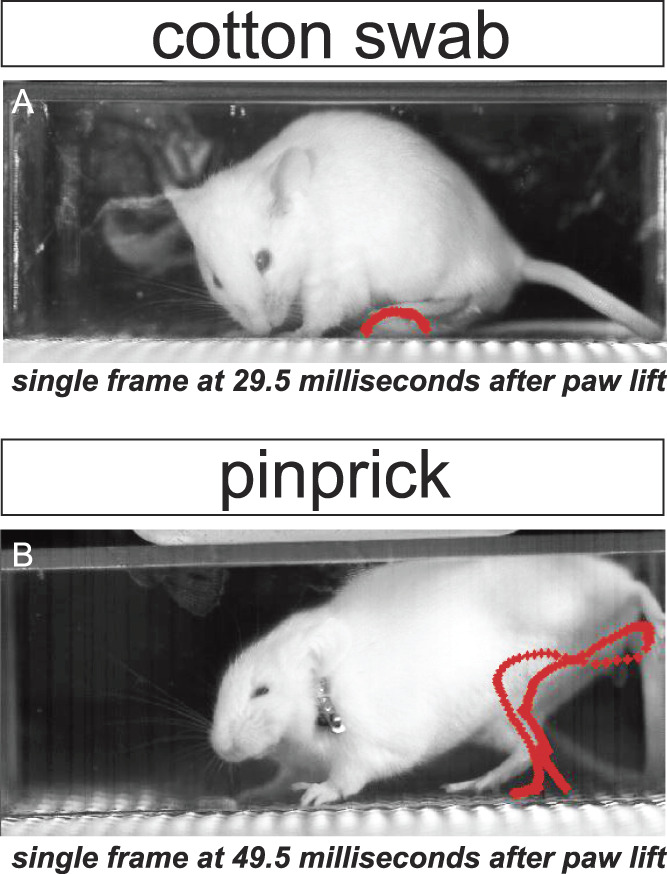

The predominant measure of pain sensation in rodents is paw withdrawal from a noxious thermal, chemical, or mechanical stimulus. Features of the withdrawal response such as latency or frequency occur over the span of a few seconds and can be easily scored by novice experimenters. However, meaningful features that occur on a millisecond timescale go unnoticed by the unaided eye. To circumvent this, researchers have turned toward high-speed video imaging, which has revealed that millisecond behavioral features can be resolved and that these features contain meaningful information about the animal's internal pain state.

In one study, 2 of us (I.A-S. and N.T.F.) imaged freely moving mice in small enclosures at 500 to 1000 fps to identify differences between the subsecond movements induced by noxious vs innocuous stimulation of the hind paw [1]. Through statistical analysis and machine learning, we found that 3 features (paw height, paw velocity, and a combinatorial score of pain-related behaviors including orbital tightening, paw shaking, paw guarding, and jumping) could reliably distinguish withdrawals induced by noxious vs innocuous stimuli. This opened the possibility of creating a “mouse pain scale,” which used principal component analysis to combine the 3 features into a single number (similar to the clinically used zero-to-ten scale). We further demonstrated the utility of this pain scale by determining the sensation evoked by 3 von Frey filaments (0.6, 1.4, and 4.0 g): only the 4.0-g filament induced a pain-like withdrawal, whereas the 0.6- and 1.4-g filaments induced withdrawals more similar to those seen with innocuous stimuli. Finally, we examined where along this pain scale optogenetic activation of 2 different nociceptor populations, marked by Trpv1Cre and MrgprdCre, would fall. As predicted, activation of Trpv1-lineage neurons induced a withdrawal within the pain domain. However, activation of the nonpeptidergic Mrgprd nociceptors led to a withdrawal within the nonpain domain. Although Mrgprd neurons have historically been considered nociceptors, data from other reports also suggest that they are not sufficient to transmit pain signals at baseline [4,10,15,26].
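To make the principal component step concrete, the sketch below standardizes 3 withdrawal features and projects each withdrawal onto the first principal component, yielding one score per withdrawal. It is a generic PCA written with NumPy, not the code used in the study; the feature values are hypothetical, and orienting the component so that larger feature values give larger scores is our assumption.

```python
import numpy as np


def pain_scale_scores(features: np.ndarray) -> np.ndarray:
    """Project each withdrawal onto the first principal component.

    features: array of shape (n_withdrawals, 3); the hypothetical columns are
    paw height, paw velocity, and a composite pain-behavior score.
    """
    # Standardize each feature (z-score) so that differing units
    # (mm, mm/s, counts) do not dominate the principal components.
    z = (features - features.mean(axis=0)) / features.std(axis=0)
    # Eigendecomposition of the covariance matrix of the standardized data.
    cov = np.cov(z, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(cov)  # eigenvalues in ascending order
    pc1 = eigvecs[:, -1]                    # direction of maximal variance
    # An eigenvector's sign is arbitrary; orient PC1 so that larger
    # feature values map to larger scores.
    if pc1.sum() < 0:
        pc1 = -pc1
    return z @ pc1


# Hypothetical withdrawals: 3 innocuous-like rows followed by 3 noxious-like rows.
X = np.array([
    [2.0, 50.0, 0.0],
    [2.5, 60.0, 0.0],
    [3.0, 55.0, 1.0],
    [10.0, 300.0, 3.0],
    [12.0, 350.0, 4.0],
    [11.0, 320.0, 3.0],
])
print(pain_scale_scores(X).round(2))
```

With real data, the resulting scores would still need to be rescaled (for example, to a 0-to-10 range) and calibrated against withdrawals to known noxious and innocuous stimuli before being read as a pain scale.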

Other groups have also extracted meaningful information from subsecond behaviors. One group found that response latencies to noxious stimuli were shorter (50-180 ms) than those to innocuous stimuli (220-320 ms) [6]. Another group resolved subsecond movements of the whiskers (vibrissae), body, tail, and hind paw and found that an animal's posture can affect the latency of movements evoked by noxious stimuli, suggesting circuit-level inhibitory control of the behavior [9]. One study demonstrated that even a lower frame rate (240 fps), recorded with an Apple iPhone 6 camera, could resolve subsecond paw withdrawal, paw guarding, and jumping [3]. Collectively, these studies reveal that meaningful data can be extracted from stimulus-evoked pain assays recorded at enhanced temporal resolution, allowing a better approximation of an animal's pain state.
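Because latency is measured in frames, the frame rate sets how finely it can be resolved. A minimal sketch, assuming the stimulus-contact and paw-lift frames have already been annotated (the function names are hypothetical): at 240 fps each frame spans about 4.2 ms, so the roughly 40-ms gap between the reported noxious (up to 180 ms) and innocuous (from 220 ms) ranges corresponds to about 10 frames, whereas at 30 fps it is only about 1 frame.

```python
def frames_to_ms(n_frames: int, fps: float) -> float:
    """Convert a number of video frames into milliseconds."""
    return n_frames * 1000.0 / fps


def withdrawal_latency_ms(stim_frame: int, lift_frame: int, fps: float) -> float:
    """Latency from stimulus contact to paw lift, in milliseconds.

    Both frame indices are assumed to come from manual or automated annotation.
    """
    if lift_frame < stim_frame:
        raise ValueError("paw lift cannot precede the stimulus")
    return frames_to_ms(lift_frame - stim_frame, fps)


# One frame is the smallest latency difference a recording can resolve.
for fps in (30, 240, 1000):
    print(f"{fps:>4} fps: {frames_to_ms(1, fps):.1f} ms per frame")
```

For example, a paw lift 24 frames after stimulus contact at 240 fps corresponds to a latency of 100 ms.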

3. Considerations for using high-speed videography to capture subsecond pain behaviors

Moving from webcams, camcorders, or smartphones to dedicated high-speed cameras to study pain requires some additional considerations. Cost ranks high on the list, although the price of cameras that can record at around 1000 fps is dropping. Another consideration is the lighting used to capture the videos. Most newer cameras can record under infrared lighting, which will not overtly perturb the animal's behavior. Newer high-speed cameras also produce minimal sound so as not to disturb the animal; however, we still recommend habituating animals to any sounds a camera generates. Finally, an important consideration is the memory available on the camera itself: high-speed videos can be several GB, and most high-speed cameras must first save a video to internal memory before transferring it to an external hard drive. We have found that at least 4 to 8 GB of internal memory on a high-speed monochrome camera is necessary for 5 to 10 seconds of high-resolution recording (1024 × 1024 pixels) of behavior, which provides sufficient temporal resolution and duration to capture both subsecond and multisecond pain behaviors. With these improvements and reductions in cost, high-speed imaging is now accessible enough that more laboratories should consider incorporating it when evaluating paw withdrawal as an endpoint, because such recordings can better define the animal's behavioral state when the withdrawal occurs.
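The memory requirement follows from simple arithmetic: bytes per frame (width × height × bit depth / 8) multiplied by the frame rate and the recording duration. The sketch below assumes uncompressed 8-bit monochrome frames; real cameras may store higher bit depths or use on-board compression, so the numbers are rough estimates only.

```python
def recording_size_bytes(width: int, height: int, bit_depth: int,
                         fps: float, seconds: float) -> float:
    """Uncompressed size of a monochrome high-speed recording, in bytes."""
    bytes_per_frame = width * height * bit_depth / 8
    return bytes_per_frame * fps * seconds


# Estimated storage for 1024 x 1024, 8-bit monochrome video.
for fps in (500, 1000):
    for seconds in (5, 10):
        gb = recording_size_bytes(1024, 1024, 8, fps, seconds) / 1e9
        print(f"{fps} fps for {seconds} s: {gb:.1f} GB")
```

Under these assumptions, 5 seconds at 500 fps needs about 2.6 GB and 10 seconds at 1000 fps about 10.5 GB, so the chosen frame rate largely determines whether a given amount of internal memory is sufficient.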

4. Supervised automated tracking and pose estimation with machine learning and deep neural networks to streamline pain assessment

High-speed imaging with manual tracking allows researchers to record behavior at millisecond resolution, but manually scoring and tracking the movements of multiple body areas across potentially thousands of frames or hours of data is laborious and vulnerable to experimenter bias and methodological inconsistencies. Several research groups are developing new ways of automatically tracking animal posture (pose estimation) and the movements of body regions of interest (bROI) in animals ranging from invertebrates to vertebrates [11].

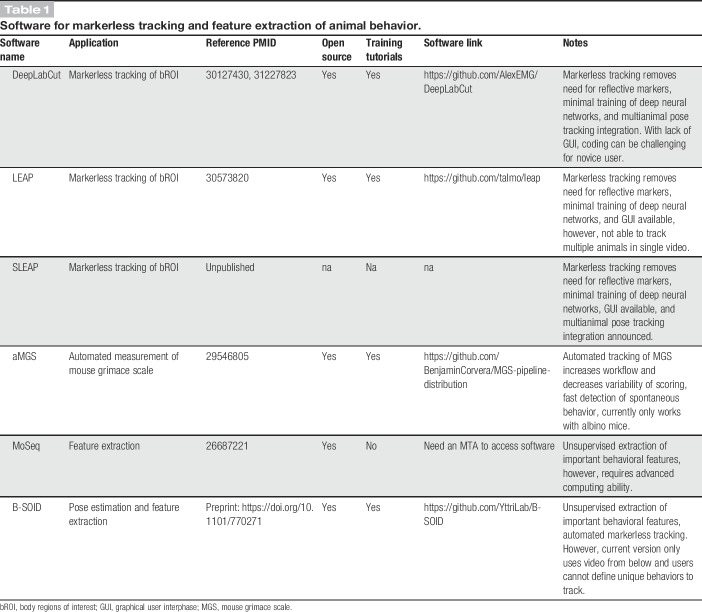

One new platform, DeepLabCut, has already been widely adopted across the behavioral neuroscience community [22] (Table 1). This open-source system uses deep neural networks to perform pose estimation without the need to place reflective markers on the animals. DeepLabCut was built from an earlier pose estimation algorithm called DeeperCut, which used thousands of labeled images to accurately track the body parts of humans engaged in diverse tasks, from rowing to throwing a baseball [16]. The goal of DeepLabCut was to adapt DeeperCut to achieve human-level tracking accuracy in animals while reducing the amount of training data required. In practice, an experimenter manually labels all bROI in as few as 200 frames. The software then uses those frames to train itself to identify the same bROI throughout the entire video, or in other videos with similar camera angles that contain one or more animals. Thus, after this initial training on 200 frames, the software can track animal movement in future experiments recorded under similar conditions.
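Once a network such as DeepLabCut has labeled every frame, the tracked coordinates can be turned into kinematic features like the paw height and velocity used in the mouse pain scale described earlier. The sketch below assumes a per-frame array of x, y, and a detection likelihood for one body part (modeled on, but not read from, DeepLabCut's output files), plus a hypothetical pixel-to-millimeter calibration and floor position; frames below a likelihood cutoff are discarded rather than interpolated.

```python
import numpy as np


def paw_kinematics(xy_lik: np.ndarray, fps: float, mm_per_px: float,
                   floor_y_px: float, min_likelihood: float = 0.9):
    """Return per-frame paw height (mm) and speed (mm/s) from tracked points.

    xy_lik: array (n_frames, 3) with columns x, y, likelihood for one body part.
    Image y increases downward, so height above the floor = floor_y - y.
    All calibration parameters are hypothetical and set by the user.
    """
    # astype returns copies, so the caller's array is not modified.
    x = xy_lik[:, 0].astype(float)
    y = xy_lik[:, 1].astype(float)
    # Discard low-confidence detections rather than trusting them.
    bad = xy_lik[:, 2] < min_likelihood
    x[bad] = np.nan
    y[bad] = np.nan
    height_mm = (floor_y_px - y) * mm_per_px
    # Frame-to-frame displacement converted to speed; frame 0 has no speed.
    step_px = np.hypot(np.diff(x), np.diff(y))
    speed_mm_s = np.concatenate([[np.nan], step_px * mm_per_px * fps])
    return height_mm, speed_mm_s


# Hypothetical 4-frame track; frame 2 has a low likelihood and is dropped.
track = np.array([[100, 200, 0.99], [100, 190, 0.99],
                  [100, 180, 0.50], [100, 170, 0.95]])
h, v = paw_kinematics(track, fps=500, mm_per_px=0.1, floor_y_px=200)
```

Here the paw rises 10 pixels (1 mm) between the first 2 frames, which at 500 fps corresponds to a speed of 500 mm/s; speeds adjacent to the discarded frame are reported as NaN.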

Table 1.

Software for markerless tracking and feature extraction of animal behavior.

Another deep-learning pose estimation program, LEAP [23], is also gaining traction in the behavioral neuroscience community (Table 1). Initially developed for Drosophila, LEAP moves beyond DeepLabCut by introducing a graphical user interface for labeling body features. Like DeepLabCut, LEAP uses a convolutional neural network to predict the locations of bROI in a given image. With LEAP, users need to label only the first 10 images of a data set for training, which can be done within 1 hour. The authors demonstrated that LEAP can faithfully track bROI in both flies and freely behaving mice. LEAP is also free and open source, with online instructions. At the time of this publication, a new version of LEAP, SLEAP (Social LEAP Estimates Animal Poses), has been announced but not yet published; it will include the ability to track multiple animals in a single video.
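Pose-estimation networks of this kind are often designed to output one confidence map per body part, with the predicted location at the map's peak. The sketch below assumes that output format rather than reproducing LEAP's own code, and shows only the final step of turning such maps into coordinates; the function name is hypothetical.

```python
import numpy as np


def peaks_from_confidence_maps(maps: np.ndarray) -> np.ndarray:
    """Locate the peak of each body part's confidence map.

    maps: array of shape (n_parts, height, width).
    Returns an array of shape (n_parts, 3): row, column, and peak confidence.
    """
    n_parts, height, width = maps.shape
    flat = maps.reshape(n_parts, -1)
    # Index of the maximum value in each flattened map.
    flat_idx = flat.argmax(axis=1)
    rows, cols = np.unravel_index(flat_idx, (height, width))
    peak_vals = flat.max(axis=1)
    return np.stack([rows, cols, peak_vals], axis=1)


# Two hypothetical 5 x 6 maps, each with a single bright pixel.
maps = np.zeros((2, 5, 6))
maps[0, 1, 4] = 0.9
maps[1, 3, 0] = 0.7
print(peaks_from_confidence_maps(maps))
```

A low peak value can flag frames in which a body part is occluded or out of view, which is a useful check before computing kinematics.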

Importantly, automated tracking of behavioral features can also be applied to the detection of spontaneous pain in rodents. The Mogil laboratory previously developed a mouse grimace scale (MGS) based on changes in facial expression induced by noxious stimuli of moderate duration (10 minutes to 4 hours) [18]. However, the MGS relies on humans scoring a small number of frames from each video, which makes it labor-intensive and subject to variability across research groups. To overcome this, the Mogil and Zylka laboratories built a platform for automated scoring of the MGS (aMGS) using a convolutional neural network [24] (Table 1). The aMGS was trained, tested, and validated with nearly 6000 facial images of albino mice that were either in pain or not in pain. In a postoperative pain assay, the aMGS predicted pain, and relief of pain, with accuracy equal to that of a human scorer. As automated MGS scoring continues to develop, this technology should become a mainstay of preclinical pain and analgesic assessment.
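Claims that an automated scorer matches a human rest on comparing their calls on the same images. The sketch below computes 2 common agreement measures, percent agreement and Cohen's kappa (which corrects for agreement expected by chance), assuming binary pain / no-pain labels; the labels are hypothetical, and this is not the evaluation procedure reported for the aMGS.

```python
import numpy as np


def agreement(human, model) -> tuple[float, float]:
    """Percent agreement and Cohen's kappa for binary pain (1) / no-pain (0) calls."""
    human = np.asarray(human, dtype=bool)
    model = np.asarray(model, dtype=bool)
    # Observed agreement: fraction of images given the same call by both raters.
    p_obs = float(np.mean(human == model))
    # Agreement expected by chance, from each rater's rate of "pain" calls.
    p_h, p_m = float(human.mean()), float(model.mean())
    p_exp = p_h * p_m + (1 - p_h) * (1 - p_m)
    if p_exp == 1.0:
        # Both raters used the same single label throughout; kappa is undefined.
        return p_obs, float("nan")
    return p_obs, (p_obs - p_exp) / (1 - p_exp)


# Hypothetical calls on 8 facial images.
human_calls = [1, 1, 0, 0, 1, 0, 1, 0]
model_calls = [1, 1, 0, 1, 1, 0, 0, 0]
print(agreement(human_calls, model_calls))
```

In this example the raters agree on 6 of 8 images (75%), but because each calls "pain" half the time, half of that agreement is expected by chance, giving a kappa of 0.5.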

5. Unsupervised identification and measurement of novel pain-related behavioral features

DeepLabCut and LEAP markerless tracking software allow a researcher to easily track bROI and then analyze the resulting data. However, these platforms rely on the user to decide which movement features of the bROI to analyze. In some cases, there may be rich information that humans cannot easily recognize. Thus, some groups are developing deep learning technologies that can automatically identify the features an experimenter should focus on. In these approaches, instead of a user defining a movement feature, the software identifies previously unrecognized movement features that contain information distinguishing experimental groups.

The Datta laboratory has applied this approach to develop a platform, MoSeq, that decodes spontaneous mouse behaviors in an open field using three-dimensional (3D) depth imaging and 3 separate cameras (Table 1). Using MoSeq, they revealed that normal mouse behavior is structured into 60 different movement blocks (eg, darting, freezing, low/high rearing) of roughly 350 ms in length, with mice frequently transitioning between these blocks [27]. They coined these movement blocks “behavioral syllables,” by analogy with the syllables that make up words in language. Importantly, these syllables were not identified or defined by the experimenter; instead, they were classified automatically by an unsupervised machine learning model. As a proof of principle, researchers in the Datta laboratory re-evaluated the walking phenotype of mouse mutants for a gene important for fluid locomotion. Although previous kinematic assessments had identified abnormal motor patterns, MoSeq uncovered a so-called “waddle” walking phenotype in both homozygous and heterozygous mutant mice, a phenotype missed by other scoring techniques [2,12,19,21].
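To illustrate what frequent transitions between syllables means quantitatively, the sketch below takes a per-frame sequence of syllable labels, collapses it into bouts, and estimates how often each syllable is followed by each other syllable. It assumes the labels have already been produced by some segmentation model; it does not use MoSeq's own software, and the label sequence is hypothetical.

```python
import numpy as np


def syllable_bouts(labels):
    """Collapse a per-frame label sequence into (syllable, n_frames) bouts."""
    bouts = []
    for lab in labels:
        if bouts and bouts[-1][0] == lab:
            bouts[-1][1] += 1  # same syllable continues
        else:
            bouts.append([lab, 1])  # a new bout starts
    return [(lab, n) for lab, n in bouts]


def transition_matrix(labels, n_syllables: int) -> np.ndarray:
    """Row-normalized probabilities of moving from one syllable to the next."""
    counts = np.zeros((n_syllables, n_syllables))
    bouts = syllable_bouts(labels)
    # Count transitions between consecutive bouts.
    for (a, _), (b, _) in zip(bouts[:-1], bouts[1:]):
        counts[a, b] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Rows with no outgoing transitions are left as zeros.
    return np.divide(counts, row_sums, out=np.zeros_like(counts),
                     where=row_sums > 0)


# Hypothetical per-frame labels for 3 syllables.
frame_labels = [0, 0, 0, 1, 1, 2, 2, 2, 2, 0, 0, 1]
print(syllable_bouts(frame_labels))
print(transition_matrix(frame_labels, 3))
```

Because transitions are counted between bouts rather than frames, a syllable that persists over consecutive frames does not register as a transition to itself; dividing bout lengths by the frame rate gives syllable durations in seconds.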

Although powerful, MoSeq is not a turnkey solution; it requires substantial code customization and a full-time computer scientist. To make unsupervised behavioral analysis more accessible, the Yttri laboratory developed a new software suite, B-SOID, that combines the automated tracking capabilities of DeepLabCut with feature extraction similar to that of MoSeq [14] (Table 1). The current iteration of B-SOID tracks 6 body parts and sorts those positions into 7 behaviors that are manually defined in the software: pause, rear, groom, sniff, locomote, orient left, and orient right. Although fairly general, these categories enable researchers to easily and automatically identify trends and sequences in behavior associated with whatever stimulus or condition is applied. Alternatively, B-SOID can segment statistically distinct behaviors based entirely on the data, without any manual definition from the user. This feature is perhaps the most promising because it will enable pain researchers to discover novel, nonobvious behaviors that can provide insight into the rich experience of pain. One current limitation is that B-SOID only accepts video recorded from beneath the animal. Many behaviors that pain researchers care about, such as itching or scratching, are best captured from above, from the side, or from a combination of views, which underscores the need for B-SOID to support multiangle analysis. Nevertheless, B-SOID represents a significant advance in ease of use and should enable pain researchers with minimal programming skills to take advantage of unsupervised behavioral analysis.
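Data-driven segmentation of this kind groups frames with similar pose-derived features into behaviors. B-SOID uses its own unsupervised pipeline, which is not reproduced here; as a simpler, swapped-in technique, the sketch below clusters hypothetical per-frame feature vectors (for example, distances and angles between tracked body parts) with plain k-means.

```python
import numpy as np


def kmeans(features: np.ndarray, k: int, n_iter: int = 100, seed: int = 0):
    """Plain k-means clustering of per-frame feature vectors.

    Returns (labels, centroids): one cluster index per frame and the
    k cluster centers.
    """
    features = np.asarray(features, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialize centroids with k distinct frames chosen at random.
    centroids = features[rng.choice(len(features), size=k, replace=False)]

    def assign(c):
        # Euclidean distance from every frame to every centroid: (n_frames, k).
        dists = np.linalg.norm(features[:, None, :] - c[None, :, :], axis=2)
        return dists.argmin(axis=1)

    for _ in range(n_iter):
        labels = assign(centroids)
        # Move each centroid to the mean of its frames; keep empty clusters in place.
        new = np.array([features[labels == j].mean(axis=0) if np.any(labels == j)
                        else centroids[j] for j in range(k)])
        if np.allclose(new, centroids):
            break
        centroids = new
    return assign(centroids), centroids


# Hypothetical 2-D features for 6 frames forming 2 well-separated groups.
pts = np.array([[0, 0], [0.1, 0], [0, 0.1], [10, 10], [10.1, 10], [10, 10.1]])
frame_labels, centers = kmeans(pts, k=2)
print(frame_labels)
```

k-means requires the number of clusters k to be fixed in advance, so in practice one would compare several values of k or use a method that infers the number of clusters from the data.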

6. Future directions

It should be noted that machine learning–based tools for pain research are still in their infancy. We need a better understanding of their accuracy, of how well different tools predict analgesic efficacy across a broad range of drugs and pain models, of their concordance across tools and laboratories, and of their ease of adoption by pain researchers. Major barriers remain despite open-source sharing of new analytic pipelines. Most pain research laboratories lack the personnel to install, troubleshoot, and operate these somewhat finicky software packages. Efforts to simplify user interfaces and operation must be expanded to facilitate broader use. Still, the combination of markerless tracking for automated pose estimation and deep learning networks for feature extraction will undoubtedly help the field identify new pain-related behaviors at both subsecond and multiminute time scales, while making established behaviors easier and more reliable to measure. Researchers may also identify nuances in rodent pain behavior by tracking an eye, ear, paw, or even a single digit, which could vastly improve our ability to use rodents in pain research.

Although the technologies detailed here will undoubtedly increase an experimenter's accuracy in measuring acute pain, it remains uncertain how well each will perform in measuring chronic states, such as neuropathic pain. Animals in chronic pain states often guard their injured limbs or show reduced motor output, which may limit some of the platforms described above, since they rely on animals performing quick and intense movements (Fig. 1). Therefore, an advanced version of aMGS, together with unsupervised learning platforms capable of detecting spontaneous pain behaviors, such as MoSeq or B-SOID, may be the most reliable tools for detecting the subtle phenotypes that define neuropathic or inflammatory pain states.

Figure 1.

High-speed videography reveals differences in paw movements in response to innocuous vs noxious stimuli. Red traces show the path of the stimulated paw from lift to return, obtained with automated paw tracking. Orbital (eye) tightening, an MGS action unit indicative of pain, is also visible after pinprick (B) but not in response to an innocuous stimulus (A).

To end where we began, only a very small number of basic science findings in preclinical pain models are translated into the clinic as novel pain therapeutics. Therefore, another future direction could be for laboratories to use some of the technologies described here to test, in rodents, both known analgesics and drugs that showed painkilling promise in rodents but subsequently failed in clinical trials [29]. What if higher-resolution pain measurement in rodents could save valuable time and energy by steering us away from the wrong targets? Only time will tell the true value of these newer tools for studying pain in mice and rats, but if our predictions are correct, the field has reason to be optimistic.

Conflict of interest statement

The authors have no conflicts of interest to declare.

Acknowledgments

M.J. Zylka is supported by a grant from the National Institute of Neurological Disorders and Stroke (NINDS, R01NS114259, R21NS106337). A. Chamessian is supported by the Departments of Neurology and Anesthesiology at Washington University in St. Louis. I. Abdus-Saboor is supported by startup funds from the University of Pennsylvania and by a grant from the National Institute of Dental and Craniofacial Research (NIDCR, R00-DE026807). N.T. Fried is supported by the Rutgers Camden Provost Fund for Research Catalyst Grant.

Footnotes

Sponsorships or competing interests that may be relevant to content are disclosed at the end of this article.

References

- [1] Abdus-Saboor I, Fried NT, Lay M, Burdge J, Swanson K, Fischer R, Jones J, Dong P, Cai W, Guo X, Tao YX, Bethea J, Ma M, Dong X, Ding L, Luo W. Development of a mouse pain scale using sub-second behavioral mapping and statistical modeling. Cell Rep 2019;28:1623–34.e4.

- [2] Andre E, Conquet F, Steinmayr M, Stratton SC, Porciatti V, Becker-Andre M. Disruption of retinoid-related orphan receptor beta changes circadian behavior, causes retinal degeneration and leads to vacillans phenotype in mice. EMBO J 1998;17:3867–77.

- [3] Arcourt A, Gorham L, Dhandapani R, Prato V, Taberner FJ, Wende H, Gangadharan V, Birchmeier C, Heppenstall PA, Lechner SG. Touch receptor-derived sensory information alleviates acute pain signaling and fine-tunes nociceptive reflex coordination. Neuron 2017;93:179–93.

- [4] Beaudry H, Daou I, Ase AR, Ribeiro-da-Silva A, Seguela P. Distinct behavioral responses evoked by selective optogenetic stimulation of the major TRPV1+ and MrgD+ subsets of C-fibers. PAIN 2017;158:2329–39.

- [5] Berge OG. Predictive validity of behavioural animal models for chronic pain. Br J Pharmacol 2011;164:1195–206.

- [6] Blivis D, Haspel G, Mannes PZ, O'Donovan MJ, Iadarola MJ. Identification of a novel spinal nociceptive-motor gate control for Adelta pain stimuli in rats. Elife 2017;6:e23584.

- [7] Booker S, Herr K. The state-of-“cultural validity” of self-report pain assessment tools in diverse older adults. Pain Med 2015;16:232–9.

- [8] Boonstra AM, Schiphorst Preuper HR, Balk GA, Stewart RE. Cut-off points for mild, moderate, and severe pain on the visual analogue scale for pain in patients with chronic musculoskeletal pain. PAIN 2014;155:2545–50.

- [9] Browne LE, Latremoliere A, Lehnert BP, Grantham A, Ward C, Alexandre C, Costigan M, Michoud F, Roberson DP, Ginty DD, Woolf CJ. Time-resolved fast mammalian behavior reveals the complexity of protective pain responses. Cell Rep 2017;20:89–98.

- [10] Cavanaugh DJ, Lee H, Lo L, Shields SD, Zylka MJ, Basbaum AI, Anderson DJ. Distinct subsets of unmyelinated primary sensory fibers mediate behavioral responses to noxious thermal and mechanical stimuli. Proc Natl Acad Sci 2009;106:9075–80.

- [11] Datta SR, Anderson DJ, Branson K, Perona P, Leifer A. Computational neuroethology: a call to action. Neuron 2019;104:11–24.

- [12] Eppig JT, Blake JA, Bult CJ, Kadin JA, Richardson JE, Grp MGD. The mouse genome database (MGD): facilitating mouse as a model for human biology and disease. Nucleic Acids Res 2015;43:D726–36.

- [13] Ferreira-Valente MA, Pais-Ribeiro JL, Jensen MP. Validity of four pain intensity rating scales. PAIN 2011;152:2399–404.

- [14] Hsu A, Yttri E. B-SOiD: an open source unsupervised algorithm for discovery of spontaneous behaviors. bioRxiv 2019. doi: 10.1101/770271.

- [15] Huang T, Lin SH, Malewicz NM, Zhang Y, Zhang Y, Goulding M, LaMotte RH, Ma Q. Identifying the pathways required for coping behaviours associated with sustained pain. Nature 2019;565:86–90.

- [16] Insafutdinov E, Pishchulin L, Andres B, Andriluka M, Schiele B. DeeperCut: a deeper, stronger, and faster multi-person pose estimation model. arXiv 2016:1605.03170.

- [17] Kissin I. The development of new analgesics over the past 50 years: a lack of real breakthrough drugs. Anesth Analg 2010;110:780–9.

- [18] Langford DJ, Bailey AL, Chanda ML, Clarke SE, Drummond TE, Echols S, Glick S, Ingrao J, Klassen-Ross T, Lacroix-Fralish ML, Matsumiya L, Sorge RE, Sotocinal SG, Tabaka JM, Wong D, van den Maagdenberg AM, Ferrari MD, Craig KD, Mogil JS. Coding of facial expressions of pain in the laboratory mouse. Nat Methods 2010;7:447–9.

- [19] Liu H, Kim SY, Fu Y, Wu X, Ng L, Swaroop A, Forrest D. An isoform of retinoid-related orphan receptor beta directs differentiation of retinal amacrine and horizontal interneurons. Nat Commun 2013;4:1813.

- [20] Main CJ. Pain assessment in context: a state of the science review of the McGill pain questionnaire 40 years on. PAIN 2016;157:1387–99.

- [21] Masana MI, Sumaya IC, Becker-Andre M, Dubocovich ML. Behavioral characterization and modulation of circadian rhythms by light and melatonin in C3H/HeN mice homozygous for the RORbeta knockout. Am J Physiol Regul Integr Comp Physiol 2007;292:R2357–67.

- [22] Mathis A, Mamidanna P, Cury KM, Abe T, Murthy VN, Mathis MW, Bethge M. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat Neurosci 2018;21:1281–9.

- [23] Pereira TD, Aldarondo DE, Willmore L, Kislin M, Wang SSH, Murthy M, Shaevitz JW. Fast animal pose estimation using deep neural networks. Nat Methods 2019;16:117–25.

- [24] Tuttle AH, Molinaro MJ, Jethwa JF, Sotocinal SG, Prieto JC, Styner MA, Mogil JS, Zylka MJ. A deep neural network to assess spontaneous pain from mouse facial expressions. Mol Pain 2018;14:1744806918763658.

- [25] von Baeyer CL. Children's self-reports of pain intensity: scale selection, limitations and interpretation. Pain Res Manag 2006;11:157–62.

- [26] Vrontou S, Wong AM, Rau KK, Koerber HR, Anderson DJ. Genetic identification of C fibres that detect massage-like stroking of hairy skin in vivo. Nature 2013;493:669–73.

- [27] Wiltschko AB, Johnson MJ, Iurilli G, Peterson RE, Katon JM, Pashkovski SL, Abraira VE, Adams RP, Datta SR. Mapping sub-second structure in mouse behavior. Neuron 2015;88:1121–35.

- [28] Woolf CJ. Overcoming obstacles to developing new analgesics. Nat Med 2010;16:1241–7.

- [29] Yezierski RP, Hansson P. Inflammatory and neuropathic pain from bench to bedside: what went wrong? J Pain 2018;19:571–88.