Supplemental Digital Content is available in the text.

Abstract

Clear communication with patients upon emergency department (ED) discharge is important for patient safety during the transition to outpatient care. Over one-third of patients are discharged from the ED with diagnostic uncertainty, yet there is no established approach for effective discharge communication in this scenario. From 2017 to 2019, the authors developed the Uncertainty Communication Checklist for use in simulation-based training and assessment of emergency physician communication skills when discharging patients with diagnostic uncertainty. This development process followed the established 12-step Checklist Development Checklist framework and integrated patient feedback into 6 of the 12 steps. Patient input was included because it has the potential to improve the patient-centeredness of checklists used to assess clinical performance. Focus group patient participants were recruited from 2 clinical sites: Thomas Jefferson University Hospital, Philadelphia, Pennsylvania, and Northwestern Memorial Hospital, Chicago, Illinois.

The authors developed a preliminary instrument based on existing checklists, clinical experience, literature review, and input from an expert panel comprising health care professionals and patient advocates. They then refined the instrument based on feedback from 2 waves of patient focus groups, resulting in a final 21-item checklist. The checklist items assess whether uncertainty was addressed in each step of the discharge communication, including the following major categories: introduction, test results/ED summary, no/uncertain diagnosis, next steps/follow-up, home care, reasons to return, and general communication skills. Patient input influenced both which items were included and the wording of items in the final checklist. This patient-centered, systematic approach to checklist development builds upon the rigor of the Checklist Development Checklist and illustrates how to integrate patient feedback into the design of assessment tools when appropriate.

Emergency department (ED) transitions of care are a high-risk period for patient safety, and clear communication regarding discharge diagnosis, prognosis, treatment plan, and expected course of illness is critical for safe discharge.1 While discharge communication tools have been developed for patients with certain medical diagnoses,2,3 these tools are not applicable to a significant portion of ED discharges. At least one-third of patients are discharged from the ED with a symptom-based diagnosis (e.g., chest pain),4 and there is no existing guidance for how to effectively communicate with these patients at the time of discharge. As a result, patients often leave the ED with unaddressed fear related to their symptoms5,6 and face a troubling dichotomy. If they are unaware of the uncertainty, they may not follow up appropriately or may ignore dangerous symptoms. Alternatively, their fear may cause heightened sensitivity to their symptoms and lead them to seek unnecessary care.

Patient-centered communication skills and the ability to establish an appropriate discharge plan are core competencies for medical residents.7 Yet, a recent survey of 263 emergency medicine trainees found that 43% “often” or “always” encountered challenges discharging patients with diagnostic uncertainty, and 51% reported a strong desire for more training in how to have these conversations.8 These survey findings highlight a clear gap in medical resident training for how to safely and effectively approach a common scenario: discharging a patient for whom there is diagnostic uncertainty.

Training and assessment of communication competence are complex and challenging. Simulation-based mastery learning (SBML) is a form of competency-based medical education that allows learners to develop skills through deliberate practice. While more often used in the context of technical procedures, SBML has also been used for communication training, including breaking bad news and code status discussions,9,10 and has been shown to improve patient care practices.11–13 SBML requires an assessment tool, or checklist, to determine when a learner has achieved mastery of the content. Numerous checklist evaluation tools exist to assess general communication skills14 as well as communication about more focused topics (e.g., informed consent, code status discussion),15,16 though to our knowledge, none address communication regarding diagnostic uncertainty. With this work, we sought to fill a critical gap in resident training regarding safe and effective patient-centered discharge communication.

Checklist Development

Our team developed the Uncertainty Communication Checklist (UCC), an assessment tool for an SBML curriculum focused on teaching emergency medicine residents to effectively communicate with patients being discharged from the ED with diagnostic uncertainty. We followed the framework for checklist development as described in the Checklist Development Checklist (CDC) 12-step method,17 incorporating patient and expert feedback in an iterative fashion. The 12-step CDC method is a structured approach outlining the steps involved in development of evaluation checklists and has been used to inform development of a number of educational checklist tools.9,18,19

Rationale for patient engagement

We incorporated patient input at all key decision points in the checklist development process to ensure patient-centeredness of the final checklist. The typical SBML checklist development process starts with a review of the literature and of existing clinical guidelines, with subsequent review by expert practitioners (e.g., thoracentesis checklist development using American Thoracic Society guidelines with review by physicians who perform and supervise thoracentesis).17,20 Our work differed from procedural competency measurement in an important way. In contrast to procedural tasks, for which there is an accepted “correct” approach, there is no clearly defined “right answer” for how to effectively discharge patients from the ED in the setting of diagnostic uncertainty. Our team strove to define a patient-centered approach to these discharge conversations and therefore identified the need to include patients as “experts” in checklist development. To our knowledge, few medical education publications report patient involvement in teaching and learner assessment,21,22 and none report patient involvement in all key steps of checklist development.

Checklist development participants

We engaged 3 categories of participants in the checklist development: the study team, expert panel members, and focus group patient participants.

The study team had 7 persons: 4 emergency physicians (expertise in uncertainty after ED visits [K.L.R.], health literacy and doctor–patient communication [D.M.M.], and resident education and simulation [D.H.S., D.P.]), 2 research scientists (expertise in risk communication and health literacy [K.A.C.] and medical education and SBML [W.C.M.]), and 1 internal medicine physician (expertise in improving quality of the patient experience [R.E.P.]).

The expert panel was a multidisciplinary group consisting of 7 health professionals (with expertise in health care communication, health literacy, diagnostic uncertainty, underserved populations, and simulation education) and 3 patient advocates. We identified the non-study team health professionals through the study team’s professional network and selected members based on prior research and familiarity with the literature on uncertainty and communication in emergency care. Of the 7, 5 are practicing emergency physicians and 2 are research scientists. Of the 3 expert panel patients, 2 have been long-term members of a patient advisory board run by one of the study team members (K.L.R.). We identified the third patient from her involvement in our team’s prior work related to uncertainty in health care. The study team solicited expert panelists’ input and feedback throughout checklist development through a series of group conference calls and emails. Communication with the expert panel occurred from February to November 2018.

We recruited focus group patient participants from 2 clinical sites: Thomas Jefferson University (TJU) Hospital, in Philadelphia, Pennsylvania, with > 64,000 annual ED visits, and Northwestern Memorial Hospital at Northwestern University (NU), in Chicago, Illinois, with > 88,000 annual ED visits. We identified patients using an electronic health record query for a recent ED visit (within the prior 2 weeks) with a symptom-based discharge diagnosis (e.g., “chest pain,” “abdominal pain”) and contacted identified patients by phone to explain the project and further assess their eligibility. Patients were excluded if they were admitted to the hospital, had cognitive impairment as determined by a 6-item screener,23 or did not speak English. We scheduled interested and eligible patients for a focus group session. Focus groups were conducted in 2 waves, with 4 focus groups in each wave (2 at each clinical site). Participants completed written informed consent and a basic demographic survey at the start of each session, and each participant received $50 compensation. Sessions were audiorecorded and transcribed professionally. Focus group procedures were approved by both institutions’ institutional review boards. Study data were collected and managed using REDCap electronic data capture tools hosted at NU.24,25
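The eligibility rules above can be expressed as a simple filter. The following Python sketch is illustrative only: the `Candidate` record and its field names are hypothetical (they are not the study's EHR query or REDCap fields), the sketch collapses the EHR query and the follow-up phone screen into a single check, and it treats the 6-item screener result as a precomputed yes/no flag rather than reproducing the screener's scoring.

```python
from dataclasses import dataclass
from datetime import date, timedelta


# Hypothetical record type; field names are illustrative assumptions.
@dataclass
class Candidate:
    ed_visit_date: date
    symptom_based_diagnosis: bool        # e.g., "chest pain", "abdominal pain"
    admitted: bool
    screener_indicates_impairment: bool  # outcome of the 6-item screener, assumed precomputed
    speaks_english: bool


# Inclusion window: ED visit within the prior 2 weeks.
LOOKBACK = timedelta(weeks=2)


def is_eligible(c: Candidate, today: date) -> bool:
    """Apply the inclusion and exclusion criteria described in the text."""
    recent = timedelta(0) <= today - c.ed_visit_date <= LOOKBACK
    included = recent and c.symptom_based_diagnosis
    excluded = c.admitted or c.screener_indicates_impairment or not c.speaks_english
    return included and not excluded


# Usage with a made-up candidate seen 1 week before screening:
print(is_eligible(Candidate(date(2018, 1, 8), True, False, False, True), date(2018, 1, 15)))
```

Keeping inclusion and exclusion as separate expressions mirrors how the criteria are stated in the text and makes each rule easy to audit on its own.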

Steps of checklist development

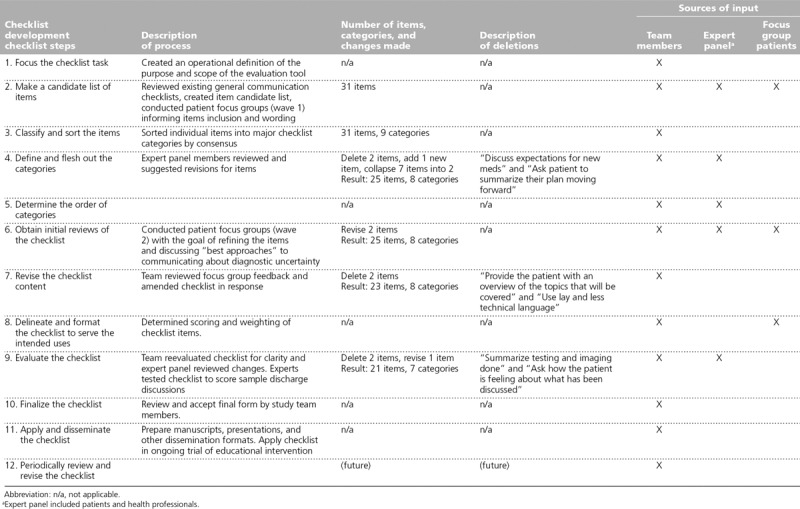

We completed the 12-step checklist development process from October 2017 through March 2019. The overall steps of the CDC 12-step framework are outlined in Table 1, along with details about how the research team applied each step. Steps 1–5 focus on initial checklist creation, steps 6–9 focus on review and revision, and steps 10–12 focus on finalizing and working with the developed checklist.17

Table 1.

Patient-Centered Application of the Checklist Development Checklist14 Steps as Used to Develop the Uncertainty Communication Checklist for Patient Discharge From the Emergency Department

Steps 1–5: Initial checklist creation

We first focused the checklist task, creating an operational definition of the purpose and scope of the evaluation tool. This ensured that we had a shared mental model about the use and applicability of the checklist (step 1). We then reviewed 15 of the most commonly used general communication checklists in detail and identified items that overlap with the UCC’s content area.14,26–29,32–40 We also completed a literature review on medical and diagnostic uncertainty and discharge communication to inform identification of candidate checklist items.41–64 We then conducted the first expert panel phone call, after which all 7 research team members independently generated lists of potential UCC items (step 2).

We conducted the first wave of focus groups in January 2018. Wave 1 focused on understanding patients’ experiences of uncertainty after an ED visit (e.g., their thoughts about uncertainty, questions for the doctor, experiences post discharge), their understanding of why and how frequently uncertainty occurs in the acute care setting, and their preferences for how physicians would ideally communicate regarding uncertainty. Focus group transcripts were analyzed using NVivo qualitative data analysis software, version 11 (QSR International Ltd, Doncaster, Victoria, Australia). We applied the existing items as a priori codes to the focus group transcripts and assessed for the presence of any ideas not already represented in an existing item.

Next, we classified and sorted the checklist items by group consensus (steps 3–5). Expert panel members were then engaged in item revision via rounds of anonymous email feedback using a web-based format. Panelists were asked to review each checklist item and respond if they would “keep as worded,” “remove,” or “revise.” Panelists could also provide suggested revisions and open-ended commentary. Responses and suggested revisions were then compiled, anonymized, and shared electronically with the panelists. Item wording was further refined via a moderated group teleconference discussion and subsequent email communication.
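One round of the anonymous feedback described above amounts to tallying each panelist's choice per item before the counts are shared back with the panel. A minimal Python sketch, assuming each panelist's responses arrive as a mapping from item text to one of the three options; the function name and data layout are hypothetical, and the sketch omits the suggested revisions and open-ended commentary that panelists could also submit.

```python
from collections import Counter

# The three response options offered to panelists for each checklist item.
OPTIONS = ("keep as worded", "remove", "revise")


def compile_round(responses):
    """Tally one feedback round.

    `responses` is a list with one dict per panelist, mapping item text to the
    option that panelist chose. Panelist identities are not carried into the
    result, so the per-item counts can be shared back anonymously.
    """
    summary = {}
    for panelist_choices in responses:
        for item, choice in panelist_choices.items():
            if choice not in OPTIONS:
                raise ValueError(f"unexpected option: {choice!r}")
            summary.setdefault(item, Counter())[choice] += 1
    return summary


# Usage with made-up responses from three panelists:
round_1 = [
    {"Make eye contact": "keep as worded", "Validate the patient's symptoms": "revise"},
    {"Make eye contact": "keep as worded", "Validate the patient's symptoms": "keep as worded"},
    {"Make eye contact": "remove", "Validate the patient's symptoms": "revise"},
]
for item, counts in compile_round(round_1).items():
    print(item, dict(counts))
```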

Steps 6–9: Review, revise, and evaluate the checklist content

We undertook 2 activities to review (step 6) and revise (step 7) checklist items. First, we reviewed the precision, overlap, and “interpretability” of each checklist item by using the checklist to rate simulated clinical encounters. Seven senior residents (4 at TJU, 3 at NU) role-played a discharge encounter based on a brief description of a clinical encounter. These sessions were audiorecorded and transcribed. Members of the study team then mapped residents’ statements from each session to checklist items, which helped identify items that were not covered or that needed rewording to clarify appropriate application. In addition, residents’ language was used to revise the “yes if” and “no if” examples provided for each checklist item.

We then conducted the second wave of focus groups in May 2018. In these focus groups, each checklist item was reviewed individually, with patient participants providing general feedback on item relevance as well as suggested wording changes. Focus group transcripts were coded using the checklist items as an a priori code set.

As the purpose of the checklist is for use in simulation-based education, the checklist was delineated and formatted for use by an assessor who is scoring the learner (step 8). The study team then reevaluated the checklist, with a specific focus on ensuring clarity, comprehensiveness, and parsimony.

For step 9, 2 collaborating simulation education experts assessed the checklist for clarity, ease of use, and fairness from the perspective of the checklist “user” or assessor. Each expert used the checklist to score 2 audiorecorded samples of simulated discharge discussions that were obtained during step 6. Following scoring, each expert completed a brief interview with a study investigator (K.L.R., D.M.M.) to understand scoring decisions and to seek clarity on which items would benefit from revision.

Steps 10–12: Finalize checklist, apply and disseminate, periodically review and revise

Upon finalization of the UCC (step 10), the team prepared for its dissemination through this publication and several other formats (step 11). Since September 2019, the checklist has been applied in a trial of an educational intervention to teach emergency medicine residents best practices for discharging a patient from the ED in the setting of diagnostic uncertainty. Knowledge gained through this education trial may lead to further refinement of the current checklist, consistent with the final step of the checklist development process (step 12).

Outcomes

Focus group participants

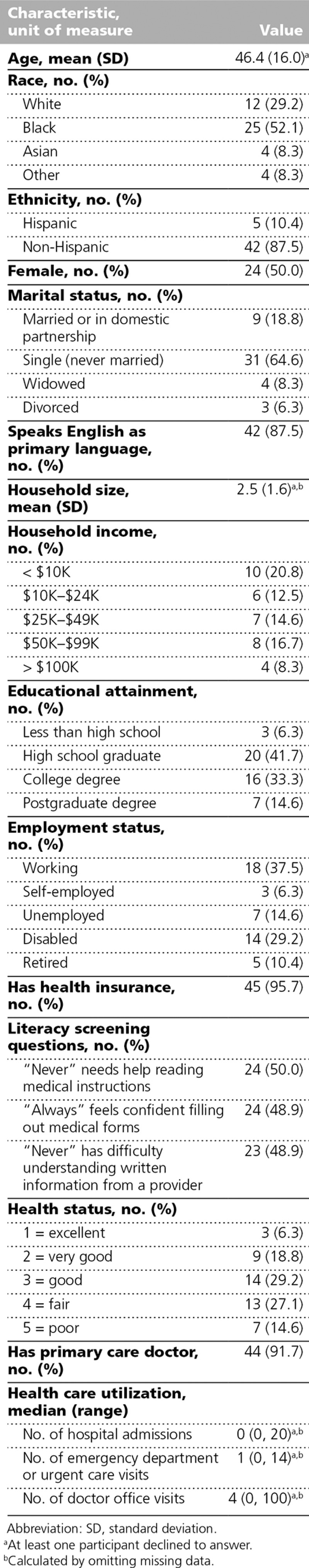

A total of 23 patients participated across the 4 wave 1 focus groups and 25 patients across the 4 wave 2 focus groups. The focus group participants were 50% female and had a mean age of 46 years. Participants had a wide range of educational attainment, income, and employment status. The majority had health insurance (95.7%) (Table 2).

Table 2.

Demographic Characteristics of 48 Focus Group Participants Who Participated in Development of the Uncertainty Communication Checklist for Patient Discharge From the Emergency Department, Thomas Jefferson University Hospital and Northwestern Memorial Hospital, 2018

Steps 1–5: Initial checklist creation

List 1 outlines the team’s operational definition for the purpose and scope of the UCC. The team’s literature review and the subsequent expert panel call initially resulted in a total of 92 checklist items that had significant overlap. After consolidation, there were 31 checklist items. Wave 1 focus group analysis did not reveal any new emergent codes that required a new checklist item. Overall, focus group findings supported existing items and informed item wording (step 2).

The team sorted the 31 items into 9 categories: introduction, test results/ED summary, uncertain diagnosis, next steps/follow-up, self-care, reasons to return/red flags, patient questions, patient reaction and teach back, and general communication skills. Subsequent item review by expert panelists resulted in deletion of 2 items, creation of 1 item, and consolidation of 7 items into 2 items (steps 4–5).

Steps 6–9: Review, revise, and evaluate the checklist content

Using wave 2 focus group feedback, we removed 2 checklist items. For example, patients were confused about the item: “Provide the patient with an overview of the topics that will be covered.” They commented that this would be “awkward” in conversation and they would rather that physicians just tell them the information. This item was originally included because research in the emergency setting suggests that “information structuring” improves information recall22,23 (steps 6 and 7).

To delineate and format the checklist (step 8), we determined that each checklist item would receive equal weight, with each item receiving a dichotomous score of 1 = done correctly or 0 = done incorrectly/not done. No partial points are awarded for “done incorrectly” and no items are considered “critical actions.” At this stage, 2 additional items were removed because of redundancy and 1 item was reworded to improve clarity.
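Because every item carries equal weight and is scored 1 or 0, a learner's total is simply the count of items done correctly. The Python sketch below is a hypothetical illustration of that rule (the function name and the dict-of-booleans input are assumptions); it does not encode any mastery threshold, since none is reported here.

```python
def score_ucc(ratings):
    """Total score for one observed encounter.

    `ratings` maps item number to True (done correctly) or False (done
    incorrectly or not done). Each item is worth exactly 1 point: there is no
    partial credit and no item is weighted as a critical action.
    """
    for item, value in ratings.items():
        if not isinstance(value, bool):
            raise ValueError(f"item {item} must be scored dichotomously")
    return sum(ratings.values())


# Usage: a hypothetical encounter in which only item 20 (eye contact) was missed.
ratings = {item: item != 20 for item in range(1, 22)}
print(f"{score_ucc(ratings)}/{len(ratings)}")  # prints "20/21"
```

Accepting only booleans makes the "no partial points" rule explicit: a value such as 0.5 is rejected rather than silently counted.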

After evaluation of the checklist with 2 simulation experts, the wording of the “yes if” and “no if” examples was modified for 4 items and 1 item had a “no if” statement added (step 9).
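The item counts reported across steps 2 through 8 can be reconciled against the final 21-item checklist with simple arithmetic. The Python sketch below restates only numbers given in this section (the function name is hypothetical); rewording an item (step 8) and editing "yes if"/"no if" examples (step 9) leave the count unchanged, so they are not listed.

```python
def reconcile_item_counts():
    """Trace the UCC item count through the revisions reported above."""
    steps = [
        ("consolidated candidate list (step 2)", 31),
        ("expert panel deletions (steps 4-5)", -2),
        ("expert panel new item (steps 4-5)", +1),
        ("expert panel: 7 items consolidated into 2 (steps 4-5)", -(7 - 2)),
        ("wave 2 focus group removals (steps 6-7)", -2),
        ("redundancy removals during formatting (step 8)", -2),
    ]
    running = 0
    trace = []
    for label, change in steps:
        running += change
        trace.append((label, running))
    return trace


for label, count in reconcile_item_counts():
    print(f"{count:>3}  {label}")
```

The running totals are 31, 29, 30, 25, 23, and 21, so the reported revisions account exactly for the final 21 items.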

Steps 10–12: Finalize checklist, apply and disseminate, periodically review and revise

Application of the 12-step CDC process resulted in a final 21-item UCC, covering 7 major categories: introduction, test results/ED summary, no/uncertain diagnosis, next steps/follow-up, home care, reasons to return, and general communication skills. See List 2 for the UCC, and Supplemental Digital Appendix 1, available at http://links.lww.com/ACADMED/A807, for the UCC including scoring instructions.

Discussion

We developed the UCC to guide the teaching and assessment of trainees for discharging patients from the ED in the setting of diagnostic uncertainty. Our patient-centered systematic approach to checklist development built upon the rigor of the preexisting CDC and provides an illustration of how to integrate patient feedback into the design of assessment tools when appropriate.

Patients have been engaged in the past to develop other communication rating guides, yet we are not aware of any other work in which patients have directly assisted throughout the checklist development process. Standardized patients were involved in the development of the SEGUE checklist,15 and audio-recordings of patient interviews were used to inform item validation for the Patient Perceptions of Patient-Centeredness scale.30,31 There are also many communication checklists that employ patients as raters or scorers of the checklist.30,34,37 The process by which we developed the UCC used a well-established checklist development structure while incorporating patient input at all key decision points.

The UCC is particularly novel among checklists developed for use with SBML. Most checklists developed specifically for an SBML environment have targeted procedural skills (e.g., central line placement, lumbar puncture, thoracentesis).19,20,65 To our knowledge, SBML has been used to teach communication skills only in the context of breaking bad news and code status discussions,9,10,16,66 and those checklists were not developed with patient input.

The degree to which patient involvement during the checklist development phase may be beneficial likely varies depending on the intended context of use. Actively involving patients alongside experts in development of communication-based checklists may result in a more patient-centered product, whereas patient involvement is likely less informative for technical medical procedures. The degree of influence patient involvement has on checklist development may also depend on the amount of prior research on the topic that has involved direct patient input. For example, there is a large and growing body of literature focused on patient and family reactions to code status discussions.67–69 By contrast, diagnostic uncertainty has been minimally explored in prior work, and there is scant literature about the patient experience of a conversation about uncertainty in the acute care setting; thus, it is a context particularly well suited for patient involvement.

Overall, our experience with patient engagement was positive, and patients influenced both which items were excluded from the final checklist and how the items were worded. Embarking on this process, we initially had concerns about patients’ ability to participate in some steps, particularly step 8, in which they helped to determine the scoring of the checklist; however, patient participants quickly understood this task and all other portions of the process. One challenge was that patients frequently recommended eliminating items that the team had included because of a strong evidence base for efficacy in communication (e.g., use of teach-back, which was removed in step 4, and use of information structuring to improve recall, which was removed in step 7). Ultimately, the research team followed the patient recommendations on these items because, although they are best practices for general communication, removing them focused the checklist more clearly on the content of the conversation related to uncertainty.

This patient-centered checklist development process incorporated input from patient groups at 3 stages (steps 2, 6, and 8), which directly informed the revisions made by the study team in steps 3 and 7. Additionally, the patient expert panelists were involved in steps 2, 4, 5, 7, and 9. This process of iterative involvement of experts and patients directly informed many of the checklist items. Although time intensive, we believe this process was highly valuable and is easily replicable and transferable to other contexts of patient-centered checklist development.

Limitations

Our approach has several advantages, yet there are also limitations. The main limitation of this approach is that it is time and resource intensive. Development of the UCC involved not only the coordination of patient recruitment and expert panel meetings but also weekly internal team meetings and additional asynchronous work. Another limitation is that processes involving group dynamics, such as focus groups and expert panel sessions, may be influenced by a single forceful opinion and may not represent true consensus. This risk is particularly present when patients are in the same setting as subject matter experts, as they were in our panel, because patient participants may feel that their input is less important or relevant than that of a subject matter expert. The use of iterative feedback, with the opportunity for anonymous individual input via emailed questionnaires, was intended to mitigate this risk. Gathering input from patients in focus groups, rather than solely via an expert panel, also minimizes this risk. In our experience developing this checklist, there were no significant disagreements in either setting, and participants were all very vocal, regardless of their role (patient or expert) or setting (focus group or expert panel). The patients we engaged, however, were all recruited from 2 inner-city academic health systems, and the majority were insured. Perspectives of patients in rural and suburban areas and of those who are uninsured may be missing.

Another limitation relates specifically to the topic area: diagnostic uncertainty in the acute care setting. Diagnostic uncertainty is a relatively new focus for research, so there are few published data on the topic and little established expertise. Nonetheless, the 7 health professionals on our expert panel (5 practicing emergency physicians and 2 research scientists) brought expertise in health care communication, health literacy, diagnostic uncertainty, underserved populations, simulation, and education.

In addition, our checklist was designed for an educational setting. While patients were involved in its development, the checklist was not designed for use during real patient encounters. Involving patients during doctor–patient interactions using a physical checklist is a “next frontier” of patient engagement.70 We believe that this type of engagement will be well suited for the topic of diagnostic uncertainty. Finally, while the UCC has content validity based in its codevelopment with patients to whom the content applies, we are unable to assess the scoring validity of the checklist at this time as we have not yet obtained scores for a cohort of participants.

Implications and Future Directions

With an ever-increasing focus on patient-centered care delivery, our method of incorporating patient input into the checklist development process has many possible applications, particularly in clinical communication skills. Next steps for our work with the UCC include assessment of the impact of the UCC on patient outcomes during and after an ED discharge, including subsequent health care utilization. It is conceivable that having more direct conversations regarding the presence of ongoing uncertainty, as encouraged by the UCC, could affect subsequent utilization in either direction. Patients may trust providers more after these conversations and feel more confident staying home to “wait out” their symptoms. Alternatively, patients may feel more motivated to seek follow-up care because of the greater emphasis on ongoing uncertainty.

Additionally, prior studies suggest that patients struggle with uncertainty even when a diagnosis is confirmed (e.g., uncertainty about treatment success or prognosis).50,71–74 Thus, many of the items within this checklist may address needs that are unique neither to patients with diagnostic uncertainty nor to patients being discharged from the ED. Future work is needed to explore whether a core set of UCC items should be incorporated as a standard process across all clinical discharges.

List 1

Operational Definition of the Purpose and Scope of the Evaluation for the Uncertainty Communication Checklist for Patient Discharge From the Emergency Department

Checklist will cover:

Diagnostic uncertainty

Medical uncertainty

Acute care setting, acute illness

Adult self-care

Patients being discharged home

Information giving

Communication skills deemed important for this topic (e.g., demonstrating empathy/opportunity to ask questions)

Checklist will not cover:

Illness trajectory uncertainty or treatment uncertainty

Personal or social uncertainty

Chronic illness/symptoms

Pediatrics, caregivers

Admitted or observation status

General history taking

All general good communication practices (e.g., shaking hands, sitting down)

List 2

Uncertainty Communication Checklist for Patient Discharge From the Emergency Department

Introduction

1. Explain to the patient that they are being discharged

2. Ask if there is anyone else whom the patient wishes to have included in the conversation in person and/or by phone

Test results/ED summary

3. Clearly state that either “life-threatening” or “dangerous” conditions have not been found

4. Discuss diagnoses that were considered (using both medical and lay terminology)

5. Communicate relevant results of tests to patients (normal or abnormal)

6. Ask patient if there are any questions about testing and/or results

7. Ask patient if they were expecting anything else to be done during their encounter—if yes, address reasons not done

No/uncertain diagnosis

8. Discuss possible alternate or working diagnoses

9. Clearly state that there is not a confirmed explanation (diagnosis) for what the patient has been experiencing

10. Validate the patient’s symptoms

11. Discuss that the ED role is to identify conditions that require immediate attention

12. Normalize leaving the ED with uncertainty

Next steps/follow-up

13. Suggest realistic expectations/trajectory for symptoms

14. Discuss next tests that are needed, if any

15. Discuss who to see next and in what time frame

Home care

16. Discuss a plan for managing symptoms at home

17. Discuss any medication changes

18. Ask patient if there are any questions and/or anticipated problems related to next steps (self-care and future medical care) after discharge

Reasons to return

19. Discuss what symptoms should prompt immediate return to the ED

General communication skills

20. Make eye contact

21. Ask patient if there are any other questions or concerns

Abbreviation: ED, emergency department.

Acknowledgments:

The authors thank the following members of the expert panel for their assistance in developing and revising this checklist: Kirsten Engel, MD; Erik Hess, MD, MS; Annemarie Jutel, RN, BPhED(Hons), PhD; Juanita Lavalais; Larry Loebell, BA, MA, MFA; Zachary Meisel, MD, MPH, MSHP; Erica Shelton, MD, MPH, MHS, FACEP; John Vozenliek, MD; Gail Weingarten; and Michael Wolf, PhD, MPH. In addition, the authors thank the following simulation experts for their assistance with the final checklist evaluation: Ronald Hall, MD, and Nahzinine Shakeri, MD, MS.

Supplementary Material

Footnotes

Supplemental digital content for this article is available at http://links.lww.com/ACADMED/A807.

Funding/Support: This project was supported by grant number R18HS025651 from the Agency for Healthcare Research and Quality. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality.

Other disclosures: None reported.

Ethical approval: This project was granted exemption by the Thomas Jefferson University Institutional Review Board on February 26, 2018 (Control #18E.135); an IRB authorization agreement was set up to allow Northwestern University to rely on Thomas Jefferson University’s Institutional Review Board.

References

- 1. Johns Hopkins University; Armstrong Institute for Patient Safety and Quality. Improving the Emergency Department Discharge Process: Environmental Scan Report. Rockville, MD: Agency for Healthcare Research and Quality; 2014.

- 2. Waisman Y, Siegal N, Siegal G, Amir L, Cohen H, Mimouni M. Role of diagnosis-specific information sheets in parents’ understanding of emergency department discharge instructions. Eur J Emerg Med. 2005;12:159–162.

- 3. Lawrence LM, Jenkins CA, Zhou C, Givens TG. The effect of diagnosis-specific computerized discharge instructions on 72-hour return visits to the pediatric emergency department. Pediatr Emerg Care. 2009;25:733–738.

- 4. Wen LS, Espinola JA, Kosowsky JM, Camargo CA Jr. Do emergency department patients receive a pathological diagnosis? A nationally-representative sample. West J Emerg Med. 2015;16:50–54.

- 5. Rising KL, Hudgins A, Reigle M, Hollander JE, Carr BG. “I’m just a patient”: Fear and uncertainty as drivers of emergency department use in patients with chronic disease. Ann Emerg Med. 2016;68:536–543.

- 6. Rising KL, Padrez KA, O’Brien M, Hollander JE, Carr BG, Shea JA. Return visits to the emergency department: The patient perspective. Ann Emerg Med. 2015;65:377–386.

- 7. Accreditation Council for Graduate Medical Education, American Board of Emergency Medicine. The Emergency Medicine Milestone Project. http://www.acgme.org/acgmeweb/Portals/0/PDFs/Milestones/EmergencyMedicineMilestones.pdf. Published 2012. Accessed December 6, 2019.

- 8. Rising KL, Papanagnou D, McCarthy D, Gentsch A, Powell R. Emergency medicine resident perceptions about the need for increased training in communicating diagnostic uncertainty. Cureus. 2018;10:e2088.

- 9. Vermylen JH, Wood GJ, Cohen ER, Barsuk JH, McGaghie WC, Wayne DB. Development of a simulation-based mastery learning curriculum for breaking bad news. J Pain Symptom Manage. 2019;57:682–687.

- 10. Sharma RK, Szmuilowicz E, Ogunseitan A, et al. Evaluation of a mastery learning intervention on hospitalists’ code status discussion skills. J Pain Symptom Manage. 2017;53:1066–1070.

- 11. Barsuk JH, Cohen ER, Potts S, et al. Dissemination of a simulation-based mastery learning intervention reduces central line-associated bloodstream infections. BMJ Qual Saf. 2014;23:749–756.

- 12. Barsuk JH, Cohen ER, Williams MV, et al. The effect of simulation-based mastery learning on thoracentesis referral patterns. J Hosp Med. 2016;11:792–795.

- 13. Barsuk JH, McGaghie WC, Cohen ER, O’Leary KJ, Wayne DB. Simulation-based mastery learning reduces complications during central venous catheter insertion in a medical intensive care unit. Crit Care Med. 2009;37:2697–2701.

- 14. Schirmer JM, Mauksch L, Lang F, et al. Assessing communication competence: A review of current tools. Fam Med. 2005;37:184–192.

- 15. Ripley BA, Tiffany D, Lehmann LS, Silverman SG. Improving the informed consent conversation: A standardized checklist that is patient centered, quality driven, and legally sound. J Vasc Interv Radiol. 2015;26:1639–1646.

- 16. Szmuilowicz E, Neely KJ, Sharma RK, Cohen ER, McGaghie WC, Wayne DB. Improving residents’ code status discussion skills: A randomized trial. J Palliat Med. 2012;15:768–774.

- 17. Stufflebeam DL. Guidelines for developing evaluation checklists: The checklists development checklist (CDC). https://wmich.edu/sites/default/files/attachments/u350/2014/guidelines_cdc.pdf. Published 2000. Accessed December 10, 2019.

- 18. Wayne DB, Butter J, Siddall VJ, et al. Mastery learning of advanced cardiac life support skills by internal medicine residents using simulation technology and deliberate practice. J Gen Intern Med. 2006;21:251–256.

- 19. Barsuk JH, Cohen ER, Caprio T, McGaghie WC, Simuni T, Wayne DB. Simulation-based education with mastery learning improves residents’ lumbar puncture skills. Neurology. 2012;79:132–137.

- 20. Wayne DB, Barsuk JH, O’Leary KJ, Fudala MJ, McGaghie WC. Mastery learning of thoracentesis skills by internal medicine residents using simulation technology and deliberate practice. J Hosp Med. 2008;3:48–54.

- 21. Wayne DB, Cohen E, Makoul G, McGaghie WC. The impact of judge selection on standard setting for a patient survey of physician communication skills. Acad Med. 2008;83(10 suppl):S17–S20.

- 22. Clayman ML, Makoul G, Harper MM, Koby DG, Williams AR. Development of a shared decision making coding system for analysis of patient-healthcare provider encounters. Patient Educ Couns. 2012;88:367–372.

- 23. Callahan CM, Unverzagt FW, Hui SL, Perkins AJ, Hendrie HC. Six-item screener to identify cognitive impairment among potential subjects for clinical research. Med Care. 2002;40:771–781.

- 24. Harris PA, Taylor R, Thielke R, Payne J, Gonzalez N, Conde JG. Research electronic data capture (REDCap)—A metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42:377–381.

- 25. Harris PA, Taylor R, Minor BL, et al.; REDCap Consortium. The REDCap consortium: Building an international community of software platform partners. J Biomed Inform. 2019;95:103208.

- 26. van Thiel J, Kraan HF, Van Der Vleuten CP. Reliability and feasibility of measuring medical interviewing skills: The revised Maastricht history-taking and advice checklist. Med Educ. 1991;25:224–229.

- 27. Novack DH, Dubé C, Goldstein MG. Teaching medical interviewing. A basic course on interviewing and the physician-patient relationship. Arch Intern Med. 1992;152:1814–1820.

- 28. Cohen DS, Colliver JA, Marcy MS, Fried ED, Swartz MH. Psychometric properties of a standardized-patient checklist and rating-scale form used to assess interpersonal and communication skills. Acad Med. 1996;71(1 suppl):S87–S89.

- 29. Whelan GP. Educational Commission for Foreign Medical Graduates: Clinical skills assessment prototype. Med Teach. 1999;21:156–160.

- 30. Henbest RJ, Stewart M. Patient-centredness in the consultation. 2: Does it really make a difference? Fam Pract. 1990;7:28–33.

- 31. Stewart M, Meredith L, Ryan BL, Brown JB. The Patient Perception of Patient-Centeredness Questionnaire. Working Paper Series #04-1. London, ON, Canada: The University of Western Ontario; 2004. https://www.schulich.uwo.ca/familymedicine/research/csfm/publications/working_papers/index.html. Accessed February 14, 2020.

- 32. Frankel RM, Stein T. Getting the most out of the clinical encounter: The four habits model. J Med Pract Manage. 2001;16:184–191.

- 33. Makoul G. Essential elements of communication in medical encounters: The Kalamazoo consensus statement. Acad Med. 2001;76:390–393.

- 34. Lipner RS, Blank LL, Leas BF, Fortna GS. The value of patient and peer ratings in recertification. Acad Med. 2002;77(10 suppl):S64–S66.

- 35. Makoul G. The SEGUE Framework for teaching and assessing communication skills. Patient Educ Couns. 2001;45:23–34.

- 36. Kurtz S, Silverman J, Benson J, Draper J. Marrying content and process in clinical method teaching: Enhancing the Calgary-Cambridge guides. Acad Med. 2003;78:802–809.

- 37. Epstein RM, Dannefer EF, Nofziger AC, et al. Comprehensive assessment of professional competence: The Rochester experiment. Teach Learn Med. 2004;16:186–196.

- 38. Kalet A, Pugnaire MP, Cole-Kelly K, et al. Teaching communication in clinical clerkships: Models from the Macy Initiative in Health Communications. Acad Med. 2004;79:511–520.

- 39. Lang F, McCord R, Harvill L, Anderson DS. Communication assessment using the common ground instrument: Psychometric properties. Fam Med. 2004;36:189–198.

- 40. Stillman PL, Brown DR, Redfield DL, Sabers DL. Construct validation of the Arizona clinical interview rating scale. Educ Psychol Meas. 1977;37:1031–1038.

- 41. Bhise V, Meyer AND, Menon S, et al. Patient perspectives on how physicians communicate diagnostic uncertainty: An experimental vignette study. Int J Qual Health Care. 2018;30:2–8.

- 42. Bhise V, Rajan SS, Sittig DF, Morgan RO, Chaudhary P, Singh H. Defining and measuring diagnostic uncertainty in medicine: A systematic review. J Gen Intern Med. 2018;33:103–115.

- 43. Wray CM, Loo LK. The diagnosis, prognosis, and treatment of medical uncertainty. J Grad Med Educ. 2015;7:523–527.

- 44. Ghosh AK. Understanding medical uncertainty: A primer for physicians. J Assoc Physicians India. 2004;52:739–742.

- 45. Politi MC, Clark MA, Ombao H, Légaré F. The impact of physicians’ reactions to uncertainty on patients’ decision satisfaction. J Eval Clin Pract. 2011;17:575–578.

- 46. Hewson MG, Kindy PJ, Van Kirk J, Gennis VA, Day RP. Strategies for managing uncertainty and complexity. J Gen Intern Med. 1996;11:481–485.

- 47. Ackermann S, Heierle A, Bingisser MB, et al. Discharge communication in patients presenting to the emergency department with chest pain: Defining the ideal content. Health Commun. 2016;31:557–565.

- 48. Jutel A. Putting a Name to It: Diagnosis in Contemporary Society. Baltimore, MD: Johns Hopkins University Press; 2011.

- 49. Martin SC, Stone AM, Scott AM, Brashers DE. Medical, personal, and social forms of uncertainty across the transplantation trajectory. Qual Health Res. 2010;20:182–196.

- 50. Zhang Y. Uncertainty in illness: Theory review, application, and extension. Oncol Nurs Forum. 2017;44:645–649.

- 51. Mishel MH. The measurement of uncertainty in illness. Nurs Res. 1981;30:258–263.

- 52. Mishel MH. Uncertainty in acute illness. Annu Rev Nurs Res. 1997;15:57–80.

- 53. Undeland M, Malterud K. Diagnostic work in general practice: More than naming a disease. Scand J Prim Health Care. 2002;20:145–150.

- 54. Engel KG, Buckley BA, Forth VE, et al. Patient understanding of emergency department discharge instructions: Where are knowledge deficits greatest? Acad Emerg Med. 2012;19:E1035–E1044.

- 55. Engel KG, Heisler M, Smith DM, Robinson CH, Forman JH, Ubel PA. Patient comprehension of emergency department care and instructions: Are patients aware of when they do not understand? Ann Emerg Med. 2009;53:454–461.e15.

- 56. Rhodes KV, Bisgaier J, Lawson CC, Soglin D, Krug S, Van Haitsma M. “Patients who can’t get an appointment go to the ER”: Access to specialty care for publicly insured children. Ann Emerg Med. 2013;61:394–403.

- 57. Samuels-Kalow ME, Stack AM, Porter SC. Effective discharge communication in the emergency department. Ann Emerg Med. 2012;60:152–159.

- 58. Vashi A, Rhodes KV. “Sign right here and you’re good to go”: A content analysis of audiotaped emergency department discharge instructions. Ann Emerg Med. 2011;57:315–322.

- 59. Baile WF, Buckman R, Lenzi R, Glober G, Beale EA, Kudelka AP. SPIKES—A six-step protocol for delivering bad news: Application to the patient with cancer. Oncologist. 2000;5:302–311.

- 60. Albers MJ. Communication as reducing uncertainty. In: Proceedings of the 30th ACM International Conference on Design of Communication. 2012.

- 61. Politi MC, Clark MA, Ombao H, Dizon D, Elwyn G. Communicating uncertainty can lead to less decision satisfaction: A necessary cost of involving patients in shared decision making? Health Expect. 2011;14:84–91.

- 62. Stone J, Wojcik W, Durrance D, et al. What should we say to patients with symptoms unexplained by disease? The “number needed to offend”. BMJ. 2002;325:1449–1450.

- 63. Brashers DE. Communication and uncertainty management. J Commun. 2001;51:477–497.

- 64. Body R, Kaide E, Kendal S, Foex B. Not all suffering is pain: Sources of patients’ suffering in the emergency department call for improvements in communication from practitioners. Emerg Med J. 2013:1–6.

- 65. Barsuk JH, McGaghie WC, Cohen ER, Balachandran JS, Wayne DB. Use of simulation-based mastery learning to improve the quality of central venous catheter placement in a medical intensive care unit. J Hosp Med. 2009;4:397–403.

- 66. Cohen ER, Barsuk JH, Moazed F, et al. Making July safer: Simulation-based mastery learning during intern boot camp. Acad Med. 2013;88:233–239.

- 67. Quill TE. Initiating end-of-life discussions with seriously ill patients: Addressing the “elephant in the room.” JAMA. 2000;284:2502–2507.

- 68. Tulsky JA, Fischer GS, Larson S, Roter DL, Arnold RM. Experts practice what they preach. Arch Intern Med. 2003;160:3477.

- 69. Chittenden EH, Clark ST, Pantilat SZ. Discussing resuscitation preferences with patients: Challenges and rewards. J Hosp Med. 2006;1:231–240.

- 70. Latif A, Haider A, Pronovost PJ. Smartlists for patients: The next frontier for engagement? NEJM Catalyst. https://catalyst.nejm.org/patient-centered-checklists-next-frontier/. Published 2017. Accessed December 6, 2019.

- 71. Bailey DE, Mishel MH, Belyea M, Stewart JL, Mohler J. Uncertainty intervention for watchful waiting in prostate cancer. Cancer Nurs. 2004;27:339–346.

- 72. Zhang Y, Kwekkeboom K, Petrini M. Uncertainty, self-efficacy, and self-care behavior in patients with breast cancer undergoing chemotherapy in China. Cancer Nurs. 2014;38:19–26.

- 73. Mishel MH, Germino BB, Lin L, et al. Managing uncertainty about treatment decision making in early stage prostate cancer: A randomized clinical trial. Patient Educ Couns. 2009;77:349–359.

- 74. Hall DL, Mishel MH, Germino BB. Living with cancer-related uncertainty: Associations with fatigue, insomnia, and affect in younger breast cancer survivors. Support Care Cancer. 2014;22:2489–2495.
