Abstract

Memory retrieval is thought to depend on interactions between hippocampus and cortex, but the nature of representation in these regions and their relationship remain unclear. Here, we performed an ultra-high field fMRI (7T) experiment, comprising perception, learning and retrieval sessions. We observed a fundamental difference between representations in hippocampus and high-level visual cortex during perception and retrieval. First, while object-selective posterior fusiform cortex showed consistent responses that allowed us to decode object identity across both perception and retrieval one day after learning, object decoding in hippocampus was much stronger during retrieval than perception. Second, in visual cortex but not hippocampus, there was consistency in response patterns between perception and retrieval, suggesting that substantial neural populations are shared between perception and retrieval. Finally, the decoding in hippocampus during retrieval was not observed when retrieval was tested on the same day as learning, suggesting that the retrieval process itself is not sufficient to elicit decodable object representations. Collectively, these findings suggest that while cortical representations are stable between perception and retrieval, hippocampal representations are much stronger during retrieval, implying some form of reorganization of the representations between perception and retrieval.

Keywords: fMRI, hippocampus, memory retrieval, perception, visual cortex

Introduction

Memory retrieval is the process by which humans bring previously experienced information back to mind (Tulving 2002). For over a century, researchers have proposed that memory retrieval re-engages processes that were active during the original experience (James 1890; Penfield and Perot 1963; Tulving and Thomson 1973; Norman and O’Reilly 2003; Rugg et al. 2008; Danker and Anderson 2010; Liu et al. 2012; Tambini and Davachi 2013). Consistent with this view, functional imaging studies have demonstrated that perception and retrieval of visual stimuli (<1 h delay after the encoding) evoke similar patterns of BOLD (blood oxygen level dependent) response across the cortex (Kosslyn et al. 1997; O’Craven and Kanwisher 2000; Buchsbaum et al. 2012). In particular, studies of visual imagery and retrieval suggest reinstatement of not just category-specific (Polyn et al. 2005; Reddy et al. 2010), but also item-specific patterns in visual cortex (Stokes et al. 2009; Lee et al. 2012; Riggall and Postle 2012) and individual event-specific patterns in parahippocampal cortex (Staresina et al. 2012). While these studies provide evidence that retrieval is supported by a reinstatement of the cortical representation elicited during perception or encoding, it remains unclear how these dynamics relate to the hippocampus, which is one of the key regions in memory processes.

Hippocampus is considered critical for memory retrieval (Dudai 2004), and the hippocampal representations during retrieval are thought to be linked to its activation during encoding (Gordon et al. 2014; Danker et al. 2017). Memory models suggest that through cortico-hippocampal interactions, hippocampus binds multiple elements of the original event into a novel unitary representation through consolidation, and reinstates the components together during retrieval (Sutherland and Rudy 1989; Rudy and Sutherland 1995; O’Reilly and Rudy 2001; Moscovitch et al. 2016). Thus, the hippocampal representation during retrieval is not a simple replay or reinstatement of the neural responses during the original experience. However, it remains unclear how hippocampal representations compare with cortical representations both during the original experience and memory retrieval.

To directly compare hippocampal and cortical representations during perception and retrieval, we performed an event-related functional magnetic resonance imaging (fMRI) experiment, combined with a simple object memory task. We directly measured the information available in the distributed response across posterior fusiform cortex (pFs), which is thought to be involved in the high-level processing of visual objects (Kravitz et al. 2013), and hippocampus using ultra-high field (7T) fMRI during the perception and retrieval of object information after a one-day delay (Fig. 1).

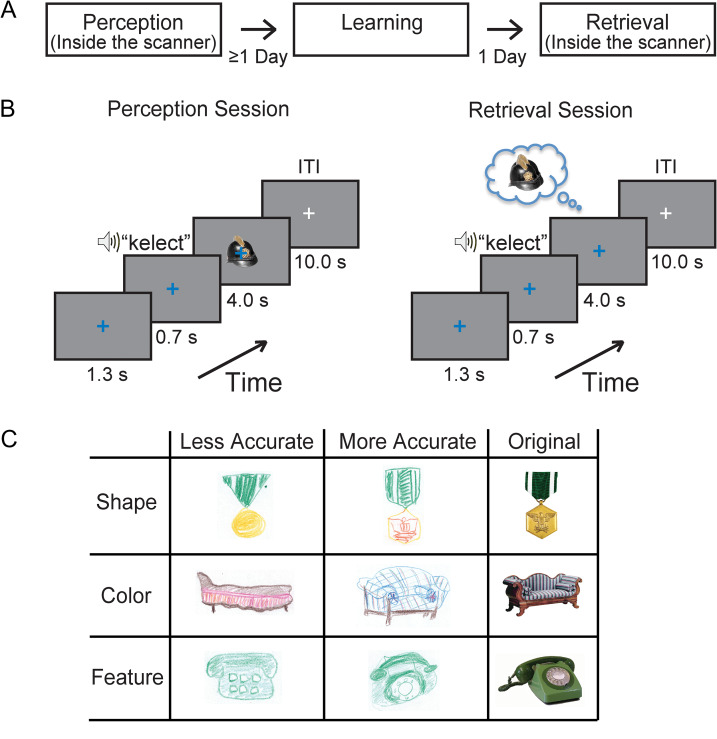

Figure 1.

Experimental design. (A) The task comprised separate Perception, Learning, and Retrieval sessions, with a one-day delay between Learning and Retrieval. (B) On each trial of the Perception and the Retrieval sessions, a blue fixation cross was followed by an auditory cue presentation. For each cue, participants were instructed to view (Perception session) or retrieve (Retrieval session) the paired image as long as the blue fixation cross remained on the screen. During the inter-trial interval (ITI), the color of the fixation cross changed to white. (C) Examples of participants’ drawings. There were variations in accuracy of shape, color, and feature.

We find that while object identity can be consistently decoded from visual cortex during perception and retrieval one day after learning, object decoding from hippocampal responses is significantly stronger during retrieval than perception. Further, hippocampal representations show less consistency between perception and retrieval than cortical representations. These results suggest that while cortical representations are stable following perception, hippocampal representations are modified during the first day following encoding. Moreover, this change of the representations results in stronger and more consistently distinct representations for individual objects in the hippocampus.

Materials and Methods

Participants

Eighteen neurologically intact right-handed participants (10 females, age 24.22 ± 0.81 years) took part in the main experiment testing retrieval after a one-day delay. In a follow-up experiment of retrieval on the same day as learning, 16 neurologically intact right-handed participants (nine females, age 25.25 ± 0.83 years) were recruited. All participants provided written informed consent for the procedure in accordance with protocols approved by the NIH Institutional Review Board.

Tasks and Stimuli

In the main experiment, participants completed separate Perception, Learning, and Retrieval sessions (Fig. 1A) as well as a drawing test immediately following the Retrieval session. The Perception and Retrieval sessions were both conducted inside the MRI scanner, while the Learning session was conducted in a behavioral testing room outside the scanner. The time between the Perception and Learning sessions was not fixed, while the Retrieval session always occurred on the day immediately following the Learning session.

Perception Session

Participants were presented with fixed pairings of 14 auditory cues (pseudowords) and visual images inside the scanner in an event-related design. There were six runs consisting of 28 trials each (two trials per cue-image pair). On each trial, the white fixation cross first changed color to blue, indicating onset of the trial (Fig. 1B). After 1.3 s, participants heard a 700-ms long cue immediately followed by the paired image for 4000 ms. Between trials, there was a 10-s inter-trial interval (ITI). The order of the stimuli was randomized and counterbalanced across runs.
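The trial timings above imply the following per-trial and per-run durations. This is a sketch of the arithmetic only: the constant names are illustrative, and any lead-in or lead-out period at the start or end of a run is not described in the text and is therefore not included.

```python
# Durations taken from the text, in milliseconds (integers avoid float rounding).
FIXATION_MS = 1300    # blue fixation cross before the cue
CUE_MS = 700          # auditory cue
IMAGE_MS = 4000       # image presentation (Perception) or retrieval period (Retrieval)
ITI_MS = 10000        # inter-trial interval
TRIALS_PER_RUN = 28
N_RUNS = 6
N_PAIRS = 14

trial_ms = FIXATION_MS + CUE_MS + IMAGE_MS + ITI_MS   # one full trial
run_ms = trial_ms * TRIALS_PER_RUN                     # trials only, no run padding
trials_per_pair = TRIALS_PER_RUN * N_RUNS // N_PAIRS   # presentations of each pair per session

print(trial_ms / 1000, "s per trial")      # 16.0 s per trial
print(run_ms / 1000, "s of trials per run")  # 448.0 s of trials per run
print(trials_per_pair, "trials per cue-image pair")  # 12 trials per cue-image pair
```

Each cue-image pair therefore contributes 12 trials per session, which is the amount of data available to each half of a split-half analysis (6 trials per pair per half).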

Participants were not informed of any association between the auditory cues and the visual stimuli and were simply asked to passively view the stimuli (Fig. 1B, Perception session). The auditory cues were a man’s voice speaking 1 of 14 two-syllable pseudowords (shisker, manple, tenire, alose, bismen, happer, kelect, prigsle, salpen, towpare, leckot, cetish, finy, poxer), and the visual images were either 1 of 12 objects (bag, phone, chair, clock, flag, guitar, hat, lamp, medal, necklace, shoes, vase) or 1 of 2 faces (an adult man, a little girl). The visual images (~7°) were viewed via a back-projection display (1024 × 768 resolution, 60 Hz refresh rate) with a uniform gray background. We included face stimuli as a “sanity check” for responses in visual cortex, which normally shows clear discrimination between perception of object and face stimuli. In all analyses except for the results in Figure 2B and Supplementary Fig. S1, we focus on the responses for the 12 objects only.

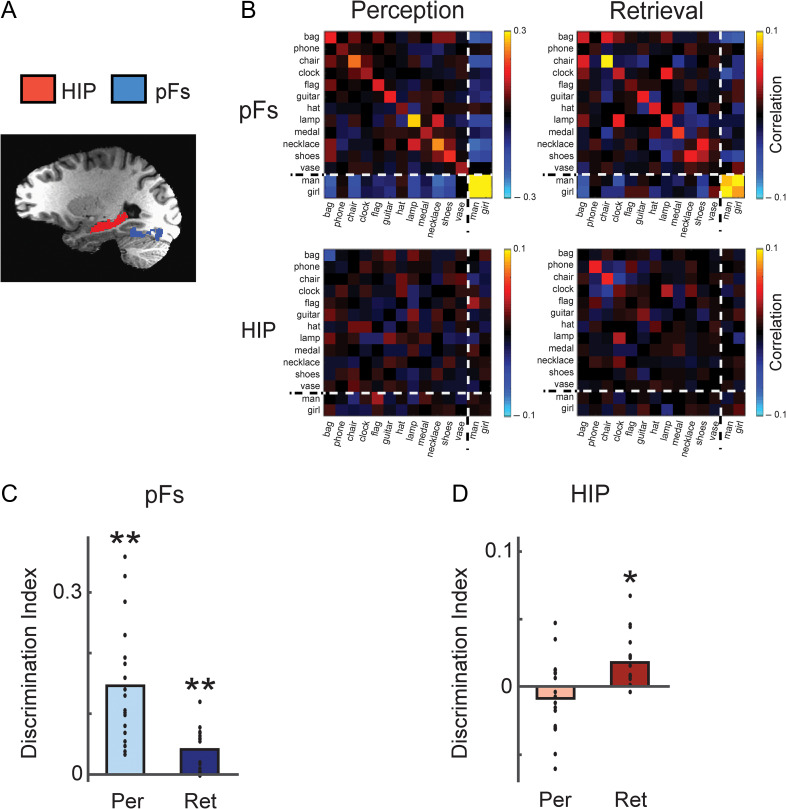

Figure 2.

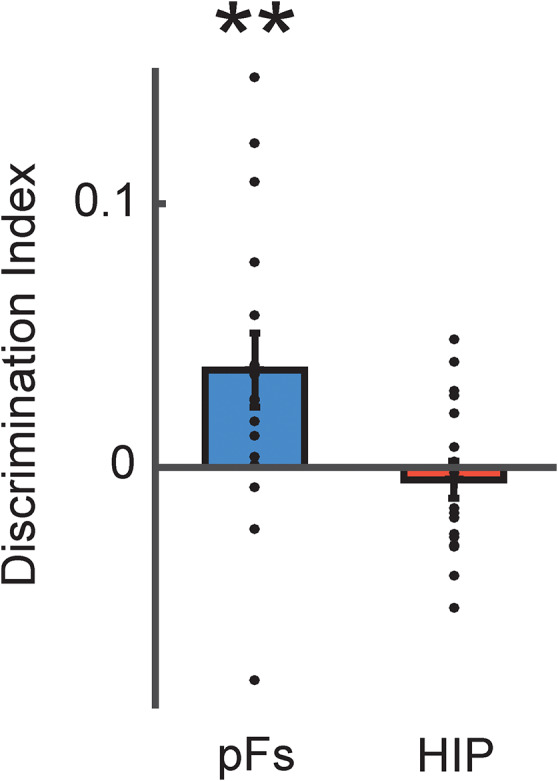

Decoding of object identity based on pFs and hippocampal responses. (A) Regions-of-interest (ROI) are displayed on a participant’s T1-weighted image. Red (hippocampus, HIP) and blue (posterior fusiform cortex, pFs) areas indicate voxels that were included in each ROI. (B) Similarity matrices of pFs and hippocampus averaged across participants during perception and retrieval. The main diagonal in each matrix, from the top left to the bottom right corner, contains the correlations between a visual object and itself in the two halves of the data. The dotted lines indicate the division between objects and faces in the matrix. (C, D) Average discrimination indices for pFs (C) and hippocampus (D) during perception and retrieval. HIP showed significant discrimination during retrieval but not perception, whereas pFs showed positive discrimination during both perception and retrieval sessions. *P < 0.05; **P < 0.01. Each dot indicates the mean value of the object discrimination indices in each participant.

Learning Session

This session was conducted outside the scanner on a separate day (on average 9.59 ± 2.94 days after the Perception session) (Fig. 1A). Participants were trained to explicitly associate the 14 auditory cue and visual image pairs for about one hour. This session consisted of 8 blocks of 42 trials. On each trial, the white fixation cross first changed color to blue, indicating onset of the trial. After 1.3 s, participants heard a 700-ms long recording of the spoken nonsense word (auditory cue), followed by a 2-s presentation of the visual image corresponding to the cue. The ITI was 1 s. Participants were asked to memorize all pairs and specific details of the visual image when they heard the cues and saw the images. To motivate their learning, after the fourth and eighth blocks, they were given two different forced-choice tests: a category test and an exemplar test. In the category test, they were asked to choose the matching object category (basic-level category such as “chair”) between two written category names for each given auditory cue. In the exemplar test, they had to choose the paired exemplar image between two choices from the same category. Both tests were conducted for all cue-image pairs. Participants exhibited strong performance in both tests after learning (after the eighth block): 97.68% ± 1.52% for the category test and 98.61% ± 0.78% for the exemplar test.

Retrieval Session

This session was identical to the Perception session except that no visual images were presented. Participants were instructed to retrieve the specific visual image given by the auditory cue as long as the blue fixation cross (4 s) remained on the screen in the absence of any visual object or face image (Fig. 1B, Retrieval session).

Drawing Test

This test was conducted outside the scanner immediately following the Retrieval session. For each cue, participants were asked to draw the paired image retrieved from their memory. The drawings were scored by two examiners without any information about the participant and corresponding fMRI data for each drawing, based on specific criteria including color, overall shape, and object features. In terms of color, each drawing of an object scored three points if the drawing contained at least two major colors of the object in the correct locations, two points if the drawing contained at least two major colors but in the wrong locations, and one point if the drawing contained at least one color of the object. For the overall shape, each drawing of an object scored three points if the overall shape and orientation were identical to those of the original object, two points if the shape or orientation were slightly different, and one point if the drawing included at least some partial shape. In terms of object features, each drawing of an object was awarded one point for each specific feature. For example, for the medal, the striped pattern, eagle, and arrow could each be awarded one point (Fig. 1C, Supplementary Fig. S4). The scores from the two examiners were averaged. The total score of each participant was derived by adding the scores from the color, overall shape, and object features criteria, and then normalized to 100%, which indicates a perfect match.
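The scoring rules above can be sketched as a small function. Several details here are assumptions rather than part of the described procedure: that a criterion scores zero when none of its conditions is met, that the maximum for a drawing is 3 (color) + 3 (shape) + the number of scorable features, and that normalization is applied per drawing before averaging the two examiners. The function names and the example scores are hypothetical.

```python
def drawing_percent(color, shape, features, n_features):
    """Percent score for one drawing from one examiner."""
    if not (0 <= color <= 3 and 0 <= shape <= 3 and 0 <= features <= n_features):
        raise ValueError("score outside the allowed range")
    # Assumed maximum: full color + full shape + every scorable feature.
    max_points = 3 + 3 + n_features
    return 100.0 * (color + shape + features) / max_points


def averaged_percent(examiner_a, examiner_b, n_features):
    """Mean of the two examiners' percent scores for the same drawing."""
    a = drawing_percent(*examiner_a, n_features)
    b = drawing_percent(*examiner_b, n_features)
    return (a + b) / 2.0


# Hypothetical scores for the medal, which has three scorable features
# (striped pattern, eagle, arrow); tuples are (color, shape, features).
score = averaged_percent((3, 2, 2), (3, 3, 2), n_features=3)
print(round(score, 2))  # 83.33
```

Under these assumptions a score of 100% corresponds to full marks on all three criteria, matching the description of a perfect match.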

Follow-up Experiment

To examine the neural responses during short-delay retrieval after learning (similar to prior studies on visual imagery (Cichy et al. 2012; Lee et al. 2012)), we conducted a similar fMRI experiment with the Learning and Retrieval sessions occurring on the same day in a different set of participants. For this set of participants, there was no Perception session, given the difficulty of conducting 5 h of testing in one day with 4 h of scanning. The Learning and Retrieval sessions were identical to those in the main experiment, except the delay between sessions was ~30 min (Fig. 5). Participants also exhibited strong performance in the forced-choice tests given after the Learning session: 98.96 ± 1.08% for the category test and 100% for the exemplar test.

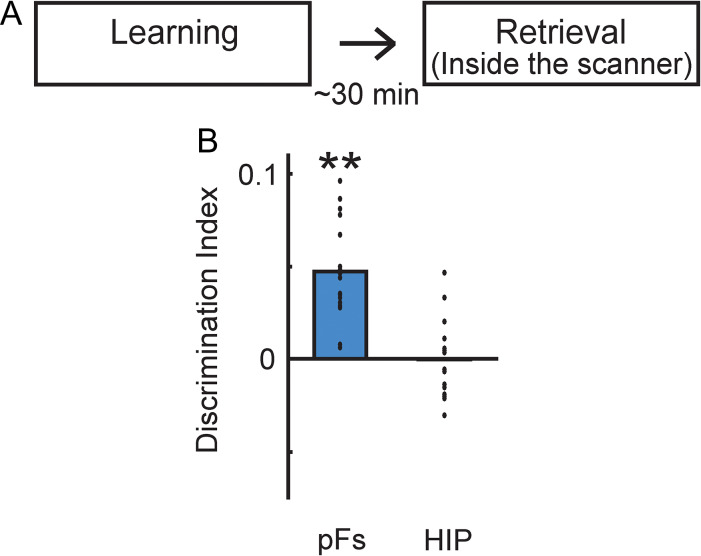

Figure 5.

Representations during retrieval following short delay. (A) Participants had the same learning and retrieval sessions as in the main experiment but the delay between learning and retrieval was about 30 min. (B) Average discrimination indices of pFs and HIP during retrieval (same day retrieval). HIP did not show significant discrimination whereas pFs showed positive discrimination. **P < 0.01. Each dot indicates the mean value of the object discrimination indices in each participant.

fMRI Data Acquisition

Participants were scanned on the 7T Siemens scanner at the fMRI facility on the NIH campus in Bethesda. Images were acquired using a 32-channel head coil with an in-plane resolution of 1.3 × 1.3 mm, and 39 1.3 mm slices (0.13 mm inter-slice gap, repetition time [TR] = 2 s, echo time [TE] = 27 ms, matrix size = 154 × 154, field-of-view = 200 mm). Partial volumes of the temporal and occipital cortices were scanned, and slices were oriented approximately parallel to the base of the temporal lobe. All functional localizer and main task runs were interleaved. Standard MPRAGE (magnetization-prepared rapid-acquisition gradient echo) and corresponding GE-PD (gradient echo–proton density) images were collected after the experimental runs in each participant, and the MPRAGE images were then normalized by the GE-PD images for use as high-resolution anatomical data in the subsequent fMRI data analysis (Van De Moortele et al. 2009).

Regions-of-Interest

Object-selective regions-of-interest (ROI) were determined by a functional localizer scan (Fig. 2A). Participants viewed alternating 16-s blocks of grayscale object images and retinotopically matched scrambled images (Lee et al. 2012). The resulting object-selective lateral occipital complex (LOC) was divided into an anterior ventral (pFs) and a posterior dorsal (LO) part. Because our prior study of visual imagery found more stable decoding of individual objects in pFs (Lee et al. 2012), here we focused on pFs only. The hippocampus was automatically defined by the subcortical parcellation of FreeSurfer (Fig. 2A), and segmentation of the hippocampus was performed in the anterior-posterior direction on the basis of predefined cutoffs allocating 35%, 45%, and 20% of slices to the head, body, and tail, respectively (Fischl et al. 2002; Hackert et al. 2002). The perirhinal cortex was manually traced using anatomic landmarks (Insausti et al. 1998) (Fig. 4A). Because there was no significant difference in the results between the left and right hemisphere in each ROI, analyses were collapsed across hemispheres.
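The head/body/tail allocation can be illustrated with a minimal sketch. The rounding rule (round half up for head and body, with the tail taking the remainder) and the convention that slice index 0 is the most anterior slice are assumptions made for illustration; the text specifies only the 35%, 45%, and 20% cutoffs. The function name is hypothetical.

```python
def segment_hippocampus(n_slices, head_pct=35, body_pct=45):
    """Label slices, ordered anterior to posterior, as head, body, or tail."""
    if n_slices < 3:
        raise ValueError("need at least three slices")
    # Integer arithmetic with round-half-up avoids floating-point edge cases.
    head = (n_slices * head_pct + 50) // 100
    body = (n_slices * body_pct + 50) // 100
    tail = n_slices - head - body  # remainder, nominally 20% of slices
    return ["head"] * head + ["body"] * body + ["tail"] * tail


labels = segment_hippocampus(20)
print(labels.count("head"), labels.count("body"), labels.count("tail"))  # 7 9 4
```

Assigning the remainder to the tail guarantees that every slice receives exactly one label regardless of how the percentages round.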

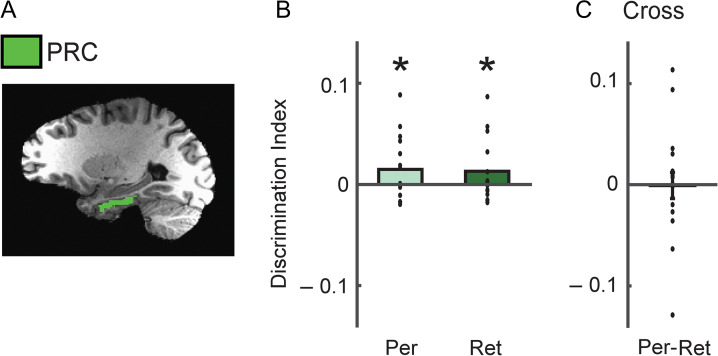

Figure 4.

Discrimination of object identity based on PRC responses. (A) PRC is displayed on a participant’s T1-weighted image. Green areas indicate voxels included in the PRC ROI. (B) Average discrimination indices for PRC during perception and retrieval. (C) Similarity between retrieval and perception. Discrimination indices between retrieved objects and the corresponding perceived objects in PRC. There was no significant correspondence between retrieval and perception in PRC. *P < 0.05. Each dot indicates the mean value of the object discrimination indices in each participant.

fMRI Data Analysis

Data analysis was conducted using AFNI (http://afni.nimh.nih.gov/afni), SUMA (AFNI surface mapper), FreeSurfer, and custom MATLAB scripts. Data preprocessing included slice-time correction, motion-correction, and smoothing (with Gaussian blur of 2 mm full-width half-maximum (fwhm) for the event-related data, and 3 mm for the functional localizer data).

To derive the BOLD response magnitudes during the tasks, we conducted a standard general linear model using the AFNI software package (3dDeconvolve using GAM function) to deconvolve the event-related responses. For each image presentation or retrieval, the β-value and t-value of each voxel were derived. For the average magnitude of responses across all voxels and stimuli within each ROI, the β-value was used. To derive discrimination indices of individual objects, we used the split-half correlation analysis method as the standard measure of information (Haxby et al. 2001; Kravitz et al. 2010; Lee et al. 2012). The six event-related runs for each participant were divided into two halves (each containing three runs) in all 10 possible ways. For each of the splits, we estimated the t-value between each event and baseline in each half of the data. The t-values were then extracted from the voxels within each ROI and cross-correlated. We used t-values rather than β-values as they tend to be slightly more stable, though we found nearly identical results from the analysis with β-values. Before calculating the correlations, the t-values were normalized in each voxel by subtracting the mean value across all object conditions (Haxby et al. 2001; Lee et al. 2012). A discrimination index for an object condition was calculated by subtracting the average of between-condition correlations (Pearson correlation coefficient comparing each object with every other object) from the within-condition correlations (Pearson correlation coefficient comparing each object with other presentations of the same object). For cross-correlations between Perception and Retrieval sessions, we first aligned the EPI data from each session to the MPRAGE data, and then derived correlation values and discrimination indices.
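The split-half procedure described above can be sketched as follows. This is an illustration under stated assumptions, not the analysis code used in the study (which relied on AFNI and custom MATLAB scripts): the function names are hypothetical, the inputs are assumed to be per-condition voxel patterns already estimated for each half, and the between-condition term is taken here as the correlation of each object's pattern in one half with every other object's pattern in the other half.

```python
from itertools import combinations
from math import sqrt


def half_splits(n_runs=6):
    """All unique ways to divide the runs into two equal halves."""
    runs = range(n_runs)
    splits = []
    for half_a in combinations(runs, n_runs // 2):
        if 0 in half_a:  # pinning run 0 to half A avoids counting mirrored splits twice
            half_b = tuple(r for r in runs if r not in half_a)
            splits.append((half_a, half_b))
    return splits


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length vectors."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def center_across_conditions(patterns):
    """Subtract each voxel's mean across conditions (rows are conditions)."""
    n_cond = len(patterns)
    voxel_means = [sum(col) / n_cond for col in zip(*patterns)]
    return [[v - m for v, m in zip(row, voxel_means)] for row in patterns]


def discrimination_indices(half_a, half_b):
    """Within-object minus mean between-object correlation, per object."""
    a = center_across_conditions(half_a)
    b = center_across_conditions(half_b)
    n = len(a)
    indices = []
    for i in range(n):
        within = pearson(a[i], b[i])
        between = [pearson(a[i], b[j]) for j in range(n) if j != i]
        indices.append(within - sum(between) / len(between))
    return indices


print(len(half_splits()))  # 10

# Toy patterns (3 objects x 4 voxels); identical halves give positive indices.
toy = [[3, 1, 0, 2], [0, 4, 1, 1], [1, 0, 5, 2]]
print(discrimination_indices(toy, toy))
```

In a full analysis the indices would be computed for each of the 10 splits and averaged; the same function applies to the cross-session comparison if one set of patterns comes from the Perception session and the other from the Retrieval session.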

Statistical Analyses

To compare discrimination indices against zero, we used one-sample t-tests (one-tailed) with the assumption of predicted positive direction. Repeated-measures ANOVAs (tests of within-subjects effects) were used to determine statistical significance of session (Perception, Retrieval) or ROI effects. For all ANOVAs with factors with more than two levels, Greenhouse–Geisser corrections were applied. To examine detailed effects between Sessions, two-tailed paired t-tests were used.
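The one-tailed and paired tests described above can be sketched with the standard library alone. The numerical integration of the t density used here to obtain P-values is a stand-in chosen to keep the example self-contained, not the method used in the study; in practice a statistics package (for example SciPy's `scipy.stats.ttest_1samp`) would normally be used. All function names are hypothetical.

```python
from math import gamma, pi, sqrt
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = gamma((df + 1) / 2) / (sqrt(df * pi) * gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)


def t_sf(t, df, n_steps=2000):
    """Upper-tail probability P(T > t), using Simpson's rule on [0, |t|]."""
    a = abs(t)
    h = a / n_steps  # n_steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for k in range(1, n_steps):
        total += (4 if k % 2 else 2) * t_pdf(k * h, df)
    upper = 0.5 - total * h / 3
    return upper if t >= 0 else 1.0 - upper


def one_sample_t(values, popmean=0.0):
    """t statistic and degrees of freedom for a one-sample t-test."""
    n = len(values)
    t = (mean(values) - popmean) / (stdev(values) / sqrt(n))
    return t, n - 1


def one_tailed_p(values):
    """P-value for the directional hypothesis that the mean exceeds zero."""
    t, df = one_sample_t(values)
    return t_sf(t, df)


def paired_two_tailed_p(x, y):
    """Two-tailed P-value for a paired t-test on matched samples."""
    t, df = one_sample_t([a - b for a, b in zip(x, y)])
    return 2 * t_sf(abs(t), df)
```

Because the one-tailed test predicts a positive direction, a negative t statistic yields a P-value above 0.5 rather than a small one, which is why a negative discrimination index cannot reach significance under this test.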

Results

Memory Performance

Following the Retrieval scan session, we assessed the quality of memory by asking participants to draw the object images retrieved from their memory for each given cue. Drawings were scored based on shape, color, and features of the objects (Fig. 1C, Materials and Methods). All participants correctly retrieved all associated objects except one participant, who correctly retrieved only 13 pairs. We found that participants remembered 63.6% of object details on average (SEM = 3.56). If they did not remember any detail, the score would be zero even if they recalled the paired object names. Thus, even one day after learning, participants were able to retrieve detailed object information as well as the correct pairings, suggesting they were retrieving detailed representations of the objects during scanning.

Specificity of Neural Representations

To compare responses in visual cortex and hippocampus, we first examined the average magnitude of responses across all voxels and stimuli within the pFs and hippocampal ROIs (Fig. 2A). While pFs showed much stronger responses in the Perception than Retrieval session, the hippocampus showed comparable, weak responses in both (Supplementary Table S1). However, prior studies have shown that the average magnitude of responses across the voxels within an ROI can be around zero even when the ROI participates in memory or imagery processes (Lee et al. 2012; Albers et al. 2017). This may be because the reinstatement of information is sufficient to drive an informative distributed response across voxels but not to increase the overall average response.

To investigate whether individual object information is represented in the patterns of response across the ROIs, we next used multivariate pattern analysis (MVPA). For this, we extracted the responses in each ROI in two independent halves of the data, and then compared the patterns of response across the two halves (Fig. 2B). To further ensure that participants were adequately processing visual information during the Perception and Retrieval sessions, we first focused on category discrimination and investigated the difference between face and object categories. Consistent with prior studies, we found distinct patterns of response and significant decoding between object and face categories in pFs during both perception and retrieval (Fig. 2B, Supplementary Fig. S1) (Reddy et al. 2010; Cichy et al. 2012). These category-specific response patterns were not observed in the hippocampus (Supplementary Fig. S1). For our main analyses, however, we were primarily interested in the differences between individual object exemplars (not categories) and in the following analyses we focus on the responses for the 12 objects only.

Based on the pattern similarity, we calculated within- and between-object correlations and derived discrimination indices for individual objects as the difference between these correlations (see Materials and Methods for details) (Kriegeskorte et al. 2006; Kravitz et al. 2010, 2011). Consistent with prior studies (Stokes et al. 2009; Lee et al. 2012), we found that for pFs during perception, within-object correlations were significantly greater than between-object correlations, resulting in significantly positive discrimination indices (t = 6.26, P = 4.33 × 10⁻⁶, one-tailed) (Fig. 2C, Supplementary Fig. S2). This indicates specific patterns of visual response for individual objects during perception. Similarly, during retrieval, pFs also showed significant positive discrimination for individual objects (t = 4.99, P = 5.58 × 10⁻⁵, one-tailed), although the discrimination was much stronger for perception than retrieval (t = 4.55, P = 2.86 × 10⁻⁴, two-tailed, paired) (Fig. 2A,C, Supplementary Fig. S2). Thus, our results show that during both perception and retrieval one day after learning, object identity information can be decoded from the response of pFs.

However, we found a very different set of results in hippocampus. While discrimination indices were significantly greater than zero during retrieval (t = 3.92, P = 5.51 × 10⁻⁴, one-tailed), they were not significantly greater than zero during perception (t = −1.34, P = 0.90, one-tailed) (Fig. 2D, Supplementary Fig. S2). Moreover, hippocampal discrimination during retrieval was significantly greater than that observed during perception (t = 3.65, P = 0.002, two-tailed, paired). These data indicate that stable and differentiable representations for individual objects are stronger during retrieval than during perception in the hippocampus.

One possibility is that the representation of object information in the hippocampus might involve only a small segment of the structure and there could potentially be different contributions during perception and retrieval. To assess this, we divided the hippocampus into three subregions (head, body, tail) (Hackert et al. 2002), and derived discrimination indices for each subregion. We found that each subregion showed a similar tendency as the whole hippocampus with significant discrimination indices only during retrieval (Supplementary Fig. S3).

To directly compare pFs and hippocampus, we conducted a two-way ANOVA with ROI (pFs, HIP) and Session (Perception, Retrieval) as factors, yielding a highly significant interaction (F = 33.50, P = 2.19 × 10⁻⁵) (Fig. 2C,D). This result, combined with the significant pairwise comparisons within each ROI (described above), indicates that pFs shows stronger object discrimination during perception than retrieval whereas hippocampus shows the opposite pattern.
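For a 2 × 2 design in which both factors are within-subject, the interaction test is equivalent to a one-sample t-test on each participant's difference of differences, with F = t². The sketch below illustrates that equivalence; the function name and the toy values are hypothetical and are not data from the study.

```python
from math import sqrt
from statistics import mean, stdev


def interaction_f(pfs_perception, pfs_retrieval, hip_perception, hip_retrieval):
    """F statistic and degrees of freedom for the ROI x Session interaction."""
    # Per-participant contrast: (pFs perception - retrieval) - (HIP perception - retrieval)
    contrast = [
        (pp - pr) - (hp - hr)
        for pp, pr, hp, hr in zip(pfs_perception, pfs_retrieval,
                                  hip_perception, hip_retrieval)
    ]
    n = len(contrast)
    t = mean(contrast) / (stdev(contrast) / sqrt(n))
    return t * t, (1, n - 1)


# Made-up discrimination indices for four hypothetical participants.
f_value, dfs = interaction_f(
    pfs_perception=[0.5, 0.6, 0.4, 0.7],
    pfs_retrieval=[0.2, 0.3, 0.2, 0.3],
    hip_perception=[0.0, 0.1, -0.1, 0.0],
    hip_retrieval=[0.2, 0.2, 0.1, 0.3],
)
print(round(f_value, 2), dfs)  # 50.0 (1, 3)
```

On this reading, the reported F = 33.50 corresponds to |t| ≈ 5.79 for the difference-of-differences contrast, so the interaction expresses directly that the perception-minus-retrieval difference has opposite signs in the two regions.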

We also investigated whether the decoding of object identity in cortex and hippocampus was correlated with the drawing score, and observed suggestive evidence that object discriminability in visual cortex reflects the strength of the retrieved memory (see Supplementary Results and Supplementary Fig. S4).

Taken together, these results suggest that while object identity can be consistently decoded from the response patterns of visual cortex between perception and retrieval, in hippocampus there is a substantial increase of object discriminability from perception to retrieval.

Correspondence Between Retrieval and Perception

While discrimination indices were significantly greater than zero in pFs during both perception and retrieval, this does not necessarily indicate that the representations are similar. To directly compare the representations of object identity during perception and retrieval we calculated discrimination indices based on the correlation between perception and retrieval. While the discrimination between perception and retrieval was significantly positive in pFs (t = 2.71, P = 0.007), it did not reach significance in the hippocampus (t = −0.68, P = 0.75) (Fig. 3, Supplementary Fig. S5). Moreover, the discrimination index across perception and retrieval in pFs was significantly greater than that of hippocampus (t = 3.08, P = 0.007, two-tailed, paired). These data indicate that there was a significantly greater correspondence between the responses observed during perception and retrieval in pFs than hippocampus, suggesting that hippocampal representations are changing between perception and retrieval.

Figure 3.

Similarity between retrieval and perception. Discrimination indices between retrieved objects and the corresponding perceived objects in pFs and HIP. There was significant correspondence between retrieval and perception only in pFs but not HIP. **P < 0.01. Each dot indicates the mean value of the object discrimination indices in each participant.

In an exploratory analysis, we obtained suggestive evidence of increased similarity of representations between hippocampus and visual cortex over time following learning (see Supplementary Results and Supplementary Fig. S6). While tentative, this finding is consistent with the previously proposed idea that hippocampus reinstates cortical representations during retrieval (Sutherland and Rudy 1989; Rudy and Sutherland 1995; O’Reilly and Rudy 2001; Tanaka et al. 2014; Moscovitch et al. 2016).

Collectively, these results suggest that while visual representations evoked during retrieval are similar to those induced during perception, hippocampal representations are distinct during these two phases.

Representations in Perirhinal Cortex

Although our primary focus was on comparisons between hippocampus and visual cortex, other regions are involved in perception and memory of objects. In particular, perirhinal cortex has been implicated in object recognition and memory (Alvarez and Squire 1994; O’Reilly and Rudy 2001; Devlin and Price 2007; Lehky and Tanaka 2016; Martin et al. 2018), and is a major source of cortical input to the hippocampus both directly and indirectly via entorhinal cortex (Squire et al. 2004; Kravitz et al. 2013). Therefore, we also investigated responses in perirhinal cortex during perception and retrieval (Fig. 4). Like the hippocampus, perirhinal cortex showed similar but weak average magnitude of responses across the Perception and Retrieval sessions (Supplementary Table S1). Further, perirhinal cortex showed significant discrimination indices in both the Perception and Retrieval sessions (perception, t = 1.98, P = 0.03; retrieval, t = 1.76, P = 0.048, one-tailed) that were also comparable across sessions (t = 0.26, P = 0.80, two-tailed, paired) (Fig. 4B). However, there was no correspondence between retrieval and perception: discrimination indices across perception and retrieval were not significantly greater than zero (t = −0.07, P = 0.53, one-tailed) (Fig. 4C). Thus, perirhinal cortex shows representational properties intermediate between those of visual cortex and hippocampus, consistent with its intermediate location in the broader neuroanatomical circuitry connecting these structures.

Contribution of the Retrieval Process

Prior work on retrieval during visual imagery has revealed representations in visual cortex similar to those we report here (e.g., Stokes et al. 2009; Lee et al. 2012). However, these studies have often involved only a short delay following learning (e.g., 30 min) and have focused primarily on visual cortex and not hippocampus. To compare our current results with this work, we conducted an exploratory follow-up fMRI experiment in which the Learning session was conducted immediately prior to the Retrieval session (Fig. 5A). Further, this experiment allows us to address whether the retrieval process itself is sufficient to account for the decoding we observed in hippocampus in the main experiment.

During this same-day retrieval, both pFs and hippocampus showed similar average response magnitude across voxels and stimuli to that during retrieval in the main experiment (Supplementary Table S1). Further, object discrimination indices in pFs were significantly greater than zero (t = 6.91, P = 2.50 × 10⁻⁶, one-tailed) (Fig. 5B) and comparable to those observed in the main experiment (t = 0.56, P = 0.58, two-tailed, unpaired) (Fig. 2C). In contrast, object discrimination indices in the hippocampus were no longer significantly greater than zero (t = −0.06, P = 0.48, one-tailed) (Fig. 5B) and were significantly weaker than those observed during the one-day retrieval in the main experiment (t = 2.62, P = 0.01, two-tailed, unpaired). Further, there was no relationship between the strength of pFs and hippocampal representations (correlation between discrimination indices in pFs and hippocampus) (Supplementary Fig. S7). Thus, these results suggest that the retrieval process itself or retrieval with short delay is not sufficient to induce the high specificity of hippocampal representations we observed in the main experiment.

Discussion

Our findings demonstrate a fundamental difference between hippocampal and cortical representations during perception and memory retrieval. Visual cortex showed relatively stable and consistent neural responses that allowed us to decode object identity across perception and retrieval one day after learning. However, hippocampus showed much stronger decoding during one-day retrieval than during perception, when object discrimination was at chance levels. Moreover, we found corresponding representations between perception and retrieval in visual cortex but not in hippocampus. Further, the strong decoding in the hippocampus was not observed when the retrieval session was conducted just after learning, suggesting that the retrieval process itself is not sufficient to account for the results. Collectively, these findings suggest that while the retrieved representation of an image is similar to that evoked during encoding in the cortex, in hippocampus there is a substantial difference that may reflect some sort of reorganization of the representations between perception and retrieval.

We found that decoding of objects in hippocampus was possible during one-day retrieval but not during perception, when our measures of decoding were at chance (Fig. 2). Similarly, in a recent study comparing encoding and retrieval (Xiao et al. 2017), detailed stimulus information was present in the response patterns of the hippocampus during retrieval but not during encoding. While these results could be taken to suggest that hippocampus does not contain object representations during perception or encoding, this is highly unlikely given that the hippocampus is considered critical for new memory formation and consolidation (Alvarez and Squire 1994; Olsen et al. 2012) and we would urge caution against over-interpreting the null results. Further, there is some evidence for hippocampal decoding during both perception and short-term memory (Chadwick et al. 2010; Bonnici et al. 2012). For example, at a perceptual level, the participant’s location within a virtual environment or scene stimuli could be decoded (Hassabis et al. 2009; Bonnici et al. 2012). In terms of memory, Chadwick and colleagues found that BOLD patterns within hippocampus could be used to distinguish between three video clips that participants recalled (Chadwick et al. 2010). The apparent discrepancy between these prior studies and our data may reflect the contribution of spatial information. In this study, we used a simple object memory task whereas prior studies employed more complex stimuli that included spatial information (Chadwick et al. 2010; Bonnici et al. 2012). Indeed, consistent with our results, other studies have reported mixed evidence for the presence of even object category information in the patterns of response within hippocampus (Diana et al. 2008; LaRocque et al. 2013; Liang et al. 2013). Regardless, we think it is important to emphasize that our key finding is not the absolute level of decoding but the difference in decoding between perception and retrieval in visual cortex and hippocampus.

The difference in object identity decoding between perception and retrieval in the hippocampus suggests that there is some modification of representations over time. However, it could be argued that the difference simply reflects the retrieval process itself. Against this view, in the follow-up experiment in which the Retrieval session was conducted immediately after the Learning session, significant object discrimination was observed during retrieval in pFs but not in hippocampus. This negative result supports the idea that a change of response patterns, one that takes time to develop, is needed to establish the high specificity of hippocampal representations. While prior work has provided evidence for some correspondence between encoding and retrieval in hippocampus (Tompary et al. 2016), this encoding-retrieval similarity was much weaker than that observed in perirhinal cortex, and data from visual cortex were not reported.

What could account for the change in hippocampal representations between perception and retrieval? Several models suggest a unique function of hippocampus in forming a unitary representation by binding disparate elements (Marr 1971; O’Reilly and Rudy 2001; Norman and O’Reilly 2003; Chadwick et al. 2010; Olsen et al. 2012). Thus, it is possible that in the initial phase of encoding and consolidation, contextual or temporal information that is unrelated to the task might be combined with the object information (Hsieh et al. 2014), resulting in similar representations for objects due to shared aspects of the context. Through consolidation, a gradual reorganization may prune uninformative context information, producing a more consistent representation and reproducible pattern of response for each object in the hippocampus (Gluck and Myers 1993; Gluck et al. 2005). Recently, Hsieh et al. (2014) showed that hippocampal activity patterns carry information about the temporal positions of objects in learned sequences, but not about object identity alone. Because their learning and retrieval sessions were on the same day, this result is consistent with our data, supporting the scenario that contextual or temporal information is combined with the objects during earlier phases. Another possibility is that representations in hippocampus become less sparse between perception and retrieval, making it easier to detect the responses during retrieval with fMRI, which effectively measures population-level activity.

Our results are consistent with prior visual imagery and working memory studies showing that BOLD responses in visual cortex can be used to decode the identity of imagined or maintained stimuli, and that there is consistency in the pattern of responses in visual cortex between perception and imagery/retrieval (Stokes et al. 2009; Riggall and Postle 2012; Lee et al. 2012, 2013). However, because these studies used short-delay tasks, in which participants completed both recall (or maintenance) and perception sessions on the same day, it was unclear how the results for visual cortex apply to long-term memory retrieval. Our results extend these findings, showing similar representations in visual cortex during retrieval following a one-day delay, which potentially involves sleep-dependent consolidation (Stickgold 2005; Rasch et al. 2007).

Because perirhinal cortex has been implicated in both object recognition and memory, we also tested decoding of retrieved information in this region. The perirhinal cortex showed comparable positive discrimination across perception and retrieval (Fig. 4B). While the overall positive discrimination across time is similar to that of visual cortex (Fig. 4B), the limited correspondence between retrieval and perception is similar to the findings for hippocampus (Fig. 4C). Thus, the perirhinal cortex is not only anatomically located between visual cortex and hippocampus, but also shows mixed representational properties for object identity information.

Our findings raise important questions for future research. In particular, while our results suggest a change in hippocampal representations over the course of one day, it will be important to elucidate the role of sleep. Studies of systems consolidation suggest that over the course of months or years (remote long-term memories), cortical areas become more engaged in memory processes, which gradually become independent of the hippocampus (Dudai 2004). Thus, it will be interesting to investigate the nature of memory representations over longer delays (months or years) in both hippocampus and cortex. Finally, our results also suggest that object discrimination indices in hippocampus and pFs are more strongly correlated across individuals during retrieval than perception, and that drawing accuracy is correlated with object discrimination in pFs but not hippocampus. However, given our relatively small sample size for such correlation analyses and the lack of significant differences between the correlations, these suggestive findings will need to be further investigated in future work.

In summary, our results show that while the neural populations involved in encoding individual object information are shared between perception and retrieval in high-level visual cortex, the representations change over time in the hippocampus. These results (1) provide evidence for a fundamental difference between hippocampal and cortical representations across perception and retrieval, and (2) suggest the possibility of a lengthy reorganization process in human hippocampus.

Supplementary Material

Authors’ Contributions

S.-H.L. and C.I.B. designed the research. S.-H.L. performed the research and analyzed the data. D.J.K. contributed analyses. C.I.B. supervised the project. S.-H.L., D.J.K., and C.I.B. wrote the manuscript.

Funding

This work was supported by the US National Institute of Mental Health Intramural Research Program (ZIAMH 002909), a NARSAD Young Investigator Grant from the Brain and Behavior Research Foundation (21121), a grant of the Korea Health Technology R&D Project through the Korea Health Industry Development Institute (KHIDI) funded by the Ministry of Health & Welfare, Korea (HI15C3175), and the National Research Foundation of Korea (NRF) grant funded by the Ministry of Science and ICT (NRF-2016R1C1B2010726).

Notes

Thanks to B. Levy and M. King for help with data collection, and members of the Laboratory of Brain and Cognition, NIMH for discussion. Conflict of Interest: None declared.

References

- Albers AM, Meindertsma T, Toni I, de Lange FP. 2017. Decoupling of BOLD amplitude and pattern classification of orientation-selective activity in human visual cortex. Neuroimage. 180:31–40.

- Alvarez P, Squire LR. 1994. Memory consolidation and the medial temporal lobe: a simple network model. Proc Natl Acad Sci USA. 91:7041–7045.

- Bonnici HM, Kumaran D, Chadwick MJ, Weiskopf N, Hassabis D, Maguire EA. 2012. Decoding representations of scenes in the medial temporal lobes. Hippocampus. 22:1143–1153.

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H. 2012. The neural basis of vivid memory is patterned on perception. J Cogn Neurosci. 24:1867–1883.

- Chadwick M, Hassabis D, Weiskopf N, Maguire E. 2010. Decoding individual episodic memory traces in the human hippocampus. Curr Biol. 20:544–547.

- Cichy RM, Heinzle J, Haynes JD. 2012. Imagery and perception share cortical representations of content and location. Cereb Cortex. 22:372–380.

- Danker JF, Anderson JR. 2010. The ghosts of brain states past: remembering reactivates the brain regions engaged during encoding. Psychol Bull. 136:87–102.

- Danker JF, Tompary A, Davachi L. 2017. Trial-by-trial hippocampal encoding activation predicts the fidelity of cortical reinstatement during subsequent retrieval. Cereb Cortex. 27:3515–3524.

- Devlin J, Price C. 2007. Perirhinal contributions to human visual perception. Curr Biol. 17:1484–1488.

- Diana RA, Yonelinas AP, Ranganath C. 2008. High-resolution multi-voxel pattern analysis of category selectivity in the medial temporal lobes. Hippocampus. 18:536–541.

- Dudai Y. 2004. The neurobiology of consolidations, or, how stable is the engram? Annu Rev Psychol. 55:51–86.

- Fischl B, Salat DH, Busa E, Albert M, Dieterich M, Haselgrove C, Van Der Kouwe A, Killiany R, Kennedy D, Klaveness S, et al. 2002. Whole brain segmentation: automated labeling of neuroanatomical structures in the human brain. Neuron. 33:341–355.

- Gluck MA, Myers CE. 1993. Hippocampal mediation of stimulus representation: a computational theory. Hippocampus. 3:491–516.

- Gluck MA, Myers C, Meeter M. 2005. Cortico-hippocampal interaction and adaptive stimulus representation: a neurocomputational theory of associative learning and memory. Neural Netw. 18:1265–1279.

- Gordon AM, Rissman J, Kiani R, Wagner AD. 2014. Cortical reinstatement mediates the relationship between content-specific encoding activity and subsequent recollection decisions. Cereb Cortex. 24:3350–3364.

- Hackert VH, den Heijer T, Oudkerk M, Koudstaal PJ, Hofman A, Breteler MM. 2002. Hippocampal head size associated with verbal memory performance in nondemented elderly. Neuroimage. 17:1365–1372.

- Hassabis D, Chu C, Rees G, Weiskopf N, Molyneux PD, Maguire EA. 2009. Decoding neuronal ensembles in the human hippocampus. Curr Biol. 19:546–554.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. 2001. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 293:2425–2430.

- Hsieh LT, Gruber MJ, Jenkins LJ, Ranganath C. 2014. Hippocampal activity patterns carry information about objects in temporal context. Neuron. 81:1165–1178.

- Insausti R, Juottonen K, Soininen H, Insausti AM, Partanen K, Vainio P, Laakso MP, Pitkänen A. 1998. MR volumetric analysis of the human entorhinal, perirhinal, and temporopolar cortices. Am J Neuroradiol. 19:659–671.

- James W. 1890. The principles of psychology. New York: Holt.

- Kosslyn SM, Thompson WL, Alpert NM. 1997. Neural systems shared by visual imagery and visual perception: a positron emission tomography study. Neuroimage. 6:320–334.

- Kravitz DJ, Kriegeskorte N, Baker CI. 2010. High-level visual object representations are constrained by position. Cereb Cortex. 20:2916–2925.

- Kravitz DJ, Peng CS, Baker CI. 2011. Real-world scene representations in high-level visual cortex: it’s the spaces more than the places. J Neurosci. 31:7322–7333.

- Kravitz DJ, Saleem KS, Baker CI, Ungerleider LG, Mishkin M. 2013. The ventral visual pathway: an expanded neural framework for the processing of object quality. Trends Cogn Sci. 17:26–49.

- Kriegeskorte N, Goebel R, Bandettini P. 2006. Information-based functional brain mapping. Proc Natl Acad Sci USA. 103:3863–3868.

- LaRocque KF, Smith ME, Carr VA, Witthoft N, Grill-Spector K, Wagner AD. 2013. Global similarity and pattern separation in the human medial temporal lobe predict subsequent memory. J Neurosci. 33:5466–5474.

- Lee S-H, Kravitz DJ, Baker CI. 2012. Disentangling visual imagery and perception of real-world objects. Neuroimage. 59:4064–4073.

- Lee S-H, Kravitz DJ, Baker CI. 2013. Goal-dependent dissociation of visual and prefrontal cortices during working memory. Nat Neurosci. 16:997–999.

- Lehky SR, Tanaka K. 2016. Neural representation for object recognition in inferotemporal cortex. Curr Opin Neurobiol. 37:23–35.

- Liang JC, Wagner AD, Preston AR. 2013. Content representation in the human medial temporal lobe. Cereb Cortex. 23:80–96.

- Liu X, Ramirez S, Pang PT, Puryear CB, Govindarajan A, Deisseroth K, Tonegawa S. 2012. Optogenetic stimulation of a hippocampal engram activates fear memory recall. Nature. 484:381–385.

- Marr D. 1971. Simple memory: a theory for archicortex. Philos Trans R Soc B Biol Sci. 262:23–81.

- Martin CB, Douglas D, Newsome RN, Man LLY, Barense MD. 2018. Integrative and distinctive coding of visual and conceptual object features in the ventral visual stream. Elife. 7:1–29.

- Moscovitch M, Cabeza R, Winocur G, Nadel L. 2016. Episodic memory and beyond: the hippocampus and neocortex in transformation. Annu Rev Psychol. 67:105–134.

- Norman KA, O’Reilly RC. 2003. Modeling hippocampal and neocortical contributions to recognition memory: a complementary-learning-systems approach. Psychol Rev. 110:611–646.

- Olsen RK, Moses SN, Riggs L, Ryan JD. 2012. The hippocampus supports multiple cognitive processes through relational binding and comparison. Front Hum Neurosci. 6:146.

- O’Craven KM, Kanwisher N. 2000. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. J Cogn Neurosci. 12:1013–1023.

- O’Reilly RC, Rudy JW. 2001. Conjunctive representations in learning and memory: principles of cortical and hippocampal function. Psychol Rev. 108:83–95.

- Penfield W, Perot P. 1963. The brain’s record of auditory and visual experience. Brain. 86:595–696.

- Polyn SM, Natu VS, Cohen JD, Norman KA. 2005. Category-specific cortical activity precedes retrieval during memory search. Science. 310:1963–1966.

- Rasch B, Büchel C, Gais S, Born J. 2007. Odor cues during slow-wave sleep prompt declarative memory consolidation. Science. 315:1426–1429.

- Reddy L, Tsuchiya N, Serre T. 2010. Reading the mind’s eye: decoding category information during mental imagery. Neuroimage. 50:818–825.

- Riggall AC, Postle BR. 2012. The relationship between working memory storage and elevated activity as measured with functional magnetic resonance imaging. J Neurosci. 32:12990–12998.

- Rudy JW, Sutherland RJ. 1995. Configural association theory and the hippocampal formation: an appraisal and reconfiguration. Hippocampus. 5:375–389.

- Rugg MD, Johnson JD, Park H, Uncapher MR. 2008. Chapter 21 Encoding-retrieval overlap in human episodic memory: a functional neuroimaging perspective. Prog Brain Res. 169:339–352.

- Squire LR, Stark CEL, Clark RE. 2004. The medial temporal lobe. Annu Rev Neurosci. 27:279–306.

- Staresina BP, Fell J, Do Lam ATA, Axmacher N, Henson RN. 2012. Memory signals are temporally dissociated in and across human hippocampus and perirhinal cortex. Nat Neurosci. 15:1167–1173.

- Stickgold R. 2005. Sleep-dependent memory consolidation. Nature. 437:1272–1278.

- Stokes M, Thompson R, Cusack R, Duncan J. 2009. Top-down activation of shape-specific population codes in visual cortex during mental imagery. J Neurosci. 29:1565–1572.

- Sutherland RJ, Rudy JW. 1989. Configural association theory: the role of the hippocampal formation in learning, memory, and amnesia. Psychobiology. 17:129–144.

- Tambini A, Davachi L. 2013. Persistence of hippocampal multivoxel patterns into postencoding rest is related to memory. Proc Natl Acad Sci USA. 110:19591–19596.

- Tanaka KZ, Pevzner A, Hamidi AB, Nakazawa Y, Graham J, Wiltgen BJ. 2014. Cortical representations are reinstated by the hippocampus during memory retrieval. Neuron. 84:347–354.

- Tompary A, Duncan K, Davachi L. 2016. High-resolution investigation of memory-specific reinstatement in the hippocampus and perirhinal cortex. Hippocampus. 26:995–1007.

- Tulving E. 2002. Episodic memory: from mind to brain. Annu Rev Psychol. 53:1–25.

- Tulving E, Thomson DM. 1973. Encoding specificity and retrieval processes in episodic memory. Psychol Rev. 80:352–373.

- Van De Moortele P-F, Auerbach EJ, Olman C, Yacoub E, Uğurbil K, Moeller S. 2009. T1 weighted brain images at 7 Tesla unbiased for Proton Density, T2* contrast and RF coil receive B1 sensitivity with simultaneous vessel visualization. Neuroimage. 46:432–446.

- Xiao X, Dong Q, Gao J, Men W, Poldrack RA, Xue G. 2017. Transformed neural pattern reinstatement during episodic memory retrieval. J Neurosci. 37:2986–2998.
