Abstract

Recent studies have demonstrated the existence of rich visual representations in both occipitotemporal cortex (OTC) and posterior parietal cortex (PPC). Using fMRI decoding and a bottom-up data-driven approach, we showed that although robust object category representations exist in both OTC and PPC, there is an information-driven 2-pathway separation among these regions in the representational space, with occipitotemporal regions arranging hierarchically along 1 pathway and posterior parietal regions along another pathway. We obtained 10 independent replications of this 2-pathway distinction, accounting for 58–81% of the total variance of the region-wise differences in visual representation. The separation of the PPC regions from higher occipitotemporal regions was not driven by a difference in tolerance to changes in low-level visual features, did not rely on the presence of special object categories, and was present whether or not object category was task relevant. Our information-driven 2-pathway structure differs from the well-known ventral-what and dorsal-where/how characterization of posterior brain regions. Here both pathways contain rich nonspatial visual representations. The separation we see likely reflects a difference in neural coding scheme used by PPC to represent visual information compared with that of OTC.

Keywords: object category, object representation, occipitotemporal pathway, posterior parietal pathway

Introduction

Although the posterior parietal cortex (PPC) has been largely associated with visuospatial processing (Kravitz et al. 2011), over the last 2 decades both monkey neurophysiology and human brain imaging studies have reported robust nonspatial object responses in PPC along the intraparietal sulcus (IPS) (Freud et al. 2016; Kastner et al. 2017; Xu 2017). For example, neurons in the macaque lateral intraparietal (LIP) area exhibited shape selectivity (Sereno and Maunsell 1998; Lehky and Sereno 2007; Janssen et al. 2008; Fitzgerald et al. 2011) that was tolerant to changes in position and size (Sereno and Maunsell 1998; Lehky and Sereno 2007). Similarly, human IPS topographic areas IPS1/IPS2 exhibited shape-selective fMRI adaptation responses that were tolerant to changes in size and viewpoint (Konen and Kastner 2008; see also Sawamura et al. 2005). Given that macaque LIP has been argued to be homologous to human IPS1/IPS2 (Silver and Kastner 2009), the monkey and human findings thus converge. Recent fMRI studies have further reported a multitude of visual information in human PPC (Liu et al. 2011; Christophel et al. 2012; Zachariou et al. 2014; Ester et al. 2015; Xu and Jeong 2015; Bettencourt and Xu 2016; Bracci et al. 2016, 2017; Jeong and Xu 2016; Weber et al. 2016; Woolgar et al. 2015, 2016; Yu and Shim 2017). Areas along the IPS, especially a region in superior IPS that is defined by visual working memory (VWM) capacity and overlaps with IPS1/IPS2 (Todd and Marois 2004; Xu and Chun 2006; Bettencourt and Xu 2016), have been shown to hold robust visual representations that track perception and behavioral performance (Bettencourt and Xu 2016; Jeong and Xu 2016). PPC now appears to contain a rich repertoire of nonspatial representations, ranging from orientation and shape to object identity, just like those in occipitotemporal cortex (OTC).
Meanwhile, robust representations of spatial information, long associated with PPC, have also been found in both monkey and human OTC (Hong et al. 2016; Schwarzlose et al. 2008; Zhang et al. 2015; see also topographic mapping results from the ventral areas, e.g., Hasson et al. 2002; Wandell et al. 2007). Are visual representations in PPC indeed highly similar to those in OTC, or do they still differ in significant ways?

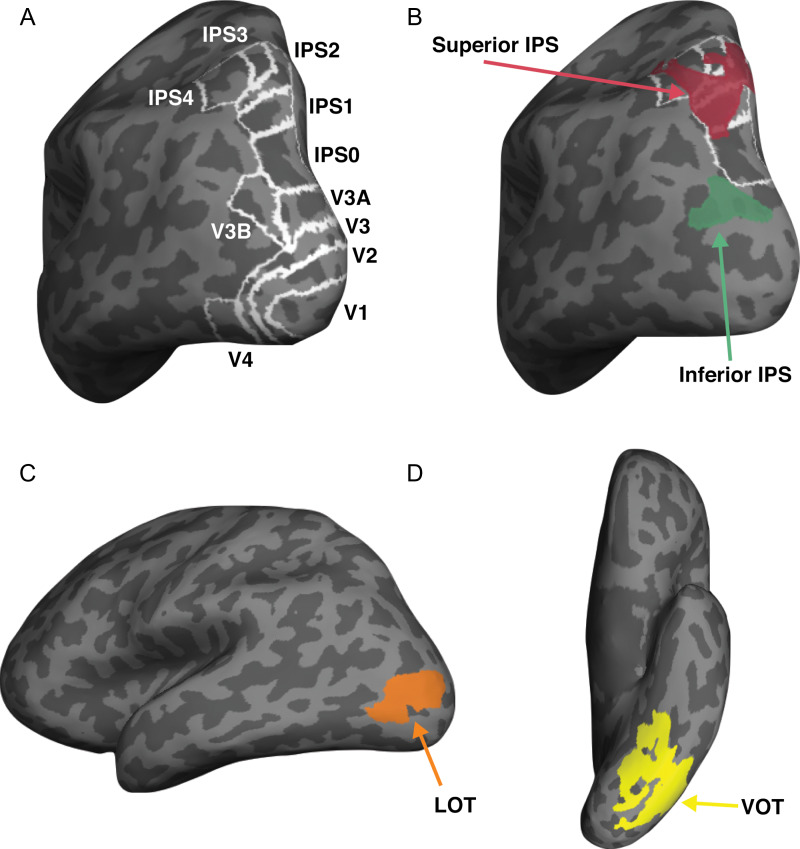

Using fMRI and multivoxel pattern analysis (MVPA; Haxby et al. 2001; Kriegeskorte et al. 2008), we examined object category representations in a number of independently defined human OTC and PPC regions (Fig. 2). In OTC, we included early visual areas V1–V4 and areas involved in visual object processing in lateral occipitotemporal (LOT) and ventral occipitotemporal (VOT) regions. LOT and VOT loosely correspond to the locations of the lateral occipital (LO) and posterior fusiform (pFs) areas (Malach et al. 1995; Grill-Spector et al. 1998) but extend further into the temporal cortex, in an effort to include as many object-selective voxels as possible in the OTC regions. Responses in these regions have previously been shown to correlate with successful visual object detection and identification (Grill-Spector et al. 2000; Williams et al. 2007), and lesions to these areas have been linked to visual object agnosia (Goodale et al. 1991; Farah 2004). In PPC, we included regions along the IPS previously shown to exhibit robust visual encoding. Specifically, we included topographic regions V3A, V3B, and IPS0–4 along the IPS (Sereno et al. 1995; Swisher et al. 2007; Silver and Kastner 2009) and 2 functionally defined object-selective regions, one located in the inferior and one in the superior part of the IPS (henceforth referred to as inferior IPS and superior IPS, respectively, for simplicity). Inferior IPS has previously been shown to be involved in visual object selection and individuation, whereas superior IPS is associated with visual representation and VWM storage (Xu and Chun 2006, 2009; Xu and Jeong 2015; Jeong and Xu 2016; Bettencourt and Xu 2016; see also Todd and Marois 2004).

Figure 2.

Inflated brain surface from a representative participant showing the ROIs examined. (A) Topographic ROIs in occipital and parietal cortices. (B) Superior IPS and inferior IPS ROIs, with white outlines showing the overlap between these 2 parietal ROIs and parietal topographic ROIs. (C) LOT and VOT ROIs.

Across 7 experiments, we observed robust object category representations in both human OTC and PPC, consistent with prior reports. Critically, using representational similarity analysis (Kriegeskorte and Kievit 2013) and a bottom-up data-driven approach, we found a large separation between these brain regions in the representational space, with occipitotemporal regions arranged hierarchically along one pathway (the OTC pathway) and posterior parietal regions along an orthogonal pathway (the PPC pathway). This 2-pathway distinction was independently replicated 10 times and accounted for 58–81% of the total variance of the region-wise differences in visual representation. Visual information processing thus differs between OTC and PPC despite the existence of rich visual representations in both. The separation between OTC and PPC was not driven by differences in tolerance to changes in low-level visual features, as a similar amount of tolerance was seen in higher occipitotemporal and posterior parietal regions. Nor was it driven by an increasingly rich category-based representational landscape along the OTC pathway, as the 2-pathway distinction was equally strong for natural object categories that differ along several category dimensions (such as animate/inanimate, action/nonaction, and small/large) and for artificial object categories that differ along none of them. Finally, the 2-pathway distinction was not driven by PPC showing greater representations of task-relevant features than OTC (Bracci et al. 2017; Vaziri-Pashkam and Xu 2017), as the 2 pathways for object shape representation were present regardless of whether object shape was task relevant.

Although previous research has characterized the roles of posterior brain regions in visual processing according to the well-known ventral-what and dorsal-where/how distinction (Mishkin et al. 1983; Goodale and Milner 1992), our 2-pathway structure differs from this characterization in one significant respect: both pathways contain rich nonspatial visual representations. Thus, it may not be the content of visual representations, but rather the neural coding schemes used to form them, that distinguishes how OTC and PPC process visual information.

Materials and Methods

Participants

Seven healthy human participants (P1–P7, 4 females) with normal or corrected-to-normal visual acuity, all right-handed and aged between 18 and 35, took part in the experiments. Each main experiment was performed in a separate session lasting between 1.5 and 2 h. Each participant also completed 2 additional sessions for topographic mapping and functional localizers. All participants gave their informed consent before the experiments and received payment for their participation. The experiments were approved by the Committee on the Use of Human Subjects at Harvard University.

Experimental Design and Procedures

Main Experiments

A summary of the main experiments is presented in Table 1. It includes the list of participants and the stimuli and the task(s) used in each experiment.

Table 1.

A summary of all the experiments, including the list of participants and the stimuli and the task(s) used

| Experiment | Participants | Stimulus set | Task |
|---|---|---|---|
| Experiment 1 | P1–P6 | 8 natural object categories shown in both original and controlled formats | Shape 1-back |
| Experiment 2 | P1–P7 | 8 natural object categories in original format shown either above or below fixation | Shape 1-back |
| Experiment 3 | P1–P7 | 8 natural object categories in original format shown in either small or large size | Shape 1-back |
| Experiment 4 | P1–P5, P7 | 8 natural object categories and 9 artificial object categories, both shown in original format | Shape 1-back |
| Experiment 5 | P1–P7 | 8 natural object categories shown in original format with both the object and background colored | Shape 1-back or color 1-back, depending on the instructions |
| Experiment 6 | P1–P7 | 8 natural object categories shown in original format with colored dots overlaid on top of the object | Shape 1-back or color 1-back, depending on the instructions |
| Experiment 7 | P1–P7 | 8 natural object categories shown in original format with only the object colored | Shape 1-back or color 1-back, depending on the instructions |

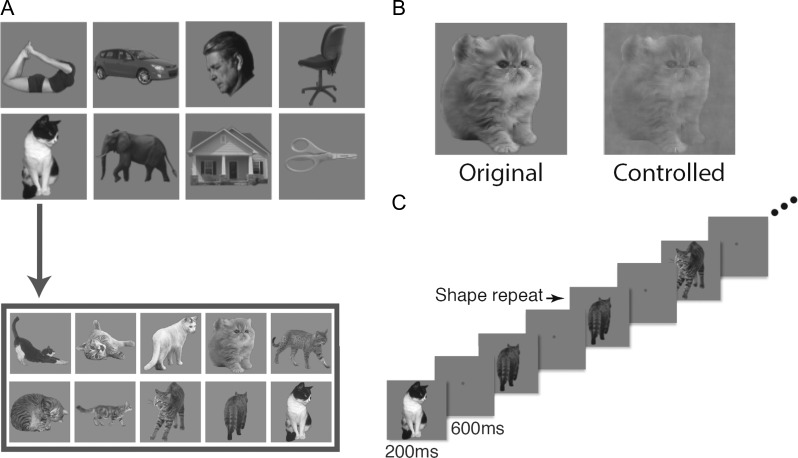

Experiment 1: Testing Original and Controlled Images

In this experiment, we used black-and-white images from 8 object categories (faces, bodies, houses, cats, elephants, cars, chairs, and scissors), modified to occupy roughly the same area on the screen (Fig. 1A). For each object category, we selected 10 exemplar images that varied in identity, pose, and viewing angle to minimize the low-level similarities among them. All images were placed on a dark gray square (subtending 9.13° × 9.13°) and displayed on a light gray background. In the original image condition, the original images were shown. In the controlled image condition, we equalized image contrast, luminance, and spatial frequency across all categories using the SHINE toolbox (Willenbockel et al. 2010; see Fig. 1B). Participants fixated on a central red dot (0.46° in diameter) throughout the experiment. Eye movements were monitored in all fMRI experiments using an SR Research EyeLink 1000 to ensure proper fixation.
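The kind of equalization applied in the controlled image condition can be illustrated with a minimal NumPy sketch. It is not the SHINE toolbox, which is MATLAB code offering several matching options; it only shows 2 of the underlying ideas, under our own assumptions: rescaling every image to a common mean luminance and RMS contrast, and giving every image the average Fourier amplitude spectrum of the set while keeping its own phase. The function names are hypothetical, and clipping to the displayable range is not handled.

```python
import numpy as np


def match_luminance_contrast(images, target_mean=None, target_std=None):
    """Rescale each image to a common mean luminance and RMS contrast (pixel SD).
    Defaults to the average mean and average SD of the input set."""
    images = [np.asarray(im, dtype=float) for im in images]
    if target_mean is None:
        target_mean = float(np.mean([im.mean() for im in images]))
    if target_std is None:
        target_std = float(np.mean([im.std() for im in images]))
    out = []
    for im in images:
        sd = im.std()
        z = (im - im.mean()) / sd if sd > 0 else np.zeros_like(im)
        out.append(z * target_std + target_mean)
    return out


def match_amplitude_spectrum(images):
    """Give every image the average Fourier amplitude spectrum of the set,
    keeping each image's own phase spectrum (all images must share one shape)."""
    spectra = [np.fft.fft2(np.asarray(im, dtype=float)) for im in images]
    mean_amp = np.mean([np.abs(s) for s in spectra], axis=0)
    # Amplitude spectra of real images are symmetric, so the result is real
    # up to rounding error; np.real discards that residue.
    return [np.real(np.fft.ifft2(mean_amp * np.exp(1j * np.angle(s)))) for s in spectra]


rng = np.random.default_rng(0)
images = [rng.uniform(0, 255, (32, 32)) * scale for scale in (0.5, 1.0)]
equalized = match_luminance_contrast(match_amplitude_spectrum(images))
```

For nonnegative images, sharing one amplitude spectrum already implies a shared mean and variance (by Parseval's theorem), so the final luminance/contrast step leaves the spectra identical across images.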

Figure 1.

Stimuli and paradigm used in Experiment 1. (A) The 8 natural object categories used. Each category contained 10 different exemplars varying in identity, pose, and viewing angle to minimize the low-level image similarities among them. (B) Example images showing the original version (left) and the controlled version (right) of the same image. (C) An illustration of the block design paradigm used. Participants performed a one-back repetition detection task on the images.

During the experiment, blocks of images were shown. Each block contained a random sequential presentation of 10 exemplars from the same object category. Each image was presented for 200 ms, followed by a 600-ms blank interval (Fig. 1C). Participants detected a one-back repetition of the exact same image by pressing a key on an MRI-compatible button box. Two image repetitions occurred randomly in each image block. Each experimental run contained 16 blocks, 1 for each of the 8 categories in each image condition (original or controlled). The order of the 8 object categories and the 2 image conditions was counterbalanced across runs and participants. Each block lasted 8 s and was followed by an 8-s fixation period. There was an additional 8-s fixation period at the beginning of the run. Each participant completed one scan session with 16 runs for this experiment, with each run lasting 4 min 24 s.
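The block timing can be checked with a short Python sketch. The helper names are hypothetical, and the sketch assumes that each 8-s block is followed by an 8-s fixation period after an initial lead-in fixation; times are kept in integer seconds (and integer milliseconds within a block) to avoid floating-point rounding.

```python
def block_schedule(n_blocks, block_s=8, fix_s=8, lead_in_s=8):
    """Return a list of (onset, offset) times in seconds for each stimulus block,
    plus the total run duration. Assumes every block is followed by one fixation period."""
    period = block_s + fix_s
    blocks = [(lead_in_s + i * period, lead_in_s + i * period + block_s) for i in range(n_blocks)]
    duration = lead_in_s + n_blocks * period
    return blocks, duration


def as_min_sec(seconds):
    """Format whole seconds as 'M min S s'."""
    minutes, secs = divmod(seconds, 60)
    return f"{minutes} min {secs} s"


# Within a block: 10 images x (200 ms on + 600 ms blank) = 8000 ms, i.e. one 8-s block.
assert 10 * (200 + 600) == 8000

blocks, duration = block_schedule(16)   # Experiment 1: 16 blocks
print(as_min_sec(duration))             # 4 min 24 s
```

Under the same assumptions, `block_schedule(17)` gives 280 s (4 min 40 s) for Experiment 4, and `block_schedule(9, lead_in_s=2)` gives 146 s (2 min 26 s) for Experiments 5–7 (1 practice block plus 8 experimental blocks), matching the run durations reported for those experiments.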

Experiment 2: Testing Position Tolerance

In this experiment, we used the controlled images from Experiment 1 at a smaller size (subtending 5.7° × 5.7°). Images were centered 3.08° above fixation in half of the 16 blocks and the same distance below fixation in the other half. The order of the 8 object categories and the 2 positions was counterbalanced across runs and participants. All other details were identical to those of Experiment 1.

Experiment 3: Testing Size Tolerance

In this experiment, we used the controlled images from Experiment 1, shown at fixation at either a small size (4.6° × 4.6°) or a large size (11.4° × 11.4°). Half of the 16 blocks contained small images and the other half large images. The presentation order of the 8 object categories and the 2 sizes was counterbalanced across runs and participants. All other details were identical to those of Experiment 1.

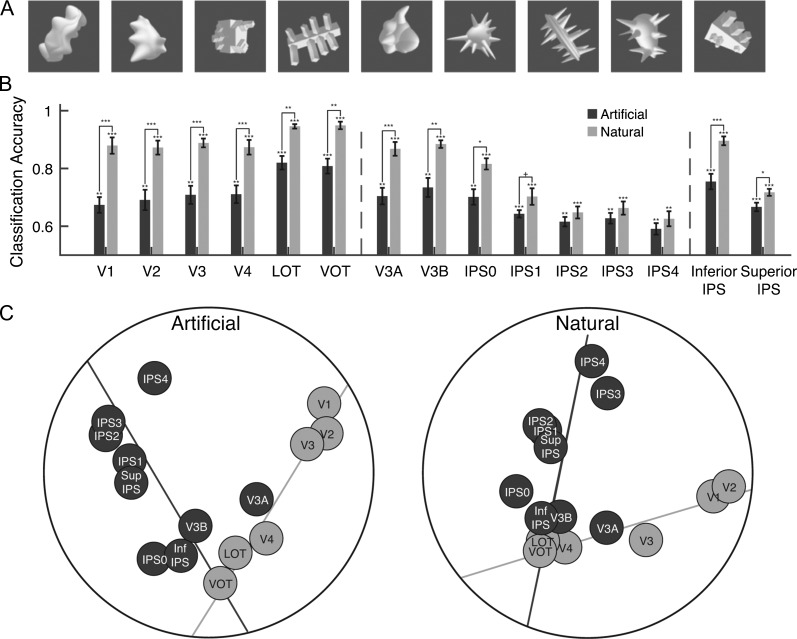

Experiment 4: Comparing Natural and Artificial Object Categories

In this experiment, we used both natural and artificial shape categories. The natural categories were the same 8 categories used in Experiment 1, shown as original images. The artificial categories were 9 categories of computer-generated 3D shapes (10 images per category) adopted from Op de Beeck et al. (2008) and shown in random orientations to increase image variation within a category (Fig. 5A). All stimuli were modified to occupy roughly the same spatial extent, placed on a dark gray square (subtending 9.13° × 9.13°), and displayed on a light gray background. Each run contained seventeen 8-s blocks, one for each of the 8 natural and 9 artificial categories. An 8-s fixation period was presented between the stimulus blocks and at the beginning of the run. Each participant completed 18 experimental runs. The order of the object categories was counterbalanced across runs and participants. Each run lasted 4 min 40 s.

Figure 5.

Comparing natural and artificial object category representations in Experiment 4. (A) Sample images from the 9 artificial object categories. (B) fMRI decoding accuracies for the natural and artificial object categories. The left vertical dashed line separates OTC regions from PPC regions, and the right vertical dashed line separates functionally defined PPC regions from topographically defined PPC regions. Error bars indicate standard errors of the means. Chance-level performance equals 0.5. Asterisks show corrected P values (***P < 0.001, **P < 0.01, *P < 0.05, †P < 0.1). (C) MDS plots of the region-wise similarity matrices for the artificial (left panel) and natural (right panel) object categories. The 2-pathway structure (i.e., the light and dark gray total least squares regression lines through the OTC and PPC regions, respectively) accounted for 64% of the total variance in region-wise differences in visual object representation for both the natural and the artificial object categories, with no systematic difference between the 2. The presence of the 2-pathway structure is thus not driven by the specific natural object categories used.

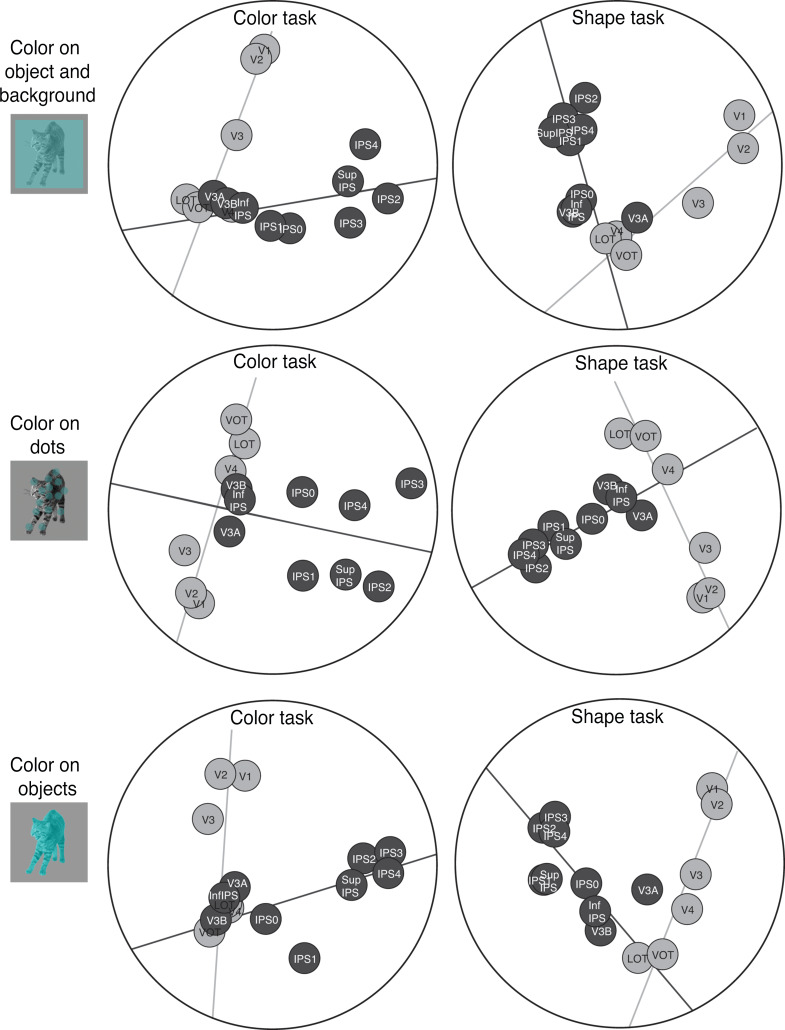

Experiments 5, 6, and 7: Testing Task Modulations

Some data from these 3 task experiments were reported in a previous publication (Vaziri-Pashkam and Xu 2017). The analyses reported here, however, have not been published before. Details of these 3 experiments are reproduced here for the reader's convenience.

The original images of the 8 natural categories used in Experiment 1 were also used in these 3 task experiments. In Experiment 5, the objects were covered with a semitransparent colored square subtending 9.24° of visual angle (Fig. 7), making both the object and its surrounding background colored. In Experiment 6, a set of 30 semitransparent colored dots, each 0.93° of visual angle in diameter, was placed on top of the object, covering the same spatial extent as the object. In Experiment 7, the objects were fully colored, making color an integrated feature of the object. On each trial, the color of the image was selected from a list of 10 colors (blue, red, light green, yellow, cyan, magenta, orange, dark green, purple, and brown). Participants were instructed to view the images while fixating. In half of the runs, participants performed a one-back repetition detection task on object shape (similar to the task in the previous experiments); in the other half, they ignored the shape and detected a one-back repetition of color.

Figure 7.

Results of the MDS analysis on the region-wise similarity matrices for the shape (left panels) and color (right panels) tasks in Experiments 5–7. The top, middle, and bottom rows show the 3 color and shape manipulations, with color appearing on the object and background, on dots overlaying the objects, and on the objects, respectively. The 2-pathway structure (i.e., the light and dark gray total least squares regression lines through the OTC and PPC regions, respectively) accounted for 63% and 58% of the total variance in the region-wise differences in visual object representation in the shape and color tasks of Experiment 5, respectively. These values were 65% and 63% in Experiment 6, and 61% and 63% in Experiment 7. No systematic difference was observed across the 3 experiments or the 2 tasks. The 2-pathway structure thus remains stable across the 2 tasks used here.

Each experimental run consisted of 1 practice block at the beginning of the run and 8 experimental blocks with 1 for each of the 8 object categories. The stimuli from the practice block were chosen randomly from 1 of the 8 categories and data from the practice block were removed from further analysis. Each block lasted 8 s. There was a 2-s fixation period at the beginning of the run and an 8-s fixation period after each stimulus block. The presentation order of the object categories was counterbalanced across runs for each task. Task changed every other run with the order reversed halfway through the session. Each participant completed one session of 32 runs, with 16 runs for each of the 2 tasks in each experiment. Each run lasted 2 min 26 s.

Localizer Experiments

All the localizer experiments conducted here used previously established protocols and the details of these protocols are reproduced here for the reader’s convenience.

Topographic Visual Regions

These regions were mapped with flashing checkerboards using standard techniques (Sereno et al. 1995; Swisher et al. 2007), with parameters optimized following Swisher et al. (2007) to reveal maps in parietal cortex. Specifically, a polar angle wedge with an arc of 72° swept across the entire screen (23.4° × 17.5° of visual angle). The wedge had a sweep period of 55.467 s, flashed at 4 Hz, and swept for 12 cycles in each run (for more details, see Swisher et al. 2007). Participants completed 4–6 runs, each lasting 11 min 56 s. The task varied slightly across participants. All participants were asked to detect a dimming in the visual display. For some participants, the dimming occurred only at fixation; for some, it occurred only within the polar angle wedge; and for others, it could occur in both locations, commensurate with the various methodologies used in the literature (Bressler and Silver 2010; Swisher et al. 2007). No differences were observed among the maps obtained with these methods.
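Phase-encoded polar angle data of this kind are conventionally analyzed by taking, for each voxel, the Fourier component at the stimulus frequency; the phase of that component indicates which wedge position drives the voxel. The sketch below is a generic illustration of that standard approach, not the authors' pipeline, and its function name is hypothetical. It assumes the analyzed time series spans exactly the 12 wedge cycles (with TR = 2.6 s, 12 × 55.467 s corresponds to 256 volumes), uses one common definition of coherence, and does not correct for hemodynamic delay or the wedge's starting angle, both of which are needed to convert phase into polar angle.

```python
import numpy as np


def phase_at_stimulus_frequency(ts, n_cycles):
    """Return (phase in radians on [0, 2*pi), coherence) of one voxel time series at the
    stimulus frequency, i.e. FFT bin `n_cycles` when the series spans exactly n_cycles."""
    ts = np.asarray(ts, dtype=float)
    spec = np.fft.rfft(ts - ts.mean())
    amp = np.abs(spec)
    # Coherence: amplitude at the stimulus frequency relative to all non-DC amplitudes
    coherence = amp[n_cycles] / np.sqrt(np.sum(amp[1:] ** 2))
    # For a response cos(2*pi*f*t - phi), the FFT phase at f is -phi
    phase = np.mod(-np.angle(spec[n_cycles]), 2 * np.pi)
    return phase, coherence


# Simulated voxel: 12 cycles of a 55.467-s sweep sampled at TR = 2.6 s (256 volumes)
tr, n_cycles, period = 2.6, 12, 55.467
n_vols = int(round(n_cycles * period / tr))
t = np.arange(n_vols) * tr
true_phase = 1.3
rng = np.random.default_rng(0)
voxel = 100 + np.cos(2 * np.pi * t / period - true_phase) + 0.1 * rng.standard_normal(n_vols)
phase, coherence = phase_at_stimulus_frequency(voxel, n_cycles)
```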

Superior IPS

To identify the superior IPS ROI previously shown to be involved in visual short-term memory (VSTM) storage (Todd and Marois 2004; Xu and Chun 2006), we followed the procedures developed by Xu and Chun (2006) and implemented by Xu and Jeong (2015). In an event-related object VSTM experiment, participants viewed a brief sample display of 1–4 everyday objects and, after a short delay, judged whether a new probe object in the test display matched the category of the object shown at the same position in the sample display. A match occurred in 50% of the trials. Gray-scale photographs of objects from 4 categories (shoes, bikes, guitars, and couches) were used. Objects could appear above, below, to the left, or to the right of the central fixation. Object locations were marked by white rectangular placeholders that were always visible during the trial. The placeholders subtended 4.5° × 3.6° and were 4.0° away from fixation (center to center). The entire display subtended 12.5° × 11.8°. Each trial lasted 6 s and consisted of fixation (1000 ms), sample display (200 ms), delay (1000 ms), test display/response (2500 ms), and feedback (1300 ms). With a counterbalanced trial history design (Todd and Marois 2004; Xu and Chun 2006), each run contained 15 trials for each set size and 15 fixation trials in which only the fixation dot was present for 6 s. Two filler trials, which were excluded from the analysis, were added at the beginning and end of each run, respectively, for practice and trial history balancing purposes. Participants were tested with 2 runs, each lasting 8 min.

Inferior IPS

Following the procedure developed by Xu and Chun (2006) and implemented by Xu and Jeong (2015), participants viewed blocks of objects and noise images. The object images were similar to the images in the superior IPS localizer, except that 4 images were presented on the display in all trials. The noise images were generated by phase-scrambling each entire object image. Each block lasted 16 s and contained 20 images, each appearing for 500 ms followed by a 300-ms blank display. Participants were asked to detect the direction of a slight spatial jitter (either horizontal or vertical), which occurred randomly once in every 10 images. Each run contained 8 object blocks and 8 noise blocks. Each participant was tested with 2 or 3 runs, each lasting 4 min 40 s.

Lateral and Ventral Occipitotemporal Regions (LOT and VOT)

To identify the LOT and VOT ROIs, following Kourtzi and Kanwisher (2000), participants viewed blocks of face, scene, object, and scrambled object images (all subtending approximately 12.0° × 12.0°). The images were gray-scale photographs of male and female faces, common objects (e.g., cars, tools, and chairs), indoor and outdoor scenes, and phase-scrambled versions of the common objects. Participants detected a slight spatial jitter, which occurred randomly once in every 10 images. Each run contained 4 blocks each of scenes, faces, objects, and phase-scrambled objects. Each block lasted 16 s and contained 20 unique images, each appearing for 750 ms followed by a 50-ms blank display. In addition to the stimulus blocks, 8-s fixation blocks were included at the beginning, middle, and end of each run. Each participant was tested with 2 or 3 runs, each lasting 4 min 40 s.

MRI Methods

MRI data were collected using a Siemens MAGNETOM Trio, A Tim System 3T scanner with a 32-channel receiver array head coil. Participants lay on their backs inside the scanner and viewed the display, back-projected by an LCD projector, through a mirror mounted inside the head coil. The display had a refresh rate of 60 Hz and a spatial resolution of 1024 × 768. An Apple MacBook Pro laptop was used to present the stimuli and collect the motor responses. For topographic mapping, the stimuli were presented using VisionEgg (Straw 2008). All other stimuli were presented with MATLAB running Psychtoolbox extensions (Brainard 1997).

Each participant completed 8–9 MRI scan sessions to obtain data for the high-resolution anatomical scans, topographic maps, functional ROIs, and experimental scans. Using standard parameters, a T1-weighted high-resolution (1.0 × 1.0 × 1.3 mm³) anatomical image was obtained for surface reconstruction. For all fMRI scans, a T2*-weighted gradient echo pulse sequence was used. For the experimental scans, 33 axial slices parallel to the AC-PC line (3 mm thick, 3 × 3 mm² in-plane resolution with 20% skip) were used to cover the whole brain (TR = 2 s, TE = 29 ms, flip angle = 90°, matrix = 64 × 64). For the LOT/VOT and inferior IPS localizer scans, 30–31 axial slices parallel to the AC-PC line (3 mm thick, 3 × 3 mm² in-plane resolution with no skip) were used to cover the occipital and temporal lobes and parts of the parietal and frontal lobes (TR = 2 s, TE = 30 ms, flip angle = 90°, matrix = 72 × 72). For the superior IPS localizer scans, 24 axial slices parallel to the AC-PC line (5 mm thick, 3 × 3 mm² in-plane resolution with no skip) were used to cover most of the brain, with priority given to parietal and occipital cortices (TR = 1.5 s, TE = 29 ms, flip angle = 90°, matrix = 72 × 72). For topographic mapping, 42 slices (3 mm thick, 3.125 × 3.125 mm² in-plane resolution with no skip) just off parallel to the AC-PC line were collected to cover the whole brain (TR = 2.6 s, TE = 30 ms, flip angle = 90°, matrix = 64 × 64). Different slice prescriptions were used for the different localizers to be consistent with the parameters used in our previous studies. Because the localizer data were projected into the volume view and then onto individual participants' flattened cortical surfaces, the exact slice prescriptions had minimal impact on the final results.
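The slice prescriptions translate into a nominal superior-inferior coverage that can be computed directly. In this sketch the function name is hypothetical, "20% skip" is assumed to mean a gap between adjacent slices equal to 20% of the slice thickness (0.6 mm here), and the small tilt of oblique prescriptions is ignored.

```python
def slab_coverage_mm(n_slices, thickness_mm, skip_fraction=0.0):
    """Nominal superior-inferior extent of a slice stack in mm.
    skip_fraction: gap between adjacent slices as a fraction of slice thickness
    (our assumed reading of "20% skip")."""
    gap_mm = thickness_mm * skip_fraction
    return n_slices * thickness_mm + (n_slices - 1) * gap_mm


protocols = {
    "experimental scans": (33, 3, 0.2),
    "LOT/VOT and inferior IPS localizers (31 slices)": (31, 3),
    "superior IPS localizer": (24, 5),
    "topographic mapping": (42, 3),
}
for name, args in protocols.items():
    print(f"{name}: {slab_coverage_mm(*args):.1f} mm")
```

Under these assumptions, the experimental scans span about 118.2 mm, the LOT/VOT and inferior IPS localizers 90–93 mm, the superior IPS localizer 120 mm, and the topographic mapping scans 126 mm.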

Data Analysis

fMRI data were analyzed using FreeSurfer (surfer.nmr.mgh.harvard.edu), FS-FAST (Dale et al. 1999), and in-house MATLAB code. LIBSVM (Chang and Lin 2011) was used for the MVPA support vector machine analysis. fMRI data preprocessing included 3D motion correction, slice-timing correction, and linear and quadratic trend removal.
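Linear and quadratic trend removal amounts to regressing each voxel's time series on a constant, a linear, and a quadratic term and keeping the residuals. The NumPy sketch below illustrates that idea with a hypothetical function name; it is not the FS-FAST implementation, which may handle drift differently (for example, as nuisance regressors within the GLM).

```python
import numpy as np


def remove_quadratic_trend(ts):
    """Regress out constant, linear, and quadratic terms from a time series.
    ts: array of shape (n_timepoints,) or (n_timepoints, n_voxels).
    Returns the residuals (note: the mean is removed along with the trends)."""
    ts = np.asarray(ts, dtype=float)
    t = np.linspace(-1.0, 1.0, ts.shape[0])  # rescaled time keeps the design well conditioned
    design = np.column_stack([np.ones_like(t), t, t ** 2])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta
```

Add the voxel mean back to the residuals if a later step expects the original signal level.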

ROI Definitions

Topographic Maps

Following the procedures described in Swisher et al. (2007) and by examining phase reversals in the polar angle maps, we identified topographic areas in occipital and parietal cortices including V1, V2, V3, V4, V3A, V3B, IPS0, IPS1, IPS2, IPS3, and IPS4 in each participant (Fig. 2A). Activations from IPS3 and IPS4 were in general less robust than those from other IPS regions. Consequently, the localization of these 2 IPS ROIs was less reliable. Nonetheless, we decided to include these 2 ROIs here to have a more extensive coverage of the PPC regions along the IPS.

Superior IPS

To identify this ROI (Fig. 2B), fMRI data from the superior IPS localizer were analyzed using a linear regression analysis to determine voxels whose responses correlated with a given participant's behavioral VWM capacity, estimated using Cowan's K (Cowan 2001). In this parametric design, each stimulus presentation was weighted by the estimated Cowan's K for that set size. After convolving the stimulus presentation boxcars (lasting 6 s) with a hemodynamic response function, a linear regression with 2 parameters (a slope and an intercept) was fitted to the data from each voxel. Superior IPS was defined as a region in parietal cortex showing a significantly positive slope in the regression analysis and overlapping or lying near the Talairach coordinates previously reported for this region (Todd and Marois 2004). As in Vaziri-Pashkam and Xu (2017), we initially defined superior IPS with a threshold of P < 0.001 (uncorrected). This threshold was retained for 2 participants; for the other 5, it produced an ROI with too few voxels for MVPA decoding, so we relaxed the threshold to P < 0.05 (3 participants) or P < 0.1 (2 participants) to obtain a reasonably large superior IPS with at least 100 voxels across hemispheres. The resulting ROIs contained on average 234 voxels across participants.
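The parametric analysis can be sketched as follows. Cowan's K is computed as K = N × (H − FA), where N is the set size, H the hit rate, and FA the false alarm rate (Cowan 2001). Each 6-s trial boxcar is scaled by K for its set size (0 for fixation trials), convolved with an HRF, and a slope and intercept are fitted per voxel by ordinary least squares. The double-gamma HRF shape, the function names, and the omission of the significance test on the slope are assumptions of this sketch, not details taken from the study.

```python
import math

import numpy as np


def cowans_k(set_size, hit_rate, false_alarm_rate):
    """Cowan's K = N * (H - FA) (Cowan 2001)."""
    return set_size * (hit_rate - false_alarm_rate)


def double_gamma_hrf(tr, length_s=32.0):
    """Assumed HRF: difference of gammas (peak ~5 s, undershoot ratio 1/6), normalized to sum 1."""
    t = np.arange(0.0, length_s, tr)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    h = peak - undershoot / 6.0
    return h / h.sum()


def parametric_regressor(onsets_s, weights, n_vols, tr, trial_s=6.0):
    """Boxcar for each trial, scaled by its weight (K for its set size, 0 for fixation),
    convolved with the HRF and trimmed to the run length."""
    t = np.arange(n_vols) * tr
    box = np.zeros(n_vols)
    for onset, w in zip(onsets_s, weights):
        box[(t >= onset) & (t < onset + trial_s)] += w
    return np.convolve(box, double_gamma_hrf(tr))[:n_vols]


def fit_slope_intercept(regressor, voxel_ts):
    """Ordinary least squares with 2 parameters; returns (slope, intercept)."""
    design = np.column_stack([regressor, np.ones_like(regressor)])
    (slope, intercept), *_ = np.linalg.lstsq(design, voxel_ts, rcond=None)
    return slope, intercept
```

With TR = 1.5 s, as in the superior IPS localizer, each 6-s trial spans exactly 4 volumes.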

Inferior IPS

This ROI (Fig. 2B) was defined as a cluster of contiguous voxels in the inferior part of the IPS that responded more (P < 0.001, uncorrected) to the original than to the scrambled object images in the inferior IPS localizer and that did not overlap with the superior IPS ROI.

LOT and VOT

These 2 ROIs (Fig. 2C, D) were defined as clusters of contiguous voxels in the lateral and ventral occipital cortex, respectively, that responded more (P < 0.001, uncorrected) to the original than to the scrambled object images. LOT and VOT loosely correspond to the locations of LO and pFs (Malach et al. 1995; Grill-Spector et al. 1998; Kourtzi and Kanwisher 2000) but extend further into the temporal cortex, in an effort to include as many object-selective voxels as possible in the OTC regions. The LOT and VOT ROIs from all participants are shown in Supplemental Figure S1.

MVPA Classification Analysis

In Experiment 1, in which natural object categories were shown in both original and controlled formats, we performed MVPA decoding using a support vector machine (SVM) classifier to determine whether object category information was present in our ROIs. To generate fMRI response patterns for each condition in each run, we first convolved the 8-s stimulus presentation boxcars with a hemodynamic response function. We then conducted a general linear model analysis with 16 factors (2 image formats × 8 object categories) to extract the beta value for each condition in each voxel of each ROI. This was done separately for each run. We then normalized the beta values across all voxels in a given ROI in a given run using a z-score transformation to remove amplitude differences between runs, conditions, and ROIs. Following Kamitani and Tong (2005), to discriminate between the fMRI response patterns elicited by the different object categories, pairwise category decoding was performed within each image format (original or controlled) with SVM analysis using a leave-one-out cross-validation procedure. Because pattern decoding depends to a large extent on the total number of voxels in an ROI, we equated the number of voxels across ROIs to facilitate comparisons, selecting the 75 most informative voxels from each ROI using a t-test analysis (Mitchell et al. 2004). Specifically, during each SVM training and testing iteration, the 75 voxels with the lowest P values for discriminating between the 2 conditions of interest were selected from the training data. An SVM was then trained and tested only on these voxels. Notably, the results remained largely the same in all experiments when we included all voxels from each ROI in the analysis; sample results from Experiment 1 are included in Supplemental Figure S2.
Nevertheless, to avoid potential confounds due to differences in voxel numbers, to increase power, and to obtain the best decoding each brain region could accomplish, we selected the 75 best voxels in the analyses of all subsequent experiments.
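A minimal Python sketch of this decoding scheme follows. It uses scikit-learn's `SVC`, which is built on LIBSVM, in place of the MATLAB LIBSVM interface used in the study. Several details are assumptions: each response pattern (one condition in one run) is z-scored across voxels, "leave-one-out" is implemented as leave-one-run-out, the SVM uses a linear kernel with C = 1, and voxels are ranked by the absolute value of a pooled-variance two-sample t statistic (with fixed degrees of freedom this gives the same ranking as the P value). Function names are hypothetical.

```python
from itertools import combinations

import numpy as np
from sklearn.svm import SVC  # scikit-learn's SVC is built on LIBSVM


def zscore_patterns(betas):
    """betas: (n_runs, n_conditions, n_voxels). Z-score each response pattern across voxels."""
    mean = betas.mean(axis=-1, keepdims=True)
    std = betas.std(axis=-1, keepdims=True)
    return (betas - mean) / std


def top_k_voxels(xa, xb, k):
    """Indices of the k voxels with the largest |t| (pooled-variance two-sample t-test)."""
    na, nb = len(xa), len(xb)
    pooled = ((na - 1) * xa.var(axis=0, ddof=1) + (nb - 1) * xb.var(axis=0, ddof=1)) / (na + nb - 2)
    t = (xa.mean(axis=0) - xb.mean(axis=0)) / np.sqrt(pooled * (1 / na + 1 / nb))
    return np.argsort(-np.abs(t))[:k]


def pairwise_accuracy(patterns, a, b, k=75):
    """Leave-one-run-out linear-SVM accuracy for conditions a vs b.
    Voxels are selected from the training runs only, so the test run never informs selection."""
    n_runs = patterns.shape[0]
    correct = 0
    for test_run in range(n_runs):
        train = [r for r in range(n_runs) if r != test_run]
        xa, xb = patterns[train, a], patterns[train, b]
        vox = top_k_voxels(xa, xb, k)
        X = np.vstack([xa[:, vox], xb[:, vox]])
        y = np.r_[np.zeros(len(train)), np.ones(len(train))]
        clf = SVC(kernel="linear", C=1.0).fit(X, y)
        pred = clf.predict(np.vstack([patterns[test_run, a, vox], patterns[test_run, b, vox]]))
        correct += int(pred[0] == 0) + int(pred[1] == 1)
    return correct / (2 * n_runs)


def mean_pairwise_accuracy(patterns, k=75):
    """Average accuracy over all category pairs (28 pairs for 8 categories)."""
    n_cond = patterns.shape[1]
    accs = {(a, b): pairwise_accuracy(patterns, a, b, k) for a, b in combinations(range(n_cond), 2)}
    return float(np.mean(list(accs.values()))), accs
```

In this sketch, `patterns` holds the z-scored betas for one image format in one ROI, with shape (runs, 8 categories, voxels); `mean_pairwise_accuracy` then averages over the 28 category pairs, as described in the following paragraph.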

After calculating the SVM accuracies, we averaged, within each image format, the decoding accuracies across all pairs of object categories (28 pairs in total) to determine the average category decoding accuracy in a given ROI. All subsequent experiments were analyzed using the same procedure.

When results from individual participants were combined for group-level statistical analyses, all reported P values were corrected for multiple comparisons using the Benjamini–Hochberg procedure, with the false discovery rate (FDR) controlled at q < 0.05 (Benjamini and Hochberg 1995). In the analysis of the 15 ROIs, the correction was applied to 15 comparisons; in the analysis of the 3 representative regions, it was applied to 3 comparisons.
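The Benjamini–Hochberg correction can be written compactly as adjusted P values: sort the m P values, scale the k-th smallest by m/k, and enforce monotonicity from the largest rank downward. A test is significant at FDR q < 0.05 when its adjusted value is at or below 0.05. This is a generic NumPy sketch of the standard procedure with a hypothetical function name, not the study's MATLAB code.

```python
import numpy as np


def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted P values (q values) for m comparisons."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity: each adjusted value is the minimum over all larger ranks.
    scaled = np.minimum.accumulate(scaled[::-1])[::-1]
    adjusted = np.empty(m)
    adjusted[order] = np.minimum(scaled, 1.0)
    return adjusted


# Example with 4 comparisons; for the ROI analysis, m would be 15.
print(benjamini_hochberg([0.01, 0.04, 0.03, 0.005]))
```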

Representational Similarity Analysis

To visualize how similarities between object categories were captured by a given brain region, we performed multidimensional scaling (MDS) analysis (Shepard 1980). MDS takes as input either distance measures or correlation measures. From the group-average pairwise category decoding accuracies, we constructed an 8 × 8 category-wise similarity matrix with a diagonal of 0.5 and other values ranging from around 0.5 to 1. Because higher decoding accuracy indicates more distinct representations, these values behave as distances; to make them start from 0 for MDS, we subtracted 0.5 from all cell values, yielding a matrix with zeros on the diagonal. Because distance measures cannot be negative, we replaced all values below zero with zero. We then projected the first 2 dimensions of the modified category-wise matrix for a given brain region onto a 2D space, with the distance between categories denoting their relative representational similarity in that brain region.
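The conversion and projection can be sketched as below. The paper does not state which MDS algorithm was used; this sketch assumes classical (Torgerson) MDS, which double-centers the squared distances and keeps the top 2 eigenvectors scaled by the square roots of their eigenvalues. Function names are hypothetical.

```python
import numpy as np


def accuracy_to_distance(acc_matrix):
    """Subtract chance (0.5) and clip negative values to zero."""
    d = np.asarray(acc_matrix, dtype=float) - 0.5
    return np.clip(d, 0.0, None)


def classical_mds(dist, n_dims=2):
    """Torgerson classical MDS: double-center the squared distances, keep the top eigenvectors."""
    d2 = np.asarray(dist, dtype=float) ** 2
    n = d2.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    b = -0.5 * centering @ d2 @ centering
    evals, evecs = np.linalg.eigh(b)  # eigenvalues in ascending order
    idx = np.argsort(evals)[::-1][:n_dims]
    return evecs[:, idx] * np.sqrt(np.maximum(evals[idx], 0.0))
```

For an 8 × 8 accuracy matrix `acc`, `classical_mds(accuracy_to_distance(acc))` would give one 2D coordinate per category.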

To determine the extent to which object category representations in one brain region were similar to those in another, we correlated the unmodified category-wise similarity matrices (i.e., without subtracting 0.5 and without replacing values below zero with zero) for each pair of brain regions to form a region-wise similarity matrix. Specifically, for each brain region we concatenated all the off-diagonal values of its unmodified category-wise similarity matrix into an object category similarity vector, and we then correlated these vectors between every pair of brain regions. The region-wise similarity matrix was first calculated for each participant and then averaged across participants to obtain the group-level region-wise similarity matrix.
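The construction of the region-wise similarity matrix can be sketched as follows, assuming symmetric category-wise matrices and Pearson correlation (the text says only that the vectors were correlated). Taking only the upper triangle is equivalent to using all off-diagonal cells of a symmetric matrix, because duplicating every data point leaves a Pearson correlation unchanged. Function names are illustrative.

```python
import numpy as np


def region_similarity(category_matrices):
    """Correlate category-wise similarity matrices between every pair of regions.

    category_matrices: array (n_regions, n_cat, n_cat) of unmodified pairwise
    decoding-accuracy matrices for one participant.
    Returns an (n_regions, n_regions) Pearson correlation matrix.
    """
    mats = np.asarray(category_matrices, dtype=float)
    n_cat = mats.shape[1]
    rows, cols = np.triu_indices(n_cat, k=1)   # off-diagonal cells (upper triangle)
    vectors = mats[:, rows, cols]              # one similarity vector per region
    return np.corrcoef(vectors)                # rows are treated as variables


def group_region_similarity(per_participant):
    """Average participant-level region-wise matrices into the group matrix."""
    return np.mean([region_similarity(m) for m in per_participant], axis=0)
```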

To calculate the split-half reliability of the region-wise similarity matrix, we divided the participants into 2 groups and correlated the region-wise similarity matrices of the 2 groups. This reliability measure was obtained for all possible split-half divisions and averaged to generate the final reliability measure. To determine its significance, we obtained a bootstrapped null distribution of reliability by randomly shuffling the region labels of the correlation matrix, separately for the 2 split-half groups, and calculating the reliability for 10 000 random samples.
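The split-half procedure can be sketched as below. Two points are assumptions rather than details given in the text: each group's matrices are averaged before the 2 groups are correlated on their off-diagonal cells, and "shuffling the labels" is read as independently permuting the region labels (rows and columns together) of each group's matrix. With an odd number of participants, one group receives the extra participant. Function names are hypothetical.

```python
from itertools import combinations

import numpy as np


def offdiag(matrix):
    """Upper-triangle off-diagonal cells of a square matrix as a vector."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]


def split_half_reliability(region_mats):
    """Mean correlation between the 2 halves' averaged matrices over all splits.

    region_mats: array (n_participants, n_regions, n_regions).
    """
    mats = np.asarray(region_mats, dtype=float)
    n = mats.shape[0]
    rs = []
    for group_a in combinations(range(n), n // 2):
        group_b = [i for i in range(n) if i not in group_a]
        mean_a = mats[list(group_a)].mean(axis=0)
        mean_b = mats[group_b].mean(axis=0)
        rs.append(np.corrcoef(offdiag(mean_a), offdiag(mean_b))[0, 1])
    return float(np.mean(rs))


def null_reliability(mean_a, mean_b, n_samples=10_000, seed=0):
    """Null distribution: permute region labels independently in each half's matrix."""
    rng = np.random.default_rng(seed)
    n_regions = mean_a.shape[0]
    null = np.empty(n_samples)
    for i in range(n_samples):
        pa = rng.permutation(n_regions)
        pb = rng.permutation(n_regions)
        shuffled_a = mean_a[np.ix_(pa, pa)]   # reorder rows and columns together
        shuffled_b = mean_b[np.ix_(pb, pb)]
        null[i] = np.corrcoef(offdiag(shuffled_a), offdiag(shuffled_b))[0, 1]
    return null
```

A 95% CI of the null distribution, as reported in the Results, would then be `np.percentile(null, [2.5, 97.5])`.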

To visualize the representational similarity of the different brain regions, we used the correlation values in the region-wise similarity matrix as input to MDS and projected the first 2 dimensions onto a 2D space, with the distances between regions denoting their relative similarities to each other. We then fit 2 regression lines using least squares, one through the positions of the OTC regions V1, V2, V3, V4, LOT, and VOT, and the other through the positions of the PPC regions V3A, V3B, IPS0-4, inferior IPS, and superior IPS.
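One way to fit each pathway line is sketched here. The figure captions describe total least squares lines, so this sketch assumes orthogonal regression (the first principal axis of the points) rather than ordinary least squares. It works unchanged in any number of dimensions, which also covers the higher-dimensional fits. The predicted position of a region is taken to be its orthogonal projection onto its own pathway's line; function names are hypothetical.

```python
import numpy as np


def fit_tls_line(points):
    """Total least squares line through points of any dimensionality.

    Returns (centroid, unit direction). The direction is the first principal
    axis of the centered points, which minimizes summed squared
    perpendicular distances to the line.
    """
    pts = np.asarray(points, dtype=float)
    centroid = pts.mean(axis=0)
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    return centroid, vt[0]


def project_onto_line(points, centroid, direction):
    """Predicted positions: orthogonal projection of each point onto the line."""
    pts = np.asarray(points, dtype=float)
    t = (pts - centroid) @ direction
    return centroid + np.outer(t, direction)
```

For example, the OTC line would be fit to the MDS coordinates of V1, V2, V3, V4, LOT, and VOT and the PPC line to those of V3A, V3B, IPS0-4, inferior IPS, and superior IPS, with each region projected onto its own line.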

To determine the amount of variance captured by the first 2 dimensions of the region-wise similarity matrix, as depicted in the 2D MDS plots, and that captured by the 2 regression lines fitted to the occipitotemporal and posterior parietal regions on these plots, we first obtained the Euclidean distances between brain regions on the 2D MDS plots (2D-distances) and the Euclidean distances between brain regions based on their predicted positions on the 2 regression lines (Line-distances). We then calculated the squared correlation (r²) between the 2D-distances and the original region-wise similarity matrix to determine the amount of variance explained by the first 2 dimensions. Likewise, we calculated the r² between the 2D-distances and the Line-distances to determine the amount of variance in the 2D MDS plot explained by the 2 regression lines. Finally, to determine how much of the total variance in the region-wise similarity matrix was explained by the 2 regression lines, we calculated the r² between the Line-distances and the original region-wise similarity matrix.
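The 3 variance measures reduce to squared correlations between off-diagonal matrix cells, as in the sketch below (helper names are hypothetical). Squaring makes the sign irrelevant, which matters because distances fall as region-wise similarity rises, so their correlation with the similarity matrix is expected to be negative.

```python
import numpy as np


def pairwise_distances(coords):
    """Euclidean distances between all rows of an (n_regions, n_dims) array."""
    coords = np.asarray(coords, dtype=float)
    diff = coords[:, None, :] - coords[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def variance_explained(a, b):
    """Squared Pearson correlation between the off-diagonal cells of 2 square matrices."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    rows, cols = np.triu_indices(a.shape[0], k=1)
    r = np.corrcoef(a[rows, cols], b[rows, cols])[0, 1]
    return float(r ** 2)
```

With hypothetical arrays `coords_2d` (MDS coordinates), `line_coords` (projected positions), and `similarity` (the region-wise matrix), the 3 quantities would be `variance_explained(pairwise_distances(coords_2d), similarity)`, `variance_explained(pairwise_distances(coords_2d), pairwise_distances(line_coords))`, and `variance_explained(pairwise_distances(line_coords), similarity)`.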

In addition to line fitting in 2D, we also performed line fitting in higher-dimensional spaces, with the number of dimensions ranging from 2 to Nmax. Nmax was the number of dimensions with positive eigenvalues as determined by MDS and varied between 12 and 14 across the 7 experiments. An Nmax-dimensional space accounted for close to 100% of the total variance of the region-wise similarity matrix (see Supplemental Fig. S4). Using r² as described above, we calculated the amount of total variance explained by each higher-dimensional space and by the 2 fitted lines in that space.
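Nmax can be sketched as the count of positive eigenvalues of the double-centered squared-distance matrix in classical MDS. How correlations were turned into distances is not stated, so the hypothetical conversion 1 − r is assumed here. Because double-centering removes one degree of freedom, 15 regions yield at most 14 positive eigenvalues, and non-Euclidean distances can produce negative eigenvalues that reduce the count further.

```python
import numpy as np


def correlation_to_distance(region_similarity):
    """Hypothetical conversion of region-wise correlations into distances (1 - r)."""
    return 1.0 - np.asarray(region_similarity, dtype=float)


def n_positive_dims(distances, rel_tol=1e-10):
    """Nmax: number of positive eigenvalues of the classical-MDS Gram matrix."""
    d2 = np.asarray(distances, dtype=float) ** 2
    n = d2.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ d2 @ centering
    eigvals = np.linalg.eigvalsh(gram)
    # Treat eigenvalues within floating-point noise of zero as zero.
    return int(np.sum(eigvals > rel_tol * np.abs(eigvals).max()))
```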

Within and Cross Position and Size Change Decoding

In Experiments 2 and 3, to determine whether object category representations in each region were tolerant to changes in position and size, we performed a generalization analysis and calculated cross-position and cross-size decoding accuracies. To examine position tolerance, we trained the classifier to discriminate 2 object categories at one location and then tested its performance in discriminating the same 2 object categories at the other location. We repeated this procedure for every pair of object categories (28 pairs) and averaged the results across participants to determine the amount of position tolerance for each ROI. We also compared cross-position decoding with within-position decoding, in which training and testing were done with object categories shown at the same position, as described earlier. The same analysis was applied to test size tolerance.
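The within- versus cross-position comparison can be sketched for one category pair as follows. To keep the example dependency-free, a nearest-centroid classifier stands in for the linear SVM used in the study, and the training and test sets are assumed to come from independent runs. The function names and data layout are hypothetical.

```python
import numpy as np


def nearest_centroid_accuracy(train_x, train_y, test_x, test_y):
    """Fit class centroids on the training patterns and score the test patterns.

    A nearest-centroid rule (Euclidean distance) stands in for the linear SVM.
    """
    classes = np.unique(train_y)
    centroids = np.stack([train_x[train_y == c].mean(axis=0) for c in classes])
    sq_dist = ((test_x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    predicted = classes[np.argmin(sq_dist, axis=1)]
    return float(np.mean(predicted == test_y))


def position_tolerance(train, test_same, test_other):
    """Within- and cross-position accuracy for one category pair.

    Each argument is a (patterns, labels) tuple: `train` and `test_same` come
    from independent runs at the same position, `test_other` from the other
    position. The last value returned is the decoding drop.
    """
    within = nearest_centroid_accuracy(*train, *test_same)
    cross = nearest_centroid_accuracy(*train, *test_other)
    return within, cross, within - cross
```

The same function covers size tolerance if `test_other` holds patterns from the other stimulus size.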

Results

A 2-Pathway Characterization of Visual Object Representation in OTC and PPC

To compare visual object representations in OTC and PPC, in Experiment 1 we showed human participants images from 8 object categories (faces, houses, bodies, cats, elephants, cars, chairs, and scissors; see Fig. 1A). These categories were chosen because they cover a good range of the natural object categories encountered in our everyday visual environment and are typical of the categories used in previous investigations of object category representations in OTC (Haxby et al. 2001; Kriegeskorte et al. 2008). Participants viewed blocks of images containing different exemplars from the same category and detected the presence of an immediate repetition of the same exemplar (Fig. 1C). We examined fMRI response patterns in topographically defined regions in occipital cortex (V1–V4) and along the IPS, including V3A, V3B, and IPS0-4 (Sereno et al. 1995; Swisher et al. 2007; Silver and Kastner 2009; see Fig. 2A). We also examined functionally defined object-selective regions. In PPC, we selected 2 parietal regions previously shown to be involved in object selection and encoding, one located in the inferior and one in the superior part of the IPS (henceforth referred to as inferior and superior IPS, respectively; Xu and Chun 2006, 2009; see also Todd and Marois 2004; see Fig. 2B). In OTC, we selected regions in LOT overlapping with LO (Malach et al. 1995; Grill-Spector et al. 1998; see Fig. 2C) and in VOT overlapping with pFs (Grill-Spector et al. 1998; see Fig. 2D), whose responses have been shown to correlate with successful visual object detection and identification (Grill-Spector et al. 2000; Williams et al. 2007) and whose lesions have been linked to visual object agnosia (Goodale et al. 1991; Farah 2004).

To examine the representation of object categories in OTC and PPC, we first z-normalized fMRI response patterns to remove response amplitude differences across categories and brain regions. Using linear SVM classifiers (Kamitani and Tong 2005; Pereira et al. 2009), we performed pairwise category decoding and averaged the accuracies across all pairs to obtain the average decoding accuracy in each ROI for each participant. Consistent with prior reports, we found robust and distinctive object representations in both OTC and PPC: the average category decoding accuracy was significantly above chance in all the ROIs examined (ts > 6.09, Ps < 0.01, corrected for multiple comparisons using the Benjamini–Hochberg procedure with FDR set at q < 0.05; this applies to all subsequent pairwise t-tests; see dark bars in Fig. 3A).

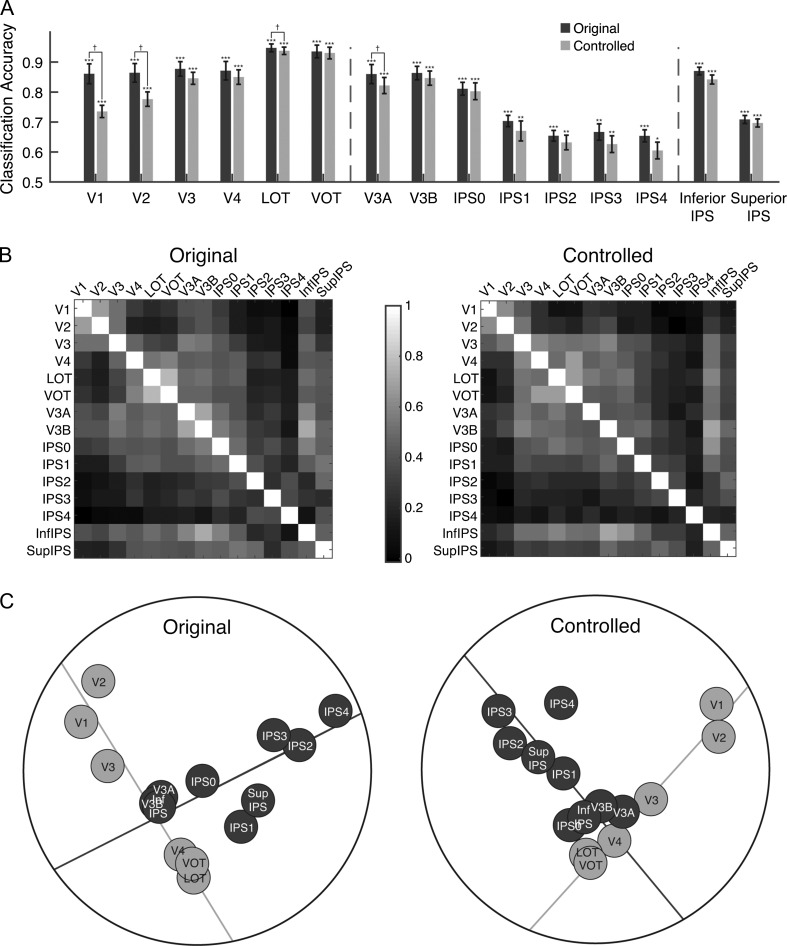

Figure 3.

Results of Experiment 1. (A) fMRI decoding accuracies for the original and controlled stimuli. The left vertical dashed line separates OTC ROIs from PPC ROIs, and the right vertical dashed line separates functionally defined PPC ROIs from topographically defined PPC ROIs. Error bars indicate standard errors of the means. Chance-level performance equals 0.5. Asterisks show corrected P values (***P < 0.001, **P < 0.01, *P < 0.05, †P < 0.1). (B) The region-wise similarity matrices for the original (left panel) and controlled (right panel) images. Each cell of a matrix depicts the correlation between 2 ROIs in how similar their representations of the 8 natural categories are. Lighter colors show higher correlations. (C) Results of the MDS analysis on the region-wise similarity matrices for the original (left panel) and controlled (right panel) images. A 2-pathway structure emerges prominently for both image types. A total least squares regression line through the OTC regions (light gray line) and another through the PPC regions (dark gray line) accounted for 79% and 61% of the total variance of the region-wise differences in visual object representation for the original and controlled object images, respectively. No systematic difference was observed between the original and controlled object images.

To compare the similarities of the visual representations among regions in OTC and PPC, we performed representational similarity analyses (Kriegeskorte and Kievit 2013). From the pairwise category classification accuracies, we first constructed a representational similarity matrix for the 8 object categories in each brain region. We then correlated the similarity matrices for each pair of brain regions to determine the extent to which object representations in one region were similar to those in another region. The resulting region-wise similarity matrix (Fig. 3B, left panel) had an average split-half reliability of 0.68, significantly higher than chance (bootstrapped null distribution 95% CI [−0.26,0.28]). To summarize this matrix and better visualize the similarities across brain regions, we performed dimensionality reduction using an MDS analysis (Shepard 1980). Figure 3C (left panel) depicts the first 2 dimensions, which explained 83% of the variance. Although both OTC and PPC contain object category representations, strikingly, they were separated from each other in the MDS plot (Fig. 3C, left panel). Specifically, brain regions appeared to be organized systematically into 2 pathways, with the occipitotemporal regions lining up hierarchically in an OTC pathway and the posterior parietal regions lining up hierarchically in a roughly orthogonal PPC pathway. To quantify this observation, we fit 2 straight lines to the points on the MDS plot: one to the OTC regions V1, V2, V3, V4, LOT, and VOT, and the other to the PPC regions V3A, V3B, IPS0-4, inferior IPS, and superior IPS (the light and dark gray lines, respectively, in the left panel of Fig. 3C). These lines explained 95% of the variance of the positions of the regions on the 2D MDS plot and 79% of the variance of the full region-wise correlation matrix.
Thus, with a bottom-up data-driven approach, we unveiled an information-driven 2-pathway representation of visual object information in posterior brain regions despite the existence of robust visual object representations in both OTC and PPC.

We also examined the contribution of the dorsal and ventral parts of V1–V3 separately and found that the 2 parts for each of these 3 visual areas are located right next to each other on the OTC axis in the MDS plots (see Supplemental Fig. S3).

The Contribution of Tolerance to Low-Level Feature Changes

Could the 2-pathway distinction arise because OTC contains progressively higher-level visual object representations from posterior to anterior regions, whereas PPC contains relatively lower-level sensory information? When we equalized the overall luminance, contrast, average spatial frequency, and orientation of the images from the 8 object categories in the controlled condition of Experiment 1 (Willenbockel et al. 2010; see the controlled image in Fig. 1B), we again observed significant category decoding in all the regions examined (see light bars in Fig. 3A; ts > 3.75, Ps < 0.05, corrected). Decoding accuracy was marginally higher for the original than for the controlled images in V1, V2, V3A, and LOT (ts > 3.3, Ps < 0.08, corrected) but did not differ in the other regions examined (ts < 2.6, Ps > 0.1, corrected). Critically, the region-wise similarity matrix and MDS plot remained qualitatively similar for the controlled images (Fig. 3B, C, right panels). The region-wise similarity matrix had a split-half reliability of 0.6 (bootstrapped null distribution 95% CI [−0.30,0.32]), and the 2 dimensions in the MDS plot for these controlled images explained 70% of the overall variance. The 2 fitted lines in the MDS plot explained 95% of the variance in the 2D space and 61% of the variance of the full region-wise correlation matrix. Moreover, the region-wise similarity matrices for the original and controlled images were highly correlated (r = 0.84, bootstrapped null distribution 95% CI [−0.28, 0.33]). The 2-pathway structure was thus present regardless of whether low-level image differences between the categories were equated.

To further compare the sensitivity of higher OTC and PPC regions to changes in low-level features, in Experiments 2 and 3 we examined whether visual representations in these regions differ in their tolerance to changes in stimulus position and size, respectively. Although previous human fMRI adaptation studies using relatively simple stimuli have found comparable tolerance to size and viewpoint changes in these regions (Sawamura et al. 2005; Konen and Kastner 2008), whether fMRI decoding would reveal a similar effect has not been tested. Moreover, monkey neurophysiology studies have reported conflicting results regarding position and size tolerance in parietal visual representations (Sereno and Maunsell 1998; Janssen et al. 2008), leaving open the possibility that a difference in position tolerance accounts for a representational difference between higher OTC and PPC regions. To examine tolerance to changes in position, in Experiment 2 we presented images either above or below fixation, trained our classifier to discriminate object categories presented at one position, and tested it on the categories shown at either the same or the other position. With the exception of V1, V2, and IPS4, which showed within-position (ts > 4.6, Ps < 0.01, corrected) but no cross-position object category decoding (ts < 2.1, Ps > 0.1, corrected), all other ROIs showed both within- and cross-position object category decoding (ts > 2.7, Ps < 0.05, corrected; see Fig. 4A). All examined regions also showed higher within- than cross-position decoding (ts > 3.73, Ps < 0.01, corrected; see Fig. 4A). To compare the amount of position tolerance in early visual, higher occipitotemporal, and posterior parietal regions, we focused on 3 representative regions: V1, VOT, and superior IPS. Our goal here was not to compare each region with every other region, but to determine whether higher OTC and PPC regions showed similar tolerance to position changes.
Given the hierarchical arrangement of PPC regions, we selected superior IPS because it is a higher PPC region than inferior IPS. Our quick visual inspection indicated that VOT showed more tolerance than LOT; thus, to make a fair comparison between higher regions in PPC and OTC, we chose VOT rather than LOT. The decoding drop from within- to cross-position decoding was larger in V1 than in either VOT or superior IPS (ts > 6.3, Ps < 0.001, corrected). Critically, there was no difference between VOT and superior IPS (t[6] = 1.32, P = 0.96, corrected). Higher-level occipitotemporal and posterior parietal regions thus showed comparable tolerance to changes in position, with both showing greater tolerance than early visual area V1.
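The comparison of decoding drops among the 3 representative regions amounts to paired t-tests across participants. A minimal sketch of the t statistic is below (names hypothetical). With 7 participants it has 6 degrees of freedom, matching the reported t[6]. A two-tailed P value could then be obtained from the t distribution (for example, with `scipy.stats.ttest_rel`) and passed through the Benjamini–Hochberg correction across the 3 comparisons.

```python
import math

import numpy as np


def decoding_drop(within, cross):
    """Per-participant loss of accuracy from within- to cross-condition decoding."""
    return np.asarray(within, dtype=float) - np.asarray(cross, dtype=float)


def paired_t(a, b):
    """Paired-samples t statistic and degrees of freedom for the differences a - b."""
    diff = np.asarray(a, dtype=float) - np.asarray(b, dtype=float)
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / math.sqrt(n))
    return float(t), n - 1
```

Comparing VOT with superior IPS would then be `paired_t(decoding_drop(vot_within, vot_cross), decoding_drop(ips_within, ips_cross))`, where the 4 per-participant accuracy arrays are hypothetical inputs.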

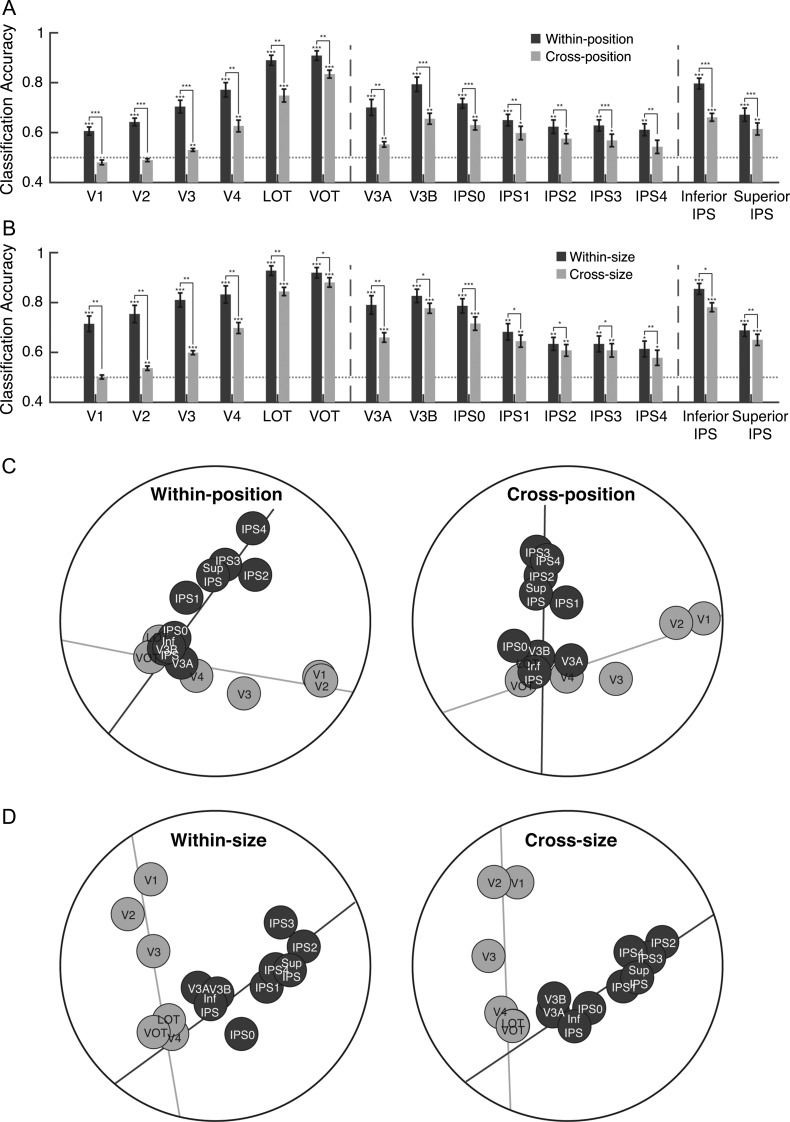

Figure 4.

Results of Experiments 2 and 3. (A) Comparing within- and cross-position object category decoding in Experiment 2. The dark gray bars show category decoding performance when the classifier was trained and tested on object categories shown at the same position, and the light gray bars show category decoding performance when the classifier was trained at one position and tested at the other. (B) Comparing within- and cross-size object category decoding in Experiment 3. The dark and light gray bars show category decoding performance for within- and cross-size category decoding, respectively. The left vertical dashed line separates OTC regions from PPC regions, and the right vertical dashed line separates functionally defined PPC regions from topographically defined PPC regions. Error bars indicate standard errors of the means. The horizontal dashed line represents chance-level performance at 0.5. Asterisks show corrected P values (***P < 0.001, **P < 0.01, *P < 0.05, †P < 0.1). (C) MDS plots of the region-wise similarity matrices for Experiment 2 for within-position (left panel) and cross-position (right panel) decoding. The 2-pathway structure (i.e., the light and dark gray total least squares regression lines through the OTC and PPC regions, respectively) accounted for 77% and 69% of the total variance in region-wise differences in visual object representation for the 2 types of decoding, respectively. (D) MDS plots of the region-wise similarity matrices for Experiment 3 for within-size (left panel) and cross-size (right panel) decoding. The 2-pathway structure accounted for 64% and 81% of the total variance in region-wise differences in visual object representation for the 2 types of decoding, respectively. Overall, the 2-pathway structure remains stable across variations in the position and size of objects.

Similar results were found for size tolerance in Experiment 3 (Fig. 4B). With the exception of V1, which showed within-size (t[6] = 6.87, P < 0.001, corrected) but no cross-size object category decoding (t[6] = 0.17, P = 0.87, corrected), both types of decoding were significant in all the other regions examined (ts > 2.57, Ps < 0.05, corrected). All regions also showed higher within- than cross-size decoding (ts > 2.7, Ps < 0.05, corrected). Among the 3 representative regions, the decoding drop was similar for superior IPS and VOT (t[6] = 0.06, P = 0.96, corrected), with both being smaller than that for V1 (ts > 5.47, Ps < 0.01, corrected). Object representations in both higher occipitotemporal and posterior parietal regions thus showed similar tolerance to changes in position and size, indicating the existence of more abstract object representations, as opposed to low-level visual representations, in these regions.

MDS analyses of the region-wise similarity matrices from these 2 experiments again revealed a 2-pathway structure, with MDS plots qualitatively similar to those of Experiment 1 regardless of whether the within- or cross-position/size classification matrices were used to calculate region-wise similarities (Fig. 4C, D). The split-half reliabilities of the region-wise similarity matrices for within- and cross-classification were 0.67 and 0.61 in the position experiment and 0.67 and 0.65 in the size experiment (the highest 95% CI upper bound of the bootstrapped null distributions across all conditions was 0.33). The region-wise similarity matrices from within- and cross-classification were highly correlated, at 0.78 and 0.85 (bootstrapped null distribution 95% CI [−0.25, 0.34] and [−0.23, 0.27]) for the size and position experiments, respectively. The 2 MDS dimensions explained (for within-/cross-classification) 80/73% and 67/84% of the variance of the full correlation matrix in the position and size experiments, respectively. The 2 lines explained 96/94% and 92/97% of the variance of the 2D MDS space and 77/69% and 64/81% of the variance of the full correlation matrix for the position and size experiments, respectively. These results not only reaffirmed the 2-pathway structure observed in Experiment 1 but also indicated that this structure remains stable across variations in object position and size.

The Contribution of Special Natural Object Categories

Higher OTC regions contain areas with selectivity for special natural object categories and features such as faces, body parts, scenes, animacy, and size (Kanwisher et al. 1997; Epstein and Kanwisher 1998; Chao et al. 1999; Downing et al. 2001; Kriegeskorte et al. 2008; Martin and Weisberg 2003; Konkle and Oliva 2012; Konkle and Caramazza 2013). Perhaps the emergence of this progressively rich natural object landscape along OTC shaped the representational structure of the OTC pathway, making it distinct from that of the PPC pathway. To examine this possibility, in Experiment 4 participants viewed, in the same test session, 9 novel shape categories adopted from Op de Beeck et al. (2008; see Fig. 5A) as well as the 8 natural object categories used earlier. Overall decoding was significant for both types of categories in all the regions examined (ts > 4.57, Ps < 0.01, corrected). Except in IPS1–4, where no difference was found (ts < 2.22, Ps > 0.09, corrected), decoding was lower for the artificial than for the natural categories in all the other regions examined (ts > 3.26, Ps < 0.05, corrected), likely reflecting greater similarity among the artificial than among the natural categories (Fig. 5B).

Despite this difference in decoding, the 2-pathway structure was present in the representational structures of both types of categories (Fig. 5C). The region-wise correlation matrices for the artificial and natural categories had a split half reliability of 0.54 (bootstrapped null distribution 95% CI [−0.22,0.24]) and 0.66 (bootstrapped null distribution 95% CI [−0.25,0.26]) across participants, respectively, and were highly correlated (r = 0.81, bootstrapped null distribution 95% CI [−0.21, 0.26]). The 2 dimensions in the MDS plots explained 79% and 71% of the total variance, the 2 fitted straight lines (Fig. 5C) explained 84% and 89% of the variance of the 2D space, and 64% and 64% of the variance of the full correlation matrix for the artificial and natural stimuli, respectively. Thus, the emergence of the 2-pathway structure in the representational space was not a result of the special natural object categories used.

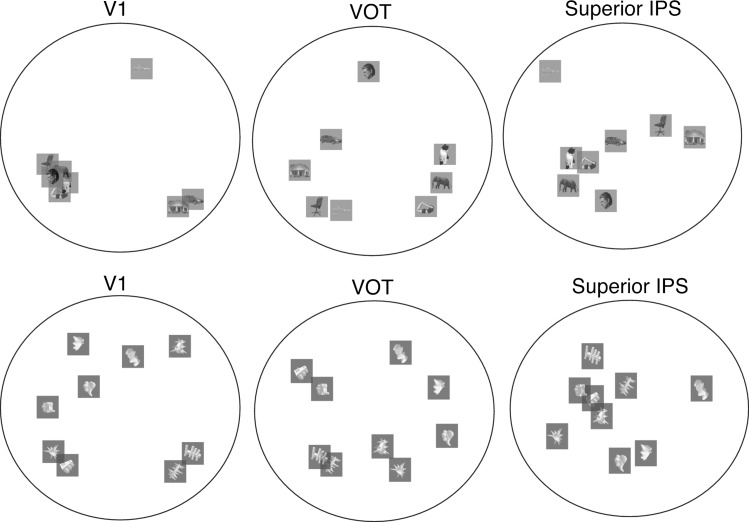

The presence of the 2-pathway structure indicates that object representations differ between PPC and OTC. Affirming this finding, in MDS plots depicting the representational structure of the 8 natural object categories and the 9 artificial object categories in the 3 representative brain regions (i.e., V1, VOT, and superior IPS; see Fig. 6), the distribution of the categories appeared to differ among these 3 brain regions. Because we did not systematically manipulate feature similarity among the categories used, it is difficult to draw firm conclusions regarding the exact neural representation schemes used in each region. Future studies are needed to decipher the precise neural representational differences among PPC, OTC, and early visual regions.

Figure 6.

MDS plots depicting the representational structure of the 8 natural object categories and the 9 artificial object categories used in Experiment 4 in the 3 representative brain regions, V1, VOT, and superior IPS. Consistent with the region-wise MDS plots shown in Figure 5, the representational structures for these object categories differ among these 3 brain regions.

The Contribution of Task-Relevant Visual Representation

PPC has long been associated with attention-related processing (Corbetta and Shulman 2011; Ptak 2012; Shomstein and Gottlieb 2016) and shows greater representations of task and task-relevant visual features than OTC (Xu and Jeong 2015; Bracci et al. 2017; Jeong and Xu 2017; Vaziri-Pashkam and Xu 2017). Could this difference contribute to the presence of the PPC pathway in the representational space? In a previous study (Vaziri-Pashkam and Xu 2017) using the same 8 natural object categories, we presented colors along with object shapes and manipulated, across 3 experiments, how color and shape were integrated: partially overlapping, overlapping but on separate objects, or fully integrated (Fig. 7). We found that object category representation was stronger when object shape rather than color was task relevant; this task effect was greater in PPC than in OTC but decreased as task-relevant and task-irrelevant features became more integrated, owing to object-based encoding. Here, we compared the region-wise similarity matrices for the 2 tasks in these 3 experiments (referred to here as Experiments 5–7), which were not examined in our previous study. Regardless of the amount of task modulation in PPC, both the region-wise similarity matrix and the 2-pathway separation were stable across tasks in all 3 experiments. Specifically, the correlations of the region-wise similarity matrices between the shape and color tasks were 0.79, 0.86, and 0.74 (bootstrapped null distribution 95% CI [−0.24,0.29], [−0.20,0.24], and [−0.23, 0.28]) for Experiments 5–7, respectively, indicating highly similar representational structures across the 2 tasks (the split-half reliabilities of the similarity matrices for the shape/color tasks were 0.68/0.71, 0.53/0.73, and 0.69/0.68 for Experiments 5–7, respectively, with the highest 95% CI upper bound of the bootstrapped null distributions being 0.27/0.3). MDS and the 2-line regression analyses showed very similar structures for the 2 tasks across the 3 experiments (Fig. 7). In Experiment 5, the 2 dimensions in the MDS plots explained 65% and 62% of the total variance of the region-wise similarity matrix in the shape and color tasks, respectively. These values were 66% and 78% in Experiment 6, and 68% and 68% in Experiment 7. The 2 fitted lines (Fig. 7) explained 94% and 93% of the variance in the 2D MDS space for the shape and color tasks in Experiment 5. These values were 97% and 84% in Experiment 6, and 92% and 92% in Experiment 7. The 2 fitted lines explained 63% and 58% of the total variance of the region-wise similarity matrix in the shape and color tasks in Experiment 5. These values were 65% and 63% in Experiment 6, and 61% and 63% in Experiment 7. Overall, no systematic variations were observed across experiments or tasks. These results indicate that even though object category representations are degraded when attention is diverted away from object shape (Vaziri-Pashkam and Xu 2017), the representational structure within each brain region remains stable across tasks.

Line Fitting in Higher-Dimensional Space

Our main analyses in this series of experiments involved plotting the first 2 dimensions of the region-wise similarity matrix using MDS and then performing line fitting. Plotting the 2D MDS space was convenient for visualization but might not have been the best approach, as line fitting in higher-dimensional space could potentially capture more of the representational variance among brain regions. To test this possibility, in addition to line fitting in the 2D MDS space, we performed line fitting in higher-dimensional spaces, up to a maximal number of dimensions that captured close to 100% of the total variance in the region-wise similarity matrix. As shown in Supplemental Figure S4, although adding more MDS dimensions increased the amount of total variance explained, the amount of variance explained by the 2 lines did not steadily increase. On the contrary, line fitting in 2D explained about the same or a greater amount of variance than line fitting in higher-dimensional space. This suggests that the best-fit lines likely intersect even in higher-dimensional space; because 2 intersecting lines define a plane, our 2D MDS analysis was sufficient to capture this plane. Adding dimensions was thus unnecessary. These results affirm our main conclusion that a 2-pathway structure exists in the region-wise representational similarity space.

Discussion

Recent studies have reported the existence of rich nonspatial visual object representations in PPC, similar to those found in OTC. Focusing on PPC regions along the IPS that have previously been shown to exhibit robust visual encoding, here we used a bottom-up data-driven approach to show that, even though robust object category representations exist in both OTC and PPC regions, there is still a large separation among these regions in the representational space, with occipitotemporal regions lining up hierarchically along an OTC pathway and posterior parietal regions lining up hierarchically along an orthogonal PPC pathway. This information-driven 2-pathway representational structure was independently replicated 10 times in the present study (Figs 3C, 4C, D, 5C, 7), accounting for 58–81% of the total variance of region-wise differences in visual representation. The presence of the 2 pathways was not driven by a difference between higher OTC and PPC regions in tolerance to changes in features such as position and size. The 2-pathway distinction was present for both natural and artificial object categories and was resilient to variations in task and attention.

The presence of the 2 pathways in our results is unlikely to have been driven by fMRI noise correlations between adjacent brain regions, because areas V4 and V3A, which are not adjacent on the cortical surface, appear adjacent to each other in the representational space (Fig. 3C, left panel). This likely reflects a shared representation enabled by the recently rediscovered vertical occipital fasciculus, which directly connects these 2 regions (Yeatman et al. 2014; Takemura et al. 2016). Nor could the 2-pathway distinction be driven by higher category decoding accuracy in OTC than in PPC (Fig. 3A), as this difference would have been normalized when the category similarity matrices were correlated between brain regions. Moreover, even though overall decoding was lower and more similar among the brain regions for the artificial object categories, we still observed a similar 2-pathway separation.
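The normalization argument can be illustrated numerically. In the sketch below (all values synthetic), one region's similarity vector over the 28 category pairs is shrunk toward chance, mimicking uniformly lower decoding, and its Pearson correlation with another region is unchanged. The illustration assumes the accuracy difference acts as an overall offset and scaling; differences that alter the pattern across category pairs would not be removed this way.

```python
import numpy as np

rng = np.random.default_rng(1)
# Hypothetical similarity vectors (pairwise accuracies for 28 category pairs).
region_a = 0.5 + 0.3 * rng.random(28)
region_b = region_a + rng.normal(scale=0.02, size=28)
# Uniformly weaker decoding in region B: shrink toward chance, keep the pattern.
region_b_low = 0.5 + 0.4 * (region_b - 0.5)

r_original = np.corrcoef(region_a, region_b)[0, 1]
r_lowered = np.corrcoef(region_a, region_b_low)[0, 1]
# Pearson r is invariant to a positive affine transform, so the 2 values match.
print(r_original, r_lowered)
```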

These results show that, whereas regions extending further into OTC follow a more or less continuous visual information representation trajectory from early visual areas, there is a change in information representation along PPC regions, with the most posterior parietal regions located away from both the early visual and the ventral OTC regions in the region-wise MDS plot. Thus, despite the presence of object representations throughout posterior regions, the representations formed in higher PPC regions are distinct from those in both early visual and higher OTC regions.

Although OTC visual representations become progressively more tolerant to changes in low-level visual features from posterior to anterior regions, a similar amount of tolerance to position and size changes was seen in higher OTC and PPC regions (see also Konen and Kastner 2008, who found comparable tolerance to size and viewpoint changes in an fMRI adaptation study). This indicates that the distinction between the 2 pathways observed here was not due to OTC representations becoming progressively more abstract than those of PPC, or to PPC representations being more position bound than those of OTC.

There is an increasingly rich natural object category-based representation going from posterior to anterior OTC, with higher occipitotemporal regions showing selectivity for special natural object categories and features such as faces, body parts, scenes, animacy, and size (Chao et al. 1999; Downing et al. 2001; Epstein and Kanwisher 1998; Kanwisher et al. 1997; Konkle and Oliva 2012; Konkle and Caramazza 2013; Kriegeskorte et al. 2008; Martin and Weisberg 2003). Nonetheless, this does not seem to have contributed to the results observed here, as the 2-pathway distinction was preserved even when artificial object categories were used. Thus, the 2-pathway distinction likely reflects a more fundamental difference in the neural coding schemes used by OTC and PPC, rather than preferences for special natural object categories or features in OTC.

Although attention and task-relevant visual information processing have been strongly associated with PPC (Toth and Assad 2002; Stoet and Snyder 2004; Gottlieb 2007; Xu and Jeong 2015; Bracci et al. 2017; Jeong and Xu 2017; Vaziri-Pashkam and Xu 2017), our task manipulation revealed that the 2-pathway distinction remained unchanged whether or not object shape was attended and task relevant. Our task manipulation is unlikely to have been ineffective, as task effects were readily present in the overall object category decoding accuracy and in a number of other measures (Vaziri-Pashkam and Xu 2017). The present set of studies addressed an orthogonal question concerning the nature of the representations, namely whether objects are represented similarly in the 2 sets of brain regions. This is independent of whether representations are modulated by task to a similar or different extent in these regions. Thus, in addition to PPC’s involvement in attention and task-relevant visual information processing, the 2-pathway distinction suggests that visual information is likely coded fundamentally differently in PPC than in OTC. That said, it would be interesting for future studies to include tasks beyond visual processing, such as action planning or semantic judgment, and to test whether the 2-pathway structure is still present in those tasks. Because such tasks require visual processing first, the 2-pathway structure would likely be present whenever visual information must be processed, regardless of the final goal or task. Further studies are needed to verify this prediction.

Zachariou et al. (2016) recently reported that a position-sensitive region in parietal cortex could contribute to the configural processing of facial features. However, face configural processing has also been reported in OTC (Liu et al. 2010; Zhang et al. 2015), and Zachariou et al. (2016) observed strong functional connectivity between OTC and PPC. It is thus unlikely that the 2-pathway distinction in representational space observed here is driven by configural processing being present in PPC but not in OTC.

It has been argued that PPC processes visual input for action preparation (Goodale and Milner 1992). Representations of grasping actions and graspable objects, however, have typically activated a region in the anterior intraparietal sulcus (AIP) (Sakata and Taira 1994; Binkofski et al. 1998; Chao and Martin 2000; Culham et al. 2003; Martin and Weisberg 2003; Janssen and Scherberger 2015), anterior to the parietal regions examined here. Nevertheless, posterior IPS regions are thought to provide input to AIP and to mediate action planning between early visual areas and AIP (Culham and Valyear 2006). Thus, it could be argued that object features relevant for grasping or action planning would be preferentially represented in PPC, making representations in PPC differ from those in OTC. Along this line, Fabbri et al. (2016) reported a mixed representation of visual and motor properties of objects in PPC during grasping, and Bracci and Op de Beeck (2016) suggested that PPC and OTC differ in processing object action properties. However, our experiments never required planning a category-specific action, and the one-back task required identical action planning across all categories. Moreover, similar results were observed for the artificial shape categories, which have no prior stored action representation. In addition, the action-biased PPC region reported by Bracci and Op de Beeck (2016) was located largely in the superior parietal lobule, likely corresponding to the parietal reach region, and medial to the IPS visual regions examined here. Thus, a strictly action-based account cannot explain the 2-pathway separation observed here.

Together with other recent findings, the presence of rich nonspatial visual representations in both OTC and PPC argues against the original ventral-what and dorsal-where/how 2-pathway characterization of posterior brain regions (Mishkin et al. 1983; Goodale and Milner 1992). Using a bottom-up, information-driven approach, we found a large separation between OTC and PPC regions even for the processing of action-independent nonspatial object information. It is important to keep in mind that the 2 pathways described here reflect the representational content of these regions rather than their anatomical locations on the cortex. Given the numerous feedforward and feedback connections among brain regions, anatomical location may not accurately capture the representational similarity between brain regions.
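One common way to compare regions by representational content alone, sketched below under simplifying assumptions, is a second-order comparison: each region is summarized by its RDM across object categories, and regions are compared by correlating their RDMs. The region names and RDM values are hypothetical, and the sketch is not claimed to reproduce the exact procedure used in these studies. No anatomical coordinates enter the computation, so regions group together only when their representational geometries agree.

```python
import math
from itertools import combinations


def pearson(x, y):
    """Pearson correlation between two equal-length vectors."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    dx = [v - mx for v in x]
    dy = [v - my for v in y]
    num = sum(a * b for a, b in zip(dx, dy))
    den = math.sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    return num / den


# Hypothetical RDMs: the 6 pairwise dissimilarities among 4 object categories,
# one vector per region. Values are invented for illustration only.
region_rdms = {
    "region_A": [0.9, 0.4, 0.7, 0.5, 0.8, 0.3],
    "region_B": [0.8, 0.5, 0.7, 0.4, 0.9, 0.2],
    "region_C": [0.3, 0.8, 0.5, 0.9, 0.2, 0.7],
    "region_D": [0.4, 0.9, 0.4, 0.8, 0.3, 0.6],
}
names = list(region_rdms)


def region_distance(r1, r2):
    """Second-order distance: 1 - correlation between two regions' RDMs."""
    return 1 - pearson(region_rdms[r1], region_rdms[r2])


def nearest(name):
    """Region whose representational geometry is most similar to `name`."""
    return min((n for n in names if n != name),
               key=lambda n: region_distance(name, n))


for a, b in combinations(names, 2):
    print(f"{a} vs {b}: {region_distance(a, b):.2f}")
for n in names:
    print(f"{n} groups with {nearest(n)}")
```

A region-by-region distance matrix of this kind can then be submitted to a dimensionality-reduction method such as multidimensional scaling to visualize how regions arrange in representational space.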

While the present results do not reveal the precise neural coding schemes in OTC and PPC that would account for the representational difference between these regions, they nevertheless unveil the presence of the 2 pathways using an entirely data-driven approach and rule out several factors that do not contribute to this difference. These results lay an important foundation for future work aimed at identifying these coding schemes. Recent neurophysiology studies have revealed that whereas OTC neurons use fixed-selectivity coding, prefrontal and PPC neurons can use mixed-selectivity coding (Rigotti et al. 2013; Parthasarathy et al. 2017; Zhang et al. 2017). The difference between fixed- and mixed-selectivity coding could contribute to the difference in neural coding schemes between OTC and PPC. Alternatively, PPC neurons could be selective for a different set of features than OTC neurons. Further work is needed to investigate these possibilities thoroughly.
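A toy calculation can illustrate one reason the fixed- versus mixed-selectivity distinction matters, following the dimensionality argument of Rigotti et al. (2013). Assume, hypothetically, 2 binary task variables `a` and `b` (4 conditions) and a small population of units. With fixed (pure) selectivity, each unit responds to only one variable, so the population responses to the 4 conditions span at most 3 dimensions. Adding a single unit with nonlinear mixed selectivity, here responding only to the conjunction of `a` and `b`, raises this to 4. The response functions `fixed_population` and `mixed_population` are invented for illustration; the rank is computed exactly with rational arithmetic.

```python
from fractions import Fraction
from itertools import product


def matrix_rank(rows):
    """Exact matrix rank via Gaussian elimination over the rationals."""
    m = [[Fraction(x) for x in row] for row in rows]
    rank = 0
    n_cols = len(m[0]) if m else 0
    for col in range(n_cols):
        # Find a row at or below the current rank with a nonzero entry.
        pivot = next((r for r in range(rank, len(m)) if m[r][col] != 0), None)
        if pivot is None:
            continue
        m[rank], m[pivot] = m[pivot], m[rank]
        # Eliminate this column from every other row.
        for r in range(len(m)):
            if r != rank and m[r][col] != 0:
                factor = m[r][col] / m[rank][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[rank])]
        rank += 1
    return rank


# The 4 conditions: every combination of two binary variables (a, b).
CONDITIONS = list(product([0, 1], repeat=2))


def fixed_population(a, b):
    """Hypothetical units, each tuned to only one variable (pure selectivity)."""
    return [a, b, 1 - a, 1 - b]


def mixed_population(a, b):
    """Same units plus one unit that fires only for the conjunction a AND b."""
    return fixed_population(a, b) + [a * b]


fixed_dim = matrix_rank([fixed_population(a, b) for a, b in CONDITIONS])
mixed_dim = matrix_rank([mixed_population(a, b) for a, b in CONDITIONS])
print("fixed selectivity dimensionality:", fixed_dim)
print("mixed selectivity dimensionality:", mixed_dim)
```

Higher dimensionality means that more readouts of the task variables (for example, the exclusive-or of `a` and `b`) become linearly separable, which is the computational advantage Rigotti et al. (2013) attribute to mixed selectivity.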

In summary, with a bottom-up information-driven approach, the present results demonstrate that visual representations in PPC are not mere copies of those in OTC and that visual information is processed in 2 separate hierarchical pathways in the human brain, reflecting different representational spaces for visual information in PPC and OTC. Although more research is needed to fully understand the precise neural coding schemes of these 2 pathways, the separation of visual processing into these 2 pathways will serve as a useful framework to further our understanding of visual information representation in posterior brain regions.

Supplementary Material

Footnotes

We thank Katherine Bettencourt for her help in selecting parietal topographic maps, Hans Op de Beeck for kindly sharing his computer-generated stimulus set with us, and Michael Cohen for sharing some of the real object images with us. Conflict of Interest: None declared.

Funding

National Institutes of Health (grant 1R01EY022355 to Y.X.).

References

- Benjamini Y, Hochberg Y. 1995. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc B Methodol. 57:289–300.

- Bettencourt KC, Xu Y. 2016. Decoding the content of visual short-term memory under distraction in occipital and parietal areas. Nat Neurosci. 19:150–157.

- Binkofski F, Dohle C, Posse S, Stephan KM, Hefter H, Seitz RJ, Freund HJ. 1998. Human anterior intraparietal area subserves prehension: a combined lesion and functional MRI activation study. Neurology. 50:1253–1259.

- Bracci S, Daniels N, Op de Beeck HP. 2017. Task context overrules object- and category-related representational content in the human parietal cortex. Cereb Cortex. 27:310–321.

- Bracci S, Op de Beeck HP. 2016. Dissociations and associations between shape and category representations in the two visual pathways. J Neurosci. 36:432–444.

- Brainard DH. 1997. The psychophysics toolbox. Spat Vis. 10:433–436.

- Bressler DW, Silver MA. 2010. Spatial attention improves reliability of fMRI retinotopic mapping signals in occipital and parietal cortex. NeuroImage. 53:526–533.

- Chang CC, Lin CJ. 2011. LIBSVM: a library for support vector machines. ACM Trans Intell Syst Technol. 2(3):27.

- Chao LL, Haxby JV, Martin A. 1999. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 2(10):913–919.

- Chao LL, Martin A. 2000. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 12:478–484.

- Christophel TB, Hebart MN, Haynes J-D. 2012. Decoding the contents of visual short-term memory from human visual and parietal cortex. J Neurosci. 32:12983–12989.

- Corbetta M, Shulman GL. 2011. Spatial neglect and attention networks. Annu Rev Neurosci. 34:569–599.

- Cowan N. 2001. Metatheory of storage capacity limits. Behav Brain Sci. 24:154–185.

- Culham JC, Danckert SL, Souza JFXD, Gati JS, Menon RS, Goodale MA. 2003. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res. 153:180–189.

- Culham JC, Valyear KF. 2006. Human parietal cortex in action. Curr Opin Neurobiol. 16:205–212.

- Dale AM, Fischl B, Sereno MI. 1999. Cortical surface-based analysis. I. Segmentation and surface reconstruction. NeuroImage. 9:179–194.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. 2001. A cortical area selective for visual processing of the human body. Science. 293:2470–2473.

- Epstein R, Kanwisher N. 1998. A cortical representation of the local visual environment. Nature. 392:598–601.

- Ester EF, Sprague TC, Serences JT. 2015. Parietal and frontal cortex encode stimulus-specific mnemonic representations during visual working memory. Neuron. 87:893–905.

- Fabbri S, Stubbs KM, Cusack R, Culham JC. 2016. Disentangling representations of object and grasp properties in the human brain. J Neurosci. 36:7648–7662.

- Farah MJ. 2004. Visual agnosia. Cambridge, Mass.: MIT Press.

- Fitzgerald JK, Freedman DJ, Assad JA. 2011. Generalized associative representations in parietal cortex. Nat Neurosci. 14(8):1075–1079.

- Freud E, Plaut DC, Behrmann M. 2016. ‘What’ is happening in the dorsal visual pathway. Trends Cogn Sci. 20:773–784.

- Goodale MA, Milner AD. 1992. Separate visual pathways for perception and action. Trends Neurosci. 15:20–25.

- Goodale MA, Milner AD, Jakobson LS, Carey DP. 1991. A neurological dissociation between perceiving objects and grasping them. Nature. 349:154–156.

- Gottlieb J. 2007. From thought to action: the parietal cortex as a bridge between perception, action, and cognition. Neuron. 53:9–16.

- Grill-Spector K, Kushnir T, Hendler T, Malach R. 2000. The dynamics of object-selective activation correlate with recognition performance in humans. Nat Neurosci. 3:837–843.

- Grill-Spector K, Kushnir T, Hendler T, Edelman S, Itzchak Y, Malach R. 1998. A sequence of object-processing stages revealed by fMRI in the human occipital lobe. Hum Brain Mapp. 6:316–328.

- Hasson U, Levy I, Behrmann M, Hendler T, Malach R. 2002. Eccentricity bias as an organizing principle for human high-order object areas. Neuron. 34:479–490.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. 2001. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 293:2425–2430.

- Hong H, Yamins DLK, Majaj NJ, DiCarlo JJ. 2016. Explicit information for category-orthogonal object properties increases along the ventral stream. Nat Neurosci. 19:613–622.

- Janssen P, Scherberger H. 2015. Visual guidance in control of grasping. Annu Rev Neurosci. 38:1–18.

- Janssen P, Srivastava S, Ombelet S, Orban GA. 2008. Coding of shape and position in macaque lateral intraparietal area. J Neurosci. 28:6679–6690.

- Jeong SK, Xu Y. 2016. Behaviorally relevant abstract object identity representation in the human parietal cortex. J Neurosci. 36:1607–1619.

- Jeong SK, Xu Y. 2017. Task-context-dependent linear representation of multiple visual objects in human parietal cortex. J Cogn Neurosci. 29:1–12.

- Kamitani Y, Tong F. 2005. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 8(5):679–685.

- Kanwisher N, McDermott J, Chun MM. 1997. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 17:4302–4311.

- Kastner S, Chen Q, Jeong SK, Mruczek REB. 2017. A brief comparative review of primate posterior parietal cortex: a novel hypothesis on the human toolmaker. Neuropsychologia. 105:123–134.

- Konen CS, Kastner S. 2008. Two hierarchically organized neural systems for object information in human visual cortex. Nat Neurosci. 11(2):224–231.

- Konkle T, Caramazza A. 2013. Tripartite organization of the ventral stream by animacy and object size. J Neurosci. 33:10235–10242.

- Konkle T, Oliva A. 2012. A real-world size organization of object responses in occipitotemporal cortex. Neuron. 74:1114–1124.

- Kourtzi Z, Kanwisher N. 2000. Cortical regions involved in perceiving object shape. J Neurosci. 20:3310–3318.

- Kravitz D, Saleem K, Baker C, Mishkin M. 2011. A new neural framework for visuospatial processing. J Vis. 11:319.