Abstract

Background

The purpose of this review is to depict current research and impact of artificial intelligence/machine learning (AI/ML) algorithms on dialysis and kidney transplantation. Published studies were presented from two points of view: What medical aspects were covered? What AI/ML algorithms have been used?

Methods

We searched four electronic databases for studies that used AI/ML in hemodialysis (HD), peritoneal dialysis (PD), and kidney transplantation (KT). Sixty-nine studies were split into three categories: AI/ML and HD, PD, and KT, respectively. We identified 43 trials in the first group, 8 in the second, and 18 in the third. Then, studies were classified according to the type of algorithm.

Results

AI and HD trials covered: (a) dialysis service management, (b) dialysis procedure, (c) anemia management, (d) hormonal/dietary issues, and (e) arteriovenous fistula assessment. PD studies were divided into (a) peritoneal technique issues, (b) infections, and (c) cardiovascular event prediction. AI in transplantation studies were allocated into (a) management systems (ML used as pretransplant organ-matching tools), (b) predicting graft rejection, (c) tacrolimus therapy modulation, and (d) dietary issues.

Conclusions

Although guidelines are reluctant to recommend AI implementation in daily practice, there is plenty of evidence that AI/ML algorithms can predict volumes, Kt/V, and hypotension or cardiovascular events during dialysis better than nephrologists. Altogether, these trials report a robust impact of AI/ML on quality of life and survival in G5D/T patients. In the coming years, we will probably witness the emergence of AI/ML devices that facilitate the management of dialysis patients, thus increasing quality of life and survival.

1. Introduction

Artificial intelligence (AI) solutions are currently present in all medical and nonmedical fields. New algorithms have evolved to handle complex medical situations where the medical community has reached a plateau [1]. Medical registries received machine learning (ML) solutions for a better prediction of events that beat human accuracy [2]. Since AI/ML “…have the potential to adapt and optimize device performance in real-time to continuously improve health care for patients…,” the US Food and Drug Administration released this year a regulatory framework for modifications in the AI/ML-based software as a medical device [3].

The same board has approved in the last year at least 15 AI/deep learning platforms involved in the medical field (e.g., for atrial fibrillation detection, CT brain bleed diagnosis, coronary calcium scoring, paramedic stroke diagnosis, or breast density via mammography) [4].

In the last 15 years, numerous issues and complications generated by end-stage renal disease requiring dialysis [5] (chronic kidney disease (CKD) stage G5D), by the technique of dialysis itself, or by kidney transplantation (CKD stage G5T) [6] received initial inputs from AI algorithms. However, the implementation of AI solutions in the dialysis field is still in its infancy. None of the FDA-approved successes reported above concerns renal replacement therapy. Given the significant influence of AI on healthcare through medical image processing, smart robotics in surgery, or the impact of the Apple Watch on atrial fibrillation detection, the nephrology community recently raised two questions: “Can this success be exported to dialysis? Is it possible to design and develop smart dialysis devices?” [1].

If we are to be realistic, most end-stage renal disease (ESRD) patients (those who cannot benefit from kidney transplantation) are reliant on technology: currently, without a dialysis machine (the so-called “artificial kidney”), it is almost impossible to stay alive. Given the desire to create safer and more physiological devices, the nephrology community and patients could clearly benefit from AI/ML solutions powering current machines or improved versions. In other words, enhancing the functionality of the “artificial kidney” with “artificial intelligence” could constitute the next significant step toward better management of G5D patients.

In this regard, probably one of the most suggestive and advanced examples was published in 2019: a multiple-endpoint model was developed to predict session-specific Kt/V, fluid volume removal, heart rate, and blood pressure based on dialysis-patient characteristics, historic hemodynamic responses, and dialysis-related prescriptions [7]. This research opens the door to other AI studies in ESRD patients in which an ML-powered machine would continuously and autonomously change its parameters (temperature, dialysate electrolyte composition, duration, and ultrafiltration rate) in order to avoid one of the most vexing situations in dialysis (e.g., hypotension). Also, in this future framework, nephrology would indeed be “personalized medicine,” since no two dialysis sessions would be the same.

Our review's purpose is to depict the current research and impact of AI/ML algorithms on renal replacement therapy (hemodialysis, peritoneal dialysis, and kidney transplantation). We intend to summarize all studies published on this topic, presenting data from two points of view: (a) What medical aspects were covered? (b) What AI/ML algorithms have been used?

2. Materials and Methods

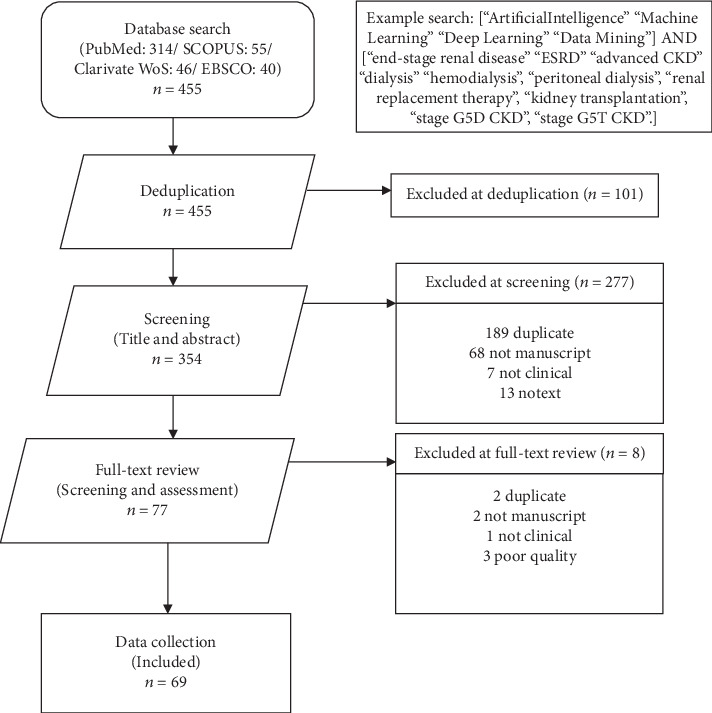

Our review adheres as strictly as possible to the PRISMA guidelines. The overall workflow is shown in Figure 1 and described below.

Figure 1.

PRISMA flowchart for including articles in our study.

2.1. Search Strategy

We searched the electronic databases of PubMed, SCOPUS, Web of Science, and EBSCO from their earliest dates until August 2019 for studies using AI/ML algorithms in dialysis and kidney transplantation. The search terms were “artificial intelligence”, “machine learning”, “deep learning”, “data mining” AND “end-stage renal disease”, “ESRD”, “advanced CKD”, “dialysis”, “hemodialysis”, “peritoneal dialysis”, “renal replacement therapy”, “kidney transplantation”, “stage G5D CKD”, “stage G5T CKD”. The reference sections of relevant articles were also searched manually for additional publications (Figure 1). RCTs and observational studies, including prospective or retrospective cohort studies, reviews, meta-analyses, and guidelines, were included if they referred to AI in G5D/T CKD.

Since the research field is not too broad, we decided to include published conference proceedings. Duplicates were excluded both manually and through reference manager software. Two independent reviewers selected studies by screening the title and abstract. During the screening stage, 277 titles were excluded from the 354 deduplicated papers, leaving 77 papers. In the next phase, essential data were extracted independently from the full articles that conformed to the selection criteria, and the results were sorted. Discrepancies were resolved by discussion and consensus. Finally, 69 studies met the inclusion criteria (see Supplemental Tables 1–3).

2.2. Clinical Approach (according to the Clinical Topics)

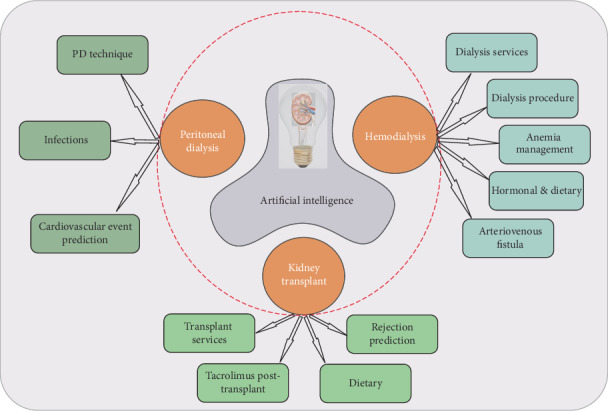

The sixty-nine included studies were split into three categories: AI and hemodialysis (HD), AI and peritoneal dialysis (PD), and AI and kidney transplantation (KT), respectively (Figure 2). There were 43 trials on AI in HD, eight studies in PD, and 18 in KT.

Figure 2.

The involvement of AI in hemodialysis, peritoneal dialysis, and kidney transplant, respectively.

Moreover, each one of the three main categories was further divided into subsections (Table 1). Trials dealing with AI and HD covered five issues: (a) dialysis center/healthcare management, (b) dialysis technique and procedure, (c) anemia management, (d) hormonal/dietary issues, and (e) arteriovenous fistula assessment. Regarding PD, the studies were divided into three subsections: (a) peritoneal technique issues, (b) infections, and (c) cardiovascular event prediction. Finally, studies dealing with AI in KT were allocated into four categories: (a) healthcare management systems, (b) predicting graft rejection, (c) tacrolimus therapy modulation, and (d) dietary issues.

Table 1.

AI studies involved in HD, PD, and KT, respectively.

| Stage G5 CKD | Clinical issue | No. of studies | Studies | Total per modality | Ref. |
|---|---|---|---|---|---|
| HD | | | | 43 | |
| a | Dialysis services | 7 | Bellazzi 2005, Raghavan 2005, Tangri 2006, Jacob 2010, Titapiccolo 2013, Saadat 2017, Usvyat 2018 | | [13–17, 74, 75] |
| b | Dialysis procedure | 14 | Nordio 1994, Nordio 1995, Guh 1998, Akl 2001, Goldfarb-Rumyantzev 2003, Gabutti 2004, Chiu 2005, Fernandez 2005, Mancini 2007, Cadena 2010, Azar 2011, Niel 2018, Barbieri 2019, Hueso 2019 | | [20–23, 76–78], [7, 18, 19, 25, 79–81] |
| c | Anemia management | 13 | Martin Guerrero 2003, Martin Guerrero 2003, Gabutti 2006, Gaweda 2008, Gaweda 2008, Fuertinger 2013, Escandell-Montero 2014, Barbieri 2015, Barbieri 2016, Barbieri 2016, Brier 2016, Brier 2018, Bucalo 2018 | | [26–28, 30–34], [29, 35, 82, 83] |
| d | Hormonal & dietary | 6 | Gabutti 2004, Wang 2006, Chen 2007, Bhan 2010, Nigwekar 2014, Rodriguez 2016 | | [20, 36–40] |
| e | AV fistula | 3 | Chen 2014, Bhatia 2018, Chao 2018 | | [41–43] |
| PD | | | | 8 | |
| a | Peritoneal technique | 5 | Zhang 2005, Chen 2006, Tangri 2011, Brito 2019, John 2019 | | [44–48] |
| b | Infections | 1 | Zhang 2017 | | [49] |
| c | Cardiovascular events | 2 | Rodriguez 2017, Fernandez-Lozano 2018 | | [50, 51] |
| KT | | | | 18 | |
| a | Healthcare management | 2 | Sharma 2008, Karademirci 2015 | | [52, 53] |
| b | Rejection prediction | 11 | Simic-Ogrizovic 1999, Fritsche 2002, Santori 2007, Greco 2010, Brown 2012, Decruyenaere 2015, Srinivas 2017, Yoo 2017, Gallon 2018, Jia 2018, Rashidi Khazaee 2018 | | [54, 56–62, 84–86] |
| c | Tacrolimus post-T | 4 | Seeling 2012, Tang 2017, Niel 2018, Thishya 2018 | | [55, 63, 64, 87] |
| d | Dietary | 1 | Stachowska 2006 | | [65] |

Most of the studies (except for one randomized controlled trial (RCT)) were observational. Only three trials were published before 2000, whereas over 60% of the studies were reported after 2010. Most HD studies involved personalized anemia management and parameters of the dialysis session. The accurate prediction of graft rejection and the individualization of posttransplant immunosuppressive treatment were the main topics covered by the AI and KT trials.

2.3. Algorithm Approach (according to the AI/ML Algorithm Used)

All trials were also classified according to the type of AI algorithm (Table 2). Core concepts, various AI algorithms, and differences between them have been defined and described elsewhere [8–11].

Table 2.

Different types of AI algorithms used in G5D/T trials.

| Type of AI/ML algorithm used | No. | Studies | Ref. |
|---|---|---|---|
| Unspecified machine learning (ML) algorithms | 15 | Cadena 2010, Fuertinger 2013, Barbieri 2015, Barbieri 2016, Brier 2016, Saadat 2017, Bhatia 2018, Bucalo 2018, Usvyat 2018, Zhang 2017, John 2019, Decruyenaere 2015, Karademirci 2015, Tang 2017, Gallon 2018 | [27, 28, 35, 80], [16, 42, 82, 83], [48, 49, 75, 84], [53, 55, 85] |
| ML: Naive Bayes models | 1 | Rodrigues 2017 | [50] |
| ML: support vector machine (SVM) | 3 | Martin-Guerrero 2003, Chao 2018, Fernandez-Lozano 2018 | [30, 43, 51] |
| ML: k-NN (k-nearest neighbor) | 1 | Fernandez-Lozano 2018 | [51] |
| ML: reinforcement learning with Markov decision processes (MDP) | 1 | Escandell-Montero 2014 | [26] |
| Fuzzy | | | |
| Fuzzy logic | 5 | Nordio 1994, Nordio 1995, Mancini 2007, Gaweda 2008, Zhang 2005 | [21, 22, 34, 79], [44] |
| Coactive fuzzy | 1 | Chen 2007 | [37] |
| Fuzzy Petri nets | 1 | Chen 2014 | [41] |
| ML: natural language processing | 1 | Nigwekar 2014 | [40] |
| Data mining | 4 | Bellazzi 2005, Brito 2019, Srinivas 2017, Jia 2018 | [13, 47, 60, 86] |
| Bayesian belief network | 1 | Brown 2012 | [59] |
| Dynamic time warping (DTW) | 1 | Fritsche 2002 | [56] |
| ML: unspecified neural network (NN) algorithm | 30 | Guh 1998, Akl 2001, Goldfarb-Rumyantzev 2003, Martin Guerrero 2003, Gabutti 2004, Gabutti 2004, Chiu 2005, Fernandez 2005, Gabutti 2006, Tangri 2006, Wang 2006, Gaweda 2008, Bhan 2010, Jacob 2010, Azar 2011, Barbieri 2016, Brier 2018, Niel 2018, Barbieri 2019, Hueso 2019, Chen 2006, Tangri 2011, Simic-Ogrizovic 1999, Stachowska 2006, Santori 2007, Sharma 2008, Tang 2017, Niel 2018, Rashidi Khazaee 2018, Thishya 2018 | [31, 76–78], [19, 20, 23, 88], [14, 32, 33, 36], [15, 29, 38, 81], [7, 18, 87, 89], [45, 46, 54, 65], [25, 52, 55, 57], [62, 64] |
| ML: multilayer perceptron (MLP) | 1 | Martin-Guerrero 2003 | [31] |
| ML: recurrent NN | 1 | Gallon 2018 | [85] |
| ML: tree-based modeling (TBM) | | | |
| Random forest (RF) | 7 | Titapiccolo 2013, Rodriguez 2016, Fernandez-Lozano 2018, Sharma 2008, Greco 2010, Tang 2017, Yoo 2017 | [17, 39, 51, 52], [55, 58, 61] |
| Decision trees | 3 | Goldfarb-Rumyantzev 2003, Raghavan 2005, Yoo 2017 | [61, 74, 78] |
| Conditional inference trees | 1 | Seeling 2012 | [63] |

Sixty-four studies included ML algorithms: unspecified, Naive Bayes models, support vector machine (SVM), and reinforcement learning with Markov decision processes (MDP). One study used k-nearest neighbor (k-NN), one study used multilayered perceptron (MLP), 30 studies used unspecified neural network algorithms, and 11 studies were based on tree-based modeling (TBM), random forest (RF), or conditional inference trees. Four trials used data mining algorithms, and five of them had fuzzy logic approaches. One study included specific natural language processing algorithms. Two studies have also included the Bayesian belief network and dynamic time warping (DTW) algorithms.

3. Discussions

Our endeavor is the first in-depth review of the literature gathering all studies using AI in dialysis or kidney transplantation. Two recent papers looked at AI in nephrology, but both focused on AI core concepts, AI perspectives, and algorithms [1, 8]. These articles explained in detail AI terminology and most of the algorithms used, also describing some significant clinical challenges, quoting only a few trials on dialysis and KT. Generally speaking, perspectives about AI oscillate between two extremes: either an extremely optimistic approach (“algorithms are desperately needed to help” [4]) or a disarming nihilism (“Should we be scared of AI? Unfortunately, as our AI capabilities expand, we will also see it being used for dangerous or malicious purposes” [12]).

3.1. Hemodialysis and AI

The most important implication of AI in HD is related to dialysis services. Generating auditing systems powered by AI/ML algorithms seems to improve major outcomes.

In a retrospective trial, 5800 dialysis sessions from 43 patients supervised for 19 months were assessed through temporal data mining techniques to gain insight into the causes of unsatisfactory clinical results [13]. Quality assessment was based on the automatic measurement of 13 variables reflecting significant aspects of a dialysis session, such as the efficiency of protein catabolism product removal, total body water (TBW) reduction, and hypotension episodes. Based on the stratification of different causes of failed dialysis over time, the ML algorithm “learned” association and temporal rules, reporting “risk profiles” for patients that contained typical failure scenarios [13].

Thanks to neural networks (NNs), we are now able to exclude the bias of the “dialysis center effect” on mortality [14] (the residual difference in mortality probability that exists between centers after adjustment for other risk factors). In a study including 18,000 ESRD patients from the UK Renal Registry, a multilayered perceptron (MLP) was “trained” and then “tested” for predicting mortality. The authors showed that renal center characteristics have little association with mortality and created a highly accurate predictive survival model [14].

To illustrate what big data means, we present a study encompassing dialysis patients from the USRDS. A total of 1,126,495 records were included in a combined dataset, with forty-two variables selected for analysis based on their potential clinical significance. The authors described a feed-forward NN with two inputs, one output, and a hidden layer containing four neurons. A powerful tool was created to predict mortality with high accuracy [15].
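To make the architecture described above concrete, the following is a minimal sketch of a feed-forward network with two inputs, one hidden layer of four neurons, and one output. It is not the cited study's model: the sigmoid activation, the random weights, the input values, and the function names (`init_network`, `forward`, `count_parameters`) are all assumptions made for illustration, and no training step is shown.

```python
import math
import random
from typing import Dict, List


def sigmoid(x: float) -> float:
    """Logistic activation, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def init_network(n_in: int = 2, n_hidden: int = 4, seed: int = 0) -> Dict:
    """Create random (untrained) weights for an n_in -> n_hidden -> 1 network."""
    rng = random.Random(seed)
    return {
        "w_hidden": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b_hidden": [0.0] * n_hidden,
        "w_out": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b_out": 0.0,
    }


def forward(net: Dict, x: List[float]) -> float:
    """Propagate one input vector through the hidden layer to the single output."""
    hidden = [
        sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
        for row, b in zip(net["w_hidden"], net["b_hidden"])
    ]
    return sigmoid(sum(w * h for w, h in zip(net["w_out"], hidden)) + net["b_out"])


def count_parameters(net: Dict) -> int:
    """Count weights and biases in both layers."""
    n_hidden = len(net["w_hidden"])
    n_in = len(net["w_hidden"][0])
    return n_hidden * n_in + n_hidden + n_hidden + 1


net = init_network()
risk = forward(net, [0.6, 0.3])  # two hypothetical, already-scaled inputs
print(count_parameters(net), round(risk, 3))
```

With this layout the network has only 17 trainable parameters (8 hidden weights, 4 hidden biases, 4 output weights, 1 output bias), which shows how compact such a model is relative to the size of the registry it was fitted on.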

In daily practice, clinician nephrologists could also use forecast models that can predict the quality of life (QoL) changes (through an early warning system performing dialysis data interpretation using classification tree and Naive Bayes) [16] and cardiovascular outcomes (a lasso logistic regression model and an RF model were developed and used for predictive comparison of data from 4246 incident HD patients) [17].

Probably the most attractive and tempting field is the HD session. A current dialysis machine cannot adapt or react when various changes occur; only AI may lead to personalized “precision medicine” [18]. Indeed, a recent AI model was able to predict hypotension and anticipate the patient's reactions (in terms of volumes, blood pressure, and heart rate variability) [7]. Since the NN approach is more flexible and adapts more easily to complex prediction problems than standard regression models, the authors included 60 variables (patient characteristics, a historical record of physiological reactions, outcomes of previous dialysis sessions, predialysis data, and the prescribed dialysis dose for the index session). The dataset used for modeling consisted of 766,000 records, each representing a dialysis session recorded in the Spanish NephroCare centers. NNs also proved to be better predictors than standard guideline recommendations regarding the urea removal ratio, postdialysis BUN, or Kt/V [19]. ML algorithms were also used to predict low blood pressure [20], blood volume [21, 22], or TBW [23].
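For readers less familiar with the adequacy targets mentioned above, the sketch below computes the urea reduction ratio and a single-pool Kt/V from pre- and postdialysis BUN. The reviewed studies do not state which formula they used; the Daugirdas second-generation equation is assumed here because it is a widely used bedside estimate. The function names and the patient values are hypothetical.

```python
import math


def urea_reduction_ratio(pre_bun: float, post_bun: float) -> float:
    """URR = 1 - post/pre, expressed as a fraction (0.65 means 65%)."""
    if pre_bun <= 0 or post_bun < 0:
        raise ValueError("BUN values must be positive")
    return 1.0 - post_bun / pre_bun


def sp_ktv_daugirdas(pre_bun: float, post_bun: float, hours: float,
                     uf_liters: float, post_weight_kg: float) -> float:
    """Single-pool Kt/V, Daugirdas second-generation estimate (assumed formula).

    Kt/V = -ln(R - 0.008 * t) + (4 - 3.5 * R) * UF / W
    R: post/pre BUN ratio, t: session length in hours,
    UF: ultrafiltration volume in liters, W: postdialysis weight in kg.
    """
    ratio = post_bun / pre_bun
    log_arg = ratio - 0.008 * hours
    if log_arg <= 0:
        raise ValueError("inputs outside the range where the estimate is defined")
    return -math.log(log_arg) + (4.0 - 3.5 * ratio) * uf_liters / post_weight_kg


# Hypothetical session: BUN 70 -> 25 mg/dL, 4 h, 3 L removed, 70 kg after dialysis.
urr = urea_reduction_ratio(70.0, 25.0)
ktv = sp_ktv_daugirdas(70.0, 25.0, hours=4.0, uf_liters=3.0, post_weight_kg=70.0)
print(f"URR = {urr:.1%}, spKt/V = {ktv:.2f}")
```

A predictive model of the kind discussed above would estimate these quantities before the session from prescription and patient data, whereas the formula needs the postdialysis blood sample.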

Recent studies suggest that NN outperforms experienced nephrologists. A combined retrospective and prospective observational study was performed in two Swiss dialysis units (80 HD patients, 480 monthly clinical and biochemical variables). A NN was “trained” and “tested” using the BrainMaker Professional software, predicting intradialytic hypotension better than six trained nephrologists [20]. In other studies, 14 pediatric patients were switched from nephrologists to AI. Results proved that AI is a superior tool for predicting dry weight in HD based on bioimpedance, blood volume monitoring, and blood pressure values [24, 25].

Since anemia is a frequent comorbidity found in ESRD and dialysis patients [26], key elements were targeted by AI software [26]: erythropoietin-stimulating agents [27], hemoglobin target [28], and iron treatment dosing [29].

An impressive recent retrospective observational study included HD patients from Portugal, Spain, and Italy from 2006 to 2010 in Fresenius Medical Care clinics. At every treatment, darbepoetin alpha and iron dose administration was recorded as well as parameters concerning HD treatment. These data represent the input for “training” and “testing” a feed-forward multilayered perceptron (MLP). This approach puts together the potential of MLP to produce accurate models given a representative dataset with better use of the available information using a priori knowledge of RBC lifespan and the effect produced by iron and ESA [28]. Other studies also used NNs to individualize ESA dosage in HD patients with outstanding results [30, 31]. Moreover, NN implemented in clinical wards could appreciate erythropoietin responsiveness [32–34].

Probably the most advanced system to manage anemia in HD is the “Anemia Control Model” (ACM) [35]. Conceived and validated previously [28], this model can predict future hemoglobin and recommend a precise ESA dosage. It was deployed in 3 pilot clinics as part of routine daily care of a large population of unselected patients. Also, a direct comparison between standard anemia management by expert nephrologists following established best clinical practices and ACM-supported anemia management was performed. Six hundred fifty-three patients were included in the control phase and 640 in the observation phase. Compared to the “traditional” management, the AI approach led to a significant decrease in hemoglobin fluctuation and reductions in ESA use, with the potential to reduce the cost of treatment [35].

AI algorithms also improve chronic kidney disease-mineral and bone disorder (CKD-MBD) management in HD. Some studies suggest that NN (based on limited clinical data) can accurately forecast the target range of plasma iPTH concentration [36]. An MLP was constructed with six variables (age, diabetes, hypertension, hemoglobin, albumin, and calcium) collected retrospectively from an internal validation group (n = 129). Plasma iPTH was the dichotomous outcome variable, either target group (150 ng/L ≤ iPTH ≤ 300 ng/L) or nontarget group (iPTH < 150 ng/L or iPTH > 300 ng/L). After internal validation, the ANN was prospectively tested in an external validation group (n = 32). This algorithm provided excellent discrimination (AUROC = 0.83, p = 0.003) [36]. Usually, frequent measurement is needed to avoid inadequate prescription of phosphate binders and vitamin D. AI can repeatedly perform the forecasting tasks and may be a satisfactory substitute for laboratory tests [37]. NNs were used to predict HD patients who need more frequent vitamin D dosage, using only simple clinical parameters [38]. However, analysis of the complex interactions between mineral metabolism parameters in ESRD may demand a more advanced data analysis system such as random forest (RF) [39].
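The evaluation scheme described above (a dichotomous iPTH outcome scored by AUROC) can be sketched as follows. The iPTH values and model scores are invented for illustration and are not data from the cited study; the AUROC is computed as the pairwise rank statistic (the probability that a randomly chosen target-range patient receives a higher score than a randomly chosen nontarget patient).

```python
from typing import List, Sequence


def in_target_range(ipth_ng_per_l: float, low: float = 150.0, high: float = 300.0) -> bool:
    """Dichotomize plasma iPTH: True when 150 ng/L <= iPTH <= 300 ng/L."""
    return low <= ipth_ng_per_l <= high


def auroc(scores: Sequence[float], labels: Sequence[bool]) -> float:
    """Area under the ROC curve via pairwise comparison; ties count as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    if not pos or not neg:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical measured iPTH values (ng/L) and hypothetical model outputs.
ipth_values: List[float] = [120.0, 180.0, 250.0, 310.0, 200.0, 90.0]
model_scores: List[float] = [0.20, 0.70, 0.90, 0.40, 0.35, 0.10]

labels = [in_target_range(v) for v in ipth_values]
auc = auroc(model_scores, labels)
print(labels, round(auc, 3))
```

An AUROC of 0.5 corresponds to chance and 1.0 to perfect ranking, which puts the reported value of 0.83 in context.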

Another work based on natural language processing algorithms applied to 11,451 USRDS patients described the real incidence and mortality of calciphylaxis patients [40]. It identified 649 incident calciphylaxis cases over the study period, with mortality rates noted to be 2.5–3 times higher than average mortality rates. This trial serves as a template for investigating other rare diseases. However, the algorithm in this paper has not been disclosed by the authors.

Patency of the arteriovenous access is the last aspect involving AI in HD. Fuzzy Petri net (FPN) algorithms were involved in quantifying the degree of AV fistula stenosis. A small study from Taiwan (42 patients) used an electronic stethoscope to estimate the characteristic frequency spectra at the level of the AV fistula. The three main characteristic frequencies observed provide information for evaluating the degree of stenosis [41].

Moreover, the life of the AV fistula could be forecast by ML algorithms [42]. Six ML algorithms were compared for predicting the patency of a fistula based on clinical and dialysis variables only. Of these, SVM with a linear kernel gave the highest accuracy (98%). The proposed system was designed using a dataset of 200 patients, with five dialysis sessions for each patient (to avoid any operator error) and over 30 values reported by the machine. Such a system may improve the patient's QoL by foregoing the catheter and reducing the medical costs of scans such as Doppler ultrasound [42].

Finally, another study using small-sized sensors (as opposed to conventional Doppler machines) used SVM for assessing the health of arteriovenous fistula. The model achieved high accuracy (89.11%) and a low type II error (9.59%) [43].
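The two figures quoted above come from a confusion matrix. The sketch below shows one way to compute them, assuming that "type II error" means the false-negative rate, FN / (FN + TP); the source does not define it, so this is an interpretation. The labels and predictions are made up and do not reproduce the study's numbers.

```python
from typing import Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (tp, fp, tn, fn) for binary labels, with 1 = diseased fistula."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, tn, fn


def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    """Share of all cases classified correctly."""
    return (tp + tn) / (tp + fp + tn + fn)


def type_ii_error_rate(tp: int, fn: int) -> float:
    """False-negative rate: diseased cases the classifier missed (assumed definition)."""
    return fn / (tp + fn)


# Hypothetical ground truth and classifier output for ten fistulas.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]

tp, fp, tn, fn = confusion_counts(y_true, y_pred)
acc = accuracy(tp, fp, tn, fn)
miss_rate = type_ii_error_rate(tp, fn)
print(f"accuracy = {acc:.0%}, type II error = {miss_rate:.0%}")
```

Reporting the miss rate alongside accuracy matters for screening, because a classifier can reach high accuracy on an imbalanced sample while still missing a clinically relevant share of stenosed fistulas.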

3.1.1. Key Messages

(1) How Can the Use of AI Improve Healthcare Delivery to HD?

- (i) Prevention
  - AI methods capable of determining risk profiles for unsatisfactory clinical results of HD sessions were described
  - Early detection allows for timely correction of risk factors to attain good quality HD sessions and favorable outcomes
- (ii) Diagnosis
  - Estimating the patency of AV fistula by AI approaches may improve HD session outcomes and the patient's QoL
  - AI solutions reduce medical costs by replacing more expensive diagnostic procedures
- (iii) Prescription
  - AI can recommend medication dosage for preventing HD-specific complications such as anemia, hemoglobin fluctuations, and mineral imbalance
  - Algorithm involvement leads to fewer complications and reduces medication use, showing the potential to reduce treatment expenses
- (iv) Prediction
  - AI was used for predicting mortality and survival in HD
  - Specific algorithms predict changes in QoL, cardiovascular outcomes, and intradialytic hemodynamic events
  - Survival and QoL predictive models can help mitigate the impact on public health by better directing the use of resources
  - Predicting intradialytic events allows for flexible adaptations of the HD process in real time by avoiding hypotension and variability in heart rate and volumes, thus ensuring the success of the HD session and the overall cost efficiency of interventions

(2) Challenges and Areas Which Require More Studies in AI for HD.

- Real-time monitoring AI systems could achieve personalized treatment with embedded automatic adaptive responses in HD sessions
- Implementing potential interaction through feedback between AI/ML systems and physicians responsible for HD would allow both parties to learn from each other and provide better decisions for ESRD patients
- The more AI systems are deployed in HD patient care, the more large-scale data will become available. This should compel the development of stringent regulations concerning data privacy, maintenance, and sharing for safer implementation in public healthcare

3.2. Peritoneal Dialysis and AI

One of the first applications of AI in PD was the selection of PD schemes. Fuzzy logic algorithms were used in small studies with excellent compatibility with doctors' opinions [44]. Since high peritoneal membrane transport status is associated with higher morbidity and mortality, determining peritoneal membrane transport status can result in a better prognosis. An MLP used predialysis data from a 5-year PD database of 111 uremic patients and demonstrated the usefulness of this approach to stratify predialysis patients into high and low transporter groups [45]. The evaluation of peritoneal membrane transport status, if predictable before PD, will help clinicians offer their uremic patients better therapeutic options.

Almost 40% of PD patients experience technique failure in the first year of therapy. Understanding which factors are genuinely associated with this outcome is essential to develop interventions that might mitigate it. Such data were obtained from a high-quality registry, the UK Renal Registry [46]: between 1999 and 2004, 3269 patients were included in the analysis. An MLP with a 73-80-1 nodal architecture was constructed and trained using the back-propagation approach.

Due to the vast amount of data acquired from continuous ambulatory peritoneal dialysis (CAPD) patients (routine lab tests on follow-up), data mining algorithms were proposed to discover patterns in seemingly meaningless data (e.g., consecutive creatinine values) [47]. This study is probably one of the best examples of AI in medicine: identifying patterns in big data series.

AI/ML algorithms would help predict impending complications such as fluid overload, heart failure, or peritonitis, allowing early detection and interventions (remote patient management) to avoid hospitalizations [48].

Using a systematic approach to characterize responses to microbiologically well-defined infection in acute peritonitis patients, ML techniques were able to generate specific biomarker signatures associated with Gram-negative and Gram-positive organisms and with culture-negative episodes of unclear etiology [49]. By combining biomarker measurements during acute peritonitis and feature selection approaches based on SVM, NN, and RF, a study (including 83 PD patients with peritonitis) demonstrated the power of advanced mathematical models to analyze complex biomedical datasets and highlight critical pathways involved in pathogen-specific inflammatory responses at the site of infection.

Using data mining models in CAPD patients, patterns were extracted to classify patients at risk of stroke according to their blood analysis [50]. In a recent study analyzing a dataset of 850 cases, five different AI algorithms (Naïve Bayes, Logistic Regression, MLP, Random Tree (RT), and k-NN) were used to predict a patient's stroke risk. The specificity and sensitivity of RT and k-NN were 95% in predicting stroke risk. In the near future, PD patients will benefit from accurate prediction (of stroke, infection, cardiovascular events [51], or even mortality risk) based only on easily obtained information (demographic, biological, or PD-related data).
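Of the algorithms listed above, k-NN is the simplest to illustrate: a new patient is assigned the majority label of the k most similar patients in the training set. The sketch below uses Euclidean distance and two made-up, already-scaled laboratory features; the data, the feature choice, and the function name `knn_predict` are assumptions and are not taken from the cited study.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple


def knn_predict(train_x: Sequence[Tuple[float, ...]], train_y: Sequence[int],
                query: Tuple[float, ...], k: int = 3) -> int:
    """Classify `query` by majority vote among its k nearest training points."""
    if k < 1 or k > len(train_x):
        raise ValueError("k must be between 1 and the training set size")
    # Sort training points by Euclidean distance to the query.
    neighbours = sorted(
        (math.dist(x, query), label) for x, label in zip(train_x, train_y)
    )
    votes = Counter(label for _, label in neighbours[:k])
    return votes.most_common(1)[0][0]


# Hypothetical scaled features per patient; label 1 = stroke risk, 0 = no risk.
train_x: List[Tuple[float, float]] = [
    (1.0, 1.0), (1.2, 0.8), (0.9, 1.1),   # low-risk cluster
    (3.0, 3.2), (3.1, 2.9), (2.8, 3.0),   # high-risk cluster
]
train_y = [0, 0, 0, 1, 1, 1]

low = knn_predict(train_x, train_y, (1.1, 1.0))
high = knn_predict(train_x, train_y, (3.0, 3.0))
print(low, high)
```

An odd k avoids tied votes in a two-class problem, and features should be brought to a common scale beforehand, since the distance otherwise reflects whichever laboratory value has the largest numeric range.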

3.2.1. Key Messages

(1) How Can the Use of AI Improve Healthcare Delivery to PD?

- (i) Prevention
  - AI was used to identify factors associated with PD technique failure
  - Developing interventions to mitigate risk factors to prevent this outcome can be the result of the critical contribution of AI in the PD process
- (ii) Diagnosis
  - AI algorithms found specific biomarker signatures associated with different types of infections
  - This has significant implications for the early initiation of appropriate treatment and for avoiding severe infectious complications in vulnerable ESRD patients
  - AI contributed to expanding scientific knowledge of the pathophysiological mechanisms by highlighting critical pathways involved in pathogen-specific inflammatory responses in PD
- (iii) Prescription
  - By using AI, better therapeutic options can be offered to uremic patients by stratifying predialysis patients into high and low transporter groups
  - This will improve prognosis and reduce morbidity and mortality in PD patients
- (iv) Prediction
  - AI can predict complications such as fluid overload, heart failure, or peritonitis
  - Also, algorithms could identify patients with stroke risk, thereby allowing early interventions and reducing PD hospitalizations

(2) Challenges and Areas Which Require More Studies in AI for PD.

- AI could be exploited to identify patients at risk of developing peritonitis, a significant complication of PD, in order to reduce the infectious risk and to overcome a substantial burden in the PD process
- Conducting studies on home remote monitoring in automated PD may improve patients' outcomes and adherence to the therapy
- While dialysate regeneration using sorbent technology makes it possible to build an automated wearable artificial kidney PD device, studies of safety in this area would be much needed, especially for patients facing mobility problems

3.3. Kidney Transplantation and AI

Given the high number of dialysis patients and the limited number of organ donors, AI algorithms are involved in optimizing the healthcare management system [52]. Through data mining and NN algorithms, complex e-health systems are proposed for a wiser allocation of organs and predicting transplant outcomes [53].

The most stirring contemporary issue regarding KT is the power of AI to predict graft rejection. A study published 20 years ago reported that NNs could be utilized in the prediction of chronic renal allograft rejection (the authors described a retrospective analysis on 27 patients with chronic rejection, eight simple variables manifesting a strong influence on rejection) [54]. Another cohort study of 500 patients from 2005 to 2011 used ML algorithms (SVM, RF, and DT) to predict “delayed graft function.” Linear SVM had the highest discriminative capacity (AUROC of 84.3%), outperforming the other methods [55]. However, currently, no guideline supports the use of AI in organ allocation or prediction of rejection.

Despite ongoing efforts to develop other methods, serum creatinine remains the most important parameter for assessing renal graft function. A rise in creatinine corresponds to a deterioration in graft function, so the physician must recognize “significant” increases in serum creatinine. DTW was used to identify abnormal patterns in a series of laboratory data, thus detecting and reporting earlier the creatinine courses associated with acute rejection [56]. Data extraction was performed on 1,059,403 laboratory values, 43,638 creatinine measurements, 1143 patients, and 680 rejection episodes stored in the database. By integrating AI into the electronic patient registration system, the real impact on the care of transplant recipients could be evaluated prospectively.
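Dynamic time warping compares two series that may evolve at different speeds by finding the cheapest alignment between their points. The sketch below is the textbook dynamic-programming formulation, not the cited system's implementation; the creatinine values (mg/dL) and the two template courses are invented for illustration.

```python
from typing import List, Sequence


def dtw_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Classic DTW with absolute difference as the local cost.

    cost[i][j] holds the cheapest cumulative cost of aligning a[:i] with b[:j].
    """
    if not a or not b:
        raise ValueError("both series must be non-empty")
    n, m = len(a), len(b)
    inf = float("inf")
    cost: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the best of: step in a only, step in b only, or step in both.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


# Hypothetical reference courses and a new patient's measurements.
stable_template = [1.1, 1.1, 1.2, 1.1, 1.2]
rising_template = [1.1, 1.3, 1.6, 2.0]
new_series = [1.1, 1.1, 1.3, 1.3, 1.6, 2.0]

d_rising = dtw_distance(new_series, rising_template)
d_stable = dtw_distance(new_series, stable_template)
closest = "rising" if d_rising < d_stable else "stable"
print(d_rising, d_stable, closest)
```

Because repeated values can be matched to a single template point, the new series aligns with the rising template even though it was sampled more often, which is the property that makes DTW suitable for irregularly timed laboratory measurements.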

An MLP trained with back-propagation was used in a retrospective study of 257 pediatric KT recipients to identify delayed decrease of serum creatinine (a delay in functional recovery of the transplanted kidney) from 20 simple input variables [57]. Other models (decision trees) were reported to identify subjects at risk of graft loss [58].
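For readers unfamiliar with the technique, the sketch below shows a very small MLP trained with back-propagation on squared error. Everything in it is an assumption chosen for brevity: one hidden layer of three sigmoid units, two normalized inputs rather than the 20 variables of the cited study, the learning rate, the seed, and the toy data. It is not the published model.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_mlp(samples, targets, hidden=3, epochs=2000, lr=0.5, seed=0):
    """Train a one-hidden-layer MLP by per-sample gradient descent."""
    rng = random.Random(seed)
    n_in = len(samples[0])
    # w1[h] holds the weights from every input to hidden unit h; last entry is the bias.
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in + 1)] for _ in range(hidden)]
    # w2 holds the hidden-to-output weights; last entry is the output bias.
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden + 1)]

    def forward(x):
        h = [sigmoid(sum(w * v for w, v in zip(row[:-1], x)) + row[-1]) for row in w1]
        out = sigmoid(sum(w * v for w, v in zip(w2[:-1], h)) + w2[-1])
        return h, out

    def mean_squared_error():
        return sum((forward(x)[1] - t) ** 2 for x, t in zip(samples, targets)) / len(samples)

    losses = [mean_squared_error()]
    for _ in range(epochs):
        for x, t in zip(samples, targets):
            h, out = forward(x)
            # Output error term: derivative of squared error through the sigmoid.
            delta_out = (out - t) * out * (1.0 - out)
            # Hidden error terms, propagated backwards through w2.
            delta_h = [delta_out * w2[j] * h[j] * (1.0 - h[j]) for j in range(hidden)]
            for j in range(hidden):
                w2[j] -= lr * delta_out * h[j]
                for i in range(n_in):
                    w1[j][i] -= lr * delta_h[j] * x[i]
                w1[j][-1] -= lr * delta_h[j]
            w2[-1] -= lr * delta_out
        losses.append(mean_squared_error())
    return forward, losses


# Hypothetical normalized inputs; target 1 = delayed decrease of serum creatinine.
samples = [[0.1, 0.2], [0.2, 0.1], [0.8, 0.9], [0.9, 0.7]]
targets = [0, 0, 1, 1]
predict_fn, losses = train_mlp(samples, targets)
```

The list `losses` records the mean squared error before training and after each epoch, so a falling curve is the simplest check that the backward pass is wired correctly.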

Using datasets from the USRDS database (48 clinical variables from 5144 patients), other authors developed ML software based on a Bayesian belief network (BBN) that functioned as a pretransplant organ-matching tool. This model could predict graft failure within the first year with a specificity of 80% [59]. Other stand-alone software solutions (trained on big data) incorporated pretransplant variables and predicted graft loss and mortality [60–62]. Since these AI tools can be easily integrated into electronic health records, we anticipate that all kidney transplants will be managed with AI tools in the coming years.
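Since the performance of the organ-matching model is quoted as a specificity, a short sketch of how sensitivity and specificity are obtained from binary predictions may be helpful. The function name `sensitivity_specificity` and the toy predictions are hypothetical and are not taken from the cited work.

```python
def sensitivity_specificity(predicted, actual):
    """Return (sensitivity, specificity) for binary labels, where 1 = graft failure."""
    tp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 1)
    fn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 1)
    tn = sum(1 for p, a in zip(predicted, actual) if p == 0 and a == 0)
    fp = sum(1 for p, a in zip(predicted, actual) if p == 1 and a == 0)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("both outcome classes must be present")
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical one-year outcomes for seven recipients (1 = graft failure).
actual = [1, 1, 0, 0, 0, 0, 0]
predicted = [1, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(predicted, actual)
```

In this toy example four of the five grafts that survived are correctly classified, giving a specificity of 0.8; specificity alone says nothing about how many failures are missed, which is why sensitivity is reported alongside it here.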

Few studies used AI algorithms to address posttransplant immunosuppressive therapy.

One study aimed to identify adaptation rules for tacrolimus therapy from a clinical dataset in order to predict drug concentration [63]. Because tacrolimus has a narrow therapeutic window and shows variability in clinical use, the challenge for various ML models was to predict the tacrolimus stable dose (TSD). In a large Chinese cohort of 1045 KT patients, eight ML techniques were trained for pharmacogenetic algorithm-based prediction of TSD. Clinical and genetic factors significantly associated with TSD were identified; hypertension, use of omeprazole, and CYP3A5 genotype were used to construct the multiple linear regression (MLR) model [55].
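The structure of such an MLR model can be sketched as follows. This is only an illustration under loud assumptions: the three predictors are coded as 0/1 indicators, the "true" coefficients are invented (their signs and magnitudes carry no clinical meaning), the doses are synthetic, and the helper names `fit_linear_regression` and `predict` are hypothetical. The fit uses ordinary least squares via the normal equations.

```python
from itertools import product


def fit_linear_regression(rows, targets):
    """Ordinary least squares via the normal equations (X^T X) b = X^T y."""
    X = [[1.0] + [float(v) for v in row] for row in rows]  # prepend intercept column
    k = len(X[0])
    # Build the augmented matrix [X^T X | X^T y].
    aug = []
    for i in range(k):
        line = [sum(x[i] * x[j] for x in X) for j in range(k)]
        line.append(sum(x[i] * y for x, y in zip(X, targets)))
        aug.append(line)
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("predictors are collinear; cannot solve")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


def predict(coefs, row):
    """Intercept plus weighted sum of the predictors."""
    return coefs[0] + sum(c * float(v) for c, v in zip(coefs[1:], row))


# Invented coefficients: intercept, hypertension, omeprazole use, CYP3A5 indicator.
true_coefs = [2.0, 0.5, -0.3, 1.5]
# Synthetic cohort: every combination of the three 0/1 predictors.
rows = [list(combo) for combo in product([0, 1], repeat=3)]
doses = [predict(true_coefs, r) for r in rows]
coefs = fit_linear_regression(rows, doses)
```

Because the synthetic doses are generated without noise, the fitted coefficients reproduce the invented ones; with real data the same procedure would return estimates with uncertainty that would need to be reported.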

A prospective study of 129 KT patients confirmed that combining multiple ABCB1 polymorphisms with the CYP3A5 genotype in an NN yields a more precise calculation of the initial tacrolimus dose, improving therapy and preventing tacrolimus toxicity [64].

Finally, the only RCT included in our review evaluated the benefits of different types of diet after transplantation [65]. NNs seem to be the most suitable method for investigations with many nonlinearly interconnected variables, allowing a more general approach to biological problems. Thirty-seven KT patients were randomized to either a low-fat standard diet or a Mediterranean diet (MD). For the MD group, the NNs had two hidden layers with 223 and 2 neurons; in the control group, the networks also had two hidden layers, with 148 and 2 neurons, respectively. The conclusion was that the MD would be ideal for posttransplant patients, without affecting the lipid profile.

3.3.1. Key Messages

(1) How Can the Use of AI Improve Healthcare Delivery to KT?

(i) Diagnosis

- AI was able to detect and report, at an early stage, creatinine courses associated with acute KT rejection by identifying abnormal patterns in series of laboratory data, thus allowing rapid intervention and improved outcomes in KT patients

(ii) Prescription

- Various ML models accurately predict the tacrolimus stable dose, thereby improving posttransplant immunosuppressive therapy and preventing tacrolimus toxicity

- Proper management of immunosuppression can have a significant impact on averting graft loss

- ML can help evaluate the benefits of different posttransplant diets, which may have a positive impact on QoL in KT patients

(iii) Prediction

- AI is used to predict graft rejection, “delayed graft function,” and mortality

- AI algorithms can serve as pretransplant organ-matching tools

- This allows for the wiser allocation of organs and overall optimization of the healthcare management system in KT

(2) Challenges and Areas Which Require More Studies in AI for KT.

Preventive AI tools could certainly be employed in identifying modifiable risk factors for graft rejection and graft loss, offering patients better chances for successful KT

Guidelines need to be developed for supporting the use of AI in organ allocation or prediction of rejection

Prospective evaluation of the real AI impact on the care of transplant recipients can be easily accomplished by integrating AI into electronic patient registration systems

We anticipate that, in the coming years, all kidney transplant procedures will be managed through AI tools

3.4. Internet of Things (IoT) and Wearables in Dialysis/Transplantation Management

The emergence of the modern IoT concept gave rise to the idea of connecting everything to the Internet and generating data from sensor signals about external information and changes in the environment, thus moving AI to the edge in healthcare.

IoT wearable systems are capable of real-time remote monitoring and analysis of HD and PD patients' physiological parameters by integrating sensors for pulse, temperature [66], blood pressure [67], blood leakage [68, 69], electrocardiographic measurements, hyperkalemia, or fluid overload [70].

Medical wearables have been proposed to support KT patients in aftercare by monitoring vital signs, heart rate, temperature, blood pressure, and physical activity and by providing risk calculations [71].

IoT medical devices have a tremendous impact on healthcare monitoring and treatment outcomes. They collect a substantial amount of data that can be employed in training new ML models for providing better patient care. Nevertheless, large-scale data can make them vulnerable to security breaches; thus, privacy and security issues need to be rigorously addressed.

4. Limitations and Future Directions

Probably the most important limitation of the AI/ML approach is the need for robust validation in real-world studies. We are aware that enthusiasm for AI exceeds the abilities of AI software, mainly because of a lack of clinical validation and of implementation in daily care.

Since ML algorithms are able to learn and improve from experience without being explicitly programmed for a specific task, AI is often perceived as a “black box” that hides the precise way in which it reaches its conclusions [72]. Because of the “hidden layers” of an NN, the algorithm itself likely cannot adequately describe its decision-making process, with each decision process differing from the previous one. There is currently a real debate over whether it is acceptable to use nontransparent algorithms in a clinical setting (the so-called “deconvolution” of algorithms being required by the European Union's General Data Protection Regulation) [4].

More questions are raised about how an ML algorithm discovers patterns and learns from a dataset to predict dialysis volumes than about how AI is used in self-driving cars. In a recent editorial, we highlighted a novel paradigm for optimizing the diagnosis and treatment of ESRD, inviting clinicians and researchers to envision and expect more from AI.

However, the reluctance of the medical community to implement AI in clinical practice derives from its reliance on RCTs, the cornerstone of Evidence-Based Medicine (EBM). The transition from the “EBM paradigm” to the “deep medicine” concept appears confusing and burdensome and meets new and unexpected obstacles. Another disadvantage of NNs is that the inputs and outputs of the network are often surrogates for clinical/paraclinical situations. Surrogates should be chosen to maximize the correlation between the prediction of the network and the clinical situation, but this correlation may not always be optimal [8].

The privacy of personal information and the security of data are important matters. It is difficult to provide security guarantees against the risk of hacking and content manipulation, events that could limit the progress of AI in medicine. Moreover, because private corporations cooperate and invest in AI medical projects, there is a serious risk (and fear) that doctors will be constrained to work with AI machines that they do not understand or trust, manipulating medical data that are subsequently stored on private servers. This has led one of the largest private-sector investors to claim that AI is far more dangerous than nukes.

Finally, when programmers approach a problem related to HD or transplantation, there is still debate over the superiority of one algorithm over another. The tendency is to demonstrate the superiority of deep learning algorithms within ML, but the soundness of this approach depends on long periods of “training” and large databases.

There is a chance that the entire dialysis process will be monitored and predicted by AI solutions. Future dialysis complications will be foreseen through simple clinical/paraclinical variables. Mining knowledge from big data registries will allow building intelligent systems (the so-called Clinical Decision Support Systems), which will help physicians in classifying risks, diagnosing CKD 5D/T complications, and assessing prognosis [73].

5. Conclusions

Although the guidelines are reluctant to recommend the implementation of AI in daily clinical practice, there is evidence that AI/ML algorithms can predict volumes, Kt/V, and the risk of hypotension and cardiovascular events during dialysis better than nephrologists. Integrated AI systems for anemia management exist, based on personalized dosing of ESA and iron and on hemoglobin modulation. Recent studies employ ML algorithms as pretransplant organ-matching tools, thus minimizing graft failure and accurately predicting mortality. Altogether, these trials report a significant impact of AI on quality of life and survival in G5D/T patients. In the coming years, we will probably witness the emergence of AI/ML devices that will improve the management of dialysis patients.

Acknowledgments

AB was supported by the Romanian Academy of Medical Sciences and European Regional Development Fund, MySMIS 107124: Funding Contract 2/Axa 1/31.07.2017/107124 SMIS; AC was supported by a grant of Ministry of Research and Innovation, CNCS-UEFISCDI, project number PN-III-P4-ID-PCE-2016-0908, contract number 167/2017, within PNCDI III.

Conflicts of Interest

The authors declare that there is no conflict of interest regarding the publication of this article.

Authors' Contributions

AB and AC drafted the manuscript. DJ and AB performed a database search. AB and AC wrote the paper. CV and IVP made the figures. CV, IVP, and DJ wrote the supplemental tables. AI and DJ described AI/ML algorithms. AC and IVP revised the paper. All authors edited the text and approved the final version.

Supplementary Materials

Supplemental Table 1: short description and results of the studies involving AI in HD. Supplemental Table 2: short description and results of the studies involving AI in PD. Supplemental Table 3: short description and results of the studies involving AI in KT.

References

- 1.Hueso M., Vellido A., Montero N., et al. Artificial intelligence for the artificial kidney: pointers to the future of a personalized hemodialysis therapy. Kidney Diseases. 2018;4(1):1–9. doi: 10.1159/000486394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Krumholz H. M. Big data and new knowledge in medicine: the thinking, training, and tools needed for a learning health system. Health Affairs. 2014;33(7):1163–1170. doi: 10.1377/hlthaff.2014.0053. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Administration FaD. Proposed regulatory framework for modifications to artificial intelligence/machine learning (AI/ML)-based software as a medical device (SaMD)-discussion paper and request for feedback. 2019, https://www.fda.gov/media/122535/download.

- 4.Topol E. J. High-performance medicine: the convergence of human and artificial intelligence. Nature Medicine. 2019;25(1):44–56. doi: 10.1038/s41591-018-0300-7. [DOI] [PubMed] [Google Scholar]

- 5.Mavrakanas T. A., Charytan D. M. Cardiovascular complications in chronic dialysis patients. Current Opinion in Nephrology and Hypertension. 2016;25(6):536–544. doi: 10.1097/mnh.0000000000000280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Reyna-Sepúlveda F., Ponce-Escobedo A., Guevara-Charles A., et al. Outcomes and surgical complications in kidney transplantation. International Journal Organ Transplantation Medicine. 2017;8(2):78–84. [PMC free article] [PubMed] [Google Scholar]

- 7.Barbieri C., Cattinelli I., Neri L., et al. Development of an Artificial Intelligence Model to Guide themanagement of Pressure, Fluid Volume, and Dose in End-Stage Kidney Disease Patients: Proof of Concept and First Clinical Assessment. Kidney Disease. 2019;5(1):28–33. doi: 10.1159/000493479. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Niel O., Bastard P. Artificial intelligence in nephrology: core concepts, clinical applications, and perspectives. American Journal of Kidney Diseases. 2019;74(6):803–810. doi: 10.1053/j.ajkd.2019.05.020. [DOI] [PubMed] [Google Scholar]

- 9.Fedak V. Top 10 most popular AI models. 2019. https://dzone.com/articles/top-10-most-popular-ai-models.

- 10.The 10 algorithms machine learning engineers need to know. September 2019, https://www.kdnuggets.com/2016/08/10-algorithms-machine-learning-engineers.html.

- 11.Johnson K. W., Torres Soto J., Glicksberg B. S., et al. Artificial intelligence in cardiology. Journal of the American College of Cardiology. 2018;71(23):2668–2679. doi: 10.1016/j.jacc.2018.03.521. [DOI] [PubMed] [Google Scholar]

- 12.Marr B. Is artificial intelligence dangerous? 6 AI risks everyone should know about. 2018. September 2019, https://www.forbes.com/sites/bernardmarr/2018/11/19/is-artificial-intelligence-dangerous-6-ai-risks-everyone-should-know-about/

- 13.Bellazzi R., Larizza C., Magni P., Bellazzi R. Temporal data mining for the quality assessment of hemodialysis services. Artificial Intelligence in Medicine. 2005;34(1):25–39. doi: 10.1016/j.artmed.2004.07.010. [DOI] [PubMed] [Google Scholar]

- 14.Tangri N., Ansell D., Naimark D. Lack of a centre effect in UK renal units: application of an artificial neural network model. Nephrology, Dialysis, Transplantation. 2006;21(3):743–748. doi: 10.1093/ndt/gfi255. [DOI] [PubMed] [Google Scholar]

- 15.Jacob A. N., Khuder S., Malhotra N., et al. Neural network analysis to predict mortality in end-stage renal disease: application to United States Renal Data System. Nephron. Clinical Practice. 2010;116(2):c148–c158. doi: 10.1159/000315884. [DOI] [PubMed] [Google Scholar]

- 16.Saadat S., Aziz A., Ahmad H., et al. Predicting Quality of Life Changes in Hemodialysis Patients Using Machine Learning: Generation of an Early Warning System. Cureus. 2017;9(9, article e1713) doi: 10.7759/cureus.1713. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Titapiccolo J. I., Ferrario M., Cerutti S., et al. Artificial intelligence models to stratify cardiovascular risk in incident hemodialysis patients. Expert Systems with Applications. 2013;40(11):4679–4686. doi: 10.1016/j.eswa.2013.02.005. [DOI] [Google Scholar]

- 18.Hueso M., Navarro E., Sandoval D., Cruzado J. M. Progress in the Development and Challenges for the Use of Artificial Kidneys and Wearable Devices. Kidney Diseases. 2019;5(1):3–10. doi: 10.1159/000492932. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Fernández E. A., Valtuille R., Presedo J. M. R., Willshaw P. Comparison of different methods for hemodialysis evaluation by means of ROC curves: from artificial intelligence to current methods. Clinical Nephrology. 2005;64(9):205–213. doi: 10.5414/cnp64205. [DOI] [PubMed] [Google Scholar]

- 20.Gabutti L., Vadilonga D., Mombelli G., Burnier M., Marone C. Artificial neural networks improve the prediction of Kt/V, follow-up dietary protein intake and hypotension risk in haemodialysis patients. Nephrology, Dialysis, Transplantation. 2004;19(5):1204–1211. doi: 10.1093/ndt/gfh084. [DOI] [PubMed] [Google Scholar]

- 21.Nordio M., Giove S., Lorenzi S., Marchini P., Mirandoli F., Saporiti E. Projection and simulation results of an adaptive fuzzy control module for blood pressure and blood volume during hemodialysis. ASAIO Journal. 1994;40(3):M686–M690. doi: 10.1097/00002480-199407000-00086. [DOI] [PubMed] [Google Scholar]

- 22.Nordio M., Giove S., Lorenzi S., Marchini P., Saporiti E. A new approach to blood pressure and blood volume modulation during hemodialysis: an adaptive fuzzy control module. The International Journal of Artificial Organs. 2018;18(9):513–517. [PubMed] [Google Scholar]

- 23.Chiu J. S., Chong C. F., Lin Y. F., Wu C. C., Wang Y. F., Li Y. C. Applying an artificial neural network to predict total body water in hemodialysis patients. American Journal of Nephrology. 2005;25(5):507–513. doi: 10.1159/000088279. [DOI] [PubMed] [Google Scholar]

- 24.Hayes W., Allinovi M. Beyond playing games: nephrologist vs machine in pediatric dialysis prescribing. Pediatric Nephrology. 2018;33(10):1625–1627. doi: 10.1007/s00467-018-4021-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Niel O., Bastard P., Boussard C., Hogan J., Kwon T., Deschênes G. Artificial intelligence outperforms experienced nephrologists to assess dry weight in pediatric patients on chronic hemodialysis. Pediatric Nephrology. 2018;33(10):1799–1803. doi: 10.1007/s00467-018-4015-2. [DOI] [PubMed] [Google Scholar]

- 26.Escandell-Montero P., Chermisi M., Martínez-Martínez J. M., et al. Optimization of anemia treatment in hemodialysis patients via reinforcement learning. Artificial Intelligence in Medicine. 2014;62(1):47–60. doi: 10.1016/j.artmed.2014.07.004. [DOI] [PubMed] [Google Scholar]

- 27.Fuertinger D. H., Kappel F. A parameter identification technique for structured population equations modeling erythropoiesis in dialysis patients. In: Ao S. I., Douglas C., Grundfest W. S., Burgstone J., editors. World Congress on Engineering and Computer Science, Wcecs 2013, Vol Ii, vol Ao. Lecture Notes in Engineering and Computer Science. Hong Kong: Int Assoc Engineers-Iaeng; 2013. p. p. 940. [Google Scholar]

- 28.Barbieri C., Mari F., Stopper A., et al. A new machine learning approach for predicting the response to anemia treatment in a large cohort of end stage renal disease patients undergoing dialysis. Computers in Biology and Medicine. 2015;61:56–61. doi: 10.1016/j.compbiomed.2015.03.019. [DOI] [PubMed] [Google Scholar]

- 29.Barbieri C., Bolzoni E., Mari F., et al. Performance of a predictive model for long-term hemoglobin response to darbepoetin and iron administration in a large cohort of hemodialysis patients. PLoS One. 2016;11(3, article e0148938) doi: 10.1371/journal.pone.0148938. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Martin-Guerrero J. D., Camps-Valls G., Soria-Olivas E., Serrano-Lopez A. J., Perez-Ruixo J. J., Jimenez-Torres N. V. Dosage individualization of erythropoietin using a profile-dependent support vector regression. IEEE Transactions on Biomedical Engineering. 2003;50(10):1136–1142. doi: 10.1109/tbme.2003.816084. [DOI] [PubMed] [Google Scholar]

- 31.López A. J. S., Ruixo J. J. P., Torres N. V. J. Use of neural networks for dosage individualisation of erythropoietin in patients with secondary anemia to chronic renal failure. Computers in Biology and Medicine. 2003;33(4):361–373. doi: 10.1016/S0010-4825(02)00065-3. [DOI] [PubMed] [Google Scholar]

- 32.Gabutti L., Lötscher N., Bianda J., Marone C., Mombelli G., Burnier M. Would artificial neural networks implemented in clinical wards help nephrologists in predicting epoetin responsiveness? BMC Nephrology. 2006;7(1):p. 13. doi: 10.1186/1471-2369-7-13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Gaweda A. E., Jacobs A. A., Aronoff G. R., Brier M. E. Model Predictive Control of Erythropoietin Administration in the Anemia of ESRD. American Journal of Kidney Diseases. 2008;51(1):71–79. doi: 10.1053/j.ajkd.2007.10.003. [DOI] [PubMed] [Google Scholar]

- 34.Gaweda A. E., Jacobs A. A., Brier M. E. Application of fuzzy logic to predicting erythropoietic response in hemodialysis patients. The International Journal of Artificial Organs. 2018;31(12):1035–1042. doi: 10.1177/039139880803101207. [DOI] [PubMed] [Google Scholar]

- 35.Barbieri C., Molina M., Ponce P., et al. An international observational study suggests that artificial intelligence for clinical decision support optimizes anemia management in hemodialysis patients. Kidney International. 2016;90(2):422–429. doi: 10.1016/j.kint.2016.03.036. [DOI] [PubMed] [Google Scholar]

- 36.Wang Y. F., Hu T. M., Wu C. C., et al. Prediction of target range of intact parathyroid hormone in hemodialysis patients with artificial neural network. Computer Methods and Programs in Biomedicine. 2006;83(2):111–119. doi: 10.1016/j.cmpb.2006.06.001. [DOI] [PubMed] [Google Scholar]

- 37.Chen C. A., Li Y. C., Lin Y. F., Yu F. C., Huang W. H., Chiu J. S. Neuro-fuzzy technology as a predictor of parathyroid hormone level in hemodialysis patients. The Tohoku Journal of Experimental Medicine. 2007;211(1):81–87. doi: 10.1620/tjem.211.81. [DOI] [PubMed] [Google Scholar]

- 38.Bhan I., Burnett-Bowie S. A. M., Ye J., Tonelli M., Thadhani R. Clinical measures identify vitamin D deficiency in dialysis. Clinical Journal of the American Society of Nephrology. 2010;5(3):460–467. doi: 10.2215/cjn.06440909. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Rodriguez M., Salmeron M. D., Martin-Malo A., et al. A new data analysis system to quantify associations between biochemical parameters of chronic kidney disease-mineral bone disease. PLoS One. 2016;11(1, article e0146801) doi: 10.1371/journal.pone.0146801. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Nigwekar S. U., Solid C. A., Ankers E., et al. Quantifying a rare disease in administrative data: the example of calciphylaxis. Journal of General Internal Medicine. 2014;29(Suppl 3):724–731. doi: 10.1007/s11606-014-2910-1. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Chen W.-L., Kan C.-D., Lin C.-H., Chen T. A rule-based decision-making diagnosis system to evaluate arteriovenous shunt stenosis for hemodialysis treatment of patients using fuzzy petri nets. IEEE Journal of Biomedical and Health Informatics. 2014;18(2):703–713. doi: 10.1109/jbhi.2013.2279595. [DOI] [PubMed] [Google Scholar]

- 42.Bhatia G., Wagle M., Jethnani N., Bhagtani J., Chandak A. 3rd International Conference for Convergence in Technology, I2CT 2018. Institute of Electrical and Electronics Engineers Inc.; 2018. Machine learning for prediction of life of arteriovenous fistula. [DOI] [Google Scholar]

- 43.Chao P. C., Chiang P. Y., Kao Y. H., et al. A Portable,wireless sensor for health of fistula Class-Weightedsupport Machine. Sensors. 2018;18(11):p. 3854. doi: 10.3390/s18113854. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Zhang M., Hu Y., Wang T. Selection of peritoneal dialysis schemes based on multi-objective fuzzy pattern recognition. Sheng Wu Yi Xue Gong Cheng Xue Za Zhi. 2005;22(2):335–338. [PubMed] [Google Scholar]

- 45.Chen C. A., Lin S. H., Hsu Y. J., Li Y. C., Wang Y. F., Chiu J. S. Neural network modeling to stratify peritoneal membrane transporter in predialytic patients. Internal Medicine. 2006;45(9):663–664. doi: 10.2169/internalmedicine.45.1419. [DOI] [PubMed] [Google Scholar]

- 46.Tangri N., Ansell D., Naimark D. Determining factors that predict technique survival on peritoneal dialysis: application of regression and artificial neural network methods. Nephron. Clinical Practice. 2011;118(2):c93–c100. doi: 10.1159/000319988. [DOI] [PubMed] [Google Scholar]

- 47.Brito C., Esteves M., Peixoto H., Abelha A., Machado J. A data mining approach to classify serum creatinine values in patients undergoing continuous ambulatory peritoneal dialysis. Wireless Networks. 2019;2019 doi: 10.1007/s11276-018-01905-4. [DOI] [Google Scholar]

- 48.John O., Jha V. Contributions to Nephrology, vol 197. 2019. Remote patient management in peritoneal dialysis: an answer to an unmet clinical need. [DOI] [PubMed] [Google Scholar]

- 49.Zhang J., Friberg I. M., Kift-Morgan A., et al. Machine-learning algorithms define pathogen-specific local immune fingerprints in peritoneal dialysis patients with bacterial infections. Kidney International. 2017;92(1):179–191. doi: 10.1016/j.kint.2017.01.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Rodrigues M., Peixoto H., Esteves M., Machado J. Understanding stroke in dialysis and chronic kidney disease. In: Shakshuki E., editor. 8th International Conference on Emerging Ubiquitous Systems and Pervasive Networks, EUSPN 2017 and the 7th International Conference on Current and Future Trends of Information and Communication Technologies in Healthcare, ICTH 2017. Elsevier B.V.; 2017. pp. 591–596. [DOI] [Google Scholar]

- 51.Fernandez-Lozano C., Díaz M. F., Valente R. A., Pazos A. 1st International Conference on Data Science, E-Learning and Information Systems, DATA 2018. Association for Computing Machinery; 2018. A generalized linear model for cardiovascular complications prediction in PD patients. [DOI] [Google Scholar]

- 52.Sharma D., Shadabi F. An intelligent multi agent design in healthcare management system. In: Nguyen N. T., Jo G. S., Howlett R. J., Jain L. C., editors. Agent and Multi-Agent Systems: Technologies and Applications, Proceedings, vol 4953. Lecture Notes in Artificial Intelligence. Berlin, Berlin: Springer-Verlag; 2008. pp. 674–682. [DOI] [Google Scholar]

- 53.Karademirci O., Terzioğlu A. S., Yılmaz S., Tombuş Ö. Implementation of a User-Friendly, Flexible Expert System for Selecting Optimal Set of Kidney Exchange Combinations of Patients in a Transplantation Center. Transplantation Proceedings. 2015;47(5):1262–1264. doi: 10.1016/j.transproceed.2015.04.051. [DOI] [PubMed] [Google Scholar]

- 54.Simic-Ogrizovic S., Furuncic D., Lezaic V., Radivojevic D., Blagojevic R., Djukanovic L. Using ANN in selection of the most important variables in prediction of chronic renal allograft rejection progression. Transplantation Proceedings. 1999;31(1-2):p. 368. doi: 10.1016/s0041-1345(98)01665-0. [DOI] [PubMed] [Google Scholar]

- 55.Tang J., Liu R., Zhang Y. L., et al. Application of Machine-Learning Models to Predict Tacrolimus Stable Dose in Renal Transplant Recipients. Scientific Reports. 2017;7(1):p. 42192. doi: 10.1038/srep42192. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Fritsche L., Schlaefer A., Budde K., Schroeter K., Neumayer H. H. Recognition of critical situations from time series of laboratory results by case-based reasoning. Journal of the American Medical Informatics Association. 2002;9(5):520–528. doi: 10.1197/jamia.m1013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Santori G., Fontana I., Valente U. Application of an Artificial Neural Network Model to Predict Delayed Decrease of Serum Creatinine in Pediatric Patients After Kidney Transplantation. Transplantation Proceedings. 2007;39(6):1813–1819. doi: 10.1016/j.transproceed.2007.05.026. [DOI] [PubMed] [Google Scholar]

- 58.Greco R., Papalia T., Lofaro D., Maestripieri S., Mancuso D., Bonofiglio R. Decisional Trees in Renal Transplant Follow-up. Transplantation Proceedings. 2010;42(4):1134–1136. doi: 10.1016/j.transproceed.2010.03.061. [DOI] [PubMed] [Google Scholar]

- 59.Brown T. S., Elster E. A., Stevens K., et al. Bayesian modeling of pretransplant variables accurately predicts kidney graft survival. American Journal of Nephrology. 2012;36(6):561–569. doi: 10.1159/000345552. [DOI] [PubMed] [Google Scholar]

- 60.Srinivas T. R., Taber D. J., Su Z., et al. Big data, predictive analytics, and quality improvement in kidney transplantation: a proof of concept. American Journal of Transplantation. 2017;17(3):671–681. doi: 10.1111/ajt.14099. [DOI] [PubMed] [Google Scholar]

- 61.Yoo K. D., Noh J., Lee H., et al. A machine learning approach using survival statistics to predict graft survival in kidney transplant recipients: a multicenter cohort study. Scientific Reports. 2017;7(1):p. 8904. doi: 10.1038/s41598-017-08008-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Rashidi Khazaee P., Bagherzadeh J., Niazkhani Z., Pirnejad H. A dynamic model for predicting graft function in kidney recipients’ upcoming follow up visits: a clinical application of artificial neural network. International Journal of Medical Informatics. 2018;119:125–133. doi: 10.1016/j.ijmedinf.2018.09.012. [DOI] [PubMed] [Google Scholar]

- 63.Seeling W., Plischke M., Schuh C. Knowledge-based tacrolimus therapy for kidney transplant patients. Studies in Health Technology and Informatics. 2012;180:310–314. [PubMed] [Google Scholar]

- 64.Thishya K., Vattam K. K., Naushad S. M., Raju S. B., Kutala V. K. Artificial neural network model for predicting the bioavailability of tacrolimus in patients with renal transplantation. PLoS One. 2018;13(4, article e0191921) doi: 10.1371/journal.pone.0191921. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Stachowska E., Gutowska I., Strzelczak A., et al. The use of neural networks in evaluation of the direction and dynamics of changes in lipid parameters in kidney transplant patients on the Mediterranean diet. Journal of Renal Nutrition. 2006;16(2):150–159. doi: 10.1053/j.jrn.2006.01.003. [DOI] [PubMed] [Google Scholar]

- 66.Zainol M. F., Farook R. S. M., Hassan R., Halim A. H. A., Rejab M. R. A., Husin Z. A. 2019 IEEE Conference on Open Systems (ICOS) 2019. New IoT patient monitoring system for hemodialysis treatment; pp. 46–50. [DOI] [Google Scholar]

- 67.Agarwal R. Home and ambulatory blood pressure monitoring in chronic kidney disease. Current Opinion in Nephrology and Hypertension. 2009;18(6):507–512. doi: 10.1097/MNH.0b013e3283319b9d. [DOI] [PubMed] [Google Scholar]

- 68.Du Y.-C., Lim B.-Y., Ciou W.-S., Wu M.-J. Novel wearable device for blood leakage detection during hemodialysis using an array sensing patch. Sensors. 2016;16(6):p. 849. doi: 10.3390/s16060849. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 69.Wu J. X., Huang P. T., Lin C. H., Li C. M. Blood leakage detection during dialysis therapy based on fog computing with array photocell sensors and heteroassociative memory model. Health Technology Letter. 2018;5(1):38–44. doi: 10.1049/htl.2017.0091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70.Kooman J. P., Wieringa F. P., Han M., et al. Wearable health devices and personal area networks: can they improve outcomes in haemodialysis patients? Nephrology Dialysis Transplantation. 2020;35(Supplement_2):ii43–ii50. doi: 10.1093/ndt/gfaa015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 71.Mario B., Walpola H., Kisal R., De Silva S. Kidney transplant aftercare with IOT medical wearables. 2017. https://www.researchgate.net/publication/327653042_Kidney_Transplant_aftercare_with_IOT_Medical_Wearables?channel=doi&linkId=5b9bd8a3299bf13e603155e6&showFulltext=true.

- 72.Castelvecchi D. Can we open the black box of AI? Nature News. 2016;538(7623):20–23. doi: 10.1038/538020a. [DOI] [PubMed] [Google Scholar]

- 73.Yang C., Kong G., Wang L., Zhang L., Zhao M. H. Big data in nephrology: Are we ready for the change? Nephrology. 2019;24(11):1097–1102. doi: 10.1111/nep.13636. [DOI] [PubMed] [Google Scholar]

- 74.Raghavan S. R., Ladik V., Meyer K. B. Developing decision support for dialysis treatment of chronic kidney failure. IEEE Transactions on Information Technology in Biomedicine. 2005;9(2):229–238. doi: 10.1109/TITB.2005.847133. [DOI] [PubMed] [Google Scholar]

- 75.Usvyat L., Dalrymple L. S., Maddux F. W. Using Technology to Inform and Deliver Precise Personalized Care to Patients With End-Stage Kidney Disease. Seminars in Nephrology. 2018;38(4):418–425. doi: 10.1016/j.semnephrol.2018.05.011. [DOI] [PubMed] [Google Scholar]

- 76.Guh J. Y., Yang C. Y., Yang J. M., Chen L. M., Lai Y. H. Prediction of equilibrated postdialysis BUN by an artificial neural network in high-efficiency hemodialysis. American Journal of Kidney Diseases. 1998;31(4):638–646. doi: 10.1053/ajkd.1998.v31.pm9531180. [DOI] [PubMed] [Google Scholar]

- 77.Akl A. I., Sobh M. A., Enab Y. M., Tattersall J. Artificial intelligence: A new approach for prescription and monitoring of hemodialysis therapy. American Journal of Kidney Diseases. 2001;38(6):1277–1283. doi: 10.1053/ajkd.2001.29225. [DOI] [PubMed] [Google Scholar]

- 78. Goldfarb-Rumyantzev A., Schwenk M. H., Liu S., Charytan C., Spinowitz B. S. Prediction of single-pool Kt/v based on clinical and hemodialysis variables using multilinear regression, tree-based modeling, and artificial neural networks. Artificial Organs. 2003;27(6):544–554. doi: 10.1046/j.1525-1594.2003.07001.x.

- 79. Mancini E., Mambelli E., Irpinia M., et al. Prevention of dialysis hypotension episodes using fuzzy logic control system. Nephrology, Dialysis, Transplantation. 2007;22(5):1420–1427. doi: 10.1093/ndt/gfl799.

- 80. Cadena M., Pérez-Grovas H., Flores P., et al. Method to observe hemodynamic and metabolic changes during hemodiafiltration therapy with exercise. Conference Proceedings: Annual International Conference of the IEEE Engineering in Medicine and Biology Society. 2010;2010:1206–1209. doi: 10.1109/iembs.2010.5625945.

- 81. Azar A. T., Wahba K. M. Artificial neural network for prediction of equilibrated dialysis dose without intradialytic sample. Saudi Journal of Kidney Diseases and Transplantation. 2011;22(4):705–711.

- 82. Brier M. E., Gaweda A. E. Artificial intelligence for optimal anemia management in end-stage renal disease. Kidney International. 2016;90(2):259–261. doi: 10.1016/j.kint.2016.05.018.

- 83. Bucalo M. L., Barbieri C., Roca S., et al. El modelo de control de anemia: ¿ayuda al nefrólogo en la decisión terapéutica para el manejo de la anemia? Nefrología. 2018;38(5):491–502. doi: 10.1016/j.nefro.2018.03.004.

- 84. Decruyenaere A., Decruyenaere P., Peeters P., Vermassen F., Dhaene T., Couckuyt I. Prediction of delayed graft function after kidney transplantation: comparison between logistic regression and machine learning methods. BMC Medical Informatics and Decision Making. 2015;15(1):83. doi: 10.1186/s12911-015-0206-y.

- 85. Gallon L., Mathew J. M., Bontha S. V., et al. Intragraft molecular pathways associated with tolerance induction in renal transplantation. Journal of the American Society of Nephrology. 2018;29(2):423–433. doi: 10.1681/asn.2017030348.

- 86. Jia L., Jia R., Li Y., Li X., Jia Q., Zhang H. LCK as a Potential Therapeutic Target for Acute Rejection after Kidney Transplantation: A Bioinformatics Clue. Journal of Immunology Research. 2018;2018:6451299. doi: 10.1155/2018/6451298.

- 87. Niel O., Bastard P. Artificial intelligence improves estimation of tacrolimus area under the concentration over time curve in renal transplant recipients. Transplant International. 2018;31(8):940–941. doi: 10.1111/tri.13271.

- 88. Gabutti L., Burnier M., Mombelli G., Malé F., Pellegrini L., Marone C. Usefulness of artificial neural networks to predict follow-up dietary protein intake in hemodialysis patients. Kidney International. 2004;66(1):399–407. doi: 10.1111/j.1523-1755.2004.00744.x.

- 89. Brier M. E., Gaweda A. E., Aronoff G. R. Personalized Anemia Management and Precision Medicine in ESA and Iron Pharmacology in End-Stage Kidney Disease. Seminars in Nephrology. 2018;38(4):410–417. doi: 10.1016/j.semnephrol.2018.05.010.

Supplementary Materials

Supplemental Table 1: short description and results of the studies involving AI in HD. Supplemental Table 2: short description and results of the studies involving AI in PD. Supplemental Table 3: short description and results of the studies involving AI in KT.