Abstract

Neuroimaging studies show that ventral face-selective regions, including the fusiform face area (FFA) and occipital face area (OFA), preferentially respond to faces presented in the contralateral visual field (VF). In the current study we measured the VF response of the face-selective posterior superior temporal sulcus (pSTS). Across 3 functional magnetic resonance imaging experiments, participants viewed face videos presented in different parts of the VF. Consistent with prior results, we observed a contralateral VF bias in bilateral FFA, right OFA (rOFA), and bilateral human motion-selective area MT+. Intriguingly, this contralateral VF bias was absent in the bilateral pSTS. We then delivered transcranial magnetic stimulation (TMS) over right pSTS (rpSTS) and rOFA, while participants matched facial expressions in both hemifields. TMS delivered over the rpSTS disrupted performance in both hemifields, but TMS delivered over the rOFA disrupted performance in the contralateral hemifield only. These converging results demonstrate that the contralateral bias for faces observed in ventral face-selective areas is absent in the pSTS. This difference in VF response is consistent with face processing models proposing 2 functionally distinct pathways. It further suggests that these models should account for differences in interhemispheric connections between the face-selective areas across these 2 pathways.

Keywords: amygdala, face processing, fusiform face area (FFA), occipital face area (OFA)

Introduction

Neuroimaging studies have identified multiple face-selective areas across the human brain. These include the fusiform face area (FFA) (Kanwisher et al., 1997; McCarthy et al., 1997), occipital face area (OFA) (Gauthier et al., 2000), and posterior superior temporal sulcus (pSTS) (Puce et al., 1998; Phillips et al., 1997). Models of face perception (Haxby et al., 2000; Calder and Young, 2005) propose that these areas are components in 2 separate and functionally distinct neural pathways: a ventral pathway specialized for recognizing facial identity (that includes the FFA), and a lateral pathway specialized for recognizing facial expression (that includes the pSTS). While these pathways perform different cognitive functions, both are thought to begin in the OFA, the most posterior face-selective area in the human brain. Alternative models have proposed different cortico-cortical connections for the face-selective pSTS. One theory proposes that the pSTS has anatomical and functional connections with the motion-selective area human MT+ (hMT+), which are independent of the OFA (O’Toole et al., 2002; Gschwind et al., 2012; Pitcher et al., 2014; Duchaine and Yovel, 2015). In the current study, we sought to further investigate the functional connections of the pSTS using functional magnetic resonance imaging (fMRI) and transcranial magnetic stimulation (TMS).

Our recent neuropsychological and combined TMS/fMRI studies suggest that the rpSTS is functionally connected to brain areas other than the right occipital face area (rOFA) and right fusiform face area (rFFA) (Rezlescu et al., 2012; Pitcher et al., 2014). These studies demonstrated that disruption of the rFFA and rOFA did not reduce the neural response to moving faces in the rpSTS, suggesting it is functionally connected to other brain areas. However, these studies did not investigate which brain areas may be functionally connected with the rpSTS. As suggested previously, hMT+ may have functional connections with the rpSTS (O’Toole et al., 2002), but alternate potential candidate areas are also plausible, notably face-selective areas in the contralateral hemisphere. For example, a recent study demonstrated that TMS delivered over the rpSTS and lpSTS impaired a facial expression recognition task (Sliwinska and Pitcher, 2018). This suggests that face-selective areas in both hemispheres are necessary for optimal task performance.

In the present study, we investigated the visual field (VF) responses to faces in face- and motion-selective areas to better understand the functional connections of the rpSTS. Prior evidence has shown that the FFA and OFA exhibit a greater response to faces presented in the contralateral compared to the ipsilateral VF (Hemond et al., 2007; Chan et al., 2010; Kay et al., 2015). However, the VF responses to faces in the pSTS have not been established. If, like the FFA and OFA, the pSTS shows a greater response to faces presented in the contralateral VF, this would suggest that the dominant functional inputs to the pSTS come from brain areas in the ipsilateral hemisphere. If, however, the pSTS responds to faces in the ipsilateral VF to a greater extent than the FFA and OFA, then the pSTS is likely to have greater functional connectivity with the contralateral hemisphere.

We used fMRI to measure the neural response evoked by short videos of faces presented in the 4 quadrants of the VF in face-selective areas (Experiment 1) and in hMT+ (Experiment 2). In Experiment 3, we increased the size of the stimulus videos and presented them in the 2 visual hemifields. This was done to increase the size of the neural responses across face-selective regions. Finally, in Experiment 4, we used TMS to investigate if VF responses were reflected in behavior. TMS was delivered over the rOFA, rpSTS, or the vertex control site, while participants performed a behavioral facial expression recognition task in the 2 visual hemifields. Our results were consistent across all experiments; namely, the bilateral FFA, rOFA, and bilateral hMT+ all exhibited a greater response to faces presented in the contralateral than ipsilateral VF (we were unable to functionally identify the left OFA in a sufficient number of participants). By contrast, the bilateral pSTS showed no preference for faces presented in any part of the VF. This same pattern was observed in Experiment 4: TMS delivered over the rpSTS disrupted task performance in both hemifields, but TMS delivered over the rOFA disrupted performance in the contralateral hemifield only. Our results demonstrate a functional difference in the interhemispheric connectivity between face-selective areas on the ventral and lateral brain surfaces.

Materials and Methods

Participants

In Experiments 1–3, a total of 23 right-handed participants (13 females, 10 males) with normal, or corrected-to-normal, vision gave informed consent as directed by the National Institute of Mental Health Institutional Review Board. A total of 18 participants were tested in Experiment 1, 13 in Experiment 2, and 18 in Experiment 3. Thirteen of the participants took part in all the fMRI experiments (1–3). In Experiment 4, 14 right-handed participants (8 females, 6 males) with normal, or corrected-to-normal, vision gave informed consent as directed by the ethics committee at the University of York.

Stimuli

Regions-of-Interest Localizer Stimuli

In Experiments 1, 3, and 4, face-selective regions-of-interest (ROIs) were identified using 3-s video clips of faces and objects. These videos were used in previous fMRI studies of face perception (Pitcher et al., 2011a, 2014, 2017). Videos of faces were filmed on a black background and framed close-up to reveal only the faces of 7 children as they danced or played with toys or with adults (who were out of frame). Fifteen different moving objects were selected that minimized any suggestion of animacy of the object itself or of a hidden actor moving the object. Stimuli were presented in categorical blocks and, within each block, were randomly selected from the entire set for that stimulus category. This meant that the same actor or object could appear within the same block. The order of repeats was randomized and happened on average once per block. Participants were instructed to watch the movies and to detect when the subject of the video was repeated (one-back task). Repeats occurred randomly at least 2 times per run.

In Experiment 2, hMT+ was identified using a motion localizer. This localizer used an on/off block design to identify parts of the brain that respond more strongly to coherent dot motion than random dot motion. Stimuli were presented in 12 alternating blocks of coherent and random motion (11.43 s each). In both conditions, 150 white dots (dot diameter: 0.04 degrees; speed: 5.0 degrees/s) appeared in a circular aperture (diameter: 9 degrees). During blocks of coherent motion, dots changed their coherent direction every second to avoid adaptation to any maintained direction of motion. In the random-motion condition, the dots also changed direction every second, but the changes were not co-ordinated across dots, which generated the appearance of random motion. Participants were instructed to focus on a red fixation dot presented at the center of the screen. hMT+ was identified using a contrast of activation evoked by coherent dot motion greater than that evoked by random dot motion (noise).

fMRI Face VF Mapping Stimuli (Experiments 1–3)

VF responses in face-selective regions were mapped using 2-s video clips of dynamic faces making one of 4 different facial expressions: happy, fear, disgust, and neutral air-puff. These faces were used in a previous fMRI study of face perception (van der Gaag et al., 2007). Happy expressions were recorded when actors laughed spontaneously at jokes, whereas the fearful and disgusted expressions were posed by the actors. The neutral, air-puff condition consisted of the actors blowing out their cheeks to produce movement but expressing no emotion. Both male and female actors were used. Videos were filmed against a gray background, and the actors limited their head movements. In Experiments 1 and 2, videos were presented at 3 by 3 degrees of visual angle and were shown centered in the 4 quadrants of the VF at a distance of 5 degrees from fixation to the edge of the stimulus (Kravitz et al., 2010). Experiment 3 followed the same procedure as Experiments 1 and 2, except that faces were presented in the contralateral and ipsilateral visual hemifields (see Figure 1). Videos were presented at 5 by 5 degrees of visual angle and shown at a distance of 5 degrees from fixation to the edge of the stimulus. The size of the videos was increased in order to increase the neural response recorded in face-selective areas.

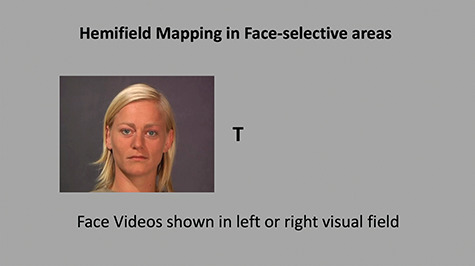

Figure 1.

Static image taken from the hemifield VF mapping stimulus used in Experiment 3. Actors displaying different emotions (happy, fear, disgust, neutral air-puff) were shown in the 2 hemifields of the VF. Participants maintained fixation by detecting the presence of either a T or an L (shown upright or inverted) at fixation and were informed of their performance at the end of each block. Runs in which the participant failed to reach an accuracy of at least 75% correct were excluded from further analysis.

TMS Face VF Mapping Stimuli (Experiment 4)

Face stimuli were 6 female models (C, MF, MO, NR, PF, and SW) from Ekman and Friesen’s (1976) facial affect series expressing 1 of 6 emotions: happy, sad, surprise, fear, disgust, and anger. Each grayscale picture was cropped with the same contour using Adobe Photoshop to cover the hair, ears, and neck.

Procedure

Brain Imaging Acquisition and Analysis

Participants were scanned using research-dedicated GE 3-Tesla scanners at the National Institutes of Health (Experiments 1–3) and the University of York (Experiment 4). In Experiments 1 and 2, whole brain images were acquired using an 8-channel head coil (36 slices, 3 × 3 × 3 mm, 0.6 mm interslice gap, TR = 2 s, TE = 30 ms). In Experiment 3, whole brain images were acquired using a 32-channel head coil (36 slices, 3 × 3 × 3 mm, 0.6 mm interslice gap, TR = 2 s, TE = 30 ms). In Experiment 4, whole brain images were acquired using a 12-channel head coil (32 slices, 3 × 3 × 3 mm, 0.6 mm interslice gap, TR = 3 s, TE = 30 ms). Slices were aligned with the anterior/posterior commissures. In addition, a high-resolution T1-weighted MPRAGE anatomical scan (T1-weighted FLASH, 1 × 1 × 1 mm resolution) was acquired to anatomically localize functional activations.

Functional MRI data were analyzed using AFNI (http://afni.nimh.nih.gov/afni). Data from the first 4 TRs from each run were discarded. The remaining images were slice-time corrected and realigned to the third volume of the first functional run and to the corresponding anatomical scan. The volume-registered data were spatially smoothed with a 5-mm full-width-half-maximum Gaussian kernel. Signal intensity was normalized to the mean signal value within each run and multiplied by 100 so that the data represented percent signal change from the mean signal value before analysis.
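The scaling step described above can be illustrated with a minimal NumPy sketch. It is not the AFNI pipeline itself: the function name `to_percent_signal` and the synthetic data are assumptions made purely for illustration. The run length of 204 volumes follows from a 408-s run at TR = 2 s, so 200 volumes remain once the first 4 TRs are discarded.

```python
import numpy as np

def to_percent_signal(run):
    """Scale a (time, voxels) run so that each voxel's mean equals 100.

    After scaling, a value of 101 corresponds to a 1% signal increase
    relative to that voxel's mean within the run.
    """
    run = np.asarray(run, dtype=float)
    voxel_mean = run.mean(axis=0, keepdims=True)
    return 100.0 * run / voxel_mean

# Synthetic data (assumption): 204 volumes, 3 voxels, arbitrary scanner units.
rng = np.random.default_rng(0)
raw = 1000.0 + rng.normal(0.0, 10.0, size=(204, 3))

kept = raw[4:]                    # discard the first 4 TRs of the run
scaled = to_percent_signal(kept)  # now expressed relative to a mean of 100
```

Scaling each run separately, as the text describes, means that slow differences in baseline intensity between runs do not leak into the response estimates.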

A general linear model (GLM) was established by convolving the standard hemodynamic response function with the regressors of interest (4 visual quadrants in Experiments 1 and 2; 2 visual hemifields in Experiment 3). Regressors of no interest (e.g., 6 head movement parameters obtained during volume registration and AFNI’s baseline estimates) were also included in the GLM.
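As a rough illustration of how such a GLM is assembled, the sketch below convolves block regressors with a hemodynamic response function and fits them by least squares. Several things here are assumptions and not the study's settings: the gamma-shaped HRF is a generic stand-in for AFNI's response model, the block onsets are hypothetical, only 2 conditions are shown where the study used 4, and the data are simulated.

```python
import numpy as np

TR = 2.0        # seconds, as in Experiments 1-3
N_VOLS = 200    # volumes per run after discarding the first 4 TRs
BLOCK_LEN = 8   # a 16-s block spans 8 TRs

def hrf(tr, duration=32.0):
    """Generic gamma-shaped HRF peaking near 5 s (illustrative assumption)."""
    t = np.arange(0.0, duration, tr)
    h = t**5 * np.exp(-t)
    return h / h.sum()

def make_regressor(onsets, n_vols, block_len, tr):
    """Boxcar for one condition convolved with the HRF."""
    box = np.zeros(n_vols)
    for onset in onsets:
        box[onset:onset + block_len] = 1.0
    return np.convolve(box, hrf(tr))[:n_vols]

# Hypothetical block onsets (in volumes) for two conditions.
onsets = {"contra": [10, 60, 110, 160], "ipsi": [35, 85, 135, 185]}
columns = [make_regressor(o, N_VOLS, BLOCK_LEN, TR) for o in onsets.values()]
X = np.column_stack(columns + [np.ones(N_VOLS)])  # last column: baseline

# Simulated voxel: stronger contralateral response on a baseline of 100.
true_beta = np.array([1.5, 0.5, 100.0])
rng = np.random.default_rng(1)
y = X @ true_beta + rng.normal(0.0, 0.1, N_VOLS)

beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
```

In a full analysis the design matrix would also carry the regressors of no interest mentioned above, such as the 6 head movement parameters.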

Face-selective ROIs (Experiments 1, 3, and 4) were identified for each participant using a contrast of greater activation evoked by dynamic faces than that evoked by dynamic objects, calculating significance maps of the brain using an uncorrected statistical threshold of P = 0.001. hMT+ (Experiment 2) was identified for each participant using a contrast of greater activation evoked by coherent motion than by random motion using an uncorrected statistical threshold of P = 0.0001. Within each functionally defined ROI, we then calculated the magnitude of response (percent signal change from a fixation baseline) for the VF mapping data in each quadrant (Experiments 1 and 2) or each hemifield (Experiment 3).

TMS stimulation and site localization

TMS was delivered at 60% of maximal stimulator output, using a Magstim Super Rapid Stimulator (Magstim, UK) and a 50 mm figure-8 coil, with the coil handle pointing upwards and parallel to the midline. A single intensity was used based on previous TMS studies of the same brain areas (Pitcher et al., 2008; Pitcher, 2014). Stimuli were presented while double-pulse TMS was delivered over the target site at latencies of 60 and 100 ms after onset of the probe stimulus. These latencies were chosen to cover the most likely times of rOFA and rpSTS involvement in facial expression recognition (Pitcher, 2014).

TMS sites were individually identified in each participant using the Brainsight TMS–MRI co-registration system, utilizing individual high-resolution MRI scans for each participant. The rOFA and rpSTS were localized by overlaying individual activation maps from the fMRI localizer task onto the structural scan, and the proper coil locations were marked on each participant’s head. The voxel exhibiting the peak activation in each of the functionally defined regions was used as the target.

Experiment 1—Responses to Faces in the 4 Quadrants of the VF in Face-Selective Areas

In Experiment 1, participants fixated the center of the screen while 2-s video clips of actors performing different facial expressions were shown in the 4 quadrants of the VF. To ensure that participants maintained fixation, they were required to detect the presence of an upright or inverted letter (either a T or an L) at the center of the screen. Letters (0.6° in size) were presented at fixation for 250 ms in random order and in different orientations at 4 Hz (Kastner et al., 1999). Participants were instructed to respond when the target letter (either T or L) was shown; this occurred approximately 25% of the time. The target letter (T or L) was alternated and balanced across participants. We informed the participants that the target detection task was the aim of the experiment, and we discarded any runs in which the participant scored less than 75% correct.

VF mapping images were acquired over 6 blocked-design functional runs lasting 408 s each. Each functional run contained sixteen 16-s blocks during which 8 videos of 8 different actors performing the same facial expression (happy, fear, disgust, and neutral air-puff) were presented in 1 of the 4 quadrants of the VF. The order was pseudo-randomized such that each quadrant appeared once every 4 blocks but, within each of these blocks of 4, the quadrant order was randomized. After the VF mapping blocks were completed, participants then viewed 4 blocked-design functional localizer runs lasting 234 s each, to identify the face-selective ROIs. Finally, we collected a high-resolution anatomical scan for each participant.
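The pseudo-randomization constraint described above (each quadrant once in every set of 4 blocks, with a random order inside each set) can be sketched as follows. The quadrant labels and the function name `block_order` are illustrative assumptions; the presentation software used in the study is not specified here.

```python
import random

# Labels are hypothetical names for the 4 VF quadrants.
QUADRANTS = ["upper-left", "upper-right", "lower-left", "lower-right"]

def block_order(n_blocks=16, seed=None):
    """Return a quadrant sequence in which every consecutive set of 4
    blocks contains each quadrant exactly once, in random order."""
    rng = random.Random(seed)
    order = []
    for _ in range(n_blocks // len(QUADRANTS)):
        cycle = QUADRANTS[:]   # copy so the master list is not reordered
        rng.shuffle(cycle)
        order.extend(cycle)
    return order

order = block_order(seed=0)   # 16 blocks, as in one functional run
```

This keeps the 4 quadrants evenly spread through the run, so slow signal drift affects each condition to a similar degree.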

Experiment 2—Responses to Faces in the 4 Quadrants of the VF in hMT+

Experiment 2 followed the same design as Experiment 1, except for the following differences. VF mapping images were acquired over 4 blocked-design functional runs lasting 408 s each. After the VF mapping blocks were completed participants viewed 2 blocked-design functional runs lasting 288 s each, to functionally localize the motion-selective region hMT+. During the motion localizer blocks, participants were instructed to focus on a red dot at the center of the screen.

Experiment 3—Responses to Faces in the 2 Hemifields of the VF in Face-Selective Areas

Participants fixated the center of the screen while 2-s video clips of actors performing different facial expressions were shown in the 2 hemifields of the VF. VF mapping images were acquired over 6 blocked-design functional runs lasting 408 s each. Each functional run contained sixteen 16-s blocks during which 8 videos of 8 different actors performing the same facial expression (happy, fear, disgust, and neutral air-puff) were presented in 1 of the 2 hemifields. Eight blocks were shown in each hemifield and the order in which they appeared was randomized. After the VF mapping blocks were completed, participants completed 6 blocked-design functional runs lasting 234 s each to functionally localize the face-selective ROIs.

Experiment 4—Facial Expression Recognition Task in the 2 Hemifields While TMS is Delivered Over the rOFA and rpSTS

Double-pulse TMS was delivered over the rOFA, rpSTS, and vertex, while participants performed a delayed match-to-sample facial expression recognition task. The vertex condition served as a control for non-specific effects of TMS. Figure 2 displays the trial procedure. Participants sat 57 cm from the monitor with their heads stabilized in a chin rest and indicated, by a right-hand key press, whether the sample face showed the same facial expression as the match face. During each block, facial expression stimuli were presented randomly in 1 of the 2 visual hemifields. Stimuli were presented at a size of 5 × 8 degrees of visual angle and 5 degrees of visual angle from fixation to the inside edge of the stimuli.
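For readers who want to relate these visual angles to on-screen distances, the following sketch converts an eccentricity in degrees to a horizontal offset at the 57 cm viewing distance. It assumes a flat screen perpendicular to the line of sight and assumes that the 5-degree dimension of the stimulus is its width; the function name `offset_cm` is hypothetical.

```python
import math

def offset_cm(angle_deg, distance_cm=57.0):
    """Distance on a flat screen from fixation to a point at the given
    eccentricity, for an observer seated distance_cm away."""
    return distance_cm * math.tan(math.radians(angle_deg))

# Inner edge of the stimulus sits 5 degrees from fixation.
inner_edge_cm = offset_cm(5.0)
# Outer edge, assuming the stimulus is 5 degrees wide (assumption).
outer_edge_cm = offset_cm(5.0 + 5.0)
width_cm = outer_edge_cm - inner_edge_cm
```

At 57 cm, 1 degree of visual angle near fixation corresponds to very nearly 1 cm on the screen, which is why this viewing distance is a common choice.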

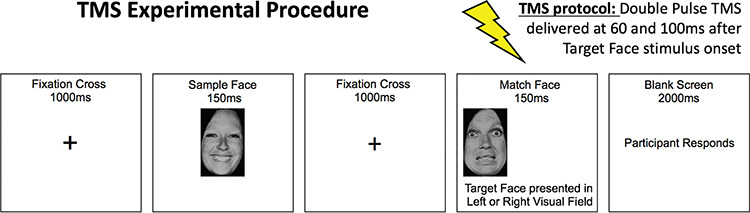

Figure 2.

The timeline of the TMS experimental procedure used in Experiment 4. Participants had to judge whether the sample face and match face had the same facial expression.

Participants were instructed to maintain fixation at the center of the screen where a cross was presented during each trial. Half the trials showed picture pairs with the same expression and half showed pairs with different expressions. Identity always changed between match and sample. The 6 expressions were presented an equal number of times. This task has been used in previous TMS studies of facial expression recognition (Pitcher et al., 2008; Pitcher, 2014; Sliwinska and Pitcher, 2018) as well as in neuropsychological (Garrido et al., 2009; Banissy et al., 2011) and neuroimaging studies (Germine et al., 2011). Two blocks of 72 trials were presented for each TMS site (rOFA, rpSTS, vertex). Each block consisted of 36 match trials and 36 non-match trials. Site order was balanced across participants. Within each block, the trial order was randomized. Participants were instructed to respond as quickly and as accurately as possible and were not given feedback on their performance.
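One way to build a block that satisfies these constraints (72 trials, 36 match and 36 non-match, each expression used equally often, random trial order) is sketched below. This is not the authors' experiment code. Balancing the expressions on the sample face and splitting the hemifields evenly within a block are assumptions made for illustration, and face identity is omitted.

```python
import random

EXPRESSIONS = ["happy", "sad", "surprise", "fear", "disgust", "anger"]

def make_block(seed=None):
    """Build one block of 72 trials: 36 match and 36 non-match."""
    rng = random.Random(seed)
    trials = []
    for expr in EXPRESSIONS:
        for _ in range(6):
            # Match trial: sample and match faces share an expression.
            trials.append({"sample": expr, "match": expr, "same": True})
            # Non-match trial: the match face shows a different expression.
            other = rng.choice([e for e in EXPRESSIONS if e != expr])
            trials.append({"sample": expr, "match": other, "same": False})
    # Assumption: hemifields are split evenly within the block.
    fields = ["left", "right"] * (len(trials) // 2)
    rng.shuffle(fields)
    for trial, field in zip(trials, fields):
        trial["hemifield"] = field
    rng.shuffle(trials)   # randomize trial order within the block
    return trials

block = make_block(seed=1)
```

Two such blocks per TMS site give the 144 trials per site implied by the design described above.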

Results

Experiment 1—fMRI Mapping of Faces in the 4 Quadrants of the VF in Face-Selective Areas

Using a contrast of greater activation to faces than to objects, we identified the rFFA (mean MNI co-ordinates 43, −52, −13), left fusiform face area (lFFA) (mean MNI co-ordinates −40, −54, −13), rpSTS (mean MNI co-ordinates 50, −47, 14), lpSTS (mean MNI co-ordinates −53, −51, 14), and the rOFA (mean MNI co-ordinates 41, −80, −4) in 16 of the 18 participants. Other face-selective ROIs were not present across all participants; the right amygdala was present in only 13 participants, the left amygdala in 11 participants, and the left OFA in 7 participants. Because of this issue of reduced power, only the rFFA, lFFA, right pSTS (rpSTS), left pSTS (lpSTS), and rOFA were included in the subsequent ROI analysis.

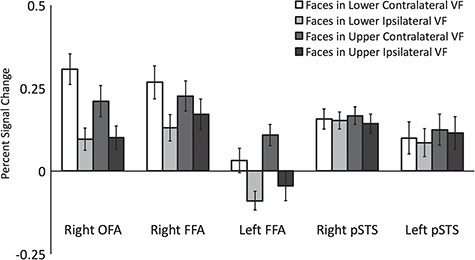

Results showed that the rFFA, lFFA, and rOFA exhibited a greater response to faces presented in the contralateral VF than in the ipsilateral VF. In addition, the rFFA exhibited a greater response to faces presented in the upper field than in the lower field. By contrast, the rpSTS and lpSTS exhibited no bias in response to faces presented in any of the 4 quadrants (see Figure 3).

Figure 3.

Percent signal change data for dynamic faces presented in the 4 quadrants of the VF in face-selective regions. Results showed that the rFFA, lFFA, and rOFA exhibited a significantly greater response to faces in the contralateral VF than in the ipsilateral VF. There were no VF biases in the rpSTS and lpSTS. Error bars show standard errors of the mean across participants.

Percent signal change data (Figure 3) were entered into a 5 (ROI: rFFA, lFFA, rpSTS, lpSTS, and rOFA) by 2 (contralateral vs. ipsilateral VF) by 2 (upper vs. lower VF) repeated-measures analysis of variance (ANOVA). We found main effects of ROI [F (4,60) = 8, P < 0.0001], contralateral/ipsilateral VF [F (1,15) = 10, P = 0.006], and of upper/lower VF [F (1,15) = 7.5, P = 0.015]; there was also a significant 3-way interaction between ROI, contralateral versus ipsilateral VF and upper versus lower VF [F (4,60) = 3, P = 0.033].

Separate ANOVAs were performed on each of the face-selective ROIs. The rFFA showed a significantly greater response to faces shown in the contralateral than the ipsilateral VF [F (1,15) = 42, P < 0.001] and to faces shown in the upper than the lower VF [F (1,15) = 8, P = 0.012], but there was no significant interaction between the factors [F (1,15) = 2.5, P = 0.15]. The lFFA showed the same pattern as the rFFA, with a significantly greater response to faces shown in the contralateral than the ipsilateral VF [F (1,15) = 27, P < 0.001] and to faces shown in the upper than the lower VF [F (1,15) = 5, P = 0.04], and again there was no significant interaction between the factors [F (1,15) = 0.8, P = 0.4]. The rOFA showed a significantly greater response to faces shown in the contralateral than the ipsilateral VF [F (1,15) = 39, P < 0.001], but there was no significant difference between faces shown in the upper and lower VFs [F (1,15) = 0.2, P = 0.7] nor was there a significant interaction between the factors [F (1,15) = 1.5, P = 0.25].

By contrast, the rpSTS and lpSTS showed no significant effects of VF. The rpSTS showed no main effect of contralateral/ipsilateral VF [F (1,15) = 1, P = 0.3] or of upper/lower VF [F (1,15) = 2.6, P = 0.1] and there was no significant interaction [F (1,15) = 0.1, P = 0.9]. The lpSTS showed no main effect of contralateral/ipsilateral VF [F (1,15) = 3, P = 0.15] or of upper/lower VF [F (1,15) = 3, P = 0.2], and there was no significant interaction [F (1,15) = 0.5, P = 0.5].
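To make the per-ROI tests above more concrete, the sketch below computes the main effect of contralateral versus ipsilateral VF in a 2 by 2 repeated-measures design. It relies on a standard identity: for a within-subject factor with 2 levels, the ANOVA F statistic equals the squared paired t statistic on the per-participant condition means. The data are synthetic and the function name is hypothetical; none of the numbers correspond to the reported results.

```python
import numpy as np

def contra_ipsi_main_effect(data):
    """Main effect of the first factor in a 2 x 2 within-subject design.

    data has shape (participants, 2, 2), indexed as
    [participant, contra/ipsi, upper/lower].
    Returns the F statistic and its degrees of freedom.
    """
    data = np.asarray(data, dtype=float)
    # Per-participant contrast, averaged over upper/lower VF.
    diff = data[:, 0, :].mean(axis=1) - data[:, 1, :].mean(axis=1)
    n = diff.size
    t = diff.mean() / (diff.std(ddof=1) / np.sqrt(n))
    return t**2, (1, n - 1)

# Synthetic percent signal change for 16 participants (assumption).
rng = np.random.default_rng(2)
synthetic = rng.normal(0.5, 0.2, size=(16, 2, 2))
synthetic[:, 0, :] += 0.3   # add a contralateral bias to the simulated ROI

F, df = contra_ipsi_main_effect(synthetic)
```

With 16 participants the degrees of freedom are (1, 15), matching the form of the statistics reported for each ROI. A P value would then follow from the F distribution with those degrees of freedom.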

Experiment 2—fMRI Mapping of Faces in the 4 Quadrants of the VF in hMT+

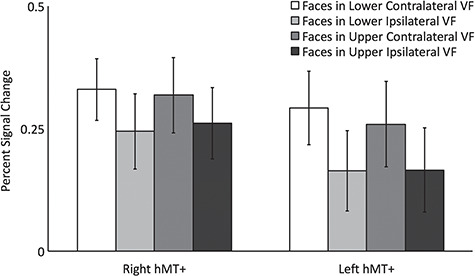

Using a contrast of activation to coherent dot motion greater than that to random dot motion, we identified the left hMT+ (mean MNI co-ordinates −43, −75, 2) and right hMT+ (mean MNI co-ordinates 42, −74, −2) in all 13 participants. As reported by others (Watson et al., 1993; Huk et al., 2002), hMT+ was localized to the lateral occipital cortex. Results of the VF mapping revealed that hMT+ in both hemispheres had a contralateral VF bias but no upper or lower VF bias (Figure 4).

Figure 4.

Percent signal change data for dynamic faces presented in the 4 quadrants of the VF in left and right hMT+. Results showed that bilateral hMT+ exhibited a significantly greater response to faces in the contralateral VF than in the ipsilateral VF. Error bars show standard errors of the mean across participants.

The percent signal change data were entered into a 2 (ROI: left vs. right hMT+) by 2 (contralateral vs. ipsilateral VF) by 2 (upper vs. lower VF) repeated-measures ANOVA. There was a significant main effect of contralateral versus ipsilateral VF [F (1,12) = 27, P < 0.0001] revealing that bilateral hMT+ responded to faces in the contralateral VF more than in the ipsilateral VF. There was no main effect of ROI [F (1,12) = 0.8, P = 0.4] or of upper vs. lower VF [F (1,12) = 0.2, P = 0.7] and no interactions approached significance (P > 0.15).

Experiment 3—fMRI Mapping of Faces in the 2 Hemifields in Face-Selective Areas

Face-selective ROIs were identified in each participant using a contrast of activation to faces greater than that to objects. To increase the likelihood of identifying face-selective regions across both hemispheres, the number of face localizer runs was increased from 4 to 6 in Experiment 3. We identified the rFFA (mean MNI co-ordinates 42, −48, −20), rOFA (mean MNI co-ordinates 42, −78, −11), rpSTS (mean MNI co-ordinates 49, −44, 6), lFFA (mean MNI co-ordinates −40, −46, −22), left posterior STS (lpSTS) (mean MNI co-ordinates −53, −49, 4), and face-selective voxels in the right amygdala (mean MNI co-ordinates 23, −6, −16) in 15 of the 18 participants. The left OFA was present in 7 participants and the left amygdala in 6 participants, so these areas were excluded from further analysis.

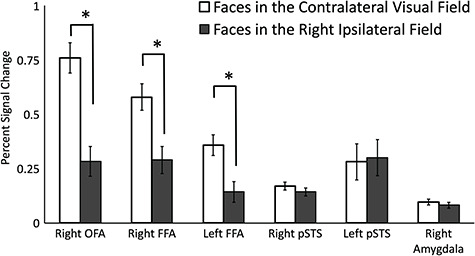

As in Experiment 1, the rOFA, rFFA, and lFFA showed a greater response to dynamic faces in the contralateral VF than in the ipsilateral VF. By contrast, the rpSTS, lpSTS, and the right amygdala showed no VF bias (see Figure 5).

Figure 5.

Percent signal change for dynamic faces presented in the contralateral and ipsilateral hemifields. Results showed that the bilateral FFA and rOFA exhibited a significantly greater response to faces in the contralateral VF than in the ipsilateral VF. There were no VF biases in the rpSTS, lpSTS, or the right amygdala. * denotes a significant difference (P < 0.0001) in post hoc tests. Error bars show standard errors of the mean across participants.

Percent signal change data (Figure 5) were entered into a 6 (ROI: rOFA, rFFA, lFFA, rpSTS, lpSTS and right amygdala) by 2 (contralateral vs. ipsilateral VF) repeated-measures analysis of variance (ANOVA). Results showed significant main effects of ROI [F (5,70) = 4, P = 0.003] and of contralateral/ipsilateral VF [F (1,14) = 8, P = 0.013], as well as a significant interaction of ROI and VF [F (5,70) = 27, P < 0.0001]. Bonferroni corrected post hoc tests showed a significantly greater response to faces in the contralateral than ipsilateral VF in the rOFA (P < 0.0001), rFFA (P < 0.0001) and lFFA (P < 0.0001) but not in the rpSTS (P = 0.2), lpSTS (P = 0.8), or right amygdala (P = 0.4).
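The Bonferroni adjustment applied to the post hoc tests can be sketched in a few lines: each uncorrected P value is multiplied by the number of comparisons and capped at 1. The input values below are hypothetical and are not the study's uncorrected P values, which are not reported.

```python
def bonferroni(p_values):
    """Bonferroni-adjust a list of uncorrected P values."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

# Hypothetical uncorrected P values for 3 comparisons (assumption).
raw_p = [0.001, 0.02, 0.5]
adjusted = bonferroni(raw_p)
significant = [p < 0.05 for p in adjusted]
```

With 6 ROIs, as in this experiment, each uncorrected P value would be multiplied by 6 under this scheme, assuming one contralateral versus ipsilateral comparison per ROI.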

Experiment 4—TMS Mapping of Faces in the 2 Hemifields in the rOFA and rpSTS

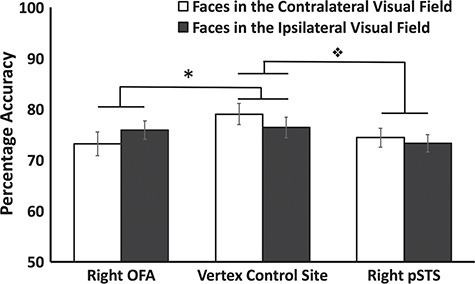

In Experiment 4, TMS was delivered over the rOFA, rpSTS, and the vertex while participants performed a delayed match-to-sample facial expression recognition task in each of the 2 visual hemifields. This was done to investigate whether the differences in the VF responses in the rOFA and rpSTS (observed in Experiments 1 and 3) are behaviorally relevant. The vertex, a point on the top of the head, acted as a control site for the non-specific effects of TMS.

Results showed that participants performed more accurately when faces were presented in the left VF than the right VF when TMS was delivered over the vertex (see Figure 6). This is consistent with prior results showing a left VF advantage for behavioral face perception tasks (Sackeim et al., 1978; Young et al., 1985). TMS delivered over the rOFA selectively disrupted task accuracy when faces were presented in the contralateral VF but had no effect on faces presented in the ipsilateral VF. Presumably this is because ipsilateral VF faces were preferentially processed by face-selective areas in the left hemisphere. By contrast, TMS delivered over the rpSTS disrupted task accuracy when faces were presented in both VFs.

Figure 6.

Mean accuracy performance for the expression recognition task when TMS was delivered over the rOFA, rpSTS, and vertex control site. Results revealed that TMS delivered over the rOFA selectively impaired task performance in the contralateral VF only compared to vertex ( * denotes a significant interaction between TMS site and VF, P = 0.025). By contrast TMS delivered over the rpSTS impaired task accuracy in both VFs compared to vertex (❖ denotes a significant main effect between the rpSTS and vertex conditions, P < 0.0001). Error bars denote standard errors of the mean across participants.

Accuracy data (Figure 6) were entered into a 3 (TMS site: rOFA, rpSTS, vertex) by 2 (contralateral vs. ipsilateral VF) repeated-measures ANOVA. Results showed a significant main effect of TMS site [F (2,26) = 8.3, P = 0.002] but not of VF [F (1,13) = 0.6, P = 0.46]. Crucially, there was also a significant interaction between TMS site and VF [F (2,26) = 3.9, P = 0.032]. To further understand what was driving this significant interaction, we then performed 2 further ANOVAs that separately compared the accuracy data from the rOFA and the rpSTS to the vertex control site.

For rOFA stimulation, a 2 (TMS site: rOFA, vertex) by 2 (contralateral vs. ipsilateral VF) repeated-measures ANOVA showed a main effect of TMS site [F (1,13) = 8.1, P = 0.014] but not of VF [F (1,13) = 0.8, P = 0.82]. Crucially, there was a significant interaction between TMS site and VF [F (1,13) = 6.4, P = 0.025]. Bonferroni-corrected planned comparisons showed that TMS delivered over the rOFA impaired performance accuracy in the left VF compared to TMS delivered over the vertex (P = 0.016). No other comparisons approached significance (P > 0.35).

For rpSTS stimulation, a 2 (TMS site: rpSTS, vertex) by 2 (contralateral vs. ipsilateral VF) repeated-measures ANOVA showed main effects of TMS site [F (1,13) = 23.7, P < 0.0001] and of VF [F (1,13) = 7, P = 0.02]. However, there was no significant interaction between TMS site and VF [F (1,13) = 0.8, P = 0.8]. The main effect of TMS site, together with the absence of an interaction, indicates that TMS delivered over the rpSTS impaired accuracy in both VFs relative to vertex stimulation. The main effect of VF is consistent with the left VF advantage for face discrimination we observed in the vertex condition and with the left VF advantage for face recognition reported in prior behavioral studies (Sackeim et al., 1978; Young et al., 1985).

A 3 (TMS site) by 2 (VF) repeated-measures ANOVA on the RT data showed no main effects of TMS site (P = 0.5) or VF (P = 0.28), and there was no significant interaction (P = 0.15).

Discussion

In the present study we investigated the neural responses to faces presented in different parts of the VF in face-selective and motion-selective brain areas. fMRI results showed that the rFFA, lFFA, and rOFA exhibited a greater response to faces presented in the contralateral than the ipsilateral VF, a finding consistent with prior evidence (Hemond et al., 2007; Kay et al., 2015; Silson et al., 2015). This same pattern, a greater contralateral than ipsilateral response to face videos, was also observed in hMT+. By contrast, the face-selective region in the right and left pSTS did not preferentially respond to faces presented in any part of the VF. The absence of a contralateral VF bias for faces was also observed in face-selective voxels in the right amygdala. In a separate TMS experiment, we demonstrated that the difference in VF responses we observed between the rpSTS and rOFA was behaviorally relevant. TMS delivered over the rpSTS disrupted performance on a facial expression recognition task in both hemifields, while TMS delivered over the rOFA disrupted facial expression recognition in the contralateral VF only. Our results demonstrate that the contralateral VF bias observed in the bilateral FFA, rOFA, and bilateral hMT+ is absent in the bilateral pSTS.

Mapping VF responses can reveal the functional connections between brain areas. For example, non-human primate evidence shows that the parts of visual areas V1, V2, and V4 with dense anatomical interconnections also represent the same part of the VF (Gattass et al., 1997). A functional connection between the OFA and the FFA is consistent with both areas exhibiting a contralateral VF bias. By contrast, if the OFA and FFA provided the sole functional input to pSTS, then the left and right pSTS would exhibit the same contralateral bias, which they did not. Our results show that there is no difference between the response to faces shown in the left and right VF in the pSTS.

This difference in VF response between face-selective areas ventrally (FFA and OFA) and laterally (pSTS) in the brain is also consistent with models proposing 2 functionally distinct face pathways (Bruce and Young, 1986; Haxby et al., 2000; Calder and Young, 2005; Pitcher et al., 2011b; Duchaine and Yovel, 2015). The ventral pathway, which includes the FFA, preferentially responds to invariant facial aspects, such as individual identity (Grill-Spector et al., 2004), whereas the lateral pathway, which includes the pSTS, preferentially responds to changeable facial aspects, such as emotional expression and eye-gaze direction (Hoffman and Haxby, 2000). The differences in VF response we observed suggest that the interhemispheric connections between face-selective areas differ between the ventral and lateral brain surfaces. Specifically, they suggest that the interhemispheric connections between the bilateral pSTS are greater than those between the bilateral FFA. Future models of face perception should account for differences in the interhemispheric connections between face-selective areas.

Neuroimaging studies have shown that the pSTS exhibits a greater response to moving than to static faces (Puce et al., 1998; LaBar et al., 2003; Fox et al., 2009; Schultz and Pilz, 2009; Pitcher et al., 2011a, 2019), while the FFA and OFA show little, or no, preference for dynamic over static faces. This preferential response to motion indicates that the pSTS may be cortically connected to the motion-selective area hMT+ (O’Toole et al., 2002). An anatomical connection between motion-selective areas and the STS has been shown in both humans (Gschwind et al., 2012) and macaques (Ungerleider and Desimone, 1986; Boussaoud et al., 1990). In addition, combined TMS/fMRI and neuropsychological evidence has shown that disruption of the OFA and FFA does not impair the neural response to moving faces in the pSTS (Rezlescu et al., 2012; Pitcher et al., 2014), suggesting that the pSTS has independent functional inputs for processing moving faces. The results of the current study indicate that a likely source of this functional input to the rpSTS is the face-selective areas in the left hemisphere, perhaps most notably the lpSTS. This is consistent with a recent study showing that TMS delivered over the lpSTS impairs facial expression recognition, albeit to a lesser extent than TMS delivered over the rpSTS (Sliwinska and Pitcher, 2018).

VF mapping in macaques shows that visual areas that respond to motion in the contralateral VF (MT, MST and FST) progressively represent a greater proportion of the ipsilateral VF when moving anteriorly within the STS (Desimone and Ungerleider, 1986). This is consistent with human neuroimaging studies showing that more anterior areas of hMT+ represent a greater proportion of the ipsilateral field than more posterior areas (Huk et al., 2002; Amano et al., 2009). In our study bilateral hMT+ showed a greater response to moving faces in the contralateral than the ipsilateral VF. This demonstrates that hMT+ cannot be the sole source of functional input into the pSTS, as at least some of the ipsilateral response we observed in the pSTS must come from the other hemisphere.

In humans, the anatomical and functional connections of the amygdala are also unclear, but non-human primate neuroanatomical studies have identified a pathway projecting down the STS into the amygdala (Aggleton et al., 1980; Stefanacci and Amaral, 2000, 2002). If a functional connection between the pSTS and amygdala exists, then the VF responses to faces in these regions would likely be similar. In fact, we found that the right amygdala, like the rpSTS, showed no VF bias for dynamic faces. This similarity of the VF responses in the rpSTS and amygdala suggests that the rpSTS could be a source of dynamic face input for the right amygdala, which is consistent with our previous study demonstrating that theta-burst TMS (TBS) delivered over the rpSTS reduced the fMRI response to moving faces in the right amygdala (Pitcher et al., 2017).

In sum, we investigated the VF responses to faces in face-selective and motion-selective brain areas. Consistent with prior evidence, we observed a contralateral bias in the rFFA, lFFA, and rOFA (Hemond et al., 2007; Kay et al., 2015). By contrast, we observed no such VF bias in the rpSTS, lpSTS, and face-selective voxels in the right amygdala. Our results suggest that future face perception network models should consider interhemispheric asymmetries in the functional connections of different face-selective brain areas.

Funding

Intramural Research Program of the National Institute of Mental Health (NCT01617408, ZIAMH002918); Biotechnology and Biological Sciences Research Council (BB/P006981/1 to D.P.); Simons Foundation Autism Research Initiative, United States (#392150 to D.P.).

Notes

We thank Mbemba Jabbi and Nancy Kanwisher for providing stimuli. We also thank Geena Ianni, Kelsey Holiday and Magda Sliwinska for help with data collection.

References

- Aggleton JP, Burton MJ, Passingham RE. 1980. Cortical and subcortical afferents to the amygdala of the rhesus monkey (Macaca mulatta). Brain Res. 190:347–368.

- Amano K, Wandell BA, Dumoulin SO. 2009. Visual Field Maps, Population Receptive Field Sizes, and Visual Field Coverage in the Human MT+ Complex. J Neurophysiol. 102:2704–2718.

- Banissy MJ, Garrido L, Kusnir F, Duchaine B, Walsh V, Ward J. 2011. Superior Facial Expression, But Not Identity Recognition, in Mirror-Touch Synesthesia. J Neurosci. 31:1820–1824.

- Boussaoud D, Ungerleider LG, Desimone R. 1990. Pathways for motion analysis: cortical connections of the medial superior temporal and fundus of the superior temporal visual areas in the macaque. J Comp Neurol. 296:462–495.

- Bruce V, Young A. 1986. Understanding face recognition. Br J Psychol. 77:305–327.

- Calder AJ, Young AW. 2005. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 6:641–651.

- Chan AW, Kravitz DJ, Truong S, Arizpe J, Baker CI. 2010. Cortical representations of bodies and faces are strongest in commonly experienced configurations. Nat Neurosci. 13:417–8.

- Desimone R, Ungerleider LG. 1986. Multiple visual areas in the caudal superior temporal sulcus of the macaque. J Comp Neurol. 248:164–189.

- Duchaine B, Yovel G. 2015. A Revised Neural Framework for Face Processing. Ann Rev Vis Sci. 1(1):393–416.

- Ekman P, Friesen WV. 1976. Measuring Facial Movement. Environmental Psychology and Nonverbal Behavior 1:56–75.

- Fox CJ, Iaria G, Barton J. 2009. Defining the face-processing network: optimization of the functional localizer in fMRI. Hum Brain Mapp. 30:1637–1651.

- Garrido L, Furl N, Draganski B, Weiskopf N, Stevens J, Tan GC, Driver J, Dolan RJ, Duchaine B. 2009. Voxel-based morphometry reveals reduced grey matter volume in the temporal cortex of developmental prosopagnosics. Brain. 132:3443–55.

- Gattass R, Sousa APB, Mishkin M, Ungerleider LG. 1997. Cortical projections of area V2 in the macaque. Cereb Cortex. 7:110–129.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. 2000. The fusiform “face area” is part of a network that processes faces at the individual level (vol 12, pg 499, 2000). J Cogn Neurosci. 12:912–912.

- Germine LT, Duchaine B, Nakayama K. 2011. Where cognitive development and aging meet: Face learning ability peaks after age 30. Cognition. 118:201–210.

- Grill-Spector K, Knouf N, Kanwisher N. 2004. The fusiform face area subserves face perception, not generic within-category identification. Nat Neurosci. 7:555–62.

- Gschwind M, Pourtois G, Schwartz S, Van De Ville D, Vuilleumier P. 2012. White-matter connectivity between face-responsive regions in the human brain. Cereb Cortex. 22:1564–1576.

- Haxby JV, Hoffman EA, Gobbini MI. 2000. The distributed human neural system for face perception. Trends Cogn Sci. 4:223–233.

- Hemond C, Kanwisher N, Op de Beeck H. 2007. A preference for contralateral stimuli in human object- and face-selective cortex. PLoS One. 2(6):e574.

- Hoffman EA, Haxby JV. 2000. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci. 3:80–84.

- Huk AC, Dougherty RF, Heeger DJ. 2002. Retinotopy and functional subdivision of human areas MT and MST. J Neurosci. 22:7195–7205.

- Kanwisher N, McDermott J, Chun MM. 1997. The fusiform face area: a module in human extrastriate cortex specialised for face perception. J Neurosci. 17:4302–4311.

- Kastner S, De Weerd P, Desimone R, Ungerleider LG. 1999. Mechanisms of directed attention in the human extrastriate cortex as revealed by functional MRI. Science. 282:108–111.

- Kay KN, Weiner KS, Grill-Spector K. 2015. Attention reduces spatial uncertainty in human ventral temporal cortex. Curr Biol. 25:1–6.

- Kravitz DJ, Kriegeskorte N, Baker CI. 2010. High-level visual object representations are constrained by position. Cereb Cortex. 20:2916–2925.

- LaBar KS, Crupain MJ, Voyvodic JB, McCarthy G. 2003. Dynamic perception of facial affect and identity in the human brain. Cereb Cortex. 13:1023–1033.

- McCarthy G, Puce A, Gore JC, Allison T. 1997. Face-specific processing in the human fusiform gyrus. J Cogn Neurosci. 9:605–10.

- O’Toole AJ, Roark D, Abdi H. 2002. Recognition of moving faces: a psychological and neural framework. Trends Cogn Sci. 6:261–266.

- Phillips ML, Young AW, Senior C, Brammer M, Andrew C, Calder AJ, Bullmore ET, Perrett DI, Rowland D, Williams SCR et al. 1997. A specific neural substrate for perceiving facial expressions of disgust. Nature. 389:495–498.

- Pitcher D. 2014. Discriminating facial expressions takes longer in the posterior superior temporal sulcus than in the occipital face area. J Neurosci. 34:9173–9177.

- Pitcher D, Dilks DD, Saxe RR, Triantafyllou C, Kanwisher N. 2011a. Differential selectivity for dynamic versus static information in face selective cortical regions. NeuroImage. 56:2356–2363.

- Pitcher D, Duchaine B, Walsh V. 2014. Combined TMS and fMRI reveals dissociable cortical pathways for dynamic and static face perception. Curr Biol. 24:2066–2070.

- Pitcher D, Garrido L, Walsh V, Duchaine B. 2008. TMS disrupts the perception and embodiment of facial expressions. J Neurosci. 28:8929–8933.

- Pitcher D, Ianni G, Ungerleider LG. 2019. A functional dissociation of face-, body- and scene-selective brain areas based on their response to moving and static stimuli. Sci Rep. 9(1):8242.

- Pitcher D, Japee S, Rauth L, Ungerleider LG. 2017. The superior temporal sulcus is causally connected to the amygdala: a combined TBS-fMRI study. J Neurosci. 37:1156–1161.

- Pitcher D, Walsh V, Duchaine B. 2011b. The role of the occipital face area in the cortical face perception network. Exp Brain Res. 209:481–493.

- Pitcher D, Walsh V, Yovel G, Duchaine B. 2007. TMS evidence for the involvement of the right occipital face area in early face processing. Curr Biol. 17:1568–1573.

- Puce A, Allison T, Bentin S, Gore JC, McCarthy G. 1998. Temporal cortex activation in humans viewing eye and mouth movements. J Neurosci. 18(6):2188–2199.

- Puce A, Allison T, McCarthy G. 1999. Electrophysiological studies of human face perception. III: effects of top-down processing on face-specific potentials. Cereb Cortex. 9:445–458.

- Rezlescu C, Pitcher D, Duchaine B. 2012. Acquired prosopagnosia with spared within-class object recognition but impaired recognition of basic-level objects. Cogn Neuropsychol. 29:325–347.

- Sackeim HA, Gur RC, Saucy MC. 1978. Emotions are expressed more intensely on the left side of the face. Science. 202:434–436.

- Schultz J, Pilz KS. 2009. Natural facial motion enhances cortical responses to faces. Exp Brain Res. 194:465–75.

- Silson EH, Chan A, Reynolds R, Kravitz D, Baker C. 2015. A retinotopic basis for the division of high-level scene processing between lateral and ventral human occipitotemporal cortex. J Neurosci. 35:11921–11935.

- Sliwinska MW, Pitcher D. 2018. TMS demonstrates that both right and left superior temporal sulci are important for facial expression recognition. NeuroImage. 183:394–400.

- Stefanacci L, Amaral DG. 2000. Topographic organization of cortical inputs to the lateral nucleus of the macaque monkey amygdala: a retrograde tracing study. J Comp Neurol. 421:52–79.

- Stefanacci L, Amaral DG. 2002. Some observations on cortical inputs to the macaque monkey amygdala: an anterograde tracing study. J Comp Neurol. 451:301–323.

- Ungerleider LG, Desimone R. 1986. Cortical connections of visual area MT in the macaque. J Comp Neurol. 248:190–222.

- van der Gaag C, Minderaa R, Keysers C. 2007. The BOLD signal in the amygdala does not differentiate between dynamic facial expressions. Soc Cogn Affect Neurosci. 2:93–103.

- Watson JD, Myers R, Frackowiak RS, Hajnal JV, Woods RP, Mazziotta JC, Shipp S, Zeki S. 1993. Area V5 of the human brain: evidence from a combined study using positron emission tomography and magnetic resonance imaging. Cereb Cortex. 3:79–94.

- Young AW, Hay DC, McWeeny KH, Ellis AW, Barry C. 1985. Familiarity decisions for faces presented to the left and right cerebral hemispheres. Brain Cogn. 4:439–450.