Abstract

To facilitate social interactions, humans need to process the responses that other people make to their actions, including eye movements that could establish joint attention. Here, we investigated the neurophysiological correlates of the processing of observed gaze responses following the participants’ own eye movement. These observed gaze responses could either establish, or fail to establish, joint attention. We implemented a gaze leading paradigm in which participants made a saccade from an on-screen face to an object, followed by the on-screen face either making a congruent or incongruent gaze shift. An N170 event-related potential was elicited by the peripherally located gaze shift stimulus. Critically, the N170 was greater for joint attention than non-joint gaze both when task-irrelevant (Experiment 1) and task-relevant (Experiment 2). These data suggest for the first time that the neurocognitive system responsible for structural encoding of face stimuli is affected by the establishment of participant-initiated joint attention.

Keywords: event-related potential, gaze leading, joint attention, N170

Introduction

Humans frequently follow others’ gaze to look at the same object. The advantages of engaging in gaze following are well understood (see Frischen et al., 2007, for a review). For example, joint attention facilitates social interactions (Striano and Rochat, 1999), supports early language development (Brooks and Meltzoff, 2015) and is a key cue to allow us to infer others’ mental states (Baron-Cohen and Cross, 1992). To establish joint attention, there is typically a gaze leader and a gaze follower. Both individuals will share overlapping mental representations as a result of their shared experience, but the neurocognitive mechanisms engaged by these two individuals to reach this unified state differ (Schilbach et al., 2010; Oberwelland et al., 2016; Mundy, 2017). In general, the field has to date learned more about how joint attention is established by the follower than about the processes engaged by the gaze leader. Recent work on gaze leading has revealed that participants who led gaze spontaneously made faster saccades back to faces that responded with joint attention (Bayliss et al., 2013). Moreover, Edwards et al. (2015) investigated the attentional mechanisms underpinning this propensity, finding that faces that follow eye gaze capture covert spatial attention. Furthermore, gaze leading evokes an implicit sense of agency over the gaze shift response (Stephenson et al., 2018). Building on these initial behavioural findings, we wanted to further elucidate the processes involved in gaze leading by investigating the neurophysiological basis of joint attention. Specifically, the aim was to explore whether the N170 event-related potential (ERP) component would be modulated when gaze leading was reciprocated with a congruent gaze shift, compared with an averted gaze shift response. This would serve as evidence that the brain rapidly detects the outcome of a joint attention bid in a way that affects perceptual processing.

The N170 ERP component is a negative-going evoked potential associated with face processing, reaching maximal amplitude over parietal-occipital sites around 170 ms after face stimulus onset (e.g. Bentin et al., 1996). The N170 is usually associated with face perception and thought to be face-sensitive as it is generally greater for faces than other objects (see Eimer, 2011; Rossion, 2014, for reviews). However, images of the eyes in particular appear to be strong drivers of the N170 (Itier et al., 2007). Moreover, previous work has demonstrated that the N170 is sensitive to the changes of observed gaze direction of a centrally presented face, particularly when comparing direct vs averted observed gaze (Conty et al., 2007; Latinus et al., 2014; Myllyneva and Hietanen, 2016). It is possible, therefore, that contextual information, such as the establishment—or not—of joint attention, could modulate this gaze-sensitive component.

Previous electrophysiological research comparing conditions where an on-screen face either followed or did not follow the participant’s gaze is limited but revealing. For example, one recent EEG study found greater alpha band suppression in infants when their gaze was followed than when it was not followed, suggesting early development of neural sensitivity to reciprocated gaze (Rayson et al., 2019). An ERP study has shown an enhanced P350 in response to an incongruently responding face (Caruana et al., 2015). In their paradigm, the response to the participant’s joint attention bid was made after the participant looked back towards the stimulus face, meaning that in the congruent condition, the face looked at the same location to which the participant had previously been looking. In the present study, we aim to assess the ERP response to an observed gaze onset that establishes a current state of joint attention. In this way, our study is, to our knowledge, the first to examine the ERP response to the detection of peripheral gaze shift responses to gaze leading to a referent object.

Our investigation of joint attention initiation necessarily requires participants to process observed gaze onset in the periphery of their visual field. Previous behavioural work has shown that gaze cueing of attention emerges reliably from faces presented in the periphery (Kingstone et al., 2000, see also Yokoyama and Takeda, 2019). Edwards et al. (2015) also showed that peripheral gaze cues affect spatial attention but the manner in which they do so is greatly influenced by context. Specifically, if the participant makes a saccade to an image of a real-world object and is followed to this object by the eyes of the peripherally presented face, then spatial attention shifts to the gazing face itself. That is, attention is shifted towards a face that follows one’s gaze: the ‘gaze leading’ effect.

This study aims to pursue this gaze leading effect at the neural level, so it is also important to understand whether we can expect a typical N170 response to the onset of observed gaze. Previous work has shown that the N170 response to peripherally presented faces can be detected, but the timecourse is delayed (e.g. Rigoulot et al., 2011). Because our paradigm involves analysing only the peripheral onset of gaze stimuli within an already-present face, we can also expect a relatively small amplitude. We propose that the N170 could be sensitive not just to the onset of observed averted gaze per se but could be modulated by the social context that the averted gaze establishes. Therefore, we hypothesised that processing gaze shifts that establish joint attention would be associated with an enhanced N170 compared with the onset of observed gaze that does not establish joint attention. To pre-empt the results, we find evidence in support of this hypothesis in both Experiments 1 and 2, observing a small N170 that is reliably larger for observed gaze shifts that follow the participant gaze compared with gaze shifts that do not establish joint attention.

Experiment 1

The present study implemented a simple simulated gaze interaction, to explore whether ERPs are modulated by gaze responses following a simple gaze leading saccade from a face to an object. In addition to the central research question regarding the N170 as a function of observed gaze context, we were also interested in potential individual differences. Edwards et al. (2015) noted a correlation between autism-like traits (measured by the autism spectrum quotient, the AQ; Baron-Cohen et al., 2001) and the gaze leading effect, with participants high on autism-like traits showing weaker effects of observed gaze on attention (see also Bayliss et al., 2005; Bayliss and Tipper, 2005, 2006). We therefore included the AQ in our study to test for any relationship between autism-like traits and neural responses in a gaze leading context.

Method

Participants

After ethical approval was obtained from the local ethics committee, 36 psychology undergraduate student participants (mean age = 19.9 years, s.d. = 1.33; 7 were men) gave written, informed consent and were granted course credits for participation. All reported normal or corrected-to-normal vision and no history of neurological disorders. One participant was excluded from analysis because the EEG signal was poor in the electrodes of interest (henceforth n = 35). There was insufficient existing literature from which to anticipate likely effect sizes to inform a power analysis. However, an a priori power analysis using a medium effect size as a predicted outcome with a power of 0.80 led to a required sample of 34 (G*Power: Faul et al., 2007).
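The required sample of 34 can be roughly reproduced with a short calculation. The sketch below is an approximation only, not the noncentral-t routine that G*Power itself uses. It assumes a two-tailed paired comparison with a medium standardised effect of 0.5 and α = 0.05; the text does not state the test family, tails or α, so these values and the function name `approx_paired_n` are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def approx_paired_n(dz, alpha=0.05, power=0.80):
    """Approximate sample size for a two-tailed paired (one-sample) t-test.

    Uses the normal approximation plus the small-sample correction
    z_alpha**2 / 2. This is an approximation, not G*Power's exact method.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_power = std_normal.inv_cdf(power)          # quantile for desired power
    n = ((z_alpha + z_power) / dz) ** 2 + z_alpha ** 2 / 2
    return math.ceil(n)


# Assumed inputs: medium effect (dz = 0.5), alpha = 0.05, power = 0.80
print(approx_paired_n(0.5))  # 34
```

Under these assumed settings the approximation agrees with the reported figure; other settings (for example a one-tailed test) would give a different number.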

Stimuli

Images of six smiling faces, three male and three female, (560 × 748 px, subtending ~12.2° visual angle) were taken from the NimStim face set (see Figure 1; Tottenham et al., 2009). Smiling faces were used to make the task engaging for participants. Each face comprised three versions: eyes looking right, looking left or straight ahead. There were eight images of everyday kitchen objects (~440 × 156 px, ~2.45°) taken from Bayliss et al. (2007). All stimuli were presented on a black background using E-Prime 2.0.

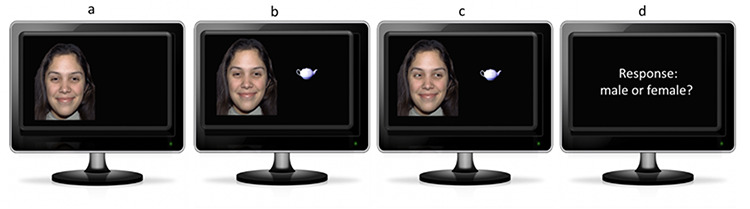

Fig. 1.

Trial sequences and examples of stimuli: (a) the participant fixates an on-screen face, displayed for 1000 ms; (b) an object appears and the participant immediately saccades to the object and is told to keep fixation there until response; (c) 800 ms after object onset, the on-screen face responds with either a congruent or incongruent gaze shift, displayed for 2500 ms and (d) the participant is prompted to report the gender of the face they just saw (instructions displayed until response).

Apparatus and materials

A 64-channel active electrode system (Brain Products GmbH) with a BrainAmp MR64 PLUS amplifier was used for EEG acquisition. Viewing distance was ~70 cm from eyes to a 61 cm monitor (resolution 1920 × 1080 px). A standard keyboard was used for participants’ manual responses. The AQ was presented using E-Prime. Participants rated how strongly they agreed or disagreed with each item (e.g. ‘I enjoy social chit-chat’) on a four-point Likert scale ranging from definitely agree to definitely disagree (Baron-Cohen et al., 2001).

Procedure

Participants were positioned in a comfortable chair in front of a computer screen 70 cm from their face. They completed six practice trials, followed by four blocks of 60 experimental trials wherein on each trial, the face’s eyes either followed participants’ gaze (joint attention) or did not follow participants’ gaze (averted gaze), with each face identity appearing equally often in both gaze conditions. Trials were presented in a new random order for each block. Therefore, there were a total of 120 trials per condition (joint attention vs averted gaze). In two of the gaze leading blocks, the faces were presented 2.5 cm to the left of the centre of the screen with the object appearing 13.5 cm to the right of the faces. In the other two blocks, faces were presented 2.5 cm to the right of centre, with the object appearing 13.5 cm to the left of the faces. Block order was counterbalanced. Finally, participants completed the AQ on the computer. Participants were given rest breaks between each block.
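To give a sense of how far into the periphery the face fell once participants fixated the object, the distances above can be converted to degrees of visual angle. The sketch below assumes that the 13.5 cm offset is measured between the centres of the face and the object, and that the line of sight to the fixated object is roughly perpendicular to the screen; neither detail is stated in the text, and the function names are illustrative.

```python
import math


def eccentricity_deg(offset_cm, distance_cm):
    """Angle between the fixation point and a point offset_cm away on the screen."""
    return math.degrees(math.atan(offset_cm / distance_cm))


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle subtended by a stimulus of size_cm centred on the line of sight."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


# Face-to-object offset of 13.5 cm viewed from 70 cm
print(round(eccentricity_deg(13.5, 70.0), 1))  # 10.9
```

On these assumptions, the gaze shift occurred roughly 11° from fixation.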

In all blocks, the face was presented looking straight ahead (i.e. with direct gaze towards the participants). This was displayed for 1000 ms, while the participants were instructed to fixate on the face. Next, the object appeared to the right or left of the face positioned in line with the line of gaze of the on-screen face. Participants were instructed to saccade from the face to the object as soon as it appeared and to keep fixating on the object until they noticed the gaze shift occur in their peripheral vision, with no further fixation instructions thereafter. About 800 ms after the onset of the object, gaze onset occurred either to follow (joint attention) or not to follow (averted gaze) the participant’s gaze towards the object. The 800 ms time frame between the object appearing and the gaze onset was informed by previous work on different time intervals between gaze leading and response ratings of relatedness (Pfeiffer et al., 2012) and a small pilot study in which participants were asked to rate which of four time durations felt most naturalistic from a choice of 400, 800, 1000 and 1400 ms, using the same stimuli setup as the current experiment.

Once the gaze response had been displayed for 2500 ms, the face and object cleared to reveal a prompt to report whether the face was male or female. Participants used the index finger of their right hand to press letter key ‘m’ for male and the index finger of their left hand to report ‘f’ for female. There was an inter-trial interval of a blank screen jittered with a random interval of 750–1250 ms following response and before the next face appeared to start the next trial (see Figure 1). Participants performed a gender identification task, so the task was orthogonal to any judgments about whether their gaze was followed or not.
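The trial structure described above can be summarised as a small scheduling sketch. This is not the E-Prime script used in the study; it is an illustrative reconstruction in which the names (`Trial`, `make_block`) are hypothetical. It assumes that the balance of face identity and gaze condition holds within each 60-trial block (6 identities × 2 gaze responses × 5 repetitions) and that the jittered interval is drawn uniformly in whole milliseconds, neither of which is stated explicitly.

```python
import random
from dataclasses import dataclass

# Durations taken from the procedure (milliseconds)
FACE_MS = 1000            # direct-gaze face before object onset
OBJECT_TO_GAZE_MS = 800   # object onset to gaze shift
GAZE_MS = 2500            # gaze response display
ITI_RANGE_MS = (750, 1250)  # jittered blank inter-trial interval


@dataclass
class Trial:
    face_id: int
    gaze: str    # "joint" or "averted"
    iti_ms: int


def make_block(n_faces=6, reps=5, rng=None):
    """Build one randomly ordered block, balanced over identity and gaze.

    Assumption: balancing is done per block, giving 6 * 2 * 5 = 60 trials.
    """
    rng = rng or random.Random()
    trials = [
        Trial(face, gaze, rng.randint(*ITI_RANGE_MS))
        for face in range(n_faces)
        for gaze in ("joint", "averted")
        for _ in range(reps)
    ]
    rng.shuffle(trials)
    return trials


block = make_block(rng=random.Random(0))
print(len(block), sum(t.gaze == "joint" for t in block))  # 60 30
```

Four such blocks give the 120 trials per gaze condition reported in the procedure.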

Before beginning the task, participants were shown examples of their typical EEG artifacts on a monitor in the testing room, including their horizontal saccades and blinks. They were informed that experimenters would be monitoring their horizontal eye movements using the EEG signal throughout the experiment. They were told that the best time to blink was between each trial (after making their gender categorisation response).

Data acquisition

Accuracy and reaction time (RT) for the gender identification task were recorded for every trial. EEG was recorded using a Brain Vision actiCAP system with 64 active electrodes. Participants wore an elastic nylon cap (10/10 system extended). One electrode was placed under the left eye to monitor vertical eye movements. The continuous EEG signal was recorded at a 500 Hz sampling rate using FCz as a reference electrode. All electrodes had connection impedance below 50 kΩ before recording commenced.

Continuous EEG data were pre-processed and analysed offline using EEGLAB (Delorme and Makeig, 2004) and ERPLAB (Lopez-Calderon and Luck, 2014). High- and low-pass half-amplitude cutoffs were set at 0.1 and 40 Hz, respectively. Noisy channels were interpolated with the spherical interpolation function from EEGLAB. Artifacts were removed in two stages. Firstly, trials containing excessive artifacts were rejected by manual inspection (2.40% of trials). Secondly, horizontal eye movements and blinks were identified using the ‘runica’ EEGLAB function for independent component analysis. The resultant scalp maps for all components for each participant were examined. Components which corresponded to eye artifacts (blinks and saccades) were removed. Continuous data were segmented into epochs of 1000 ms (from −200 to 800 ms relative to gaze onset). EEG data were then re-referenced to an average reference and averaged. The total mean number of trials per condition, out of 120, following artifact removal, was 117 for joint attention gaze shifts (range 109–120 trials) and 117 for averted gaze (range 106–120 trials).

Clusters of four electrodes were identified as the region of interest (ROI) over parietal-occipital regions in each hemisphere based on the previous research and visual inspections of the ERPs from this study (left; P5/P7/PO3/PO7, right; P6/P8/PO4/PO8). These sites are commonly associated with face processing, gaze processing and attentional processes (see Hietanen et al., 2008; Rossion and Jacques, 2008; Eimer, 2011). It is less common to include PO3/PO4 in N170 studies, but as the effects we found there were similar to the rest of the electrode sites, we included them in order to capture this topography.

ERP trials were time locked to the onset of the gaze shift. The amplitudes for ERPs were measured as the mean of all data points between 170 and 230 ms relative to the mean of all data points in the 200 ms pre-stimulus baseline. This time window was chosen based on a combination of previous research (e.g. Conty et al., 2007) and visual inspection of grand averaged and individual participant’s average ERPs (averaged across conditions, as recommended by Luck, 2014).
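The mean-amplitude measure described above amounts to two averages and a subtraction. The following sketch illustrates it for a single averaged waveform stored as a plain list of microvolt values; it is not the ERPLAB measurement routine used in the study. It assumes a 500 Hz sampling rate with the first sample at −200 ms (as in the Data acquisition section), treats the 170 to 230 ms window as inclusive of both endpoints, and excludes the 0 ms sample from the baseline; those endpoint conventions and the function names are assumptions.

```python
from statistics import fmean

SFREQ_HZ = 500         # sampling rate from the recording settings
EPOCH_START_MS = -200  # first sample of each epoch relative to gaze onset


def ms_to_index(t_ms):
    """Convert a latency in ms (relative to gaze onset) to a sample index."""
    return round((t_ms - EPOCH_START_MS) * SFREQ_HZ / 1000)


def mean_amplitude(epoch_uv, window_ms=(170, 230), baseline_ms=(-200, 0)):
    """Mean voltage in the measurement window minus the mean baseline voltage."""
    b0, b1 = ms_to_index(baseline_ms[0]), ms_to_index(baseline_ms[1])
    w0, w1 = ms_to_index(window_ms[0]), ms_to_index(window_ms[1])
    baseline = fmean(epoch_uv[b0:b1])     # -200 ms up to (not including) 0 ms
    window = fmean(epoch_uv[w0:w1 + 1])   # 170 to 230 ms inclusive
    return window - baseline


# Synthetic waveform: flat at +1 uV with a -1 uV deflection from 170 to 230 ms
epoch = [1.0] * 500
for i in range(ms_to_index(170), ms_to_index(230) + 1):
    epoch[i] = -1.0
print(mean_amplitude(epoch))  # -2.0
```

In practice this value would be computed per participant, condition and electrode, then averaged within each region of interest before entering the ANOVA.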

Results

Confidence intervals and standard errors around the means for all measures are based on 1000 bootstrap samples. Confidence intervals around effect sizes have been calculated using ESCI (Exploratory Software for Confidence Intervals; Cumming and Calin-Jageman, 2016). Note that this uses a more conservative calculation for Cohen’s d values than taking the mean difference and dividing by the standard deviation and, instead, calculates Cohen’s d by: $d = M_{\mathrm{diff}} / \sqrt{(S_1^2 + S_2^2)/2}$.
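As a concrete illustration of the effect size formula above, the sketch below computes Cohen’s d with the average of the two condition variances in the denominator. It is a minimal re-implementation for illustration rather than the ESCI software itself; the function name `cohens_d_av` and the example values are made up.

```python
from math import sqrt
from statistics import fmean, stdev


def cohens_d_av(cond_a, cond_b):
    """Cohen's d using the root mean of the two condition variances.

    d = M_diff / sqrt((S1**2 + S2**2) / 2), where S1 and S2 are the
    sample standard deviations of the two conditions.
    """
    m_diff = fmean(cond_a) - fmean(cond_b)
    s1, s2 = stdev(cond_a), stdev(cond_b)
    return m_diff / sqrt((s1 ** 2 + s2 ** 2) / 2)


# Made-up amplitudes (uV) for three hypothetical participants
joint = [1.0, 2.0, 3.0]
averted = [2.0, 3.0, 4.0]
print(cohens_d_av(joint, averted))  # -1.0
```

Because the denominator uses the condition standard deviations rather than the standard deviation of the paired differences, this d is usually smaller than the paired-differences version when the two conditions are strongly correlated.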

Behavioural data

Accuracy for identifying gender of faces approached ceiling in both conditions (99%, s.d. = 1.2%). The mean RTs for reporting gender can be found in Table 1.

Table 1.

Mean RTs in milliseconds (SD in parentheses) for Experiment 1 (gender discrimination task) and Experiment 2 (gaze direction discrimination task)

| Experiment | Joint attention | Averted gaze | Faces on left | Faces on right |
|---|---|---|---|---|
| E1 | 558 (214) | 552 (210) | 557 (214) | 549 (218) |
| E2 | 627 (230) | 619 (271) | 627 (230) | 618 (257) |

RTs to report the gender of the faces were subjected to an ANOVA with gaze response (joint attention or averted) and face position (faces presented on the left or right) as within-subject factors. There was no main effect of gaze response, F(1,34) = 0.72, MSE = 1618.65, P = 0.401, ηp² = 0.021, no main effect of face position, F(1,34) = 0.23, MSE = 2219.69, P = 0.463, ηp² = 0.007, and no interaction, F(1,34) = 0.96, MSE = 1400.51, P = 0.326, ηp² = 0.028.

ERP data

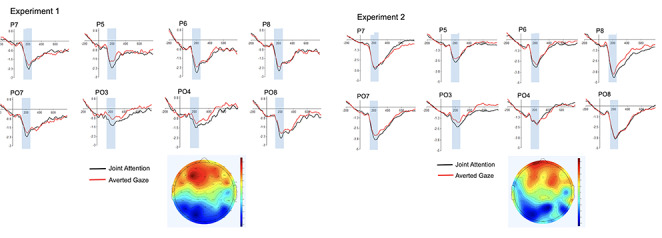

Grand averaged ERPs can be found in the left panel of Figure 2, together with a scalp map showing joint attention minus averted gaze condition. See also Figure 3 for distributional information.

Fig. 2.

Experiment 1 (n = 35; left panel) and Experiment 2 (n = 34; right panel) grand averaged ERPs showing the effect of gaze response at 170–230 ms after gaze shift (shaded area). The scalp maps show the gaze response effects, calculated as the mean amplitude (in μV) for the incongruent, averted gaze response subtracted from the mean amplitude for the congruent, joint attention gaze response.

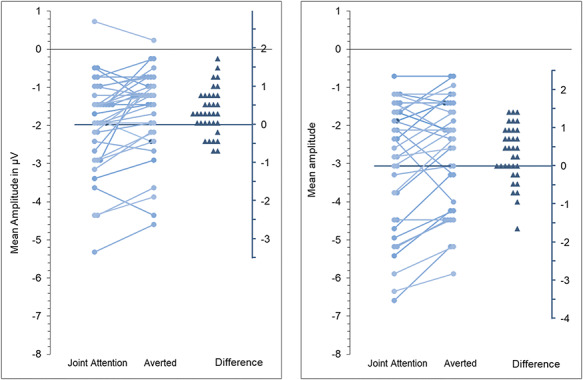

Fig. 3.

Distributional information for the main effect of gaze response reported in Results sections of both Experiment 1 (left panel) and Experiment 2 (right panel). Each panel contains a line graph representing N170 amplitudes for each participant in the two gaze conditions, with reference to left-sided y-axis. On the right of each panel, difference scores are presented for each participant relative to the right-sided y-axis.

The mean amplitudes in the time window 170–230 ms were subjected to a 2 × 2 × 2 ANOVA with gaze response (joint attention or averted), hemisphere (left or right) and face location (left or right) as within-subject factors. There was a large and statistically significant main effect of gaze response, F(1,34) = 15.58, MSE = 10.21, P < 0.001, ηp² = 0.31, due to greater negativity for joint attention over averted gaze (mean difference = −0.36 μV, s.d. = 0.59). The grand-averaged ERPs for each electrode in the left and right ROIs can be seen in Figure 2.

In addition to the already-reported gaze effect, the only additional effect was an expected interaction between the factors ‘face location’ (left/right) and ‘hemisphere’ (left/right), F(1,34) = 24.59, MSE = 55.33, P < 0.001, ηp² = 0.42, which showed a larger N170 in response to contralateral stimuli. This is expected simply because of the lateralised nature of the display (i.e. when fixating a left location, the stimulus event, namely the observed gaze shift, appears in the right visual hemifield, leading to a stronger neural response over contrahemispheric electrode sites, and the reverse for fixate-right trials). No other main effects or interactions were significant (all F’s < 1). The lack of significant interactions involving face location and gaze response here suggests that the effects of observed gaze direction were stable across hemifields.
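The partial eta squared values reported with each F test can be recovered from the F ratio and its degrees of freedom, which offers a quick consistency check on the statistics above. The sketch below uses the standard identity for a single effect; the function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio: F*df1 / (F*df1 + df2)."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of gaze response, F(1,34) = 15.58
print(round(partial_eta_squared(15.58, 1, 34), 2))  # 0.31

# Face location x hemisphere interaction, F(1,34) = 24.59
print(round(partial_eta_squared(24.59, 1, 34), 2))  # 0.42
```

Both values match the effect sizes reported for Experiment 1.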

Discussion

These results show that the brain distinguishes between a congruent gaze shift response to gaze leading, and an incongruent one. This is evidenced by greater mean negative amplitude between 170 and 230 ms following gaze response, likely to be an N170 ERP component modulation which evidences the detection of a successful joint attention bid. This is consistent with some of the previous work showing N170 modulation during gaze processing (e.g. Watanabe et al., 2002; Itier et al., 2007; Latinus et al., 2014).

Experiment 2

Here, we aimed to establish if the effect of joint attention on the N170 would replicate when the task was changed to a gaze-relevant task, rather than a gaze-irrelevant (i.e. gender categorisation) task as in Experiment 1. Task-relevance of gaze stimuli has been previously shown to modulate the ERP responses to observed gaze. For example, McCrackin and Itier (2019) showed that observed gaze direction modulates ERPs only when gaze was task-relevant and not when participants completed a gender discrimination task. These effects were, however, observed at later timepoints (>220 ms), but it is nevertheless important to consider the key role that behavioural goals and other contextual information can have when examining how social stimuli are processed. We pre-registered this follow-up experiment, aiming to collect data from around 35 participants for meaningful comparison with our findings in Experiment 1. A new sample of participants from the same population was recruited to complete an experiment identical in every aspect to Experiment 1, apart from the task. Participants now reported whether the gaze shift which occurred in the periphery was towards or away from the object they were fixating following their gaze leading saccade from the face to the object.

Methods

Participants

We collected data from 36 participants but included n = 34 in the analysis (mean age: 21.06 years, s.d. = 5.44, 9 males) because two participants had poor EEG signal in the ROIs, in accordance with our pre-registered exclusion criteria.

Design & procedure

The only difference from Experiment 1 was the task. Participants were prompted to use the right or left arrow key to report whether the onscreen gaze shift was towards or away from the object they were fixating. For the two blocks in which faces were presented on the left, participants used the index finger of their right hand for ‘towards’ and the index finger of their left hand for ‘away.’ For the two blocks in which faces were presented on the right, they used the index finger of their left hand for ‘towards’ and the index finger of their right hand for ‘away.’ The pre-registration and sample pre-processing script for this second experiment can be found on the Open Science Framework website at https://osf.io/eguw2/, along with the data from both experiments.

The mean number of trials per condition, after artifact removal (3.17% of trials), was 115 for joint attention gaze shifts (range 87–120 trials) and 115 for averted gaze (range 88–120 trials). There were three participants for whom EEG signal was poor in just one of the four blocks of trials, hence we included data from only three blocks for those three participants.

Results

Behavioural data

Accuracy for identifying gaze shift direction approached ceiling in both conditions (99%, s.d. = 2.5%). RTs to report the direction of gaze (towards or away) were subjected to an ANOVA with gaze response (joint attention or averted) and face position (faces presented on the left or right) as within subject factors. There were no main effects of gaze response or face position, F(1,33) < 1, and no interaction, F(1,33) < 1 (see Table 1).

ERP data

Grand averaged ERPs can be found in the right panel of Figure 2 for the eight electrodes in the ROIs, together with Cz (to show the vertex positive potential, VPP) and a scalp map of joint attention minus averted gaze conditions.

The mean amplitudes in the time window 170–230 ms were subjected to a 2 × 2 × 2 ANOVA with gaze response (joint attention or averted), hemisphere (left or right) and face location (left or right) as within-subject factors. There was a large and statistically significant main effect of gaze response, F(1,33) = 6.95, MSE = 7.63, P = 0.013, ηp² = 0.174, due to greater negativity for joint attention than averted gaze (mean difference = −0.34 μV, s.d. = 0.74 μV). Therefore, we replicated the main finding from Experiment 1. Grand-averaged ERPs for each electrode in the left and right ROIs can be seen in Figure 2.

As in Experiment 1, there was an interaction between face location and hemisphere, F(1,33) = 30.84, MSE = 127.82, P < 0.001, ηp² = 0.483, which emerges due to the non-central presentation of the stimulus event. Also as in Experiment 1, there were no main effects of face location, F(1,33) = 1.64, MSE = 7.38, P = 0.209, ηp² = 0.047, or hemisphere, F(1,33) < 1. Contrary to Experiment 1, there was a significant interaction between face location and gaze, F(1,33) = 4.44, MSE = 3.54, P = 0.043, ηp² = 0.119. Follow-up comparisons showed that this was because there was a difference between joint attention and averted gaze, collapsed across hemisphere, when faces were presented on the right, t(33) = 3.11, P = 0.004, dz = 0.36, 95% CI[0.11, 0.60], but not when presented on the left, t(33) = 0.70, P = 0.487, dz = 0.06, 95% CI[−0.10, 0.21]. There was also an interaction between face location, hemisphere and gaze response, F(1,33) = 6.08, MSE = 2.06, P = 0.019, ηp² = 0.156. To explore this interaction, see Table 2, which shows the mean amplitudes for joint attention and averted gaze for faces presented on the left and the right, by hemisphere, for both experiments. The only contrast in which there was not greater amplitude for joint attention over averted gaze was over the right hemisphere when faces were presented on the left. Paired-sample t-tests showed that there was no difference between joint attention and averted gaze mean amplitude when faces were presented on the left, in either the right hemisphere, t(33) = 0.62, P = 0.539, dz = 0.11, or the left hemisphere, t(33) = 1.94, P = 0.061, dz = 0.33.

Table 2.

Mean N170 amplitudes in μV (at 170–230 ms after gaze shift; SD in parentheses) for Experiment 1 (Gender Discrimination Task) and Experiment 2 (Gaze Direction Discrimination Task), for each condition, according to whether faces were presented on the left or on the right, and by region of interest (ROI)

| Experiment & face location | Joint attention left ROI | Averted left ROI | Joint attention right ROI | Averted right ROI |
|---|---|---|---|---|
| E1 faces on left | −1.48 (1.44) | −1.01 (1.18) | −2.42 (1.83) | −2.09 (1.81) |
| E1 faces on right | −2.37 (1.68) | −2.02 (1.58) | −1.62 (1.32) | −1.23 (1.27) |
| E2 faces on left | −2.09 (1.82) | −1.75 (1.41) | −3.48 (2.95) | −3.61 (2.73) |
| E2 faces on right | −3.84 (2.19) | −3.40 (1.93) | −2.84 (1.77) | −2.16 (1.78) |

Discussion

We replicated the finding from Experiment 1 of greater N170 amplitude for reciprocated over averted gaze shift responses in a gaze judgment task. This supports our hypothesis that the neurocognitive system responsible for face processing is sensitive to the outcome of an incidental joint attention bid. We also found a clear laterality effect whereby, unlike the bilateral effect in Experiment 1, this effect of joint attention on the N170 was restricted to faces presented on the right.

Follow-up analyses of Experiments 1 and 2

Between experiments analysis of N170

A mixed ANOVA, with experiment as a between-subjects factor, was used to assess whether the N170 amplitudes differed between experiments. As in the separate analyses, there was a main effect of gaze, F(1,67) = 18.58, MSE = 4.14, P < 0.001, ηp² = 0.217, and there was no interaction between gaze response and experiment, F(1,67) < 1. The laterality effect that emerged in Experiment 2 did not lead to any other interactions involving Experiment, F’s < 1, P’s > 0.88. However, the overall N170 magnitude was larger in Experiment 2 than Experiment 1, F(1,67) = 11.41, MSE = 3.65, P = 0.001, ηp² = 0.146, presumably due to the task-relevance of the stimulus in Experiment 2.

AQ data

The mean AQ score was 17.38 (s.d. = 7.23) out of 50, similar to normative data for typical adult samples (Baron-Cohen et al., 2001). We combined the mean amplitudes and AQ scores for both experiments and found no significant correlation between AQ scores and the difference in N170 amplitude between joint attention and averted gaze, r = 0.02, P = 0.869. Although the effect of joint attention on N170 was not modulated by AQ, the overall N170 amplitude was positively correlated with AQ, being larger (more negative) for those with low AQ scores (i.e. fewer autism-like traits), for both joint attention, r(69) = 0.264, P = 0.029, and averted gaze conditions, r(69) = 0.289, P = 0.016, representing medium effect sizes.

General discussion

In two experiments, we found greater N170 amplitude for reciprocated over averted gaze shift responses both when the gaze direction was task-irrelevant (Experiment 1) and task-relevant (Experiment 2). Altogether, this shows that by 200 ms after observed gaze response, the neurocognitive system responsible for structural encoding of faces is sensitive to the outcome of an incidental joint attention bid. This is remarkable given the physically small peripheral stimulus change in these experiments that evoked the ERP of interest. The findings accord with previous behavioural evidence that faces that follow gaze capture attention (Edwards et al., 2015); the current data could be similarly interpreted as evidence for enhanced or prioritised processing of faces that engage in joint attention. The results also provide further evidence that early neural responses are sensitive to observed responses to joint attention initiation, with this perceptual effect complementing other work that focussed on later cognitive and affective evaluative processes in joint attention initiation by employing more open-ended designs (e.g. Caruana et al., 2015, 2017; Schilbach et al., 2010).

Our averaged ERP waveform peak is later than that which is typically observed for an N170, peaking around 200 ms (see Eimer, 2011). Most N170 studies have face stimuli onsets presented at fovea. Here, participants were fixating on the object when a small perceptual change in the face stimulus (the gaze shift) occurred in their periphery, which is likely responsible for the slightly delayed onset (e.g. Rigoulot et al., 2011).

One element of our findings is unexpected. In Experiment 1, the effects were stable across hemifields with greater N170 for joint attention over averted gaze responses, whether the faces were presented in the left or right hemifield. However, in Experiment 2, the effect only emerged when faces were presented on the right. In the gaze processing literature, there is often a behavioural advantage for gaze perception when eyes are displayed in the left hemifield (Conty et al., 2006; Palanica and Itier, 2011). Consistent with this, imaging studies suggest that the right hemisphere is dominant for gaze and face processing (e.g. Wicker et al., 1998), although gaze and face processing are served by a bilateral neural network (Minnebusch et al., 2009). Quite how the laterality effect we found here fits into the laterality of the face and gaze processing network is unclear, especially given we observed bilaterality in one of the two experiments.

We also found that smaller mean N170 amplitudes were associated with higher self-reported autism-like traits. There have been previous observations of reduced N170 for the onset of faces in people with autism, although a meta-analysis has suggested there are latency delays but no overall amplitude differences in N170 between clinical and typically developing samples (Kang et al., 2018). We are not aware of previous research that examined gaze onset-elicited N170 magnitude associations with autism-like traits. Our finding suggests that there may be individual differences in N170 magnitude in non-clinical samples, specifically, when the N170 is elicited by an observed gaze shift alone. This is consistent with previous studies reporting associations between behavioural indices of gaze processing performance and level of autism-like traits (see Bayliss and Tipper, 2005; Edwards et al., 2015). This said, it is of course important to make clear that although the correlation with overall N170 magnitude was significant, the correlation with the difference in N170 between conditions was not significant, meaning that sensitivity to the context of the observed gaze shift—the main contrast of interest to our central hypothesis—was not related to AQ.

Further work could aim to establish critical boundary conditions for this effect of gaze leading on the N170. For example, while our paradigm employed a participant-generated saccade to a peripheral object as a simulated joint attention ‘bid’ that could then be followed or rejected, it is possible that the effect of joint attention on the N170 could emerge in a paradigm where the participant is already fixating the object and does not actively make the joint attention bid. This would imply that the spatial arrangement of elements is sufficient for the effect to emerge. In our previous work, however, we have shown that gaze leading effects on spatial attention are only detected when an active saccade to a coherent object is required of the participants (Edwards et al., 2015), suggesting that shifts in object-oriented overt attention are required to reveal gaze leading effects. Nevertheless, this important empirical question remains unanswered, and addressing it would help to distinguish between shared attention and mere co-ordinated gaze.

In sum, the central finding of this paper is that when observed gaze shifts are processed in response to gaze leading, the N170 ERP component is modulated, with greater negativity for joint attention gaze shifts than for averted gaze responses. Beyond their relevance for understanding the mechanisms underpinning joint attention, these data emphasise the importance of perceptual processing of contextually relevant non-foveal changes to social stimuli such as potential interaction partners. Moreover, the results highlight that gaze interactions typically require the integration of multiple environmental and agentic elements that contribute to the overall spatio-temporal social context. The present work suggests that early perceptual processing is sensitive to the semantics of a peripheral social event, supporting the emergence and maintenance of joint attention episodes.

Funding

This work was supported by a University of East Anglia Social Science Faculty Studentship to L.J.S., and by a Leverhulme Trust Project Grant RPG-2016-173 to A.P.B. and to L.R.

Conflict of interest

The authors declare no conflicts of interest.

Author contributions

A.P.B., L.J.S. and S.G.E. conceived the study; all authors designed the experiments; L.J.S., S.G.E. and N.M.L. collected the data; L.J.S., N.M.L. and L.R. analysed the data; and L.J.S. and A.P.B. wrote the paper, with contributions from all other authors.

Data availability

Data on which statistical analyses were performed have been uploaded as supplementary materials.

Supplementary Material

References

- Baron-Cohen S., Cross P. (1992). Reading the eyes: evidence for the role of perception in the development of a theory of mind. Mind & Language, 7(1–2), 172–86.

- Baron-Cohen S., Wheelwright S., Skinner R., Martin J., Clubley E. (2001). The autism-spectrum quotient (AQ): evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. Journal of Autism and Developmental Disorders, 31(1), 5–17. doi: 10.1023/A:1005653411471.

- Bayliss A.P., Tipper S.P. (2005). Gaze and arrow cueing of attention reveals individual differences along the autism spectrum as a function of target context. British Journal of Psychology, 96(1), 95–114.

- Bayliss A.P., Tipper S.P. (2006). Predictive gaze cues and personality judgements: should eye trust you? Psychological Science, 17(6), 514–20.

- Bayliss A.P., di Pellegrino G., Tipper S.P. (2005). Sex differences in eye gaze and symbolic cueing of attention. Quarterly Journal of Experimental Psychology, 58A(4), 631–50.

- Bayliss A.P., Frischen A., Fenske M.J., Tipper S.P. (2007). Affective evaluations of objects are influenced by observed gaze direction and emotional expression. Cognition, 104(3), 644–53. doi: 10.1016/j.cognition.2006.07.012.

- Bentin S., Allison T., Puce A., Perez E., McCarthy G. (1996). Electrophysiological studies of face perception in humans. Journal of Cognitive Neuroscience, 8(6), 551–65.

- Brooks R., Meltzoff A.N. (2015). Connecting the dots from infancy to childhood: a longitudinal study connecting gaze following, language, and explicit theory of mind. Journal of Experimental Child Psychology, 130, 67–78.

- Caruana N., de Lissa P., McArthur G. (2015). The neural time course of evaluating self-initiated joint attention bids. Brain and Cognition, 98, 43–52.

- Conty L., Tijus C., Hugueville L., Coelho E., George N. (2006). Searching for asymmetries in the detection of gaze contact versus averted gaze under different head views: a behavioural study. Spatial Vision, 19(6), 529–45.

- Conty L., N’Diaye K., Tijus C., George N. (2007). When eye creates the contact! ERP evidence for early dissociation between direct and averted gaze motion processing. Neuropsychologia, 45(13), 3024–37. doi: 10.1016/j.neuropsychologia.2007.05.017.

- Cumming G., Calin-Jageman R. (2016). Introduction to the New Statistics: Estimation, Open Science, and Beyond, Routledge, New York and London.

- Delorme A., Makeig S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. Journal of Neuroscience Methods, 134(1), 9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Edwards S.G., Stephenson L.J., Dalmaso M., Bayliss A.P. (2015). Social orienting in gaze leading: a mechanism for shared attention. Proceedings of the Royal Society B: Biological Sciences, 282(1812), 20151141. doi: 10.1098/rspb.2015.1141.

- Eimer M. (2011). The face sensitive N170 component of the event-related potential. In: Calder J.V., Andrew J., Rhodes G., Johnson M.H., editors. The Oxford Handbook of Face Perception, Oxford: Oxford University Press, pp. 329–44.

- Faul F., Erdfelder E., Lang A.G., Buchner A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behavior Research Methods, 39(2), 175–91. doi: 10.3758/BF03193146.

- Frischen A., Bayliss A.P., Tipper S.P. (2007). Gaze cueing of attention: visual attention, social cognition, and individual differences. Psychological Bulletin, 133(4), 694.

- Hietanen J.K., Leppänen J.M., Nummenmaa L., Astikainen P. (2008). Visuospatial attention shifts by gaze and arrow cues: an ERP study. Brain Research, 1215, 123–36. doi: 10.1016/j.brainres.2008.03.091.

- Itier R.J., Alain C., Sedore K., McIntosh A.R. (2007). Early face processing specificity: It’s in the eyes! Journal of Cognitive Neuroscience, 19(11), 1815–26. doi: 10.1162/jocn.2007.19.11.1815.

- Kang E., Keifer C.M., Levy E.J., Foss-Feig J.H., McPartland J.C., Lerner M.D. (2018). Atypicality of the N170 event-related potential in autism spectrum disorder: a meta-analysis. Biological Psychiatry: Cognitive Neuroscience and Neuroimaging, 3(8), 657–66. doi: 10.1016/j.bpsc.2017.11.003.

- Kingstone A., Friesen C.K., Gazzaniga M.S. (2000). Reflexive joint attention depends on lateralized cortical connections. Psychological Science, 11(2), 159–66.

- Latinus M., Love S.A., Rossi A., et al. (2014). Social decisions affect neural activity to perceived dynamic gaze. Social Cognitive and Affective Neuroscience, 10(11), 1557–67. doi: 10.1093/scan/nsv049.

- Lopez-Calderon J., Luck S.J. (2014). ERPLAB: an open-source toolbox for the analysis of event-related potentials. Frontiers in Human Neuroscience, 8(April), 1–14. doi: 10.3389/fnhum.2014.00213.

- Luck S.J. (2014). An Introduction to the Event-Related Potential Technique, 2nd edn, MIT Press, Cambridge and London.

- McCrackin S.D., Itier R.J. (2019). Perceived gaze direction differentially affects discrimination of facial emotion, attention and gender–an ERP study. Frontiers in Neuroscience, 13, 517.

- Minnebusch D.A., Suchan B., Köster O., Daum I. (2009). A bilateral occipitotemporal network mediates face perception. Behavioural Brain Research, 198(1), 179–85.

- Mundy P. (2017). A review of joint attention and social-cognitive brain systems in typical development and autism spectrum disorder. European Journal of Neuroscience, 47(6), 497–514. doi: 10.1111/ejn.13720.

- Myllyneva A., Hietanen J.K. (2016). The dual nature of eye contact: to see and to be seen. Social Cognitive and Affective Neuroscience, 11(7), 1089–95. doi: 10.1093/scan/nsv075.

- Oberwelland E., Schilbach L., Barisic I., et al. (2016). Look into my eyes: investigating joint attention using interactive eye-tracking and fMRI in a developmental sample. NeuroImage, 130, 248–60. doi: 10.1016/j.neuroimage.2016.02.026.

- Palanica A., Itier R.J. (2011). Searching for a perceived gaze direction using eye tracking. Journal of Vision, 11(2), 19.

- Pfeiffer U.J., Schilbach L., Jording M., Timmermans B., Bente G., Vogeley K. (2012). Eyes on the mind: investigating the influence of gaze dynamics on the perception of others in real-time social interaction. Frontiers in Psychology, 3.

- Rayson H., Bonaiuto J.J., Ferrari P.F., Chakrabarti B., Murray L. (2019). Building blocks of joint attention: early sensitivity to having one’s own gaze followed. Developmental Cognitive Neuroscience, 37, 100631. doi: 10.1016/j.dcn.2019.100631.

- Rigoulot S., D’Hondt F., Defoort-Dhellemmes S., Despretz P., Honoré J., Sequeira H. (2011). Fearful faces impact in peripheral vision: behavioral and neural evidence. Neuropsychologia, 49(7), 2013–21. doi: 10.1016/j.neuropsychologia.2011.03.031.

- Rossion B. (2014). Understanding face perception by means of human electrophysiology. Trends in Cognitive Sciences, 18(6), 310–8. doi: 10.1016/j.tics.2014.02.013.

- Rossion B., Jacques C. (2008). Does physical interstimulus variance account for early electrophysiological face sensitive responses in the human brain? Ten lessons on the N170. NeuroImage, 39(4), 1959–79.

- Stephenson L.J., Edwards S.G., Howard E.E., Bayliss A.P. (2018). Eyes that bind us: gaze leading induces an implicit sense of agency. Cognition, 172, 124–33. doi: 10.1016/j.cognition.2017.12.011.

- Schilbach L., Wilms M., Eickhoff S.B., et al. (2010). Minds made for sharing: initiating joint attention recruits reward-related neurocircuitry. Journal of Cognitive Neuroscience, 22(12), 2702–15. doi: 10.1162/jocn.2009.21401.

- Striano T., Rochat P. (1999). Developmental link between dyadic and triadic social competence in infancy. British Journal of Developmental Psychology, 17(4), 551–62.

- Tottenham N., Tanaka J.W., Leon A.C., et al. (2009). The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research, 168(3), 242–9. doi: 10.1016/j.psychres.2008.05.006.

- Watanabe S., Miki K., Kakigi R. (2002). Gaze direction affects face perception in humans. Neuroscience Letters, 325(3), 163–6. doi: 10.1016/S0304-3940(02)00257-4.

- Wicker B., Michel F., Henaff M.-A., Decety J. (1998). Brain regions involved in the perception of gaze: a PET study. NeuroImage, 8(2), 221–7.

- Yokoyama T., Takeda Y. (2019). Gaze cuing effects in peripheral vision. Frontiers in Psychology, 10, 708. doi: 10.3389/fpsyg.2019.00708.
