Abstract

Understanding how humans make competitive decisions in complex environments is a key goal of decision neuroscience. Typical experimental paradigms constrain behavioral complexity (e.g. choices in discrete-play games), and thus, the underlying neural mechanisms of dynamic social interactions remain incompletely understood. Here, we collected fMRI data while humans played a competitive real-time video game against both human and computer opponents, and then, we used Bayesian non-parametric methods to link behavior to neural mechanisms. Two key cognitive processes characterized behavior in our task: (i) the coupling of one’s actions to another’s actions (i.e. opponent sensitivity) and (ii) the advantageous timing of a given strategic action. We found that the dorsolateral prefrontal cortex displayed selective activation when the subject’s actions were highly sensitive to the opponent’s actions, whereas activation in the dorsomedial prefrontal cortex increased proportionally to the advantageous timing of actions to defeat one’s opponent. Moreover, the temporoparietal junction tracked both of these behavioral quantities as well as opponent social identity, indicating a more general role in monitoring other social agents. These results suggest that brain regions that are frequently implicated in social cognition and value-based decision-making also contribute to the strategic tracking of the value of social actions in dynamic, multi-agent contexts.

Keywords: social cognition, games, strategies, decision making, dorsolateral prefrontal cortex

Introduction

Strategically interacting with other agents requires inference of their beliefs, goals and intentions in order to inform the implementation of one’s own actions (Lee and Seo, 2016; Wheatley et al., 2019). Social, strategic interactions are often studied in laboratory experiments using paradigms and analysis methods borrowed from game theory (Von Neumann and Morgenstern, 1944; Nash, 1950; Kreps, 1990; Camerer, 2011). Though such approaches illuminate cognitive processes such as the psychological tradeoffs between competition and cooperation (Rapoport et al., 1965; Axelrod and Hamilton, 1981; Rilling et al., 2002; Camerer, 2011; Poncela-Casasnovas et al., 2016), they nevertheless miss key elements of naturalistic, social interactions that occur in real time (Redcay and Schilbach, 2019). Specifically, adaptive behavior in a dynamic, multi-agent context requires the decision-maker to estimate two quantities not present in typical discrete-play games: how strongly coupled are my opponent’s actions to my actions, and when should I execute my action to maximize my chance of success? For example, driving an automobile requires not only creating mental models of other drivers, each of whom might vary in how responsive they are to one’s own actions, but also identifying an opportune time to implement a planned action (e.g. turning across a lane during a gap in traffic). Understanding how these specifically dynamic quantities are implemented within social decision-making mechanisms remains a fundamental challenge for social neuroscience (Ruff and Fehr, 2014).

The first of these quantities—the extent to which one’s actions are affected by the actions of other agents, here called opponent sensitivity—is endemic to real-world social interactions. For example, haggling with a shopkeeper over the price of an item requires real-time adjustments to your offer based on the shopkeeper’s behavior; if that adjustment process is accurate, your negotiating tactics will be more effective. Opponent sensitivity can be estimated by adapting the computational framework of reinforcement learning models (Koster-Hale et al., 2017; Leibo et al., 2017; McDonald et al., 2019) to determine a social policy function that characterizes the likelihood of future actions given the actions of all task-relevant agents and the environment’s current state. We propose that the human brain estimates this quantity in real time by recruiting brain regions that have been associated in the literature with action inference. Previous physiology research in rhesus macaques suggests that regions in the prefrontal cortex, particularly the dorsolateral prefrontal cortex (dlPFC), are involved in learning and adapting one’s actions in light of an opponent’s actions (Barraclough et al., 2004). Social action prediction error signals have also been found in the dlPFC, as well as the dorsomedial prefrontal cortex (dmPFC) and the temporoparietal junction (TPJ) (Suzuki et al., 2012). The dlPFC has also been shown to play important roles in predicting social decision-making outcomes according to information regarding the likely behavior of others (Coricelli and Nagel, 2009; Yoshida et al., 2010).

The second element of dynamic social decisions, advantageous timing, reflects the estimated reward distribution given the execution of an action at a particular time. For many social interactions—whether driving or negotiating—the challenge of the decision-maker may not be what action to take (e.g. turning from one road to another) but when to take that action given the likely actions of others (e.g. at a sufficiently large gap in traffic). This element can be quantified by constructing a social value function that maps a policy function onto expected rewards (Kishida and Montague, 2012). Previous literature relating to the neural basis for social value inference comes predominantly from value-based decision-making studies. Self-referential value-driven choice studies have implicated frontal regions such as the ventromedial prefrontal cortex and the dmPFC in being crucial for representing relative subjective value for oneself (Kable and Glimcher, 2007; Levy et al., 2009; Kolling et al., 2016; Piva et al., 2019) as well as during social decision-making (Amodio and Frith, 2006; Rilling and Sanfey, 2011; Lee and Seo, 2016). The dmPFC has been shown in computational neuroimaging studies to be involved in estimating the value of rewards when making choices in a social decision-making task (Apps and Ramnani, 2017). Prior work thus links the prefrontal cortex to both tracking the actions of others and to optimizing one’s own actions, but whether these processes are subsumed within a single region of PFC or rely on different regions remains unknown.

Importantly, the neural mechanisms of opponent sensitivity and advantageous timing may be distinct from information carried by the social or non-social identity of other agents in the environment. Prior neuroscience research has revealed that processing in brain systems that support social decision-making does vary according to the characteristics of the opponent (Carter et al., 2012; Schurz et al., 2014; Vickery et al., 2015; Jenkins et al., 2018; Siegel et al., 2018). For example, when human participants played static games against both human and computer agents, regions of the social cognitive network, such as the TPJ, were more active when playing against a human than against a computer opponent (Rilling et al., 2004). Similarly, application of machine learning techniques to functional magnetic resonance imaging (fMRI) data collected in a social poker paradigm showed that the TPJ encoded information predictive of subsequent decisions only when competing with a human opponent and not with a computer (Carter et al., 2012), indicating that this region supports the creation of a social context for decision-making (Carter and Huettel, 2013). Much of the social cognition literature has focused on how brain activity differs across interactions with different agent types. However, we posit that learning about these two dynamic quantities across agent types will be critical for elucidating the computational mechanisms that underlie social action and value inference, independently of the identities of other agents.

Here, we investigated the brain mechanisms that support decision-making in dynamic social contexts, using an incentive-compatible game that distinguishes the processing of opponent behavior from the processing of opponent identity. Human participants attempted to direct a disk into a goal on a virtual playing field while avoiding a goalie controlled either by another human or by a realistic computer algorithm (cf. a ‘penalty shot’ in hockey) (Iqbal et al., 2019; McDonald et al., 2019). From the relative movements of the two players and the resulting trial outcomes, we estimated both a social policy function that provided a metric of opponent sensitivity and a social value function that allowed the calculation of advantageous (or disadvantageous) timing, as shown in our prior work developing these computational models (McDonald et al., 2019). We connected these metrics to trial-to-trial fluctuations in brain function across different phases of our experimental task: recognizing the opponent identity, playing the game in real time and receiving a reward for successful performance. We found that multiple regions within the prefrontal cortex tracked dissociable features of behavior specific to dynamic decision-making, whereas social cognitive regions including the TPJ were differentially active according to opponent identity.

Results

Penalty shot experimental design

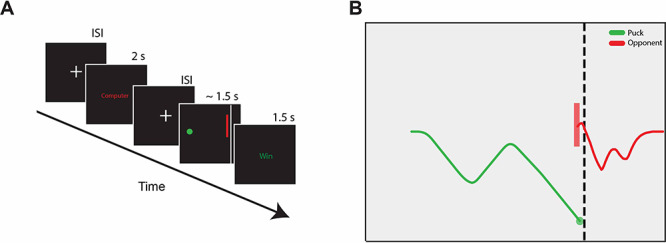

Human participants (n = 75) played a competitive, dynamic game (Figure 1) inspired by a penalty shot in hockey (Iqbal et al., 2019; McDonald et al., 2019) while measures of brain activation were collected using fMRI. On each trial, the participant first viewed text (for 2 s) indicating their opponent on that trial: either a human who was incentivized to beat them in the game or a computer algorithm developed to mimic typical human behavior. The strategic play style of the computer algorithm was not disclosed to participants. Then, following an interstimulus interval (ISI) of variable duration, the game screen appeared, and the participant used a joystick to control the vertical acceleration of a disk (i.e. the ‘puck’) that moved from left to right at constant velocity. The opponent controlled the vertical acceleration of a bar (the ‘goalie’) positioned on the right side of the screen. The participant’s task was to move the puck past the goalie without making contact; conversely, the opponent’s task was to move the goalie to block the puck.

Fig. 1.

Schematic of the Penalty Shot Task. (A) Each trial consisted of three phases with intervening variable-duration ISIs: (i) the Opponent phase (2 s) that indicated the opponent for the upcoming trial, (ii) the Game Play phase, during which participants attempted to move the puck past the defending goalie (1.5 s), and (iii) the Outcome phase that displayed the outcome of the trial (either Win or Loss; 1.5 s). (B) One example trial of game play with the trajectory of the puck shown in green and the vertical movements of the goalie shown in red. Note that the goalie has a fixed horizontal position; here, we visualize the goalie as traveling along the x-axis from right to left to illustrate its trajectory.

Each participant completed roughly 200 trials (median N = 202) of the Penalty Shot Task: half against the human opponent and half against the computer opponent (in randomized order). For consistency across fMRI sessions, we used two highly practiced individuals as our human opponents; both trained extensively in the task before their first session and then played against many different participants throughout the experiment (Human Goalie 1 = 45 participants, Human Goalie 2 = 30 participants). The human opponent received a monetary bonus for each successful trial (i.e. stopping the puck with the goalie) and was free to use any strategy they desired. The human opponent met the fMRI participant before each session, and both the consent form and task instructions indicated that all human trials would be against this opponent; thus, there was no deception in the experiment. The computer opponent used a track-then-guess heuristic that attempted to match the fMRI participant’s vertical movements and then dove upward or downward (randomly) at a threshold point near the end of each trial (McDonald et al., 2019). This behavior simulated equilibrium human opponent behavior, in that human opponents tended to adopt a similar strategy. Parameters of the computer algorithm were customized to human reaction times based on pilot experiments; for example the lag between the fMRI participant’s actions and the computer opponent’s actions was matched to the lag typical of human opponents.
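The track-then-guess heuristic described above can be illustrated with a minimal sketch. It is not the algorithm used in the experiment: the function name, the lag of 12 frames, the dive threshold, the step sizes and the direct control of position (rather than acceleration) are all hypothetical simplifications chosen for illustration.

```python
import random

def track_then_guess(puck_x, puck_y, lag=12, dive_x=0.8,
                     track_step=0.02, dive_step=0.05, rng=None):
    """Simulate one trial of a track-then-guess goalie (illustrative only).

    puck_x, puck_y: per-frame puck coordinates (x in [0, 1], y in [-1, 1]).
    All parameter values are hypothetical, not those of the experiment.
    Returns the goalie's per-frame y positions and the dive direction.
    """
    rng = rng or random.Random()
    goalie_y = 0.0
    dive_dir = None
    path = []
    for t, x in enumerate(puck_x):
        if x < dive_x:
            # Tracking phase: follow the puck's lagged vertical position.
            target = puck_y[max(0, t - lag)]
            delta = target - goalie_y
            goalie_y += max(-track_step, min(track_step, delta))
        else:
            # Guess phase: commit once to a random direction, then keep diving.
            if dive_dir is None:
                dive_dir = rng.choice([-1, 1])
            goalie_y += dive_dir * dive_step
        goalie_y = max(-1.0, min(1.0, goalie_y))  # stay on the screen
        path.append(goalie_y)
    return path, dive_dir
```

In this sketch the lag parameter plays the role of the reaction-time matching mentioned above: a larger lag makes the simulated goalie respond more slowly to the puck.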

Opponent sensitivity: social policy function

In keeping with our prior computational modeling approach for this task (McDonald et al., 2019), we fit separate Gaussian Process (GP) Policy Function models to behavioral data from each participant. These models predicted the occurrence of a change in vertical direction (i.e. a ‘change point’) at the next time step (t + 1, measured in units of screen refresh cycle, 60 Hz) given the state of the game at the current time step (t). The state of the game included seven input variables: the x and y positions of the puck, the y position of the bar, the vertical velocity of both the puck and the bar, the time since the occurrence of the last change point, and an opponent experience variable ranging from 0 (first trial) to 1 (last trial), which reflected potential strategic adaptation over the course of the experiment. Our model predicted a binary future action, $a_{t+1}$ (0 = no direction change, 1 = direction change), from the current state of the environment at time t, $s_t$, and a binary opponent identity, $\omega$ (0 = computer opponent, 1 = human opponent). That is, we fit each subject’s policy, $\pi$,

$$\pi(s_t, \omega) = p(a_{t+1} = 1 \mid s_t, \omega) \qquad (1)$$

This model effectively assesses the probability that a participant will change direction given the instantaneous state of the game $s_t$ against opponent $\omega$.
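To make the inputs of the policy model concrete, the following sketch assembles the seven state variables and the binary change-point label for one trial. It is an illustration under stated assumptions rather than the authors' preprocessing: the function name is hypothetical, velocities are approximated by frame-to-frame differences, and a change point is assumed to be a sign reversal of the puck's vertical velocity.

```python
def build_policy_dataset(puck_x, puck_y, goalie_y, trial_index, n_trials):
    """Return (states, labels) for one trial (illustrative sketch).

    Each state holds the seven inputs named in the text: puck x, puck y,
    goalie y, puck vertical velocity, goalie vertical velocity, frames
    since the last change point, and opponent experience in [0, 1].
    labels[t] is 1 if the puck reverses vertical direction at frame t + 1.
    """
    def velocity(series):
        # Assumption: finite-difference velocity in units per frame.
        return [0.0] + [b - a for a, b in zip(series, series[1:])]

    puck_v = velocity(puck_y)
    goalie_v = velocity(goalie_y)
    experience = trial_index / (n_trials - 1) if n_trials > 1 else 0.0

    states, labels = [], []
    since_change = 0
    for t in range(len(puck_y) - 1):
        # Assumption: a change point is a sign reversal of vertical velocity.
        change = int(puck_v[t] * puck_v[t + 1] < 0)
        states.append((puck_x[t], puck_y[t], goalie_y[t],
                       puck_v[t], goalie_v[t], since_change, experience))
        labels.append(change)
        since_change = 0 if change else since_change + 1
    return states, labels
```

A classifier fit to such (state, label) pairs, together with the opponent identity, would then play the role of the policy function; the GP classifier itself is not reproduced here.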

As detailed in (Rasmussen and Williams, 2006; McDonald et al., 2019), we chose to model behavior in our paradigm using GPs. GPs are distributions over functions: in this case, the log odds of switching directions as a function of state and opponent, $f(s_t, \omega)$. Not only do GPs provide a Bayesian measure of uncertainty for this inferred policy function (Rasmussen and Williams, 2006), they are also differentiable, allowing us to derive gradient-based sensitivity estimates that quantify the dynamic coupling between agents. That is, we can quantify the change in each participant’s switch probability as a function of small changes in the observed actions of the goalie. Formally, we define an opponent sensitivity metric, $\psi$, as a combination of each subject’s GP gradient with respect to opponent position and velocity:

$$\psi(s_t, \omega) = \left\lVert L^{-1} \nabla_{\mathbf{x}} f(s_t, \omega) \right\rVert \qquad (2)$$

where $\mathbf{x}$ is a vector including the y-position and vertical velocity of the opponent, $\mathbf{x} = (y_{\mathrm{goalie}}, \dot{y}_{\mathrm{goalie}})$. In addition, we have normalized these gradients by the inverse of the Cholesky factor of the GP covariance: $\Sigma = L L^{\top}$. This is equivalent to both an orthogonalization and an equal weighting of each principal component of the gradient and has the effect of down-weighting each contribution by uncertainty in the GP.
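The normalization by the inverse Cholesky factor can be illustrated numerically. The sketch below assumes that the sensitivity is the Euclidean norm of the whitened gradient, that the gradient has two components (opponent position and velocity), and that the covariance is the 2 × 2 covariance of that gradient; these choices and the function names are assumptions made for illustration.

```python
import math

def cholesky_2x2(cov):
    """Lower-triangular Cholesky factor of a symmetric positive-definite
    2x2 matrix, returned as (l11, l21, l22)."""
    (a, b), (_, d) = cov
    l11 = math.sqrt(a)
    l21 = b / l11
    l22 = math.sqrt(d - l21 * l21)
    return l11, l21, l22

def opponent_sensitivity(grad, cov):
    """Norm of L^{-1} grad, where cov = L L^T (illustrative sketch).

    grad: gradient of the latent function with respect to the opponent's
          (y-position, vertical velocity).
    cov:  assumed 2x2 covariance of that gradient.
    """
    l11, l21, l22 = cholesky_2x2(cov)
    # Forward substitution solves L z = grad without forming L^{-1}.
    z1 = grad[0] / l11
    z2 = (grad[1] - l21 * z1) / l22
    return math.hypot(z1, z2)
```

Because the squared norm of the whitened gradient equals the quadratic form of the gradient with the inverse covariance, directions in which the GP is more uncertain contribute less to the resulting value.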

The opponent sensitivity metric from the policy model represents an instantaneous estimate of how the participant’s likelihood of changing vertical direction depends on small changes in the opponent’s position and velocity. This metric characterizes individual differences in strategic play (see Figure 2 and McDonald et al., 2019). For the neuroimaging analyses, due to the temporal resolution of fMRI, we log-transformed each time point’s opponent sensitivity measure (see Supplementary Figure 6) and then calculated the average logged opponent sensitivity for each trial. This allows us to characterize complex trajectories generated during game play in terms of the overall player coupling for a given trial.
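The reduction from time-point values to a single trial-level regressor amounts to taking the mean of the logged values. A minimal sketch follows; the function name and the small floor `eps`, which guards against taking the logarithm of zero, are assumptions not described in the text.

```python
import math

def trial_log_sensitivity(sensitivities, eps=1e-12):
    """Average of log-transformed per-frame sensitivities for one trial.

    eps is a hypothetical floor that keeps the logarithm finite.
    """
    logs = [math.log(max(s, eps)) for s in sensitivities]
    return sum(logs) / len(logs)
```

Averaging in log space is equivalent to taking the logarithm of the geometric mean, so brief spikes in sensitivity influence the trial-level value less than they would under an arithmetic mean of the raw values.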

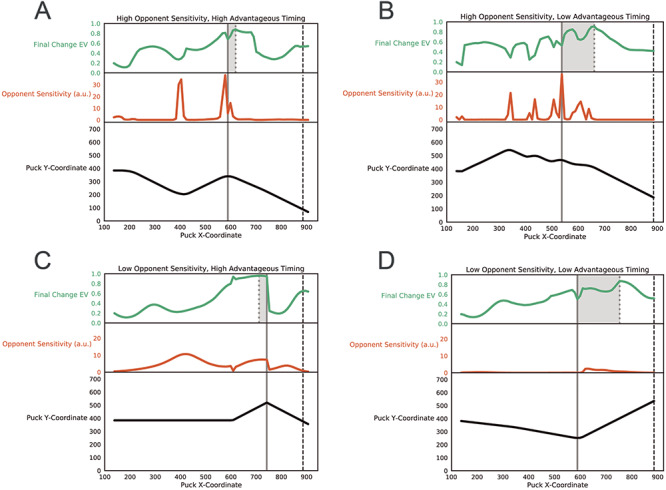

Fig. 2.

(A–D) Example trial trajectories and behavioral metrics for high/low opponent sensitivity and high/low advantageous timing. For each panel, the black line at the bottom shows a trajectory of the puck’s horizontal and vertical position on a given trial, with the puck moving rightward as the trial progresses. The orange line in the middle denotes the same trial’s opponent sensitivity. The green line at the top represents the expected value (i.e. probability of winning), conditional on making the final direction change at that time point; optimal behavior involves moving when this metric is at its maximum. The solid gray line vertically spanning all three subplots indicates the timing of the final change in direction actually taken by the participant, whereas the dotted gray line in the top subplot denotes when the participant should have made the final change in direction. The shaded gray region provides a measure of advantageous timing, that is, the difference in time between when the participant should have switched and when they actually switched. Low advantageous timing means there is a large difference between these times (i.e. the final direction change for that trial was ill-timed; see right column) and high advantageous timing means there is a small difference (i.e. the final direction change for the trial was well-timed; see left column).

Advantageous timing: social value function

We also estimated each subject’s social value function, or the momentary expected value given the action history of both players. By definition, given the dynamics of our task, all successful participant strategies must involve a final change in direction (e.g. attempting to direct the puck either above or below the goalie) and continuation in that new direction until the end of the trial. Making this movement too early would allow the opponent to react and counter the action; making it too late would not allow enough time to move the puck beyond the vertical extent of the goalie bar. Accordingly, we applied a separate GP classification model to estimate, for each time point, the expected value of a final change in direction at that time (called hereafter the Advantageous Timing model), $EV(s_t, a)$, where a represents a final vertical direction change.

Advantageous timing is operationalized as the distance in time between when subjects made their final change in vertical direction in a given trial ($t_{\mathrm{actual}}$) and the time when they should have made their final change in direction ($t_{\mathrm{optimal}}$), as determined by the maximum of that trial’s expected value curve (formalized as $\lvert t_{\mathrm{actual}} - t_{\mathrm{optimal}} \rvert$; see Figure 2). In McDonald et al., 2019, we found not only that advantageous timing was correlated with win rate, but also that high-scoring subjects placed their final change in direction within periods of relatively high expected value, whereas lower scoring subjects did not. This metric thus captures an individual player’s skill more cleanly than win rate, since the latter reflects the players’ joint actions.
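The timing measure can be sketched as follows, assuming the expected value curve is available as one value per screen refresh (60 Hz, as stated for the policy model) and that the optimal time is the first frame at which the curve reaches its maximum. The function name and the tie-breaking rule are illustrative assumptions.

```python
def advantageous_timing(expected_value, actual_switch_frame, frame_rate_hz=60.0):
    """Return (optimal_frame, gap_seconds) for one trial (illustrative sketch).

    expected_value: per-frame expected value of making the final direction
                    change at that frame.
    actual_switch_frame: frame at which the participant actually made the
                    final direction change.
    A smaller gap corresponds to higher advantageous timing.
    """
    # Assumption: ties are resolved in favor of the earliest maximum.
    optimal_frame = max(range(len(expected_value)),
                        key=expected_value.__getitem__)
    gap_frames = abs(actual_switch_frame - optimal_frame)
    return optimal_frame, gap_frames / frame_rate_hz
```

For example, a curve peaking at frame 2 and a final switch at frame 4 give a gap of two frames, or about 33 ms at 60 Hz.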

Behavioral results

We found that players’ behavior was well described by both our opponent sensitivity and advantageous timing metrics (see McDonald et al., 2019 for full description of behavior). For visualization, we plot four example trajectories (see Figure 2, in black) that illustrate the interaction between high/low opponent sensitivity and high/low advantageous timing. We found no linear relationship between opponent sensitivity and instantaneous probability of winning (Supplementary Figure 5). Across all trials, we found that high-scoring subjects placed their final change points during periods of relatively high expected value, according to their estimated value functions, whereas low-scoring subjects placed their final change points during periods of relatively low expected value. Thus, the behavior that differentiates high- from low-scoring subjects in the Penalty Shot Task is recognizing the advantageous time to make one’s final movement.

Opponent sensitivity: neural measures

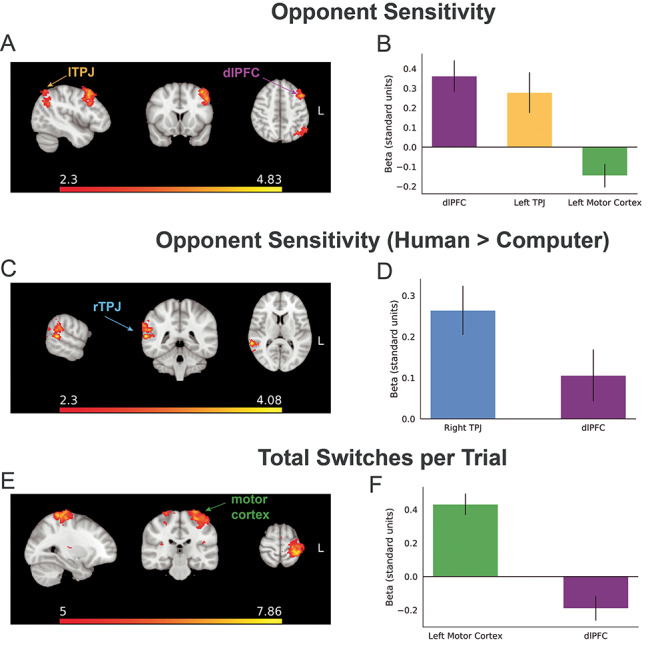

We examined which brain regions were parametrically modulated by opponent sensitivity during the game play phase. The dlPFC and left TPJ both exhibited activity patterns that positively correlated with overall opponent sensitivity across trials (see Figure 3A and B). Importantly, we orthogonalized the opponent sensitivity regressor with respect to the self-sensitivity regressor (see below) in order to capture specifically other-regarding processes above and beyond cognitive factors common to the performance of the task, such as high arousal or attention during difficult trials. We also observed an interaction of opponent sensitivity with opponent identity that was selective to the right TPJ: activity in this region correlated more strongly with opponent sensitivity during trials against the human opponent than during trials against the computer opponent (see Figure 3C and D).

Fig. 3.

Brain regions that tracked opponent sensitivity during game play. (A) We found regions within left dlPFC and left TPJ whose activation covaried with opponent sensitivity (orthogonalized with respect to self-sensitivity) on a trial-by-trial basis (dlPFC MNI X,Y,Z = −40, 16, 54, max z-statistic = 4.83; left TPJ X, Y, Z = −58, −64, 34; max z-statistic = 4.76). MNI coordinates in Supplementary Table S1. Brain images are presented in radiological convention (i.e. the right side of the image represents the left side of the brain). (B) Activation within the dlPFC was significantly modulated by opponent sensitivity. Conversely, activation within the motor cortex was instead negatively correlated with both sensitivity measures. Bar height shows subject-level mean z-score parameter estimate (see Materials and Methods); error bars denote standard error of the mean. (C) The rTPJ was the only brain region in which the correlation between activation and opponent sensitivity interacted with opponent identity; this correlation was stronger during trials played against the human opponent than during trials played against the computer opponent (rTPJ MNI X,Y,Z = 64, −42, 10, max z-statistic = 4.08). (D) rTPJ activation was greater during the opponent sensitivity × opponent identity contrast than activation from the dlPFC. (E) Control analyses identified a region of the motor cortex whose activation correlated positively with the number of directional switches made in a given trial (motor cortex MNI X,Y,Z = −36, −40, 68, max z-statistic = 7.86); no such effects of overall movement were observed in dlPFC, TPJ or other regions. (F) Within motor cortex, there was a strong effect of the number of switches, indicating that this region was not tracking our social sensitivity metrics per se, but tracking the overall motor behavior. See Materials and Methods for ROI definitions.

As referenced above, we calculated a control metric called self-sensitivity, which quantified the extent to which the participant’s likelihood of switching is sensitive to changes in the participant’s own position and velocity. Including self-sensitivity as a regressor in the GLM analysis provided a test of whether the dlPFC and/or TPJ activation was tracking one’s own past actions and the changes in behavior that those actions presage. No brain regions evinced a significant correlation with the self-sensitivity regressor after cluster-correction was applied.
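Orthogonalizing one parametric regressor with respect to another can be illustrated by residualization: the trial-wise opponent sensitivity values are mean-centered, and the component linearly predicted by self-sensitivity is removed. This is a generic sketch with a hypothetical function name; the text does not specify the software or exact procedure used for this step.

```python
def orthogonalize(target, reference):
    """Remove from `target` the part linearly explained by `reference`.

    Both inputs are trial-wise regressor values of equal length. The
    returned values are mean-centered and uncorrelated with `reference`.
    """
    n = len(target)
    mean_t = sum(target) / n
    mean_r = sum(reference) / n
    t_c = [t - mean_t for t in target]
    r_c = [r - mean_r for r in reference]
    denom = sum(r * r for r in r_c)
    if denom == 0:
        # A constant reference explains nothing beyond the mean.
        return t_c
    beta = sum(t * r for t, r in zip(t_c, r_c)) / denom
    return [t - beta * r for t, r in zip(t_c, r_c)]
```

After this step, any variance shared by the two regressors is attributed to self-sensitivity, so an effect of the orthogonalized opponent sensitivity regressor reflects only what self-sensitivity cannot explain.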

Next, we examined whether the dlPFC and TPJ were instead tracking the motor demands of the task, as indexed by the number of switch points; this analysis addressed the potential confounding factor that trials with high opponent sensitivity could involve more tracking-related switches. This analysis revealed strong contralateral motor cortex activation that positively correlated with the total number of switches made by each participant (see Figure 3E and F), but no dlPFC or TPJ activation—indicating that our effects of sensitivity cannot be explained as consequences of the motor demands of the task. Thus, we concluded that the dlPFC and TPJ tracked neither the motor demands of the task nor one’s own behavior; rather, these regions selectively tracked the extent to which the participant’s likely future actions were quantitatively coupled to an opponent’s actions.

We additionally examined the effects of opponent sensitivity for the opponent phase (i.e. before game play). During the opponent phase, activation in dmPFC predicted the level of opponent sensitivity on the upcoming trial’s game play (Supplementary Figure S3). This effect did not interact with the social identity of opponent (human or computer). Collectively, these results provide evidence that the dlPFC and TPJ selectively tracked the magnitude of social coupling between task-relevant agents and that this pattern of activity cannot be attributed to motor demands or tracking one’s own actions.

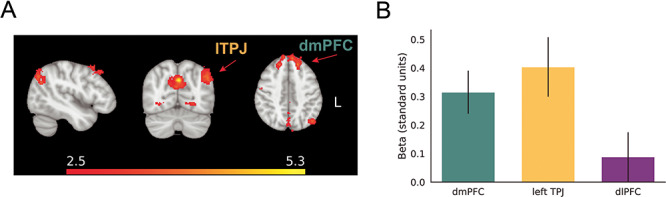

fMRI correlates of advantageous timing

We next investigated the effects of advantageous timing, specifically seeking brain regions that could distinguish trials in which the participant’s final change in direction was well-timed from trials in which it was less well-timed. During the outcome phase of the task, whole-brain analyses revealed regions in the dmPFC and left TPJ whose activation increased for trials with highly advantageous timing (Figure 4A and B). Unlike for opponent sensitivity, activation in dlPFC did not track advantageous timing (Figure 4B). No significant interaction effect of social vs non-social opponent identity with advantageous timing was found.

Fig. 4.

(A) Activation in dmPFC and left TPJ was parametrically modulated by the advantageous timing regressor during the outcome screen (MNI coordinates in Supplementary Table S2). Brain images are presented in radiological convention. (B) Each subject’s dmPFC, lTPJ and dlPFC contrast parameter estimate and variance estimate were extracted and z-scored to enable activation comparison across subjects. We observed a dissociation in which positive activation in dmPFC correlated with advantageous timing, whereas activation in dlPFC did not. This pattern is the reverse of that found for opponent sensitivity in which the dlPFC (and not the dmPFC) was positively correlated with opponent sensitivity. The lTPJ was found to be correlated with both opponent sensitivity and advantageous timing. Bar height shows subject-level mean z-score parameter estimate; error bars denote standard error of the mean. See Materials and Methods for ROI definitions.

Opponent identity

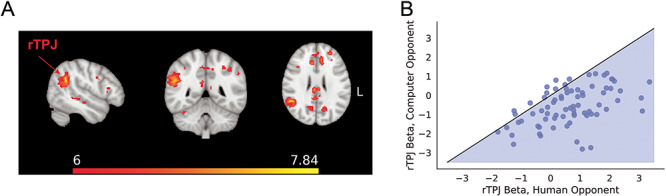

Next, we investigated the effects of opponent identity (i.e. computer vs human) on BOLD activation. During the opponent phase, we observed increased activation in the right TPJ (rTPJ) and dmPFC when the subjects were notified that they would play the upcoming trial against the human opponent rather than the computer opponent (Figure 5A). This effect held across the range of variability observed in our subject sample, such that nearly all participants individually evinced greater rTPJ activation when preparing to play against the human opponent (Figure 5B). Furthermore, at the outcome screen, we observed increased activation of the dmPFC during trials played against a human opponent compared to trials against the computer opponent (see Supplementary Figure 2). We also observed heightened activation in the rTPJ and inferior frontal cortex during game play against the human opponent compared to play against the computer opponent (see Supplementary Figure S3). Finally, in order to evaluate whether the relationship between the right TPJ and other brain regions varied as a function of opponent sensitivity, we conducted a psychophysiological interaction analysis. This analysis revealed that the functional connectivity between the right TPJ and the left TPJ (considered over the entire trial period) increased with increasing opponent sensitivity (see Supplementary Figure S1 and Note S1 for details).

Fig. 5.

(A) rTPJ and dmPFC activation was stronger when preparing to play a trial (during the opponent screen phase) against the human opponent, compared with trials against the computer opponent. Brain images are presented in radiological convention. (B) Scatter plot showing each subject’s z-scored rTPJ beta coefficient during the opponent screen phase when preparing to play against the human opponent (x-axis) vs the computer opponent (y-axis). The shaded region indicates a numerically greater TPJ response to the human opponent than to the computer opponent; a substantial majority of subjects exhibited that pattern in their data, consistent with a social bias toward the human opponent.

Discussion

The current study derived computational measures of adaptive behavior within a dynamic social game and linked those measures to trial-to-trial fluctuations in activation in distinct brain regions as measured by fMRI. There were three primary results. First, a region within dlPFC tracked the ongoing coupling of a participant’s behavior to their opponent (i.e. opponent sensitivity), regardless of whether the opponent was another human or a computer. Second, a region within dmPFC tracked the optimality of the participant’s final movement (i.e. advantageous timing), again independent of the identity of the opponent. Third, activation within social cognitive regions, most notably the TPJ, encoded the identity of the opponent across different phases of the task, with multiple analyses demonstrating heightened rTPJ activation during interaction with the human opponent compared with interaction with the computer opponent. Collectively, these results support the conclusion that distinct brain regions track the information carried by another agent’s dynamic behavior separately from information about the nature of that agent itself.

The recent explosion in research interest in social decision-making has been aided by simple, yet sophisticated paradigms from game theory, by computational models stemming from reinforcement learning and by neuroimaging methodologies that probe the underlying neural mechanisms. Tightly constrained game theory paradigms such as the Trust Game and Prisoner’s Dilemma have provided insight into common behaviors observed in dyads, including trust, fairness, social punishment and social learning (Fehr and Gächter, 2000; Nowak et al., 2000; Sanfey et al., 2003; Delgado et al., 2005; Behrens et al., 2008; Rilling and Sanfey, 2011). The now common pairing of fMRI methods with computational modeling has allowed for more nuanced hypotheses regarding possible latent variables that play a key role in social decision-making, such as trust, influence of one’s actions on an opponent and learning rates of both self and other (Behrens et al., 2008; Hampton et al., 2008; Kwak et al., 2014). Furthermore, across multiple studies investigating social decision-making, a core network of brain regions has been frequently implicated in social information processing, including the dmPFC, dlPFC and the TPJ. Despite the progress in this vein of research, there still exist many open questions.

One such question is to what extent these neuroscientific findings extend to dynamic social interactions like those observed in naturalistic settings (Zaki and Ochsner, 2009; Iqbal et al., 2019; McDonald et al., 2019)—answers to which will be critical for advancing the field. Indeed, the participant does not in fact need to know which opponent (human/social or computer/nonsocial) they are playing against in order to win. We thus interpret this aspect of our paradigm as a feature that strengthens the conclusions we can draw: brain regions associated with social cognition become engaged even under highly abstracted conditions with minimal social cues (Carter et al., 2012; Lee and Harris, 2013). Current research using even more naturalistic social stimuli, such as virtual reality interactions with other agents in an environment, could contribute additional insights about how more complex social conditions (e.g. seeing an opponent’s face or hearing their voice) influence the mechanisms of choice (Zaki and Ochsner, 2009; Vodrahalli et al., 2018).

Our paradigm allowed us to infer how different brain regions distinctly contributed to the computational process of making a complex competitive decision against an opponent. Our research thus builds on an important vein of game theory literature that examines human behavior in dynamic contexts, such as studying sequential decisions in which people aim to establish a good reputation for future interactions (Weigelt and Camerer, 1988; Milinski et al., 2002; Brandt et al., 2003). Multi-round interactions with the same individuals allow for computational claims to be made about how people signal a group-beneficial play style in games such as Prisoner’s Dilemma (Brandt et al., 2003), how past behavior can lead to inference on an agent’s reputation (Weigelt and Camerer, 1988) and under what conditions the goal of establishing a pro-social reputation affects the level of public good contributions (Milinski et al., 2002). We integrated this idea of reputation by including an opponent experience variable ranging from 0 (first trial) to 1 (last trial), which reflected potential strategic adaptation over the course of the experiment between participant-goalie pairs.
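As a minimal sketch of how such an experience covariate might be constructed, the Python snippet below rescales trial order onto the interval from 0 to 1. The linear spacing and the function name `opponent_experience` are assumptions made for illustration; the text specifies only the endpoints (0 for the first trial, 1 for the last).

```python
def opponent_experience(n_trials):
    """Return one value per trial, rising from 0.0 (first trial) to 1.0 (last trial).

    Assumption: values are spaced linearly by trial order. The source text
    states only the two endpoints, not the spacing in between.
    """
    if n_trials < 1:
        raise ValueError("n_trials must be at least 1")
    if n_trials == 1:
        # A single trial is both first and last; 0.0 is an arbitrary choice.
        return [0.0]
    return [i / (n_trials - 1) for i in range(n_trials)]


# Hypothetical usage: a participant who played 5 trials against one opponent.
experience = opponent_experience(5)
```

In practice the covariate would be computed separately for each participant-opponent pair, using the number of trials that pair actually played.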

Our finding that activation in dlPFC tracked opponent sensitivity during game play is consistent with findings from the social cognition literature suggesting that the dlPFC is involved in adapting one’s actions after observing the actions of an opponent (Barraclough et al., 2004), as well as generating social action prediction errors (Burke et al., 2010; Suzuki et al., 2012). In addition, the dlPFC is commonly active in tasks involving cognitive control and complex decision-making (Dunne and O’Doherty, 2013; Leong et al., 2017; Frings et al., 2018). Importantly, control analyses ruled out alternative explanations for these effects. Neither overall motor demands (e.g. number of direction switches in a trial) nor self-sensitivity (e.g. influences of one’s own prior actions upon behavior) modulated activation in dlPFC, indicating that this activation reflected information specific to the dyadic interaction between players. This provides support for the view that dlPFC activation does not reflect motor planning demands but, instead, tracks a task-relevant opponent whose current and future goals and actions must be inferred.

We also found that the dmPFC tracked different behavioral quantities before and during game play. In advance of game play, activation in dmPFC predicted opponent sensitivity on the upcoming trial; during game play, however, it tracked the advantageous timing of the participant’s final change in direction. Considered together, these results point to a higher-level role for the dmPFC in the control of dynamic behavior, such that it prepares behavior for the current task context: setting up a strategy in advance of the trial and optimizing the execution of that strategy during the trial as play evolves. This corroborates other findings that the dmPFC plays an important role in representing self-referential outcomes in goal-directed tasks (Skerry and Saxe, 2014) and predicting outcomes in social, strategic contexts (Frith and Frith, 2006; Behrens et al., 2008; Burke et al., 2010; Lee and Seo, 2016).

While the above prefrontal regions tracked distinct quantities associated with behavior, activation in the TPJ tracked not only both of these quantities but also the identity of the opponent, with evidence for a hemispheric dissociation between these roles. The right TPJ was more active for the human opponent than the computer opponent both during game play and during the pre-trial period in which subjects learned the identity of the opponent. Activation in the right TPJ was also more strongly correlated with opponent sensitivity during game play against the human opponent than during game play against the computer opponent. This social bias has been found in many other studies linking the rTPJ to social cognition and theory of mind processes (Gallagher and Frith, 2003; Saxe and Kanwisher, 2003; Carter et al., 2012; Lee and Seo, 2016), including the hypothesis that TPJ constructs a task-relevant social context for adaptive decision-making (Carter et al., 2012; Carter and Huettel, 2013; Hill et al., 2017). In comparison, the lTPJ activation correlated with each of the behavioral measures examined during game play, suggesting that these quantities computed during dynamic game play rely on a brain region that supports processes related to inferring the mental states of others (Samson et al., 2004; Saxe, 2006; Lewis et al., 2011).

One valuable future direction for this work could come through the use of parallel recording methodologies such as hyper-scanning, in which the neural activity of both interacting humans in a given social interaction is compared (Montague et al., 2002; King-Casas et al., 2005). We have shown that applying our established computational modeling framework to human neuroimaging data yields neural activity that overlaps with many brain regions frequently implicated in social cognition and value-based decision-making. Thus, it would be particularly interesting to study the correlated dynamics of both players in such a setup.

Cognitive neuroscience has revealed neural mechanisms that underlie strategizing, mentalizing and value-based decision-making by using simple, constrained paradigms borrowed from game theory in neuroimaging studies (Sanfey et al., 2003; King-Casas et al., 2005; Hampton et al., 2008; Yoshida et al., 2010). Moving forward, combining dynamic paradigms with computational modeling of behavior will benefit our collective understanding of how humans interact with each other in social contexts.

Materials and Methods

Subjects

All analyses report data from 75 participants (41 female; mean age 25.9 years; s.d. = 6.8 years; age range 18–48 years) who played the Penalty Shot Task against both a human opponent and a computer opponent while scanned using fMRI. Data were collected from a total of 82 participants; 7 participants were excluded prior to data analyses due to excessive head motion (3 mm criterion) within the scanner, leaving a total of 75 participants. All participants were right-handed and free of neurological and psychiatric disorders, by self-report. All participants provided written informed consent approved by the Institutional Review Board of Duke University Medical Center and were informed that no deception would be used throughout the experiment. Two additional participants (not included in n = 75) played the role of the human opponent in the task; each of our fMRI participants met one of these individuals before the scanning session and played against the same opponent throughout their session. The human opponents were not members of the study team and had no stake in the outcome of the study aside from maximizing their own compensation.

Experimental design: penalty shot task

The experiment began with a 4 min practice run followed by three experimental runs of game play, each approximately 12 min long, with short breaks between runs. Participants played as many trials as they could within each 12 min block; each participant engaged in roughly 200 trials in total (i.e. approximately 100 trials per opponent).

Each trial began with a prompt instructing the subject to center the joystick, followed by a fixation cross which was presented for a jittered time interval of 1.0–7.5 s. When the fixation cross disappeared, the Opponent Screen displayed (for 2 s) the identity of the opponent on the upcoming trial (either ‘Computer’ or the name of the human opponent). After a second fixation cross, the Game Play period started; the participant’s task was to move the puck across the goal line near the right side of the screen before the opponent could block it. Each trial lasted roughly 1.5 s. Following the end of a trial, an Outcome Screen displayed either ‘Win’ or ‘Loss’ to indicate the trial’s outcome. After the experiment, participants completed a post-task survey, were debriefed and compensated. For full details of the experimental paradigm including the physical dynamics of both players, see McDonald et al., 2019. Stimuli were projected onto a screen located in the scanner bore. The Penalty Shot Task was programmed using the Psychophysics Toolbox in MATLAB (Brainard, 1997).

The task was incentive compatible: each trial won by the experimental subject went toward a task bonus that was paid in addition to the baseline participation rate; similarly, the human opponent (the long-term participant) was paid a separate task bonus for each trial that the experimental subject lost. Thus, both players were incentivized to win as many trials as possible.

For detailed descriptions of the algorithm used by the computer opponent and the Penalty Shot Task itself, including videos of game play, please see McDonald et al., 2019.

fMRI acquisition and preprocessing

Imaging acquisition

Functional MRI data were collected using a General Electric MR750 3.0 tesla scanner. Images sensitive to BOLD contrast were acquired using an echo-planar imaging sequence: [repetition time (TR), 2000 ms; echo time (TE), 28 ms; matrix, 64 × 64; field of view (FOV), 192 × 192 mm²; flip angle, 90°; voxel size, 3.0 × 3.0 × 3.8 mm]. Before preprocessing the functional data, we discarded the first four volumes of each run to allow for magnetic stabilization. We also acquired whole-brain, high-resolution anatomical scans to facilitate coregistration and normalization of the functional data (T1-weighted; TR, 8.16 ms; TE, 3.18 ms; matrix, 256 × 256; FOV, 256 mm; flip angle, 12°; voxel size, 1.0 × 1.0 × 1.0 mm).
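The acquisition parameters above can be cross-checked with simple arithmetic. The sketch below, offered only as an illustration, derives the in-plane voxel size from the field of view and matrix size, and estimates the number of volumes retained per run. The 12 min run length is the approximate figure given in the experimental design section, so the volume counts are nominal rather than exact; all variable and function names are hypothetical.

```python
TR_S = 2.0             # repetition time in seconds (2000 ms)
RUN_MINUTES = 12       # nominal run length; runs were "approximately" 12 min
DISCARDED_VOLUMES = 4  # initial volumes dropped for magnetic stabilization


def in_plane_voxel_mm(fov_mm, matrix_size):
    """In-plane voxel edge length: field of view divided by matrix size."""
    return fov_mm / matrix_size


# Functional images: 192 mm FOV over a 64 x 64 matrix.
functional_voxel = in_plane_voxel_mm(192, 64)

# Anatomical images: 256 mm FOV over a 256 x 256 matrix.
anatomical_voxel = in_plane_voxel_mm(256, 256)

# Nominal number of volumes acquired and retained per run.
volumes_per_run = int(RUN_MINUTES * 60 / TR_S)
volumes_retained = volumes_per_run - DISCARDED_VOLUMES
```

Both derived voxel sizes agree with the in-plane dimensions reported in the sequence description (3.0 mm functional, 1.0 mm anatomical).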

Imaging analysis

Preprocessing was conducted using the FMRIB Software Library (FSL version 5.0.1, https://fsl.fmrib.ox.ac.uk/fsl/). Motion correction was conducted using MCFLIRT (Jenkinson et al., 2002). We removed non-brain material using the brain extraction tool, BET (Smith, 2002). Intravolume slice-timing correction was conducted using Fourier-space time-series phase-shifting (Sladky et al., 2011). Images were spatially smoothed using a 5 mm full-width at half-maximum Gaussian kernel. We then applied a high-pass temporal filter with a 50 s cutoff (Gaussian-weighted least-squares straight line fitting, with σ = 50 s). Finally, each run was grand-mean intensity normalized using a single multiplicative factor. Before group analyses, functional data were spatially normalized to the standard MNI avg152 T1-weighted template (2 mm isotropic resolution) using a 12-parameter affine transform implemented in FLIRT (Jenkinson and Smith, 2001).
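For readers who want to relate the smoothing and filtering parameters to other conventions, the following sketch converts the 5 mm full-width at half-maximum (FWHM) into the standard deviation of the equivalent Gaussian kernel, using the standard relation FWHM = 2·sqrt(2·ln 2)·σ, and expresses the stated 50 s filter width in units of volumes given the 2 s TR. It is a unit conversion only and does not reproduce FSL's internal filtering; the function and variable names are hypothetical.

```python
import math


def fwhm_to_sigma(fwhm):
    """Convert a Gaussian kernel's FWHM to its standard deviation (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


# Spatial smoothing kernel reported in the text: 5 mm FWHM.
smoothing_sigma_mm = fwhm_to_sigma(5.0)

# High-pass filter width reported in the text (50 s), expressed in volumes
# for a repetition time of 2 s.
TR_S = 2.0
filter_width_volumes = 50.0 / TR_S
```

The smoothing kernel's standard deviation comes out a little over 2.1 mm, and the 50 s filter width corresponds to 25 volumes.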

Neuroimaging analyses were conducted using FEAT (fMRI Expert Analysis Tool) version 6.00 (Smith et al., 2004; Woolrich et al., 2009). The first-level analyses (within-run) used a general linear model with local autocorrelation correction (Woolrich et al., 2001) consisting of regressors modeling each phase’s (Opponent pre-trial screen, Game Play screen, Outcome post-trial screen) time of onset and duration, as well as opponent identity (human or computer) and trial outcome. In addition, we included four parametric regressors describing trial-level behavior: (i) mean opponent sensitivity on that trial (orthogonalized with respect to self-sensitivity), (ii) mean self-sensitivity on that trial, (iii) the advantageous timing metric on that trial and (iv) the total number of vertical direction changes made on that trial. Both main and interaction effects of opponent identity (human or computer opponent) were included in the first-level models. We also included nuisance regressors to account for motion. Aside from the head motion nuisance regressors, all regressors were convolved with a canonical hemodynamic response function. To verify that our conclusions about opponent sensitivity were not biased by the complexity of the model, we ran a separate, smaller GLM which only included two regressors: self-sensitivity and opponent sensitivity (orthogonalized with respect to self-sensitivity). Our key results were all observed in this simplified model (see Supplementary Figure S3 and Supplementary Table S3).
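To make the orthogonalization step concrete, the sketch below removes from one trial-level regressor the component that is linearly predictable from another, which is the general idea behind orthogonalizing opponent sensitivity with respect to self-sensitivity. This is a plain least-squares projection on mean-centered values, written for illustration; it operates on raw trial-level numbers, ignores hemodynamic convolution, and is not claimed to match FEAT's implementation in detail. The function names and example values are hypothetical.

```python
def mean_center(values):
    """Subtract the mean so that the regressor has zero mean."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def orthogonalize(target, reference):
    """Return `target` with its linear dependence on `reference` removed.

    Both inputs are mean-centered first. The result is uncorrelated with
    `reference`, so any variance the two regressors share is attributed
    to `reference` when both enter the same linear model.
    """
    t = mean_center(target)
    r = mean_center(reference)
    denom = sum(v * v for v in r)
    if denom == 0.0:
        # A constant reference carries no information to remove.
        return t
    beta = sum(a * b for a, b in zip(t, r)) / denom
    return [a - beta * b for a, b in zip(t, r)]


# Hypothetical trial-level values, for illustration only.
self_sensitivity = [1.0, 2.0, 3.0, 4.0]
opponent_sensitivity = [2.0, 1.0, 4.0, 3.0]
opponent_orth = orthogonalize(opponent_sensitivity, self_sensitivity)
```

After this step, `opponent_orth` has zero inner product with the mean-centered self-sensitivity values, so the opponent-sensitivity effect reflects only variance not already explained by self-sensitivity.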

Data across runs (second-level, within-participant) as well as data across participants (third-level) were combined hierarchically using a mixed-effects model (Beckmann et al., 2003; Woolrich et al., 2004). Statistical significance was assessed using cluster-thresholding, implemented in FEAT. Z-statistic images were thresholded using clusters determined by Z > 2.3 and a corrected cluster significance threshold of P = 0.05 (Worsley, 2001). Statistical overlay images were created using FSLeyes (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/FSLeyes). All coordinates are reported in MNI space.
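As a small worked example, the cluster-forming threshold of Z > 2.3 can be translated into the uncorrected, one-tailed voxelwise probability it implies under a standard normal distribution. The sketch below performs only that conversion; the cluster-level correction applied in FEAT is a separate step and is not reproduced here. The function name is hypothetical.

```python
import math


def z_to_one_tailed_p(z):
    """Upper-tail probability of a standard normal variable exceeding z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Cluster-forming threshold reported in the text.
p_uncorrected = z_to_one_tailed_p(2.3)
```

The resulting value is roughly 0.011, meaning that about 1% of voxels would exceed the cluster-forming threshold by chance before the cluster-extent correction is applied.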

The subject-level contrast parameter estimates shown in the bar graphs were obtained by extracting the mean and variance contrast parameter estimates for each within-subject region of interest (ROI). ROIs were defined as 8 mm radius spheres around centroids of the activation regions observed within dlPFC (MNI −48, 20, 34), left TPJ (MNI −46, −68, 36) and right TPJ (MNI 54, −50, 24). For the control analyses of the left motor cortex ROI, we extracted the left post-central gyrus (PoGy_016) from the Harvard-Oxford anatomical atlas. Parameter estimates were converted to percent (%) activation before z-scoring to enable comparison across subjects in common units. Z-scores were determined by dividing the ROI’s mean contrast estimate by the ROI’s variance contrast estimate. Given that we used functionally defined ROIs to illustrate the pattern of activation, and to avoid circularity in our analyses, we do not report significance testing on the column graphs in Figures 3 and 4.
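The spherical ROI definition can be illustrated with a short sketch that enumerates the grid points lying within 8 mm of a centroid. It assumes, for illustration only, that the sphere is built on a 2 mm isotropic grid aligned with the centroid (matching the resolution of the normalization template); the text does not state the grid on which the ROIs were constructed, and the function name `sphere_roi` is hypothetical.

```python
def sphere_roi(center_mm, radius_mm=8, step_mm=2):
    """List grid coordinates (in mm) within `radius_mm` of `center_mm`.

    Assumption: the grid has `step_mm` spacing and passes through the
    center coordinate, so offsets are whole multiples of the step.
    """
    cx, cy, cz = center_mm
    n_steps = radius_mm // step_mm
    offsets = [i * step_mm for i in range(-n_steps, n_steps + 1)]
    radius_sq = radius_mm ** 2
    return [
        (cx + dx, cy + dy, cz + dz)
        for dx in offsets
        for dy in offsets
        for dz in offsets
        if dx * dx + dy * dy + dz * dz <= radius_sq  # inside or on the sphere
    ]


# Centroid of the dlPFC region reported in the text (MNI coordinates).
dlpfc_roi = sphere_roi((-48, 20, 34))
```

Averaging a contrast image over the coordinates in `dlpfc_roi` would then give one ROI-level estimate per subject.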

Supplementary Material

Acknowledgements

We are thankful to the staff at Duke’s Brain Imaging Analysis Center (BIAC) for assistance with data collection.

Conflict of interest. The authors declare no conflicts of interest for this research.

Funding

Research reported in this publication was supported by a BD2K Career Development Award (K01-ES-025442) to J.M.P., an NIMH R37-109728 to J.M.P., an NIMH R01-108627 to S.A.H. and a National Science Foundation Graduate Research Fellowship DGE-1644868 and a Duke Dean’s Graduate Fellowship to K.M. Computational resources were supported by NIH S10-OD-021480. The GPU used for this research was donated by the NVIDIA Corporation.

References

- Amodio D.M., Frith C.D. (2006). Meeting of minds: the medial frontal cortex and social cognition. Nature Reviews Neuroscience, 7, 268.

- Apps M., Ramnani N. (2017). Contributions of the medial prefrontal cortex to social influence in economic decision-making. Cerebral Cortex, 27, 4635–48.

- Axelrod R., Hamilton W.D. (1981). The evolution of cooperation. Science, 211, 1390–6.

- Barraclough D.J., Conroy M.L., Lee D. (2004). Prefrontal cortex and decision making in a mixed-strategy game. Nature Neuroscience, 7, 404.

- Beckmann C.F., Jenkinson M., Smith S.M. (2003). General multilevel linear modeling for group analysis in FMRI. NeuroImage, 20, 1052–63.

- Behrens T.E., Hunt L.T., Woolrich M.W., Rushworth M.F. (2008). Associative learning of social value. Nature, 456, 245.

- Brainard D.H. (1997). The psychophysics toolbox. Spatial Vision, 10, 433–6.

- Brandt H., Hauert C., Sigmund K. (2003). Punishment and reputation in spatial public goods games. Proceedings of the Royal Society of London Series B, 270, 1099–104.

- Burke C.J., Tobler P.N., Baddeley M., Schultz W. (2010). Neural mechanisms of observational learning. Proceedings of the National Academy of Sciences, 107, 14431–6.

- Camerer C.F. (2011). Behavioral Game Theory: Experiments in Strategic Interaction, Princeton University Press.

- Carter R.M., Huettel S.A. (2013). A nexus model of the temporal–parietal junction. Trends in Cognitive Sciences, 17, 328–36.

- Carter R.M., Bowling D.L., Reeck C., Huettel S.A. (2012). A distinct role of the temporal-parietal junction in predicting socially guided decisions. Science, 337, 109–11.

- Coricelli G., Nagel R. (2009). Neural correlates of depth of strategic reasoning in medial prefrontal cortex. Proceedings of the National Academy of Sciences, 106, 9163–8.

- Delgado M.R., Frank R.H., Phelps E.A. (2005). Perceptions of moral character modulate the neural systems of reward during the trust game. Nature Neuroscience, 8, 1611.

- Dunne S., O’Doherty J.P. (2013). Insights from the application of computational neuroimaging to social neuroscience. Current Opinion in Neurobiology, 23, 387–92.

- Fehr E., Gächter S. (2000). Fairness and retaliation: the economics of reciprocity. Journal of Economic Perspectives, 14, 159–81.

- Frings C., Brinkmann T., Friehs M.A., Lipzig T. (2018). Single session tDCS over the left DLPFC disrupts interference processing. Brain and Cognition, 120, 1–7.

- Frith C.D., Frith U. (2006). The neural basis of mentalizing. Neuron, 50, 531–4.

- Gallagher H.L., Frith C.D. (2003). Functional imaging of ‘theory of mind’. Trends in Cognitive Sciences, 7, 77–83.

- Hampton A.N., Bossaerts P., O’Doherty J.P. (2008). Neural correlates of mentalizing-related computations during strategic interactions in humans. Proceedings of the National Academy of Sciences, 105, 6741–6.

- Hill C.A., Suzuki S., Polania R., Moisa M., O’Doherty J.P., Ruff C.C. (2017). A causal account of the brain network computations underlying strategic social behavior. Nature Neuroscience, 20, 1142.

- Iqbal S.N., Yin L., Drucker C.B., et al. (2019). Latent goal models for dynamic strategic interaction. PLoS Computational Biology, 15, e1006895.

- Jenkins A.C., Karashchuk P., Zhu L., Hsu M. (2018). Predicting human behavior toward members of different social groups. Proceedings of the National Academy of Sciences, 115, 9696–701.

- Jenkinson M., Smith S. (2001). A global optimisation method for robust affine registration of brain images. Medical Image Analysis, 5, 143–56.

- Jenkinson M., Bannister P., Brady M., Smith S. (2002). Improved optimization for the robust and accurate linear registration and motion correction of brain images. NeuroImage, 17, 825–41.

- Kable J.W., Glimcher P.W. (2007). The neural correlates of subjective value during intertemporal choice. Nature Neuroscience, 10, 1625.

- King-Casas B., Tomlin D., Anen C., Camerer C.F., Quartz S.R., Montague P.R. (2005). Getting to know you: reputation and trust in a two-person economic exchange. Science, 308, 78–83.

- Kishida K.T., Montague P.R. (2012). Imaging models of valuation during social interaction in humans. Biological Psychiatry, 72, 93–100.

- Kolling N., Wittmann M.K., Behrens T.E., Boorman E.D., Mars R.B., Rushworth M.F. (2016). Value, search, persistence and model updating in anterior cingulate cortex. Nature Neuroscience, 19, 1280.

- Koster-Hale J., Richardson H., Velez N., Asaba M., Young L., Saxe R. (2017). Mentalizing regions represent distributed, continuous, and abstract dimensions of others’ beliefs. NeuroImage, 161, 9–18.

- Kreps D.M. (1990). Game Theory and Economic Modelling. Oxford University Press.

- Kwak Y., Pearson J., Huettel S.A. (2014). Differential reward learning for self and others predicts self-reported altruism. PLoS One, 9, e107621.

- Lee V., Harris L. (2013). How social cognition can inform social decision making. Frontiers in Neuroscience, 7, 259.

- Lee D., Seo H. (2016). Neural basis of strategic decision making. Trends in Neurosciences, 39, 40–8.

- Leibo J.Z., Zambaldi V., Lanctot M., Marecki J., Graepel T. (2017). Multi-agent reinforcement learning in sequential social dilemmas. In: Proceedings of the 16th Conference on Autonomous Agents and MultiAgent Systems. pp. 464–473.

- Leong Y.C., Radulescu A., Daniel R., DeWoskin V., Niv Y. (2017). Dynamic interaction between reinforcement learning and attention in multidimensional environments. Neuron, 93, 451–63.

- Levy I., Snell J., Nelson A.J., Rustichini A., Glimcher P.W. (2009). Neural representation of subjective value under risk and ambiguity. Journal of Neurophysiology, 103, 1036–47.

- Lewis P.A., Rezaie R., Brown R., Roberts N., Dunbar R.I. (2011). Ventromedial prefrontal volume predicts understanding of others and social network size. NeuroImage, 57, 1624–9.

- McDonald K.R., Broderick W.F., Huettel S.A., Pearson J.M. (2019). Bayesian nonparametric models characterize instantaneous strategies in a competitive dynamic game. Nature Communications, 10, 1808.

- Milinski M., Semmann D., Krambeck H.J. (2002). Reputation helps solve the ‘tragedy of the commons’. Nature, 415, 424–6.

- Montague P.R., Berns G.S., Cohen J.D., et al. (2002). Hyperscanning: simultaneous fMRI during linked social interactions. NeuroImage, 16(4), 1159.

- Nash J.F. (1950). Equilibrium points in n-person games. Proceedings of the National Academy of Sciences, 36, 48–9.

- Nowak M.A., Page K.M., Sigmund K. (2000). Fairness versus reason in the ultimatum game. Science, 289, 1773–5.

- Piva M., Velnoskey K., Jia R., Nair A., Levy I., Chang S.W. (2019). The dorsomedial prefrontal cortex computes task-invariant relative subjective value for self and other. eLife, 8, e44939.

- Poncela-Casasnovas J., Gutiérrez-Roig M., Gracia-Lázaro C., et al. (2016). Humans display a reduced set of consistent behavioral phenotypes in dyadic games. Science Advances, 2(8), e1600451.

- Rapoport A., Chammah A.M., Orwant C.J. (1965). Prisoner’s Dilemma: A Study in Conflict and Cooperation, Vol. 165, University of Michigan Press.

- Rasmussen C.E., Williams C.K. (2006). Gaussian Processes for Machine Learning, Vol. 2, Cambridge, MA: MIT Press.

- Redcay E., Schilbach L. (2019). Using second-person neuroscience to elucidate the mechanisms of social interaction. Nature Reviews Neuroscience, 20(8), 495–505.

- Rilling J.K., Sanfey A.G. (2011). The neuroscience of social decision-making. Annual Review of Psychology, 62, 23–48.

- Rilling J.K., Gutman D.A., Zeh T.R., Pagnoni G., Berns G.S., Kilts C.D. (2002). A neural basis for social cooperation. Neuron, 35, 395–405.

- Rilling J.K., Sanfey A.G., Aronson J.A., Nystrom L.E., Cohen J.D. (2004). The neural correlates of theory of mind within interpersonal interactions. NeuroImage, 22, 1694–703.

- Ruff C.C., Fehr E. (2014). The neurobiology of rewards and values in social decision making. Nature Reviews Neuroscience, 15, 549–62.

- Samson D., Apperly I.A., Chiavarino C., Humphreys G.W. (2004). Left temporoparietal junction is necessary for representing someone else’s belief. Nature Neuroscience, 7, 499.

- Sanfey A.G., Rilling J.K., Aronson J.A., Nystrom L.E., Cohen J.D. (2003). The neural basis of economic decision-making in the ultimatum game. Science, 300, 1755–8.

- Saxe R. (2006). Uniquely human social cognition. Current Opinion in Neurobiology, 16, 235–9.

- Saxe R., Kanwisher N. (2003). People thinking about thinking people: the role of the temporo-parietal junction in “theory of mind”. NeuroImage, 19, 1835–42.

- Schurz M., Radua J., Aichhorn M., Richlan F., Perner J. (2014). Fractionating theory of mind: a meta-analysis of functional brain imaging studies. Neuroscience & Biobehavioral Reviews, 42, 9–34.

- Siegel J.Z., Mathys C., Rutledge R.B., Crockett M.J. (2018). Beliefs about bad people are volatile. Nature Human Behaviour, 2, 750.

- Skerry A.E., Saxe R. (2014). A common neural code for perceived and inferred emotion. Journal of Neuroscience, 34, 15997–6008.

- Sladky R., Friston K.J., Tröstl J., Cunnington R., Moser E., Windischberger C. (2011). Slice-timing effects and their correction in functional MRI. NeuroImage, 58, 588–94.

- Smith S.M. (2002). Fast robust automated brain extraction. Human Brain Mapping, 17, 143–55.

- Smith S.M., Jenkinson M., Woolrich M.W., et al. (2004). Advances in functional and structural MR image analysis and implementation as FSL. NeuroImage, 23, S208–19.

- Suzuki S., Harasawa N., Ueno K., et al. (2012). Learning to simulate others’ decisions. Neuron, 74, 1125–37.

- Vickery T.J., Kleinman M.R., Chun M.M., Lee D. (2015). Opponent identity influences value learning in simple games. Journal of Neuroscience, 35, 11133–43.

- Vodrahalli K., Chen P.H., Liang Y., et al. (2018). Mapping between fMRI responses to movies and their natural language annotations. NeuroImage, 180, 223–31.

- Von Neumann J., Morgenstern O. (1944). Theory of Games and Economic Behavior. Princeton University Press.

- Weigelt K., Camerer C. (1988). Reputation and corporate strategy: a review of recent theory and applications. Strategic Management Journal, 9, 443–54.

- Wheatley T., Boncz A., Toni I., Stolk A. (2019). Beyond the isolated brain: the promise and challenge of interacting minds. Neuron, 103, 186–8.

- Woolrich M.W., Ripley B.D., Brady M., Smith S.M. (2001). Temporal autocorrelation in univariate linear modeling of FMRI data. NeuroImage, 14, 1370–86.

- Woolrich M.W., Behrens T.E., Beckmann C.F., Jenkinson M., Smith S.M. (2004). Multilevel linear modelling for FMRI group analysis using Bayesian inference. NeuroImage, 21, 1732–47.

- Woolrich M.W., Jbabdi S., Patenaude B., et al. (2009). Bayesian analysis of neuroimaging data in FSL. NeuroImage, 45, S173–86.

- Worsley K.J. (2001). Statistical analysis of activation images. In: Jezzard P., Matthews P.M., Smith S.M. (eds). Functional MRI: An Introduction to Methods, Vol. 251.

- Yoshida W., Seymour B., Friston K.J., Dolan R.J. (2010). Neural mechanisms of belief inference during cooperative games. Journal of Neuroscience, 30, 10744–51.

- Zaki J., Ochsner K. (2009). The need for a cognitive neuroscience of naturalistic social cognition. Annals of the New York Academy of Sciences, 1167, 16.
