Abstract

Objective

Financial impacts associated with a switch to a different electronic health record (EHR) have been documented. Less attention has been focused on the patient response to an EHR switch. The Mayo Clinic was involved in an EHR switch that occurred at 6 different locations and with 4 different “go-live” dates. We sought to understand the relationship between patient satisfaction and the transition to a new EHR.

Materials and Methods

We used patient satisfaction data collected by Press Ganey from July 2016 through December 2019. Our patient satisfaction measure was the percent of patients responding “very good” (top box) to survey questions. Twenty-four survey questions were summarized by Press Ganey into 6 patient satisfaction domains. Piecewise linear regression was used to model patient satisfaction before and after the EHR switch dates.

Results

Significant drops in patient satisfaction were associated with the EHR switch. Patient satisfaction with access (ease of getting clinic on phone, ease of scheduling appointments, etc.) was most affected (absolute decline across the 6 sites: -3.4% to -8.8%; all significant at the 99% confidence level). Satisfaction with providers was least affected (absolute decline across the 6 sites: -0.5% to -2.8%; 4 of 6 sites significant at the 99% confidence level). After 9-15 months, patient satisfaction with access climbed back to pre-EHR switch levels.

Conclusions

Patient satisfaction in several patient experience domains dropped significantly and stayed lower than pre–“go-live” for several months after a switch in EHR. Satisfaction with providers declined less than satisfaction with access.

Keywords: electronic health record, patient satisfaction, patient experience, Press Ganey, electronic medical record

INTRODUCTION

According to the Office of the National Coordinator for Health Information Technology, 96% of nonfederal acute care hospitals had electronic health record (EHR) software in 2017.1 There were 186 certified health information technology (IT) developers in 2016 who supplied certified health IT to 4520 nonfederal acute care hospitals.2 Also in 2016, there were 684 vendors supplying certified health IT to 384 395 ambulatory healthcare providers.3 Although both the hospital and ambulatory care EHR markets are largely dominated by 10 vendors,2,3 there is likely to be additional consolidation to 3 major vendors.4 This means that a significant number of hospitals and ambulatory care providers will likely switch to a different EHR as marginal vendors leave the market and other factors encourage hospitals and ambulatory practices to move to 1 of the 3 major EHR vendors.

The implementation of an EHR in a medical system is complex due to the multiple missions of medical institutions (eg, patient care, education, research), varied and complicated structures, and a workforce with expertise and autonomy.5 Media attention has provided insight into the financial challenges experienced by hospitals and healthcare systems when switching and implementing EHRs.6–10 Informaticists and others have also been examining the impact of the EHR on patient satisfaction and patient-provider communication since the mid-1990s. Alkureishi et al11 in 2016 published a systematic review on the impact of the EHR on the patient-doctor relationship and communication. In that review, the authors identified 9 studies of 53 reviewed that used pre- and post-EHR patient surveys. One of the larger studies from Australia by Fairley et al12 used more than 18 000 patient surveys comparing paper medical records to EHRs and showed no decline in patient satisfaction. Another study in the United States by Nagy et al13 at Kaiser Permanente reported on more than 11 000 patient surveys pre- and postintroduction of exam room computers (with EHR) and also showed no satisfaction difference after the EHR or computer implementation. Hsu et al14 had a smaller sample of 313 surveys and showed patient visit satisfaction improved significantly after exam room computer implementation. The 9 studies referenced in the Alkureishi et al review focused on the changes in care provider interaction; other components of patient experience such as patient access were not reported. There appear to be few published studies that examine multiple domains of patient experience along a time series before and after a switch from one EHR to another.

Organizations contemplating a change to a new EHR should consider the potentially negative impact of significant and persistent changes across multiple domains of patient experience, including patient access and other patient experience not specifically associated with an individual care provider. If switching to a new EHR significantly reduces identifiable components of patient satisfaction, leaders need information on the anticipated extent and duration of these effects. A significant change in patient satisfaction from an EHR switch could be an unintended major confounding factor that could interfere with analysis and interpretation of ongoing interventions aimed at improving patient satisfaction.

The Mayo Clinic switch to Epic EHR software (Epic Systems, Verona, WI) in 2017 and 2018 created a natural experiment to test whether a major EHR software change was associated with a change in patient satisfaction. Mayo patient satisfaction data were available extending months before and months after the EHR conversion. The new EHR “go-live” also occurred at 4 different dates across different sites of Mayo Clinic.

We measured patient satisfaction changes associated with the EHR change and examined the difference in impact across several domains of patient experience, including satisfaction with access, care provider, and moving through the visit.

MATERIALS AND METHODS

Setting

Our observations were based on 6 Mayo Clinic practices in the United States. The Mayo Clinic Health System (MCHS) in Wisconsin and Minnesota accounted for 4 practices; Mayo Clinic Arizona (MC Arizona) and Mayo Clinic Florida (MC Florida) were the fifth and sixth, respectively. The MCHS practices are mostly in areas with population centers under 100 000 and have more than 70 clinics. MC Arizona and MC Florida are multispecialty practices located in major metropolitan areas (Phoenix, Arizona; and Jacksonville, Florida). All 6 sites switched from Cerner software (Cerner, North Kansas City, MO) to Epic on the go-live dates shown in Table 1. MCHS Southeast Minnesota was the only site that had 2 switch dates. The Rochester, Minnesota, practice within MCHS Southeast Minnesota switched from GE Centricity software to Epic.

Table 1.

Site-specific information for 6 EHR switch sites

| Site-specific information | Northwest Wisconsin | Southwest Wisconsin | Southwest Minnesota | Southeast Minnesota | Arizona | Florida |

|---|---|---|---|---|---|---|

| Date of new EHR go-live | July 8, 2017 | July 8, 2017 | November 7, 2017 | November 7, 2017, and May 6, 2018 | October 6, 2018 | October 6, 2018 |

| Pre–go-live EHR | Cerner | Cerner | Cerner | Cerner/GE Centricity | Cerner | Cerner |

| Data collection initiation to completion | July 1, 2016, to March 1, 2019 | July 1, 2016, to March 1, 2019 | July 1, 2016, to March 1, 2019 | July 1, 2016, to March 1, 2019 | July 1, 2016, to January 1, 2020 | July 1, 2016, to January 1, 2020 |

| Time series half months (before go-live/after go-live) | 24/40 | 24/40 | 32/32 | 32/32 | 54/30 | 54/30 |

| Provider count (providers whose office visits were subject of surveys) | 463 | 361 | 345 | 980 | 746 | 727 |

| Total patient satisfaction questions answered | 1 368 714 | 1 103 951 | 825 189 | 2 543 058 | 3 302 654 | 3 173 786 |

| Average responses per question per half-month (% by site) | 891 (13) | 719 (10) | 537 (8) | 1656 (24) | 1638 (23) | 1574 (22) |

| Percent question responses related to primary care visits | 49 | 56 | 62 | 74 | 27 | 26 |

EHR: electronic health record.

Patient satisfaction data

We used patient satisfaction data collected by Press Ganey (South Bend, IN).15 Press Ganey is a third-party vendor used by many large healthcare organizations to create patient surveys and collect and analyze patient satisfaction data. Several studies have examined the validity and stability of these data.16–20 The Press Ganey survey has been shown to be sensitive to a variety of factors in health care such as time of day, environment, healthcare delivery, and communication.21–24

Patient satisfaction surveys were based on face-to-face visits in outpatient settings. We queried the Press Ganey InfoEdge patient satisfaction database for patient visits from the first day of the month through the 15th day, and from the 16th day of the month through the last day of the month. Data collection for the 4 MCHS practices extended for 64 half-months. For Arizona and Florida, data collection extended for 84 half-months. Data collection dates and half-months of data collection before and after the switch are found in Table 1. It should be noted that the go-live dates did not fall exactly at the beginning dates of the half-month intervals. All 6 practice sites had at least 30 half-months of patient satisfaction data after the go-live EHR switches (Table 1). Provider counts in Table 1 are all providers that completed face-to-face patient visits during the entire data collection periods and who had patient survey responses concerning those visits. The percent question responses related to primary care are the total questions answered about primary care visits (family medicine, general internal medicine, and pediatric providers) divided by the total response count.
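The half-month binning described above can be sketched in a few lines. This is a minimal illustration, not the InfoEdge query itself: the function name `half_month_index`, the constant `STUDY_START`, and the 0-based numbering are assumptions made for the example. Under that numbering, the index of the half-month containing a go-live date equals the count of half-months before go-live reported in Table 1.

```python
from datetime import date

# Assumption: intervals are numbered from 0, starting with July 1-15, 2016.
# The numbering scheme is illustrative and is not taken from the study.
STUDY_START = date(2016, 7, 1)


def half_month_index(visit: date, start: date = STUDY_START) -> int:
    """Return the 0-based half-month interval that contains `visit`.

    Days 1-15 fall in the first half of a month; day 16 through the last
    day fall in the second half. `start` is assumed to be the first day
    of a month.
    """
    months_elapsed = (visit.year - start.year) * 12 + (visit.month - start.month)
    second_half = 1 if visit.day >= 16 else 0
    return months_elapsed * 2 + second_half


# Go-live dates from Table 1: the index equals the number of intervals before go-live.
print(half_month_index(date(2017, 7, 8)))    # Northwest and Southwest Wisconsin
print(half_month_index(date(2017, 11, 7)))   # Southwest and Southeast Minnesota
print(half_month_index(date(2018, 10, 6)))   # Arizona and Florida

# Total number of intervals = index of the last interval + 1.
print(half_month_index(date(2019, 2, 28)) + 1)   # MCHS practices
print(half_month_index(date(2019, 12, 31)) + 1)  # Arizona and Florida
```

Because go-live dates fall inside an interval rather than on its first day, the go-live half-month mixes a few pre-switch visit days with post-switch days.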

The Press Ganey satisfaction data collected for Mayo Clinic during the course of the study included patient surveys that asked questions grouped into separate categorized domains of patient experience. These domains were (1) access (4 questions), (2) satisfaction with care provider (10 questions), (3) moving through your visit (2 questions), (4) satisfaction with nursing (2 questions), (5) overall practice assessment (2 questions), and (6) personal issues (4 questions). Supplementary Appendix A lists the question content summary and the associated patient experience domains assessed. Patients answered individual questions with a categorical 5-point Likert scale ranked from lowest to highest as follows: very poor, poor, fair, good, and very good.

Press Ganey data had the frequency of each of the 5 Likert-type responses to each question. We used the percent of “very good” responses (the most favorable response) as our measure of patient satisfaction.25 Press Ganey refers to this most favorable response percent as the “top box score” and we also use the abbreviated terminology here as “top box%.” It should be noted that the percent of “very good” responses was used by Press Ganey in their reporting both for individual questions and for categories (domains) of patient experience. For example, if there were 600 “very good” responses of 1000 to the specific survey question about information on clinic delays, the “very good” (most favorable) percent would be 60. Thus, 60 would be the top box% for the specific question about information on clinic delays. To obtain the top box% for patient experience domains, the “very good” responses for each question in the domain are totaled and divided by the total responses from those questions. For example, in the “moving through your visit” domain, there are 2 questions, one on information about clinic delays and one about wait time (Supplementary Appendix A). If for the question on information about delays there were 600 “very good” responses of 990, and for the wait time question there were 800 “very good” responses of 1010, then the top box% for the “moving through your visit” domain would be (600 + 800)/(990 + 1010)*100 or 70.

Likewise, the Press Ganey “overall top box%” combines the total responses from all patients and all 24 questions and is the percent that has a “very good” response. As shown in the previous example, satisfaction domain score calculations were from a simple total of all “very good” responses from the questions in that domain. There was no special weighting by individual question within the satisfaction domains. In our analysis, we examined the 24 individual question top box% as well as the top box% in the 6 satisfaction domain categories.
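The pooled calculation described above can be written as a short sketch. The function name `top_box_pct` and the input layout (one `(very_good, total_responses)` pair per question) are assumptions made for illustration; the counts are the worked example from the text. The sketch also shows that pooling is not the same as averaging per-question percentages, which is what "no special weighting" implies when questions have different response counts.

```python
def top_box_pct(counts):
    """Pooled top box% for a single question or for a whole domain.

    `counts` is a list of (very_good, total_responses) pairs, one pair
    per question. All "very good" responses are summed and divided by
    all responses, so each response (not each question) counts equally.
    """
    very_good = sum(vg for vg, _ in counts)
    total = sum(n for _, n in counts)
    return 100.0 * very_good / total


# Worked example from the text: the two "moving through your visit" questions.
moving_through_visit = [(600, 990), (800, 1010)]

pooled = top_box_pct(moving_through_visit)

# For contrast only: the unweighted mean of the two per-question percentages.
unweighted_mean = sum(top_box_pct([q]) for q in moving_through_visit) / len(
    moving_through_visit
)

print(round(pooled, 1), round(unweighted_mean, 1))
```

With these counts the pooled value is 70, while the unweighted mean of the two question scores is slightly lower because the question with more responses also has the higher score.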

Statistical analysis

We used Stata 15.1 statistics software (StataCorp, College Station, TX). The threshold regression function from the Stata time series tools was used to analyze the time series of patient satisfaction data for the 64 half-monthly intervals in the MCHS practices and for the 84 half-monthly intervals in the MC Arizona and MC Florida practices. By sequentially examining different piecewise regression models, the threshold regression function of Stata looks for the best fit for a model of 2 or more piecewise linear regressions. In this study, Stata fit the piecewise models blinded (without any information about where in the time series go-live occurred). We used the Stata-derived best-fit 2-region piecewise linear regression model to find the predicted top box% and the upper and lower 99% confidence intervals for top box%.
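The idea of a blinded 2-region piecewise fit can be illustrated with a brute-force search. This is a simplified stand-in and not Stata's threshold regression routine: it tries every admissible breakpoint, fits an ordinary least-squares line on each side, and keeps the breakpoint with the smallest total squared error. It does not compute confidence intervals. The function names, the `min_segment` parameter, and the synthetic series are all assumptions made for the example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares line; returns (intercept, slope, sse)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    sse = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    return intercept, slope, sse


def best_two_region_fit(ys, min_segment=4):
    """Search all breakpoints k; region 1 is ys[:k], region 2 is ys[k:].

    The search sees only the series, not the go-live date ("blinded").
    Returns (k, (intercept1, slope1), (intercept2, slope2)).
    """
    xs = list(range(len(ys)))
    best = None
    for k in range(min_segment, len(ys) - min_segment + 1):
        left = fit_line(xs[:k], ys[:k])
        right = fit_line(xs[k:], ys[k:])
        total_sse = left[2] + right[2]
        if best is None or total_sse < best[0]:
            best = (total_sse, k, left[:2], right[:2])
    return best[1], best[2], best[3]


# Synthetic, noise-free series (NOT study data): 24 flat half-months at 80,
# then a drop to 75 followed by a recovery of 0.2 points per half-month.
series = [80.0] * 24 + [75.0 + 0.2 * i for i in range(40)]

k, (pre_b0, pre_b1), (post_b0, post_b1) = best_two_region_fit(series)
drop_at_break = (post_b0 + post_b1 * k) - (pre_b0 + pre_b1 * k)
print(k, round(post_b1, 3), round(drop_at_break, 2))
```

On real data with noise, neighboring breakpoints can have similar errors, which is one reason a fitted break may sit a half-month or two away from the actual go-live date.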

From the best-fit piecewise regressions, we used the estimated linear regression constant and slope to predict the number of months it took the satisfaction score to return to baseline. For a best-case estimate of the months to return to baseline satisfaction, we used the upper 99% confidence limits of both the post–go-live slope and the post–go-live constant.
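The return-to-baseline arithmetic can be sketched as follows. The sketch makes three assumptions that the text does not spell out: time is counted in half-months from go-live, the post–go-live constant is the predicted top box% at go-live, and the baseline is the pre–go-live predicted level. The function name `months_to_baseline` and all numbers are hypothetical and are not values from the study.

```python
def months_to_baseline(baseline, post_constant, post_slope):
    """Months until the post-go-live regression line reaches `baseline`.

    baseline:      pre-go-live predicted top box% (assumed definition)
    post_constant: predicted top box% at go-live, t = 0 half-months
    post_slope:    change in top box% per half-month after go-live
    Returns None if the line never climbs back (slope not positive).
    """
    gap = baseline - post_constant
    if gap <= 0:
        return 0.0  # already at or above baseline
    if post_slope <= 0:
        return None
    half_months = gap / post_slope
    return half_months / 2.0  # 2 half-month intervals per month


# Hypothetical central estimates.
central = months_to_baseline(baseline=80.0, post_constant=75.0, post_slope=0.2)

# Hypothetical best case: upper 99% limits for both constant and slope,
# which start the recovery higher and make it steeper.
best_case = months_to_baseline(baseline=80.0, post_constant=76.0, post_slope=0.25)

print(round(central, 1), round(best_case, 1))
```

Using the upper limits for both parameters at once is deliberately optimistic, so the best-case value is a lower bound on recovery time rather than a typical estimate.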

RESULTS

Table 1 summarizes the differences among the 6 sites in data collection information. The average number of responses per question per half-month was above 500 at each site, and 3 sites averaged over 1500 responses per question per half-month. The percentage of question responses relating to primary care visits was consistent with the multispecialty practices in Arizona and Florida and the stronger primary care focus in MCHS. Question responses were distributed across the 6 patient experience categories as follows: access 16.9%, care provider 41.7%, moving through your visit 7.7%, nurse/assistant 8.4%, practice assessment 8.5%, and personal issues 16.8%. This was in line with the frequencies of questions in the survey, indicating no large-scale omissions of specific responses among the 24 questions.
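The comparison between observed response shares and the share of questions in each domain can be checked directly. In this sketch the question counts come from the domain list in the Methods and the observed percentages from the paragraph above; the variable names are illustrative, and the "expected" share assumes every question is answered equally often.

```python
# Questions per domain (from the survey description in the Methods).
questions_per_domain = {
    "access": 4,
    "care provider": 10,
    "moving through your visit": 2,
    "nurse/assistant": 2,
    "practice assessment": 2,
    "personal issues": 4,
}

# Observed share of all question responses, in percent (from the Results).
observed_pct = {
    "access": 16.9,
    "care provider": 41.7,
    "moving through your visit": 7.7,
    "nurse/assistant": 8.4,
    "practice assessment": 8.5,
    "personal issues": 16.8,
}

total_questions = sum(questions_per_domain.values())

# Expected share if every question were answered equally often (assumption).
expected_pct = {
    domain: 100.0 * n / total_questions
    for domain, n in questions_per_domain.items()
}

deviation = {d: observed_pct[d] - expected_pct[d] for d in observed_pct}
largest = max(deviation, key=lambda d: abs(deviation[d]))

for domain in questions_per_domain:
    print(f"{domain}: expected {expected_pct[domain]:.1f}, observed {observed_pct[domain]:.1f}")
print(largest, round(deviation[largest], 1))
```

Every domain falls within 1 percentage point of its expected share. The largest gap is in "moving through your visit," which is answered slightly less often than its 2 of 24 questions would suggest.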

Time series changes in patient satisfaction

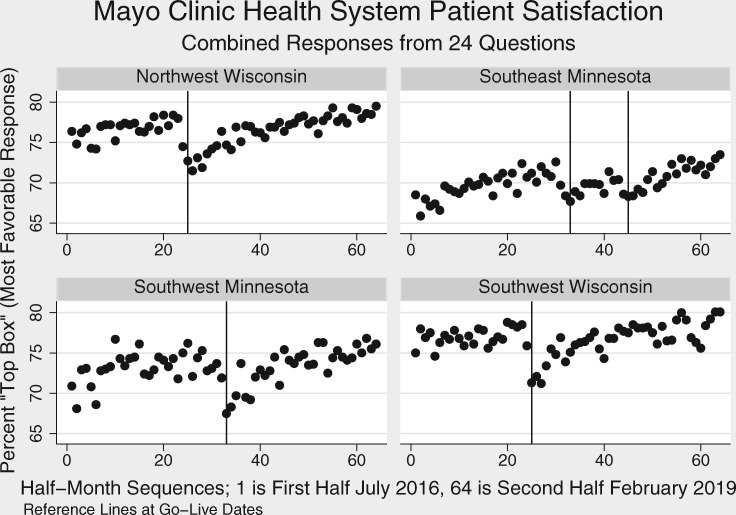

Figure 1 shows the time series of overall patient satisfaction (top box%, combined 24 questions) for all 4 MCHS locations with half-month time intervals and the go-live dates marked with reference lines. There are drops in satisfaction associated with 3 go-live dates across the 4 locations.

Figure 1.

Mayo Clinic Health System time series of overall “top box%” patient satisfaction, combining responses from all 24 questions and using half-month intervals (4 separate go-live locations), with reference lines at go-live.

Table 2 shows the differences in satisfaction based on the 2-region piecewise regression models for each of the 24 questions, 6 satisfaction domains, and overall (24 questions combined).

Table 2.

Change in top box% patient satisfaction (top box% after switch – top box% before switch)

Change in top box% patient satisfaction by domains and individual questions (99% CI)a

| Satisfaction domain | Northwest Wisconsin | Southwest Wisconsin | Southwest Minnesota | Southeast Minnesota | Arizona | Florida |

|---|---|---|---|---|---|---|

| Overall (24 individual question responses combined) | −4.5 (−5.8 to -3.2) | −3.7 (−5.5 to -2.0) | −4.4 (−6.5 to -2.2) | −3.6 (−4.9 to -2.3) | −2.9 (−4.0 to -1.7) | −1.2 (−2.1 to -0.3) |

| Access (4 individual question responses below combined) | −8.8 (−11.2 to -6.4) | −8.3 (−11.0 to -5.6) | −7.7 (−10.7 to -4.7) | −6.9 (−8.5 to -5.3) | −8.5 (−10.2 to -6.8) | −3.4 (−4.8 to -1.9) |

| Convenience of our office hours | −8.7 (−11.4 to -6.0) | −7.7 (−10.4 to -4.9) | −6.6 (−10.2 to -3.1) | −5.5 (−7.3 to -3.7) | −5.2 (−6.8 to -3.7) | −2.1 (−3.7 to -0.5) |

| Courtesy of registration staff | −5.0 (−6.8 to -3.1) | −4.1 (−6.4 to -1.9) | −5.4 (−8.2 to -2.6) | −4.5 (−6.0 to -3.0) | −3.4 (−4.6 to -2.2) | −0.6 (−1.8 to 0.6) |

| Ease of getting clinic on phone | −11.9 (−14.8 to -9.0) | −12.4 (−16.1 to -8.6) | −10.6 (−14.4 to -6.7) | −9.9 (−12.1 to -7.7) | −14.9 (−17.6 to -12.2) | −5.4 (−7.2 to -3.6) |

| Ease of scheduling appointments | −9.8 (−12.9 to -6.7) | −8.9 (−12.1 to -5.7) | −8.2 (−11.6 to -4.7) | −7.6 (−9.7 to -5.6) | −10.9 (−13.3 to -8.6) | −5.4 (−7.2 to -3.5) |

| CP (10 individual question responses below combined) | −2.3 (−3.6 to -0.9) | −2.5 (−4.3 to -0.6) | −2.8 (−4.9 to -0.7) | −2.0 (−3.4 to -0.5) | −0.6 (−1.8 to 0.6) | −0.5 (−1.4 to 0.4) |

| CP concern for questions/worries | −2.6 (−4.3 to -1.0) | −3.0 (−5.0 to -1.0) | −3.7 (−6.2 to -1.3) | −2.1 (−3.6 to -0.6) | −0.4 (−1.8 to 0.9) | −0.3 (−1.4 to 0.8) |

| CP efforts to include in decisions | −1.8 (−3.3 to -0.2) | −2.3 (−4.4 to -0.3) | −3.0 (−5.4 to -0.5) | −2.1 (−3.8 to -0.5) | −0.9 (−2.2 to 0.4) | −0.5 (−1.7 to 0.7) |

| CP explanations of prob/condition | −2.3 (−3.9 to -0.8) | −2.9 (−5.0 to -0.7) | −3.4 (−5.9 to -1.0) | −1.8 (−3.5 to 0.0) | −0.7 (−1.9 to 0.5) | −0.3 (−1.4 to 0.8) |

| CP information about medications | −2.3 (−4.0 to -0.6) | −2.7 (−5.0 to -0.3) | −3.4 (−6.1 to -0.7) | −2.4 (−4.2 to -0.6) | −0.7 (−2.2 to 0.8) | −0.7 (−1.9 to 0.5) |

| CP instructions for follow-up care | −3.0 (−4.8 to -1.3) | −2.8 (−5.1 to -0.5) | −3.8 (−6.3 to -1.3) | −2.3 (−4.1 to -0.6) | −1.5 (−2.9 to -0.2) | −1.0 (−2.2 to 0.2) |

| CP spoke using clear language | −2.1 (−3.5 to -0.6) | −2.1 (−4.2 to 0.0) | −2.3 (−4.6 to 0.0) | −1.9 (−3.5 to -0.4) | −0.3 (−1.5 to 0.9) | −1.0 (−2.2 to 0.2) |

| Friendliness/courtesy of CP | −2.1 (−3.4 to -0.8) | −2.7 (−4.8 to -0.6) | −1.9 (−4.2 to 0.4) | −1.5 (−2.8 to -0.3) | 0.1 (−1.0 to 1.3) | −0.1 (−1.1 to 0.8) |

| Likelihood of recommending CP | −2.1 (−3.8 to -0.5) | −2.3 (−4.2 to -0.3) | −2.6 (−5.1 to -0.1) | −1.8 (−3.3 to -0.3) | −0.6 (−1.8 to 0.6) | −0.2 (−1.2 to 0.8) |

| Patients' confidence in CP | −1.6 (−3.1 to -0.1) | −1.8 (−3.9 to 0.3) | −2.0 (−4.3 to 0.3) | −1.6 (−3.1 to -0.1) | −0.3 (−1.5 to 0.9) | −0.5 (−1.5 to 0.6) |

| Time CP spent with patient | −3.1 (−4.8 to -1.4) | −2.4 (−4.9 to 0.1) | −3.3 (−5.7 to -0.9) | −2.8 (−4.4 to -1.3) | −0.9 (−2.3 to 0.5) | −0.8 (−1.9 to 0.2) |

| Moving through your visit (2 individual question responses below combined) | −8.4 (−10.8 to -6.0) | −8.0 (−11.0 to -4.9) | −9.1 (−12.2 to -5.9) | −6.9 (−8.9 to -4.8) | −6.6 (−8.6 to -4.6) | −3.4 (−5.0 to -1.8) |

| Information about delays | −9.1 (−11.8 to -6.4) | −7.0 (−10.2 to -3.8) | −9.7 (−13.4 to -5.9) | −6.8 (−9.0 to -4.7) | −6.9 (−9.2 to -4.7) | −3.8 (−5.6 to -2.0) |

| Wait time at clinic | −7.9 (−10.3 to -5.4) | −8.5 (−11.7 to -5.4) | −8.4 (−11.7 to -5.2) | −6.8 (−9.0 to -4.7) | −6.3 (−8.4 to -4.3) | −3.0 (−4.6 to -1.4) |

| Nurse/assistant (2 individual question responses below combined) | −4.1 (−5.5 to -2.7) | −2.8 (−4.7 to -1.0) | −3.2 (−5.7 to -0.8) | −3.4 (−5.1 to -1.6) | −1.9 (−3.2 to -0.6) | −0.2 (−1.3 to 0.9) |

| Concern of nurse/assistant for problem | −4.7 (−6.3 to -3.0) | −2.8 (−4.9 to -0.7) | −3.6 (−6.4 to -0.9) | −3.4 (−5.2 to -1.5) | −2.2 (−3.8 to -0.6) | −0.4 (−1.7 to 0.9) |

| Friendliness/courtesy of nurse/assistant | −3.5 (−5.0 to -2.1) | −2.9 (−4.9 to -0.8) | −2.9 (−5.5 to -0.4) | −3.3 (−5.2 to -1.5) | −1.6 (−2.8 to -0.4) | 0.0 (−1.0 to 1.0) |

| Practice assessment (2 individual question responses below combined) | −5.0 (−6.5 to -3.4) | −3.7 (−5.6 to -1.7) | −4.1 (−6.8 to -1.5) | −4.4 (−6.1 to -2.7) | −2.2 (−3.5 to -0.9) | −0.9 (−2.0 to 0.2) |

| Likelihood of recommending practice | −4.7 (−6.4 to -3.1) | −3.9 (−6.0 to -1.8) | −4.0 (−6.7 to -1.3) | −4.5 (−6.3 to -2.6) | −2.0 (−3.2 to -0.8) | −0.6 (−1.8 to 0.5) |

| Staff worked together | −5.3 (−6.9 to -3.6) | −3.4 (−5.5 to -1.3) | −4.2 (−7.1 to -1.3) | −4.3 (−6.1 to -2.5) | −2.4 (−4.0 to -0.8) | −1.1 (−2.3 to 0.1) |

| Personal issues (4 responses below combined) | −3.5 (−4.8 to -2.2) | −2.3 (−4.0 to -0.6) | −4.2 (−6.5 to -1.9) | −2.5 (−4.0 to -1.0) | −1.9 (−3.0 to -0.7) | −0.6 (−1.6 to 0.3) |

| Cleanliness of our practice | −3.1 (−4.7 to -1.4) | −1.9 (−3.9 to 0.1) | −3.8 (−6.2 to -1.5) | −2.6 (−4.1 to -1.1) | −1.2 (−2.1 to -0.2) | −0.6 (−1.6 to 0.3) |

| How well staff protect safety | −3.9 (−5.5 to -2.3) | −1.9 (−3.6 to -0.2) | −3.6 (−6.2 to -1.0) | −2.9 (−4.7 to -1.2) | −2.0 (−3.2 to -0.8) | −0.8 (−2.0 to 0.4) |

| Our concern for patients' privacy | −2.9 (−4.3 to -1.4) | −2.6 (−4.5 to -0.7) | −4.6 (−7.1 to -2.2) | −2.5 (−4.1 to -0.9) | −1.9 (−3.3 to -0.6) | −0.8 (−1.8 to 0.2) |

| Our sensitivity to patients' needs | −4.1 (−5.6 to -2.6) | −2.8 (−4.8 to -0.8) | −4.6 (−7.2 to -2.0) | −2.9 (−4.5 to -1.3) | −1.9 (−3.3 to -0.5) | −0.9 (−2.1 to 0.4) |

Calculated from linear regression estimates from the best fit piecewise regressions before and after go-live.

CI: confidence interval; CP: care provider.

Difference measured between linear regression mid-confidence estimate before and after go-live.
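One way to read Table 2 is to treat a change as significant at the 99% level when its 99% confidence interval excludes 0. The sketch below applies that rule to the access and care provider (CP) domain rows, with the interval bounds copied from Table 2. The helper name `excludes_zero` is an assumption for illustration, and a bound printed as 0.0 is treated as not excluding 0 because rounding makes that case ambiguous.

```python
def excludes_zero(lower, upper):
    """True when a confidence interval lies entirely below or above 0."""
    return upper < 0 or lower > 0


# 99% CI bounds (lower, upper) for the domain-level rows of Table 2.
access_ci = {
    "Northwest Wisconsin": (-11.2, -6.4),
    "Southwest Wisconsin": (-11.0, -5.6),
    "Southwest Minnesota": (-10.7, -4.7),
    "Southeast Minnesota": (-8.5, -5.3),
    "Arizona": (-10.2, -6.8),
    "Florida": (-4.8, -1.9),
}
care_provider_ci = {
    "Northwest Wisconsin": (-3.6, -0.9),
    "Southwest Wisconsin": (-4.3, -0.6),
    "Southwest Minnesota": (-4.9, -0.7),
    "Southeast Minnesota": (-3.4, -0.5),
    "Arizona": (-1.8, 0.6),
    "Florida": (-1.4, 0.4),
}


def significant_sites(ci_by_site):
    """Sites whose interval excludes 0, in table order."""
    return [site for site, (lo, hi) in ci_by_site.items() if excludes_zero(lo, hi)]


print(len(significant_sites(access_ci)), len(significant_sites(care_provider_ci)))
print(significant_sites(care_provider_ci))
```

Under this reading, the access decline is significant at all 6 sites and the care provider decline at the 4 MCHS sites, with the Arizona and Florida care provider intervals spanning 0.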

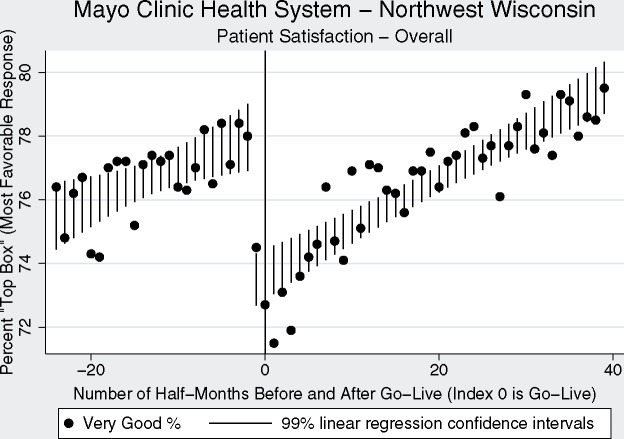

Figure 2 shows the time series of overall patient satisfaction (based on all 24 individual questions) for the Northwest Wisconsin location of clinics at half-month intervals before and after the EHR switch. This is shown as the Stata statistically generated best 2-region piecewise linear regression model with 99% confidence intervals. The index time of 0 (shown by reference line) marks the satisfaction data during the half-month of go-live. Of note is the drop in satisfaction that occurred about one half-month before go-live.

Figure 2.

Mayo Clinic Health System Northwest Wisconsin time series “top box%” overall patient satisfaction (combined 24 questions) using half-month intervals, with reference line at go-live.

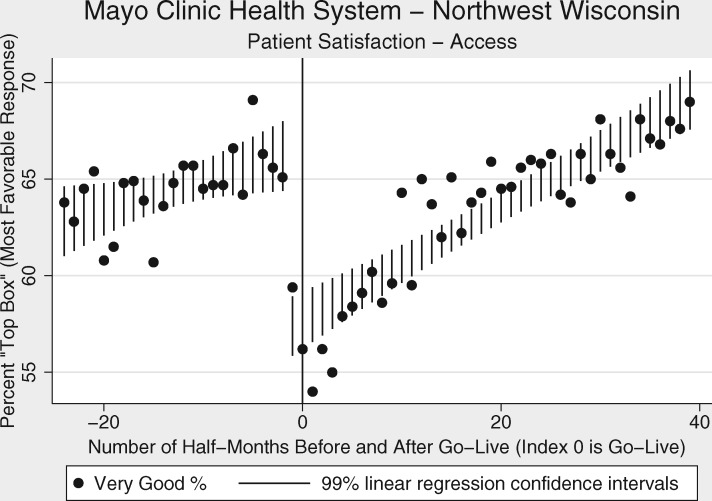

Figure 3 shows the time series of the access patient satisfaction domain, which had one of the largest declines in satisfaction (Table 2). Across the 6 sites, satisfaction with access took between 9 and 15 months to return to previous levels (Table 3).

Figure 3.

Mayo Clinic Health System Northwest Wisconsin time series “top box%” patient satisfaction with access (combined 4 questions) using half-month intervals, with reference line at go-live.

Table 3.

Months to return to pre–go-live satisfaction levels

| Satisfaction domain | Northwest Wisconsin | Southwest Wisconsin | Southwest Minnesota | Southeast Minnesota | Arizona | Florida |

|---|---|---|---|---|---|---|

| Overall (all questions) | 14.8 (9.8) | 13.1 (6.4) | 11.1 (5.4) | 14.5 (7.7) | 8.6 (4.7) | 4.8 (1.6) |

| Access | 15.0 (10.1) | 14.6 (9.0) | 9.7 (5.1) | 15.1 (9.8) | 11.3 (7.7) | 9.3 (4.6) |

| Care provider | 12.8 (6.1) | 15.3 (4.1) | 11.4 (3.4) | 15.2 (4.2) | 3.0 (<1) | 2.3 (<1) |

| Moving through your visit | 15.4 (10.4) | 15.4 (8.6) | 11.3 (6.4) | 12.2 (7.4) | 12.7 (6.3) | 8.0 (3.5) |

| Nurse/assistant | 16.9 (9.7) | 10.4 (4.1) | 12.5 (3.1) | 12.9 (5.3) | 8.8 (2.7) | 3.2 (<1) |

| Practice assessment | 16.0 (10.1) | 16.1 (6.3) | 13.1 (5.2) | 13.9 (7.7) | 7.7 (3.3) | 3.9 (<1) |

| Personal issues | 16.2 (9.2) | 11.3 (4.0) | 12.3 (5.1) | 16.2 (5.7) | 8.2 (2.8) | 3.0 (<1) |

Values in parentheses are 99% confidence interval best-case months, calculated using linear regression 99% confidence interval post–go-live steepest slope and post–go-live largest 99% confidence interval predicted constant.

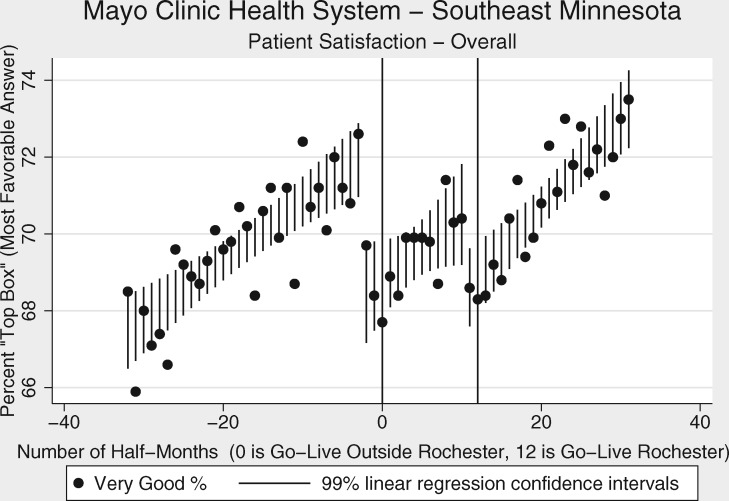

Figure 4 shows the 2 drops in patient satisfaction associated with the 2 different go-live dates in the Southeast Minnesota region (switches to Epic from different EHRs, Cerner and GE Centricity). The pattern of satisfaction dropping 1-2 half-months before go-live is also seen here.

Figure 4.

Mayo Clinic Health System Southeast Minnesota time series “top box%” overall patient satisfaction (combined 24 questions) using half-month intervals, with reference lines at go-live.

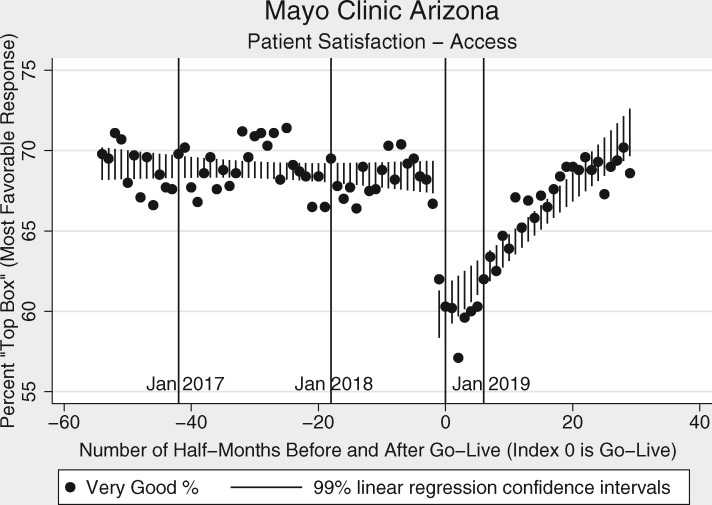

Figure 5 shows the time series of the access patient satisfaction domain for the MC Arizona location. The index line at the first half-month of October 2018 (go-live October 6) marks the final of the 4 go-live dates for the Mayo Clinic switch to Epic. Additional reference lines are labeled for calendar year starts to help examine seasonal trends. There is a suggestion of a seasonal cyclical component that may coincide with winter visitors to the Southwest United States.

Figure 5.

Mayo Clinic Arizona time series “top box%” patient satisfaction with access (combined 4 questions) using half-month intervals, with reference lines at go-live and start of each calendar year.

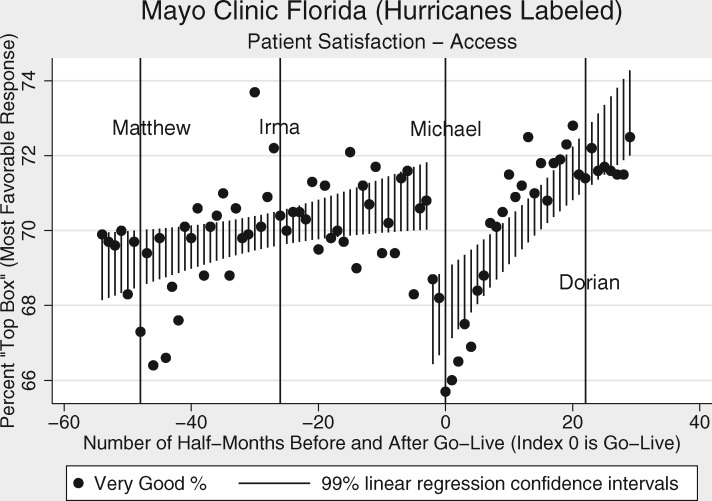

Figure 6 shows the time series of the access patient satisfaction domain for the MC Florida location. The index line (go-live October 6, 2018) is the final of the 4 go-live dates. Florida hurricane events potentially relevant to access are labeled with additional reference lines. Hurricane Michael (October 10, 2018) coincided with the go-live interval but landfall occurred in the Florida “panhandle,” over 200 miles away from MC Florida. MC Florida did not limit outpatient services in response to Michael. However, hurricanes Matthew (2016), Irma (2017), and Dorian (2019) all caused disruptions of MC Florida outpatient services, including temporary (1- or 2-day) closures of outpatient clinics.

Figure 6.

Mayo Clinic Florida time series “top box%” patient satisfaction with access (combined 4 questions) using half-month intervals, with reference lines at go-live and Florida hurricanes. Go-live (October 6, 2018) was in the same data collection interval as Hurricane Michael landfall (October 10, 2018).

As seen in Table 3, it took from about 2 to 17 months (range, 2.3 to 16.9) to return to the satisfaction levels present before go-live. Even using a liberal best-case 99% confidence interval slope and constant estimation, it took up to 10 months to return to previous satisfaction levels.

Supplementary Figure 7 shows the MC Arizona and MC Florida time series of the combined 24-question overall satisfaction as was shown for the 4 MCHS sites in Figure 1. Supplementary Figures 8 and 9 show a different perspective of the patient satisfaction data over time. Instead of showing favorable responses, Supplementary Figures 8 and 9 show the time series of combined “very poor” and “poor” responses for the 4 sites in MCHS (Supplementary Figure 8) and MC Arizona and MC Florida sites (Supplementary Figure 9). The overall low level of unfavorable responses is punctuated by spikes of higher unfavorable responses associated with go-live dates. Supplementary Figures 10 and 11 show additional examples of patient experience domains before and after the EHR switch. Supplementary Figure 10 shows the “moving through your visit” domain, a domain associated with a larger change in patient satisfaction after the EHR switch. As a contrast, Supplementary Figure 11 shows the “care provider” patient experience domain, which was associated with a smaller change in patient satisfaction.

DISCUSSION

We observed that an EHR change was associated with significant changes in patient satisfaction. Notably, drops in satisfaction occurred at the same time as the EHR switch or within about 1 month before. How were patients sensing an EHR change before it happened? As there were no other major interventions in the MCHS, MC Arizona, or MC Florida within a month before each go-live date, we hypothesize that preparatory work was contributing to patient dissatisfaction. Several weeks before go-live, patients were being scheduled on 2 systems. In addition to scheduling on 2 systems, schedulers were employing new procedures that were time-consuming. With the challenge of placing duplicate orders in an unfamiliar system, patients were put on hold if they were on the telephone and asked to wait if they were being scheduled in person. Additionally, all members of the care team (providers, nursing, and administrative support staff) were undergoing training in the new EHR, which may have reduced the number of team members available to accomplish the work. So, although go-live had not happened, the patient experience, at least with future scheduling, was impacted for several weeks before the actual switch to Epic. We saw the drop in satisfaction before go-live occur across multiple patient satisfaction domains.

Drops in satisfaction persisted for several months (Figures 2–6). Using the linear regression estimates, we were able to quantify the months to return to the satisfaction levels just before the EHR switch (Table 3). Most of the patient experience domains took several months before a return to previous levels. This information could be useful for those considering quality improvement projects involving patient satisfaction. Our study should give pause to those who want to implement patient satisfaction projects concurrently with an EHR switch. An EHR change may cause a confounding influence on patient satisfaction for many months.

The Mayo Clinic was extremely diligent about trying to make the switch to Epic less disruptive. Planning for the switch involved hundreds of employees working with Epic staff over several years before go-live. A healthcare financial journal and a local newspaper even reported that the EHR switch did not significantly impact Mayo’s earnings.26,27 However, other healthcare institutions should take note that even with meticulous planning there may be some patient dissatisfaction associated with an EHR switch.

Satisfaction data have also been used to adjust provider pay and other benefits.28 Our data suggest that for institutions switching EHRs, there may be a drop in satisfaction with the care provider. Although the decrease in satisfaction with the care provider appears small, it may be relevant to those providers who may drop below a threshold level that generates a bonus or that results in a salary decrease. Leadership should not only be aware of the significant drops in overall satisfaction but also keep in mind the potential drops in provider satisfaction and how they may affect individual physicians.29

Our study showed significant differences in patient satisfaction across several patient experience domains. None of the domains of patient experience that Press Ganey surveyed was associated with a significant immediate increase in favorable responses from patients. Almost all the domains showed a rapid drop of favorable responses. Patient perception of access was associated with the largest decrease of very favorable responses. This may be explained by the several weeks of duplicate scheduling before go-live, as explained previously. The “moving through your visit” domain also declined to a level statistically similar to the decline in access satisfaction (Table 2). The “moving through your visit” questions are more specifically about wait time and information about delays. This suggests that in addition to some potential scheduling issues, there may have been some challenges with other processes, such as getting patients roomed and seen by providers during the scheduled appointment times. The least affected domain was care provider satisfaction. Patients continued to give providers very favorable responses, at close to the same level after the EHR switch as they did before. An explanation may be that Mayo Clinic had extensive provider training months before go-live, both with required classes and with online learning modules. At go-live, outpatient providers temporarily had their patient appointment load cut in half, and there were Epic experts readily available in the clinics for providers to consult for problems.

Our results may shed some light on the utility of patient satisfaction data. Although we detected a significant shift in patient satisfaction associated with the EHR switch, there was still a lot of variability in patient satisfaction scores even with responses per question totaling over 1500 every half-month. This resulted in some wide confidence intervals around the regression lines. Our study shows that an intervention can escape the patient satisfaction “noise,” but how big an intervention is needed? An EHR change is a massive intervention. Can smaller interventions be expected to break through the variability that we saw? Also, while we did detect a drop in satisfaction with the provider in 4 of the 6 sites, it was small. Although Mayo did give care providers intensive training and support before and for a few weeks after go-live, there were many providers who continued to have challenges adjusting to the new software for several months. Despite this, providers as a whole seemed to be relatively immune to the drop in patient satisfaction seen in other domains. It is interesting that patient satisfaction scores for individual providers vary by 20%,30 but a major system change such as an EHR switch was associated with only a 2% change. Perhaps to no surprise, this suggests that individual traits of providers are important to patient perception and even major system changes do not alter those perceptions.

Limitations

We used 2-region piecewise linear regression as the model for this time series. It is possible that other models, including some nonlinear models, could fit the data better. The MC Florida data (Figure 6) in particular show satisfaction data before go-live that do not appear to be a good fit for a linear regression model. Also, our 84 half-month time series limits our ability to look at baseline variability. Our longest baseline pre–go-live was 54 half-months in the Arizona and Florida sites. A longer baseline would help put changes around the time of the EHR switch in the perspective of baseline variability of patient experience domains.
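For readers who want to experiment with this kind of model, the following is a minimal sketch of a 2-region piecewise linear fit with a known breakpoint. It is not the code used in this study. The function name `fit_two_region`, the allowance for a level jump at the breakpoint, and all of the data values are assumptions made for illustration; only the series length (84 half-months) and the breakpoint position (54 half-months) echo figures mentioned above.

```python
import numpy as np

def fit_two_region(t, y, t0):
    """Least-squares fit of y = b0 + b1*t + b2*post + b3*post*(t - t0),
    where post is 1 at or after the breakpoint t0 and 0 before it.
    b2 is the level change at t0; b3 is the change in slope after t0."""
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    post = (t >= t0).astype(float)
    # Design matrix: intercept, baseline slope, level shift, slope change
    X = np.column_stack([np.ones_like(t), t, post, post * (t - t0)])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

# Synthetic series (hypothetical values, not study data):
# a flat baseline, a drop at the breakpoint, then gradual recovery.
rng = np.random.default_rng(0)
t = np.arange(84)          # half-month index
t0 = 54                    # assumed breakpoint (go-live)
post = (t >= t0)
y = 70.0 - 5.0 * post + 0.3 * post * (t - t0) + rng.normal(0.0, 1.5, t.size)

b0, b1, b2, b3 = fit_two_region(t, y, t0)
print(f"level change at breakpoint: {b2:.2f} points")
print(f"post-breakpoint slope: {b1 + b3:.2f} points per half-month")
```

In this parameterization the coefficient on the breakpoint indicator gives the immediate change in the top box percentage, which is the quantity of interest when asking whether satisfaction dropped at go-live. A model that forces the two segments to meet at the breakpoint would omit that term.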

Known and unknown confounding events and seasonal cycles could have effects on the interpretation of satisfaction data. For example, Hurricane Michael struck the “panhandle” of Florida on October 10, 2018, just 4 days after go-live in MC Florida. Although Michael’s landfall was about 200 miles west of MC Florida and did not disrupt outpatient services like Matthew (2016), Irma (2017), and Dorian (2019), it is possible that Michael was a confounding event in the MC Florida satisfaction data. Figure 6 does show some lower top box% scores around the time of Hurricane Matthew in 2016, when there was temporary disruption of outpatient services. Other potential confounders are more subtle and harder to identify than a hurricane, so we should remain cautious about assigning an associated change in satisfaction exclusively to a change in EHR.

The Arizona data (Figure 5) also show a possible seasonal cyclical pattern associated with access satisfaction. This would be consistent with the influx of winter visitors to Arizona and could be a confounder in the Arizona data. Other seasonal events such as influenza could potentially act as confounders. According to the Centers for Disease Control and Prevention, the 2017-2018 influenza outbreak was associated with high levels of outpatient clinic and emergency department visits for influenza-like illness.31 The 2017-2018 outbreak started in November 2017 and peaked in January and February 2018, so it may have had a confounding effect on the MCHS Southwest and Southeast Minnesota regions with a November 4, 2017, go-live. However, go-live was July 8, 2017, in Northwest and Southwest Wisconsin and May 6, 2018, in Rochester, Minnesota (in the Southeast Minnesota region). The 2018-2019 influenza outbreak resulted in a lower percent of peak outpatient influenza-like illness visits (peak of 5.1% of visits in the week of February 16, 2019, compared with 7.5% of visits in the week ending February 3, 2018, in the 2017-2018 influenza outbreak).31,32 The 2018-2019 influenza outbreak could have been a confounder in the MC Florida and MC Arizona data, where the go-live date was October 6, 2018. However, in the year before go-live in MC Arizona, when the influenza outbreak was more severe, there was not much change (Figure 5).

As for confounders originating within Mayo Clinic, it should be emphasized that there was very little change for a few months before and after go-live that was not directly related to the EHR transition. By design, there were no other major undertakings that would distract from the new EHR implementation, especially within a few months before and after go-live.

Press Ganey survey questions are not specific enough to give much insight about potential reasons why there may be an association of patient satisfaction with an EHR change. Also, the Press Ganey survey has been found to have high ceiling rates (high percent of scores at the maximum) and low floor rates (low percent of scores at the minimum).18 However, it can be argued that low floor rates have some desirable attributes for this study. For example, the low baseline floor rate causes a change in combined “very poor” and “poor” associated with the EHR switch to be a very large relative percent, even though the absolute percent change is not that large. For this study, the low baseline floor rate may be acting to filter all but the biggest impact events. In the Supplementary Appendix, we have included time series (Supplementary Figures 8 and 9) of 4 MCHS locations, MC Arizona, and MC Florida showing a low floor rate punctuated by abrupt rises in access dissatisfaction coinciding with the EHR change.
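The distinction between absolute and relative change at a low floor rate can be made concrete with a short calculation. The sketch below uses hypothetical percentages chosen only for illustration; they are not values from this study, and the function name `changes` is likewise an assumption.

```python
def changes(before_pct, after_pct):
    """Return (absolute change in percentage points, relative change in percent)."""
    absolute = after_pct - before_pct
    relative = 100.0 * absolute / before_pct
    return absolute, relative

# Hypothetical low floor rate: combined "very poor" and "poor" rises from 2% to 5%.
floor_abs, floor_rel = changes(2.0, 5.0)

# Hypothetical top box rate: "very good" falls from 60% to 57%.
top_abs, top_rel = changes(60.0, 57.0)

print(f"floor rate: {floor_abs:+.1f} points, {floor_rel:+.0f}% relative")
print(f"top box:    {top_abs:+.1f} points, {top_rel:+.0f}% relative")
```

The same 3-point absolute shift is a small relative change against a high baseline and a very large one against a low baseline, which is why a low floor rate can make abrupt rises in dissatisfaction stand out.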

These findings may not be generalizable to all healthcare organizations. Mayo intensively prepared providers for the change, but it is difficult to know whether that preparation resulted in the lower impact on care provider patient satisfaction. It is also difficult to know if having less duplication of scheduling or easier ordering would result in less impact in future implementations. There may also be some differences in switching from one specific EHR platform to another. In the Mayo software switch, patients did not have the ability to self-schedule appointments with either the pre- or postswitch EHR. Switching to an EHR with self-scheduling features could result in a different patient experience, especially with access, and this would be an avenue for future research.

CONCLUSION

A switch in EHRs may be associated with a significant drop across several patient satisfaction domains. Satisfaction with care providers may show no decrease or a smaller decrease compared with a larger drop in satisfaction concerning access, wait time, and information about delays. After an EHR switch it may take several months to regain lost patient satisfaction. Quality experts focusing on patient satisfaction interventions should be aware of a potential confounder associated with an EHR switch. Healthcare leaders should consider changes in patient satisfaction associated with an EHR switch when looking at patient satisfaction on an institutional scale or at the individual provider level.

AUTHOR CONTRIBUTIONS

FN was involved in conception, study design, and data collection. FN, JLP, SMT, RC, JCM, and JOE were involved in data interpretation and data visualization. FN was involved in statistical analysis and wrote the manuscript draft. All authors were involved in critical revisions, editing, and manuscript approval.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

CONFLICT OF INTEREST STATEMENT

None declared.

References

- 1. Office of the National Coordinator for Health Information Technology. Health IT Dashboard. Non-federal acute care hospital electronic health record adoption. 2017. https://dashboard.healthit.gov/quickstats/pages/FIG-Hospital-EHR-Adoption.php Accessed April 6, 2020.

- 2. Office of the National Coordinator for Health Information Technology. Certified health IT developers and editions reported by hospitals participating in the Medicare EHR Incentive Program. 2017. https://dashboard.healthit.gov/quickstats/pages/FIG-Vendors-of-EHRs-to-Participating-Hospitals.php Accessed April 6, 2020.

- 3. Office of the National Coordinator for Health Information Technology. Certified health IT developers and editions reported by ambulatory primary care physicians, medical and surgical specialists, podiatrists, optometrists, dentists, and chiropractors participating in the Medicare EHR Incentive Program. 2017. https://dashboard.healthit.gov/quickstats/pages/FIG-Vendors-of-EHRs-to-Participating-Professionals.php Accessed April 6, 2020.

- 4. Green J. Who are the largest EHR vendors. EHR in Practice. October 18, 2019. https://www.ehrinpractice.com/largest-ehr-vendors.html Accessed April 6, 2020.

- 5. Boonstra A, Versluis A, Vos J. Implementing electronic health records in hospitals: a systematic literature review. BMC Health Serv Res 2014; 14 (1): 370.

- 6. MD Anderson points to Epic implementation for 77% drop in adjusted income. Becker’s Hospital CFO Report. August 26, 2016. https://www.beckershospitalreview.com/finance/md-anderson-points-to-epic-implementation-for-77-drop-in-adjusted-income.html Accessed April 6, 2020.

- 7. Monica K. Epic EHR contributed to major operating losses for Dana-Farber. EHR Intelligence. September 26, 2017. https://ehrintelligence.com/news/epic-ehr-contributed-to-major-operating-losses-for-dana-farber Accessed April 6, 2020.

- 8. Pratt M. The true cost of switching EHRs. Med Econ 2018; 96 (10). https://www.medicaleconomics.com/business/true-cost-switching-ehrs Accessed April 6, 2020.

- 9. Ramos Hegwer L. How Brookwood Baptist Health survived a vendor switch and maintained strong revenue. Revenue-cycle Strateg 2016; 13 (7): 2–4.

- 10. Schuler M, Berkebile J, Vallozzi A. Optimizing revenue cycle performance before, during, and after an EHR implementation. Healthc Financ Manage 2016; 70 (6): 76–80.

- 11. Alkureishi MA, Lee WW, Lyons M, et al. Impact of electronic medical record use on the patient-doctor relationship and communication: a systematic review. J Gen Intern Med 2016; 31 (5): 548–60.

- 12. Fairley CK, Vodstrcil LA, Huffam S, et al. Evaluation of Electronic Medical Record (EMR) at large urban primary care sexual health centre. PLoS One 2013; 8 (4): e60636.

- 13. Nagy VT, Kanter MH. Implementing the electronic medical record in the exam room: the effect on physician-patient communication and patient satisfaction. Perm J 2007; 11 (2): 21–4.

- 14. Hsu J, Huang J, Fung V, Robertson N, Jimison H, Frankel R. Health information technology and physician-patient interactions: impact of computers on communication during outpatient primary care visits. J Am Med Inform Assoc 2005; 12 (4): 474–80.

- 15. Press Ganey. Engage Patients to Understand Needs. 2019. https://www.pressganey.com/solutions/patient-experience/engage-patients-to-understand-needs Accessed April 6, 2020.

- 16. Press Ganey. Transparency Strategies: Online Physician Reviews for Improving Care and Reducing Suffering [white paper]. 2014. http://healthcare.pressganey.com/2014-PI-Transparency_Strategies Accessed April 6, 2020.

- 17. Cambria B, Basile J, Youssef E, et al. The effect of practice settings on individual Doctor Press Ganey scores: a retrospective cohort review. Am J Emerg Med 2019; 37 (9): 1618–21.

- 18. Presson AP, Zhang C, Abtahi AM, Kean J, Hung M, Tyser AR. Psychometric properties of the Press Ganey® Outpatient Medical Practice Survey. Health Qual Life Outcomes 2017; 15 (1): 32.

- 19. Ryan T, Specht J, Smith S, DelGaudio JM. Does the Press Ganey Survey correlate to online health grades for a major Academic Otolaryngology Department? Otolaryngol Head Neck Surg 2016; 155 (3): 411–5.

- 20. Schwartz TM, Tai M, Babu KM, Merchant RC. Lack of association between Press Ganey emergency department patient satisfaction scores and emergency department administration of analgesic medications. Ann Emerg Med 2014; 64 (5): 469–81.

- 21. Patel NK, Kim E, Khlopas A, et al. What influences how patients rate their hospital stay after total hip arthroplasty? Surg Technol Int 2017; 30: 405–10.

- 22. Philpot LM, Khokhar BA, Rosedahl JK, Sinclair TA, Chaudhry R, Ebbert JO. Variation in patient experience across the clinic day: a multilevel assessment of four primary care practices. J Gen Intern Med 2019; 34 (11): 2536–41.

- 23. Repplinger MD, Ravi S, Lee AW, et al. The impact of an emergency department front-end redesign on patient-reported satisfaction survey results. West J Emerg Med 2017; 18 (6): 1068–74.

- 24. Siddiqui ZK, Zuccarelli R, Durkin N, Wu AW, Brotman DJ. Changes in patient satisfaction related to hospital renovation: experience with a new clinical building. J Hosp Med 2015; 10 (3): 165–71.

- 25. Press Ganey. Scoring Quick Guide for Quick Reports. 2013. https://helpandtraining.pressganey.com/lib-docs/default-source/ip-training-resources/Scoring_Quick_Guide_for_Quick_Reports.pdf?sfvrsn=0 Accessed April 6, 2020.

- 26. Ellison A. Mayo’s annual revenue climbs to $12.6B amid move to Epic EHR. Becker’s Hospital CFO Report. February 19, 2019. https://www.beckershospitalreview.com/finance/mayo-s-annual-revenue-climbs-to-12-6b-amid-move-to-epic-ehr.html Accessed April 6, 2020.

- 27. Kiger J. Mayo Clinic reports ‘a very good’ 2018, despite Epic costs. Rochester Post Bulletin. February 18, 2019. https://www.postbulletin.com/news/local/mayo-clinic-reports-a-very-good-despite-epic-costs/article_9b7d4f3c-33b0-11e9-829f-f7dca8cdcf01.html Accessed April 6, 2020.

- 28. Japsen B. Ouch! Patient Satisfaction Hits Physician Pay. Forbes. July 2, 2013. https://www.forbes.com/sites/brucejapsen/2013/07/02/patient-satisfaction-hits-physician-pay/#4d74147654e5 Accessed April 6, 2020.

- 29. Zgierska A, Rabago D, Miller MM. Impact of patient satisfaction ratings on physicians and clinical care. Patient Prefer Adherence 2014; 8: 437–46.

- 30. North F, Crane SJ, Ebbert JO, Tulledge-Scheitel SM. Do primary care providers who prescribe more opioids have higher patient panel satisfaction scores? SAGE Open Med 2018; 6: 2050312118782547.

- 31. Garten R, Blanton L, Elal AIA, et al. Update: influenza activity in the United States during the 2017-18 season and composition of the 2018-19 influenza vaccine. MMWR Morb Mortal Wkly Rep 2018; 67 (22): 634–42.

- 32. Xu X, Blanton L, Elal AIA, et al. Update: influenza activity in the United States during the 2018-19 season and composition of the 2019-20 influenza vaccine. MMWR Morb Mortal Wkly Rep 2019; 68 (24): 544–51.
