Abstract

Augmented and mixed reality are emerging interactive and display technologies. These technologies are able to merge virtual objects, in either two or three dimensions, with the real world. Image guidance is the cornerstone of interventional radiology (IR). With augmented or mixed reality, medical imaging can be more readily accessible or displayed in actual 3D space during procedures to enhance guidance, at times when this information is most needed. In this review, the current state of these technologies is addressed, followed by a fundamental overview of their inner workings and challenges with 3D visualization. Finally, current and potential future applications in IR are highlighted.

I. Introduction

Spatial computing is a new paradigm of computing that uses the immediate, surrounding environment as a medium to interact with technology. Virtual, augmented, and mixed reality are all types of spatial computing. Virtual reality (VR) completely immerses the user in an artificial, digitally-created world. Augmented reality (AR) overlays digital content on the real world, enhancing reality with superimposed information. Mixed reality, also known as merged reality, represents the fusion of both virtual and real-world environments, where digital and physical objects co-exist and can interact with each other.

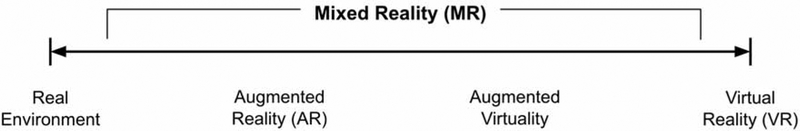

Although mixed reality devices are often described as an evolution of AR, mixed reality traditionally referred to a continuum spanning from the real-world environment at one end to a completely virtual environment at the other (Fig. 1) [1]. This review will consider mixed reality as synonymous with AR and will focus on AR and its potential impact on IR; VR will not be discussed in detail. Much of the research highlighted has been demonstrated as proof-of-concept or as a feasibility study; more established studies and clinical trials remain to be published.

Figure 1.

Reality-Virtuality Continuum proposed by Milgram in 1994 [1].

One of the key benefits of AR over VR is the ability to visualize and interact with digital objects while maintaining views of the natural world. Preserving direct line-of-sight with the surrounding environment permits the use of AR during image-guided interventions, provides relevant depth cues, and reduces virtual reality sickness, also known as cybersickness. Cybersickness is due to discrepancies between the visual and vestibular senses and can still occur with AR but is less frequent and milder compared to VR [2].

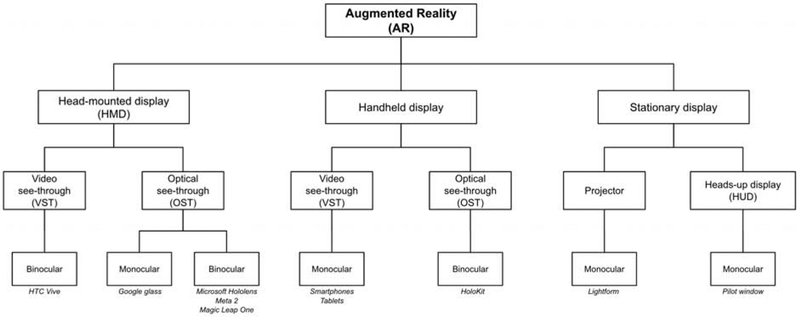

AR helps increase situational awareness by reducing shifts in focus [3, 4]. Strategically placed digital content minimizes refocusing between the content and the real world. AR devices can be grouped into three subtypes (Fig. 2): head-mounted displays (HMDs), handheld displays, and stationary displays. These classifications can be further categorized into optical see-through (OST) and video see-through (VST). OST displays utilize special transparent lenses that allow direct views of the external environment. VST displays use a video feed to indirectly view the external environment. These categories can be further subdivided into monocular or binocular. Monocular displays provide a single channel for viewing. Binocular displays provide a separate channel to each eye to simulate the perception of depth through stereoscopy.

Figure 2.

Organizational chart on types of AR devices. Specific examples are displayed in italics where appropriate.

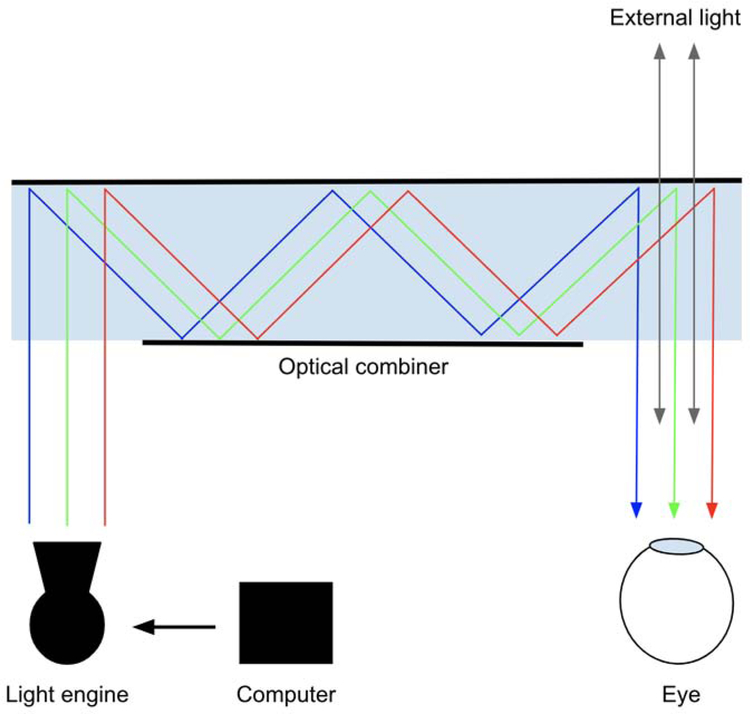

An OST display has three main components: light engine, optical combiner, and computer (Fig. 3). Special transparent lenses, called optical combiners or holographic waveguides, merge digitally-created images with light from the natural world. The optical combiner acts essentially like a partial mirror, allowing light from the real world to pass through while redirecting light from the projector to generate a hologram. A complete evaluation of OST-HMDs and their applications for surgical interventions is provided by Qian et al [5].

Figure 3.

General schematic of an optical see-through (OST) display. Light from a projector is reflected through a waveguide using total internal reflection and diffraction and is directed at specific angles and wavelengths into the eye to produce a hologram. External light from the natural world also passes through into the eye.

In general, there are two conventional methods for rendering 3D volumetric data: 1) surface rendering (SR, Video 1), also known as indirect volume rendering or shaded surface display, and 2) direct volume rendering (DVR, Video 2) [6–9]. SR is a binary process with visualization of surface meshes at tissue interfaces, which are usually preprocessed by segmentation and represent a fraction of the raw volumetric data. DVR is a continuous and much more computationally intensive process involving the entire volume of data but provides the most accurate visual 3D representation of medical imaging [9–11]. Both of these methods can be incorporated into AR displays to render medical imaging in actual 3D space [9].
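The contrast between the two rendering methods can be illustrated with a minimal Python sketch. It is not the pipeline of any product mentioned in this review: the function names, the threshold, and the opacity scale are hypothetical. A simple threshold stands in for segmentation in SR, and DVR is approximated by front-to-back alpha compositing along one axis.

```python
import numpy as np

def surface_mask(volume, threshold):
    """Binary step behind surface rendering: keep only boundary voxels of the
    thresholded region (a stand-in for a segmented surface mesh)."""
    inside = volume >= threshold
    padded = np.pad(inside, 1, constant_values=False)
    interior = inside.copy()
    # A voxel is interior if all six face neighbours are also inside.
    for axis in range(3):
        for shift in (-1, 1):
            interior &= np.roll(padded, shift, axis=axis)[1:-1, 1:-1, 1:-1]
    return inside & ~interior

def composite_front_to_back(volume, opacity_scale=0.1):
    """Direct volume rendering along axis 0 using front-to-back compositing.
    Every voxel contributes; intensity serves as colour and scaled opacity."""
    color = np.zeros(volume.shape[1:])
    transmittance = np.ones(volume.shape[1:])
    for slab in volume:  # advance all rays by one voxel per iteration
        alpha = np.clip(slab * opacity_scale, 0.0, 1.0)
        color += transmittance * alpha * slab
        transmittance *= 1.0 - alpha
    return color

# Synthetic 8x8x8 volume containing a 4x4x4 block of "tissue".
volume = np.zeros((8, 8, 8))
volume[2:6, 2:6, 2:6] = 1.0
shell = surface_mask(volume, threshold=0.5)
image = composite_front_to_back(volume)
print(shell.sum(), "surface voxels out of", volume.size)
```

In this toy volume the surface step keeps only the outer shell of the block, whereas the compositing loop visits every voxel along every ray, which is why DVR is the more computationally demanding of the two.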

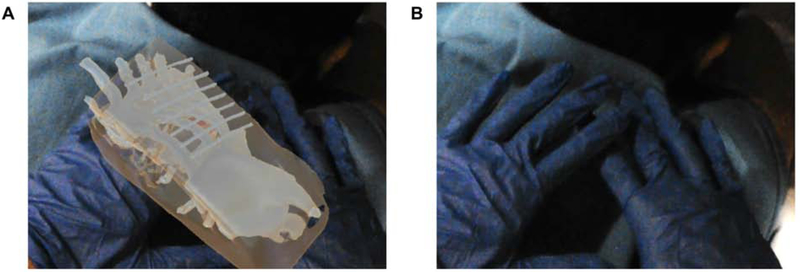

The primary advantage of AR displays is the ability to place and anchor virtual objects anywhere in space. This can be useful for projecting anatomic models or 3D imaging through the surgical drape, flat panel detector, or CT gantry. However, this feature can also unintentionally preclude visualization of important physical objects, such as instruments and the operator’s hands (Fig. 4). Therefore, object occlusion, or the way virtual objects project in front of and behind physical objects, will be important for managing virtual content in procedural settings [12].

Figure 4.

Object occlusion as a potential unintended consequence of AR. A. Virtual model within the patient projecting through the operator’s hands, unintentionally occluding visualization of the hands. B. Hands are visualized with hologram turned off.
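One common way to handle occlusion is a per-pixel depth test. The sketch below assumes that a depth map of the physical scene is available and that the final image is composited digitally, as in a VST display; both are assumptions made for illustration, and the array names are hypothetical. On an OST display the same test would decide which virtual pixels to leave undrawn.

```python
import numpy as np

def composite_with_occlusion(real_rgb, real_depth, virtual_rgb, virtual_depth):
    """Show a virtual pixel only where it is closer to the viewer than the
    physical surface seen at that pixel. Depths are in metres; pixels without
    virtual content carry a depth of np.inf."""
    virtual_present = np.isfinite(virtual_depth)
    virtual_in_front = virtual_present & (virtual_depth < real_depth)
    return np.where(virtual_in_front[..., None], virtual_rgb, real_rgb)

# Two pixels: the operator's hand at 0.5 m, the drape at 1.0 m.
real_rgb = np.zeros((1, 2, 3))
real_depth = np.array([[0.5, 1.0]])
# Virtual model rendered at 0.8 m in both pixels.
virtual_rgb = np.ones((1, 2, 3))
virtual_depth = np.array([[0.8, 0.8]])
out = composite_with_occlusion(real_rgb, real_depth, virtual_rgb, virtual_depth)
```

Here the hand remains visible in the first pixel because it is nearer than the virtual model, while the model is drawn over the drape in the second.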

One of the key limitations of AR displays is the field-of-view (FOV) for augmentation. The natural binocular FOV of the human eyes is about 200 degrees in the horizontal plane and 135 degrees in the vertical plane [13]. All commercially available OST-HMDs have horizontal and vertical FOVs of less than 90 degrees, with most ranging 30–40 degrees [5]. Additionally, most untethered displays have battery lives of 2–3 hours [14], an important factor to consider during prolonged procedures. In general, early clinical studies will seek to define how and whether AR can potentially offer additional benefits in IR, such as enhancing anatomic understanding, decreasing procedure times, and reducing radiation exposure.

II. 3D Accuracy, Tracking, and Registration

Accurate tracking and registration are needed for any image-guided navigation system [15]. For binocular displays, projectional accuracies are dependent on accurate calibration of the device. Calibration of OST-HMDs is necessary to tailor projections to the user’s inter-pupillary distance (IPD) [16]. Inaccurate IPD can result in poor eye-lens alignment, image distortion, and eye strain. Additionally, small errors in the IPD as well as off-centering of the device can propagate to large projectional errors due to off-axis projection [17].

Near-perfect accuracies are needed for AR to be useful during image-guided interventions. Although measuring accuracies of virtual objects in a 2D plane is relatively straightforward, measuring accuracies of virtual 3D objects is more challenging. Accuracy in depth, or the z-plane, is affected by the vergence-accommodation conflict [18]. Human eyes naturally converge and focus on an object at the same distance. However, since most OST-HMDs have fixed focal planes, the eyes may focus and converge at separate distances, causing distorted depth perception (Fig. 5). This conflict is also the leading contributor to eye fatigue and discomfort, which are common symptoms of prolonged AR use [19].

Figure 5.

Various visual perception scenarios affecting vergence and accommodation. A. Focal distance and vergence distance are equal, which occurs naturally with human vision. This is the ideal configuration for OST-HMDs. B. Vergence-accommodation conflict with focal distance greater than vergence distance when virtual objects are projected close to the display. C. Vergence-accommodation conflict with focal distance less than vergence distance when virtual objects are projected far from the display.
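The size of the vergence-accommodation conflict can be estimated with simple geometry. The sketch below is illustrative only: the 2 m focal plane and 63 mm IPD are assumed values rather than the specifications of any particular headset, and the function names are hypothetical.

```python
import math

def vergence_angle_deg(ipd_m, distance_m):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def va_conflict_diopters(focal_plane_m, virtual_object_m):
    """Mismatch between accommodation demand (fixed focal plane) and vergence
    demand (distance at which the object is rendered), in diopters (1/m)."""
    return abs(1.0 / focal_plane_m - 1.0 / virtual_object_m)

# Assumed values: focal plane fixed at 2 m, hologram rendered at arm's length.
conflict = va_conflict_diopters(focal_plane_m=2.0, virtual_object_m=0.5)
angle = vergence_angle_deg(ipd_m=0.063, distance_m=0.5)
print(f"conflict: {conflict:.2f} D, vergence angle: {angle:.1f} deg")
```

Under these assumptions the conflict is zero only when the hologram sits on the focal plane (scenario A in Fig. 5) and grows as content is brought toward the procedural field at arm's length.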

Microsoft HoloLens (Redmond, WA), which was released in 2016, has been shown to be systematically superior to comparable OST-HMDs on the market for surgical interventions [5]. Several validation studies have reported HoloLens accuracy to be near or within one centimeter [20–25]. Given these subcentimeter accuracies, the U.S. Food and Drug Administration approved Novarad OpenSight (American Fork, UT) and Medivis SurgicalAR (Brooklyn, NY) software applications in September 2018 and May 2019, respectively, for preoperative visualization using HoloLens.

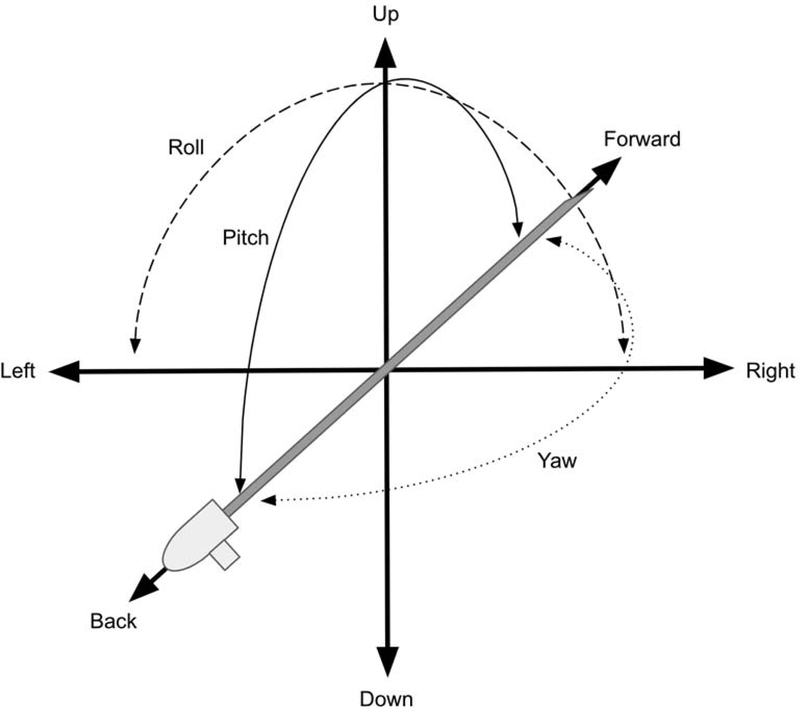

Tracking is the sensing and measuring of spatial properties. Registration involves the matching or alignment of those spatial properties, allowing anatomic imaging to be overlaid directly onto the patient. AR devices contain multiple sensors that continuously map the environment and track the device's position within it, a process known as simultaneous localization and mapping (SLAM) [26]. Accurate mapping and tracking are necessary to update spatial relationships of virtual objects. Full tracking requires 6 degrees of freedom: 3 degrees of freedom in position (x-, y-, and z-axes) and 3 degrees of freedom in rotation (pitch, yaw, and roll) (Fig. 6). Because AR involves 3D tracking, accurate registration can be challenging and limited by built-in sensors, known as “inside-out tracking.” However, computer vision algorithms using built-in cameras to detect and track image-based markers can supplement “inside-out tracking” and provide accurate and fast registration [20, 21, 27–29].

Figure 6.

Six degrees of freedom representing combination of three positions (x-y-z) and three orientations (roll-pitch-yaw).
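A 6-degree-of-freedom pose is commonly stored as a 4×4 homogeneous transformation matrix. The sketch below shows one way to build it. The axis assignment (roll about x, pitch about y, yaw about z) and the rotation order are assumptions chosen for illustration, since conventions differ between devices and software frameworks.

```python
import numpy as np

def pose_matrix(x, y, z, roll, pitch, yaw):
    """4x4 homogeneous transform from three translations and three rotations.
    Angles are in radians. Assumed convention: R = Rz(yaw) @ Ry(pitch) @ Rx(roll)."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    Rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    Ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    Rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    T = np.eye(4)
    T[:3, :3] = Rz @ Ry @ Rx   # orientation (3 degrees of freedom)
    T[:3, 3] = [x, y, z]       # position (3 degrees of freedom)
    return T

# A point 1 unit along x, seen after a 90-degree yaw and a shift of (1, 2, 3).
T = pose_matrix(1.0, 2.0, 3.0, 0.0, 0.0, np.pi / 2)
moved = T @ np.array([1.0, 0.0, 0.0, 1.0])
```

Representing poses this way allows successive transformations to be chained by matrix multiplication.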

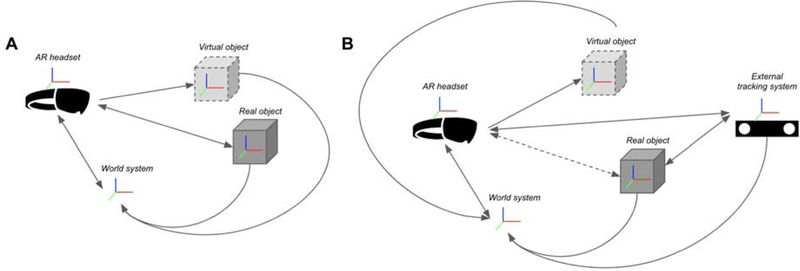

Many existing AR-assisted navigation systems integrate an external optical tracking system, or “outside-in tracking,” and bypass internal sensors to further improve accuracies or track additional hardware [30–36]. Another external tracking system commonly employed is electromagnetic tracking [37]. Regardless of the type of tracking, most external tracking systems provide limited degrees of freedom, balancing tradeoffs between sensing position versus orientation. For integration with external trackers, each coordinate system needs to be calibrated and transformed to be congruent within the same space, or world coordinate system (Fig. 7). Calibration of the AR device and external trackers can be performed using manual, semi-automatic, or automatic methods [32, 38]. Once calibrated, 3D datapoints in virtual space can be registered to known and tracked points in physical space.

Figure 7.

Various types of tracking with arrows representing transformations needed for virtual and physical objects to be congruent within the same space. Red-green-blue axes represent respective coordinate systems. A. “Inside-out tracking” using built-in sensors within the augmented reality headset. B. “Outside-in tracking” after integration with an external tracking system. Tracking of real physical objects by the AR headset (dotted line) is replaced by the external tracking system.
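Point-based rigid registration of virtual datapoints to tracked physical points can be sketched with the classical least-squares (Kabsch, SVD-based) solution. This is a generic textbook method rather than the algorithm of any system cited here. The function names are hypothetical, and paired, corresponding fiducials are assumed to be available in both spaces.

```python
import numpy as np

def rigid_register(virtual_pts, physical_pts):
    """Least-squares rigid transform mapping virtual_pts onto physical_pts.
    Both inputs are (N, 3) arrays of corresponding points, N >= 3, not collinear."""
    cv, cp = virtual_pts.mean(axis=0), physical_pts.mean(axis=0)
    H = (virtual_pts - cv).T @ (physical_pts - cp)   # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))           # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = cp - R @ cv
    return T

def fiducial_registration_error(T, virtual_pts, physical_pts):
    """Root-mean-square distance between mapped virtual and physical fiducials."""
    mapped = virtual_pts @ T[:3, :3].T + T[:3, 3]
    return float(np.sqrt(np.mean(np.sum((mapped - physical_pts) ** 2, axis=1))))

# Four fiducials in image space and their tracked positions in physical space.
virtual = np.array([[0, 0, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]], dtype=float)
R_true = np.array([[0, -1, 0], [1, 0, 0], [0, 0, 1]], dtype=float)
physical = virtual @ R_true.T + np.array([10.0, 20.0, 30.0])
T = rigid_register(virtual, physical)
fre = fiducial_registration_error(T, virtual, physical)
```

The resulting matrix can be multiplied with the calibration transforms of the headset and tracker to express all objects in the world coordinate system. The fiducial registration error gives a quick check on the fit, although it does not by itself guarantee accuracy at the target.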

The aforementioned registration processes have all been based on rigid transformations. However, multiple practical considerations impede accuracy and rigid registration, such as patient motion, breathing, and organ deformation, which are dynamic processes that may require dynamic and potentially computationally intensive solutions [39, 40]. Respiratory and patient motion remain among the largest technical and practical hurdles for adoption of many navigation or fusion systems in IR, with many systems opting for simple respiratory gating [41] or a rigid to elastic switch [35].
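Amplitude-based respiratory gating can be reduced to a very small sketch. It assumes a one-dimensional respiratory signal, for example from a bellows or a tracked skin marker, and a reference level recorded when the registration imaging was acquired. The names and tolerance are hypothetical, and this is not the method of any cited system.

```python
def gate_frames(resp_signal, reference_level, tolerance):
    """Return indices of samples whose respiratory amplitude lies within
    `tolerance` of the level at which the reference imaging was acquired.
    Only these frames are treated as valid for the rigid overlay."""
    return [i for i, s in enumerate(resp_signal)
            if abs(s - reference_level) <= tolerance]

# Normalised breathing trace: 0.0 = end-expiration, 1.0 = end-inspiration.
trace = [0.0, 0.4, 0.9, 1.0, 0.6, 0.1]
valid = gate_frames(trace, reference_level=0.0, tolerance=0.15)
print(valid)  # frames close to end-expiration
```

Gating keeps the rigid assumption valid only for part of the breathing cycle, at the cost of guidance being unavailable or stale for the remaining frames.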

III. AR in medicine

The enhanced ability of AR to visualize and localize targets may have downstream implications for improving procedural outcomes, complication profiles, and operating time. Thus, AR has been explored to augment a variety of surgical procedures. Recent applications with AR technologies have been demonstrated in neurosurgery [25, 33], otolaryngology [42], vascular surgery [43], hepatobiliary surgery [44], orthopedic surgery [45], plastic surgery [14], and urology [46]. Despite the importance of visualizing and localizing targets in IR, a recent systematic review of wearable heads-up displays in an operating room identified IR as having the fewest number of published studies among ten other procedural specialties [47]. As image-guided experts and proceduralists, interventional radiologists should undertake more experimentation and development to evaluate this new visualization technology, as other specialties have done.

IV. IR applications

The role for advanced imaging and technologies in image-guided procedures has transformed procedural medicine. The 1996 RSNA New Horizons Lecture emphasized the capability for computers to enhance visibility and navigate through 3D coordinates during IR and minimally invasive procedures [48]. The lecture highlighted the promises of preoperative planning to select optimal approaches, register models onto patients, and display virtual needle paths, all of which continue to be active areas of research and applicable to a variety of IR procedures. Over two decades later, these promises still have yet to be fully realized, but current research endeavors show progress and potential for translation into the IR suite.

A. Endovascular Procedures

The additional spatial information provided by AR can enable the IR to obtain a more intuitive understanding of complex vascular anatomy. Currently, IRs must cognitively associate 2D images on a monitor screen with a mentally reconstructed 3D model. AR permits the ability to easily visualize 3D vascular anatomy from prior cross-sectional imaging for preprocedural planning [49] or use as an intra-procedural reference [50]. This capability can alleviate the continual process of associating and mentally reconstructing 2D images into 3D [51]. Indeed, cognitive reconstruction and registration have been shown to be less accurate than registration with computer assistance for fusion-guided, needle-based interventions [52].

With AR, the IR can look virtually inside of the patient from any viewpoint. Anterior, posterior, and oblique divisions of vessels can be easily differentiated and optimally displayed with the added depth dimension [51]. Prior to the procedure, the IR can simulate ideal fluoroscopic angles and positions that highlight vessel courses and branchpoints. During the procedure, a virtual 3D roadmap can be placed anywhere within the IR suite as a reference to augment vessel selection and catheter positioning. Acquired cone-beam CT, or rotational angiography, can also be projected in 3D to quickly confirm the target of interest, as opposed to scrolling through axial 2D images.

AR utilization may also help achieve radiation dose savings during endovascular procedures in the future. Using AR and external electromagnetic tracking, Kuhlmann et al demonstrated the ability to overlay a 3D vascular model on a patient phantom and virtually track and navigate an endovascular catheter through the vascular model, foreseeably eliminating the need for any radiation for real-time endovascular guidance [53].

B. Percutaneous Procedures

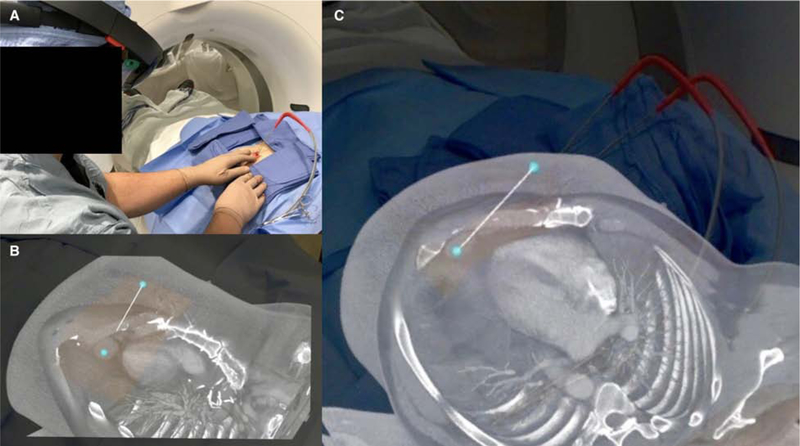

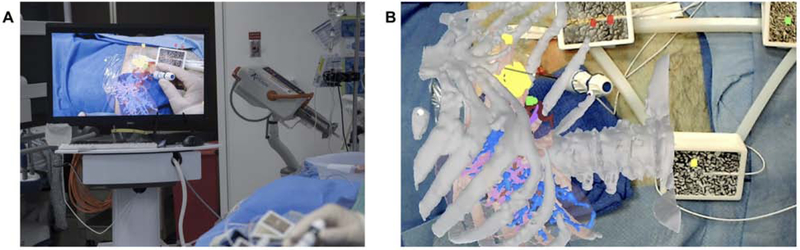

Many AR implementations currently exist for navigation during percutaneous needle-based interventions. For example, virtual 3D needle trajectories can be registered to patients to assist in the placement and positioning of ablation probes (Fig. 8); protocol approved by the institutional review board at [omitted]. Moreover, AR has been shown to reduce procedure times, number of acquired images, and radiation dose during simulated percutaneous bone interventions [54]. In a similar fashion, fusion navigation with electromagnetic tracking has been proven in a randomized controlled trial to reduce radiation, number of CT scans, indwelling needle time, and the number of needle manipulations in CT-guided liver biopsies [55]. A clinical trial utilizing HoloLens for percutaneous liver ablation is currently underway (Fig. 9) [56]; protocol approved by the institutional review board at [omitted].

Figure 8.

A. Metastatic thymoma for cryoablation of cardiophrenic lymph node using AR-assisted visualization intraoperatively. Preoperative CT was projected using Microsoft HoloLens (Redmond, WA) and Medivis SurgicalAR (Brooklyn, NY). Rendering was performed on a remote workstation and wirelessly streamed to HoloLens in real time. Holographic 3D volume was manually registered to the patient using the patient’s nipples as markers. B and C. A virtual needle trajectory track can be overlaid during planning and used as a virtual guide during the procedure.

Figure 9.

A. Hepatocellular carcinoma for microwave ablation using intraoperative AR-assisted navigation. The preoperative CT was projected using surface rendering software and navigation system by Medview AR (Cleveland, OH) and Microsoft HoloLens (Redmond, WA). The holographic projection was registered to the patient using an electromagnetic tracking system and image-based markers. B. Combined real-time tracking of virtual/actual ablation probe relative to tumor target (yellow).

One of the first AR systems for this application was made by Siemens (Erlangen, Germany) in 2006 and called RAMP, which used a VST-HMD system to project virtual needle trajectories during CT- and MRI-guided interventions [57, 58]. More recent AR-assisted navigation systems have been developed using tablets and OST-HMDs. An AR-assisted needle guidance system using an OST-HMD and external optical tracking demonstrated a guidance error between the actual and virtual needle trajectories of less than two degrees in a CT phantom [31]. Another system utilizing a tablet computer and computer vision marker detection achieved sub-5 mm accuracies in a porcine model and cadaver for liver thermal ablation [28]; this system has subsequently been upgraded with a VST-HMD and commercialized as Bracco Imaging Endosight (Milan, Italy) for CT-based tumor ablations. In contrast to these rigid needle-based navigation systems, HoloLens was used to project and extrapolate bending 3D needle trajectories with a shape sensing needle and reduce targeting error by 26% compared to rigid needle assumptions [32].
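The two error measures quoted for needle guidance, angular deviation in degrees and distance to target in millimeters, can be computed from tracked 3D points as in the sketch below. The function and argument names are hypothetical, and coordinates are assumed to be in millimeters within a common coordinate system.

```python
import numpy as np

def angular_error_deg(planned_entry, planned_target, needle_hub, needle_tip):
    """Angle between the planned (virtual) trajectory and the actual needle axis."""
    planned = np.asarray(planned_target, float) - np.asarray(planned_entry, float)
    actual = np.asarray(needle_tip, float) - np.asarray(needle_hub, float)
    cos_angle = planned @ actual / (np.linalg.norm(planned) * np.linalg.norm(actual))
    return float(np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0))))

def tip_to_target_mm(needle_tip, planned_target):
    """Euclidean distance between the needle tip and the planned target."""
    return float(np.linalg.norm(np.asarray(needle_tip, float)
                                - np.asarray(planned_target, float)))

# Planned path runs 100 mm along z; the needle ends 3 mm and 4 mm off in x and y.
angle = angular_error_deg((0, 0, 0), (0, 0, 100), (0, 0, 0), (3, 4, 100))
miss = tip_to_target_mm((3, 4, 100), (0, 0, 100))
print(f"angular error: {angle:.2f} deg, tip-to-target: {miss:.1f} mm")
```

The two measures are related by insertion depth: a fixed angular error produces a larger tip-to-target distance for deeper targets.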

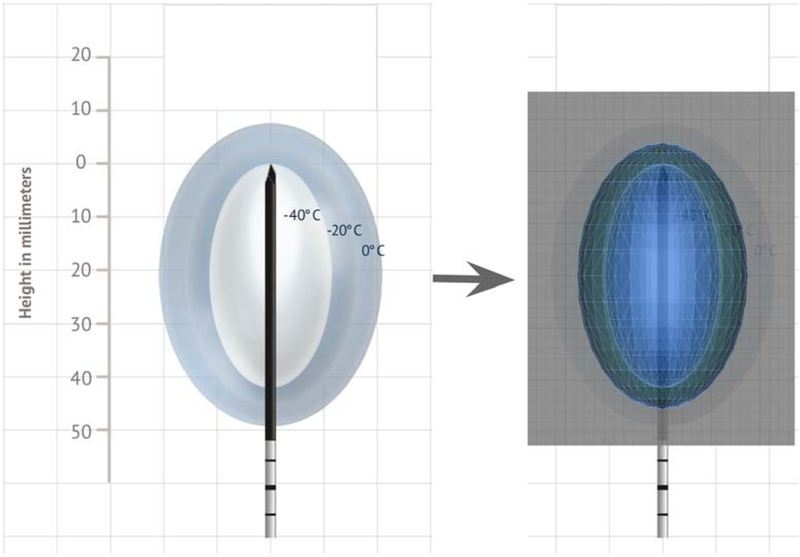

AR can provide enhanced volumetric tumor margin visualization and localization, potentially leading to more successful ablation coverage and adequate treatment margins [59]. Higher ablation success rates have been shown in simulated microwave liver ablation following planning in 3D, albeit on a monitor screen, compared to 2D [60]. In a similar fashion, AR may help plan and facilitate optimal probe placement by visualizing theoretical ablation treatment volumes in actual 3D space (Fig. 10 and Video 3). These plans can then be transferred and registered onto the patient for virtual procedural guidance using the planned trajectories. This approach may give more confidence to the IR for approaching and treating targets in challenging locations that were previously unfavorable, such as liver dome lesions requiring nonorthogonal or out-of-plane approaches [15].

Figure 10.

Planning an ablation with augmented reality. 3D surface-rendered model of −40°C and −20°C isotherm ice balls from manufacturer technical specifications from Galil Medical (Arden Hills, MN).
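Planned treatment coverage can also be quantified as well as visualized. The sketch below estimates the fraction of tumor voxels enclosed by the union of modeled ablation zones. The zones are simplified to axis-aligned ellipsoids with hypothetical dimensions; they are not manufacturer isotherm data, and the function names are illustrative.

```python
import numpy as np

def ellipsoid_mask(grid, center, radii):
    """Boolean mask of voxels inside an axis-aligned ellipsoid.
    `grid` is a tuple of three coordinate arrays (for example from np.meshgrid)."""
    return sum(((g - c) / r) ** 2 for g, c, r in zip(grid, center, radii)) <= 1.0

def coverage_fraction(tumor_mask, ablation_masks):
    """Fraction of tumor voxels covered by the union of all ablation zones."""
    treated = np.zeros_like(tumor_mask, dtype=bool)
    for mask in ablation_masks:
        treated |= mask
    return float((tumor_mask & treated).sum() / tumor_mask.sum())

# 1 mm voxel grid; all sizes below are hypothetical.
axis = np.arange(-30, 31)
grid = np.meshgrid(axis, axis, axis, indexing="ij")
tumor = ellipsoid_mask(grid, center=(0, 0, 0), radii=(12, 12, 12))
probe_a = ellipsoid_mask(grid, center=(-6, 0, 0), radii=(10, 10, 15))
probe_b = ellipsoid_mask(grid, center=(6, 0, 0), radii=(10, 10, 15))
print(f"coverage: {coverage_fraction(tumor, [probe_a, probe_b]):.0%}")
```

A fuller planning tool would also account for a treatment margin around the tumor and for heat-sink or tissue effects that distort the modeled zones, which this sketch ignores.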

AR may be able to help achieve considerable radiation dose savings and resource utilization during percutaneous procedures. The use of an AR capable C-arm system revealed approximately 40–50% radiation dose reduction during needle localization of targets in pigs compared to standard CT fluoroscopy while preserving accuracy [61]. HoloLens and Novarad OpenSight were used to virtually guide spinal needles into a lumbar spine phantom and resulted in sub-5 mm accuracies using preoperative CT alone without the need for any real-time imaging [24].

C. Training & Instruction

Simulations with AR for medical training are becoming increasingly popular for teaching procedural and technical skills [62]. AR can help create immersive scenarios within a real IR suite to improve performance before complex cases or simulate the use of new equipment before actual use [63, 64]. However, current evidence regarding the relative superiority of AR simulations to conventional instruction is lacking. One study found no difference in internal jugular vein cannulation time and total procedure time using AR compared to conventional instruction [65].

In addition to simulations, AR devices enable the ability to share environments for collaborative experiences with other users. Existing interactive platforms allow remote consultants to project live annotations into the AR display of another operator, offering remote real-time instruction or expert assistance [66, 67]. Additionally, procedures performed with AR HMDs can be broadcast on a larger scale, allowing IRs in rural settings or developing countries to visualize live or recorded procedures performed by experts [68].

D. Ergonomics & Workflow

There are benefits to projecting virtual 2D objects as well as 3D objects in IR. AR headsets are able to deploy virtual 2D monitors that can improve ergonomics, patient monitoring, and workflow. Virtual 2D monitor screens, as many as needed, can be placed anywhere within the IR suite for readily accessible viewing. These virtual screens can be made as large as desired but will fundamentally be constrained by the headset’s FOV. Images from the C-arm or ultrasound (US) machine can be streamed to the AR headset in real time with a video capture device [30]. This allows the operator to maintain focus on the task at hand while reducing gazes away from the procedural field. For example, virtual 2D monitors placed within the procedural field during vertebroplasty can allow the IR to have close observation of cement placement without shifting focus [69]. A randomized controlled trial in breast phantoms showed that AR-assisted needle guidance using a VST-HMD and a virtual screen displaying live 2D US images along the end of the US transducer led to improved biopsy needle accuracy compared to standard US guidance [70].
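The FOV constraint on virtual screens follows from basic trigonometry: a flat screen viewed head-on at distance d fits within an angular FOV only if its extent does not exceed 2·d·tan(FOV/2). The sketch below applies this to the 30–40 degree range typical of OST-HMDs. The 2 m viewing distance and the function name are assumptions for illustration.

```python
import math

def max_screen_extent_m(fov_deg, distance_m):
    """Largest width (or height) of a flat, head-on virtual screen that still
    fits inside the augmentable field of view at the given viewing distance."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

for fov in (30, 40):
    extent = max_screen_extent_m(fov, distance_m=2.0)
    print(f"{fov} deg FOV at 2 m: screen extent up to {extent:.2f} m")
```

Any part of a larger or closer virtual screen falls outside the augmentable region and is clipped until the operator turns their head.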

Finally, AR can have beneficial impacts on all IR staff members. C-arms requiring manual positioning can be cumbersome and require several adjustments until the desired view is obtained. An AR-assisted virtual C-arm positioning guide can aid technologists to quickly establish desired C-arm views, eliminating the need for iterative refinement and thereby reducing radiation [71]. Additionally, AR can be used to project a radiation dose map of the patient onto the IR table as well as help virtually and visually monitor radiation dose to staff members during the procedure in real time [72]. Furthermore, the advent of virtual screens and controls permits the removal of extraneous cables, carts, and mounts to allow staff members to more easily maneuver around the IR suite.

V. Conclusions

Augmented and mixed reality are novel display technologies that are able to provide a new way to visualize images and localize targets during image-guided procedures. Although IR has been somewhat slow to adopt such technologies, these technologies may be appealing to IR and image-guided therapists for readily-accessible image viewing or advanced 3D visualization intra-procedurally. AR-assisted systems should be further developed and evaluated to see if they can improve outcomes in IR with safer, more efficient procedures that require less radiation. Clinical evidence is currently lacking, but as these technologies evolve, AR may become easier to implement and utilize imaging in an actual volumetric fashion to enhance interventions. However, it will be paramount that metrics be established and clearly defined through validated, high-level, evidence-based studies. In doing so, translation and adoption into the IR suite may transform the way future image-guided interventions are undertaken and provide benefits to patients, operators, and staff.

Supplementary Material

Video 1. Surface-rendered (SR) 3D model of a right apical lung mass from CT scan using Microsoft HoloLens (Redmond, WA) and custom software created in Unity (San Francisco, CA). Only surface mesh data from skin, bones, and tumor (red) are projected. Content within these surfaces is absent, creating a hollow appearance. Note that the 2D video does not fully capture the 3D stereoscopic views seen with AR.

Video 2. Direct volume-rendered (DVR) 3D model of an apical lung mass from CT scan using Microsoft HoloLens (Redmond, WA) and Medivis SurgicalAR (Brooklyn, NY). Entire dataset from the CT scan is projected, and all internal content is viewable. Rendering was performed locally on HoloLens. Drops in frame rate can occasionally be noticed due to HoloLens struggling with DVR computations. This can be overcome by performing remote rendering on a more powerful machine and wirelessly streaming the content to HoloLens in real time. Note that the 2D video does not fully capture the 3D stereoscopic views seen with AR.

Video 3. AR ablation planning with 3D visualization of a surface-rendered model of a large, complex iliac bone metastasis and treatment coverage from multiple cryoablation probes with models of −40°C and −20°C isotherm ice balls. Five virtual probes are shown for demonstration purposes only. Note that the 2D video does not fully capture the 3D stereoscopic views seen with AR.

Acknowledgments:

BP reports grants from the NIH (5T32EB004311-14), SIR Foundation, and NVIDIA; and nonfinancial support from Medivis during the conduct of the study. SH reports personal fees from BTG and Amgen outside the submitted work. CM reports grants from Cleveland Clinic Lerner Research Institute and National Center for Accelerated Innovation (NCAI-CC); and other activities from Boston Scientific, BTG, and Terumo Medical outside the submitted work. GN has nothing to disclose. BW reports grants and other activities from Philips, NVIDIA, Siemens, Celsion, XAct Robotics, and BTG Biocompatibles outside the submitted work; multiple patents in the field of fusion and image-guided therapies (not directly related to augmented reality or this work) with royalties from Philips; and research support from the NIH Center for Interventional Oncology and NIH Intramural Research Program. TG reports personal fees from Trisalis Life Sciences outside the submitted work. Special thanks to Dr. Steven Horii and the Penn Medicine Medical Device Accelerator for supporting equipment for this research.

Footnotes

Conflicts of Interests and Financial Disclosures: There are no financial disclosures of any author listed that are related to or conflict with this topic of interest.

SIR Annual Scientific Meeting: Related research has been presented as oral presentations, invited talks, and posters in 2018 and 2019.

References

- [1].Milgram P, Takemura H, Utsumi A, Kishino F. Augmented reality: a class of displays on the reality-virtuality continuum. Proc SPIE 2351, Telemanipulator and Telepresence Technologies, 1994; p. 282–92. [Google Scholar]

- [2].Vovk A, Wild F, Guest W, Kuula T. Simulator Sickness in Augmented Reality Training Using the Microsoft HoloLens ACM Human Factors in Computing Systems. Montreal, QC, Canada, 2018. [Google Scholar]

- [3].Sojourner RJ, Antin JF. The Effects of a Simulated Head-Up Display Speedometer on Perceptual Task Performance. Human Factors 1990; 32:329–39. [DOI] [PubMed] [Google Scholar]

- [4].He J. Head-up display for pilots and drivers. Journal of Ergonomics 2013; 3:e120. [Google Scholar]

- [5].Qian L, Barthel A, Johnson A, et al. Comparison of optical see-through head-mounted displays for surgical interventions with object-anchored 2D-display. Int J Comput Assist Radiol Surg 2017; 12:901–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [6].Calhoun PS, Kuszyk BS, Heath DG, Carley JC, Fishman EK. Three-dimensional volume rendering of spiral CT data: theory and method. Radiographics 1999; 19:745–64. [DOI] [PubMed] [Google Scholar]

- [7].Dalrymple NC, Prasad SR, Freckleton MW, Chintapalli KN. Introduction to the language of three-dimensional imaging with multidetector CT. Radiographics 2005; 25:1409–28. [DOI] [PubMed] [Google Scholar]

- [8].Zhang Q, Eagleson R, Peters TM. Volume visualization: a technical overview with a focus on medical applications. J Digit Imaging 2011; 24:640–64. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [9].Sutherland J, Belec J, Sheikh A, et al. Applying Modern Virtual and Augmented Reality Technologies to Medical Images and Models. J Digit Imaging 2018; 32:38–53. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [10].Meißner M, Huang J, Bartz D, Mueller K, Crawfis R. A practical evaluation of popular volume rendering algorithms IEEE Volume Visualization Symposium. Salt Lake City, UT, 2000. [Google Scholar]

- [11].Holub J, Winer E. Enabling Real-Time Volume Rendering of Functional Magnetic Resonance Imaging on an iOS Device. J Digit Imaging 2017; 30:738–50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- [12].Schmalstieg D, Höllerer T. Augmented Reality: Principles and Practice. 1st ed: Addison-Wesley Professional, 2016. [Google Scholar]

- [13].Ruch TC, Fulton JF. Medical Physiology and Biophysics. 18 ed: W.B. Saunders, 1960. [Google Scholar]

- [14].Tepper OM, Rudy HL, Lefkowitz A, et al. Mixed Reality with HoloLens: Where Virtual Reality Meets Augmented Reality in the Operating Room. Plast Reconstr Surg 2017; 140:1066–70. [DOI] [PubMed] [Google Scholar]

- [15].Maybody M, Stevenson C, Solomon SB. Overview of navigation systems in image-guided interventions. Tech Vasc Interv Radiol 2013; 16:136–43. [DOI] [PubMed] [Google Scholar]

- [16].Grubert J, Itoh Y, Moser K, Swan JE. A survey of calibration methods for optical see-through head-mounted displays. J IEEE Transactions on Visualization and Computer Graphics 2018; 24:2649–62. [DOI] [PubMed] [Google Scholar]

- [17].Held RT, Hui TT. A guide to stereoscopic 3D displays in medicine. Acad Radiol 2011; 18:1035–48.

- [18].Singh G, Ellis SR, Swan II JE. The effect of focal distance, age, and brightness on near-field augmented reality depth matching. IEEE Transactions on Visualization and Computer Graphics 2019; Preprint.

- [19].Shibata T, Kim J, Hoffman DM, Banks MS. Visual discomfort with stereo displays: Effects of viewing distance and direction of vergence-accommodation conflict. Proc SPIE 7863, Stereoscopic Displays and Applications XXII, 2011.

- [20].Perkins SL, Lin MA, Srinivasan S, Wheeler AJ, Hargreaves BA, Daniel BL. A Mixed-Reality System for Breast Surgical Planning. IEEE Int Symposium on Mixed and Augmented Reality Adjunct Proc, 2017; p. 269–74.

- [21].Frantz T, Jansen B, Duerinck J, Vandemeulebroucke J. Augmenting Microsoft’s HoloLens with vuforia tracking for neuronavigation. Healthc Technol Lett 2018; 5:221–5.

- [22].Liu Y, Dong H, Zhang L, El Saddik A. Technical Evaluation of HoloLens for Multimedia: A First Look. IEEE MultiMedia 2018; 25:8–18.

- [23].Rae E, Lasso A, Holden MS, Morin E, Levy R, Fichtinger G. Neurosurgical burr hole placement using the Microsoft HoloLens. Proc SPIE 10576, Medical Imaging: Image-Guided Procedures, Robotic Interventions, and Modeling: International Society for Optics and Photonics, 2018.

- [24].Gibby JT, Swenson SA, Cvetko S, Rao R, Javan R. Head-mounted display augmented reality to guide pedicle screw placement utilizing computed tomography. Int J Comput Assist Radiol Surg 2019; 14:525–35.

- [25].Incekara F, Smits M, Dirven C, Vincent A. Clinical Feasibility of a Wearable Mixed-Reality Device in Neurosurgery. World Neurosurg 2018; 118:e422–e7.

- [26].Cadena C, Carlone L, Carrillo H, et al. Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age. IEEE Transactions on Robotics 2016; 32:1309–32.

- [27].Lepetit V. On computer vision for augmented reality. IEEE Int Symposium on Ubiquitous Virtual Reality, 2008; p. 13–6.

- [28].Solbiati M, Passera KM, Rotilio A, et al. Augmented reality for interventional oncology: proof-of-concept study of a novel high-end guidance system platform. Eur Radiol Exp 2018; 2:18.

- [29].Park BJ, Hunt S, Nadolski G, Gade T. Registration methods to enable augmented reality-assisted 3D image-guided interventions. Proc SPIE 11072, Fully Three-Dimensional Image Reconstruction in Radiology and Nuclear Medicine, 2019.

- [30].Kuzhagaliyev T, Clancy NT, Janatka M, et al. Augmented reality needle ablation guidance tool for irreversible electroporation in the pancreas. Proc SPIE 10576, Medical Imaging: Image-Guided Procedures, Robotic Interventions, and Modeling, 2018.

- [31].Li M, Xu S, Wood BJ. Assisted needle guidance using smart see-through glasses. Proc SPIE 10576, Medical Imaging: Image-Guided Procedures, Robotic Interventions, and Modeling, 2018.

- [32].Lin MA, Siu AF, Bae JH, Cutkosky MR, Daniel BL. HoloNeedle: Augmented Reality Guidance System for Needle Placement Investigating the Advantages of Three-Dimensional Needle Shape Reconstruction. IEEE Robotics and Automation Letters 2018; 3:4156–62.

- [33].Maruyama K, Watanabe E, Kin T, et al. Smart Glasses for Neurosurgical Navigation by Augmented Reality. Oper Neurosurg 2018; 0:1–6.

- [34].Si W, Liao X, Wang Q, Heng P-A. Augmented Reality-Based Personalized Virtual Operative Anatomy for Neurosurgical Guidance and Training. IEEE Conference on Virtual Reality and 3D User Interfaces. Reutlingen, Germany, 2018; p. 683–4.

- [35].Si W, Liao X, Qian Y, Wang Q. Mixed Reality Guided Radiofrequency Needle Placement: A Pilot Study. IEEE Access 2018; 6:31493–502.

- [36].Meulstee JW, Nijsink J, Schreurs R, et al. Toward Holographic-Guided Surgery. Surgical Innovation 2019; 26:86–94.

- [37].Cleary K, Peters TM. Image-guided interventions: technology review and clinical applications. Annu Rev Biomed Eng 2010; 12:119–42.

- [38].Andress S, Johnson A, Unberath M, et al. On-the-fly augmented reality for orthopedic surgery using a multimodal fiducial. J Med Imaging 2018; 5:021209.

- [39].Udupa JK. Three-dimensional visualization and analysis methodologies: a current perspective. Radiographics 1999; 19:783–806.

- [40].Tam GKL, Cheng Z, Lai Y, et al. Registration of 3D Point Clouds and Meshes: A Survey from Rigid to Nonrigid. IEEE Transactions on Visualization and Computer Graphics 2013; 19:1199–217.

- [41].Nicolau SA, Pennec X, Soler L, et al. An augmented reality system for liver thermal ablation: design and evaluation on clinical cases. Med Image Anal 2009; 13:494–506.

- [42].Wong K, Yee HM, Xavier BA, Grillone GA. Applications of Augmented Reality in Otolaryngology: A Systematic Review. Otolaryngol Head Neck Surg 2018; 159:956–67.

- [43].Pratt P, Ives M, Lawton G, et al. Through the HoloLens looking glass: augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels. Eur Radiol Exp 2018; 2.

- [44].Tang R, Ma LF, Rong ZX, et al. Augmented reality technology for preoperative planning and intraoperative navigation during hepatobiliary surgery: A review of current methods. Hepatobiliary Pancreat Dis Int 2018; 17:101–12.

- [45].Gregory TM, Gregory J, Sledge J, Allard R, Mir O. Surgery guided by mixed reality: presentation of a proof of concept. Acta Orthop 2018; 89:480–3.

- [46].Detmer FJ, Hettig J, Schindele D, Schostak M, Hansen C. Virtual and Augmented Reality Systems for Renal Interventions: A Systematic Review. IEEE Reviews in Biomedical Engineering 2017; 10:78–94.

- [47].Yoon JW, Chen RE, Kim EJ, et al. Augmented reality for the surgeon: Systematic review. Int J Med Robot 2018; 14:e1914.

- [48].Jolesz FA. 1996 RSNA Eugene P. Pendergrass New Horizons Lecture. Image-guided procedures and the operating room of the future. Radiology 1997; 204:601–12.

- [49].Karmonik C, Elias SN, Zhang JY, et al. Augmented Reality with Virtual Cerebral Aneurysms: A Feasibility Study. World Neurosurg 2018; 119:e617–e22.

- [50].Grinshpoon A, Sadri S, Loeb GJ, Elvezio C, Siu S, Feiner SK. Hands-free augmented reality for vascular interventions. ACM SIGGRAPH Emerging Technologies. Vancouver, BC, Canada, 2018.

- [51].Mohammed MAA, Khalaf MH, Kesselman A, Wang DS, Kothary N. A Role for Virtual Reality in Planning Endovascular Procedures. J Vasc Interv Radiol 2018; 29:971–4.

- [52].Kwak JT, Hong CW, Pinto PA, et al. Is Visual Registration Equivalent to Semiautomated Registration in Prostate Biopsy? BioMed Research International 2015; 2015:7.

- [53].Kuhlemann I, Kleemann M, Jauer P, Schweikard A, Ernst F. Towards X-ray free endovascular interventions - using HoloLens for on-line holographic visualisation. Healthc Technol Lett 2017; 4:184–7.

- [54].Fotouhi J, Fuerst B, Lee SC, et al. Interventional 3D augmented reality for orthopedic and trauma surgery. Int Society for Computer Assisted Orthopedic Surgery, 2016.

- [55].Kim E, Ward TJ, Patel RS, Fischman AM, Nowakowski S, Lookstein RA. CT-guided liver biopsy with electromagnetic tracking: results from a single-center prospective randomized controlled trial. Am J Roentgenol 2014; 203:W715–23.

- [56].Martin C. Intra-procedural 360-degree Display for Performing Percutaneous Liver Tumor Ablation. NCT03500757. ClinicalTrials.gov. Online. Accessed 1 Nov 2018.

- [57].Das M, Sauer F, Schoepf UJ, et al. Augmented reality visualization for CT-guided interventions: system description, feasibility, and initial evaluation in an abdominal phantom. Radiology 2006; 240:230–5.

- [58].Wacker FK, Vogt S, Khamene A, et al. An augmented reality system for MR image-guided needle biopsy: initial results in a swine model. Radiology 2006; 238:497–504.

- [59].Abi-Jaoudeh N, Venkatesan AM, Van der Sterren W, Radaelli A, Carelsen B, Wood BJ. Clinical experience with cone-beam CT navigation for tumor ablation. J Vasc Interv Radiol 2015; 26:214–9.

- [60].Liu F, Cheng Z, Han Z, Yu X, Yu M, Liang P. A three-dimensional visualization preoperative treatment planning system for microwave ablation in liver cancer: a simulated experimental study. Abdom Radiol 2017; 42:1788–93.

- [61].Racadio JM, Nachabe R, Homan R, Schierling R, Racadio JM, Babic D. Augmented Reality on a C-Arm System: A Preclinical Assessment for Percutaneous Needle Localization. Radiology 2016; 281:249–55.

- [62].Barsom EZ, Graafland M, Schijven MP. Systematic review on the effectiveness of augmented reality applications in medical training. Surg Endosc 2016; 30:4174–83.

- [63].Gould DA. Interventional radiology simulation: prepare for a virtual revolution in training. J Vasc Interv Radiol 2007; 18:483–90.

- [64].Uppot RN, Laguna B, McCarthy CJ, et al. Implementing Virtual and Augmented Reality Tools for Radiology Education and Training, Communication, and Clinical Care. Radiology 2019:182210.

- [65].Huang CY, Thomas JB, Alismail A, et al. The use of augmented reality glasses in central line simulation: “see one, simulate many, do one competently, and teach everyone”. Adv Med Educ Pract 2018; 9:357–63.

- [66].Shenai MB, Dillavou M, Shum C, et al. Virtual interactive presence and augmented reality (VIPAR) for remote surgical assistance. Neurosurgery 2011; 68:200–7.

- [67].Wang S, Parsons M, Stone-McLean J, et al. Augmented reality as a telemedicine platform for remote procedural training. Sensors 2017; 17:2294.

- [68].Khor WS, Baker B, Amin K, Chan A, Patel K, Wong J. Augmented and virtual reality in surgery—the digital surgical environment: applications, limitations and legal pitfalls. Annals of Translational Med 2016; 4.

- [69].Deib G, Johnson A, Unberath M, et al. Image guided percutaneous spine procedures using an optical see-through head mounted display: proof of concept and rationale. J Neurointerv Surg 2018; 0:1–5.

- [70].Rosenthal M, State A, Lee J, et al. Augmented reality guidance for needle biopsies: an initial randomized, controlled trial in phantoms. Med Image Anal 2002; 6:313–20.

- [71].Unberath M, Fotouhi J, Hajek J, et al. Augmented reality-based feedback for technician-in-the-loop C-arm repositioning. Healthc Technol Lett 2018; 5:143–7.

- [72].Flexman M, Panse A, Mory B, Martel C, Gupta A. Augmented reality for radiation dose awareness in the catheterization lab. ACM SIGGRAPH. Los Angeles, California, 2017.

Supplementary Materials

Video 1. Surface-rendered (SR) 3D model of a right apical lung mass from a CT scan, displayed using Microsoft HoloLens (Redmond, WA) and custom software created in Unity (San Francisco, CA). Only surface mesh data from skin, bones, and tumor (red) are projected. Content within these surfaces is absent, which creates a hollow appearance. Note that the 2D video does not fully capture the 3D stereoscopic views seen with AR.

Video 2. Direct volume-rendered (DVR) 3D model of an apical lung mass from a CT scan, displayed using Microsoft HoloLens (Redmond, WA) and Medivis SurgicalAR (Brooklyn, NY). The entire dataset from the CT scan is projected, and all internal content is viewable. Rendering was performed locally on HoloLens. Occasional drops in frame rate are noticeable because HoloLens struggles with DVR computations. This can be overcome by rendering remotely on a more powerful machine and wirelessly streaming the content to HoloLens in real time. Note that the 2D video does not fully capture the 3D stereoscopic views seen with AR.

Video 3. AR ablation planning with 3D visualization of a surface-rendered model of a large, complex iliac bone metastasis and treatment coverage from multiple cryoablation probes with models of −40°C and −20°C isotherm ice balls. Five virtual probes are shown for demonstration purposes only. Note that the 2D video does not fully capture the 3D stereoscopic views seen with AR.