Abstract

Speakers show a remarkable tendency to align their productions with their interlocutors’. Focusing on sentence production, we investigate the cognitive systems underlying such alignment (syntactic priming). Our guiding hypothesis is that syntactic priming is a consequence of a language processing system that is organized to achieve efficient communication in an ever-changing (subjectively non-stationary) environment. We build on recent work suggesting that comprehenders adapt to the statistics of the current environment. If such adaptation is rational or near-rational, the extent to which speakers adapt their expectations for a syntactic structure after processing a prime sentence should be sensitive to the prediction error experienced while processing the prime. This prediction is shared by certain error-based implicit learning accounts, but not by most other accounts of syntactic priming. In three studies, we test this prediction against data from conversational speech, speech during picture description, and written production during sentence completion. All three studies find stronger syntactic priming for primes associated with a larger prediction error (primes with higher syntactic surprisal). We find that the relevant prediction error is sensitive to both prior and recent experience within the experiment. Together with other findings, this supports accounts that attribute syntactic priming to expectation adaptation.

Keywords: Structural persistence, Syntactic priming, Alignment, Adaptation, Implicit learning, Prediction error, Surprisal

1. Introduction

When we talk, we align with our conversation partners along various levels of linguistic representation. This includes decisions about speech rate and how we articulate sounds, as well as lexical and structural decisions. Here we focus on alignment of syntactic structure, also known as syntactic priming or structural persistence (Bock, 1986; Pickering & Branigan, 1998). Syntactic priming has received an enormous amount of attention in the psycholinguistic literature (for a recent overview, see Pickering & Ferreira, 2008). With respect to language production, which we will be concerned with here, syntactic priming refers to the increased probability of re-using recently processed syntactic structures. For example, comprehending a passive sentence (e.g., The church was struck by lightning) increases the probability of encoding the next transitive event with a passive rather than an active structure.

A large body of work has investigated under what conditions syntactic priming is observed. Thanks to this work, it is known that syntactic priming is observed in both spoken and written production and that it is observed independent of whether the prime was produced or comprehended (to name just two findings). What has emerged from this work is that priming effects are small but robust. Others have investigated what factors modulate the strength of syntactic priming – that is, the magnitude of the increase in the probability of re-using the syntactic structure of the prime. For example, a stronger priming effect is observed for target sentences with the same verb as the prime, compared to targets that do not overlap lexically with the prime (the ‘lexical boost’ effect, e.g., Hartsuiker, Bernolet, Schoonbaert, Speybroeck, & Vanderelst, 2008; Pickering & Branigan, 1998; Snider, 2008).

Considerably less is known about what causes syntactic alignment. Despite broad agreement on the significance of this question, relatively few studies have addressed it (e.g., Bock & Griffin, 2000; Chang, Dell, & Bock, 2006; Kaschak, 2007; Malhotra, 2009; Pickering, Branigan, Cleland, & Stewart, 2000; Reitter, Keller, & Moore, 2011). We explore the hypothesis that syntactic priming is a consequence of adaptation with the goal to minimize the expected prediction error experienced while processing subsequent sentences, thereby facilitating efficient information transfer (cf. Jaeger, 2010). This view owes intellectual debt to, and builds on, previous accounts of syntactic priming in terms of implicit learning (in particular, Chang et al., 2006; but also Bock & Griffin, 2000; Chang, Dell, Bock, & Griffin, 2000; Kaschak, 2007). We use the term adaptation or expectation adaptation as a mechanism-neutral term to refer to changes in the expectations or beliefs held by producers and comprehenders. By prediction error, we refer to the deviation between what is observed and expectations prior to the observation. In particular, we focus on the syntactic prediction error, the degree to which expectations for syntactic structures are violated during incremental language understanding.1 The minimization of future prediction errors – or, more cautiously, the maximization of utility, which usually entails the ability to reduce the prediction error – is broadly accepted to be one of the central functions of the brain (for a summary of relevant work, see Clark, in press).

In order to situate our approach to syntactic priming within a broader theoretical context, we begin by reviewing the role of prediction errors in language processing. This leads us to recent work on syntactic priming and adaptation in comprehension, and the question as to how comprehenders determine how much to adapt their expectations for future sentences whenever a prediction error is experienced. Once we have established this broader context, we discuss the consequences for syntactic priming during language production.

1.1. Prediction errors in language comprehension

The prediction error experienced while processing a word or sentence affects the processing difficulty associated with it. For example, the processing difficulty experienced when temporarily ambiguous sequences of words are disambiguated towards a specific interpretation (so-called ‘garden path’ effects) depends on how expected that interpretation was given the preceding context (e.g., Garnsey, Pearlmutter, Meyers, & Lotocky, 1997; Hare, McRae, & Elman, 2003; MacDonald, Pearlmutter, & Seidenberg, 1994; Trueswell, Tanenhaus, & Kello, 1993). Similarly, word-by-word processing difficulty during reading is a function of how expected the word is given preceding context (among other factors, e.g., DeLong, Urbach, & Kutas, 2005; Demberg & Keller, 2008; Levy, 2008; McDonald & Shillcock, 2003; Rayner & Duffy, 1988; Staub & Clifton, 2006).

Sensitivity to prediction errors is a natural consequence of a processing system that has developed to process language efficiently: expectations based on previous experience help to overcome the noisiness of the perceptual input and to deal efficiently with uncertainty about the incremental parse (see also Levy, 2008; Norris & McQueen, 2008; Smith & Levy, 2008). This assumes that comprehenders’ expectations closely match the actual statistics of the linguistic environment, thereby minimizing the expected prediction error. This assumption might be seen as in conflict with another well-known property of language: speakers differ with regard to their production preferences, including syntactic preferences (e.g., Tagliamonte & Smith, 2005; Weiner & Labov, 1983). Even within a speaker, syntactic preference can vary dependent on, for example, register (Finegan & Biber, 2001; Sigley, 1997). As a consequence, the actual linguistic distributions frequently change. From the comprehender’s perspective, linguistic distributions are thus subjectively non-stationary. Provided that differences in environment-specific statistics are sufficiently large, this implies that language understanding will be more efficient if comprehenders continuously adapt their syntactic expectations to match the statistics of the current environment (e.g., speaker-specific production preferences).

Indeed, there is evidence for such behavior, which we have dubbed expectation adaptation elsewhere (Fine, Jaeger, Farmer, & Qian, submitted for publication). One piece of evidence comes from the burgeoning literature on syntactic priming in comprehension. For example, recent exposure to a syntactic structure results in faster processing if the same structure is encountered again (e.g., Arai, van Gompel, & Scheepers, 2007; Traxler, 2008). That these effects are due to changes in expectations is confirmed by evidence from anticipatory eye-movements during language comprehension. In a visual world eye-tracking paradigm, Thothathiri and Snedeker (2008) find that listeners were biased to expect the most recently experienced structure to be used again. These studies provide evidence that the most recently experienced prime affects expectations for upcoming syntactic structure. Other experiments have found that comprehenders integrate, not only the most recent prime, but rather the cumulative recent experience, into environment-specific syntactic expectations (e.g., Fine, Qian, Jaeger, & Jacobs, 2010; Hanulłková, van Alphen, van Goch, & Weber, 2012; Kamide, 2012; Kaschak & Glenberg, 2004b). For example, consider the case of garden path sentences, which are associated with processing difficulty at the disambiguation point. As mentioned above, this processing difficulty is a function of how unexpected the disambiguated parse is. In self-paced reading experiments, Fine et al. (submitted for publication) found that this processing difficulty at the disambiguation point dissipates rapidly if the a priori unexpected parse is experienced frequently in the current environment. These studies also provide preliminary evidence that comprehenders’ adapted expectations actually converge against the statistics of the environment.

Findings like these suggest that comprehenders continuously adapt their expectations to match – or at least approximate – environment-specific statistics (in this case, the distribution of syntactic structures in an experiment). But how do comprehenders know how much to adapt their expectations at each moment in time? One important source of information is the prediction error. Generally, the larger the prediction error, the more there is a need to adapt one’s prior expectations (see also Courville, Daw, & Touretzky, 2006). This insight is, of course, incorporated in many models of learning, regardless of whether the prediction error is explicitly evoked in the learning algorithm (as in error-based learning, e.g., Elman, 1990; Rescorla & Wagner, 1972; Rumelhart, Hinton, & Williams, 1986) or not (as in other forms of supervised learning, such as Bayesian belief update, or unsupervised learning). If our perspective on expectation adaptation during language comprehension is correct, the degree to which comprehenders adapt their syntactic expectations after exposure to a prime sentence should be a function of the prediction error they experienced while processing that prime.2

We are not the first to make this prediction. In their seminal paper, Chang et al. (2006) present a connectionist model of language acquisition that shares our prediction. The primary purpose of their model is to account for the acquisition of syntax from sequences of words. In their model, the language comprehension and production systems are structured networks of simple recurrent networks (Elman, 1990). Syntactic acquisition is achieved by error-based implicit learning: during comprehension learners predict the next word, and the deviation between the comprehenders expectations and the actually observed word (i.e., the prediction error) serves as an error signal that is used to adjust the weights in the network (Chang et al., 2006, p. 270; via backpropagation, Rumelhart et al., 1986). Henceforth, we will refer to this as the error-based model.

Syntactic priming follows from the error-based model with just the additional assumption that the same error-based learning processes operating during acquisition continue to operate throughout adult life (see also Botvinick & Plaut, 2004; Plaut, McClelland, Seidenberg, & Patterson, 1996). As we detail below, this also predicts that the strength of syntactic priming should be a function of the prediction error experienced while processing the prime (Chang et al., 2006, p. 255). The error-based model thus shares this prediction with the perspective advanced here, that syntactic priming is a consequence of adaptation with the goal to minimize the expected prediction error. In the discussion, we review important conceptual differences between the approaches as well as the predictions they make for future work. For now, we focus on their shared prediction.

1.2. Prediction errors and syntactic priming

Preliminary support for this prediction comes from syntactic priming in comprehension: a re-analysis of Thothathiri and Snedeker (2008) discussed above found that syntactic primes with larger prediction errors result in bigger changes in expectations following the prime (Fine & Jaeger, in press). Here, we turn to production.

Prima facie, speakers could contribute to mutual expectation alignment by aligning their production preferences with (their beliefs about) their interlocutors’ expectations. Such alignment can contribute to the minimization of the joint future prediction error, thereby facilitating both faster information transfer (Levy, 2008; Levy & Jaeger, 2007) and more efficient information transfer (Aylett & Turk, 2004; Jaeger, 2006; Jaeger, 2010). Specifically, if interlocutors’ production preferences are at least in part reflective of their expectations in comprehension, speakers should adapt their production preferences based on the prediction error they experienced while comprehending their interlocutors’ utterances. Although the error-based model is not typically described in these terms, it describes an architecture that essentially achieves this type of mutual expectation alignment.

In the error-based model, comprehension and production share the same sequencing system (Chang et al., 2006, pp. 238–239). This system generates predictions about word sequences. In comprehension, these predictions contribute to the expectations speakers have during word-by-word sentence understanding. In production, they affect which word is produced next.3 An elegant consequence of this – which to the best of our knowledge has not been discussed before – is that syntactic priming in production contributes to the reduction of interlocutors prediction error during comprehension (and hence to the processing difficulty experienced by interlocutors): not only would comprehenders adapt their expectations to match the interlocutor’s production preferences, but they would also adjust their own production preferences so as to more closely resemble the expectations of their interlocutor.

This means that both producers and comprehenders would be contributing to mutual expectation alignment (see also Pickering & Garrod, 2004 although the current account is considerably more specific), thereby contributing to the minimization of the joint effort experienced by interlocutors (cf. Clark, 1996). Among other things, the perspective outlined here also offers an explanation as to why syntactic alignment between interlocutors seems to facilitate both faster production (Ferreira, Klein-man, Kraljic, & Siu, 2011) and better communication (Reitter & Moore, 2007) – because it reduces the prediction error experienced during both production and comprehension.

This brings us to the prediction we aim to test: the relative increase in the strength of syntactic priming should increase as a function of the prediction error experienced while processing the prime structure. This prediction is in principle compatible with other implicit learning accounts that commit neither to specific forms of learning nor to specific aims of learning (e.g., Bock & Griffin, 2000; Kaschak, 2007). Competing accounts that take syntactic priming to be solely a consequence of short-term activation boosts of recently processed representations do not share this prediction (e.g., Dubey, Keller, & Sturt, 2008; Kaschak & Glenberg, 2004a; Pickering & Branigan, 1998). A recently proposed third type of account, hybrid accounts, that evoke both short-term activation boosts and unsupervised implicit learning mechanisms, require additional assumptions to accommodate our prediction (Reitter et al., 2011). In the interest of brevity, we postpone the discussion of these accounts to the general discussion.

Preliminary evidence in line with the prediction that syntactic priming in production is sensitive to the prediction error comes from the observation that less frequent structures tend to prime more strongly (the ‘inverse frequency’ or ‘inverse preference’ effect, e.g., Bock, 1986; Ferreira, 2003; Hartsuiker & Kolk, 1998; Kaschak, Kutta, & Jones, 2011; Scheepers, 2003). For example, English passives, which are hugely less frequent than active structures, elicit reliable syntactic priming whereas active structures exhibit almost no detectable priming effect (Bock, 1986). Since frequency tends to be associated with processing times (e.g., Rayner & Duffy, 1988), it is natural to assume that frequency is inversely correlated with the prediction error experienced while processing a structure. Under this assumption, the inverse frequency effect provides evidence that syntactic priming is sensitive to the prediction error.

The inverse frequency effect is, however, only a weak test of the prediction that we are interested in. If language processing is indeed inescapably tied to learning, the relevant prediction error should be context-dependent: there is broad agreement that the processing difficulty associated with a word or structure is affected by its contextual predictability (e.g., Altmann & Kamide, 1999; DeLong et al., 2005; Garnsey et al., 1997; Kamide, Altmann, & Haywood, 2003; MacDonald et al., 1994; McDonald & Shillcock, 2003; Staub & Clifton, 2006; Trueswell et al., 1993). Some even hold that contextual expectations are the primary source of processing difficulty compared to frequency effects (Smith & Levy, 2008).

Hence, the prediction made here and by the error-based model implies that the strength of syntactic priming in language production is a function of the prediction error given context-dependent expectations based on both prior and recent experience. We test these predictions against data from conversational speech and experiments on written as well as spoken language production. The first of these two predictions (the effect of prediction errors based on prior experience) is also investigated in a recent study on the Dutch ditransitive alternation by Bernolet and Hartsuiker (2010). We return to their results below.

1.3. Overview of studies

The studies presented below investigate syntactic priming in the dative alternation (also called ditransitive alternation, e.g., Bresnan, Cueni, Nikitina, & Baayen, 2007). In the dative alternation, speakers choose between two near-meaning equivalent syntactic variants, as exemplified in (1a) and (1b). In the double object (DO) variant the verb (give below) is followed by two noun phrase arguments, with the recipient argument (a country) preceding the theme argument (money). In the prepositional object (PO) variant, the dative verb is followed by the theme and then a prepositional phrase, the argument of which is the recipient noun phrase.

| (1) |

|

Study 1 investigates the effect of the prime’s prediction error on the strength of syntactic priming in conversational speech (based on data from Bresnan et al. (2007) and Recchia (2007)). Study 2 investigates syntactic priming due to written primes in written sentence completion (based on data from Kaschak (2007) and Kaschak & Borreggine (2008)). Study 3 investigates syntactic priming due to spoken primes in spoken picture description. Studies 1 and 2 employ estimates of the prediction error based on the prior ‘average’ language experience that speakers have before entering the context in which we assess syntactic priming. These studies hence follow the majority of previous work in employing estimates of prior language experience that are neither individualized nor sensitive to the statistics of the current environment (for some exceptions, see Fine & Jaeger, 2011; Fine et al., submitted for publication; MacDonald & Christiansen, 2002; Wells, Christiansen, Race, Acheson, & MacDonald, 2009). In this sense, these studies assess the effect of the prediction error based on prior experience.

However, if there is indeed life-long continuous adaptation, we would expect the prediction error associated with a syntactic prime to depend on all experience prior to a sentence, including, in particular, recent experience with the statistics of the current environment. For example, in the error-based model the same structure might be associated with different prediction errors, depending on what has recently been processed, since everything that is processed leads to changes in the model’s weights. Given that expectation adaptation in comprehension seems to be rather rapid (Fine et al., submitted for publication; Kaschak & Glenberg, 2004b), changes to the prediction error might be detectable within a single experiment. If so, it should be possible to detect changes to the strength of syntactic priming based on recent experience within an experiment. Study 3 tests this prediction by manipulating the sequential distribution of syntactic structures participants are exposed to during the experiment, thereby manipulating the prediction error based on recent experience. To the best of our knowledge, this is the first study that investigates effects on syntactic priming as a function of the prediction error based on recent experience.

1.4. Operationalizing the prediction error

Before we describe the experiments, one last piece of background information is necessary. In order to assess the effect of prediction errors on syntactic priming, we need to operationalize the prediction error. Here, we estimate the prediction error associated with processing a prime as the surprisal of the prime structure (see also Jaeger & Snider, 2008; Malhotra, 2009, p. 185). The surprisal of a linguistic unit (e.g., a word or syntactic structure) in context is equivalent to the amount of Shannon information it adds to the preceding context, (Shannon, 1948). As should be the for a measure of prediction error, surprisal is larger, the less expected the encountered word or structure is given previous experience. Specifically, a prime’s surprisal is 0 when its structure is perfectly expected (p(unit|context) = 1), and increases the less expected the structure. We chose surprisal rather than other measures of ‘unexpectedness’ because surprisal has been found to be a good predictor of word-by-word processing times in self-paced reading and eye-tracking reading experiments (Smith & Levy, 2008; see also Alexandre, 2010; Boston, Hale, Kliegl, Patil, & Vasishth, 2008; Demberg & Keller, 2008; Frank & Bod, 2011).

Interestingly, surprisal as an estimate of the prediction error also follows naturally from the error-based model. In the words of Chang and his colleagues, the prediction error associated with comprehending a word is calculated as follows:

The word … units used a soft-max activation function, which passes the activation of the unit through an exponential function and then divides the activation of each unit by the sum of these exponential activations for the layer. … Error on the word units was measured in terms of divergence where oi is the activation for the i output unit on the current word and ti is its target activation because of the soft-max activation function. (Chang et al., 2006, p. 270)

Although Chang and colleagues describe the prediction error with reference to activation, the distribution of relative exponentiated activation over word units at each point in time also characterizes a probability distribution (each value falls in [0, 1] and the values sum to 1). With this in mind, it becomes apparent that the error signal employed in the error-based model is the Kullback–Leibler divergence or relative entropy between the desired – i.e., actually observed – output distribution over words in the mental lexicon (which is 1 for the actual word and 0 for all other words) and the distribution of word probabilities given previous experience. This is, of course, no coincidence since the relative entropy is a measure of the divergence between two probability distributions and hence constitutes a rational choice for the prediction error that is sought to be minimized via backpropagation (see also MacKay, 2003, chap. 5).

Since ti is 0 for all but the actual word, the equation for the error signal reduces to , where oi is the networks estimate of p(word|previousexperience). Hence, the error used to adjust the network’s weights after processing a word is identical to the word’s surprisal given the network’s weights before encountering the word. In the general discussion, we will return to the relation between our operationalization of the prediction error and the error signal used in the error-based model.

2. Study 1: Prediction error and syntactic priming in conversational speech

Study 1 assesses the effect of a prime’s prediction error on the strength of syntactic priming against data from the dative alternation in spontaneous speech that was generously provided by Bresnan et al. (2007). As an example, take a verb like owe, which is highly biased towards DO:

| (2) | …you don’t owe your city anything… |

| (3) | …you owe that back to God … |

The prediction we are testing is that processing a prime structure that is unexpected given the prime’s verb, as in (3), will make speakers more likely to produce the structure later (in the target) than if the prime’s verb is biased towards the structure, as in (2).

2.1. Methods

2.1.1. Data

The database provided by Bresnan et al. (2007) contains 2,349 instances of the dative alternation from the full Switchboard corpus (about 2 million words, Godfrey, Hol-liman, & McDaniel, 1992). Since we were interested in how the prediction error associated with a prime affects the strength of syntactic priming, we excluded all cases without preceding primes (i.e., the first dative in each conversation). This reduced the database to 1249 tokens. We further removed all cases with verbs for which it was unclear whether they participate in the dative alternation (i.e., for which the verb occurred fewer than 5 times in either the PO or DO). This left a total of 1007 target productions (and 25 target verb types, of the original 38 in the database), comprising 234 PO and 773 DO structures.

2.1.2. Estimating the prediction error (prime surprisal)

Prime surprisal was estimated as the negative log of the conditional probability of the prime structure given the verb: for DO primes, the surprisal is −log2 p(DO|verb); for PO primes, the surprisal is −log2 p(PO|verb). Hence, the larger the prime surprisal is, the greater the difference between the expected structure (given the verb) and the observed structure in the prime. A large number of studies have shown that comprehenders are sensitive to verb subcategorization biases and these biases affect what structure comprehenders expect to follow the verb (e.g., Garnsey et al., 1997; MacDonald, Bock, & Kelly, 1993; Trueswell et al., 1993; specifically, for the dative alternation, see Brown, Savova, & Gibson, 2012).

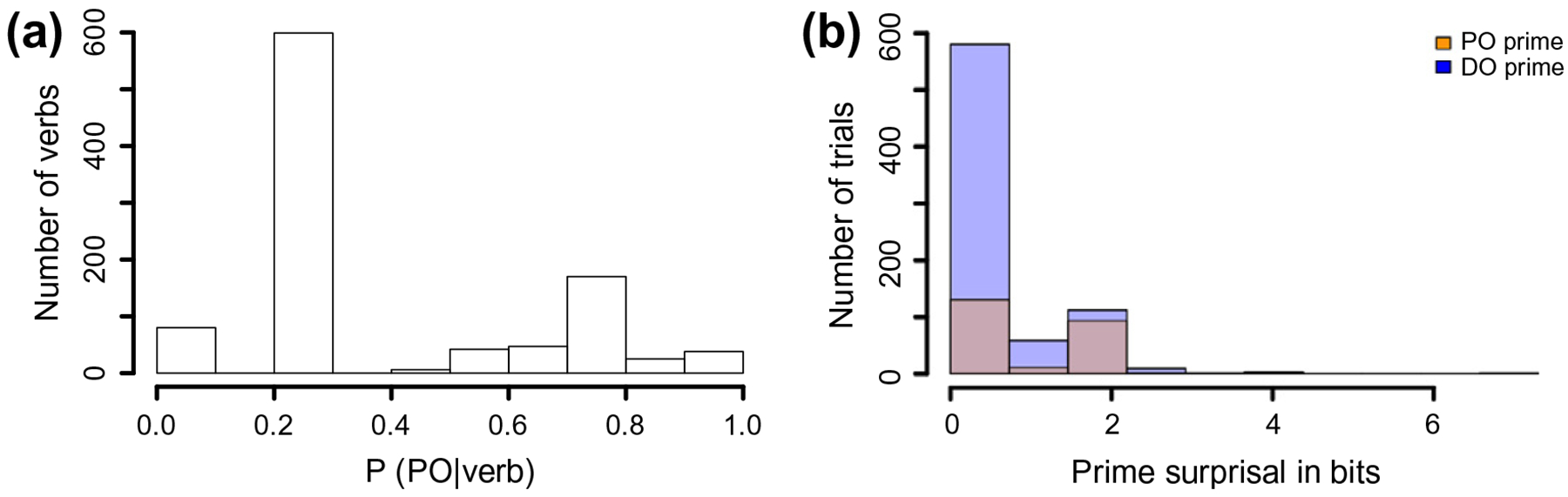

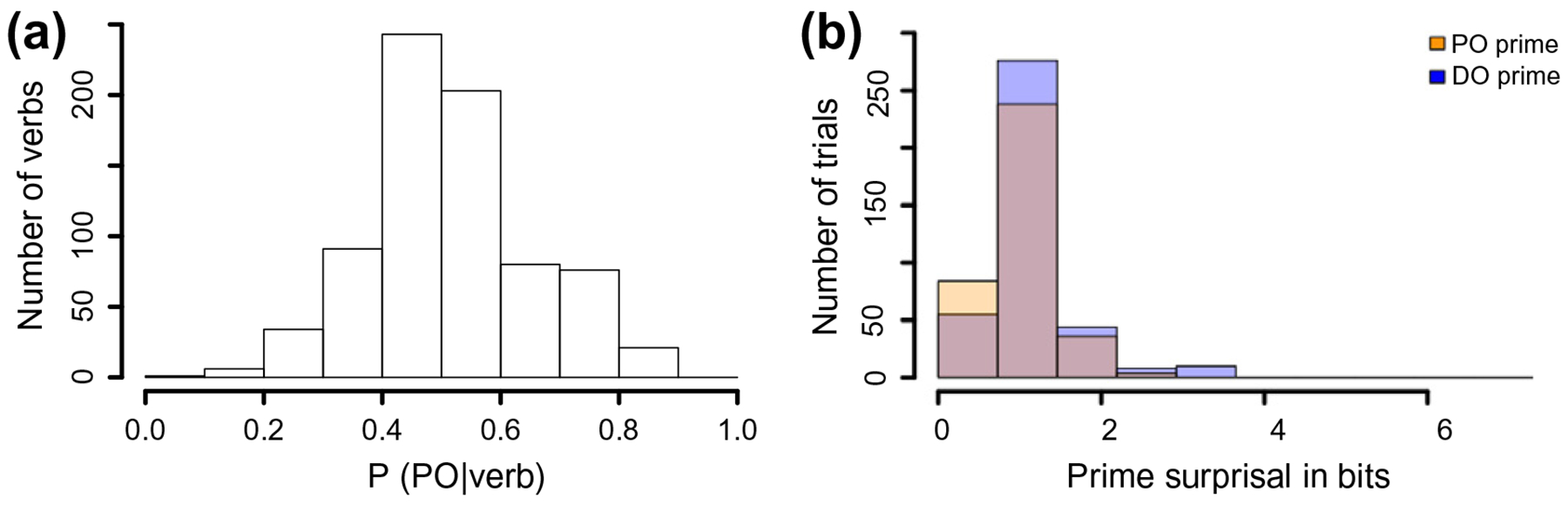

Our estimates of subcategorization frequencies of the prime verbs were based on the full Switchboard corpus. Hence, prime surprisal in Study 1 is based on an estimate of the average prior language experience of the speakers recorded in the Switchboard corpus. The database contains a range of verb biases, as attested in Fig. 1a. Fig. 1b shows the distribution of prior prime surprisal based on the actual prime structures occurring in the database. Note that most primes had rather low surprisal values. This unsurprising since the primes for Study 1 came from conversational speech, rather than a balanced psycholinguistic experiment: in real life, verbs most often occur with the structures that they are biased towards.

Fig. 1.

Histograms of prime verbs’ PO biases (a) and prior prime surprisal (b) in Study 1. Note that verb biases are independent of the actual prime structure (PO or DO), whereas prime surprisal is the surprisal of the prime’s structure given the prime’s verb bias.

2.1.3. Analysis

In a mixed logit regression (for an introduction, see Jaeger, 2008), we analyzed the occurrence of PO vs. DO structures based on the prior prime surprisal associated with the most recent prime, while controlling for (a) effects on speakers’ preferences in the dative alternation found in previous work (Bresnan et al., 2007; Gries, 2005), (b) additional predictors specific to syntactic priming (added to this database by Recchia (2007)).

We included twelve properties of the target sentence as control predictors based on previous work by Bresnan et al. (2007) and Gries (2005). These are listed in the first block of Table 1. Bresnan and colleagues found that the accessi bility and complexity of both the theme and the recipient expression affect speakers’ preferences, with more accessible and less complex phrases usually being ordered before less accessible and more complex phrases (see also Arnold, Wasow, Losongco, & Ginstrom, 2000; Bock & Warren, 1985). Beyond properties of the theme and recipient, both the semantic class of the target verb and the target verb’s subcategorization bias (here operationalized as the log-odds of a PO structure given the verb in the Switchboard corpus) were found to account for unique proportions of the variance in predicting speakers’ preferences (Bresnan et al., 2007; Gries, 2005). Here we modeled semantic class as a binary distinction, indicating whether the dative verb in the target sentence was a ‘prevention of possession’ verb (as in “cost/deny the team a win”) or not.4

Table 1.

Summary of Study 1 results (c = centered, r = residualized; see text for details). For each effect, we report the coefficient estimate (in log-odds and odds), its standard errors and two tests of significance: Wald’s Z statistic, which tests whether the coefficients are significantly different from zero (given the estimated standard error), as well as the χ2 over the change in data likelihood, Δ(−2Λ), associated with the removal of the unresidualized predictor from the final model. Finally, we report the partial Nagelkerke R2 as a measure of effect size (cf. Jaeger, 2010, footnote 2).

| Predictor (independent variable) | Parameter estimates | Wald’s test | Δ(−2Ʌ)-test | Partial pseudo-R2 | ||||

|---|---|---|---|---|---|---|---|---|

| Log-odds | S.E. | Odds | Z | pz | χ2 | p | ||

| Properties of target sentence | ||||||||

| Theme pronominal (r) | 2.25 | 0.39 | 9.5 | 5.7 | ≪0.001 | 18.2 | ≪0.001* | 0.061 |

| Theme given (r) | 1.32 | 0.45 | 3.7 | 2.9 | <0.005 | 13.8 | ≪0.001* | 0.047 |

| Theme indefinite | −2.41 | 0.41 | 0.1 | −5.9 | ≪0.001 | 35.6 | ≪0.001* | 0.112 |

| Theme singular | −0.83 | 0.38 | 0.4 | −2.2 | <0.05 | 5.6 | <.05* | 0.017 |

| Recipient pronominal | −0.54 | 0.50 | 0.6 | −1.1 | >0.2 | 1.2 | >0.2 | 0.002 |

| Recipient given (r) | −2.53 | 0.58 | 0.1 | −4.4 | ≪0.001 | 24.9 | ≪0.001* | 0.081 |

| Recipient indefinite | 0.27 | 0.53 | 1.3 | 0.5 | >0.6 | 0.3 | >0.6 | 0.001 |

| Recipient inanimate | 3.29 | 0.58 | 26.8 | 5.7 | ≪0.001 | 33.4 | ≪0.001* | 0.104 |

| Recipient third person | 0.39 | 0.41 | 1.5 | 0.9 | >0.3 | 1.1 | >0.3 | 0.004 |

| Log argument length difference | −2.55 | 0.23 | 0.1 | −10.9 | ≪0.001 | 66.7 | ≪0.001* | 0.193 |

| Verb class = ‘prevention of possession’ | −3.75 | 2.50 | 0.1 | −1.6 | >0.11 | 2.4 | >0.12 | 0.017 |

| Target verb bias (log-odds) | 4.45 | 0.63 | 85.6 | 6.9 | ≪0.001 | 117.3 | ≪0.001* | 0.302 |

| Basic priming effects | ||||||||

| PO prime (c) | 0.56 | 0.33 | 1.8 | 1.7 | <0.1 | 3.2 | <0.1 + | 0.004 |

| Cumulative PO primes | 0.29 | 0.23 | 1.3 | 1.2 | >0.2 | 1.8 | >0.2 | 0.005 |

| Prime-target verb identity (c) | 0.01 | 0.34 | 1.0 | 0.1 | >0.9 | 1.4 | >0.3 | 0.004 |

| Log prime-target distance (c,r) | 0.02 | 0.10 | 1.0 | 0.2 | >0.8 | 0.2 | >0.9 | 0.001 |

| PO prime × verb identity (r) | 1.04 | 0.68 | 2.8 | 1.6 | >0.10 | 2.6 | >0.10 | 0.009 |

| PO prime × distance (r) | −0.17 | 0.21 | 0.8 | −0.4 | >0.4 | 1.4 | >0.4 | 0.002 |

| PO prime × verb identity × distance (r) | −0.24 | 0.43 | 0.8 | −0.8 | >0.5 | 0.4 | >0.5 | 0.001 |

| Effect of prime’s prediction error | ||||||||

| Prior prime surprisal given the prime verb (c,r) | −0.07 | 0.20 | 0.9 | 0.4 | >0.7 | 0.2 | >0.6 | 0.001 |

| PO prime × prior prime surprisal (r) | 0.81 | 0.40 | 2.2 | 2.0 | <0.05 | 4.3 | <0.05* | 0.012 |

Seven additional control predictors were included to account for effects of syntactic priming identified in previous work. These controls are shown in the second block of Table 1. This includes the basic effect of syntactic priming (i.e., whether the most recent prime was a PO or DO structure) and the cumulative effect of all preceding primes (Kaschak, 2007; Kaschak, Loney, & Borreggine, 2006). Since previous work has found stronger priming effects if the prime and target share a verb lemma (the ‘lexical boost’, e.g., Hartsuiker et al., 2008; Pickering & Branigan, 1998; Szmrecsányi, 2005), both the main effect of verb repetition and its interaction with the prime structure were included. Similarly, we included the distance to the most recent prime (in words) and its interaction with the prime structure to control for potential decays in the strength of the priming effect (Reitter, Moore, & Keller, 2006). Finally, we included the 3-way interaction since recent work suggests that the lexical boost on syntactic priming, but not syntactic priming itself, decay over time (Hartsuiker et al., 2008). The model also contained a by-speaker random intercept, to control for individual differences in speakers’ preference for PO over DO structures.

Here and in all studies reported below, all predictors were centered and all interactions were residualized against their main effects in order to reduce collinearity in the models. Additionally, prime-target distance was residualized against verb identity since the two predictors were correlated. Prime surprisal was residualized against the main effect of prime structure. In the analysis reported below, all fixed effect correlation rs < 0.25. We report the full model, which is generally recommendable whenever one has sufficient data (for guidelines, see references in Jaeger, 2011) because of the inherent problems of stepwise predictor removal (for a concise overview, see Harrell, 2001). Removing predictors that have p > 0.7 (a frequently proposed threshold, see Harrell, 2001) does not change the results of any of our studies.

2.2. Predictions

Under the assumption that the prediction error experienced while processing a prime can be approximated as prime surprisal given the prime’s verb, we predict that more surprising primes should lead to larger priming effects.

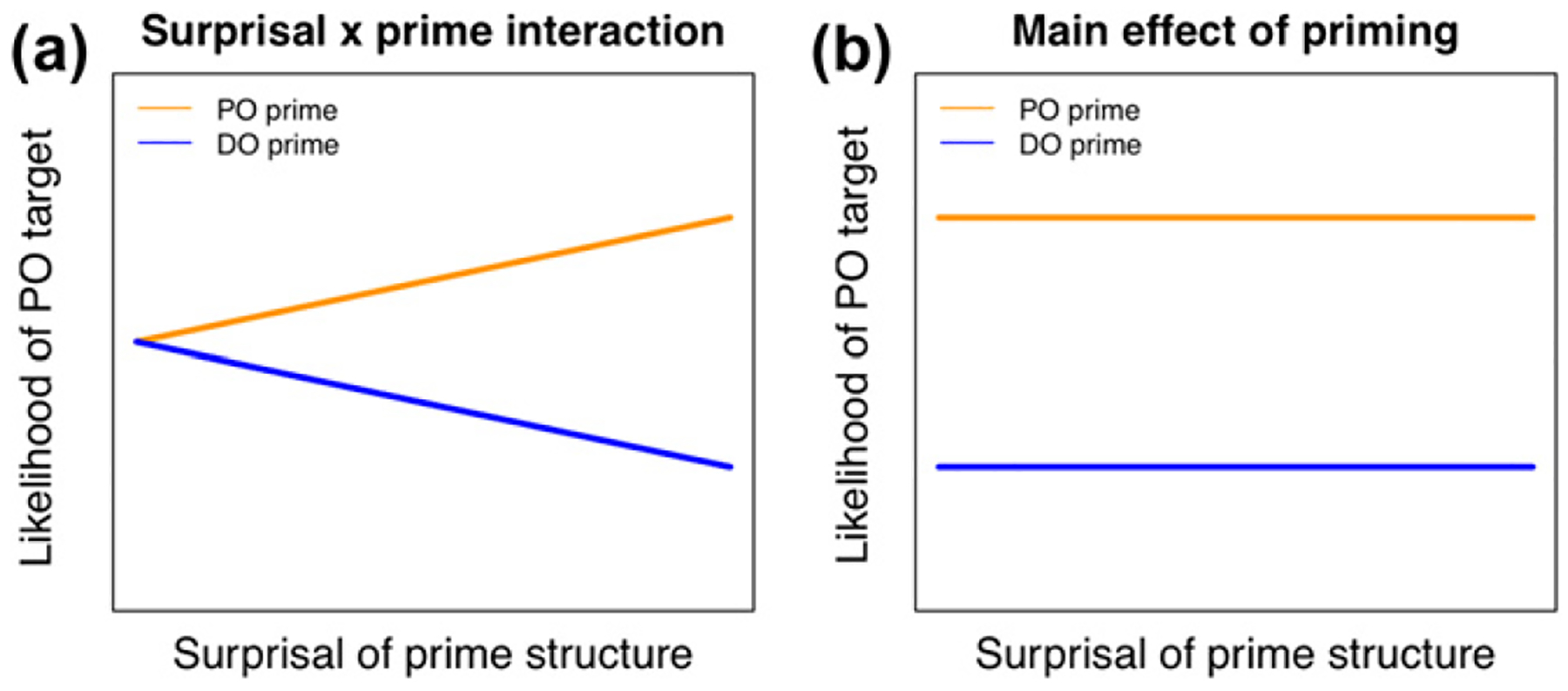

Specifically, we predict an interaction between the prime structure (PO vs. DO) and prime surprisal given the verb (−log2 p(PO|verb) for PO primes and −log2 p(DO|verb) for DO primes). Fig. 2a illustrates this prediction. For PO primes, the PO structure should be more likely to be produced in the target the more surprising (less expected) the PO prime was. This is illustrated by the positive slope for a PO prime in Fig. 2a. Conversely, for DO primes, DO targets should be more likely the more surprising it was to see a DO prime. This is illustrated by the negative slope in Fig. 2a. Activation-boost accounts, on the other hand, do not predict any effect of prime surprisal (Fig. 2b).

Fig. 2.

Illustration of the predicted interaction of prime structure and prime surprisal (a) as contrasted with the main effect of prime structure (b) expected if syntactic priming is unaffected by the prediction error.

2.3. Results

Table 1 summarizes the effects of control predictors and the predictors of interest, both of which we discuss below. Coefficients are given in log-odds (the space in which logit models are fitted to the data). Significant positive coefficients indicate increased log-odds (and hence increased probabilities) of a PO structure in the target sentence.

2.3.1. Non-priming controls

As shown in the first block of Table 1, all control predictors replicated previous findings, with effects pointing in the same direction as in Bresnan et al. (2007): more accessible and less complex themes favored the PO structure, whereas more accessible and less complex recipients favored the DO structure. Verbs that overall favor the PO structure did so also in our sample. The coefficients as well as significance levels of all control predictors are only minimally different from Bresnan et al. (2007).

2.3.2. Basic priming effects

The second block of rows of Table 1 summarize the priming-related controls. There was a marginally significant main effect of priming (pz < 0.1). There was no main effect of prime-target distance (pz > 0.9), nor a main effect of repeating the verb in the prime (pz > 0.7). There also was no main effect of the cumulative proportion of PO in the dialogue (pz > 0.1). None of the interactions reached significance (pzs > 0.1).

2.3.3. Prior prime surprisal

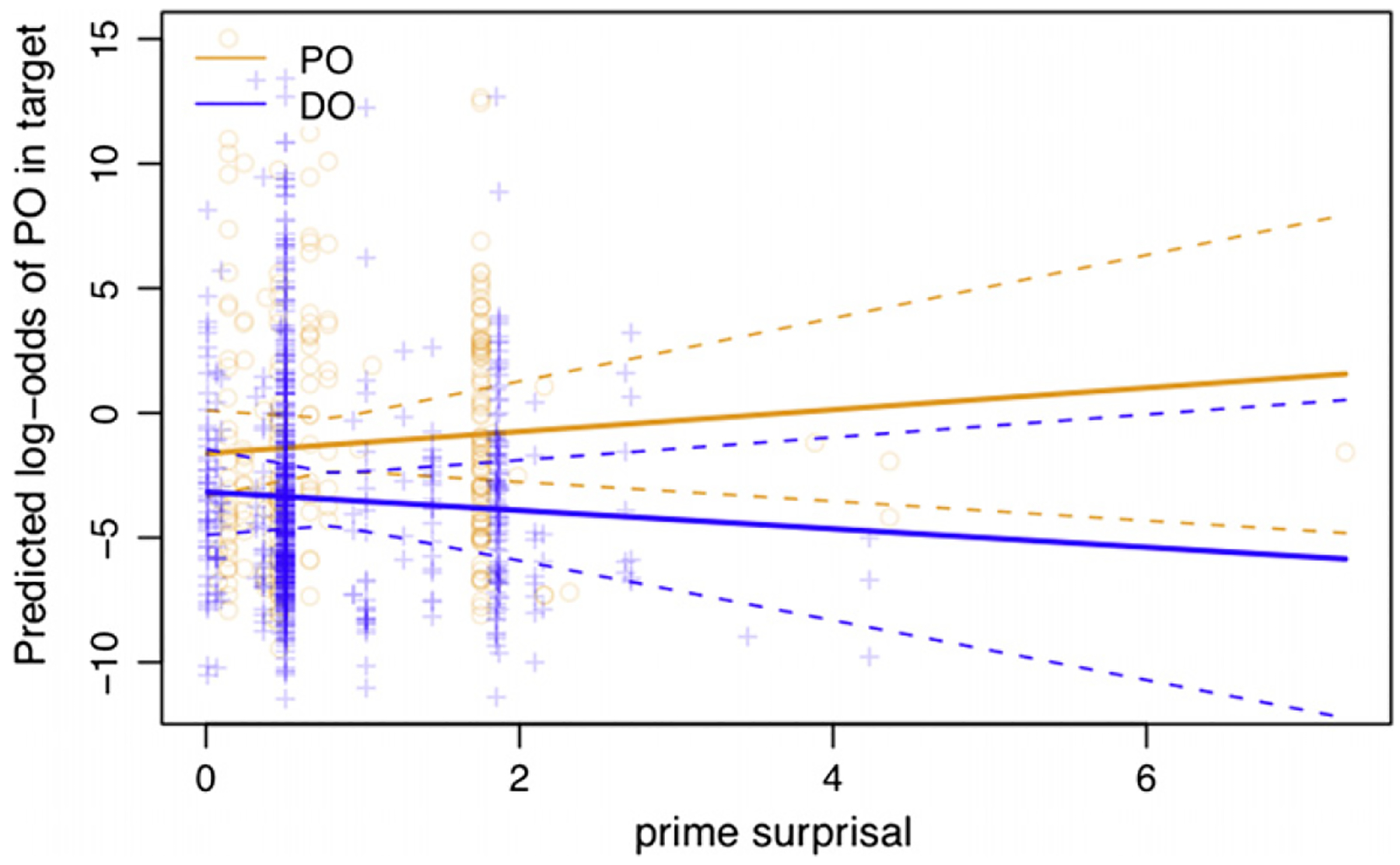

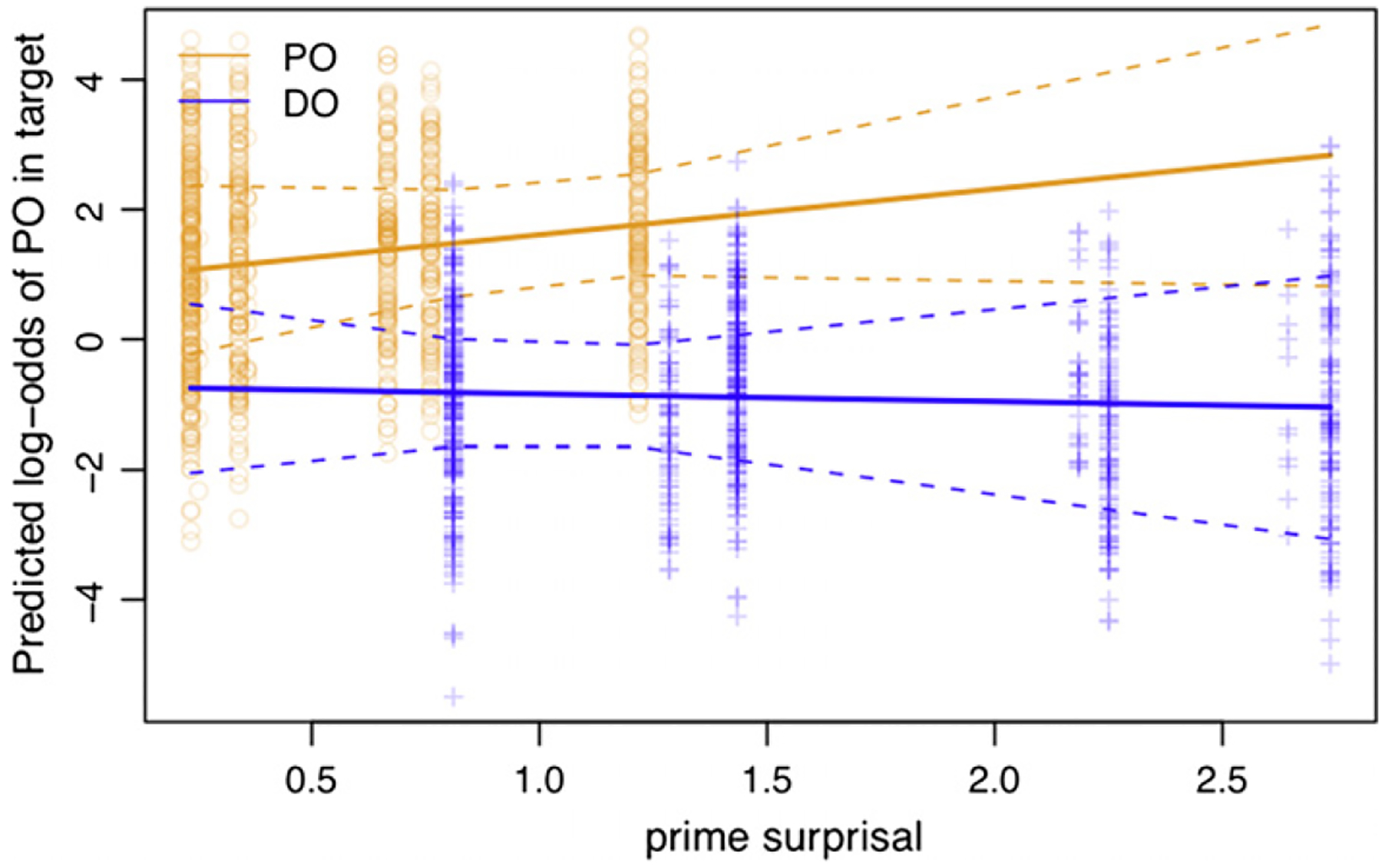

As predicted, we found a significant interaction between the prime structure and prior prime surprisal given the verb (pz < .05). There was no main effect of prior prime surprisal (pz > 0.6). The positive interaction indicates that the slope of the surprisal effect in PO primes is significantly greater than the slope of surprisal in DO primes. The interaction is illustrated in Fig. 3, which reflects the predictions illustrated in Fig. 2. Surprisal has a positive trend in PO primes (the more surprising a PO prime, the more likely the target is to be a PO), but a negative trend in DO primes (the more surprising the DO structure, the more likely it is to be repeated, which means a PO is less likely in the target). A simple effect analysis revealed that the effect of surprisal was significant for PO primes (pz < .05). The simple effect of surprisal for DO primes did not reach significance, although the numerical effect had the expected negative trend (pz > 0.2).

Fig. 3.

Interaction between prior prime surprisal (in bits) and priming in Study 1. Circle and crosses indicate the log-odds for PO targets according to the model. Orange circles represent PO primes, and blue crosses represent DO primes. Dotted curves represent the 95% confidence intervals of the slopes.

2.4. Discussion

We find that, the more surprising a prime structure is given the subcategorization bias of the prime’s verb, the more likely this structure is to be repeated in the target. This sensitivity of syntactic priming to the prediction error is predicted by the hypothesis that syntactic priming is a consequence of adaptation with the goal to minimize the expected future prediction error. It also follows from the error-based model (Chang et al., 2006) and is broadly compatible with less specific implicit learning accounts (e.g., Bock & Griffin, 2000; Chang et al., 2000). Short-term activation boost accounts do not predict this effect (Pickering & Branigan, 1998).

Interestingly, the simple effect of surprisal reached significance only for PO primes, but failed to reach significance for DO primes. It is theoretically possible that this is due to a floor effect for DO primes due to the fact that DO target structures were overall more frequent in the database (773 DOs vs. 234 POs; see also Fig. 3, where the overall the log-odds of a PO structure are smaller than 0). However, Studies 2 and 3 reported below argue against this interpretation.

Another explanation of the observed asymmetry is that priming effects are overall weaker for DO structures, perhaps because DO structures are more frequent (cf. the inverse-frequency effect, Hartsuiker & Kolk, 1998; Hartsuiker & Westenberg, 2000; Kaschak, 2007; Scheepers, 2003). Kaschak (2007) found weaker priming effects for DO than PO primes in a series of production experiments. To test whether the same holds for the current data, we examined the main effect of prime structure against the entire data set, including unprimed turns (2349 target tokens). This provides a baseline against which to compare both DO and PO primes. Replicating Kaschak (2007) for the Switchboard corpus, we found that PO priming is stronger (pz > 0.01) than DO priming – in fact, the simple effect of priming is non-significant for DO primes (pz > 0.6). It is hence possible that the failure to find a significant simple effect of prime surprisal for DO primes is due to the overall insignificance of DO priming. We will return to this question below, since – to anticipate the results of our remaining studies – we find the same asymmetry between PO and DO priming in Studies 2 and 3.

We find no evidence that syntactic priming decays (replicating Bock & Griffin, 2000; Bock, Dell, Chang, & Onishi, 2007; Snider, 2008; but unlike Branigan, Pickering, & Cleland, 1999; Reitter et al., 2006). The speakers in the corpus were equally likely to repeat dative structures used previously in the conversation no matter how many words had been subsequently uttered in the conversation. This is remarkable considering the median prime-target distance was 148 words, with a maximum distance of 2000 words.

Contrary to the prediction of some implicit learning accounts, we found no cumulative effect of primes beyond the most recent prime (Bock & Griffin, 2000; Kaschak, 2007). This is most likely due to the fact that there was little variation in the number of primes preceding target structures: the median number of datives per dialogue in our database is 2. Studies 2 and 3, where we find clear cumulative effects of syntactic priming, support this interpretation.

Finally, the interaction between prime structure and verb repetition did not reach significance, though it was near-marginal and in the predicted direction (pz = 0.11). We hypothesize that the distribution of verb lemmas across primes did not provide sufficient power: while verb repetition between prime and target was common in our database (46.7% of tokens), 74.5% of the verb repetitions involve the verb give, leaving only 120 cases with 17 types of repeated verbs. This made it difficult to distinguish between effects of the target verb bias and effects of verb repetition.

3. Study 2: Prediction error and syntactic priming in written production

Study 1 provides evidence from conversational dialogue data that larger prediction errors while processing the syntactic structure of the prime lead to larger syntactic priming effects. Study 2 extends our investigation to written production. We test whether the effect of prior prime surprisal on prime strength is also observed under more controlled conditions in laboratory production experiments. We present a meta-analysis of data from three priming experiments generously provided by Mike Kaschak and colleagues (Kaschak, 2007; Kaschak & Borreggine, 2008).

3.1. Methods

3.1.1. Data

The data set consisted of 4508 prime and 1703 target trials from three production experiments on syntactic priming (a total of 392 participants and 18 target items). All experiments employed written sentence completion: participants completed a partial sentence presented on a computer by typing into a text box. Prime stimuli contained a subject, verb, and either an animate recipient (to induce a DO) or an inanimate theme (to induce a PO). Targets contained only a subject and verb to allow for either PO or DO structures to be produced. All non-dative target completions were excluded from the analysis.

The design of the experiments is summarized in Table 2. The three experiments were designed to investigate cumulative priming. During an exposure phase, participants saw 16–20 primes, which consisted of 100%/0%, 75%/25%, 50%/ 50%, 25%/75%, or 0%/100% PO and DO primes, respectively. The cumulative effect of these primes was assessed in the test phase. The test phase of Experiment 1 from Kaschak and Borreggine (2008) consisted of 10 prime-target pairs, while the test phases of Experiments 1 and 2 from Kaschak (2007) consisted only of target completions (6 and 12 completions, respectively).

Table 2.

Summary of experiments included in the meta-analysis for Study 2.

| Kaschak and Borreggine (2008) Expt. 1 | Kaschak (2007) Expt. 2 | Kaschak (2007) Expt. 1 | ||

|---|---|---|---|---|

| Training phase | 100%D0/0%P0 or | 100%D0/0%P0 or 20 | ||

| 0%D0/100%P0 or | 0%D0/100%P0 or | |||

| 50%D0/50%P0 or | 50%D0/50%P0 or | |||

| 75%D0/25%P0 or | ||||

| 75%D0/25%P0 | ||||

| 20 primes | 16 primes | 20 primes | ||

| Testing phase | 10 prime-target pairs | 12 targets | 6 targets | |

In prime trials, participants saw stems that enforced either a PO or a DO completion (e.g., Meghan gave the toy … vs. Meghan gave her mom …). In target trials, participants saw stems that could be continued with either PO or DO structures (e.g., Meghan gave …; Kaschak, 2007, p. 928). We assessed the effect of the prediction error on the strength of priming in the target trials.

3.1.2. Estimating prime surprisal

Although the original experiments did not manipulate prime surprisal, the prime trials in all three experiments employed a sufficiently large variety of verbs, making it possible to examine the effects of prime surprisal in a meta analysis. Prime surprisal was operationalized following Study 1. Since the data set for the meta-analysis contained some verbs that were not in the Switchboard corpus, we estimated the PO bias of all prime and target verbs based on the subcategorization frequencies from the database in Roland, Dick, and Elman (2007); to obtain reliable estimates, we combined the subcategorization estimates from all corpora.

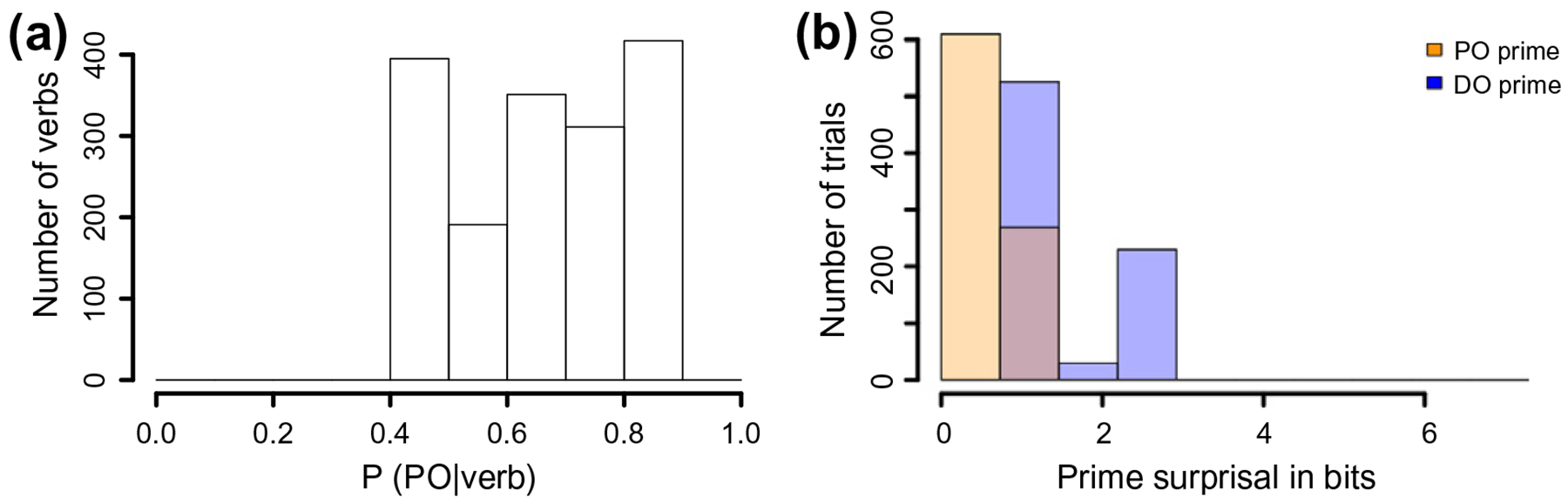

Hence, like in Study 1, our estimate of prime surprisal in Study 2 is an estimate of prior prime surprisal was based on an estimate of the average previous language experience. Unlike in Study 1, ‘previous language experience’ for Study 2 had to be approximated by averaging over corpora consisting of a more heterogenous mix of genres, styles, and including both written and spoken data. More importantly, the estimate of previous language experience in Study 2 also does not include data from the participants in the Kaschak and colleagues’ experiments and hence might be a less accurate estimate of the actual surprisal experienced by participants in the experiments than that employed in Study 1. This biases against finding the predicted interaction of prime structure and prime surprisal. The distribution of PO bias and prior prime surprisal is summarized in Fig. 4a and b, respectively.

Fig. 4.

Histograms of prime verbs’ PO biases (a) and prior prime surprisal (b) in Study 2.

The surprisal values for two verbs (throw and sell) in the Kaschak (2007) experiments were outliers in that they were much higher than the other other verbs: they were more than 3 standard deviations higher than the mean. These accounted for only 2.2% of target trials, which were excluded from the analysis, leaving 1665 trials for the analysis, thereof 900 PO and 765 DO structures.

3.1.3. Analysis

We again employed a mixed logit regression to predict the occurrence of PO over DO structures based on the surprisal associated with the most recent prime. Following Study 1, we included the PO bias of the target verb as a control predictor. Except for prime-target distance, which did not vary in the experiments, we also included all priming-related controls from Study 1. We coded as the most recent prime whatever trial immediately preceded the target trial, regardless of whether this was a target or prime trial. Similarly, the cumulative count of preceding prime structures included both prime and target trials.

Finally, both by-participant and by-item random effects were included in the analysis. Model comparison was conducted to assess which random effects were significant. Since all results presented in this paper hold both for the full random effect structure – all intercepts, all slopes, and all covariances – and for the maximal random effect structure justified by model comparison, we simply present models with full random effect structures. That is, we present the results from the most conservative analysis (see Appendix B for a summary of the random effect structure). All fixed effect correlation rs < 0.3. The predictions are the same as in Study 1.

3.2. Results

The results of Study 2 are summarized in Table 3. Here, this is only the subcategorization bias of the target verb, which has the expected effect. The second block summarizes the basic priming effects and the final block the effects of interest. Next, we summarize these effects.

Table 3.

Summary of Study 2 results (c = centered, r = residualized; for further information see the caption of Table 1).

| Predictor (independent variable) | Parameter estimates | Wald’s test | Δ(−2Ʌ)-test | Partial pseudo-R2 | ||||

|---|---|---|---|---|---|---|---|---|

| Log-odds | S.E. | Odds | Z | pz | χ2 | p | ||

| Properties of target sentence | ||||||||

| Target verb bias (log-odds) | 0.90 | 0.20 | 2.5 | 4.5 | ≪0.001 | 17.0 | ≪0.001* | 0.015 |

| Basic priming effects | ||||||||

| PO prime (c) | 1.08 | 0.15 | 2.9 | 6.9 | ≪0.001 | 33.0 | ≪0.001* | 0.033 |

| Cumulative PO primes | 0.27 | 0.06 | 1.3 | 4.2 | ≪0.001 | 13.4 | <0.005* | 0.014 |

| Prime-target verb identity (c) | 0.09 | 0.25 | 1.1 | 0.4 | >0.7 | 0.2 | >0.9 | 0.001 |

| PO prime × verb identity (r) | 1.20 | 0.37 | 3.3 | 3.2 | <0.005 | 9.6 | <0.001* | 0.009 |

| Effect of prime’s prediction error | ||||||||

| Prior prime surprisal given the prime verb (c,r) | 0.07 | 0.13 | 1.1 | 0.5 | >0.5 | 0.5 | >0.4 | 0.001 |

| PO prime × prior prime surprisal (r) | 0.82 | 0.32 | 2.3 | 2.5 | <0.05 | 6.0 | <0.05* | 0.005 |

3.2.1. Basic priming effects

There was a main effect of prime structure such that PO primes were likely to lead to PO targets (pz ≪ 0.001). There was no main effect of verb repetition between prime and target (pz > 0.6), but, consistent with previous findings, there was an interaction with prime structure such that the prime structure is more likely to be repeated in the target when the verbs are the same (pz < .005). There also was a main effect of cumulative priming such that the more POs participants had encountered, the more likely they were to produce a PO (pz ≪ 0.001). In short, both the cumulative priming effect observed in the original experiments (Kaschak & Borreggine, 2008; Kaschak, 2007) and the verb repetition effect observed in previous work (Hartsuiker et al., 2008; Pickering & Branigan, 1998; Szmrecsányi, 2005) replicated.

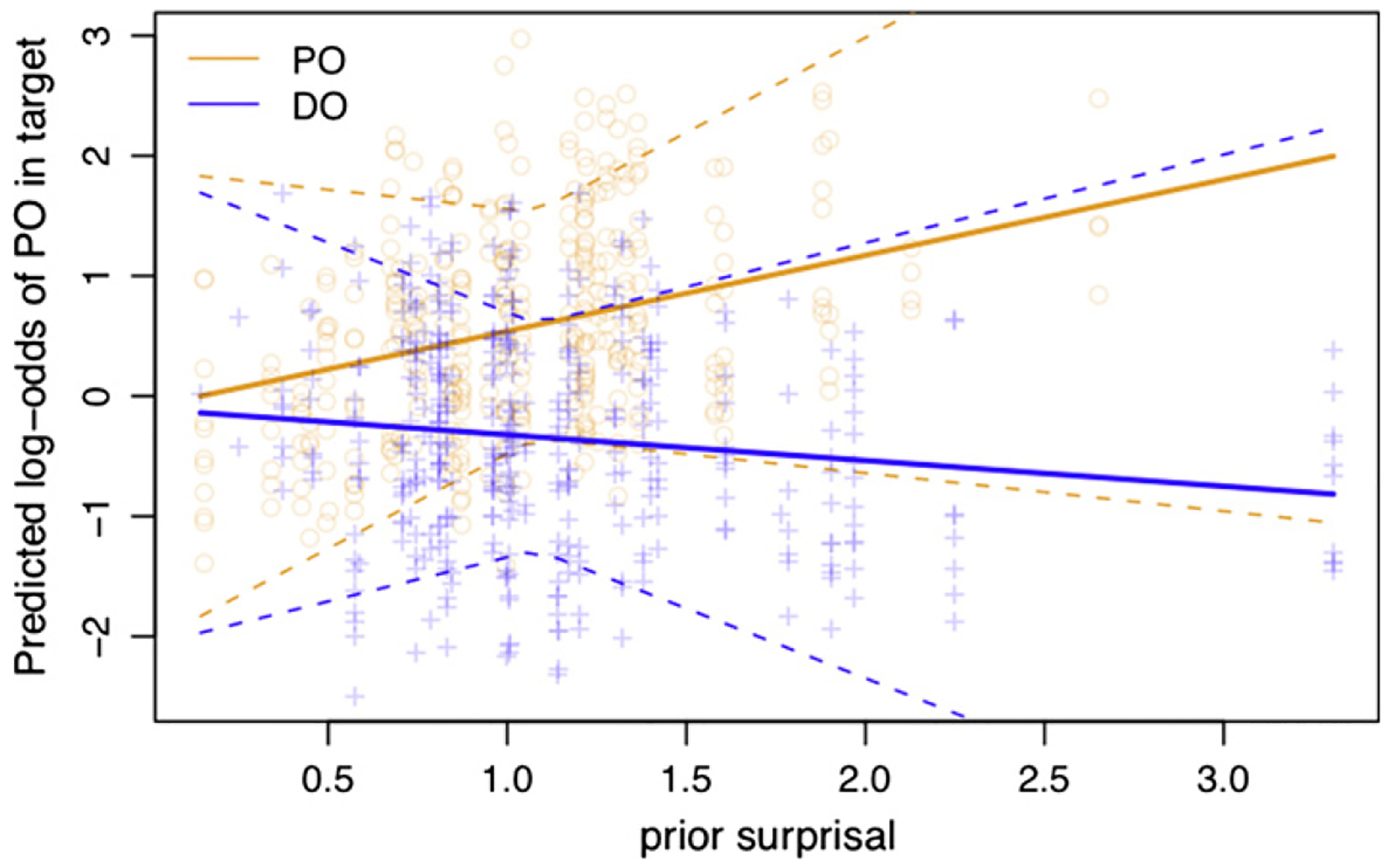

3.2.2. Prior prime surprisal

Like in Study 1, we found the predicted significant interaction between prime structure and prior prime surprisal given the verb (pz < .05; if the tokens of verbs throw and sell are not excluded, the results are the same except the surprisal interaction would be pz = .05). There was no main effect of prior prime surprisal (pz > 0.5). The interaction is illustrated in Fig. 5. As in Study 1, simple effect analyses revealed that the effect of prime surprisal was significant for PO primes (pz < 0.05), whereas the effect did not reach significance for DO primes (pz > 0.4, although it is in the predicted negative direction).

Fig. 5.

Interaction between prior prime surprisal (in bits) and priming in Study 2. Orange circles represent PO primes, and blue crosses represent DO primes. Dotted curves represent the 95% confidence intervals of the slopes. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

3.3. Discussion

Study 2, which employed written production data, replicates the effect observed for conversational data in Study 1. In both studies, we find that priming is sensitive to the magnitude of the prediction error. The more surprising a prime structure is given the prime verb, the more likely this structure is to be repeated in the target.

As in Study 1, we find the prediction confirmed only for PO primes, where the effect of prime surprisal is not significant for DO primes. Unlike in Study 1, PO and DO production were about equally likely in Study 2, so that this lack of an effect for DO primes is unlikely to be due to a floor effect. The data are, however, compatible with the second explanation advanced in our discussion of Study 1: it is possible that there is overall very little DO priming so that it is hard to detect effects of surprisal. We postpone further discussion of this issue until after Study 3.

Taken together, Studies 1 and 2 provide evidence from conversational speech and from written production that syntactic priming is sensitive to the prediction error associated with the processing of the prime structure. This, as we laid out in the introduction, is expected if syntactic priming is the consequence of mechanisms that aim to align interlocutors’ expectations, thereby reducing the prediction errors on upcoming sentences. If this is indeed the correct interpretation of the effects observed here, then we would expect prediction errors – and hence syntactic priming – to be sensitive to current expectations. For example, if speakers aim to align with their interlocutors, they should be sensitive to that specific interlocutor’s productions.

Indeed, as outlined in the introduction, there is evidence that comprehension is exquisitely sensitive to the statistics of the current linguistic environment. Most of this evidence comes from lower-level perceptual and phonetic processes. For example, the processing difficulty initially experienced with non-native or non-standard pronunciations can dissipate after only a few minutes of exposure (Bradlow & Bent, 2008; Maye, Aslin, & Tanenhaus, 2008). Perceptual adaptation of phonetic categories has been observed after exposure to only a handful of auditorily ambiguous but visually or lexically disambiguated instances of a sound (e.g., Vroomen, van Linden, de Gelder, & Bertelson, 2007; van Linden & Vroomen, 2007). Evidence that this type of adaptation can be understood as expectation adaptation comes from more recent work that has found perceptual recalibration to be sensitive to the relative distribution of phonetic cues in recent experience (Cla-yards, Tanenhaus, Aslin, & Jacobs, 2008; Kleinschmidt & Jaeger, 2012; Sonderegger & Yu, 2010). More recent work has also revealed evidence for similarly rapid expectation adaptation during syntactic comprehension (Farmer, Monaghan, Misyak, & Christiansen, 2011; Fine et al., submitted for publication; Kaschak & Glenberg, 2004b).

Very little is known about such processes in production. Here we begin to address this question for sentence production (for phonological production, see Warker & Dell, 2006). We ask whether the prediction error that affects syntactic priming in production, as observed in Studies 1 and 2, is based on recent experience with the current linguistic environment. In short, is the strength of syntactic priming in production sensitive to how unexpected the prime structure was given the distribution of syntactic structures preceding the prime? Studies 1 and 2 do not speak to this question since we estimated the prediction error based on verb’s subcategorization frequencies as observed in corpora. The prime surprisal estimate in Studies 1 and 2 therefore was an estimate of the surprisal based on prior experience (here: experience that is not environment- or speaker-specific). Studies 1 and 2 therefore leave open the question as to whether syntactic priming in production is sensitive to the prediction error as it is experience during the processing of a prime sentence. The primary purpose of Study 3 is to address this question.5 A secondary motivation was to test whether the effect of the prediction error based on prior experience that was observed in Studies 1 and 2 would replicate in an experiment explicitly designed to test this hypothesis.

4. Study 3: Prediction error depending on prior and recent experience

Study 3 investigates the effect of both prior and recent experience on syntactic priming in a picture description paradigm under the guise of a memory task. Specifically, the design of Study 3 crossed prime structure (PO or DO) with the prediction error based on prior experience and the prediction error based on recent experience within the experiment. To increase the power to detect effects of prior surprisal, we include 48 different verbs in the experiment spanning a wide range of subcategorization biases (cf. Study 1, which contained 25 verbs, of which only 9 occurred more than 20 times, and Study 2, which contained only 7 verbs). The prediction error based on recent experience was manipulated by either alternating prime structures throughout the experiment (PO–DO–PO–DO–⋯ or vice versa) or blocking prime structures, so that all prime trials within the first half of the experiment used one prime structure and the prime trials in the remainder of the experiment the other prime structure (PO–PO–⋯–PO–DO–DO–⋯–DO or vice versa). Under the assumption that participants continuously adapt their syntactic expectations after each prime, participants’ expectations for a PO or DO prime structure should depend, not only on prior experience, but also on how many PO and DO structures they have processed within the experiment prior to a prime trial. Hence, we predict that syntactic priming in our experiment is sensitive to both prior surprisal and adapted surprisal (i.e., surprisal based on recent experience).

4.1. Estimating and manipulating prior prime surprisal

The prior surprisal of the prime stimuli employed for Study 3 (see below) was estimated in a norming experiment using magnitude estimation (Bard, Robertson, & Sorace, 1996). Forty-one Stanford University undergraduate students participated for course credit. Participants were shown one prime sentence at a time in both of the possible word orders (PO or DO). The order of presentation of the two structures was counterbalanced within items and participants. The first sentence was assigned a score of 100 and participants were asked to rate on a multiplicative scale how much better or worse the second word order variant was compared to the first.

The rating task was preceded by instructions that illustrated the task, using examples not used during the norming experiment. This included examples of how fractions may be necessary if the second was more than two orders of magnitude less acceptable than the first.

Following Bard et al. (1996), ratings were log-transformed and then standardized by participant, providing z-scores. We then calculated the average PO z-score for each sentence. These z-scores were normalized into probabilities, based on which prime surprisal was calculated. The distributions of PO bias and prior prime surprisal are summarized in Fig. 6a and b, respectively.

Fig. 6.

Histograms of prime verbs’ PO biases (a) and prime surprisal (b) in Study 3.

4.2. Estimating and manipulating adapted prime surprisal

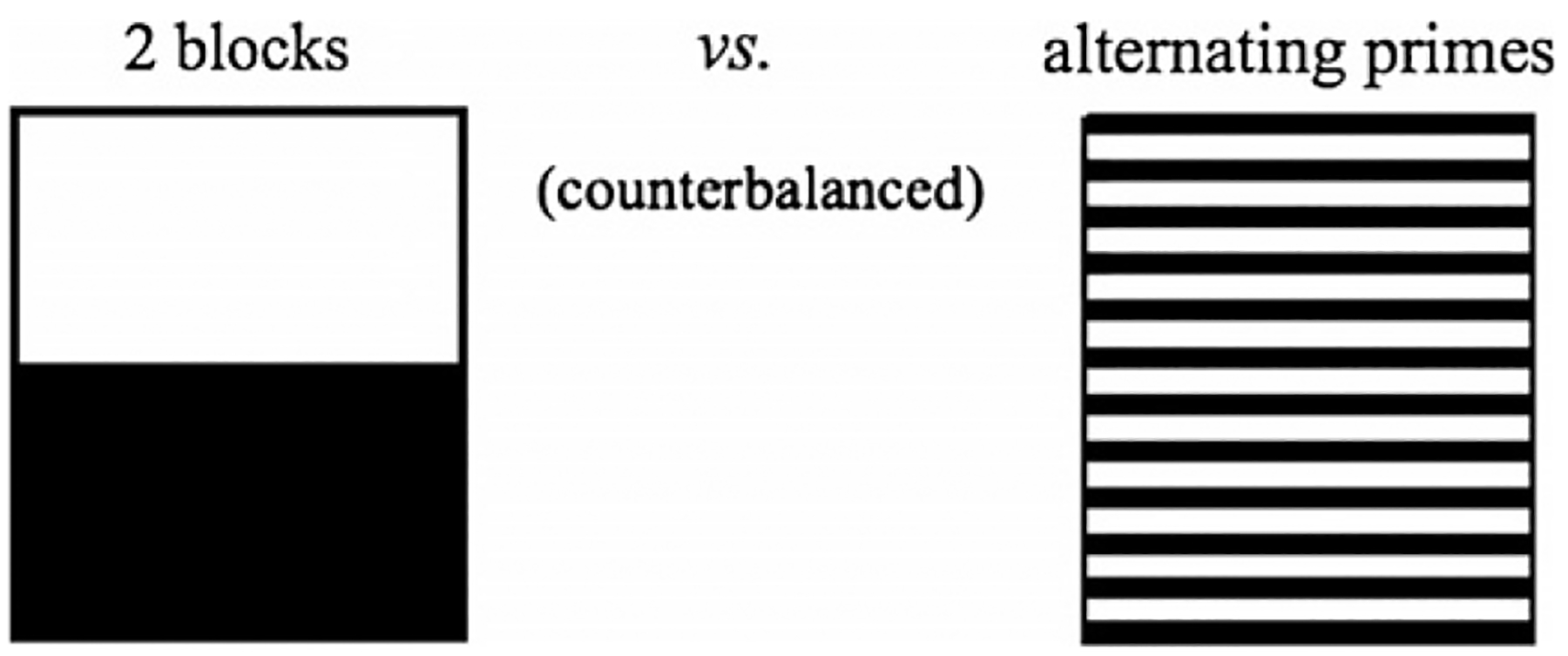

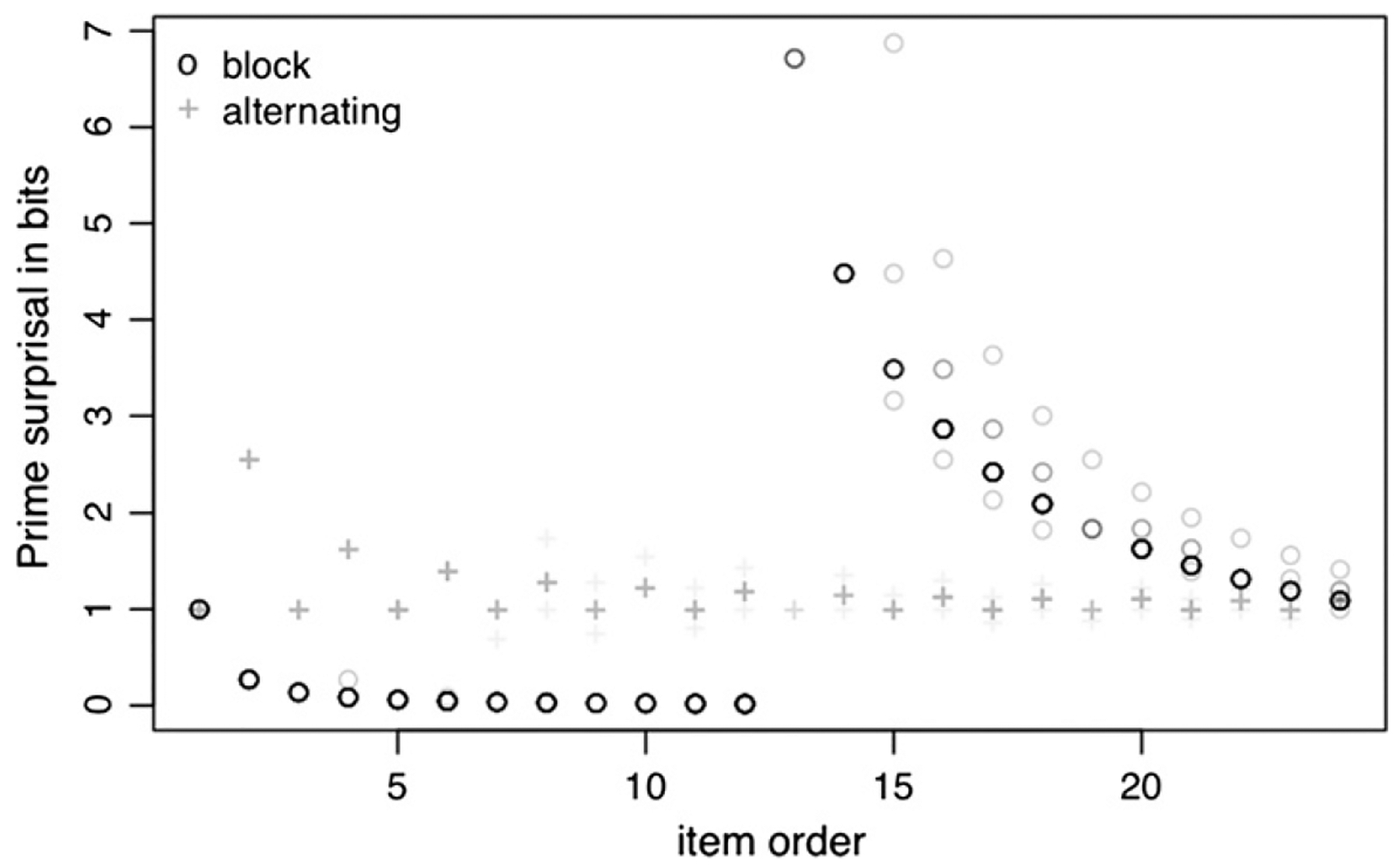

Adapted prime surprisal was manipulated within the priming experiment by varying how many prime structures of the same type (PO or DO) were presented to participants in a row, while keeping the overall count of both structures constant (50% PO and 50% DO). Specifically, half of participants saw primes in block order, whereas the other half saw primes in alternating order. In the block order, the first half of the experiment contained only instances of one prime structure (e.g., 12 PO primes, with fillers interspersed) and the second half of the experiment contained only instances of the other prime structure (e.g., 12 DO primes, with fillers interspersed). In the alternating condition, PO and DO prime structures alternated (with fillers interspersed). Both for the block and the alternating orders, the order of PO and DO primes was counterbalanced across participants. Fig. 7 visualizes the block and alternating condition.

Fig. 7.

Manipulation of recent experience in Study 3: prime structures were presented either in two blocks, each consisting of 12 primes with the same structure (e.g., 12 PO followed by 12 DO), or in alternating order (e.g., PO, DO, PO, DO, etc.)

Adapted prime surprisal was calculated based on the cumulative proportion of PO and DO primes encountered by the participant.6 For PO primes, adapted surprisal was based on the proportion of PO primes in previous trials; for DO primes, adapted surprisal was based on the proportion of DO primes in previous trials. To smooth these estimates and to avoid undefined values for the first prime trial (for which p(prime structure | previous trials) = 0), counts were first converted to empirical logits , before being converted into proportions (by taking the inverse logit of the the empirical logit values). Thus the first trial always has a prime surprisal of 1 bit, which corresponds to a uniform prior expectation of p(prime structure) = 0.5. In the block condition, the structure presented in the first block should become less surprising given experience during the experiment as the block proceeds. When the first prime of the second half of the experiment is encountered, adapted prime surprisal should rise starkly and then again decrease through the rest of the experiment. In the alternating condition, the surprisal of both prime structures should be distributed more homogenously around 1, reflecting the average proportion of the two prime structures closer 50% compared the the block condition.

It should be noted that our estimate of adapted prime surprisal does not combine recent experience with the prior bias due to the prime verb’s subcategorization bias and other properties of the prime stimuli. In this sense, what we refer to here as adapted prime surprisal is not the same as the posterior surprisal as experienced by participants in our experiment. That posterior surprisal is a combination of the prior surprisal, which we estimated under consideration of the prime verb’s subcategorization bias, and the adapted surprisal, which takes into consideration the distribution of PO and DO structures in recent experience but does not take into account the prime verb’s subcategorization bias.7

4.3. Methods

4.3.1. Participants

The analysis includes data from 25 students at the University of Rochester, who were paid for their participation, and 20 students at Stanford University, who were given course credit, for a total of 45 participants. Sixty participants were originally run in the experiment, but 2 had to be excluded due to recording errors, and another 13 because they did not produce sufficient variation in their target structures (<15% PO targets). Exclusion of these participants does not change the significance of effects reported below.

4.3.2. Materials

Target pictures consisted of the 24 dative-eliciting pictures generously provided by Bock and Griffin (2000). Each picture was grouped with a specific dative prime. The 24 prime items each consisted of one sentence with high PO bias and one sentence with low PO bias (i.e., 48 dative sentences).

An example item is shown in (4). The complete list of items is given in Appendix A. Prime sentences and target pictures were grouped in such a way that the target picture was unlikely to be described by the prime verb (indeed, the prime and target verbs never overlapped in Study 3).

| (4) |

|

Target trials were mixed with 30 filler sentences and 30 filler pictures and 24 sentence and pictures for an unrelated experiment (all them using transitive structures). Items and fillers were presented in the same order to each participant, regardless of the experimental condition. The two prime structure conditions and the two prior prime surprisal conditions each occurred equally often within the experiment (Latin square design; the order of prime structures used to manipulate adapted prime surprisal was a between-participant factor, although it results in within-participant variability in adapted prime surprisal).

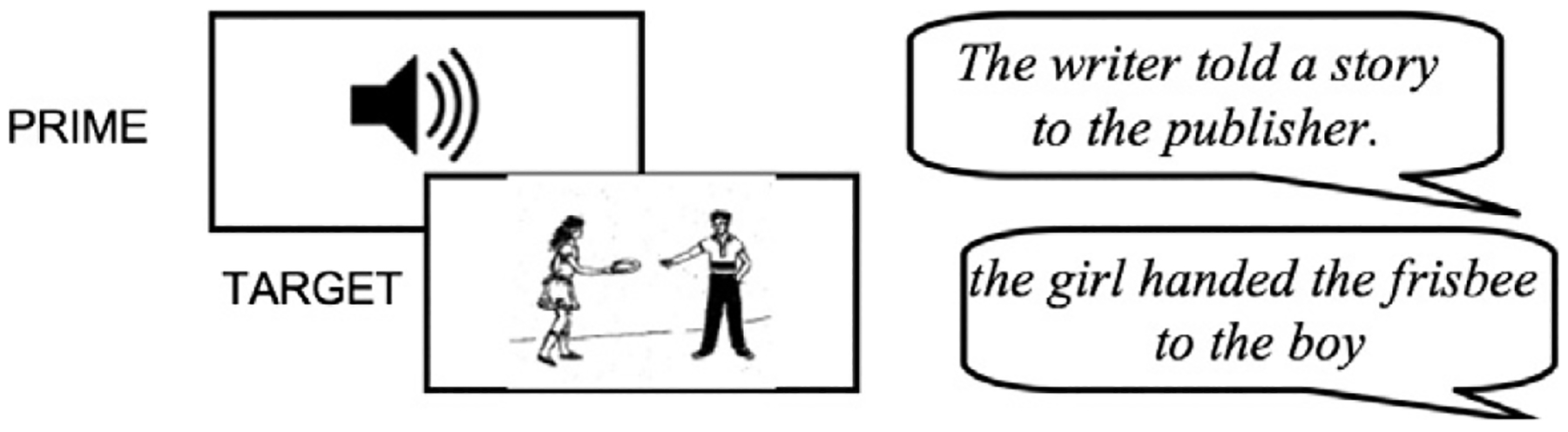

4.3.3. Procedure

Participants were seated in front of a computer and told that they would be participating in an experiment that investigated how language production affected their memory for sentences and pictures. Stimuli were presented using the program Linger (Rohde, 1999). Prime trials were always immediately followed by target trials. The procedure is illustrated in Fig. 8.

Fig. 8.

Procedure for priming trials in Study 3.

On prime trials, participants listened to a prime sentence read by a female voice played over a set of speakers. After the prime sentence had been played, participants pressed the space bar (to begin recording) and repeated the sentence exactly as it was presented. After participants finished speaking, they pressed the space bar again, and then they were asked whether they had heard the sentence before. Participants pressed the ‘F’ key for ‘yes’, and the ‘J’ key for ‘no’.

On target trials, participants were presented a picture that they had to describe in one sentence after pressing the space bar (to start recording). Once they were done speaking, they pressed the space bar again and a question appeared asking if they had seen the picture before, which they answered just like in the prime trials.

Items were always separated by at least five fillers (sentence or picture, chosen randomly). On filler trials, participants either repeated a filler sentence or described a filler picture, and also indicated whether they had seen the stimulus before, using the same procedure as for item trials described above. In order to implement the memory cover task, 18 of the 30 filler sentences and 18 of the 30 filler pictures were repeated a second time in the experiment. To convince participants early on in the experiment that their memory would indeed be tested, the first repeated filler occurred on the third trial, repeating the first trial. Including the 24 experimental trials, the 24 unrelated trials and the 96 filler trials, there were a total of 144 trials in the experiment.

4.3.4. Scoring

Participants responses were scored and annotated by two independent raters. One participant was scored by both raters in order to estimate inter-annotator agreement, which was high (Cohen’s κ = 0.91). For each prime or target response, the annotators transcribed the entire utterance and scored the prime structure, the prime verb, the subject, recipient, and theme NPs.

On 4.4% of the prime trials, participants did not repeat the prime correctly. These prime trials were excluded from the analysis and from the count of prime structures based on which adapted surprisal was calculated. Fig. 9 shows the distribution of estimated adapted prime surprisal by item order.

Fig. 9.

Estimates of adapted prime surprisal plotted versus item order. Circles represent the block condition. Crosses represent the alternating condition.

Two of the original items were excluded because they produced low variation in the target productions (<15% PO). This left a total of 755 trials for the analysis. Inclusion of these trials does not change the results reported below.

4.3.5. Analysis

As in Studies 1 and 2, we employed a mixed logit regression to predict the occurrence of PO over DO structures. Three random effects were included in the analysis. For by-participant and by-item random effects, the analysis contained the full random effect structure – all intercepts, all slopes, and all covariances. Some of these random effects were not justified by model comparison, but since their removal did not change results we simply report the full model here (but see Appendix B for more details). Since participants varied in what verbs they produced for a picture (there were 24 items in Study 3 but across participants 34 different verbs were produced), we also included a random intercept by target verb, which was justified by model comparison (χ2(1) = 12.0, p < 0.001).

Finally, we included all priming-related controls from Studies 1 and 2 in the analyses, except for prime-target distance and prime-target verb identity, which were held constant in Study 3. As in Studies 1 and 2, the cumulative count of preceding prime structures included both prime and target trials. All fixed effect correlation rs < 0.15.

4.4. Predictions

We predicted that the interaction between prime structure and prior prime surprisal observed in Studies 1 and 2 should replicate. If participants indeed rapidly adapt their syntactic expectations based on recent experience including the distribution of prime structures they are exposed to during the experiment (as proposed by, e.g., Chang et al., 2006 and supported by recent work, Farmer et al., 2011; Fine et al., submitted for publication; Kaschak & Glenberg, 2004b), we should also observe the same type of interaction between adapted prime surprisal and prime structure.

4.5. Results

Table 4 summarizes the results. As in Studies 1 and 2, we first summarize the basic priming effects and then turn to the effects of interest.

Table 4.

Summary of Study 3 results (c = centered, r = residualized; for further information see the caption of Table 1).

| Predictor (independent variable) | Parameter estimates | Wald’s test | Δ(−2Ʌ)-test | Partial pseudo-R2 | ||||

|---|---|---|---|---|---|---|---|---|

| Log-odds | S.E. | Odds | Z | pz | χ2 | P | ||

| Basic priming effects | ||||||||

| PO prime (c) | 0.94 | 0.17 | 2.5 | 5.3 | ≪0.001 | 21.0 | ≪0.001* | 0.054 |

| Cumulative PO primes | 1.72 | 0.46 | 5.6 | 3.6 | <0.001 | 14.8 | <0.001* | 0.027 |

| Effects of prime’s prediction error | ||||||||

| Prior prime surprisal (c,r) | 0.11 | 0.25 | 1.1 | 0.3 | >0.7 | 0.2 | >0.6 | 0.001 |

| PO prime × prior prime surprisal (r) | 1.23 | 0.61 | 3.4 | 2.5 | <0.02 | 4.4 | <0.05* | 0.007 |

| Adapted prime surprisal (c,r) | 0.11 | 0.09 | 1.1 | 1.3 | >0.18 | 2.6 | >0.11 | 0.005 |

| PO prime × adapted prime surprisal (r) | 0.50 | 0.24 | 1.6 | 2.0 | <0.05 | 5.6 | <0.02* | 0.011 |

4.5.1. Basic priming effects

Replicating previous work, we found a significant main effect of prime structure such that PO primes made PO structures more likely to be produced in the target (pz 0.001). Replicating work by Kaschak and colleagues, we also found a significant effect of cumulative priming (pz < .001): the higher the proportion of PO structures in the trials preceding the target, the more likely speakers were to produce a PO structure.

4.5.2. Prior prime surprisal

We found the predicted interaction of prior prime surprisal and PO prime (pz < 0.05). This effect is plotted in Fig. 10. Replicating Studies 1 and 2, simple effect analyses revealed that the effect of surprisal was significant for PO primes (pz < 0.05) but not DO primes (pz > 0.2), although in the predicted direction. As in Studies 1 and 2, there was no main effect of prior prime surprisal (pz > 0.7).

Fig. 10.

Interaction between prior prime surprisal (in bits) and priming in Study 3. Orange circles represent PO primes, and blue crosses represent DO primes. Dotted curves represent the 95% confidence intervals of the slopes. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

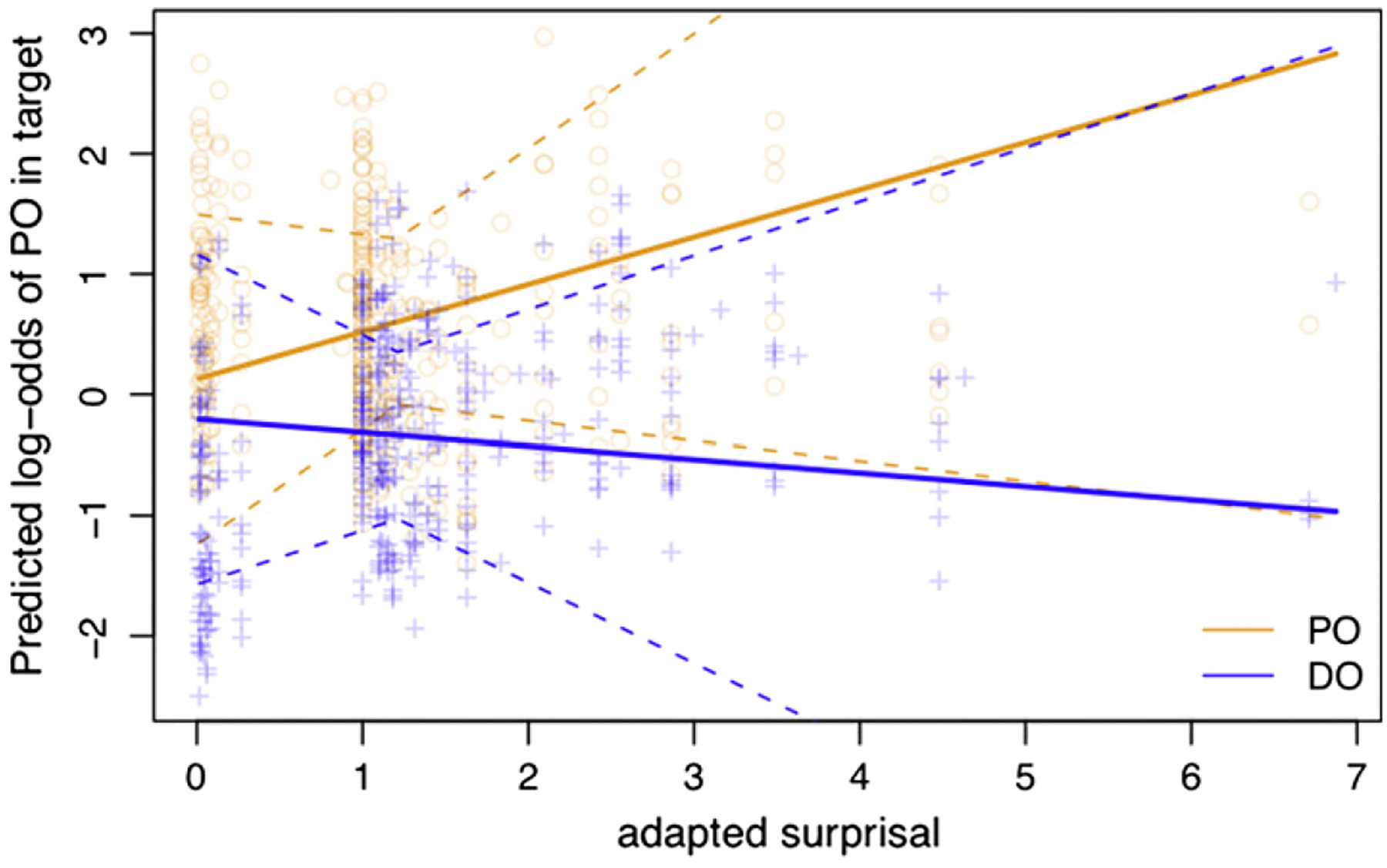

4.5.3. Adapted prime surprisal

As with prior surprisal, we found the predicted interaction between adapted prime surprisal and the prime structure (pz < .05). This effect is plotted in Fig. 11. Again, the simple effect of adapted surprisal is significant for PO primes (pz < 0.05) and non-significant for DO primes (pz > 0.3, in the expected direction). There was no main effect of adapted prime surprisal (pz > 0.2).

Fig. 11.

Illustration of the interaction between adapted prime surprisal (in bits) and priming in Study 3. Orange circles represent PO primes, and blue crosses represent DO primes. Dotted curves represent the 95% confidence intervals of the slopes. (For interpretation of the references to colour in this figure legend, the reader is referred to the web version of this article.)

4.6. Discussion

The large effects of prime structure and cumulativity suggest that Study 3 was successful in eliciting strong syntactic priming effects, which make it a suitable paradigm for the current purpose. More crucially, the experiment confirms and extends the results of Studies 1 and 2. We find that priming is stronger the more surprising the prime structure based both on prior experience and recent experience within the experiment. Before we discuss the consequences of these findings in more detail, we address a potential a caveat to our results.

As in Studies 1 and 2, the simple effect of prime surprisal went in the predicted direction for both PO and DO primes. However, in the all three studies, the effect only reached significance for PO primes. In Studies 2 and 3, this effect is unlikely to be due to a floor effect since PO and DO structures were about equally likely in the target sentences (cf. mean between orange and blue lines, Figs. 5 and 11). A more likely explanation is that DO primes exhibit only very weak priming effect to begin with, as observed in Study 1 and previous work (Kaschak, 2007; Kaschak et al., 2011). Since this hypothesis cannot be tested here because Study 3 did not contain a baseline condition against which we could compare the effect of PO and DO priming. There is, however, supporting evidence from a number of previous studies that have found weaker or no priming effects for the more frequent structure in an alternation compared to the less frequent structure (e.g., Bock, 1986; Ferreira, 2003; Hartsuiker & Kolk, 1998; Hartsuiker & Westenberg, 2000; Jaeger, 2010; Scheepers, 2003). For example, Kaschak et al. (2011) do not find cumulative priming effects for the more frequent DO structure, but find cumulative priming for the less frequent PO structure.

Most relevant to the current context are the experiments reported in Bernolet and Hartsuiker (2010). Building on earlier presentations of the current work, Bernolet and Hartsuiker investigate the effect of prime surprisal in the Dutch dative alternation. They find stronger priming for more surprising DO primes, but no such effect for PO primes. As a matter of fact, Bernolet and Hartsuiker do not observe any priming for PO primes. That is, they seem to find the opposite of our result. As Bernolet and Hartsuiker point out though, DOs are actually the less frequent structure in Dutch (whereas POs are the less frequent structure in English). That is, once we take into consideration that English and Dutch differ in terms of the relative frequency of PO and DO structures, the pattern of results found in Studies 1 to 3 is identical to those reported in Bernolet and Hartsuiker. We conclude that the repeated failure to find a significance simple effect of prime surprisal for DO primes is likely due to an overall weak priming effect for the more frequent prime structure (DOs for English, POs for Dutch).

Still, this is a question that clearly deserves further attention. For example, in an ongoing study, we have found DO priming for English for a different set of verbs (Weatherholz, Campbell-Kibler, & Jaeger, 2012). One question that needs to be addressed in future work is what determines when priming by the more frequent structure is observed. Another question is whether the strength of DO priming – when it is observed – is dependent on the prediction error. Without further assumptions, this is the prediction made by both the error-based model and the perspective advanced here.

5. General discussion

At the outset of this article, we hypothesized that syntactic priming is a consequence of adaptation with the goal to minimize the expected future prediction error. Specifically, we hypothesized that comprehenders continuously adapt their expectations to the statistics of the current environment (see also Botvinick & Plaut, 2004; Chang et al., 2006; Fine et al., submitted for publication; Plaut et al., 1996) and that speakers contribute to the minimization of the mutual prediction error. From this, we derived the prediction that the strength of syntactic priming in production should be correlated with the prediction error speakers’ experienced while processing the most recent prime. This is the prediction that we tested and found confirmed in our studies.

In all of our studies, the strength of syntactic priming increased with the surprisal associated with the prime’s syntactic structure. Study 1 found this effect in conversational speech, Study 2 found the effect for written production, and Study 3 found the effect for spoken picture descriptions. The three studies also differed in terms of how the prediction error was assessed. For Studies 1 and 2 the prediction error was based on the prime verb’s subcategorization bias (see also Bernolet & Hartsuiker, 2010). For Study 3, we estimated the prediction error based on the relative preference for a PO or DO structure given the entire lexical content of the sentence. Finally, we found that syntactic priming during language production is affected by the prime’s prediction error given both prior experience (Studies 1–3) and recent experience (Study 3).

The results obtained here have implications for accounts of syntactic priming. We first briefly (re)introduce those accounts. We discuss the extent to which previous evidence distinguishes between these accounts and how that evidence relates to the perspective advanced here. Once this background has been established, we discuss the implications of the current findings for previous accounts and for future work on syntactic priming.

5.1. Accounts of syntactic priming