Abstract

The screening of healthcare workers for COVID-19 (coronavirus disease 2019) symptoms and exposures prior to every clinical shift is important for preventing nosocomial spread of infection but creates a major logistical challenge. To make the screening process simple and efficient, University of California, San Francisco Health designed and implemented a digital chatbot-based workflow. Within 1 week of forming a team, we conducted a product development sprint and deployed the digital screening process. In the first 2 months of use, over 270 000 digital screens have been conducted. This process has reduced wait times for employees entering our hospitals during shift changes, allowed for physical distancing at hospital entrances, prevented higher-risk individuals from coming to work, and provided our healthcare leaders with robust, real-time data for making staffing decisions.

Keywords: coronavirus, COVID-19, employee screening, chatbot, healthcare worker

INTRODUCTION

On Friday, March 13, 2020, the San Francisco Department of Public Health issued an urgent order requiring all hospitals to develop and implement a coronavirus disease 2019 (COVID-19) mitigation plan.1 This order mandated that by Monday, March 16, at 8 am, hospitals must begin screening all employees and visitors for COVID-19 symptoms prior to entrance.

While necessary to promote health and safety of patients and employees, this requirement posed an enormous logistical and operational challenge for University of California, San Francisco (UCSF) Health. Our campuses have thousands of employees arriving within narrow time windows for shift changes, through multiple entrances, and have never before employed entry screening. The occupational health department did not have the resources to begin performing thousands of symptom screens daily, around the clock, at multiple clinical sites across the San Francisco Bay Area.

The initial stopgap solution was in-person manual screening. Employees were directed to a limited number of hospital entrances, which were staffed by screeners using a paper-based protocol. This resulted in wait times of up to 26 minutes for employees entering during shift changes, leaving inbound employees stuck in long lines (in the rain on multiple occasions) while outbound employees waited beyond the end of their shift time to be relieved. Long lines made physical distancing challenging. The verbal administration of health screening questions in public locations also raised privacy concerns.

An improved screening process was important not only for efficiency, but also to prevent nosocomial spread of infection. During the early days of the pandemic, testing ability was limited and the potential for hospital outbreaks was considerable, making careful detection of symptoms and contact tracing essential. Our goal was therefore to develop an efficient, reliable, and dynamic tool for daily screening of healthcare workers to prevent the spread of COVID-19 in the healthcare setting.

MATERIALS AND METHODS

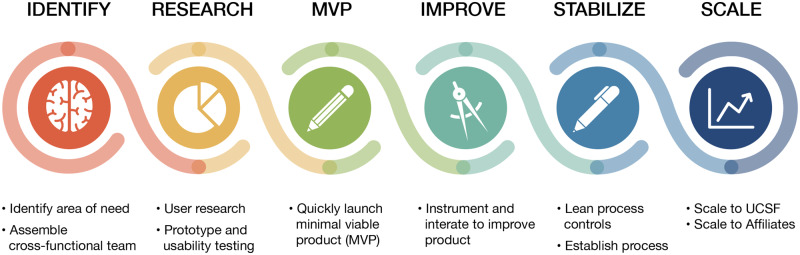

We followed an agile product design process to conduct this sprint (Figure 1).

Figure 1.

University of California, San Francisco (UCSF) product development roadmap.

Identify

We interviewed stakeholders to understand the intended screening workflow and identify initial product requirements. Employees needed to be assigned 1 of 3 dispositions: cleared to work, cleared to work with restrictions (eg, with a letter from occupational health), and not cleared to work. Screening would need to happen far enough in advance of each shift to allow managers to arrange alternate staffing, and avoid employees commuting to work only to find out that they are not cleared for entry. Data reporting would be important for executives tracking overall rates of employees at risk and unable to work.

We focused on 3 key user experience design principles:

Make it fast and easy for employees and screeners and lower technical barriers to use, in part by not requiring login or installation of an application

Avoid medical jargon to make the tool accessible to employees with low health literacy or English as a second language

Make the process transparent and nonjudgmental to promote honest responses

Based on these principles, we focused on delivering a minimum viable product that would be rapidly deployable and enable iterative improvements.

Research

We conducted a rapid landscape analysis and chose to leverage a chatbot platform (Conversa)2 that had originally been selected for a virtual patient care program. We selected this vendor for its expertise in user engagement, including the ability to send reminders via multiple communication channels. Importantly, the vendor was also able to customize the technology for an evolving use case.

In order to understand the context of use, team members directly observed and participated in the existing process of in-person, paper-based screening. Armed with the requirements of internal stakeholders, insights from participatory research, and the capabilities of the digital tool, we set out to design an efficient digital screening experience.

Minimum viable product

To create the chatbot, we first translated existing screening criteria into a set of branching logic questions. With the vendor, we then built these questions into a rule-based chatbot with prespecified responses.
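As an illustration of how screening criteria can be expressed as branching logic, the following minimal Python sketch maps a set of yes/no answers to the 3 dispositions described above. The questions, field names, and rules shown here are hypothetical placeholders; they are not the criteria used in the deployed chatbot, which are not listed in this article.

```python
from dataclasses import dataclass
from enum import Enum


class Disposition(Enum):
    CLEARED = "cleared to work"
    CLEARED_WITH_RESTRICTIONS = "cleared to work with restrictions"
    NOT_CLEARED = "not cleared to work"


@dataclass
class Answers:
    # Hypothetical screening questions (assumptions for illustration only).
    has_symptoms: bool
    had_exposure: bool
    has_occupational_health_letter: bool = False


def screen(answers: Answers) -> Disposition:
    """Walk the branches in order; a follow-up question only matters
    when the earlier answer makes it relevant."""
    if answers.has_symptoms:
        return Disposition.NOT_CLEARED
    if answers.had_exposure:
        # Only exposed employees are asked about a clearance letter.
        if answers.has_occupational_health_letter:
            return Disposition.CLEARED_WITH_RESTRICTIONS
        return Disposition.NOT_CLEARED
    return Disposition.CLEARED
```

Because each branch returns as soon as a disposition is determined, an employee with no symptoms and no exposure never sees the follow-up question, which is the sense in which branching keeps the questions simple and relevant.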

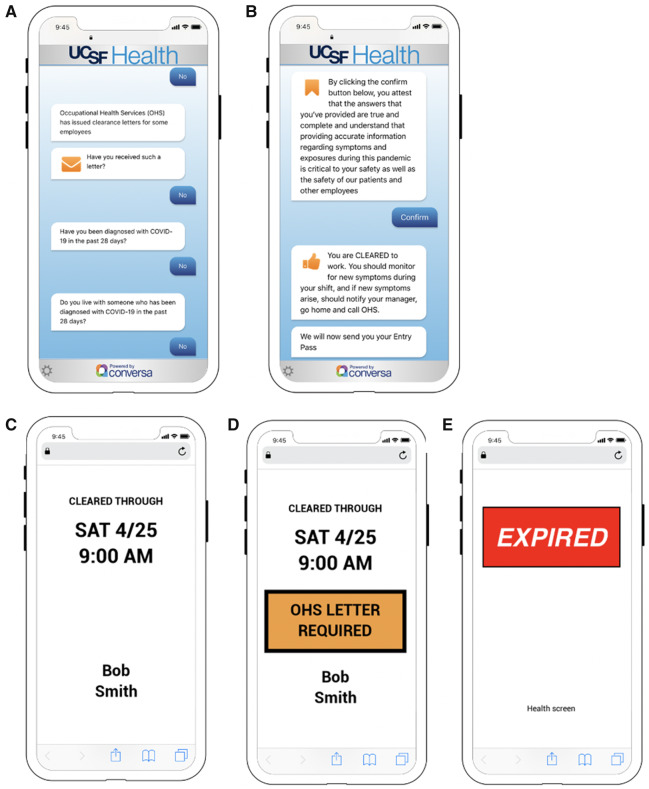

The employee launches the Web-based chatbot (Figures 2A and 2B) by clicking on a link provided in an email or by sending a 1-word text message. During their first session, the employee enters their name and date of birth to create a unique identifier. For subsequent sessions, the employee clicks on a tokenized link to reopen the session, eliminating the need for logins or re-entering personal information. The chat interface incorporates branching logic such that questions are simple and relevant, removing the ambiguity and contingencies present in any paper-based tool.

Figure 2.

Chatbot screenshots: (A) exposure questions, (B) attestation and clearance, (C) clearance pass, (D) clearance pass requiring letter from Occupational Health Services (OHS), and (E) expired pass.

Upon completion, the chat generates an entry pass, which allows employees to use a “fast lane,” modeled after TSA PreCheck, expediting entry during common shift change times. The pass is designed to be simple and easily read by front-door screeners from 6 feet away (Figures 2C–E). It expires after 18 hours. After using the chat, employees can set their text message or email reminder frequency. Paper-based screening is available for employees who do not wish to or are unable to use the digital version.
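The 18-hour expiry rule can be sketched in a few lines of Python. This is an illustrative check only: the function name, the use of timestamps, and the choice to treat a pass as expired at exactly 18 hours are assumptions, not details of the vendor's implementation.

```python
from datetime import datetime, timedelta

# From the text: an entry pass expires 18 hours after it is generated.
PASS_LIFETIME = timedelta(hours=18)


def pass_is_valid(issued_at: datetime, now: datetime) -> bool:
    """Return True while the pass is inside its 18-hour lifetime.

    Assumption: the pass is treated as expired at exactly 18 hours.
    """
    return issued_at <= now < issued_at + PASS_LIFETIME


# Example: a pass generated at 06:00 before a morning shift.
issued = datetime(2020, 4, 1, 6, 0)
still_valid = pass_is_valid(issued, datetime(2020, 4, 1, 19, 30))  # same evening
next_morning = pass_is_valid(issued, datetime(2020, 4, 2, 6, 0))   # 24 hours later
```

With an 18-hour lifetime, a pass generated before one shift remains usable through that shift but cannot be reused for a shift the following day, so each workday requires a new screen.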

Improve and stabilize

Knowing that the content, workflow, and software would require rapid iteration, we aligned internal workstreams with the vendor to allow for daily software releases if necessary. We worked using an agile software development methodology3 with daily standups that included internal and vendor teams. We maintained ongoing communications among both organizations using an asynchronous chat tool4 and collaborative document editing.5 We set up an incident reporting system6,7 to quickly and systematically address issues with the screening tool or screening process. We reviewed usage data and incident reports daily.

Scale

Five days after forming the team, we launched the initial version of the digital tool and piloted with approximately 80 employees. We collected user experience data from surveys, interviews, direct observations, remote user testing, and application workflow analytics and made a series of changes to address common issues (Table 1). For example, a common theme among pilot users was the request for the “fast lane” to be physically separated from the manual screening lane to expedite access and preserve physical distancing—a change we instituted the following week. Three days after the initial pilot, we launched the tool to all employees at the 3 main UCSF hospitals. Within 1 week, we expanded to ambulatory clinics and began the process of expanding to nonclinical employees and affiliate health systems.

Table 1.

Product design toolkit

| Design tool | Description |
|---|---|
| Design research by direct observation | |
| Service design blueprint | |
| Product design prototyping | |
| Remote usability testing | |
| Time and motion study | |
| Survey | |

RESULTS

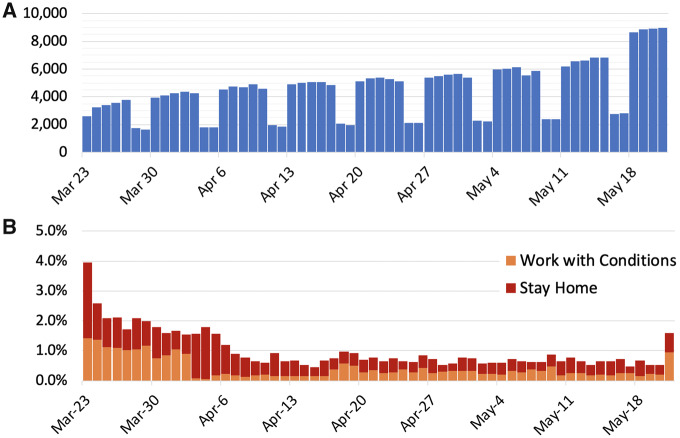

Data from the health screen are summarized and sent to hospital leadership each morning to inform staffing decisions. In the first 60 days since the hospital-wide launch, 271 324 screens (average 4522 screens/d) were completed (Figure 3A). Completion rate was 97%. Of completed screens, 268 843 (99.1%) resulted in employees being cleared to work with no restrictions, and 654 (0.2%) resulted in employees being allowed to work with restrictions (eg, needing a clearance letter from Occupational Health). A total of 1453 screens (0.5%, average 24 screens/d) resulted in employees being asked to stay home from work (Figure 3B).
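The rates quoted above can be re-derived from the reported counts. The short Python check below uses only numbers stated in this paragraph; the variable names are illustrative.

```python
# Counts reported for the first 60 days after the hospital-wide launch.
total_completed = 271_324
days = 60
counts = {
    "cleared, no restrictions": 268_843,
    "cleared with restrictions": 654,
    "asked to stay home": 1_453,
}

# Average number of completed screens per day.
screens_per_day = total_completed / days

# Share of completed screens in each disposition, formatted to 1 decimal place.
shares = {label: f"{n / total_completed:.1%}" for label, n in counts.items()}

# Average number of stay-home results per day.
stay_home_per_day = counts["asked to stay home"] / days
```

Rounded the same way as in the text, these give 4522 screens/d, 99.1%, 0.2%, 0.5%, and 24 stay-home screens/d.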

Figure 3.

(A) Number of daily screens over time and (B) percentage of high-risk screens over time.

We directly measured and compared the time required for individual employees to clear the front entrance screening desk by the manual screening process vs the digital screening process. Starting with an employee reaching the front of the line, the manual screening process took a mean of 48 seconds (n = 28), whereas those having used the digital tool were cleared in a mean of 8 seconds (n = 20). During peak shift change, the average time waiting in line was reduced from 8 minutes 20 seconds to 1 minute 40 seconds. Based on these time savings and estimating two-thirds of employees entering during shift changes, we calculated that, to date, this tool has saved employees over 15 000 hours of time waiting to be screened. With a reduced queue length, employees completing the digital screening at home had reduced contact with other employees and screeners. This tool has also reduced the staffing necessary for entry screening, resulting in substantial cost savings for our health system.
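A back-of-envelope version of this estimate can be written out as follows. The exact inputs behind the reported figure are not stated, so this sketch makes two assumptions: that all 271 324 screens completed in the first 60 days are counted, and that the full reduction in queue time applies to every entry made during a shift change.

```python
# Figures taken from the text.
completed_screens = 271_324
shift_change_fraction = 2 / 3          # share of employees entering at shift change
wait_before_s = 8 * 60 + 20            # 8 minutes 20 seconds in line (manual process)
wait_after_s = 1 * 60 + 40             # 1 minute 40 seconds in line (digital process)

# Assumption: each shift-change entry saves the full difference in queue time.
saved_per_entry_s = wait_before_s - wait_after_s

# Total time saved, converted from seconds to hours.
hours_saved = completed_screens * shift_change_fraction * saved_per_entry_s / 3600
```

Under these assumptions the estimate comes to roughly 20 000 hours, which is consistent with the "over 15 000 hours" reported above; a more conservative choice of inputs would bring the two figures closer together.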

DISCUSSION

In under 1 week, we designed, launched, and scaled a digital employee screening tool that was designed to minimize friction in the daily screening process. With minimal marketing, this tool was adopted by more than 3000 employees in the first week, and is now used over 9000 times per day, in part because it did not require an application download or login, and because it made the entry process quicker and easier for the employee and screener. There were several key learnings from this process. First, rapid product design and deployment in the healthcare setting require a multidisciplinary team with a strong digital product management background and preexisting technology assets. UCSF was able to deploy this digital tool in 5 days in part because this team had experience developing similar tools, and already had access to a technology toolkit to leverage. In similar situations, health systems without access to preapproved technologies may need to instead create an expedited approval process to meet emergent technology needs. Second, initial focus should be on creating and launching a minimum viable product, with the expectation that it will evolve and improve over time. As we transition from reacting to the acute phase of the crisis to planning for a prolonged period of screening, we are changing the product accordingly. For example, we are creating an expedited chat for employees who were cleared the day before. Third, the team should enact a rigorous change control process. Our clinical product lead served as a liaison between the clinical operations and product teams to shepherd necessary changes and translate them into product requirements. We also followed a quality assurance and communication “checklist” before each version update to ensure tool accuracy and synchrony with the broad organizational set of stakeholders.

We faced several key challenges when implementing this tool. User privacy was a major concern, particularly because many of our employees are not patients of the UCSF Health system. Furthermore, the health information that we collect pertains to COVID-19, which some perceive as a sensitive diagnosis.9 During initial user testing, we found that employees were suspicious about entering their identifying data into a tool that did not have clear UCSF branding, which is consistent with literature on use of digital health applications.10 To address this, we featured the UCSF logo prominently and clearly communicated why we were collecting the data and how it would be used. The tool was vetted by privacy, legal, and risk committees. We also had concerns about relying entirely on self-reporting, which introduced the potential for untruthful answers motivated by the need or desire to work. In response, we added an attestation to the end of the chat. The institution also provided 128 hours of paid administrative leave to all employees to use if they were sent home for COVID-19–related concerns, reducing the incentive to work when symptomatic. Limitations of the data include the lack of randomization or A/B testing across different sites, stemming from the need to quickly introduce a product that was critical to clinical operations.

Screening of healthcare workers is now ubiquitous, following recommendations by the Centers for Disease Control and Prevention and infection control experts.11,12 Many cities and states also recommend that nonhealthcare employers enact employee screening.13–15 Outbreaks in nonhealthcare workplaces are abundant,16,17 reinforcing the importance of creating an effective and scalable screening process. However, self-screening is likely to fail without a process that reminds employees to do so, requires proof of self-screening on entry to the workplace, and makes the self-screening process efficient. We describe an intervention that does all of the above in a way that is agile and user-friendly. Large employers both in the healthcare and nonhealthcare spaces should consider enacting similar tools and processes. Although others have described their initial experience implementing entry screening tools,18 this is, to our knowledge, the first peer-reviewed report on a commercially available employee COVID-19 screening tool and the one covering the longest period of use.

CONCLUSION

A digital tool and workflow can enable daily screening of healthcare workers while preserving the ability to efficiently enter and exit the workplace. We demonstrate that such a tool can decrease wait times for employees at workplace entrances, reduce the manual and expensive burden of staff entry screeners, enable physical distancing, and provide real-time workforce data to health system leaders.

AUTHOR CONTRIBUTIONS

All authors contributed to the conception and design of the work and the collection and assembly of data, writing the manuscript, and final approval of the manuscript.

ACKNOWLEDGMENTS

The authors acknowledge Eli Medina; Edward Martin; Harris Durrani; Jessica Chao; Jeffrey Chiu; Julia Wallace; Lauren Lee; Robert Kosnik, MD; Sondra Renly; Stefoni Bavin; Tim Moriarty, MBA; Victor Galvez; Russ Cucina, MD, MS; Michael Blum, MD; and the Conversa team for their partnership in developing and launching this product. They received no compensation related to this manuscript.

CONFLICT OF INTEREST STATEMENT

TJJ has received consulting fees from McKinsey and Company’s Healthcare Systems and Services Practice. AYO has received consulting fees from VSee, Inc. RG serves as an advisor to Phreesia, Inc and Healthwise, Inc. ABN has received research support from Cisco Systems, Inc; has received consulting fees from Nokia Growth Partners and Grand Rounds; serves as advisor to Steady Health (received stock options); has received speaking honoraria from Academy Health and Symposia Medicus; has written for WebMD (receives compensation); and is a medical advisor and co-founder of Tidepool (for which he receives no compensation).

REFERENCES

- 1. Order of the Health Officer No. C19-06 (Limitations on Hospital Visitors). 2020. https://www.sfdph.org/dph/alerts/files/Order-C19-06-ExcludingVisitorstoHospitals-03132020.pdf Accessed May 20, 2020.

- 2. Conversa. https://conversahealth.com/ Accessed May 25, 2020.

- 3. Highsmith J, Cockburn A. Agile software development: the business of innovation. Computer 2001; 34 (9): 120–7.

- 4. Slack. https://slack.com/ Accessed May 25, 2020.

- 5. Google Docs. https://www.google.com/docs/about/ Accessed May 25, 2020.

- 6. Jira Software. https://www.atlassian.com/software/jira Accessed May 25, 2020.

- 7. ServiceNow. https://www.servicenow.com/ Accessed May 25, 2020.

- 8. Lookback. 2020. https://lookback.io/ Accessed June 1, 2020.

- 9. Lin C-Y. Social reaction toward the 2019 novel coronavirus (COVID-19). Soc Health Behav 2020; 3 (1): 1–2.

- 10. Bari L, O’Neill DP. Rethinking patient data privacy in the era of digital health. Health Aff Blog 2019. https://www.healthaffairs.org/do/10.1377/hblog20191210.216658/full/ Accessed May 25, 2020.

- 11. Centers for Disease Control and Prevention. Return to work for healthcare personnel with confirmed or suspected COVID-19. 2020. https://www.cdc.gov/coronavirus/2019-ncov/hcp/return-to-work.html Accessed May 25, 2020.

- 12. Black JRM, Bailey C, Swanton C. COVID-19: the case for health-care worker screening to prevent hospital transmission. Lancet 2020; 395: 1418–20. doi: 10.1016/S0140-6736(20)30917-X.

- 13. Ohio Department of Health. Screening Employees for COVID-19. 2020. https://coronavirus.ohio.gov/wps/portal/gov/covid-19/resources/general-resources/Screening-Employees-for-COVID-19 Accessed May 25, 2020.

- 14. Washington State Department of Health. Recommended guidance for daily COVID-19 screening of employees and visitors. 2020. https://www.doh.wa.gov/Portals/1/Documents/1600/coronavirus/Employervisitorscreeningguidance.pdf Accessed May 25, 2020.

- 15. Department of Public Health. Directive of the Health Officer of the City and County of San Francisco regarding required best practices for warehouse and logistical support businesses. https://www.sfdph.org/dph/alerts/files/Directive-2020-12-Warehousing-05172020.pdf Accessed May 25, 2020.

- 16. Miriam Jordan CD. Poultry worker’s death highlights spread of coronavirus in meat plants. The New York Times April 9, 2020. https://www.nytimes.com/2020/04/09/us/coronavirus-chicken-meat-processing-plants-immigrants.html Accessed May 5, 2020.

- 17. Karen Weise KC. Gaps in Amazon’s response as virus spreads to more than 50 warehouses. The New York Times April 5, 2020. https://www.nytimes.com/2020/04/05/technology/coronavirus-amazon-workers.html Accessed May 25, 2020.

- 18. Zhang H, Dimitrov D, Simpson L. A web-based, mobile responsive application to screen healthcare workers for COVID symptoms: descriptive study. Health Inform 2020. Apr 22 [E-pub ahead of print]; doi:10.1101/2020.04.17.20069211.