A reconfigurable neural network vision sensor is proposed that uses the gate-tunable photoresponse of van der Waals heterostructures.

Abstract

Early processing of visual information takes place in the human retina. Mimicking the neurobiological structures and functionalities of the retina provides a promising pathway toward vision sensors with highly efficient image processing. Here, we demonstrate a prototype vision sensor that operates via the gate-tunable positive and negative photoresponses of van der Waals (vdW) vertical heterostructures. The sensor emulates not only the neurobiological functionalities of bipolar cells and photoreceptors but also the unique connectivity between them. By tuning the gate voltage of each pixel, we achieve a reconfigurable vision sensor for simultaneous image sensing and processing. Furthermore, the prototype vision sensor itself can be trained to classify input images by updating the gate voltages applied individually to each pixel. Our work indicates that vdW vertical heterostructures offer a promising platform for the development of neural network vision sensors.

INTRODUCTION

Traditional vision chips (1) separate image sensing and processing, which limits their performance as the demand for real-time processing grows (2). In contrast, the human retina has a hierarchical biostructure that connects neurons with distinct functionalities and enables simultaneous sensing and preprocessing of visual information. A principal function of the human retina is to extract key features of the input visual information through preprocessing operations, although the specific neuronal activities remain a subject of intensive investigation (3, 4). This function discards redundant visual data and substantially accelerates further information processing in the brain, such as pattern recognition and interpretation (5). Retinomorphic vision chips therefore represent a promising solution to the challenge faced by traditional chips and to processing large volumes of visual data in practical applications (6, 7). So far, various technologies have been proposed to emulate the functions of the retina by integrating the image sensor and processing unit in each pixel (2, 7, 8). As an alternative to these conventional technologies, optoelectronic resistive random access memory synaptic devices combine image sensing, preprocessing, and memory (9), which promises to reduce the circuit complexity of artificial visual systems. To meet the increasing demands of edge computing, more advanced image sensors, such as those with reconfigurable and self-learning capabilities, are highly desirable. Exploiting novel physical phenomena of emerging atomic-scale materials, and hierarchical architectures made of these materials, may offer a promising approach to realizing such neural network vision sensors.

Two-dimensional (2D) materials with atomic thickness and flatness have shown great potential for numerous applications in electronics (10, 11) and optoelectronics (12–14). The van der Waals (vdW) vertical heterostructures formed by stacking different 2D materials host an abundance of electronic and optoelectronic properties (15–21), which may be exploited to mimic the hierarchical architecture and functions of retinal neurons (22–27) in a natural manner and thereby implement a neural network vision sensor. Here, we show that an image sensor based on vdW vertical heterostructures can emulate the biological characteristics of photoreceptors and bipolar cells as well as the hierarchical connectivity between them. In addition, the fabricated vision sensor can be programmed to sense images and simultaneously process them with distinct kernels. We also demonstrate that the retinomorphic vision sensor itself can be trained to carry out pattern recognition. The technology proposed in this work opens up opportunities for implementing advanced neural network vision chips in the future.

RESULTS

As mentioned above, different types of retinal neurons are organized hierarchically (Fig. 1A). More than 50 types of cells are distributed within a few different layers in the vertebrate retina, such as the photoreceptor layer, bipolar cell layer, and ganglion cell layer (28). The layered structure creates various retinal microcircuits that constitute distinct visual pathways in the retina and ensures that information flows from the top to the bottom. In these microcircuits, cone cells (one type of photoreceptor) and bipolar cells are crucial neurons. The cone cells transduce visual signals into electrical potentials, while the bipolar cells serve as critical hubs for shaping input signals (Fig. 1A), which can accelerate perception in the brain. According to their response polarities, bipolar cells can be classified into ON cells and OFF cells, which respond to a light stimulus in opposite manners. Under a light stimulus, glutamate release from the cones is suppressed. The ionic channels of OFF cells, which carry ionotropic glutamate receptors, close because too little glutamate is available to bind to them. The OFF cells are consequently hyperpolarized, and their membrane potential decreases (green curve in Fig. 1B). Conversely, the light-induced suppression of glutamate release opens the ionic channels of ON cells, which carry metabotropic glutamate receptors. The ON cells are consequently depolarized and show an increased membrane potential (red curve in Fig. 1B). Through these ON and OFF bipolar cells in the pathway (29), information is preprocessed and relayed to the visual cortex in the brain for further processing and perception.

Fig. 1. Retinal and artificial retinal structures.

(A) Profile of a biological retina. (B) Biological working mechanism and photoresponse of OFF bipolar cells [with α-amino-3-hydroxy-5-methyl-4-isoxazolepropionic acid (AMPA) receptors] and ON bipolar cells [with metabotropic glutamate receptor 6 (mGluR6)]. Black bars in the photoresponse of bipolar cells mark the moment of light illumination. (C) Optical image of a retinomorphic device based on a vdW vertical heterostructure. (D) Operating mechanism and photoresponse of the ON- and OFF-photoresponse devices at zero and negative gate voltages, respectively. A positive (negative) ∆Ids corresponds to the ON-photoresponse (OFF-photoresponse). Shaded areas correspond to the duration of light illumination. (E) OFF-photoresponse at different bias voltages and light intensities (indicated by shaded areas). The OFF-photoresponse of the device is retained even at an extremely low bias voltage (10 mV), which allows low-power operation.

Mimicking retinal cells with vertical heterostructure devices

To emulate the hierarchical architecture and biological functionalities of the photoreceptor and bipolar cell layers, we fabricated WSe2/h-BN/Al2O3 heterostructure devices (Fig. 1C). In contrast to the complex structure of silicon retinas, the vdW device architecture, in which the photoreceptor and bipolar cell functions are vertically integrated, is simple and compact. The device fabrication and Raman characterization of WSe2 are described in Materials and Methods and fig. S1A. Mimicking the OFF-photoresponse characteristic is more challenging than emulating the ON-photoresponse feature. Previous works have shown that the electrical current of devices can be suppressed by a light-induced reduction in the carrier mobility of low-dimensional materials. However, the resulting response is far slower than the ON-photoresponse (15, 16, 30, 31). By using the atomically sharp interface of the vdW vertical heterostructure and nanoscale-thick Al2O3, we overcome this challenge and achieve a fast photoresponse. The light-induced change of the electrical current (∆Ids), which represents the photoresponse of the devices, is measured between the source/drain electrodes deposited on the WSe2 channel. The vertical heterostructure devices convert light into electric signals and exhibit positive (positive ∆Ids) or negative (negative ∆Ids) photoresponses depending on the gate voltage, resembling the biological characteristics of photoreceptors and bipolar cells. Without an applied gate voltage, light illumination generates excess electrons and holes in the WSe2 channel and increases the source/drain current (Fig. 1D). This increase in source/drain current under a light stimulus (“ON-photoresponse”) is similar to the light-stimulated increase in the membrane potential of ON bipolar cells. When a negative gate voltage is applied, the ambipolar WSe2 is electrostatically doped with holes (10) (fig. S1B), and the resulting decrease in source/drain current (“OFF-photoresponse”) resembles the light-stimulated reduction in the membrane potential of OFF bipolar cells. This OFF-photoresponse feature is highly reproducible in devices with similar parameters (fig. S2).

The physical origin of the OFF-photoresponse can be understood as follows. The existence of point defects in h-BN has been pointed out by previous cathodoluminescence and elemental analysis studies (15, 32–34) and subsequently confirmed by scanning tunneling microscopy (35). Under light illumination of the vdW heterostructure devices, electrons of these defects, distributed in different layers of h-BN, are excited and then migrate upward under the perpendicular electric field from the back-gate voltage (35). The positively charged defects in the upper layers of h-BN recombine with other photogenerated electrons migrating from the lower part of h-BN. The positively charged defects localized close to the h-BN/Al2O3 interface, however, do not recombine during illumination; they effectively screen the back-gate electric field and suppress conduction in the WSe2 channel (fig. S3A). This screening effect is enhanced by increasing the light intensity (fig. S3B), and the devices respond across the entire visible spectrum (fig. S3C). When the light is removed, electrons tunneling through the thin Al2O3 layer (~6 nm thick) recombine with these positively charged defects, and the reduced current rapidly recovers. We carried out control experiments (fig. S4, A to C) and carefully ruled out the possibility that trap centers on the surface of the oxide layer cause the OFF-photoresponse. From the reduction of current upon light illumination, we estimate the defect concentration in h-BN to be around 10¹⁰ cm⁻² (fig. S5). This concentration is comparable to that reported in previous works (15, 35), further indicating that the OFF-photoresponse arises from electron excitation of defects in h-BN.

The vdW heterostructure devices perform well in terms of operating speed and power consumption. The sharp vdW interface enables a response time of less than 8 ms (fig. S6) in the OFF-photoresponse device. This time scale is comparable to that of biological bipolar cells (36) and is expected to improve further by reducing the contact resistance between the metal electrodes and WSe2, fabricating higher-quality interfaces between WSe2 and h-BN, and engineering the defect distribution or concentration in h-BN. Besides responding quickly, the OFF-photoresponse device holds promise for low-power operation (Fig. 1E). Figure 1E presents the current of the OFF-photoresponse device at different biases and light intensities. The device still exhibits an OFF-photoresponse at a low bias of 10 mV, indicating that low power consumption is achievable. The ON-photoresponse device exhibits a smaller dark current than the OFF-photoresponse device and therefore consumes even less power. Using vertical heterostructures could drastically reduce the per-pixel complexity of conventional retinomorphic circuits. With further optimization of power consumption and operating speed, these vertical devices are promising for emulating more advanced functionalities of the human retina.

Reconfigurable retinomorphic vision sensor

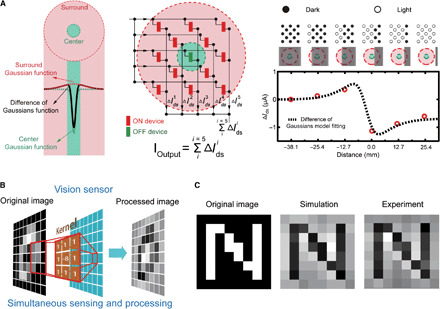

Assembling these ON- and OFF-photoresponse devices into an array (an OFF-photoresponse device in the center surrounded by ON-photoresponse devices) enables the emulation of the biological receptive field (RF). The RF is indispensable for early visual signal processing and has a center area (green in the left panel of Fig. 2A) and a surround area (pink in the left panel of Fig. 2A). Under a light stimulus, the center and surround areas of the biological RF respond antagonistically, which is described by the difference-of-Gaussians model (DGM; Materials and Methods). The key role of the RF of bipolar cells in the human retina is to preprocess visual information by extracting its key features (37), thereby accelerating visual perception in the brain. We emulate the RF of bipolar cells by integrating 13 vdW heterostructure devices into an array (center panel of Fig. 2A and fig. S7A), with each device controlled separately by its own gate voltage. According to Kirchhoff’s current law, the output current of the artificial RF is the sum of the photocurrents of all devices, $I_{\text{output}} = \sum_i \Delta I_{ds,i}$, and real-time variations of the output represent the dynamic response to changing light patterns. With this working principle, the artificial RF can detect the edges of objects, a fundamental function of the biological RF. In the experiment, the light was switched on column by column to represent a contrast-reversing edge moving from left to right (right panel of Fig. 2A). As the edge moves to the right, the current variation increases because more ON-photoresponse devices are activated, and the response is strongest just before the edge reaches the center device. As the edge continues to move, the OFF-photoresponse device in the center antagonizes the photoresponse of the ON-photoresponse devices, producing a peak of opposite sign in the photocurrent variation. This behavior is well described by the widely used DGM (dashed line in the right panel of Fig. 2A), which also accounts for the dynamic response to edges moving along other directions (fig. S8).
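The moving-edge experiment can be sketched numerically with the DGM. The Python snippet below is a minimal illustration, not the authors' analysis code: it assumes a 9 × 9 grid instead of the 13-device layout of fig. S7A, hypothetical widths sigma_on = 2.0 and sigma_off = 0.8, and equal-volume ON and OFF Gaussians so that the field sums to roughly zero. Switching columns on one at a time and summing the responsivity over the lit pixels mimics the Kirchhoff summation of the array.

```python
import numpy as np


def gaussian_1d(coords, sigma):
    """Sampled 1D Gaussian, normalized so that its samples sum to 1."""
    g = np.exp(-coords**2 / (2.0 * sigma**2))
    return g / g.sum()


def dog_receptive_field(size=9, sigma_on=2.0, sigma_off=0.8):
    """G_output = G_ON - G_OFF on a size x size grid centred on the RF.

    The broad G_ON models the ON surround and the narrow G_OFF the OFF centre.
    Both have equal volume (an assumption), so the field sums to ~0.
    """
    coords = np.arange(size) - (size - 1) / 2.0
    g_on = np.outer(gaussian_1d(coords, sigma_on), gaussian_1d(coords, sigma_on))
    g_off = np.outer(gaussian_1d(coords, sigma_off), gaussian_1d(coords, sigma_off))
    return g_on - g_off


def sweep_edge(rf):
    """Switch illumination on column by column (left to right) and return the
    summed output after each step, i.e. the sum of responsivity over lit pixels."""
    outputs = []
    for k in range(rf.shape[1]):
        light = np.zeros_like(rf)
        light[:, : k + 1] = 1.0  # columns 0..k are illuminated
        outputs.append(float(np.sum(rf * light)))
    return np.array(outputs)


rf = dog_receptive_field()
response = sweep_edge(rf)
print(np.round(response, 3))
```

Under these assumptions the summed output rises to a positive peak before the edge reaches the central column, swings to a negative peak of similar magnitude just after it, and returns to about zero once the whole field is lit, which qualitatively matches the antagonistic response described above.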

Fig. 2. The retinomorphic vision sensor based on a vdW vertical heterostructure for simultaneous image sensing and processing.

(A) RF with green center and pink surround areas. Left panel: DGM of the RF, characterizing the distribution of responsivity. Center panel: Vision sensor and its output. An OFF-photoresponse device in the center is surrounded by ON-photoresponse devices. The output of the vision sensor is the current summed over all devices. Right panel: Outputs of the artificial RF for a contrast-reversing edge moving from left to right. The upper circle array represents the light sources; light is on for solid circles and off for open circles. (B) Vision sensor for simultaneous image sensing and processing. (C) Edge enhancement of the letter N. Left panel: Original 8 × 8 binary image of the letter N. Middle and right panels: Simulation and experimental results.

The retinomorphic vision sensor senses and processes images simultaneously (Fig. 2B), which allows near-data processing. This architecture is fundamentally different from that of traditional vision chips. As mentioned above, separating image sensing and processing in traditional vision chips reduces the efficiency of processing large amounts of real-time image information, because all the redundant visual data sensed by cameras must first be converted to digital data and then transmitted to processors. In contrast, our retinomorphic vision sensor based on vdW heterostructures senses and processes visual information simultaneously without requiring analog-to-digital conversion. As a demonstration, we mapped a 3 × 3 difference-of-Gaussian (DoG) kernel onto the vision sensor by assigning specific values to each gate and demonstrated edge enhancement of the letter “N” (8 × 8 binary image, left panel of Fig. 2C). Experimental details are given in Materials and Methods and fig. S9A. The variation of Ioutput in the array is recorded simultaneously (fig. S9B). Reconstructing the recorded current variations yields the processed letter N; the simulated and experimental results are shown in the center and right panels of Fig. 2C, respectively, and are in good agreement.
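The edge-enhancement step amounts to a stride-1 sliding dot product between each 3 × 3 subimage and the gate-programmed responsivity map. The sketch below assumes a hypothetical 8 × 8 letter N and a hypothetical zero-sum kernel (negative OFF center, positive ON surround) standing in for the DoG weights, whose actual values are not given in the text; the boundary handling (no padding) is also an assumption.

```python
import numpy as np

# Hypothetical 8 x 8 binary letter "N" (1 = illuminated pixel).
LETTER_N = np.array([
    [1, 0, 0, 0, 0, 0, 0, 1],
    [1, 1, 0, 0, 0, 0, 0, 1],
    [1, 0, 1, 0, 0, 0, 0, 1],
    [1, 0, 0, 1, 0, 0, 0, 1],
    [1, 0, 0, 0, 1, 0, 0, 1],
    [1, 0, 0, 0, 0, 1, 0, 1],
    [1, 0, 0, 0, 0, 0, 1, 1],
    [1, 0, 0, 0, 0, 0, 0, 1],
], dtype=float)

# Hypothetical DoG-like weights: OFF (negative) centre, ON (positive) surround.
# The weights sum to zero, so uniformly lit or dark patches give no output.
DOG_KERNEL = np.array([
    [1.0, 1.0, 1.0],
    [1.0, -8.0, 1.0],
    [1.0, 1.0, 1.0],
])


def sense_and_process(image, kernel):
    """Slide the kernel over the image with stride 1 and no padding.

    Each output value is the dot product of one sub-image with the kernel,
    i.e. the summed photocurrent of a pixel array whose responsivities are
    set to the kernel weights.
    """
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out


edges = sense_and_process(LETTER_N, DOG_KERNEL)
print(edges.shape)  # (6, 6)
```

Because the kernel sums to zero, uniform regions produce no output and only intensity transitions survive, which is the edge-enhancement effect.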

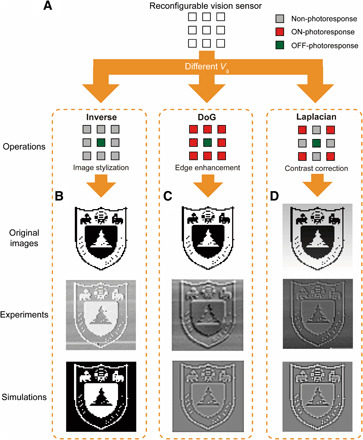

By modulating the Vg applied individually to each vdW heterostructure device, we realize a reconfigurable retinomorphic vision sensor that simultaneously senses images and processes them in three different ways, as shown in Fig. 3. Image stylization refers to a rendering effect that generates a photorealistic or nonphotorealistic image and is mainly implemented by software in computer graphics (38). Using the retinomorphic vision sensor, we are able to invert a grayscale image of the Nanjing University logo (Fig. 3B); the stylized logo is similar to the simulation results. Beyond image stylization, we also use the vision sensor to demonstrate other functions widely used in image processing, such as edge enhancement and contrast correction, which reproduce the image features of the simulation results well. In Fig. 3C, we realize edge enhancement by eliminating the contrast difference between the logo patterns (black) and the background (white). Furthermore, the vision sensor can correct the contrast of the logo to reveal edge information hidden by underexposure or overexposure (Fig. 3D). The irregular edge patterns in the logo do not degrade the detection accuracy of the sensor, as shown by the good agreement between the experimental and simulation results. The functionality of our vision sensor is not limited to these demonstrations; it also shows promise for reducing the noise of target images (fig. S10A) and for real-time tracking (fig. S10B). These findings indicate that hardware acceleration of image processing may benefit from reconfigurable vision sensors.
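Reconfiguration can be pictured as swapping the weight map while the sensing step stays the same. In this hypothetical Python sketch, a single negative (OFF) center weight plus an assumed constant offset inverts a binary image, and a zero-sum OFF-center map enhances edges; the contrast-correction kernel is not specified in the text and is therefore omitted. Zero padding keeps the output the same size as the input.

```python
import numpy as np


def correlate_same(image, kernel):
    """Stride-1 sliding dot product with zero padding (output size == input size)."""
    kh, kw = kernel.shape
    pad_h, pad_w = kh // 2, kw // 2
    padded = np.pad(image, ((pad_h, pad_h), (pad_w, pad_w)))  # zero padding
    out = np.zeros_like(image, dtype=float)
    for r in range(image.shape[0]):
        for c in range(image.shape[1]):
            out[r, c] = np.sum(padded[r:r + kh, c:c + kw] * kernel)
    return out


# Hypothetical weight maps; "reprogramming" the sensor means swapping the map.
KERNELS = {
    # Only the centre pixel responds, with OFF (negative) polarity: output = -image.
    "stylization_invert": np.array([[0, 0, 0], [0, -1, 0], [0, 0, 0]], dtype=float),
    # Zero-sum OFF-centre / ON-surround map that highlights edges.
    "edge_enhancement": np.array([[1, 1, 1], [1, -8, 1], [1, 1, 1]], dtype=float),
}


def process(image, mode, offset=0.0):
    """Apply the weight map selected by `mode`; `offset` is an assumed constant
    added afterwards (1.0 maps -image back into [0, 1] for inversion)."""
    return correlate_same(np.asarray(image, dtype=float), KERNELS[mode]) + offset


logo = np.zeros((6, 6))
logo[1:5, 1:5] = 1.0  # hypothetical binary test pattern
inverted = process(logo, "stylization_invert", offset=1.0)
edges = process(logo, "edge_enhancement")
```

The same `correlate_same` routine serves every mode; only the weight map changes, which is the software analog of re-biasing the pixel gates.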

Fig. 3. Reconfigurable retinomorphic vision sensor.

(A) Demonstration of image processing with three different operations (i.e., image stylization, edge enhancement, and contrast correction). These operations are realized by controlling the photoresponse of each pixel in the sensor by varying Vg independently. (B) Image stylization. (C) Edge enhancement. (D) Contrast correction. Original images correspond to the images to be processed by different operations. Experimental results by distinct convolution operations are compared with simulations. Photo credits: C.Y. Wang, Nanjing University.

Implementation of a convolutional neural network

The retinomorphic vision sensor can also form a convolutional neural network and classify target images (Fig. 4), with the weights updated by tuning the gate voltages applied to each pixel of the vision sensor. The total output current is the dot product of the sensed image information and the weights, which are set by the back-gate voltage of each pixel. Using backpropagation, we tune the back-gate voltage of each pixel to update the weights after each epoch. In the experiments, the training dataset consists of nine 3 × 3 binary images, comprising three variants each of the letters “n,” “j,” and “u.” As shown in Fig. 4A, the instructions representing these letter images were input into the retinomorphic vision sensor by laser. Figure 4B illustrates the training process of the vision sensor for pattern recognition. Initially, all back-gate voltages are set to 0. The modulation of the gate voltage of each pixel (with i and j denoting the pixel location) depends on the feedback of the photocurrent measured for the kth input figure. f1, f2, and f3 correspond to the outputs of the neural network for the three letters, and δk denotes the backpropagated error in the kth iteration. We examine the recognition accuracy over training epochs to evaluate the convergence of the network outputs. As shown in Fig. 4C, the accuracy, obtained from the weighted average of three different convolution kernels, reaches 100% within fewer than 10 epochs. The inset shows the weight distribution of the convolutional neural network vision sensor initially (yellow histogram) and after 10 epochs (blue histogram). To further examine the evolution of the recognition results averaged over the three variants of each letter, we present the variation of the output (f1, f2, and f3) of each convolution kernel over training (Fig. 4D). The target letter can be clearly distinguished from the input images after two epochs.
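The training loop can be sketched as a single-layer network with one 3 × 3 weight map per letter and sigmoid outputs, as in Materials and Methods. Everything concrete here is an assumption: the nine binary patterns are invented stand-ins for those in Fig. 4A, the weights are dimensionless numbers standing for gate-voltage-set responsivities (the scaling factor α is absorbed into them), and the update is a standard delta rule for sigmoid outputs with a cross-entropy loss rather than the exact rule used in the experiment.

```python
import numpy as np

# Hypothetical 3 x 3 binary patterns (1 = illuminated pixel), three variants per letter.
PATTERNS = {
    "n": [[[1, 1, 1], [1, 0, 1], [1, 0, 1]],
          [[1, 1, 0], [1, 0, 1], [1, 0, 1]],
          [[0, 1, 1], [1, 0, 1], [1, 0, 1]]],
    "j": [[[0, 0, 1], [0, 0, 1], [1, 1, 1]],
          [[0, 1, 0], [0, 1, 0], [1, 1, 0]],
          [[0, 0, 1], [1, 0, 1], [0, 1, 1]]],
    "u": [[[1, 0, 1], [1, 0, 1], [1, 1, 1]],
          [[1, 0, 1], [1, 0, 1], [0, 1, 1]],
          [[1, 0, 1], [1, 0, 1], [1, 1, 0]]],
}
LETTERS = list(PATTERNS)


def make_dataset():
    """Flatten each pattern into a 9-element light-intensity vector P."""
    x, y = [], []
    for label, letter in enumerate(LETTERS):
        for pattern in PATTERNS[letter]:
            x.append(np.ravel(pattern))
            y.append(label)
    return np.array(x, dtype=float), np.array(y)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train(epochs=50, lr=0.5):
    x, y = make_dataset()                     # x: (9, 9), y: (9,)
    targets = np.eye(len(LETTERS))[y]         # one-hot targets, shape (9, 3)
    w = np.zeros((len(LETTERS), x.shape[1]))  # one flattened 3 x 3 map per output
    losses = []
    for _ in range(epochs):
        z = x @ w.T                           # weighted sums, one per output
        f = sigmoid(z)                        # outputs f1, f2, f3
        # Binary cross-entropy per output, in a numerically stable form.
        losses.append(float(np.mean(np.sum(np.logaddexp(0.0, z) - targets * z, axis=1))))
        delta = targets - f                   # error signal for each output
        w += lr * delta.T @ x / len(x)        # delta-rule update of every weight
    accuracy = float(np.mean(np.argmax(sigmoid(x @ w.T), axis=1) == y))
    return w, losses, accuracy


weights, losses, accuracy = train()
print(f"loss {losses[0]:.3f} -> {losses[-1]:.3f}, accuracy {accuracy:.2f}")
```

With all weights starting at zero, every output begins at 0.5, mirroring the initial condition of zero back-gate voltage. For these patterns the learning rate of 0.5 is small enough that the loss decreases at every epoch.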

Fig. 4. Implementation of convolutional neural network with the retinomorphic vision sensor.

(A) Three different patterns of each letter (n, j, and u). (B) Training process of the vision sensor at each epoch. The different color maps correspond to different convolution kernels; k is the training iteration, and i and j denote the location of each pixel in the sensor. (C) Recognition accuracy over epochs; the inset shows the weight distribution of the vision sensor before (yellow) and after (blue) training. (D) Measured average output signals at each epoch for a specific input letter. The curves with the largest values (f1, f2, and f3, respectively) represent the recognition results for the target letters.

DISCUSSION

The excellent performance of the prototype vision sensor as a convolutional neural network suggests that integrating vdW vertical heterostructures may open a new avenue toward highly efficient convolutional neural networks for visual processing in a fully analog regime (39). While this manuscript was in revision, we noted that a similar approach based on a split-gate WSe2 homojunction device has recently been demonstrated for ultrafast machine vision (40).

In conclusion, we have demonstrated a prototype vision sensor based on vdW heterostructures. This sensor not only closely mimics the biological functionalities of retinal cells but also offers reconfigurable image processing functions beyond those of the human retina. Furthermore, we show that the retinomorphic vision sensor itself can serve as a convolutional neural network for image recognition. Our work represents a first step toward future reconfigurable convolutional neural network vision sensors.

MATERIALS AND METHODS

Device fabrication

WSe2 (from HQ Graphene) and h-BN flakes were obtained by mechanical exfoliation. Bottom-gate electrodes [Ti (5 nm)/Au (25 nm)] and source/drain electrodes [Pd (5 nm)/Au (75 nm)] were patterned by standard electron beam lithography and deposited by electron beam evaporation. Al2O3 was grown on the bottom-gate electrodes by atomic layer deposition. We fabricated the vdW vertical heterostructures by transferring h-BN and WSe2 onto the substrate with the standard polyvinyl alcohol method. We used an atomic force microscope (AFM) to confirm the thicknesses of WSe2 (2 to 20 nm), h-BN (10 to 40 nm), and Al2O3 (6 to 10 nm); device robustness correlates positively with material thickness. The channel length between the source/drain electrodes is 1 to 2 μm, and the WSe2 flakes used are 10 to 30 μm long. Before electrical measurements, all devices were annealed at 573 K in an argon atmosphere for 2 hours to remove photoresist residue.

Difference-of-Gaussians model

The DGM has been widely used to describe the response of the biological RF under a light stimulus, where the output Gaussian function is $G_{\text{output}}(x, y) = G_{\text{ON}}(x, y) - G_{\text{OFF}}(x, y)$. Here, $G_i(x, y) = \frac{1}{2\pi\sigma_{ix}\sigma_{iy}} \exp\left[-\frac{(x-\mu_{ix})^2}{2\sigma_{ix}^2} - \frac{(y-\mu_{iy})^2}{2\sigma_{iy}^2}\right]$ (i = ON, OFF) is a 2D Gaussian function representing the spatial distribution of the responsivity in the center or surround area, where x and y are the spatial coordinates, μix and μiy denote the central coordinates of the corresponding RF area, and σix and σiy are the SDs of the spatially distributed photoresponse of the biological RF.

Electrical measurement

Each vdW heterostructure device was mounted on a dedicated printed circuit board (PCB) in a nitrogen atmosphere. All devices were connected in parallel via a switch matrix box. Currents were measured with a data acquisition card (National Instruments, PCIe-6351) and a current amplifier (Stanford Research Systems, model SR570). The gate voltage was applied by a source measurement unit (Keithley, 2635A). As shown in fig. S9A, two separate measurement channels were used: one for ON-photoresponse devices and another for OFF-photoresponse devices.

Details of datasets

We down-sampled the Nanjing University logo to 64 × 64 pixels and binarized its pixel values. The original image used for contrast correction in Fig. 3D was obtained by compressing the pixel value range of the logo image from 0 to 255 into 0 to 191 and adding a background whose brightness changes smoothly from dark to bright.
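The degraded input for contrast correction can be generated along the lines described above. In this sketch the linear compression follows the text, whereas the horizontal ramp and its amplitude (`ramp_max = 64`, chosen so that 191 + 64 stays within the 8-bit range) are assumptions, as is the square used in place of the logo.

```python
import numpy as np


def make_contrast_test_image(img, ramp_max=64.0):
    """Compress 0..255 into 0..191, then add a left-to-right brightness ramp.

    ramp_max is an assumed amplitude; 64 keeps the result within 0..255.
    """
    img = np.asarray(img, dtype=float)
    compressed = img * (191.0 / 255.0)                 # map 0..255 onto 0..191
    ramp = np.linspace(0.0, ramp_max, img.shape[1])    # dark (left) -> bright (right)
    degraded = compressed + ramp[np.newaxis, :]
    return np.clip(np.round(degraded), 0, 255).astype(np.uint8)


logo = np.zeros((64, 64))
logo[16:48, 16:48] = 255.0  # hypothetical binarized 64 x 64 stand-in for the logo
test_image = make_contrast_test_image(logo)
```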

Pattern generation

The pattern generator system used in the experiments consisted of a laser array and multiterminal voltage sources and was controlled by code implemented in Python. By sliding a sampling window with a stride of one, we divided every target image into many subimages. The intensity information in these subimages was then translated into instructions and fed into the pattern generator system in chronological order. As illustrated in fig. S9A, each instruction was applied for a fixed time interval. In addition, we calibrated the photocurrents measured from the ON- and OFF-photoresponse devices so that the current remains unchanged over a certain time period. Note that all these operations were implemented in LabVIEW.
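The conversion of a target image into a time-ordered list of laser-array instructions could look like the following hypothetical Python helper. The 3 × 3 window, stride of one, and row-major ordering reflect the description above; the function name and the 1 s placeholder interval are illustrative assumptions, and the real system drove hardware through LabVIEW rather than returning a list.

```python
import numpy as np


def image_to_instructions(image, window=3, stride=1, interval_s=1.0):
    """Slide a window over the image and return (time, flattened sub-image) pairs.

    Each flattened sub-image lists the intensities for the laser array; the
    instructions are emitted in row-major order, one per fixed time interval.
    `interval_s` is a placeholder value, not a measured parameter.
    """
    image = np.asarray(image, dtype=float)
    rows = range(0, image.shape[0] - window + 1, stride)
    cols = range(0, image.shape[1] - window + 1, stride)
    instructions = []
    for step, (r, c) in enumerate((r, c) for r in rows for c in cols):
        sub = image[r:r + window, c:c + window]
        instructions.append((step * interval_s, sub.ravel().copy()))
    return instructions


target = np.arange(64, dtype=float).reshape(8, 8)  # hypothetical 8 x 8 target image
schedule = image_to_instructions(target)
```

For an 8 × 8 image this yields 36 instructions, one per output pixel of a 6 × 6 processed image.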

Signal processing

We acquired current signals from the ON and OFF channels separately and then reduced the noise caused by H2O and O2 molecules adsorbed on the WSe2 surface. This noise causes fluctuations in the background current and in the current change of the ON- and OFF-photoresponse devices over time. To eliminate its effect, we first obtained the fluctuation of the background current under background light illumination; then, we calibrated the photocurrents of the ON- and OFF-photoresponse devices by multiplying them by the weights obtained as described in Pattern generation. Last, we summed the measured currents from the ON and OFF channels and reorganized the current data into images. All code was implemented in the Igor software (WaveMetrics).
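A minimal sketch of this post-processing chain follows. It assumes that the background is removed by subtraction and that calibration is a multiplicative factor per channel; the function name and argument layout are hypothetical, and the original processing was done in Igor rather than Python.

```python
import numpy as np


def reconstruct_output(i_on, i_off, bg_on, bg_off, w_on, w_off, shape):
    """Combine ON- and OFF-channel currents into an output image.

    i_on, i_off   : currents recorded per instruction on each channel
    bg_on, bg_off : background current under background illumination (same length)
    w_on, w_off   : calibration factors (scalars or per-instruction arrays)
    shape         : output image shape, e.g. (6, 6) for 36 instructions
    """
    on = (np.asarray(i_on, dtype=float) - np.asarray(bg_on, dtype=float)) * w_on
    off = (np.asarray(i_off, dtype=float) - np.asarray(bg_off, dtype=float)) * w_off
    return (on + off).reshape(shape)  # sum the two channels, then reorganize as an image


example = reconstruct_output([2, 3, 4, 5], [0, -1, -1, 0],
                             [1, 1, 1, 1], [0, 0, 0, 0], 2.0, 1.0, (2, 2))
```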

Implementation of pattern recognition

To demonstrate the training of the convolutional neural network implemented by the retinomorphic vision sensor, we input light signals representing the images into the vision sensor and measured the current variation, as indicated in Fig. 4B. The collected Ioutput was then passed through the activation function $f = (1 + e^{-\alpha I_{\text{output}}})^{-1}$ to produce the outputs (f1, f2, and f3), where α = 1.5 × 10⁵ A⁻¹ is a scaling factor used for normalization. On the basis of these outputs, the error δk was backpropagated to update the weights of the neural network. The updated weight is defined by , where n = 0.1, β = 0.5 V, and P is the input light intensity.


Acknowledgments

Funding: This work was supported, in part, by the National Natural Science Foundation of China (61625402, 61921005, and 61974176), the Collaborative Innovation Center of Advanced Microstructures and Natural Science Foundation of Jiangsu Province (BK20180330), and Fundamental Research Funds for the Central Universities (020414380122 and 020414380084). K.W. and T.T. acknowledge support from the Elemental Strategy Initiative conducted by the MEXT, Japan; A3 Foresight by JSPS; and the CREST (JPMJCR15F3), JST. Zhong. Wang, W.S., and J.J.Y. were supported, in part, by TetraMem Inc. Author contributions: F.M., C.-Y.W., S.-J.L., and J.J.Y. conceived the idea and designed the experiments. C.-Y.W., S.W., and Z.L. fabricated the device arrays and performed electric experiments on the arrays. C.-Y.W. and S.-J.L. analyzed experimental data. A.G., C.L., and X.W. assisted in optoelectronic measurement. C.P. and B.C. assisted in the electrical measurement. J.L., H.Y., and K.C. grew Al2O3 with atomic layer deposition. X.L. and Zhenl. Wang performed the measurement of Raman spectrum of WSe2 and h-BN. K.W. and T.T. prepared the h-BN samples. S.W., Z.L., and P.W. implemented the neural network. Zhong. Wang, W.S., and C.W. contributed to the discussion for neural network. S.-J.L., C.-Y.W., F.M., and J.J.Y. wrote the manuscript with inputs from all co-authors. Competing interests: The authors declare that they have no competing interests. Data and materials availability: All data needed to evaluate the conclusions in the paper are present in the paper and/or the Supplementary Materials. Additional data related to this paper may be requested from the authors.

SUPPLEMENTARY MATERIALS

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/6/26/eaba6173/DC1

REFERENCES AND NOTES

1. Moini A., Vision Chips (Springer Science & Business Media, 2012), vol. 526.

2. Posch C., Serrano-Gotarredona T., Linares-Barranco B., Delbruck T., Retinomorphic event-based vision sensors: Bioinspired cameras with spiking output. Proc. IEEE 102, 1470–1484 (2014).

3. Eldred K. C., Hadyniak S. E., Hussey K. A., Brenerman B., Zhang P.-W., Chamling X., Sluch V. M., Welsbie D. S., Hattar S., Taylor J., Wahlin K., Zack D. J., Johnston R. J. Jr., Thyroid hormone signaling specifies cone subtypes in human retinal organoids. Science 362, eaau6348 (2018).

4. Hong G., Fu T.-M., Qiao M., Viveros R. D., Yang X., Zhou T., Lee J. M., Park H.-G., Sanes J. R., Lieber C. M., A method for single-neuron chronic recording from the retina in awake mice. Science 360, 1447–1451 (2018).

5. Gollisch T., Meister M., Eye smarter than scientists believed: Neural computations in circuits of the retina. Neuron 65, 150–164 (2010).

6. Mead C. A., Mahowald M. A., A silicon model of early visual processing. Neural Netw. 1, 91–97 (1988).

7. Kyuma K., Lange E., Ohta J., Hermanns A., Banish B., Oita M., Artificial retinas – Fast, versatile image processors. Nature 372, 197–198 (1994).

8. Brandli C., Berner R., Yang M. H., Liu S.-C., Delbruck T., A 240 × 180 130 dB 3 μs latency global shutter spatiotemporal vision sensor. IEEE J. Solid State Circuits 49, 2333–2341 (2014).

9. Zhou F., Zhou Z., Chen J., Choy T. H., Wang J., Zhang N., Lin Z., Yu S., Kang J., Wong H.-S. P., Chai Y., Optoelectronic resistive random access memory for neuromorphic vision sensors. Nat. Nanotechnol. 14, 776–782 (2019).

10. Wang Q. H., Kalantar-Zadeh K., Kis A., Coleman J. N., Strano M. S., Electronics and optoelectronics of two-dimensional transition metal dichalcogenides. Nat. Nanotechnol. 7, 699–712 (2012).

11. Liu C. S., Chen H., Hou X., Zhang H., Han J., Jiang Y.-G., Zeng X., Zhang D. W., Zhou P., Small footprint transistor architecture for photoswitching logic and in situ memory. Nat. Nanotechnol. 14, 662–667 (2019).

12. Lopez-Sanchez O., Lembke D., Kayci M., Radenovic A., Kis A., Ultrasensitive photodetectors based on monolayer MoS2. Nat. Nanotechnol. 8, 497–501 (2013).

13. Fang H. H., Hu W. D., Photogating in low dimensional photodetectors. Adv. Sci. 4, 1700323 (2017).

14. Long M., Wang P., Fang H., Hu W., Progress, challenges, and opportunities for 2D material based photodetectors. Adv. Funct. Mater. 29, 1803807 (2019).

15. Ju L., Velasco J. Jr., Huang E., Kahn S., Nosiglia C., Tsai H.-Z., Yang W., Taniguchi T., Watanabe K., Zhang Y., Zhang G., Crommie M., Zettl A., Wang F., Photoinduced doping in heterostructures of graphene and boron nitride. Nat. Nanotechnol. 9, 348–352 (2014).

16. Xiang D., Liu T., Xu J., Tan J. Y., Hu Z., Lei B., Zheng Y., Wu J., Castro Neto A. H., Liu L., Chen W., Two-dimensional multibit optoelectronic memory with broadband spectrum distinction. Nat. Commun. 9, 2966 (2018).

17. Geim A. K., Grigorieva I. V., Van der Waals heterostructures. Nature 499, 419–425 (2013).

18. Liu C., Yan X., Song X., Ding S., Zhang D. W., Zhou P., A semi-floating gate memory based on van der Waals heterostructures for quasi-non-volatile applications. Nat. Nanotechnol. 13, 404–410 (2018).

19. Liang S.-J., Cheng B., Cui X., Miao F., Van der Waals heterostructures for high-performance device applications: Challenges and opportunities. Adv. Mater. 1903800 (2019).

20. Huang M., Li S., Zhang Z., Xiong X., Li X., Wu Y., Multifunctional high-performance van der Waals heterostructures. Nat. Nanotechnol. 12, 1148–1154 (2017).

21. Wang M., Cai S., Pan C., Wang C., Lian X., Zhuo Y., Xu K., Cao T., Pan X., Wang B., Liang S.-J., Yang J. J., Wang P., Miao F., Robust memristors based on layered two-dimensional materials. Nat. Electron. 1, 130–136 (2018).

- 21.Wang M., Cai S., Pan C., Wang C., Lian X., Zhuo Y., Xu K., Cao T., Pan X., Wang B., Liang S.-J., Yang J. J., Wang P., Miao F., Robust memristors based on layered two-dimensional materials. Nat. Electron. 1, 130–136 (2018). [Google Scholar]

- 22.Zhu X., Li D., Liang X., Lu W. D., Ionic modulation and ionic coupling effects in MoS2 devices for neuromorphic computing. Nat. Mater. 18, 141–148 (2019). [DOI] [PubMed] [Google Scholar]

- 23.Sangwan V. K., Lee H.-S., Bergeron H., Balla I., Beck M. E., Chen K.-S., Hersam M. C., Multi-terminal memtransistors from polycrystalline monolayer molybdenum disulfide. Nature 554, 500–504 (2018). [DOI] [PubMed] [Google Scholar]

- 24.Seo S., Jo S.-H., Kim S., Shim J., Oh S., Kim J.-H., Heo K., Choi J.-W., Choi C., Oh S., Kuzum D., Wong H.-S. P., Park J.-H., Artificial optic-neural synapse for colored and color-mixed pattern recognition. Nat. Commun. 9, 5106 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Choi S., Tan S. H., Li Z., Kim Y., Choi C., Chen P.-Y., Yeon H., Yu S., Kim J., SiGe epitaxial memory for neuromorphic computing with reproducible high performance based on engineered dislocations. Nat. Mater. 17, 335–340 (2018). [DOI] [PubMed] [Google Scholar]

- 26. Wang C.-Y., Wang C., Meng F., Wang P., Wang S., Liang S.-J., Miao F., 2D layered materials for memristive and neuromorphic applications. Adv. Electron. Mater. 6, 1901107 (2019).

- 27. Fuller E. J., Keene S. T., Melianas A., Wang Z., Agarwal S., Li Y., Tuchman Y., James C. D., Marinella M. J., Yang J. J., Salleo A., Talin A. A., Parallel programming of an ionic floating-gate memory array for scalable neuromorphic computing. Science 364, 570–574 (2019).

- 28. Masland R. H., The fundamental plan of the retina. Nat. Neurosci. 4, 877–886 (2001).

- 29. Euler T., Haverkamp S., Schubert T., Baden T., Retinal bipolar cells: Elementary building blocks of vision. Nat. Rev. Neurosci. 15, 507–519 (2014).

- 30. Wang Y., Liu E., Gao A., Cao T., Long M., Pan C., Zhang L., Zeng J., Wang C., Hu W., Liang S.-J., Miao F., Negative photoconductance in van der Waals heterostructure-based floating gate phototransistor. ACS Nano 12, 9513–9520 (2018).

- 31. Miao J., Song B., Li Q., Cai L., Zhang S., Hu W., Dong L., Wang C., Photothermal effect induced negative photoconductivity and high responsivity in flexible black phosphorus transistors. ACS Nano 11, 6048–6056 (2017).

- 32. Taniguchi T., Watanabe K., Synthesis of high-purity boron nitride single crystals under high pressure by using Ba–BN solvent. J. Cryst. Growth 303, 525–529 (2007).

- 33. Watanabe K., Taniguchi T., Kanda H., Direct-bandgap properties and evidence for ultraviolet lasing of hexagonal boron nitride single crystal. Nat. Mater. 3, 404–409 (2004).

- 34. Remes Z., Nesladek M., Haenen K., Watanabe K., Taniguchi T., The optical absorption and photoconductivity spectra of hexagonal boron nitride single crystals. Phys. Status Solidi A 202, 2229–2233 (2005).

- 35. Wong D., Velasco J. Jr., Ju L., Lee J., Kahn S., Tsai H.-Z., Germany C., Taniguchi T., Watanabe K., Zettl A., Wang F., Crommie M. F., Characterization and manipulation of individual defects in insulating hexagonal boron nitride using scanning tunnelling microscopy. Nat. Nanotechnol. 10, 949–953 (2015).

- 36. Robson J. G., Frishman L. J., Response linearity and kinetics of the cat retina: The bipolar cell component of the dark-adapted electroretinogram. Vis. Neurosci. 12, 837–850 (1995).

- 37. Gilbert C. D., Wiesel T. N., Receptive field dynamics in adult primary visual cortex. Nature 356, 150–152 (1992).

- 38. Gatys L. A., Ecker A. S., Bethge M., in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (IEEE, 2016), pp. 2414–2423.

- 39. Burr G. W., A role for analogue memory in AI hardware. Nat. Mach. Intell. 1, 10–11 (2019).

- 40. Mennel L., Symonowicz J., Wachter S., Polyushkin D. K., Molina-Mendoza A. J., Mueller T., Ultrafast machine vision with 2D material neural network image sensors. Nature 579, 62–66 (2020).

Supplementary Materials

Supplementary material for this article is available at http://advances.sciencemag.org/cgi/content/full/6/26/eaba6173/DC1