Abstract

Intelligent systems (i.e., artificial intelligence), particularly deep learning, are machines able to mimic the cognitive functions of humans to perform tasks of problem-solving and learning. This field deals with computational models that can think and act intelligently, like the human brain, and construct algorithms that can learn from data to make predictions. Artificial intelligence is becoming important in radiology due to its ability to detect abnormalities in radiographic images that are unnoticed by the naked human eye. These systems have reduced radiologists' workload by rapidly recording and presenting data, and thereby monitoring the treatment response with a reduced risk of cognitive bias. Intelligent systems have an important role to play and could be used by dentists as an adjunct to other imaging modalities in making appropriate diagnoses and treatment plans. In the field of maxillofacial radiology, these systems have shown promise for the interpretation of complex images, accurate localization of landmarks, characterization of bone architecture, estimation of oral cancer risk, and the assessment of metastatic lymph nodes, periapical pathologies, and maxillary sinus pathologies. This review discusses the clinical applications and scope of intelligent systems such as machine learning, artificial intelligence, and deep learning programs in maxillofacial imaging.

Keywords: Artificial Intelligence, Deep Learning, Machine Learning, Radiology

Introduction

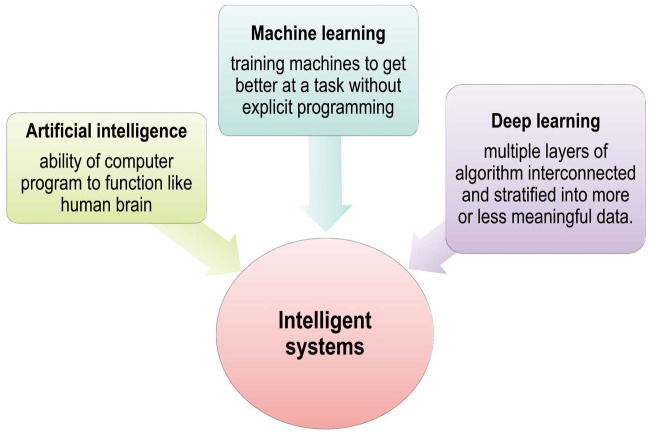

Radiologists are at the forefront of interpreting 2-dimensional (2D) and 3-dimensional (3D) volumetric images for effective patient management. Since the advent of radiology as a field at the end of the 19th century, image interpretation has usually been subjective.1 The most commonly used imaging modalities to detect and diagnose dental conditions include plain radiography, computed tomography (CT), magnetic resonance imaging (MRI), positron emission tomography, and ultrasonography, but the broader use of these modalities to analyze large volumes of data increases radiologists' workload. In the modern era, new technologies - namely, artificial intelligence, machine learning, and deep learning - have revolutionized ways of working with data. Although all these terms are related to each other and often used interchangeably, they are not synonyms (Fig. 1).2

Fig. 1. Pictorial representation of intelligent systems (i.e., artificial intelligence, deep learning and machine learning).

The existing literature reveals that intelligent systems have served as useful adjunctive tools for radiologists in the analysis of large quantities of diagnostic images with improved speed and accuracy.3,4,5,6,7,8,9,10 They have the ability to detect abnormalities within images that may go unnoticed by the naked eye or to solve problems not resolved by human cognition. This review article provides an overview of the general concepts of intelligent systems, such as artificial intelligence, deep learning, and machine learning programs, and the application of these systems in diagnosis and treatment planning for various dental conditions. It provides insights into the associated advantages and disadvantages of these technologies and concludes by discussing future areas of research in this field.

Artificial intelligence

Artificial intelligence is defined as the ability of machines to mimic the cognitive functions of humans (i.e., the ability of a computer program to function like the human brain). It accomplishes tasks such as learning and problem-solving based on computer algorithms.1,2

Machine learning

Machine learning includes several advanced iterative statistical methods used to discover patterns in data; although it is inherently non-linear, it is based on linear algebra data structures. Machine learning refers to the ability of computers or machines to gain human-like intelligence without explicit programming; that is, decisions are mostly data-driven rather than being strategically programmed for a certain task.11 Machine learning tasks depend upon the input signal or feedback given to the learning system.11,12 Machine learning can be utilized to improve prediction performance by dynamically adjusting the output model when data change over time. Furthermore, machine learning may provide quantitative tools that will increase the value of diagnostic imaging, augment image quality with shorter acquisition times, and improve workflow and communication.13

Deep learning

Deep learning is a subset of machine learning comprising multiple layers of algorithms that are interconnected and stratified, so that each layer turns the data into a more or less meaningful representation. These multilayered algorithms form a large artificial neural network. Recently developed deep learning programs comprise convolutional neural networks that utilize self-learning back-propagation algorithms to learn directly from the data via end-to-end processes to make predictions.14 These programs are mainly used for processing large and complex images such as 2D radiographs or 3D CT. This differentiates deep learning from conventional machine learning, which builds algorithms directed by the data.15

Artificial neural networks

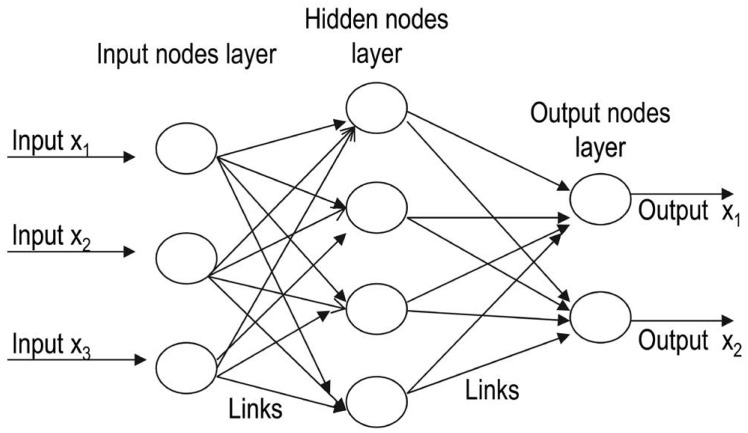

Artificial neural networks are computational models that function like the human brain, which consists of nerve cells arranged to form a network of nerves. The dendrites of these nerves receive an input signal that is transmitted to the cell body of the nerve cell, known as the soma, where the signal is processed and passed on to the axon. The axon carries the signal from the soma to the synapse, where it is then transmitted to the dendrite of the next neuron. An artificial neural network is analogous to the human brain, but with networks that are created on a computer.14 These networks consist of 3 layers (input, hidden, and output) interconnected to each other by links. The input layer receives the input and forwards it to the other layers without processing. The next layer processes the input and then forwards it to subsequent layers for further processing. Thus, a link propagates activation from one layer to another, and the layer that receives the activation is known as the perceptron.1,2 Artificial neurons in the hidden layer simulate biological neurons in the human brain. This layer is not directly visible from outside the network, as it is hidden between the input and output layers. The network performs computation on a set of weighted inputs to produce an output by applying an activation function.14 The network can be feed-forward (i.e., composed of multiple layers with connections in one direction) or recurrent, where the output is fed back into its input (Fig. 2).1

Fig. 2. Schematic representation of artificial neural networks, showing the interconnection of the various layers.
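The weighted-input and activation-function computation described above can be sketched in a few lines of Python. This is only an illustrative sketch: the sigmoid activation, the layer sizes, and every weight and bias value are assumptions chosen for the example, not values taken from the reviewed literature.

```python
import math

def sigmoid(x):
    """Logistic activation: maps any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of its inputs, then the activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)

def forward(inputs, hidden_layer, output_layer):
    """Feed-forward pass: input -> hidden -> output, in one direction only."""
    hidden = [neuron(inputs, w, b) for w, b in hidden_layer]
    return [neuron(hidden, w, b) for w, b in output_layer]

# Hypothetical (weights, bias) pairs, chosen only for illustration.
hidden_layer = [([0.5, -0.4], 0.1), ([-0.3, 0.8], 0.0)]
output_layer = [([1.2, -0.7], -0.2)]

prediction = forward([1.0, 0.0], hidden_layer, output_layer)
print(prediction)  # a single value between 0 and 1
```

A recurrent network would differ in that part of the output is fed back as input on the next step; that case is not shown here.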

Convolutional neural networks

Convolutional neural networks are deep-learning networks that consist of multiple building blocks stacked together, namely convolution layers, pooling layers, and fully connected layers. A convolutional neural network learns directly from the data, recognizes patterns within images, and classifies the final output into classes based on its task. The first 2 layers (i.e., the convolution and pooling layers) deal with feature extraction, whereas fully connected layers provide the final output and the last activation layer classifies extracted features into categories. A set of learnable parameters (known as kernels) is applied at each image position. These kernels are optimized by the training process in the convolution layer so as to minimize the mismatch between the output and the ground truth values by applying an optimization algorithm known as back propagation.14,15 Hyper-parameters, such as the arbitrary number and size of kernels (e.g., 3×3, 5×5, or 7×7), are set before the start of the training process. The pooling layer performs down-sampling operations that reduce the dimensions of the feature maps and thereby the number of subsequent learnable parameters. Lastly, the down-sampled features are mapped by the fully connected layers to the final output on which the activation function is applied to classify it into categories; for example, a tumor could be categorized as benign or malignant by a convolutional neural network.15
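As a rough sketch of the feature-extraction steps described above, the following Python code applies a single 3×3 kernel at each image position and then down-samples the result with max pooling. The toy image, the hand-written kernel, and the ReLU activation are assumptions made for illustration; in a real network the kernel values are learned during training rather than fixed by hand.

```python
def convolve2d(image, kernel):
    """Apply the kernel at every valid image position (no padding, stride 1).

    As in most deep learning libraries, the kernel is not flipped, so this is
    strictly a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Activation: keep positive responses, set negative ones to zero."""
    return [[max(0, v) for v in row] for row in feature_map]

def max_pool(feature_map, size=2):
    """Down-sample by keeping the maximum of each non-overlapping window."""
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Toy 6x6 "image": dark left half (0) and bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]

# Hypothetical 3x3 kernel that responds to dark-to-bright vertical edges.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

features = relu(convolve2d(image, kernel))  # 4x4 feature map
pooled = max_pool(features)                 # 2x2 map after pooling
print(features)
print(pooled)
```

The feature map responds only where the kernel straddles the boundary between the dark and bright halves, and pooling halves each spatial dimension while retaining that response.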

Training of neural networks

These artificial networks are trained on one dataset and tested on a different dataset (the validation dataset) before implementation. During training, each activation is weighted by a numeric value that defines the strength of the connection, and these weights are adjusted according to the output. Multiple rounds of training and testing against different datasets are done to improve diagnostic performance.1,2 Data augmentation refers to the process of modifying the training data so that the network will not receive identical input upon further iterations of the training, with the goal of reducing the number of errors. Convolutional neural networks usually require more rounds of training to achieve proper diagnostic accuracy.1
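The training loop can be illustrated with a deliberately simple stand-in. The sketch below trains a single threshold unit with the classic perceptron update rule (not back-propagation), on hypothetical 2-feature samples, and evaluates it on a separate validation set. The augmentation step, which adds a mirrored copy of each sample, is likewise only a toy analogue of image flipping; all data values are invented for the example.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if the weighted sum is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

def train(samples, epochs=100, lr=0.1):
    """Perceptron rule: adjust each weight in proportion to the output error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def augment(samples):
    """Toy augmentation: add a mirrored copy (features swapped) of each sample."""
    return samples + [((x[1], x[0]), y) for x, y in samples]

def accuracy(weights, bias, samples):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(predict(weights, bias, x) == y for x, y in samples)
    return correct / len(samples)

# Hypothetical labelled samples (label 1 when both features are large).
training_set = [((0.0, 0.2), 0), ((0.1, 0.4), 0),
                ((0.9, 0.8), 1), ((0.7, 1.0), 1)]
validation_set = [((0.2, 0.1), 0), ((0.3, 0.3), 0),
                  ((1.0, 0.9), 1), ((0.8, 0.8), 1)]

augmented = augment(training_set)
weights, bias = train(augmented)
print("training accuracy:", accuracy(weights, bias, augmented))
print("validation accuracy:", accuracy(weights, bias, validation_set))
```

Keeping the validation samples out of the training loop is what allows the second figure to indicate how the model might behave on unseen data.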

Intelligent system approaches

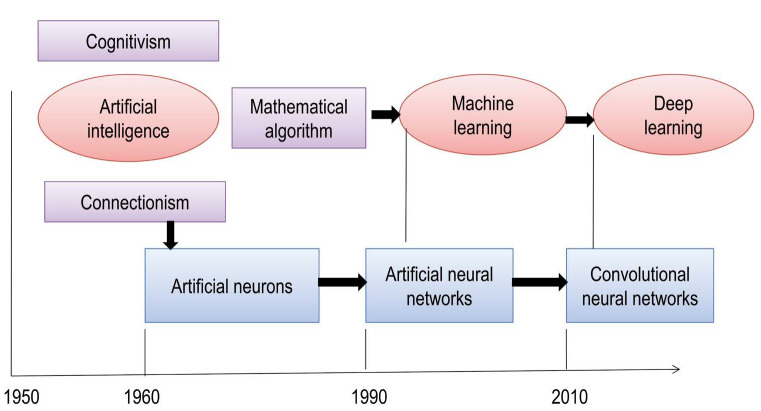

Between the mid-1950s and the early 1960s, the following 3 groups of intelligent system approaches were proposed: 1) brain simulation, 2) symbolic and sub-symbolic, and 3) statistical approaches. Symbolic approaches consist of cognitive simulation, logic-based intelligence, and knowledge-based intelligence. Computational theories form the basis of sub-symbolic approaches. Cognitivism refers to the development of rule-based programs known as expert systems. These systems are simulations of human intelligence on a machine (i.e., machines are trained to solve complex problems). Cognitive systems are probabilistic; they augment human intelligence by addressing complex problems based on unstructured data, creating a hypothesis, and making recommendations or decisions.2,11

A more recent approach is connectionism, which involves naïve programs that are guided by data. Connectionist models are interconnected neural networks analogous to neurons and synapses in the human brain. Connectionist systems are gaining popularity due to their capacity to compute large volumes of data, and neural networks have the ability to learn more quickly than expert systems. New connectionist models used for deep learning comprise multiple layers of algorithms that extract patterns from distorted, unstructured data (Fig. 3).11

Fig. 3. Outline of the concepts and theories of intelligent systems, depicting the various approaches to intelligent systems from the mid-1950s to 2010 and the concepts of cognitivism and connectionism.

Learning programs and algorithms

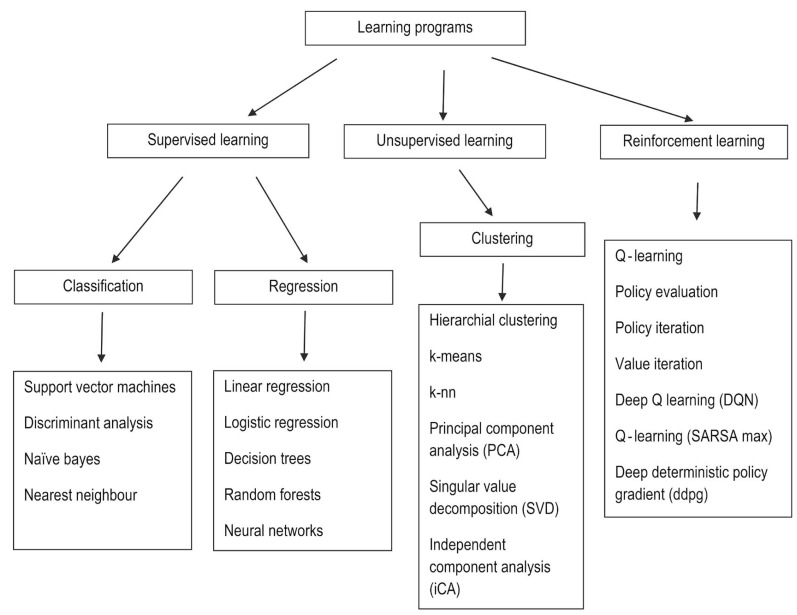

There are 3 types of learning programs: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning refers to performing computations and then adjusting the error to achieve an expected output, with the function inferred from hand-labeled training data that consist of a set of training examples. In other words, these systems learn to detect normal and abnormal characteristics by analyzing hand-labeled images. These programs are of 2 types: classification, in which the output variable is a category such as red or blue, or disease or no disease (i.e., there are 2 possible outputs), and regression, in which the output variable is a real or continuous value (e.g., weight, price, or size).

Unsupervised learning is machine learning that proceeds on its own based on the input pattern. The data given to the learner are unlabeled and are divided into different clusters; therefore, these models are also known as clustering algorithms. The main limitation is that there is no explicit evaluation of the accuracy of the output, which differentiates it from supervised learning. Reinforcement learning, in contrast, is based on output that interacts with the environment to accomplish certain goals, with a reward for the correct output and a penalty for the wrong output (Fig. 4).1,12,16,17

Fig. 4. Types of learning programs and their algorithms.

Unlike supervised learning programs, unsupervised learning utilizes unlabeled input data, from which algorithms infer the structures of interest. The most commonly used algorithms are k-means for clustering problems and the Apriori algorithm for association rule learning problems, in which rules are identified to describe large volumes of data. These algorithms use computational methods to learn information directly from the data. The performance of these algorithms improves as the amount of data for learning increases; that is, the greater the number of images and scans with a clinically proven diagnosis that are entered into the machine, the better the machine performs at identifying a pathology or abnormality within an image.11,12
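To make the clustering idea concrete, here is a minimal k-means sketch in Python. The 2-dimensional points and the starting centroids are hypothetical, and the fixed iteration count is a simplification; practical implementations usually choose starting centroids at random and stop when the assignments no longer change.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))

def mean_point(cluster):
    """Coordinate-wise mean of a non-empty list of points."""
    return tuple(sum(coord) / len(cluster) for coord in zip(*cluster))

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)),
                          key=lambda k: squared_distance(p, centroids[k]))
            clusters[nearest].append(p)
        # An empty cluster keeps its previous centroid.
        centroids = [mean_point(c) if c else centroids[k]
                     for k, c in enumerate(clusters)]
    return centroids, clusters

# Hypothetical unlabeled data forming two visibly separate groups.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5),
          (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]

centroids, clusters = kmeans(points, centroids=[(0.0, 0.0), (10.0, 10.0)])
print(centroids)
print(clusters)
```

No labels are supplied at any point; the grouping emerges from the distances alone, which is also why there is no built-in measure of whether the clusters are clinically meaningful.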

Software learning algorithms are of several types. For example, linear regression establishes relationships between dependent and independent variables. Logistic regression predicts the probability of occurrence of an event. A decision tree algorithm is a type of supervised learning algorithm used for problem-solving and classification. It is a flow chart-like structure, where each internal non-leaf node represents a test on an attribute, each branch represents the outcome of a test, and each leaf or terminal node holds a class label.3,4 In support vector machine algorithms, each data item is plotted as a point in n-dimensional space, where n represents the number of features. Then, the algorithm finds a hyperplane that distinctly classifies the data points. The dimension of the hyperplane depends upon the number of input features. If the number of input features is 2, then the hyperplane is just a line. If the number of input features is 3, then the hyperplane becomes a 2D plane, and the geometry of the hyperplane becomes difficult to visualize when the number of features exceeds 3. The k-nearest neighbors approach is a non-parametric supervised learning method that classifies a data point according to the classes of the points nearest to it.11
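Of the algorithms listed above, k-nearest neighbors is the shortest to write out. The following sketch classifies a new point by majority vote among its 3 nearest labelled neighbors. The feature values are hypothetical and unitless, and the labels simply reuse the "disease" and "no disease" categories mentioned earlier; `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter

def knn_predict(training, query, k=3):
    """Label a query point by majority vote among its k nearest neighbors."""
    neighbors = sorted(training,
                       key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]

# Hypothetical labelled training points (2 unitless features each).
training = [
    ((1.0, 1.0), "no disease"),
    ((1.2, 0.8), "no disease"),
    ((0.9, 1.3), "no disease"),
    ((3.0, 3.2), "disease"),
    ((3.3, 2.9), "disease"),
    ((2.8, 3.1), "disease"),
]

print(knn_predict(training, (3.0, 3.0)))
print(knn_predict(training, (1.0, 1.1)))
```

The method is called non-parametric because nothing is fitted in advance: the training points themselves serve as the model, and all computation happens at query time.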

Fuzzy logic

Fuzzy logic is a method of reasoning that imitates human reasoning and produces an output that can be partially true or partially false, instead of the completely true or completely false output that corresponds to a strict “yes” or “no” decision. It assumes that every output falls somewhere in between, with varying shades of gray instead of a completely black-and-white approach to decision-making.18 Fuzzy learning could be considered as a subset of artificial intelligence and has been applied in the medical field to detect diabetic neuropathy and early retinopathy, to determine drug dosage, to calculate volumes of brain tissue on MRI, to visualize nerve fibers in the brain, to characterize ultrasound images of the breast and CT scan images of liver lesions, and to differentiate benign skin lesions from malignant melanoma. According to fuzzy set theory, most information is fuzzy, and values between completely true and completely false are determined (e.g., warm and cool lie somewhere in between hot and cold). As such, fuzzy learning essentially provides crisp logical output to the clinician, who uses his or her knowledge and mental ability to diagnose a lesion.19
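The idea of partial truth can be sketched with membership functions, which assign each input a degree of membership between 0 and 1 in every fuzzy set. The triangular shape and the temperature ranges below are assumptions picked for illustration, following the hot and cold example in the text; they are not taken from any cited system.

```python
def triangular(x, left, peak, right):
    """Degree of membership (0 to 1) in a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)

# Hypothetical fuzzy sets over temperature in degrees Celsius,
# each given as (left, peak, right).
FUZZY_SETS = {
    "cold": (-10.0, 0.0, 15.0),
    "warm": (10.0, 20.0, 30.0),
    "hot": (25.0, 40.0, 55.0),
}

def memberships(temperature):
    """Return the degree to which a temperature belongs to each fuzzy set."""
    return {name: triangular(temperature, *shape)
            for name, shape in FUZZY_SETS.items()}

degrees = memberships(12.0)
print(degrees)
```

At 12 degrees the input belongs partly to "cold" and partly to "warm" at the same time, which is exactly the in-between state that a strict true-or-false rule cannot express.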

Clinical applications of intelligent systems

The term “use case” refers to the different scenarios that form the basis of the clinical applications of intelligent systems in radiology. Broadly speaking, 3 use cases can be distinguished: 1) triage scenarios involve determining the probability of disease (positive or negative); 2) replacement scenarios imply the replacement of radiologists by intelligent software due to the more accurate, rapid, and reproducible output of the software; and 3) add-on scenarios involve the use of neural networks, but with the imaging findings ultimately dependent on the radiologist's interpretation.

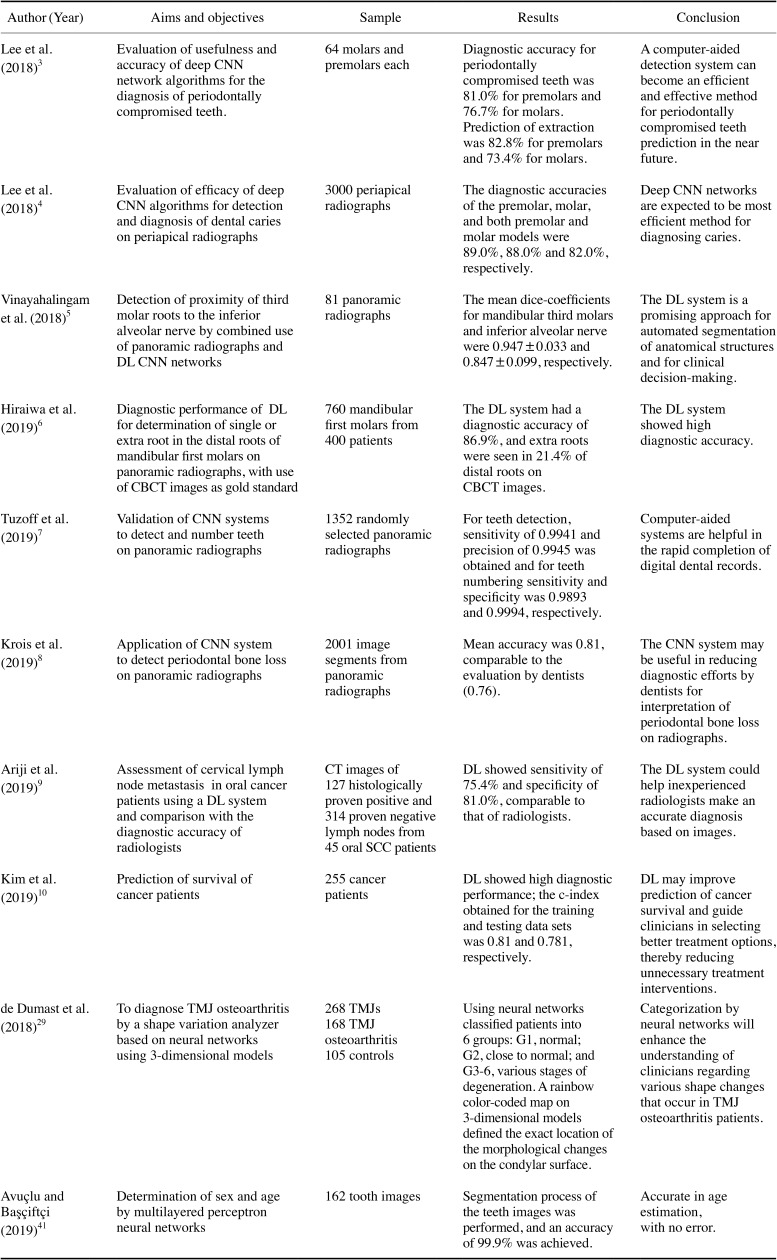

Apart from the above scenarios, clinical applicability can be divided into 3 types: 1) detection, for identification of an abnormality within an image that may be unnoticed by the naked human eye; 2) automated segmentation, which provides information on the functional performance of tissues, boundaries, and the extent of the disease, with the goal of reducing the radiologist's workload by reducing the need to carry out segmentation manually; and 3) disease classification, in which an abnormality within an image is classified into a category (e.g., high or low risk, or a good or bad prognosis).20 Intelligent software assists dentists in clinical diagnosis and treatment planning, as it records and presents data rapidly and creates a virtual database for each patient.1,2 Deep learning convolutional networks are gaining popularity in the medical field, but according to our literature search, very few studies have been conducted in dentistry to validate the performance of this emerging approach. Table 1 summarizes the clinical applications of intelligent systems based on the existing literature in the diagnosis and interpretation of maxillofacial diseases and in various dental specialties. The various clinical applications of intelligent systems are discussed below.

Table 1. Summary of the clinical applications of intelligent systems in the existing literature in the diagnosis and interpretation of maxillofacial diseases and in various dental specialties.

AI: artificial intelligence, CNN: convolutional neural network, ANN: artificial neural network, DL: deep learning, CBCT: cone-beam computed tomography, CT: computed tomography, SCC: squamous cell carcinoma, TMJ: temporomandibular joint

Computer-aided diagnosis

Computer-aided diagnosis (CAD) represents the earliest clinical application of artificial intelligence in the medical field and in oral radiology. Doi15 carried out foundational research related to CAD systems, as reported in articles between 1963 and 1973, and found them to be very useful for detecting lung, breast, and colon cancer. A CAD system makes a diagnosis for which it has been trained specifically, and the performance of CAD algorithms can only be improved by feeding them additional datasets. The performance of these systems does not have to be better than, or even comparable to, that of clinicians; instead, the computer output is employed as a second opinion to assist clinicians in detecting pathologies. In some clinical cases about which a radiologist is confident, he or she may agree or disagree with the computer output, but in cases with less certainty, the use of CAD can improve the final diagnosis. The main limitation of CAD is the high rate of false-positive detections, and some CAD systems may also miss certain lesions. In the modern era, early CAD diagnostic systems are being replaced by artificial intelligence approaches characterized by autonomous learning, which detect and solve problems based on computer algorithms similar to the human brain. These systems have reduced the burden on radiologists by eliminating the need to perform segmentation manually and also provide vital information on the functional performance of vital organs and tissues, as well as the extent of disease.21

Dentomaxillofacial imaging

Location of radiographic landmarks

Convolutional neural networks use automated segmentation to detect and segment certain patterns in large-volume datasets. The U-net architecture is the most common convolutional neural network segmentation method used in medical specialties to differentiate osseous and soft tissue structures. The automated interpretation of radiographs enables the accurate localization of landmarks and could be used with CT and MRI to identify abnormalities in images that may go unnoticed by visual interpretation.1,2 Convolutional neural networks allow precise edge detection, and edge-based, region-based, and knowledge-based algorithms are used to locate cephalometric landmarks. These networks can locate the landmarks in partially hidden, low-contrast, overlapping areas that are not visible to the naked human eye. Convolutional neural network algorithms enable pixel-by-pixel elaboration, and knowledge-based algorithms help to locate new anatomic landmarks in a more robust and precise way.22 On panoramic images, neural networks have been used to detect and number the teeth with greater precision according to the Fédération Dentaire Internationale (World Dental Federation) 2-digit notation system.22
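For readers unfamiliar with the 2-digit notation mentioned above, the numbering itself is simple to express in code: the first digit identifies the quadrant and the second gives the tooth position counted from the midline. The helper below is a hypothetical illustration of that numbering rule only; it says nothing about how the cited networks detect teeth or assign them to quadrants.

```python
def fdi_number(quadrant, position, primary=False):
    """Return the FDI 2-digit code for a tooth.

    quadrant: 1 = upper right, 2 = upper left, 3 = lower left, 4 = lower right
              (from the patient's perspective).
    position: tooth position counted from the midline, 1-8 for permanent
              teeth and 1-5 for primary teeth.
    primary:  primary (deciduous) teeth use quadrant digits 5-8 instead of 1-4.
    """
    max_position = 5 if primary else 8
    if quadrant not in (1, 2, 3, 4):
        raise ValueError("quadrant must be 1, 2, 3, or 4")
    if not 1 <= position <= max_position:
        raise ValueError(f"position must be between 1 and {max_position}")
    first_digit = quadrant + 4 if primary else quadrant
    return first_digit * 10 + position

print(fdi_number(1, 1))                # permanent upper right central incisor
print(fdi_number(3, 8))                # permanent lower left third molar
print(fdi_number(1, 5, primary=True))  # primary upper right second molar
```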

Periapical pathologies

Periapical granulomas, abscesses, and cysts are the most common periapical lesions encountered in daily clinical practice. Most of these lesions are evident on radiographs, but some may go unnoticed as images may be noisy or have low contrast.23 Intelligent systems can accurately locate tooth areas prone to caries and complex periapical pathoses, use automated segmentation to define the boundaries of lesions in a more precise way, and enable their differentiation. In the future, these systems may benefit implant dentistry by enabling the early detection of peri-implantitis with appropriate interventions.23,24

Maxillary sinus pathologies

Maxillary sinusitis is an inflammation of the mucosal lining characterized radiographically by mucosal thickening >4 mm, air-fluid levels, and opacification. The conventional Waters and paranasal sinus views are used to screen suspected patients for maxillary sinusitis, and confirmation is made using a CT scan, which is the preferred imaging modality for evaluating air-fluid levels and sinus opacifications. These conventional radiographs pose diagnostic difficulties due to overlapping of the maxillary sinus by facial bony structures, which may yield false-negative results. Deep learning programs increase the diagnostic ability of conventional radiographic views, thereby helping to avoid unnecessary referrals of patients for CT examinations, which have high radiation doses. Murata et al.25 found an accuracy of 87.5% for deep learning systems in the diagnosis of maxillary sinusitis on panoramic radiographs. In another study, Kim et al.26 found a diagnostic performance of 88%–93% for deep learning systems, which was superior or comparable to that of radiologists, and a diagnostic performance of 75%–89% in the detection of maxillary sinusitis on Waters' radiographs. They emphasized that deep learning systems might provide diagnostic support to inexperienced radiologists in the detection of maxillary sinusitis.

Periodontal disease and bone density assessment

Deep analysis tools are assisting periodontists in the early detection of alveolar bone loss, bone density changes, and areas of furcation involvement. Krois et al.27 found that a convolutional neural network showed higher diagnostic performance, with an accuracy of 81%, than individual examiners, who showed an accuracy of 76%, in the radiographic detection of periodontal bone loss, although the difference did not reach the conventional 0.05 significance level (P=0.067). They suggested that automated systems can be used as reliable and accurate adjunctive tools for detecting periodontal bone loss on panoramic radiographs. The application of neural networks aims to reduce the diagnostic effort required of radiologists by allowing interpretation of periodontal structures with greater accuracy in less time. These neural networks may also predict the early risk of osteoporosis by evaluating bone architecture and bone mineral density (BMD), thereby avoiding the need for further BMD testing involving bone densitometry.1,3,4

Oral oncology

Intelligent systems have been used for the early detection of head and neck cancers and cervical lymph node metastasis, which may affect the treatment choice and prognosis of head and neck cancer patients. CT and MRI are the imaging modalities most commonly used to identify cervical lymph node metastasis and sentinel lymph nodes. Recently, Ariji et al.9 found that the use of a convolutional neural network enhanced the CT-based diagnosis of lymph node metastasis. The performance of a convolutional neural network image classification system resulted in an accuracy of 78.2%, a sensitivity of 75.4%, and a specificity of 81.0%, comparable to experienced radiologists.
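The accuracy, sensitivity, and specificity figures quoted in this and the following sections are all derived from the same four counts of a confusion matrix. The sketch below shows the standard definitions; the counts passed in are hypothetical round numbers chosen so that the arithmetic is easy to follow, and they are not the data of any cited study.

```python
def diagnostic_metrics(tp, fp, tn, fn):
    """Compute standard diagnostic performance measures.

    tp: diseased cases correctly flagged (true positives)
    fp: healthy cases wrongly flagged (false positives)
    tn: healthy cases correctly cleared (true negatives)
    fn: diseased cases missed (false negatives)
    """
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,    # all correct calls / all cases
        "sensitivity": tp / (tp + fn),    # detected / all diseased cases
        "specificity": tn / (tn + fp),    # cleared / all healthy cases
    }

# Hypothetical counts for illustration only.
metrics = diagnostic_metrics(tp=40, fp=10, tn=40, fn=10)
print(metrics)
```

Because sensitivity and specificity are computed over different subsets of cases, a system can score well on one and poorly on the other, which is why studies typically report all three values.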

Kim et al.10 used a deep learning program to predict the survival of oral cancer patients and found that the diagnostic performance of the program was superior to that of the classical statistical model. Proper fit was found between the accuracy of the training and testing sets, which were 81% and 78.1%, respectively. Based on their analysis, they suggested that deep learning survival predictions might guide clinicians in choosing the best treatment option for oral cancer patients, thereby preventing unnecessary treatment interventions. These systems also enable the clinician to classify oral lesions as benign, potentially malignant, or malignant for early treatment interventions.9,10

Temporomandibular joint osteoarthritis

Osteoarthritis, which is the most common degenerative disease affecting the temporomandibular joint (TMJ), is characterized by destruction of the articular cartilage and subchondral bone resorption.28 No method has been developed to quantify morphological changes in the early stages of the disease. de Dumast et al.29 described a non-invasive technique, referred to as the shape variation analyzer, that uses neural networks to classify morphological variations of 3D models of the mandibular condyle into 7 different categories (G1, normal; G2, close to normal; and G3–6, various stages of degeneration). A rainbow color-coded map on 3D models defined the exact location of the morphological changes on the condylar surface. Categorization by neural networks will enhance the understanding of clinicians regarding the shape changes that occur in patients with TMJ osteoarthritis.

Headache

Primary headaches, such as migraine and tension headaches, have a significant impact on the quality of life of individuals in the working population. Primary headaches have no associated underlying organic cause, whereas secondary headaches are usually the symptoms of an underlying disease process. Physicians diagnose patients with chronic headache symptoms on the basis of a clinical examination and imaging modalities such as MRI, and usually prefer medical management as an appropriate treatment intervention. Nonetheless, some patients suffer delays in diagnosis, undergo multiple consultations, and complain of persisting headache symptoms; therefore, it is essential to rule out certain underlying causes in order to select the proper treatment.30,31 For a precise diagnosis of headache, Vandewiele et al.30 and Krawczyk et al.31 developed an automated 3-component system for classifying headache disorders by a decision tree algorithm. They found that automated techniques were highly accurate (97.85%) and reduced classification errors (P<0.05). These systems support trigger management through the automated detection of possible triggers, allowing patients to adjust their lifestyle to avoid triggers and prevent headache attacks.

Researchers have recently investigated the compression of vascular structures by various anatomical structures, such as elongation or medial/lateral deviation of the styloid process and the ponticulus posticus, which results in a decrease in cerebral blood flow and ultimately leads to cervicogenic headache. Computational software tools have been found to be useful for elucidating vertebrobasilar insufficiency as a root cause of cervicogenic headache, which is still a focus of ongoing research.32

Sjögren syndrome

Sjögren syndrome is a systemic autoimmune disease that mainly affects the salivary and lacrimal glands, resulting in severe dryness of the mouth and eyes. Recently, software algorithms have emerged as a promising approach for the analysis of big datasets obtained from large groups of patients affected by systemic autoimmune diseases.33 Kise et al.33 utilized a deep learning system to detect Sjögren syndrome on CT and found accuracy, sensitivity, and specificity of 96.0%, 100%, and 92.0%, respectively. The corresponding values for inexperienced radiologists (83.5%, 77.9%, and 89.2%) indicate that the diagnostic performance of the deep learning system was better than that of inexperienced radiologists. The results of their study imply that deep learning systems could be used as diagnostic support for the interpretation of CT images of patients with Sjögren syndrome.

Miscellaneous applications

In endodontic practice, artificial intelligence software has been found to be accurate in establishing working length by determining the exact location of the apical foramen and detecting vertical root fractures on cone-beam computed tomography (CBCT) images.24 Devito et al.23 found that neural networks improved the diagnosis of proximal caries by 39.4% in comparison to bitewing radiography. Artificial intelligence algorithms are helping prosthodontists to improve the consistency and esthetics of the crown, providing a more realistic esthetic appearance in contrast to the standard template procedure. Fabrication of a prosthesis is carried out by computer-aided design and manufacturing software technologies, such as subtractive milling and additive manufacturing, which reduce the chance of errors in the final prosthesis.34,35

Intelligent systems are assisting orthodontists in determining the necessity of tooth extractions, monitoring tooth movements, staging tooth development, and marking landmarks on cephalograms; as such, these systems help clinicians make digital impressions that are more accurate and time-saving by eliminating the need for lengthy laboratory procedures.

Intelligent systems have revolutionized the field of oral and maxillofacial surgery by the introduction of image-guided surgery. Preoperative CT and MRI images are registered with CBCT images for intraoperative imaging due to the reduced radiation exposure and high resolution of CBCT.36 Image-guided surgery is now performed at many large hospitals (e.g., for implant placement), enabling these procedures to be performed more precisely than was previously possible. Surgical removal of lower third molars is challenging due to the close proximity of the mandibular third molar (M3) and inferior alveolar nerve (IAN). These procedures can result in damage to the IAN, causing neurosensory impairment in the chin and lower lip. To address this issue, Vinayahalingam et al.5 performed automated segmentation on panoramic radiographs to detect the proximity of M3 in relation to the IAN prior to surgical removal of M3 and suggested that it was an encouraging approach to the segmentation of anatomical structures. In the future, these systems will also have tremendous applications in orthognathic surgery due to the remarkable power of image recognition for various dentofacial abnormalities.37

Forensic applications

Sex and age determination from skeletal remains is an important component of forensic investigations to identify victims at crime sites. The forensic literature has shown that teeth are a meaningful tool in determining people's identity, as they are highly resistant to decay and remain unaffected even after the decomposition of soft tissue and skeletal structures.38 Bewes et al.39 found that neural networks showed a 95% accuracy in distinguishing sex in 900 anthropological skulls reconstructed from CT scans. Intelligent systems could also improve the accuracy of age estimation methods, although very few studies have yet investigated the application of neural networks for age determination. Gross et al.40 trained neural networks to calculate skeletal age from 521 hand-wrist radiographs and found that the age calculated by neural networks was accurate (i.e., close to the chronological age) in 243 (47%) cases, whereas Avuçlu and Başçiftçi41 found a high success rate of 99.9% with multilayered perceptron neural networks. These studies all suggested that neural networks can serve as a reliable method for age and sex determination, but that further research should be done to establish their accuracy.

Three-dimensional printing

Three-dimensional printing, which was first introduced in the 1980s, is a manufacturing process in which objects are fabricated layer by layer; that is, physical models are generated from digital layouts. This process is also known as rapid prototyping or additive manufacturing.

Intelligent systems are currently being applied to 3D printing and additive manufacturing to improve the prefabrication stage. These tools help to optimize lattices in the CAD file, evaluate the most efficient printing path, and detect faults with the help of computer vision.42 A major problem in additive manufacturing is overhang, in which material is deposited over empty space, creating problems for the subsequent layers. Artificial intelligence software predicts possible failure of the printing process and resolves the overhang problem by generating additional support layers to fill any gaps between layers. Intelligent algorithms can find a solution and make a decision when humans are not able to react quickly enough, enabling 3D printers to apply compensation strategies that resolve quality issues.42
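The overhang problem can be illustrated with a simple geometric check on a voxelized model. This is a rule-based sketch and not a learned model or any vendor's algorithm; the voxel-grid representation, the function names, and the use of the value 2 to mark support material are assumptions. Real slicing software typically uses an overhang-angle threshold and does not require support under every voxel.

```python
def find_unsupported(layers):
    """Return (z, row, col) for each filled voxel with nothing directly beneath it.

    `layers` is a list of 2D grids ordered from the build plate upward;
    truthy cells hold material. Layer 0 rests on the build plate.
    """
    unsupported = []
    for z in range(1, len(layers)):
        for r, row in enumerate(layers[z]):
            for c, filled in enumerate(row):
                if filled and not layers[z - 1][r][c]:
                    unsupported.append((z, r, c))
    return unsupported

def add_supports(layers):
    """Return a copy in which empty cells below any filled voxel are marked 2 (support)."""
    out = [[list(row) for row in layer] for layer in layers]
    # Work from the top layer down so that supports extend to the build plate.
    for z in range(len(out) - 1, 0, -1):
        for r, row in enumerate(out[z]):
            for c, v in enumerate(row):
                if v and not out[z - 1][r][c]:
                    out[z - 1][r][c] = 2
    return out

# An inverted-L shape: a one-voxel column topped by a three-voxel bar.
model = [
    [[1, 0, 0]],  # layer 0, on the build plate
    [[1, 0, 0]],  # layer 1
    [[1, 1, 1]],  # layer 2, overhanging on the right
]
overhangs = find_unsupported(model)
supported = add_supports(model)
```

Here `overhangs` lists the two voxels of the top bar that sit above empty space, and `supported` contains support columns beneath them.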

Advantages and limitations

Intelligent systems have reduced radiologists' workload by enabling automated segmentation, detecting minute abnormalities within an image that may go unnoticed by the human eye, providing information on the functional performance of tissues and organs and on disease extent, facilitating early screening for cancers, identifying patients at risk of cancer, and reducing errors due to cognitive bias. Despite these advantages, the question remains whether this technology will replace radiologists in the future. Intelligent systems have reduced radiologists' workload, but cannot replace human intelligence.1 These systems require a very large knowledge database, and when they are applied to images from other contexts, images may be misinterpreted, yielding false-positive or false-negative results. Moreover, radiologists should be trained in computational algorithms, data science, biometrics, and genomics to improve their use of this technology. A search of the recent literature regarding the use of intelligent systems in dentomaxillofacial radiology revealed only in vitro studies, studies on skulls, and studies on anatomic models. No studies have been performed in real clinical situations to verify the true applicability of these systems. In the near future, more clinical trials of intelligent systems should be funded by governments to facilitate the testing and implementation of this technology in clinical practice.11,17

Future prospects

Radiomics

Radiomics is an emerging translational field of research that aims to extract high-dimensional features from radiographic images using data characterization algorithms. The radiomic process can be divided into distinct steps with definable inputs and outputs: image acquisition and reconstruction, image segmentation, feature extraction and qualification, analysis, and model building. These algorithms have been found to extract approximately 400 features from CT and MRI images of tumors, including shape, intensity, and surface texture, some of which may go unnoticed by the naked human eye. Radiomics could become a useful tool for oncologists in predicting the metastatic potential of tumors and the expression of oncogenes, as well as in evaluating treatment response.2,43
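The feature extraction step can be sketched on a toy image as follows. This is an illustrative example under stated assumptions, not a radiomics toolkit: the function name, the five features chosen, and the sample image and mask are hypothetical, and a real pipeline would compute hundreds of standardized features from a clinically segmented region.

```python
from statistics import mean, pstdev

def radiomic_features(image, mask):
    """Compute a few toy shape, intensity, and texture features.

    `image` is a 2D grid of intensities and `mask` a same-sized grid whose
    truthy cells mark the segmented region of interest.
    """
    rows, cols = len(image), len(image[0])
    roi = [(r, c) for r in range(rows) for c in range(cols) if mask[r][c]]
    if not roi:
        raise ValueError("mask is empty")
    values = [image[r][c] for r, c in roi]
    inside = set(roi)
    # Shape: perimeter as the number of pixel edges that face outside the region.
    perimeter = sum(
        (r + dr, c + dc) not in inside
        for r, c in roi
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1))
    )
    # Texture: mean absolute difference between horizontally adjacent region pixels.
    diffs = [abs(image[r][c] - image[r][c + 1]) for r, c in roi if (r, c + 1) in inside]
    return {
        "area_px": len(roi),
        "perimeter_px": perimeter,
        "mean_intensity": mean(values),
        "std_intensity": pstdev(values),
        "horizontal_contrast": mean(diffs) if diffs else 0.0,
    }

# Toy 4x4 image with a 2x2 segmented region in the centre.
image = [[0, 0, 0, 0],
         [0, 10, 20, 0],
         [0, 30, 40, 0],
         [0, 0, 0, 0]]
mask = [[0, 0, 0, 0],
        [0, 1, 1, 0],
        [0, 1, 1, 0],
        [0, 0, 0, 0]]
features = radiomic_features(image, mask)
```

The resulting dictionary is the kind of feature vector that the later analysis and model-building steps would take as input.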

Imaging biobanks

This potential application draws upon computers' capacity to store large amounts of data and images. An imaging biobank is defined by the Organization for Economic Co-operation and Development as a collection of biological material and the associated data and information stored in an organized system for a large population. Data from imaging biobanks could later be used by computational models to perform simulations of disease development and progression.2

Hybrid intelligence

Hybrid intelligence refers to the combination of human and machine intelligence, expanding the human intellect. It is based on the principle that humans can work harmoniously with intelligent machines (i.e., human intelligence working together with artificial intelligence) to solve difficult problems. A newly developed hybrid intelligence image fusion method has been used to fuse multimodal images for better diagnosis and treatment planning. Edges, which are an important feature of an image, are detected and enhanced, and the result is given as input to a simplified pulse-coupled neural network. The proposed hybrid fusion system performed better than computational models.2
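The role of edges in image fusion can be shown with a much simpler stand-in for the method described above. The sketch below does not implement a pulse-coupled neural network; it uses a basic rule that keeps, at each pixel, the value from whichever registered image has the stronger local edge response. The function names and the toy images are assumptions.

```python
def edge_strength(img, r, c):
    """Sum of absolute differences to the right and lower neighbours (0 at the borders)."""
    rows, cols = len(img), len(img[0])
    dx = abs(img[r][c + 1] - img[r][c]) if c + 1 < cols else 0
    dy = abs(img[r + 1][c] - img[r][c]) if r + 1 < rows else 0
    return dx + dy

def fuse(img_a, img_b):
    """Per pixel, keep the value from the image with the stronger edge; average on ties."""
    if len(img_a) != len(img_b) or len(img_a[0]) != len(img_b[0]):
        raise ValueError("images must be registered to the same size")
    fused = []
    for r in range(len(img_a)):
        row = []
        for c in range(len(img_a[0])):
            ea, eb = edge_strength(img_a, r, c), edge_strength(img_b, r, c)
            if ea > eb:
                row.append(img_a[r][c])
            elif eb > ea:
                row.append(img_b[r][c])
            else:
                row.append((img_a[r][c] + img_b[r][c]) / 2)
        fused.append(row)
    return fused

# Image A contains a vertical edge; image B is flat.
img_a = [[0, 10],
         [0, 10]]
img_b = [[5, 5],
         [5, 5]]
fused = fuse(img_a, img_b)
```

In this toy case the left column is taken from image A, where the edge lies, and the right column is the average of both images because neither shows an edge there.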

Conclusion

Intelligent systems play an important role in dentomaxillofacial radiology by supporting diagnostic recommendations. They have proven useful across the fields of dentistry as a way to obtain a quick diagnosis and treatment plan for complex problems that are difficult for humans to resolve unaided. These systems have reduced radiologists' workload and allow radiologists to improve their relevance and value. Therefore, radiologists should educate themselves about computational software in order to implement these new developments in a safe and appropriate way. The clinical applications of intelligent systems continue to expand, and the field is still in its nascent stage, with active ongoing research. These systems have a promising future in both general dentistry and maxillofacial radiology.

Footnotes

Conflicts of Interest: None

References

- 1. Wong SH, Al-Hasani H, Alam Z, Alam A. Artificial intelligence in radiology: how will we be affected? Eur Radiol. 2019;29:141–143. doi: 10.1007/s00330-018-5644-3.

- 2. Hosny A, Parmar C, Quackenbush J, Schwartz LH, Aerts HJ. Artificial intelligence in radiology. Nat Rev Cancer. 2018;18:500–510. doi: 10.1038/s41568-018-0016-5.

- 3. Lee JH, Kim DH, Jeong SN, Choi SH. Diagnosis and prediction of periodontally compromised teeth using a deep learning-based convolutional neural network algorithm. J Periodontal Implant Sci. 2018;48:114–123. doi: 10.5051/jpis.2018.48.2.114.

- 4. Lee JH, Kim DH, Jeong SN, Choi SH. Detection and diagnosis of dental caries using a deep learning-based convolutional neural network algorithm. J Dent. 2018;77:106–111. doi: 10.1016/j.jdent.2018.07.015.

- 5. Vinayahalingam S, Xi T, Bergé S, Maal T, de Jong G. Automated detection of third molars and mandibular nerve by deep learning. Sci Rep. 2019;9:9007. doi: 10.1038/s41598-019-45487-3.

- 6. Hiraiwa T, Ariji Y, Fukuda M, Kise Y, Nakata K, Katsumata A, et al. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac Radiol. 2019;48:20180218. doi: 10.1259/dmfr.20180218.

- 7. Tuzoff DV, Tuzova LN, Bornstein MM, Krasnov AS, Kharchenko MA, Nikolenko SI, et al. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol. 2019;48:20180051. doi: 10.1259/dmfr.20180051.

- 8. Krois J, Ekert T, Meinhold L, Golla T, Kharbot B, Wittemeier A, et al. Deep learning for the radiographic detection of periodontal bone loss. Sci Rep. 2019;9:8495. doi: 10.1038/s41598-019-44839-3.

- 9. Ariji Y, Fukuda M, Kise Y, Nozawa M, Yanashita Y, Fujita H, et al. Contrast-enhanced computed tomography image assessment of cervical lymph node metastasis in patients with oral cancer by using a deep learning system of artificial intelligence. Oral Surg Oral Med Oral Pathol Oral Radiol. 2019;127:458–463. doi: 10.1016/j.oooo.2018.10.002.

- 10. Kim DW, Lee S, Kwon S, Nam W, Cha IH, Kim HJ. Deep learning-based survival prediction of oral cancer patients. Sci Rep. 2019;9:6994. doi: 10.1038/s41598-019-43372-7.

- 11. Das S, Dey A, Pal A, Roy N. Applications of artificial intelligence in machine learning: review and prospect. Int J Comput Appl. 2015;115:31–41.

- 12. Sharma D, Kumar N. A review on machine learning algorithms, tasks and applications. Int J Adv Res Comput Eng Technol. 2017;6:1548–1552.

- 13. Chan S, Siegel EL. Will machine learning end the viability of radiology as a thriving medical specialty? Br J Radiol. 2019;92:20180416. doi: 10.1259/bjr.20180416.

- 14. Hopfield JJ. Neural networks and physical systems with emergent collective computational abilities. Proc Natl Acad Sci U S A. 1982;79:2554–2558. doi: 10.1073/pnas.79.8.2554.

- 15. Doi K. Computer-aided diagnosis in medical imaging: historical review, current status, and future potential. Comput Med Imaging Graph. 2007;31:198–211. doi: 10.1016/j.compmedimag.2007.02.002.

- 16. Dayan P. Unsupervised learning. In: Wilson RA, Keil FC, editors. The MIT encyclopedia of the cognitive sciences. Cambridge, Mass: MIT Press; 1999. pp. 1–7.

- 17. Zhang Z, Sejdić E. Radiological images and machine learning: trends, perspectives, and prospects. Comput Biol Med. 2019;108:354–370. doi: 10.1016/j.compbiomed.2019.02.017.

- 18. Shiraishi J, Li Q, Appelbaum D, Doi K. Computer-aided diagnosis and artificial intelligence in clinical imaging. Semin Nucl Med. 2011;41:449–462. doi: 10.1053/j.semnuclmed.2011.06.004.

- 19. Yager RR. Fuzzy logics and artificial intelligence. Fuzzy Sets Syst. 1997;90:193–198.

- 20. Axer H, Jantzen J, Keyserlingk DG, Berks G. The application of fuzzy-based methods to central nerve fiber imaging. Artif Intell Med. 2003;29:225–239. doi: 10.1016/s0933-3657(02)00071-4.

- 21. Doi K, MacMahon H, Katsuragawa S, Nishikawa RM, Jiang Y. Computer-aided diagnosis in radiology: potential and pitfalls. Eur J Radiol. 1999;31:97–109. doi: 10.1016/s0720-048x(99)00016-9.

- 22. Chen H, Zhang K, Lyu P, Li H, Zhang L, Wu J, et al. A deep learning approach to automatic teeth detection and numbering based on object detection in dental periapical films. Sci Rep. 2019;9:3840. doi: 10.1038/s41598-019-40414-y.

- 23. Devito KL, de Souza Barbosa F, Felippe Filho WN. An artificial multilayer perceptron neural network for diagnosis of proximal dental caries. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 2008;106:879–884. doi: 10.1016/j.tripleo.2008.03.002.

- 24. Naik M, de Ataide ID, Fernandes M, Lambor R. Future of endodontics. Int J Curr Res. 2016;8:25610–25616.

- 25. Murata M, Ariji Y, Ohashi Y, Kawai T, Fukuda M, Funakoshi T, et al. Deep learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019;35:301–307. doi: 10.1007/s11282-018-0363-7.

- 26. Kim Y, Lee KJ, Sunwoo L, Choi D, Nam CM, Cho J, et al. Deep learning in diagnosis of maxillary sinusitis using conventional radiography. Invest Radiol. 2019;54:7–15. doi: 10.1097/RLI.0000000000000503.

- 27. Krois J, Ekert T, Meinhold L, Golla T, Kharbot B, Wittemeier A, et al. Deep learning for the radiographic detection of periodontal bone loss. Sci Rep. 2019;9:8495. doi: 10.1038/s41598-019-44839-3.

- 28. Cevidanes LH, Hajati AK, Paniagua B, Lim PF, Walker DG, Palconet G, et al. Quantification of condylar resorption in temporomandibular joint osteoarthritis. Oral Surg Oral Med Oral Pathol Oral Radiol Endod. 2010;110:110–117. doi: 10.1016/j.tripleo.2010.01.008.

- 29. de Dumast P, Mirabel C, Paniagua B, Yatabe M, Ruellas A, Tubau N, et al. SVA: shape variation analyzer. Proc SPIE Int Soc Opt Eng. 2018;10578:105782H. doi: 10.1117/12.2295631.

- 30. Vandewiele G, De Backere F, Lannoye K, Vanden Berghe M, Janssens O, Van Hoecke S, et al. A decision support system to follow up and diagnose primary headache patients using semantically enriched data. BMC Med Inform Decis Mak. 2018;18:98. doi: 10.1186/s12911-018-0679-6.

- 31. Krawczyk B, Simić D, Simić S, Woźniak M. Automatic diagnosis of primary headaches by machine learning methods. Cent Eur J Med. 2013;8:157–165.

- 32. Bogduk N. Cervicogenic headache: anatomic basis and pathophysiologic mechanisms. Curr Pain Headache Rep. 2001;5:382–386. doi: 10.1007/s11916-001-0029-7.

- 33. Kise Y, Ikeda H, Fujii T, Fukuda M, Ariji Y, Fujita H, et al. Preliminary study on the application of deep learning system to diagnosis of Sjögren's syndrome on CT images. Dentomaxillofac Radiol. 2019;48:20190019. doi: 10.1259/dmfr.20190019.

- 34. Lerner H, Mouhyi J, Admakin O, Mangano F. Artificial intelligence in fixed implant prosthodontics: a retrospective study of 106 implant-supported monolithic zirconia crowns inserted in the posterior jaws of 90 patients. BMC Oral Health. 2020;20:80. doi: 10.1186/s12903-020-1062-4.

- 35. Vecsei B, Joós-Kovács G, Borbély J, Hermann P. Comparison of the accuracy of direct and indirect three-dimensional digitizing processes for CAD/CAM systems - an in vitro study. J Prosthodont Res. 2017;61:177–184. doi: 10.1016/j.jpor.2016.07.001.

- 36. Panesar S, Cagle Y, Chander D, Morey J, Fernandez-Miranda J, Kliot M. Artificial intelligence and future of surgical robotics. Ann Surg. 2019;270:223–226. doi: 10.1097/SLA.0000000000003262.

- 37. Bouletreau P, Makaremi M, Ibrahim B, Louvrier A, Sigaux N. Artificial intelligence: applications in orthognathic surgery. J Stomatol Oral Maxillofac Surg. 2019;120:347–354. doi: 10.1016/j.jormas.2019.06.001.

- 38. Dalitz GD. Age determination of adult human remains by teeth examination. J Forensic Sci Soc. 1962;3:11–21.

- 39. Bewes J, Low A, Morphett A, Pate FD, Henneberg M. Artificial intelligence for sex determination of skeletal remains: application of a deep learning artificial neural network to human skulls. J Forensic Leg Med. 2019;62:40–43. doi: 10.1016/j.jflm.2019.01.004.

- 40. Gross GW, Boone JM, Bishop DM. Pediatric skeletal age: determination with neural networks. Radiology. 1995;195:689–695. doi: 10.1148/radiology.195.3.7753995.

- 41. Avuçlu E, Başçiftçi F. Novel approaches to determine age and gender from dental X-ray images by using multiplayer perceptron neural networks and image processing techniques. Chaos Solitons Fractals. 2019;120:127–138.

- 42. Attaran M. The rise of 3-D printing: the advantages of additive manufacturing over traditional manufacturing. Bus Horiz. 2017;60:677–688.

- 43. Khanna SS, Dhaimade PA. Artificial intelligence: transforming dentistry today. Indian J Basic Appl Med Res. 2017;6:161–167.