Abstract

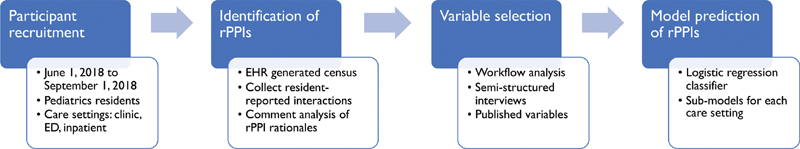

Objective Patient attribution, or the process of attributing patient-level metrics to specific providers, attempts to capture real-life provider–patient interactions (PPI). Attribution holds wide-ranging importance, particularly for outcomes in graduate medical education, but remains a challenge. We developed and validated an algorithm using EHR data to identify pediatric resident PPIs (rPPIs).

Methods We prospectively surveyed residents in three care settings to collect self-reported rPPIs. Participants were surveyed at the end of primary care clinic, emergency department (ED), and inpatient shifts, shown a patient census list, asked to mark the patients with whom they interacted, and encouraged to provide a short rationale behind the marked interaction. We extracted routine EHR data elements, including audit logs, note contribution, order placement, care team assignment, and chart closure, and applied a logistic regression classifier to the data to predict rPPIs in each care setting. We also performed a comment analysis of the resident-reported rationales in the inpatient care setting to explore perceived patient interactions in a complicated workflow.

Results We surveyed 81 residents over 111 shifts and identified 579 patient interactions. Among EHR-extracted data, time-in-chart was the best predictor in all three care settings (primary care clinic: odds ratio [OR] = 19.36, 95% confidence interval [CI]: 4.19–278.56; ED: OR = 19.06, 95% CI: 9.53–41.65; inpatient: OR = 2.95, 95% CI: 2.23–3.97). Primary care clinic and ED-specific models had c-statistic values > 0.98, while the inpatient-specific model had greater variability (c-statistic = 0.89). Of 366 inpatient rPPIs, residents provided rationales for 90.1%, most often citing direct involvement in a patient's admission or transfer, or care as the front-line ordering clinician (55.6%).

Conclusion Classification models based on routinely collected EHR data predict resident-defined rPPIs across care settings. While specific to pediatric residents in this study, the approach may be generalizable to other provider populations and scenarios in which accurate patient attribution is desirable.

Keywords: secondary use, patient–provider, data mining, workflow, medical education

Background and Significance

Patient attribution is the process of attributing patient-level metrics to specific providers, 1 which are signals of real-life provider–patient interactions (PPIs). Such attribution is essential for measuring provider-specific quality metrics, 2 determining physician case exposure, 3 4 and identifying accountable care team members. 5 Attributing the care of patients to providers has been attempted through a myriad of approaches. 6 7 8 Despite their potential wide-ranging impact, accurately deducing in-person PPIs from within the EHR remains a challenge, 9 10 11 especially in the inpatient setting. 1 From the perspective of medical education, automated PPIs derived from EHR data may help educators understand which patient care experiences add “educational value,” informing educational opportunities throughout the spectrum of clinical training. 12 13 By determining the types of conditions, procedures, and demographics to which one has exposure, medical educators and trainees can identify relative strengths and gaps in clinical experience.

The growing momentum behind competency-based medical education calls for educational structures that align with standardized goals, which can be greatly facilitated with improved methods of automated patient attribution from medical records. 12 13 The Accreditation Council for Graduate Medical Education recently released the second version of its Clinical Learning Environment Review (CLER) pathways, which aim to optimize trainee education while providing safe and high-quality patient care. 14 Among the set of expectations outlined, the CLER pathways recommend that trainees receive both site-level and individual-specific quality metrics and outcome data. This feedback necessitates accurate patient attribution to trainee providers. Diagnostic exposure and volume among resident trainees have been reported at single sites using organization-specific enterprise data warehouses. 15 16 17 18 Such information could inform gaps in clinical experiences, as well as relative strengths. 19

While many EHR data elements, like note authorship, care team assignment, and order placement, may be suggestive of PPIs, such approaches to patient attribution may fall short in depicting accurate representations of care delivery by providers who should be primarily associated with a patient's care. 16 20 For example, residents may place orders on patients for whom they are not the primary front-line clinician (FLC) during rounds when the assigned FLC is presenting the patient, or throughout the day while the FLC is attending to other patients. Anecdotally as noted by the authors, this is most commonly observed in the inpatient context but may occur in resident primary care clinic or ED. Others have applied methods from computational ethnography to EHR audit logs to describe and optimize clinical workflows in the outpatient setting. 21 22 To date, only one single-center study has leveraged EHR features, such as note authorship, ordering provider, and time-in-chart, to develop a model predicting specific resident PPIs (rPPIs). 23 A critical need exists in the development of an approach to accurately attribute patients to providers using common EHR data elements, including audit logs.

Objectives

In the current study, we sought to develop a method to predict pediatric rPPIs from EHR data, focusing on audit logs. We describe the validation of these models in multiple care settings against resident-reported rPPIs and explore the nature of these interactions through a comment analysis of residents' patient inclusion rationale.

Methods

Study Design and Recruitment

We performed a prospective cohort study of pediatric residents at The Children's Hospital of Philadelphia in Philadelphia, Pennsylvania, a large urban academic freestanding children's hospital in the United States with a pediatrics residency program consisting of 153 residents, helping to staff 3 primary care clinics, 4 emergency department (ED) teams, and 15 inpatient teams ( Fig. 1 ). Residents were engaged in patient care across multiple care settings (primary care clinics, ED, and inpatient floors) between June 1, 2018 and September 1, 2018. Eligibility included completion of at least one shift in any of the three care settings. As the intended use case for our attribution method focused on resident education, we excluded attending physicians and nurse practitioners working as FLCs. Care settings were randomly sampled and participants were enrolled based on convenience availability at the end of their shifts with a planned 2:1:1 sampling of inpatient:ED:clinic. We sampled the inpatient care setting more heavily, as we anticipated greater variation in rPPI selection, given the workflow considerations of each care setting as described below. Enrollment continued until we achieved our target sample sizes for each care setting based on power analyses to achieve 80% power of detecting a sensitivity greater than 50% for detecting true rPPIs from EHR data and a specificity greater than 90% with α = 0.05 for each model. The study was approved by the Institutional Review Board (IRB) at The Children's Hospital of Philadelphia in Pennsylvania, United States.

Fig. 1.

Pediatric residents were surveyed at the end of shifts to provide a “silver standard” of provider–patient interactions, against which classifier predictions using EHR data were compared. ED, emergency department; EHR, electronic health record; rPPI, resident provider–patient interaction.

Description of Workflows

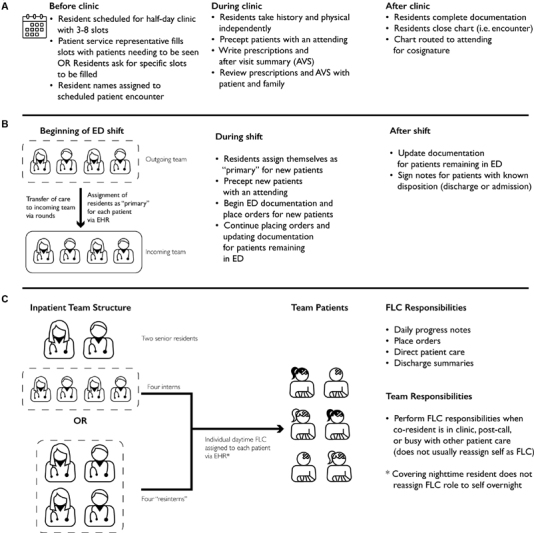

Primary Care Clinic

Residents may be scheduled for primary care half-day clinic shifts as follows: (1) after half-day inpatient shifts, (2) before evening ED shifts, or (3) during a primary care rotation. Prior to a clinic date, residents are assigned from three to eight slots over 3 hours during which patients may be scheduled. Patient service representatives fill these appointment slots, although residents may request certain patients be seen on specific dates. Slots are typically filled with appointments prior to a clinic date, although some slots may be reserved for walk-in appointments. Residents' names are directly listed on appointments, even for walk-in encounters. Upon patient arrival, residents independently take a history and physical and manage the remainder of the visit with an attending. Following the visit and completion of documentation, residents close the encounter, which is cosigned by the attending ( Fig. 2A ).

Fig. 2.

Workflows for each resident care setting, ( A ) primary care clinic, ( B ) ED, and ( C ) inpatient, vary widely, affecting the EHR variables relevant for a given interaction. ED, emergency department; EHR, electronic health record; FLC, front-line clinician; OR, operating room.

Emergency Department

At the beginning of an ED shift, residents transition the care of patients continuing to require emergent services and assign oncoming residents to the care team for these patients using the EHR “care team” activity. Residents continue to write orders, document, and provide care for these patients, while also administering care to new ED patients. Residents “sign up” for new patients by assigning themselves via the same “care team” activity, take a history and physical, place orders, and manage the visit with an attending physician. Residents continue to provide care through either discharge or admission, but do not close encounters ( Fig. 2B ).

Inpatient Floor

On inpatient floors, residents serve as FLCs for patients. Teams either have a senior-intern structure composed of two senior residents and four interns or a “resintern” structure composed of four second-year residents acting in the FLC role. Within these teams, residents assign themselves as a patient's primary FLC via the EHR “care team” activity. While the assignment of daytime coverage is performed consistently, night-time coverage is rarely updated in the EHR. As FLCs, residents write daily progress notes, place orders, and provide direct patient care. Each patient is exclusively assigned an FLC, but residents may care for patients on the team for whom they are not FLCs in a “cross-coverage” role, when a patient's FLC may be in clinic, postcall, or busy with other patient care. As a result, patients may receive care from multiple residents on the same team. Upon patient discharge, residents write discharge summaries but do not close encounters ( Fig. 2C ).

Identification of Resident-Reported rPPIs

Pediatric residents were approached by the study investigators (M.V.M. and A.C.D.) and provided verbal consent, which was recorded per IRB regulations. No participant declined to participate or refused to provide consent. Each interview lasted approximately 10 minutes, including time to describe the study, obtain and record verbal consent, and administer the survey. Residents were able to participate on multiple shifts and in multiple care settings. Survey responses were collected on paper and entered into electronic forms by the investigators after data collection. Using the EHR's report-generating functionality, the study investigators presented participants with a list of potential patients with whom they may have interacted based on their care setting using the following filters:

Primary care clinic (clinic): all patients with a scheduled visit in the clinic during that shift.

ED: all patients seen by the resident's ED care team during that shift.

Inpatient: all patients on the inpatient care team during that shift, including patients who were admitted, transferred, or discharged.

Participants were asked to identify patients with whom they had a “clinically meaningful experience,” such that they would want a given patient to appear in an automated case log. These patients were classified as “rPPI” versus “non-rPPI,” which together comprised a “silver standard” against which we compared model outputs. This prompt was chosen based on a short series of semistructured interviews with residents prior to data collection, where they expressed that serving as an FLC for a patient did not necessarily constitute a meaningful interaction, since not all interactions contain educational value. Residents also expressed that they sometimes gleaned educational value from patients for whom they did not serve as FLCs, particularly when they “cross-covered” a patient in the inpatient setting. While a time motion study would have provided objective data about residents' interactions with patients, doing so would have been time and resource-prohibitive without fully capturing the perceived educational value of an interaction.

To further elucidate the types of interactions participants had with patients and whether certain rPPIs may be more easily predicted with EHR data, participants were encouraged to note their rationale next to each marked rPPI in a short description. Two of the study investigators (M.V.M. and A.C.D.) independently grouped the comments into qualitative themes based on the type of interaction described (e.g., FLC, learned about on rounds, and examined patient). The themes generated by each investigator were then compared, and differences were resolved by consensus between the two investigators (M.V.M. and A.C.D.).

Development of Automated rPPIs

Variables Included in the Model

EHR data elements associated with clinical activity were identified and extracted from the Epic Clarity database (Epic Systems, Verona, Wisconsin, United States) for all patient encounters (both rPPIs and non-rPPIs) identified during the validation data collection. The selection of data elements to be extracted was based on the clinical workflows in each care setting (Description of Workflows, Fig. 2 ), residents' comments from the semistructured interviews, and previously presented approaches to secondary use of data in the EHR. 15 23 In an attempt to create a broadly applicable model that was specific only to care setting, we chose to include variables likely to be available from any EHR. These variables included time-in-chart calculated from EHR audit logs, note authorship (signed or shared), order placement, care team assignment, and chart closure ( Table 1 ). All covariates except for time-in-chart were represented as dichotomous variables. While patient-level characteristics, such as age, complications, comorbidities, illness severity, and length of stay, may have an effect on attribution, inclusion of such features would limit generalizability to other care areas, and they were thus excluded from the study.

Table 1. Model input variables and variable characteristics.

| Variable name | Data type | Definition |
|---|---|---|
| Time-in-chart | Continuous | Discrete time stamped audit logs generated by a resident within the hospital were algorithmically converted into shifts, represented in 10-minute increments |
| Note authorship | Binary | The resident authored, edited, or signed ≥ 1 note in the patient's chart (1 = yes, 0 = no) |
| Order placement | Binary | The resident placed ≥ 1 order in the patient's chart (1 = yes, 0 = no) |
| Care team | Binary | The resident had a role documented in the patient's EHR care team (1 = yes, 0 = no) |
| Chart closure | Binary | Clinic only. The resident in question was the provider closing the patient's chart |

Time-in-chart was calculated from discrete audit log events generated by a given resident in a given patient's chart, as previously described. 24 The general workflow included audit log extraction, calculation of interaction intervals, and aggregation into total time in a patient's chart. Since the institution's EHR records user interactions in the system as discrete timestamps, we extracted all audit logs generated from a hospital workstation within the participant's shift with a patient identifier attached, without filtering by interaction type (e.g., “login,” “logout,” and “chart closure”). Shifts were defined as the period of time prior to survey administration which contained any audit log entries for that participant. From these discrete entries, we generated time gaps between audit log entries, representing the duration between subsequent interactions for a given user. To exclude possible “inactive” time in a chart, lapses greater than 30 seconds marked the end of one interval and the beginning of another. Intervals of interaction within a patient's chart during a shift were summed from these time gaps and represented in 10-minute increments.
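The aggregation described above can be illustrated with a minimal sketch. It is not the authors' implementation (the analyses were performed in R and follow a previously described method); the function names, the `(patient_id, timestamp)` event format, and the example data are hypothetical. Two assumptions are worth stating: a gap between consecutive audit log entries is counted only when both entries belong to the same patient's chart, and the 10-minute representation is left unrounded because the rounding rule is not specified in the text.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# From the text: a lapse longer than 30 seconds ends one interval and starts another.
MAX_GAP = timedelta(seconds=30)
SECONDS_PER_INCREMENT = 600  # one 10-minute increment


def time_in_chart_seconds(events, max_gap=MAX_GAP):
    """Sum active seconds per patient chart for one resident's shift.

    `events` is an iterable of (patient_id, timestamp) audit log entries.
    Assumption: a gap counts only when both entries bounding it belong to
    the same patient's chart and the gap does not exceed `max_gap`.
    """
    ordered = sorted(events, key=lambda event: event[1])
    totals = defaultdict(float)
    for patient_id, _ in ordered:
        totals[patient_id] += 0.0  # charts touched only once still appear, with zero time
    for (prev_patient, prev_time), (next_patient, next_time) in zip(ordered, ordered[1:]):
        gap = next_time - prev_time
        if prev_patient == next_patient and gap <= max_gap:
            totals[prev_patient] += gap.total_seconds()
    return dict(totals)


def to_ten_minute_increments(seconds):
    """Express a duration in units of 10 minutes (unrounded; an assumption)."""
    return seconds / SECONDS_PER_INCREMENT


# Hypothetical audit log entries for one resident and two patients.
start = datetime(2018, 6, 1, 8, 0, 0)
example_events = [
    ("patient_A", start),
    ("patient_A", start + timedelta(seconds=10)),
    ("patient_A", start + timedelta(seconds=25)),
    ("patient_B", start + timedelta(seconds=40)),
    ("patient_B", start + timedelta(seconds=50)),
    ("patient_A", start + timedelta(minutes=5)),
    ("patient_A", start + timedelta(minutes=5, seconds=20)),
]
example_totals = time_in_chart_seconds(example_events)
```

In this hypothetical shift, the resident accrues 45 seconds in patient A's chart across two separate intervals and 10 seconds in patient B's chart; the nearly 5-minute lapse before returning to patient A is treated as inactive time and excluded.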

Classification Method and Evaluation

We created classifiers based on multivariable logistic regression, decision tree, 25 and random forest 26 27 28 models from the selected features and identified the significant covariates for each care setting. The various classifiers performed equally well with near-identical sensitivities, specificities, positive predictive values (PPVs), and c -statistic values. Given the equivalent characteristics of the classifiers, we chose logistic regression as our primary classifier, as we felt that clinician educators would likely have the most experience interpreting this classifier. All variables were included for model development.

For all binomial regression models, the dependent variable was patient class (rPPI vs. non-rPPI) and the independent variables were the covariates listed in Table 1 . Odds ratios (ORs) and 95% confidence intervals (CIs) are presented for significant and nonsignificant covariates. All analyses were performed in R version 3.3.3. 29 Using K-fold cross-validation, we generated classifier predictions and constructed receiver operating characteristic (ROC) curves. The concordance statistic (c-statistic) and CIs are presented for each ROC curve. Optimal threshold cut-offs were determined by previously described methods of minimizing the costs associated with false-positive and false-negative results, 30 and 2 × 2 concordance tables are presented at these cut-offs.
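As an illustration of the two evaluation quantities named above, the following sketch computes a c-statistic and selects a cost-minimizing cut-off from predicted probabilities. It is a generic stand-in, not the cited method or the authors' R code: the function names, the equal default costs, the exhaustive search over observed scores, and the example labels and scores are all assumptions made for illustration.

```python
def c_statistic(labels, scores):
    """Probability that a randomly chosen rPPI (label 1) receives a higher
    score than a randomly chosen non-rPPI (label 0); ties count as 0.5."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def cost_minimizing_cutoff(labels, scores, cost_fp=1.0, cost_fn=1.0):
    """Return (cutoff, total_cost) minimizing cost_fp * FP + cost_fn * FN.

    A case is predicted positive when its score is >= cutoff. Candidate
    cut-offs are the observed scores; the lowest minimizing cut-off wins.
    """
    best = None
    for cutoff in sorted(set(scores)):
        false_pos = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= cutoff)
        false_neg = sum(1 for y, s in zip(labels, scores) if y == 1 and s < cutoff)
        cost = cost_fp * false_pos + cost_fn * false_neg
        if best is None or cost < best[1]:
            best = (cutoff, cost)
    return best


# Hypothetical out-of-fold predictions (1 = rPPI, 0 = non-rPPI).
example_labels = [0, 0, 1, 0, 1, 1]
example_scores = [0.10, 0.20, 0.35, 0.40, 0.80, 0.90]
example_c = c_statistic(example_labels, example_scores)
example_cutoff = cost_minimizing_cutoff(example_labels, example_scores)
```

Raising `cost_fp` relative to `cost_fn` pushes the selected cut-off upward, which trades sensitivity for specificity and PPV; this is the same trade-off a program would weigh when deciding how conservative an automated case log should be.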

Results

Between June 1, 2018 and September 1, 2018, we interviewed 81 unique residents over 111 shifts (24 clinic, 20 ED, and 47 inpatient shifts). From a total of 4,899 potential rPPIs, participants identified 579 rPPIs and 4,320 non-rPPIs ( Table 2 ). Of the rPPIs identified in the clinic care setting, 87.2% were well child visits, and 12.8% were sick visit encounters.

Table 2. Description of validation data.

| Care setting | No. of unique resident shifts | No. of rPPIs, n (%) | No. of non-rPPIs ᵃ, n (%) |
|---|---|---|---|
| Clinic | 24 | 82 (3.25) | 2,440 (96.75) |
| ED | 20 | 131 (21.65) | 474 (78.35) |
| Inpatient | 47 | 366 (20.65) | 1,406 (79.35) |
| Total | 111 | 579 (11.82) | 4,320 (88.18) |

Abbreviations: ED, emergency department; rPPI, resident provider–patient interaction.

ᵃ Non-rPPIs were potential rPPIs listed on a patient census for the resident's shift that the resident excluded from the list of rPPIs.

In applying the logistic regression classifier across all care settings, increased time-in-chart was significantly associated with a patient being classified as an rPPI ( Table 3 ). For example, in clinic, each 10-minute increase in time-in-chart increased the odds of being classified as an rPPI by a factor of 19.36 (95% CI: 4.2–278.6), while in the ED, each 10-minute increase in time-in-chart increased the odds of being classified as an rPPI by a factor of 19.1 (95% CI: 9.5–41.7). In the ED, authoring a note was also significantly associated with an rPPI classification (OR = 10.8, 95% CI: 3.6–35.9). Orders placed, note authorship, assignment on care team, and total time-in-chart all contributed significantly to predicting rPPIs in the inpatient care setting.
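To make the per-increment interpretation concrete, the sketch below shows how an odds ratio reported per 10-minute increment scales over several increments and how it shifts a predicted probability. The function names and the baseline probability of 0.20 are hypothetical; the only value taken from the study is the inpatient time-in-chart OR of 2.95. The calculation assumes the other covariates are held constant, as in any adjusted logistic regression estimate.

```python
def odds_ratio_over_increments(or_per_increment, increments):
    """Odds ratio for a change of `increments` units.

    In logistic regression the log-odds are linear in the covariate, so the
    odds ratio compounds multiplicatively: OR ** increments.
    """
    return or_per_increment ** increments


def probability_after_odds_ratio(baseline_probability, odds_ratio):
    """Convert a baseline probability to odds, apply the OR, convert back."""
    baseline_odds = baseline_probability / (1.0 - baseline_probability)
    new_odds = baseline_odds * odds_ratio
    return new_odds / (1.0 + new_odds)


INPATIENT_OR_PER_10_MIN = 2.95  # reported inpatient time-in-chart OR

# Odds ratio for 20 additional minutes (two increments) in a chart.
or_20_minutes = odds_ratio_over_increments(INPATIENT_OR_PER_10_MIN, 2)

# Hypothetical patient with a 0.20 baseline probability of being an rPPI.
probability_plus_10_min = probability_after_odds_ratio(0.20, INPATIENT_OR_PER_10_MIN)
```

Under these assumptions, 20 additional minutes correspond to an odds ratio of about 8.70, and one additional 10-minute increment moves a 0.20 baseline probability to roughly 0.42. The odds nearly triple while the probability only about doubles, which is why odds ratios should not be read as probability multipliers.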

Table 3. Odds ratio of being classified as PPIs in clinic, ED, and inpatient contexts by multivariable logistic regression.

| Variable | Clinic OR (95% CI, p) | ED OR (95% CI, p) | Inpatient OR (95% CI, p) |
|---|---|---|---|
| Total time-in-chart ᵃ | 19.36 (4.19–278.56, p < 0.01) | 19.06 (9.53–41.65, p < 0.001) | 2.95 (2.23–3.97, p < 0.001) |
| Note authorship | 2.23 × 10⁹ (4.58 × 10⁻⁷¹–NA, p = 0.99) | 10.8 (3.55–35.93, p < 0.001) | 2.25 (1.39–3.58, p < 0.001) |
| Orders placed | 2.52 (0.10–4.15, p = 0.52) | 1.63 (0.38–7.44, p = 0.52) | 2.74 (1.84–4.07, p < 0.001) |
| Care team assignment | 6.5 × 10⁻⁹ (NA–3.83 × 10⁸², p = 0.99) | 0.60 (0.13–3.00, p = 0.52) | 2.28 (1.39–3.72, p < 0.001) |
| Chart closure ᵇ | 16.92 (0.13–540.72, p = 0.16) | – | – |

Abbreviations: CI, confidence interval; ED, emergency department; NA, not available; OR, odds ratio; PPI, provider–patient interaction.

ᵃ Total time-in-chart is represented in 10-minute increments.

ᵇ Chart closure is performed by residents only in primary care.

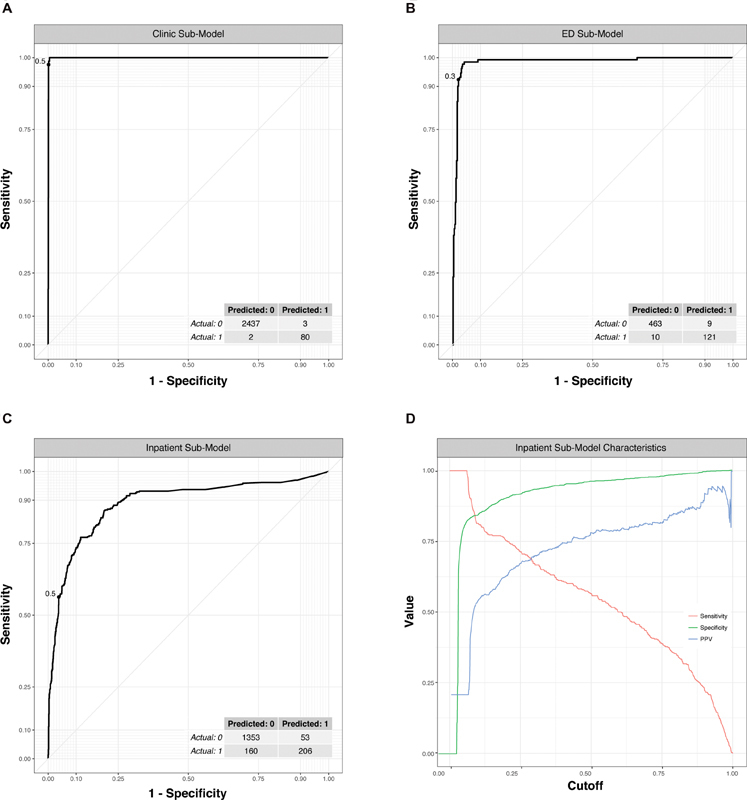

Fig. 3A–C displays the ROC curves for each care setting-specific classifier, along with concordance tables at optimal cutoff thresholds. The clinic and ED models had similar predictive performance, with a c -statistic of 0.999 (95% CI: 0.998–1) for the clinic model and of 0.982 (95% CI: 0.966–0.997) for the ED model. The inpatient model had a c -statistic of 0.895 (95% CI: 0.876–0.915). Given the lower predictive performance for the inpatient care setting model and the potential for competing priorities when choosing a cut-off, we plot sensitivity, specificity and PPV across a range of cut-off values ( Fig. 3D ). At an example cut-off value of 0.5, the sensitivity was 56.3%, specificity was 96.2%, and PPV was 79.5%.
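The three metrics reported at the example cut-off follow directly from a 2 × 2 table. The sketch below shows the arithmetic; the cell counts are not reported in the text and are back-calculated here, as an assumption, from the inpatient totals (366 rPPIs and 1,406 non-rPPIs) and the stated percentages. The function name is hypothetical.

```python
def classification_metrics(true_pos, false_pos, true_neg, false_neg):
    """Sensitivity, specificity, and positive predictive value from 2 x 2 counts."""
    return {
        "sensitivity": true_pos / (true_pos + false_neg),
        "specificity": true_neg / (true_neg + false_pos),
        "ppv": true_pos / (true_pos + false_pos),
    }


# Back-calculated counts (an assumption, not reported by the authors):
# 206 + 160 = 366 inpatient rPPIs and 1,353 + 53 = 1,406 inpatient non-rPPIs.
inpatient_metrics = classification_metrics(
    true_pos=206, false_pos=53, true_neg=1353, false_neg=160
)
inpatient_percentages = {
    name: round(value * 100, 1) for name, value in inpatient_metrics.items()
}
```

With these counts the function reproduces the reported 56.3% sensitivity, 96.2% specificity, and 79.5% PPV. Because PPV depends on prevalence, the same sensitivity and specificity would yield a lower PPV in a setting such as clinic, where rPPIs made up only 3.25% of listed patients.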

Fig. 3.

Receiver operating characteristic curves show excellent prediction of PPIs for the clinic and ED submodels, while the inpatient submodel performed reasonably well. ( A–C ) Submodels for each care setting studied. ( D ) Impact on sensitivity, specificity, and positive predictive value (PPV) with varying cut-offs. The dashed line indicates the optimal cut-off displayed in the confusion matrix in part ( C ). ED, emergency department; PPI, provider–patient interaction.

The analysis of the survey comments in the inpatient care setting showed that of 366 inpatient rPPIs, residents provided reasons for 330 (90.1%) with an average length of 1.98 words per comment. Participants commonly identified direct involvement in a patient's admission or transfer, or care as the FLC (55.6%, n = 183) as the rationale. Discussion of a patient on rounds (14.6%; n = 48) and patient/family interactions (14.3%; n = 47) were less frequently stated reasons for selections. Only 9.2% ( n = 31) of responses were grouped into involvement in cross-coverage or escalation of care. Another 6.1% ( n = 20) of responses were grouped as miscellaneous comments not fitting into another theme.

Discussion

In this study, we have demonstrated that an approach involving EHR data elements, particularly audit log data, may yield salient predictors of pediatric rPPIs. Unlike prior work, we validated the interpretation of these rPPIs in multiple care settings. These commonly collected EHR data elements, including time-in-chart, note authorship, care team assignment, and order placement, accurately predicted resident-identified rPPIs. Time-in-chart estimated through established algorithms was the best predictor of rPPIs in all three care settings, 22 24 31 contributing to extremely accurate (c-statistic > 0.98) predictive models in the clinic and ED care settings. Attributing patients in the inpatient care setting was less robust (c-statistic = 0.89) and suggested the significance of other covariates in the prediction. Data from the comment analysis of resident-reported rationales demonstrated that inpatient providers identified rPPIs when they were directly involved in a patient's care, most commonly during an admission, discharge, or transfer and less commonly during cross-coverage or escalation of care.

The current study builds on the work of EHR-based patient attribution using workflow heuristics, which highlighted several key variables, many of which overlap with the variables we used in our models. To examine whether EHR data could predict which primary care provider a patient should see during future appointments, Tripp et al found that “previously scheduled provider” was the best of the predictors examined, including the named primary care physician, placement of orders, and note writing. 32 However, the resulting model had a false positive rate >50% following both inpatient and emergency department visits. Atlas et al augmented scheduling and billing data with patient and physician demographic data to develop a model with a PPV of >90%. 33 However, nearly 20% of patients were inappropriately labeled as not having a provider. Herzke et al used billing data to assign an “ownership” fraction of specific metrics to multiple attending providers for a single hospital stay. 1 This approach cannot be applied to nonbilling providers, such as residents, and may mask the contribution of cross-covering providers. These promising EHR-based approaches yielded initial insights to variables important for patient attribution and provide opportunity for improvement when focusing on rPPIs.

Relatively few studies focused on medical education have used EHR data to attribute patients to providers. 17 19 23 Recently, Schumacher et al performed a feasibility study to attribute care of individual patients to internal medicine interns on an inpatient service using postgraduate year, progress note authorship, order placement, and audit log data, resulting in a model with a sensitivity approaching 80%, specificity near 98%, and a c-statistic of 0.91. 23 Similarly, Sequist et al tested the feasibility of using outpatient encounters linked to internal medicine primary care resident codes for the purposes of informing resident education. 34 Our study reinforces the importance of similar variables, with particular emphasis on audit log data, in a separate population across multiple care settings. This finding supports the notion that residents are more likely to report an rPPI as they spend more time in a patient's chart. Given that physicians are likely to spend a greater amount of time in the charts of the patients for whom they are primarily caring, as reflected in our qualitative data, the strength of association between time-in-chart and rPPIs may hold external validity beyond resident physicians. Time-in-chart cut-offs may vary depending on the unique characteristics of a given workflow, and site-specific validation may be necessary. The inclusion of other EHR data may help augment the accuracy of such models in other use cases.

Our approach to patient attribution is focused on identifying patients from whom pediatric residents have gained educational value. With similar motivations, Levin and Hron described the development of a dashboard built for pediatric residents that utilized records of resident documentation in a patient chart as a means for patient attribution, although validation metrics around patient attribution were not reported. 15 While asking residents to identify “clinically meaningful experiences” may limit the utility of these specific models to medical education, the methodology employed in this paper may be generalizable across other use cases in which robust patient attribution methods are desired. Researchers may be able to ask participants more or less targeted questions and revalidate to adjust model cut-offs for other needs, such as generating individualized physician quality metrics or developing clinical decision support for specific care team members. Together with previous work done in this area, we hope that by characterizing a specific resident population across multiple care settings using a common EHR data model, we enable others to begin testing the feasibility of our approach more widely.

Future work will require demonstrated generalizability of the framework outlined here to other specialties, provider populations, and institutions. However, perhaps more exciting are the potential use cases that could benefit from improved attribution. From the standpoint of medical education, curricular decisions could be influenced at a programmatic level with data about aggregated trainee exposure, while individual trainees could tailor their electives or self-directed learning based on exposure gaps. Such precision education could further be accelerated by the delivery of targeted educational material shortly after exposure for just-in-time reinforcement of learning. At a national level, aggregated data could also start to unravel how clinical experiences at different institutions may vary from one another. The potential applications extend beyond medical education, as improved attribution could enhance targeted role-based notifications and outcomes-based learning for decision support through positive deviance models. 35 In a health care system where many patients have several providers, patients may also directly benefit from knowing which providers are attributed to them in an automated fashion. 36 Given the fundamental relationship between patient and provider, accurate patient attribution has wide-ranging applications.

Limitations

There are several limitations to our work. Most importantly, our study focuses on pediatric residents at a single institution. While we have reason to believe that many components involved in EHR-derived rPPIs may also be important for determining rPPIs at other locations, the single-site design of our study limits this conclusion. Local clinical workflows may vary widely, making determination of rPPIs using our approach easier or harder. Alternate workflows where trainees do not sign clinic or ED provider notes would necessitate modification of existing models.

The focus of our study on a pediatric resident population in one academic institution may further limit generalizability to other types of providers in different settings. As examples, primary care pediatricians, pediatric inpatient hospitalists, and pediatric ED physicians practicing independently likely have a workflow that is more streamlined and possibly less contiguous, resulting in different time-in-chart values. Adjustment of cut-off points for the models may or may not capture accurate attribution in these scenarios, and warrants further study. Though the significant variables within our models are suspected to be universal across institutions, they may be the result of workflows specific to this group, warranting additional work to validate this approach more broadly. Furthermore, while direct observation of clinical workflows is considered the “gold standard” for attribution, we opted for a survey-based “silver standard” for manually identifying rPPIs, which allowed identification of interactions with “educational value” that would not have been identified with a time-motion study. This approach may have been subject to recall bias and under- or overreporting. A clearer definition when asking residents to identify rPPIs and/or the inclusion of additional variables may lessen the trade-off between sensitivity and specificity in the inpatient submodel. However, through our comment analysis in the inpatient care setting, we were able to elaborate on the factors that influence how providers would like to attribute themselves to patients.

Conclusion

Routinely collected EHR data, including EHR audit times, note authorship, care team assignment, and order placement, can be used to predict resident-defined rPPIs among pediatric residents in lieu of manually documented case logs. Highly accurate methods of patient attribution, like the one described here, have broad applications ranging from measuring medical education to individualized clinical decision support. Future research will be needed to determine the generalizability of this approach to other populations of providers across other subspecialties, as well as its application to other use cases outside of medical education.

Clinical Relevance Statement

Accurate EHR-derived rPPIs have the potential to transform data-driven graduate medical education. From a practical standpoint, rPPIs could help trigger targeted educational content based on recent patient experiences with specific diagnoses, multiple choice questions as a component in the assessment of competency, and patient experience surveys when measuring trainees' communication skills. The methods described in this paper advance our understanding of how EHR data may help identify rPPIs and lay the groundwork for future work with other providers, as well as other use cases.

Multiple Choice Questions

1. Which of the following is the most practical use case of accurate patient attribution?

a. Developing swim-lane diagrams for multidisciplinary clinic flow.

b. Identifying providers in need of additional elbow-to-elbow support.

c. Generating a departmental order set for standardized lead screening.

d. Identifying follow-up physicians for patients discharged from the ED.

Correct Answer: The correct answer is option d. The process of patient attribution entails matching patients to the providers responsible for their care. The potential applications are myriad but offer the most benefit for physician-centered efforts. For example, quality improvement initiatives looking to improve the care of an outpatient population with type 2 diabetes might use patient attribution models to determine physicians' responsibility for the care of these patients. Alternatively, individualized clinical decision support systems might leverage accurate patient attribution models to display certain alerts to certain providers, based on their role in a patient's care. Of the choices listed, option d is the only one that involves targeting the correct physician based on patient care.

2. What EHR data element is the most likely to be the strongest predictor for a highly accurate model of patient attribution?

a. Provider training level.

b. User login context.

c. EHR audit logs.

d. Order placement.

Correct Answer: The correct answer is option c. While previous studies have used provider demographic data, like postgraduate training level, to predict provider–patient interactions, the models performed poorly. Login context may be used to narrow possible providers for a given patient, but does not provide data granular enough to attribute providers to patients. Order placement may be a potential contributor to a model; however, this may be a nonspecific action, as cross-covering providers may place orders for patients in lieu of colleagues but would not consider themselves to be a patient's primary care provider. EHR audit logs, particularly when time-in-chart is calculated, can be a proxy for the amount of time and effort a provider is spending on a patient's care and thus is likely to be a strong predictor of patient attribution.
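As an illustration of how time-in-chart might be derived from audit log timestamps, the following minimal sketch sums the gaps between consecutive audit events for each provider and patient pair, capping each gap at an idle threshold. This is an assumption made for illustration, not necessarily the calculation used in this study: the event tuple layout, the function name `time_in_chart`, and the 5-minute `idle_cap` are all hypothetical, and the sketch ignores activity interleaved across different patients' charts.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def time_in_chart(events, idle_cap=timedelta(minutes=5)):
    """Estimate time-in-chart per (user, patient) from audit log timestamps.

    events: iterable of (user_id, patient_id, timestamp) tuples.
    Gaps between consecutive events longer than idle_cap are truncated to
    idle_cap (an assumed rule) so that idle periods do not inflate the total.
    """
    by_pair = defaultdict(list)
    for user, patient, ts in events:
        by_pair[(user, patient)].append(ts)

    totals = {}
    for pair, stamps in by_pair.items():
        stamps.sort()
        total = timedelta(0)
        for earlier, later in zip(stamps, stamps[1:]):
            total += min(later - earlier, idle_cap)
        totals[pair] = total
    return totals


# Hypothetical audit events for one resident and two patients.
day = datetime(2020, 1, 1)
events = [
    ("r1", "pA", day.replace(hour=8, minute=0)),
    ("r1", "pA", day.replace(hour=8, minute=1)),
    ("r1", "pA", day.replace(hour=8, minute=3)),
    ("r1", "pA", day.replace(hour=9, minute=0)),  # 57-minute gap, capped at 5
    ("r1", "pB", day.replace(hour=8, minute=10)),  # single event, no duration
]
totals = time_in_chart(events)
for pair, total in totals.items():
    print(pair, total)
```

A per-pair total of this kind could then serve as one candidate predictor alongside note contribution, order placement, and care team assignment.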

Funding Statement

Funding This work was supported by a Special Project Award from the Association of Pediatric Program Directors.

Conflict of Interest A.C.D. and M.V.M. are the principal investigators for the Special Project Award from the Association of Pediatric Program Directors, which supported this work. They hold a pending institutional technology disclosure based on this work. E.W.O. is a cofounder of and has equity in Phrase Health, a clinical decision support analytics company which was not involved, directly or indirectly, in this work. He receives no direct revenue from this relationship. J.D.M. is a member of and has equity in ArchiveCore, a health care credentialing company which was not involved, directly or indirectly, in this work. A.A.L. has no conflicts of interest to disclose.

Protection of Human and Animal Subjects

This study was reviewed and approved by the Children's Hospital of Philadelphia Institutional Review Board, Pennsylvania, United States.

References

- 1. Herzke C A, Michtalik H J, Durkin N et al. A method for attributing patient-level metrics to rotating providers in an inpatient setting. J Hosp Med. 2018;13(07):470–475. doi: 10.12788/jhm.2897.

- 2. Schumacher D J, Martini A, Holmboe E et al. Developing resident-sensitive quality measures: engaging stakeholders to inform next steps. Acad Pediatr. 2019;19(02):177–185. doi: 10.1016/j.acap.2018.09.013.

- 3. Mattana J, Kerpen H, Lee C et al. Quantifying internal medicine resident clinical experience using resident-selected primary diagnosis codes. J Hosp Med. 2011;6(07):395–400. doi: 10.1002/jhm.892.

- 4. Wanderer J P, Charnin J, Driscoll W D, Bailin M T, Baker K. Decision support using anesthesia information management system records and accreditation council for graduate medical education case logs for resident operating room assignments. Anesth Analg. 2013;117(02):494–499. doi: 10.1213/ANE.0b013e318294fb64.

- 5. Sebok-Syer S S, Chahine S, Watling C J, Goldszmidt M, Cristancho S, Lingard L. Considering the interdependence of clinical performance: implications for assessment and entrustment. Med Educ. 2018;52:970–980. doi: 10.1111/medu.13588.

- 6. Mehrotra A, Burstin H, Raphael C. Raising the bar in attribution. Ann Intern Med. 2017;167(06):434–435. doi: 10.7326/M17-0655.

- 7. McCoy R G, Bunkers K S, Ramar P et al. Patient attribution: why the method matters. Am J Manag Care. 2018;24(12):596–603.

- 8. Fishman E, Barron J, Liu Y et al. Using claims data to attribute patients with breast, lung, or colorectal cancer to prescribing oncologists. Pragmat Obs Res. 2019;10:15–22. doi: 10.2147/POR.S197252.

- 9. Gupta K, Khanna R. Getting the (right) doctor, right away. Available at: https://psnet.ahrq.gov/webmm/case/381/getting-the-right-doctor-right-away. Accessed August 29, 2018

- 10. Vawdrey D K, Wilcox L G, Collins S et al. Awareness of the care team in electronic health records. Appl Clin Inform. 2011;2(04):395–405. doi: 10.4338/ACI-2011-05-RA-0034.

- 11. Dalal A K, Schnipper J L. Care team identification in the electronic health record: A critical first step for patient-centered communication. J Hosp Med. 2016;11(05):381–385. doi: 10.1002/jhm.2542.

- 12. Arora V M. Harnessing the power of big data to improve graduate medical education: big idea or bust? Acad Med. 2018;93(06):833–834. doi: 10.1097/ACM.0000000000002209.

- 13. Weinstein D F. Optimizing GME by measuring its outcomes. N Engl J Med. 2017;377(21):2007–2009. doi: 10.1056/NEJMp1711483.

- 14. Accreditation Council for Graduate Medical Education. CLER Pathways to Excellence: Expectations for an Optimal Clinical Learning Environment to Achieve Safe and High-Quality Patient Care, Version 2.0. Available at: https://www.acgme.org/Portals/0/PDFs/CLER/1079ACGME-CLER2019PTE-BrochDigital.pdf. Accessed May 18, 2020

- 15. Levin J C, Hron J. Automated reporting of trainee metrics using electronic clinical systems. J Grad Med Educ. 2017;9(03):361–365. doi: 10.4300/JGME-D-16-00469.1.

- 16. Nagler J, Harper M B, Bachur R G. An automated electronic case log: using electronic information systems to assess training in emergency medicine. Acad Emerg Med. 2006;13(07):733–739. doi: 10.1197/j.aem.2006.02.010.

- 17. Simpao A, Heitz J W, McNulty S E, Chekemian B, Brenn B R, Epstein R H. The design and implementation of an automated system for logging clinical experiences using an anesthesia information management system. Anesth Analg. 2011;112(02):422–429. doi: 10.1213/ANE.0b013e3182042e56.

- 18. Smith T I, LoPresti C M. Clinical exposures during internal medicine acting internship: profiling student and team experiences. J Hosp Med. 2014;9(07):436–440. doi: 10.1002/jhm.2191.

- 19. Sebok-Syer S S, Goldszmidt M, Watling C J, Chahine S, Venance S L, Lingard L. Using electronic health record data to assess residents' clinical performance in the workplace: the good, the bad, and the unthinkable. Acad Med. 2019;94(06):853–860. doi: 10.1097/ACM.0000000000002672.

- 20. Cebul R D. Using electronic medical records to measure and improve performance. Trans Am Clin Climatol Assoc. 2008;119:65–75.

- 21. Hirsch A G, Jones J B, Lerch V R et al. The electronic health record audit file: the patient is waiting. J Am Med Inform Assoc. 2017;24(e1):e28–e34.

- 22. Hribar M R, Read-Brown S, Goldstein I H et al. Secondary use of electronic health record data for clinical workflow analysis. J Am Med Inform Assoc. 2018;25(01):40–46. doi: 10.1093/jamia/ocx098.

- 23. Schumacher D J, Wu D TY, Meganathan K et al. A feasibility study to attribute patients to primary interns on inpatient ward teams using electronic health record data. Acad Med. 2019;94(09):1376–1383. doi: 10.1097/ACM.0000000000002748.

- 24. Dziorny A C, Orenstein E W, Lindell R B, Hames N A, Washington N, Desai B. Automatic detection of front-line clinician hospital shifts: a novel use of electronic health record timestamp data. Appl Clin Inform. 2019;10(01):28–37. doi: 10.1055/s-0038-1676819.

- 25. Hothorn T, Hornik K, Zeileis A. Unbiased recursive partitioning: a conditional inference framework. J Comput Graph Stat. 2006;15:651–674.

- 26. Hothorn T, Bühlmann P, Dudoit S, Molinaro A, van der Laan M J. Survival ensembles. Biostatistics. 2006;7(03):355–373. doi: 10.1093/biostatistics/kxj011.

- 27. Strobl C, Boulesteix A-L, Zeileis A, Hothorn T. Bias in random forest variable importance measures: illustrations, sources and a solution. BMC Bioinformatics. 2007;8:25. doi: 10.1186/1471-2105-8-25.

- 28. Strobl C, Boulesteix A-L, Kneib T, Augustin T, Zeileis A. Conditional variable importance for random forests. BMC Bioinformatics. 2008;9:307. doi: 10.1186/1471-2105-9-307.

- 29. R Core Team. R: a language and environment for statistical computing. Available at: https://www.gbif.org/tool/81287/r-a-language-and-environment-for-statistical-computing. Accessed May 18, 2020

- 30. Greiner M. Two-graph receiver operating characteristic (TG-ROC): update version supports optimisation of cut-off values that minimise overall misclassification costs. J Immunol Methods. 1996;191(01):93–94. doi: 10.1016/0022-1759(96)00013-0.

- 31. Mai M, Orenstein E W. Network analysis of EHR interactions to identify 360° evaluators. Available at: https://dblp.org/rec/conf/amia/MaiO18.html. Accessed May 18, 2020

- 32. Tripp J S, Narus S P, Magill M K, Huff S M. Evaluating the accuracy of existing EMR data as predictors of follow-up providers. J Am Med Inform Assoc. 2008;15(06):787–790. doi: 10.1197/jamia.M2753.

- 33. Atlas S J, Chang Y, Lasko T A, Chueh H C, Grant R W, Barry M J. Is this “my” patient? Development and validation of a predictive model to link patients to primary care providers. J Gen Intern Med. 2006;21(09):973–978. doi: 10.1111/j.1525-1497.2006.00509.x.

- 34. Sequist T D, Singh S, Pereira A G, Rusinak D, Pearson S D. Use of an electronic medical record to profile the continuity clinic experiences of primary care residents. Acad Med. 2005;80(04):390–394. doi: 10.1097/00001888-200504000-00017.

- 35. Wang J K, Hom J, Balasubramanian S et al. An evaluation of clinical order patterns machine-learned from clinician cohorts stratified by patient mortality outcomes. J Biomed Inform. 2018;86:109–119. doi: 10.1016/j.jbi.2018.09.005.

- 36. Singh A, Rhee K E, Brennan J J, Kuelbs C, El-Kareh R, Fisher E S. Who's my doctor? Using an electronic tool to improve team member identification on an inpatient pediatrics team. Hosp Pediatr. 2016;6(03):157–165. doi: 10.1542/hpeds.2015-0164.