Abstract

Systems and protocols based on emergent responding training have been demonstrated to be effective instructional tools for teaching a variety of skills to typically developing adult learners across a number of content areas in controlled research settings. However, these systems have yet to be widely adopted by instructors and are not often used in applied settings such as college classrooms or staff trainings. Proponents of emergent responding training systems have asserted that this failure might be because the protocols require substantial resources to develop, and there are no known manuals or guidelines to assist teachers or trainers with the development of the training systems. In order to assist instructors with the implementation of systems, we provide a brief summary of emergent responding training systems research; review the published computer-based training systems studies; present general guidelines for developing and implementing a training and testing system; and provide a detailed, task-analyzed written and visually supported manual/tutorial for educators and trainers using free and easily accessible computer-based learning tools and web applications. Educators and trainers can incorporate these methods and learning tools into their current curriculum and instructional designs to improve overall learning outcomes and training efficiency.

Electronic supplementary material

The online version of this article (10.1007/s40617-019-00405-x) contains supplementary material, which is available to authorized users.

Keywords: Computer-based training, Educational technology, Emergent responding, Equivalence-based instruction

Traditional didactic teaching is generally used in classroom and training settings and typically includes lectures, textbooks, independent study, and an assessment of minimal mastery with assignments, projects, and tests. Most full-time college students will spend over 40 hr per week attending classes or engaged in independent study (Michael, 1991); however, this time does not ensure maximal mastery, competency, understanding, or proficiency in applying targeted skills in other settings. Ideally, instructors would ensure that the teaching protocols, learning systems, and educational tools that they employ are effective and lead to the desired outcomes, although that is not always the case (Keller, 1968; Skinner, 1968; Twyman & Heward, 2016). One way to address this issue might be to apply behavior-analytic principles to teaching protocols with the goal of improving student outcomes (Cihon & Swisher, 2018; Critchfield & Twyman, 2014). Given that the core tenets of applied behavior analysis (ABA) include objective and operationally defined target behaviors and mastery criteria, as well as evidence-based instructional technologies based on the systematic manipulation of environmental variables, ABA appears to be uniquely poised to ensure instruction is effective (Skinner, 1958).

Recently, digital learning tools have begun to revolutionize how students engage with educational content. These tools challenge the basic tenets of quality instruction by providing all students with access to learning opportunities outside of formal and structured educational settings (Beetham & Sharpe, 2013). Twyman (2014) described how technology may benefit education to help increase personalization and improve student outcomes. Quasi-automated training systems using computer-based learning tools derived from ABA principles and technologies (e.g., immediate feedback, prompt fading) present a possible response to the growing need for technological solutions (Baer, Wolf, & Risley, 1968) for quality instructional design (Cihon & Swisher, 2018; Keller, 1968; Skinner, 1958). Skinner (1958) emphasized the critical components of a training protocol that included immediate feedback, active responding, and individualized pacing. He further proposed that teaching machines that incorporated all three of these components could be used in a variety of instructional settings. Both Skinner (1958) and Keller (1968) also proposed a personalized system of instruction that allowed for students to actively work independently, allowing them to meet mastery criteria before advancing, and removing the need for group learning or teachers. However, only recently have those systems been used to teach complex and academically relevant skills to typically developing adult learners in a variety of settings (see Brodsky & Fienup, 2018).

In an effort to provide support for instructors and trainers who might be considering the use of computer-based emergent responding training systems, this article presents a summary of the principles of emergent responding, proposes some basic best practices and guidelines regarding the implementation of training and testing systems, presents a summary of computer-based systems that have been used in published research articles, and supplies access to a visually supported tutorial (hosted on the Behavior Analysis in Practice website as supplemental material to this publication) that guides instructors on how to implement training systems with freely available web-based learning tools. It was our intention to share an introductory manual for instructors and trainers so that they can more easily, and affordably, incorporate computer-based emergent responding training systems into their instructional practices. In addition, we hope that researchers will be able to conduct investigations more efficiently, given access to this publication and the supplemental materials.

Emergent Responding

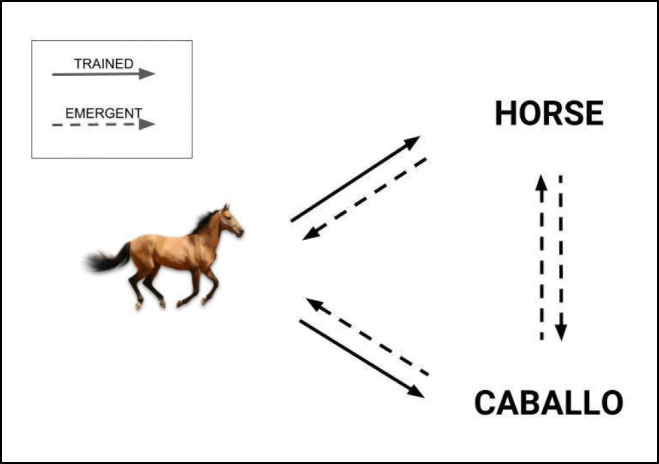

In a series of studies, Sidman (1994) empirically demonstrated the principle of stimulus equivalence, in which there is an emergence of untrained responding following trial-based training (i.e., match to sample [MTS]) that cannot be explained by existing behavioral principles (e.g., stimulus generalization). He observed that two or more stimuli could come to control responding in the same way despite a lack of physical similarity and in the absence of being directly taught (i.e., the stimuli become substitutable for one another). An equivalence class is established when the functional and relational properties of reflexivity, symmetry, and transitivity/equivalence emerge by testing following the direct training of baseline relations (Green & Saunders, 1998). For example, an equivalence class consisting of a picture of a horse (A), the English word horse (B), and the Spanish word caballo (C) is established after directly training only the A→B and A→C discriminations and testing for the emergence of all other discriminations (Figure 1). Reflexivity is demonstrated when a learner selects an identical comparison when presented with a sample (e.g., picture of a horse = picture of a horse). Symmetrical responding emerges when a learner selects the written word horse (B) when presented with the picture of the horse (A), and then selects the picture of the horse (A) when presented with the written word horse (B). Equivalence responding emerges after the direct training of two conditional discriminations (e.g., if A = B and A = C, then B = C and C = B). If after training these two baseline conditional discriminations, a learner selects the Spanish word caballo (C) when presented with the English word horse (B), and vice versa, without training, then equivalence is established. When this occurs, the stimuli are said to be equivalent to, or functionally substitutable for, each other (Green & Saunders, 1998).

The importance of emergent responding is that acquisition can be expedited without having to teach every discrimination or stimulus relation directly, as is often done in traditional teaching settings (Critchfield & Twyman, 2014; Pilgrim, 2020). In a three-member equivalence class consisting of six possible stimulus-stimulus relations (not including reflexive relations), two conditional discriminations are trained, and four emerge. Additionally, some preliminary research has suggested that emergent responding training frameworks may be more efficient at producing novel responding than other traditional teaching methods (Ferman et al., 2019; Haegele, McComas, Dixon, & Burns, 2011; Zinn, Newland, & Ritchie, 2015).
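The count of trained and emergent relations in the horse example can be checked with a short sketch. The function name `derived_relations` and the tuple representation of a relation (sample, comparison) are illustrative assumptions, not part of any published system; the code simply enumerates which non-reflexive relations follow from a set of trained baseline relations.

```python
def derived_relations(trained):
    """Split the relations implied by trained (sample, comparison) pairs
    into symmetry relations and transitivity/equivalence relations."""
    trained = set(trained)
    # Symmetry: reverse each trained relation (A->B yields B->A).
    symmetry = {(b, a) for a, b in trained} - trained

    # Combine relations that share a middle stimulus until nothing new appears.
    known = trained | symmetry
    changed = True
    while changed:
        changed = False
        for a, b in list(known):
            for c, d in list(known):
                # Skip reflexive results (a == d); they are tested separately.
                if b == c and a != d and (a, d) not in known:
                    known.add((a, d))
                    changed = True

    equivalence = known - trained - symmetry
    return symmetry, equivalence


# Baseline relations from the horse example: picture (A), "horse" (B), "caballo" (C).
trained = [("A", "B"), ("A", "C")]
symmetry, equivalence = derived_relations(trained)
print(sorted(symmetry))     # [('B', 'A'), ('C', 'A')]
print(sorted(equivalence))  # [('B', 'C'), ('C', 'B')]
```

With two trained relations, the sketch yields two symmetry relations and two equivalence relations, which matches the four emergent relations described in the text.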

Fig. 1.

Illustration of an equivalence class consisting of a picture of a horse (A), the English word horse (B), and the Spanish word caballo (C). Trained responses are represented by the solid arrows, and tested emergent responses are represented by the dotted arrows

Computer-Based Training

Computers (desktop, laptop, and mobile devices) can present training material with software applications, either locally or via the Internet. These applications tend to require active responding from the learner and can also be used with multiple people at the same time (Williams & Zahed, 1996), which can reduce the strain on individual instructors. In some cases, using a computer for all instructional delivery may obviate the need for an instructor altogether (Keller, 1968; Skinner, 1958), resulting in a more economical option (Johnson & Rubin, 2011). Consequently, computer-based training can provide opportunities for personalized instruction, including self-paced study and remedial practice to ensure mastery (Cihon & Swisher, 2018; Keller, 1968). In a review of 79 experiments published between 1995 and 2007 that evaluated computer-based training with typical adults, 95% of the studies used learner pacing (Johnson & Rubin, 2011). Additionally, 95% of studies produced superior or equal outcomes as compared to other alternatives when participants were required to engage with the material, suggesting that an important component of computer-based training is the interactive nature of the instructional system. Across all studies, the most common (32%) type of feedback provided was contingent but nonspecific feedback (i.e., did not explain what the correct answer was or why it was incorrect). Thus, it seems that all of these variables may contribute to effective computer-based training with typical adults.

Computer-based training has been successfully used to teach a variety of topics with typically developing adults, including discrete-trial training and backward chaining (Erath & DiGennaro Reed, 2019; Nosik & Williams, 2011) and analyzing and refining functional analysis conditions (Schnell, Sidener, DeBar, Vladescu, & Kahng, 2017), using several different modalities, including video modeling, quiz competencies, and other computer-based presentations (e.g., PowerPointTM). In addition, touch-based learner interfaces have recently been investigated, with promising results (Barron, Leslie, & Smyth, 2018). However, limitations to computer-based training systems include up-front costs that are required to purchase the software applications or develop the training materials (Kruse & Keil, 2000), as well as the need to identify application developers to create the systems. For example, Nosik and Williams (2011) reported that it required approximately 40 hr to record, edit, create audio voice-overs, and sequence animations for their 20-min training videos. Schnell et al. (2017) reported that they paid a programming consultant $20 per hour to build their tutorial and that it took 29 hr over a 4-month period. Although both studies suggested that the training systems were effective, the cost and time required to develop the systems might not be feasible for most educators.

Computer-Based Emergent Responding Training Systems

Over the past 15 years, researchers have demonstrated the efficacy of computer-based automated emergent responding training systems (see Brodsky & Fienup, 2018, and Rehfeldt, 2011, for reviews). Most published studies with computer-based training and testing systems evaluated applications that automatically presented trials to a learner, usually as visual stimuli (e.g., static images or printed text displayed on a computer monitor), with immediate feedback provided. Generally, researchers have used custom-developed systems to control the presentation of sample and comparison stimuli to mitigate position bias and to ensure a random stimulus presentation order. The systems also recorded learner responses and controlled the delivery of feedback. System development applications typically included a feature that allowed the instructor to label and load stimuli into the system a single time, and it would then present the sample and comparison stimuli for every trial according to programmed criteria. Researchers provided instructions to participants for how to use the system, and the learner responses usually took the form of either selecting a stimulus on the screen with a mouse or using a keyboard to enter a response (e.g., typing a word or phrase, typing a single letter). The computer systems also determined the order of training and testing conditions based on the performance of the learner and automatically provided remedial training sessions if necessary. In many cases, a researcher simply started the computer-based system, provided instructions to the learner, and observed the research session to ensure that the computer system performed without error or crashing. This type of computer-based training and testing system has been used to conduct a variety of research experiments in very controlled settings.
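The core behavior of such a system (randomized trial order, randomized comparison positions, response scoring, and immediate feedback) might be sketched as follows. This is a minimal illustration, not a reconstruction of any published system: the function names, the dictionary layout, and the dog and cat stimulus classes are hypothetical, and only the horse class comes from the example in this article.

```python
import random

# Hypothetical stimulus classes: A = picture, B = English word, C = Spanish word.
classes = {
    "horse": {"A": "horse.png", "B": "horse", "C": "caballo"},
    "dog": {"A": "dog.png", "B": "dog", "C": "perro"},
    "cat": {"A": "cat.png", "B": "cat", "C": "gato"},
}


def build_trials(classes, sample_member, comparison_member, rng):
    """Build one block of MTS trials (one per class) for a single relation."""
    trials = []
    for members in classes.values():
        comparisons = [m[comparison_member] for m in classes.values()]
        rng.shuffle(comparisons)  # vary on-screen positions to mitigate position bias
        trials.append({
            "sample": members[sample_member],
            "comparisons": comparisons,
            "correct": members[comparison_member],
        })
    rng.shuffle(trials)  # randomize the presentation order of trials
    return trials


def score_response(trial, selected):
    """Record whether the selection was correct and return nonspecific feedback."""
    is_correct = selected == trial["correct"]
    feedback = "Correct" if is_correct else "Incorrect"
    return is_correct, feedback


# One A->B training block; a seeded generator keeps the example repeatable.
for trial in build_trials(classes, "A", "B", random.Random(0)):
    print(trial["sample"], trial["comparisons"])
```

Passing the random number generator as a parameter is a design choice that makes a session reproducible for auditing; an applied system could instead use an unseeded generator.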

Computer-based emergent responding training systems have also been systematically compared to other teaching procedures. Zinn et al. (2015) compared the efficacy and efficiency of a custom computer-based emergent responding training system to a computer-based but unsystematic presentation of training trials to teach college students to identify brand and generic drug names. They found that the emergent responding training protocol was substantially more efficient. Similarly, O’Neill, Rehfeldt, Ninness, Munoz, and Mellor (2015) compared computer-based (i.e., a learning management system [LMS]) conditional discrimination training to simply reading descriptions from a textbook to teach verbal operants and found that conditional discrimination training was more effective. Most recently, Ferman et al. (2019) compared a custom computer-based system to video lectures to teach religious literacy to five children between the ages of 11 and 13. All participants in the computer-based group formed equivalence classes, whereas only one participant formed equivalence classes in the video lecture group. To date, few studies have directly compared the efficacy and efficiency of emergent responding training systems to other teaching methodologies, so future research must be conducted.

In addition to efficiency measures, many emergent responding training studies have evaluated participants’ preference and acceptance of computer-based training procedures compared to other teaching methods. Albright, Schnell, Reeve, and Sidener (2016) and Blair et al. (2019) collected social validity data from college students at the conclusion of the researchers’ studies. Participants in both studies reported successfully learning the relevant material, had generally favorable views of the procedures, and would recommend the procedures to others. Ferman et al. (2019) also collected social validity data and found that the group that was exposed to the computer-based emergent responding training system reported that the format was easier than the video lectures, that they thought their friends could learn more easily using the computer-based system than using video lectures, and that they would like to learn more topics using computer-based training systems. Although preliminary, these data support the acceptability of emergent responding training procedures that use computer-based training and testing systems to teach various skills across a wide age range.

Lack of Adoption of Computer-Based Emergent Responding Training Systems

Over several decades, researchers have determined that conditional discrimination training with typically developing learners, specifically via MTS, is one of the most effective strategies to increase the likelihood that untrained responding will emerge (Brodsky & Fienup, 2018; Critchfield & Twyman, 2014; Green, 2001; Pilgrim, 2020; Rehfeldt, 2011). However, despite empirical evidence, the adoption of published technological training systems appears to be nearly nonexistent in applied settings (e.g., clinical, educational, training; Brodsky & Fienup, 2018; Rehfeldt, 2011). The lack of comprehensive and wide-ranging applied scientist-practitioner research on computer-based emergent responding training systems outside of laboratory settings suggests that practitioners might not be implementing the procedures and thus are not encountering new socially relevant empirical questions. Dixon, Belisle, Rehfeldt, and Root (2018) referred to the published emergent responding research as “demonstration of concept exercises” (p. 8)—or essentially, translational. Brodsky and Fienup (2018) reported that only 16% of published studies that investigated emergent responding training systems with college students were conducted in classroom settings. Thus, there appears to be a disconnect between research and practice. As such, Critchfield (2018) proposed that the field should focus on emergent responding training technologies that can be more easily implemented in real-world settings. For example, Critchfield and Twyman (2014) provided a number of best practices for emergent learning and described how a systematic curriculum based on an emergent responding training framework could be used to teach various academic topics across different levels of learners.

Computer-based emergent responding training systems as an ABA technology

In an attempt to analyze and better understand the specific characteristics of computer-based emergent responding training systems, we reviewed the studies included in Rehfeldt (2011) and Brodsky and Fienup (2018), as well as several studies that were not included in those reviews. The purpose was to quantify the characteristics of the computer-based training and testing systems that were used to teach skills to typically developing learners (N = 37).¹ The goal was to determine whether the procedures used in these studies were technological, one of the seven dimensions of ABA (Baer et al., 1968), which is essential in the context of the application, replication, and extension of published research on computer-based training. Specifically, we judged a system to be technological if behavior-analytic instructors (e.g., faculty, trainers, supervisors) could realistically develop and implement it independently, without pursuing additional training in computer application development, based solely on the description of the system in the published article (or in the original source publication). For each study, if the researchers used application development software (e.g., Visual BasicTM) to create a system that was not commercially available, we determined whether the study included (or referenced) detailed written and/or visually supported step-by-step instructions for the independent development of the system. If the researchers used a commercially available application (e.g., Adobe CaptivateTM, BlackboardTM LMS) to create a system, we evaluated whether the application could be easily modified by independent practitioners (i.e., not directly associated with a college or university) for use across multiple computer operating systems to teach a variety of skills. In addition, for commercially available applications, we investigated whether each was reasonably affordable for individual use.

We concluded that no study met our criteria to be considered technological (Table 1), and none used a system that could realistically be implemented by independent behavior analysts across a variety of settings with varying technological and financial resources. The majority (78%) of the published studies used training systems that required software application development skills (e.g., Visual BasicTM or a scripting programming language) to create and that are not commercially available (n = 29). Some studies (11%) used systems that did not require application development skills (n = 4), but those studies used LMSs (e.g., BlackboardTM or Distance2LearnTM) that are not available to all independent instructors or trainers who are not associated with a college or university (and even those who are associated with a college or university might not have access to a specific system). In addition, those studies did not provide detailed instructions on how to implement an emergent learning system with the LMS. One older study used a commercial application (HyperCardTM 2.0) that is no longer available for use. Several of the systems (24%) were web based (n = 9) or touch based (11%, n = 4; no system was both web based and touch based); however, these systems were custom developed and could not be used outside of laboratory settings, are no longer available, or used an LMS that is not available to all instructors.

Table 1.

Characteristics of published computer-based emergent responding training and testing systems

| | Number of Studies (N = 37) | Percentage of Total Studies |
|---|---|---|
| Participants (all typically developing) | | |
| Adults | 27 | 73% |
| Children | 10 | 27% |
| Technology | | |
| Custom-developed system | 29 | 78% |
| Learning management system (LMS) | 4 | 11% |
| Commercially available application | 3 | 8% |
| Commercially available application (no longer available) | 1 | 3% |
| Training/testing application | | |
| Custom system developed with Visual BasicTM / Visual StudioTM / Visual ++TM / Visual Basic.netTM | 17 | 46% |
| Custom system developed with unknown programming language | 5 | 14% |
| Web browser with custom application script (no longer available) | 4 | 11% |
| BlackboardTM LMS | 2 | 5% |
| Adobe CaptivateTM | 1 | 3% |
| Distance2LearnTM LMS | 1 | 3% |
| Custom system developed with Visual BasicTM and Adobe Action ScriptTM | 1 | 3% |
| Custom system developed with Visual BasicTM and C++TM | 1 | 3% |
| Custom system developed with Visual BasicTM, Adobe FlashTM, Adobe ActionScript 2.0TM, and C++TM | 1 | 3% |
| E-PrimeTM | 1 | 3% |
| HyperCardTM (no longer available) | 1 | 3% |
| SakaiTM LMS | 1 | 3% |
| ToolBook InstructorTM | 1 | 3% |
| Skilled application development required | | |
| Yes | 29 | 78% |
| No | 7 | 19% |
| N/A (application no longer available) | 1 | 3% |
| Computer operating system | | |
| WindowsTM | 34 | 92% |
| macOSTM | 10 | 27% |
| WindowsTM and macOSTM | 9 | 24% |
| Chrome OSTM | 8 | 22% |
| WindowsTM, macOSTM, and Chrome OSTM | 8 | 22% |
| Web based | | |
| Yes | 9 | 24% |
| No | 28 | 76% |
| Touch based | | |
| Yes | 4 | 11% |
| No | 33 | 89% |
| Mobile devices (phones and/or tablets) | | |
| Yes | 5 | 14% |
| No | 32 | 86% |
| Cost | | |
| Not available for purchase | 34 | 92% |
| $995–$2,795 (single license one-time purchase); $159–$1,895 (single license yearly subscription) | 3 | 8% |
| Technological (dimension of ABA) | | |
| Yes | 0 | 0% |
| No | 37 | 100% |

Of the studies that used commercially available applications (8%, n = 3), one study (Sella, Ribeiro, & White, 2014) used e-learning development software, Adobe CaptivateTM (Adobe, 2019), that requires substantial training in order to implement in educational or training settings. A single license of CaptivateTM costs $1,299, and a subscription costs $407.88 per user per year. Another study (Greville, Dymond, & Newton, 2016) used E-PrimeTM (Psychology Software Tools, 2019), which is a psychology research application. A single-user license costs $995 and a subscription costs $159 per user per year, and the program can only be installed on computers with the WindowsTM operating system. A third study (Connell & Witt, 2004)² used ToolBook InstructorTM (SumTotal, 2019), which is an e-learning development application that can only be installed on computers with WindowsTM; a single license costs $2,795, and a subscription costs $1,895 per user per year.

Across all 37 studies, only nine studies (24%) reported systems that could be used with both the WindowsTM and macOSTM operating systems; however, of those nine studies, four studies reported research with custom systems, and four studies used LMSs that are not available to all instructors. The e-learning modules developed in Adobe CaptivateTM can be used with both the WindowsTM and macOSTM computer operating systems; however, CaptivateTM is limited by its substantial cost and developmental training requirements. The systems that used Adobe CaptivateTM and LMSs (n = 5) could, in theory, be used on mobile devices; however, as noted, these systems are limited by cost, requisite development skills and time, and availability to independent instructors in a variety of settings. Therefore, the major general limitation common to all the computer systems used in these studies is the inability to replicate, extend, or apply the technology in socially relevant and meaningful ways.

Specific barriers to implementing computer-based emergent responding training systems

As demonstrated by reviews of published research on computer-based emergent responding systems (Brodsky & Fienup, 2018; Rehfeldt, 2011), it is clear that current technology can assist with the development of teaching systems. However, substantial barriers that prevent their adoption and extension by educators and trainers exist. These barriers include the limited accessibility, extensibility, and applicability of custom-developed systems; the requisite computer programming skills to develop the systems; and the time and money required to create the systems (Cummings & Saunders, 2019). Additionally, instructors who might consider incorporating an emergent learning framework into their practice might lack sufficient conceptual and practical skills with emergent responding and might not fully understand the possible advantages to the procedures, established best practices, or lingering empirical questions.

Limited accessibility, extensibility, and applicability

Nearly all the systems that were deployed in the studies that we reviewed are not accessible by independent trainers. As a result, the systems cannot be extended to different settings or learners, and a range of skills cannot easily be taught because the systems are not adaptable by others who were not part of the research team. Additionally, a lack of user-friendly guidelines or instructions for the creation of training systems prevents others from replicating technologies that have been published as part of research studies. Moreover, for computer-based systems to be truly applicable and relevant to a broad group of consumers, the systems must be able to be implemented across multiple computer operating systems. As of today, nearly all systems that were reported can only be used on a single operating system (WindowsTM). Finally, given that a large number of learners have access to mobile devices (e.g., phones and tablets), systems that cannot run on mobile devices are inherently limited (Brodsky & Fienup, 2018).

Application development and programming skills

The vast majority of published studies examined systems that required advanced application development skills to create and implement. A typical instructor, even one with a great deal of exposure to modern technology and software, and even one with some limited computer programming skills, would still most likely find it overly time consuming and challenging to attempt to replicate the systems from published research. As an example, Albright et al. (2016) and Blair et al. (2019) used the same custom and proprietary computer-based emergent responding training system to teach behavior-analytic concepts to college students. The interface of the system mimicked traditional MTS training procedures with the presentation of the visual sample and comparison stimuli on a computer screen and selection-based responses (clicking with a computer mouse) emitted by the learner with immediate automated feedback provided by the computer. However, Albright et al. (2016) reported that “substantial time” (p. 305) was required to develop and customize the software and suggested that more readily available applications could be used.

Cost

If a study investigated the efficacy of a training system with a commercially available computer application, the application was very expensive to purchase, either as a single license or as a subscription. Applications, replications, and extensions of translational research need not exclusively use systems that are free; however, for instructors to implement these systems, the cost of the applications must not be so high that it precludes socially relevant applications in a variety of settings (Howard, 2019).

Instructor skills

Critchfield (2018) suggested that the dissemination of the technology of emergent responding training systems and protocols has been stalled due to a limited number of researchers in the area and the use of overly technical jargon. This may have resulted in a general lack of awareness of emergent responding training protocols among instructors due to a lack of motivation to learn the conceptual foundations and technical jargon associated with emergent responding. If instructors are not fluent in emergent responding concepts and applications, they might be less likely to implement such systems or even pursue available professional development (e.g., conference presentations or workshops). Until very recently (Pilgrim, 2020), succinct and practical overviews of emergent responding training protocols did not exist, and this may have contributed to the lack of dissemination of the technology.

Possible solutions to the lack of adoption of computer-based emergent responding training systems

Several authors (e.g., Brodsky & Fienup, 2018; Dixon et al., 2018) have called for the development of tools for instructors—for example, task analyses—to facilitate the implementation of emergent responding training systems in classrooms. In addition, if emergent responding training protocols are to be implemented by instructors on a large scale, then training and learning systems that are easy to develop and implement must be readily available for modification, extension, and use by practicing behavior analysts, trainers, and educators.

One solution might be to create simple visual→visual or auditory→visual MTS training and testing modules using presentation software (Cummings & Saunders, 2019) for teaching arbitrary matching and topography-based responses. MTS training and testing sessions can be conceptualized as discrete modules, and the individual components can easily be extended to an emergent responding training and testing system (Table 2). For example, a behavior-analytic supervisor could create simple, low-cost, and easy-to-implement computer-based modules (see supplemental resources) to teach research designs (Walker & Rehfeldt, 2012), the identification of functions of behaviors (Albright et al., 2016), or the identification of functional relations (Blair et al., 2019) to trainees. Additionally, college instructors could teach the concepts related to verbal operants (O’Neill et al., 2015) by systematically embedding slide-based trials with immediate feedback, or other interactive activities like QuizletTM (“Get to know Quizlet Learn!” n.d.), during in-class instruction and then test for emergent responding. Another option would be to require students to complete web-based instructional modules independently to support and enhance in-class instruction or as part of asynchronous online learning systems (Keller, 1968; Nguyen, 2015; Skinner, 1958). Such a system addresses many of the limitations noted in previously published research.

Table 2.

Example order of sessions and training/testing characteristics for an emergent responding training system with Google Slides™ and Google Forms™

| Module Type and Order | Recommended Applicationᵃ | Sample → Comparison | Trials per Block | Mastery Criterion |
|---|---|---|---|---|
| Pretest | Forms™ | All relations | 18 | N/Aᵇ |
| Train | Slides™ | A→B | 9 | 9/9 × 2 |
| Symmetry test | Forms™ | B→A | 3 | 3/3 (A→B train if not met) |
| Train | Slides™ | A→C | 9 | 9/9 × 2 |
| Symmetry test | Forms™ | C→A | 3 | 3/3 (A→C train if not met) |
| Equivalence test | Forms™ | B→C | 3 | 3/3 (A→B train if not met) |
| Equivalence test | Forms™ | C→B | 3 | 3/3 (A→B train if not met) |
| Maintenance | Forms™ | All relations | 18 | 18/18 (A→B train if not met) |

ᵃEither Google Slides™ or Google Forms™ can be used for both training and testing modules.

ᵇAnalyze score by relation and determine which relations to train.
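The session order and mastery criteria in Table 2 can be read as a small decision procedure. The following Python sketch is one possible reading, not part of the published system: it assumes that "9/9 × 2" means two consecutive blocks at 100% correct, and that after a failed test the learner returns to the named training module and then continues through the sequence again from that point. The names `Module`, `SEQUENCE`, and `run_system` are hypothetical, and `score_block` stands in for whatever actually delivers a block (e.g., a Slides or Forms module) and returns the number of correct trials.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass(frozen=True)
class Module:
    name: str                      # "Train", "Symmetry test", ...
    relation: str                  # sample → comparison relation
    trials: int                    # trials per block
    consecutive_blocks: int = 1    # perfect blocks in a row required (assumed reading of "× 2")
    retrain: Optional[str] = None  # relation to retrain if the criterion is not met


# Order and criteria follow Table 2; the pretest is omitted because it has no criterion.
SEQUENCE = [
    Module("Train", "A→B", 9, consecutive_blocks=2),
    Module("Symmetry test", "B→A", 3, retrain="A→B"),
    Module("Train", "A→C", 9, consecutive_blocks=2),
    Module("Symmetry test", "C→A", 3, retrain="A→C"),
    Module("Equivalence test", "B→C", 3, retrain="A→B"),
    Module("Equivalence test", "C→B", 3, retrain="A→B"),
    Module("Maintenance", "All relations", 18, retrain="A→B"),
]


def run_system(score_block: Callable[[Module], int],
               max_blocks: int = 200) -> List[Tuple[str, str, int, int]]:
    """Step through SEQUENCE, returning a log of (name, relation, correct, trials)."""
    train_index = {m.relation: i for i, m in enumerate(SEQUENCE) if m.name == "Train"}
    log: List[Tuple[str, str, int, int]] = []
    i, streak = 0, 0
    while i < len(SEQUENCE) and len(log) < max_blocks:  # cap prevents endless retraining
        module = SEQUENCE[i]
        correct = score_block(module)
        met = correct == module.trials
        log.append((module.name, module.relation, correct, module.trials))
        if module.name == "Train":
            streak = streak + 1 if met else 0
            if streak >= module.consecutive_blocks:
                i, streak = i + 1, 0
        elif met:
            i += 1
        else:
            # Assumption: return to the named training module and resume from there.
            i, streak = train_index[module.retrain], 0
    return log
```

For example, `run_system(lambda m: m.trials)` simulates a learner who responds correctly on every trial and passes through each module once (twice for each training module). An instructor applying the table by hand follows the same branching.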

Limited accessibility, extensibility, and applicability

When training systems are built with computer applications that are freely available across operating systems, they become more accessible, extensible, and applicable across a range of instructors, including professors and staff trainers. Previous researchers have typically used custom systems. Providing instructors with directions for incorporating commercially available software into an emergent responding training system, even if that software lacks some of the functionality of a custom system, could lead to wider adoption. Specifically, the system that we developed (see supplemental resources) uses Google Slides™ and Google Forms™ (Table 3), both of which are free and easily accessed via any modern computer that runs the Windows™, macOS™, or Chrome OS™ operating systems or via tablets or phones with an Internet connection. In addition, reusable and easily shareable template files can be created to expedite the extension and application of training systems across learners, skills, and settings (Howard, 2019).

Table 3.

Characteristics and functions of Google Slides™ and Google Forms™ in an emergent responding training system

| | Google Slides™ | Google Forms™ |
|---|---|---|
| Function | Presentation of MTS training and testing trials | “Quiz” feature for training and testing trials |
| Training types | MTS | Quizzes with multiple-choice and/or short-answer questions |
| Testing types | MTS | Quizzes with multiple-choice and/or short-answer questions |
| Stimulus forms | Visual (text, pictorial, animation, video), auditory | Visual (text, pictorial, animation, video), auditory |
| Response forms | Selection, vocal, typed/written, signed | Selection, typed/written |
| Data collection | Manual | Automatic |
| Available on common platforms (e.g., Windows™, macOS™, Chrome OS™) | Yes | Yes |
| Web based | Yes | Yes |
| Mobile access (iOS™, Android™, tablet) | Yes | Yes |
| Touch-based interface | Yes | Yes |
| Offline access | Yes | No |
| Link | https://slides.google.com | https://forms.google.com |
| Cost | $0 | $0 |
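Because a quiz-style form records responses automatically, a pretest can be scored relation by relation to decide what to train, as the note to Table 2 suggests. The sketch below illustrates that analysis in Python under stated assumptions: the dictionaries are a hypothetical in-memory layout (not the export format of any particular application), each question is tagged by the instructor with the relation it probes, and any relation scored below 100% is flagged for training. The function names `score_by_relation` and `relations_to_train` are illustrative.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def score_by_relation(responses: Dict[str, str],
                      answer_key: Dict[str, Tuple[str, str]]) -> Dict[str, Tuple[int, int]]:
    """Return {relation: (correct, total)}.

    responses:  {question_id: selected answer}
    answer_key: {question_id: (relation, correct answer)}
    """
    totals = defaultdict(lambda: [0, 0])
    for question_id, (relation, correct_answer) in answer_key.items():
        totals[relation][1] += 1
        # Unanswered questions count as incorrect.
        if responses.get(question_id) == correct_answer:
            totals[relation][0] += 1
    return {relation: (c, t) for relation, (c, t) in totals.items()}


def relations_to_train(scores: Dict[str, Tuple[int, int]]) -> List[str]:
    """Assumed rule: flag every relation that was not answered perfectly."""
    return [relation for relation, (correct, total) in scores.items() if correct < total]


# Hypothetical three-question pretest for one learner.
answer_key = {"q1": ("A→B", "B1"), "q2": ("A→B", "B2"), "q3": ("B→A", "A1")}
responses = {"q1": "B1", "q2": "B2", "q3": "A2"}
scores = score_by_relation(responses, answer_key)
print(scores, relations_to_train(scores))
```

The 100% cutoff is only a placeholder; an instructor could substitute any criterion that suits the content and learners.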

Application development and programming skills

Critchfield and Twyman (2014) and Pilgrim (2020) provided some basic guidelines, described examples, and suggested some preliminary steps that trainers can take to use emergent responding training protocols; however, the recommendations were general and did not specifically address the development of computer-based systems. Dixon et al. (2018) argued that “efficient transportability through manualization or other means is likely pivotal to the adoption, at a global scale, of technologies based on derived stimulus relations” (p. 8), yet, to date, this type of manualization has not occurred. Trainers would benefit from further refinement of published guidelines and a more manualized form of procedural steps that results in a fully functional emergent responding training system. Specifically, the existence of a tutorial that describes how to use low-cost and widely available computer-based learning tools to promote instruction that results in emergent and generative responding might help with the dissemination of the technology of emergent responding training systems (Rehfeldt, 2011). The use of widely available applications such as PowerPoint™, Google Slides™, or Google Forms™ in an emergent responding training system does not require extensive experience, specific training, or computer programming skills.

Our tutorial extends the Cummings and Saunders (2019) tutorial that demonstrated how to design Microsoft PowerPoint™ slides to teach conditional discriminations. They provided an easy-to-follow task analysis with illustrations and examples for creating a computerized auditory-visual MTS system for learners with disabilities without requiring computer programming skills. However, some limitations to their tutorial include programming with only auditory sample stimuli, the use of a non-web-based application (PowerPoint™), the lack of automated prompting procedures, the lack of feedback for incorrect responses or error correction, the inability to use the training system on mobile devices, limited learner response topographies, the lack of integration into an emergent responding training system, and the substantial cost for purchasing licenses of the software to implement on multiple computers. The systems described in our tutorial address several of these limitations.

Cost

The applications that we propose for use, Google Slides™ and Forms™, are free.

Instructor skills

Review articles (Brodsky & Fienup, 2018; Rehfeldt, 2011), a textbook chapter (Pilgrim, 2020), and the supplemental materials associated with the current article might provide instructors with best practices, protocols, and examples of easy-to-access systems. Specifically, the tutorial that we created is intended to assist instructors with implementing best practices for computer-based MTS and emergent responding training so that the procedures can more easily be applied. Best practices for conditional discrimination training procedures are widely known and are listed in Table 4. In addition, the results of basic and applied research have shown that several procedural designs result in more consistent and reliable emergent responding; however, recommendations for developing emergent responding systems (Critchfield & Twyman, 2014) are less widely recognized and are briefly reviewed here. The following guidelines should be considered as preliminary because further research must be conducted, particularly with typically developing adult learners (Brodsky & Fienup, 2018; Fienup, Hamelin, Reyes-Giordano, & Falcomata, 2011; Pilgrim, 2020). However, based on basic and translational research, the first generally accepted procedure for emergent responding training based on stimulus equivalence is to use a simple-to-complex (STC) training protocol (Arntzen, 2012; Fields, Adams, & Verhave, 1993; Fienup, Wright, & Fields, 2015; Pilgrim, 2020). In an STC protocol, baseline conditional responses (A→B) are taught first, followed immediately by a test for symmetry (B→A). If symmetry is established, further baseline responses (A→C) are trained and symmetry (C→A) is similarly tested. Finally, tests are conducted to determine if the transitive/equivalence responses (B→C, C→B) have emerged. The second generally accepted procedure is to use a one-to-many (OTM) training structure (Arntzen, 2012; Pilgrim, 2020; Saunders & Green, 1999). 
With an OTM training structure, the A→B and A→C responses are taught, usually successively (instead of successively teaching A→B, then B→C). Tests for topography-based responding (e.g., vocal, written, or typed responses, or answers to fill-in-the-blank or open-ended questions) are also recommended where appropriate (Pilgrim, 2020; Reyes-Giordano & Fienup, 2015; Skinner, 1958). Other best practices for effective emergent responding systems, including for generalization and maintenance, such as prompting strategies, reinforcement schedules, and mastery criteria, have not been firmly established, and further research is required. The established best practices, the simple training and testing structure described in our tutorial, and the incorporation of accessible learning tools might help some instructors to better conceptualize what a comprehensive emergent responding training system could look like in their setting.
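The relations involved in a one-to-many structure can be enumerated mechanically, which may help when planning which modules to build. The Python sketch below is a simplified illustration: given the trained baseline relations, it labels every other ordered pair of class members as a symmetry relation (the reverse of a trained relation) or a transitivity/equivalence relation (everything else). The function name `classify_relations` is hypothetical, and the simple labeling rule is an assumption that fits the three-member OTM case described above rather than a general treatment of all training structures.

```python
from itertools import permutations
from typing import Dict, Iterable, Tuple


def classify_relations(trained: Iterable[Tuple[str, str]],
                       members: Iterable[str]) -> Dict[str, str]:
    """Label each sample→comparison pair among the class members."""
    trained_set = set(trained)
    labels = {}
    for sample, comparison in permutations(members, 2):
        if (sample, comparison) in trained_set:
            kind = "trained baseline"
        elif (comparison, sample) in trained_set:
            kind = "symmetry"          # reverse of a directly trained relation
        else:
            kind = "transitivity/equivalence"
        labels[f"{sample}→{comparison}"] = kind
    return labels


# One-to-many structure: A is the sample for both trained relations.
for relation, kind in classify_relations([("A", "B"), ("A", "C")], "ABC").items():
    print(relation, kind)
```

With A→B and A→C trained, the sketch labels B→A and C→A as symmetry and B→C and C→B as transitivity/equivalence, matching the tests described in the STC protocol.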

Table 4.

Conditional discrimination training best practices and recommended protocols for emergent responding training systems with Google Slides™ and Google Forms™

| Conditional Discrimination Best Practicesᵃ | Slides™ | Forms™ |
|---|---|---|
| Auditory stimulus used as sample | Yes | Yes |
| Counterbalanced presentation order and position of sample and comparison stimuli | Yes | Yes |
| Fast-paced trial presentation | Yes | Yes |
| At least three comparison stimuli in an array | Yes | Yes |
| Immediate trial-by-trial reinforcement/feedback during training | Yes | No |
| Trials prepared out of view of the learnerᵇ | N/A | N/A |
| A different sample stimulus presented every trial | Yes | Yes |
| Observing response required | Yes | No |
| Basic learner and readiness skills taught prior to using MTSᶜ | N/A | N/A |
| Errorless teaching or prompt-fading methods used | Yes | Yesᵈ |
| Recommended Protocols for Emergent Responding Training Systemsᵉ | | |
| Three or more equivalence classes | Yes | Yes |
| Three or more members in an equivalence class | Yes | Yes |
| One-to-many training structure | Yes | Yes |
| Simple-to-complex training protocol | Yes | Yes |
| Selection-based responses taught | Yes | Yes |
| Topography-based responses taught | Noᶠ | Yes |
| Test for emergent selection-based responses without feedback | Yes | Yes |
| Test for emergent topography-based responses without feedback | Noᶠ | Yes |
| Training/testing mastery criteria to facilitate emergent responding | Yes | Yes |

ᵃGreen (2001), Grow and LeBlanc (2013), and MacDonald and Langer (2018).

ᵇNot applicable for a computer-based system.

ᶜNot applicable for typically developing learners.

ᵈPrompting procedures can be implemented; however, they cannot be automated.

ᵉArntzen (2012); Brodsky and Fienup (2018); Critchfield and Twyman (2014); Fienup, Wright, and Fields (2015); Pilgrim (2020); Rehfeldt (2011); Reyes-Giordano and Fienup (2015); and Saunders and Green (1999).

ᶠTopography-based responses can be taught and tested; however, responses cannot be recorded by the system and must be directly observed by the trainer.
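Several of the practices in Table 4 (counterbalanced positions, at least three comparisons, a different sample on every trial) can be checked before any slides are built by drafting the trial list programmatically. The following Python sketch is one way to do so and is not taken from the supplemental tutorial: it assumes three classes, pairs each sample with each correct-comparison position exactly once (nine trials), and reshuffles until no sample appears on two consecutive trials. The function name `build_block` and the stimulus labels (A1, B1, and so on) are hypothetical.

```python
import random
from typing import Dict, List, Optional, Sequence


def build_block(classes: Sequence[int] = (1, 2, 3),
                seed: Optional[int] = None) -> List[Dict]:
    """Draft one counterbalanced A→B block: one trial per (class, correct position)."""
    rng = random.Random(seed)
    n = len(classes)
    pairs = [(c, position) for c in classes for position in range(n)]
    # Reshuffle until the same sample never appears on consecutive trials.
    while True:
        rng.shuffle(pairs)
        if all(a[0] != b[0] for a, b in zip(pairs, pairs[1:])):
            break
    trials = []
    for c, position in pairs:
        distractors = [d for d in classes if d != c]
        rng.shuffle(distractors)           # vary the order of incorrect comparisons
        array = distractors[:]
        array.insert(position, c)          # place the correct comparison at its slot
        trials.append({
            "sample": f"A{c}",
            "comparisons": [f"B{x}" for x in array],
            "correct_position": position,  # 0 = left, 1 = middle, 2 = right
        })
    return trials


for trial in build_block(seed=1):
    print(trial)
```

Each printed row corresponds to one slide or quiz question. Fixing the `seed` makes a block reproducible, and changing it yields a new order, which may help reduce memorization of positions across repeated blocks.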

Discussion

Several review articles on emergent responding training systems, particularly EBI (Brodsky & Fienup, 2018; Rehfeldt, 2011), have concluded that it is critical for applied behavior analysts to determine how to disseminate the technology of derived stimulus relations to educators and trainers to facilitate the adoption of technical protocols in practice. Additionally, reviewers and researchers have agreed that in order for computer-based emergent responding training systems to gain more widespread use, behavior analysts must design manualized technological applications that can be widely and easily accessed, applied, replicated, and extended by instructors (Critchfield, 2018; Dixon et al., 2018). However, to date, few resources have been provided directly to educators and trainers to meet this challenge.

The supplemental tutorial and materials associated with this article include step-by-step written and visual instructions for constructing such a system. The supplemental materials extend the Cummings and Saunders (2019) tutorial, attempt to provide a means to implement the recommendations made by Pilgrim (2020), and extend other published but proprietary systems (e.g., Albright et al., 2016; Barron et al., 2018; Blair et al., 2019; Ferman et al., 2019; O’Neill et al., 2015; Zinn et al., 2015) with freely available and user-friendly web-based applications. The supplemental tutorial is intended to demonstrate how to design, create, and deploy computer-based emergent responding training systems for typically developing learners with widely available, inexpensive, and user-friendly web-based applications. The systems described in this tutorial add several features and extend previous demonstrations to further assist educators and practitioners with the development of functional training systems for a variety of learners. In addition, alternative MTS designs, features, and systems are briefly described (e.g., prompting, click- or touch-based navigation as opposed to time-based systems, prevention of memorization and position bias, testing systems).

Although this initial step toward developing a technical manual for creating emergent responding training systems is functional and can be used in a variety of research and practice settings, the systems described here do have limitations. There does not appear to be a perfect solution to the challenge of digitizing and automating MTS procedures. Some limitations include the time required to develop training systems, the inability to electronically and automatically record user responses during training (without advanced computer application development skills), and the lack of a comprehensive, cohesive, extensible, and automated computer-based training system that incorporates the necessary features and flexibility that are often components of traditional analog MTS systems in a user-friendly package. One solution to these limitations is to enlist the help of computer programmers and application developers. However, that leads to another obvious limitation: cost. One distinct advantage that the system described in this article has is that it uses applications that are free, universally available today, and ready for adoption and extension by behavior-analytic instructors. Unlike some of the custom proprietary systems described in published studies (e.g., Albright et al., 2016; Barron et al., 2018; Blair et al., 2019; Ferman et al., 2019; O’Neill et al., 2015; Zinn et al., 2015), the system described here will presumably continue to be freely available and allow for the replication, extension, and application of research across an array of learners and settings.

Another limitation of the system described in this article, as well as other systems that are designed to produce emergent responding, is that relatively little is known about how efficient these systems are when compared to more traditional teaching methods. Future research should directly compare these types of quasi-automated computerized emergent responding training systems to more traditional methods of teaching such as reading texts, memorization, and didactic and interactive lecture, and this tutorial can be used to design such systems that can easily be investigated in future research.

This technical tutorial is hopefully a small step toward a full implementation of emergent responding training procedures and protocols in applied settings (e.g., higher education, staff training). Readers are encouraged to implement individualized systems based on our recommendations and supplemental resources and add to the technical manualization of training and testing systems by publishing their own manuals with updated characteristics and features, conducting applied and practitioner-oriented research, presenting workshops, and sharing ideas and resources. In addition, instructors and researchers are encouraged to use tutorials like this one to develop systems that incorporate the characteristics of personalized systems of instruction and programmed instruction (Cihon & Swisher, 2018).

As others have noted (Brodsky & Fienup, 2018; Pilgrim, 2020; Rehfeldt, 2011), Sidman (1971) first demonstrated the principle of stimulus equivalence nearly 50 years ago. However, practicing behavior analysts, particularly instructors and trainers, are still not using technologies derived from the principles of stimulus equivalence in educational or training settings. The lack of attention paid to this basic behavioral principle is concerning given that many students and trainees are not benefitting from an empirically supported practice. Only with more emphasis on the development and routine implementation of functional and relevant emergent responding training systems by behavior-analytic instructors will we be able to refine the technology to make it more suitable for mass adoption and ultimately extend the impact of training systems with the goal of emergent responding to as many learners as possible.

Author Note

Bryan J. Blair, Long Island University, Brooklyn, and Behavior Analysis Unlimited, LLC; Lesley A. Shawler, Endicott College.

The authors would like to thank Dr. Paul Mahoney for his critical feedback on the procedures described in this article.

Electronic supplementary material

(DOCX 1838 kb)

Funding

This study received no funding.

Compliance with Ethical Standards

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Footnotes

List of articles is available upon request.

This is a relatively old study, and the application ToolBook Instructor has most likely substantially changed since the publication of the study.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- Adobe. (2019). Adobe Captivate 2019 release. Retrieved from https://www.adobe.com/products/captivate.html

- Albright LK, Schnell L, Reeve KF, Sidener TM. Using equivalence-based instruction to teach graduate students in applied behavior analysis to interpret operant functions of behavior. Journal of Behavioral Education. 2016;25(3):290–309. doi: 10.1007/s10864-016-9249-0.

- Arntzen E. Training and testing parameters in formation of stimulus equivalence: Methodological issues. European Journal of Behavior Analysis. 2012;13(1):123–135. doi: 10.1080/15021149.2012.11434412.

- Baer DM, Wolf MM, Risley TR. Some current dimensions of applied behavior analysis. Journal of Applied Behavior Analysis. 1968;1(1):91–97. doi: 10.1901/jaba.1968.1-91.

- Barron R, Leslie JC, Smyth S. Teaching real-world categories using touchscreen equivalence-based instruction. The Psychological Record. 2018;68(1):89–101. doi: 10.1007/s40732-018-0277-0.

- Beetham H, Sharpe R, editors. Rethinking pedagogy for a digital age: Designing for 21st century learning. New York, NY: Routledge; 2013.

- Blair BJ, Tarbox J, Albright L, MacDonald J, Shawler LA, Russo SR, Dorsey MF. Using equivalence-based instruction to teach the visual analysis of graphs. Behavioral Interventions. 2019;34(3):405–418. doi: 10.1002/bin.1669.

- Brodsky J, Fienup DM. Sidman goes to college: A meta-analysis of equivalence-based instruction in higher education. Perspectives on Behavior Science. 2018;41(1):95–119. doi: 10.1007/s40614-018-0150-0.

- Cihon, T., & Swisher, M. (2018). Slow and steady wins the race? [Web log post]. Retrieved from https://science.abainternational.org/slow-and-steady-wins-the-race/traci-cihonunt-edu/

- Connell JE, Witt JC. Applications of computer-based instruction: Using specialized software to aid letter-name and letter-sound recognition. Journal of Applied Behavior Analysis. 2004;37:67–71. doi: 10.1901/jaba.2004.37-67.

- Critchfield TS. Efficiency is everything: Promoting efficient practice by harnessing derived stimulus relations. Behavior Analysis in Practice. 2018;11(3):206–210. doi: 10.1007/s40617-018-0262-8.

- Critchfield TS, Twyman JS. Prospective instructional design: Establishing conditions for emergent learning. Journal of Cognitive Education and Psychology. 2014;13(2):201–216. doi: 10.1891/1945-8959.13.2.201.

- Cummings, C., & Saunders, K. J. (2019). Using PowerPoint 2016 to create individualized matching to sample sessions. Behavior Analysis in Practice, 12(2), 483–490. doi:10.1007/s40617-018-0223-2

- Dixon MR, Belisle J, Rehfeldt RA, Root WB. Why we are still not acting to save the world: The upward challenge of a post-Skinnerian behavior science. Perspectives on Behavior Science. 2018;41(1):1–27. doi: 10.1007/s40614-018-0162-9.

- Erath, T. G., & DiGennaro Reed, F. D. (2019). A brief review of technology-based antecedent training procedures. Journal of Applied Behavior Analysis. Advance online publication. Retrieved from https://onlinelibrary.wiley.com/doi/abs/10.1002/jaba.633. doi:10.1002/jaba.633

- Ferman, D. M., Reeve, K. F., Vladescu, J. C., Albright, L. K., Jennings, A. M., & Domanski, C. (2019). Comparing stimulus equivalence-based instruction to a video lecture to increase religious literacy in middle-school children. Behavior Analysis in Practice. Advance online publication. Retrieved from https://link.springer.com/article/10.1007%2Fs40617-019-00355-4. doi:10.1007/s40617-019-00355-4

- Fields L, Adams BJ, Verhave T. The effects of equivalence class structure on test performances. The Psychological Record. 1993;43(4):697–713. doi: 10.1007/BF03395907.

- Fienup DM, Hamelin J, Reyes-Giordano K, Falcomata TS. College-level instruction: Derived relations and programmed instruction. Journal of Applied Behavior Analysis. 2011;44(2):413–416. doi: 10.1901/jaba.2011.44-413.

- Fienup DM, Wright NA, Fields L. Optimizing equivalence-based instruction: Effects of training protocols on equivalence class formation. Journal of Applied Behavior Analysis. 2015;48(3):613–631. doi: 10.1002/jaba.234.

- Green G. Behavior analytic instruction for learners with autism: Advances in stimulus control technology. Focus on Autism and Other Developmental Disabilities. 2001;16(2):72–85. doi: 10.1177/108835760101600203.

- Green G, Saunders RR. Stimulus equivalence. In: Lattal KA, Perone M, editors. Handbook of research methods in human operant behavior. New York, NY: Plenum; 1998. pp. 229–262.

- Greville, W. J., Dymond, S., & Newton, P. M. (2016). The student experience of applied equivalence-based instruction for neuroanatomy teaching. Journal of Educational Evaluation for Health Professions, 13(32). doi:10.3352/jeehp.2016.13.32

- Grow L, LeBlanc L. Teaching receptive language skills. Behavior Analysis in Practice. 2013;6(1):56–75. doi: 10.1007/BF03391791.

- Haegele KM, McComas JJ, Dixon M, Burns MK. Using a stimulus equivalence paradigm to teach numerals, English words, and Native American words to preschool-age children. Journal of Behavioral Education. 2011;20(4):283–296. doi: 10.1007/s10864-011-9134-9.

- Howard, V. J. (2019). Open educational resources in behavior analysis. Behavior Analysis in Practice, 1–15. Retrieved from https://link.springer.com/article/10.1007/s40617-019-00371-4. doi:10.1007/s40617-019-00371-4

- Keller FS. Good-bye, teacher. Journal of Applied Behavior Analysis. 1968;1(1):79–89. doi: 10.1901/jaba.1968.1-79.

- MacDonald R, Langer S. Teaching essential discrimination skills to children with autism. Bethesda, MD: Woodbine House; 2018.

- Michael J. A behavioral perspective on college teaching. The Behavior Analyst. 1991;14(2):229–239. doi: 10.1007/BF03392578.

- Nguyen T. The effectiveness of online learning: Beyond no significant difference and future horizons. Journal of Online Learning and Teaching. 2015;11(2):309–319.

- Nosik MR, Williams WL. Component evaluation of a computer based format for teaching discrete trial and backward chaining. Research in Developmental Disabilities. 2011;32(5):694–702. doi: 10.1016/j.ridd.2011.02.022.

- O’Neill JO, Rehfeldt RA, Ninness C, Munoz BE, Mellor J. Learning Skinner’s verbal operants: Comparing an online stimulus equivalence procedure to an assigned reading. The Analysis of Verbal Behavior. 2015;31(2):252–266. doi: 10.1007/s40616-015-0035-1.

- Pilgrim C. Equivalence-based instruction. In: Cooper JO, Heron TE, Heward WL, editors. Applied behavior analysis. 3. Hoboken, NJ: Pearson; 2020. pp. 442–496.

- Psychology Software Tools. (2019). E-Prime. Retrieved from https://pstnet.com/products/e-prime/

- Rehfeldt RA. Toward a technology of derived stimulus relations: An analysis of articles published in the Journal of Applied Behavior Analysis, 1992–2009. Journal of Applied Behavior Analysis. 2011;44(1):109–119. doi: 10.1901/jaba.2011.44-109.

- Reyes-Giordano K, Fienup DM. Emergence of topographical responding following equivalence-based neuroanatomy instruction. The Psychological Record. 2015;65(3):495–507. doi: 10.1007/s40732-015-0125-4.

- Saunders RR, Green G. A discrimination analysis of training-structure effects on stimulus equivalence outcomes. Journal of the Experimental Analysis of Behavior. 1999;72(1):117–137. doi: 10.1901/jeab.1999.72-117.

- Schnell LK, Sidener TM, DeBar RM, Vladescu JC, Kahng S. Effects of computer-based training on procedural modifications to standard functional analyses. Journal of Applied Behavior Analysis. 2017;51(1):87–98. doi: 10.1002/jaba.423.

- Sidman M. Reading and auditory-visual equivalences. Journal of Speech & Hearing Research. 1971;14(1):5–13. doi: 10.1044/jshr.1401.05.

- Sidman, M. (1994). Equivalence relations and behavior: A research story. Boston, MA: Authors Cooperative.

- Skinner BF. Teaching machines. Science. 1958;128(3330):969–977. doi: 10.1126/science.128.3330.969.

- Skinner BF. The technology of teaching. East Norwalk, CT: Appleton-Century-Crofts; 1968.

- SumTotal. (2019). ToolBook. Retrieved from http://tb.sumtotalsystems.com/

- Twyman JS. Envisioning education 3.0: The fusion of behavior analysis, learning science and technology. Mexican Journal of Behavior Analysis. 2014;40(2):20–38.

- Twyman JS, Heward WL. How to improve student learning in every classroom now. International Journal of Educational Research. 2016;87:78–90. doi: 10.1016/j.ijer.2016.05.007.

- Walker BD, Rehfeldt RA. An evaluation of the stimulus equivalence paradigm to teach single-subject design to distance education students via Blackboard. Journal of Applied Behavior Analysis. 2012;45(2):329–344. doi: 10.1901/jaba.2012.45-329.

- Williams CT, Zahed H. Computer-based training versus traditional lecture: Effects on learning and retention. Journal of Business and Psychology. 1996;11(2):297–310. doi: 10.1007/BF02193865.

- Zinn TE, Newland MC, Ritchie KE. The efficiency and efficacy of equivalence-based learning: A randomized controlled trial. Journal of Applied Behavior Analysis. 2015;48(4):865–882. doi: 10.1002/jaba.258.
