Abstract

Background

Platform trials allow adding new experimental treatments to an on-going trial. This feature is attractive to practitioners due to improved efficiency. Nevertheless, the operating characteristics of a trial that adds arms have not been well-studied. One controversy is whether just the concurrent control data (i.e. of patients who are recruited after a new arm is added) should be used in the analysis of the newly added treatment(s), or all control data (i.e. non-concurrent and concurrent).

Methods

We investigate the benefits and drawbacks of using non-concurrent control data within a two-stage setting. We perform simulation studies to explore the impact of a linear and a step trend on the inference of the trial. We compare several analysis approaches when one includes all the control data or only concurrent control data in the analysis of the newly added treatment.

Results

When there is a positive trend and all the control data are used, the marginal power of rejecting the corresponding hypothesis and the type one error rate can be higher than the nominal value. A model-based approach adjusting for a stage effect is equivalent to using concurrent control data; an adjustment with a linear term may not guarantee valid inference when there is a non-linear trend.

Conclusions

If strict error rate control is required then non-concurrent control data should not be used; otherwise it may be beneficial if the trend is sufficiently small. On the other hand, the root mean squared error of the estimated treatment effect can be improved through using non-concurrent control data.

Keywords: Adding arms, Concurrent/ non-concurrent control, Platform trials

Background

The implementation of innovative trial designs for drug development programmes has increased in recent years [1]. A platform trial is one such innovative design that helps improve efficiency. It has a multi-arm multi-stage design where a single control group is utilized to evaluate multiple experimental treatments for a single disease. Apart from the feature of dropping arms, a platform trial allows adding arms when new experimental treatments become available. This adds efficiency as adding arms takes less time and has a lower cost than setting up new trials from scratch.

A platform trial can be implemented through the specification of a master protocol [2–4]. Cohen et al [5] review the statistical aspects on the adding arm adaptation and present some trials that have adopted a platform approach. For pairwise comparisons between the effectiveness of newly added treatments and the control treatment, there are different views on utilising the control data [5]. To be more specific, the control data of a platform trial can be separated into non-concurrent and concurrent. The former (latter) corresponds to the control data of the patients who are recruited before (after) a new experimental treatment is added. This definition applies to each newly added arm independently; the control data can be concurrent to a newly added treatment, and be non-concurrent to another treatment that is added at a later time point.

To our knowledge, methodology and guidance for adding arms remain limited and the operating characteristics of platform trials have not been well-studied. The literature on other trial adaptations such as dropping arms and changing treatment allocation ratio are more comprehensive [6–11]. In particular, many have explored the presence of time trends when more complex randomization procedures are recommended/ explored [12–21]. A trend may be present in a trial when there is a learning curve among the study personnel; a shift in the population baseline characteristics; and/ or the effect of the control treatment may change due to other reasons (e.g. improvement of the standard of care/ practice) [7,22].

In order to minimise the impact caused by the presence of a trend, most researchers advocate the use of concurrent control data when making inference about the newly added treatment(s) [23–25]. Others stipulate that non-concurrent control data may be used when making inference about the newly added arm by adjusting for possible trends [26,27]. Here we investigate whether using non-concurrent control data is worthwhile in platform trials. Several analysis approaches are considered. Within a two-stage setting where a new treatment is added at the end of stage one [25,28], we explore the impact of i) the timing of adding a new arm ii) the sample size of the new arm and iii) the magnitude of a linear or a step trend [15] on the inference about the newly added experimental treatment. We provide recommendations about under which conditions non-concurrent control data should be used.

Method

Consider a two-stage multi-arm trial that initially has K treatments and a control treatment with a total sample size of N=N1+N2 where Ns is stage s sample size, s=1,2. Denote k=0 for a control treatment, k=1,...,K for the initial experimental treatments. Let nks be the sample size of treatment k in stage s with nk1+nk2=nk. At the end of stage one, in particular after patients have been randomized to the initial arms in stage one, a new treatment (denoted by K+1) is added to the on-going trial with nK+1,2 patients. This increases the second stage sample size to N2+nK+1,2 and the overall sample size of the trial to N+nK+1,2.

Denote n01/n0 as the timing of adding a new arm. Small n01/n0 indicates the newly available treatment is added to the trial after a small number of patients have been randomized to the initial arms in stage one. We consider the case that all arms finish recruitment simultaneously (although as long as controls continue to be recruited while treatment K+1 is allocated patients, results apply more generally). For j=1,...,N+nK+1,2, denoting the sequence of patient enrolment to the trial, let:

| 1 |

be the response of treatment k of subject j; μk be the true effect of treatment k; τ(j) be a trend that could be a function of the patient ordering j; εj be the random errors that are identically and independently normally distributed with mean 0 and variance σ2; and Tj be the allocated treatment of subject j, with I(·) is an indicator function.

Let and denote the stage s sample mean response of treatment k and the overall mean response of treatment k. When there is no trend in the trial, i.e. τ(j)=0(j), the sample mean estimators and .

For illustration purpose, we consider the following when exploring the impact of a fixed trend on the inference:

- linear trend:

2 - stepwise trend:

3

where cj is an indicator that subject j is enrolled in stage two, and λ is a positive fixed value. Both of these trends inflate the value of the outcome variable in a sequential manner; the responses of the patients who were enrolled later are larger than those who were enrolled earlier.

Simple approach: Z-test

We first consider a simple method that is analogous to ignoring the possibility of a trend or stage effect: a standard Z-test for testing each hypothesis

| H0k:μk=μ0 | against | H1k:μk>μ0, |

using the sample means. When there is no trend, the (pairwise) marginal power of rejecting a null hypothesis to detect a difference in treatment effect, δk=μk−μ0, is

where Φ and Φ−1 are the cumulative density function and the inverse of the cumulative density function of a standard normal distribution. For the newly added treatment, the power of rejecting H0,K+1 depends on whether all the control data (of size n0) or only the stage two control data (of size n02) is used in the inference. Elm et al [28] describe a t-test for the case where σ2 is unknown.

Model-based approach: weighted linear regression

One way to account for the changes of a trial design is to consider a model-based approach. Elm et al [28] consider the following weighted linear regression model. Let be the stage-wise difference in mean responses; fit a linear model to the samples of the stage-wise mean differences:

where is normally distributed with mean zero and variance σ2/nks+σ2/n0s. The covariance between mean responses, and k=1,...,K, as patient data are independent; whereas , where l,k=1,...,K, as the data of a shared control group is used in all the pairwise comparisons. For the newly added treatment, and for k=1,...,K, when only the concurrent control data is used.

Elm et al [28] have not considered using all the control data in estimating the difference in mean responses of treatment K+1 and the control, i.e. defined as . This estimate has a smaller variance than , and for k=1,...,K, and s=1,2, as can be re-expressed as .

Using weighted least squares estimation, an estimate of the vector of treatment effects relative to control, δ=(δ1,...,δK,δK+1)T, can be obtained. The estimate, , is unbiased and has a multivariate normal distribution with mean δ when there is no trend. This joint distribution can be used to test the hypotheses, {H01,...,H0,K+1}, in a similar way to the Dunnett test [29]. The marginal power of rejecting a hypothesis can be computed by considering the corresponding marginal distribution. Note that when K>0, the marginal power is different to the power obtained from a Z-test (or a t-test) as the joint distribution accounts for the fact that a common control group is used in the analysis. Note also that depends on the number of control patients.

We label these approaches as WLSall and WLSs2 respectively, when all the control data and when only the concurrent control data are used in the estimation of δK+1.

Model-based approach: linear regression

In the context of an adaptive randomisation procedure, Villar et al. [19] considered fitting a linear regression model to adjust for the presence of a trend in their investigation where all arms start recruiting at the same time. We explore the following linear regression analyses for the trial that adds an arm at the end of stage one:

Ma1:

Ma2:

where β0=0 such that βk represents the difference in mean responses of treatment k and the control treatment; and

Mb1:

Mb2:

where β0=0 such that and represent the difference in mean responses of the newly added treatment and the control treatment. Note that models Ma1 and Ma2 use all data to estimates all β1,...,βK,βK+1, whereas model Mb1 (and Mb2) uses the data of the control arm (both stages) and the newly added arm to estimate (and ) only. The parameter γ in Ma1 and Mb1 represents the effect of continuous recruitment, whereas ν in Ma2 and Mb2 represents the effect of recruitment that happens after the new treatment has been added (Elm et al [28] interpret ν as a stage effect). It is worth mentioning that the amount of the control data used to estimate βK+1 in Ma2 (and respectively in Mb2) is similar to (same as) that of using only the second stage control data, e.g. , since the newly added treatment was not present in stage one and the stage one control data would contribute to the estimation of the stage effect and that of β1,...,βK under such a model set-up. In other words, the stage one control data contributes very little to the estimation of the difference in mean responses of the newly added treatment and the control treatment when we adjust for ν·cj in the analysis.

We note that the inference from Ma1 without adjustment for the linear term in patient ordering is similar to that from WLSall; and that from Ma2 is similar to WLSs2. This is because the joint distribution of is equivalent to the corresponding joint distribution of . The subtle difference is the former approach has one more parameter (i.e. the intercept α) than the weighted regression model, which reflects the mean response of the reference group assuming the model is true. On the other hand, testing and respectively are similar to considering an independent Z-test (or a t-test when assuming unknown variance) for the hypothesis of H0,K+1.

Metrics

We explore the above analysis approaches and consider

bias of estimators;

the type one error rate;

the marginal power of rejecting a false null hypothesis;

- the root mean squared error,

when including or excluding the stage one control data in the analysis of the newly added treatment. As we assume σ2 is known, the presence of a trend would affect the accuracy of the estimate but not the variance of an estimate. We include the following metric to explore the reduction in the variance of the estimate:

where BoS stands for borrowing of strength [30], V0 and Va correspond to the variance of an estimate that is computed with only the concurrent control data and that with all the control data respectively. Note that BoS increases as Va decreases. This measure is similar to the R-squared that measures how close the data are to the fitted regression model. Here BoS≈0 indicates that the variability of the response data around its mean cannot be further explained by including non-concurrent control data; whereas BoS≈1 reflects that using all the control data results in a perfect estimate that has negligible variability. One may interpret BoS as an indicator of the benefit of including non-concurrent control data in the analysis of the newly added treatments. Small values show that including the non-concurrent control data provides little benefit to the estimation of the parameters in terms of precision gain. High value is desirable especially when the estimates of the variance are unbiased and are not affected by the presence of a trend.

In the next section, we consider K=1 and a new arm is added at the end of stage one. Let the effect size of the difference in treatment effects be δ1=δ2=0.15 under the alternative scenario, and δ1=δ2=0 under the null scenario, with σ2=1,n11=n01=N1/2, and n12=n02=N2/2. We choose n0=n1=550 to obtain 80% power and 5% type one error rate of rejecting H01 using the Z-test.

We conduct simulation studies to examine the impact of a trend with the timing of adding a new treatment, n01/n0={0.25,0.5,0.75}, and the sample size of the new arm, n22={n02,n0,2n0}. Following (1), we adjust the generated responses post randomization with λ={0.02,0.04,0.06,0.08} in the linear trend (2) and the stepwise trend (3) respectively. These values indicate a trend of λ×100% of standard deviation. Each scenario is replicated 100 000 times. We illustrate n22=2n0 as one of the options to explore the marginal benefit of having more patients (than necessary) in the newly added arm while keeping the size of the control arm the same as the initial plan.

Results

Analytical power and BoS when there is no trend

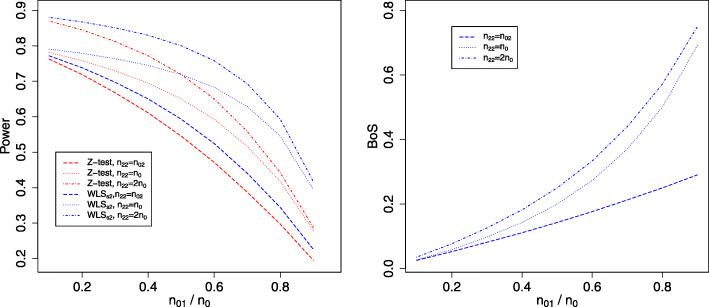

We now compare BoS and the power of rejecting H02 when there is no trend and either all, or only concurrent control data are used in the inference.

We first demonstrate the differences between the independent Z-test (computed with only concurrent control data) and the model-based approach, WLSall and WLSs2, in terms of power. For ease of presentation, we omit the comparisons to the presented linear regression models as they make adjustment for a trend (see the first row of plots in Figs. 2 and 3 for the corresponding marginal power). The left plot in Fig. 1 shows power curves when the individual Z-test (red lines) and WLSs2, i.e. the approach with the bivariate normal distribution of (blue lines), are used respectively for testing H02, both using only the concurrent control responses. The timing of adding the new arm to the on-going trial is reflected on the x-axis; small values indicate the arm is added after a small number of patients have been randomized to the initial treatment arms. Different lines correspond to having different n22.

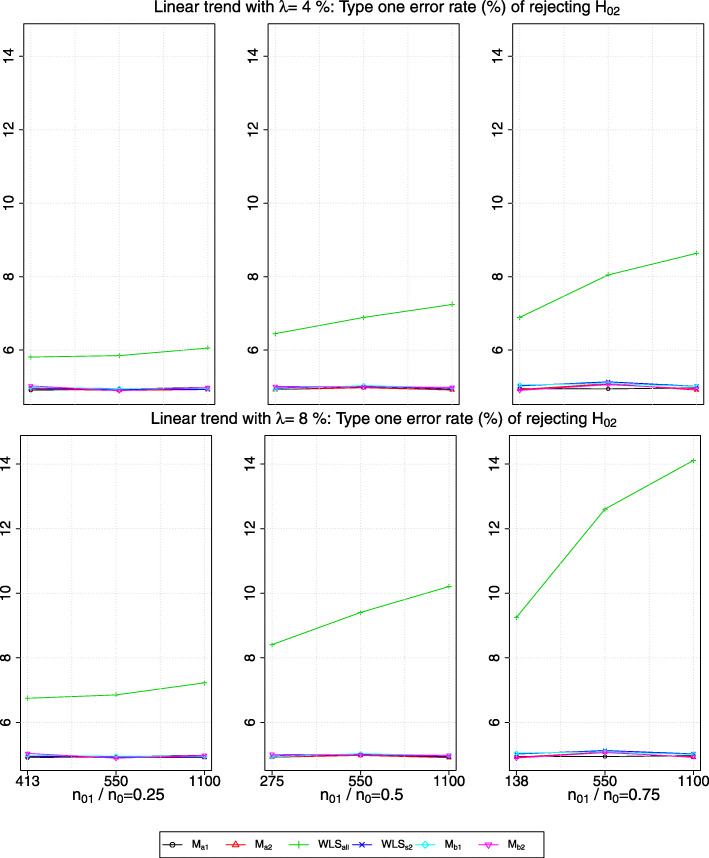

Fig. 2.

The power of rejecting H02 in the presence of no trend or a linear drift with a magnitude of λ>0 standard deviation (row-wise); x-axis indicates the value of n22 and the timing of adding the new arm

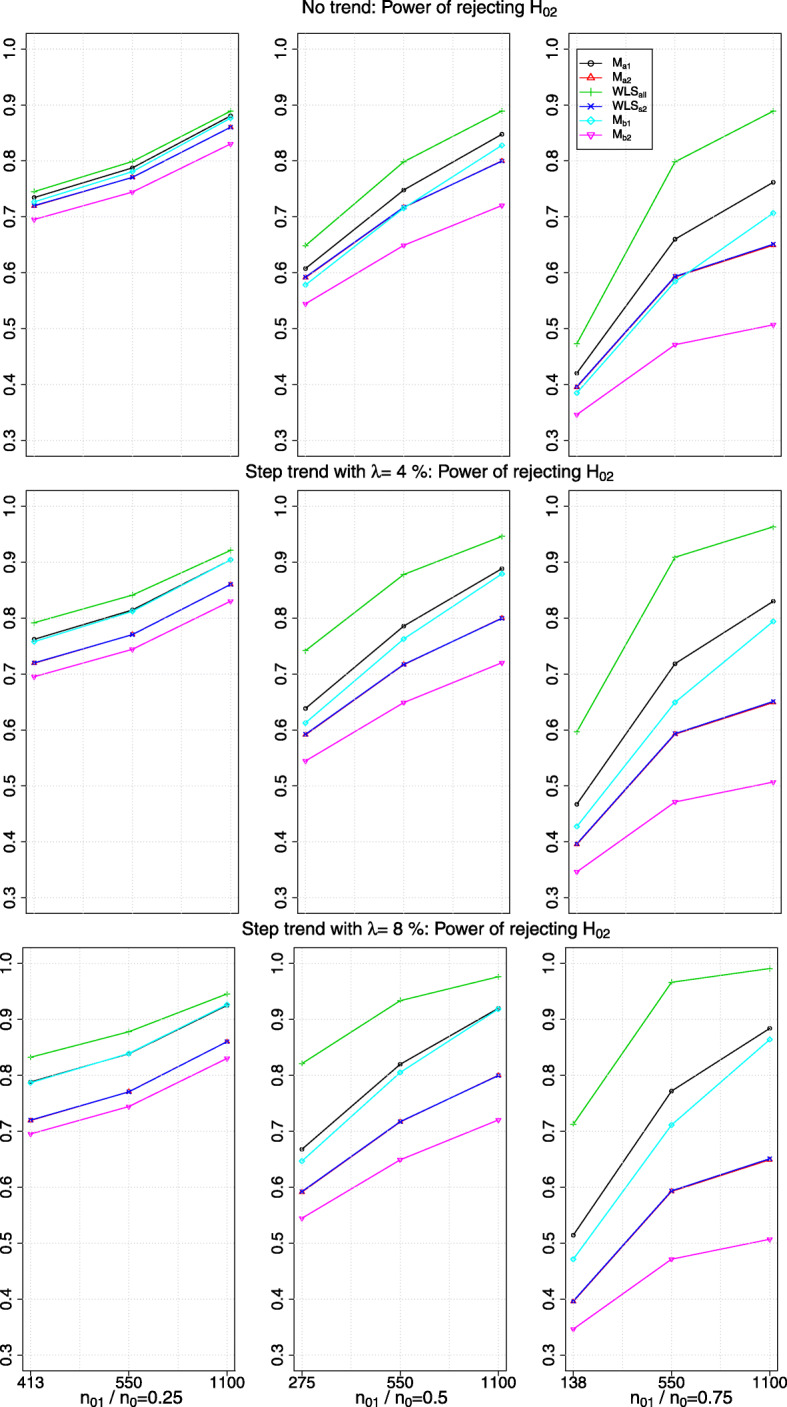

Fig. 3.

The power of rejecting H02 in the presence of no trend or a step trend with a magnitude of λ>0 standard deviation (row-wise); x-axis indicates the value of n22 and the timing of adding the new arm

Fig. 1.

Left: power curves when the individual Z-test (red lines) and WLSs2 (blue lines) are used respectively for testing H02, both using stage two control responses. Right: reduction in when stage one control responses is used relative to not using stage one control responses. Line types correspond to different values of n22 when the new arm is added to the on-going trial. The timing of adding the new arm is reflected by n01/n0 on the x-axis

As expected, the power decreases with n01/n0 when excluding stage one control responses in the inference about the newly added treatment. Comparing the red lines to the blue lines in the left plot of Fig. 1, the power of the individual Z-test is lower than the marginal power obtained from WLSs2 given the same n22. When n22=n02, the difference between the power curves computed by the two approaches is less than 6% (shown by comparing the dashed lines). When n22≥n0, the magnitudes of the differences between the power curves are larger especially for large n01/n0; e.g. compare the dotted-dashed lines at n01/n0=0.8, the marginal power obtained from WLSs2 is 15% more than that computed from the individual Z-test. This finding highlights that considering the joint outcomes of the hypotheses through the joint distribution of the parameters is more efficient than using the individual Z-test when only the concurrent control data is used. This is because the former accounts for using a shared control group in the inference, whereas the Z-test uses the control data independently for each hypothesis test as if they were from several trials. Besides that, compare the (vertical) difference between the dotted-dashed line (n22=2n0) and the dotted line (n22=n0), either red or blue, we see that the magnitude of the difference in the power decreases as n01/n0 increases. This indicates that the marginal benefit of having a larger sample size in one arm than necessary can be small, depending on when the new arm is added to the trial.

For WLSall with n22=n02, the power curve coincides with the red dotted line, i.e. the individual Z-test using only the concurrent control data and with n22=n0. This is not surprising as the same number of patients are used in the inference, albeit the number of patients randomized to the newly added arm is different for the two comparators (i.e. WLSall considers n02 responses of the new treatment and n0 responses of the control treatment; the individual Z-test uses n0 responses of the new treatment and n02 responses of the stage two control treatment). For WLSall with n22=n0 and n22=2n0, the marginal power of rejecting H02 is 0.8 and 0.89 respectively across all n01/n0, which is close to the respective power obtained from Z-test using all the control data.

We now consider the BoS values that are computed with obtained from WLSall and WLSs2 respectively. The right plot in Fig. 1 shows that when all the control data is included in the estimation, BoS increases with n01/n0. When n22=n02, BoS is close to 30% when the new treatment is added at n01/n0=0.9. When n22 has the same number as n0, we see that BoS becomes even larger. However, the marginal return of having large n22 than necessary can be less notable: comparing the dotted-dashed line to the dotted line shows that the magnitudes of the differences between the BoS perhaps are less impressive compared to the likely cost of enrolling an extra n0 patients for the new arm.

Bias of the estimate when there is a trend

We now present the simulation findings of the inference about the newly added treatment when there is a trend.

Consider the estimated difference in mean responses of the newly added treatment and the control treatment. Table 1 shows the maximum absolute bias (and median absolute bias) in the parameter estimations when there is a trend. Each row corresponds to a trend with a value of λ for all the scenarios with different combination of n01/n0={0.25,0.5,0.75} and n22={n02,n0,2n0}. The values with an order of magnitude of -4 are set to zero, as we also obtain such a small number for the unbiased estimators when there is no trend in our simulation, which is due to the Monte Carlo simulation error.

Table 1.

The maximum (median) absolute bias of the estimated difference in mean responses of the newly added treatment and the control arm when there is a trend. Values with -4 order of magnitude are set to zero

| λ | WLSall | WLSs2 | Ma1 | Ma2 | Mb1 | Mb2 |

|---|---|---|---|---|---|---|

| Linear trend | ||||||

| 2% | 0.007 (0.005) | 0 (0) | 0.001 (0) | 0 (0) | 0 (0) | 0 (0) |

| 4% | 0.015 (0.010) | 0 (0) | 0.001 (0) | 0 (0) | 0 (0) | 0 (0) |

| 6% | 0.022 (0.015) | 0 (0) | 0.001 (0) | 0 (0) | 0 (0) | 0 (0) |

| 8% | 0.030 (0.020) | 0 (0) | 0.001 (0) | 0 (0) | 0 (0) | 0 (0) |

| Step trend | ||||||

| 2% | 0.015 (0.010) | 0 (0) | 0.007 (0.004) | 0 (0) | 0.010 (0.005) | 0 (0) |

| 4% | 0.030 (0.020) | 0 (0) | 0.015 (0.008) | 0 (0) | 0.019 (0.010) | 0 (0) |

| 6% | 0.045 (0.030) | 0 (0) | 0.023 (0.012) | 0 (0) | 0.029 (0.015) | 0 (0) |

| 8% | 0.060 (0.040) | 0 (0) | 0.031 (0.016) | 0 (0) | 0.038 (0.019) | 0 (0) |

When there is a positive trend in the simulation, we find that the estimates obtained from WLSs2,Ma2 and Mb2 respectively are unbiased. For those obtained from WLSall, the magnitude of the bias is larger when there is a step trend than when there is a linear trend. This is because the presence of a positive trend inflates the estimate of the overall mean responses of the control treatment while the mean responses of the newly added treatment only have a trend that is similar to the mean responses of the second stage control treatment.

For those obtained from Ma1 and Mb1, the estimated parameter is almost unbiased when there is a linear trend but is biased when there is a step trend. This shows that adjustment with a linear term may not always yield accurate estimate especially when the structure of a trend is not linear.

Marginal power in the presence of a trend

The first row of plots in Figs. 2 and 3 show the marginal power of rejecting H02 when there is no trend; other rows of plots correspond to different magnitudes of λ when there is a linear and a step trend respectively. The x-axis of the plots indicates the value of n22 and n01/n0. Each column of plots has a different range of y-axis due to having different values of n22 when the new arm is added to the on-going trial. As expected, the marginal power obtained from WLSs2 is similar to that from Ma2: the blue line (with ×) superimposes the red line (with red△).

We see that the marginal power obtained from WLSall is the highest and from Mb2 is the lowest among all the comparators. However, the marginal power obtained from WLSall is inflated when there is a positive trend. From our simulation, we find that relative difference in the inflated power to the non-inflated power is larger when n22=550 compared to when n22=1100. The reason could be when n22 is small (relative to the fixed n01), stage one control responses deviate more on average from the responses in stage two, which leads to a larger bias when using all the control data and hence increases the average number of rejected hypotheses.

On the other hand, the marginal power obtained from Ma1 and Mb1 respectively when there is a linear trend remains the same as when there is no trend (i.e. compare the black lines and cyan lines respectively across the row of plots in Fig. 2); but it is inflated when there is a step trend. The magnitude of the inflation increases with the values, λ, of the step trend (see Fig. 3).

We find that the marginal power obtained from WLSs2,Ma2 and Mb2 respectively remains unchanged when there is a trend. This is not surprising as the impact of a positive trend in the mean responses of the newly added arm and the second stage control arm are of similar magnitude, which is being removed when the difference in mean responses is considered.

Type one error rate in the presence of a trend

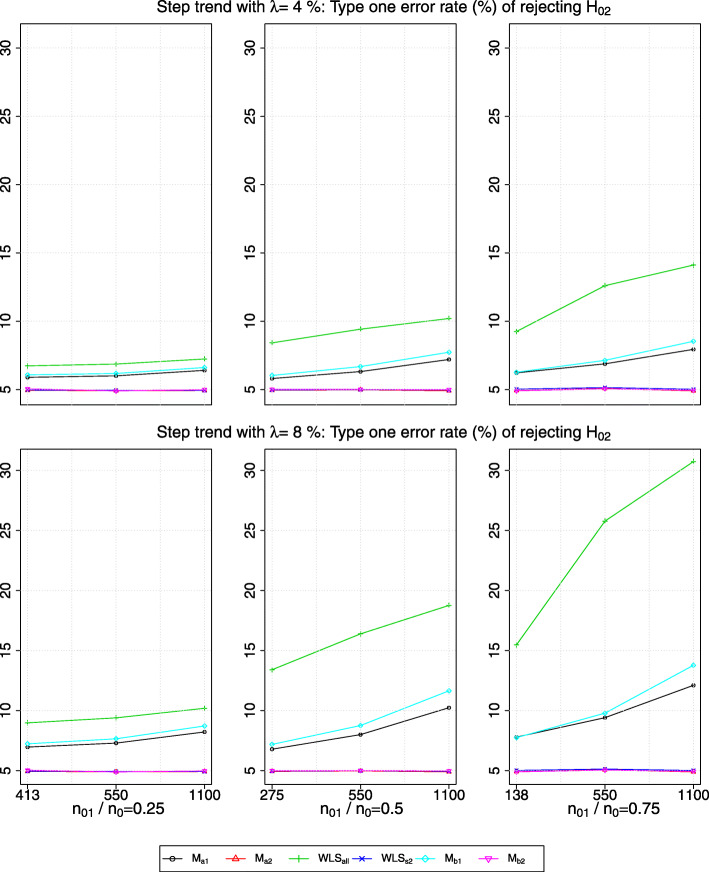

Figures 4 and 5 show the type one error rate of rejecting H02 in the presence of a linear trend and of a step trend respectively, when δ1=δ2=0. A subtle difference in the type one error rate obtained from WLSs2 and Mb2 respectively can be seen in the plots.

Fig. 4.

The type one error rate of rejecting H02 in the presence of a linear trend with a magnitude of λ>0 standard deviation (row-wise); x-axis indicates the value of n22 and the timing of adding the new arm

Fig. 5.

The type one error rate of rejecting H02 in the presence of a step trend with a magnitude of λ>0 standard deviation (row-wise); x-axis indicates the value of n22 and the timing of adding the new arm

We observe that the presence of a step trend would lead to a larger inflation in the type one error rate obtained from WLSall than when there is a linear trend with the same λ, i.e. from comparing the green line (with green+ sign) in Fig. 4 to those in Fig. 5. Besides, the magnitude of inflation is the largest when both the values of n01/n0 and λ are the largest. Having larger n22 also causes more inflation in the type one error rate given the same n01/n0. This may be because, given the same magnitude of the bias, large n22 makes the denominator of the test statistics smaller, and hence increases the number of hypothesis rejections.

For the type one error rate obtained from Ma1 and Mb1, we see a similar finding to that of the marginal power. That is, when there is a linear trend, the type one error rate is maintained within the Monte Carlo simulation error, whereas it is inflated when there is a step trend (see the black line and cyan line respectively in Fig. 5). We find that the type one error rate obtained from WLSs2,Ma2 and Mb2 respectively is maintained within the Monte Carlo simulation error when there is a trend.

rMSE in the presence of a trend

The plots of rMSE are presented in Additional file 1: Figures 1 and 2 in the supplement. When there is a positive trend, we find that Mb2 has the highest rMSE, which remains the same across all the considered values of λ. This is not surprising as the least amount of the data is used. The rMSE values obtained from WLSs2 and Ma2 also remain unchanged when there is a trend.

When there is a linear trend, the rMSE obtained from WLSall increases with λ while remaining smaller than that from Ma1 and Mb1 (which remain unchanged across the linear trends). However, when there is a step trend, the rMSE obtained from these approaches are inflated; the rMSE obtained from WLSall is higher than that from Ma1 when λ≥4%. Nevertheless, across all the considered scenarios, the rMSE obtained from Ma1 is always smaller than the approaches that use less data, i.e. Ma2,Mb1,Mb2, and WLSs2.

These findings highlight that even though the presence of a small trend would lead to a small bias in the estimation, the quality of the estimate computed using all the control data can be better than that computed using only the concurrent control data. This does not hold for larger trends.

Inference about the initial treatment

For the inference about the initial treatment, we find that the bias, type one error rate and power of rejecting H01 are maintained at the same levels as those when there is no trend in the simulation. This finding is observed for all the model-based approaches. This may be due to using the restricted randomization procedure within each stage of the trial in the simulation, which ensures that not all nks patients are being enrolled to a particular arm k during the early or the late phase of the trial. As a result, the average impact of a trend on the stage-wise mean responses of the initial treatment and of the control treatment are similar, which are then being subtracted when the difference in the mean responses is considered. This observation is consistent with the finding of Ryeznik and Sverdlov [17] who compare different randomization procedures in the context of the standard ANOVA F-test.

Discussion

When there is no trend, the advantages of including the non-concurrent control data are greater when the new arm is added at a later time point. Our simulation studies show that given the same magnitude of λ>0, the presence of a step trend affects the validity of the inference more than that of a linear trend when all the control data are used in the analysis of the new arm. We also examine that using a linear regression model adjusting for a linear term may lead to spurious findings when there is a non-linear trend, though the rMSE of the corresponding estimate can be lower than other approaches that use only the concurrent control data. This represents a caveat for including non-concurrent control data in the analysis plan as it is impossible to know the structure of a trend at the planning stage of a trial. After data collection, one can only test the presence of a trend using some quality control techniques such as control charts and CUSUM analysis, see for example a review by Noyez [31]. The analogy between clinical trials and industrial processes is becoming clearer especially when the trial has a long enrolment period. [32–38]

This work has highlighted the impact of a trend when the variance of the observations is known. We emphasise that not all methods assume known variance. We conjecture that the presented results would hold when an estimated variance is unbiased, i.e. the estimate is robust to the presence of a trend. Otherwise, the over- or under-estimated variance would cause the test statistics to be biased and hence provide a spurious result [28]. We also note that when there is a negative trend, i.e. λ<0, the type one error and the marginal power will be deflated, i.e. smaller than the nominal value. This is a direct consequence of a trend that causes responses to be smaller than the true values as the trial progresses.

All things considered, we would recommend that at the design stage of phase III trials, sample size calculations assume only concurrent control information will be used. When the magnitude of a trend is small, we find including the non-concurrent control data in the analysis can improve the efficiency of estimating the parameter of interest. However there could be an inflation (or deflation) in the type one error rate and the marginal power of testing the corresponding hypothesis unless the positive (or negative) trend has a magnitude of less than 0.5% of the standard deviation (based on simulation results not presented here). This finding is similar to the finding of Kopp-Schneider et al [39] for the situation where external information is used in the inference of clinical trials: power gain is not possible when requiring a strict type one error rate control. When the recruitment to all arms do not finish simultaneously, the control data can be separated into before and after adding an arm and those after the initial treatment arms finish recruitment. In this case, the presence of a trend across the three stages can be tested before utilising all the control data to increase the precision of the inference.

We acknowledge that an inflated power is not an issue if we are confident that the intervention is effective. However, using the trial result for other purposes may lead to negative consequences since an inflated power could mean that the estimated effect size is likely to be higher than it should. For example, doing a cost-effectiveness analysis using the estimated effect size may over-estimate the value of the intervention due to the presence of a positive trend. Future work could review real trials that add arms and explore the presence of trends and their structure in the data. One can also investigate the potential of using non-concurrent control data for the situations where i) there are more than one treatment arm being added to the on-going trial; ii) there are more than two-stages; and iii) adaptive randomization procedures are considered.

Conclusion

Platform trials can potentially speed up the drug development processes. The feature of continuous recruitment to the control arm may increase the precision of the inference about the newly added interventions in some situations; or reduce the required sample size for the evaluation of the newly added interventions at the cost of having a less stringent control of the error rates. In light of the presence of a potential trend, it is wise to compare the results of including and excluding non-concurrent control data in the analysis of the newly added interventions.

Supplementary information

Additional file 1 Figures 1 and 2 show the rMSE when there is a linear and a step trend respectively in the simulation.

Acknowledgements

We are grateful to the referees for their helpful comments on an earlier version of this paper.

Abbreviations

- BoS

borrowing of strength

- rMSE

root mean squared error

Authors’ contributions

KL conceived and designed the research and drafted the manuscript. JW provided important intellectual input to interpretation of findings and manuscript writing. All authors critically appraised the final manuscript.

Funding

This research was funded by the Medical Research Council (grant codes MC _UU_00002/6 and MR /N028171 /1). The funder played no role in the design or conduct of the research, nor decision to publish.

Availability of data and materials

Not applicable.

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Supplementary information accompanies this paper at 10.1186/s12874-020-01043-6.

References

- 1.Bothwell LE, Avorn J, Khan NF, Kesselheim AS. Adaptive design clinical trials: a review of the literature and ClinicalTrials.gov. BMJ Open. 2018;8(2):018320. doi: 10.1136/bmjopen-2017-018320. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Woodcock J, LaVange LM. Master Protocols to Study Multiple Therapies, Multiple Diseases, or Both. N Engl J Med. 2017;377(1):62–70. doi: 10.1056/NEJMra1510062. [DOI] [PubMed] [Google Scholar]

- 3.Hirakawa A, Asano J, Sato H, Teramukai S. Master protocol trials in oncology: Review and new trial designs, Contemp Clin Trials Commun. 2018;12:1–8. doi: 10.1016/j.conctc.2018.08.009. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Angus DC, Alexander BM, Berry S, Buxton M, Lewis R, Paoloni M, Webb SAR, Arnold S, Barker A, Berry DA, Bonten MJM, Brophy M, Butler C, Cloughesy TF, Derde LPG, Esserman LJ, Ferguson R, Fiore L, Gaffey SC, Gaziano JM, Giusti K, Goossens H, Heritier S, Hyman B, Krams M, Larholt K, LaVange LM, Lavori P, Lo AW, London AJ, Manax V, McArthur C, O’Neill G, Parmigiani G, Perlmutter J, Petzold EA, Ritchie C, Rowan KM, Seymour CW, Shapiro NI, Simeone DM, Smith B, Spellberg B, Stern AD, Trippa L, Trusheim M, Viele K, Wen PY, Woodcock J. Adaptive platform trials: definition, design, conduct and reporting considerations. Nat Rev Drug Discov. 2019:1–11. 10.1038/s41573-019-0034-3.

- 5.Cohen DR, Todd S, Gregory WM, Brown JM. Adding a treatment arm to an ongoing clinical trial: a review of methodology and practice. Trials. 2015;16(1):179. doi: 10.1186/s13063-015-0697-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Karrison TG, Huo D, Chappell R. A group sequential, response-adaptive design for randomized clinical trials. Control Clin Trials. 2003;24(5):506–22. doi: 10.1016/S0197-2456(03)00092-8. [DOI] [PubMed] [Google Scholar]

- 7.Chow S-C, Chang M, Pong A. Statistical Consideration of Adaptive Methods in Clinical Development. J Biopharm Stat. 2005;15(4):575–91. doi: 10.1081/BIP-200062277. [DOI] [PubMed] [Google Scholar]

- 8.Feng H, Shao J, Chow S-C. Adaptive Group Sequential Test for Clinical Trials with Changing Patient Population. J Biopharm Stat. 2007;17(6):1227–38. doi: 10.1080/10543400701645512. [DOI] [PubMed] [Google Scholar]

- 9.Mahajan R, Gupta K. Adaptive design clinical trials: Methodology, challenges and prospect, Indian J Pharmacol. 2010;42(4):201–7. doi: 10.4103/0253-7613.68417. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Pallmann P, Bedding AW, Choodari-Oskooei B, Dimairo M, Flight L, Hampson LV, Holmes J, Mander AP, Odondi L, Sydes MR, Villar SS, Wason JMS, Weir CJ, Wheeler GM, Yap C, Jaki T. Adaptive designs in clinical trials: why use them, and how to run and report them, BMC Med. 2018;16(1):29. doi: 10.1186/s12916-018-1017-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Altman DG. Avoiding bias in trials in which allocation ratio is varied. J R Soc Med. 2018;111(4):143–4. doi: 10.1177/0141076818764320. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Coad DS. Sequential estimation with data-dependent allocation and time trends. Seq Anal. 1991;10(1-2):91–97. doi: 10.1080/07474949108836227. [DOI] [Google Scholar]

- 13.Hu F, Rosenberger WF, Zidek JV. Relevance weighted likelihood for dependent data. Metrika. 2000;51(3):223–43. doi: 10.1007/s001840000051. [DOI] [Google Scholar]

- 14.Rosenkranz GK. The impact of randomization on the analysis of clinical trials. Stat Med. 2011;30(30):3475–87. doi: 10.1002/sim.4376. [DOI] [PubMed] [Google Scholar]

- 15.Tamm M, Hilgers R-D. Chronological Bias in Randomized Clinical Trials Arising from Different Types of Unobserved Time Trends. Methods Inf Med. 2014;53(06):501–10. doi: 10.3414/ME14-01-0048. [DOI] [PubMed] [Google Scholar]

- 16.Hilgers R-D, Uschner D, Rosenberger WF, Heussen N. ERDO - a framework to select an appropriate randomization procedure for clinical trials. BMC Med Res Methodol. 2017;17(1):159. doi: 10.1186/s12874-017-0428-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Ryeznik Y, Sverdlov O. A comparative study of restricted randomization procedures for multiarm trials with equal or unequal treatment allocation ratios. Stat Med. 2018;37(21):3056–77. doi: 10.1002/sim.7817. [DOI] [PubMed] [Google Scholar]

- 18.Lipsky AM, Greenland S. Confounding due to changing background risk in adaptively randomized trials, Clin Trials. 2011;8(4):390–7. doi: 10.1177/1740774511406950. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Villar SS, Bowden J, Wason J. Response-adaptive designs for binary responses: How to offer patient benefit while being robust to time trends? Pharm Stat. 2018;17(2):182–97. doi: 10.1002/pst.1845. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Jiang Y, Zhao W, Durkalski-Mauldin V. Time-trend impact on treatment estimation in two-arm clinical trials with a binary outcome and Bayesian response adaptive randomization. J Biopharm Stat. 2019:1–20. 10.1080/10543406.2019.1607368. [DOI] [PMC free article] [PubMed]

- 21.Hilgers R-D, Manolov M, Heussen N, Rosenberger WF. Design and analysis of stratified clinical trials in the presence of bias. Stat Methods Med Res. 2019; 096228021984614. 10.1177/0962280219846146. [DOI] [PMC free article] [PubMed]

- 22.Greenland S. Interpreting time-related trends in effect estimates. J Chron Dis. 1987;40:17–24. doi: 10.1016/S0021-9681(87)80005-X. [DOI] [PubMed] [Google Scholar]

- 23.Wason J, Magirr D, Law M, Jaki T. Some recommendations for multi-arm multi-stage trials, Stat Methods Med Res. 2016;25(2):716–27. doi: 10.1177/0962280212465498. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Ventz S, Cellamare M, Parmigiani G, Trippa L. Adding experimental arms to platform clinical trials: randomization procedures and interim analyses. Biostatistics. 2018;19(2):199–215. doi: 10.1093/biostatistics/kxx030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Lee KM, Wason J, Stallard N. To add or not to add a new treatment arm to a multiarm study: A decision-theoretic framework. Stat Med. 2019;38(18):8194. doi: 10.1002/sim.8194. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Saville BR, Berry SM. Efficiencies of platform clinical trials: A vision of the future. Clin Trials J Soc Clin Trials. 2016;13(3):358–66. doi: 10.1177/1740774515626362. [DOI] [PubMed] [Google Scholar]

- 27.Butler CC, Coenen S, Saville BR, Cook J, van der Velden A, Homes J, de Jong M, Little P, Goossens H, Beutels P, Ieven M, Francis N, Moons P, Bongard E, Verheij T. A trial like ALIC4E: why design a platform, response-adaptive, open, randomised controlled trial of antivirals for influenza-like illness?. ERJ Open Res. 2018; 4(2). 10.1183/23120541.00046-2018. [DOI] [PMC free article] [PubMed]

- 28.Elm JJ, Palesch YY, Koch GG, Hinson V, Ravina B, Zhao W. Flexible Analytical Methods for Adding a Treatment Arm Mid-Study to an Ongoing Clinical Trial. J Biopharm Stat. 2012;22(4):758–72. doi: 10.1080/10543406.2010.528103. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Choodari-Oskooei B, Bratton DJ, Gannon MR, Meade AM, Sydes MR, Parmar MK. Adding new experimental arms to randomised clinical trials: impact on error rates. 2019. https://doi.org/1902.05336. [DOI] [PMC free article] [PubMed]

- 30.Jackson D, White IR, Price M, Copas J, Riley RD. Borrowing of strength and study weights in multivariate and network meta-analysis. Stat Methods Med Res. 2017;26(6):2853–68. doi: 10.1177/0962280215611702. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Noyez L. Control charts, Cusum techniques and funnel plots. A review of methods for monitoring performance in healthcare. Interact Cardiovasc Thorac Surg. 2009;9(3):494–9. doi: 10.1510/icvts.2009.204768. [DOI] [PubMed] [Google Scholar]

- 32.Chang WR, McLean IP. CUSUM: A tool for early feedback about performance?, BMC Med Res Methodol. 2006;6(1):8. doi: 10.1186/1471-2288-6-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Sibanda T, Sibanda N. The CUSUM chart method as a tool for continuous monitoring of clinical outcomes using routinely collected data. BMC Med Res Methodol. 2007;7(1):46. doi: 10.1186/1471-2288-7-46. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.McLaren PJ, Hart KD, Dolan JP, Hunter JG. CUSUM analysis of mortality following esophagectomy to allow for identification and intervention of quality problems. J Clin Oncol. 2017;35(4_suppl):203–203. doi: 10.1200/JCO.2017.35.4_suppl.203. [DOI] [Google Scholar]

- 35.Neuburger J, Walker K, Sherlaw-Johnson C, van der Meulen J, Cromwell DA. Comparison of control charts for monitoring clinical performance using binary data, BMJ Qual Saf. 2017;26(11):919–28. doi: 10.1136/bmjqs-2016-005526. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Fortea-Sanchis C, Martínez-Ramos D, Escrig-Sos J. CUSUM charts in the quality control of colon cancer lymph node analysis: a population-registry study. World J Surg Oncol. 2018;16(1):230. doi: 10.1186/s12957-018-1533-0. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Redd D, Shao Y, Cheng Y, Zeng-Treitler Q. Detecting Secular Trends in Clinical Treatment through Temporal Analysis. J Med Syst. 2019;43(3):74. doi: 10.1007/s10916-019-1173-0. [DOI] [PubMed] [Google Scholar]

- 38.Fortea-Sanchis C, Escrig-Sos J. Quality Control Techniques in Surgery: Application of Cumulative Sum (CUSUM) Charts. Cir Esp (English Edition) 2019;97(2):65–70. doi: 10.1016/j.cireng.2019.01.010. [DOI] [PubMed] [Google Scholar]

- 39.Kopp-Schneider A, Calderazzo S, Wiesenfarth M. Power gains by using external information in clinical trials are typically not possible when requiring strict type I error control. Biom J. 2019; 201800395. 10.1002/bimj.201800395. [DOI] [PMC free article] [PubMed]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Additional file 1 Figures 1 and 2 show the rMSE when there is a linear and a step trend respectively in the simulation.

Data Availability Statement

Not applicable.